Summary

The rime optimization algorithm (RIME) suffers from an imbalance between exploitation and exploration, susceptibility to local optima, and low convergence accuracy when handling complex optimization problems. This paper introduces a variant of RIME called IRIME to address these drawbacks. IRIME integrates the soft besiege (SB) strategy with a composite mutation strategy (CMS) and a restart strategy (RS). To comprehensively validate IRIME’s performance, IEEE CEC 2017 benchmark tests were conducted, comparing it against many advanced algorithms. The results indicate that IRIME achieves the best overall performance among the compared algorithms. In addition, applying IRIME to four engineering problems demonstrates its ability to solve practical problems. Finally, the paper proposes a binary version, bIRIME, that can be applied to feature selection problems. bIRIME performs well on 12 low-dimensional datasets and 24 high-dimensional datasets. It outperforms other advanced algorithms in terms of the size of the selected feature subsets and classification accuracy. In conclusion, bIRIME has great potential in feature selection.

Subject areas: Computing methodology, Artificial intelligence, Engineering

Graphical abstract

Highlights

- This study introduces the SB and CMS-RS into RIME, named IRIME
- IRIME addresses the drawbacks of balance between exploration and exploitation
- IRIME performs well in benchmark functions and real-world engineering problems
- IRIME has outstanding performance in feature selection


Introduction

In engineering design optimization problems, balancing resource allocation and constraint conditions is often challenging.1 When facing real-world problems, the optimization process frequently involves multiple variables and diverse constraints, significantly increasing the difficulty of optimization.2 Engineering optimization also often considers factors such as performance and cost, leading to complex problems with multiple variables and objectives. Among optimization problems, feature selection is widely investigated by scholars, especially in today’s era of rapid information growth, where data abundance leads to issues like data redundancy, high computational costs, and weakened model generalization abilities. Feature selection plays a crucial role in reducing computational expenses, simplifying models, and enhancing their generalization capabilities. Commonly used feature selection methods include filter, wrapper, and embedded methods.3,4,5,6 Filter methods primarily determine the importance of features to the target variable based on statistical properties between features or the relationship between feature variables and target variables. While filter methods can be applied independently of any machine learning model, they overlook other connections between features. Embedded methods, although capable of uncovering complex relationships between features, require consideration of intricate parameters and structures and are influenced by the machine learning model. Meanwhile, wrapper methods, favored by many researchers because of their straightforward nature and ease of implementation, face a challenge: an n-dimensional feature dataset yields $2^n$ possible combinations of features.7,8 Faced with such complex computations, researchers have started using metaheuristic algorithms as a feasible solution for wrapper methods.
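To make the combinatorial growth faced by wrapper methods concrete, the following minimal sketch counts and enumerates binary feature-selection masks. The function names are hypothetical and introduced only for illustration; the count includes the empty subset.

```python
from itertools import product


def count_feature_subsets(n_features: int) -> int:
    """Number of candidate feature subsets (empty subset included)."""
    return 2 ** n_features


def enumerate_masks(n_features: int):
    """List every 0/1 selection mask; only feasible for very small n."""
    return list(product((0, 1), repeat=n_features))


if __name__ == "__main__":
    # Exhaustive enumeration is fine for 3 features ...
    print(len(enumerate_masks(3)))
    # ... but the count alone shows why it is impractical for 30.
    print(count_feature_subsets(30))
```

Because the count doubles with every added feature, exhaustive wrapper evaluation quickly becomes infeasible, which is the motivation for searching the mask space with a metaheuristic instead.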

Heuristics are problem-solving strategies that use basic principles or shortcuts to quickly uncover approximate answers, generally valuing speed above accuracy.9,10,11,12,13 Metaheuristics, on the other hand, work at a higher abstraction level, directing the search of solution spaces.14 They enable the search for optimal or nearly optimal solutions across several problem domains by continually refining and adapting heuristic techniques, thereby overcoming the constraints of individual problem settings.15 Metaheuristic algorithms represent advanced optimization techniques that simulate certain biological or physical phenomena found in nature. These algorithms can generally be categorized into physics-based, swarm intelligence-based, and evolution-inspired. Physics-based metaheuristic algorithms, such as the sine cosine algorithm (SCA),16 RUNge Kutta optimizer (RUN),17 weighted mean of vectors (INFO),18 simulated annealing (SA),19 gravitational search algorithm (GSA),20 and rime optimization algorithm (RIME),21 draw inspiration from different natural entities. SCA is inspired by trigonometric functions like sine and cosine, simulating their properties for the search process. SA is inspired by material cooling from high temperatures to a stable state, involving the probabilistic selection of optimal solutions. GSA is inspired by Newton’s law of universal gravitation: individual fitness values are treated as masses in the gravitational formula, and an adaptive gravitational constant is introduced. RIME simulates the growth of rime-ice in nature, modeling both soft-rime and hard-rime and eventually incorporating a greedy selection strategy. 
Swarm intelligence-based metaheuristic algorithms have seen rapid development, featuring algorithms like particle swarm optimizer (PSO),22 water cycle algorithm (WCA),23 gray wolf optimizer (GWO),24 hunger games search (HGS),25 slime mold algorithm (SMA),26,27 Harris hawks optimizer (HHO),28 moth-flame optimization (MFO),29 liver cancer algorithm (LCA),30 parrot optimizer (PO),31 colony predation algorithm (CPA),32 among others. SMA draws inspiration from the foraging process of slime mold, including aspects like capturing, encircling, and approaching food. HHO mathematically models the soft besiege (SB) and hard besiege processes of Harris hawks. MFO involves the mathematical modeling of moths’ attraction to flames. In MFO, the number of flames is adjusted based on the iteration count, and flames are selected according to their fitness values. The author models moths’ spiraling flight behavior when they are close to flames. Evolution-inspired metaheuristic algorithms primarily include differential evolution (DE),33 genetic algorithm (GA),34 and biogeography-based optimization (BBO).35 DE operates through mutation, crossover, and selection operations, guiding individuals toward potentially better solutions. GA treats each individual as a chromosome, facilitating genetic operations among chromosomes to achieve search outcomes. BBO models migration and mutation in biogeography, relying on migration probability for population updates. These algorithms possess robust optimization capabilities and are expected to demonstrate superior performance in various applications such as fault identification,36 vehicle communication,37 text privacy,38 hemodialysis prediction,39 target tracking,40 economic emission,41,42 and intrusion detection.43

As scholars delve into the study of metaheuristic algorithms, numerous outstanding variants have emerged and been successfully applied in various domains.44,45,46,47 Ozsoydan et al.48 modified the mutation mechanism of elite wolves, proposing a new variant of GWO that effectively tackled multiple combinatorial problems and the 0–1 knapsack problem. Dhargupta et al.49 utilized spearman’s rank correlation coefficient to determine whether wolf packs engage in opposition learning, enhancing the convergence speed and capability of populations within GWO. Deng et al.50 divided the SMA into two populations dynamically, adjusting population sizes to balance the algorithm’s exploitation and exploration capabilities and successfully applying it to real-world engineering problems. Samantaray et al.51 combined SMA with PSO, successfully applying this hybrid approach to predict flood flow rates. Tan et al.52 combined the whale optimization algorithm (WOA) with the equilibrium optimizer, validating its performance on benchmark test sets. Wang et al.53 enhanced population diversity in WOA by reducing intra-population similarity, ultimately applying it to multi-threshold image segmentation tasks. Kumar et al.54 augmented the global search capability of HHO through opposition learning, successfully addressing multi-objective hydrothermal power generation scheduling problems. Tian et al.55 proposed a novel initialization method, incorporating elite opposition learning to improve HHO populations, ultimately applying it to engineering problems. Tiwari et al.56 addressed issues such as poor population diversity and inadequate exploration capabilities in DE. They improved DE by incorporating ideas from PSO to enhance its global search ability. Additionally, they changed the crossover rate of DE, proposing a new crossover rate, and introduced a new selection method to further promote DE’s convergence capabilities, successfully applied to engineering design optimization problems. 
Pham et al.57 proposed a strategy of opposition learning and roulette selection to improve the global optimization capability of SCA. The enhanced SCA maintains a balanced exploration and exploitation similar to the original SCA but with increased stability, ideal for challenging real-world optimization problems. Huang et al.58 combined various strategies, including Nelder-Mead simplex, opposition learning, and spiral strategy, to enhance beluga whale optimization (BWO). Combining different strategies at different algorithm stages improved BWO’s performance, successfully applied it to engineering design problems, and tested it on the CEC benchmark dataset. Gomes et al.59 proposed and compared a hybrid algorithm with GA. They applied metaheuristic algorithms to channel parameter estimation and successfully demonstrated GA’s advantages over the hybrid algorithm in channel parameter estimation. Gundogdu et al.60 successfully applied an improved GWO to photovoltaic systems. They improved GWO to escape local optima, enhancing performance in photovoltaic system applications. Yu et al.61 improved the teaching-learning-based optimization algorithm (TLBO) using reinforcement learning to enhance TLBO’s update phase, successfully applied to wind farm data problems. Moustafa et al.62 applied mantis search algorithm (MSA) to economic dispatch in combined heat and power systems, drawing inspiration from collective intelligence of mantises. Al-Areeq et al.63 utilized a hybrid two-population intelligence algorithm for flood hazard assessment. Tu et al.64 combined GWO and HHO into HGWO, improving collective search ability, convergence speed, and accuracy compared to GWO, applied to real-world engineering problems. Combining optimization algorithms is a crucial approach to improving metaheuristic algorithms. 
Silva et al.65 combined ant colony optimization (ACO) and GA, enhancing ACO’s convergence capability and mitigating the algorithm’s tendency to get stuck in local optima, applied to sustainable solution problems. Moreover, metaheuristic algorithms have found extensive applications in the domain of feature selection.

Peng et al.66 adopted hierarchical strategies to enhance HHO, conducting feature selection on both low and high-dimensional datasets. Yu et al.67 improved WOA using various strategies, including sine initialization and employed a kernel extreme learning machine as a classifier for feature selection. AbdelAty et al.68 utilized chaos theory to boost the convergence capability of the hunter-prey optimization algorithm, successfully applying it to feature selection. Al-Khatib et al.69 enhanced lemurs optimization by integrating local search strategies and opposition learning, evaluating feature selection performance on UCI datasets. Zaimoglu et al.70 employed different chaos learning methods to improve the herd optimization algorithm and conducted feature selection tests across multiple classifiers. Chhabra et al.71 improved bald eagle search by incorporating three distinct enhancement strategies at different stages of algorithm execution, successfully applying them to feature selection. Pan et al.72 improved the initialization strategy of GWO and enhanced GWO using differential and competition-guided strategies for feature selection in high-dimensional data. Askr et al.73 used various strategies to enhance the exploration and exploitation capabilities of the golden jackal optimization (GJO) algorithm. They proposed a binary form of GJO and tested it for feature selection on multiple high-dimensional datasets. Wang et al.74 made improvements to the transfer function, introducing a new function specifically targeting the deficiencies of the GWO in handling feature selection problems. They applied different enhancement methods to elite and ordinary wolves within the population to enhance the balance between exploration and exploitation in GWO. Ye et al.75 enhanced the optimization capability of the hybrid breeding optimization using the elite opposition mechanism and Levy flight strategy. 
They combined different classifiers for intrusion detection feature selection problems. Yang et al.76 focused on feature space, dividing it into regions and proposing a new initialization strategy. They successfully applied the golden eagle optimizer to solve feature selection problems in medium to small-dimensional spaces. Chakraborty et al.77 improved the WOA using a horizontal crossing strategy and collaborative hunting. They introduced a binary version of WOA and combined it with the K-nearest neighbor for feature selection on UCI datasets. Abdelrazek et al.78 incorporated different chaotic mappings into the dwarf mongoose optimization algorithm (DMO), enabling DMO to better adapt to wrapper-based feature selection methods. The improved algorithm was validated on various UCI datasets, demonstrating competitive performance compared to other metaheuristic algorithms. Mostafa et al.79 used spider wasp optimization to enhance DE, improving DE’s problem-solving capabilities and incorporating methods to enhance solution quality, specifically applied to feature selection. As per the no free lunch (NFL)80 theorem, no single algorithm can address all feature selection tasks, especially in complex optimization environments, where RIME tends to get stuck in local optima and encounter slow convergence issues. Hence, this paper develops a variant of RIME to enhance its performance in complex optimization environments and with intricate datasets. RIME, a new algorithm proposed by Su in 2023,21 has seen limited use in feature selection studies.

Research and application of RIME is underway. Yu et al.81 combined the triangular game search strategy and random follower search strategy to improve RIME, enhancing its global search capability and inter-population information exchange capabilities. The enhanced RIME was then applied in the diagnostic process of pulmonary hypertension. Yang et al.82 applied the improved RIME in photovoltaic systems to maintain temperature stability. Zhong et al.83 improved RIME by utilizing Latin hypercube sampling and distance-based selection mechanisms and enhanced the hard-rime process, ultimately applying the improved RIME to engineering design problems. Zhu et al.84 improved RIME using the Gaussian diffusion and interactive mechanism strategy, which effectively solved multi-threshold image segmentation problems. Li et al.85 also applied the improved RIME in multi-threshold image segmentation.

In this paper, to further enhance the capability of RIME in feature selection applications, we introduce SB together with a composite mutation strategy and restart strategy (CMS-RS) into RIME and name the resulting algorithm IRIME. SB expands the search space of RIME, increases population diversity in the early stage, and effectively prevents the entrapment in local optima caused by greedy strategies. Additionally, CMS encourages more in-depth exploitation around the current position, enhancing RIME’s exploitation ability to some extent. RS monitors whether RIME has fallen into a local optimum and restarts the search when it has. The combination of these mechanisms involves adaptive parameters and, unlike classical PSO and DE, does not rely on a single update rule but instead draws on multiple optimization methods. In sum, the combination of these approaches balances the exploration and exploitation abilities of RIME. To validate IRIME’s performance, this study conducted tests on the IEEE CEC 2017 benchmark functions and compared IRIME with other advanced algorithms, demonstrating significant advantages for IRIME. In addition, IRIME’s performance on engineering design problems reflects its ability to solve practical problems. Finally, IRIME was applied to feature selection on low-dimensional and high-dimensional datasets. In summary, this paper’s primary contributions are as follows.

- Proposed a variant of RIME named IRIME.
- This paper effectively enhances the population diversity of RIME by using SB, expands the search space, and enhances the exploratory ability.
- This paper integrates CMS-RS to improve the exploitation capacity of RIME and explores new solutions when stuck in a local optimum.
- IRIME has demonstrated excellent performance in IEEE CEC 2017 benchmark functions and demonstrated the ability to solve practical problems in engineering design.
- The paper proposes a binary version of IRIME applied to feature selection problems, which respectively achieves good results on high- and low-dimensional datasets.

Results and discussion

Experimental design and analysis of results

A series of systematic experiments were conducted in this study to validate the efficacy of the RIME variant. The IEEE CEC 2017 benchmark functions were utilized,86 comprising functions categorized into four types: simple unimodal (F1-F3), simple multimodal (F4-F10), hybrid (F11-F20), and composite functions (F21-F30),87 as shown in Table 1. The IEEE CEC 2017 benchmark functions are a set of standard functions used during the 2017 IEEE Congress on Evolutionary Computation for evaluating the performance of evolutionary algorithms and other optimization algorithms.88,89 These functions are designed to test different optimization problem settings and have undergone extensive research and validation to ensure that they pose a certain level of complexity and diversity, effectively evaluating the performance of optimization algorithms. The experiments involved historical trajectory analysis, balance and diversity analyses of IRIME, stability analysis, and ablation studies. A comparison was made against 13 conventional algorithms and 11 advanced algorithms. In addition, IRIME was applied to 4 practical engineering problems to verify its ability to solve engineering problems. Finally, it was applied to 12 low-dimensional and 24 high-dimensional datasets to validate its performance in feature selection. To assess the statistical significance of the experimental results, non-parametric statistical tests such as the Wilcoxon signed-rank test90 were employed, with a significance level set at 0.05. Additionally, average (AVG) and standard deviation (STD) analyses were used, and ranking was conducted using the Friedman test.91 In testing on the IEEE CEC 2017 benchmark functions, all experiments followed the settings of previous research to minimize bias as much as possible.

Table 1.

IEEE CEC 2017 benchmark functions (Search Range: $[-100, 100]^D$)

| Class | No. | Functions | Optimum |
|---|---|---|---|
| Unimodal | F1 | Shifted and Rotated Bent Cigar Function | 100 |
| Unimodal | F2 | Shifted and Rotated Bent Sum of Different Power Function | 200 |
| Unimodal | F3 | Shifted Rotated Zakharov Function | 300 |
| Multimodal | F4 | Shifted and Rotated Rosenbrock’s Function | 400 |
| Multimodal | F5 | Shifted and Rotated Rastrigin’s Function | 500 |
| Multimodal | F6 | Shifted and Rotated Expanded Scaffer’s F6 Function | 600 |
| Multimodal | F7 | Shifted and Rotated Lunacek Bi_Rastrigin’s Function | 700 |
| Multimodal | F8 | Shifted and Rotated Non-Continuous Rastrigin’s Function | 800 |
| Multimodal | F9 | Shifted and Rotated Levy Function | 900 |
| Multimodal | F10 | Shifted and Rotated Schwefel’s Function | 1000 |
| Hybrid | F11 | Hybrid Function 1 (N = 3) | 1100 |
| Hybrid | F12 | Hybrid Function 2 (N = 3) | 1200 |
| Hybrid | F13 | Hybrid Function 3 (N = 3) | 1300 |
| Hybrid | F14 | Hybrid Function 4 (N = 4) | 1400 |
| Hybrid | F15 | Hybrid Function 5 (N = 4) | 1500 |
| Hybrid | F16 | Hybrid Function 6 (N = 4) | 1600 |
| Hybrid | F17 | Hybrid Function 6 (N = 5) | 1700 |
| Hybrid | F18 | Hybrid Function 6 (N = 5) | 1800 |
| Hybrid | F19 | Hybrid Function 6 (N = 5) | 1900 |
| Hybrid | F20 | Hybrid Function 6 (N = 6) | 2000 |
| Composition | F21 | Composition Function 1 (N = 3) | 2100 |
| Composition | F22 | Composition Function 2 (N = 3) | 2200 |
| Composition | F23 | Composition Function 3 (N = 4) | 2300 |
| Composition | F24 | Composition Function 4 (N = 4) | 2400 |
| Composition | F25 | Composition Function 5 (N = 5) | 2500 |
| Composition | F26 | Composition Function 6 (N = 5) | 2600 |
| Composition | F27 | Composition Function 7 (N = 6) | 2700 |
| Composition | F28 | Composition Function 8 (N = 6) | 2800 |
| Composition | F29 | Composition Function 9 (N = 3) | 2900 |
| Composition | F30 | Composition Function 10 (N = 3) | 3000 |
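As an illustration of how the "Optimum" column arises, the sketch below evaluates a shifted Bent Cigar function in the style of F1. It is a simplification under stated assumptions: the rotation matrix of the official benchmark is omitted (treated as the identity), and the shift vector used here is hypothetical rather than the official benchmark data. Only the base function and the additive bias of 100 follow the benchmark definition.

```python
def bent_cigar(z):
    """Base Bent Cigar function: z_1^2 + 10^6 * sum of z_i^2 for i >= 2."""
    return z[0] ** 2 + 1e6 * sum(value * value for value in z[1:])


def shifted_bent_cigar(x, shift, bias=100.0):
    """F1-style evaluation without rotation (assumption: identity rotation).

    `shift` is a hypothetical optimum location; `bias` is the value listed
    in the Optimum column of Table 1 for F1.
    """
    z = [xi - oi for xi, oi in zip(x, shift)]
    return bent_cigar(z) + bias


if __name__ == "__main__":
    shift = [10.0, -20.0, 30.0]               # hypothetical shift vector
    print(shifted_bent_cigar(shift, shift))   # value at the shifted optimum
    print(shifted_bent_cigar([0.0, 0.0, 0.0], shift))
```

Evaluating at the shift vector returns the bias itself, which is why an algorithm that solves F1 exactly reports a fitness of 100 rather than 0.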

The experiments in this study were conducted using MATLAB R2020a on a system running the Windows 11 operating system, powered by an Intel(R) Core(TM) i5-12400 12th generation processor clocked at 2.50 GHz. The relevant parameters for the algorithms tested alongside IRIME are listed in Table 2.

Table 2.

The parameters of the algorithm involved

| Algorithm | Parameters |

|---|---|

| RIME | , |

| SCA | , , |

| WOA | , , |

| DE | , |

| SSA | , , |

| PSO | , , |

| MFO | , , |

| GWO | , , |

| BA | , |

| CS | , |

| FA | , |

| CPA | , |

| HHO | , |

| EBOwithCMAR | |

| LSHADE_cnEpSi | , |

| ALCPSO | , , , |

| CLPSO | |

| LSHADE | , |

| SADE | |

| JADE | , , |

| RCBA | , |

| EPSO | , |

| CBA | , |

| LWOA | , |

Qualitative analysis of IRIME

Analysis of historical search trajectories

In Figure 1, F1 represents a unimodal function. Observing Figures 1B and 1D, it is apparent that IRIME converges to smaller fitness values. Moreover, the sudden increase in the average fitness of all agents in later iterations is due to the CMS-RS initiating a restart upon identifying local optima, thereby exploring new solutions and enhancing the possibility of discovering potential solutions. In Figure 1A, the red dots indicate the positions where the best solution has been found so far, while the black dots represent the trajectory points during the search. Initially, individuals are randomly distributed in the solution space, but with IRIME iterations, they gradually approach the peak of the unimodal function. In Figure 1C, the one-dimensional trajectory also shows that IRIME has a broader search space in both the initial and final stages than RIME. In the middle stage of IRIME, there is a bias toward exploitation: initially influenced by SB and later influenced by RS, with CMS contributing more to exploitation in the middle stage. For functions F4-F10, representing simple multimodal functions, Figure 1A shows that IRIME’s individuals are distributed across each peak at the onset. Gradually, IRIME discovers better peaks and exploits them. In Figures 1B and 1D, IRIME consistently achieves better fitness values than RIME. When IRIME gets stuck in local optima, the CMS-RS opens up new spaces. Figure 1C also indicates that IRIME initially explores multiple peaks and gradually converges to a better peak, which is evident in the selected region, which shows better fitness values than RIME. Similarly, in composite functions F21 and F22, IRIME initially exhibits a larger search space than RIME and converges to better fitness values later, discovering regions where RIME fails to reach.

Figure 1.

The history trajectory analysis for IRIME

(A) The search trajectory of IRIME, (B) Average fitness of IRIME, (C) One-dimensional trajectory of IRIME, (D) convergence curves for IRIME (red) and RIME (blue).

Balance and diversity analysis

This section utilizes the IEEE CEC 2017 benchmark functions to evaluate the balance and diversity of IRIME and RIME. As depicted in Figures 2 and 3, the blue line represents the algorithm in an exploitation phase, the red line illustrates the algorithm in an exploration phase, and the green line indicates an increasing trend when the exploration outweighs the exploitation or a decreasing trend otherwise. As shown in the graphs for unimodal functions F2 and F3, the variant of RIME proposed in this paper, IRIME, tends to explore more extensively in the early stages. This appropriate increase in the exploration phase expedites the algorithm’s convergence and mitigates the susceptibility to local optima. In contrast, RIME spends less time exploring on F2 and F3, with an exploration share of only 1.6321% on F2. This results in slow convergence of the algorithm on unimodal functions. However, the integration of SB effectively enhances IRIME’s exploratory capability, accelerating convergence. As observed in the diversity graph, IRIME’s individuals are initially distributed across a broader space due to SB’s influence, leading to higher diversity than RIME. During the mid-phase, extensive exploitation occurs, and a sudden rise in diversity toward the end is attributed to CMS-RS’s role, which detects the algorithm’s local entrapment and enhances IRIME’s precision. In the case of simple multimodal functions F8 and F10, RIME exhibits minimal exploration, especially on F10. This contrasts starkly with IRIME, whose exploration share exceeds 20%, while RIME’s is only around 2%. Consequently, RIME is highly prone to local optima, only exploiting around specific peaks and failing to explore potentially more fruitful regions. 
The diversity curve further demonstrates that IRIME possesses greater initial population diversity and engages in substantial exploitation in the mid-phase, and after exploitation stagnates, IRIME attempts to break out of local optima to find better solutions. For hybrid functions F13, F15, and F20, RIME spends only about 1% of the search exploring, whereas IRIME explores more extensively. Additionally, the population’s diversity increases. CMS-RS also plays a role in discovering better solutions in the later phase. A similar pattern emerges for functions F21, F23, and F29, where SB and CMS-RS balance the exploration and exploitation capabilities of the original RIME, increasing population diversity and enhancing convergence accuracy. This also empowers IRIME to escape local optima. In conclusion, the combination of SB and CMS-RS equips IRIME with superior balance and diversity, allowing it to escape local optima more effectively.
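The exploration and exploitation percentages discussed above can be illustrated with a dimension-wise diversity measure that is commonly used for this kind of balance analysis. The text does not state the exact formula behind Figures 2 and 3, so the following sketch is an assumption: diversity is the mean absolute deviation from the per-dimension median, exploration is the ratio of current to maximum diversity, and exploitation is its complement. Function names are hypothetical.

```python
from statistics import median


def population_diversity(population):
    """Mean absolute deviation from the per-dimension median, averaged over dimensions."""
    n = len(population)
    dims = len(population[0])
    total = 0.0
    for j in range(dims):
        column = [individual[j] for individual in population]
        centre = median(column)
        total += sum(abs(centre - value) for value in column) / n
    return total / dims


def balance_percentages(diversity_history):
    """Return (exploration %, exploitation %) for each recorded iteration."""
    div_max = max(diversity_history)
    if div_max == 0:
        # A fully collapsed population is treated as pure exploitation.
        return [(0.0, 100.0) for _ in diversity_history]
    return [
        (100.0 * d / div_max, 100.0 * abs(d - div_max) / div_max)
        for d in diversity_history
    ]


if __name__ == "__main__":
    history = [4.0, 2.0, 1.0]  # hypothetical diversity values over three iterations
    for exploration, exploitation in balance_percentages(history):
        print(f"exploration {exploration:.1f}%  exploitation {exploitation:.1f}%")
```

Under this measure, a late rise in diversity, such as the one attributed to CMS-RS, shows up directly as a rise in the exploration percentage.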

Figure 2.

Balance analysis for IRIME and RIME

Figure 3.

Algorithm diversity analysis for IRIME and RIME

Parameter sensitivity experiment

The selection of parameters critically influences an algorithm’s performance; therefore, conducting a parameter sensitivity analysis is essential.92 This analysis evaluates how different parameter values affect the performance of the algorithm, thereby optimizing algorithm efficiency and ensuring robustness under various conditions.93,94 In this paper, most parameters used in IRIME are supported by theoretical or empirical justifications from the original papers. The only parameter open to question is the threshold at which the restart strategy in CMS-RS begins to execute. As mentioned earlier, the threshold is set at 50. To verify the appropriateness of this threshold, this paper conducted experiments at threshold values of 30, 50, and 100, represented as IRIME30, IRIME50, and IRIME100, respectively.
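The role of the restart threshold can be sketched with a simple stagnation counter. This is not the paper's CMS-RS implementation: the class name, the use of "greater than or equal to" for the trigger, and the uniform random re-initialization are all assumptions made for illustration. Only the default threshold of 50 comes from the text.

```python
import random


class StagnationMonitor:
    """Counts consecutive non-improving updates (hypothetical helper)."""

    def __init__(self, threshold=50):
        self.threshold = threshold      # 30, 50, or 100 in the sensitivity study
        self.stall = 0
        self.best = float("inf")

    def update(self, fitness):
        """Record a fitness value; return True when a restart should fire."""
        if fitness < self.best:
            self.best = fitness
            self.stall = 0
            return False
        self.stall += 1
        if self.stall >= self.threshold:  # assumption: trigger at >= threshold
            self.stall = 0
            return True
        return False


def random_restart(lower, upper, rng=random):
    """Assumed restart rule: uniform re-sampling inside the search bounds."""
    return [rng.uniform(lo, hi) for lo, hi in zip(lower, upper)]


if __name__ == "__main__":
    monitor = StagnationMonitor(threshold=3)
    for value in [5.0, 5.0, 6.0, 5.0]:
        if monitor.update(value):
            print(random_restart([-100.0] * 2, [100.0] * 2, random.Random(0)))
```

A smaller threshold restarts more eagerly and may interrupt useful exploitation, while a larger one tolerates longer stagnation; the comparison of IRIME30, IRIME50, and IRIME100 probes exactly this trade-off.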

The experimental results are shown in Table 3. The symbols “+/=/-” indicate the number of functions on which IRIME performs significantly better than, equal to, or worse than the compared algorithm according to the Wilcoxon signed-rank test. From the table, it can be seen that the choice of threshold affects the overall performance of IRIME. However, the differences are not pronounced for most functions. As per the results, the performance difference between IRIME30 and IRIME50 is insignificant in 19 functions, while between IRIME100 and IRIME50, the difference is insignificant in 18 functions. On the whole, the overall performance of the IRIME algorithm is better when the threshold is set at 50. As shown in the table, IRIME50 has the smallest average ranking, at 1.7.

Table 3.

Comparison results between IRIME and IRIMEs

| Algorithm | Rank | +/=/- | Avg |
|---|---|---|---|
| IRIME50 | 1 | ∼ | 1.7 |
| IRIME30 | 3 | 10/19/1 | 2.4 |
| IRIME100 | 2 | 7/18/5 | 1.866667 |
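The “+/=/-” column can be illustrated with a small pure-Python sketch of the two-sided Wilcoxon signed-rank test, using the normal approximation with a tie correction, followed by a tally at the 0.05 significance level. Several points are assumptions: the paper does not say which implementation it used, the direction of a significant difference is decided here by comparing means (lower is better), and the sample values in the usage part are hypothetical.

```python
import math


def wilcoxon_signed_rank(a, b):
    """Return (W+, W-, two-sided p) using the normal approximation.

    Zero differences are dropped; tied absolute differences get average ranks.
    """
    diffs = [x - y for x, y in zip(a, b) if x != y]
    n = len(diffs)
    if n == 0:
        return 0.0, 0.0, 1.0
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    tie_term = 0.0
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1   # average rank of the tie group
        size = j - i + 1
        tie_term += size ** 3 - size
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    mean = n * (n + 1) / 4
    variance = n * (n + 1) * (2 * n + 1) / 24 - tie_term / 48
    if variance <= 0:
        return w_plus, w_minus, 1.0
    z = (min(w_plus, w_minus) - mean) / math.sqrt(variance)
    return w_plus, w_minus, min(1.0, math.erfc(abs(z) / math.sqrt(2)))


def compare(reference_runs, other_runs, alpha=0.05):
    """'+' if the reference is significantly better (lower), '-' if worse, '=' otherwise."""
    _, _, p_value = wilcoxon_signed_rank(reference_runs, other_runs)
    if p_value >= alpha:
        return "="
    ref_mean = sum(reference_runs) / len(reference_runs)
    other_mean = sum(other_runs) / len(other_runs)
    return "+" if ref_mean < other_mean else "-"


def tally(symbols):
    """Format per-function symbols as a '+/=/-' count string."""
    return f"{symbols.count('+')}/{symbols.count('=')}/{symbols.count('-')}"


if __name__ == "__main__":
    ref = [float(i) for i in range(1, 11)]        # hypothetical final errors
    other = [x + 0.5 + 0.1 * i for i, x in enumerate(ref)]
    print(tally([compare(ref, other), compare(ref, ref), compare(other, ref)]))
```

Applying `compare` once per benchmark function and passing the 30 resulting symbols to `tally` yields a string in the same form as the entries of Table 3.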

The influence of SB and CMS-RS

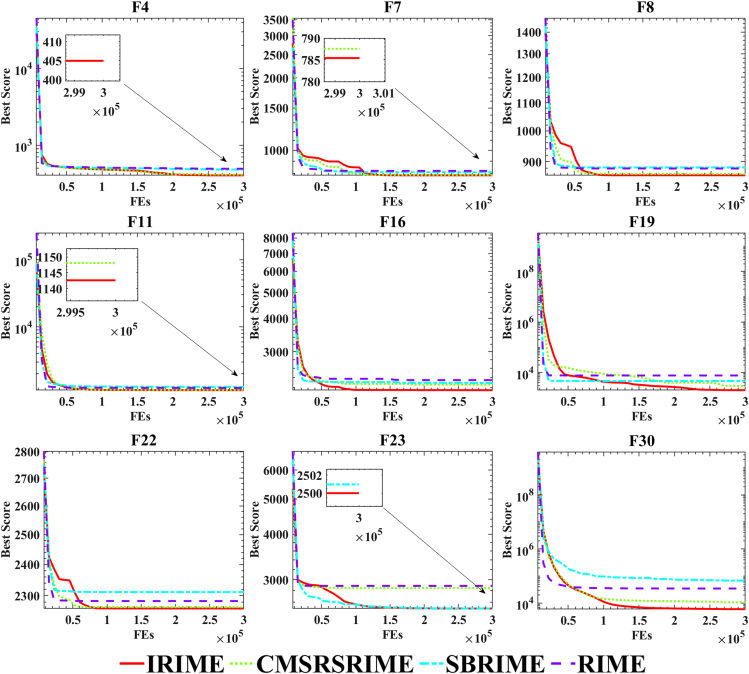

In this section, we examine the specific impact of SB and CMS-RS on RIME using the IEEE CEC 2017 benchmark functions. In this experiment, the population size was set to 30, problem dimensionality was set to 30, and the maximum iteration count was 300,000. To eliminate potential incidental influences, each algorithm was run independently 30 times. SBRIME represents RIME integrated with SB, while CMSRSRIME denotes RIME integrated with CMS-RS. The symbols “+/=/-” indicate the number of functions on which IRIME performs significantly better than, equal to, or worse than the compared algorithm according to the Wilcoxon signed-rank test.

Table 4 shows that the average ranking of IRIME is 1.433333, securing the top position. This indicates that combining these two methods improves RIME in various aspects. Compared to the original RIME, IRIME performs significantly better on 28 out of 30 benchmark functions, while on the remaining two functions the results are statistically equivalent. This suggests that the incorporation of SB and CMS-RS has not adversely affected RIME. We conducted a Friedman ranking, as depicted in Figure 4. From the Friedman ranking, it is evident that IRIME ranks first, and the algorithm’s performance improves with each additional mechanism integrated.

Table 4.

Comparison results between IRIME and RIMEs

| Algorithm | Rank | +/=/- | Avg |
|---|---|---|---|
| IRIME | 1 | ∼ | 1.433333 |
| SBRIME | 3 | 23/4/3 | 3.133333 |
| CMSRSRIME | 2 | 9/18/3 | 2.066667 |
| RIME | 4 | 28/2/0 | 3.366667 |

Figure 4.

The Friedman ranking of IRIME and RIMEs
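The average rank reported in the "Avg" column can be illustrated as follows: on each function the algorithms are ranked by their mean result (lower is better, ties share the average rank), and the ranks are then averaged over functions. The sketch below applies this to the AVG values of F7, F8, and F9 from Table 5. Ranking by AVG is an assumption about the procedure, and because only three of the 30 functions are used, the resulting values do not reproduce the averages in Table 4.

```python
def average_ranks(results):
    """results: {function: {algorithm: mean value}} -> {algorithm: mean rank}."""
    totals = {}
    for scores in results.values():
        ordered = sorted(scores.items(), key=lambda item: item[1])
        i = 0
        while i < len(ordered):
            j = i
            while j + 1 < len(ordered) and ordered[j + 1][1] == ordered[i][1]:
                j += 1
            rank = (i + j) / 2 + 1          # tied entries share the average rank
            for name, _ in ordered[i:j + 1]:
                totals[name] = totals.get(name, 0.0) + rank
            i = j + 1
    return {name: total / len(results) for name, total in totals.items()}


# AVG values for F7-F9 taken from Table 5.
TABLE5_SUBSET = {
    "F7": {"IRIME": 7.854226e02, "CMSRSRIME": 7.875552e02,
           "SBRIME": 8.092856e02, "RIME": 8.196870e02},
    "F8": {"IRIME": 8.592915e02, "CMSRSRIME": 8.632096e02,
           "SBRIME": 8.825459e02, "RIME": 8.795674e02},
    "F9": {"IRIME": 9.000000e02, "CMSRSRIME": 9.031533e02,
           "SBRIME": 1.832479e03, "RIME": 1.300574e03},
}

if __name__ == "__main__":
    for name, rank in sorted(average_ranks(TABLE5_SUBSET).items(), key=lambda kv: kv[1]):
        print(f"{name}: {rank:.4f}")
```

On this three-function subset IRIME ranks first on every function, CMSRSRIME second, and SBRIME and RIME swap places between F7 and F8/F9, so RIME's average rank comes out slightly better than SBRIME's here, unlike the full 30-function ordering in Table 4.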

Table 5 presents specific comparative data of the algorithms, with bold text highlighting the best results obtained among all algorithms. It also includes convergence graphs, as depicted in Figure 5. The graph and the table show that solely incorporating SB or CMS-RS does not lead the algorithm to perform optimally. Solely adding SB enhances population diversity, boosting RIME’s exploratory capability. This improvement is evident in RIME’s performance on composite functions like F22 and F23. However, it does not manifest advantages in unimodal functions like F3 or multimodal functions like F8. Solely incorporating CMS-RS strengthens the algorithm’s performance on unimodal functions such as F1, multimodal functions like F4 and F5, and hybrid functions like F11 and F12. However, CMS-RS does not demonstrate significant effects on composite functions, mainly because it effectively improves RIME’s convergence capability, meeting the requirements for local exploitation and, to some extent, providing the ability to escape local optima. Yet, it offers less in terms of population diversity and weaker exploratory capabilities. Only through the comprehensive integration of SB and CMS-RS can IRIME achieve the top-ranking position. Additionally, Table 6 provides the algorithm’s p-values. From a statistical perspective, IRIME holds a dominant position across most functions.

Table 5.

Comparison of IRIME with RIMEs

| Algorithm | F1 AVG | F1 STD | F2 AVG | F2 STD | F3 AVG | F3 STD |
|---|---|---|---|---|---|---|
| IRIME | 1.000000E+02 | 4.341879E-07 | 1.208397E+04 | 1.692978E+04 | 3.000675E+02 | 6.544860E-02 |
| CMSRSRIME | 1.000000E+02 | 6.787999E-07 | 9.608267E+03 | 2.901084E+04 | 3.000137E+02 | 1.440701E-02 |
| SBRIME | 6.912754E+03 | 3.342332E+03 | 1.903663E+04 | 3.287184E+04 | 3.019264E+02 | 7.738878E-01 |
| RIME | 6.740600E+03 | 4.683236E+03 | 1.725470E+04 | 4.617363E+04 | 3.016142E+02 | 7.393312E-01 |

| Algorithm | F4 AVG | F4 STD | F5 AVG | F5 STD | F6 AVG | F6 STD |

|---|---|---|---|---|---|---|

| IRIME | 4.049188E+02 | 1.660907E+01 | 5.612429E+02 | 1.547367E+01 | 6.000036E+02 | 1.901825E-03 |

| CMSRSRIME | 4.119034E+02 | 2.428008E+01 | 5.592430E+02 | 1.695952E+01 | 6.000025E+02 | 1.635151E-03 |

| SBRIME | 4.822844E+02 | 2.432146E+01 | 5.940733E+02 | 2.538615E+01 | 6.008424E+02 | 7.132176E-01 |

| RIME | 4.934413E+02 | 3.982441E+01 | 5.792156E+02 | 2.035945E+01 | 6.003355E+02 | 3.274886E-01 |

| Algorithm | F7 AVG | F7 STD | F8 AVG | F8 STD | F9 AVG | F9 STD |

|---|---|---|---|---|---|---|

| IRIME | 7.854226E+02 | 1.459656E+01 | 8.592915E+02 | 1.276500E+01 | 9.000000E+02 | 1.360593E-11 |

| CMSRSRIME | 7.875552E+02 | 1.590540E+01 | 8.632096E+02 | 1.265242E+01 | 9.031533E+02 | 9.154477E+00 |

| SBRIME | 8.092856E+02 | 2.294600E+01 | 8.825459E+02 | 2.181813E+01 | 1.832479E+03 | 8.498307E+02 |

| RIME | 8.196870E+02 | 2.584547E+01 | 8.795674E+02 | 2.032560E+01 | 1.300574E+03 | 5.075069E+02 |

| Algorithm | F10 AVG | F10 STD | F11 AVG | F11 STD | F12 AVG | F12 STD |

|---|---|---|---|---|---|---|

| IRIME | 3.370405E+03 | 6.680517E+02 | 1.142597E+03 | 2.458383E+01 | 3.778128E+03 | 1.308558E+03 |

| CMSRSRIME | 3.527053E+03 | 4.907452E+02 | 1.148122E+03 | 2.141947E+01 | 3.759469E+03 | 1.719976E+03 |

| SBRIME | 3.618576E+03 | 5.236817E+02 | 1.276824E+03 | 7.583414E+01 | 6.747955E+06 | 3.731713E+06 |

| RIME | 3.402741E+03 | 6.191174E+02 | 1.231434E+03 | 6.460259E+01 | 4.432634E+06 | 2.177431E+06 |

| Algorithm | F13 AVG | F13 STD | F14 AVG | F14 STD | F15 AVG | F15 STD |

|---|---|---|---|---|---|---|

| IRIME | 1.334285E+03 | 8.006018E+00 | 1.444883E+03 | 1.161457E+01 | 1.559461E+03 | 2.867685E+01 |

| CMSRSRIME | 1.338540E+03 | 9.647202E+00 | 1.449102E+03 | 1.254535E+01 | 1.569876E+03 | 3.745820E+01 |

| SBRIME | 4.021342E+03 | 3.087106E+03 | 1.564542E+03 | 8.300780E+01 | 4.034866E+03 | 3.706339E+03 |

| RIME | 4.693434E+03 | 2.467541E+03 | 1.504226E+03 | 3.706804E+01 | 6.473199E+03 | 4.852897E+03 |

| Algorithm | F16 AVG | F16 STD | F17 AVG | F17 STD | F18 AVG | F18 STD |

|---|---|---|---|---|---|---|

| IRIME | 2.153008E+03 | 2.439803E+02 | 1.937497E+03 | 8.960966E+01 | 1.363427E+04 | 8.005798E+03 |

| CMSRSRIME | 2.256912E+03 | 2.333114E+02 | 1.999397E+03 | 1.131100E+02 | 1.223189E+04 | 1.042454E+04 |

| SBRIME | 2.290189E+03 | 2.808756E+02 | 2.041873E+03 | 8.707292E+01 | 7.422992E+04 | 5.051162E+04 |

| RIME | 2.348066E+03 | 2.163382E+02 | 2.026551E+03 | 1.694096E+02 | 1.018748E+05 | 7.327155E+04 |

| Algorithm | F19 AVG | F19 STD | F20 AVG | F20 STD | F21 AVG | F21 STD |

|---|---|---|---|---|---|---|

| IRIME | 2.014868E+03 | 2.572843E+02 | 2.189910E+03 | 8.610377E+01 | 2.134962E+03 | 3.507469E+01 |

| CMSRSRIME | 2.925334E+03 | 3.347182E+03 | 2.181714E+03 | 9.010563E+01 | 2.125485E+03 | 3.190956E+01 |

| SBRIME | 4.612865E+03 | 4.464702E+03 | 2.361028E+03 | 1.289487E+02 | 2.207251E+03 | 3.255049E+01 |

| RIME | 7.662323E+03 | 6.550908E+03 | 2.290457E+03 | 1.005639E+02 | 2.214770E+03 | 3.275008E+01 |

| Algorithm | F22 AVG | F22 STD | F23 AVG | F23 STD | F24 AVG | F24 STD |

|---|---|---|---|---|---|---|

| IRIME | 2.259745E+03 | 1.569471E+01 | 2.500000E+03 | 8.301258E-06 | 2.600000E+03 | 1.888236E-13 |

| CMSRSRIME | 2.263672E+03 | 1.606741E+01 | 2.845609E+03 | 1.510916E+01 | 3.394899E+03 | 1.332673E+01 |

| SBRIME | 2.311125E+03 | 3.239709E+01 | 2.500972E+03 | 8.935810E-01 | 2.600053E+03 | 5.355676E-02 |

| RIME | 2.282943E+03 | 2.054662E+01 | 2.884192E+03 | 3.074977E+01 | 3.360063E+03 | 1.826656E+02 |

| Algorithm | F25 AVG | F25 STD | F26 AVG | F26 STD | F27 AVG | F27 STD |

|---|---|---|---|---|---|---|

| IRIME | 2.707120E+03 | 3.899517E+01 | 2.800000E+03 | 7.208242E-10 | 2.920793E+03 | 1.138842E+02 |

| CMSRSRIME | 2.918437E+03 | 2.484449E+01 | 5.090513E+03 | 1.921404E+02 | 3.437174E+03 | 3.633907E+01 |

| SBRIME | 2.700552E+03 | 3.402318E-01 | 2.800529E+03 | 4.689096E-01 | 2.902733E+03 | 9.562687E-01 |

| RIME | 2.965889E+03 | 4.976697E+01 | 4.887692E+03 | 9.898190E+02 | 3.547105E+03 | 7.414412E+01 |

| Algorithm | F28 AVG | F28 STD | F29 AVG | F29 STD | F30 AVG | F30 STD |

|---|---|---|---|---|---|---|

| IRIME | 3.031860E+03 | 7.474622E+01 | 3.255024E+03 | 1.067390E+02 | 5.978167E+03 | 3.490858E+03 |

| CMSRSRIME | 4.714342E+03 | 8.194617E+02 | 3.418242E+03 | 1.152990E+02 | 1.069414E+04 | 4.913513E+03 |

| SBRIME | 3.000791E+03 | 6.112466E-01 | 3.109261E+03 | 4.379583E+00 | 6.810517E+04 | 8.243885E+04 |

| RIME | 3.350192E+03 | 3.439794E+02 | 3.545178E+03 | 1.500152E+02 | 3.456162E+04 | 2.103248E+04 |

Figure 5.

Convergence curves of IRIME and RIMEs at IEEE CEC 2017

Table 6.

p-values of the Wilcoxon signed-rank test between IRIME and other RIMEs

| Algorithm | F1 | F2 | F3 | F4 | F5 | F6 |

|---|---|---|---|---|---|---|

| CMSRSRIME | 2.9574621307E-03 | 1.0746884002E-01 | 3.4052567233E-05 | 4.7794743855E-01 | 4.7794743855E-01 | 6.0350064738E-03 |

| SBRIME | 1.7343976283E-06 | 4.1653380739E-01 | 1.7343976283E-06 | 1.9209211049E-06 | 2.1630223984E-05 | 1.7343976283E-06 |

| RIME | 1.7343976283E-06 | 3.4934556237E-01 | 1.7343976283E-06 | 1.7343976283E-06 | 3.3788544377E-03 | 1.7343976283E-06 |

| Algorithm | F7 | F8 | F9 | F10 | F11 | F12 |

|---|---|---|---|---|---|---|

| CMSRSRIME | 7.3432529144E-01 | 2.5364409755E-01 | 1.6367234818E-01 | 4.0483472216E-01 | 1.1092566513E-01 | 4.6528258188E-01 |

| SBRIME | 3.5888445045E-04 | 1.2505680433E-04 | 1.7343976283E-06 | 9.3675596532E-02 | 1.7343976283E-06 | 1.7343976283E-06 |

| RIME | 4.0715116266E-05 | 7.1570338462E-04 | 1.7343976283E-06 | 7.0356369987E-01 | 2.3534209951E-06 | 1.7343976283E-06 |

| Algorithm | F13 | F14 | F15 | F16 | F17 | F18 |

|---|---|---|---|---|---|---|

| CMSRSRIME | 1.2543823903E-01 | 4.7794743855E-01 | 2.8947707171E-01 | 6.8713630797E-02 | 8.7296677536E-03 | 3.3885615525E-01 |

| SBRIME | 1.7343976283E-06 | 1.9209211049E-06 | 1.7343976283E-06 | 5.4462503972E-02 | 2.2248266458E-04 | 1.7343976283E-06 |

| RIME | 1.7343976283E-06 | 2.1266360107E-06 | 1.7343976283E-06 | 2.1052603409E-03 | 2.8485956185E-02 | 1.7343976283E-06 |

| Algorithm | F19 | F20 | F21 | F22 | F23 | F24 |

|---|---|---|---|---|---|---|

| CMSRSRIME | 3.0861485053E-01 | 7.0356369987E-01 | 1.5885549929E-01 | 1.6502656562E-01 | 1.7343976283E-06 | 1.7343976283E-06 |

| SBRIME | 2.3534209951E-06 | 4.8602606067E-05 | 2.3534209951E-06 | 4.7292023374E-06 | 1.7343976283E-06 | 1.7343976283E-06 |

| RIME | 1.7343976283E-06 | 4.1955098606E-04 | 1.7343976283E-06 | 8.1877534396E-05 | 1.7343976283E-06 | 1.7343976283E-06 |

| Algorithm | F25 | F26 | F27 | F28 | F29 | F30 |

|---|---|---|---|---|---|---|

| CMSRSRIME | 1.9209211049E-06 | 1.7343976283E-06 | 1.9209211049E-06 | 1.7333066442E-06 | 1.9729484516E-05 | 1.6046383717E-04 |

| SBRIME | 3.1123151154E-05 | 1.7343976283E-06 | 3.1123151154E-05 | 5.7096495243E-02 | 4.7292023374E-06 | 3.1816794110E-06 |

| RIME | 1.7343976283E-06 | 1.7343976283E-06 | 1.9209211049E-06 | 1.7343976283E-06 | 1.9209211049E-06 | 1.9209211049E-06 |
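Many entries in Table 6 equal 1.7343976283E-06. A value of this size is what a two-sided Wilcoxon signed-rank test returns under the normal approximation (without continuity correction) when one method is better in every one of 30 paired runs. The sketch below illustrates this with synthetic run data. The run count of 30, the test variant, the helper name `wilcoxon_signed_rank`, and the sample values are all assumptions for illustration and are not taken from this section; the variance is also left uncorrected for ties to keep the example short.

```python
import math


def wilcoxon_signed_rank(x, y):
    """Two-sided Wilcoxon signed-rank test via the normal approximation.

    Returns (W+, p). Zero differences are dropped; tied |differences| share
    their average rank. No continuity or tie correction is applied.
    """
    diffs = [a - b for a, b in zip(x, y) if a != b]
    n = len(diffs)
    if n == 0:
        return 0.0, 1.0
    order = sorted(range(n), key=lambda k: abs(diffs[k]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        shared = (i + j) / 2 + 1  # average of 1-based ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    mean = n * (n + 1) / 4
    sd = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (w_plus - mean) / sd
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail of the standard normal
    return w_plus, p


# Synthetic final errors for 30 paired runs (hypothetical values, not paper data):
# the first method is better (lower) in every run.
irime = [100.0 + 0.001 * run for run in range(30)]
rime = [7000.0 + 10.0 * run for run in range(30)]

w_plus, p_value = wilcoxon_signed_rank(irime, rime)
print(f"W+ = {w_plus}, p = {p_value:.4e}")
```

With every difference negative, W+ is 0 and the p-value is approximately 1.73e-06, which suggests why this floor value recurs throughout the table.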

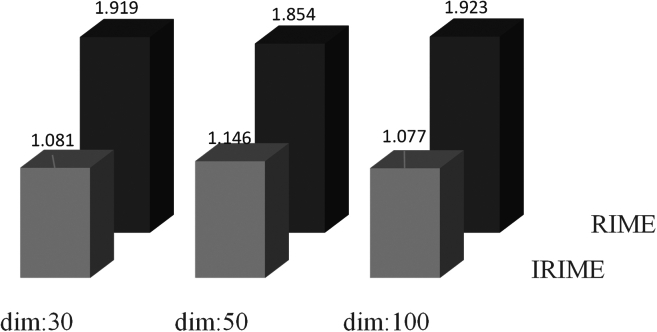

Stability testing of IRIME

Stability experiments were conducted in this section to validate the stability of IRIME. The parameter settings were largely the same as in the previous experiments, except that the problem dimension was varied (30, 50, and 100). The experimental data are shown in Table 7. The table shows that IRIME outperforms RIME on 30, 29, and 29 benchmark functions at 30, 50, and 100 dimensions, respectively, demonstrating significantly better stability than RIME. On functions such as F6 and F26 in particular, the value to which RIME converges grows as the dimension increases, whereas IRIME continues to converge to similarly low values. A Friedman ranking was also computed, as shown in Figure 6, demonstrating IRIME’s significant advantage as the dimensionality increases. In summary, IRIME remains competitive as the problem dimension changes, maintaining strong and stable performance.

Table 7.

Stability testing of IRIME and RIME

| Function | Metric | IRIME (D=30) | RIME (D=30) | IRIME (D=50) | RIME (D=50) | IRIME (D=100) | RIME (D=100) |
|---|---|---|---|---|---|---|---|
| F1 | AVG | 1.000000E+02 | 1.028739E+04 | 8.150018E+03 | 3.498054E+04 | 3.587010E+04 | 5.599304E+05 |
| F1 | STD | 6.624418E-07 | 1.822468E+04 | 1.360977E+04 | 1.030577E+04 | 2.748387E+04 | 1.181859E+05 |
| F2 | AVG | 6.779600E+03 | 3.152160E+04 | 3.707965E+13 | 8.353509E+13 | 9.181274E+56 | 1.820439E+80 |
| F2 | STD | 8.247370E+03 | 6.815905E+04 | 1.077243E+14 | 2.980378E+14 | 3.252605E+57 | 9.948538E+80 |
| F3 | AVG | 3.000804E+02 | 3.017968E+02 | 9.127787E+02 | 5.914656E+02 | 9.806014E+04 | 9.242816E+04 |
| F3 | STD | 8.807603E-02 | 7.545155E-01 | 3.786835E+02 | 1.216696E+02 | 1.448744E+04 | 1.933928E+04 |
| F4 | AVG | 4.161644E+02 | 4.904783E+02 | 4.920837E+02 | 5.228727E+02 | 6.357680E+02 | 6.980471E+02 |
| F4 | STD | 2.870048E+01 | 3.720042E+01 | 1.411291E+01 | 4.746566E+01 | 4.853969E+01 | 4.424859E+01 |
| F5 | AVG | 5.619552E+02 | 5.836654E+02 | 6.267921E+02 | 6.818772E+02 | 8.695937E+02 | 9.965034E+02 |
| F5 | STD | 1.790301E+01 | 1.722221E+01 | 2.854613E+01 | 3.959170E+01 | 6.564103E+01 | 8.890611E+01 |
| F6 | AVG | 6.000026E+02 | 6.002950E+02 | 6.000213E+02 | 6.027180E+02 | 6.002694E+02 | 6.182405E+02 |
| F6 | STD | 1.369855E-03 | 2.141152E-01 | 8.625810E-03 | 1.841028E+00 | 1.523005E-01 | 4.611132E+00 |
| F7 | AVG | 7.840374E+02 | 8.168247E+02 | 8.740425E+02 | 9.318452E+02 | 1.181254E+03 | 1.294812E+03 |
| F7 | STD | 1.300727E+01 | 2.777899E+01 | 1.874723E+01 | 4.792427E+01 | 6.421225E+01 | 9.746528E+01 |
| F8 | AVG | 8.621855E+02 | 8.839896E+02 | 9.318084E+02 | 9.770503E+02 | 1.184238E+03 | 1.324732E+03 |
| F8 | STD | 1.320405E+01 | 1.835873E+01 | 2.928632E+01 | 3.136398E+01 | 6.472031E+01 | 8.014492E+01 |
| F9 | AVG | 9.000181E+02 | 1.124300E+03 | 9.045256E+02 | 3.715535E+03 | 1.520706E+03 | 1.770827E+04 |
| F9 | STD | 8.398819E-02 | 2.651242E+02 | 8.449065E+00 | 1.840252E+03 | 7.826171E+02 | 7.679602E+03 |
| F10 | AVG | 3.403375E+03 | 3.318342E+03 | 6.472167E+03 | 6.607002E+03 | 1.483534E+04 | 1.553603E+04 |
| F10 | STD | 5.195037E+02 | 4.690800E+02 | 7.651949E+02 | 7.082011E+02 | 1.171622E+03 | 1.278887E+03 |
| F11 | AVG | 1.144627E+03 | 1.235619E+03 | 1.213375E+03 | 1.566197E+03 | 1.639799E+03 | 2.527533E+03 |
| F11 | STD | 1.801979E+01 | 7.120005E+01 | 2.596203E+01 | 1.123408E+02 | 1.378813E+02 | 2.185059E+02 |
| F12 | AVG | 3.259220E+03 | 3.185041E+06 | 6.215314E+03 | 8.247759E+06 | 2.146365E+04 | 9.556442E+07 |
| F12 | STD | 9.613443E+02 | 2.533263E+06 | 2.619565E+03 | 3.820874E+06 | 9.818197E+03 | 3.444275E+07 |
| F13 | AVG | 1.339929E+03 | 3.903406E+03 | 1.038753E+04 | 5.893687E+04 | 2.624556E+04 | 2.400604E+05 |
| F13 | STD | 1.078343E+01 | 3.101797E+03 | 7.408806E+03 | 4.089735E+04 | 1.241422E+04 | 8.196934E+04 |
| F14 | AVG | 1.443579E+03 | 1.497542E+03 | 1.466908E+03 | 1.651542E+03 | 1.532119E+03 | 1.599748E+04 |
| F14 | STD | 9.549785E+00 | 2.906395E+01 | 1.731724E+01 | 5.900106E+01 | 2.628161E+01 | 7.791170E+03 |
| F15 | AVG | 1.560320E+03 | 7.576501E+03 | 2.017887E+03 | 4.234476E+03 | 8.630684E+03 | 5.690617E+04 |
| F15 | STD | 3.352494E+01 | 6.640569E+03 | 7.957409E+02 | 2.180854E+03 | 9.731233E+03 | 2.010337E+04 |
| F16 | AVG | 2.189042E+03 | 2.341539E+03 | 2.641367E+03 | 2.967189E+03 | 5.169371E+03 | 5.974952E+03 |
| F16 | STD | 2.485710E+02 | 3.008552E+02 | 3.246147E+02 | 4.208589E+02 | 6.400325E+02 | 5.933374E+02 |
| F17 | AVG | 1.932272E+03 | 2.019690E+03 | 2.303726E+03 | 2.577466E+03 | 4.363901E+03 | 4.793590E+03 |
| F17 | STD | 1.219315E+02 | 1.098334E+02 | 2.243114E+02 | 2.717036E+02 | 4.906362E+02 | 5.352419E+02 |
| F18 | AVG | 1.321118E+04 | 1.282609E+05 | 1.076984E+05 | 6.718929E+05 | 8.873835E+05 | 2.215444E+06 |
| F18 | STD | 1.040334E+04 | 9.873021E+04 | 4.224163E+04 | 4.070633E+05 | 4.590897E+05 | 7.540629E+05 |
| F19 | AVG | 1.996303E+03 | 8.668593E+03 | 9.349882E+03 | 1.402825E+04 | 6.842005E+03 | 4.190986E+04 |
| F19 | STD | 2.888304E+02 | 7.195163E+03 | 1.038581E+04 | 1.488471E+04 | 3.688717E+03 | 2.169956E+04 |
| F20 | AVG | 2.171758E+03 | 2.261173E+03 | 2.579609E+03 | 2.792553E+03 | 4.184473E+03 | 4.741982E+03 |
| F20 | STD | 7.861606E+01 | 9.435565E+01 | 2.374063E+02 | 2.490740E+02 | 4.799121E+02 | 4.920016E+02 |
| F21 | AVG | 2.129702E+03 | 2.194063E+03 | 2.212182E+03 | 2.270509E+03 | 2.250000E+03 | 2.250002E+03 |
| F21 | STD | 3.233579E+01 | 2.859524E+01 | 3.470840E+01 | 3.920999E+01 | 1.705788E-06 | 1.661872E-04 |
| F22 | AVG | 2.261555E+03 | 2.278551E+03 | 2.339057E+03 | 2.389399E+03 | 2.350000E+03 | 2.350000E+03 |
| F22 | STD | 1.980115E+01 | 1.982225E+01 | 2.854209E+01 | 4.896348E+01 | 8.275093E-09 | 9.695178E-06 |
| F23 | AVG | 2.500000E+03 | 2.877338E+03 | 2.522419E+03 | 3.249900E+03 | 2.500380E+03 | 3.959631E+03 |
| F23 | STD | 9.799078E-06 | 2.785766E+01 | 1.227458E+02 | 5.579856E+01 | 3.389571E-01 | 8.897769E+01 |
| F24 | AVG | 2.600000E+03 | 3.299834E+03 | 2.600000E+03 | 3.829701E+03 | 2.600019E+03 | 5.362005E+03 |
| F24 | STD | 2.533334E-13 | 2.579968E+02 | 4.619013E-06 | 6.618639E+01 | 2.013730E-02 | 9.411192E+01 |
| F25 | AVG | 2.707120E+03 | 2.973604E+03 | 2.881642E+03 | 3.051111E+03 | 2.700225E+03 | 3.340697E+03 |
| F25 | STD | 3.899625E+01 | 5.501906E+01 | 1.531719E+02 | 2.489686E+01 | 2.330694E-01 | 6.969830E+01 |
| F26 | AVG | 2.800000E+03 | 5.014069E+03 | 2.800000E+03 | 7.360208E+03 | 2.800134E+03 | 1.570043E+04 |
| F26 | STD | 4.776900E-13 | 9.979314E+02 | 2.963824E-05 | 1.592764E+03 | 1.101589E-01 | 1.136894E+03 |
| F27 | AVG | 2.918219E+03 | 3.594628E+03 | 2.971923E+03 | 3.994026E+03 | 3.097434E+03 | 5.449449E+03 |
| F27 | STD | 9.978696E+01 | 8.565568E+01 | 2.188521E+02 | 1.697183E+02 | 5.004242E+02 | 3.176788E+02 |
| F28 | AVG | 3.023799E+03 | 3.412600E+03 | 3.275639E+03 | 3.342462E+03 | 3.000172E+03 | 3.407231E+03 |
| F28 | STD | 7.300070E+01 | 4.842390E+02 | 1.299708E+02 | 3.360505E+01 | 1.083659E-01 | 4.757740E+01 |
| F29 | AVG | 3.240401E+03 | 3.560112E+03 | 3.569520E+03 | 4.500026E+03 | 5.375933E+03 | 6.954458E+03 |
| F29 | STD | 6.760153E+01 | 1.833220E+02 | 2.907660E+02 | 3.105699E+02 | 4.990865E+02 | 5.511103E+02 |
| F30 | AVG | 6.144891E+03 | 4.017941E+04 | 2.279465E+04 | 7.241399E+05 | 9.420026E+03 | 6.578093E+06 |
| F30 | STD | 3.632263E+03 | 2.698645E+04 | 1.915455E+03 | 4.691778E+05 | 2.867600E+03 | 2.615942E+06 |

Figure 6.

Friedman ranking of IRIME in different dimensions

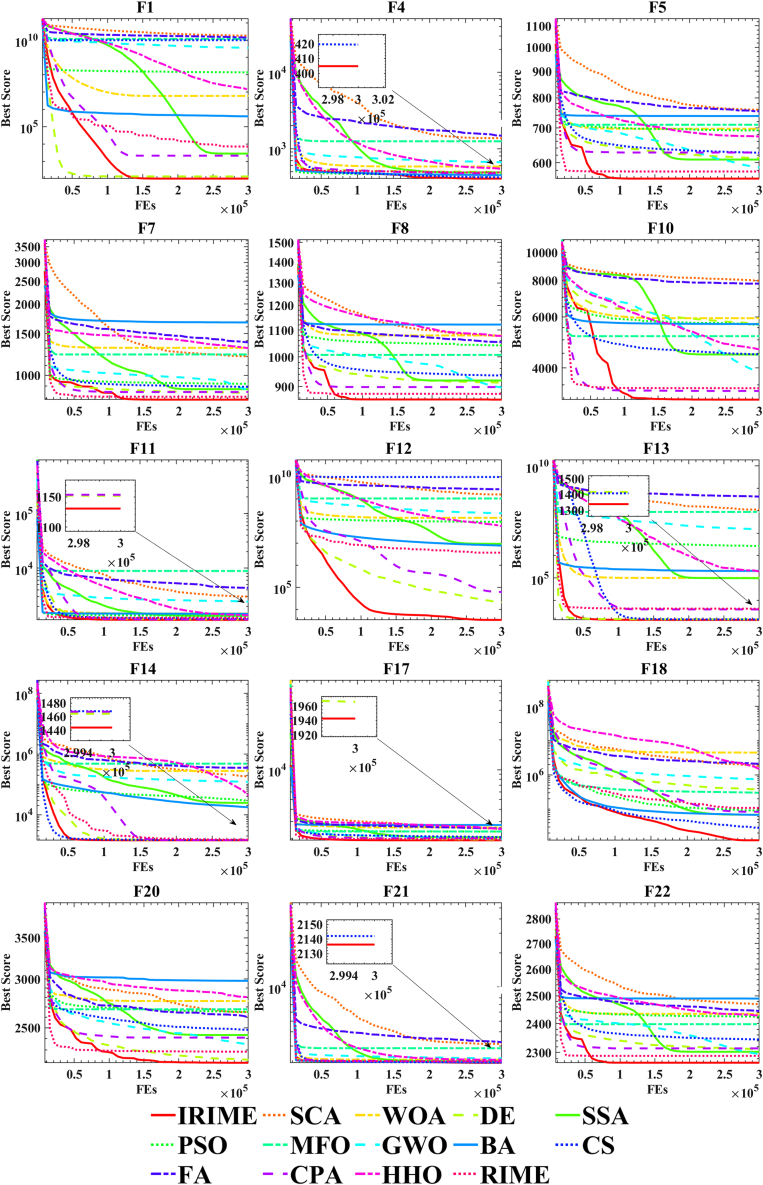

Comparison with conventional algorithms

In this section, IRIME was compared against 13 conventional algorithms: RIME,21 SCA,16 WOA,95 DE,33 SSA,96 PSO,97 MFO,29 GWO,98 BA,99 CS,91 FA,100 CPA,32 and HHO.28 Table 8 shows that IRIME achieves an average ranking of 1.733333, the best among the compared algorithms across all 30 benchmark functions. Detailed experimental data are provided in Table 9. Compared with SCA, WOA, DE, PSO, MFO, GWO, FA, and RIME, IRIME does not perform noticeably worse than any of these algorithms. Furthermore, compared with the remaining algorithms, such as CPA and HHO, there are many cases where IRIME is significantly superior. To show IRIME’s performance visually, convergence curves were plotted, as depicted in Figure 7. These curves highlight IRIME’s advantage over RIME, which is especially evident on the unimodal function F1, the multimodal functions F4, F5, F7, F8, and F10, the hybrid functions F11, F12, F13, F14, F17, and F18, and the composite functions F21 and F22. This performance is primarily attributed to SB and CMS-RS balancing RIME’s exploration and exploitation abilities, which enables IRIME to escape local optima. Moreover, the Friedman ranking chart in Figure 8 positions IRIME at the top. To indicate the statistical significance of IRIME’s superiority over the other algorithms, the p-values of the Wilcoxon signed-rank test are given in Table 10.

Table 8.

Comparison results between IRIME and conventional algorithms

| Algorithm | Rank | +/=/- | Avg |

|---|---|---|---|

| IRIME | 1 | ∼ | 1.733333 |

| SCA | 13 | 30/0/0 | 12.2 |

| WOA | 11 | 27/3/0 | 10.3 |

| DE | 3 | 25/2/3 | 5 |

| SSA | 6 | 29/0/1 | 5.833333 |

| PSO | 9 | 30/0/0 | 8.366667 |

| MFO | 12 | 30/0/0 | 10.93333 |

| GWO | 8 | 28/2/0 | 7.566667 |

| BA | 10 | 28/1/1 | 9.366667 |

| CS | 5 | 27/2/1 | 5.766667 |

| FA | 14 | 30/0/0 | 12.3 |

| CPA | 2 | 18/4/8 | 3.333333 |

| HHO | 7 | 22/2/6 | 6.9 |

| RIME | 3 | 29/1/0 | 5 |
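The "+/=/-" column can be read as a win/tie/loss tally of IRIME against each competitor. The sketch below shows one common way to derive such a tally, under assumptions that this section does not state: a function counts as a tie when the Wilcoxon p-value is at least 0.05, and otherwise as a win or loss according to which algorithm has the lower AVG. The function name `tally` and the threshold `alpha` are hypothetical; the numbers are the F1 to F5 entries for IRIME and RIME from Tables 9 and 10.

```python
def tally(irime_avg, other_avg, p_values, alpha=0.05):
    """Count (wins, ties, losses) for IRIME; alpha is an assumed significance level."""
    wins = ties = losses = 0
    for ours, theirs, p in zip(irime_avg, other_avg, p_values):
        if p >= alpha or ours == theirs:
            ties += 1      # no statistically significant difference
        elif ours < theirs:
            wins += 1      # IRIME significantly better (lower error)
        else:
            losses += 1    # IRIME significantly worse
    return wins, ties, losses


# F1..F5 AVG values from Table 9 and IRIME-vs-RIME p-values from Table 10.
irime_avg = [1.000000e2, 1.092587e4, 3.000768e2, 4.047558e2, 5.592333e2]
rime_avg = [7.124393e3, 2.058783e4, 3.016689e2, 4.869630e2, 5.771337e2]
p_vs_rime = [1.7343976283e-06, 7.3432529144e-01, 1.7343976283e-06,
             2.1266360107e-06, 6.1564062070e-04]

result = tally(irime_avg, rime_avg, p_vs_rime)
print("+/=/-: %d/%d/%d" % result)
```

On these five functions the sketch yields four wins and one tie (F2, where the p-value is well above 0.05); extending the lists to all 30 functions would give the full tally under the same assumed rule.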

Table 9.

Comparison of IRIME with conventional algorithms

| Algorithm | F1 AVG | F1 STD | F2 AVG | F2 STD | F3 AVG | F3 STD |

|---|---|---|---|---|---|---|

| IRIME | 1.000000E+02 | 8.228886E-07 | 1.092587E+04 | 2.557057E+04 | 3.000768E+02 | 8.230567E-02 |

| SCA | 1.841382E+10 | 3.722769E+09 | 7.136067E+32 | 1.990604E+33 | 3.706668E+04 | 4.666736E+03 |

| WOA | 6.004626E+06 | 6.340405E+06 | 2.066092E+29 | 1.113738E+30 | 1.338299E+04 | 5.360271E+03 |

| DE | 1.333382E+02 | 1.663241E+02 | 1.983923E+25 | 3.478891E+25 | 1.996025E+04 | 3.770712E+03 |

| SSA | 2.792499E+03 | 3.017484E+03 | 4.056705E+06 | 2.112276E+07 | 3.000000E+02 | 8.818389E-09 |

| PSO | 1.389773E+08 | 1.936371E+07 | 2.561524E+12 | 2.515226E+12 | 6.259001E+02 | 3.466948E+01 |

| MFO | 1.178499E+10 | 5.277154E+09 | 4.606288E+38 | 2.268979E+39 | 1.139089E+05 | 5.365509E+04 |

| GWO | 3.656408E+09 | 2.680374E+09 | 8.477831E+28 | 2.813243E+29 | 3.158195E+04 | 9.978276E+03 |

| BA | 3.979009E+05 | 1.875329E+05 | 2.001667E+02 | 9.128709E-01 | 3.000746E+02 | 4.876542E-02 |

| CS | 1.000000E+10 | 0.000000E+00 | 1.000000E+10 | 0.000000E+00 | 1.185032E+04 | 2.895928E+03 |

| FA | 1.422799E+10 | 1.490877E+09 | 2.637550E+34 | 2.817855E+34 | 5.846299E+04 | 8.407209E+03 |

| CPA | 2.114254E+03 | 2.746738E+03 | 1.107555E+05 | 5.629322E+05 | 3.000000E+02 | 1.708056E-07 |

| HHO | 1.397816E+07 | 2.883833E+06 | 2.473100E+12 | 7.349195E+12 | 2.801396E+03 | 1.243863E+03 |

| RIME | 7.124393E+03 | 4.505485E+03 | 2.058783E+04 | 5.563495E+04 | 3.016689E+02 | 7.524137E-01 |

| Algorithm | F4 AVG | F4 STD | F5 AVG | F5 STD | F6 AVG | F6 STD |

|---|---|---|---|---|---|---|

| IRIME | 4.047558E+02 | 1.615793E+01 | 5.592333E+02 | 1.350497E+01 | 6.000030E+02 | 2.016607E-03 |

| SCA | 1.374591E+03 | 2.771825E+02 | 7.588440E+02 | 1.443074E+01 | 6.417769E+02 | 5.229569E+00 |

| WOA | 5.840244E+02 | 5.335661E+01 | 6.972605E+02 | 4.197744E+01 | 6.644217E+02 | 1.299889E+01 |

| DE | 4.901688E+02 | 3.989320E+01 | 6.123099E+02 | 1.004958E+01 | 6.000000E+02 | 0.000000E+00 |

| SSA | 4.880627E+02 | 3.685931E+01 | 6.083748E+02 | 3.205830E+01 | 6.293176E+02 | 1.206613E+01 |

| PSO | 4.559716E+02 | 3.206651E+01 | 6.922583E+02 | 2.502204E+01 | 6.343293E+02 | 1.113896E+01 |

| MFO | 1.249248E+03 | 7.468984E+02 | 7.099112E+02 | 3.925173E+01 | 6.444544E+02 | 9.505611E+00 |

| GWO | 6.646073E+02 | 1.181156E+02 | 5.870130E+02 | 2.823283E+01 | 6.064975E+02 | 3.413389E+00 |

| BA | 4.492754E+02 | 5.335580E+01 | 7.378640E+02 | 4.005934E+01 | 6.715805E+02 | 1.149586E+01 |

| CS | 4.193988E+02 | 2.958199E+01 | 6.269874E+02 | 2.190149E+01 | 6.269248E+02 | 8.245281E+00 |

| FA | 1.482195E+03 | 1.539432E+02 | 7.535040E+02 | 1.239110E+01 | 6.437613E+02 | 3.892201E+00 |

| CPA | 4.741417E+02 | 4.920473E+01 | 6.277521E+02 | 2.556818E+01 | 6.000000E+02 | 1.993686E-07 |

| HHO | 5.452018E+02 | 3.950364E+01 | 6.738793E+02 | 1.994907E+01 | 6.526138E+02 | 3.710877E+00 |

| RIME | 4.869630E+02 | 2.962177E+01 | 5.771337E+02 | 1.783255E+01 | 6.003665E+02 | 2.821859E-01 |

| Algorithm | F7 AVG | F7 STD | F8 AVG | F8 STD | F9 AVG | F9 STD |

|---|---|---|---|---|---|---|

| IRIME | 7.883173E+02 | 1.821927E+01 | 8.592822E+02 | 1.301643E+01 | 9.000454E+02 | 1.386272E-01 |

| SCA | 1.203477E+03 | 5.847467E+01 | 1.077141E+03 | 2.148756E+01 | 7.055291E+03 | 1.483905E+03 |

| WOA | 1.305250E+03 | 1.357140E+02 | 1.079100E+03 | 5.445599E+01 | 9.026235E+03 | 3.453609E+03 |

| DE | 8.431410E+02 | 9.738404E+00 | 9.123994E+02 | 6.583571E+00 | 9.000000E+02 | 2.985563E-14 |

| SSA | 8.727399E+02 | 4.585326E+01 | 9.195604E+02 | 3.175260E+01 | 3.902221E+03 | 1.700013E+03 |

| PSO | 9.195953E+02 | 1.906608E+01 | 1.041707E+03 | 3.675778E+01 | 6.310334E+03 | 2.485837E+03 |

| MFO | 1.223923E+03 | 2.587419E+02 | 1.007102E+03 | 4.956693E+01 | 8.133813E+03 | 2.578392E+03 |

| GWO | 8.687501E+02 | 5.299589E+01 | 8.966145E+02 | 2.657562E+01 | 2.549040E+03 | 8.862296E+02 |

| BA | 1.672298E+03 | 2.052284E+02 | 1.121454E+03 | 5.642799E+01 | 1.596200E+04 | 4.562495E+03 |

| CS | 8.931793E+02 | 2.914000E+01 | 9.358801E+02 | 2.485981E+01 | 5.137085E+03 | 1.624264E+03 |

| FA | 1.378286E+03 | 4.499559E+01 | 1.053642E+03 | 1.212715E+01 | 5.904095E+03 | 5.642448E+02 |

| CPA | 8.489678E+02 | 3.506446E+01 | 8.985005E+02 | 2.920458E+01 | 3.316187E+03 | 7.771718E+02 |

| HHO | 1.302266E+03 | 9.362298E+01 | 1.076979E+03 | 4.260311E+01 | 7.620443E+03 | 1.098960E+03 |

| RIME | 8.108969E+02 | 2.663949E+01 | 8.771250E+02 | 1.542515E+01 | 1.282633E+03 | 7.780124E+02 |

| Algorithm | F10 AVG | F10 STD | F11 AVG | F11 STD | F12 AVG | F12 STD |

|---|---|---|---|---|---|---|

| IRIME | 3.113983E+03 | 4.683815E+02 | 1.130403E+03 | 1.468068E+01 | 3.319172E+03 | 7.705038E+02 |

| SCA | 7.981345E+03 | 3.150563E+02 | 3.022295E+03 | 6.445754E+02 | 1.574493E+09 | 3.979278E+08 |

| WOA | 5.934370E+03 | 7.789161E+02 | 1.481984E+03 | 8.519552E+01 | 1.382265E+08 | 7.101250E+07 |

| DE | 5.672989E+03 | 2.624536E+02 | 1.152053E+03 | 8.966502E+00 | 2.025299E+04 | 4.813425E+04 |

| SSA | 4.452536E+03 | 7.094846E+02 | 1.344872E+03 | 7.211084E+01 | 9.182351E+06 | 5.040467E+06 |

| PSO | 5.643005E+03 | 5.073707E+02 | 1.347653E+03 | 5.680875E+01 | 8.995054E+07 | 3.766610E+07 |

| MFO | 5.147688E+03 | 8.203648E+02 | 8.873480E+03 | 7.933486E+03 | 1.033950E+09 | 1.367383E+09 |

| GWO | 3.885633E+03 | 9.366685E+02 | 2.439886E+03 | 1.375237E+03 | 2.211911E+08 | 5.231662E+08 |

| BA | 5.665602E+03 | 6.360143E+02 | 1.463662E+03 | 1.486178E+02 | 7.653362E+06 | 7.277742E+06 |

| CS | 4.458374E+03 | 2.296132E+02 | 1.185109E+03 | 1.742362E+01 | 9.666740E+09 | 1.825338E+09 |

| FA | 7.802109E+03 | 2.450349E+02 | 4.351898E+03 | 6.829350E+02 | 2.681007E+09 | 3.497603E+08 |

| CPA | 3.335836E+03 | 4.680002E+02 | 1.153764E+03 | 2.469058E+01 | 6.094811E+04 | 5.596317E+04 |

| HHO | 4.635003E+03 | 5.314861E+02 | 1.373707E+03 | 9.325560E+01 | 5.779730E+07 | 3.642617E+07 |

| RIME | 3.409716E+03 | 5.427260E+02 | 1.236769E+03 | 6.668725E+01 | 3.654135E+06 | 2.400589E+06 |

| Algorithm | F13 AVG | F13 STD | F14 AVG | F14 STD | F15 AVG | F15 STD |

|---|---|---|---|---|---|---|

| IRIME | 1.336538E+03 | 8.473889E+00 | 1.443824E+03 | 8.556915E+00 | 1.553682E+03 | 2.436474E+01 |

| SCA | 1.135809E+08 | 3.597453E+07 | 1.857652E+05 | 8.431296E+04 | 4.841776E+06 | 2.356802E+06 |

| WOA | 1.000657E+05 | 7.298211E+04 | 2.780113E+05 | 1.802183E+05 | 5.291920E+04 | 8.617273E+04 |

| DE | 1.412306E+03 | 3.210871E+02 | 1.464071E+03 | 7.837059E+00 | 1.550860E+03 | 1.334677E+01 |

| SSA | 9.785285E+04 | 1.030243E+05 | 2.465348E+04 | 2.001924E+04 | 3.396406E+04 | 1.937665E+04 |

| PSO | 2.672529E+06 | 6.920589E+05 | 2.900206E+04 | 1.903076E+04 | 3.161740E+05 | 1.329552E+05 |

| MFO | 8.890284E+07 | 2.019387E+08 | 4.818146E+05 | 1.166317E+06 | 4.578076E+04 | 4.589563E+04 |

| GWO | 1.493141E+07 | 2.605109E+07 | 1.207818E+05 | 1.325602E+05 | 2.080886E+06 | 1.127817E+07 |

| BA | 2.056385E+05 | 1.475900E+05 | 1.764583E+04 | 8.026951E+03 | 9.857857E+04 | 9.742760E+04 |

| CS | 1.403952E+03 | 2.910464E+01 | 1.467773E+03 | 8.666795E+00 | 1.566742E+03 | 1.286895E+01 |

| FA | 4.300832E+08 | 1.161389E+08 | 3.545473E+05 | 1.403319E+05 | 5.339371E+07 | 1.919045E+07 |

| CPA | 3.945263E+03 | 2.400788E+03 | 1.467093E+03 | 3.012936E+01 | 7.282572E+03 | 4.871670E+03 |

| HHO | 1.949828E+05 | 8.419094E+04 | 4.341608E+04 | 3.603063E+04 | 3.486027E+04 | 1.511152E+04 |

| RIME | 4.237048E+03 | 2.386911E+03 | 1.521076E+03 | 4.668063E+01 | 7.385270E+03 | 4.797001E+03 |

| Algorithm | F16 AVG | F16 STD | F17 AVG | F17 STD | F18 AVG | F18 STD |

|---|---|---|---|---|---|---|

| IRIME | 2.157519E+03 | 2.319810E+02 | 1.942563E+03 | 8.414615E+01 | 1.227806E+04 | 8.274997E+03 |

| SCA | 3.297912E+03 | 2.433252E+02 | 2.544122E+03 | 1.996151E+02 | 1.843845E+06 | 9.558486E+05 |

| WOA | 3.325774E+03 | 4.574786E+02 | 2.621453E+03 | 3.052444E+02 | 4.481267E+06 | 3.637390E+06 |

| DE | 2.025687E+03 | 1.610609E+02 | 1.965870E+03 | 4.022178E+01 | 3.533861E+05 | 1.845308E+05 |

| SSA | 2.392214E+03 | 2.408053E+02 | 2.084263E+03 | 1.671643E+02 | 6.859217E+04 | 5.922140E+04 |

| PSO | 2.668135E+03 | 2.316809E+02 | 2.379098E+03 | 2.458484E+02 | 8.997152E+04 | 4.816071E+04 |

| MFO | 3.191869E+03 | 4.313203E+02 | 2.382983E+03 | 2.636714E+02 | 2.996153E+05 | 6.695035E+05 |

| GWO | 2.252737E+03 | 2.427924E+02 | 1.984203E+03 | 1.120046E+02 | 7.415711E+05 | 1.403432E+06 |

| BA | 3.490398E+03 | 5.264603E+02 | 2.771914E+03 | 3.374694E+02 | 6.745901E+04 | 2.947320E+04 |

| CS | 2.458258E+03 | 1.646827E+02 | 2.093107E+03 | 8.895653E+01 | 2.839660E+04 | 8.739249E+03 |

| FA | 3.171391E+03 | 1.900067E+02 | 2.556076E+03 | 1.239653E+02 | 2.123477E+06 | 7.703717E+05 |

| CPA | 2.638873E+03 | 3.589458E+02 | 2.035829E+03 | 1.520298E+02 | 8.337727E+04 | 5.771694E+04 |

| HHO | 2.853986E+03 | 4.348123E+02 | 2.518518E+03 | 2.613917E+02 | 1.479073E+06 | 1.536378E+06 |

| RIME | 2.361515E+03 | 2.358123E+02 | 1.997354E+03 | 1.314076E+02 | 1.082342E+05 | 6.657172E+04 |

| Algorithm | F19 AVG | F19 STD | F20 AVG | F20 STD | F21 AVG | F21 STD |

|---|---|---|---|---|---|---|

| IRIME | 2.332181E+03 | 1.864812E+03 | 2.192876E+03 | 9.492175E+01 | 2.136135E+03 | 3.352597E+01 |

| SCA | 2.168794E+07 | 1.687208E+07 | 2.650003E+03 | 1.360133E+02 | 3.142386E+03 | 2.110077E+02 |

| WOA | 7.039075E+05 | 7.090756E+05 | 2.762562E+03 | 1.563571E+02 | 2.275860E+03 | 3.276134E+01 |

| DE | 4.947694E+03 | 2.473822E+03 | 2.216929E+03 | 4.611997E+01 | 2.187477E+03 | 1.809598E+01 |

| SSA | 1.667896E+05 | 1.140472E+05 | 2.432897E+03 | 1.772135E+02 | 2.203093E+03 | 3.248284E+01 |

| PSO | 3.430261E+05 | 1.514502E+05 | 2.653687E+03 | 1.678977E+02 | 2.177850E+03 | 3.668529E+01 |

| MFO | 3.757899E+05 | 1.837212E+06 | 2.678688E+03 | 2.917192E+02 | 2.874566E+03 | 8.105786E+02 |

| GWO | 5.753903E+04 | 6.494282E+04 | 2.350499E+03 | 1.051505E+02 | 2.337764E+03 | 7.529951E+01 |

| BA | 2.695612E+05 | 1.461260E+05 | 2.978931E+03 | 2.096352E+02 | 2.161001E+03 | 4.171884E+01 |

| CS | 1.931255E+03 | 5.385502E+00 | 2.480485E+03 | 8.794099E+01 | 2.141894E+03 | 3.215894E+01 |

| FA | 3.416584E+07 | 1.587037E+07 | 2.599760E+03 | 9.585333E+01 | 3.244746E+03 | 1.713522E+02 |

| CPA | 4.131226E+03 | 2.932877E+03 | 2.409347E+03 | 1.245356E+02 | 2.192308E+03 | 2.812837E+01 |

| HHO | 1.482658E+05 | 9.248775E+04 | 2.802366E+03 | 2.084324E+02 | 2.264632E+03 | 2.386389E+01 |

| RIME | 8.102371E+03 | 6.772024E+03 | 2.285830E+03 | 1.169991E+02 | 2.197692E+03 | 3.982284E+01 |

| Algorithm | F22 AVG | F22 STD | F23 AVG | F23 STD | F24 AVG | F24 STD |

|---|---|---|---|---|---|---|

| IRIME | 2.265552E+03 | 1.375038E+01 | 2.500000E+03 | 2.412039E-05 | 2.600000E+03 | 2.234190E-13 |

| SCA | 2.469766E+03 | 1.862005E+01 | 3.280074E+03 | 5.293000E+01 | 3.860830E+03 | 7.261677E+01 |

| WOA | 2.434663E+03 | 4.591771E+01 | 3.152556E+03 | 1.486188E+02 | 2.826444E+03 | 4.640745E+02 |

| DE | 2.312139E+03 | 9.803931E+00 | 2.873856E+03 | 1.165131E+01 | 3.396319E+03 | 7.143519E+00 |

| SSA | 2.302442E+03 | 2.696481E+01 | 2.899683E+03 | 4.591268E+01 | 2.600461E+03 | 1.754068E+00 |

| PSO | 2.424424E+03 | 2.981972E+01 | 4.664458E+03 | 5.424066E+02 | 2.667356E+03 | 4.579105E+00 |

| MFO | 2.397898E+03 | 4.542225E+01 | 2.955630E+03 | 3.010570E+01 | 3.492602E+03 | 4.045296E+01 |

| GWO | 2.294783E+03 | 2.927255E+01 | 2.885035E+03 | 4.101812E+01 | 3.028401E+03 | 3.720900E+02 |

| BA | 2.490033E+03 | 5.712484E+01 | 3.541796E+03 | 2.055549E+02 | 2.846532E+03 | 4.871311E+02 |

| CS | 2.344756E+03 | 2.801735E+01 | 2.913872E+03 | 2.132308E+01 | 2.869770E+03 | 2.713068E+02 |

| FA | 2.445136E+03 | 1.294870E+01 | 3.103677E+03 | 1.621235E+01 | 3.691747E+03 | 1.601300E+01 |

| CPA | 2.313971E+03 | 2.535603E+01 | 2.500000E+03 | 0.000000E+00 | 2.600000E+03 | 0.000000E+00 |

| HHO | 2.428248E+03 | 2.689161E+01 | 2.500000E+03 | 0.000000E+00 | 2.600000E+03 | 0.000000E+00 |

| RIME | 2.288698E+03 | 1.933506E+01 | 2.875461E+03 | 1.894064E+01 | 3.218714E+03 | 3.332643E+02 |

| Algorithm | F25 AVG | F25 STD | F26 AVG | F26 STD | F27 AVG | F27 STD |

|---|---|---|---|---|---|---|

| IRIME | 2.700000E+03 | 3.014049E-06 | 2.800000E+03 | 7.966475E-13 | 2.917703E+03 | 9.696226E+01 |

| SCA | 3.620928E+03 | 1.263230E+02 | 7.789088E+03 | 1.025837E+03 | 4.061770E+03 | 1.175104E+02 |

| WOA | 2.717575E+03 | 9.626031E+01 | 3.542405E+03 | 1.941872E+03 | 3.968988E+03 | 2.352272E+02 |

| DE | 2.912818E+03 | 4.515466E+00 | 5.310729E+03 | 3.259103E+02 | 3.440492E+03 | 2.054747E+01 |

| SSA | 2.956126E+03 | 4.508026E+01 | 2.803337E+03 | 1.825686E+01 | 3.603933E+03 | 8.474287E+01 |

| PSO | 2.953735E+03 | 3.770860E+01 | 3.409442E+03 | 3.172684E+01 | 5.031577E+03 | 7.931823E+02 |

| MFO | 3.699591E+03 | 7.905559E+02 | 6.711784E+03 | 5.120422E+02 | 3.590907E+03 | 6.526928E+01 |

| GWO | 3.212052E+03 | 1.861504E+02 | 4.866584E+03 | 9.148374E+02 | 3.681051E+03 | 9.477657E+01 |

| BA | 3.013904E+03 | 8.224810E+01 | 5.309482E+03 | 3.576900E+03 | 3.906917E+03 | 1.275351E+02 |

| CS | 2.907161E+03 | 8.298785E+00 | 3.789000E+03 | 1.166225E+03 | 3.509616E+03 | 7.246362E+01 |

| FA | 4.108952E+03 | 1.520293E+02 | 7.293080E+03 | 1.459334E+02 | 3.902377E+03 | 8.965520E+01 |

| CPA | 2.700000E+03 | 0.000000E+00 | 2.800000E+03 | 0.000000E+00 | 2.900000E+03 | 0.000000E+00 |

| HHO | 2.700000E+03 | 0.000000E+00 | 2.800000E+03 | 0.000000E+00 | 2.900000E+03 | 0.000000E+00 |

| RIME | 2.956893E+03 | 4.093764E+01 | 5.213364E+03 | 8.405995E+02 | 3.566066E+03 | 7.106036E+01 |

| Algorithm | F28 AVG | F28 STD | F29 AVG | F29 STD | F30 AVG | F30 STD |

|---|---|---|---|---|---|---|

| IRIME | 3.034850E+03 | 8.227287E+01 | 3.279330E+03 | 8.123477E+01 | 5.583571E+03 | 2.871580E+03 |

| SCA | 5.650824E+03 | 5.471978E+02 | 4.271864E+03 | 2.801958E+02 | 7.855927E+06 | 1.863115E+07 |

| WOA | 3.308537E+03 | 6.514672E+02 | 4.361104E+03 | 4.091531E+02 | 1.826287E+06 | 1.767812E+06 |

| DE | 3.941911E+03 | 6.995067E+02 | 3.466897E+03 | 8.981603E+01 | 6.628119E+04 | 2.352216E+04 |

| SSA | 3.305292E+03 | 3.583736E+02 | 3.771022E+03 | 1.643812E+02 | 1.179055E+06 | 1.017255E+06 |

| PSO | 3.311009E+03 | 1.132244E+02 | 4.050026E+03 | 2.446224E+02 | 2.561684E+06 | 1.127760E+06 |

| MFO | 4.866595E+03 | 7.300049E+02 | 4.138832E+03 | 2.317888E+02 | 1.941961E+06 | 2.782699E+06 |

| GWO | 3.705572E+03 | 2.929996E+02 | 3.524327E+03 | 1.794326E+02 | 1.259130E+06 | 4.317675E+06 |

| BA | 3.415627E+03 | 5.750275E+02 | 4.654428E+03 | 4.556327E+02 | 1.054830E+06 | 6.501027E+05 |

| CS | 3.228935E+03 | 4.607668E+01 | 3.677880E+03 | 1.126906E+02 | 7.589453E+03 | 1.446341E+03 |

| FA | 4.068826E+03 | 1.044341E+02 | 4.559695E+03 | 1.650738E+02 | 1.171501E+08 | 3.086506E+07 |

| CPA | 3.000000E+03 | 0.000000E+00 | 3.100000E+03 | 0.000000E+00 | 3.200000E+03 | 0.000000E+00 |

| HHO | 3.000000E+03 | 0.000000E+00 | 3.100000E+03 | 0.000000E+00 | 3.200000E+03 | 0.000000E+00 |

| RIME | 3.549195E+03 | 6.610575E+02 | 3.609103E+03 | 1.811955E+02 | 3.838771E+04 | 2.085730E+04 |

Figure 7.

Convergence curves of IRIME and conventional algorithms at IEEE CEC 2017

Figure 8.

The Friedman ranking of IRIME and conventional algorithms

Table 10.

p-values of the Wilcoxon signed-rank test between IRIME and conventional algorithms

| Algorithm | F1 | F2 | F3 | F4 | F5 | F6 |

|---|---|---|---|---|---|---|

| SCA | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 |

| WOA | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 |

| DE | 5.7516532694E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 |

| SSA | 1.7343976283E-06 | 1.3594767037E-04 | 1.7343976283E-06 | 2.8785992194E-06 | 3.5152372790E-06 | 1.7343976283E-06 |

| PSO | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.9209211049E-06 | 1.7343976283E-06 | 1.7343976283E-06 |

| MFO | 1.7343976283E-06 | 1.7343976283E-06 | 1.9209211049E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 |

| GWO | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 2.1630223984E-05 | 1.7343976283E-06 |

| BA | 1.7343976283E-06 | 9.3385961710E-06 | 5.9993592817E-01 | 1.4772761749E-04 | 1.7343976283E-06 | 1.7343976283E-06 |

| CS | 4.3204630578E-08 | 1.7126865599E-06 | 1.7343976283E-06 | 5.4462503972E-02 | 1.9209211049E-06 | 1.7343976283E-06 |

| FA | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 |

| CPA | 1.7343976283E-06 | 5.5774268620E-01 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 |

| HHO | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 |

| RIME | 1.7343976283E-06 | 7.3432529144E-01 | 1.7343976283E-06 | 2.1266360107E-06 | 6.1564062070E-04 | 1.7343976283E-06 |

| Algorithm | F7 | F8 | F9 | F10 | F11 | F12 |

|---|---|---|---|---|---|---|

| SCA | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 |

| WOA | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 |

| DE | 1.7343976283E-06 | 1.7343976283E-06 | 1.6976242501E-06 | 1.7343976283E-06 | 2.1630223984E-05 | 1.7343976283E-06 |

| SSA | 1.7343976283E-06 | 3.1816794110E-06 | 1.7343976283E-06 | 1.9209211049E-06 | 1.7343976283E-06 | 1.7343976283E-06 |

| PSO | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 |

| MFO | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 |

| GWO | 2.6033283895E-06 | 3.5152372790E-06 | 1.7343976283E-06 | 8.9187274245E-05 | 1.7343976283E-06 | 1.7343976283E-06 |

| BA | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 |

| CS | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.9209211049E-06 | 1.7343976283E-06 |

| FA | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 |

| CPA | 1.9209211049E-06 | 4.7292023374E-06 | 1.7343976283E-06 | 2.0588822306E-01 | 4.5335631776E-04 | 1.7343976283E-06 |

| HHO | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 |

| RIME | 1.1973382000E-03 | 4.8602606067E-05 | 1.7343976283E-06 | 1.3974564120E-02 | 3.1816794110E-06 | 1.7343976283E-06 |

| Algorithm | F13 | F14 | F15 | F16 | F17 | F18 |

|---|---|---|---|---|---|---|

| SCA | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 |

| WOA | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.9209211049E-06 | 1.7343976283E-06 | 1.7343976283E-06 |

| DE | 3.5008956820E-02 | 1.7343976283E-06 | 8.6121251974E-01 | 3.6826128416E-02 | 4.2766688017E-02 | 1.7343976283E-06 |

| SSA | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 4.5335631776E-04 | 8.9443006475E-04 | 1.7343976283E-06 |

| PSO | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 3.8821823861E-06 | 2.1266360107E-06 | 1.7343976283E-06 |

| MFO | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 2.1266360107E-06 | 1.7343976283E-06 | 1.9209211049E-06 |

| GWO | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 6.8713630797E-02 | 1.8462187723E-01 | 1.7343976283E-06 |

| BA | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.9209211049E-06 | 1.7343976283E-06 | 1.7343976283E-06 |

| CS | 1.7343976283E-06 | 2.8785992194E-06 | 1.7518393580E-02 | 4.4493372835E-05 | 1.6394463017E-05 | 2.3534209951E-06 |

| FA | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 |

| CPA | 1.7343976283E-06 | 3.5888445045E-04 | 1.9209211049E-06 | 2.8434237746E-05 | 6.4242118722E-03 | 4.2856858692E-06 |

| HHO | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 6.3391355731E-06 | 1.7343976283E-06 | 1.7343976283E-06 |

| RIME | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 3.3788544377E-03 | 4.4918903765E-02 | 1.7343976283E-06 |

| Algorithm | F19 | F20 | F21 | F22 | F23 | F24 |

|---|---|---|---|---|---|---|

| SCA | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 |

| WOA | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 3.1250000000E-02 |

| DE | 2.3704477026E-05 | 1.8462187723E-01 | 1.7343976283E-06 | 1.9209211049E-06 | 1.7343976283E-06 | 1.7343976283E-06 |

| SSA | 1.7343976283E-06 | 8.4660816904E-06 | 2.1266360107E-06 | 6.3391355731E-06 | 1.7343976283E-06 | 1.7343976283E-06 |

| PSO | 1.7343976283E-06 | 1.9209211049E-06 | 4.9915540124E-03 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 |

| MFO | 8.4660816904E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 |

| GWO | 1.7343976283E-06 | 2.5967125848E-05 | 1.7343976283E-06 | 6.8922902968E-05 | 1.7343976283E-06 | 1.3183388898E-04 |

| BA | 1.7343976283E-06 | 1.7343976283E-06 | 4.9915540124E-03 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 |

| CS | 4.4918903765E-02 | 1.9209211049E-06 | 8.5895825870E-02 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 |

| FA | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 |

| CPA | 5.3069919381E-05 | 2.3704477026E-05 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.0000000000E+00 |

| HHO | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.0000000000E+00 |

| RIME | 3.4052567233E-05 | 2.9574621307E-03 | 6.9837831475E-06 | 1.1499217544E-04 | 1.7343976283E-06 | 1.7343976283E-06 |

| Algorithm | F25 | F26 | F27 | F28 | F29 | F30 |

|---|---|---|---|---|---|---|

| SCA | 1.7343976283E-06 | 2.5630832507E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 |

| WOA | 5.3710937500E-02 | 1.2500000000E-01 | 1.7343976283E-06 | 4.1652148500E-01 | 1.7343976283E-06 | 2.3534209951E-06 |

| DE | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 2.1266360107E-06 | 1.7343976283E-06 |

| SSA | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 |

| PSO | 1.7343976283E-06 | 1.7343976283E-06 | 1.9209211049E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 |

| MFO | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7300371293E-06 | 1.7343976283E-06 | 1.7343976283E-06 |

| GWO | 2.5630832507E-06 | 8.2980993064E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 5.2164934470E-06 | 1.7343976283E-06 |

| BA | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 2.3534209951E-06 | 1.7343976283E-06 | 1.7343976283E-06 |

| CS | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 3.8821823861E-06 | 1.7343976283E-06 | 9.6265892907E-04 |

| FA | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 |

| CPA | 1.9531250000E-03 | 1.0000000000E+00 | 1.7343976283E-06 | 1.7191759139E-06 | 1.7343976283E-06 | 1.7343976283E-06 |

| HHO | 1.9531250000E-03 | 1.0000000000E+00 | 1.7343976283E-06 | 1.7191759139E-06 | 1.7343976283E-06 | 1.7343976283E-06 |

| RIME | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 1.7343976283E-06 | 2.1266360107E-06 |
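As a reading aid for Table 10, the following is a minimal sketch of how a two-sided Wilcoxon signed-rank p-value can be computed from paired runs of two algorithms. It is not the authors' code. The function name `wilcoxon_signed_rank_p`, the use of a normal approximation without continuity correction, the choice of 30 paired runs, and the synthetic run values are all assumptions made for illustration.

```python
import math


def wilcoxon_signed_rank_p(x, y):
    """Two-sided p-value via the normal approximation (no continuity correction).

    Hypothetical helper for illustration; x and y are paired results of two algorithms.
    """
    # Zero differences carry no sign information and are dropped.
    diffs = [a - b for a, b in zip(x, y) if a != b]
    n = len(diffs)
    if n == 0:
        return 1.0  # the two samples are identical

    # Rank the absolute differences; tied values share their average rank.
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    tie_term = 0.0
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        avg_rank = (i + j) / 2 + 1  # ranks are 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        t = j - i + 1
        tie_term += t**3 - t
        i = j + 1

    # Sum of ranks of the positive differences, compared with its null distribution.
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    mean = n * (n + 1) / 4
    var = n * (n + 1) * (2 * n + 1) / 24 - tie_term / 48
    z = (w_plus - mean) / math.sqrt(var)
    return math.erfc(abs(z) / math.sqrt(2))


# Synthetic data (assumption): 30 paired runs in which one algorithm wins every run.
irime_runs = [100.0] * 30
rival_runs = [float(v) for v in range(101, 131)]
p_value = wilcoxon_signed_rank_p(irime_runs, rival_runs)
print(f"{p_value:.4e}")
```

Under these assumptions, one algorithm winning all 30 runs gives a p-value of roughly 1.73E-06. That is the same magnitude as the most frequent entry in Table 10, which suggests, but does not confirm, that those entries correspond to IRIME differing from the competitor in the same direction on every run.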

Comparison with advanced algorithms

To further validate IRIME’s performance, this study compared it with several advanced algorithms on the IEEE CEC 2017 benchmark test suite. These algorithms are EBOwithCMAR,101 LSHADE_cnEpSi,86 ALCPSO,102 CLPSO,103 LSHADE,104 SADE,105 JADE,106 RCBA,107 EPSO,108 CBA,109 and LWOA.110 The experimental data are detailed in Table 11. The results show that IRIME, like several of the advanced algorithms, reaches values near the theoretical optimum on functions such as F1, F3, F6, and F9. Compared with strong DE variants such as JADE and SADE, IRIME shows some drawbacks on multimodal functions (F4, F5, F7, and F10) and hybrid functions (F12, F16, F17, and F18). This can be attributed to the limited ability of SB and CMS-RS to improve convergence accuracy. Nevertheless, these limitations do not significantly affect IRIME’s overall performance. IRIME also finds very good results on multimodal functions F7 and F8, indicating that it is not uniformly poor on multimodal functions. In addition, it obtains excellent results on the hybrid functions F11, F13, F14, and F15, demonstrating that its disadvantage on hybrid functions is not significant. On the composite functions (F23, F24, F25, F26, F27, F28, and F29) in particular, IRIME exhibits advantages over advanced algorithms such as EBOwithCMAR, LSHADE_cnEpSi, ALCPSO, and CLPSO. Compared with other successful improvements of swarm intelligence algorithms, such as RCBA, CBA, and LWOA, IRIME outperforms them in convergence capability, especially on simple unimodal functions such as F1 and F2. Despite potential shortcomings in convergence accuracy, IRIME’s strong exploration ability and its balance between exploration and exploitation raise its average ranking to the top among these algorithms, as shown in Table 12.
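The average ranking mentioned above can be illustrated with a short sketch: rank the algorithms on each function by their AVG value (lower is better), then average the ranks across functions. The function name `average_ranks` and the restriction to three algorithms and three functions are assumptions made for illustration. The AVG values are copied from Table 11, but the resulting ranks cover only this subset and are not the paper's reported ranking.

```python
def average_ranks(scores):
    """Mean rank per algorithm; scores maps name -> list of AVG values (lower is better).

    Hypothetical helper for illustration; tied values share their average rank.
    """
    names = list(scores)
    n_funcs = len(next(iter(scores.values())))
    totals = {name: 0.0 for name in names}
    for f in range(n_funcs):
        ordered = sorted(names, key=lambda name: scores[name][f])
        i = 0
        while i < len(ordered):
            j = i
            # Extend the group while the next algorithm has the same value.
            while (j + 1 < len(ordered)
                   and scores[ordered[j + 1]][f] == scores[ordered[i]][f]):
                j += 1
            avg_rank = (i + j) / 2 + 1  # ranks are 1-based
            for name in ordered[i:j + 1]:
                totals[name] += avg_rank
            i = j + 1
    return {name: totals[name] / n_funcs for name in names}


# AVG values for F1, F2, F3 taken from Table 11 (illustrative subset only).
subset = {
    "IRIME": [1.000000E+02, 1.010703E+04, 3.000649E+02],
    "SADE": [1.000000E+02, 2.000000E+02, 3.224915E+02],
    "CBA": [1.418221E+05, 1.079177E+04, 3.142839E+02],
}
mean_ranks = average_ranks(subset)
for name, rank in sorted(mean_ranks.items(), key=lambda item: item[1]):
    print(f"{name}: {rank:.3f}")
```

Averaging ranks rather than raw values keeps functions with very large objective values, such as F2 here, from dominating the comparison.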

Table 11.

Comparison of IRIME with advanced algorithms

| Algorithm | F1 AVG | F1 STD | F2 AVG | F2 STD | F3 AVG | F3 STD |

|---|---|---|---|---|---|---|

| IRIME | 1.000000E+02 | 6.154959E-07 | 1.010703E+04 | 1.303957E+04 | 3.000649E+02 | 5.911368E-02 |

| EBOwithCMAR | 1.000000E+02 | 7.463907E-15 | 2.456040E+21 | 1.345229E+22 | 2.400802E+04 | 3.386547E+04 |

| LSHADE_cnEpSi | 1.000000E+02 | 1.355622E-09 | 2.409411E+21 | 9.065340E+21 | 3.000060E+02 | 1.496863E-02 |

| ALCPSO | 1.090225E+03 | 1.626553E+03 | 1.080386E+19 | 4.265054E+19 | 2.652894E+04 | 4.688938E+03 |

| CLPSO | 1.064836E+02 | 1.516141E+01 | 6.521139E+13 | 1.787106E+14 | 8.761694E+03 | 2.317848E+03 |

| LSHADE | 1.000000E+02 | 3.369109E-14 | 3.545959E+12 | 1.851603E+13 | 5.041967E+03 | 1.485133E+04 |

| SADE | 1.000000E+02 | 4.856153E-06 | 2.000000E+02 | 0.000000E+00 | 3.224915E+02 | 1.121460E+02 |

| JADE | 1.000000E+02 | 2.447205E-14 | 4.353151E+11 | 2.250183E+12 | 2.152864E+03 | 4.413241E+03 |

| RCBA | 1.865639E+04 | 6.844783E+03 | 2.203667E+02 | 2.627767E+01 | 3.005611E+02 | 1.705823E-01 |

| EPSO | 6.291322E+02 | 8.551875E+02 | 1.672571E+13 | 6.510333E+13 | 5.967043E+03 | 1.583147E+03 |

| CBA | 1.418221E+05 | 7.288431E+05 | 1.079177E+04 | 1.420128E+04 | 3.142839E+02 | 5.455682E+00 |

| LWOA | 5.842765E+05 | 1.266102E+05 | 5.940121E+05 | 8.651440E+05 | 3.226856E+02 | 7.158850E+00 |

| Algorithm | F4 AVG | F4 STD | F5 AVG | F5 STD | F6 AVG | F6 STD |

|---|---|---|---|---|---|---|

| IRIME | 4.073550E+02 | 2.050446E+01 | 5.660927E+02 | 1.848911E+01 | 6.000037E+02 | 1.694265E-03 |

| EBOwithCMAR | 4.002521E+02 | 8.386622E-01 | 5.236547E+02 | 6.702270E+00 | 6.001585E+02 | 2.583089E-01 |

| LSHADE_cnEpSi | 4.055120E+02 | 1.717506E+01 | 5.380104E+02 | 1.063740E+01 | 6.017437E+02 | 1.478015E+00 |

| ALCPSO | 5.308335E+02 | 5.904290E+01 | 6.161281E+02 | 2.700655E+01 | 6.053601E+02 | 5.947913E+00 |

| CLPSO | 4.711778E+02 | 2.235273E+01 | 5.533829E+02 | 9.235232E+00 | 6.000000E+02 | 7.313105E-14 |

| LSHADE | 4.045201E+02 | 1.653684E+01 | 5.353546E+02 | 9.099230E+00 | 6.002333E+02 | 2.393252E-01 |

| SADE | 4.329790E+02 | 3.720693E+01 | 5.492836E+02 | 1.048001E+01 | 6.000063E+02 | 3.290097E-02 |

| JADE | 4.042788E+02 | 1.628497E+01 | 5.336508E+02 | 8.884047E+00 | 6.000000E+02 | 0.000000E+00 |

| RCBA | 4.743177E+02 | 3.834853E+01 | 7.518372E+02 | 5.131458E+01 | 6.655776E+02 | 1.003048E+01 |

| EPSO | 4.548814E+02 | 5.265618E+01 | 6.600886E+02 | 2.641726E+01 | 6.000003E+02 | 5.045327E-04 |

| CBA | 5.134138E+02 | 3.745535E+01 | 7.559000E+02 | 5.956804E+01 | 6.661605E+02 | 1.013116E+01 |

| LWOA | 5.100688E+02 | 4.313991E+01 | 7.240533E+02 | 4.400702E+01 | 6.517513E+02 | 9.359289E+00 |

| Algorithm | F7 AVG | F7 STD | F8 AVG | F8 STD | F9 AVG | F9 STD |

|---|---|---|---|---|---|---|

| IRIME | 7.870448E+02 | 1.584953E+01 | 8.597845E+02 | 1.429043E+01 | 9.000000E+02 | 1.461630E-11 |

| EBOwithCMAR | 7.617788E+02 | 1.086112E+01 | 8.241612E+02 | 6.468610E+00 | 1.010946E+03 | 1.865535E+02 |