Abstract

Humans have the remarkable cognitive capacity to rapidly adapt to changing environments. Central to this capacity is the ability to form high-level, abstract representations that take advantage of regularities in the world to support generalization1. However, little is known about how these representations are encoded in populations of neurons, how they emerge through learning and how they relate to behaviour2,3. Here we characterized the representational geometry of populations of neurons (single units) recorded in the hippocampus, amygdala, medial frontal cortex and ventral temporal cortex of neurosurgical patients performing an inferential reasoning task. We found that only the neural representations formed in the hippocampus simultaneously encode several task variables in an abstract, or disentangled, format. This representational geometry is uniquely observed after patients learn to perform inference, and consists of disentangled directly observable and discovered latent task variables. Learning to perform inference by trial and error or through verbal instructions led to the formation of hippocampal representations with similar geometric properties. The observed relation between representational format and inference behaviour suggests that abstract and disentangled representational geometries are important for complex cognition.

Subject terms: Cognitive neuroscience, Neural decoding, Hippocampus

A task in which participants learned to perform inference led to the formation of hippocampal representations whose geometric properties reflected the latent structure of the task, indicating that abstract or disentangled neural representations are important for complex cognition.

Main

Humans have a remarkable capacity to make inferences about hidden states that describe their environment3–5 and use this information to adjust their behaviour. One core cognitive function that enables us to perform inference is the construction of abstract representations of the environment5–7. Abstraction is a process through which relevant shared structure in the environment is compressed and summarized, while superfluous details are discarded or represented so that they do not interfere with the relevant ones8,9. This process often leads to the discovery of latent variables that parsimoniously describe the environment. By performing inference on the value of these variables, frequently from partial information, the appropriate actions for a given context can rapidly be deployed5,10, thereby generalizing from past experience to new situations.

What would be the signature of an abstract neural representation that enables this kind of adaptive behaviour? The simplest form of abstraction is one in which all the irrelevant information is discarded—for example, when the representations of pedestrian crossings in left-driving (UK) and right-driving (USA) nations are two unique and distinct patterns of neural activity that do not depend on sensory details (such as whether the crossing is in the city or countryside) (Fig. 1a). Looking left or right before crossing (two actions separated by a plane in the activity space that represents a linear readout) would readily generalize to the countryside after visiting a city. However, this kind of invariance is rarely observed in the brain. A more general geometric definition of an abstract representation has been proposed11; consider the non-trivial geometrical arrangement in Fig. 1b, in which the geographical area (city or countryside) and the nation of a crossing are represented along two orthogonal directions (the two variables are disentangled). The activity projected along one of these directions is invariant with respect to the value of the other variable. This type of invariance has important computational properties: it allows a simple linear readout to generalize to new situations. We therefore use this property as the defining characteristic of an abstract representation: a representation of a particular variable is abstract if a linear decoder trained to report the value of that variable can generalize to new conditions. The novel conditions are defined by the values of other variables. Representations with these properties have been observed in monkeys11–13, in rodents14,15 and in artificial neural networks11,16,17. Are these abstract representations also observed in the human brain? How are they formed as a function of learning, and do they matter for behaviour? The hippocampus is thought to be critical for the implementation of abstraction and inference-related computations10,11,16–23, but it remains unknown whether abstract representations can emerge in the hippocampus in the timescales needed for rapid learning.

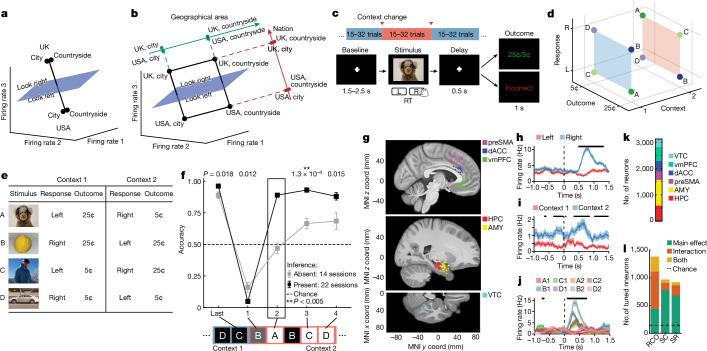

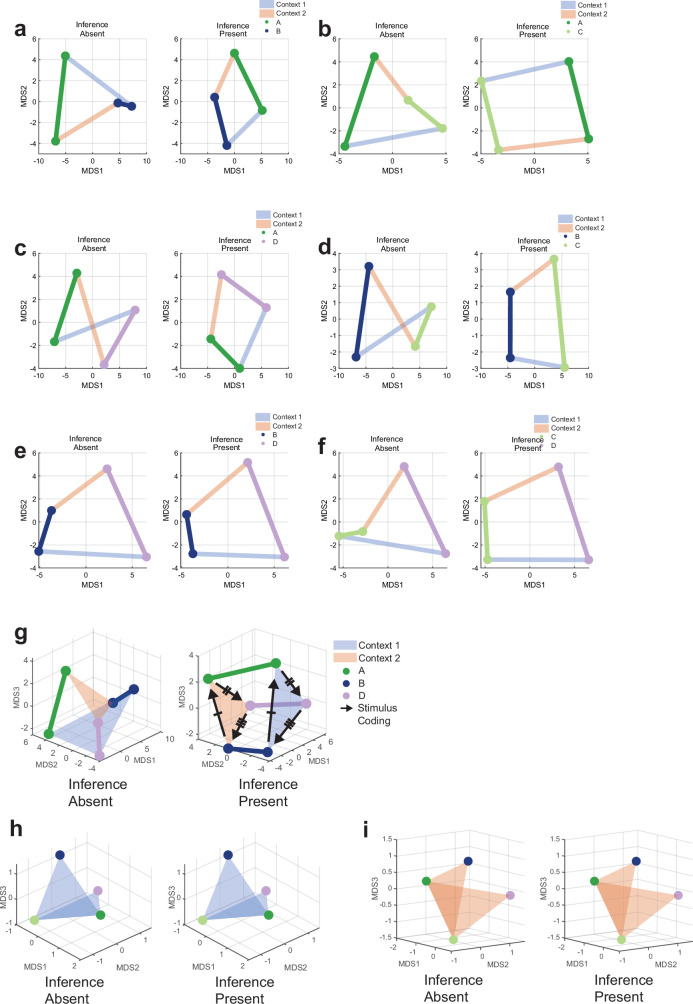

Fig. 1. Task, behaviour and single-neuron tuning.

a,b, Possible definitions of abstraction as clustering (a) or generalization (b). In the latter, the two variables are orthogonal to each other and preserved, whereas one of the variables (geographic area) is discarded in the former. c, Task and example trial. Blocks of trials alternated between the two contexts. In each trial, the stimulus remained on the screen until participants pressed a button, followed by the outcome. d,e, Task structure. d, Each stimulus (A–D) is associated with a single correct response and results in either a high or low reward if the correct response is given. e, Stimulus–response relationships are inverted between contexts 1 and 2. f, Behaviour. Accuracy is shown separately for inference present (n = 22) and absent (n = 14) sessions for the last trial before the context switch, the first trial after the context switch and for the remaining three inference trials averaged over all trials in each session (mean ± s.e.m. across sessions). The dashed line marks chance. The black box indicates inference trial 1. **P < 0.005 for rank-sum inference sbsent versus present over sessions. g, Electrode locations. Each dot denotes a microwire bundle. Locations are shown on the same hemisphere (right) for visualization purposes only. h–j, Example neurons that encode response (h), context (i) and mixtures of stimulus ID (indicated by A–D) and context (indicated by 1 or 2) (j). Error bars are ± s.e.m. across trials. t = 0 is stimulus onset. Black points indicate P < 0.05 of one-way ANOVA of plotted variables. k, Number of units recorded in each brain area. l, Number of single units across all brain areas showing significant main or interaction effects to at least one variable (n-way ANOVA, P < 0.05, Methods). Variables tested: response (R), context (C), outcome (O), and stimulus ID (S). Brain areas assessed: amygdala (AMY), dorsal anterior cingulate cortex (dACC), hippocampu (HPC), presupplementary motor area (preSMA), and ventromedial prefrontal cortex (vmPFC).

Task and behaviour

We recorded the activity of populations of neurons in the brains of patients with epilepsy while they learned to perform a reversal learning task (17 patients, 42 sessions; Supplementary Table 1). Patients viewed a sequence of images and indicated for each whether they thought that the associated action was a ‘left’ or ‘right’ response (Fig. 1c). Participants discovered from the feedback provided after each response what the correct response was for a given image. There were two fixed mappings (stimulus–response maps) between each of the four stimuli: the associated correct response and reward given for a correct response (Fig. 1e). Which of the two fixed mappings was active changed at random times (Fig. 1c), requiring participants to infer when a context switch occurred from the feedback received. The two stimulus–response maps were systematically related: all stimulus–response pairings were inverted between the two contexts (Fig. 1e). Therefore, the participants who had learned the stimulus–response maps could make a mistake immediately after the switch but then, following one instance of negative feedback, they could infer that the context had changed and update their stimulus–response associations according to the other map. Therefore, if participants were performing inference, they could respond accurately to stimuli that they had not yet experienced in the new context. We refer to the trials in which a given stimulus was encountered for the first time following a context switch as inference trials (excluding the first trial that resulted in the negative feedback) and to the remaining trials as non-inference trials. Patients performed with high accuracy in non-inference trials in 36 of the recorded sessions (Extended Data Fig. 1a,b and Methods state exclusion criteria). Each of the 36 included sessions was classified as either an ‘inference present’ or ‘inference absent’ session depending on the accuracy by which patients responded to the first inference trial (Fig. 1f (timepoint 2) and Methods).

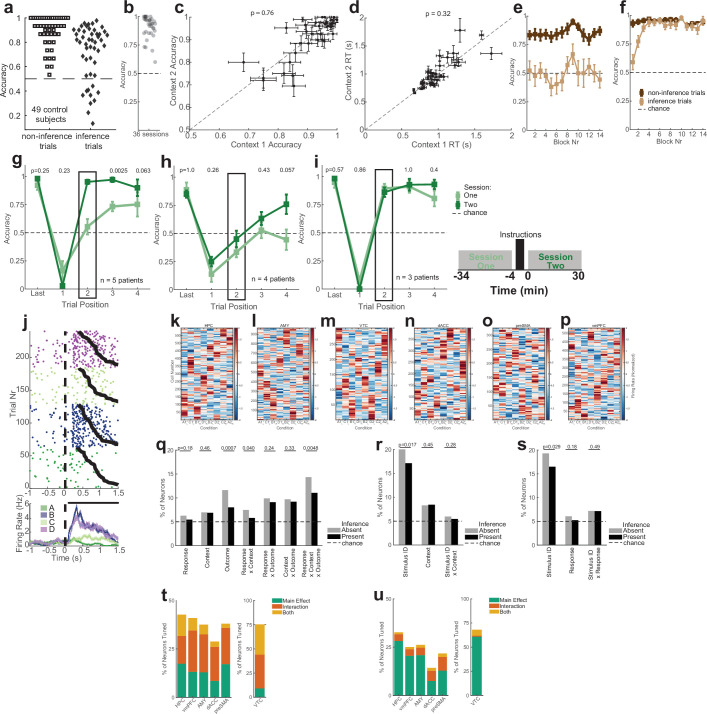

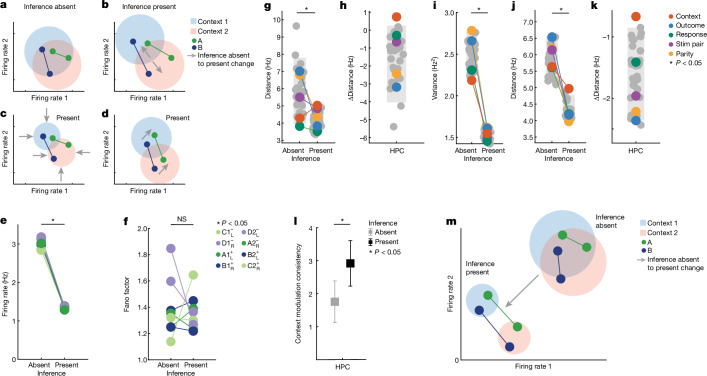

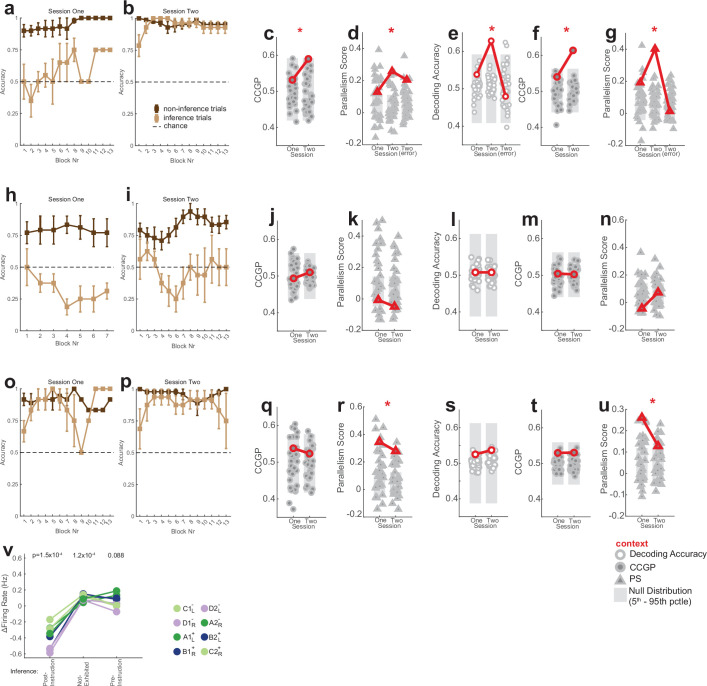

Extended Data Fig. 1. Task behavior and single-neuron responses across all recorded regions.

(a) Task performance of n = 49 control subjects. Accuracy is reported as an average for each subject over all non-inference trials (left) and all inference trials (right; included are 3 the three trials after every switch in which an image was seen the first time after the switch, i.e. timepoints 2–4 in Fig. 1f). Chance is 50%. This task variant is equivalent to the first session of the task encountered by patients (before explicit instructions of latent task structure). 46/49 subjects performing above chance on non-inference trials. (b) Performance of patients in non-inference trials. Each dot is a single session. Only sessions where patients exhibited above-chance accuracy on non-inference trials are shown (36/42 sessions, p < 0.05, one-sided Binomial Test on all non-inference trials vs. 0.5). (c) Non-inference performance for context 1 and 2 for the n = 36 sessions included in the analysis. Error bars are SEM over blocks. The reported p-value is a paired two-sided t-test between the mean accuracies for Context 1 and Context 2 across all sessions. (d) Same as (c), but with reaction time (RT), computed as time from stimulus onset to button press for every trial. Mean RT’s are also computed by block. n = 36 sessions. (e-f) Performance as a function of time in the task for the (e) inference absence and (f) inference present groups. Shown is the accuracy for the last non-inference trial before a switch and the first inference trial after a switch. Accuracy is shown block-by-block averaged over a 3-block window (mean ± s.e.m. across sessions). (g-i) Behavioral performance for the subjects in the post-instruction, not-exhibited, and pre-instruction groups, respectively. See Fig. 1f for notation. Plot shows performance on the last trial before the context switch, the first trial after the context switch, and for the first inference trial (Trial 2) averaged over all trials in each session (mean ± s.e.m. across sessions). Dashed line marks chance. The first inference trial performance (block box) was used to classify patients the patients, so significance is not reported for this trial. P-values are a one-way binomial test vs. 0.5. (j) Example hippocampal neuron that encodes stimulus identity. Raster trials are reordered based on stimulus identity, and sorted by reaction time therein (black curves). Stimulus onset occurs at time 0. Black points above PSTH indicate times where 1-way ANOVA over the plotted task variables was significant (p < 0.05). Errorbars are ±s.e.m. across trials. (k-p) Normalized activity for all neurons recorded from the hippocampus (k), amygdala (l), VTC (m), dACC (n), preSMA (o), and vmPFC (p). Each is plotted as a heat map of trial-averaged responses to each unique condition (8 total, specified by unique Response-Context-Outcome combinations). Z-scored firing rates are computed from 0.2 s to 1.2 s after stimulus onset for every trial. Each row of the heat map corresponds to the activity of a single neuron, and columns correspond to each of the 8 conditions. Neurons are ordered such that adjacent rows (neurons) are maximally correlated in 8-dimensional condition response space. This approach would allow for modular tuning to visibly emerge in the heat map if groups of neurons were clustered in their response profiles. (q) Percentage of neurons across all areas that exhibit tuning. Tuning was assessed by fitting a 2 × 2 × 2 (Response-Context-Outcome) ANOVA for every individual neuron’s firing rate during a 1 s window during the stimulus presentation period. Significant neurons were counted as p < 0.05 for main effects or interaction effects involving the stated variables. Significanctly different proportions of tuned neurons between inference present and absent sessions is determined via a two-sided z-test, where “*” indicates p < 0.05, “***” indicates p < 0.005, and “n.s.” indicates “not significant”. (r) Same analysis as (q), but for a 4 × 2 ANOVA for stimulus identity and context. (s) Same analysis as (q), but for a 4 × 2 ANOVA for stimulus identity and response. (t) Percentages of tuned neurons shown separately for each region (compare to Fig. 1j). Single-neuron tuning is identified using a 3-Way ANOVA (Response × Context × Outcome), corresponding to column 1 (RCO) of Fig. 1j. (u) Same as (t), but single-neuron tuning identified here using 2-Way ANOVA (Stimulus ID × Context), corresponding to column 2 (SC) of Fig. 1j.

Single-neuron recordings

Neural data recorded in the 36 included sessions yielded 2,694 (of 3,124) well-isolated single units, henceforth neurons, distributed across the hippocampus (494 neurons), amygdala (889 neurons), presupplementary motor area (269 neurons), dorsal anterior cingulate cortex (310 neurons), ventromedial prefrontal cortex (463 neurons) and ventral temporal cortex (VTC, 269 neurons) (Fig. 1g,k). Action potentials discharged by neurons were counted during two 1 s long trial epochs: during the baseline period (base, −1s to 0 s before stimulus onset), and during the stimulus period (stim, 0.2 to 1.2 s after stimulus onset).

Single-neuron responses during the two analysis periods were heterogeneous. During the stimulus period, some neurons showed selectivity to one or several of the four variables stimulus identity, response, (predicted) outcome and context (Fig. 1h–j and Extended Data Fig. 1j show example neurons tuned to response and context). Other neurons were modulated by combinations of these variables (Fig. 1j, example neuron tuned to conjunction of stimulus and context). Across all brain areas, 54% of units (1,447 out of 2,694) were tuned to one or several task variables, with 26% of units (706 out of 2,694) showing only interaction effects, 17% (449 out of 2,694) showing only main effects and 11% (292 out of 2,694) showing both when fitting a three-way analysis of variance (ANOVA) for response, context and outcome (Fig. 1l, RCO column, chance was 135 out of 2,694 units, factor significance at P < 0.05 and Extended Data Fig. 1t shows each brain area separately). These findings indicate diverse tuning to many task variables simultaneously across all brain regions (Extended Data Fig. 1k–p,t–u).

Geometric analysis approach

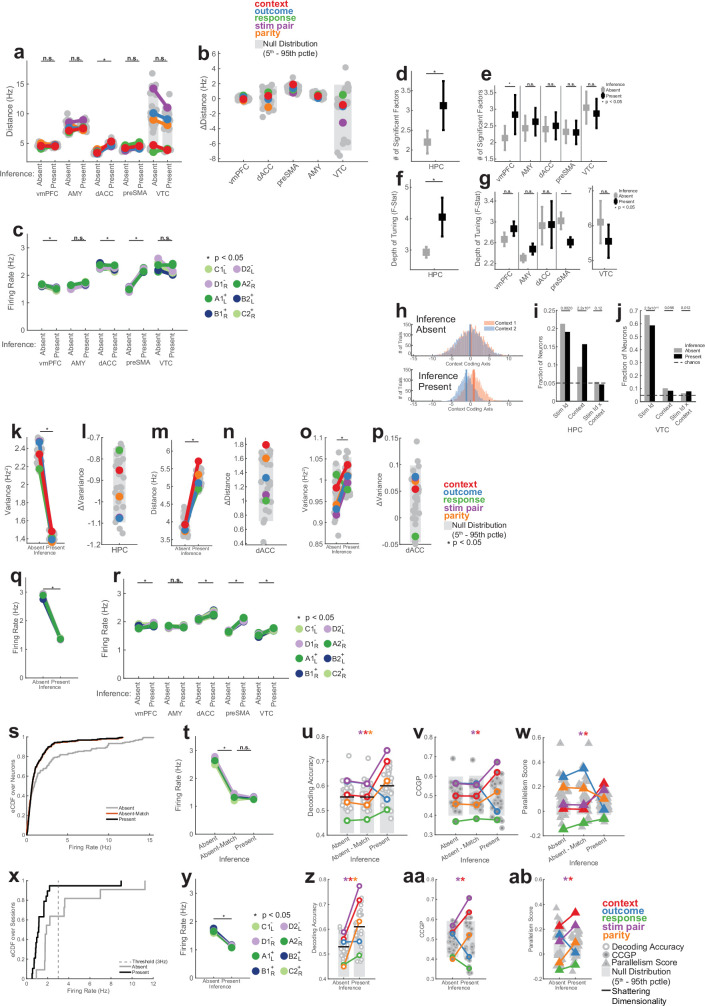

We analysed neural pseudo-populations constructed by pooling all recorded neurons across patients (Methods). In our task, the geometry of a representation was defined by the arrangement in the activity space of the eight points that represented the population responses in different experimental conditions (Fig. 2d). Low-dimensional disentangled geometries would be abstract because they confer on a linear readout the ability to cross-generalize. Consider a simplified situation with three neurons (the axes) and two stimuli in two contexts (Fig. 2a–c). Imagine that the four points (two per context) are arranged on a relatively low-dimensional square (the maximal dimensionality for four points is three), with the context encoded along one side and stimulus along the two orthogonal sides (Fig. 2a). Then, a linear decoder for stimulus (A versus B), trained only on context 1 conditions, can readily generalize to context 2 (Fig. 2b) and the stimulus is said to be abstract. This ability to generalize is due to the parallelism of the stimulus coding directions in the two contexts (Fig. 2c). Moreover, context and stimulus are represented in orthogonal subspaces, and hence, they are called disentangled variables24,25. This means that context is also abstract.

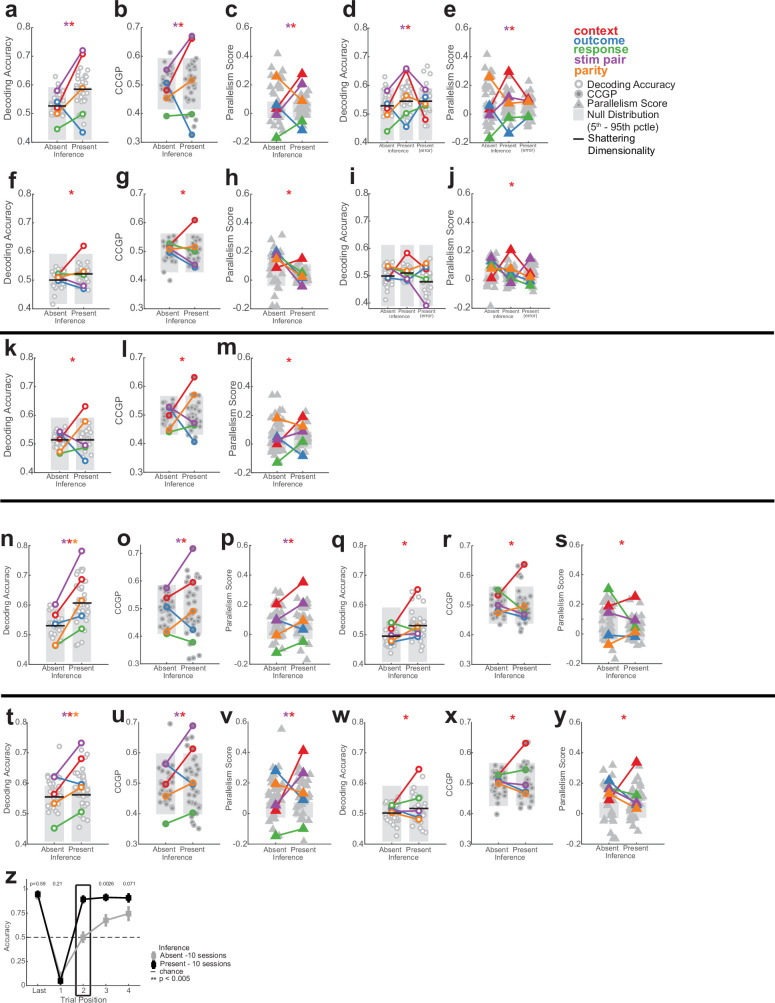

Fig. 2. Multiple abstract variables emerge with inference in hippocampus.

a, Example neural state space formed by three neurons. Points represent the response patterns in various task conditions. Black arrows mark coding vectors. b, CCGP. A decoder is trained to differentiate between stimulus A and B in context 1 and evaluated in context 2. If context is represented in an abstract format, then the decoder generalizes, yielding high CCGP for context. c, PS. In disentangled representations, the coding vectors (arrows) are parallel. d, Illustration of the dichotomies (variables) context, stim pair and parity with class labels indicated. See Extended Data Fig. 2 for all dichotomies. e,f, Neural representation during stimulus period in hippocampus. Context and stim pair are decodable in inference present sessions (e) and are encoded in an abstract format (f). Each dot shows one of the 35 dichotomies. The horizontal black line shows shattering dimensionality. Grey bars denote the 5th–95th percentile of the null distribution. Stars denote named dichotomies that are above chance in inference present sessions and are significantly different from their corresponding inference absent value (PRS < 0.05/35, two-sided rank-sum test, Bonferroni corrected for multiple comparisons across all dichotomies). g, Decodability of all dichotomies for the other brain areas. AMY, amygdala. See e for notation. h,i, Neural representation during baseline period in hippocampus is decodable in inference present (h) and encoded in an abstract format (i). Trials are labelled according to the previous trial. See e,f for notation. Context differed significantly between present and absent (P = 1.1 × 10−33 and P = 2.4 × 10−34, respectively). j, Hippocampal population response during the stimulus period in inference absent and present sessions shown using MDS (Methods). Points correspond to stimuli and context combinations, black lines show hypothetical hyperplanes for context and stimulus pair decoders. In all panels, neuron counts are balanced between inference absent and inference present sessions for every brain area to make values comparable. *P < 0.05.

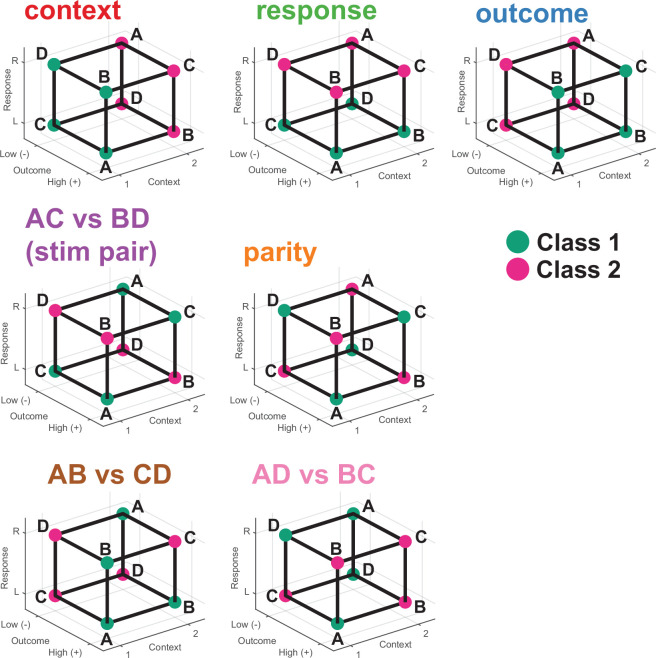

We use two metrics to assess whether information was represented in this way in the neural data: cross-condition generalization performance (CCGP), which assesses the ability of a linear decoder to generalize across conditions, and the parallelism score (PS), which measures the cosine similarity of different coding vectors. High CCGP and PS are defining characteristics of an abstract representation of a variable. We considered the representational geometry of all 35 possible variables. Each variable corresponds to one possible balanced split (dichotomy) of the eight task conditions into two groups of four conditions each (Fig. 1b,d and Extended Data Fig. 2). We highlight in Fig. 2d the interpretable variables that turned out to be important in the analysis: context, behaviourally relevant stimulus grouping (stim pair) and parity (which measures the degree of nonlinear interactions of variables in the neural population). Last, we refer to the average decodability across all possible variables as shattering dimensionality11,26, a metric that assesses the dimensionality of the representation.

Extended Data Fig. 2. Visual representation of all named balanced dichotomies.

Illustration of the named balanced dichotomies that correspond to condition splits that have clearly interpretable meaning with respect to the construction of the task. For example, the context dichotomy (top left), arises from assigning all conditions for context = 1 to one class and all conditions for which context = 2 to the other class. The specific assignment of class labels 1 and 2 is arbitrary, and inverting the labels still corresponds to the same meaning for the dichotomy. All named dichotomies shown here are color coded to reflect their value in all Shattering Dimensionality, CCGP, and Parallelism Score plots, and this color code remains consistent throughout the paper whenever balanced dichotomies are considered.

Context is abstract in hippocampus

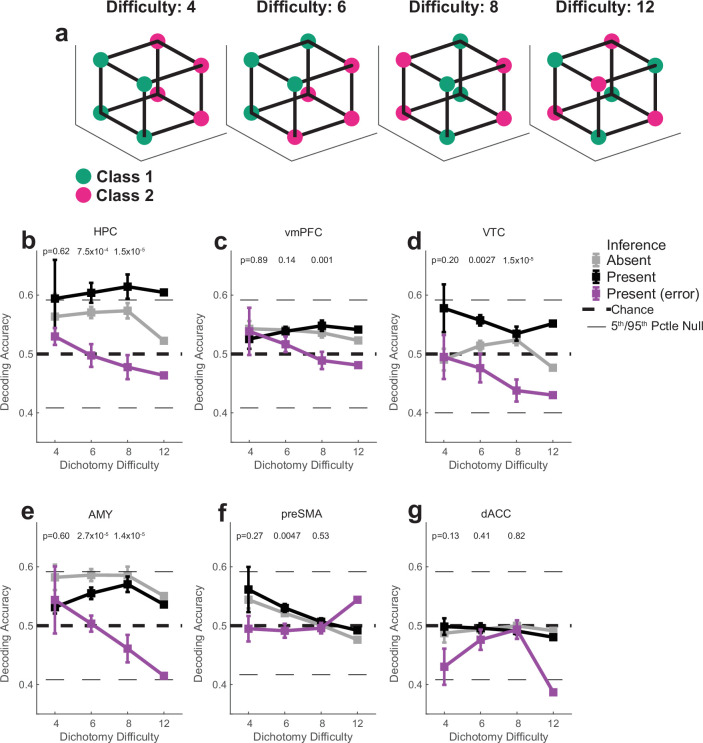

We first compared the decodability of variables between inference present and absent sessions in the hippocampus. Following stimulus onset, shattering dimensionality was larger in inference present sessions (Fig. 2e, inference absent versus present, 0.57 versus 0.62, PRS = 2.7 × 10−3, rank-sum over dichotomies). The two variables that increased the most in decodability were latent context (Fig. 2e, red, inference absent versus present, PRS = 2.9 × 10−27, PAbsent = 0.12, PPresent = 5.1 × 10−5; PAbsent and PPresent are non-parametric significance tests versus chance based on the empirically estimated null distribution and PRS is a pair-wise comparison using a two-tailed rank-sum test) and stim pair (Fig. 2e, purple, inference absent versus present, PRS = 5.0 × 10−27, PAbsent = 0.015, PPresent = 7.9 × 10−7, t). A third dichotomy also became more decodable: parity (Fig. 2d,e, orange; PRS = 1.5 × 10−21, PAbsent = 0.27, PPresent = 0.0055). The parity dichotomy is an indicator of the expressiveness of a neural representation because it probes for nonlinear interactions. Generalizing this finding, dividing different dichotomies into increasing levels of ‘difficulty’ reveals that average decoding accuracy is highest for the most difficult dichotomies in the hippocampus (Extended Data Fig. 5).

Extended Data Fig. 5. Effect of inference and errors on shattering dimensionality as a function of dichotomy difficulty.

“Dichotomy difficulty” quantifies the amount of non-linear interaction of task variables needed in a population of neurons to decode a given dichotomy (see methods). (a) Example dichotomies of increasing difficulty. The difficulty 4 dichotomy corresponds to context and difficulty 12 dichotomy corresponds to parity (Extended Data Fig. 2). (b-g) Decoding accuracy as a function of dichotomy difficulty for different brain regions. Reported values (mean +/− SEM) are computed over dichotomy decoding accuracies, where the average decoding accuracy for each dichotomy is computed with 1000 repetitions of re-sampled estimation (see methods). Black dashed lines indicate chance level (50% for binary decoding), horizontal black lines indicate the 5th and 95th pctle of the null distribution. P-values are computed by conducting a one-way ANOVA over dichotomies independently for every dichotomy difficulty (Bonferroni multipe comparison corrected). This value is not meaningfully computable for difficulty 12, which contains a single dichotomy (the parity dichotomy), and is therefore not reported. Decoding accuracy from the hippocampus (b) is higher in inference present compared to inference present sessions. In error trials, decoding is at chance. n = 1000 random resamples.

We next examined the format of the dichotomies context, stim pair and parity in the hippocampus. During the stim period, CCGP (Fig. 2f and Extended Data Fig. 3d) was significantly elevated for both the context (Fig. 2f, red, inference absent versus present, PRS = 2.0 × 10−28, PAbsent = 0.51, PPresent = 0.02) and stim pair (Fig. 2f, purple, inference absent versus present, PRS = 2.0 × 10−28, PAbsent = 0.17, PPresent = 0.0011) variables in inference present but not in inference absent sessions. Similarly, during the prestimulus baseline period, context alone was encoded in an abstract format only in sessions in which participants could perform inference (Fig. 2h,i and Supplementary Note 1).

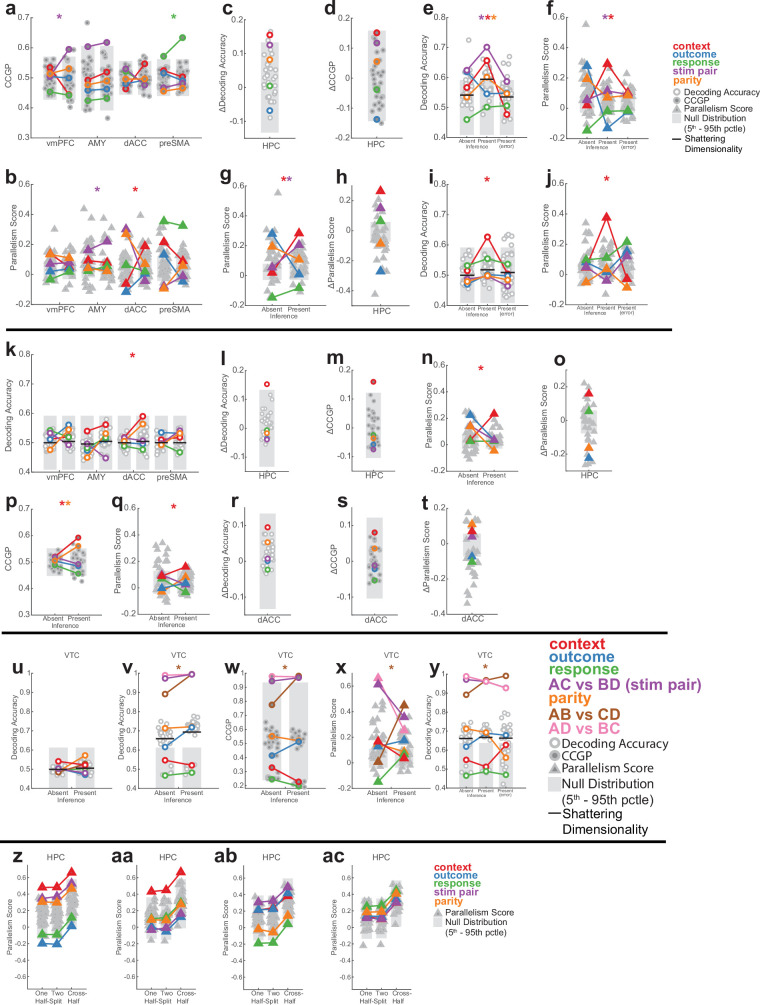

Extended Data Fig. 3. Additional geometric analysis during stimulus processing and baseline periods.

(a) CCGP for other brain regions during stimulus period. See Fig. 2 for notation. Significant named dichotomies are marked when the dichotomies are above the 95th percentile of the null distribution in inference present sessions and significantly different between inference absent and present (RankSum p < 0.01/35, Bonferroni corrected for balanced dichotomies). Significant increases were observed in vmPFC for stim pair (purple, pAbsent = 0.45, pPresent = 0.014) and preSMA for response (green, pAbsent = 0.045, pPresent = 0.0010) Stim pair CCGP in AMY was above chance for both inference absent and present sessions (purple, pAbsent = 0.050, pPresent = 0.039). (b) Same as (a), but for PS. PS increased significantly for stim pair in amygdala (purple, pAbsent = 1.3 × 10−4, pPresent = 9.0 × 10−8) and context in the dACC (red, pAbsent = 0.99, pPresent = 3.9 × 10−12). (c) Change in decoding accuracy. (d) Same as (c), but for CCGP. (e-f) Error trial analysis for neural response following stimulus onset in the hippocampus. Context (red) is not decodable and not in an abstract format in incorrect trials during inference present sessions. Only correct trials are used in inference absent sessions. Horizontal black bars indicate shattering dimensionality. Stars denote named dichotomies that are above chance in the inference present trials and are significantly different from their corresponding inference absent value (p < 0.05/35, Bonferroni corrected). pPresent = 0.0028, pPresent = 2.0 × 10−3, and pPresent = 0.037 for context, stim pair and parity, respectively in panel (e) and pPresent = 1.1 × 10−16 and pPresent = 0.0030 for context and stim pair in panel (f). (g) PS for hippocampus. Context PS was significantly larger (red, pAbsent = 0.55, pPresent = 1.4 × 10−15), as was stim pair (purple, pAbsent = 0.17, pPresent = 1.7 × 10−8). (h) Same as (c), but for PS. (i,j) Error trial analysis for the baseline period in the hippocampus. See (e-f) for notation. pPresent = 0.012 and pPresent = 0 for context in (i) and (j), respectively. (k-o) Analysis of baseline period for other brain regions (k) and the hippocampus (l-o). Compare to Fig. 2h. (k) Significant increases were observed in dACC for context (red, pAbsent = 0.37, pPresent = 0.049). SD was not different from chance (pRS>0.05 or all areas). (l-m) Change in decoding accuracy and CCGP. (n) PS. Context is the only named dichotomy for which the PS is significantly different from chance in nference present sessions (red, pAbsent = 0.37, pPresent = 1.2 × 10−10). (o) Change is PS shown in (n). (p-t) Analysis of baseline period for the dACC. (p) Context (red, pAbsent = 0.26, pPresent = 0.018) is in an abstract format. (q) Context PS (red, pAbsent = 0.18, pPresent = 0.013) is significant in inference present sessions. (r-s) Change in decoding accuracy (r), CCGP (s), and PS (t). Parity and context PS increase significantly (p = 0.0016 and p = 0.026, respectively). (u-y) Analysis of responses in VTC. (u) Decoding during pre-stimulus baseline. None of the dichotomies are decodable during inference absent or present (p > 0.05 for all dichotomies) and SD does not significantly differ (0.50 vs 0.51, pRS = 0.34). (v-y) Analysis of stimulus period. (v) Decodability. The stimulus dichotomies are decodable both during inference absent and inference present sessions. SD increased significantly (inference absent vs present, 0.66 vs 0.70, pRS = 0.0056). Dichotomies: purple, pAbsent = 6.8 × 10−13, pPresent = 6.6 × 10−14, brown, pAbsent = 2.2 × 10−9, pPresent = 6.0 × 10−14, pink, pAbsent = 1.1 × 10−13, pPresent = 6.7 × 10−14. Context is not significantly decodable (red, pAbsent = 0.24, pPresent = 0.38). (w) CCGP. Two stimulus dichotomies are in an abstract format in inference absent and all three are in an abstract format in inference present (purple, pAbsent = 0.0054, pPresent = 0.0036, brown, pAbsent = 0.057, pPresent = 0.0029, pink, pAbsent = 0.0030, pPresent = 0.0032). (x) PS. PS for two of the stimulus dichotomies is above chance in inference absent sessions, and all three are above chance in inference present sessions (purple, pAbsent = 0, pPresent = 4.3 × 10−13, brown, pAbsent = 0.73, pPresent = 0, pink, pAbsent = 0, pPresent = 5.9 × 10−7). (y) Error trial analysis. Decoders are trained on correct trials and evaluated on error trials in inference present sessions. All stimulus identity-related dichotomies are decodable during error trials (purple, pPresent(error) = 7.8 × 10−11, brown, pPresent(error) = 1.1 × 10−13, pink, pPresent(error) = 8.7 × 10−11) and SD does not decrease (black bar, inference present vs present (error), 0.67 vs. 0.66, pRS = 0.65). (z-ac) Cross-session generalization. (z) PS for context during the stimulus period for random half-splits of the inference present sessions (Left, Middle column, 11 sessions in each half). Cross-half context PS is also computed through cross-session neural geometry alignment (Right Column, see Methods). Baseline context PS is significantly above chance within each half and across halves (pHalf-Split One = 0.0081, pHalf-Split Two = 0.0098, pCross-Half = 0.033). (aa) Same as (z), but for the baseline period. Context PS is significantly above chance within each half and across halves (pHalf-Split One = 0.0029, pHalf-Split Two = 0.0022, pCross-Half = 0.010). (ab) Same as (z), but for the inference absent sessions (7 sessions in each half) during the stimulus period. (ac) Same as (ab), but for the baseline period. In all panels, the gray shaded bar indicates 5th-95th percentile of the null distribution and horizontal black lines indicate SD. All pAbsent, pPresent, pHalf-split, and pCross-Half values stated are estimated empirically based on the null distribution shown. All pRS values stated are a two-way ranksum test.

This difference in representation between inference absent and inference present sessions was unique to the hippocampus. No other recorded region showed a significant change in shattering dimensionality (Fig. 2g, black line, all P > 0.05) or decodability of the variable context or parity (Fig. 2g, red and orange). Although other task variables were also represented in an abstract format in other brain regions, only the hippocampus simultaneously represented the two variables context and stim pair in an abstract format (Extended Data Fig. 3a,b and Supplementary Results). These two variables are thus represented in roughly orthogonal subspaces (Fig. 2j shows a summary of this roughly disentangled neural geometry).

Context is absent in error trials

We next examined error trials to test whether the presence of context as an abstract variable in the hippocampus was associated with trial-level performance. Contrasting correct with error trials in inference present sessions revealed that decodability and format of the relevant dichotomies in error trials was similar to that in inference absent sessions both during the stimulus period (Extended Data Fig. 3e,f) and during the baseline period (Extended Data Fig. 3i,j). This includes, in particular, the context and parity dichotomy (Extended Data Fig. 3e,f, see legends for statistics). These findings demonstrate that both the content and format of the hippocampal neural representation are correlated with behaviour on a trial-by-trial basis.

Stimulus and context are abstract

Many individual hippocampal neurons in humans encode the identity of visual stimuli27,28. In our data, 109 out of 494 (22%) of neurons in the hippocampus were tuned to stimulus identity (Fig. 3a and Extended Data Fig. 6g,h show examples). We therefore next asked how the variable context interacted with stimulus identity and how this interaction changed with the ability to perform inference. As the four visual stimuli do not share any apparent structure, we do not expect to observe any structured geometry within each context. For this reason, we studied the geometry of pairs of stimuli (for example, stimulus A versus B) in the two contexts. To contrast with the hippocampal results, we examined the responses in the VTC, in which 195 out of 269 (73%) of neurons (Fig. 3d and Extended Data Fig. 6i,j show examples) were modulated by stimulus identity. At the population level, VTC neurons encoded stimulus identity-related balanced dichotomies in an abstract format (Extended Data Fig. 3u–y, purple, brown, pink, PAbsent/Present < 10−10). The dichotomy context, however, was not decodable in the VTC in both inference present and absent sessions (baseline period, Extended Data Fig. 3u, red; compare with Fig. 2h and stimulus period, Extended Data Fig. 3v, red, compare with Fig. 2e). Furthermore, error trial analysis showed that stimulus-related dichotomies were still decodable during errors in VTC (Extended Data Fig. 3y, purple, brown, pink, PPresent(Error) < 10−10) but not the hippocampus (Extended Data Fig. 3e, stim pair dichotomy). Context was therefore encoded as an abstract variable in the hippocampus but not in the VTC in correct trials. In error trials, VTC but not hippocampus represented stimulus identity. This contrast provides us with an opportunity to examine what changes in the hippocampus specifically when the behaviourally relevant variable context was represented in an abstract format.

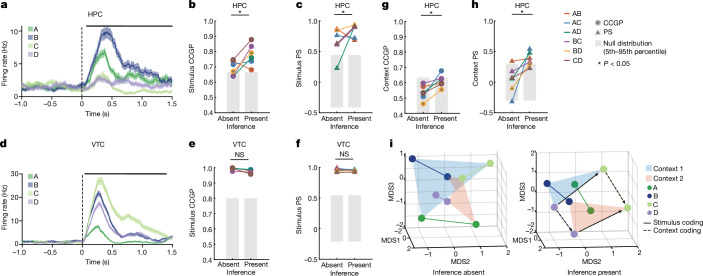

Fig. 3. Stimulus representations become structured around context with inference in hippocampus but not VTC.

a–f, Encoding of stimulus identity across contexts. a–c, Responses in hippocampus (HPC) following stimulus onset carry information about stimulus identity. a, Example hippocampal neuron encoding stimulus identity. b,c, Representational geometry of stimulus identity across contexts. Analysis is conducted over pairs of stimuli in each context (legend). Significance of differences is tested using a two-sided rank-sum test comparing inference absent and present over all stimulus pairs (*P < 0.05, NS otherwise). All other conventions identical to those in Fig. 2. b,c, CCGP (PRS = 0.041) (b) and PS (PRS = 0.040) (c) for stimulus coding across contexts significantly increased in inference present compared to inference absent sessions. d–f, Responses in VTC following stimulus onset carry information about stimulus identity. d, Example VTC neuron encoding stimulus identity. e,f, CCGP (PRS = 0.15) (e) and PS (PRS = 0.39) (f) for stimulus coding across contexts does not differ significantly between inference absent and inference present sessions. g,h, Same analysis as in a–f, but for encoding of context across stimulus pairs for hippocampus (see b,c for plotting conventions). CCGP (PRS = 0.012) (g) and PS for context coding vectors between pairs of stimuli (PRS = 0.015) (h) both significantly increase from inference absent to inference present sessions. i, Summary of changes in neural geometry in hippocampus. Shown is the MDS of condition-averaged responses of all recorded neurons shown for inference absent and present sessions. Points are average population vector responses to combinations of stimuli and context. Lines connect the same stimuli across context. Abstract coding of stimulus across contexts (solid arrows) and context across stimuli (dashed arrows) are highlighted for one pair of stimuli (C and D). The data in this plot are identical to those of Fig. 2j. Error bars in a,d are ±s.e.m. across trials. All PRS values are from a two-sided rank-sum test.

Extended Data Fig. 6. Cross-condition generalization performance for stimulus identity and context defined over stimulus pairs.

(a-f) Illustration of analysis over pairs of stimuli. When considering a pair of stimuli (e.g. A and B) across two contexts (e.g. 1 and 2), there are four possible task conditions (A1, B1, A2, B2). On these points, stimulus (A1A2 vs B1B2) and context (A1B1 vs A2B2) can be decoded in a straightforward manner, but is not informative about the format in which stimulus and context are encoded. Rather, the CCGP for stimulus across contexts (a-c) and for context across stimuli (d-f) provide information about the structure of the two variables and how they interact. (a-c) Illustration of CCGP for assessing whether stimuli are abstract with respect to context. (a) A linear decoder (blue bar) is trained to distinguish between stimuli A and B in context 1 (blue + and – correspond to class labels for training). The decoder is then tested (generalized) on context 2, where stimulus identity is decoded (red bar, + and – for class labels). (b) The training step. (c) The testing step. Arrows show the stimulus and context coding vectors. (d-f) Illustration of CCGP for assessing whether context is abstract with respect to stimulus identity. See (a-c) for notation. (g-j) Example neurons from hippocampus (g,h) and VTC (i,j) with tuning for stimulus identity. Plotting conventions identical to those used in Extended Data Fig. 1j. (k-l) Distances between pairs of stimulus representations in hippocampus (k) and VTPC (l). Color code indicates stimulus pair. Distance is the Euclidean distance between the stimulus centroids, each of which is an N (# of neurons) dimensional vector of average firing rates during stimulus presentation. Neuron counts are balanced between inference absent and inference present sessions. Null distributions are geometric nulls. Significance of the difference is tested by two-sided ranksum test computed over stimulus pairs, and n.s. indicates p > 0.01. pRS = 0.39, pRS = 0.40, pRS = 0.13, and pRS = 0.026 for panels (k-l), respectively. (m-n) Decodability of stimulus identity for hippocampus (m) and VTC (n). Each datapoint is a binary decoder between the two stimulus identities in a given pair. Significance of the difference between inference absent and inference present decodability is also established by Ranksum test over average decoding accuracies and n.s. indicates p > 0.05.

We next conducted a geometric stimulus-pair analysis to study the interaction of stimulus identity and context coding in the same neural population. The stimulus-pair analysis was designed to detect the presence of simultaneous abstract coding of stimulus identity across contexts and abstract coding of context across stimuli (see Extended Data Fig. 6a–f for an illustration).

The average stimulus decoding accuracy across all individual stimulus pairs in the hippocampus did not differ significantly between inference absent and inference present sessions (0.73 versus 0.76; Extended Data Fig. 6m, PRS = 0.13, rank-sum over stimulus pairs). By contrast, the geometry of the stimulus representation changed: it became disentangled from context as indicated by significant increases in stimulus CCGP (Fig. 3b, PRS = 0.041) and stimulus PS (Fig. 3c, PRS = 0.040) in the inference present sessions. This finding suggests that the representation of stimulus identity was reorganized with respect to the emerging context variable. Note that context was not decodable in inference absent sessions as a balanced dichotomy (Fig. 2e, red). Nevertheless, stimulus decoders did not generalize well across the two contexts in inference absent sessions. This result indicates that context did modulate stimulus representations in the hippocampus, but in a way that was entangled with stimulus identity. This effect was specific to the hippocampus: in VTC, the neural population geometry was unchanged, as indicated by no significant differences in stimulus decodability (Extended Data Fig. 6n, PRS = 0.15), stimulus CCGP (Fig. 3e, PRS = 0.15), stimulus CCGP (Fig. 3e, PRS = 0.15) and stimulus PS (Fig. 3f, PRS = 0.39).

The presence of abstract coding for one variable (stimulus identity) does not necessarily imply that the other variable (context) is also present in an abstract format. Therefore, we next examined the variable context separately for each pair of stimuli. In the hippocampus, context was decodable for individual pairs of stimuli both during inference absent and inference present sessions, without a significant difference between the two (Extended Data Fig. 7a; 0.63 versus 0.67; PRS = 0.065). However, in inference present sessions, the format of the variable context changed so that it was abstract across stimulus pairs as indicated by increases in context CCGP (Fig. 3g, PRS = 0.012) and context PS (Fig. 3h, PRS = 0.015) relative to the inference absent group. By contrast, in the VTC, whereas context was decodable from some stimulus pairs (Extended Data Fig. 7b, see legend for statistics), the format of the representation did not change in the way that would be expected for the formation of an abstract variable. Rather, there was a significant decrease in context CCGP (Extended Data Fig. 7c, PRS = 0.026) and no significant difference in context PS (Extended Data Fig. 7d, PRS = 0.39).

Extended Data Fig. 7. Additional context CCGP analysis over stimulus pairs for hippocampus and ventral temporal cortex (stimulus period).

(a-b) Context decoding accuracy for individual stimulus pairs in hippocampus (a) and VTC (b). (c-d) Context CCGP and Context PS for individual stimulus pairs for VTC (compare to Fig. 3g,h for hippocampus). n.s. is p > 0.01 of two-tailed ranksum test comparing absent vs. present. pRS = 0.026 for (c). (e-h) Example neurons from hippocampus (e-g) and VTC (f-h) that are modulated by both stimulus identity and context. Error bars in PSTH (bottom) are ± s.e.m. across trials. (g,h) Mean ± s.e.m. firing rates during the stimulus period. Black arrows indicate the direction in which the firing rate for a stimulus is modulated by context. n = 120 trials. (i) Change in the consistency of context-modulation for stimuli averaged over stimulus-tuned neurons in VTC (n = 104) and HPC (n = 63). Context modulation consistency is the tendency for a neuron’s firing rate to shift consistently (increase or decrease) to encode context across stimuli (see methods). There was a significant interaction between brain area (HPC/VTC) and session type (inference absent/present); 2 × 2 ANOVA, pArea = 0.36, pInference = 0.64, px = 4.5 × 10−5), indicating that modulation consistency increased in HPC in inference present sessions, whereas the opposite was the case in VTC.

These findings indicate that the emergence of context as an abstract variable in the hippocampus when patients can perform inference is coupled with the reorganization of stimulus representations so they are also more disentangled, thereby forming a jointly abstracted code for stimuli and context. This transformation of the representation is visible directly in a reduced dimensionality visualization of the data (Fig. 3i, Extended Data Fig. 8 and Supplementary Video 1). By contrast, we found no systematic reorganization of stimulus representations in VTC.

Extended Data Fig. 8. Hippocampal MDS plots summarizing changes in stimulus and context geometry.

(a-f) 2D MDS plots for individual stimulus pairs. See Fig. 2j for notation. MDS was conducted independently for inference absent and inference present sessions, making individual MDS axes not directly comparable. But note that relative distances are comparable because we matched the number of neurons. Only correct trials are shown. Disentangling of context and stimulus identity is present across most stimulus pairs, with the notable exception of the B/D stimulus pair (e), which is correlated with outcome and therefore cannot be dissociated from outcome using CCGP. The emergence of quadrilaterals with approximately parallel sides for all other stimulus pairs (a-d, f) is a signature of disentangling of stimulus identity and context. (g) Changes in neural geometry. MDS of condition-averaged responses of all recorded HPC neurons shown for inference absent (left) and inference present (right) sessions. All plotting conventions are identical to those in (a-f), except MDS was applied with Ndim = 3, and three stimuli (A,B,D) are plotted simultaneously. Black arrows on the inference present plot highlight parallel coding of stimuli across the two context planes. (h,i) MDS plots of HPC condition-averaged responses shown for context 1 (h) and context 2 (i) separately. Axes are directly comparable here between inference absent and present due to alignment via CCA prior to plotting. Note that the stimulus geometry in each context is a tetrahedral (maximal dimensionality, unstructured) regardless of the presence or absence of inference behavior.

Explaining the geometrical changes

We next examined what aspects of neuronal activity changed in the hippocampus to give rise to abstract variables. We considered the following non-mutually exclusive possibilities: (1) increase in distances between conditions (Fig. 4a,b), (2) decrease in variance of the population response along the coding direction (Fig. 4c) and (3) increase in parallelism of coding directions (Fig. 4d).

Fig. 4. Firing-rate properties underlying the emergence of abstract variables in the hippocampus.

a–d, Changes that could give rise to abstract variables. Shaded circles represent variability, and grey arrows signify changes between inference absent and present. a, Original, when variable is not abstract. b, Increase in distance. c, Decrease in variance. d, Increase in parallelism. e, Firing rates of hippocampal neurons during stimulus period decreased (PRS = 8.3 × 10−5, two-sided rank-sum over conditions). Colour indicates task state, with coding indicating identity (for example, task condition C1−L describes stimulus C, context 1, outcome , response L). f, Fano factor was not significantly different between inference present and absent sessions (two-sided rank-sum test, PRS = 0.99). g, Population distance between centroids for all 35 balanced dichotomies. Average distances decrease from inference absent to present (PRS = 2.9 × 10−8, rank-sum over dichotomies). Grey bars indicate the 5th–95th percentile of the geometric null distribution. h, Context alone is the only dichotomy whose distance significantly increases from inference absent to present (red, PΔDist = 0.040, against geometric null of difference). HPC, hippocampus. i, Average trial-by-trial variance projected along the coding direction decreased on average between inference absent and inference present sessions (PRS = 6.5 × 10−13, rank-sum test). j,k, Same as g,h, but for spike counts during the baseline period. Trials are grouped by identify the previous trial. Distance was significantly reduced across all dichotomies (j, PRS = 6.4 × 10−13, rank-sum over dichotomies) and context alone shows a distance reduction that is smaller than would be expected by chance (k, red, PΔDist = 0.027, against geometric null of difference). l, Stimulus-tuned neurons in the hippocampus were modulated by context more consistently in inference present sessions (PRS = 0.0039) during the stimulus period (n = 63, error bars are ±s.e.m. across neurons). m, Illustration of changes in neural state space. Context dichotomy distance increased, variance decreased and consistency of stimulus modulation across contexts increased. In all panels, PRS values are from a two-sided rank-sum test and grey bars indicate the 5th–95th percentile of the geometric null distribution.

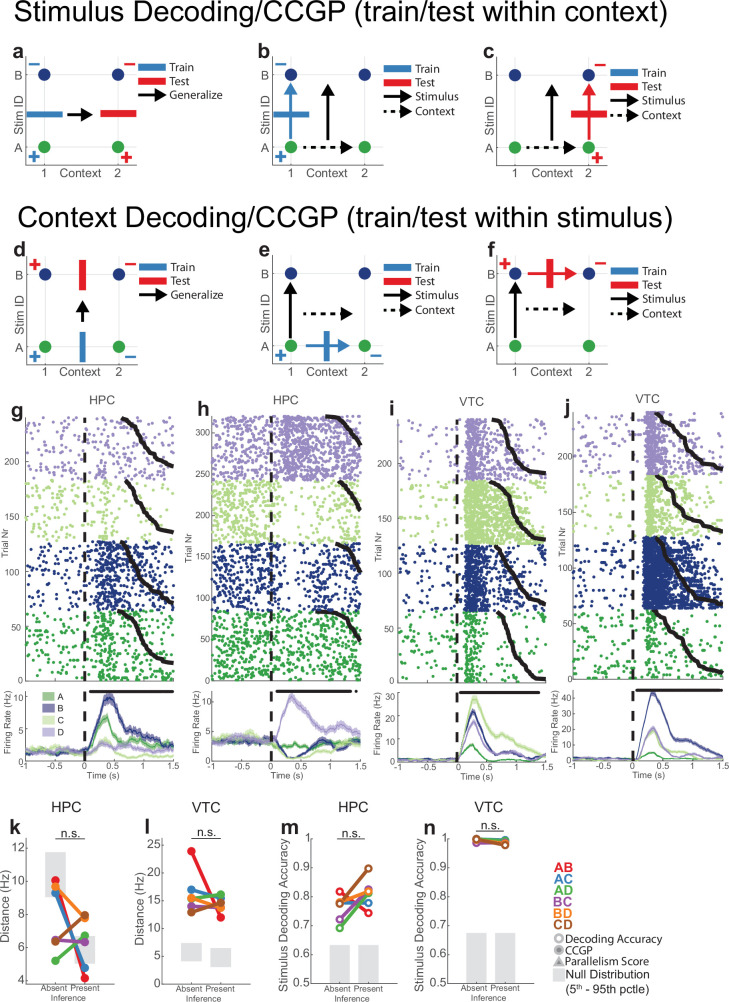

We first examined whether mean firing rates across all recorded neurons differed between inference absent and inference present sessions. The firing rate across conditions decreased from 3.37 ± 0.13 to 1.36 ± 0.03 Hz (±s.e.m.): a 60% reduction on average during the stimulus period (Fig. 4e, PRS = 8.3 × 10−5). Firing rates were also reduced during the baseline period (3.29 ± 0.09 to 1.38 ± 0.02 Hz, 58% reduction, Extended Data Fig. 9q). This firing-rate reduction was unique to the hippocampus (Extended Data Fig. 9c,r). The firing-rate reduction led to a decrease in the average distance between class centroids across all dichotomies in inference present sessions except one (5.77 ± 0.22 to 4.17 ± 0.07 Hz, PRS = 2.9 × 10−8, Fig. 4g). The lone exception was the context dichotomy, for which distance increased (4.3 versus 5.0 Hz, PAbsent = 0.87, PPresent = 0.076, PΔDist = 0.040, Fig. 4g,h and Extended Data Fig. 9h). Indeed, context was the dichotomy with the largest change in distance in firing-rate space when comparing the inference present and inference absent conditions (Fig. 4h). This isolated significant rise in context separability was not seen in any of the other recorded brain areas during the stimulus period (Extended Data Fig. 9a,b). Similarly, during the baseline period, the distance between context centroids decreased the least in the hippocampus (5.6 versus 5.0 Hz, PAbsent = 0.68, PPresent = 0.0007, PΔDist = 0.027, Fig. 4j,k) despite the significant decrease in distance over all dichotomies that was also observed here due to the firing-rate reduction (5.85 ± 0.08 to 4.25 ± 0.04 Hz, PRS = 6.5 × 10−13, Fig. 4j).

Extended Data Fig. 9. Additional analysis for firing rate property changes that are underlying geometric changes.

(a-j) Stimulus period analysis. (a) Distance between centroids for other brain regions. Plotting conventions are identical to Fig. 4g. Neuron counts were only balanced for each region. Significant change in average dichotomy separation determined by a two-tailed ranksum test, Bonferroni corrected for 5 multiple comparions. (b) Changes in inter-centroid distance for balanced dichotomies. No distances for named dichotomies changed more than would be expected by chance. (c) Mean firing rates for individual task conditions for all regions other than HPC. See Fig. 4e for notation. Significant change in average dichotomy separation determined by a two-tailed ranksum test, Bonferroni corrected for 5 multiple comparions. (d-g) Changes in single-neuron tuning quantified by a 3-way ANOVA (Response, Context, Outcome) with interactions. Significant factors (p < 0.05) were identified for every neuron and averages of both the number of factors per neuron (d,e) and the depth of tuning of those factors quantified by the F-Statistic (e,g) are reported (mean ± s.e.m. across neurons). Significance of difference between inference absent and present sessions was assessed by two-tailed ranksum test over significant neurons between the two groups. n = 58,47,24,22,96,118 for HPC, vmPFC, AMY, dACC, preSMA, and VTC, respectively. (h) Assessment of single trial variability of context coding. For each trial, the population response was projected onto the coding axis for context. Vertical lines indicating the mean. (i-j) Fraction of hippocampal (i) and VTC (j) neurons that exhibit selectivity for a given variable. For every neuron, selectivity is determined with a 4 × 2 ANOVA (Stimulus Identity, Context), with a per-factor significance threshold of p < 0.05. Significant differences in tuned fractions between inference absent and inference present assessed with two-tailed z-test. (k-r) Baseline period analysis for hippocampus (k-l) and dACC (m-p). (k) Average trial-by-trial variance of individual trials projected onto the coding direction for every dichotomy. See Fig. 4i for notation. Average variance along coding directions decreased significantly between inference absent and inference present sessions (pRS = 6.5 × 10−13, ranksum over dichotomies). (l) Change in variance for all dichotomies shown in (k). No named dichotomies fell outside the null distribution. (m-n) Same as (a,b) but for the dACC at baseline. See Fig. 4g for plotting conventions. Average distance between dichotomy centroids increased (pRS = 2.9 × 10−8, ranksum over dichotomies). Context was significantly separated (pAbsent = 0.48, pPresent = 0.0065). (n) Changes in distance between inference present and inference absent sessions for all dichotomies shown in (m). Context alone (red, pΔ = 0.047) exhibited a greater increase in distance than expected by chance. (o-p) Same as (k-l), but for he dACC. Average variance along coding directions increased significantly (pRS = 6.0 × 10−3, ranksum over dichotomies). (q) Mean baseline firing rates in hippocampus (pRS = 1.6 × 10−4, ranksum over conditions). See Fig. 4e for plotting conventions. Ranksum test over conditions. (r) Same as (q) but for the other brain areas. Ranksum test over conditions. Note that all brain regions other than AMY exhibit slight but significant increases (pRS = 0.050, 0.23, 1.6 × 10−4, 1.6 × 10−4, and 1.6 × 10−4 for vmPFC, AMY, dACC, preSMA, and VTC, respectively). (s-w) Control analysis for stimulus period after distribution-matching for firing rate. (s) Distribution of mean stimulus firing rates over all hippocampal neurons in the inference absent (gray) and inference present (black) sessions, as well as randomly thinned inference absent firing rates that distribution-match the inference present firing rates (orange). (t) Mean firing rates before and after distribution matching. Ranksum test over conditions. pRS = 1.6 × 10−4 for absent vs. absent-match. (u-w) Replication of key results for the set of neurons that are distribution matched. Plotting conventions are those shown in Fig. 2. No meaningful differences are present between inference absent and distribution-matched inference absent for any dichotomy/metric. (u) pPresent = 1.8 × 10−6, pPresent = 6.4 × 10−6, and pPresent = 0.016 for context, stim pair, and parity respectively. (v) pPresent = 0.035 and pPresent = 0.0047 for context and stim pair. (w) pPresent = 7.2 × 10−10 and pPresent = 3.6 × 10−6 for context and stim pair. (x-ab) Control analysis for stimulus period after excluding high-hippocampal-firing-rate sessions. (x) Distribution of mean hippocampal firing rate over inference absent (gray) and inference present (black) sessions. Each point in the distribution corresponds to the mean hippocampal firing rate over all neurons in a single session. Vertical dashed line indicates 3 Hz threshold. Hippocampal neurons from all inference absent and inference present sessions above this threshold were excluded from analysis shown in (y-ab). 131/169 inference absent neurons (10/14 sessions) and 318/325 inference present neurons (21/22 sessions) are retained. (y) Same as (t), but computed using all sessions with mean hippocampal firing rate <3 Hz (pRS = 1.6 × 10−4). (z-ab) Neural geometry measures re-computed excluding hippocampal neurons from high-firing-rate sessions. No meaningful differences are apparent except the above-chance context PS in inference absent sessions (red, pAbsent = 2.2 × 10−8). In all panels, * indicates p < 0.05 and ns indicates not significant. All pAbsent, and pPresent values stated are estimated empirically based on the null distribution shown. All pRS values stated are a two-sided ranksum test.

Next, we assessed changes in the variability of the population response along the coding direction of each dichotomy. The variance along the coding direction of neuronal responses in the hippocampus decreased for all dichotomies in inference present when compared to inference absent sessions during both the stimulus period (2.51 ± 0.16 versus 1.53 ± 0.06, PRS = 6.5 × 10−13, Fig. 4i) and the baseline period (2.49 ± 0.09 versus 1.58 ± 0.02, PRS = 6.5 × 10−13, Extended Data Fig. 9k,l). However, this decrease could be a consequence of the reduction in firing rates under the assumption of Poisson statistics. We conducted a condition-wise fano-factor analysis to assess whether the variance reduction was beyond that expected for the reduction in firing rates. This analysis revealed no significant differences in fano factors between inference absent and inference present sessions during the stimulus period (1.39 ± 0.22 versus 1.36 ± 0.14, PRS = 0.99, Fig. 4f) and the baseline period (1.61 ± 0.26 versus 1.45 ± 0.11, PRS = 0.19). Together, these two findings suggest that the decrease in variance along dichotomy coding directions is explained by the decreases in firing rate.

Did changes in tuning of individual neurons give rise to the increases in parallelism for context across stimuli (see Extended Data Fig. 7e–h for examples)? A stimulus-tuned neuron also modulated by context could do so consistently across all stimuli (for example, firing rate increased for all stimuli), or inconsistently (for example, firing rate increased for some stimuli and decreased for others). We quantified the consistency of context modulation across stimuli for each individual neuron (Methods). The consistency of context modulation in the hippocampus increased significantly in inference present sessions (Fig. 4l and Extended Data Fig. 7i, 1.8 ± 0.2 versus 2.9 ± 0.3, PRS = 0.0049). This effect was specific to hippocampus: in VTC, the same metric decreased significantly (Extended Data Fig. 7i, 2.6 ± 0.3 versus 1.6 ± 0.2, PRS = 0.0039).

These data indicate that the geometric changes seen in the hippocampus were due to the following (Fig. 4m): (1) an increase in separation between condition average representations of the two contexts despite relaxing towards the origin (decrease in firing rate), (2) decreases in variance along the coding direction, and (3) neurons becoming increasingly consistent (parallel coding directions) in their modulation across stimulus and context dimensions.

Effect of verbal instructions

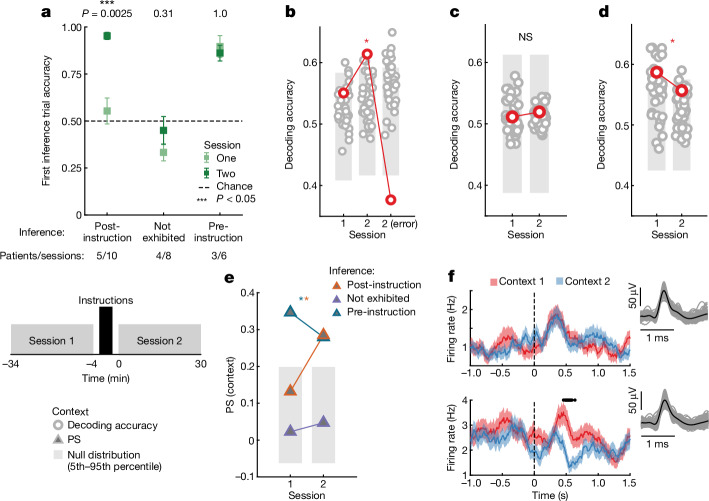

Did the format of the representation differ between participants who discovered the underlying latent variable context by themselves and those who only did so after receiving verbal instructions? We provided all patients with verbal instructions detailing the latent task structure after session 1 (Fig. 5, inset), allowing us to examine this question. We divided patients into three types on the basis of their behaviour: those who showed inference behaviour in the first session (pre-instruction inference, three patients, six sessions; Extended Data Fig. 1i); those who showed inference behavior after being given verbal instructions (post-instruction inference, five patients, ten sessions; Extended Data Fig. 1g); and those who did not perform inference even after being provided with verbal instructions (inference ‘not exhibited’, four patients, eight sessions; Extended Data Fig. 1h). Only patients who performed accurately in non-inference trials in both sessions one and two were included in one of these three groups (Extended Data Fig. 1g–i, ‘last’; five patients excluded, Supplementary Table 1). The principal difference between the post-instruction (Fig. 5a and Extended Data Fig. 10a,b) and inference not exhibited (Fig. 5a and Extended Data Fig. 10h,i) groups is their ability to perform inference following the verbal instructions, with both groups performing the task accurately otherwise. The pre-instruction inference group, on the other hand, showed above-chance inference performance during both sessions (Fig. 5a and Extended Data Fig. 10o,p).

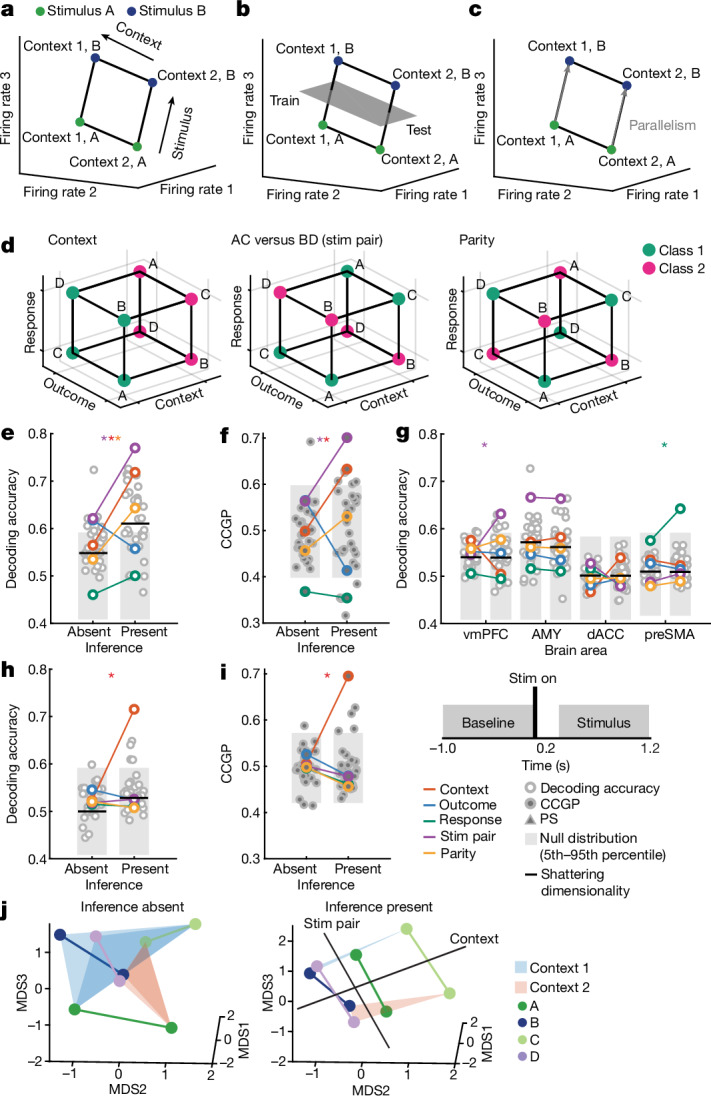

Fig. 5. Abstract hippocampal representation of context is present following successful verbal instructions.

a, Top, behavioural performance on the first inference trial for patients that performed inference after instructions (n = 10 sessions, post-instruction), those that did not perform inference even after instructions (n = 8 sessions, not exhibited) and those that performed inference already before instructions (n = 6 sessions, pre-instruction). Error bars are ±s.e.m. across sessions and P values are rank-sum session 1 versus 2. Bottom, schematic of the experiment. Session before and after high-level instructions are referred to as sessions 1 and 2, respectively. b–d, Encoding of context during the stimulus period in different groups of patients. The first trial following a switch is excluded from this analysis. *P < 0.05 against null in any column of a given geometric measure plot estimated empirically from the null distribution. b, Post-instruction group. Context was significantly decodable in session 2 correct but not error trials and also not in session 1 (P1 = 0.17, P1(correct) = 0.016, PRS = 3.1 × 10−19, P2(error) = 0.99). c, Not exhibited group. Context was not significantly decodable (P1 = 0.44, P2 = 0.42). d, Pre-instruction group. Context was decodable in session 1 (P1 = 0.014, PTwo = 0.17). e, Summary of changes due in instructions based on the PS for context. Neuron counts are equalized across groups by subsampling. Context PS increases significantly from session 1 to 2 in the post-instruction group (PPostinstruction,i = 0.20, PPostinstruction,2 = 0.0028). Context PS is not significantly different from chance for the not exhibited group (PNot exhibited,1/2 < 0.5) and is different from chance in both sessions for the pre-instruction inference (PPre-instruction,1/2 < 0.005) group. All P values are versus chance and are empirically estimated from the null distribution. f, Example hippocampal neuron with univariate context encoding in the session after (bottom) but not before (top) instructions (one-way ANOVA, POne = 0.40, PTwo = 0.010). Error bars are ±s.e.m. across trials.

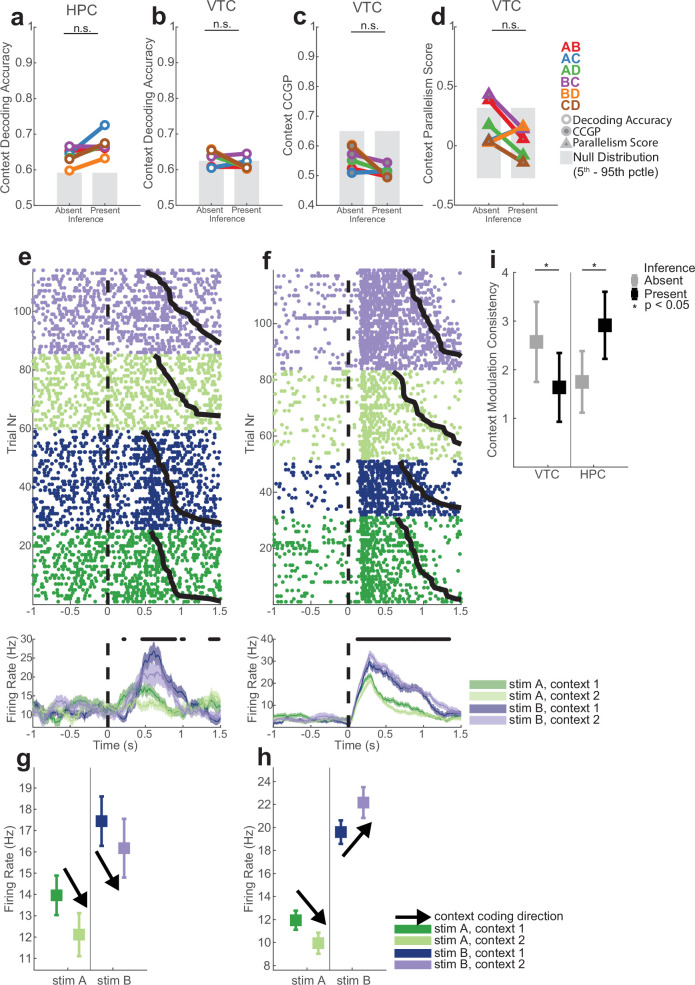

Extended Data Fig. 10. Additional analysis of the effect of instructions on hippocampal neural geometry.

(a-g) Post-instruction inference group. (a-b) Behavior. Identical to Extended Data Fig. 1e,f, except now the session recorded immediately preceding and immediately following verbal instructions are shown. Average performance is computed as a moving average with a 3-block window on the last three trials before a context switch (non-inference) and on the first inference trial after a switch (inference). Error bars are standard errors computed over subjects. Chance performance is 0.5. (c-d) Geometric measures during the stimulus period. Only context is shown as a named dichotomy for visual clarity. (c) CCGP (context, red, pOne = 0.27, pTwo = 0.046, pRS = 1.4 × 10−31) and (d) PS (context, red, pOne = 0.029, pTwo = 3.5 × 10−6, pTwo(error) = 0.0028). (e-g) Geometric measures during the baseline period. (e) Decoding accuracy (context, red, pOne = 0.35, pTwo = 0.0014, pTwo(error) = 0.55, pRS = 1.4 × 10−20). (f) CCGP (context, red, pOne = 0.33, pTwo = 0.0037, pRS = 3.0 × 10−34). (g) PS (context, red, pOne = 0.017, pTwo = 7.5 × 10−8, pTwo(error) = 0.40). (h-n) Same as (a-g), but for inference not-exhibited group. (j-k) Geometric measures during the stimulus period. (j) CCGP (context, red, pOne = 0.56, pTwo = 0.39, pRS = 0.004). (k) PS (context, red, pOne = 0.81, pTwo = 0.95). (l-n) Geometric measures during the baseline period. (l) Decoding accuracy (context, red, pOne = 0.45, pTwo = 0.45, pRS = 0.68). (m) CCGP (context, red, pOne = 0.45, pTwo = 0.47, pRS = 0.15). (n) PS (context, red, pOne = 0.93, pTwo = 0.30) for the. (o-u) Same as (a-g), but for the pre-instruction inference group. (q-r) Geometric measures during the stimulus period. (q) CCGP (context, red, pOne = 0.23, pTwo = 0.19, pRS = 0.0045). (r) Parallelism Score (context, red, pOne = 6.3 × 10−8, pTwo = 4.5 × 10−7). (s-u) Geometric measures during the baseline period. (s) Decoding accuracy (context, red, pOne = 0.37, pTwo = 0.47, pRS = 0.036), (t) CCGP (context, red, pOne = 0.30, pTwo = 0.50, pRS = 5.9 × 10−7), and (u) PS (context, red, pOne = 1.7 × 10−5, pTwo = 0.029). (v) Changes in hippocampal firing rates for the 3 different sub-groups of session pairs. Firing rate changes are computed during the stimulus presentation period (0.2 s to 1.2 s after stim onset) from consecutive sessions. Points are average changes in condition-averaged firing rates (8 unique conditions). Changes in firing rate that significantly differed from zero (two-sided t-test, p < 0.05/3, boneferroni corrected) are indicated with a “*” (p = 1.5 × 10−4, 1.2 × 10−4, and 0.088). Post-instruction inference group alone exhibited significant decrease in firing rate. Inference not-exhibited group exhibited an increase in firing rate. In all panels stated p-values denoted as pOne and pTwo are estimated empirically based on the null distribution shown. All pRS values stated are a two-way ranksum test.

In the post-instruction inference group, context was decodable in the hippocampus during the stimulus period on correct trials in the session following the verbal instructions (Fig. 5b, POne = 0.17, PTwo = 0.016, PRS = 3.1 × 10−19).This representation of context was in an abstract format, as indicated by significant increases in both CCGP (Extended Data Fig. 10c; POne = 0.28, PTwo = 0.047, PRS = 8.4 × 10−16 and PS (Extended Data Fig. 10d; POne = 0.023, PTwo = 1.2 × 10−6). Successful performance in the task was associated with context being represented abstractly in the hippocampus, as both the decodability (Fig. 5b, PTwo(error) = 0.99, session 2 correct versus error, PRS = 4.3 × 10−20) and PS (Extended Data Fig. 10d, PTwo(error) = 1.1 × 10−4) of context decreased significantly on error trials in session 2. Context was also encoded in an abstract format during the baseline period in the same performance dependent manner as context in the stimulus period (Extended Data Fig. 10e–g). By contrast, in patients in the inference not exhibited group, context was not encoded by hippocampal neurons during the stimulus (Fig. 5c and Extended Data Fig. 10j,k, all POne/Two > 0.05) nor the baseline (Extended Data Fig. 10l,n all POne/Two > 0.05) periods in session 2. Thus, the ability of post-instruction group patients to perform inference following instructions was associated with the rapid emergence of an abstract context variable in their hippocampus.

This effect could also be appreciated at the single-neuron level in the hippocampus. In the instruction successful group, the proportion of neurons that are linearly tuned to context (P < 0.05, one-way ANOVA for context) during both the stimulus (8% (6 out of 75 neurons) versus 18% (17 out of 93 neurons), P = 0.027) and baseline (7% (5 out of 75 neurons) versus 16% (15 out of 93 neurons), P = 0.029) periods increased in session 2 versus session 1 (Fig. 5f shows an example). By contrast, in the not exhibited group, there was no significant change in tuning to context at the single-neuron level both during the stimulus period (6% session 1 versus 6% session 2, P = 0.41) and the baseline period (8% session 1 versus 5% session 2, P = 0.27).

For the pre-instruction inference patient group, context was already decodable during session 1 (Fig. 5d, POne = 0.014) and the PS was significant and near the top of the dichotomy rank order in sessions 1 and 2 (Extended Data Fig. 10r, POne = 1.5 × 10−9, PTwo = 1.7 × 10−6). A similar trend was observed with the baseline context representation for these patients (Extended Data Fig. 10s–u). This finding suggests that the context variable these patients learned experientially during session 1 was in an abstract format.

Last, we compared the geometry of the context representations formed by each of the three patient groups (balancing number of neurons, Methods). Context PS increased significantly in the post-instruction inference group, from levels not different from chance during session 1 (POne,Post-inst = 0.20, Fig. 5e) to a level comparable to the pre-instruction inference group during session 2 (PTwo,Post-inst = 0.0028, PTwo,Pre-inst = 0.0035, Fig. 5e). The PS in the pre-instruction inference group, on the other hand, did not change significantly and was already above chance in session 1 (Fig. 5e, see legend for statistics). These findings suggest that hippocampal neurons in the pre-instruction inference group carried an abstract representation of context before receiving verbal instructions, and retained that geometry after receiving instructions. Furthermore, neurons of participants in the post-instruction inference group encode a task representation whose geometry resembled that of the pre-instruction group, indicating that a similar representational geometry can be constructed through either experience or within minutes through instruction to support inference in a new task.

Discussion

How can a neural or biological network efficiently encode many variables simultaneously11,29? One solution is to encode variables in an abstract format so they can be re-used in new situations to facilitate generalization and compositionality24,30–34. Here we show that such an abstract representation emerged in the human hippocampus as a function of learning to perform inference. The format by which latent context and stimulus identity were represented was predictive of the ability to perform behavioural generalizations that rely on contextual inference. Patients performed well on non-inference trials in all sessions included in the analysis, indicating that they understood the task. Therefore, the difference between the inference present and absent sessions was only in whether they performed inference following the covert context switch (Fig. 1f). For those sessions in which patients did not perform inference, there was no systematic relationship between context coding vectors across stimuli. For sessions in which patients performed inference, there was alignment of the context coding direction across stimuli (making them parallel), indicating that the context variable had been disentangled from the stimulus identity variable in the hippocampi of these patients (Figs. 2j and 3i). As a result, the two variables became disentangled, thereby allowing generalization. This representation was implemented by the hippocampus using a broadly distributed code as evidenced by the high context PS (Extended Data Fig. 3f,g,j,n) and the lack of reliance on univariately tuned context neurons to generate the abstract context representation (Extended Data Fig. 4a–j and Supplementary Note 2). Thus, the geometry we study here did not trivially arise from classically tuned neurons.

Extended Data Fig. 4. Additional control analyses for Hippocampal representational geometry after excluding univariantly tuned neurons.

Identical analysis to the main geometric analysis shown in Fig. 2, except that neurons are excluded from the analysis with the following criteria: in (a-j), neurons with significant linear tuning for Context, Response, or Outcome (2 × 2 × 2 ANOVA, Any Main Effect p < 0.01), and in (k-m), neurons with significant linear tuning for Stimulus Identity or Context (4x2 ANOVA, Any Main Effect p < 0.01). 455/494 neurons were retained for the stimulus period analysis (a-e) and 458/494 neurons were retained for the baseline period analysis (f-j). All primary results for changes in hippocampal geometry were recapitulated apart from decodability of the parity dichotomy during the stimulus period (a). (a-e) Stimulus period analysis. (a) Decodability. Context (red, pAbsent = 0.36, pPresent = 0.0001, pRS = 1.6 × 10−31) and stim pair (purple, pAbsent = 0.078, pPresent = 4.2 × 10−5, pRS = 6.6 × 10−31) was decodable and SD (0.54 vs. 0.58, pRS = 0.0013) increased. (b) CCGP. Context (red, pAbsent = 0.63, pPresent = 0.0016, pRS = 5.2 × 10−34) and stim pair (purple, pAbsent = 0.17, pPresent = 0.00095, pRS = 5.3 × 10−34) increased. (c) PS. Context (red, pAbsent = 0.40, pPresent = 3.7 × 10−13) and stim pair (purple, pAbsent = 0.83, pPresent = 1.2 × 10−7) increased. (d-e) Error trial analysis. (d) Decodability. Context (red, pAbsent = 0.36, pPresent = 0.0029, pPresent(error) = 0.64, pRS = 1.5 × 10−20) and stim pair (purple, pAbsent = 0.071, pPresent = 0.0021, pPresent(error) = 0.062, pRS = 2.0 × 10−5) were decodable only in error trials. SD was not significantly different (inference present vs present (error), 0.56 vs. 0.55, pRS = 0.62) during the stimulus presentation. (e) PS. Context (red, pAbsent = 0.40, pPresent = 4.6 × 10−15, pPresent(error) = 0.012) was largerest in correct trials. (f-j) Baseline analysis. (f) Context decodability (red, pAbsent = 0.37, pPresent = 0.013, pRS = 2.2 × 10−26) and SD (black, 0.50 vs. 0.52, pRS = 0.036). (g) CCGP. Context (red, pAbsent = 0.31, pPresent = 0.0044, pRS = 1.9 × 10−33) differed significantly. (h) PS. Context differed significantly (red, pAbsent = 0.12, pPresent = 0.0055). (i-j) Error trial analysis during the baseline. (i) Decodability. Context was elevated but not significantly during correct trials (red, pAbsent = 0.55, pPresent = 0.12, pPresent(error) = 0.37). SD increased significantly (black, inference present vs present (error), 0.51 vs. 0.49, pRS = 0.030). (j) PS. Context increased significantly in correct trials (red, pAbsent = 0.66, pPresent = 8.5 × 10−9, pPresent(error) = 0.30). (k-m) Same as (a-c), but after removing neurons tuned to stimulus identity using the 2-Way ANOVA during the stimulus period. 412/494 neurons were retained. Context remains in an abstract format. (k) Context decodability (red, pAbsent = 0.38, pPresent = 0.0088, pRS = 4.1 × 10−28). SD was not significantly different (black, 0.53 vs. 0.53, pRS = 0.69). (l) CCGP. Context (red, pAbsent = 0.51, pPresent = 6.0 × 10−4, pRS = 2.5 × 10−34) increased significantly. (m) PS. Context (red, pAbsent = 0.77, pPresent = 2.3 × 10−6) increased significantly. (n-s) Seizure onset zone exclusion analysis. Analysis shown is identical to Fig. 2, except that hippocampal neurons recorded in seizure onset zones were removed. 410/494 neurons were retained for analysis. Results were effectively identical to that reported in Fig. 2, with every significant named dichotomy increase during stimulus (n-p) and baseline (q-s) periods being recapitulated in the absence of SOZ hippocampal neurons. (t-z) Non-inference performance control analysis. Identical analysis to the main geometric analysis shown in Fig. 2, except that inference absent and inference present sessions were distribution-matched for non-inference trial performance. Pairs of inference absent and inference present sessions with at most 7.5% difference in non-inference trial performance were selected, prioritizing sessions with more hippocampal neurons. This matching process yielded 10 inference absent sessions (152 neurons) and 10 inference present sessions (187 neurons) whose average non-inference performances did not statistically significantly differ (92.8% v.s. 94.7%, pRS = 0.58, ranksum over sessions). All main geometric findings were recapitulated for the stimulus (t-v) and baseline (w-y) periods. (z) Distribution-matched behavior. P-values are one-way binominal test vs. 0.5. n = 10 sessions in each group. Error bars are ±s.e.m. across sessions. In all panels, the gray shaded bar indicates 5th–95th percentile of the null distribution and horizontal black lines indicate SD. All pAbsent, and pPresent values stated are estimated empirically based on the null distribution shown. All pRS values stated are a two-way ranksum test.

Inferential reasoning is thought to rely on cognitive maps, which have been observed in the hippocampus and other parts of the brain19,35–39. Cognitive maps are thought to underlie inferential reasoning in various complex cognitive and spatial domains3,10,35,36,40,41. However, little is known about how maps for cognitive spaces emerge at the cellular level in the human brain as a function of learning. Here we show that a cognitive map that organizes stimulus identity and latent context in an ordered manner emerges in the hippocampus. The cognitive map emerges because task states in one context, indexed by stimulus identity, become systematically related to the corresponding task states in the other context through a dedicated context coding direction that is disentangled from stimulus identity (Fig. 3b,c,g–i). Furthermore, the relational codes between task states (stimuli) in each context are preserved across contexts.

Hippocampal cognitive maps observed in other studies are often different from those that we observed because the encoded variables are observed to nonlinearly interact, a signature of high-dimensional representations. These representations are believed to be the result of a decorrelation of the neural representations (recoding) that is aimed at maximizing memory capacity42–44. This form of preprocessing leads to widely observed response properties, such as those of place cells45. However, there is some evidence of hippocampal neurons that encode one task variable independently of others15,21,46–51. In these studies, no correspondence was shown between different representational geometries in the hippocampus and differences in behaviour. Here the task representations generated when patients cannot perform inference (but can still perform the task) are systematically different from the abstract hippocampal representations of context and stimulus identity that correlate with inference behaviour11. Finally, it is important to stress that we also observed an increase in the shattering dimensionality, which has been in shown in other studies to be compatible with the low dimensionality of disentangled representations11,15.

We found stimulus identity codes in brain regions other than the hippocampus, but these mostly lacked reorganization as a function of learning to perform inference. This code stability is particularly salient in the VTC, a region analogous to macaque IT cortex, in which neurons construct a high-level representation of visual stimuli52–54. Some studies conducting unit recordings in this general region in humans show that neurons show strong tuning to stimulus identity55. We similarly find that VTC neurons encode visual stimulus identity (Fig. 3d–f and Extended Data Fig. 6n). However, these responses were not modulated by latent context in a systematic manner. As a result, despite being decodable for some individual stimulus pairs, context was not represented in an abstract format. Rather, in VTC, context was only weakly decodable for a subset of the stimuli, context decodability did not change between inference absent and inference present sessions (Extended Data Fig. 7b,c), and stimulus identity geometry was not reorganized relative to context in inference present sessions (Fig. 3e,f). Our study therefore shows that disentangled context-stimulus representations emerged in the hippocampus, but not in the upstream visually responsive region VTC.

In our study, verbal instructions resulted in changes in hippocampal task representations that correlated with behavioural changes. The emergence of this representation in the session immediately following the instructions in the post-instruction inference group is correlated with their newfound ability to perform inference and suggests that hippocampal representations can be modified on the timescale of minutes through verbal instructions (Fig. 5). This change in representation is qualitatively different from the standard approach of studying the emergence of a ‘learning set’, wherein a low-dimensional representation of abstract task structure emerges slowly over days through trial-and-error learning47,56,57. Our finding of similar representational structure in the hippocampus in participants who learned spontaneously and those who only learned after receiving verbal instructions suggests that both ways of learning can potentially lead to the same solution in terms of neural representations. In complex, high-dimensional environments, learning abstract representations through trial and error becomes exponentially costly (the curse of dimensionality), and instructions can be used to steer attention towards previously undiscovered latent structure that can be explicitly represented and used for behaviour. Our findings suggest that when high-level instructions successfully alter behaviour, underlying neural representations can be rapidly modified to resemble one learned through experience.

Methods

Participants