Abstract

Objectives

To examine whether comfort with the use of ChatGPT in society differs from comfort with other uses of AI in society and to identify whether this comfort and other patient characteristics such as trust, privacy concerns, respect, and tech-savviness are associated with expected benefit of the use of ChatGPT for improving health.

Materials and Methods

We analyzed an original survey of U.S. adults using the NORC AmeriSpeak Panel (n = 1787). We conducted paired t-tests to assess differences in comfort with AI applications. We conducted weighted univariable logistic regressions and 2 weighted multivariable logistic regression models to identify predictors of expected benefit with and without accounting for trust in the health system.

Results

Comfort with the use of ChatGPT in society is relatively low and differs from comfort with other common uses of AI. Comfort was highly associated with expecting benefit. Other statistically significant factors in multivariable analysis (not including system trust) included feeling respected and low privacy concerns. Women, younger adults, and those with higher levels of education were less likely to expect benefits in models with and without system trust; system trust itself was positively associated with expecting benefits (P = 1.6 × 10−11). Tech-savviness was not associated with the outcome.

Discussion

Understanding the impact of large language models (LLMs) from the patient perspective is critical to ensuring that expectations align with performance as a form of calibrated trust that acknowledges the dynamic nature of trust.

Conclusion

Including measures of system trust in evaluating LLMs could capture a range of issues critical for ensuring patient acceptance of this technological innovation.

Keywords: patient trust, public opinion, artificial intelligence, large language model

Introduction

In the fall of 2022, few people in the general public were aware of the advances in large language models (LLMs) that would soon attract over 100 million people to sign onto ChatGPT within 2 months of its public launch.1 LLMs, a type of Generative Artificial Intelligence (GAI) that can create sophisticated and contextualized text and images based on natural language prompts, have been evolving for decades. However, the complexity of current LLMs (∼100 trillion parameters) and the power of the generative pre-trained transformer (GPT) method have moved the technology from static models (GPT-3) into the phase of real-time human-reinforced training (GPT-4) in a matter of months. Moreover, major industry companies (eg, Google, Microsoft, Epic Systems) are racing to develop, manage, customize, privatize, and implement new derivatives of this powerful technology that will profoundly affect all major industries, including healthcare and public health.2

Current LLM applications suggest powerful capabilities and advancement in a number of uses, eg, generating clinic notes, accurately answering general medical knowledge questions (like those posed in standardized tests), mimicking “curbside consultations” that help make sense of typical patient scenarios (initial presentation, lab results, etc.),3 or summarizing medical forms and reports like Explanation of Benefit statements.4 The potential of generating and drafting messages and other communications to patients has immediate implications for clinician workflow and patient experience, and is already underway at several major academic medical centers.5 Much as the general public can now use ChatGPT to query the Internet, LLM-based tools such as Epic’s GPT-4 integration are allowing electronic health record users, including clinicians and researchers, to conduct data analysis using natural language queries.

Conceptual model of public trust in LLMs

The social contract that makes people (eg, patients and clinicians) a core unit defining relationships in health care is fundamentally challenged by LLMs.6 For example, trust is foundational to the communication necessary for successful doctor-patient relationships.7 The ability of LLMs to produce written text in ways that mimic human knowledge and creativity means that some tasks related to information seeking, summarizing, synthesizing, and communicating can now be done by a computer. Yet LLM applications are prone to “hallucinations” or generating responses to queries that fabricate information in ways that effectively mimic truth or have face validity.3,8 LLMs and other AI tools have quickly revealed the ways in which people can be influenced by their interactions with the technology.9 Trust in the healthcare system has been identified as a predictor of patient engagement and willingness to share information with providers.10,11

Trust and trustworthiness are multidimensional constructs. Whether a person feels confident in being able to place their trust in another person, profession, organization, or system is an assessment based on several factors.7 In the context of health, dimensions frequently cited as shaping the meaning of trust include fidelity, ie, whether the trustee prioritizes the interests of the trustor; competency, ie, whether the trustee can or is perceived to be able to deliver on what they are being trusted to do; integrity, ie, whether the trustee is honest about intentions, conflicts of interest, etc., and general trustworthiness, ie, whether the trustor has confidence that the trustee is, in fact, trustworthy.12–14 Trust and trustworthiness are also often invoked in information technology, where adoption and use are linked to comfort and expecting a benefit from using the technology.15–17 However, we lack knowledge about how LLMs fare in comparison to other AI use cases for society generally.

Public expectations for the use of LLMs are likely to be shaped by past experiences and by familiarity with, if not knowledge of, both the context in which LLMs are used (ie, the healthcare system) and LLMs in general. Past experiences likely to shape attitudes about LLMs include whether a patient feels that their care is accessible and that they are empowered with options when seeking care.18,19 Trust is also likely to be associated with feelings of respect20 and comfort that private information will not be used for harm when receiving medical care.21–23 Knowledge and familiarity with technology such as LLMs outside of healthcare are also likely to shape public attitudes about benefits.24 In May 2023, the Pew Research Center reported that 58% of Americans had heard of ChatGPT, though few had used it.25 In August of the same year, fewer than 1 in 5 had used the technology and even fewer reported confidence that ChatGPT would be helpful to their jobs.26 However, it is unknown to what extent tech-savviness or familiarity with ChatGPT may inform patient perspectives on the acceptability of LLMs in healthcare.

LLMs are set to be a part of a system of care where trust and expectations in one domain will have an impact on relationships in another.27,28 In other words, expectations for LLMs and trust in the health system (ie, system trust) are likely to be related, but have yet to be evaluated empirically.29 How system trust, experiences, and attitudes about access, privacy, respect, and tech-savviness are related to patient expectations for LLMs is important to a robust understanding of the impact of adopting LLMs on patient care and satisfaction.

Objective

The purpose of this paper is to examine 2 emerging research questions. First, is comfort with the use of ChatGPT in society different from comfort with other uses of AI in society? Second, is the expected benefit of the use of ChatGPT in healthcare associated with comfort with the use of ChatGPT in society, accessibility of healthcare, concerns about harm from lack of privacy, perceived respect, and self-rated tech-savviness, and how do these associations change when accounting for trust in the healthcare system?

Methods

We analyzed cross-sectional data from an original survey of English-speaking U.S. adults. The survey sample is a general population sample from the National Opinion Research Center’s (NORC) AmeriSpeak Panel. A total of 2039 participants completed the 22-minute survey (margin of error = ±2.97 percentage points). Black or African American respondents and Hispanic respondents were oversampled to ensure adequate representation. NORC produced poststratification survey weights from the Current Population Survey. To ensure clarity of the questions, we conducted cognitive interviews and piloted the instrument with a sample of AmeriSpeak panel participants. We also conducted semi-structured interviews with patients, clinicians, and experts, which indicated that the difference between AI as an analytical method and the technology or application in which it is used was not commonly known or understood. As such, we felt confident in using these framings interchangeably. The final version of the survey was fielded from June 27, 2023 to July 17, 2023.
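As a rough orientation to the reported margin of error, the sketch below computes the margin for a proportion near 50% under simple random sampling and the design effect that a larger reported margin would imply. It assumes a 95% confidence level (z = 1.96) and p = 0.5. NORC's actual calculation, which accounts for weighting and the oversample, is not described here, so the implied design effect is an illustrative back-calculation, not a figure reported by NORC.

```python
import math


def srs_margin_of_error(n: int, p: float = 0.5, z: float = 1.96) -> float:
    """Margin of error (percentage points) for a proportion under simple random sampling."""
    return 100 * z * math.sqrt(p * (1 - p) / n)


def implied_design_effect(reported_moe: float, n: int) -> float:
    """Design effect implied by a reported margin: (reported / SRS margin) squared.

    Assumption: the reported margin uses the same z and p as srs_margin_of_error.
    """
    return (reported_moe / srs_margin_of_error(n)) ** 2


n_completed = 2039
srs = srs_margin_of_error(n_completed)             # about 2.17 points
deff = implied_design_effect(2.97, n_completed)   # about 1.87 (illustrative only)
print(f"SRS margin: {srs:.2f} points; implied design effect: {deff:.2f}")
```

A design effect above 1 is what one would expect when weights vary across respondents, which is one reason weighted surveys report wider margins than the simple random sampling formula gives.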

Data and analysis

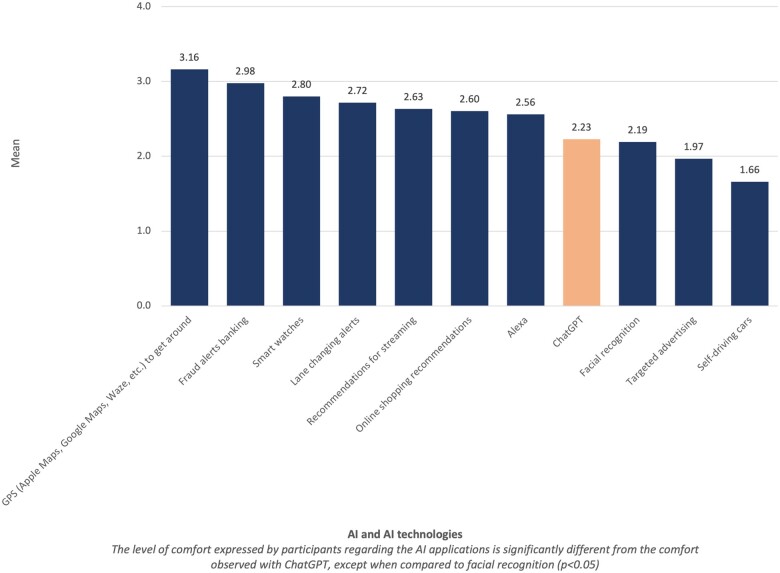

Our analytic sample consisted of people who provided responses to all questions (n = 1787). To answer our first research question of whether comfort with the use of ChatGPT in society is different from comfort with other uses of AI in society, we asked survey participants to rate their level of comfort with the use of AI in 11 different contexts, such as GPS navigation apps (Google Maps, Apple Maps, Waze), streaming recommendations, and online advertising, many of which are familiar in everyday life.30 Participants rated comfort on a scale from Not at all Comfortable (1) to Very Comfortable (4). We then conducted paired t-tests to assess whether the mean level of comfort with the use of ChatGPT differed from that for each of the other uses. We intentionally asked about applications that varied both in how long they have been in use and in the sensitivity or risk that might be associated with them. This allowed us to understand the magnitude of differences and gain insight into where various applications fall along a spectrum from high to low comfort.
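The paired comparison described above can be sketched as follows: each respondent rates both ChatGPT and another application, and the test is run on the within-person differences. This is a minimal, unweighted sketch; whether survey weights were applied to the t-tests is not stated. With about 1787 pairs, the t distribution with 1786 degrees of freedom is practically normal, so the sketch uses the standard library's `NormalDist` for the p-value; for small samples a t distribution (for example, `scipy.stats.ttest_rel`) should be used instead. The ratings in the usage example are hypothetical.

```python
import math
from statistics import NormalDist, mean, stdev
from typing import Sequence, Tuple


def paired_t_test(x: Sequence[float], y: Sequence[float]) -> Tuple[float, int, float]:
    """Paired t statistic for the mean of x - y, its degrees of freedom, and a
    two-sided p-value from a normal approximation (adequate only for large n)."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length samples with at least 2 pairs")
    diffs = [a - b for a, b in zip(x, y)]     # within-respondent differences
    sd = stdev(diffs)
    if sd == 0:
        raise ValueError("differences have zero variance")
    t = mean(diffs) / (sd / math.sqrt(len(diffs)))
    p = 2 * (1 - NormalDist().cdf(abs(t)))
    return t, len(diffs) - 1, p


# Hypothetical 1-4 comfort ratings from the same six respondents.
chatgpt = [2, 1, 3, 2, 2, 1]
gps_apps = [3, 3, 4, 3, 2, 3]
t, df, p = paired_t_test(chatgpt, gps_apps)
print(f"t = {t:.2f}, df = {df}")
```

A negative t here means comfort with ChatGPT was lower than comfort with the comparison application; in the survey this comparison would be repeated once for each of the other applications.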

Our second research question examined the expected benefit of using ChatGPT in healthcare as the outcome of interest. Specifically, we asked “How likely do you think it is that the use of ChatGPT in healthcare will improve the health of people living in the United States?” Response options ranged from Very Unlikely (1) to Very Likely (4). We generated a binary dependent variable, “expected benefit,” grouping Very unlikely and Unlikely into one category (0) and Likely and Very likely into another (1), based on the distribution of the variable and the bipolar nature of the scale.
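The dichotomization can be expressed as a small recoding function. The sketch assumes the 4-point item is stored as integers 1 to 4 (a hypothetical coding) and applies it to the unweighted response counts listed in the Table 1 footnote; the resulting share is close to the 0.460 mean reported in Table 1.

```python
# Assumed coding of the 4-point item:
# 1 = Very unlikely, 2 = Unlikely, 3 = Likely, 4 = Very likely.
def expected_benefit(response: int) -> int:
    """Collapse the 4-point likelihood item into the binary outcome (1 = expects benefit)."""
    if response not in (1, 2, 3, 4):
        raise ValueError(f"unexpected response code: {response}")
    return 1 if response >= 3 else 0


# Unweighted counts for each response, taken from the Table 1 footnote.
counts = {1: 194, 2: 772, 3: 736, 4: 85}
n = sum(counts.values())
n_benefit = sum(c for r, c in counts.items() if expected_benefit(r) == 1)
share = n_benefit / n
print(f"{n_benefit}/{n} = {share:.3f}")  # 821/1787 = 0.459
```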

We then examined factors that might predict expected health benefit. These included comfort with the use of ChatGPT in society, accessibility of healthcare, concerns about harm from lack of privacy, perceived respect, self-rated tech-savviness, and system trust, controlling for demographic factors, health literacy (high/low), and familiarity with ChatGPT. Participants were asked to rate how true statements were on a scale from Not at all true (1) to Very true (4). Accessibility of care was assessed based on the statement: “I feel like I have options about where I receive my medical care” (access); harm from lack of privacy was evaluated based on responses to “I worry that private information about my health could be used against me” (privacy harm)10; respect was framed as “I feel respected when I seek healthcare”; and tech-savviness was assessed as “I consider myself a tech-savvy person.”31

System trust was measured as an index, a short form of a previously validated multidimensional measure that addresses the competency, fidelity, integrity, and trustworthiness of healthcare organizations that have health information and share it.10,13,15 For the current measure, we used 6 questions with a common stem, “the organizations that have my health information and share it” (organizations). Two assessed fidelity: “[organizations] value my needs” and “would not knowingly do anything to harm me.” Two assessed trustworthiness: “[organizations] can be trusted to use my health information responsibly” and “think about what is best for me.” The remaining 2 questions addressed integrity, “[organizations] tell me how my health information is used,” and competency, “[organizations] have specialized capabilities that can promote innovation in health.” To weight each trust dimension equally, the 2 fidelity questions (Cronbach’s alpha = 0.76) and the 2 trustworthiness questions (Cronbach’s alpha = 0.83) were each combined as the average of their responses. A correlation matrix for the questions included in the index is provided in Supplementary Table S1. This yielded 4 dimension scores, each on a scale from 1 to 4, which were summed; the final measure had a minimum value of 4 and a maximum of 16.
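The index construction and the internal-consistency check can be sketched as follows. This is a minimal illustration, assuming each item is stored as an integer from 1 to 4; the function names and the example responses are hypothetical, and the Cronbach's alpha shown is the standard formula α = k/(k − 1) · (1 − Σ item variances / variance of the total score), not output from the study data.

```python
from statistics import pvariance
from typing import Sequence


def cronbach_alpha(items: Sequence[Sequence[float]]) -> float:
    """Cronbach's alpha for k items; items[j][i] is respondent i's answer to item j."""
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least 2 items")
    totals = [sum(resp) for resp in zip(*items)]       # each respondent's summed score
    item_var = sum(pvariance(col) for col in items)    # same variance convention as totals
    return k / (k - 1) * (1 - item_var / pvariance(totals))


def system_trust_index(fid1: float, fid2: float, tw1: float, tw2: float,
                       integrity: float, competency: float) -> float:
    """4-16 index: average the 2 fidelity items and the 2 trustworthiness items,
    then add those averages to the single integrity and competency items."""
    for v in (fid1, fid2, tw1, tw2, integrity, competency):
        if not 1 <= v <= 4:
            raise ValueError("each item is rated 1 to 4")
    return (fid1 + fid2) / 2 + (tw1 + tw2) / 2 + integrity + competency


# Hypothetical responses (1-4) from five respondents to the two fidelity items.
fidelity_a = [1, 2, 3, 4, 4]
fidelity_b = [1, 2, 3, 3, 4]
print(round(cronbach_alpha([fidelity_a, fidelity_b]), 3))  # 0.966
print(system_trust_index(3, 2, 3, 3, 2, 3))                # 10.5
```

Averaging within the 2-item dimensions before summing is what keeps each of the 4 dimensions on the same 1 to 4 footing, so the index spans 4 to 16.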

Control variables included demographic characteristics (sex, age, race/ethnicity, and education), health literacy, and familiarity with ChatGPT. Health literacy was measured based on responses to the question “How often do you need to have someone help you when you read instructions, pamphlets, or other written material from your doctor or pharmacy?”32 People who responded never (70.9% of respondents) were categorized as having “high literacy” and served as the reference group. All others responded rarely, sometimes, often, or always and were categorized as “low literacy.” Familiarity was assessed based on responses to a Yes/No question, “Have you ever heard of ChatGPT or a similar AI chatbot (eg, BARD, OpenAI)?”

Predictors of expected benefit of the use of ChatGPT in healthcare were identified using weighted logistic regression, first in univariable models and then in 2 multivariable models: one using all predictors except system trust, and another using all predictors including system trust, to identify how associations change when also accounting for system trust.
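For readers unfamiliar with weighted logistic regression, the sketch below shows how weighted point estimates can be obtained by Newton-Raphson, maximizing the weighted log-likelihood Σ wᵢ[yᵢ log pᵢ + (1 − yᵢ) log(1 − pᵢ)]. It is a minimal illustration with simulated data and hypothetical weights, not the software or code used in this study. It produces coefficients only: valid standard errors and P values for survey data require design-based variance estimation (for example, linearization that accounts for the panel's sampling design), which this sketch does not attempt.

```python
import numpy as np


def weighted_logit(X, y, w, tol=1e-10, max_iter=50):
    """Weighted logistic regression point estimates via Newton-Raphson.

    X must already contain an intercept column; returns log-odds coefficients.
    """
    X, y, w = (np.asarray(a, dtype=float) for a in (X, y, w))
    beta = np.zeros(X.shape[1])
    for _ in range(max_iter):
        p = 1.0 / (1.0 + np.exp(-X @ beta))                  # predicted probabilities
        grad = X.T @ (w * (y - p))                           # weighted score vector
        info = X.T @ (X * (w * p * (1 - p))[:, None])        # weighted information matrix
        step = np.linalg.solve(info, grad)
        beta = beta + step
        if np.max(np.abs(step)) < tol:
            break
    return beta


# Simulated example: one continuous predictor and hypothetical survey weights.
rng = np.random.default_rng(0)
n = 5000
x = rng.normal(size=n)
X = np.column_stack([np.ones(n), x])              # intercept + predictor
true_beta = np.array([-0.2, 0.6])                 # hypothetical log-odds coefficients
y = (rng.random(n) < 1 / (1 + np.exp(-X @ true_beta))).astype(float)
w = rng.uniform(0.5, 2.0, size=n)                 # hypothetical weights
beta = weighted_logit(X, y, w)
print("odds ratio per 1-unit increase:", round(float(np.exp(beta[1])), 2))
```

Exponentiating a coefficient gives the odds ratio per 1-unit increase in that predictor, which is how the ORs in Table 2 are read; a respondent with weight 2 contributes to the point estimates exactly as two identical unweighted respondents would.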

Results

Descriptive statistics of sample

Descriptive statistics for the sample are provided in Table 1. Our survey sample was split nearly evenly between male (47.9%) and female (52.2%) respondents. Respondents included those aged 18-29 years (15.8%), 30-44 years (29.7%), 45-59 years (23.4%), and 60 years or older (31.2%). Nearly equal shares of Black or African American and Hispanic respondents participated in the survey (26.2% and 25.2%, respectively); 43.8% of respondents identified as White and 4.9% identified as another race or ethnicity. Most respondents (76.8%) had at least some college education: some college or an Associate’s degree (40.4%), a Bachelor’s degree (21.4%), or postgraduate study or a professional degree (15.1%). Most people (70.9%) had high health literacy, indicating that they never need help with reading written medical materials. At the time the survey was conducted, 56.8% of the sample indicated that they had heard of ChatGPT.

Table 1.

Descriptive statistics of variables used in weighted logistic regression: demographic factors; independent and dependent variables (n = 1787).

| Characteristic | N (%) | Mean (SD) |
|---|---|---|
| Have you ever heard of ChatGPT? | | |
| Yes | 1015 (56.80%) | |
| No | 772 (43.20%) | |
| Sex | | |
| Male | 855 (47.85%) | |
| Female | 932 (52.15%) | |
| Age categories (years) | | |
| 18-29 | 282 (15.78%) | |
| 30-44 | 530 (29.66%) | |
| 45-59 | 418 (23.39%) | |
| 60+ | 557 (31.17%) | |
| Race/ethnicity | | |
| White, non-Hispanic | 782 (43.76%) | |
| Black, non-Hispanic | 468 (26.19%) | |
| Hispanic | 450 (25.18%) | |
| Other, including multiple races and Asian Pacific Islander | 87 (4.87%) | |
| Education | | |
| Less than High school | 120 (6.72%) | |
| High school graduate or equivalent | 294 (16.45%) | |
| Some college/Associate degree | 722 (40.40%) | |
| Bachelor's degree | 382 (21.38%) | |
| Post grad study/Professional degree | 269 (15.05%) | |
| Health literacy | | |
| High | 1267 (70.90%) | |
| Low | 520 (29.10%) | |
| Comfort with ChatGPT being used in societyᵃ | | 2.23 (0.983) |
| Tech-savvinessᵇ | | 2.38 (0.979) |
| Worry about private information used against meᵇ | | 2.38 (1.07) |
| I feel like I have optionsᵇ | | 2.63 (0.981) |
| I feel respectedᵇ | | 2.71 (0.916) |
| System trustᶜ | | 8.78 (2.65) |
| Expected benefit of ChatGPT for healthᵈ | | 0.460 (0.499) |

ᵃ 4-point scale: (1) Not at all comfortable; (4) Very comfortable.

ᵇ 4-point scale: (1) Not at all true; (4) Very true.

ᶜ Index, range: (4) Low; (16) High.

ᵈ Binary variable coded as follows: (0) Very unlikely (n = 194, 10.9%) or unlikely (n = 772, 43.2%); (1) Very likely (n = 85, 4.8%) or likely (n = 736, 41.2%).

The mean response for whether people considered themselves technologically savvy was 2.4 (SD = 0.98). Mean ratings were slightly above the scale midpoint of 2.5 for feeling like one has options when seeking medical care (mean 2.6, SD = 0.98) and feeling respected when seeking medical care (mean 2.7, SD = 0.92), and slightly below the midpoint for worry that private information could be used against them (mean 2.4, SD = 1.1). The mean of the system trust index, which ranges from 4 to 16, was 8.8.

Comfort with the use of ChatGPT in society

The mean level of comfort with the use of ChatGPT in society was 2.2 (SD = 0.98) on a 4-point scale (1 = Not at all comfortable; 4 = Very comfortable). Mean comfort with the use of AI in other contexts, from GPS navigation apps to facial recognition and targeted advertising, ranged from 1.7 (self-driving cars) to 3.2 (GPS navigation apps) (see Figure 1). Mean comfort with the use of ChatGPT in society differed significantly from mean comfort with every other use (P < .05) except facial recognition software. This indicates that comfort with ChatGPT falls toward the lower end of the comfort spectrum, below comfort with most AI applications included in the survey.

Figure 1.

Comfort with the use of AI in society (n = 1787).

Predictors of expected benefit of the use of ChatGPT in health care

We then examined the relationship between expectation of benefit of ChatGPT for health and comfort, accessibility of healthcare, concerns about harm from lack of privacy, and perceived respect, self-rated tech-savviness, and system trust.

Univariable analysis

When examining the univariable relationship between expectation of benefit and the independent variables of interest using weighted logistic models (see Table 2), we found that each 1-point increase in comfort with the use of ChatGPT in society was associated with nearly twice the odds of expecting benefits from the use of ChatGPT in healthcare (OR = 1.9, P < .001). People who more strongly felt that they had options when seeking medical care (OR = 1.4), felt respected when receiving medical care (OR = 1.5), and had greater levels of system trust (OR = 1.3) also had higher odds of expecting benefits from the use of ChatGPT (P < .001 for each). Concern about private information being used for harm was negatively associated with expected benefit (OR = 0.8, P = .001). Identifying as “tech-savvy” was not associated with expectations of benefits from the use of ChatGPT for health (P = .072).
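Because odds ratios are easy to misread as risk ratios, the sketch below converts an odds ratio into the probability it implies from a chosen baseline. It is illustrative only: it assumes a baseline probability of 0.460 (the overall share expecting benefit in Table 1) and applies the univariable comfort OR of 1.91 from Table 2, whereas the true baseline depends on where on the comfort scale one starts.

```python
def apply_odds_ratio(p0: float, odds_ratio: float) -> float:
    """Probability implied by multiplying the odds at baseline probability p0 by odds_ratio."""
    if not 0 < p0 < 1:
        raise ValueError("p0 must be strictly between 0 and 1")
    odds = p0 / (1 - p0) * odds_ratio
    return odds / (1 + odds)


p0 = 0.460                        # assumed baseline: overall share expecting benefit (Table 1)
p1 = apply_odds_ratio(p0, 1.91)   # univariable OR for comfort (Table 2)
print(f"{p0:.2f} -> {p1:.2f}; risk ratio {p1 / p0:.2f}")  # 0.46 -> 0.62; risk ratio 1.35
```

Under this assumption, nearly doubling the odds raises the probability of expecting benefit from about 46% to about 62%, a relative increase of about 35% rather than 91%; the gap between odds and probability ratios grows as the outcome becomes more common.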

Table 2.

Weighted regression analysis of expecting benefit of using ChatGPT to improve health: univariable and multivariable analysis with and without accounting for trust (n = 1787).

| Variable | Item or category | Univariable OR | Univariable P | Without system trust OR | Without system trust P | With system trust OR | With system trust P |
|---|---|---|---|---|---|---|---|
| Comfort with use | Comfort with use of ChatGPT in society | **1.91** | **1.9 × 10⁻¹⁶** | **1.86** | **5.9 × 10⁻¹⁵** | **1.68** | **2.5 × 10⁻¹⁰** |
| Tech-savviness | I consider myself a tech-savvy person | 1.15 | .072 | 1.07 | .544 | 1.00 | .981 |
| Privacy concerns | I worry that private information about my health could be used against me | **0.81** | **9.9 × 10⁻⁴** | **0.83** | **.014** | 0.88 | .095 |
| Access | I feel like I have options about where I receive my medical care | **1.41** | **4.9 × 10⁻⁷** | 1.13 | .169 | 1.03 | .768 |
| Respect | I feel respected when I seek healthcare | **1.52** | **1.1 × 10⁻⁸** | **1.29** | **.013** | 1.05 | .646 |
| Trust | System trust index | **1.34** | **6.6 × 10⁻²⁰** | not included | | **1.26** | **1.6 × 10⁻¹¹** |
| Heard of ChatGPT | Yes (Reference) | | | | | | |
| | No | 1.31 | .064 | 1.30 | .151 | 1.36 | .100 |
| Sex | Male (Reference) | | | | | | |
| | Female | **0.74** | **.023** | **0.72** | **.025** | **0.69** | **.010** |
| Age | 18-29 (Reference) | | | | | | |
| | 30-44 | 1.20 | .397 | 1.37 | .172 | 1.41 | .14 |
| | 45-59 | 1.26 | .252 | 1.56 | .075 | **1.76** | **.025** |
| | 60+ | 1.29 | .171 | **1.85** | **.007** | **2.04** | **.002** |
| Race/ethnicity | White, non-Hispanic (Reference) | | | | | | |
| | Black, non-Hispanic | **1.63** | **.003** | **1.54** | **.014** | 1.41 | .051 |
| | Hispanic | 1.33 | .097 | 1.24 | .228 | 1.19 | .349 |
| | Other | **1.94** | **.015** | **2.05** | **.020** | 1.83 | .081 |
| Education | Less than High school (Reference) | | | | | | |
| | High school graduate or equivalent | 0.74 | .195 | 0.71 | .157 | 0.72 | .172 |
| | Some college/Associate degree | 0.72 | .165 | 0.65 | .086 | 0.62 | .052 |
| | Bachelor's degree | 0.82 | .420 | 0.81 | .443 | 0.83 | .489 |
| | Post grad study/Professional degree | 0.63 | .070 | **0.54** | **.034** | **0.54** | **.034** |
| Health literacy | High (Reference) | | | | | | |
| | Low | 1.18 | .260 | 1.16 | .350 | 0.99 | .937 |

OR: Odds Ratio. Bold values indicate P < .05.

Expectation of benefit was also associated with some control variables, including sex and race/ethnicity. We found that women were less likely to expect benefits than men (OR = 0.74, P = .023) and that Black, non-Hispanic respondents (OR = 1.63, P = .003) and those identifying as some other race or ethnicity (OR = 1.94, P = .015) were more likely to expect benefits than non-Hispanic White respondents. Age, education, health literacy, and having previously heard of ChatGPT were not associated with the outcome of interest.

Multivariable analysis without and with system trust

In the weighted logistic regression model including all variables except system trust (see Table 2), comfort with the use of ChatGPT in society (OR = 1.86, P < .001) and feeling respected when seeking medical care (OR = 1.29, P = .013) were positively associated with expecting the use of ChatGPT to benefit the health of people living in the United States. Respondents aged 60 years and older were more likely to expect benefits than those aged 18-29 years (OR = 1.85, P = .007), and Black respondents (OR = 1.54, P = .014) and those identifying as another race/ethnicity (OR = 2.05, P = .020) were more likely to expect benefits than White respondents. Those less likely to expect benefits from the use of ChatGPT included respondents who worried about the use of private information (OR = 0.83, P = .014), women compared to men (OR = 0.72, P = .025), and those with postgraduate or professional degrees compared to those with less than a high school education (OR = 0.54, P = .034). Tech-savviness, feeling like one has options when seeking medical care, having previously heard of ChatGPT, and health literacy were not statistically associated with expecting benefits to health from the use of ChatGPT (P > .05).

In the weighted logistic regression model with all variables including system trust (see Table 2), we found that system trust was one of the strongest positive predictors of expecting benefit (OR = 1.26 per index point, P < .001). Several predictors that were significant in the model without system trust were no longer statistically significant (feeling respected, concerns about privacy, and race/ethnicity). As in the previous model, comfort with the use of ChatGPT in society was positively associated with expected benefit (OR = 1.68, P < .001), while women, younger respondents, and those with postgraduate education were less likely to expect benefits for health from the use of ChatGPT.
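One descriptive way to summarize how associations change once system trust is added is the percent change in each log-odds coefficient between the 2 models. The sketch below applies this to odds ratios reported in Table 2; it is an illustrative calculation, not an analysis reported by the study. Coefficients in logistic regression can shift between nested models even when the added variable is not a confounder (non-collapsibility), so these percentages describe the change rather than test mediation.

```python
import math


def log_odds_attenuation(or_without: float, or_with: float) -> float:
    """Percent reduction in a log-odds coefficient after adding a covariate
    (positive = moved toward OR = 1; negative = strengthened)."""
    if or_without <= 0 or or_with <= 0 or or_without == 1:
        raise ValueError("odds ratios must be positive and or_without must differ from 1")
    return 100 * (1 - math.log(or_with) / math.log(or_without))


# Odds ratios from Table 2 (model without -> with system trust).
comfort = log_odds_attenuation(1.86, 1.68)   # about 16.4%
respect = log_odds_attenuation(1.29, 1.05)   # about 80.8%
print(f"comfort: {comfort:.1f}%, respect: {respect:.1f}%")
```

On this scale, the comfort association shrinks modestly while the respect association largely disappears, which matches the pattern of significance described above.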

Discussion

On average, comfort with the use of ChatGPT in society is low and differs from comfort with other common uses of AI. At the time the survey was conducted, comfort with ChatGPT was comparable to comfort with facial recognition software. We found that comfort was highly associated with expecting that ChatGPT would improve the health of people living in the United States. As the use of LLMs like ChatGPT becomes more ubiquitous and integrated into specific, but wide-ranging, applications in healthcare and in the public domain, expectations, trust, and comfort may shift. The current analysis provides a baseline for future research.

Trust is correlated with feeling respected when receiving medical care20 and feeling like one has options when seeking medical care.18,19 In our study, people worried about privacy were less likely to trust and less likely to expect benefits from the use of LLMs. This is consistent with prior work examining the relationship between trust and other information technologies such as data exchange.10 As LLMs become an integral part of healthcare, performing tasks such as communicating with patients and coordinating care, it will be critical to ensure that trust is safeguarded not only in functional ways (ie, by performing tasks competently) but also in ways that preserve the overall quality of care and personal connections. Patient and public perceptions will have implications for the trust that is foundational to care delivery. Articulating principles and treating trust as a technological issue of accuracy and reliability are important for shedding light on the issue of trust.33–35 However, demonstrating trustworthiness and prioritizing the public good are needed to provide people with reasons to trust health systems as they increasingly adopt AI in new, and potentially high-risk, ways.27 Our findings suggest that including measures of system trust in evaluation of LLM implementation could capture a range of issues critical for ensuring patient acceptance of this new tool.

We found that women were less likely than men to expect that ChatGPT would benefit the health of people living in the United States in both of our regression models. This difference should be further explored in future studies. Our results also suggest that awareness, knowledge, education, and health literacy are related to expected benefit in nuanced ways. For example, those with postgraduate education were less likely to expect benefits to health from the use of ChatGPT than those with less than a high school education, and point estimates suggested that those who had heard of ChatGPT were less likely to expect benefits, although this association was not statistically significant. Health literacy and prior awareness of ChatGPT were not significantly associated with expected benefit, even in univariable analysis. Similarly, self-identified tech-savviness was not associated with expectation of benefits. Other surveys have shown that people want to be notified about the use of LLMs in healthcare,36 and we have consistently found that people want to be notified about a broad set of data uses, beyond those required by current law and regulation.10,37 In supplementary analysis (see Table S2), we examined the relationship between system trust and age and sex, which were statistically significant predictors in our multivariable models. This additional analysis suggests that system trust did not differ across these groups. The present survey further suggests that the relationships between trust, knowledge, and expertise should be examined in future studies to best inform education, notification policies, and practice.

Our study is limited to inferences about associations and not causation, which points to a need for future longitudinal analysis. This is particularly important given the pace at which the use of LLMs is evolving both in society and in healthcare. Unlike other medical technologies, LLMs are both a technical tool for clinicians and health systems and a technology readily available to the general public. Attitudes about LLMs are likely to shift with the changing landscape. Given the complexities of LLMs and how they are used, both qualitative and quantitative studies should be pursued to understand why people hold the attitudes that they do. For example, as LLMs become more familiar, people may also become more comfortable with them. At the same time, our study provides a baseline understanding of current attitudes about what the U.S. public might expect from the use of LLMs and how these attitudes are related to trust in the health system.

Conclusion

Large language models and ChatGPT have galvanized the medical field, and proponents are calling for major changes in the way medicine is practiced. Adoption of LLMs will impact patient relationships and interactions with their clinicians and the healthcare system. Understanding the impact of LLMs from the patient perspective is critical to ensuring that expectations align with performance as a form of calibrated trust that acknowledges the dynamic and dyadic nature of trust between people and institutions that are impacted by technology. New policies, such as the recent Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence and the ONC’s Health Data, Technology, and Interoperability: Certification Program Updates, Algorithm Transparency, and Information Sharing (HTI-1) Final Rule, that advocate transparency, reliability, and accuracy of AI are likely to extend to LLMs and are consistent with the bioethical principle of respect for persons and a tradition of informed consent. However, medical disclaimers will be necessary but insufficient if trustworthiness is the goal. Consensus on the appropriate level of communication with patients about the use of these tools in their care needs to be established. Understanding how the public calculates tradeoffs between potential risks and benefits will be necessary to inform evidence-based, ethical, and patient-centered approaches to widespread LLM adoption.


Contributor Information

Jodyn Platt, Department of Learning Health Sciences, University of Michigan Medical School, Ann Arbor, MI 48109, United States.

Paige Nong, Division of Health Policy & Management, University of Minnesota School of Public Health, Minneapolis, MN 55455, United States.

Renée Smiddy, Department of Learning Health Sciences, University of Michigan Medical School, Ann Arbor, MI 48109, United States.

Reema Hamasha, Department of Learning Health Sciences, University of Michigan Medical School, Ann Arbor, MI 48109, United States.

Gloria Carmona Clavijo, Department of Learning Health Sciences, University of Michigan Medical School, Ann Arbor, MI 48109, United States.

Joshua Richardson, Galter Health Sciences Library, Northwestern University Feinberg School of Medicine, Chicago, IL 60611, United States.

Sharon L R Kardia, Department of Epidemiology, University of Michigan School of Public Health, Ann Arbor, MI 48109, United States.

Author contributions

The authors of this manuscript meet the ICMJE guidelines for authorship.

Supplementary material

Supplementary material is available at Journal of the American Medical Informatics Association online.

Funding

The authors are grateful for the support of a grant from the National Institutes of Health, The National Institute of Biomedical Imaging and Bioengineering (NIBIB), Public Trust of Artificial Intelligence in the Precision CDS Health Ecosystem (Grant No. 1-R01-EB030492).

Conflicts of interest

None declared.

Data availability

The data underlying this article will be shared on reasonable request to the corresponding author.

References

- 1. Milmo D. ChatGPT reaches 100 million users two months after launch. The Guardian. February 2, 2023. Accessed June 24, 2024. https://www.theguardian.com/technology/2023/feb/02/chatgpt-100-million-users-open-ai-fastest-growing-app?ref=salesenablementcollective.com

- 2. Oversight of A.I.: rules for artificial intelligence. United States Senate Committee on the Judiciary. 2023. Accessed May 29, 2023. https://www.judiciary.senate.gov/committee-activity/hearings/oversight-of-ai-rules-for-artificial-intelligence

- 3. Bubeck S, Chandrasekaran V, Eldan R, et al. Sparks of artificial general intelligence: early experiments with GPT-4. arXiv. 2023. arXiv:2303.12712. Preprint, not peer reviewed.

- 4. SAIL: Symposium on Artificial Intelligence for Learning Health Systems. Program. n.d. Accessed May 29, 2023. https://sail.health/event/sail-2023/program/

- 5. Microsoft News Center. Microsoft and Epic expand strategic collaboration with integration of Azure OpenAI Service. 2023. Accessed May 29, 2023. https://news.microsoft.com/2023/04/17/microsoft-and-epic-expand-strategic-collaboration-with-integration-of-azure-openai-service/

- 6. Meskó B, Topol EJ. The imperative for regulatory oversight of large language models (or generative AI) in healthcare. npj Digit Med. 2023;6(1):1-6. 10.1038/s41746-023-00873-0.

- 7. Taylor LA, Nong P, Platt J. Fifty years of trust research in health care: a synthetic review. Milbank Q. 2023;101(1):126-178. 10.1111/1468-0009.12598.

- 8. Edwards B. GPT-4 will hunt for trends in medical records thanks to Microsoft and Epic. Ars Technica. 2023. Accessed May 29, 2023. https://arstechnica.com/information-technology/2023/04/gpt-4-will-hunt-for-trends-in-medical-records-thanks-to-microsoft-and-epic/

- 9. Jakesch M, Bhat A, Buschek D, Zalmanson L, Naaman M. Co-writing with opinionated language models affects users’ views. In: Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems. New York: Association for Computing Machinery; 2023:1-15. 10.1145/3544548.3581196.

- 10. Platt J, Raj M, Büyüktür AG, et al. Willingness to participate in health information networks with diverse data use: evaluating public perspectives. eGEMs. 2019;7(1):33. 10.5334/egems.288.

- 11. Nong P, Williamson A, Anthony D, Platt J, Kardia S. Discrimination, trust, and withholding information from providers: implications for missing data and inequity. SSM Popul Health. 2022;18:101092. 10.1016/j.ssmph.2022.101092.

- 12. Hall MA, Camacho F, Dugan E, Balkrishnan R. Trust in the medical profession: conceptual and measurement issues. Health Serv Res. 2002;37(5):1419-1439. 10.1111/1475-6773.01070.

- 13. Platt JE, Jacobson PD, Kardia SLR. Public trust in health information sharing: a measure of system trust. Health Serv Res. 2018;53(2):824-845. 10.1111/1475-6773.12654.

- 14. Ozawa S, Sripad P. How do you measure trust in the health system? A systematic review of the literature. Soc Sci Med. 2013;91:10-14.

- 15. Trinidad MG, Platt J, Kardia SLR. The public’s comfort with sharing health data with third-party commercial companies. Humanit Soc Sci Commun. 2020;7(1):1-10. 10.1057/s41599-020-00641-5.

- 16. Richardson JP, Smith C, Curtis S, et al. Patient apprehensions about the use of artificial intelligence in healthcare. npj Digit Med. 2021;4(1):1-6. 10.1038/s41746-021-00509-1.

- 17. Platt J, Raj M, Kardia SLR. The public’s trust and information brokers in health care, public health and research. J Health Organ Manag. 2019;33(7/8):929-948. 10.1108/JHOM-11-2018-0332.

- 18. Mechanic D. Changing medical organization and the erosion of trust. Milbank Q. 1996;74:171-189.

- 19. Ward PR. Improving access to, use of, and outcomes from public health programs: the importance of building and maintaining trust with patients/clients. Front Public Health. 2017;5:22.

- 20. Thom DH. Physician behaviors that predict patient trust. J Fam Pract. 2001;50(4):323-328.

- 21. Walker DM, Johnson T, Ford EW, Huerta TR. Trust me, I’m a doctor: examining changes in how privacy concerns affect patient withholding behavior. J Med Internet Res. 2017;19(1):e2. 10.2196/jmir.6296.

- 22. Shen N, Bernier T, Sequeira L, et al. Understanding the patient privacy perspective on health information exchange: a systematic review. Int J Med Inform. 2019;125:1-12. 10.1016/j.ijmedinf.2019.01.014.

- 23. McGraw D, Mandl KD. Privacy protections to encourage use of health-relevant digital data in a learning health system. npj Digit Med. 2021;4(1):1-11. 10.1038/s41746-020-00362-8.

- 24. Jermutus E, Kneale D, Thomas J, Michie S. Influences on user trust in healthcare artificial intelligence: a systematic review. Wellcome Open Res. 2022;7:65.

- 25. Vogels EA. A majority of Americans have heard of ChatGPT, but few have tried it themselves. Pew Research Center. n.d. Accessed May 29, 2023. https://www.pewresearch.org/short-reads/2023/05/24/a-majority-of-americans-have-heard-of-chatgpt-but-few-have-tried-it-themselves/

- 26. Park E, Gelles-Watnick R. Most Americans haven’t used ChatGPT; few think it will have a major impact on their job. Pew Research Center. n.d. Accessed December 14, 2023. https://www.pewresearch.org/short-reads/2023/08/28/most-americans-havent-used-chatgpt-few-think-it-will-have-a-major-impact-on-their-job/

- 27. LaRosa E, Danks D. Impacts on trust of healthcare AI. In: Proceedings of the 2018 AAAI/ACM Conference on AI, Ethics, and Society. New York: Association for Computing Machinery; 2018:210-215. 10.1145/3278721.3278771.

- 28. Platt J, Goold SD. Betraying, earning, or justifying trust in health organizations. Hastings Cent Rep. 2023;53(S2):S53-9. 10.1002/hast.1524.

- 29. Gille F, Jobin A, Ienca M. What we talk about when we talk about trust: theory of trust for AI in healthcare. Intell Based Med. 2020;1-2:100001. 10.1016/j.ibmed.2020.100001.

- 30. Johnson A. You’re already using AI: here’s where it’s at in everyday life, from facial recognition to navigation apps. Forbes. n.d. Accessed June 4, 2024. https://www.forbes.com/sites/ariannajohnson/2023/04/14/youre-already-using-ai-heres-where-its-at-in-everyday-life-from-facial-recognition-to-navigation-apps/

- 31. Pinto dos Santos D, Giese D, Brodehl S, et al. Medical students’ attitude towards artificial intelligence: a multicentre survey. Eur Radiol. 2019;29(4):1640-1646. 10.1007/s00330-018-5601-1.

- 32. Morris NS, MacLean CD, Chew LD, Littenberg B. The Single Item Literacy Screener: evaluation of a brief instrument to identify limited reading ability. BMC Fam Pract. 2006;7(1):1-7. 10.1186/1471-2296-7-21.

- 33. Benda NC, Novak LL, Reale C, Ancker JS. Trust in AI: why we should be designing for APPROPRIATE reliance. J Am Med Inform Assoc. 2022;29(1):207-212. 10.1093/jamia/ocab238.

- 34. Bach TA, Khan A, Hallock H, Beltrão G, Sousa S. A systematic literature review of user trust in AI-enabled systems: an HCI perspective. Int J Human–Computer Interact. 2022;40(5):1251-1266. 10.1080/10447318.2022.2138826.

- 35. Office of Science and Technology Policy. Blueprint for an AI Bill of Rights. The White House. n.d. Accessed May 29, 2023. https://www.whitehouse.gov/ostp/ai-bill-of-rights/

- 36. Wolters Kluwer. Wolters Kluwer survey finds Americans believe GenAI is coming to healthcare but worry about content. n.d. Accessed December 15, 2023. https://www.wolterskluwer.com/en/news/wolters-kluwer-survey-finds-americans-believe-genai-is-coming-to-healthcare-but-worry-about-content

- 37. Spector-Bagdady K, Trinidad G, Kardia S, et al. Reported interest in notification regarding use of health information and biospecimens. JAMA. 2022;328(5):474-476.