Abstract

Purpose

Image-guided intervention (IGI) systems have the potential to increase efficiency in interventional cardiology but are limited by motion. Although motion compensation approaches have been proposed, the resulting accuracy has rarely been quantified using in vivo data. The purpose of this study is to investigate the potential benefit of motion compensation in IGI systems.

Methods

Patients scheduled for left atrial appendage closure (LAAc) underwent pre- and postprocedural non-contrast-enhanced cardiac magnetic resonance imaging (CMR). According to the clinical standard, the final position of the occluder device was routinely documented using x-ray fluoroscopy (XR). The accuracy of the IGI system was assessed retrospectively based on the distance of the 3D device marker location derived from the periprocedural XR data and the respective location as identified in the postprocedural CMR data.

Results

The motion-compensation-dependent accuracy could be assessed based on the patient data. With motion synchronization, the measured accuracy of the IGI system was similar to the estimated accuracy, with almost negligible distances between the device marker positions identified in CMR and XR. Neglecting the cardiac and/or respiratory phase significantly increased the mean distances: respiratory motion mainly reduced the accuracy with rather low impact on the precision, whereas cardiac motion decreased both the accuracy and the precision of the image guidance.

Conclusions

In the presented work, the accuracy of the IGI system could be assessed based on in vivo data. Motion consideration clearly showed the potential to increase the accuracy of IGI systems. While the general decrease in accuracy in non-motion-synchronized data was not unexpected, a clear difference between cardiac and respiratory motion-induced errors was observed for LAAc data. Since sedation and an intervention location close to the large vessels likely affect the respiratory motion contribution, an intervention-specific accuracy analysis may be useful for other interventions.

Keywords: Image-guided intervention, 3D accuracy, Motion-induced accuracy-effects, Monoplane system

Introduction

In recent years, image-guided intervention (IGI) systems have gained interest in interventional cardiology. The fusion of different imaging modalities has been shown to save procedure time and radiation dose [1–3], with the potential to improve patient outcome, especially for the increasingly complex percutaneous procedures in structural heart disease. Even though the fusion of periprocedural imaging modalities such as x-ray fluoroscopy (XR) and transoesophageal echocardiography (TEE) is increasingly applied [4–6], the additional integration of patient-specific anatomic and functional data, e.g., derived from preprocedural imaging such as computed tomography angiography (CTA) and cardiac magnetic resonance imaging (CMR), appears to be an attractive adjunct for providing accurate three-dimensional (3D) information for navigation and documentation [7–10].

The applicability of IGI systems, however, is restricted by their reliability and accuracy. Especially in interventional cardiology, accuracy issues arise due to likely differences between pre- and periprocedural soft tissue anatomy, missing landmarks for straightforward co-registration, and motion of the target structure. Thus, in most reported interventional cardiac applications, IGI systems are used for providing adjunct 3D information, but their application for advanced navigation has so far not entered daily clinical routine [11].

Improved co-registration techniques have been presented for 3D-3D as well as for 2D-3D co-registration [12–14], and motion compensation approaches have been introduced, mainly focusing on the compensation of respiratory motion [15–17]. For endovascular and cardiac procedures, a desired IGI system accuracy of 5 mm or better has been reported [18, 19]. Furthermore, a distinction between intervention-specific, clinically imposed accuracy restrictions and the IGI system's limitations was recommended [20, 21]. While the general accuracy of the co-registration between pre- and periprocedural imaging has been proven sufficient in phantom studies, the impact of cardiac or respiratory motion on the accuracy of IGI systems has rarely been assessed [14, 22, 23].

The purpose of this study is to assess the limitations of fusing pre- and periprocedural image data during intervention guidance based on in vivo data, and to quantify the impact of respiratory and cardiac motion on the achievable 3D accuracy. The analysis is based on the assessment of the 3D location of the marker of the deployed left atrial appendage closure (LAAc) device, which is documented in the fused preprocedural CMR data during the intervention and compared to the real location identified in the postprocedural CMR.

Methods

The accuracy of the 3D location of specific device markers identified in preprocedural CMR data based on periprocedural XR data was quantified in routinely acquired patient data obtained during percutaneous left atrial appendage closures. After co-registration of preprocedural CMR and XR data, the radiopaque marker of the implanted occluder device (Watchman FLX™, Boston Scientific Corporation, Marlborough, MA, USA) was identified in the XR data, and its 3D location was derived and marked in the preprocedural CMR for different cardiac and respiratory phases. For accuracy assessment, an additional CMR was acquired postprocedurally and co-registered to the preprocedural CMR and hence to XR. The distance between the XR-derived 3D marker position and the respective marker location identified in the co-registered postprocedural CMR was used for cardiac and respiratory phase-dependent accuracy assessment.

Six patients undergoing LAAc were included in this study. Retrospectively, the XR data routinely acquired during the procedure were evaluated. One of the patients had to be excluded as the validation XR-run was too short with shallow respiration, and hence did not allow the distinction of different respiratory phases.

The analysis was performed in compliance with the ethical guidelines of the Declaration of Helsinki from 1975 and was approved by the local ethical committee. The procedure was performed according to the current clinical standards, and the retrospective data analysis had no impact on the procedure guidance or the XR data acquired. All patients provided written informed consent prior to the procedure.

Image data acquisition

Magnetic resonance imaging

On the day before the LAAc and 4–6 weeks after the procedure, the patients underwent CMR on a 3.0 T system (Achieva 3.0 T, dStream, R5.6, Philips Medical Systems B.V., Best, The Netherlands). All data were acquired with a respiratory-navigated (3 mm acceptance window), non-contrast-enhanced 2-point 3D Dixon sequence [24] during expiration and at 30–40% RR-interval with an isotropic spatial resolution of 1.5 × 1.5 × 1.5 mm³, reconstructed at 1.3 × 1.3 × 1.3 mm³.

X-ray fluoroscopy

As an adjunct to transoesophageal echocardiography for guiding the transseptal puncture and LAA dimension assessment, XR was applied periprocedurally to guide the procedure, measure the LAA dimensions, and validate the correct positioning of the occluder. XR data were acquired on a monoplane XR system (Allura Clarity, Philips Medical Systems, Best, The Netherlands) at different clinically indicated procedure steps: prior to the transseptal puncture (anterior–posterior angulation), contrast agent-enhanced after advancing the catheter into the LAA (two angulations for LAA dimension assessment), and after occluder deployment for validation (implantation plane angulation and an angulation differing by at least 30°).

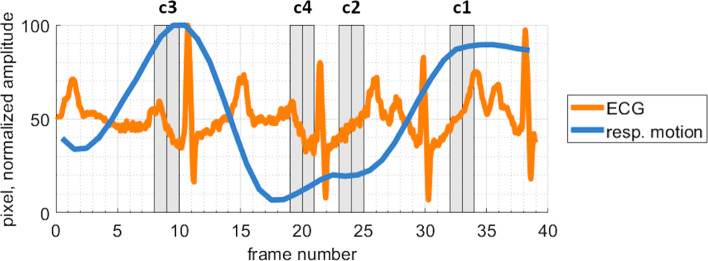

The cardiac phase of each frame of a single XR run was determined based on the simultaneously recorded ECG. The respective respiratory phase was identified by manual tracking of the diaphragm (if visible) or a clearly visible lung structure. The respiration tracking result was low-pass filtered with a Savitzky–Golay filter. To comply with the CMR data acquisition, an acceptance window of 3 mm in the iso-center was used for classifying frames as expiration or inspiration.
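The phase assignment described above can be sketched as follows. This is a minimal illustration, not the processing used in the study: the filter window and polynomial order are not stated in the text, so a fixed 5-point quadratic Savitzky–Golay kernel is assumed, and the rule that frames within 3 mm of the end-expiratory (or end-inspiratory) extreme count as expiration (or inspiration) is likewise an assumption. All function names are hypothetical.

```python
# Assumed 5-point, 2nd-order Savitzky-Golay smoothing kernel (window/order not given in the text).
SG_KERNEL = (-3 / 35, 12 / 35, 17 / 35, 12 / 35, -3 / 35)


def smooth_savgol5(trace_mm):
    """Smooth a tracked displacement trace; the two samples at each end stay unfiltered."""
    half = len(SG_KERNEL) // 2
    out = list(trace_mm)
    for i in range(half, len(trace_mm) - half):
        out[i] = sum(c * trace_mm[i + k - half] for k, c in enumerate(SG_KERNEL))
    return out


def classify_respiration(trace_mm, window_mm=3.0):
    """Label each frame 'expiration', 'inspiration' or 'intermediate'.

    Assumes larger displacement values correspond to deeper inspiration.
    """
    smoothed = smooth_savgol5(trace_mm)
    end_exp, end_insp = min(smoothed), max(smoothed)
    labels = []
    for pos in smoothed:
        if pos - end_exp <= window_mm:
            labels.append("expiration")
        elif end_insp - pos <= window_mm:
            labels.append("inspiration")
        else:
            labels.append("intermediate")
    return labels


def rr_phase_percent(frame_time_s, r_peaks_s):
    """Position of a frame within its RR-interval in percent, or None if not covered."""
    for start, end in zip(r_peaks_s, r_peaks_s[1:]):
        if start <= frame_time_s < end:
            return 100.0 * (frame_time_s - start) / (end - start)
    return None
```

A frame is then described by a respiratory label and a cardiac phase in percent of the RR-interval; frames labelled `intermediate` would simply not be used.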

Co-registration

The preprocedural CMR data were co-registered with the XR-system geometry and the post-procedural CMR data were co-registered to the preprocedural CMR data thus enabling direct comparison of 3D locations between XR and pre- and post-CMR.

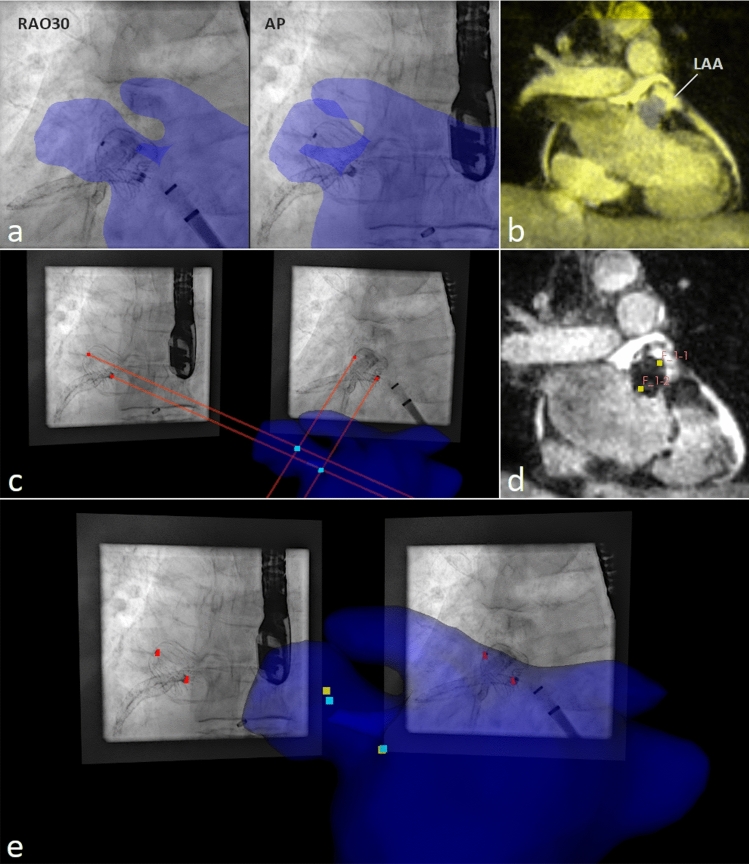

Co-registration: pre- to periprocedural (CMR to XR)

First, the aorta, the right atrium, the vena cava, and the left atrium including the left atrial appendage were segmented from the preprocedural CMR using the open-source tool 3DSlicer (www.slicer.org, [25]). From the resulting segmentation, manual rigid body co-registration with the XR system was performed using the open-source tool 3D-XGuide [22]. Thereby, initial co-registration was done based on XR-runs in anterior–posterior (AP) and left anterior oblique (LAO40) angulation by aligning the aorta and right atrium to their projection in XR as previously suggested [9]. Refinement of the co-registration was done based on the contrast agent-filled LAA in the runs acquired for dimension measurements. As the CMR data were acquired at a certain cardiac- and respiratory phase, frames of the XR-run in the respective phases (30–40% RR-interval and expiration) were selected for accurate final adjustments (Fig. 1a).

Fig. 1.

Validation approach: a co-registration of the LA including the LAA (blue structure) segmented from preprocedural CMR to periprocedural XR-runs; b co-registration of the postprocedural CMR (yellow-scaled) to the preprocedural CMR (grayscaled); c device marker localized in 3D (cyan marker) from marker positions identified in two XR projections (red markers) and their projection lines (red lines) relative to the co-registered LA segmentation (blue structure); d device marker identified in postprocedural CMR (yellow marker); e XR-derived 3D marker positions (cyan marker), reconstructed from the markers identified in XR (red marker), compared to the device marker positions identified in postprocedural CMR (yellow marker), visualized with the preprocedural LA segmentation (blue structure)

Co-registration: post- to preprocedural CMR

An initial rigid-body transformation was performed manually using 3DSlicer. Thereafter, an automated affine transformation was generated with the BRAINSFit toolkit integrated into 3DSlicer [26] (Fig. 1b).

Accuracy assessment

The accuracy was assessed from the distance of the device marker locations identified in the co-registered peri- and postprocedural data. In all cases, the accuracy was assessed for data obtained in the same cardiac- and respiratory phase (motion-synchronized) and for data obtained in different phases to quantify the impact of the different motion components on the final accuracy.

Motion-synchronized 3D accuracy

Based on the frame pair comprising the frames of the validation XR in corresponding cardiac and respiratory phase, the two device markers were identified manually and the respective 3D marker location was calculated using the well-known camera models as previously described [27, 28]. In detail, the Nadir points, i.e., the points on the two projection lines of the identified marker at which the distance between these lines is smallest, were determined. Ideally, the two projection lines would intersect at the 3D marker location, but in real-world data the projection lines intersect only approximately, so the mid-point between the two Nadir points was defined as the XR-derived 3D marker position (Fig. 1c). Since the XR frames in the different angulations were acquired during subsequent runs, the accuracy of the XR-derived 3D localization was first assessed by re-projecting the XR-derived 3D marker position onto the individual XR frames and calculating the respective distance to the identified marker locations in the XR image plane. For the assessment of the motion-synchronized 3D accuracy, the distance between the XR-derived 3D marker position and the position of the marker identified in the co-registered postprocedural CMR was calculated (Fig. 1d, e). The resulting distances are reported as the averaged Euclidean distance (AED) with standard deviation (± SD).
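The two-view localization can be illustrated with a short sketch. It assumes that each projection line is given by the X-ray source position and the 3D position of the marker on the detector, both in a common coordinate system; how these are obtained from the C-arm geometry is not covered here. The function names are hypothetical, and the code shows the standard closest-points construction between two lines rather than the exact implementation of [27, 28].

```python
import math


def _sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def _along(p, d, s):
    """Point p + s * d."""
    return tuple(x + s * y for x, y in zip(p, d))


def nadir_points(p1, d1, p2, d2):
    """Closest points on the lines p1 + s*d1 and p2 + t*d2."""
    w = _sub(p1, p2)
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w), _dot(d2, w)
    denom = a * c - b * b
    if denom <= 1e-12 * a * c:
        raise ValueError("projection lines are (nearly) parallel")
    s = (b * e - c * d) / denom
    t = (a * e - b * d) / denom
    return _along(p1, d1, s), _along(p2, d2, t)


def triangulate_marker(source1, detector1, source2, detector2):
    """Return the mid-point between the two Nadir points and the residual gap between the lines."""
    q1, q2 = nadir_points(
        source1, _sub(detector1, source1), source2, _sub(detector2, source2)
    )
    midpoint = tuple((x + y) / 2 for x, y in zip(q1, q2))
    return midpoint, math.dist(q1, q2)
```

The returned gap between the two lines gives a quick plausibility check: a large gap indicates motion between the two runs or an inconsistent marker identification.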

Impact of motion on the 3D accuracy

To investigate the influence of heartbeat and respiration on the accuracy, the XR frames were categorized into four different categories (Fig. 2) based on the assigned cardiac and respiratory phase: (c1) expiration; 30–40% RR-interval; (c2) inspiration; 30–40% RR-interval; (c3) expiration; 80–90% RR-interval; (c4) inspiration; 80–90% RR-interval. Because the XR run in the 2nd angulation after deployment was short, the classification was only done for the first angulation. As the duration of the XR run and of the respective cardiac/respiratory phases varies, different numbers of frames were assigned to each category (15 samples in (c1), 7 samples in (c2), 17 samples in (c3), 9 samples in (c4)). The Nadir point of the co-registered postprocedural CMR marker position on the projection line of the 2D-XR-derived marker position, i.e., the point on the projection line closest to the CMR marker position, was taken as the 3D marker position derived from a single XR projection. The AED ± SD between this single-projection 3D marker position and the co-registered postprocedural CMR marker position was used as the accuracy measure.
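The single-projection evaluation can be sketched in a few lines. The sketch assumes inclusive phase boundaries, respiratory labels given as the strings `expiration` and `inspiration`, and the sample standard deviation; none of these details are specified in the text, and all function names are hypothetical.

```python
import math
from statistics import mean, stdev


def assign_category(resp_label, rr_percent):
    """Map a frame to 'c1'..'c4', or None if it falls outside the four categories."""
    if resp_label not in ("expiration", "inspiration"):
        return None
    if 30.0 <= rr_percent <= 40.0:
        return "c1" if resp_label == "expiration" else "c2"
    if 80.0 <= rr_percent <= 90.0:
        return "c3" if resp_label == "expiration" else "c4"
    return None


def closest_point_on_line(origin, direction, point):
    """Nadir point: the point on the line origin + t*direction closest to `point`."""
    rel = tuple(p - o for p, o in zip(point, origin))
    t = sum(r * d for r, d in zip(rel, direction)) / sum(d * d for d in direction)
    return tuple(o + t * d for o, d in zip(origin, direction))


def aed_sd(estimated, reference):
    """Averaged Euclidean distance and sample SD between paired 3D points."""
    dists = [math.dist(p, q) for p, q in zip(estimated, reference)]
    sd = stdev(dists) if len(dists) > 1 else 0.0
    return mean(dists), sd
```

Because the Nadir point is by construction the point on the projection line nearest to the CMR marker, any displacement along the viewing direction is not captured by this measure.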

Fig. 2.

Exemplary frame classification of an XR-run into the categories (c1) expiration; 30–40% RR-interval; (c2) inspiration; 30–40% RR-interval; (c3) expiration; 80–90% RR-interval; (c4) inspiration; 80–90% RR-interval determined based on ECG and tracked respiratory motion

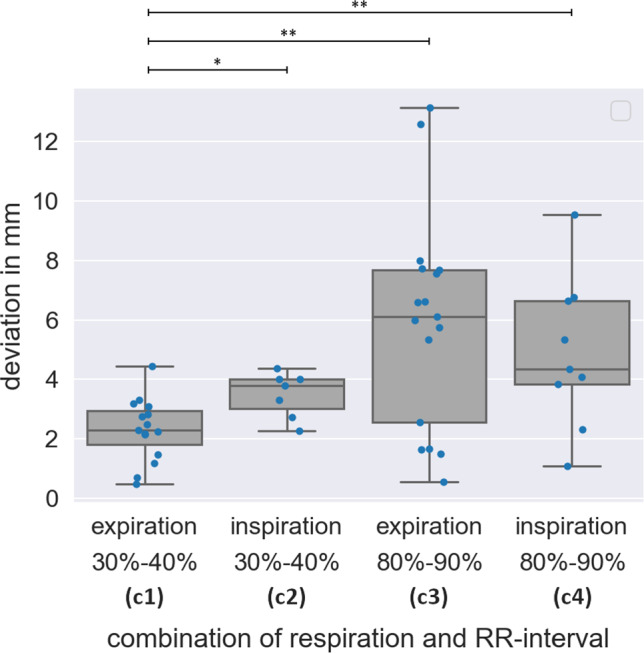

Statistics

Category c1 corresponds to the same cardiac and respiratory phase as used during CMR and is considered motion-synchronized. After testing for normal distribution of the samples using the Shapiro–Wilk test, the significance of the differences between c1 and each of c2–c4 was tested using Welch's t-test. A p-value < 0.05 was considered significant.
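For readers unfamiliar with Welch's test, the statistic and its degrees of freedom (Welch–Satterthwaite approximation) can be computed as in the following sketch. The function name is hypothetical and the inputs would be the per-frame distances of two categories; the p-value itself follows from the Student t distribution with the returned degrees of freedom and is not computed here.

```python
import math
from statistics import mean, variance


def welch_t(sample_a, sample_b):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom for two samples."""
    va = variance(sample_a) / len(sample_a)  # squared standard error of mean a
    vb = variance(sample_b) / len(sample_b)  # squared standard error of mean b
    t = (mean(sample_a) - mean(sample_b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (
        va ** 2 / (len(sample_a) - 1) + vb ** 2 / (len(sample_b) - 1)
    )
    return t, df
```

Unlike Student's t-test, this variant does not assume equal variances, which suits categories with clearly different spread and different sample counts.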

Accuracy estimation

Assuming an almost ideal co-registration and negligible patient motion between the subsequently acquired XR validation runs, the estimated accuracy is governed by the accuracy of the manual identification of the marker points in XR and CMR, which in turn is limited by the underlying spatial image resolution. Assuming a maximal inaccuracy of half the diagonal of the respective pixel or voxel (XR: 2D, CMR: 3D), the maximum identification error was estimated as 0.2 mm in XR and 1.1 mm in CMR. For the accuracy estimation of the 3D localization from two XR projections, the XR identification error projected to the iso-center (0.1 mm) and the intrinsic inaccuracy of the XR system geometry of approximately 0.2 mm [29] were taken into account, resulting in an XR-derived 3D localization error of approximately 0.2 mm. Re-projected onto the image plane, this corresponds to 0.3 mm. Accounting for both XR- and CMR-derived error sources, a total 3D accuracy estimate of approximately 1.1 mm was considered. 3D localization from only a single monoplane projection reduces the accuracy, but this effect can be neglected as it does not affect the total accuracy estimate calculated from the 3D localization from two projections.
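The numbers in this estimate can be reproduced approximately with the sketch below. Two points are assumptions rather than statements from the text: the reconstructed CMR voxel is taken as 1.3 mm isotropic, and independent error sources are combined as a root sum of squares. The XR pixel size is not given, so the stated 0.1 mm iso-center value is used directly.

```python
import math


def half_diagonal(spacing_mm, dims):
    """Half the diagonal of an isotropic pixel (dims=2) or voxel (dims=3)."""
    return 0.5 * math.sqrt(dims) * spacing_mm


def combine(*errors_mm):
    """Root-sum-square combination of independent error sources (assumed model)."""
    return math.sqrt(sum(e * e for e in errors_mm))


e_cmr = half_diagonal(1.3, 3)      # CMR marker identification error
e_xr_3d = combine(0.1, 0.2)        # XR identification at iso-center + system geometry [29]
e_total = combine(e_xr_3d, e_cmr)  # total estimate, dominated by the CMR term

print(round(e_cmr, 1), round(e_xr_3d, 1), round(e_total, 1))
```

Under these assumptions the total estimate is dominated by the CMR voxel size, which is consistent with the total being stated as approximately equal to the CMR-derived error.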

Results

The accuracy resulting from the different motion considerations could be assessed based on the patient data. Device markers were identifiable in XR and postprocedural CMR data. Based on the non-contrast-enhanced CMR, the segmentation of structures relevant for LAAc was successful. 3D-3D co-registration between pre- and postprocedural CMR as well as 2D-3D co-registration between preprocedural CMR and XR was visually correct.

The device markers re-projected onto the respective image plane deviated on average by 0.5 ± 0.5 mm from the initial marker position identified in the XR images. Thereby, the estimated accuracy of XR-derived 3D localization of 0.4 mm was slightly exceeded. The largest inaccuracy of the XR-derived 3D marker position was observed for the 3D localization based on the two projections with the smallest angular difference (Table 1).

Table 1.

AED between the XR-derived 3D marker position re-projected onto the XR image plane and the marker positions identified initially in the XR image plane (re-projection error), depending on the angular difference between the projections used for 3D localization, and motion-synchronized 3D accuracy assessed by the AED between the XR-derived 3D marker position and the respective co-registered postprocedural CMR marker position (3D error)

| ID | Angulation difference in ° | Re-projection error in mm, AED (SD) | Motion-synchronized 3D error in mm, AED (SD) |
|---|---|---|---|
| 1 | 30 | 1.5 (0.2) | 1.7 (0.5) |
| 2 | 40 | 0.4 (0.0) | 4.3 (0.9) |
| 3 | 80 | 0.5 (0.2) | 1.6 (0.6) |
| 4 | 72 | 0.1 (0.0) | 2.4 (1.4) |
| 5 | 85 | 0.2 (0.1) | 1.6 (0.4) |
| Ø | | 0.5 (0.5) | 2.3 (1.0) |

The motion-synchronized XR-derived 3D marker position differed from the respective co-registered postprocedural CMR marker position on average by 2.3 ± 1.0 mm, which again exceeds the theoretical limit of 1.1 mm (Table 1).

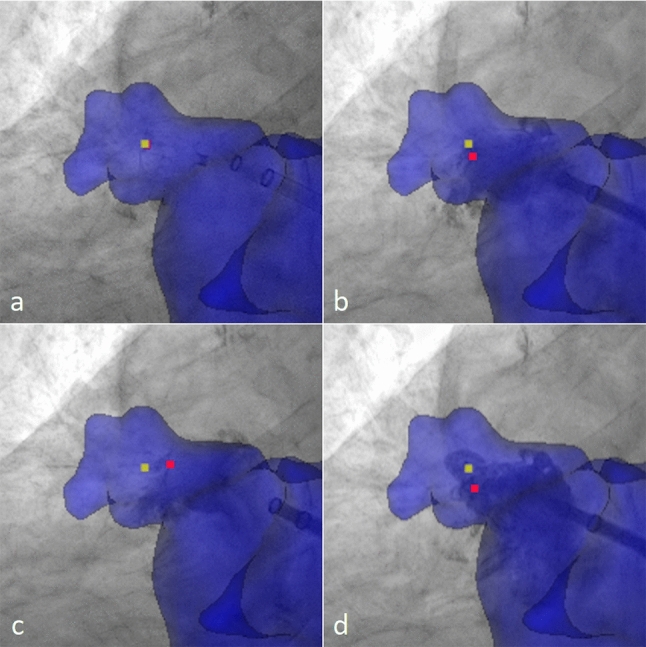

Figure 3 shows the effect of respiration- and cardiac-induced motion on the accuracy of image fusion. On average, the distance (m) between the single-projection marker position of category c1 (motion-synchronized) and the respective co-registered postprocedural CMR marker position (m = 2.3 ± 1.0 mm) was slightly larger than the estimated accuracy and equal to the distance observed for the marker position localized from two projections in the corresponding category. The distances between the single-projection marker positions and the CMR marker position for categories c2 (m = 3.5 ± 0.7 mm; p < 0.05), c3 (m = 5.9 ± 3.5 mm; p < 0.01), and c4 (m = 4.9 ± 2.4 mm; p < 0.01) significantly exceeded those of category c1. As visualized in Fig. 4, the distances resulting from XR data obtained during atrial systole (c3, c4) showed larger mean values and interquartile ranges than those obtained during atrial diastole (c1, c2). For XR data obtained during atrial diastole, the resulting distances were on average larger in inspiration than in expiration but showed similar interquartile ranges. Minimal distances were obtained for the motion-synchronized data (c1).

Fig. 3.

The effect of motion on the accuracy of image guidance. Preprocedural CMR segmentation (blue) and postprocedural marker (yellow marker) acquired in expiration and 30–40% RR-interval, co-registered and superimposed onto the periprocedural XR data with the identified device marker (red marker) in different cardiac and respiratory phases: a expiration and 30–40% RR-interval (c1, motion-synchronized, marker overlap), b inspiration and 30–40% RR-interval (c2), c expiration and 80–90% RR-interval (c3), d inspiration and 80–90% RR-interval (c4)

Fig. 4.

Effect of motion on the accuracy assessed by the distance between the XR-derived marker position and the co-registered postprocedural CMR marker position for different cardiac and respiratory phases. Motion-synchronized image fusion (c1: expiration, 30–40% RR-interval) achieved significantly (*p < 0.05; **p < 0.01) higher accuracy than non-motion-synchronized image fusion (c2–c4)

Discussion

Even though image-based interventional guidance systems are frequently used in different applications, the achievable accuracy in organs affected by respiratory and cardiac motion has rarely been investigated in vivo. In the presented study, we used data obtained during left atrial appendage closure (LAAc) procedures for an initial investigation of the achievable accuracy of fusing preprocedural anatomic data with periprocedural XR fluoroscopy in a small number of patients. In the LAAc procedure, the 3D locations of the radiopaque closure device marker were calculated from two XR views and superimposed onto the co-registered 3D CMR data. The anatomic locations of the markers were validated in a postprocedural 3D CMR and compared to the 3D XR-derived locations after co-registration of the pre- and postprocedural CMR data.

Based on the patient data, the impact of organ motion on the final accuracy could be assessed. As expected, fusing pre- and periprocedural data that match the respiratory and cardiac phase of the preprocedural 3D CMR yielded the smallest errors. Even though the achieved accuracy does not yet match the theoretically estimated limit, it was clearly better than the previously reported minimum requirement of 5 mm for endovascular and cardiac interventions [18, 21]. The mismatch with the theoretical accuracy might be explained by additional unknown error sources. In particular, the angulation-dependent bending of the C-arm, imperfect co-registration between the pre- and periprocedural imaging, the subsequent acquisition of the XR views on a monoplane system, and, very likely most importantly, repositioning of the patient between the preprocedural CMR and the LAAc procedure affect the achievable accuracy.

Choosing XR data from different cardiac and/or respiratory phases yielded a significant increase of the resulting error in the calculated anatomic position of the marker. While the general decrease in accuracy was not unexpected, a clear difference between cardiac and respiratory motion-induced errors was observed. XR data with synchronized cardiac but differing respiratory phase mainly increased the mean error with still rather low variance, thus indicating a predominant shift of the anatomic location. XR data from different cardiac phases, however, revealed large variance, which indicates non-predictable shifts of the resulting anatomic location. The large impact of the cardiac motion may be specific to LAAc, as the atrium contracts during atrial systole, causing substantial motion of the LAA. As previously shown [30], the respiratory-induced motion amplitude varies between different cardiac locations. The surprisingly low impact of the respiratory motion in atrial diastole may therefore result from the anatomic position of the LAA, which is relatively little influenced by respiratory motion [31]. In addition, the patients were sedated during LAA closure, which is known to cause shallow breathing [32].

Even though the achieved accuracy was in line with previously reported clinical demands, further improvement may be possible by using preprocedural data with higher spatial resolution, e.g., CTA, and by ensuring a sufficient angular distance (35°–145°) between the two XR views used for 3D reconstruction, as previously suggested [33]. Beyond 3D localization from two projections, the angular distance should also be considered for accurate co-registration with the XR system and, consequently, for accurate 3D localization on monoplane systems.

This study has some general limitations. A major limitation is the relatively small number of patients enrolled. Therefore, the results should be regarded as an indication of the relevance of motion compensation, and further validation in larger cohorts is still needed. The co-registration and the identification of the respiratory phase were done manually; automated approaches such as those proposed earlier [15, 34, 35] might further increase the resulting accuracy. Further, XR runs in two angulations were not available for the motion impact analysis, and the resulting projection of the marker displacement along the view axis might affect the absolute value of the differences between the analyzed data. Even though the absolute error may be underestimated for the non-motion-synchronized data, the general trend and the conclusions drawn should be correct. Further, the resulting error is likely intervention-specific. To draw a general conclusion regarding the relevance of motion-synchronized pre- and periprocedural fusion, the accuracy has to be analyzed for different applications, preferably in larger cohorts.

Conclusion

The impact of motion synchronization on the 3D accuracy of image-guided intervention systems could be clearly shown. Even though residual error sources such as patient repositioning accuracy, quality of co-registration, and system limitations such as C-arm bending could not be fully considered, a high accuracy well below 5 mm could be demonstrated even on monoplane systems.

Acknowledgements

The authors would like to acknowledge the excellent support of the entire cath lab team and the ongoing support of the Ulm University center for translational imaging (MoMAN, funded by the German Research Foundation (grant number 447235146)).

Funding

Open Access funding enabled and organized by Projekt DEAL. The project was funded by the Federal Ministry of Education and Research (grant number: 13GW0372C).

Declarations

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Ethical approval

The analysis was performed in compliance with the ethical guidelines of the Declaration of Helsinki from 1975 and was approved by the local ethical committee. All patients provided written informed consent prior to the procedure.

References

- 1. Vernikouskaya I, Rottbauer W, Seeger J, Gonska B, Rasche V, Wöhrle J (2018) Patient-specific registration of 3D CT angiography (CTA) with X-ray fluoroscopy for image fusion during transcatheter aortic valve implantation (TAVI) increases performance of the procedure. Clin Res Cardiol 107:507–516. 10.1007/s00392-018-1212-8

- 2. Regeer MV, Ajmone Marsan N (2022) Fusion imaging in congenital heart disease: just a pretty picture or a new tool to improve patient management? Rev Esp Cardiol (Engl Ed) 76:2–3. 10.1016/j.rec.2022.05.025

- 3. Nobre C, Oliveira-Santos M, Paiva L, Costa M, Gonçalves L (2020) Fusion imaging in interventional cardiology. Rev Port Cardiol 39:463–473. 10.1016/j.repc.2020.03.014

- 4. Meucci F, Stolcova M, Mattesini A, Mori F, Orlandi G, Ristalli F, Sarti C, Di Mario C (2021) A simple step-by-step approach for proficient utilization of the EchoNavigator technology for left atrial appendage occlusion. Eur Heart J Cardiovasc Imaging 22:725–727. 10.1093/ehjci/jeaa165

- 5. Melillo F, Fisicaro A, Stella S, Ancona F, Capogrosso C, Ingallina G, Maccagni D, Romano V, Ruggeri S, Godino C, Latib A, Montorfano M, Colombo A, Agricola E (2021) Systematic fluoroscopic-echocardiographic fusion imaging protocol for transcatheter edge-to-edge mitral valve repair intraprocedural monitoring. J Am Soc Echocardiogr 34:604–613. 10.1016/j.echo.2021.01.010

- 6. Ternacle J, Gallet R, Nguyen A, Deux J-F, Fiore A, Teiger E, Dubois-Randé JL, Riant E, Lim P (2018) Usefulness of echocardiographic-fluoroscopic fusion imaging in adult structural heart disease. Arch Cardiovasc Dis 111:441–448. 10.1016/j.acvd.2018.02.001

- 7. Mo B-F, Wan Y, Alimu A, Sun J, Zhang P-P, Yu Y, Chen M, Li W, Wang ZQ, Wang QS, Li YG (2021) Image fusion of integrating fluoroscopy into 3D computed tomography in guidance of left atrial appendage closure. Eur Heart J Cardiovasc Imaging 22:92–101. 10.1093/ehjci/jez286

- 8. Behar JM, Mountney P, Toth D, Reiml S, Panayiotou M, Brost A, Fahn B, Karim R, Claridge S, Jackson T, Sieniewicz B, Patel N, O’Neill M, Razavi R, Rhode K, Rinaldi CA (2017) Real-time X-MRI-guided left ventricular lead implantation for targeted delivery of cardiac resynchronization therapy. JACC Clin Electrophysiol 3:803–814. 10.1016/j.jacep.2017.01.018

- 9. Bertsche D, Rottbauer W, Rasche V, Buckert D, Markovic S, Metze P, Gonska B, Luo E, Dahme T, Vernikouskaya I, Schneider LM (2022) Computed tomography angiography/magnetic resonance imaging-based preprocedural planning and guidance in the interventional treatment of structural heart disease. Front Cardiovasc Med 9:931959. 10.3389/fcvm.2022.931959

- 10. Khalil A, Faisal A, Lai KW, Ng SC, Liew YM (2017) 2D to 3D fusion of echocardiography and cardiac CT for TAVR and TAVI image guidance. Med Biol Eng Comput 55:1317–1326. 10.1007/s11517-016-1594-6

- 11. Hell MM, Kreidel F, Geyer M, Ruf TF, Tamm AR, Da Rocha e Silva JG, Münzel T, von Bardeleben RS (2021) The revolution in heart valve therapy: focus on novel imaging techniques in intra-procedural guidance. Struct Heart 5:140–150. 10.1080/24748706.2020.1853293

- 12. Li Z, Mancini ME, Monizzi G, Andreini D, Ferrigno G, Dankelman J, De Momi E (2021) Model-to-image registration via deep learning towards image-guided endovascular interventions. In: International symposium on medical robotics (ISMR), IEEE, pp. 1–6. 10.1109/ismr48346.2021.9661511

- 13. Peoples JJ, Bisleri G, Ellis RE (2019) Deformable multimodal registration for navigation in beating-heart cardiac surgery. Int J CARS 14:955–966. 10.1007/s11548-019-01932-2

- 14. Unberath M, Gao C, Hu Y, Judish M, Taylor RH, Armand M, Grupp R (2021) The impact of machine learning on 2D/3D registration for image-guided interventions: a systematic review and perspective. Front Robot AI 8:716007. 10.3389/frobt.2021.716007

- 15. Vernikouskaya I, Bertsche D, Rottbauer W, Rasche V (2022) Deep learning-based framework for motion-compensated image fusion in catheterization procedures. Comput Med Imaging Graph 98:102069. 10.1016/j.compmedimag.2022.102069

- 16. Xu R, Athavale P, Krahn P, Anderson K, Barry J, Biswas L, Ramanan V, Yak N, Pop M, Wright GA (2015) Feasibility study of respiratory motion modeling based correction for MRI-guided intracardiac interventional procedures. IEEE Trans Biomed Eng 62:2899–2910. 10.1109/TBME.2015.2451517

- 17. Azizmohammadi F, Navarro Castellanos I, Miro J, Segars PW, Samei E, Duong L (2022) Patient-specific cardio-respiratory motion prediction in x-ray angiography using LSTM networks. Phys Med Biol. 10.1088/1361-6560/acaba8

- 18. Manstad-Hulaas F, Tangen GA, Demirci S, Pfister M, Lydersen S, Nagelhus Hernes TA (2011) Endovascular image-guided navigation: validation of two volume-volume registration algorithms. Minim Invasive Ther Allied Technol 20:282–289. 10.3109/13645706.2010.536244

- 19. Ma YL, Penney GP, Rinaldi CA, Cooklin M, Razavi R, Rhode KS (2009) Echocardiography to magnetic resonance image registration for use in image-guided cardiac catheterization procedures. Phys Med Biol 54:5039–5055. 10.1088/0031-9155/54/16/013

- 20. Linte CA, Moore J, Peters TM (2010) How accurate is accurate enough? A brief overview on accuracy considerations in image-guided cardiac interventions. In: 2010 annual international conference of the IEEE engineering in medicine and biology, pp. 2313–2316. 10.1109/IEMBS.2010.5627652

- 21. Linte CA, Lang P, Rettmann ME, Cho DS, Holmes DR, Robb RA, Peters TM (2012) Accuracy considerations in image-guided cardiac interventions: experience and lessons learned. Int J CARS 7:13–25. 10.1007/s11548-011-0621-1

- 22. Vernikouskaya I, Bertsche D, Rottbauer W, Rasche V (2021) 3D-XGuide: open-source X-ray navigation guidance system. Int J CARS 16:53–63. 10.1007/s11548-020-02274-0

- 23. Chen X, Diaz-Pinto A, Ravikumar N, Frangi A (2020) Deep learning in medical image registration. Prog Biomed Eng 3:12003. 10.1088/2516-1091/abd37c

- 24. Homsi R, Meier-Schroers M, Gieseke J, Dabir D, Luetkens JA, Kuetting DL, Naehle CP, Marx C, Schild HH, Thomas DK, Sprinkart AM (2016) 3D-Dixon MRI based volumetry of peri- and epicardial fat. Int J Cardiovasc Imaging 32:291–299. 10.1007/s10554-015-0778-8

- 25. Fedorov A, Beichel R, Kalpathy-Cramer J, Finet J, Fillion-Robin J-C, Pujol S, Bauer C, Jennings D, Fennessy F, Sonak M, Buatti J, Aylward S, Miller JV, Pieper S, Kikinis R (2012) 3D Slicer as an image computing platform for the Quantitative Imaging Network. Magn Reson Imaging 30:1323–1341. 10.1016/j.mri.2012.05.001

- 26. Johnson H, Harris G, Williams K (2007) BRAINSFit: mutual information registrations of whole-brain 3D images, using the insight toolkit. Insight J. 10.54294/hmb052

- 27. Vernikouskaya I, Bertsche D, Dahme T, Rasche V (2021) Cryo-balloon catheter localization in X-Ray fluoroscopy using U-net. Int J CARS 16:1255–1262. 10.1007/s11548-021-02366-5

- 28. Hartley R, Zisserman A (2018) Multiple view geometry in computer vision. Cambridge University Press, Cambridge

- 29. Rasche V, Mansour M, Reddy V, Singh JP, Qureshi A, Manzke R, Sokka S, Ruskin J (2008) Fusion of three-dimensional X-ray angiography and three-dimensional echocardiography. Int J CARS 2:293–303. 10.1007/s11548-007-0142-0

- 30. Kolbitsch C, Prieto C, Buerger C, Harrison J, Razavi R, Smink J, Schaeffter T (2012) Prospective high-resolution respiratory-resolved whole-heart MRI for image-guided cardiovascular interventions. Magn Reson Med 68:205–213. 10.1002/mrm.23216

- 31. Rettmann ME, Holmes DR III, Johnson SB, Lehmann HI, Robb RA, Packer DL (2015) Analysis of left atrial respiratory and cardiac motion for cardiac ablation therapy. Medical Imaging 2015: image-guided procedures. Robot Interv Model SPIE 9415:651–656. 10.1117/12.2081209

- 32. Xie Y, Cao L, Qian Y, Zheng H, Liu K, Li X (2020) Effect of deep sedation on mechanical power in moderate to severe acute respiratory distress syndrome: a prospective self-control study. BioMed Res Int. 10.1155/2020/2729354

- 33. Movassaghi B, Rasche V, Grass M, Viergever MA, Niessen WJ (2004) A quantitative analysis of 3-D coronary modeling from two or more projection images. IEEE Trans Med Imaging 23:1517–1531. 10.1109/tmi.2004.837340

- 34. Häger S, Lange A, Heldmann S, Modersitzki J, Petersik A, Schröder M, Gottschling H, Lieth T, Zähringer E, Moltz JH (2022) Robust Intensity-based Initialization for 2D-3D Pelvis Registration (RobIn). In: Bildverarbeitung für die Medizin, pp. 69–74. 10.1007/978-3-658-36932-3_14

- 35. Häger S, Heldmann S, Hering A, Kuckertz S, Lange A (2021) Variable Fraunhofer MEVIS RegLib comprehensively applied to Learn2Reg challenge. In: International conference on medical image computing and computer-assisted intervention, pp. 74–79. 10.1007/978-3-030-71827-5_9