Abstract

Background

It is essential to consider the evidence of consumer preferences and their specific needs when determining which strategies to use to improve patient attendance at scheduled healthcare appointments.

Objectives

This study aimed to identify key attributes and elicit healthcare consumer preferences for a healthcare appointment reminder system.

Methods

A discrete choice experiment was conducted in a general Australian population sample. The respondents were asked to choose between three options: their preferred reminder (A or B) or a ‘neither’ option. Attributes were developed through a literature review and an expert panel discussion. Reminder options were defined by four attributes: modality, timing, content and interactivity. Multinomial logit and mixed multinomial logit models were estimated to approximate individual preferences for these attributes. A scenario analysis was performed to estimate the likelihood of choosing different reminder systems.

Results

Respondents (n = 361) indicated a significant preference for an appointment reminder to be delivered via a text message (β = 2.42, p < 0.001) less than 3 days before the appointment (β = 0.99, p < 0.001), with basic details including the appointment cost (β = 0.13, p < 0.10), and where there is the ability to cancel or modify the appointment (β = 1.36, p < 0.001). A scenario analysis showed that the likelihood of choosing an appointment reminder system with these characteristics would be 97%.

Conclusions

Our findings provide evidence on how healthcare consumers trade off different characteristics of reminder systems, which may be valuable to inform current or future systems. Future studies may focus on exploring the effectiveness of using patient-preferred reminders alongside other mitigation strategies used by providers.

Supplementary Information

The online version contains supplementary material available at 10.1007/s40271-024-00692-9.

Key Points for Decision Makers

| Given the evolution of digital communication tools such as reminder systems, there is a range of characteristics that could be integrated into such systems to help address patient non-attendance and improve patient engagement; however, little is known about patient preferences for aspects of these reminder systems in Australia. |

| Respondents had a significant preference for an appointment reminder to be delivered via a text message, less than 3 days before the appointment, with basic details including the appointment cost, and where there is the ability to cancel or modify the appointment. |

| A scenario analysis showed that the likelihood of choosing an appointment reminder system with the preferred characteristics would be 97%. |

Introduction

Unplanned non-attendance, referring to instances where patients do not attend scheduled healthcare appointments, is considered a major source of waste within healthcare systems [1]. Reported non-attendance rates vary widely, ranging from 6.5% to 43% [2]. Non-attendance has both monetary (i.e. financial) and non-monetary (i.e. social) costs [3]. Monetary costs include the lost income of healthcare providers, such as lost or lower reimbursement received by providers as a result of having patients who do not attend their appointments [3]. Non-monetary costs relate to the ineffective, inefficient or wasteful use of resources such as staff time, equipment and ward capacity [3]. Non-attendance may also have flow-on impacts for patients, such as spending prolonged periods on waiting lists and potentially delayed access to healthcare services [3, 4]. This can in turn lead to reduced patient satisfaction and may have detrimental consequences for patient outcomes [4].

Non-attendance is a multi-faceted issue that has been attributed to both patient and system-level factors [5]. In recent years, the evolution of digital communication tools has contributed to a wide range of options for increasing appointment attendance and mitigating the impact of non-attendance at scheduled healthcare appointments. Among the most common mitigation strategies used in healthcare systems are reminder systems. However, the success of reminders varies across different contexts [6]. The literature suggests that intervention success may be dependent on provider and consumer preferences, the needs of specific patients and the level of resources available [7–9]. Given this context, it is essential to consider the evidence of consumer preferences and their specific needs when determining which strategies to use to improve patient attendance at scheduled healthcare appointments.

Discrete choice experiments (DCEs) are a widely used method to elicit preferences for different aspects of healthcare products or services [10–12]. A DCE presents individuals with several hypothetical health scenarios (i.e. choice sets), each containing two or more alternatives between which individuals are asked to choose [11, 13, 14]. There has been limited use of DCE methods to elicit preferences for healthcare appointment reminders, with no previous studies conducted in an Australian setting. Accounting for contextual variations and the potential differences in population preferences is an important consideration when interpreting DCE findings and their implications for the implementation of strategies into varying clinical settings [15]. Understanding how patients value the different attributes of a healthcare appointment reminder may lead to improved reminder systems that reduce unplanned non-attendance, enhance patient engagement with healthcare systems and result in a more efficient use of resources. Hence, this study aimed to (1) identify the key attributes and levels of a healthcare appointment reminder system for use in a DCE and (2) conduct a DCE to understand preferences for a healthcare appointment reminder system from the perspective of healthcare consumers.

Methods

DCE Development Process

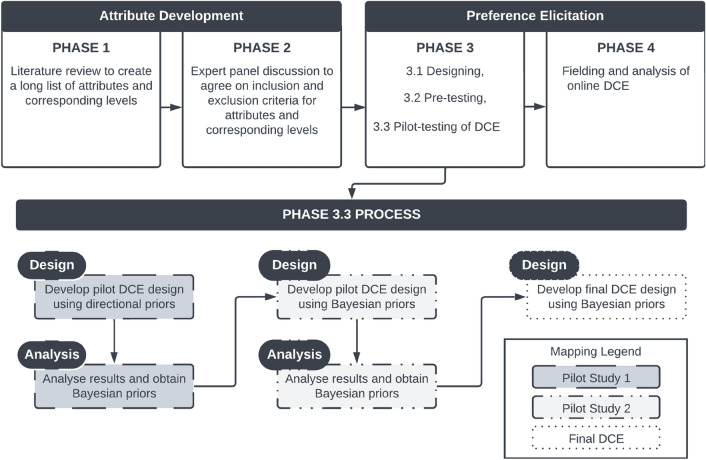

To elicit preferences for a healthcare appointment reminder, this study employed a four-phase approach (Fig. 1), encompassing attribute development and preference elicitation. The study was designed and reported in line with published recommendations [11, 16–18]. The approach comprised a literature review (phase 1); an expert panel discussion (phase 2); designing, pre-testing and piloting (phase 3); and fielding and analysis of the online DCE (phase 4) [19–21]. The study phases were conducted from August 2022 to September 2023.

Fig. 1.

Flowchart of discrete choice experiment (DCE) study process

Phase 1: Literature Review to Create a Long List of Attributes and Corresponding Levels

A literature review was performed to identify relevant attributes and levels for healthcare appointment reminders. Three electronic databases, PubMed, Embase and Web of Science, were searched from inception to August 2022. A combination of commonly used terms related to the topics of ‘reminder system’ and ‘patient preference’ was used. There were no restrictions applied to the study design. Only studies that were reported in the English language were included. The search strings for each database and results are provided in Tables 1.1–1.3 of the Electronic Supplementary Material (ESM).

The literature review identified seven types of attributes for healthcare appointment reminders: reminder modality, reminder timing (including time of day, and day of week of arrival of the reminder), reminder content, number of reminders, messenger (who sends the message), reminder length and ability to personalise the reminder. These results are presented in Fig. 1.1 and Table 1.4 of the ESM.

Phase 2: Expert Panel Discussion to Agree on Inclusion and Exclusion Criteria for Attributes and Corresponding Levels

The expert panel comprised the research team, which included six health service researchers with backgrounds in health economics, medicine, allied health, psychology and implementation science. The expert panel discussed all seven types of attributes for a healthcare appointment reminder identified from the literature and deliberated on which attributes and corresponding levels to include in the DCE survey. Elements that were discussed included the clinical face validity of the attributes (i.e. realism of system attributes and suitable levels to be included), the level of detail in the presented scenario that might be needed to make a real-world decision, attribute dominance, attribute overlapping and the trading-off of attributes. Definitions for the described elements are presented in Sect. 2 of the ESM.

Four attributes were identified by the expert panel as the most salient features of patient reminder systems. These attributes were maintained as they were or collapsed, simplified and reworded; they included reminder modality, timing, content and interactivity. The number of levels was capped at a maximum of five per attribute (Table 1).

Table 1.

Finalised list of attributes from the expert panel discussion

| Attributes | Levels | Description |
|---|---|---|
| Modality | Automated text message | Type of reminder that will be received |
| | E-mail | |
| | Automated/robot phone call | |
| | Phone call with a person | |
| | Postal letter | |
| Timing | Less than 3 days before the appointment | When the reminder is received |
| | 1 week before the appointment | |
| | 1 month before the appointment | |
| Content | Basic details (clinic location information (address, directions and map) + time and date) | Information provided in the reminder |
| | Basic details + clinician name | |
| | Basic details + appointment cost | |
| Interactivity | Ability to cancel or modify the appointment | What can be done with the reminder |
| | No ability to cancel or modify the appointment | |

Phase 3: Designing, Pre-Testing and Piloting

Survey Design

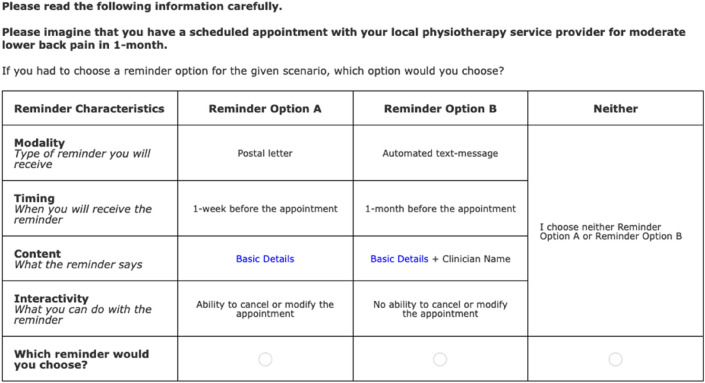

The online survey contained two main sections. The first section contained instructions on how to complete the DCE survey, a practice DCE choice task, ten DCE choice tasks and one repeat DCE choice task (selected from one of the ten presented DCE choice tasks). The same hypothetical scenario of having a scheduled outpatient clinic appointment was shown across all choice tasks. For each choice task, participants were asked to choose their preferred reminder (A or B) or a ‘neither’ option. The two reminders differed in terms of the presented attributes and their corresponding levels (Fig. 2). The survey presented respondents with both an unconditional choice and a conditional choice (definitions and considerations surrounding the inclusion of a ‘neither’ option are presented in Sect. 3 of the ESM). When participants chose the ‘neither’ option, a supplementary question asked which of the two reminders they would choose if they had to choose one. This is considered a conditional choice because participants are only presented with it after selecting the ‘neither’ option [22]. The order of the alternatives was varied randomly for each choice task, to minimise “order bias” [23]. The second section of the survey asked participants to provide demographic data including sex, age, education, marital status and employment. Participants were also asked about their current appointment attendance patterns (e.g. number of appointments attended in the past 24 months, number of missed appointments and the reason/s for missing an appointment).

Fig. 2.

Sample choice task

Survey Participant Recruitment

Participants were recruited from PureProfile (www.pureprofile.com), an Australian online panel company. These participants have expressed their interest in completing research surveys as part of signing up for PureProfile. Through the use of an online panel company, a sample that is largely representative of the general Australian population in terms of key demographic variables (e.g. age, sex, state of residence) was able to be recruited. Evidence suggests that DCE data collected via online surveys does not yield inferior results when compared to paper-based surveys [24]. Additionally, the reach of the DCE survey may be improved when conducted online [25]. The inclusion criteria for the DCE study were (1) adults (aged 18 years and above); (2) who have attended at least one healthcare appointment in the past 24 months; (3) who are able to complete the survey in English without the need for a translator; and (4) who live in Australia.

Designing DCE Choice Tasks (Experimental Design)

An unlabeled DCE design was used for this study. Given the number of selected attributes and their corresponding levels, a full factorial design would result in 8100 possible choice tasks. As it is not feasible for participants to evaluate such a large number of profiles, a D-efficient fractional factorial design with attribute-level balance was generated in Ngene software to select a subset of the profiles [26]. A fractional factorial design presents participants with a manageable number of choice tasks while maximising the design’s statistical efficiency, which yields smaller standard errors and more precise parameter estimates [27, 28]. An efficient design requires the specification of priors, that is, the best available estimates of the coefficients associated with each attribute. A total of 30 choice tasks were generated and blocked into three groups, so each participant was shown ten choice tasks followed by one repeat task. This is in line with the number of choice tasks used in other health-related studies [29, 30].
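The size of the design space can be checked with a short enumeration. The sketch below assumes five modality levels (text message, e-mail, robot call, personal call and postal letter, with e-mail taken from the results), three timing levels, three content levels and two interactivity levels. It also assumes that the figure of 8100 counts ordered pairs of profiles for the two unlabelled alternatives; the text does not state this explicitly. The round-robin blocking is purely illustrative and is not the Ngene blocking procedure.

```python
from itertools import product

# Attribute levels; the five-level modality list is an assumption (see lead-in).
ATTRIBUTES = {
    "modality": ["automated text message", "e-mail", "automated/robot phone call",
                 "phone call with a person", "postal letter"],
    "timing": ["less than 3 days before", "1 week before", "1 month before"],
    "content": ["basic details", "basic details + clinician name",
                "basic details + appointment cost"],
    "interactivity": ["can cancel or modify", "cannot cancel or modify"],
}

# Every possible reminder profile (one level per attribute).
profiles = list(product(*ATTRIBUTES.values()))
n_profiles = len(profiles)

# Ordered (A, B) pairs of profiles, assumed here to be what "8100" counts.
n_choice_tasks = n_profiles ** 2

# Illustrative blocking: 30 selected tasks split into 3 blocks of 10.
selected_tasks = list(range(30))  # placeholder indices, not the real design
blocks = [selected_tasks[i::3] for i in range(3)]

print(n_profiles, n_choice_tasks, [len(b) for b in blocks])
```

Under these assumptions there are 90 profiles and 90 × 90 = 8100 candidate choice tasks, of which the fractional design keeps 30.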

Survey Validity (Pre-Testing)

The online survey was pre-tested to investigate its content and face validity. This included ascertaining participants’ comprehension of the wording used to characterise the attributes of the appointment reminder, the ease of following the instructions and the overall presentation of the survey [31]. The pre-test was administered to a sample of ten members of the general population. Potential pre-testing participants were identified through the existing contacts of members of the research team, as well as existing collaborative research networks. Other studies have shown that a minimum sample size of ten respondents is sufficient for checking readability and clarity within the questionnaire before it goes out to a broader population [32, 33]. Following the completion of the pre-test, the research team reviewed the content of the survey and revised it based on recommendations received from the respondents to improve survey clarity.

Obtaining Priors and Testing Choice Reliability (Pilot Testing)

To obtain the Bayesian priors (where the parameter estimate is not known with certainty but can be described by a probability distribution) used to generate the final D-efficient design, it is considered best practice to conduct a pilot test [28]. The revised survey (developed following the completion of the pre-test) was used for the pilot test. In the absence of prior information on the coefficients of the different attributes, either small positive or negative priors, or zero priors (non-informative priors), may be used in the D-efficient design. Based on findings from the literature review, the following a priori hypothesis was used to determine the direction of the priors for the pilot: people prefer automated text messages that are delivered less than 3 days before the appointment, that provide basic details and the appointment cost, and that give them the ability to cancel or modify the appointment. The pilot was administered to a sample of 54 members of the general population recruited from PureProfile. Following the completion of the pilot, the research team amended the survey as per the respondents’ recommendations, which included changing the wording used for the ‘neither’ option. Further, the pilot data provided estimates that enabled the generation of Bayesian priors, which were then used in designing the choice tasks for a second pilot test.

The S-estimate refers to the minimum number of respondents required to achieve statistically significant coefficients with 95% confidence [22]. Following the completion of the first pilot, an experimental design was developed; however, upon checking the results (presented in Sect. 3 of the ESM), a high S-estimate was shown. Given budgetary constraints, the research team opted to conduct a second pilot with a small sample size to determine if the S-estimate could possibly be reduced, and if so, use the priors generated from this in the final DCE design. The second pilot test was conducted with a sample of 53 (recruited from PureProfile) to compute priors that could be used in the final DCE survey as well as to check the comprehension of the amended ‘neither’ option. Following the completion of the second pilot, it was seen that the S-estimate and the drivers for high Sb estimates were reduced. There is no formal guidance on the exact sample size required for piloting. However, the accuracy of the priors is said to improve as the sample size is increased. Other studies have indicated that a sample of 30 may be sufficient to generate useable data [28]. The priors and additional information on the process followed during pilot testing are presented in Sect. 3 of the ESM. No further testing was performed as participants provided positive feedback, indicating that the survey was easy to understand. A breakdown of each step conducted within this phase is presented in Fig. 1.
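In the efficient-design literature, the S-estimate for a single parameter is commonly computed as (t × SE / β)², where SE is the standard error the design implies for one respondent, and the overall S-estimate is the largest of these values. The sketch below illustrates that calculation only. The priors and standard errors are hypothetical, not the study values (those are in the ESM), and the function name is introduced here for illustration.

```python
import math

def s_estimate(prior: float, se_single: float, t_crit: float = 1.96) -> float:
    """Minimum respondents for |prior / se| >= t_crit, given the standard
    error se_single that the design implies for a single respondent."""
    if prior == 0:
        return math.inf  # a zero prior can never reach significance
    return (t_crit * se_single / prior) ** 2

# Hypothetical priors and single-respondent standard errors (not study values).
design = {
    "text_message": (2.0, 4.0),
    "less_than_3_days": (1.0, 3.0),
    "appointment_cost": (0.1, 2.5),
}

per_parameter = {name: s_estimate(b, se) for name, (b, se) in design.items()}
overall = max(per_parameter.values())  # the weakest parameter drives the design

for name, s in per_parameter.items():
    print(f"{name}: about {s:.0f} respondents")
print(f"S-estimate: about {overall:.0f} respondents")
```

In this illustration the small prior on the hypothetical cost level dominates the result, which shows how a weakly valued level can inflate the required sample size.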

To assess the consistency of participants’ responses to choice tasks over the duration of the survey, a test-retest stability check was used. This refers to the inclusion of a repeat-choice task, where one of the ten choice tasks presented to the respondent was shown again as the 11th task. The proportion of respondents who gave the same response to the repeat-choice task as to the original task was then used to assess stability [34]. The repeat-choice task was excluded from the final analysis.
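The stability measure described here reduces to a simple agreement rate. A minimal sketch, using hypothetical responses and an illustrative function name:

```python
def retest_agreement(original: list[str], repeat: list[str]) -> float:
    """Share of respondents whose repeat-task answer matches their first answer."""
    if len(original) != len(repeat) or not original:
        raise ValueError("need two non-empty lists of equal length")
    same = sum(a == b for a, b in zip(original, repeat))
    return same / len(original)

# Hypothetical answers ("A", "B" or "neither") for five respondents.
first_pass = ["A", "B", "A", "neither", "B"]
repeat_pass = ["A", "B", "B", "neither", "B"]

print(f"{retest_agreement(first_pass, repeat_pass):.0%} consistent")
```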

Phase 4: Fielding and Analysis of the Online DCE

Following the completion of the pilot tests, the final DCE study was fielded. The data from the DCE were analysed within a random utility theory framework, which assumes that respondents choose the option that maximises their utility [22]. The underlying utility function used was:

where U is the individual-specific utility derived from choosing an alternative in each choice scenario, β is the vector of coefficients representing the desirability of each attribute level and ε is the random error of the model.
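A generic linear-in-parameters form consistent with these definitions is given below. It is an assumption based on standard random utility notation, not necessarily the authors' exact specification, and X (the vector of dummy-coded levels of modality, timing, content and interactivity for the alternative) is a symbol introduced here:

$$U = \beta' X + \varepsilon$$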

In the equation, all attributes were treated as categorical variables and dummy coding was used. Two statistical models were considered: a multinomial logit model, which assumes preference homogeneity among survey respondents, and a mixed multinomial logit (MMNL) model, which accounts for unobserved continuous preference heterogeneity and takes into consideration the panel nature of the stated-preference data (i.e. multiple observations by a single respondent) [35, 36]. For dummy-coded variables, each coefficient estimated by the model measures the strength of preference for that level relative to the omitted level of that attribute (reference level) [35]. For the MMNL model, all dummy-coded parameters were initially specified as random with a normal distribution. Following the initial analysis, given that all parameters had a statistically significant standard deviation, they were all treated as random in the final model; no parameters were considered fixed. The MMNL model used 500 Halton draws. In addition, observed variables such as age, sex and whether respondents had missed an appointment in the past were included as a series of interaction terms with attribute levels to estimate their influence on preferences. The Akaike information criterion (AIC)/N and the log-likelihood function (LL) were used to select between the multinomial logit and MMNL models, where the smaller the value of AIC/N and the higher the LL, the better the model fit [35]. The relative importance of each attribute was calculated by dividing the utility range of a particular attribute by the sum of utility ranges for all attributes. The unconditional and conditional datasets were merged during the final analysis (Sect. 4 of the ESM).
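The relative importance calculation described above can be sketched as follows. The coefficients are hypothetical and chosen only to make the arithmetic easy to follow; they are not the study estimates, and the function name is illustrative.

```python
def relative_importance(coefs: dict[str, dict[str, float]]) -> dict[str, float]:
    """Utility range of each attribute divided by the sum of all ranges.

    coefs maps attribute -> {level: coefficient}; the reference level of a
    dummy-coded attribute must be included with a coefficient of 0.0.
    """
    ranges = {a: max(lv.values()) - min(lv.values()) for a, lv in coefs.items()}
    total = sum(ranges.values())
    return {a: r / total for a, r in ranges.items()}

# Hypothetical dummy-coded coefficients (reference levels fixed at 0.0).
example = {
    "modality": {"letter": 0.0, "phone": 0.5, "text": 1.5},
    "timing": {"1 month": 0.0, "1 week": 0.25},
    "interactivity": {"none": 0.0, "cancel/modify": 0.75},
}

for attribute, share in relative_importance(example).items():
    print(f"{attribute}: {share:.0%}")
```

The shares sum to one by construction, so an attribute's importance is always relative to the other attributes and levels included in the experiment.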

Various scenarios were simulated to estimate the likelihood of individuals choosing different reminder system variations (Sect. 4 of the ESM). These scenarios are based on changing the modality, timing, content and interactivity of the reminder system. For example, if the reminder received is an automated text message, sent less than 3 days before the appointment, with basic details and the appointment cost, and provides the ability to cancel or modify the appointment, what would be the likelihood of choosing this reminder system compared to the base scenario (reference level)? To run the scenario analysis, we first estimated the MMNL model, and then the simulation commands were specified in the model (Sect. 4 of the ESM). This yielded the probabilities of choosing a reminder system for the given scenarios. The scenario analysis results were presented in a simple descriptive (%) form. All statistical analyses were performed in NLOGIT 5 [37].
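As a rough illustration of the logic behind a scenario probability, the sketch below applies a simple binary logit to the mean MMNL coefficients reported in Table 3, comparing the preferred reminder with a base scenario in which every attribute is at its reference level. This is a deliberate simplification and an assumption of this example: it ignores the alternative-specific constants, the ‘neither’ option and the random-parameter distributions, so it is not the NLOGIT simulation described above and is not expected to reproduce the reported percentages.

```python
import math

# Mean MMNL coefficients for the preferred levels, taken from Table 3;
# reference levels (postal letter, 1 month, basic details, no ability) are 0.
PREFERRED = {
    "automated text message": 2.42,
    "less than 3 days before": 0.99,
    "basic details + appointment cost": 0.13,
    "ability to cancel or modify": 1.36,
}

def choice_probability(v_scenario: float, v_base: float = 0.0) -> float:
    """Binary logit probability of picking the scenario over the base."""
    return 1.0 / (1.0 + math.exp(v_base - v_scenario))

v = sum(PREFERRED.values())  # systematic utility of the preferred reminder
print(f"utility = {v:.2f}, P(choose) = {choice_probability(v):.3f}")
```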

Results

Respondents’ Characteristics

Three hundred and sixty-one participants completed the final online DCE survey. The study sample had a mean age of 43 years (range 18–70) and 54.6% were female. Further, 72.9% of respondents had attended between one and ten appointments in the past 24 months and 68.4% had missed none of their last ten scheduled appointments; those who reported missing appointments cited forgetting the appointment (9.4%) and other commitments (8.6%) as the main reasons for doing so. Further details on the final online DCE respondents’ characteristics are presented in Table 2, and pilot study respondents’ characteristics are presented in Sect. 3 of the ESM.

Table 2.

Respondent characteristics

| Variable | Categories | Number (%) N = 361 |
|---|---|---|
| Age, years | 18–24 | 45 (12.50) |
| | 25–34 | 78 (21.60) |
| | 35–44 | 71 (19.70) |
| | 45–54 | 70 (19.40) |
| | 55–64 | 67 (18.60) |
| | 65–70 | 30 (8.30) |
| Sex | Male | 163 (45.20) |
| | Female | 197 (54.60) |
| | Non-binary | 1 (0.30) |
| State of residence | Australian Capital Territory | 8 (2.20) |
| | New South Wales | 120 (33.20) |
| | South Australia | 25 (6.90) |
| | Victoria | 90 (24.90) |
| | Queensland | 71 (19.70) |
| | Western Australia | 46 (12.70) |
| | Tasmania | 1 (0.30) |
| Level of education | No school certificate or other qualifications | 10 (2.80) |
| | School or intermediate certificate (or equivalent) | 20 (5.50) |
| | Higher school or leaving certificate (or equivalent) | 85 (23.50) |
| | Trade/apprenticeship (e.g. hairdresser, chef) | 19 (5.30) |
| | Certificate/diploma (e.g. childcare, technician) | 65 (18.00) |
| | University degree or higher | 161 (44.60) |
| | Other | 1 (0.30) |
| Marital status | Single | 115 (31.90) |
| | Widowed | 6 (1.70) |
| | Married | 157 (43.50) |
| | Divorced | 26 (7.20) |
| | De facto/living with partner | 51 (14.10) |
| | Separated | 6 (1.70) |
| Employment status | Full-time employment | 184 (51.00) |
| | Part-time employment | 73 (20.20) |
| | Unemployed | 38 (10.50) |
| | Disability pension | 14 (3.90) |
| | Retired | 37 (10.20) |
| | Other | 15 (4.20) |
| How many healthcare appointments do you think you have attended in the past 24 months? | 1–10 | 263 (72.90) |
| | 11–20 | 64 (17.70) |
| | 21–30 | 19 (5.30) |
| | 31–40 | 8 (2.20) |
| | More than 40 | 7 (1.90) |
| We would like you to consider the last 10 healthcare appointments of any kind that you were scheduled to attend. How many of these 10 appointments did you miss without cancelling or rescheduling beforehand? | 0 | 247 (68.40) |
| | 1–2 | 52 (14.40) |
| | 3–4 | 8 (2.20) |
| | 5–6 | 11 (3.00) |
| | 7–8 | 23 (6.40) |
| | 9–10 | 20 (5.50) |
| If you have missed a healthcare appointment in the past, what was the reason/s for this?ᵃ | I forgot about the appointment | 34 (9.40) |
| | I was not able to leave an event/other commitment | 31 (8.60) |
| | I was confused about the time, date or location of the appointment | 19 (5.30) |
| | I had transportation difficulties | 21 (5.80) |
| | I no longer felt sick | 21 (5.80) |
| | I was too sick | 16 (4.40) |
| | Other | 5 (1.40) |

ᵃ Participants could select more than one option

Preferences for a Healthcare Appointment Reminder

We ran both a multinomial logit and an MMNL model and compared their fit using the AIC/N and LL results. The MMNL model had a smaller AIC/N (1.69 vs 1.85) and a higher LL (−3434.12 vs −3775.01), indicating a better model fit; hence, we present these results. The results of the multinomial logit model are presented in Sect. 4 of the ESM. The MMNL model (Table 3) showed several significant attribute coefficients that respondents considered while choosing the reminder system characteristics they preferred. The results indicate that respondents most preferred an automated text message (β = 2.42, p < 0.001) compared with a postal letter (reference level), as shown by the positive (statistically significant) coefficient for this level. The second most preferred reminder modality was e-mail (β = 1.61, p < 0.001). In terms of timing, respondents most preferred to receive reminders less than 3 days before the appointment (β = 0.99, p < 0.001) compared with 1 month before the appointment (reference level). Respondents also most preferred to receive reminders displaying basic details and the appointment cost (β = 0.13, p < 0.10) compared with only basic details (reference level). Additionally, they preferred to have the ability to cancel or modify the appointment (β = 1.36, p < 0.001) compared with not having the ability to do so (reference level).
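The direction of the model comparison can be checked from the log-likelihoods alone. In the sketch below, the MMNL log-likelihood is the value shown in Table 3 and the other is the multinomial logit value quoted above. The parameter counts are assumptions read off Table 3 (three alternative-specific constants and nine level means, plus nine standard deviations for the mixed model). Because both models are fitted to the same data, dividing each AIC by the same N would not change the ranking.

```python
def aic(log_likelihood: float, n_params: int) -> float:
    """Akaike information criterion: 2k - 2LL (smaller is better)."""
    return 2 * n_params - 2 * log_likelihood

# (log-likelihood, assumed number of estimated parameters) per model.
models = {
    "multinomial logit": (-3775.01, 12),
    "mixed multinomial logit": (-3434.12, 21),
}

scores = {name: aic(ll, k) for name, (ll, k) in models.items()}
best = min(scores, key=scores.get)

for name, value in scores.items():
    print(f"{name}: AIC = {value:.2f}")
print("preferred:", best)
```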

Table 3.

Mixed multinomial logit model estimates

| Attributes and levels | Mixed multinomial logit | Mixed multinomial logit (with systematic preference heterogeneity) | ||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Mean | SD | Mean | SD | |||||||||

| COEFF | SE | 95% CI | COEFF | SE | 95% CI | COEFF | SE | 95% CI | COEFF | SE | 95% CI | |

| ASC1 | − 1.06*** | 0.15 | − 1.36 to − 0.76 | – | – | – | − 1.07*** | 0.15 | − 1.37 to − 0.77 | – | – | – |

| ASC2 | − 1.13*** | 0.15 | − 1.42 to − 0.84 | – | – | – | − 1.14*** | 0.15 | − 1.44 to − 0.85 | – | – | – |

| ASC3 | 0.40*** | 0.13 | 0.14 to 0.66 | – | – | – | 0.40*** | 0.13 | 0.14 to 0.67 | – | – | – |

| Modality | ||||||||||||

| Postal letter | Reference | |||||||||||

| 1.61*** | 0.14 | 1.33 to 1.90 | 1.09*** | 0.15 | 0.79 to 1.39 | 2.45*** | 0.40 | 1.66 to 3.24 | 1.10*** | 0.16 | 0.80 to 1.41 | |

| Robot phone call | 0.67*** | 0.12 | 0.43 to 0.91 | 1.40*** | 0.14 | 1.13 to 1.68 | 1.06*** | 0.40 | 0.28 to 1.85 | 1.40*** | 0.14 | 1.12 to 1.68 |

| Phone call with a person | 1.18*** | 0.13 | 0.93 to 1.43 | 1.50*** | 0.13 | 1.23 to 1.76 | 0.90** | 0.40 | 0.11 to 1.69 | 1.45*** | 0.13 | 1.19 to 1.71 |

| Automated text message | 2.42*** | 0.18 | 2.07 to 2.78 | 1.53*** | 0.15 | 1.24 to 1.83 | 2.72*** | 0.48 | 1.78 to 3.66 | 1.52*** | 0.15 | 1.23 to 1.81 |

| Timing | ||||||||||||

| 1 month before the appointment | Reference | |||||||||||

| 1 week before the appointment | 0.87*** | 0.09 | 0.69 to 1.05 | 0.76*** | 0.09 | 0.58 to 0.93 | 1.03*** | 0.28 | 0.48 to 1.57 | 0.75*** | 0.09 | 0.57 to 0.93 |

| Less than 3 days before the appointment | 0.99*** | 0.11 | 0.77 to 1.20 | 1.04*** | 0.10 | 0.85 to 1.24 | 0.81** | 0.32 | 0.19 to 1.43 | 1.05*** | 0.10 | 0.85 to 1.25 |

| Content | ||||||||||||

| Basic details | Reference | |||||||||||

| Basic details + clinician name | 0.12 | 0.08 | − 0.03 to 0.27 | 0.34** | 0.14 | 0.07 to 0.60 | − 0.03 | 0.26 | − 0.55 to 0.48 | 0.34** | 0.14 | 0.07 to 0.61 |

| Basic details + appointment cost | 0.13* | 0.07 | − 0.02 to 0.28 | 0.60*** | 0.11 | 0.39 to 0.82 | 0.52** | 0.26 | 0.01 to 1.04 | 0.60*** | 0.11 | 0.39 to 0.82 |

| Interactivity | ||||||||||||

| No ability to cancel or modify the appointment | Reference | |||||||||||

| Ability to cancel or modify the appointment | 1.36*** | 0.11 | 1.14 to 1.57 | 1.48*** | 0.09 | 1.30 to 1.66 | 1.28*** | 0.34 | 0.62 to 1.95 | 1.50*** | 0.09 | 1.31 to 1.68 |

| Systematic preference heterogeneity | ||||||||||||

| Age × phone call with a person | – | – | – | – | – | – | − 0.015* | 0.008 | − 0.03 to 0.0002 | – | – | – |

| Age × automated/robot phone call | – | – | – | – | – | – | − 0.011 | 0.008 | − 0.027 to 0.005 | – | – | – |

| Age × e-mail | – | – | – | – | – | – | 0.001 | 0.008 | − 0.015 to 0.016 | – | – | – |

| Age × automated text message | – | – | – | – | – | – | − 0.010 | 0.009 | − 0.028 to 0.009 | – | – | – |

| Age × 1 week before appointment | – | – | – | – | – | – | 0.877D–04 | 0.006 | − 0.107D–01 to 0.109D–01 | – | – | – |

| Age × less than 3 days before appointment | – | – | – | – | – | – | 0.003 | 0.006 | − 0.01 to 0.015 | – | – | – |

| Age × basic details + clinician name | – | – | – | – | – | – | –0.0004 | 0.005 | − 0.011 to 0.01 | – | – | – |

| Age × basic details + appointment cost | – | – | – | – | – | – | − 0.009* | 0.005 | − 0.02 to 0.001 | – | – | – |

| Age × ability to cancel or modify the appointment | – | – | – | – | – | – | 0.004 | 0.007 | − 0.009 to 0.018 | – | – | – |

| Male × phone call with a person | – | – | – | – | – | – | − 0.027 | 0.212 | − 0.442 to 0.387 | – | – | – |

| Male × automated robot phone call | – | – | – | – | – | – | − 0.024 | 0.231 | − 0.477 to 0.428 | – | – | – |

| Male × e-mail | – | – | – | – | – | – | 0.329 | 0.223 | − 0.109 to 0.767 | – | – | – |

| Male × automated text message | – | – | – | – | – | – | 0.386 | 0.260 | − 0.124 to 0.896 | – | – | – |

| Male × 1 week before appointment | – | – | – | – | – | – | − 0.267* | 0.153 | − 0.567 to 0.033 | – | – | – |

| Male × less than 3 days before appointment | – | – | – | – | – | – | 0.018 | 0.180 | − 0.334 to 0.37 | – | – | – |

| Male × basic details + clinician name | – | – | – | – | – | – | 0.223 | 0.148 | − 0.066 to 0.512 | – | – | – |

| Male × basic details + appointment cost | – | – | – | – | – | – | 0.198 | 0.149 | − 0.095 to 0.491 | – | – | – |

| Male × ability to cancel or modify the appointment | – | – | – | – | – | – | − 0.215 | 0.193 | − 0.594 to 0.164 | – | – | – |

| Missed × phone call with a person | – | – | – | – | – | – | − 0.570** | 0.247 | − 1.055 to − 0.086 | – | – | – |

| Missed × automated/robot phone call | – | – | – | – | – | – | 0.301 | 0.258 | − 0.205 to 0.807 | – | – | – |

| Missed × e-mail | – | – | – | – | – | – | 0.316 | 0.252 | − 0.178 to 0.809 | – | – | – |

| Missed × automated text message | – | – | – | – | – | – | − 0.204 | 0.293 | − 0.778 to 0.37 | – | – | – |

| Missed × 1 week before appointment | – | – | – | – | – | – | −0.073 | 0.172 | − 0.41 to 0.263 | – | – | – |

| Missed × less than 3 days before appointment | – | – | – | – | – | – | 0.199 | 0.199 | − 0.192 to 0.589 | – | – | – |

| Missed × basic details + clinician name | – | – | – | – | – | – | 0.183 | 0.166 | − 0.143 to 0.509 | – | – | – |

| Missed × basic details + appointment cost | – | – | – | – | – | – | − 0.261 | 0.170 | − 0.594 to 0.072 | – | – | – |

| Missed × ability to cancel or modify the appointment | – | – | – | – | – | – | − 0.020 | 0.209 | − 0.43 to 0.391 | – | – | – |

| Model fit | ||||||||||||

| LL | − 3434.12 | − 3414.88 | ||||||||||

| AIC/N | 1.69 | 1.69 | ||||||||||

AIC Akaike information criterion, ASC alternative specific constant, CI confidence interval, COEFF coefficient, LL log likelihood, N number of respondents, SD standard deviation, SE standard error, *significant at the 10% level; **significant at the 5% level; ***significant at the 1% level

Dummy coding was used for all attributes

For values indicated as nnnnn.D–xx or D+xx, multiply by 10 to the power of − xx or + xx

In an MMNL model, the standard deviation reveals the degree of heterogeneity for each attribute level that was presented in the DCE survey. A significant standard deviation implies that preferences for that level varied among the respondents. The standard deviations for all levels were statistically significant at the 1% level, except for ‘basic details and clinician name’ (which was statistically significant at the 5% level), suggesting significant preference heterogeneity for these reminder characteristics.

We assessed the interactions between respondent characteristics (age, sex and whether they had missed an appointment in the past) and all attribute-level coefficients. Only four interaction terms were statistically significant, all of which had negative coefficients. This suggests that these respondent characteristics reduced the preference for the corresponding reminder features: older respondents were less likely to prefer being reminded via a phone call with a person or receiving a reminder with basic details and the appointment cost, male respondents were less likely to prefer a reminder 1 week before the appointment, and those who had missed an appointment in the past were less likely to prefer a phone call with a person.
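As a rough check on how the interaction rows in the table can be read, the sketch below recomputes a confidence interval and significance stars from a coefficient and its standard error. It assumes the reported intervals are Wald 95% intervals (coefficient ± 1.96 × SE) and that the stars reflect two-sided normal p-values; the source does not state this explicitly here, and the function name `wald_summary` is hypothetical.

```python
import math

Z_95 = 1.96  # two-sided 95% critical value of the standard normal (assumed)


def wald_summary(coeff, se):
    """Return (ci_low, ci_high, p_value, stars) for one coefficient."""
    z = coeff / se
    # Two-sided p-value under a standard normal approximation.
    p = math.erfc(abs(z) / math.sqrt(2))
    # Star convention taken from the table footnote (10%, 5%, 1% levels).
    stars = "***" if p < 0.01 else "**" if p < 0.05 else "*" if p < 0.10 else ""
    return coeff - Z_95 * se, coeff + Z_95 * se, p, stars


# Coefficients and standard errors copied from three interaction rows.
rows = {
    "Male x 1 week before appointment": (-0.267, 0.153),
    "Missed x phone call with a person": (-0.570, 0.247),
    "Male x less than 3 days before appointment": (0.018, 0.180),
}

for label, (coeff, se) in rows.items():
    low, high, p, stars = wald_summary(coeff, se)
    print(f"{label}: {coeff:.3f}{stars} "
          f"(95% CI {low:.3f} to {high:.3f}, p = {p:.3f})")
```

Under these assumptions, the recomputed intervals and stars agree with the tabulated values to within rounding of the published coefficients.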

Relative Importance of Attributes

Overall, respondents considered modality (i.e. how the reminder is received) to be the most important attribute influencing their choice of a reminder system for healthcare appointments. The relative importance (RI) of modality was 54.1%. The next most important attribute was interactivity (RI = 42%), followed by timing (RI = 3.6%), while the content of the reminder was the least important attribute (RI = 0.3%).
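One common way to express relative importance is the range method, where each attribute's share is the spread of its level coefficients divided by the sum of the spreads across all attributes. The sketch below illustrates that idea only. This section does not restate which formula was used, and the part-worth values are hypothetical placeholders, so the output is not expected to reproduce the percentages reported above.

```python
def relative_importance(partworths):
    """Range-based relative importance (%) per attribute.

    `partworths` maps attribute -> {level: coefficient}. The omitted
    reference level of each dummy-coded attribute is treated as 0.
    """
    ranges = {}
    for attribute, levels in partworths.items():
        values = list(levels.values()) + [0.0]  # include the reference level
        ranges[attribute] = max(values) - min(values)
    total = sum(ranges.values())
    return {attribute: 100.0 * r / total for attribute, r in ranges.items()}


# Hypothetical part-worths, for illustration only (not the study's estimates).
example = {
    "modality": {"text message": 2.0, "e-mail": 1.0},
    "timing": {"1 week before": 0.5, "less than 3 days before": 1.0},
    "content": {"clinician name": 0.1, "appointment cost": 0.2},
    "interactivity": {"can cancel or modify": 0.8},
}

for attribute, share in relative_importance(example).items():
    print(f"{attribute}: {share:.1f}%")
```

The shares always sum to 100%, which is why a dominant attribute such as modality leaves little room for attributes with a narrow coefficient range.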

Scenario Analysis

To assess the likelihood of choosing a reminder system, a scenario analysis was undertaken for three hypothetical scenarios. These scenarios are presented in Table 4. All scenarios were shown to have a relatively high likelihood of being chosen when compared to the base scenario. The most preferred scenario (appointment reminder), with a 97% estimated likelihood of being chosen, was an automated text message reminder, received less than 3 days before the appointment, with basic details and the appointment cost, and the ability to cancel or modify the appointment.

Table 4.

Likelihood of choosing a reminder system under different scenarios

| | Base scenario | Scenario 1 | Scenario 2 | Scenario 3 |
|---|---|---|---|---|
| Modality | Postal letter | Automated text message | Automated text message | Phone call with a person |
| Timing | 1 month before the appointment | Less than 3 days before the appointment | 1 week before the appointment | Less than 3 days before the appointment |
| Content | Basic details | Basic details + appointment cost | Basic details + appointment cost | Basic details + appointment cost |
| Interactivity | No ability to cancel or modify the appointment | Ability to cancel or modify the appointment | Ability to cancel or modify the appointment | Ability to cancel or modify the appointment |
| Probability of choosing the specified reminder system | 88.7% | 97.1% | 96.2% | 94.4% |
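A scenario probability of this kind is typically obtained by summing the coefficients of the levels in a scenario and passing the resulting utility through the logit formula. The sketch below shows that calculation using the four coefficients quoted in the abstract. It assumes a binary choice against a ‘neither’ option with utility fixed at 0 and no alternative-specific constant, which is a simplification; the helper names are hypothetical, and the result is not expected to reproduce the values in Table 4.

```python
import math

# Coefficients quoted in the abstract; all other levels are treated as
# reference levels with a coefficient of 0 (an assumption of this sketch).
COEFFS = {
    "automated text message": 2.42,
    "less than 3 days before": 0.99,
    "basic details + appointment cost": 0.13,
    "ability to cancel or modify": 1.36,
}


def reminder_utility(levels, coeffs=COEFFS):
    """Sum the coefficients of the levels that describe one reminder."""
    return sum(coeffs.get(level, 0.0) for level in levels)


def choice_probabilities(utilities):
    """Multinomial logit probabilities for a dict of alternative utilities."""
    top = max(utilities.values())  # subtract the max for numerical stability
    exp_u = {name: math.exp(u - top) for name, u in utilities.items()}
    total = sum(exp_u.values())
    return {name: value / total for name, value in exp_u.items()}


scenario = list(COEFFS)  # all four most preferred levels
probs = choice_probabilities({
    "reminder": reminder_utility(scenario),
    "neither": 0.0,  # assumed opt-out utility
})
print(f"P(reminder) = {probs['reminder']:.3f}")
```

Swapping levels in `scenario` (for example, dropping the text message level to mimic a postal letter) shows how each attribute shifts the predicted uptake under these simplified assumptions.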

Discussion

This study investigated consumer preferences for a healthcare appointment reminder in Australia and found that respondents most preferred an appointment reminder to be delivered via a text message, less than 3 days before the appointment, with basic details and the appointment cost, and where there is the ability to cancel or modify the appointment. A scenario analysis showed that the likelihood of individuals choosing an appointment reminder system with these characteristics would be 97%.

Although this study indicates that the most preferred reminder modality is an automated text message, the findings also showed that other modalities, including an e-mail, a phone call with a person and a robot phone call, were preferred over postal reminders. Given that individuals nationally and internationally have increasing access to digital technology, such as smartphones, this is not unexpected and is consistent with findings from other studies conducted in industrialised countries [8, 38–41]. Within an Australian context, 93% of the population has a home internet connection and 94% of online adults use a mobile phone to connect to the internet [42]. However, despite the high usage of digital technology, it remains important to consider issues surrounding the digital divide, which refers to the gap between healthcare consumers who can access and afford digital technology and use it with confidence and those who cannot [43, 44]. Vulnerable populations, such as those who live in rural or remote areas with unreliable phone coverage, who experience disadvantages because of socioeconomic factors, or who are inexperienced with technology, may be differentially impacted by the use of digitally focused reminder systems [43, 44]. Other studies have also highlighted that vulnerable populations may be more susceptible to missing scheduled healthcare appointments [44, 45]. Additionally, automated systems have their own drawbacks: just as postal letters are susceptible to delivery delays, automated reminders may not be received by patients if there is a disruption to the technology that delivers them [6].

The ability to cancel or modify the appointment was preferred over not having the ability to do so. Providing patients with this flexibility may be useful given the reasons why patients miss appointments, such as having competing commitments or no longer feeling sick and therefore no longer needing the appointment. Different types of reminder interactivity were considered during the expert panel discussion. However, given that there may be variation in how interactivity might be implemented in the real world (i.e. different healthcare providers may implement different forms of interactivity such as providing the option to respond with a ‘yes’ or ‘no’), the research team elected to broadly categorise the interactivity level. Future research may need to consider this caveat.

Other studies have highlighted that patient-centric scheduling systems integrated with appointment reminders may improve healthcare clinics’ operational efficiency, patient experience and access to care [46–48]. For example, enabling patients to respond to appointment reminders may allow for timely cancellations, which an automated rescheduling system can then pick up, notifying other patients on clinic waiting lists so that they can accept and use the freed-up appointment slots [46–48]. Additionally, interactive scheduling systems that incorporate reminders could potentially capture valuable metrics such as appointment attendance, cancellation and rescheduling rates alongside peak appointment times (i.e. popular appointment slots). This information could be used by healthcare providers to assist with determining appropriate measures to better optimise appointment schedules [9, 49].

This study had some limitations. First, we only explored preferences for four attributes related to a healthcare appointment reminder, which were selected based on findings from a literature review and discussions with an expert panel. Other context-specific attributes not presented in this study might be relevant when designing a reminder system for other contexts. Second, this study did not use qualitative data collection methods in the raw data collection and data reduction stages of attribute development. Hence, we cannot determine what such methods would have yielded, including whether there are context-specific characteristics of appointment reminders that might be relevant for different healthcare providers. Third, we used a generic scheduled outpatient appointment scenario that the authors believed to be potentially relevant to a broad range of people. Other scenarios, such as those involving more severe illnesses or different clinic contexts, may yield different reminder preferences than those observed in our study. Finally, this study used a sample that had good representation across several demographic variables including age, sex, state of residence, level of education, marital status and employment status [50]. This aligns with the study aim to elicit preferences amongst the general Australian population. However, we acknowledge that using an online panel alongside having certain recruitment requirements (e.g. able to read English) may have excluded disadvantaged groups who may be more likely to miss appointments.

Further investigation is required to understand the real-world effectiveness of implementing patient-preferred reminder systems on patient attendance. This includes exploring whether there are differential effects of using varied patient-preferred reminder systems on vulnerable population groups. In addition, it remains unclear whether patient-centric scheduling that integrates different methods to cancel or modify appointments would have an impact on patient engagement. Similarly, cost-effectiveness studies on both patient-preferred and patient-centric reminder-integrated scheduling systems remain a priority for further research.

Conclusions

Our findings contribute to the preference elicitation evidence on how healthcare consumers trade off different characteristics of reminder systems, which may be valuable in informing the improvement of current or future systems used by healthcare providers. Future studies may focus on exploring the effectiveness of using patient-preferred reminders alongside other mitigation strategies used by providers.

Funding

Open Access funding enabled and organized by CAUL and its Member Institutions.

Declarations

Funding

This work was supported by funding from Digital Health CRC Limited (“DHCRC”). DHCRC is funded under the Commonwealth’s Cooperative Research Centres. Shayma Mohammed Selim was supported by a National Health and Medical Research Council administered fellowship (#1181138). The funders had no role in the study design or decision to submit for publication.

Conflicts of Interest/Competing Interests

Shayma Mohammed Selim, Sameera Senanayake, Steven M. McPhail, Hannah E. Carter, Sundresan Naicker and Sanjeewa Kularatna have no conflicts of interest that are directly relevant to the content of this article.

Ethics Approval

This study was approved by the Queensland University of Technology’s Human Research Ethics Committee (approval number 6450).

Consent to Participate

All survey respondents were informed that their participation was voluntary and were asked to consent to participate prior to beginning the online survey.

Consent for Publication

Not applicable.

Availability of Data and Material

The datasets generated and analysed during the current study are available upon reasonable request. Other data relevant to the study has been included in the article or uploaded as supplemental material.

Code Availability

Not applicable.

Authors’ Contributions

SMS, SK, SS, SMM, HC, and SN conceived the study. All authors carried out or were involved in the data collection process. SS and SK supervised the data analysis process and SMS wrote the first draft of the manuscript. All authors prepared the final draft for submission. All authors contributed to the interpretation of results, manuscript preparation and revisions. All authors read and approved the final manuscript.

References

- 1. Kheirkhah P, Feng Q, Travis LM, Tavakoli-Tabasi S, Sharafkhaneh A. Prevalence, predictors and economic consequences of no-shows. BMC Health Serv Res. 2015;16:13. 10.1186/s12913-015-1243-z

- 2. Nancarrow S, Bradbury J, Avila C. Factors associated with non-attendance in a general practice super clinic population in regional Australia: a retrospective cohort study. Australas Med J. 2014;7:323. 10.4066/AMJ.2014.2098

- 3. Bech M. The economics of non-attendance and the expected effect of charging a fine on non-attendees. Health Policy. 2005;74:181–91. 10.1016/j.healthpol.2005.01.001

- 4. Marbouh D, Khaleel I, Al Shanqiti K, Al Tamimi M, Simsekler MCE, Ellahham S, et al. Evaluating the impact of patient no-shows on service quality. Risk Manag Healthc Policy. 2020;13:509–17. 10.2147/RMHP.S232114

- 5. Schwalbe D, Sodemann M, Iachina M, Nørgård BM, Chodkiewicz NH, Ammentorp J, et al. Causes of patient nonattendance at medical appointments: protocol for a mixed methods study. JMIR Res Protoc. 2023;12:e46227. 10.2196/46227

- 6. Mohammed-Selim S, Kularatna S, Carter H, Bohorquez NG, McPhail SM. Digital health solutions for reducing the impact of non-attendance: a scoping review. Health Policy Technol. 2023;2023:100759. 10.1016/j.hlpt.2023.100759

- 7. Rubin G, Bate A, George A, Shackley P, Hall N. Preferences for access to the GP: a discrete choice experiment. Br J Gen Pract. 2006;56:743–8.

- 8. Crutchfield TM, Kistler CE. Getting patients in the door: medical appointment reminder preferences. Patient Prefer Adherence. 2017;2017:141–50. 10.2147/PPA.S117396

- 9. Liu N, Finkelstein SR, Kruk ME, Rosenthal D. When waiting to see a doctor is less irritating: understanding patient preferences and choice behavior in appointment scheduling. Manag Sci. 2018;64:1975–96. 10.1287/mnsc.2016.2704

- 10. Ryan M, Bate A, Eastmond CJ, Ludbrook A. Use of discrete choice experiments to elicit preferences. BMJ Qual Saf. 2001;10:i55-60. 10.1136/qhc.0100055

- 11. Lancsar E, Louviere J. Conducting discrete choice experiments to inform healthcare decision making: a user’s guide. Pharmacoeconomics. 2008;26:661–77. 10.2165/00019053-200826080-00004

- 12. Clark MD, Determann D, Petrou S, Moro D, de Bekker-Grob EW. Discrete choice experiments in health economics: a review of the literature. Pharmacoeconomics. 2014;32:883–902. 10.1007/s40273-014-0170-x

- 13. Mandeville KL, Lagarde M, Hanson K. The use of discrete choice experiments to inform health workforce policy: a systematic review. BMC Health Serv Res. 2014;14:1–14. 10.1186/1472-6963-14-367

- 14. Marshall D, Bridges JF, Hauber B, Cameron R, Donnalley L, Fyie K, Reed Johnson F. Conjoint analysis applications in health: how are studies being designed and reported? An update on current practice in the published literature between 2005 and 2008. Patient. 2010;3:249–56. 10.2165/11539650-000000000-00000

- 15. Jaeger SR, Rose JM. Stated choice experimentation, contextual influences and food choice: a case study. Food Qual Prefer. 2008;19:539–64. 10.1016/j.foodqual.2008.02.005

- 16. Bridges JF, Hauber AB, Marshall D, Lloyd A, Prosser LA, Regier DA, Johnson FR, Mauskopf J. Conjoint analysis applications in health: a checklist. A report of the ISPOR Good Research Practices for Conjoint Analysis Task Force. Value Health. 2011;14:403–13. 10.1016/j.jval.2010.11.013

- 17. Marshall DA, Veldwijk J, Janssen EM, Reed SD. Stated-preference survey design and testing in health applications. Patient. 2024. 10.1007/s40271-023-00671-6

- 18. Mühlbacher AC, de Bekker-Grob EW, Rivero-Arias O, Levitan B, Vass C. How to present a decision object in health preference research: attributes and levels, the decision model, and the descriptive framework. Patient. 2024. 10.1007/s40271-024-00673-y

- 19. Allen MJ, Doran R, Brain D, Powell EE, O’Beirne J, Valery PC, Barnett A, Hettiarachchi R, Hickman IJ, Kularatna S. A discrete choice experiment to elicit preferences for a liver screening programme in Queensland, Australia: a mixed methods study to select attributes and levels. BMC Health Serv Res. 2023;23:950. 10.1186/s12913-023-09934-2

- 20. Kularatna S, Allen M, Hettiarachchi RM, Crawford-Williams F, Senanayake S, Brain D, Hart NH, Koczwara B, Ee C, Chan RJ. Cancer survivor preferences for models of breast cancer follow-up care: selecting attributes for inclusion in a discrete choice experiment. Patient. 2023;16(4):371–83. 10.1007/s40271-023-00631-0

- 21. Brain D, Jadambaa A, Kularatna S. Methodology to derive preference for health screening programmes using discrete choice experiments: a scoping review. BMC Health Serv Res. 2022;22:1079. 10.1186/s12913-022-08464-7

- 22. Hensher DA, Rose JM, Greene WH. Applied choice analysis: a primer. Cambridge: Cambridge University Press; 2005.

- 23. Scheufele G, Bennett J. Response strategies and learning in discrete choice experiments. Environ Resour Econ. 2012;52:435–53. 10.1007/s10640-011-9537-z

- 24. Determann D, Lambooij MS, Steyerberg EW, de Bekker-Grob EW, De Wit GA. Impact of survey administration mode on the results of a health-related discrete choice experiment: online and paper comparison. Value Health. 2017;20:953–60. 10.1016/j.jval.2017.02.007

- 25. Norman R, Mulhern B, Lancsar E, Lorgelly P, Ratcliffe J, Street D, Viney R. The use of a discrete choice experiment including both duration and dead for the development of an EQ-5D-5L value set for Australia. Pharmacoeconomics. 2023;41:427–38. 10.1007/s40273-023-01243-0

- 26. ChoiceMetrics. Ngene 1.2 user manual & reference guide. 2018. https://choice-metrics.com/NgeneManual120.pdf.

- 27. Rose JM, Bliemer MC. Constructing efficient stated choice experimental designs. Transp Rev. 2009;29:587–617. 10.1080/01441640902827623

- 28. Szinay D, Cameron R, Naughton F, Whitty JA, Brown J, Jones A. Understanding uptake of digital health products: methodology tutorial for a discrete choice experiment using the Bayesian efficient design. J Med Internet Res. 2021;23:e32365. 10.2196/32365

- 29. Ryan M, Gerard K. Using discrete choice experiments to value health care programmes: current practice and future research reflections. Appl Health Econ Health Policy. 2003;2:55–64.

- 30. Wong SF, Norman R, Dunning TL, Ashley DM, Lorgelly PK. A protocol for a discrete choice experiment: understanding preferences of patients with cancer towards their cancer care across metropolitan and rural regions in Australia. BMJ Open. 2014;4:e006661. 10.1136/bmjopen-2014-006661

- 31. Campoamor NB, Guerrini CJ, Brooks WB, Bridges JF, Crossnohere NL. Pretesting discrete-choice experiments: a guide for researchers. Patient. 2024;17:109–20. 10.1007/s40271-024-00672-z

- 32. Howard K, Salkeld GP, Patel MI, Mann GJ, Pignone MP. Men’s preferences and trade-offs for prostate cancer screening: a discrete choice experiment. Health Expect. 2015;18:3123–35. 10.1111/hex.12301

- 33. Mansfield C, Ekwueme DU, Tangka FK, Brown DS, Smith JL, Guy GP, Li C, Hauber B. Colorectal cancer screening: preferences, past behavior, and future intentions. Patient. 2018;11:599–611. 10.1007/s40271-018-0308-6

- 34. Sarikhani Y, Ostovar T, Rossi-Fedele G, Edirippulige S, Bastani P. A protocol for developing a discrete choice experiment to elicit preferences of general practitioners for the choice of specialty. Value Health Reg Issues. 2021;25:80–9. 10.1016/j.vhri.2020.12.001

- 35. Hauber AB, González JM, Groothuis-Oudshoorn CG, Prior T, Marshall DA, Cunningham C, IJzerman MJ, Bridges JF. Statistical methods for the analysis of discrete choice experiments: a report of the ISPOR Conjoint Analysis Good Research Practices Task Force. Value Health. 2016;19:300–15. 10.1016/j.jval.2016.04.004

- 36. McFadden D, Train K. Mixed MNL models for discrete response. J Appl Econ. 2000;15:447–70.

- 37. Greene WH. NLOGIT student reference guide. 2012 (last checked 11/2014).

- 38. Australian Institute of Health and Welfare. Digital health. 2022. https://www.aihw.gov.au/reports/australias-health/digital-health.

- 39. Australian Digital Health Agency. Australia’s National Digital Health Strategy: safe, seamless and secure: evolving health and care to meet the needs of modern Australia. 2018. https://www.digitalhealth.gov.au/national-digital-health-strategy.

- 40. OECD. OECD science, technology and industry scoreboard 2017: the digital transformation. Paris: Organisation for Economic Co-operation and Development. 2017. 10.1787/9789264268821-en.

- 41. Finkelstein SR, Liu N, Jani B, Rosenthal D, Poghosyan L. Appointment reminder systems and patient preferences: patient technology usage and familiarity with other service providers as predictive variables. Health Inf J. 2013;19:79–90. 10.1177/1460458212458429

- 42. Australian Communications and Media Authority. How we use the internet: executive summary and key findings. Commun Media Aust. 2022;2022:56.

- 43. Good Things Foundation Australia. Digital Nation Australia 2021. 2021. https://www.goodthingsfoundation.org.au/news/digital-nation-australia-2021/.

- 44. Saeed SA, Masters RM. Disparities in health care and the digital divide. Curr Psychiatry Rep. 2021;23:61. 10.1007/s11920-021-01274-4

- 45. Ellis DA, McQueenie R, McConnachie A, Wilson P, Williamson AE. Demographic and practice factors predicting repeated non-attendance in primary care: a national retrospective cohort analysis. Lancet Public Health. 2017;2:e551–9. 10.1016/S2468-2667(17)30217-7

- 46. Boone CE, Celhay P, Gertler P, Gracner T, Rodriguez J. How scheduling systems with automated appointment reminders improve health clinic efficiency. J Health Econ. 2022;82:102598. 10.1016/j.jhealeco.2022.102598

- 47. Horvath M, Levy J, L'Engle P, Carlson B, Ahmad A, Ferranti J. Impact of health portal enrollment with email reminders on adherence to clinic appointments: a pilot study. J Med Internet Res. 2011;13:e41. 10.2196/jmir.1702

- 48. Chung S, Martinez MC, Frosch DL, Jones VG, Chan AS. Patient-centric scheduling with the implementation of health information technology to improve the patient experience and access to care: retrospective case-control analysis. J Med Internet Res. 2020;22:e16451. 10.2196/16451

- 49. Agrawal D, Pang G, Kumara S. Preference based scheduling in a healthcare provider network. Eur J Oper Res. 2023;307:1318–35. 10.1016/j.ejor.2022.09.027

- 50. Australian Bureau of Statistics. Australia. 2021 Census All persons QuickStats. 2021. https://www.abs.gov.au/census/find-census-data/quickstats/2021/AUS.
