Abstract

Background:

Integrating the assessment of patient-reported symptoms and concerns into the electronic health record (EHR) and clinical workflows can enhance oncology practice.

Methods:

Adult oncology outpatients (n = 6825) received 38,422 invitations to complete assessments through the EHR patient portal. Patient-Reported Outcomes Measurement Information System computer adaptive tests were administered to assess fatigue, pain interference, physical function, depression, and anxiety. Checklists identified psychosocial, nutritional, and informational needs. In real time, assessment results were populated in the EHR, and clinicians were notified of elevated symptoms and needs.

Results:

In all, 3521 patients (51.6%) completed 8162 assessments; approximately 55% of the responding patients completed 2 or more within 32 months. Fatigue, pain, anxiety, and depression scores were comparable to those of the general population (approximately 5% of assessments triggered clinical alerts across those domains); mean scores indicated a lower level of physical function (with severe scores prompting alerts in nearly 5% of assessments). More than half of assessments triggered an alert based on patient endorsement of supportive care needs, with the majority of those being nutritional (41.82% of assessments). Patient endorsement of supportive care needs was associated with significantly higher anxiety, depression, fatigue, and pain interference scores and lower physical function scores. Patients who triggered clinical alerts tended to be younger and more recently diagnosed, to have greater comorbidities, and to be a racial/ethnic minority. Patients who triggered clinical alerts had more health care service encounters in the ensuing month.

Conclusions:

EHR integration facilitated the assessment and reporting of patient-reported symptoms and needs within routine oncology outpatient care.

Keywords: ehealth, electronic health records, outcomes measurement, patient-reported outcomes, psychosocial care, symptom management

INTRODUCTION

Patients with cancer commonly experience symptoms and psychosocial concerns that are best evaluated through self-report and can otherwise go unrecognized by clinicians.1 Leading organizations have emphasized 1) the integration of patient-reported outcomes (PROs) within cancer care, 2) the utilization of health information technology to facilitate patient-reported data informing oncology practice, and 3) the importance of responding to the psychosocial needs of patients with cancer.2 To help meet Commission on Cancer emotional distress screening standards3 at the Robert H. Lurie Comprehensive Cancer Center (RHLCCC), we implemented an electronic patient-reported outcome (ePRO) screening assessment that is integrated into the electronic health record (EHR).

Including PROs in oncology care can foster a focus on priority symptoms,4 and improved symptom monitoring promotes favorable patient outcomes, including increased survival and better health-related quality of life as well as fewer adverse events.5 However, the majority of existing ePROs in clinical interventions6 use measures that lack clear clinical action thresholds, are too lengthy for repeat administration in busy oncology settings, or are not integrated into EHRs or clinical workflows. Building upon a pilot in gynecological oncology,7 we implemented our ePRO screening assessment within care practices across RHLCCC medical oncology clinics.

Our primary aim was to implement an ePRO screener into standard clinical care. The screener uses Patient-Reported Outcomes Measurement Information System (PROMIS) computer adaptive tests (CATs), which allow for simultaneously brief and precise measurement as well as easily interpretable results. The EHR integration provides a means of overcoming common patient-clinician communication barriers by delivering and triaging screening results so that clinicians can address significant symptoms and concerns in a timely manner. Here we present the primary metrics from this systemwide implementation.

MATERIALS AND METHODS

This project was part of a clinical quality improvement initiative at the RHLCCC. The analyses that we present were part of a performance improvement project and were, therefore, exempt from institutional review board review according to institutional review board guidance.8 We obtained all EHR data via queries through the Northwestern Enterprise Data Warehouse (EDW).

Screening Procedures

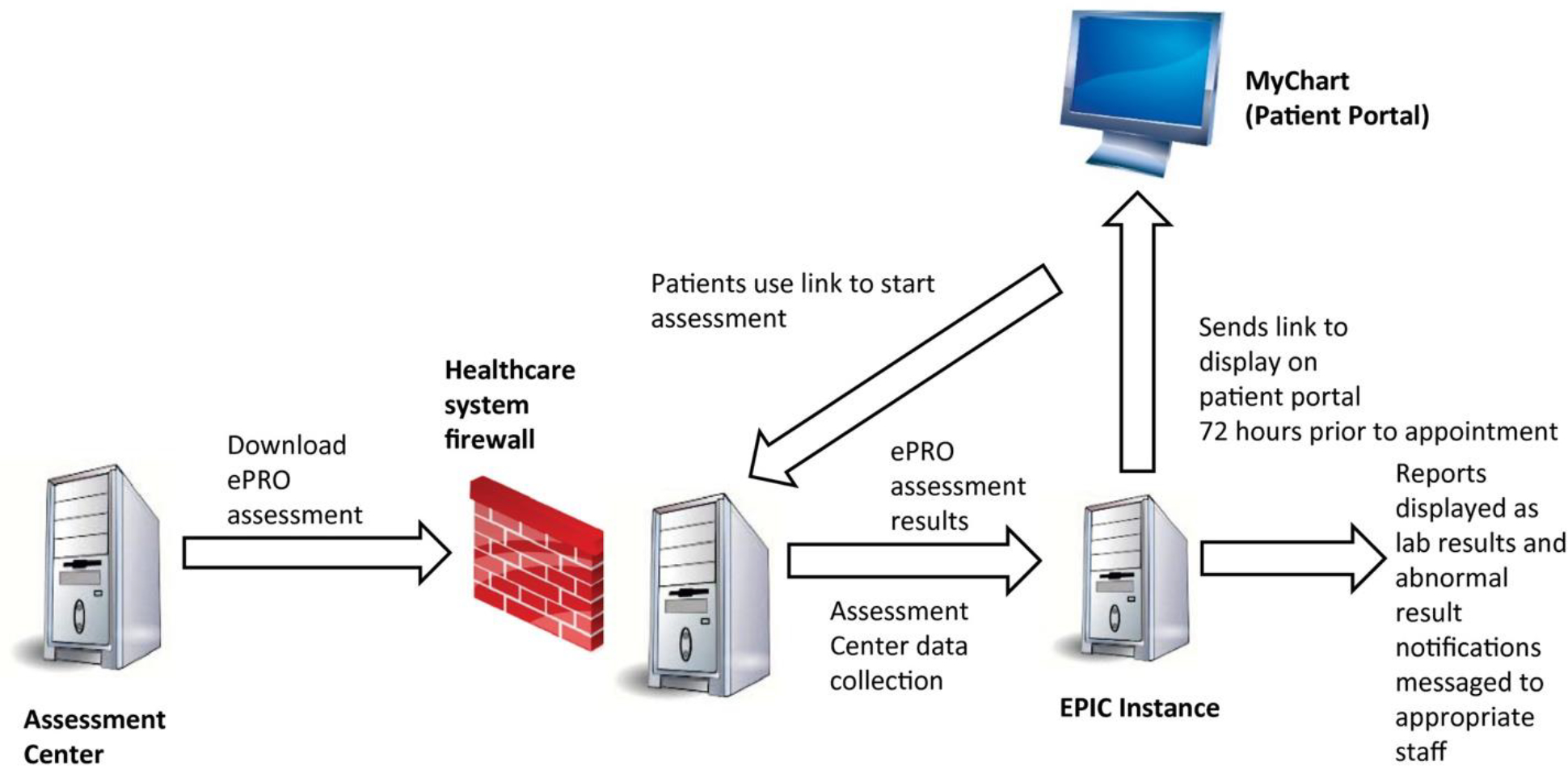

The screener was first created in Assessment Center (AC),9 a web-based platform for the administration of PROMIS CATs. It was then exported and loaded into AC Lite (Fig. 1), an offline version of the platform, and installed within the Northwestern Medicine firewall for EHR (Epic) integration. Using HL7 messages and Epic’s web service platform, Interconnect, we integrated assessment administration, scoring, and clinician notification within Epic.

Figure 1.

Electronic health record integration of the patient-reported symptom and supportive care need screener. ePRO indicates electronic patient-reported outcome.

Eligible patients had scheduled outpatient physician visits at RHLCCC medical oncology clinics and active accounts for Epic MyChart, the patient communication portal, through which they received invitations to complete the screener 72 hours before their appointments. Patients received MyChart messages with hyperlinks to the assessment, which informed them that it might take up to 72 hours for the results to be evaluated by the health care team (eg, for assessments completed on Friday, clinicians typically would respond on the following Monday) and advised them to call 911 or proceed immediately to the nearest emergency department if they were experiencing any emergencies. Patients’ assessment results were immediately captured in designated EHR sections, were available for clinicians to copy into EHR progress notes, and were, in the case of severe symptoms and endorsed supportive care concerns, sent through automatically generated EHR in-basket messages to alert designated clinicians.

Severe PROMIS CAT T scores beyond alert thresholds10 (described in the Measures section) triggered messages to social workers for Anxiety and Depression (with the treating medical oncologist also notified) and to medical oncologists and their nursing pools for Pain Interference, Fatigue, and Physical Function. Endorsed needs on the supportive care checklist triggered automatic alerts to supportive oncology providers: dieticians for nutritional concerns, social workers for psychosocial concerns, and health educators for informational concerns. The designated medical and supportive oncology providers followed standard care guidelines when providing subsequent services to patients by telephone, through MyChart messages, or during clinic visits. This included social workers’ evaluating whether patients should be referred to psychologists or psychiatrists for further services.
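The routing rules in this paragraph can be illustrated with a minimal Python sketch. It is not the Epic or Assessment Center implementation. The thresholds are the study-period values reported with the PROMIS CAT results (T scores ≥75 for Anxiety and Depression, ≥70 for Fatigue and Pain Interference, and ≤30 for Physical Function). The function name, dictionary keys, and recipient labels are hypothetical.

```python
# Hypothetical sketch of the alert routing described in the text.
# Study-period thresholds for domains where a higher T score is worse.
HIGH_IS_WORSE_THRESHOLDS = {
    "anxiety": 75,
    "depression": 75,
    "fatigue": 70,
    "pain_interference": 70,
}
# Physical Function is scored in the opposite direction: lower is worse.
PHYSICAL_FUNCTION_THRESHOLD = 30

# Recipient labels are illustrative names, not EHR identifiers.
SYMPTOM_RECIPIENTS = {
    "anxiety": ["social_work", "medical_oncologist"],
    "depression": ["social_work", "medical_oncologist"],
    "fatigue": ["medical_oncologist", "nursing_pool"],
    "pain_interference": ["medical_oncologist", "nursing_pool"],
    "physical_function": ["medical_oncologist", "nursing_pool"],
}
NEED_RECIPIENTS = {
    "nutritional": "dietitian",
    "psychosocial": "social_work",
    "informational": "health_educator",
}


def route_alerts(t_scores, endorsed_needs):
    """Return (trigger, recipient) pairs for one completed assessment."""
    alerts = []
    for domain, score in t_scores.items():
        if domain == "physical_function":
            severe = score <= PHYSICAL_FUNCTION_THRESHOLD
        else:
            severe = score >= HIGH_IS_WORSE_THRESHOLDS[domain]
        if severe:
            for recipient in SYMPTOM_RECIPIENTS[domain]:
                alerts.append((domain, recipient))
    for need in endorsed_needs:
        alerts.append((need, NEED_RECIPIENTS[need]))
    return alerts
```

For example, an assessment with a severe Anxiety score and an endorsed nutritional concern would produce messages for a social worker, the treating oncologist, and a dietitian, whereas an assessment with no severe scores and no endorsed needs would produce none.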

Measures

Electronic symptom and supportive care need screener

The assessment included PROMIS Anxiety, Depression, Pain Interference, Fatigue, and Physical Function CATs11 along with single-item checklists to assess psychosocial and nutritional concerns and needs.7 PROMIS CATs use algorithms to administer PRO items dynamically selected (on the basis of a patient’s symptom severity as indicated in previous responses) from item banks calibrated with item response theory–driven analyses. These CATs provide precise, reliable, and valid scores typically with 4 to 12 items.12 The CATs are computer-scored and benchmarked on the basis of normative data from patients with cancer and the general population.13 PROMIS measures use T scores, for which 50 is the mean and 10 is the standard deviation (SD) of the reference population. Higher PROMIS scores indicate more of the concept being measured and can be considered either a favorable or unfavorable outcome according to the domain being measured (eg, higher Physical Function vs higher Depression, respectively).
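The T score metric can be made concrete with a short Python sketch. It assumes that the underlying item response theory score (often written as theta) is already standardized to the reference population (mean, 0; SD, 1). The function names are illustrative and are not part of any PROMIS software.

```python
def theta_to_t_score(theta):
    """Convert a standardized latent score (mean 0, SD 1) to the T metric."""
    return 50.0 + 10.0 * theta


def sd_units_from_reference_mean(t_score):
    """Express a T score as SD units above (+) or below (-) the reference mean."""
    return (t_score - 50.0) / 10.0
```

Under this metric, a T score of 70 lies 2 SDs above the reference mean and a T score of 30 lies 2 SDs below it.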

The screener also included items assessing psychosocial and nutritional concerns for triage to supportive oncology services (Table 1). These items were informed by the National Comprehensive Cancer Network Distress Thermometer Problem Checklist14; the Patient-Generated Subjective Global Assessment15; and input from RHLCCC psychologists, social workers, dieticians, and health educators. The total assessment length for the PROMIS CATs and supportive care concern checklist was approximately 40 items (depending on the number of items administered in the CATs), and the assessment required less than 10 minutes on average to complete.

TABLE 1.

Supportive Care Needs Assessment Checklist Responses

| Item | No. | % |
|---|---|---|
| I could use help in the following areas: | | |
| None | 7098 | 86.26 |
| Financial resources | 645 | 7.84 |
| Insurance questions | 408 | 4.96 |
| Help at home | 309 | 3.76 |
| Transportation resources | 308 | 3.74 |
| Housing/lodging questions | 98 | 1.19 |
| I could use support in the following areas: | | |
| None | 6454 | 79.56 |
| Managing stress | 961 | 11.85 |
| Coping with cancer diagnosis | 560 | 6.90 |
| Communicating with my medical team | 498 | 6.14 |
| Getting information on support groups | 449 | 5.54 |
| Communicating about my cancer with others | 289 | 3.56 |
| Communicating with children about cancer | 139 | 1.71 |
| I have had the following problems that have kept me from eating enough during the past 2 weeks: | | |
| No problems eating | 5758 | 71.91 |
| Did not feel like eating | 1214 | 15.16 |
| No appetite | 1214 | 15.16 |
| Fatigue | 972 | 12.14 |
| Things taste funny | 909 | 11.35 |
| Feel full quickly | 886 | 11.07 |
| Nausea | 686 | 8.57 |
| Dry mouth | 501 | 6.26 |
| Constipation | 490 | 6.12 |
| Diarrhea | 490 | 6.12 |
| Smells bother me | 394 | 4.92 |
| Pain | 388 | 4.85 |
| Mouth sores | 260 | 3.25 |
| Other | 235 | 2.93 |
| Problems swallowing | 208 | 2.60 |
| Vomiting | 160 | 2.00 |

Diagnosis codes

Patients received diagnosis codes at health service visits. We generated International Classification of Diseases, Ninth Revision (ICD-9) and International Classification of Diseases, Tenth Revision (ICD-10) codes from EDW queries according to the date of service. We converted ICD-9 codes into ICD-10 values with 2017 General Equivalence Mappings.

Health service usage

EDW data reports captured patient encounters in the 30 days following the initial completion of the ePRO assessment. All encounters were assigned a medical specialty and encounter type (with approximately 3330 unique combinations) as labeled in Epic by the documenting health care providers. We created broader categories more suitable for analysis: inpatient, supportive oncology, outpatient, telephone, and MyChart (excluding supportive oncology). Supportive oncology encounters included social work, nutrition, psychology and psychiatry, fertility preservation, and breast cancer nurse navigator services. We reported supportive oncology encounters separately because 1) the initiative began in that program, 2) supportive oncology clinicians were responsible for responding to a majority of the alerts, and 3) a clinical response to emotional distress and psychosocial needs is required for the RHLCCC to maintain Commission on Cancer accreditation.

Analyses

We used univariate statistics to describe patient sociodemographic and clinical characteristics and their ePRO screener responses. We used t tests and chi-square tests to examine sociodemographic differences between subgroups, including comparisons between responders and nonresponders as well as between those who completed 1 assessment and those who completed more than 1. We also used t tests to examine differences in health service usage and the number of supportive care needs endorsed between those above and below PROMIS CAT alert thresholds as well as PROMIS T score differences between those who triggered supportive care need alerts and those who did not.
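A two-group comparison of this kind can be sketched from summary statistics alone. The Python sketch below is illustrative and does not reproduce the analysis software used in the project. It assumes an unequal-variance (Welch) t statistic and a pooled-SD definition of Cohen's d; the text does not state which t test variant or effect size formula was applied, so the effect sizes reported in the tables may follow a different convention.

```python
import math


def welch_t_statistic(mean1, sd1, n1, mean2, sd2, n2):
    """t statistic for two independent groups without assuming equal variances."""
    standard_error = math.sqrt(sd1 ** 2 / n1 + sd2 ** 2 / n2)
    return (mean1 - mean2) / standard_error


def cohens_d_pooled(mean1, sd1, n1, mean2, sd2, n2):
    """Standardized mean difference using the pooled SD (an assumed convention)."""
    pooled_variance = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2)
    return (mean1 - mean2) / math.sqrt(pooled_variance)
```

With two hypothetical groups of 100 that differ by 5 T score points and share an SD of 10, the pooled-SD effect size is 0.5, which is conventionally read as a medium effect.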

RESULTS

Project Metrics

Initial data set

From January 2015 to August 2017, a total of 38,422 MyChart messages with invitations to complete the screening assessment were sent to 6825 patients. Fewer than 100 patients who were invited to complete the assessment asked not to receive future invitations. After feedback from participating clinicians, beginning in February 2016, we altered the screening protocol so that patients received no more than 1 invitation to complete the assessment every 30 days (even if they had numerous scheduled appointments in that time). Across the study period, each patient received an average of 5.6 MyChart messages inviting them to complete the screener. More than half of the patients who were messaged (n = 3526) attempted at least 1 assessment. The average time that elapsed between the MyChart invitation to the screener and the patients’ accessing the assessment was 1.5 days (once the 30-day limit rule was implemented). We removed from the analysis the assessments of 39 patients who completed 2 assessments on the same day because the EHR data structure made it difficult to differentiate among those data points. Five of those 39 patients completed no other assessments and were consequently removed from the data set entirely. All subsequent results reference that final data set. Notably, we conducted sensitivity analyses that excluded data collected before February 2016, when we began limiting invitations to 1 per 30 days. Doing so significantly affected only 2 of the many results. Given that this was a real-world implementation project, we opted to use data from the full time frame (noting the 2 results that would be different with only post–February 2016 data).
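The invitation timing rules described in this project (an invitation 72 hours before a scheduled appointment and, from February 2016, no more than 1 invitation every 30 days) can be expressed as a small Python sketch. This is a hypothetical illustration rather than the scheduling logic configured in Epic; the function name is invented, and it assumes that the 30-day limit is measured from the time of the previous invitation.

```python
from datetime import datetime, timedelta

INVITE_LEAD_TIME = timedelta(hours=72)       # invitation precedes the visit
MIN_GAP_BETWEEN_INVITES = timedelta(days=30)  # limit introduced in February 2016


def next_invitation_time(appointment, last_invited=None):
    """Return when to send the invitation, or None if the 30-day rule suppresses it."""
    send_at = appointment - INVITE_LEAD_TIME
    if last_invited is not None and send_at - last_invited < MIN_GAP_BETWEEN_INVITES:
        return None
    return send_at
```

Under these assumptions, a patient with several appointments in the same month would receive an invitation only for the first of them.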

Final data set

From January 2015 to August 2017, a total of 8636 screening assessments were initiated, and 8162 were completed. We defined an assessment as complete if all CAT scores and supportive care needs checklist responses were captured in the EHR. Of those patients who had any assessment data (n = 3521), approximately 45% (n = 1596) had data at 1 time point only. Data were available for at least 2 time points for approximately 55% of the patients (n = 1925) and for 3 or more time points for approximately 30% of the patients (n = 1061). The mean number of assessments per patient was 2.45 (SD, 2.47; minimum, 1; maximum, 37; median, 2). The median time between assessments was 98 days (mean, 157.9 days; SD, 156.3 days) from the first assessment to the second assessment and 92 days (mean, 141.1 days; SD, 132.5 days) from the second to the third.

Sociodemographic and Clinical Characteristics

Differences between responders and nonresponders

We examined patient characteristics to determine whether there were differences between those who chose to participate in the screening assessments (responders) and those who did not (nonresponders). Responder and nonresponder groups were not statistically different in age or sex. Compared with nonresponders, responders had a slightly but significantly more recent cancer diagnosis (mean, 2.6 years [SD, 3.7 years] vs 2.9 years [SD, 3.7 years]; P < .0001), were more likely to be non-Hispanic (88.98% vs 85.54%; P < .0001) and white (76.14% vs 65.60%; P < .0001), were less likely to be black (6.99% vs 12.34%; P < .0001), and were more likely to have hematologic malignancies (30.02% vs 24.31%; P < .0001).

Responder characteristics

Table 4 presents sociodemographic and clinical variables of patients who responded to the screening assessment. At the time of the initial invitation to complete the assessment, responders had a mean age of 57.15 years (median, 59 years) and were 2.59 years past the initial cancer diagnosis on average. The sample was predominantly female, non-Hispanic, and white. The most common cancer diagnoses at the initial ePRO assessment were hematologic malignancies, breast cancer, and gynecologic malignancies. Patients’ body mass index (BMI) was captured at their last assessment; the mean was 27.82 kg/m2 (median, 26.67 kg/m2), which was within the overweight range. The mean Charlson Comorbidity Index, as determined by active diagnoses listed in the EHR, was 5.41 (median, 6).

TABLE 4.

Sociodemographic and Clinical Characteristics of Patients Who Responded to the Screening Assessment (n = 3521)

| Characteristic | Mean | SD |
|---|---|---|
| Age, y | 57.15 | 13.39 |
| Time since diagnosis, y | 2.59 | 3.67 |
| BMI, kg/m2 | 27.82 | 6.44 |
| Charlson Comorbidity Index | 5.41 | 2.76 |
| Characteristic | No. | % |
| Sex: female | 2398 | 68.11 |
| Ethnicity | | |
| Hispanic | 143 | 4.06 |
| Not Hispanic | 3133 | 88.98 |
| Unable to answer or declined | 245 | 6.96 |
| Race | | |
| Asian | 124 | 3.52 |
| Black or African American | 246 | 6.99 |
| White | 2681 | 76.14 |
| Other | 217 | 6.16 |
| Missing | 253 | 7.19 |
| Cancer type | | |
| Hematologic malignancies (lymphoma and leukemia) | 1057 | 30.02 |
| Breast | 787 | 22.35 |
| Gynecologic malignancies | 545 | 15.48 |
| Gastrointestinal malignancies | 289 | 8.21 |
| Sarcoma (soft tissue and bone) | 65 | 1.85 |
| Lung and other thoracic malignancies | 142 | 4.03 |
| Other or unspecified malignancies | 136 | 3.86 |
| Prostate | 58 | 1.65 |
| Skin | 68 | 1.93 |
| Other genitourinary | 61 | 1.73 |
| Central nervous system | 54 | 1.53 |
| Head and neck | 32 | 0.91 |
| Thyroid and other endocrine glands | 13 | 0.37 |
| Missing | 214 | 6.08 |

Abbreviations: BMI, body mass index; SD, standard deviation.

Differences between patients responding to the assessment once or more than once

We found no significant differences in the time since diagnosis, BMI, Charlson comorbidity score, or sex when we compared patients who completed 1 assessment with those who completed more than 1. Patients who completed more than 1 assessment were on average older (58.37 vs 55.67 years; P < .0001), were less likely to be Hispanic (3.12% vs 5.20%; P = .002), were more likely to be white (79.79% vs 71.74%; P < .0001), were more likely to have hematologic malignancies (32.78% vs 26.69%), and were less likely to have gastrointestinal malignancies (7.27% vs 9.34%) or to be missing diagnosis data (3.74% vs 8.90%).

Differences between patients who did and did not trigger alerts

Patients who triggered clinical alerts at their first assessment, compared with those who did not, tended to be younger (mean, 56.62 years [SD, 13.57 years] vs 58.20 years [SD, 12.96 years]; P = .001); were diagnosed more recently (mean, 2.22 years [SD, 3.49 years] vs 3.35 years [SD, 3.90 years]; P < .0001); had a higher BMI (28.06 vs 27.35 kg/m2; P = .001); had higher Charlson scores (5.58 vs 5.06; P < .0001); and were more likely to be Hispanic (4.71% vs 2.78%; P = .02), black (8.82% vs 3.37%; P < .0001), or other race (7.28% vs 3.96%; P < .0001). Patients triggering alerts were less likely to have gynecological malignancies (13.75% vs 18.89%) or to be missing a diagnosis (5.18% vs 7.84%) but were more likely to have gastrointestinal malignancies (9.46% vs 5.73%; P < .0001). There was no significant difference in sex.

Screening Assessment Scores

PROMIS CATs

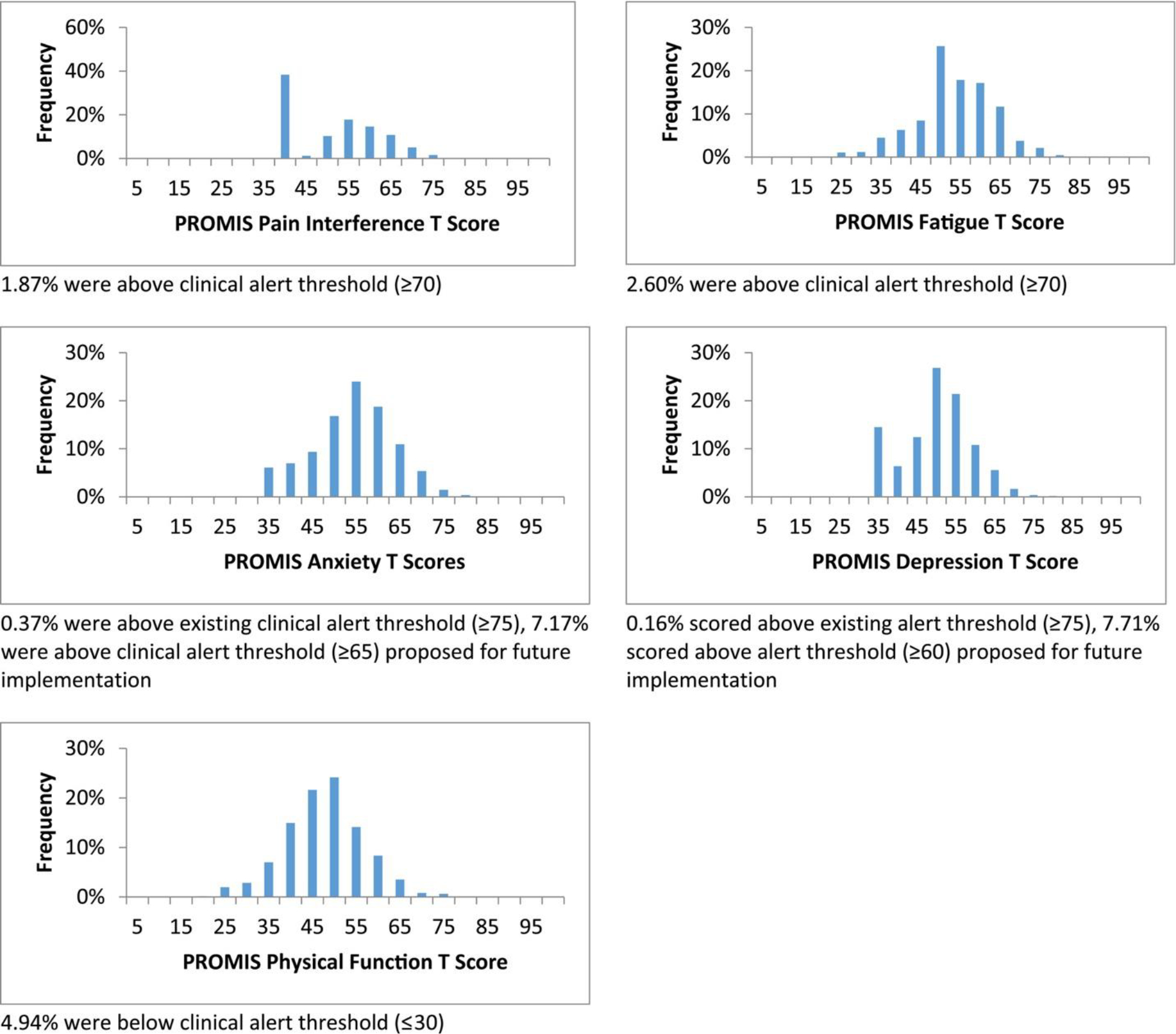

Figure 2 presents PROMIS CAT T score distributions. Average PROMIS T scores were within 2 points of general population means (Anxiety, 51.65 [9.37]; Depression, 48.08 [8.46]; Fatigue, 50.90 [9.76]; and Pain Interference, 49.29 [9.98]) with the exception of Physical Function (45.15 [8.97]). The average Physical Function score of patients responding to the screener indicated that their physical function was lower than the general population average. Examining only those scores from each responding patient’s first ePRO screening assessment yielded similar average CAT T scores (Anxiety, 52.81 [9.07]; Depression, 48.69 [8.28]; Fatigue, 51.09 [9.72]; Pain Interference, 49.61 [9.96]; and Physical Function, 45.13 [9.29]).

Figure 2.

PROMIS computer adaptive test T score distributions. PROMIS indicates Patient-Reported Outcomes Measurement Information System.

Patient PROMIS Physical Function CAT scores below the threshold (≤30) triggered the most clinical alerts (4.94% of all assessments). Fatigue and Pain Interference CAT scores above the set threshold (≥70) triggered alerts for 2.60% and 1.87% of assessments, respectively. PROMIS Anxiety and Depression CAT scores above the thresholds established during the study period (≥75) triggered alerts for less than 1% of assessments (0.37% and 0.16%, respectively). If we had used the Anxiety and Depression CAT T score thresholds (≥65 and ≥60, respectively) proposed for the next phase of the ePRO screening implementation, 7.17% and 7.71% of assessments in this study, respectively, would have triggered clinical alerts.

Supportive care needs assessment checklist

Table 1 presents patients’ responses to the supportive care needs checklist. Of the patient assessments that contained needs assessment data (n = 8322 with at least 1 question answered), more than half (57.21%; n = 4761/8322) triggered an alert based on patient responses to the needs assessment checklist items. Nutrition needs were the most frequently endorsed, with 41.82% of assessments (n = 3460/8274) resulting in that type of alert. Social work needs were identified in 31.73% of assessments (n = 2639/8317), and Health Learning Center needs were identified in 20.30% (n = 1660/8179; this was based on the response to the question “Would you like to be contacted by someone from the Health Learning Center for one-on-one assistance with educational materials?”). Among those who had available BMI information and complete nutritional needs assessment data, patients who endorsed nutritional needs on their last assessment had a higher average BMI than those who did not (28.09 vs 27.64 kg/m2; P = .0482; n = 3292; this difference was not significant in the post–February 2016 restricted data set). Patient screening assessments that triggered alerts based on PROMIS T scores included the endorsement of significantly higher numbers of supportive care needs (see Table 5). Similarly, assessments with endorsements of any supportive care needs, which triggered subsequent clinical alerts, were associated with significantly higher PROMIS Anxiety, Depression, Fatigue, and Pain Interference T scores and lower PROMIS Physical Function T scores (Table 6).

TABLE 5.

Number of Supportive Care Needs Endorsed by PROMIS T Score Screening Alerts

No. of Supportive Care Needs Endorsed

| PROMIS CAT | Did Not Trigger Alert: No. | Did Not Trigger Alert: Mean | Did Not Trigger Alert: SD | Triggered Alert: No. | Triggered Alert: Mean | Triggered Alert: SD | P (t Test) | Effect Size (Cohen’s d) |
|---|---|---|---|---|---|---|---|---|
| Anxiety, original threshold | 8287 | 1.62 | 2.54 | 31 | 7.19 | 5.14 | <.0001 | 2.16 |
| Anxiety, relaxed threshold | 7722 | 1.38 | 2.23 | 596 | 5 | 4.01 | <.0001 | 1.4 |
| Depression, original threshold | 8304 | 1.64 | 2.57 | 13 | 4.77 | 3.59 | <.0001 | 1.21 |
| Depression, relaxed threshold | 7678 | 1.41 | 2.28 | 639 | 4.39 | 3.99 | <.0001 | 1.16 |
| Fatigue | 8092 | 1.54 | 2.43 | 213 | 5.59 | 4.43 | <.0001 | 1.57 |
| Pain Interference | 8162 | 1.57 | 2.49 | 155 | 5.28 | 4.24 | <.0001 | 1.44 |
| Physical Function | 7908 | 1.49 | 2.38 | 409 | 4.7 | 4.04 | <.0001 | 1.24 |

Abbreviations: CAT, computer adaptive test; PROMIS, Patient-Reported Outcomes Measurement Information System; SD, standard deviation

TABLE 6.

PROMIS T Scores by Supportive Care Need Alerts

| PROMIS CAT | No Supportive Care Need Alert: No. | No Supportive Care Need Alert: Mean | No Supportive Care Need Alert: SD | Supportive Care Need Alert: No. | Supportive Care Need Alert: Mean | Supportive Care Need Alert: SD | P (t Test) | Effect Size (Cohen’s d) |
|---|---|---|---|---|---|---|---|---|
| Anxiety | 3545 | 47.9 | 8.53 | 4854 | 54.39 | 9 | <.0001 | 0.69 |
| Depression | 3509 | 45.11 | 7.72 | 4852 | 50.23 | 8.32 | <.0001 | 0.61 |
| Fatigue | 3768 | 47.16 | 8.88 | 4855 | 53.81 | 9.42 | <.0001 | 0.68 |
| Pain Interference | 3676 | 46.07 | 8.55 | 4860 | 51.73 | 10.3 | <.0001 | 0.57 |
| Physical Function | 3575 | 48.31 | 7.88 | 4858 | 42.82 | 9.01 | <.0001 | 0.61 |

Abbreviations: CAT, computer adaptive test; PROMIS, Patient-Reported Outcomes Measurement Information System; SD, standard deviation.

Health Service Usage

Patients had an average of 13.11 health service encounters in the 30 days following an ePRO screening assessment (median, 10; SD, 11.10; minimum, 0; maximum, 100). The most frequent type of encounter was an outpatient visit (mean, 8.54; SD, 7.89), which was followed by a MyChart message (mean, 2.54; SD, 3.19), a telephone encounter (mean, 1.94; SD, 2.81), and an inpatient stay (mean, 0.09; SD, 0.34). Table 7 presents health service usage in the month after assessments with PROMIS Fatigue, Pain Interference, and Physical Function CAT scores above and below clinical alert thresholds. Patient ePRO screening assessments that triggered a clinical alert because of severe Fatigue, Pain Interference, and Physical Function scores were followed by significantly more health service encounters of every type (outpatient, inpatient, and telephone encounters and MyChart messages) than those that did not trigger alerts. Table 3 presents health service usage in the month after assessments with PROMIS Anxiety and Depression CAT scores above and below clinical alert thresholds (both the ones in effect during the study and those proposed for future implementation). Patient assessments that triggered alerts based on PROMIS Anxiety CAT scores were followed by significantly more telephone encounters, MyChart messages, and supportive oncology encounters (the last significant in the full data set but not in the post–February 2016 restricted data set) than those that did not trigger alerts. Patient assessments that triggered alerts based on severe Depression CAT scores did not have significantly more (or fewer) encounters than those that did not trigger an alert. This was likely due in part to a lack of statistical power resulting from the small sample of assessments that triggered a Depression CAT alert based on the threshold used during the study period. When we applied the clinical thresholds for Anxiety and Depression CAT scores recommended for future implementation of the ePRO screening assessment, patients with assessments within this study that would have triggered clinical alerts had significantly more health service encounters of every type in the 30 days following the assessment.

TABLE 7.

Health Service Usage by PROMIS Fatigue, Pain Interference, and Physical Function CAT Screening Alerts

| Encounter Type | Group/Statistic | Fatigue | Pain Interference | Physical Function |
|---|---|---|---|---|
| No. of alerts | | 213 | 150 | 404 |
| Outpatient encounters | Alert | 11.23 (9.41) | 12.41 (9.71) | 11.96 (8.44) |
| | No alert | 8.47 (7.83) | 8.51 (7.85) | 8.44 (7.87) |
| | P | <.0001 | <.0001 | <.0001 |
| | d | 0.323 | 0.457 | 0.412 |
| Inpatient encounters | Alert | 0.18 (0.42) | 0.17 (0.39) | 0.23 (0.51) |
| | No alert | 0.08 (0.37) | 0.09 (0.34) | 0.08 (0.33) |
| | P | .0013 | .0126 | <.0001 |
| | d | 1.11 | 0.889 | 1.667 |
| Telephone encounters | Alert | 3.44 (3.65) | 3.81 (4.01) | 4.09 (4.51) |
| | No alert | 1.90 (2.78) | 1.91 (2.78) | 1.84 (2.66) |
| | P | <.0001 | <.0001 | <.0001 |
| | d | 0.794 | 0.979 | 1.160 |
| MyChart messages | Alert | 4.70 (4.22) | 5.12 (5.51) | 4.68 (4.81) |
| | No alert | 2.48 (3.14) | 2.51 (3.12) | 2.46 (3.06) |
| | P | <.0001 | <.0001 | <.0001 |
| | d | 0.874 | 1.028 | 0.874 |

Abbreviations: CAT, computer adaptive test; PROMIS, Patient-Reported Outcomes Measurement Information System.

“Alert” and “no alert” figures are the numbers of health encounters in the month after assessments that triggered or did not trigger clinical alerts, respectively.

TABLE 3.

Health Service Usage by PROMIS Anxiety and Depression CAT Screening Alert Thresholds

| Encounter Type | Group/Statistic | Clinical Alert Thresholds (T Scores ≥ 75): Anxiety | Clinical Alert Thresholds (T Scores ≥ 75): Depression | Newly Proposed Thresholds: Anxiety (T Scores ≥ 65) | Newly Proposed Thresholds: Depression (T Scores ≥ 60) |
|---|---|---|---|---|---|
| No. assessments above clinical thresholds | | 30 | 10 | 587 | 629 |
| Outpatient encounters | Above | 11.90 (15.22) | 11.60 (9.34) | 10.17 (8.82) | 9.75 (8.54) |
| | Below | 8.30 (7.67) | 8.31 (7.72) | 8.16 (7.60) | 8.20 (7.64) |
| | P | .2052 | .1788 | <.0001 | <.0001 |
| | d | 0.436 | 0.399 | 0.244 | 0.188 |
| Inpatient encounters | Above | 0.10 (0.31) | 0.20 (0.42) | 0.16 (0.45) | 0.13 (0.41) |
| | Below | 0.09 (0.34) | 0.09 (0.34) | 0.08 (0.33) | 0.08 (0.34) |
| | P | .8452 | .3012 | <.0001 | .0044 |
| | d | 0.111 | 1.222 | 0.889 | 0.556 |
| Telephone encounters | Above | 4.30 (5.31) | 3.40 (3.34) | 3.35 (3.87) | 3.01 (3.66) |
| | Below | 1.86 (2.73) | 1.87 (2.75) | 1.76 (2.60) | 1.77 (2.63) |
| | P | .0177 | .0778 | <.0001 | <.0001 |
| | d | 1.312 | 0.823 | 0.855 | 0.667 |
| MyChart messages | Above | 4.03 (3.59) | 4.60 (4.38) | 3.53 (4.30) | 3.39 (4.17) |
| | Below | 2.20 (3.04) | 2.21 (3.05) | 2.11 (2.90) | 2.11 (2.91) |
| | P | .001 | .0131 | <.0001 | <.0001 |
| | d | 0.839 | 1.096 | 0.651 | 0.587 |
| Supportive oncology encounters | Above | 3.27 (2.30) | 2.70 (2.79) | 1.70 (1.85) | 1.51 (1.77) |
| | Below | 0.74 (1.21) | 0.75 (1.22) | 0.67 (1.13) | 0.68 (1.15) |
| | P | <.0001 | .0541 | <.0001 | <.0001 |
| | d | 3.466 | 2.671 | 1.411 | 1.137 |

Abbreviations: CAT, computer adaptive test; PROMIS, Patient-Reported Outcomes Measurement Information System.

“Above” and “below” figures are the numbers of health encounters in the month after assessments that were above or below clinical alert thresholds, respectively.

DISCUSSION

We established the feasibility of expanding our screening assessment into standard care workflows within medical oncology clinics at the RHLCCC. More than half of the patients invited to do so attempted the assessment before scheduled medical visits. This response rate was somewhat lower than those observed with other ePRO initiatives.16 Unlike those, however, our ePRO assessment was initiated by patients from home as part of their clinical care rather than being facilitated in the clinic by study staff as part of a research study that provided compensation for participation. With respect to acceptability, more than 94% of the assessments that patients initiated were completed, and more than half of the patients who completed the ePRO screener did so more than once.

Medical oncology outpatients’ pain, fatigue, anxiety, and depression scores were comparable to those of the general population. For all of those domains combined, only 5% of assessments generated clinical alerts based on scores above the established thresholds. On the basis of these findings, we decreased the clinical alert thresholds for depression and anxiety in an effort to provide clinical follow-up for as many symptomatic patients as feasible within institutional resources. On average, patients reported impaired physical functioning in comparison with the general population. Approximately 5% of assessments generated clinical alerts based on scores below the physical functioning threshold. In contrast, more than half of assessments triggered an alert based on patient endorsement of supportive care needs assessment items, with the majority of those being nutritional needs. Patient endorsements of supportive care needs were associated with significantly higher anxiety, depression, fatigue, and pain interference and lower physical function. Patients whose assessments triggered any kind of clinical alert tended to be younger and to have been diagnosed more recently; to have greater comorbidities; and to be Hispanic, African American/black, or other race. Given that patients who responded to the assessment tended to be older and non-Hispanic white, we plan to extend future versions of the screening program to better reach younger and racial/ethnic minority patients who may benefit from participation (eg, translation into Spanish).

We established means of querying and analyzing patients’ EHR-documented health service usage in relation to their ePRO assessments. In general, patients with initial assessments that triggered clinical alerts had more health care service encounters in the ensuing month than those who did not. This suggests that patients with more expressed needs are subsequently using more health care services. As we extend our work in this area, we plan to leverage more complex EHR queries to systematically evaluate patients’ ePRO scores with respect to their outcomes over time.

We note several limitations to this clinical care quality improvement project. Our EHR data extraction was constrained to data permitted under an institutional review board–exempt project (eg, we did not have access to detailed treatment or socioeconomic status variables). Furthermore, access to our screening assessment was limited to patients who had active EHR patient portal accounts (estimated to be between one-third and one-half of patients across participating RHLCCC medical oncology clinics) and to those who read English, the sole language in which MyChart was available at the time (this likely decreased the participation of Spanish-speaking Hispanic patients and other groups). The next step in our expanded implementation will be an attempt to reach patients without MyChart access by including administration of the ePRO screening assessment in the clinic via Epic Hyperspace. Our current sample of patients responding to the assessment was predominantly female, non-Hispanic, and white. This limits the generalizability of our results, as does our implementation in a well-resourced comprehensive cancer center.

This work demonstrates a wide-scale implementation of EHR-integrated PRO assessment and reporting within routine ambulatory cancer care. Our ePRO screening platform includes precise, valid, and robust measurement of common cancer-related symptoms and concerns with immediate triage and clinician notification. It was built with implementation and dissemination in mind and is scalable to other health care settings. We have preliminary evidence that patients whose assessments result in clinical alerts receive more subsequent care. Future work will examine how clinicians use results as well as the impact of expanded implementation on patient and system outcomes, all with the ultimate aim of improving cancer care quality and efficiency.

TABLE 2.

Numbers and Percentages of Assessments in PROMIS CAT T Score–Based Severity Categories

| PROMIS CAT | Normal | Mild | Moderate | Severe | Total |
|---|---|---|---|---|---|
| Fatigue, T score range | <50 | 50–59 | 60–69 | ≥70 | |
| No. | 4057 | 3013 | 1329 | 224 | 8623 |
| % | 47.0 | 34.9 | 15.4 | 2.6 | 100 |
| Pain Interference, T score range | <50 | 50–59 | 60–69 | ≥70 | |
| No. | 4258 | 2764 | 1354 | 160 | 8536 |
| % | 49.9 | 32.4 | 15.9 | 1.9 | 100 |
| Physical Function, T score range | >55 | 55–46 | 45–31 | ≤30 | |
| No. | 1119 | 3226 | 3671 | 417 | 8433 |
| % | 13.3 | 38.3 | 43.5 | 4.9 | 100 |
| Anxiety, T score range | <55 | 55–64 | 65–74 | ≥75 | |
| No. | 5303 | 2494 | 571 | 31 | 8399 |
| % | 63.1 | 29.7 | 6.8 | 0.4 | 100 |
| Depression, T score range | <55 | 55–64 | 65–74 | ≥75 | |
| No. | 6557 | 1623 | 168 | 13 | 8361 |
| % | 78.4 | 19.4 | 2.0 | 0.2 | 100 |

Abbreviations: CAT, computer adaptive test; PROMIS, Patient-Reported Outcomes Measurement Information System.
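
As an illustration only, the T score bands in Table 2 can be written as a small lookup. This sketch is not the Epic build used in the project. The function name `severity`, the domain keys, and the treatment of fractional T scores that fall between the integer bands (each is assigned to the band it has not yet left) are assumptions introduced here.

```python
from bisect import bisect_right

# Lower bounds of the mild, moderate, and severe bands, taken from Table 2.
# Domain keys are hypothetical names chosen for this sketch.
SYMPTOM_CUTS = {
    "fatigue": (50, 60, 70),
    "pain_interference": (50, 60, 70),
    "anxiety": (55, 65, 75),
    "depression": (55, 65, 75),
}
LABELS = ("normal", "mild", "moderate", "severe")


def severity(domain: str, t_score: float) -> str:
    """Return the Table 2 severity category for a PROMIS CAT T score."""
    if domain == "physical_function":
        # Higher scores mean better function, so the bands run in reverse:
        # >55 normal, 55-46 mild, 45-31 moderate, <=30 severe.
        # Assumption: fractional scores between bands stay in the milder band.
        if t_score > 55:
            return "normal"
        if t_score > 45:
            return "mild"
        if t_score > 30:
            return "moderate"
        return "severe"
    # For symptom domains, count how many lower bounds the score has reached.
    cuts = SYMPTOM_CUTS[domain]
    return LABELS[bisect_right(cuts, t_score)]


if __name__ == "__main__":
    for domain, score in [("fatigue", 62), ("anxiety", 62), ("physical_function", 30)]:
        print(domain, score, severity(domain, score))
```

The same T score can fall in different categories across domains (for example, 62 is moderate for fatigue but mild for anxiety), which is why the cut points are stored per domain rather than shared.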

Funding Support

This study was supported by the Robert H. Lurie Comprehensive Cancer Center, the Coleman Foundation, and the Northwestern University Clinical and Translational Sciences Institute (grant UL1TR001422). David Cella reports funding from the National Institutes of Health (grant U54AR057951).

We thank Leonidas Platanias, MD, PhD, Nick Rave, MS, and Rebecca Caires, MBA, for their leadership in facilitating implementation; Ryan Chmiel, MS, and Alpa Patel, BS, for Epic programming; Oana Popescu, MS, for enterprise data warehouse querying and reporting; Megan Slocum, PA-C, for her assistance with health record queries; and Yanina Guevara, BA, Stacey Rogers, Jessica Thomas, MSW, and Allison Fisher, BA, for project coordination.

Footnotes

Conflict of Interest Disclosures

David Cella is a board member and officer of the PROMIS Health Organization, a 501(c)(3) charitable organization. Timothy Pearman belongs to the Pfizer and Genentech speakers’ bureaus. Lynne I. Wagner reports other from Celgene and funding and other from EveryFit, Inc, outside the submitted work. The other authors made no disclosures.

REFERENCES

1. Holland J. NCCN practice guidelines for the management of psychosocial distress. Oncology. 1999;13:113–147.

2. Levit LA, Balogh E, Nass SJ, Ganz P. Delivering High-Quality Cancer Care: Charting a New Course for a System in Crisis. Washington, DC: National Academies Press; 2013.

3. Jacobsen PB, Wagner LI. A new quality standard: the integration of psychosocial care into routine cancer care. J Clin Oncol. 2012;30:1154–1159.

4. Velikova G, Booth L, Smith AB, et al. Measuring quality of life in routine oncology practice improves communication and patient well-being: a randomized controlled trial. J Clin Oncol. 2004;22:714–724.

5. Snyder C, Jensen R, Geller G, Carducci M, Wu A. Relevant content for a patient-reported outcomes questionnaire for use in oncology clinical practice: putting doctors and patients on the same page. Qual Life Res. 2010;19:1045–1055.

6. Abernethy AP, Herndon JE II, Wheeler JL, et al. Feasibility and acceptability to patients of a longitudinal system for evaluating cancer-related symptoms and quality of life: pilot study of an e/tablet data-collection system in academic oncology. J Pain Symptom Manage. 2009;37:1027–1038.

7. Wagner LI, Schink J, Bass M, et al. Bringing PROMIS to practice: brief and precise symptom screening in ambulatory cancer care. Cancer. 2015;121:927–934.

8. US Department of Health and Human Services Office for Human Research Protections. Quality improvement activities FAQs. https://www.hhs.gov/ohrp/regulations-and-policy/guidance/faq/quality-improvement-activities/. Accessed April 27, 2018.

9. Gershon R, Rothrock NE, Hanrahan RT, Jansky LJ, Harniss M, Riley W. The development of a clinical outcomes survey research application: Assessment Center℠. Qual Life Res. 2010;19:677–685.

10. Cella D, Choi S, Garcia S, et al. Setting standards for severity of common symptoms in oncology using the PROMIS item banks and expert judgment. Qual Life Res. 2014;23:2651–2661.

11. Cella D, Riley W, Stone A, et al; PROMIS Cooperative Group. The Patient-Reported Outcomes Measurement Information System (PROMIS) developed and tested its first wave of adult self-reported health outcome item banks: 2005–2008. J Clin Epidemiol. 2010;63:1179–1194.

12. Cella D, Gershon R, Lai JS, Choi S. The future of outcomes measurement: item banking, tailored short-forms, and computerized adaptive assessment. Qual Life Res. 2007;16(suppl 1):133–141.

13. Rothrock N, Hays R, Spritzer K, Yount SE, Riley W, Cella D. Relative to the general US population, chronic diseases are associated with poorer health-related quality of life as measured by the Patient-Reported Outcomes Measurement Information System (PROMIS). J Clin Epidemiol. 2010;63:1195–1204.

14. Holland JC, Andersen B, Breitbart WS, et al. Distress management. J Natl Compr Canc Netw. 2013;11:190–209.

15. Bauer J, Capra S, Ferguson M. Use of the scored Patient-Generated Subjective Global Assessment (PG-SGA) as a nutrition assessment tool in patients with cancer. Eur J Clin Nutr. 2002;56:779–785.

16. Carlson LE, Waller A, Groff SL, Zhong L, Bultz BD. Online screening for distress, the 6th vital sign, in newly diagnosed oncology outpatients: randomised controlled trial of computerised vs personalised triage. Br J Cancer. 2012;107:617–625.