Abstract

Event-based surveillance (EBS) systems have been implemented globally to support early warning surveillance across human, animal, and environmental health in diverse settings, including at the community level, within health facilities, at border points of entry, and through media monitoring of internet-based sources. EBS systems should be evaluated periodically to ensure that they meet the objectives related to the early detection of health threats and to identify areas for improvement in the quality, efficiency, and usefulness of the systems. However, to date, there has been no comprehensive framework to guide the monitoring and evaluation of EBS systems; this absence of standardisation has hindered progress in the field. The Africa Centres for Disease Control and Prevention and US Centers for Disease Control and Prevention have collaborated to develop an EBS monitoring and evaluation indicator framework, adaptable to specific country contexts, that uses measures relating to input, activity, output, outcome, and impact to map the processes and expected results of EBS systems. Through the implementation and continued refinement of these indicators, countries can ensure the early detection of health threats and improve their ability to measure and describe the impacts of EBS systems, thus filling the current evidence gap regarding their effectiveness.

Introduction

Event-based surveillance (EBS) is often framed as a non-traditional approach to early warning surveillance of health threats, complementing notifiable disease reporting and other forms of health surveillance such as sentinel or mortality surveillance. As defined by WHO, EBS is the organised collection, monitoring, assessment and interpretation of mainly unstructured ad hoc information regarding health events or risks that may represent an acute risk to human health.1 Other operational definitions, including the one used by the Africa Centres for Disease Control and Prevention (Africa CDC), have expanded the definition beyond human health to a multisectoral perspective that incorporates animal and environmental health. The practice of EBS was documented in public health literature as early as 2006,2 and implementation guidance on the topic was first published by WHO in 2008,3 driven by the 2005 revisions to the International Health Regulations that charged WHO member states to develop early warning and response systems.4 In 2018, Africa CDC developed a framework to guide the roll-out of EBS in Africa, and this framework was further revised in 2022 to reflect multisectoral collaboration in EBS implementation.5 EBS systems have been implemented by national health authorities to support early warning surveillance across human, animal, and environmental health sectors in diverse settings, including at the community level, within health facilities, and at border points of entry, and are used to monitor media and a wide range of internet-based sources.6,7

To ensure compliance with WHO’s International Health Regulations (2005),4 many countries use the WHO Joint External Evaluation (JEE) tool8 to evaluate progress on global health security initiatives, including surveillance initiatives. The JEE tool recognises EBS as a core component of the surveillance technical area and underscores the importance of assessing EBS performance. Similarly, WHO’s mosaic respiratory surveillance framework,9 released in 2023, includes EBS as a core recommended surveillance approach for the detection and assessment of respiratory pathogens, and the update of WHO’s Early Warning Alert and Response in Emergencies: an operational guide10 includes EBS as a core component for early warning. In addition, the third edition of the Technical Guidelines for Integrated Disease Surveillance and Response in the WHO African Region11 notes that EBS is part of the “backbone” of the Integrated Disease Surveillance and Response (IDSR) strategy. Over 50 WHO member states have been onboarded to use the Epidemic Intelligence from Open Sources platform and, since 2020, the US CDC and Africa CDC have supported the implementation of EBS in communities and health facilities in 33 countries. These developments show that EBS systems have gained a prominent role in public health surveillance, accompanied by increasing investment. However, despite growing recognition of the importance of these systems in achieving disease surveillance objectives, their monitoring and evaluation have not kept pace.

Challenges in EBS monitoring and evaluation

EBS systems should be routinely monitored and periodically evaluated to ensure that they are meeting objectives related to the early detection of health threats and to identify areas for improvement in their quality, efficiency, and usefulness.12 The breadth and variety of EBS systems—and the underlying complexity of data collection across various sources—pose an inherent challenge to the creation of comprehensive monitoring and evaluation guidelines. EBS data collection tools and reporting mechanisms vary widely in their design. Some EBS systems are established in response to acute events, whereas others are implemented as routine systems. EBS systems have also been established across a range of sectors, settings, and modalities.7,13–15

Despite variability in the design of EBS systems, they are typically deployed with the intention of providing timely, sensitive surveillance that can complement case-based or indicator-based surveillance systems.1,6 As such, timeliness and sensitivity are two crucially important attributes that should be monitored and evaluated in an EBS system.1 However, among the few studies describing or evaluating EBS systems, only a small minority assessed timeliness or provided measures of sensitivity.7,16 If an EBS system is not achieving timely, sensitive surveillance—or if the data to assess performance in these areas are unavailable—then the primary objective of early detection is at risk. Signals that are not reported in a timely manner quickly lose usefulness, and if reported signals are not acted upon by health authorities, community focal points and health facility staff will quickly lose the incentive to report. Clearly defined, consistent indicators are crucial to ensure that signals are being detected rapidly and that triage and verification steps are being completed for the majority of reported signals. Furthermore, these indicators are crucial to enable the comparison of EBS systems with other complementary surveillance systems and facilitate the understanding of the relative value of EBS in the larger context of health surveillance.
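As a minimal sketch of how the timeliness and completion measures discussed above might be derived from a signal register, the following Python example computes the median delay from detection to reporting and the proportions of reported signals that were triaged and verified. The record structure, field names, and example data are hypothetical and are not taken from the framework; the indicator definitions in the appendix remain the authoritative source.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import median
from typing import Optional


@dataclass
class Signal:
    """One reported EBS signal. All field names are hypothetical."""
    detected_at: datetime
    reported_at: datetime
    triaged_at: Optional[datetime] = None
    verified_at: Optional[datetime] = None


def proportion(numerator: int, denominator: int) -> Optional[float]:
    """Return numerator/denominator, or None when the denominator is zero."""
    return numerator / denominator if denominator else None


def summarise(signals: list[Signal]) -> dict:
    """Summarise reporting delay and completion of triage and verification."""
    delays_h = [
        (s.reported_at - s.detected_at).total_seconds() / 3600 for s in signals
    ]
    n = len(signals)
    return {
        "signals_reported": n,
        "median_hours_detection_to_report": median(delays_h) if delays_h else None,
        "proportion_triaged": proportion(
            sum(s.triaged_at is not None for s in signals), n
        ),
        "proportion_verified": proportion(
            sum(s.verified_at is not None for s in signals), n
        ),
    }


# Illustrative, made-up records (not real surveillance data).
signals = [
    Signal(datetime(2023, 3, 1, 8), datetime(2023, 3, 1, 14),
           datetime(2023, 3, 1, 16), datetime(2023, 3, 2, 9)),
    Signal(datetime(2023, 3, 2, 9), datetime(2023, 3, 3, 9),
           datetime(2023, 3, 3, 12)),
    Signal(datetime(2023, 3, 4, 7), datetime(2023, 3, 4, 9)),
]
summary = summarise(signals)
```

Returning `None` for an empty denominator, rather than zero, keeps a period with no reported signals distinguishable from a period in which no signal was triaged or verified.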

Despite nearly two decades of implementation and evolution of EBS strategies, there are no widely accepted, comprehensive frameworks to guide the monitoring and evaluation of EBS systems towards the goals of timely, sensitive surveillance. Although surveillance system attributes including timeliness, sensitivity, positive predictive value, representativeness, and usefulness have been highlighted in EBS implementation guidance as important metrics, the establishment of detailed indicator definitions and specific guidance on how to apply them to EBS systems has been left to individual countries and implementing organisations.11,17,18 The absence of standardisation in monitoring and evaluation has limited the evidence base available to support EBS implementation and allowed key strategic questions to remain unanswered. Such questions include how quickly EBS systems can detect and verify health threats, the settings in which community EBS systems are most effective, the optimal distribution of health facilities using EBS reporting, and the pathogens and event types to which EBS systems are most sensitive and for which they are most appropriate. A monitoring and evaluation framework designed to support the generation of relevant data at the country level could help to address these questions and support advocacy for investment in early-warning systems, which have been identified as essential for countering future health threats.19,20
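Two of the attributes named above, sensitivity and positive predictive value, have generic definitions in surveillance evaluation that can be sketched in a few lines of Python. The example below assumes the conventional definitions (events detected as a share of all events that occurred, and confirmed signals as a share of all signals assessed); the function names, argument names, and counts are hypothetical, and how the framework specifies numerators and denominators for EBS is set out in its appendix rather than here.

```python
def sensitivity(events_detected: int, events_missed: int) -> float:
    """Share of all true events that the system detected."""
    total_events = events_detected + events_missed
    if total_events == 0:
        raise ValueError("no true events to evaluate against")
    return events_detected / total_events


def positive_predictive_value(signals_confirmed: int, signals_discarded: int) -> float:
    """Share of assessed signals that were confirmed as true events."""
    total_signals = signals_confirmed + signals_discarded
    if total_signals == 0:
        raise ValueError("no assessed signals to evaluate")
    return signals_confirmed / total_signals


# Illustrative, made-up counts for one evaluation period.
sens = sensitivity(events_detected=18, events_missed=6)
ppv = positive_predictive_value(signals_confirmed=18, signals_discarded=42)
```

In practice, the count of missed events has to come from a reference source outside the EBS system (for example, another surveillance system or a retrospective review), which is one reason why sensitivity is usually assessed in periodic evaluations rather than routine monitoring.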

In response to this persistent gap in the standardisation of monitoring and evaluation for EBS systems, we have collaborated to develop, in consultation with many countries and subject matter experts, an EBS monitoring and evaluation indicator framework that can be adapted to specific country contexts to support surveillance objectives and strengthen the evidence base for EBS.21 This adaptable framework serves as a tool to quantify the performance of EBS systems and provide standardised measurement approaches. The indicators developed for this framework complement other global efforts to measure the timeliness of infectious disease surveillance systems, such as the Resolve to Save Lives (RTSL) 7-1-7 initiative,22 the IDSR strategy of WHO’s regional office for Africa,11 and tools for assessing countries’ capacity to prevent, detect, and respond to pandemic and epidemic threats, such as the States Parties self-assessment annual reporting tool23 and JEE,8 led by WHO.

Development of EBS monitoring and evaluation indicators

In January, 2022, US CDC and Africa CDC staff engaged in EBS programmes identified EBS monitoring and evaluation indicators as a priority area of work that each agency had recently begun to develop independently. Staff from both agencies agreed to collaborate and jointly develop monitoring and evaluation indicators to support EBS implementation in African Union member states and US CDC partner countries in Africa, southeast Asia, the Middle East, and South America. A logical framework of input, activity, output, outcome, and impact measures was developed to map the processes and expected results of EBS systems and used to frame indicators for routine monitoring and periodic evaluations.

The formulation of indicators was supported by an informal review of published and unpublished literature related to EBS evaluations and the examination of national EBS guidelines and protocols from countries in which either agency was supporting EBS implementation. Indicator formulation was further informed by ongoing programmatic work (including the piloting of some EBS indicators) conducted by both Africa CDC and the US CDC across African Union member states and US CDC partner countries.

To further refine and validate the indicator framework, we presented it to participants at a workshop in July, 2022, in Nairobi, Kenya (appendix p 2), as a component of a new monitoring and evaluation module within Africa CDC’s EBS framework. The goal of the workshop was to review and finalise the second edition of Africa CDC’s EBS framework. The workshop included 30 subject matter experts representing seven African Union member states; the US CDC; the European Centre for Disease Prevention and Control; the Food and Agriculture Organization of the UN; the World Organisation for Animal Health; The Global Fund to Fight AIDS, Tuberculosis and Malaria; RTSL; WHO; and the East, Central and Southern Africa Health Community. The indicator framework drafted by the US CDC and Africa CDC was further revised through plenary review and discussion, complemented by written feedback from working groups formed among subject matter experts.

Feedback and revisions from the workshop were consolidated and, following the workshop, an additional round of input from subject matter experts from the US CDC and RTSL ensured that there was adequate alignment with the US CDC’s EBS monitoring and evaluation efforts and RTSL’s 7-1-7 initiative. Indicator labels, definitions, numerators and denominators, and suggested disaggregates and data sources were agreed upon and are presented in Africa CDC’s Event-Based Surveillance Framework (second edition),21 released in March, 2023, and can also be found in the appendix (pp 3–15).
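The components agreed for each indicator (label, definition, numerator, denominator, suggested disaggregates, and data sources) lend themselves to a simple structured record. The Python sketch below shows one possible representation, assuming that each indicator sits at one of the five levels of the logical framework; the class names and the example indicator are hypothetical placeholders and are not copied from the framework.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Level(Enum):
    """Levels of the logical framework described in the text."""
    INPUT = "input"
    ACTIVITY = "activity"
    OUTPUT = "output"
    OUTCOME = "outcome"
    IMPACT = "impact"


@dataclass(frozen=True)
class Indicator:
    """Hypothetical container for one indicator's agreed components."""
    label: str
    level: Level
    definition: str
    numerator: str
    denominator: Optional[str]  # None for simple count indicators
    disaggregates: tuple[str, ...] = ()
    data_sources: tuple[str, ...] = ()

    def value(self, numerator_count: int,
              denominator_count: Optional[int] = None) -> Optional[float]:
        """Return a count, a proportion, or None if the denominator is zero."""
        if self.denominator is None:
            return float(numerator_count)
        if denominator_count is None:
            raise ValueError("this indicator needs a denominator count")
        if denominator_count == 0:
            return None
        return numerator_count / denominator_count


# Placeholder indicator for illustration only (not taken from the framework).
example = Indicator(
    label="Signals verified (hypothetical)",
    level=Level.OUTPUT,
    definition="Share of reported signals for which verification was completed",
    numerator="Number of reported signals verified",
    denominator="Number of signals reported",
    disaggregates=("EBS modality", "administrative level"),
    data_sources=("signal register",),
)
result = example.value(45, 60)
```

Keeping the textual numerator and denominator alongside the calculation makes it harder for two teams to compute the same label in different ways, which is the kind of inconsistency that standardised definitions are meant to prevent.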

Indicators for the monitoring and evaluation of EBS

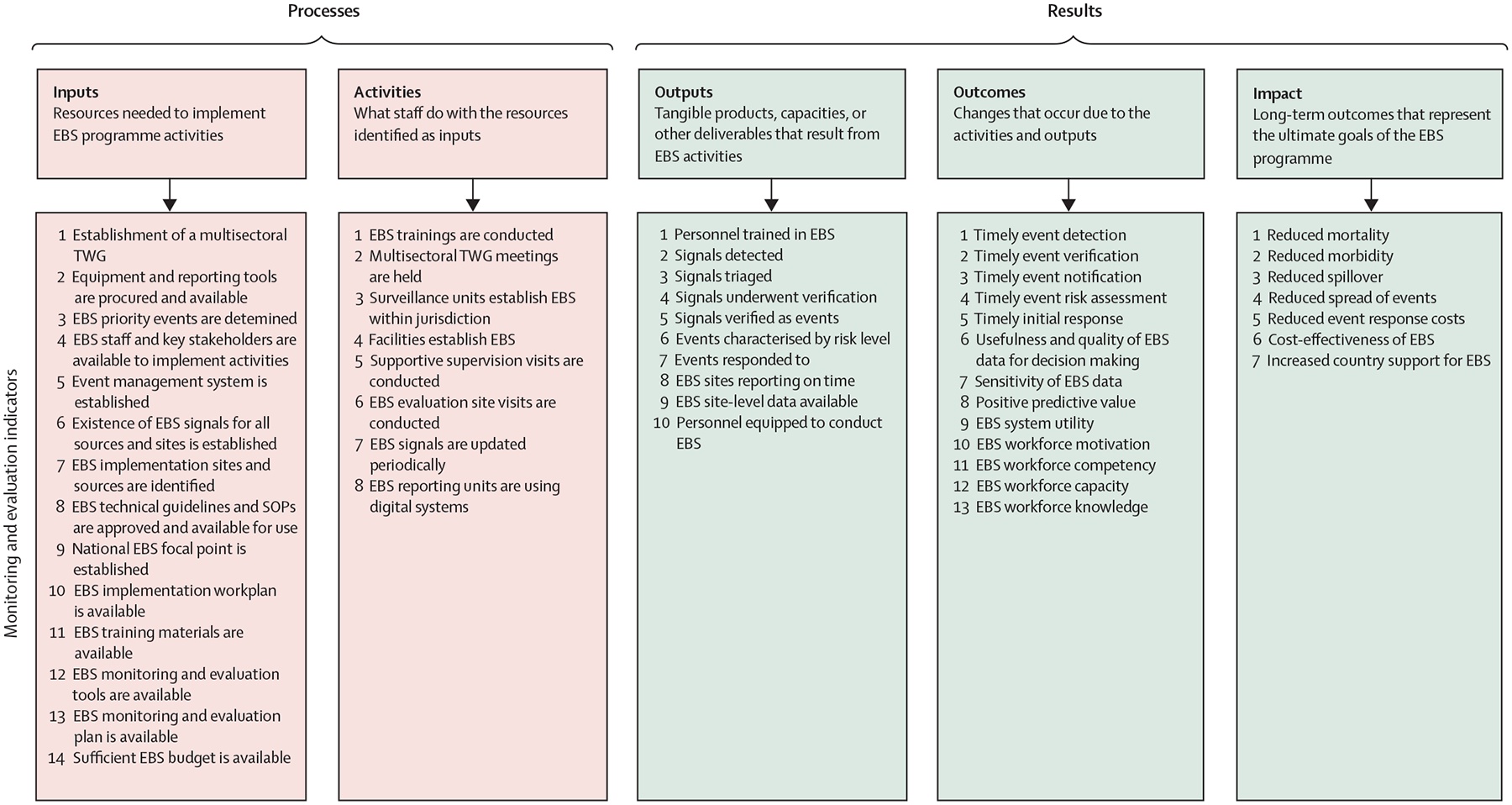

The resulting indicator framework includes 14 monitoring and evaluation indicators for input, eight for activity, ten for output, 13 for outcome, and seven for impact (figure). Many of the indicators can be captured on a routine basis through the existing tools, forms, and data exchange that comprise the day-to-day operation of an EBS system. These monitoring indicators could be assessed on a weekly, monthly, or quarterly basis to inform the operation of the EBS system. The indicators can be captured using various paper or electronic forms, but the implementation of an electronic event management system would enable the automation and routine assessment of these measures on a frequent basis.

Figure: EBS indicators from inputs to impact.

Indicator definitions and other details can be found in the second edition of Africa Centres for Disease Control and Prevention’s framework for EBS21 and in the appendix (pp 3–15). EBS=event-based surveillance. TWG=technical working group. SOP=standard operating procedure.

Beyond the routine monitoring indicators, the framework includes indicators that support periodic evaluations, which assess one or more surveillance system attributes and address questions that will guide the sustainability and performance of the EBS system. Long-term impact indicators, such as reduced morbidity and mortality or cost-effectiveness, could be assessed every few years, given the relative complexity of data collection and analysis required.
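For the routine monitoring indicators, which could be reviewed weekly, monthly, or quarterly, the aggregation step is straightforward once signal records are available electronically. The following Python sketch groups records by ISO week and computes, for each week, the proportion of reported signals that were verified. The record layout (a report date paired with a verification flag) and the data are assumptions made for illustration; they do not represent the schema of any particular event management system.

```python
from collections import defaultdict
from datetime import date
from typing import Iterable


def weekly_proportion_verified(
    records: Iterable[tuple[date, bool]],
) -> dict[tuple[int, int], float]:
    """Map (ISO year, ISO week) to the share of reported signals verified."""
    totals = defaultdict(lambda: [0, 0])  # key -> [verified, reported]
    for report_date, was_verified in records:
        iso = report_date.isocalendar()
        key = (iso[0], iso[1])  # ISO year and ISO week number
        totals[key][1] += 1
        if was_verified:
            totals[key][0] += 1
    return {key: v / n for key, (v, n) in sorted(totals.items())}


# Illustrative, made-up records.
records = [
    (date(2023, 3, 6), True),
    (date(2023, 3, 8), False),
    (date(2023, 3, 13), True),
]
weekly = weekly_proportion_verified(records)
```

The same grouping could be switched to months or quarters by changing how the key is derived from the report date.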

Considerations for the implementation of EBS monitoring and evaluation indicators

The EBS monitoring and evaluation indicators presented here and the updated Africa CDC framework for event-based surveillance are both intended to be flexible and might require adaptation to support a country’s EBS programme. Many EBS programmes have already established monitoring and evaluation plans that might include some—but not all—of the indicators presented here. To enable the prioritisation of new indicators on the basis of local priorities and needs, countries could consider mapping their existing EBS indicators to the framework outlined in this Viewpoint, identifying areas of alignment.
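The mapping exercise suggested above can be sketched as a comparison between a country's existing indicators and the set of framework indicators. In the Python example below, each local indicator is paired with the framework indicator it corresponds to, or with `None` if it has no counterpart; the function then reports areas of alignment, local-only indicators, and framework indicators not yet covered. The indicator codes (`FW-A` and so on) and local indicator names are hypothetical placeholders, not labels from the framework.

```python
from typing import Optional


def map_to_framework(
    local_to_framework: dict[str, Optional[str]],
    framework_labels: set[str],
) -> tuple[dict[str, str], list[str], list[str]]:
    """Return (aligned indicators, local-only indicators, framework gaps)."""
    aligned = {
        local: fw
        for local, fw in local_to_framework.items()
        if fw in framework_labels
    }
    local_only = sorted(
        local for local, fw in local_to_framework.items()
        if fw not in framework_labels
    )
    gaps = sorted(framework_labels - set(aligned.values()))
    return aligned, local_only, gaps


# Hypothetical placeholder codes and names, for illustration only.
framework_labels = {"FW-A", "FW-B", "FW-C", "FW-D"}
local_indicators = {
    "weekly signals reported": "FW-A",
    "signals verified within target": "FW-C",
    "hotline calls answered": None,
}
aligned, local_only, gaps = map_to_framework(local_indicators, framework_labels)
```

The list of gaps is a starting point for prioritisation rather than a list of requirements, because the framework is intended to be adapted to local priorities and needs.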

The feasibility of incorporating new indicators will depend on multiple factors, including the level of digitisation across EBS reporting tools and tracking systems. Countries could also consider implementing event management systems to enable the timely and consistent capture of EBS monitoring data. For example, in 2020, Africa CDC developed an event management system on the District Health Information System 2 (DHIS2) platform to support the continental EBS workflow. The use of this system has improved the capture and timeliness of EBS-related data over time.24 This system has since been adapted to support EBS in Lesotho, Sierra Leone, Uganda, and Zambia, and further work is being done to link this system to additional sources at the continental and country levels to improve epidemic intelligence and early warning and response systems.

For this monitoring and evaluation framework to be effective, the operational definitions of indicators and other key terms must be clearly understood and consistently applied. EBS uses specific terminology, such as signal, triage, and verification, that might have different meanings in other contexts. In addition, the use of indicators such as timely event detection requires a clear understanding of timeliness metrics and milestones for outbreaks, as well as related EBS terminologies. Thus, for these timeliness measures, the guidance presented here is aligned with definitions used by RTSL’s 7-1-7 framework22 and WHO’s Thirteenth General Programme of Work,25 which have been piloted, refined, and agreed upon through multiple consultations with global experts.26,27
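To illustrate why consistent milestone definitions matter, the following Python sketch checks a single event against the 7-1-7 targets, taken here as detection within 7 days of emergence, notification within 1 day of detection, and completion of early response actions within 7 days of notification. This is a simplified reading of the 7-1-7 approach; the milestone field names and the example dates are hypothetical, and the operational definitions of each milestone should be taken from the RTSL and WHO guidance cited above.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class EventMilestones:
    """Milestone dates for one event. Field names are hypothetical."""
    emergence: date
    detection: date
    notification: date
    early_response_completed: date


def assess_717(m: EventMilestones) -> dict:
    """Compute the three intervals in days and compare them with 7-1-7."""
    days_to_detect = (m.detection - m.emergence).days
    days_to_notify = (m.notification - m.detection).days
    days_to_respond = (m.early_response_completed - m.notification).days
    return {
        "days_to_detect": days_to_detect,
        "days_to_notify": days_to_notify,
        "days_to_respond": days_to_respond,
        "detection_target_met": days_to_detect <= 7,
        "notification_target_met": days_to_notify <= 1,
        "response_target_met": days_to_respond <= 7,
    }


# Illustrative, made-up dates.
event = EventMilestones(
    emergence=date(2023, 3, 1),
    detection=date(2023, 3, 6),
    notification=date(2023, 3, 8),
    early_response_completed=date(2023, 3, 14),
)
assessment = assess_717(event)
```

In this made-up example the detection and response targets are met, but notification takes 2 days and misses its target. The result depends entirely on which date is recorded as each milestone, which is why shared definitions are a precondition for comparing timeliness across systems.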

Validation of indicators and future steps

Monitoring and evaluation activities must be prioritised, incorporated directly into EBS workplans, and resourced appropriately. Integrating the collection of EBS monitoring data into routine reporting processes and reviewing these data as part of standard operating procedures will ensure that monitoring and evaluation are a central part of each EBS programme, enabling timely recognition of both gaps and successes. Surveillance programmes will benefit when surveillance officers and health ministries can compare the performance of EBS systems with that of indicator-based surveillance systems and understand how well each system contributes to overall surveillance objectives.

A monitoring and evaluation framework must itself be evaluated. Some traditional surveillance system attributes, including simplicity, flexibility, and acceptability, apply in this case. Following the release of the guidance presented here, there will remain a need to monitor uptake of the indicator framework by countries in different regions, identify and address barriers to use, and assess which indicators provide the most value to EBS programmes. We hope that through this process, a subset of core indicators might be identified for prioritisation across all EBS programmes. Full adoption of the framework by countries would require them to integrate standard indicators into the tools and systems used for the management of EBS data. Through the implementation and continued refinement of these indicators, countries will be able to improve their ability to measure and describe the impact of EBS and, thus, collectively contribute towards a robust evidence base that will inform EBS implementation strategies in the future. Such evidence is needed to support advocacy efforts for continued investment in early warning systems as well as to contribute to improved strategies for impactful and cost-effective surveillance systems globally.

Acknowledgments

We gratefully acknowledge the insightful comments of Eva Leidman during the development of early drafts of this manuscript. We thank Calvin Sindato, Mohamed Mohamed, Martin Matu, John Ojo, Kira Coggeshall, Ray Arthur, Ahmed Zaghloul, Christopher T Lee, Aaron Bochner, and Rahel Yemanaberhan for their contributions and expertise in the process of developing the EBS indicators.

Footnotes

Declaration of interests

We declare no competing interests.

See Online for appendix

For more on the DHIS2 system see https://dhis2.org/

References

- 1. WHO. Early detection, assessment and response to acute public health events: implementation of early warning and response with a focus on event-based surveillance. Geneva: World Health Organization, 2014.

- 2. Paquet C, Coulombier D, Kaiser R, Ciotti M. Epidemic intelligence: a new framework for strengthening disease surveillance in Europe. Euro Surveill 2006; 11: 212–14.

- 3. WHO Regional Office of the Western Pacific. A guide to establishing event-based surveillance. https://iris.who.int/handle/10665/207737 (accessed Jan 25, 2024).

- 4. WHO. International Health Regulations (2005) - third edition. Geneva: World Health Organization, 2016.

- 5. Mercy K, Balajee A, Numbere T-W, Ngere P, Simwaba D, Kebede Y. Africa CDC’s blueprint to enhance early warning surveillance: accelerating implementation of event-based surveillance in Africa. J Public Health Africa 2023; 14: 2827.

- 6. Balajee SA, Salyer SJ, Greene-Cramer B, Sadek M, Mounts AW. The practice of event-based surveillance: concept and methods. Glob Secur Health Sci Policy 2021; 6: 1–9.

- 7. Kuehne A, Keating P, Polonsky J, et al. Event-based surveillance at health facility and community level in low-income and middle-income countries: a systematic review. BMJ Glob Health 2019; 4: e001878.

- 8. WHO. Joint External Evaluation tool: International Health Regulations (2005) - third edition. Geneva: World Health Organization, 2022.

- 9. WHO. Crafting the mosaic: a framework for resilient surveillance for respiratory viruses of epidemic and pandemic potential. Geneva: World Health Organization, 2023.

- 10. WHO. Early warning alert and response in emergencies: an operational guide. Geneva: World Health Organization, 2022.

- 11. WHO. Technical guidelines for integrated disease surveillance and response in the WHO African region: third edition. Brazzaville: World Health Organization Regional Office for Africa, 2019.

- 12. German RR, Lee LM, Horan JM, Milstein RL, Pertowski CA, Waller MN. Updated guidelines for evaluating public health surveillance systems: recommendations from the Guidelines Working Group. MMWR Recomm Rep 2001; 50: 1–35.

- 13. Ratnayake R, Tammaro M, Tiffany A, Kongelf A, Polonsky JA, McClelland A. People-centred surveillance: a narrative review of community-based surveillance among crisis-affected populations. Lancet Planet Health 2020; 4: e483–95.

- 14. Lee CT, Bulterys M, Martel LD, Dahl BA. Evaluation of a national call center and a local alerts system for detection of new cases of Ebola virus disease - Guinea, 2014–2015. MMWR Morb Mortal Wkly Rep 2016; 65: 227–30.

- 15. Severi E, Kitching A, Crook P. Evaluation of the health protection event-based surveillance for the London 2012 Olympic and Paralympic Games. Euro Surveill 2014; 19: 20832.

- 16. Ganser I, Thiébaut R, Buckeridge DL. Global variations in event-based surveillance for disease outbreak detection: time series analysis. JMIR Public Health Surveill 2022; 8: e36211.

- 17. Clara A, Dao ATP, Mounts AW, et al. Developing monitoring and evaluation tools for event-based surveillance: experience from Vietnam. Global Health 2020; 16: 38.

- 18. Beebeejaun K, Elston J, Oliver I, et al. Evaluation of national event-based surveillance, Nigeria, 2016–2018. Emerg Infect Dis 2021; 27: 694–702.

- 19. Global Preparedness Monitoring Board. From worlds apart to a world prepared (GPMB 2021 annual report). Geneva: World Health Organization, 2021.

- 20. WHO. Report of the review committee on the functioning of the International Health Regulations (2005) during the COVID-19 response. Geneva: World Health Organization, 2021.

- 21. Africa Centres for Disease Control and Prevention. Event-based surveillance framework. Second edition, 2023. https://africacdc.org/download/africa-cdc-event-based-surveillance-framework-2/ (accessed Jan 25, 2024).

- 22. Frieden TR, Lee CT, Bochner AF, Buissonnière M, McClelland A. 7-1-7: an organising principle, target, and accountability metric to make the world safer from pandemics. Lancet 2021; 398: 638–40.

- 23. WHO. IHR (2005) States Parties self-assessment annual reporting tool, 2nd edn. Geneva: World Health Organization, 2021.

- 24. Africa Centres for Disease Control and Prevention. Africa CDC annual report 2022. 2023. https://africacdc.org/download/africa-cdc-annual-report-2022/ (accessed Jan 28, 2024).

- 25. WHO. Thirteenth general programme of work (GPW13): methods for impact measurement, version 2.1. Geneva: World Health Organization, 2020.

- 26. Kluberg SA, Mekaru SR, McIver DJ, et al. Global capacity for emerging infectious disease detection, 1996–2014. Emerg Infect Dis 2016; 22: E1–6.

- 27. Crawley AW, Divi N, Smolinski MS. Using timeliness metrics to track progress and identify gaps in disease surveillance. Health Secur 2021; 19: 309–17.
