Abstract

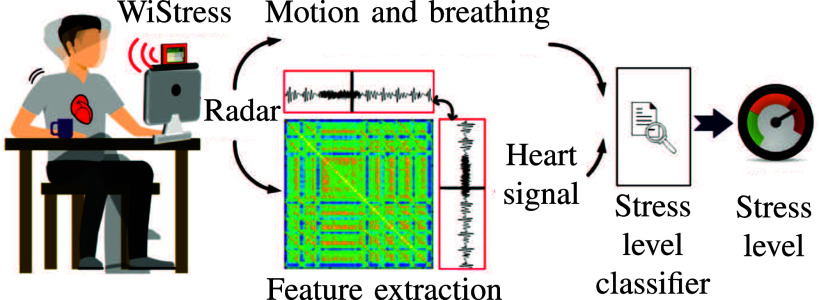

Radio detection and ranging-based (radar) sensing offers unique opportunities for biomedical monitoring and can help overcome the limitations of currently established solutions. Due to its contactless and unobtrusive measurement principle, it can facilitate the longitudinal recording of human physiology and can help to bridge the gap from laboratory to real-world assessments. However, radar sensors typically yield complex and multidimensional data that are hard to interpret without domain expertise. Machine learning (ML) algorithms can be trained to extract meaningful information from radar data for medical experts, enhancing not only diagnostic capabilities but also contributing to advancements in disease prevention and treatment. However, until now, the two aspects of radar-based data acquisition and ML-based data processing have mostly been addressed individually and not as part of a holistic and end-to-end data analysis pipeline. For this reason, we present a tutorial on radar-based ML applications for biomedical monitoring that equally emphasizes both dimensions. We highlight the fundamentals of radar and ML theory, data acquisition and representation and outline categories of clinical relevance. Since the contactless and unobtrusive nature of radar-based sensing also raises novel ethical concerns regarding biomedical monitoring, we additionally present a discussion that carefully addresses the ethical aspects of this novel technology, particularly regarding data privacy, ownership, and potential biases in ML algorithms.

Keywords: Radar, machine learning, medicine, ethics, biomedical monitoring

I. Introduction

Early disease detection is crucial for successful treatment [1], [2], [3]. Therefore, simple and cost-effective biomedical monitoring tools are necessary, enabling a continuous assessment of a patient's state of health. Over the past decades, biomedical monitoring has transitioned from commonly invasive procedures, such as obtaining blood samples and subjective assessments based on human observation, to more objective, minimally – or even non-invasive – monitoring modalities, such as electrophysiology [4], motion capturing [5], [6], and wearable sensors [7], [8]. While these less disruptive methods allow the precise measurement of physiological parameters that enable reliable diagnosis and treatment monitoring, they have several limitations.

Even though current measurement setups are often non-invasive, they commonly still require the attachment of sensors, wires, or markers to the human body, which may interfere with human behavior during the measurement [9]. In addition, complex medical measurement devices, such as motion capture systems or polysomnographs (PSG), are cost-intensive, and comprehensive examinations can often only be performed at locations with access to this technology. Furthermore, operating and maintaining these biomedical monitoring devices requires specially trained staff, which gives rise to considerable additional costs. Therefore, these measurements can only be conducted in major hospitals or specialized outpatient centers, thus solely allowing measurements at discrete points in time and only when the health condition is present in the patient. This hinders the seamless translation of laboratory measurements to more realistic, real-world environments. However, many chronic diseases would benefit from continuous monitoring in real-world environments to better track disease progression and, consequently, adjust medication more efficiently [10].

Recently, healthcare has been moving toward a widespread, technology-assisted, and personalized approach. This includes the introduction of continuous medical monitoring that fits seamlessly into everyday life and monitors people's behavior and activities around the clock. This setup should reduce the patient's hospital stay and, at the same time, achieve better monitoring, thus enabling a better assessment of the health state [7], [11]. It has been demonstrated that measurements in a clinical setting do not necessarily reflect the values of measured parameters in a real-world setting [9]. One example of measurements influenced by the measurement setup is the so-called “white-coat hypertension”, in which a person's blood pressure readings are consistently higher when measured in a medical setting than when they are measured in a more relaxed, non-medical environment [12]. Therefore, unobtrusive, or even unperceived, measurements have the potential to considerably enhance data quality.

In the dynamic field of biomedical monitoring, radio detection and ranging (radar) sensors play an important role in facilitating unobtrusive and contactless measurements. Their wave-based nature not only presents a significant alternative to traditional sensors but also mitigates obtrusiveness, thereby broadening the scope for gathering more comprehensive sets of real-world data [13]. Depending on the frequencies used, radar technology may be used for measurement applications in which the subject is occluded by clothing or other materials [14]. This distinct advantage over optical methods, in conjunction with the high sensitivity of radar-based methods, facilitates many applications that could only be addressed by wearable sensor solutions in the past. In contrast to wearables, radar systems enable long-term patient monitoring, thus allowing healthcare professionals to gain more insights into disease progression. Moreover, even technologically highly advanced radar sensors are affordable enough for widespread use in home environments for everyday data collection. However, raw radar data are difficult to interpret, even for experts. Machine learning (ML) algorithms enable the recognition of patterns in radar data streams to extract various biosignals or analyze other inner states of humans. This not only allows the improvement of diagnostics but can also support medical staff in the prevention of diseases and improve biomedical monitoring.

Given the unique opportunities that radar-based sensing can offer for biomedical monitoring, we aim to bring this technology closer to the biomedical engineering community. For this reason, we present a tutorial on radar-based applications for biomedical monitoring, with special focus on applications that make use of ML to extract meaningful parameters. After introducing the fundamentals of radars (Section II) and ML (Section III), specific representations of radar data and its applications within medicine are introduced in Section IV. Section V gives an overview of the study planning to generate sufficient training data, which are essential for the ML process, and discusses the “dos and don'ts” within that scope. As with the introduction of every novel technology, the integration of radar technology into biomedical monitoring procedures raises ethical concerns. This aspect is discussed in Section VI, which highlights the ethical parameters and aspects of this technology. Then, Section VII addresses the categories of clinical relevance. Finally, Sections VIII and IX discuss the findings and conclude the tutorial paper, respectively.

II. Radar Fundamentals

Radar is a radiolocation technique that uses electromagnetic waves to determine the distance, velocity, and angle of objects relative to the radar site. The basic principle of a radar system can be easily explained. A microwave transmitter emits a radio signal – e.g., a short pulse-shaped wave – via an antenna in the direction of an object. This signal is reflected by the object, and the reflected echo is received by the radar system [15]. By measuring the roundtrip time of flight (RTOF) $\tau$ of the signal from the radar to the object and back – i.e., the time elapsed between transmitting the impulse and receiving the respective echo – the distance $d$ can be calculated using the following relationship:

$$d = \frac{c \cdot \tau}{2} \tag{1}$$

where $c$ is the propagation speed of the electromagnetic wave, which is approximately $3 \times 10^{8}\,\mathrm{m/s}$ in air. Therefore, the radar signal only needs  to travel  . The radar signal carrier frequency $f_c$ commonly used today is in the range of  to  , with the vast majority of radar systems used for biomedical sensing operating in the regulated industrial, scientific, and medical (ISM) frequency bands at  [16],  [17], [18], [19], [20], [21], and  [22], [23] or more recently also  [24], [25] and  [26], [27]. The wavelength $\lambda$ of the radar signal is defined as follows:

$$\lambda = \frac{c}{f_c} \tag{2}$$

For a  radar, for instance, the wavelength is  . The wavelength will be important in the further course of the explanations because, as we will see, it determines the radar accuracy, radar resolution, and applicability of radar systems. While this rather simple introduction may clarify the basic principle of radar operation, it reveals neither the most exciting measurement capabilities and characteristics of radar systems nor the actual hardware structure of the latter. To really understand biomedical radar systems, we take a closer look at the so-called continuous wave (CW) radar.
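The two relationships introduced so far, the distance $d = c\,\tau/2$ obtained from the RTOF and the wavelength $\lambda = c/f_c$, can be checked numerically with a minimal sketch. The function names and the example values (a roundtrip time of 10 ns and a 24 GHz carrier) are assumptions chosen for illustration and are not taken from the text.

```python
# Minimal sketch of the distance and wavelength relationships.
# All example values below are illustrative assumptions.

C = 299_792_458.0  # propagation speed in vacuum (m/s); air deviates by roughly 0.03 %


def distance_from_rtof(tau_s: float) -> float:
    """One-way distance d = c * tau / 2 for a roundtrip time of flight tau."""
    return C * tau_s / 2.0


def wavelength(f_c_hz: float) -> float:
    """Wavelength lambda = c / f_c for a carrier frequency f_c."""
    return C / f_c_hz


if __name__ == "__main__":
    tau = 10e-9  # assumed roundtrip time of flight (10 ns)
    f_c = 24e9   # assumed carrier frequency (24 GHz)
    print(f"d      = {distance_from_rtof(tau):.3f} m")
    print(f"lambda = {wavelength(f_c) * 1e3:.2f} mm")
```

Because $c$ is so large, roundtrip times over typical indoor distances are in the nanosecond range, which is why the echo delay is rarely measured directly with a stopwatch-like approach.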

A. Continuous Wave (CW) Radar

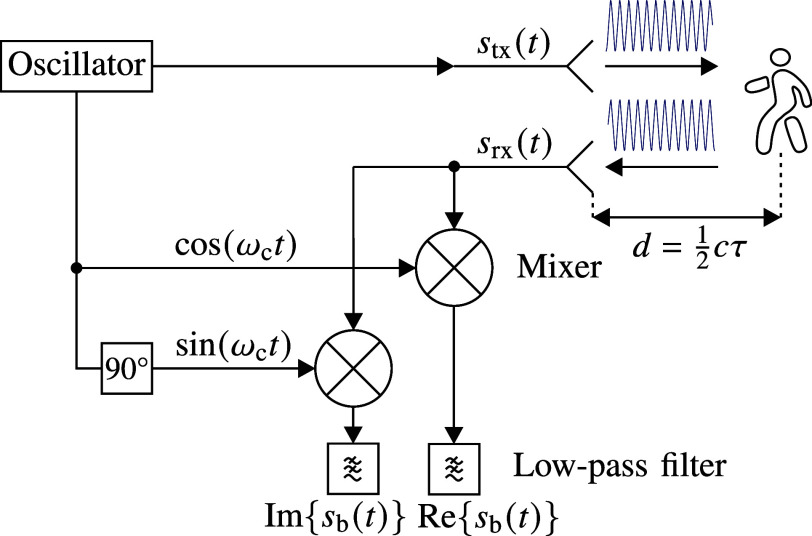

The basic setup of a CW radar, also referred to as Doppler radar, is illustrated in Fig. 2. The oscillator generates a sine wave signal. With the angular frequency of the carrier signal $\omega_c = 2\pi f_c$, amplitude $A_{tx}$, and zero phase $\varphi_0$, the transmit signal $s_{tx}(t)$ can be written as follows:

$$s_{tx}(t) = A_{tx} \cos(\omega_c t + \varphi_0) \tag{3}$$

Fig. 2. Block diagram of the basic CW radar setup.

The receive signal $s_{rx}(t)$ has the same form as the transmit signal $s_{tx}(t)$; however, it is delayed by the RTOF $\tau$ and has a notably lower amplitude. It is given as follows:

$$s_{rx}(t) = A_{rx} \cos\big(\omega_c (t - \tau) + \varphi_0 - \varphi_c\big) \tag{4}$$

The receive signal amplitude $A_{rx}$ depends on the target distance and the reflectivity of the object. In addition, we need to introduce a constant phase offset $\varphi_c$, first because the reflection from the object can cause a material-dependent phase change and second because of possible phase offsets in the radar hardware.

From a system-theoretic perspective, the subsequent mixing process, which facilitates the interpretation of the radar signal, can be described as a simple complex-valued multiplication. Here, the receive signal is multiplied once with the transmit signal and once, separately, with its quadrature component, i.e., the transmit signal shifted by $90^\circ$. Since the transmit signal is a cosine term, a shift by $90^\circ$ transforms the quadrature component into a sine term. Based on trigonometric product-to-sum identities, this multiplication yields the so-called baseband signal $s_{bb}(t)$, which is as follows:

$$s_{bb}(t) = A_{bb}\, e^{j(\omega_c \tau + \varphi_c)} \tag{5}$$

where $A_{bb}$ is the baseband amplitude, which is proportional to $A_{tx} A_{rx}$.

The low-pass filter after the mixer (Fig. 2) is used to suppress unwanted signal components at twice the carrier frequency.
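For readers who want to retrace the mixing step, the following derivation is a sketch under assumed notation. It assumes a transmit signal $A_{tx}\cos(\omega_c t + \varphi_0)$ and a receive signal $A_{rx}\cos(\omega_c (t-\tau) + \varphi_0 - \varphi_c)$, where the sign of the constant offset $\varphi_c$ is a convention chosen here, and a quadrature reference obtained by delaying the transmit signal by $90^\circ$. The names $I(t)$ and $Q(t)$ for the two mixer outputs and $\mathrm{LP}\{\cdot\}$ for the low-pass filter are introduced only for illustration.

```latex
\begin{aligned}
I(t) &= s_{rx}(t)\, A_{tx}\cos(\omega_c t + \varphi_0)
      = \frac{A_{tx}A_{rx}}{2}\Big[\cos(\omega_c\tau + \varphi_c)
        + \cos(2\omega_c t - \omega_c\tau + 2\varphi_0 - \varphi_c)\Big] \\
Q(t) &= s_{rx}(t)\, A_{tx}\sin(\omega_c t + \varphi_0)
      = \frac{A_{tx}A_{rx}}{2}\Big[\sin(\omega_c\tau + \varphi_c)
        + \sin(2\omega_c t - \omega_c\tau + 2\varphi_0 - \varphi_c)\Big] \\
s_{bb}(t) &= \mathrm{LP}\{I(t)\} + j\,\mathrm{LP}\{Q(t)\}
      = \frac{A_{tx}A_{rx}}{2}\, e^{\,j(\omega_c\tau + \varphi_c)}
\end{aligned}
```

The second term in each bracket oscillates at twice the carrier frequency and is removed by the low-pass filter, so only the term that depends on the RTOF $\tau$ remains.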

The resulting baseband signal $s_{bb}(t)$ is a constant, complex direct current value (i.e., a complex pointer) as long as the target distance $d$ is constant. However, if the target distance and, thus, the RTOF $\tau$ change with time, the phase argument of the baseband signal, which is given as follows, also changes:

$$\varphi_{bb}(t) = \arg\{s_{bb}(t)\} = \omega_c \tau(t) + \varphi_c \tag{6}$$

Considering the definitions of the wavelength (2) and the relation between travel time and distance (1), we see that a change of distance by only half a wavelength causes a phase rotation of $360^\circ$.

Measuring the phase with a statistical uncertainty of below  is usually not a problem in a well-designed radar [28], [29]. To better understand the relationship between the wavelength and phase, we present the following example using a radar system operating at a frequency of  , resulting in a wavelength of  : This means that a  phase rotation corresponds to a distance change of about  . This fine range sensitivity makes it possible, for instance, to detect microscopic movements on the body surface caused by pulsating blood flow [30]. In addition to capturing microscopic movements, it is also possible to measure larger motions, such as those involving limbs or other parts of the body. However, one drawback of the CW radar is the extremely low unambiguous range for tracking distance changes. This refers to the maximum target range measurable by the radar system while an unambiguous association with a specific distance can be ensured. For CW radar technology, this maximum unambiguous range is $\lambda/2$ and, therefore,  with respect to the prior example. Thus, tracking certain motions can span multiple phase cycles. Therefore, phase changes need to be tracked with a sufficient sampling rate fitted to the motion speed in order to reconstruct the total path length of the displacement. For instance, to measure the total displacement of the chest due to respiration (multiple centimeters) with a  radar system, the Doppler phase needs to be sampled at least at every  point of motion.
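The reconstruction of a displacement that spans several phase cycles can be sketched as follows. The sketch assumes a baseband phase of $4\pi d/\lambda$ plus a constant offset (which follows from $\omega_c \tau$ with $\tau = 2d/c$) and uses NumPy's `unwrap` to remove the $2\pi$ jumps. The 24 GHz carrier, the 100 Hz phase sampling rate, and the sinusoidal chest motion of 1 cm amplitude are illustrative assumptions, and `displacement_from_iq` is a hypothetical helper name.

```python
import numpy as np

C = 299_792_458.0  # propagation speed (m/s)


def displacement_from_iq(s_bb: np.ndarray, f_c_hz: float) -> np.ndarray:
    """Convert complex baseband samples to displacement relative to the first sample.

    Assumes phase = 4*pi*d/lambda + const, so a distance change of lambda/2
    corresponds to one full phase rotation.
    """
    lam = C / f_c_hz
    phase = np.unwrap(np.angle(s_bb))  # remove 2*pi jumps between neighbouring samples
    return (phase - phase[0]) * lam / (4.0 * np.pi)


if __name__ == "__main__":
    f_c = 24e9   # assumed carrier frequency
    fs = 100.0   # assumed sampling rate of the Doppler phase (Hz)
    t = np.arange(0.0, 10.0, 1.0 / fs)
    d_true = 0.01 * np.sin(2 * np.pi * 0.25 * t)  # assumed chest motion, 1 cm amplitude
    lam = C / f_c
    s_bb = np.exp(1j * (4 * np.pi * d_true / lam + 0.7))  # 0.7 rad: arbitrary constant offset
    d_est = displacement_from_iq(s_bb, f_c)
    print("max reconstruction error (m):", np.max(np.abs(d_est - d_true)))
```

Unwrapping only works if the phase changes by less than half a rotation between two consecutive samples, which is the sampling condition described in the paragraph above.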

B. Doppler Principle

The phase argument $\varphi_{bb}(t)$ of the baseband signal $s_{bb}(t)$ is called the Doppler phase [31], [32]. The derivative of the Doppler phase, given as

$$f_D(t) = \frac{1}{2\pi} \frac{\mathrm{d}\varphi_{bb}(t)}{\mathrm{d}t} \tag{7}$$

is called instantaneous Doppler frequency. If the target moves with constant radial velocity (i.e., only the vector component in the direction of the radar) toward or away from the radar, the Doppler phase changes linearly with time, and thus, its derivative is constant. If this is the case, $f_D(t)$ is constant and one can derive a scalar Doppler frequency value – e.g., by a Fourier transform (FT) of the baseband signal. In this case, we have the following:

$$f_D = \frac{2 v_r}{\lambda} = \frac{2 v_r f_c}{c} \tag{8}$$

where $v_r$ denotes the radial velocity of the target.

Thus, measuring the Doppler frequency is perfectly suited for measuring the target speed. Note that with a single antenna, only the radial velocity can be measured. The maximum unambiguous velocity, which describes the maximum speed that can be accurately measured without encountering velocity ambiguity, is limited by the sampling rate of the Doppler phase. Hence, to unambiguously measure the velocity of a foot kicking a ball at a maximum speed of  following the example of a  signal frequency, the sampling rate of the Doppler phase needs to be at least  , which is twice the maximum occurring Doppler frequency.
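As a hedged numerical illustration of the sampling requirement, the sketch below evaluates the Doppler frequency $f_D = 2 v_r f_c / c$ and the corresponding minimum sampling rate of twice the maximum Doppler frequency, as stated above. The function names and the example values (a maximum speed of 10 m/s and a 24 GHz carrier) are assumptions for illustration only.

```python
C = 299_792_458.0  # propagation speed (m/s)


def doppler_frequency(v_radial: float, f_c_hz: float) -> float:
    """Doppler frequency f_D = 2 * v_r * f_c / c for a radial velocity v_r."""
    return 2.0 * v_radial * f_c_hz / C


def min_phase_sampling_rate(v_max: float, f_c_hz: float) -> float:
    """Minimum Doppler-phase sampling rate: twice the maximum Doppler frequency."""
    return 2.0 * abs(doppler_frequency(v_max, f_c_hz))


if __name__ == "__main__":
    v_max = 10.0  # assumed maximum radial speed (m/s)
    f_c = 24e9    # assumed carrier frequency (Hz)
    print(f"f_D,max = {doppler_frequency(v_max, f_c):.1f} Hz")
    print(f"f_s,min = {min_phase_sampling_rate(v_max, f_c):.1f} Hz")
```

Since the Doppler frequency scales linearly with the carrier frequency, the same motion requires a proportionally higher sampling rate at higher carrier frequencies.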

It is important to precisely distinguish between the instantaneous Doppler frequency and the Doppler frequency. A Doppler frequency is the average value of the instantaneous frequency over a certain observation period. Very often, the Doppler frequency is determined by an FT, where the averaging is inherently done by the Fourier integral. However, an FT only provides a meaningful frequency estimate if the frequency values of the signal components are almost constant during the integration time. In an FT, the integration (or averaging) time equals the length of the signal that is Fourier transformed.

In biomedical applications, the target velocity – e.g., the cardiopulmonary body movements or the movement of the limbs, among others – is usually not constant or at least not constant over a meaningful observation time. Therefore, the determination of Doppler frequencies over longer sections of the CW radar baseband signal with the FT is rarely useful in biomedical settings. Instead, the baseband signal is divided into short sections, where the time duration $T$ of the sections chosen is so short that constant velocities of all targets can be assumed in this observation period. In the so-called short-time Fourier transform (STFT), each signal section is Fourier transformed separately. If the temporal change of these individual spectra is plotted over time, it leads to a Doppler spectrogram [33].
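The sectioning described above can be sketched with plain NumPy. This is a minimal illustration rather than a reference implementation: the Hann window, the section length, the hop size, and the synthetic test signal are assumptions, and `doppler_spectrogram` is a hypothetical helper name.

```python
import numpy as np


def doppler_spectrogram(s_bb, fs, section_len, hop):
    """Short-time Fourier transform of complex baseband samples.

    Returns (freqs, times, magnitude), with magnitude of shape (frequency, time).
    Each section of `section_len` samples is Hann-windowed and transformed separately.
    """
    s_bb = np.asarray(s_bb)
    window = np.hanning(section_len)
    starts = np.arange(0, len(s_bb) - section_len + 1, hop)
    frames = np.stack([s_bb[i:i + section_len] * window for i in starts])
    spectra = np.fft.fftshift(np.fft.fft(frames, axis=1), axes=1)  # centre zero Doppler
    freqs = np.fft.fftshift(np.fft.fftfreq(section_len, d=1.0 / fs))
    times = (starts + section_len / 2) / fs                        # section centres (s)
    return freqs, times, np.abs(spectra).T


if __name__ == "__main__":
    fs = 1000.0                                  # assumed phase sampling rate (Hz)
    t = np.arange(4000) / fs
    f_inst = 100.0 * np.sin(2 * np.pi * 0.5 * t)  # assumed slowly varying Doppler frequency
    phase = 2 * np.pi * np.cumsum(f_inst) / fs    # integrate frequency to obtain phase
    freqs, times, mag = doppler_spectrogram(np.exp(1j * phase), fs, section_len=128, hop=32)
    print(mag.shape, freqs[np.argmax(mag, axis=0)][:5])
```

The section length sets the trade-off: longer sections give finer frequency bins, but the constant-velocity assumption within a section becomes less valid.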

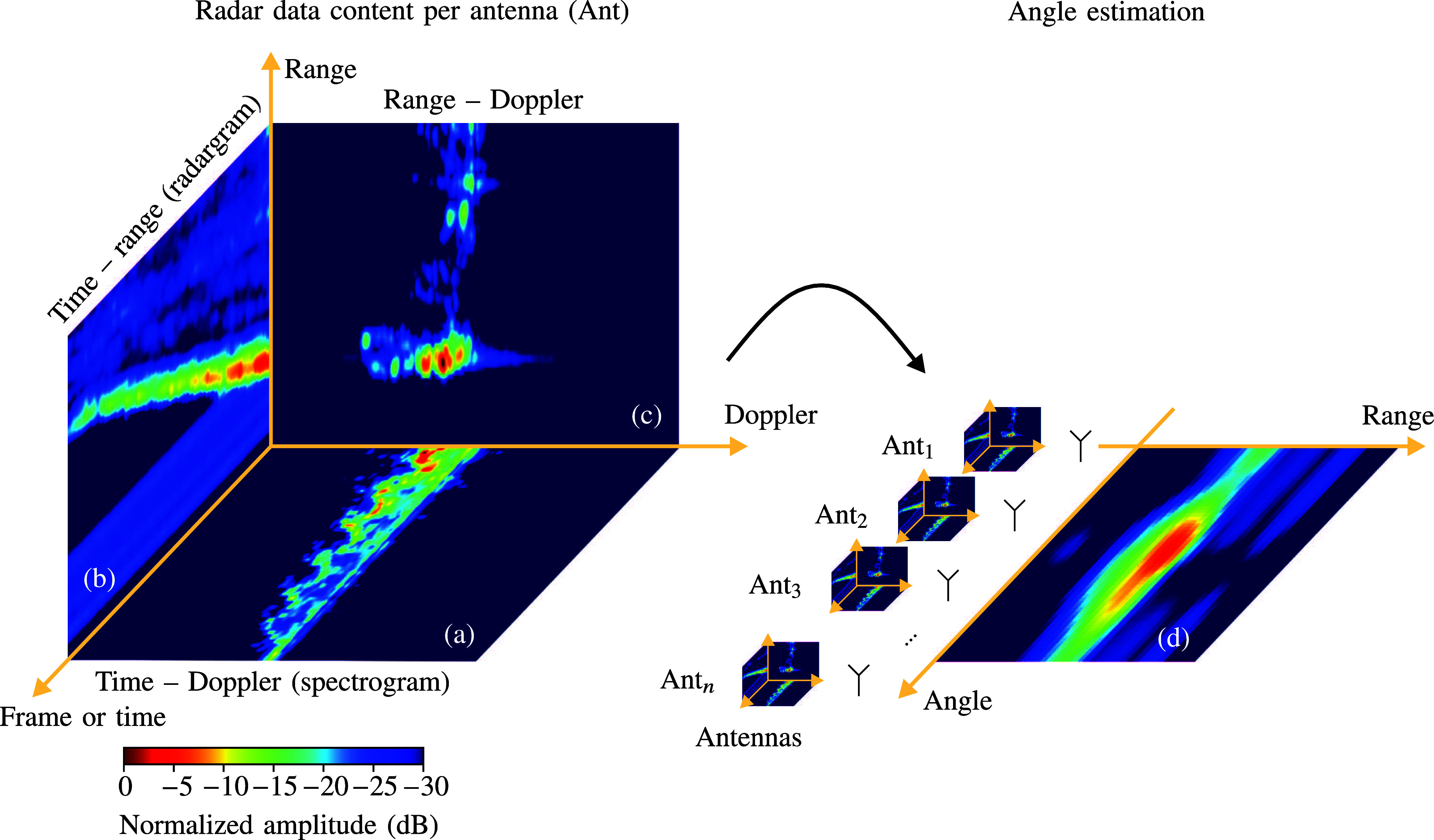

The temporal change in the spectral composition of the radar baseband signal is called the micro-Doppler signature of a moving or rotating target [34], [35]. In Fig. 3(a), a typical Doppler spectrogram of a person moving with near-constant velocity away from the radar is illustrated. The characteristic micro-Doppler signature is clearly visible. While the person's torso exhibits an almost constant velocity, the limbs yield an undulating pattern. Whereas the most accurate representation of the target micro-Doppler signature is the direct use of the Doppler phase $\varphi_{bb}(t)$ or of the instantaneous Doppler frequency $f_D(t)$, the STFT is a suitable tool to estimate $f_D(t)$. It is especially useful when there is more than one moving target within the radar's detection range. This aspect will be discussed in Section II-C of this article.

Fig. 3. Radar data content overview: Here, a person is moving away from a radar system with almost constant velocity of approximately  . The sectional images are generated by summing up the remaining dimension. Stationary objects were removed using static clutter removal algorithms. (a) illustrates an exemplary Doppler spectrogram. The distinctive micro-Doppler signatures resulting from various body reflections with different velocity components during the walking activity are easily discernible. In (b), an exemplary radargram depicts the target's distance from the radar system over time. Reflections occurring beyond the dominant scatterers are attributed to multi-path effects. Further, undesirable horizontal smears stem from the hardware characteristics of the radar system. (c) illustrates an exemplary range–Doppler diagram. The superimposition of multiple reflections from distinct body parts, each exhibiting varied velocity components (e.g., torso or swinging arms), gives rise to micro-Doppler effects. Reflections extending clearly behind dominant body parts are attributable to multi-path effects. In (d), an angle image corresponding to the range–Doppler image in (c) is presented. The examination of the data from multiple antennas allows an accurate angle estimation.

C. Radar Resolution and Radar Data Content

If there is more than one moving target or scattering structure within the radar's detection range, the micro-Doppler signatures of all scattering structures will overlap. Thus, it is necessary to separate or resolve the different scattering structures. The ability to separate closely spaced structures is defined as the resolution of a radar. The available separation dimensions are instantaneous Doppler frequency, the radar viewing angle to the target, and the distance to the target. If a target can be resolved in one dimension, all attributes of the other dimensions corresponding to that specific target can be assigned.

If the above STFT is used, two targets can be separated if they have velocities whose Doppler frequencies differ by more than the Doppler resolution $\Delta f_D$. The Doppler resolution when using STFT is inversely proportional to the time duration $T$ of the signal sections and can be estimated as follows [36]:

$$\Delta f_D \approx \frac{1}{T} \tag{9}$$

The precise value of $\Delta f_D$ depends on the window function used in the applied Fourier processing. If the Doppler frequencies of two targets differ by at least this value, they lie in the spectrum at different frequency bins and can, therefore, be distinguished.

The radar viewing angle facilitates the separation of the Doppler signatures of several body parts and prevents overlapping. To this end, the radar signal is focused in an angular direction on a specific point of the body. The ability to focus a wave is determined by the size $D$ of the radar antenna aperture in relation to the wavelength. The aperture is an equivalent area from which the waves emanate or on which the wave is received. Two targets can be separated in the angular dimension if their angle to the radar differs by more than the angular resolution $\Delta\theta$. The latter is given as follows [36]:

$$\Delta\theta \approx \frac{\lambda}{D} \tag{10}$$

To obtain a good angular resolution, a radar system should have apertures with $D \gg \lambda$. As a rule of thumb, an angular resolution of  would require an aperture size of  .
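To give a feeling for the orders of magnitude involved, the following sketch evaluates the Doppler and angular resolution under the common approximations $\Delta f_D \approx 1/T$ and $\Delta\theta \approx \lambda/D$ (in radians). Both approximations, the function names, and the example values (a 24 GHz carrier, sections of 0.1 s, and an aperture of ten wavelengths) are assumptions for illustration. The exact prefactors depend on the window function and the aperture illumination.

```python
import math

C = 299_792_458.0  # propagation speed (m/s)


def doppler_resolution(section_duration_s: float) -> float:
    """Approximate Doppler resolution (Hz) of an STFT with section duration T."""
    return 1.0 / section_duration_s


def velocity_resolution(section_duration_s: float, f_c_hz: float) -> float:
    """Velocity resolution (m/s) implied by the Doppler resolution via f_D = 2 v / lambda."""
    lam = C / f_c_hz
    return doppler_resolution(section_duration_s) * lam / 2.0


def angular_resolution_deg(aperture_m: float, f_c_hz: float) -> float:
    """Approximate angular resolution (degrees) of an aperture of size D, using lambda / D."""
    lam = C / f_c_hz
    return math.degrees(lam / aperture_m)


if __name__ == "__main__":
    f_c = 24e9                  # assumed carrier frequency (Hz)
    T = 0.1                     # assumed STFT section duration (s)
    D = 10 * C / f_c            # assumed aperture of ten wavelengths (m)
    print(f"Doppler resolution : {doppler_resolution(T):.1f} Hz")
    print(f"velocity resolution: {velocity_resolution(T, f_c) * 100:.2f} cm/s")
    print(f"angular resolution : {angular_resolution_deg(D, f_c):.2f} deg")
```

Under these approximations, the angular resolution depends only on the aperture size measured in wavelengths, which is why higher carrier frequencies allow physically smaller antennas for the same resolution.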

An aperture can be created by one physical antenna or by an antenna array. In medical applications, it is not always practical to mechanically direct a large physical antenna or antenna array to a specific point on the body. While this manual alignment may still be possible in stationary applications, where the person is sitting quietly on a chair or lying in bed, it is not applicable to scenarios where a person is moving. One solution to this problem is digital aperture synthesis. With the suitable selection of all transmit signal phases in an array or with a computational superposition of all receive signals of an array in a reconstruction algorithm, the radar signal can be focused on certain spatial areas. The most powerful variants are multiple-input multiple-output (MIMO) array apertures and synthetic aperture reconstruction algorithms [37], [38], by which a variety of different RTOFs is evaluated by many different antenna positions. This reconstruction process can be seen as an imaging process. By separating all scattering structures in the lateral dimension, an image of the target in lateral dimension is created. Commonly utilized MIMO radars employ the time-division multiplexing (referred to hereon as TDM-MIMO) principle, where each transmit antenna sequentially transmits its signal resulting in lower update (frame) rates and a decreased maximum unambiguous velocity. The unambiguous angle of a radar system is defined by the angle range where an unambiguous association of the target to a specific angle can be made. It is determined by the spatial spacing between antenna elements. To obtain a resolution in range, an impulse-shaped or modulated broadband radar signal is necessary.

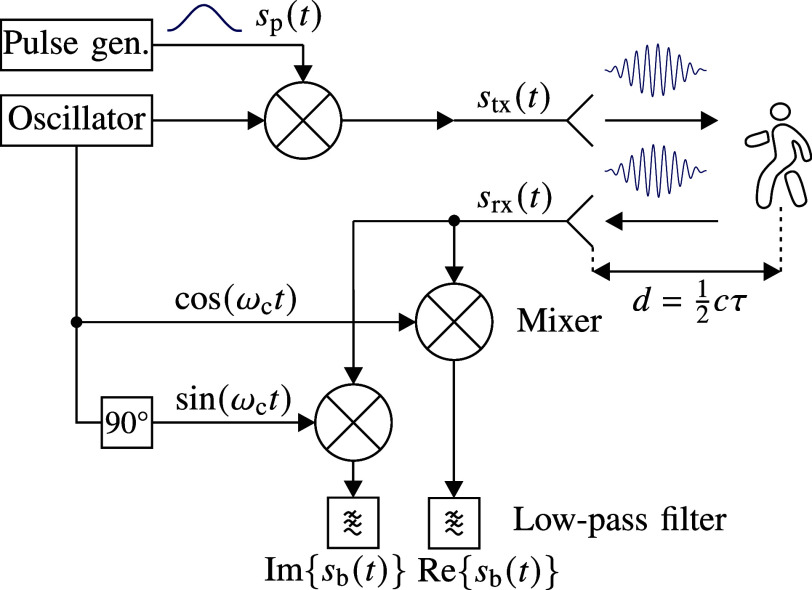

A comprehensible way to understand how radar ranging and target separation in range are done is to look at a radar system concept referred to as coherent impulse Doppler radar [15], which is depicted in Fig. 4. Compared to the simple Doppler setup in Fig. 2, a pulse generator that modulates the sinusoidal carrier signal with a pulse-shaped envelope $p(t)$ has been added. The impulse transmitted by the radar is given as follows:

$$s_{tx}(t) = A_{tx}\, p(t) \cos(\omega_c t + \varphi_0) \tag{11}$$

Fig. 4. Setup of a coherent impulse Doppler radar.

Using the same mathematics as before, the final baseband signal can be calculated as follows:

$$s_{bb}(t) = A_{bb}\, p(t - \tau)\, e^{j(\omega_c \tau + \varphi_c)} \tag{12}$$

If there are $N$ targets within the radar's detection range, the so-called radar echo profile is a superposition of the echoes of all $N$ reflecting structures, which all have different RTOFs:

$$s_{bb}(t) = \sum_{n=1}^{N} A_{bb,n}\, p(t - \tau_n)\, e^{j(\omega_c \tau_n + \varphi_{c,n})} \tag{13}$$

The echoes can be separated in range if their maxima are still recognizable from the superimposition of the envelopes, resulting in a distinct maximum for each target. It can be shown that the width of the envelope $p(t)$ is inversely proportional to the signal bandwidth $B$ of the baseband signal $s_{bb}(t)$ in (12). Thus, the radial range resolution $\Delta d$ of a radar system is given as follows [15]:

$$\Delta d \approx \frac{c}{2B} \tag{14}$$

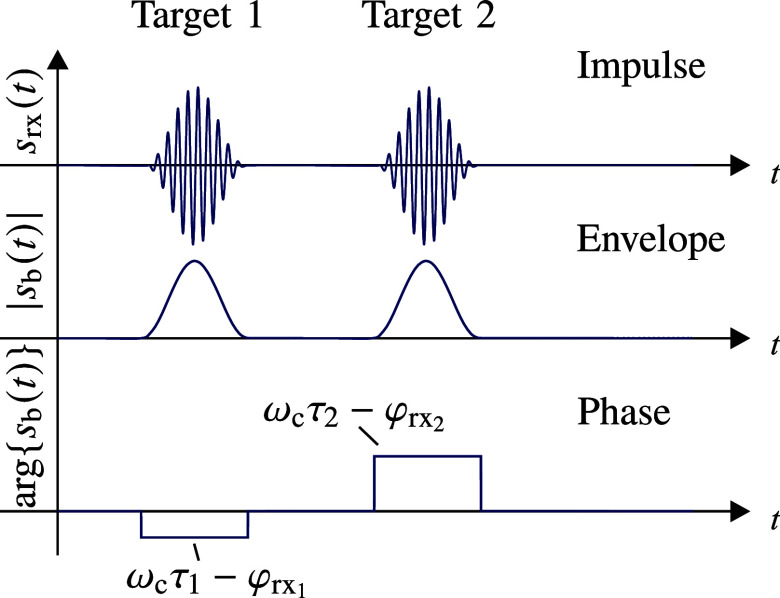

The exact value of the range resolution depends on the actual envelope shape and other signal properties. Furthermore, it becomes clear that CW radar systems do not offer any range resolution as they lack system bandwidth. Based on the above formula and also by looking at Fig. 5, it is obvious that the distance to the targets can be determined by evaluating the envelope positions alone. When the targets move, their echo envelopes move as well. When a successively measured sequence of echo profiles and the variation of the echo positions are plotted over time, this two-dimensional representation is called a radargram. A typical radargram from a person moving away with almost constant velocity from the radar is visualized in Fig. 3(b).

Fig. 5. Envelope and phase of an exemplary radar echo profile.

The unambiguous range that can be measured is determined by the pulse repetition interval, which is defined by the time delay between successive pulse emissions. A longer pulse repetition interval allows for an unambiguous association of the pulse with a specific distance. Therefore, in contrast to CW radars, an impulse Doppler radar offers an unambiguous range information that is proportional to the pulse repetition interval.
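The two range-related quantities discussed here can be put into numbers with a short sketch. It assumes the standard approximation $\Delta d \approx c/(2B)$ for the range resolution and an unambiguous range of $c\,T_{\mathrm{PRI}}/2$, i.e., the distance whose roundtrip time equals the pulse repetition interval. The symbol $T_{\mathrm{PRI}}$, the function names, and the example values (1 GHz bandwidth, 1 µs repetition interval) are assumptions for illustration.

```python
C = 299_792_458.0  # propagation speed (m/s)


def range_resolution(bandwidth_hz: float) -> float:
    """Approximate radial range resolution c / (2 B) for a signal bandwidth B."""
    return C / (2.0 * bandwidth_hz)


def unambiguous_range(pri_s: float) -> float:
    """Largest distance whose echo returns before the next pulse is emitted."""
    return C * pri_s / 2.0


if __name__ == "__main__":
    B = 1e9         # assumed signal bandwidth (Hz)
    T_pri = 1e-6    # assumed pulse repetition interval (s)
    print(f"range resolution : {range_resolution(B) * 100:.1f} cm")
    print(f"unambiguous range: {unambiguous_range(T_pri):.1f} m")
```

The two quantities are governed by different parameters: the bandwidth determines how closely spaced two targets may be, whereas the pulse repetition interval determines how far away a target may be.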

As we can derive from (13), each radar echo does not only have an envelope $p(t - \tau_n)$ but also a phase $\omega_c \tau_n + \varphi_{c,n}$. The phase is exactly the one discussed in the CW radar section. Thus, if sequential radar measurements are done, all of the above Doppler evaluations can also be performed. It is required that the measurements are performed in such a rapid succession that the sampling theorem for the highest occurring Doppler frequency is not violated. Otherwise, ambiguities in the velocity measurement may occur. When the range profile is plotted in one dimension and the STFT over the Doppler signature in the other dimension, this is called a range–Doppler diagram. A typical range–Doppler diagram is depicted in Fig. 3(c).

It is very important to note that the mentioned radar resolution values must be strictly distinguished from the technical terms “measurement accuracy” and “precision”. As soon as a target can be resolved, the measurement precision and especially the accuracy of the measured distance, angle, and velocity values are orders of magnitude better than the resolution limits in a well-designed and well-calibrated radar. The achievable accuracy can be estimated via the Cramér–Rao lower bound, which is influenced by the radar resolution; ultimately, however, it is limited only by the signal-to-noise ratio of the baseband signal [39], [40].

It should also be noted at this point that the coherent impulse Doppler radar principle is by far not the most frequently used concept in the field of medical technology. In this article, we chose this principle because it is the easiest to understand and allows us to derive common radar signal representations. The most commonly used commercial radar principle today is the frequency modulated continuous wave (FMCW) or stepped frequency continuous wave (SFCW) radar [15], [41]. If impulse radar systems are used in the medical area, very often, ultrawideband (UWB) systems are used, and the so-called sequential sampling impulse radar concept is applied [33], [42].

In simple terms, FMCW or SFCW radars use the same impulse response measurements as impulse radars; however, this does not happen in the time domain but in the frequency domain. Thus, a wide signal bandwidth is achieved by modulating the transmitted frequency as opposed to relying on short pulses, which also exhibit a high signal bandwidth when transformed into the frequency domain. From the system theory, it is known that one can switch back and forth between the two domains (i.e., system impulse response vs. system transfer function) at any time via a Fourier transformation. Thus, after Fourier transforming the baseband signal of an FMCW radar, one gets nearly the same echo profile as represented in (13). Since the evaluation of the distance to the target in FMCW radar takes place in the frequency domain, a frequency shift induced by the Doppler effect is superimposed on the signal. Therefore, Doppler effects must be considered already within a single measurement. This is the only small difference; however, it is relatively easy to take into account. If many successive FMCW measurements are carried out one after the other and evaluated together, it is called the FMCW chirp sequence radar or fast chirp radar method [43], [44]. In this case, the range–Doppler diagram can be computed efficiently from the two-dimensional Fourier transform of the raw radar data. In terms of resolution and sensitivity, which describes the radar's ability to detect small distance changes, this is one of the best-performing radar variants today. However, CW radars may outperform this type of radar in terms of sensitivity due to the lower noise contribution of their components.
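A minimal sketch of how a range–Doppler map follows from a two-dimensional Fourier transform of chirp-sequence data is given below. It uses a synthetic, noise-free single-target signal, neglects the range–Doppler coupling within a chirp mentioned above, and relies on a naive DFT from the standard library to stay self-contained; in practice, an FFT routine would be used. All names, array sizes, and bin positions are hypothetical.

```python
import cmath


def dft(x):
    """Naive discrete Fourier transform of a 1D sequence (O(N^2))."""
    n = len(x)
    return [sum(x[k] * cmath.exp(-2j * cmath.pi * i * k / n) for k in range(n))
            for i in range(n)]


def range_doppler_map(frame):
    """2D DFT magnitude of one frame, indexed as [doppler_bin][range_bin].

    frame[m][n] is the complex baseband sample n (fast time) of chirp m (slow time).
    """
    range_profiles = [dft(chirp) for chirp in frame]  # transform along fast time
    n_range = len(range_profiles[0])
    n_doppler = len(frame)
    # Transform along slow time, separately for every range bin.
    columns = [dft([row[r] for row in range_profiles]) for r in range(n_range)]
    return [[abs(columns[r][d]) for r in range(n_range)] for d in range(n_doppler)]


# Synthetic single target (hypothetical values): one range bin, one Doppler bin.
N_CHIRPS, N_SAMPLES = 8, 16
RANGE_BIN, DOPPLER_BIN = 5, 2
frame = [[cmath.exp(2j * cmath.pi * (RANGE_BIN * n / N_SAMPLES + DOPPLER_BIN * m / N_CHIRPS))
          for n in range(N_SAMPLES)]
         for m in range(N_CHIRPS)]

rd_map = range_doppler_map(frame)
peak = max(((d, r) for d in range(N_CHIRPS) for r in range(N_SAMPLES)),
           key=lambda dr: rd_map[dr[0]][dr[1]])
print("peak at (doppler_bin, range_bin) =", peak)
```

The peak of the map appears at the Doppler and range bins that were used to synthesize the target.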

If range resolution is combined with lateral resolution, it is even possible to obtain a 3D image of the target scene. In addition, as explained above, each voxel can be assigned a Doppler frequency or a micro-Doppler signature. This results in a multidimensional raw radar data representation in the form of a hypercube, which is referred to as radar cube. To offer a visual representation of the content of a radar cube, Fig. 3 provides a comprehensive overview. Each frame is equipped with information on the range, Doppler, and amplitude through a single antenna. By assessing the phase changes across multiple antennas, the system can also perform an angle estimation, as shown in Fig. 3(d). A comprehensive comparison of the common radar principles relevant to human motion detection is presented in Table I. For a general overview, the most important terms related to radar are summarized in Table II.

TABLE I. Comparison of Common Radar Concepts Used for Human Motion Detection: Continuous Wave (CW), Impulse Doppler, Frequency Modulated Continuous Wave (FMCW), Stepped Frequency Continuous Wave (SFCW) and Time-Division Multiplexing Multiple-Input Multiple-Output (TDM-MIMO) FMCW. Metrics are rated on a four-level scale (Excellent, High, Moderate, Low); some ratings depend on the number of transmit antennas.

| Property | 1) CW | 2) Impulse Doppler / FMCW / SFCW | 3) TDM-MIMO combined with FMCW |
|---|---|---|---|
| Velocity resolution | Yes | Yes | Yes |
| Range resolution | No | Yes | Yes |
| Angle resolution | No | No | Yes |
| Unambiguous velocity | | | (dependent on the number of transmit antennas) |
| Unambiguous range | | | |
| Sensitivity | | | |
| Update rate | | | (dependent on the number of transmit antennas) |

TABLE II. Overview of Technical Radar Terms and Definitions.

| Term | Definition |
|---|---|
| Doppler frequency | Instantaneous Doppler frequency averaged over a specific observation period for a target moving at constant speed |
| Doppler phase | The instantaneous phase of a radar echo |
| Doppler spectrogram | The representation of the change of velocity spectrum over time |
| Instantaneous Doppler frequency | The derivative of the Doppler phase |
| Micro-Doppler signature | The temporal course of the instantaneous Doppler frequency or Doppler phase characteristic for a moving object |
| Precision | Statistical variation of several measurements under identical conditions |
| Radargram | The representation of the change of radar echo profile over time |
| Radar accuracy | How close a measurement is to its true value |
| Radar Doppler spectrogram | The representation of the change of Doppler spectrum over time |
| Radar echo profile | The representation of the echo amplitude and/or phase over distance |
| Radar resolution | The ability to separate closely adjacent targets in range, angle, or Doppler dimension |
| Radar sensitivity | The ability to detect small distance changes |
| Range – angle profile | The two-dimensional display of the target distance (dim. 1) and the azimuth or elevation angle (dim. 2) |
| Radar cube | Radar data hypercube representation with its dimensions amplitude, range, azimuth/elevation angle, and Doppler |
| Range – Doppler profile | The two-dimensional display of the target distance (dim. 1) and the Doppler frequency (dim. 2) |
| Unambiguous range | The maximum distance that the radar is able to measure unambiguously |
| Unambiguous velocity | The maximum target speed that is unambiguously measurable by the radar |
| Update rate | The frequency at which the radar system provides a new measurement frame |

The radar cube thus contains an enormous amount of information. Most of the information relevant to biomedical measurements is contained in the micro-Doppler signatures. Unfortunately, this part is mostly inaccessible to human visual perception because our brain is primarily trained for two-dimensional optical imaging. Neural networks, however, provide a well-suited tool to comprehensively evaluate the multidimensional data treasure that radar technology offers. To better understand how ML can be used to extract meaningful information, we will introduce the fundamentals of ML and deep learning (DL) in the following section.

III. Machine Learning and Deep Learning Models

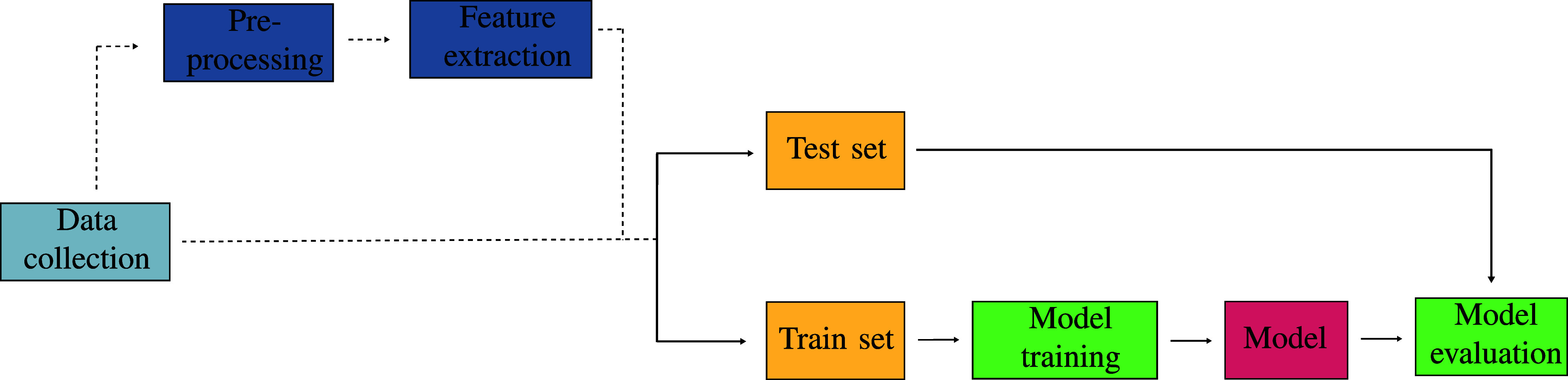

Due to the multidimensional nature of the underlying radar data, one of the most promising ways to process and evaluate them is the use of ML algorithms. Generally, ML is one sub-field of artificial intelligence (AI) that enables computers to learn and improve without being explicitly programmed [82]. This involves the development of algorithms that enable machines to make predictions or identify patterns based on data. The general approach, which is visualized in Fig. 6, includes data pre-processing, feature extraction, and classification [82]. As DL models can inherently learn features and pre-processing steps, this is represented by dashed lines. Conversely, classical ML approaches commonly require explicit pre-processing and expert feature engineering. These single steps will be explained in the following sections.

Fig. 6.

The typical ML pipeline consists of data collection and pre-processing, followed by feature extraction to enhance input features. As DL models can inherently learn features and pre-processing steps from raw sensor data, this is represented by dashed lines. Following this, the model is trained using the training data and evaluated on a separate test set.

A. Pre-Processing and Feature Extraction

The first crucial step in creating robust models is pre-processing the raw radar data. This should include filtering, noise suppression, and the handling of outliers, as well as dealing with missing data points and the synchronization of data streams. As raw sensor data is often complex and contains redundant information, the extraction of features containing relevant information for the specific task is essential. Expert knowledge plays a key role in this process as handcrafting proper features can enhance the models' ability to discover relevant patterns and to make accurate predictions [82].

Some ML algorithms rely on distance metrics to calculate the distance between different data points in an n-dimensional feature space. Those algorithms commonly suffer from reduced prediction performance when incorporating high-dimensional features. One reason for this is that data points become increasingly sparse in high-dimensional spaces, which makes meaningful distance calculations more difficult. Thus, dimensionality reduction techniques are required [61]. These can involve, among others, algorithms such as principal component analysis or feature selection methods that rank features according to their information content and redundancy (e.g., correlation between features, chi-square test, or mutual information) [82]. Furthermore, ensuring that the features are on a consistent scale is a critical aspect of feature engineering in distance-based algorithms. Scaling methods, such as min-max scaling or z-score normalization, mitigate the impact of features with different magnitudes [82].
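The two scaling methods named above can be sketched as follows. The feature values (a heart-rate-like feature in beats per minute) and the function names are hypothetical and serve only to show how features of very different magnitude are brought onto a common scale.

```python
from statistics import mean, pstdev


def min_max_scale(values):
    """Map values linearly to the interval [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:  # constant feature: no spread to scale
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def z_score(values):
    """Shift to zero mean and scale to unit (population) standard deviation."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:  # constant feature: no spread to scale
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


heart_rate_bpm = [60.0, 70.0, 80.0, 90.0]  # hypothetical feature values
print(min_max_scale(heart_rate_bpm))
print(z_score(heart_rate_bpm))
```

When a separate test set is used, the scaling parameters (minimum, maximum, mean, standard deviation) would usually be estimated on the training data only and then applied unchanged to the test data.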

B. Supervised Vs. Unsupervised Learning

To create ML models, training is required, which can be performed in a supervised or an unsupervised manner. For supervised learning, the correct output for the training data is known, and the model is trained to determine these outputs as accurately as possible. Therefore, to train supervised ML algorithms, each data point needs to have an associated label. During the training phase, the algorithm optimizes its parameters to minimize the disparities between its predictions and the actual target labels [83]. In unsupervised learning, this information is not available. Here, the primary use case is to discover patterns, relationships, and inherent trends within a dataset [83]. For most applications in radar-based biomedical monitoring, ML models are trained with radar data that is recorded in combination with ground truth data. Therefore, we focus on supervised ML in the rest of this section.

C. Classification or Regression Problems

In supervised learning, most ML algorithms can be used for classification or regression tasks. In classification, the outcome is represented as a discrete label, while regression tasks provide continuous output [83]. This continuous output can then be rounded to discrete values such as disease severity scales.
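As a small illustration of the rounding step, the following sketch maps a continuous regression output to the nearest grade of a discrete scale and clips it to the valid range. The five-level scale from 0 to 4 and the function name are hypothetical.

```python
def to_severity_grade(prediction, lowest=0, highest=4):
    """Round a continuous regression output to the nearest grade and clip it to the scale."""
    return min(max(round(prediction), lowest), highest)


for raw_output in (-0.3, 1.2, 2.7, 5.2):
    print(raw_output, "->", to_severity_grade(raw_output))
```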

In the context of biomedical monitoring, classification tasks are commonly used for diagnostic purposes, whereas regression tasks can help to monitor the disease progression by estimating the disease severity over time.

D. Choice of ML Algorithms

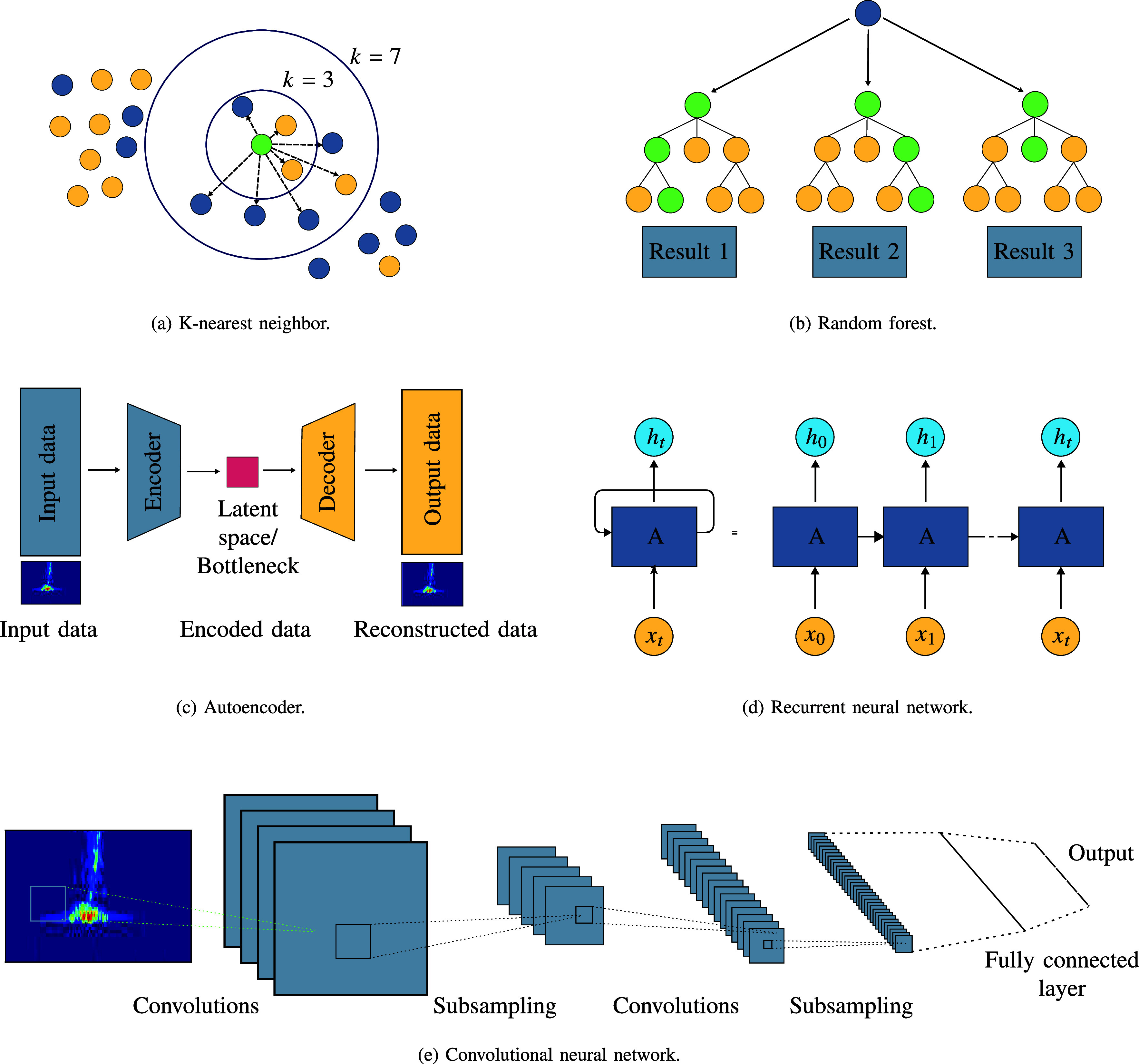

In simple terms, ML algorithms can be grouped into classical ML and DL techniques. The classical ML models typically have a simpler architecture with fewer parameters to optimize, while DL models can have a deep and hierarchical architecture with multiple layers. Because DL models are fundamentally inspired by the human brain, which is composed of interconnected neurons, these models are also referred to as neural networks. Each connection between the different neurons has an associated weight, which determines the impact of the connection [84]. Tables III and IV give an overview of frequently used classical ML and DL algorithms, while Fig. 7 displays a selection of particularly relevant classical ML algorithms (k-nearest neighbor (kNN) and random forest) and DL algorithms (autoencoder, long short-term memory (LSTM), and convolutional neural network (CNN)).

TABLE III. Frequently Used Classical ML Algorithms in Radar-Based Applications for Biomedical Monitoring With Relevant Papers in the Rightmost Column.

| Algorithm | Description | Relevant papers |
|---|---|---|
| K-nearest neighbors (kNN) | The kNN algorithm is a simple algorithm used mainly for classification tasks. It stores the labeled training data and assigns new data to the class with the majority among its k nearest neighbors. The classifier is mostly used due to its simplicity and short computation time. However, complex relationships are difficult to learn [45]. A schematic explanation of the algorithm can be found in Fig. 7(a). | [46], [47], [48], [49], [50] |
| Random forest | Random forests are frequently used ML algorithms for both regression and classification tasks. They combine multiple randomized decision trees, where the predictions are determined by a majority vote for classification tasks and by averaging for regression tasks [51]. A visual representation of the random forest algorithm is provided in Fig. 7(b). | [52], [53], [54], [55] |
| Naive Bayes | The naive Bayes algorithm is a probabilistic classification algorithm based on Bayes' theorem. Bayes' theorem is used to determine the probability of a label based on prior knowledge of conditions associated with the event. The term naive comes from simplifying the computation with the assumption that the input features are independent of each other [56]. | [49], [50], [53] |
| Logistic regression | Logistic regression is used for binary classification tasks, where the output is a categorical variable with two classes. As a decision based directly on a linear function is highly susceptible to outliers, it would not perform well in classification tasks. Therefore, the output values are fed to a sigmoid function that returns probabilities. Based on a pre-defined threshold, the classification is obtained [57]. | [49], [53], [58] |
| Linear regression | Linear regression is one of the simplest ML algorithms and is used to model a continuous output variable (dependent variable) based on one or more input features (independent variables) as a linear equation [59]. | [50], [60] |
| Support vector machine (SVM) | SVMs are used for classification tasks. The decision function of an SVM determines a linear or non-linear hyperplane that can be used to predict the class label for unseen data. In this process, the decision function aims to maximize the margin between the classes. As SVM classifiers typically work worse in high-dimensional feature spaces, feature selection methods should be applied [61]. The regression counterpart of an SVM is named support vector regression. | [49], [50], [53], [54], [55], [62] |

TABLE IV. Frequently Used DL Algorithms in Radar-Based Applications for Biomedical Monitoring With Relevant Papers in the Rightmost Column.

| Algorithm | Description | Relevant papers |
|---|---|---|
| Autoencoder | Autoencoders are neural networks that are used to efficiently process data. By introducing a bottleneck between the encoder and decoder structure, a low-dimensional representation is imposed that can be used for data compression, feature extraction, denoising, or data generation. Special attention needs to be paid to the bias-variance trade-off that balances the reconstruction and generalization capabilities [63]. | [64], [65] |
| Long short-term memory (LSTM) | LSTMs are a special type of recurrent neural network (RNN) focusing on long-term dependencies, making them suitable for sequence prediction tasks. The network commonly consists of three gates: the forget gate determines how much information from the previous time step is to be remembered; the input gate introduces new information from the current time step; and the output gate passes the updated information to the next time step [66]. | [54], [55], [67], [68], [69] |
| Convolutional neural network (CNN) | CNNs are feed-forward neural networks inspired by biological neurons, which are frequently used to find patterns in images, but also for classifying audio, time series, and other biosignal data. Typically, a CNN consists of different layers: convolutional layers with only local connections and pooling layers to perform downsampling and dimensionality reduction; this results in a smaller number of parameters and faster convergence. The fully connected layers at the end are used to specify the intended output [70]. | [55], [71] |
| U-Net | U-Net is a neural network based on a fully convolutional network with an encoder–decoder structure, which is commonly used for efficient medical image segmentation. By using skip connections from the encoder to the decoder part, it combines the high-level feature maps from the decoder and the corresponding low-level details from the encoder [72]. | [49] |
| Transformer | Transformers are neural networks for sequence-to-sequence tasks that are well suited to learning long-term dependencies. They address common problems of RNNs, such as vanishing gradients or the difficulty of maintaining old connections in long sequences, by introducing self-attention layers that weigh each element of the input sequence by its importance. Furthermore, as the attention layers are independent of each other, parallelization can be applied, reducing the duration of training [73]. One special type of Transformer is the Vision Transformer (ViT), in which input images, such as feature maps from radar data, are split into patches and linearly embedded to create a sequence of vectors. These are then further processed by Transformer encoder layers to capture both local and global relationships. The main advantages of ViTs are a better understanding of global context as well as scalability. Furthermore, as the self-attention maps can be overlaid with the input image, it becomes visible which regions of the image are considered most important. This makes it easier to understand and interpret the model's decisions [74]. | [75], [76], [77], [78], [79] |
| Multilayer perceptron (MLP) | MLPs are fully connected feed-forward neural networks used for regression and classification tasks. They consist of an input layer, an output layer, and one or more hidden layers. After each training step, the respective weights are updated using back-propagation to minimize a cost function. MLPs can handle large amounts of data and model complex non-linear relationships between input and output data [80]. | [75], [81] |

Fig. 7.

Frequently used ML algorithms: (a) K-nearest neighbor: The algorithm assigns new data points to the class with the majority of nearest neighbors; (b) Random forest algorithm: Predictions are determined by the combination of multiple decision trees. Frequently used DL algorithms: (c) Autoencoder: By inducing a low-dimensional representation between the encoder and decoder structure, feature extraction or data generation can be performed; (d) Recurrent neural network: By storing information from previous inputs, memory is introduced to learn long-term dependencies; (e) Convolutional neural network (CNN): Typically, a CNN consists of convolutional, pooling, and fully connected layers.

The choice of the most suitable ML algorithm depends on the specific problem, available data, and computational resources.

Classical ML algorithms commonly rely on handcrafted features extracted from the data, requiring expert domain knowledge to identify relevant features [82]. As explained in Section III-A, distance-based algorithms require dimensionality reduction in the pre-processing step to reach good prediction results. The training process of tree-based ML algorithms, characterized by decision-making through a hierarchical tree-like structure, typically incorporates inherent feature selection, making the model training less vulnerable to high-dimensional feature spaces [51], [82]. In contrast, the input data for DL algorithms are typically raw or only slightly processed, as the models can handle complex high-dimensional data such as images, audio, and text. Due to the multi-layered network architecture, DL algorithms are able to automatically learn relatively complex hierarchical features, making manual feature selection obsolete [85].
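To make the notion of a distance-based algorithm concrete, the following is a minimal sketch of a kNN classifier operating on handcrafted features. The two-dimensional feature vectors and the activity labels are hypothetical, and a Euclidean distance is assumed.

```python
import math
from collections import Counter


def knn_predict(train_x, train_y, query, k=3):
    """Assign the query to the majority class among its k nearest training points."""
    # Sort all training points by their Euclidean distance to the query.
    neighbors = sorted((math.dist(x, query), label) for x, label in zip(train_x, train_y))
    votes = Counter(label for _, label in neighbors[:k])
    return votes.most_common(1)[0][0]


# Hypothetical, already scaled features for two activity classes.
train_x = [(0.0, 0.0), (0.1, 0.2), (0.2, 0.1), (1.0, 1.0), (0.9, 1.1), (1.1, 0.9)]
train_y = ["rest", "rest", "rest", "walk", "walk", "walk"]

print(knn_predict(train_x, train_y, (0.15, 0.10)))
print(knn_predict(train_x, train_y, (1.00, 0.95)))
```

Because every feature contributes equally to the distance, such a classifier is directly affected by unscaled or redundant features, which is why scaling and dimensionality reduction matter for this family of algorithms.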

Another advantage of neural networks is their superiority in handling time-series data. While time dependency in classical ML models is typically induced by engineering time-dependent features that are incorporated into the models, there are DL architectures that were particularly designed to handle time-series data, such as recurrent neural networks (RNNs), including models such as LSTMs or gated recurrent units (GRUs) [66], [85].

However, the aforementioned advantage of DL models to learn complex relationships from (raw) data also bears a downside: The decision process of DL models is often less interpretable compared to classical ML algorithms, where feature engineering is typically performed using expert knowledge [86]. Thus, DL models are often referred to as “black-box models”. This can be troublesome for biomedical monitoring, where transparent decision-making is particularly important, and a lack of transparency can even prevent clinical application.

Because feature engineering in classical ML algorithms is guided by expert knowledge, the relevant patterns are already encoded in the features, which makes these algorithms effective for small- to medium-sized datasets. Their comparatively low complexity also makes classical ML algorithms suitable for tasks with limited computational resources, such as on-device or real-time applications. In contrast, DL algorithms often require substantial amounts of training data, which makes them computationally expensive and often requires special hardware, such as graphics processing units (GPUs) [84].

E. Training Process

ML models require a training process in which the internal model parameters are adjusted through optimization techniques. For most classical ML algorithms, this means optimizing their internal parameters to minimize an error between predictions and actual target labels.

Neural networks are trained using a process called back-propagation. This involves feeding input data into the network, calculating the output, comparing it with the actual output, and adjusting the weights to minimize the error. This process is repeated iteratively using optimization algorithms such as gradient descent [84].
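The iterative loop of forward pass, error computation, and weight update can be sketched for the simplest possible case, a single sigmoid neuron trained with gradient descent on a cross-entropy loss. For a multi-layer network, back-propagation applies the same chain-rule step layer by layer. The toy data, learning rate, and number of epochs are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_neuron(xs, ys, learning_rate=0.5, epochs=2000):
    """Gradient descent for one sigmoid neuron with weight w and bias b."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            error = sigmoid(w * x + b) - y  # gradient of the cross-entropy loss w.r.t. z
            grad_w += error * x             # chain rule: dz/dw = x
            grad_b += error                 # chain rule: dz/db = 1
        w -= learning_rate * grad_w / n
        b -= learning_rate * grad_b / n
    return w, b


def predict(w, b, x):
    return 1 if sigmoid(w * x + b) >= 0.5 else 0


xs = [0.0, 1.0, 2.0, 3.0]  # hypothetical one-dimensional feature
ys = [0, 0, 1, 1]          # binary target labels
w, b = train_neuron(xs, ys)
print([predict(w, b, x) for x in xs])
```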

To achieve generalizability, this training process should be performed on a designated subset of the dataset, which is referred to as the training set. The performance should then be evaluated on a separate test set that was never used in the training process to ensure generalizability and make the model suitable for real-world applications [82].

Due to complex data acquisition and labelling, biomedical radar datasets are often small. Therefore, the amount of data might be insufficient for establishing a single train-test split whose result does not heavily depend on which samples happen to fall into the training set. As this split is commonly performed randomly, the classification performance might vary considerably from one random split to another.

To mitigate this issue, cross-validation can be used, in which multiple train-test splits are conducted on the same dataset and the resulting prediction performance is averaged. This can help to diminish the impact of a single arbitrary split and to obtain a more robust result [82].
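A minimal sketch of k-fold cross-validation is shown below. The toy threshold classifier, the accuracy score, and the data are hypothetical stand-ins for an actual model and dataset. The folds are taken contiguously for brevity, whereas samples are often shuffled beforehand in practice.

```python
from statistics import mean


def k_fold_indices(n_samples, k):
    """Yield (train_idx, test_idx) for k contiguous, non-overlapping folds."""
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test_idx = list(range(start, start + size))
        train_idx = [i for i in range(n_samples) if i < start or i >= start + size]
        yield train_idx, test_idx
        start += size


def fit_threshold(xs, ys):
    """Toy model: decision threshold halfway between the two class means."""
    mean0 = mean([x for x, y in zip(xs, ys) if y == 0])
    mean1 = mean([x for x, y in zip(xs, ys) if y == 1])
    return (mean0 + mean1) / 2.0


def accuracy(threshold, xs, ys):
    return mean([1.0 if (x > threshold) == (y == 1) else 0.0 for x, y in zip(xs, ys)])


def cross_validate(xs, ys, k):
    """Train and evaluate once per fold and average the test scores."""
    scores = []
    for train_idx, test_idx in k_fold_indices(len(ys), k):
        model = fit_threshold([xs[i] for i in train_idx], [ys[i] for i in train_idx])
        scores.append(accuracy(model, [xs[i] for i in test_idx], [ys[i] for i in test_idx]))
    return mean(scores), scores


features = [0.10, 0.90, 0.20, 0.80, 0.15, 0.85]  # hypothetical feature values
labels = [0, 1, 0, 1, 0, 1]
mean_score, fold_scores = cross_validate(features, labels, k=3)
print(mean_score, fold_scores)
```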

If the dataset contains several recordings of the same participant, the train-test split should be conducted on a participant level to ensure that data from one participant are only in the training or test set, respectively. This helps to prevent information leakage from the test set into the training process. Furthermore, it is important to ensure that both train and test sets do not contain confounders [87]. For instance, if the control group primarily consists of young, healthy adults while the intervention group is predominantly composed of elderly individuals with Parkinson's disease, the model may learn to classify based on age rather than the intended target, which is Parkinson's disease.
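A participant-level split can be sketched as follows: the split is drawn over participant identifiers rather than over individual recordings, so that no participant contributes data to both sets. The identifiers, the test fraction, and the fixed random seed are hypothetical.

```python
import random


def participant_split(participant_ids, test_fraction=0.25, seed=0):
    """Return (train_idx, test_idx) such that no participant appears in both sets."""
    unique_ids = sorted(set(participant_ids))
    random.Random(seed).shuffle(unique_ids)  # shuffle participants, not recordings
    n_test = max(1, round(len(unique_ids) * test_fraction))
    test_participants = set(unique_ids[:n_test])
    train_idx = [i for i, p in enumerate(participant_ids) if p not in test_participants]
    test_idx = [i for i, p in enumerate(participant_ids) if p in test_participants]
    return train_idx, test_idx


# Two recordings per participant (hypothetical identifiers).
recording_participants = ["P1", "P1", "P2", "P2", "P3", "P3", "P4", "P4"]
train_idx, test_idx = participant_split(recording_participants)
print("train:", train_idx, "test:", test_idx)
```

A split of this kind addresses information leakage only; balancing potential confounders such as age between the groups has to be checked separately.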

IV. Radar Data Representations and Their Applications in Biomedical Monitoring Using Machine Learning

Raw radar data are complex time series, which are often multidimensional. As mentioned in Section II, even comparatively simple monostatic radar setups in which the transmitter and receiver are co-located, such as FMCW or pulse-Doppler, already yield a three-dimensional radar data cube that consists of the dimensions frames, range, and Doppler (see Fig. 3). For pure CW radars, a range determination is not possible due to the lack of signal bandwidth. However, the Doppler dimension – i.e., the occurring velocity components – can still be measured excellently. In the case of a MIMO setup, which provides additional spatial information, the radar cube gains an antenna dimension and becomes 4D, with the amount of data multiplied by the number of antennas. By arranging the antennas along two orthogonal spatial directions, the antenna dimension can be further split into two dimensions to facilitate processing. The radar data cube then becomes a 5D hypercube.

A. Choice of Radar Data Representation as ML Input

While raw radar data can be directly used as an input for ML models, further signal processing steps can also be applied to extract relevant information from the raw data. Feeding raw radar data directly into an ML network may be quite challenging, as it can lead to a very large and complex network. One reason for this is the high dimensionality of the radar data, which leads to many input parameters and, therefore, more weights. If the raw data also contain complicated patterns that the network has to learn, it may need more parameters to represent these relationships. On the one hand, such large and complex network architectures may be more powerful, as they have access to all the available information. On the other hand, they are more susceptible to training errors, may not converge properly, and may lead to unacceptable training times. For that reason, often only a moderate fraction of the radar cube is fed directly into ML networks, for instance, as 1D or 2D data. While reducing the data dimension is a valid method, it can also lead to the loss of relevant information. If, for instance, only the velocity components are analyzed over time and the spatial dimensions are combined into one dimension, a local separation of the occurring signals can no longer be achieved. Thus, it is no longer possible to detect in which area of the considered scenery the velocity components are present, and only the ensemble of all components is available. If the location or position of the signal to be measured is already known before the measurement, or if the radar's field of view is restricted accordingly in advance, it is largely sufficient to use the strongest occurring measurement signal. However, the task becomes much more difficult if velocity components additionally have to be resolved spatially. In such cases, depending on the use case, it can be advantageous to feed raw radar data directly into an ML framework. In the following section, we summarize different approaches from previous work that use raw radar data.
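The loss of spatial separation caused by collapsing a dimension can be made concrete with a toy example. Two different scenes, each with one slow and one fast target at swapped ranges, yield the same Doppler profile once the range dimension is summed out. The frame sizes and target positions are hypothetical.

```python
def collapse_range(frame):
    """Sum a range-Doppler frame over range: frame[range][doppler] -> [doppler]."""
    n_doppler = len(frame[0])
    return [sum(row[d] for row in frame) for d in range(n_doppler)]


def make_frame(targets, n_range=4, n_doppler=3):
    """Create an empty frame and set unit amplitude at each (range_bin, doppler_bin)."""
    frame = [[0.0] * n_doppler for _ in range(n_range)]
    for range_bin, doppler_bin in targets:
        frame[range_bin][doppler_bin] = 1.0
    return frame


scene_a = make_frame([(0, 0), (3, 2)])  # slow target near, fast target far
scene_b = make_frame([(3, 0), (0, 2)])  # slow target far, fast target near

print(collapse_range(scene_a))
print(collapse_range(scene_b))
```

Both scenes produce the same collapsed profile, so a model that only receives this reduced input cannot tell them apart.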

B. Applications Using Raw Radar Data

Several examples in the literature have extracted small body movements from raw radar data to predict vital signs, such as respiration and heart sounds, which are mostly recorded with the radar phase profile over time. For instance, Khan et al. [88] used raw radar data for the contactless monitoring of photoplethysmography (PPG) by measuring chest and heart movements. To achieve this, they used a self-attention DL network with an encoder–decoder structure to generate a prediction for the PPG waveform. Shi et al. [67] predicted heart rate variability (HRV) by feeding raw radar data into a bidirectional LSTM network. In addition, the phase was evaluated to record small body vibrations.

The extraction of vital signs was also utilized in other applications. For instance, several approaches for classifying sleep phases from raw radar data (also referred to as “sleep staging”) were presented [48], [53], [68]. In these works, biosignals were extracted from radar sensors placed next to the bed, and different ML and DL algorithms were applied to predict individual sleep phases. In a different application, Ha et al. [52] proposed a contactless stress monitoring setup using an FMCW radar. HRV, respiration, and expert movement features were extracted from the raw radar signal and used to predict the stress level using a random forest-based approach.

Lai et al. presented a model to classify sleep postures using different DL models. They used IR-UWB radar data from three perspectives and resized the data to images after several pre-processing and denoising steps. They found that the vision transformer in combination with the radars from the side and top reached the best performance [78].

A fall detection application is presented by Hanifi et al. [50] where time- and frequency-domain features computed from raw radar data were fed to different traditional ML models. Another application using radar technology was proposed by He et al. [49], in which the blood oxygen levels of participants were estimated from radar-based respiration measurement using a bidirectional transformer and U-Net approach. The detection and assessment of Parkinson's disease was presented by Yang et al. [89]. Here, a customized DL network architecture was used to extract nocturnal breathing signals from radar data.

The aforementioned multidimensional comprehensiveness of raw radar data makes it difficult to interpret all at once, even for radar experts. Therefore, other representations of radar data are often preferred, such as 2D sectional images or heatmaps. These representations of the multidimensional radar cube are easier to understand and interpret and allow one to gain more information about the scenery. In the past, certain representations have been shown to be more suitable than others for extracting the target information. Therefore, it is necessary to choose the right representation carefully. In the following sections, the most prevalently used sectional images are presented. In addition, we introduce examples from the literature that apply appropriate ML algorithms to the respective sectional images.

C. Applications Using Doppler Spectrograms

Doppler spectrograms (see Table II) are widely used for motion recognition, as radars usually provide an excellent velocity resolution, and even a relatively simple monostatic CW radar is sufficient to acquire this information. Specific motions result in unique so-called micro-Doppler signatures, which are also explained in Table II. An exemplary Doppler spectrogram is given in Fig. 3(a). Previous work has used Doppler spectrograms for analyzing different types of movements, from large movements or gestures of people [54], [64], [75], [90], [91] to their smaller movements, such as chest movements induced by respiration and heart beats [92], [93].
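A Doppler spectrogram can be sketched as a short-time Fourier transform (STFT) of the complex baseband signal. The example below uses a synthetic signal whose Doppler frequency changes halfway through the recording, a Hann window, and a naive DFT from the standard library. The window length, hop size, and frequency bins are hypothetical, and an FFT routine would be used in practice.

```python
import cmath
import math


def stft_magnitude(signal, window_len, hop):
    """Short-time Fourier transform magnitude, indexed as [time_frame][frequency_bin]."""
    window = [0.5 - 0.5 * math.cos(2 * math.pi * n / window_len) for n in range(window_len)]
    frames = []
    for start in range(0, len(signal) - window_len + 1, hop):
        segment = [signal[start + n] * window[n] for n in range(window_len)]
        spectrum = [abs(sum(segment[n] * cmath.exp(-2j * cmath.pi * k * n / window_len)
                            for n in range(window_len)))
                    for k in range(window_len)]
        frames.append(spectrum)
    return frames


WINDOW = 16
# Synthetic baseband signal: Doppler bin 2 in the first half, bin 5 in the second half.
signal = [cmath.exp(2j * cmath.pi * (2 if n < 32 else 5) * n / WINDOW) for n in range(64)]

spectrogram = stft_magnitude(signal, WINDOW, hop=WINDOW)
peak_bins = [frame.index(max(frame)) for frame in spectrogram]
print(peak_bins)
```

The strongest frequency bin per time frame follows the change in Doppler frequency, which is the kind of temporal pattern that a micro-Doppler signature captures.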

Furthermore, several methods have been proposed that use Doppler spectrograms as input data for ML algorithms. For instance, Dey et al. [75] converted the magnitude of time-range plots and Doppler spectrograms to RGB images and applied a vision transformer to perform fall classification. Huan et al. extracted features from the micro-Doppler map via feature pyramid extraction and used a lightweight hybrid vision transformer for the classification of various daily life tasks and fall detection [76]. Jokanovic et al. [64] converted the Doppler spectrogram to a gray-scale image and applied stacked autoencoders for feature extraction. Based on these features, they classified fall events using softmax regression. In contrast, He et al. [49] manually extracted different features from time-range and Doppler spectrograms and compared different state-of-the-art ML classifiers to detect fall events. Additionally, Seifert et al. [46] extracted features from Doppler spectrograms based on a sum-of-harmonics analysis and used a nearest neighbor model to classify gait abnormalities. In [92], Yamamoto et al. used Doppler spectrograms as the input for a convolutional LSTM network to detect heart beats.

D. Applications Using Range – Doppler Images

Range – Doppler images simultaneously provide information about the distance and the velocity of the radar targets. Similar to Doppler spectrograms, only a monostatic setup is necessary to create a range – Doppler image; however, the radar must additionally acquire range information. Range – Doppler images do not inherently contain time dependency or information about the angle of the target relative to the radar. They are widely used for scene interpretation (e.g., in automotive radar or in object and person detection), since the resolution of range – Doppler images is usually higher than the angle resolution, even for low-cost radars. An example of a range – Doppler image is depicted in Fig. 3(b).
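A common way to compute such an image for a chirp-sequence FMCW radar is a 2D FFT over fast time (range) and slow time (Doppler). The sketch below assumes an idealised, noisy point target and hypothetical radar parameters (60 GHz carrier, 1 GHz bandwidth, 100 µs chirps). It neglects range migration and is meant only to illustrate the principle.

```python
import numpy as np

C = 3e8  # speed of light in m/s

def range_doppler_map(chirps):
    """Magnitude range-Doppler map from one frame of FMCW beat signals.

    chirps: complex array of shape (n_chirps, n_samples), i.e. slow time
    along axis 0 and fast time along axis 1.
    """
    n_chirps, n_samples = chirps.shape
    window = np.outer(np.hanning(n_chirps), np.hanning(n_samples))
    range_fft = np.fft.fft(chirps * window, axis=1)      # fast time -> range
    doppler_fft = np.fft.fft(range_fft, axis=0)          # slow time -> Doppler
    return np.abs(np.fft.fftshift(doppler_fft, axes=0))  # zero velocity centred

# Hypothetical radar parameters (illustrative assumptions only)
fc, bandwidth, t_chirp = 60e9, 1e9, 100e-6
n_chirps, n_samples = 64, 128
wavelength = C / fc
fs = n_samples / t_chirp

# Idealised point target: 1.5 m away, radial velocity 1.5625 m/s
target_range, target_velocity = 1.5, 1.5625
f_beat = 2 * target_range * bandwidth / (C * t_chirp)  # beat frequency in Hz
f_doppler = 2 * target_velocity / wavelength           # Doppler shift in Hz
fast_t = np.arange(n_samples) / fs
slow_t = np.arange(n_chirps) * t_chirp
rng = np.random.default_rng(0)
noise = 0.1 * (rng.standard_normal((n_chirps, n_samples))
               + 1j * rng.standard_normal((n_chirps, n_samples)))
phase = f_beat * fast_t[None, :] + f_doppler * slow_t[:, None]
chirps = np.exp(2j * np.pi * phase) + noise

rd_map = range_doppler_map(chirps)
range_axis = np.arange(n_samples) * C / (2 * bandwidth)
velocity_axis = np.fft.fftshift(np.fft.fftfreq(n_chirps, d=t_chirp)) * wavelength / 2
d_idx, r_idx = np.unravel_index(np.argmax(rd_map), rd_map.shape)
est_range, est_velocity = range_axis[r_idx], velocity_axis[d_idx]
```

The strongest cell of `rd_map` gives the range and radial velocity of the simulated target. The sign of the velocity axis depends on the phase convention, which is an assumption of this example.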

Bhavanasi et al. [55] used range – Doppler images as an input for a CNN-based network structure together with a softmax classifier to perform patient activity recognition in a hospital environment. Time-integrated range – Doppler images were used in Erol et al.’s [62] study for human motion classification. From gray-level representations, they extracted 13 different statistical features and performed the classification using a support vector machine (SVM). Zhao et al. [77] used range – Doppler images measured by five UWB radar systems to classify nine different activities in arbitrary directions. To this end, they applied a vision transformer, whose initial results fell short of those of CNN algorithms. However, they indicated that vision transformers might generalize better to unseen individuals.

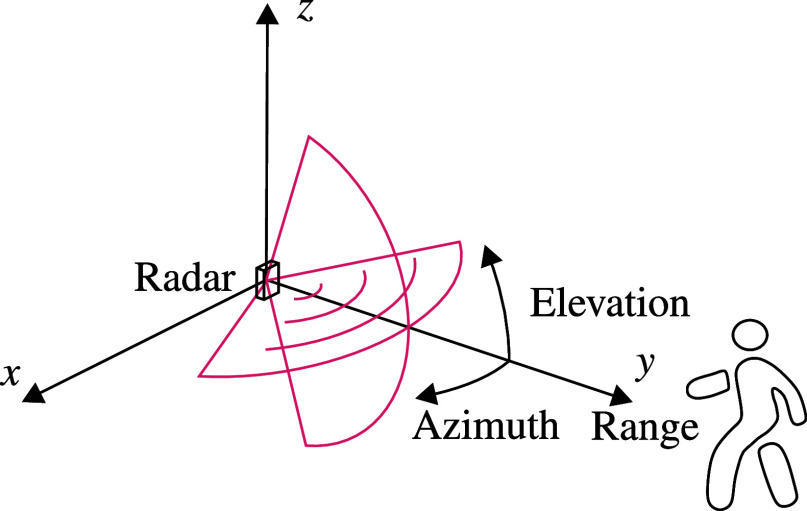

E. Applications Using Range – Angle Images and Spatial Heatmaps

If several antennas (e.g., MIMO arrays) are used instead of just one, the scene can also be resolved spatially. In general, spatial resolution improves with increasing aperture size and, in particular, with the number of antennas used. To generate spatially resolved images from raw radar data, the range and antenna dimensions are required because the change of the phase across the antennas needs to be evaluated. The resulting data are angle dependent and provide information about the angular position of the radar echo relative to the radar. Therefore, 2D sectional images can be created using the dimensions range and angle (e.g., azimuth and/or elevation; see Fig. 1), as depicted in Fig. 3(d). The images can be converted into spatial heatmaps in Cartesian coordinates, providing the target's position in x, y, and z coordinates. Range – angle images or heatmaps do not inherently contain information about time dependency and velocity; they are primarily employed to obtain the position of radar echoes in the room. For instance, Yue et al. [81] used radar range – angle images to estimate human poses. A combination of range – Doppler, range – angle, and Doppler-angle images was used in [94] to automatically detect activities of daily living within a palliative care environment.
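The evaluation of the phase across antennas can be sketched with a second FFT along the antenna dimension. The example below assumes a uniform linear array with half-wavelength spacing, a single noise-free point target, and hypothetical FMCW parameters. Real MIMO processing (virtual array construction, calibration) is omitted, and the angle sign convention is an assumption.

```python
import numpy as np

C = 3e8  # speed of light in m/s

def range_angle_map(frame, n_angle_bins=64):
    """Magnitude range-angle map from one chirp of a uniform linear array.

    frame: complex array of shape (n_antennas, n_samples).
    """
    n_samples = frame.shape[1]
    range_fft = np.fft.fft(frame * np.hanning(n_samples), axis=1)  # -> range
    angle_fft = np.fft.fft(range_fft, n=n_angle_bins, axis=0)      # zero-padded -> angle
    return np.abs(np.fft.fftshift(angle_fft, axes=0))

# Hypothetical parameters (illustrative assumptions only)
fc, bandwidth, n_samples, n_antennas = 60e9, 1e9, 128, 8
wavelength = C / fc
spacing = wavelength / 2

# Idealised point target at 1.5 m and 30 degrees azimuth
target_range, target_angle_deg = 1.5, 30.0
beat_cycles = 2 * target_range * bandwidth / C                 # beat cycles per chirp
spatial_freq = spacing / wavelength * np.sin(np.deg2rad(target_angle_deg))
n = np.arange(n_samples)[None, :]
k = np.arange(n_antennas)[:, None]
frame = np.exp(2j * np.pi * (beat_cycles * n / n_samples + spatial_freq * k))

ra_map = range_angle_map(frame)
range_axis = np.arange(n_samples) * C / (2 * bandwidth)
sin_theta = np.fft.fftshift(np.fft.fftfreq(ra_map.shape[0])) * wavelength / spacing
angle_axis_deg = np.rad2deg(np.arcsin(np.clip(sin_theta, -1.0, 1.0)))

a_idx, r_idx = np.unravel_index(np.argmax(ra_map), ra_map.shape)
est_range, est_angle_deg = range_axis[r_idx], angle_axis_deg[a_idx]

# Conversion of the detected echo from polar to Cartesian coordinates
x = est_range * np.sin(np.deg2rad(est_angle_deg))
y = est_range * np.cos(np.deg2rad(est_angle_deg))
```

Applying the same polar-to-Cartesian conversion to every cell of `ra_map` and resampling onto a regular grid would yield a spatial heatmap. With only eight antennas the angular main lobe is broad, which illustrates why angle resolution is typically coarser than range resolution.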

Fig. 1.

Radar coordinate system: The red area determines the field of view of the radar system.

V. Study Planning and Data Acquisition

A planned data acquisition needs to be tailored to the specific motion and ML task. Different radar types have advantages and disadvantages (as listed in Table I). For example, in the analysis of microscopic motion, CW radars are sufficient, as they make the continuous tracking of small distance changes possible, and the small system bandwidth allows for a higher signal-to-noise ratio (SNR) compared to broadband systems. On the other hand, capturing the range information of macroscopic motion usually requires different signal shapes (e.g., impulse Doppler, FMCW, or SFCW). To also retrieve angle information, multiple antennas need to be used. When multiple body parts are involved in the motion, range and angle resolution help to distinguish between the different targets. However, in TDM-MIMO radars, the maximum frame rate is inversely proportional to the number of transmit antennas, which restricts unambiguous Doppler (velocity) measurements and, therefore, the types of movement that can be captured.
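The effect of TDM-MIMO on the measurable velocity range can be estimated with the standard relation for chirp-sequence radars, v_max = λ / (4 · N_tx · T_c), where T_c is the chirp repetition interval and N_tx the number of time-multiplexed transmit antennas. The short sketch below uses this relation under the assumption that each transmitter fires once per TDM cycle and that no Doppler disambiguation technique is applied. The carrier frequency and chirp interval are hypothetical.

```python
def max_unambiguous_velocity(wavelength_m, chirp_interval_s, n_tx=1):
    """Maximum unambiguous radial velocity (m/s) of a chirp-sequence radar.

    With TDM-MIMO, each transmitter repeats only every n_tx chirp intervals,
    so the effective Doppler sampling interval is n_tx * chirp_interval_s.
    """
    return wavelength_m / (4.0 * n_tx * chirp_interval_s)

wavelength = 3e8 / 60e9  # hypothetical 60 GHz radar
v_single = max_unambiguous_velocity(wavelength, 100e-6, n_tx=1)
v_tdm3 = max_unambiguous_velocity(wavelength, 100e-6, n_tx=3)
```

For these assumed values, a single transmitter allows radial velocities up to 12.5 m/s, whereas three time-multiplexed transmitters reduce the limit to roughly 4.2 m/s. This is still sufficient for respiration or gait but should be checked against the fastest limb movements expected in a study.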

The data acquisition procedure is also influenced by the choice of the ML model, as the amount of the required training data strongly depends on the number of trainable parameters within the model. Therefore, classical ML typically requires less training data than DL [95]. Furthermore, even with sufficient data, DL does not necessarily outperform classical ML [96].

Most previous radar – ML studies focused on supervised learning, necessitating labeled training data. Whenever feasible, it is advisable to gather synchronized ground truth reference data. This facilitates the potential for automatic labeling but can also serve as a basis for manual labeling. For instance, in a gait analysis, the participants were given the task of performing specific movement patterns [47], [54], [58], [65]. In contrast, sleep stages were labeled based on gold standard PSG recordings, either by experts [60], [68] or through software [48]. Further, precise time synchronization between radar sensors and reference systems needs to be adequately addressed. Long-term synchronization can be achieved by recording a synchronization signal in both the gold standard and target signal [67] or by implementing a synchronization invariant loss function [88]. If the ML task allows it, rough synchronization – e.g., using time-stamps – can be sufficient [60].
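One possible way to exploit a synchronization signal recorded in both systems is to estimate the time offset between the two recordings by cross-correlation. The sketch below illustrates this idea on synthetic data. It is not the procedure of the cited studies, it assumes that both recordings share the same sampling rate, and the signal names and sampling rate are hypothetical.

```python
import numpy as np

def estimate_lag(reference, target):
    """Number of samples by which `target` is delayed relative to `reference`."""
    ref = reference - np.mean(reference)
    tgt = target - np.mean(target)
    xcorr = np.correlate(tgt, ref, mode="full")
    # Index 0 of the full cross-correlation corresponds to lag -(len(ref) - 1)
    return int(np.argmax(xcorr)) - (len(ref) - 1)

# Synthetic example: the same sync signal appears 37 samples later in the radar channel
rng = np.random.default_rng(42)
fs = 100.0                       # hypothetical common sampling rate in Hz
sync = rng.standard_normal(500)  # sync signal as seen by the reference system
true_delay = 37
radar_channel = np.concatenate([np.zeros(true_delay), sync])[:len(sync)]
radar_channel = radar_channel + 0.05 * rng.standard_normal(len(sync))

lag_samples = estimate_lag(sync, radar_channel)
lag_seconds = lag_samples / fs
```

The estimated lag can then be used to shift the reference labels onto the radar time axis. For long recordings, repeating the estimate at several points in time would also reveal clock drift between the devices.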

Recording a large amount of labeled training data is not necessary for unsupervised or self-supervised ML tasks. For example, by utilizing deep contrastive learning, Chen et al. [97] separated radar responses caused by vital signs from signal components generated by macroscopic motions. However, it is worth noting that a significant portion of published work relies on supervised learning, emphasizing the critical role of generating high-quality training data.

The generation of sufficient training data can be challenging. This primarily stems from the effort associated with labeling and the need for a data set that represents the complexities and diverse scenarios of real-world biomedical conditions as well as possible. In this regard, radar simulations are a vital approach, as they allow automatic labeling, while the variability within the simulation model enhances the diversity of the generated data [94], [98], [99]. Furthermore, with this approach, unavailable radar hardware can be simulated, aiding in the identification of the most suitable radar sensor for the respective biomedical application.

VI. Ethical Analysis of Machine Learning-Enabled Radar-Based Biomedical Monitoring

While numerous papers have examined the ethics of using ML for biomedical monitoring, ethical investigations into radar-based ML applications for monitoring are still scarce. The ethics of these technologies currently faces numerous methodological and application-related questions. Although the basic premise that this emerging technology should be used in a way that serves people seems clear, it quickly becomes unclear what this means in concrete terms. Central to this is the development of ethical criteria that are both context sensitive and clearly evaluable.

So far, the ethics of ML have been very much focused on data processing and data use [112], [113]. There has not been an equal focus on the methods and modes of data acquisition. However, when employing radar-based systems for biomedical monitoring, the new forms of data acquisition pose important ethical questions. This is why our ethical analysis focuses in particular on the new data acquisition procedures. On the one hand, forms of data collection that involve physical contact with the body of the respective person can lead to uncomfortable experiences. Radar-based applications, on the other hand, present a form of data collection without physical contact. Some scholars, such as Fioranelli and Le Kernec [114], highlight the enormous advantages that such systems may offer by not compromising the bodily integrity of patients: “The advantage of radar sensing comes from its contactless and non-intrusive nature. The subjects do not need to wear or carry or interact with devices, which can be an advantage for users' compliance, especially for those affected by cognitive impairments. Furthermore, radar sensors do not generate plain images or videos of people and their environments, which can be an advantage for users' acceptance in term of privacy.” [114]. At the same time, however, the question arises whether radar-based applications are really less impairing if they measure in a contactless and, in a sense, invisible manner, which makes transparency about the measurement and the time of measurement an additional challenge.

We conducted a narrative literature review [115] to examine the ethical criteria discussed in the literature concerning radar-based systems. We utilized the ethics principles of AI discussed in Jobin et al.'s [100] study and assessed which of these are discussed with respect to radar-based systems. We developed the following search strategy: intitle:radar AND intitle:biomedical AND [ethical principle]. We used the term “biomedical” to restrict the results to radar-based systems that are used for data collection in biomedicine and to rule out papers on radar systems that have applications in non-medical and non-human industrial contexts (e.g., weather radars). Jobin et al. [100] identified a total of 11 different ethical principles. As ethical principles are not always referred to by the same phrase, we also included their synonyms defined by Jobin et al. [100]. For instance, in the case of the ethical principle of transparency, the search strategy looks as follows: intitle:radar AND intitle:biomedical AND (transparency OR explainability OR explicability OR understandability OR interpretability OR communication OR disclosure OR showing). If the scope of the terms was too large because verb forms were also included or the synonyms of the ethical principle contained more than one word, quotation marks were used (e.g., for “communication” or “show”). The database search was conducted on Google Scholar on September 29–30, 2023.
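The construction of the search strings can be reproduced with a few lines of code. The following sketch is an illustration only: the function name is hypothetical, and it covers only the quoting of multi-word synonyms, not the additional quoting that was applied to exclude verb forms.

```python
def build_query(synonyms):
    """Assemble a search string for one ethical principle and its synonyms."""
    # Multi-word synonyms are put in quotation marks so they are searched as phrases
    terms = [f'"{term}"' if " " in term else term for term in synonyms]
    return "intitle:radar AND intitle:biomedical AND (" + " OR ".join(terms) + ")"

transparency_query = build_query([
    "transparency", "explainability", "explicability", "understandability",
    "interpretability", "communication", "disclosure", "showing",
])
privacy_query = build_query(["privacy", "personal or private information"])
```

For the transparency principle, this reproduces the search string given above. For privacy, the multi-word synonym is quoted so that the word "or" inside the phrase is not mistaken for a search operator.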

The results of our narrative literature search are summarized in Table V. In total, we found  papers that contained at least one of the ethical principles mentioned by Jobin et al. [100] and radar-based biomedical monitoring. Papers that matched more than one ethical principle are listed in each matching category.

TABLE V. Results of the Search for the Employment of Ethical Principles According to [100] in the Context of Radar-Based Systems for Biomedical Monitoring.

| Overview of Ethical Principles | | | |
|---|---|---|---|
| Ethical principle | Included terms (cf. Jobin et al. [100]) | Results | Especially relevant papers |
| Transparency | Transparency, explainability, explicability, understandability, interpretability, communication, disclosure, showing | | |
| Justice and fairness | Justice, fairness, consistency, inclusion, equality, equity, (non-)bias, (non-)discrimination, diversity, plurality, accessibility, reversibility, remedy, redress, challenge, access and distribution | | Detection bias due to psychological stress can be eliminated [101], diversity [102], accessibility [102] |
| Non-maleficence | Non-maleficence, security, safety, harm, protection, precaution, prevention, integrity (bodily or mental), non-subversion | | Safety [102], [103], prevention [104], [105], prevention [106] |
| Responsibility | Responsibility, accountability, liability, acting with integrity | | |
| Privacy | Privacy, personal or private information | | Privacy [101], [102], [104], [107] |
| Beneficence | Benefits, beneficence, well-being, peace, social good, common good | | Benefit [102], [105], [108], [109], [110], [111] |
| Freedom and autonomy | Freedom, autonomy, consent, choice, self-determination, liberty, empowerment | | |
| Trust | Trust | | |