Abstract

COVID-19 poses a global health crisis, necessitating precise diagnostic methods for timely containment. However, accurately delineating COVID-19-affected regions in lung CT scans is challenging because of contrast variations and substantial texture diversity. This study therefore introduces a novel two-stage classification and segmentation CNN approach for analyzing COVID-19 lung radiological patterns. In the classification stage, a novel Residual-BRNet integrates boundary and regional operations with residual learning to capture key COVID-19 radiological features: homogeneous regions, texture variations, and structural contrast patterns. In the second stage, CT images classified as infected undergo lesion segmentation with the newly proposed RESeg segmentation CNN. RESeg uses both average and max-pooling to simultaneously learn region homogeneity and boundary-related patterns. Furthermore, novel pixel attention (PA) blocks are integrated into RESeg to improve the segmentation of mildly infected regions. In the classification stage, Residual-BRNet achieves an accuracy of 97.97%, an F1-score of 98.01%, a sensitivity of 98.42%, and an MCC of 96.81%. In the segmentation stage, PA-RESeg achieves an IoU of 98.43% and a Dice similarity score of 95.96% for the lesion region. These results highlight the framework's potential for detecting and segmenting COVID-19 lesions in clinical applications.

Keywords: COVID-19, CT scan, CNN, region, boundary, residual, transfer learning, classification, segmentation

1. Introduction

COVID-19 emerged in Wuhan, China, spread rapidly in early 2020 [1], and continues to affect populations worldwide [2]. Cumulative global figures stand at approximately 705 million cases and 7 million deaths. The vast majority of cases, about 99.6%, involve mild symptoms, while 0.4% develop into severe or critical conditions [3]. Severe COVID-19 may lead to respiratory inflammation and alveolar and lung damage, potentially resulting in death [4]. COVID-19 pneumonia often presents with findings such as pleural effusion, ground-glass opacities (GGO), and consolidation [5].

Typical diagnostic approaches for COVID-19 include gene sequencing, RT-PCR, and X-ray and CT imaging [6,7]. RT-PCR is considered the standard test, but results can take up to 2 days, and the test is susceptible to viral RNA instability, which yields a detection rate of approximately 30% to 60% and requires serial testing to reduce false negatives [8]. Additional accurate detection methods are therefore essential for timely treatment and for halting the transmission of COVID-19.

CT imaging, which is widely available and cost-effective in hospitals, is a reliable tool for detecting, prognosing, and monitoring COVID-19 patients, particularly in epidemic regions [9]. CT analysis offers detailed insight into lung infection and disease severity. Common radiographic features of COVID-19 include GGO, consolidation, and peripheral lung distribution [10]. However, reviewing large numbers of CT images strains radiologists, especially where specialized expertise is scarce, which limits the practical value of these images. Studies have shown that CT can reveal lung abnormalities in COVID-19 cases even in the absence of typical clinical symptoms and when RT-PCR results are falsely negative [11].

During public health crises such as epidemics and pandemics, radiologists and healthcare facilities are overwhelmed. Identifying COVID-19 infection on CT scans is demanding for radiologists, which underscores the need for automated tools that improve diagnostic performance and help manage patient loads [12]. Even before the current pandemic, deep learning (DL)-based systems supported radiologists in spotting lung anomalies, ensuring reproducibility, and detecting subtle irregularities not visible to the naked eye [13]. During the COVID-19 crisis, many research teams have concentrated on developing automated systems that identify infected individuals from CT images [14].

The distinctive radiographic patterns associated with COVID-19, such as region homogeneity, texture variation, and characteristic features like GGO, pleural effusion, and consolidation, have been extensively documented [15]. Building on these patterns, we propose an integrated CNN framework for detecting and analyzing COVID-19 radiological patterns in CT images. The framework is evaluated on a standard CT dataset and compared against established CNNs. The key contributions of this study are as follows:

A new two-stage framework is developed for identifying and analyzing COVID-19 infection regions in CT images; it integrates the Residual-BRNet classification CNN and the PA-RESeg segmentation CNN.

A deep Residual-BRNet classifier integrates regional operations, edge operations, and residual learning to extract diverse features that capture COVID-19 radiological homogeneous areas, texture variations, and boundary patterns; the residual learning also reduces the risk of vanishing gradients.

A newly introduced RESeg CNN accurately identifies COVID-19-affected areas within the lungs. It systematically incorporates both average- and max-pooling operations across its encoder and decoder blocks to exploit region homogeneity as well as inter-class, heterogeneous features.

A novel pixel attention (PA) block within RESeg mitigates sparse representation issues, improving the segmentation of mildly infected regions. Finally, the proposed detection and segmentation techniques are fine-tuned through transfer learning (TL) and assessed against existing techniques.

The paper follows this structure: Section 2 offers an overview of previous COVID-19 diagnosis research, Section 3 delineates the developed framework, while Section 4 elaborates on its experimental dataset and performance metrics. Section 5 assesses results and discusses experimental evaluation, and Section 6 provides conclusions.

2. Related Research

CT technology is currently used worldwide for COVID-19 analysis, in both developed and developing countries. However, manual CT analysis is often slow, laborious, and susceptible to human error. Consequently, DL-based diagnostic tools have been developed to expedite and improve image analysis and to support healthcare professionals [16,17,18]. DL techniques, deep CNNs in particular, have demonstrated strong performance in image analysis [19].

Several recent CNNs have been used to analyze COVID-19-infected CT scans with diverse approaches [20]. Researchers have also explored transfer learning (TL) to predict COVID-19-infected CTs, achieving accuracies ranging from 87% to 98% [21,22,23]. For instance, COVID-Net, inspired by ResNet, achieves 92% accuracy in differentiating multiple types of COVID-19 infection but a detection rate of only 87% [24]. Similarly, COVID-CAPS achieves high accuracy (98%) but lower sensitivity (80%) to COVID-19 infection [25]. COVID-RENet, a recently developed classification model, incorporates both smooth and boundary image features and achieves 97% accuracy. These models are first trained on general data and subsequently fine-tuned with COVID-19-specific images [26].

Segmentation, in turn, is commonly used to determine the location and severity of infection. Classical methods such as watershed and active contour models were used initially and often performed well [27,28]. Subsequently, a DL-based method named 'VB-Net' was applied to segment COVID-19 lesions in CT, achieving a Dice similarity (DS) score of 91% [22]. The COVID-19 JCS system integrates classification and segmentation to visualize and delineate infected areas, achieving a 95.0% detection rate and 93.0% specificity in classification, albeit with a lower DS score of 78.30% in segmentation [29]. Furthermore, the DCN method has been proposed for COVID-19 analysis, reporting 96.74% accuracy and an infection-segmentation DS score of 83.50% [30].

3. Methodology

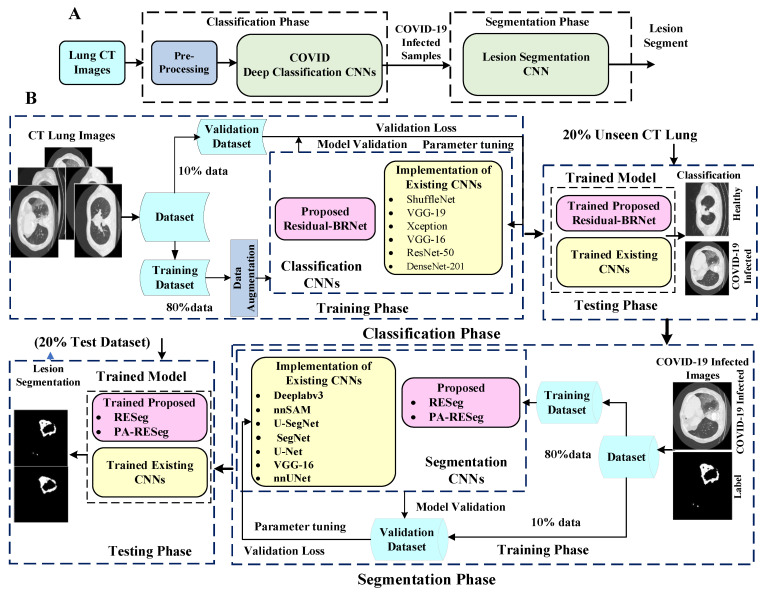

This research presents a new CNN-based approach for automated analysis of COVID-19 abnormalities in lung CT. The framework is structured into two stages: classification and segmentation. First, the classification model discriminates COVID-19-infected CT samples from healthy ones. Then PA-RESeg, a Region Estimation-based segmentation CNN, analyzes the infected lung regions. The framework introduces three key technical novelties: (1) the Residual-BRNet classification technique, (2) the PA-RESeg segmentation technique, and (3) the implementation of customized classification and segmentation CNNs. Segmenting the COVID-19 lesion regions in CT captures detailed regional information that helps assess the spread of infection. Figure 1 shows the developed framework at both a summary and a detailed level.

Figure 1.

Panel (A) outlines the key steps of the proposed two-stage framework, and panel (B) illustrates the complete workflow in detail.

3.1. COVID-19 Infected CT Classification

In the first stage, COVID-19-infected CT samples are classified at a coarse level to differentiate them from healthy samples. Two experimental configurations are used in this stage: (1) the newly developed Residual-BRNet (Section 3.1.1) and (2) existing deep CNNs fine-tuned via weight transfer (Section 3.1.2). The implementation setups are described below.

3.1.1. Proposed Residual-BRNet

This study presents Residual-BRNet, a novel residual-learning-based CNN developed to distinguish COVID-19-infected CT images. Residual-BRNet consists of four residual blocks, each comprising distinct convolutional (Conv) feature extractors followed by novel homogeneous-region and boundary operations. These components systematically extract COVID-19-specific homogeneous-region and boundary features at each stage. Within each residual block, Conv blocks are linked by shortcut connections to optimize the Conv filters and capture textural variations. Average pooling and max-pooling are used to retain the relevant infected patterns, namely homogeneous and deformed regions, respectively. The architecture of Residual-BRNet is illustrated in Figure 2. At the end of each block, a pooling operation with a stride of two reduces model complexity and improves invariant feature learning [31]. The operations within the Conv block are given in Equations (1)–(3), and the residual block is described by Equations (4) and (5). The Conv block includes a Conv layer, batch normalization (BN), and ReLU for both the nth and (n − 1)th layers.

| (1) |

| (2) |

Figure 2.

The proposed Residual-BRNet architecture.

Equation (1) describes the Conv operation and filter for the lth layer, where M × N and D denote the spatial resolution and depth, respectively. Equation (2) gives the batch normalization (BN) of the Conv output Cl in terms of its mean and variance. Equation (3), f(c), is the activation. Residual learning mitigates vanishing-gradient issues, enhances the feature-map representation, and aids convergence; Equations (4) and (5) describe this process in terms of the outputs of Block n and Block n − 1. Equation (6) defines the fully connected layer, which reduces the feature space and weighs the significance of the Conv features through the kth neuron. Finally, Equation (7) gives the cross-entropy loss L, where PCT denotes the predicted class.

| (3) |

| (4) |

| (5) |

| (6) |

| (7) |
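The Conv-block and residual operations described by Equations (2)–(5) can be illustrated with a minimal pure-Python sketch. It is not the Residual-BRNet implementation (the study used MATLAB): it assumes a one-dimensional signal, a single hypothetical 3-tap kernel standing in for the Conv layer, and BN statistics computed over the signal itself rather than over a mini-batch. An identity shortcut adds the block input to the Conv → BN → ReLU output, which is the basic residual idea.

```python
import math


def conv1d_same(signal, kernel):
    """1-D cross-correlation with zero padding so the output length equals the input length.

    Stands in for the Conv layer; `kernel` is a hypothetical odd-length filter.
    """
    half = len(kernel) // 2
    padded = [0.0] * half + list(signal) + [0.0] * half
    return [sum(w * padded[i + j] for j, w in enumerate(kernel)) for i in range(len(signal))]


def batch_norm(values, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize to zero mean and unit variance, then scale by gamma and shift by beta.

    Simplification (assumption): statistics are taken over this one vector, not a mini-batch.
    """
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    return [gamma * (v - mean) / math.sqrt(var + eps) + beta for v in values]


def relu(values):
    return [max(0.0, v) for v in values]


def conv_block(signal, kernel):
    """Conv -> BN -> ReLU, the order described for the Conv block."""
    return relu(batch_norm(conv1d_same(signal, kernel)))


def residual_block(signal, kernel):
    """Identity shortcut: output = conv_block(input) + input (lengths must match)."""
    return [f + x for f, x in zip(conv_block(signal, kernel), signal)]


out = residual_block([1.0, 2.0, 3.0, 4.0], kernel=[0.0, 2.0, 0.0])
```

Because the shortcut passes the input through unchanged, the gradient of the output with respect to the input always contains an identity term, which is why residual connections ease the vanishing-gradient problem mentioned above.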

3.1.2. Implementation of Existing Classification CNNs

Deep CNNs, a class of DL models, exploit spatial correlations and have achieved good results, especially in biomedical imaging [32,33,34]. To benchmark the proposed Residual-BRNet, we used several established CNN models of varying depth and architecture to detect COVID-19 infection in CT: VGG, ResNet, DenseNet, ShuffleNet, and Xception [35,36,37,38,39,40]. These CNNs were fine-tuned using TL for comparison. Deep CNN architectures typically require a large dataset to learn efficiently, so TL was used to leverage knowledge learned from large benchmark datasets such as ImageNet [41]. Adapting existing deep CNN models with TL involves adjusting the architecture to the CT samples and replacing the final layers to output the target classes.

3.2. COVID Infection Segmentation

Accurately identifying and quantifying the infected region is important for analyzing radiological patterns and assessing disease severity. Semantic segmentation, performed after the initial coarse-scale classification of CT images, offers detailed insight into the infected areas. COVID-19 infections are separated from surrounding areas by labeling the pixels within the infection as the positive class and all other pixels as healthy (background). Semantic segmentation is thus a pixel-level classification that assigns each pixel to its respective class [42]. This study employed two segmentation setups: (i) the proposed PA-RESeg and (ii) existing segmentation CNNs; details are provided below.

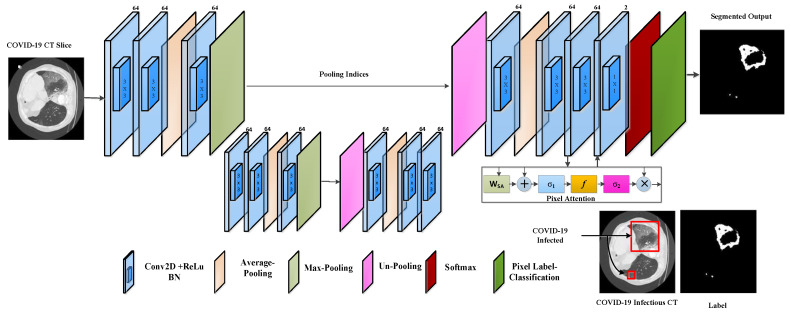

3.2.1. Proposed PA-RESeg Technique

The proposed RESeg segmentation CNN features two encoder and decoder blocks designed to enhance feature learning. Our approach systematically integrates average pooling with max-pooling in the encoding stages (Equations (8) and (9)). In the decoder, we combine average pooling with max-unpooling, which distinguishes our model from others in the field. The architecture of RESeg is depicted in Figure 3. Distinguishing COVID-19 infectious regions from background areas is challenging because of poorly defined borders and potential overlap with healthy lung tissue. To overcome this, max-pooling captures boundary information, while average pooling evaluates the homogeneity of the COVID-19-infected region.

| (8) |

| (9) |

Figure 3.

The proposed PA-RESeg architecture.

In Equations (8) and (9), the average- and max-pooling operations with stride s are applied to the convolved output. We adopt an encoder–decoder design for precise segmentation because the encoding stages learn semantically meaningful, object-specific details. However, encoding can lose spatial information that is critical for object segmentation. To recover it, the decoder reconstructs the encoder's feature maps using the stored max-pooling indices to locate infectious regions. The final layer applies a 2 × 2 Conv operation to classify each pixel as COVID-19 infectious region or background (healthy), trained with a cross-entropy loss. The encoder–decoder design is symmetric: each max-pooling layer in the encoder is mirrored by an unpooling layer in the decoder.
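The two pooling operators of Equations (8) and (9), and the index-based max-unpooling used in the decoder, can be sketched in pure Python as follows. This is an illustrative sketch, not the RESeg code: it assumes 2 × 2 windows with stride s = 2 on a single-channel feature map, and because the text does not specify how the average- and max-pooled outputs are combined inside an encoder block, only the individual operators are shown. Max-unpooling writes each pooled value back to the position recorded during max-pooling and fills every other cell with zero.

```python
def avg_pool2d(fmap, size=2, stride=2):
    """Average pooling: the window mean summarizes region homogeneity."""
    return [
        [
            sum(fmap[r + i][c + j] for i in range(size) for j in range(size)) / (size * size)
            for c in range(0, len(fmap[0]) - size + 1, stride)
        ]
        for r in range(0, len(fmap) - size + 1, stride)
    ]


def max_pool2d_with_indices(fmap, size=2, stride=2):
    """Max pooling: keeps the strongest (often boundary) response and records its position."""
    pooled, indices = [], []
    for r in range(0, len(fmap) - size + 1, stride):
        row_vals, row_idx = [], []
        for c in range(0, len(fmap[0]) - size + 1, stride):
            window = [(fmap[r + i][c + j], (r + i, c + j)) for i in range(size) for j in range(size)]
            value, position = max(window, key=lambda item: item[0])  # first maximum wins ties
            row_vals.append(value)
            row_idx.append(position)
        pooled.append(row_vals)
        indices.append(row_idx)
    return pooled, indices


def max_unpool2d(pooled, indices, height, width):
    """Scatter pooled values back to their recorded positions; all other cells become 0."""
    out = [[0.0] * width for _ in range(height)]
    for row_vals, row_idx in zip(pooled, indices):
        for value, (r, c) in zip(row_vals, row_idx):
            out[r][c] = value
    return out


fmap = [
    [1.0, 3.0, 0.0, 0.0],
    [2.0, 4.0, 0.0, 8.0],
    [5.0, 5.0, 1.0, 1.0],
    [5.0, 5.0, 1.0, 1.0],
]
avg = avg_pool2d(fmap)                    # [[2.5, 2.0], [5.0, 1.0]]
mx, idx = max_pool2d_with_indices(fmap)   # [[4.0, 8.0], [5.0, 1.0]]
restored = max_unpool2d(mx, idx, 4, 4)    # sparse 4 x 4 map with maxima at original positions
```

The contrast between the two outputs for the top-right window (average 2.0 versus maximum 8.0) shows why the two operators carry different information: averaging dilutes an isolated strong edge response, while max-pooling preserves it.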

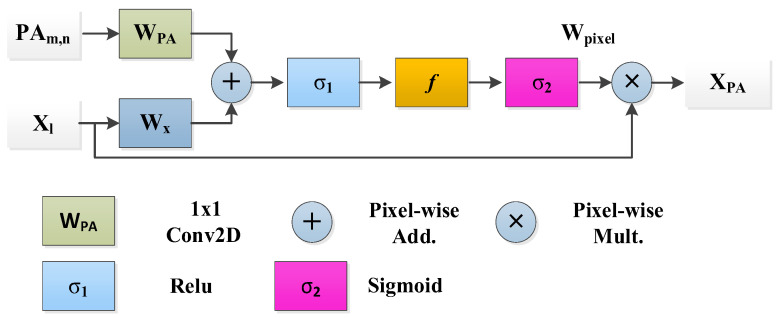

New Pixel Attention Block

This study presents a novel technique that focuses on individual pixels during training, weighting them according to their feature representation so that mildly infected regions that would otherwise go unrecognized are recovered. This pixel attention (PA) method assigns high weights to COVID-19-infected pixels and lower weights to background pixels. It is incorporated into the proposed RESeg, as depicted in Figure 4.

| (10) |

| (11) |

| (12) |

Figure 4.

Pixel attention block.

In Equation (10), the input feature map is weighted by a pixel-wise attention weight in the range [0, 1]; the result delineates the infectious area and attenuates redundant information. Equations (11) and (12) use ReLU and sigmoid activations, respectively, each applied after a linear transformation with its own bias term.
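A minimal sketch of the pixel-attention idea, under stated assumptions: each pixel's channel vector passes through a linear layer with ReLU (in the spirit of Equation (11)), then through a second linear layer with a sigmoid (Equation (12)) that yields one weight in [0, 1] per pixel, and the input features are scaled by that weight (Equation (10)). The weights `w1`, `w2` and biases `b1`, `b2` below are hypothetical toy values rather than learned parameters, and the exact layer shapes used in PA-RESeg are not given in the text.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def pixel_attention(feature_map, w1, b1, w2, b2):
    """Reweight every pixel of an H x W x C feature map (nested lists).

    w1: hidden x C weights and b1: hidden biases (linear + ReLU).
    w2: hidden weights and b2: scalar bias (linear + sigmoid -> one weight per pixel).
    Returns (reweighted map, attention weights in [0, 1]).
    """
    out, weights = [], []
    for row in feature_map:
        out_row, w_row = [], []
        for x in row:
            hidden = [max(0.0, sum(wij * xj for wij, xj in zip(wi, x)) + bi) for wi, bi in zip(w1, b1)]
            alpha = sigmoid(sum(w * h for w, h in zip(w2, hidden)) + b2)
            out_row.append([alpha * xj for xj in x])  # scale all channels of this pixel
            w_row.append(alpha)
        out.append(out_row)
        weights.append(w_row)
    return out, weights


# A 1 x 2 map with 2 channels: one pixel with a strong response, one empty pixel.
fmap = [[[2.0, 0.0], [0.0, 0.0]]]
out, alpha = pixel_attention(fmap, w1=[[1.0, 1.0]], b1=[0.0], w2=[3.0], b2=-1.0)
```

With these toy parameters the responsive pixel receives a weight close to 1 (sigmoid(5)), whereas the empty pixel receives sigmoid(−1) ≈ 0.27, illustrating how the block emphasizes some pixels relative to others.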

3.2.2. Existing Segmentation CNNs

Various DL techniques with diverse architectures have been proposed and evaluated for semantic segmentation across many datasets and categories [43]. These models differ in their encoders and decoders, upsampling methods, and skip connections. In this study, we customized several segmentation models, including nnSAM (built on the Segment Anything Model, SAM), nnUNet, VGG-16, SegNet, U-Net, U-SegNet, and DeepLabV3 [44,45,46,47,48], for COVID-19 lesion segmentation. Each segmentation CNN was modified by replacing its input and output layers with customized layers adjusted to the data dimensions and target classes.

4. Experimental Configuration

4.1. Dataset

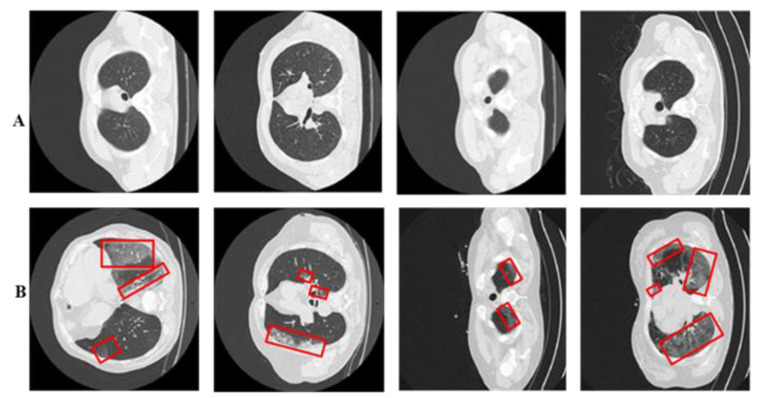

The proposed framework uses a standardized CT dataset prepared by the Italian Society of Radiology (SIRM) and the UESTC-COVID-19 Radiological Center [49]. It comprises 10,838 axial CT slices from 70 patients, carefully reviewed by experienced radiologists who marked the infected lung segments. Of these slices, 5152 display COVID-19 infection patterns and 5686 are healthy; the full distribution is given in Table 1. Every CT slice has a radiologist-provided binary mask (ground truth) with pixel-level labels. The dataset covers mild, moderate, and severe infection levels. For computational efficiency, all images are resized to 304 × 304 × 3 using interpolation. Figure 5 shows examples of COVID-19-infected and healthy images.
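The resizing step can be illustrated with a short bilinear-interpolation sketch. The text does not state which interpolation kernel was used (MATLAB's imresize, for example, defaults to bicubic), so bilinear interpolation with corner-aligned sampling is an assumption made here for brevity. For an RGB slice the function would be applied to each of the three channels; binary ground-truth masks are commonly resized with nearest-neighbour interpolation instead so that they stay binary.

```python
def bilinear_resize(img, out_h, out_w):
    """Resize a 2-D grid of intensities with bilinear interpolation (corner-aligned)."""
    in_h, in_w = len(img), len(img[0])

    def source_coord(i, n_out, n_in):
        # Map output index i onto the input grid so that the first and last pixels align.
        return 0.0 if n_out == 1 else i * (n_in - 1) / (n_out - 1)

    out = []
    for i in range(out_h):
        y = source_coord(i, out_h, in_h)
        y0 = int(y)
        y1 = min(y0 + 1, in_h - 1)
        dy = y - y0
        row = []
        for j in range(out_w):
            x = source_coord(j, out_w, in_w)
            x0 = int(x)
            x1 = min(x0 + 1, in_w - 1)
            dx = x - x0
            top = img[y0][x0] * (1 - dx) + img[y0][x1] * dx        # interpolate along x (top row)
            bottom = img[y1][x0] * (1 - dx) + img[y1][x1] * dx     # interpolate along x (bottom row)
            row.append(top * (1 - dy) + bottom * dy)               # then along y
        out.append(row)
    return out


tiny = [[0.0, 10.0], [20.0, 30.0]]
small = bilinear_resize(tiny, 3, 3)       # centre value is the mean of the four corners
resized = bilinear_resize(tiny, 304, 304)
```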

Table 1.

COVID-19 CT data distribution.

| Property | Value |
|---|---|
| Total slices | 10,838 |
| Healthy slices | 5686 |
| COVID-19 infectious slices | 5152 |
| Phase 1: Detection train, validation (9:1) | 7720, 772 |
| Phase 1: Detection test (20%) | 2346 |
| Phase 2: Segmentation train, validation (9:1) | 4121, 412 |
| Phase 2: Segmentation test (20%) | 1031 |

Figure 5.

Panel (A) displays healthy lung CT samples, while panel (B) showcases COVID-19-infected lung CT samples. Red boxes highlight regions of infection.

4.2. Implementation Details

The classification and segmentation CNNs are trained separately. For detection, the dataset comprises 10,838 CT slices (5152 infected and 5686 healthy); the infected images and their masks are used to train the segmentation models. Both stages use the same experimental setup: an 8:2 split between training and testing, with the training portion further divided 9:1 into training and validation sets for hyperparameter selection. This hold-out validation scheme is used for both detection and segmentation CNNs to improve robustness and generalization during hyperparameter selection. All models are trained with SGD to minimize the cross-entropy loss for 30 epochs, using the selected hyperparameters (learning rate 0.001, batch size 8, momentum 0.95) to ensure convergence [50]. Softmax assigns class probabilities in both the classification and segmentation tasks. The 95% confidence intervals (CIs) for sensitivity and for the area under the curve (AUC) of the detection models are computed [51,52]. Experiments are run in MATLAB 2023b on a system with an Intel Core i7 processor and an Nvidia GTX 1080 Tesla GPU. Training all networks takes approximately 3 days.
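The nominal two-level hold-out split (8:2 for training versus testing, then 9:1 for training versus validation) can be sketched as follows. The shuffling seed and the use of Python's `random` module are illustrative assumptions. The subset sizes in Table 1 do not follow exactly from these nominal ratios, so the sketch reproduces the ratios rather than the exact counts reported there.

```python
import random


def holdout_split(items, test_frac=0.2, val_frac=0.1, seed=0):
    """Shuffle once, hold out a test set, then carve a validation set from the remainder."""
    rng = random.Random(seed)          # hypothetical fixed seed for reproducibility
    shuffled = list(items)
    rng.shuffle(shuffled)
    n_test = round(len(shuffled) * test_frac)
    test, rest = shuffled[:n_test], shuffled[n_test:]
    n_val = round(len(rest) * val_frac)
    val, train = rest[:n_val], rest[n_val:]
    return train, val, test


# Slice indices standing in for the 10,838 CT slices of the detection stage.
train, val, test = holdout_split(range(10838))
```

With these ratios the function yields 7803 training, 867 validation, and 2168 test slices, and the three subsets are disjoint.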

4.3. Performance Evaluation

The framework's performance is evaluated with detection and segmentation metrics. The detection metrics, namely accuracy (Acc), sensitivity (S), precision (P), specificity (Sp), Matthews correlation coefficient (MCC), and F-score, are given in Equations (13)–(17). The segmentation models are assessed by segmentation accuracy (S-Acc), intersection over union (IoU), and the Dice similarity coefficient (DSC), as presented in Equations (18) and (19). Accuracy reflects the correct separation of infected and healthy samples, whereas S-Acc reflects the correct prediction of infected and healthy pixels. The DSC measures the structural similarity between predicted and labelled regions, and IoU quantifies the overlap ratio between detected and labelled pixels. A further explanation of performance metrics is shown in Table 1.

| (13) |

| (14) |

| (15) |

| (16) |

| (17) |

| (18) |

| (19) |
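For reference, the standard definitions behind these metrics can be written as a short pure-Python sketch. The confusion-matrix counts and masks in the usage lines are hypothetical toy values, the functions assume non-degenerate inputs (no zero denominators), and the exact notation of Equations (13)–(19) may differ. Note that for a single pair of binary masks DSC = 2·IoU/(1 + IoU), so DSC is never smaller than IoU; scores that are averaged in different ways (for example, over images or over classes) need not satisfy this relation.

```python
import math


def detection_metrics(tp, fp, tn, fn):
    """Image-level metrics from confusion-matrix counts (COVID-19 = positive class)."""
    acc = (tp + tn) / (tp + fp + tn + fn)
    sen = tp / (tp + fn)                        # sensitivity (recall)
    spe = tn / (tn + fp)                        # specificity
    pre = tp / (tp + fp)                        # precision
    f1 = 2 * pre * sen / (pre + sen)            # F-score
    mcc = (tp * tn - fp * fn) / math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return {"acc": acc, "sen": sen, "spe": spe, "pre": pre, "f1": f1, "mcc": mcc}


def segmentation_metrics(pred, truth):
    """Pixel-level metrics for flat 0/1 masks (1 = lesion)."""
    tp = sum(1 for p, t in zip(pred, truth) if p and t)
    fp = sum(1 for p, t in zip(pred, truth) if p and not t)
    fn = sum(1 for p, t in zip(pred, truth) if t and not p)
    tn = len(pred) - tp - fp - fn
    return {
        "s_acc": (tp + tn) / len(pred),         # segmentation accuracy
        "iou": tp / (tp + fp + fn),             # intersection over union
        "dice": 2 * tp / (2 * tp + fp + fn),    # Dice similarity coefficient
    }


det = detection_metrics(tp=90, fp=10, tn=85, fn=15)
seg = segmentation_metrics([1, 1, 1, 0, 0, 0], [1, 1, 0, 1, 0, 0])
```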

5. Results

The two-stage framework first classifies CT samples and then explores lung infection patterns within the images classified as COVID-19. This staging mirrors clinical workflows, in which patients undergo additional diagnostic tests after initial detection.

5.1. CT Classification of COVID-19 Infection

Residual-BRNet is used for initial screening, categorizing images as infected or healthy. It achieves high detection rates for characteristic COVID-19 patterns with few false positives, as shown in Table 2. We evaluate Residual-BRNet's ability to learn COVID-19 patterns by comparing its performance with that of existing CNNs.

5.1.1. Proposed Residual-BRNet Performance Analysis

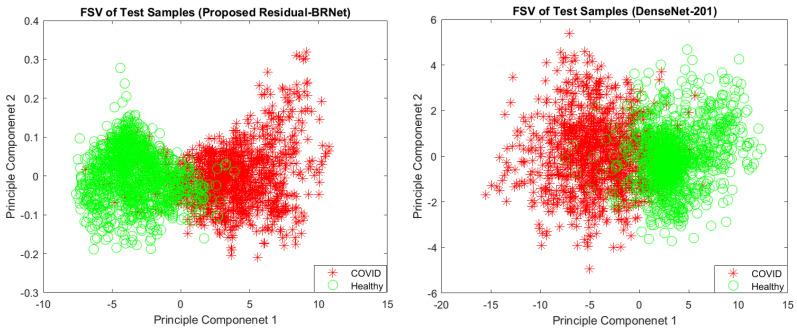

The performance of the proposed Residual-BRNet on the test set is assessed in terms of accuracy, F-score, MCC, sensitivity, specificity, and precision (Table 2). Compared with the strongest baseline, DenseNet-201, Residual-BRNet generalizes better, with a higher F-score (Residual-BRNet: 98.01%, DenseNet: 96.77%), accuracy (97.97% vs. 96.73%), and MCC (96.81% vs. 92.02%). The discriminative ability of Residual-BRNet is further illustrated by the principal component analysis (PCA) feature-space plot in Figure 6, which also shows the features of the best-performing baseline, DenseNet, for reference.

Figure 6.

PC1/PC2-based feature space visualization of the techniques.

Table 2.

Comparison of the developed Residual-BRNet with existing CNNs.

| Model | Acc. (%) | F-score (%) | Pre. (%) | MCC (%) | Spec. (%) | Sen. (%) |
|---|---|---|---|---|---|---|
| ShuffleNet | 89.88 | 90.00 | 88.85 | 79.76 | 88.55 | 91.38 |
| VGG-19 | 92.26 | 92.44 | 92.78 | 81.87 | 90.96 | 92.18 |
| Xception | 94.35 | 94.43 | 94.15 | 87.21 | 93.98 | 93.94 |
| VGG-16 | 95.83 | 95.81 | 97.56 | 90.67 | 96.99 | 94.67 |
| ResNet-50 | 96.13 | 96.12 | 97.58 | 91.52 | 97.59 | 95.35 |
| DenseNet-201 | 96.73 | 96.77 | 96.49 | 92.02 | 96.39 | 96.71 |
| Proposed Residual-BRNet | 97.97 | 98.01 | 97.61 | 96.81 | 97.62 | 98.42 |
| *Reported studies* | | | | | | |
| JCS [29] | --- | --- | --- | --- | 93.17 | 95.13 |
| VB-Net [22] | --- | --- | --- | --- | 90.21 | 87.11 |
| DCN [30] | --- | 96.74 | --- | --- | --- | --- |
| 3DAHNet [53] | --- | --- | --- | --- | 90.13 | 85.22 |

5.1.2. Existing CNN Performance

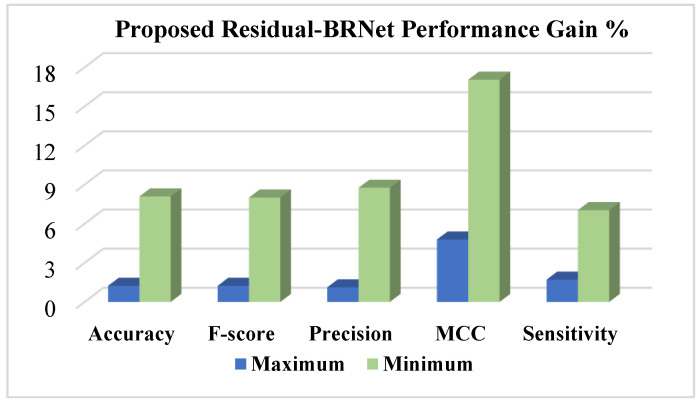

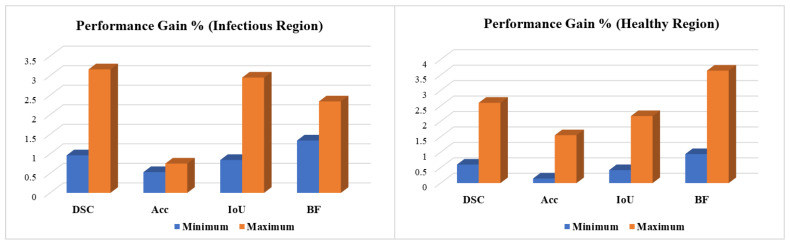

Residual-BRNet is benchmarked against established deep CNNs known for their effectiveness in tasks such as lung abnormality classification. TL helps these models learn COVID-19-specific features from CT images more efficiently. Nevertheless, Residual-BRNet achieves better F-score, MCC, and accuracy than the established CNNs (Table 2), improving on them by approximately 1.24–8.01 percentage points in F-score, 4.79–17.05 in MCC, and 1.24–8.09 in accuracy. Figure 7 illustrates the performance gain of Residual-BRNet over the best- and worst-performing deep CNNs in terms of detection metrics.
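The improvement ranges quoted above follow directly from Table 2: each is the difference between Residual-BRNet's score and the scores of the strongest and weakest fine-tuned baselines. The short script below recomputes them from the tabulated values.

```python
# Scores (%) copied from Table 2.
PROPOSED = {"f1": 98.01, "mcc": 96.81, "acc": 97.97}
BASELINES = {
    "ShuffleNet":   {"f1": 90.00, "mcc": 79.76, "acc": 89.88},
    "VGG-19":       {"f1": 92.44, "mcc": 81.87, "acc": 92.26},
    "Xception":     {"f1": 94.43, "mcc": 87.21, "acc": 94.35},
    "VGG-16":       {"f1": 95.81, "mcc": 90.67, "acc": 95.83},
    "ResNet-50":    {"f1": 96.12, "mcc": 91.52, "acc": 96.13},
    "DenseNet-201": {"f1": 96.77, "mcc": 92.02, "acc": 96.73},
}


def gain_range(metric):
    """Smallest and largest percentage-point gain of the proposed model over the baselines."""
    gains = [PROPOSED[metric] - scores[metric] for scores in BASELINES.values()]
    return round(min(gains), 2), round(max(gains), 2)


for metric in ("f1", "mcc", "acc"):
    print(metric, gain_range(metric))
```

The smallest gain always comes from DenseNet-201 and the largest from ShuffleNet, which are the two models contrasted in Figure 7.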

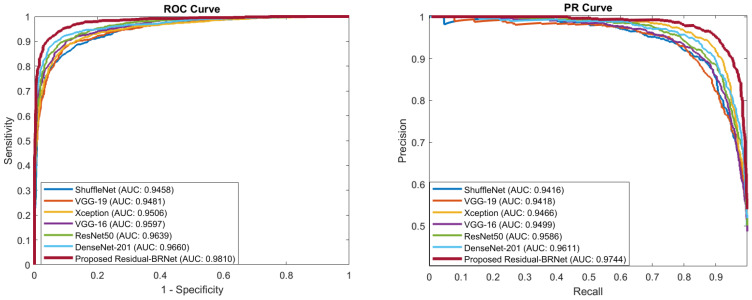

5.1.3. PR- and ROC-Curve-Based Comparison

PR and ROC curves are used to quantitatively evaluate how well the detection CNNs separate the two classes, as depicted in Figure 8. These curves assess a classifier's generalization ability by showing how well it distinguishes the classes across varying decision thresholds. The PR curves of Residual-BRNet and the existing CNNs indicate the superior learning capacity of the proposed CNN. The proposed Residual-BRNet outperforms DenseNet and the other deep CNN models in terms of AUC, F-score, accuracy, and MCC (Table 2).

Figure 8.

Comparison of the PR and ROC curves (with AUCs) of the proposed Residual-BRNet and the existing CNNs.

Figure 7.

Performance of the proposed Residual-BRNet in terms of detection metrics.
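ROC AUC, one of the summary figures compared above, can be computed directly from classifier scores. The pure-Python sketch below sweeps the decision threshold from high to low, treats tied scores as a single step, and integrates the ROC curve with the trapezoidal rule. It is an illustration under those assumptions, not the evaluation code used in the study; scikit-learn's `roc_auc_score` offers an equivalent, production-grade computation.

```python
def roc_auc(scores, labels):
    """Area under the ROC curve via a threshold sweep and the trapezoidal rule.

    scores: predicted probabilities of the positive (COVID-19) class.
    labels: 1 for positive, 0 for negative; both classes must be present.
    """
    pairs = sorted(zip(scores, labels), key=lambda item: -item[0])
    pos = sum(labels)
    neg = len(labels) - pos
    tp = fp = 0
    prev_tpr = prev_fpr = 0.0
    auc = 0.0
    i = 0
    while i < len(pairs):
        threshold = pairs[i][0]
        # Samples with tied scores cross the threshold together.
        while i < len(pairs) and pairs[i][0] == threshold:
            if pairs[i][1]:
                tp += 1
            else:
                fp += 1
            i += 1
        tpr, fpr = tp / pos, fp / neg
        auc += (fpr - prev_fpr) * (tpr + prev_tpr) / 2   # trapezoid between consecutive points
        prev_tpr, prev_fpr = tpr, fpr
    return auc


perfect = roc_auc([0.9, 0.8, 0.2, 0.1], [1, 1, 0, 0])
mixed = roc_auc([0.9, 0.8, 0.7, 0.6], [1, 0, 1, 0])
```

The result equals the probability that a randomly chosen positive sample is scored higher than a randomly chosen negative one; in the `mixed` example three of the four positive–negative pairs are ordered correctly, giving 0.75.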

5.2. Infectious Regions Analysis

Images that Residual-BRNet classifies as COVID-19-infected are passed to a segmentation CNN, which explores the infected regions. Analyzing infected lung lobes is pivotal for understanding infection patterns and their impact on adjacent lung segments. Region analysis is also essential for quantifying infection severity, which can assist in patient grouping and in treatment planning for mild versus severe cases. We propose PA-RESeg for segmentation and compare it with a series of existing segmentation CNNs to measure its learning ability. These models are fine-tuned to detect characteristic COVID-19 imaging features, such as GGO and consolidation, in order to discriminate between healthy and infected regions in CT images (Table 3).

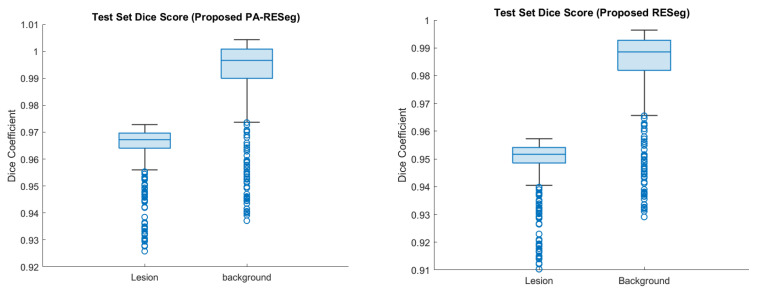

5.2.1. Proposed RESeg Segmentation Analysis

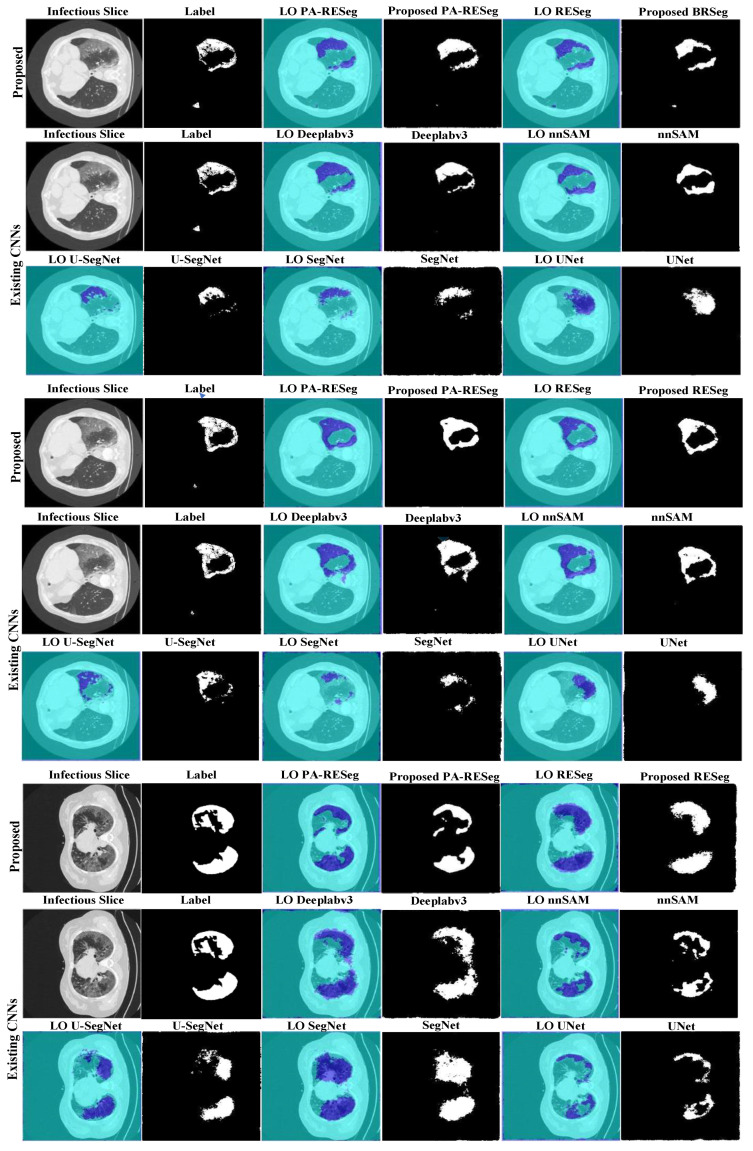

Detecting COVID-19 infection is challenging because of its varied patterns, such as ground-glass opacities, consolidation, and patchy bilateral shadows. Furthermore, the pattern and extent of infection vary over time and across individuals, making early-stage differentiation between infected and healthy regions difficult. Accurately delineating infectious regions with well-defined boundaries within the lungs is therefore critical. To address this, RESeg integrates max- and average pooling, and the PA block emphasizes mildly infected regions. The proposed PA-RESeg achieves a DSC of 95.96% and an IoU of 98.43% for lesion regions (Table 3). Precise boundary discrimination is reflected in its high boundary F-score (BFS) of 98.87%. PA-RESeg also outperforms the benchmark DeepLabv3 in DSC, Acc, and IoU. The binary masks generated by PA-RESeg show superior visual quality and accurately identify the infected regions (Figure 9). Qualitative analysis confirms the model's efficacy in segmenting various infection levels (low, medium, high) across different lung lobes, accurately localizing infections whether they appear as a single isolated segment or as multiple distinct segments.

Figure 9.

Visual evaluation of the original slice, ground truth, label-overlay (LO), and segmented results of CNNs.

Table 3.

Segmentation performance of the developed PA-RESeg and existing segmentation CNNs.

| Model | Region | DSC (%) | Acc (%) | IoU (%) | BFS (%) |
|---|---|---|---|---|---|
| *Ablation study* | | | | | |
| Proposed PA-RESeg | Lesion | 95.96 | 99.01 | 98.43 | 98.87 |
| | Healthy | 98.90 | 99.48 | 99.09 | 97.33 |
| Proposed RESeg | Lesion | 95.61 | 98.83 | 98.35 | 98.47 |
| | Healthy | 98.40 | 99.38 | 98.85 | 96.73 |
| *Existing CNNs* | | | | | |
| DeepLabv3 | Lesion | 95.00 | 98.48 | 97.59 | 97.53 |
| | Healthy | 98.30 | 99.33 | 98.67 | 96.39 |
| nnSAM | Lesion | 94.90 | 98.74 | 97.62 | 98.19 |
| | Healthy | 98.20 | 99.07 | 98.49 | 95.86 |
| U-SegNet | Lesion | 94.65 | 98.25 | 97.01 | 97.02 |
| | Healthy | 98.01 | 99.16 | 98.10 | 95.22 |
| SegNet | Lesion | 93.60 | 97.94 | 97.20 | 97.03 |
| | Healthy | 96.70 | 99.27 | 98.05 | 95.85 |
| U-Net | Lesion | 93.20 | 98.01 | 97.21 | 96.50 |
| | Healthy | 96.60 | 99.51 | 98.07 | 96.44 |
| VGG-16 | Lesion | 93.00 | 98.61 | 95.88 | 96.91 |
| | Healthy | 96.70 | 98.28 | 97.32 | 94.07 |
| nnUNet | Lesion | 92.80 | 98.26 | 95.48 | 96.53 |
| | Healthy | 96.30 | 97.93 | 96.92 | 93.69 |
| *Reported studies* | | | | | |
| VB-Net [22] | Lesion | 91.12 | --- | --- | --- |
| Weakly supervised [54] | Lesion | 90.21 | --- | --- | --- |
| Multi-task learning [55] | Lesion | 88.43 | --- | --- | --- |
| DCN [30] | Lesion | 83.55 | --- | --- | --- |
| U-Net-CA [56] | Lesion | 83.17 | --- | --- | --- |
| Inf-Net [57] | Lesion | 68.23 | --- | --- | --- |

5.2.2. Segmentation Stage Performance Comparison

We evaluated the learning capacity of the proposed PA-RESeg against current segmentation CNNs (Figure 10 and Table 3). PA-RESeg is assessed with four metrics (DSC, Acc, IoU, and BFS), and Figure 10 shows that it outperforms the existing techniques relative to both their maximum and minimum scores. On lesion regions, PA-RESeg improves on the existing CNN models in BFS (1.34–2.34%), IoU (0.84–2.95%), and DS score (1–3.16%) (Table 3). Segmented masks generated by PA-RESeg and the existing segmentation CNNs are shown in Figure 9; qualitatively, PA-RESeg performs consistently well compared with the existing models. The existing CNNs perform worse, particularly on mildly infected CT samples, and nnUNet, VGG-16, and U-Net show fluctuating results, suggesting weaker generalization. Among the existing models, DeepLabV3 performs well, with a DSC of 95.00%, IoU of 97.59%, and BFS of 97.53%. Nevertheless, the proposed model, though smaller, outperforms the higher-capacity DeepLabV3.

Figure 10.

Performance gain of the proposed PA-RESeg over existing segmentation CNNs.

5.2.3. Pixel Attention Concept

The dataset is dominated by healthy lung tissue, which can overshadow COVID-19-infected areas and degrade segmentation performance. To address this challenge, we introduced a pixel attention mechanism that consistently applies pixel-wise weights and improves segmentation across infection categories, as is evident in the visual quality of the results. The gains are most notable in less severely infected lung sections, as shown in Figure 11.

Figure 11.

Performance analysis of the proposed segmentation CNNs. Each blue circle represents the result for one test instance.

6. Conclusions

Prompt identification of COVID-19 infection patterns is important for efficient prevention and transmission control. This work proposes a two-stage diagnosis framework comprising a novel Residual-BRNet CNN for classification and a new PA-RESeg CNN for segmenting COVID-19 infection in CT images. By leveraging homogeneous regions, contrast and texture variations, and structural features, the integrated approach effectively captures COVID-19 radiological patterns. In the screening stage, Residual-BRNet shows strong discrimination ability compared with existing CNNs (F-score: 98.01%, accuracy: 97.97%, sensitivity: 98.42%), proficiently identifying infectious CT samples. Furthermore, experiments show that PA-RESeg segments infected regions precisely (IoU: 98.43%, DSC: 95.96%). These results support the efficacy of the two-stage approach in detecting and analyzing COVID-19-infected regions. Such an integrated method can help radiologists estimate disease severity (mild, medium, severe), whereas single-phase frameworks may lack precision and detailed analysis. Future work will apply the framework to larger datasets to improve the effectiveness and reliability of real-time diagnosis. In addition, using data augmentation techniques such as GANs to generate synthetic examples, and extending the framework by integrating CNNs with vision transformers to automatically divide infected regions into multi-class patterns, should provide more comprehensive insight into infection patterns.

Acknowledgments

I thank King Saud University for providing the necessary resources.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Correspondence and requests for materials should be addressed to Saddam Hussain Khan.

Conflicts of Interest

The author declares that he has no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Funding Statement

This research received no external funding.

Footnotes

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

References

1. Spinelli A., Pellino G. COVID-19 pandemic: Perspectives on an unfolding crisis. Br. J. Surg. 2020;107:785–787. doi: 10.1002/bjs.11627.

2. Wu J.T., Leung K., Bushman M., Kishore N., Niehus R., De Salazar P.M., Cowling B.J., Lipsitch M., Leung G.M. Estimating clinical severity of COVID-19 from the transmission dynamics in Wuhan, China. Nat. Med. 2020;26:506–510. doi: 10.1038/s41591-020-0822-7.

3. Coronavirus Update (Live): 704,753,890 Cases and 7,010,681 Deaths from COVID-19 Virus Pandemic. Worldometer; 2024. Available online: https://www.worldometers.info/coronavirus/ (accessed on 16 July 2024).

4. Xu X., Chen P., Wang J., Feng J., Zhou H., Li X., Zhong W., Hao P. Evolution of the novel coronavirus from the ongoing Wuhan outbreak and modeling of its spike protein for risk of human transmission. Sci. China Life Sci. 2020;63:457–460. doi: 10.1007/s11427-020-1637-5.

5. Huang C., Wang Y., Li X., Ren L., Zhao J., Hu Y., Zhang L., Fan G., Xu J., Gu X. Clinical features of patients infected with 2019 novel coronavirus in Wuhan, China. Lancet. 2020;395:497–506. doi: 10.1016/S0140-6736(20)30183-5.

6. He D.D., Zhang X.K., Zhu X.Y., Huang F.F., Wang Z., Tu J.C. Network pharmacology and RNA-sequencing reveal the molecular mechanism of Xuebijing injection on COVID-19-induced cardiac dysfunction. Comput. Biol. Med. 2021;131:104293. doi: 10.1016/j.compbiomed.2021.104293.

7. Khan S.H., Sohail A., Khan A., Lee Y.S. COVID-19 Detection in Chest X-ray Images Using a New Channel Boosted CNN. Diagnostics. 2022;12:267. doi: 10.3390/diagnostics12020267.

8. Fang Y., Zhang H., Xie J., Lin M., Ying L., Pang P., Ji W. Sensitivity of Chest CT for COVID-19: Comparison to RT-PCR. Radiology. 2020;296:E115–E117. doi: 10.1148/radiol.2020200432.

9. Tello-Mijares S., Woo L. Computed Tomography Image Processing Analysis in COVID-19 Patient Follow-Up Assessment. J. Healthc. Eng. 2021;2021:8869372. doi: 10.1155/2021/8869372.

10. Salehi S., Abedi A., Balakrishnan S., Gholamrezanezhad A. Coronavirus disease 2019 (COVID-19): A systematic review of imaging findings in 919 patients. Am. J. Roentgenol. 2020;215:87–93. doi: 10.2214/AJR.20.23034.

11. Long C., Xu H., Shen Q., Zhang X., Fan B., Wang C., Zeng B., Li Z., Li X., Li H. Diagnosis of the Coronavirus disease (COVID-19): rRT-PCR or CT? Eur. J. Radiol. 2020;126:108961. doi: 10.1016/j.ejrad.2020.108961.

12. Zheng W., Yan L., Gou C., Zhang Z.C., Zhang J.J., Hu M., Wang F.Y. Learning to learn by yourself: Unsupervised meta-learning with self-knowledge distillation for COVID-19 diagnosis from pneumonia cases. Int. J. Intell. Syst. 2021;36:4033–4064. doi: 10.1002/int.22449.

13. Park S., Lee S.M., Lee K.H., Jung K.H., Bae W., Choe J., Seo J.B. Deep learning-based detection system for multiclass lesions on chest radiographs: Comparison with observer readings. Eur. Radiol. 2020;30:1359–1368. doi: 10.1007/s00330-019-06532-x.

14. Khan A., Khan S.H., Saif M., Batool A., Sohail A., Waleed Khan M. A Survey of Deep Learning Techniques for the Analysis of COVID-19 and their usability for Detecting Omicron. J. Exp. Theor. Artif. Intell. 2023:1–43. doi: 10.1080/0952813X.2023.2165724.

15. Liu J., Dong B., Wang S., Cui H., Fan D.P., Ma J., Chen G. COVID-19 lung infection segmentation with a novel two-stage cross-domain transfer learning framework. Med. Image Anal. 2021;74:102205. doi: 10.1016/j.media.2021.102205.

16. Rauf Z., Sohail A., Khan S.H., Khan A., Gwak J., Maqbool M. Attention-guided multi-scale deep object detection framework for lymphocyte analysis in IHC histological images. Microscopy. 2023;72:27–42. doi: 10.1093/jmicro/dfac051.

17. Ozsahin I., Sekeroglu B., Musa M.S., Mustapha M.T., Uzun Ozsahin D. Review on Diagnosis of COVID-19 from Chest CT Images Using Artificial Intelligence. Comput. Math. Methods Med. 2020;2020:9756518. doi: 10.1155/2020/9756518.

18. Lee R.J., Wysocki O., Bhogal T., Shotton R., Tivey A., Angelakas A., Aung T., Banfill K., Baxter M., Boyce H. Longitudinal characterisation of haematological and biochemical parameters in cancer patients prior to and during COVID-19 reveals features associated with outcome. ESMO Open. 2021;6:100005. doi: 10.1016/j.esmoop.2020.100005.

19. Rehouma R., Buchert M., Chen Y.P.P. Machine learning for medical imaging-based COVID-19 detection and diagnosis. Int. J. Intell. Syst. 2021;36:5085–5115. doi: 10.1002/int.22504.

20. Serena Low W.C., Chuah J.H., Tee C.A.T., Anis S., Shoaib M.A., Faisal A., Khalil A., Lai K.W. An Overview of Deep Learning Techniques on Chest X-Ray and CT Scan Identification of COVID-19. Comput. Math. Methods Med. 2021;2021:5528144. doi: 10.1155/2021/5528144.

21. Narin A., Kaya C., Pamuk Z. Automatic Detection of Coronavirus Disease (COVID-19) Using X-ray Images and Deep Convolutional Neural Networks. Pattern Anal. Appl. 2021;24:1207–1220. doi: 10.1007/s10044-021-00984-y.

22. Shan F., Gao Y., Wang J., Shi W., Shi N., Han M., Xue Z., Shen D., Shi Y. Lung Infection Quantification of COVID-19 in CT Images with Deep Learning. arXiv. 2020; arXiv:2003.04655.

23. Wang S., Kang B., Ma J., Zeng X., Xiao M., Guo J., Cai M., Yang J., Li Y., Meng X., et al. A deep learning algorithm using CT images to screen for Corona Virus Disease (COVID-19). medRxiv. 2020; medRxiv:2020.02.14.20023028. doi: 10.1007/s00330-021-07715-1.

24. Wang L., Lin Z.Q., Wong A. COVID-Net: A Tailored Deep Convolutional Neural Network Design for Detection of COVID-19 Cases from Chest X-Ray Images. Sci. Rep. 2020;10:19549. doi: 10.1038/s41598-020-76550-z.

25. Afshar P., Heidarian S., Naderkhani F., Oikonomou A., Plataniotis K.N., Mohammadi A. COVID-CAPS: A Capsule Network-based Framework for Identification of COVID-19 cases from X-ray Images. Pattern Recognit. Lett. 2020;138:638–643. doi: 10.1016/j.patrec.2020.09.010.

26. Khan S.H., Sohail A., Zafar M.M., Khan A. Coronavirus disease analysis using chest X-ray images and a novel deep convolutional neural network. Photodiagn. Photodyn. Ther. 2021;35:102473. doi: 10.1016/j.pdpdt.2021.102473.

27. Rajinikanth V., Dey N., Raj A.N.J., Hassanien A.E., Santosh K.C., Raja N. Harmony-Search and Otsu based System for Coronavirus Disease (COVID-19) Detection using Lung CT Scan Images. arXiv. 2020; arXiv:2004.03431.

28. Wang G., Li Z., Weng G., Chen Y. An optimized denoised bias correction model with local pre-fitting function for weak boundary image segmentation. Signal Process. 2024;220:109448. doi: 10.1016/j.sigpro.2024.109448.

29. Wu Y.H., Gao S.H., Mei J., Xu J., Fan D.P., Zhang R.G., Cheng M.M. JCS: An Explainable COVID-19 Diagnosis System by Joint Classification and Segmentation. IEEE Trans. Image Process. 2021;30:3113–3126. doi: 10.1109/TIP.2021.3058783.

30. Gao K., Su J., Jiang Z., Zeng L.L., Feng Z., Shen H., Rong P., Xu X., Qin J., Yang Y. Dual-branch combination network (DCN): Towards accurate diagnosis and lesion segmentation of COVID-19 using CT images. Med. Image Anal. 2021;67:101836. doi: 10.1016/j.media.2020.101836.

31. Arshad M.A., Khan S.H., Qamar S., Khan M.W., Murtza I., Gwak J., Khan A. Drone navigation using region and edge exploitation-based deep CNN. IEEE Access. 2022;10:95441–95450. doi: 10.1109/ACCESS.2022.3204876.

32. Khan A., Sohail A., Zahoora U., Qureshi A.S. A survey of the recent architectures of deep convolutional neural networks. Artif. Intell. Rev. 2020;53:5455–5516. doi: 10.1007/s10462-020-09825-6.

33. Zahoor M.M., Khan S.H. Brain tumor MRI Classification using a Novel Deep Residual and Regional CNN. arXiv. 2022; arXiv:2211.16571. doi: 10.3390/biomedicines12071395.

34. Khan S.H., Iqbal R., Naz S. A Recent Survey of the Advancements in Deep Learning Techniques for Monkeypox Disease Detection. arXiv. 2023; arXiv:2311.10754.

35. Simonyan K., Zisserman A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv. 2014; arXiv:1409.1556.

36. Szegedy C., Liu W., Jia Y., Sermanet P., Reed S., Anguelov D., Erhan D., Vanhoucke V., Rabinovich A. Going Deeper with Convolutions; Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR); Boston, MA, USA. 7–12 June 2015; pp. 1–9.

37. Huang G., Liu Z., Van Der Maaten L., Weinberger K.Q. Densely connected convolutional networks; Proceedings of the 30th IEEE Conference on Computer Vision and Pattern Recognition (CVPR); Honolulu, HI, USA. 21–26 July 2017; pp. 2261–2269.

38. Zhang X., Zhou X., Lin M., Sun J. ShuffleNet: An Extremely Efficient Convolutional Neural Network for Mobile Devices; Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition; Salt Lake City, UT, USA. 18–23 June 2018.

39. Chollet F. Xception: Deep Learning with Depthwise Separable Convolutions; Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR); Honolulu, HI, USA. 21–26 July 2017.

40. He K., Zhang X., Ren S., Sun J. Deep residual learning for image recognition; Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR); Las Vegas, NV, USA. 27–30 June 2016; pp. 770–778.

41. Weiss K., Khoshgoftaar T.M., Wang D. A survey of transfer learning. J. Big Data. 2016;3:9. doi: 10.1186/s40537-016-0043-6.

42. Asgari Taghanaki S., Abhishek K., Cohen J.P., Cohen-Adad J., Hamarneh G. Deep semantic segmentation of natural and medical images: A review. Artif. Intell. Rev. 2020;54:137–178. doi: 10.1007/s10462-020-09854-1.

43. Liu X., Deng Z., Yang Y. Recent progress in semantic image segmentation. Artif. Intell. Rev. 2019;52:1089–1106. doi: 10.1007/s10462-018-9641-3.

44. Li Y., Jing B., Feng X., Li Z., He Y., Wang J., Zhang Y. nnSAM: Plug-and-play Segment Anything Model Improves nnUNet Performance. arXiv. 2023; arXiv:2309.16967.

45. Badrinarayanan V., Kendall A., Cipolla R. SegNet: A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017;39:2481–2495. doi: 10.1109/TPAMI.2016.2644615.

46. Kumar P., Nagar P., Arora C., Gupta A. U-segnet: Fully convolutional neural network based automated brain tissue segmentation tool. arXiv. 2018; arXiv:1806.04429.

47. Long J., Shelhamer E., Darrell T. Fully convolutional networks for semantic segmentation; Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR); Boston, MA, USA. 7–12 June 2015.

48. Chen L.C., Papandreou G., Kokkinos I., Murphy K., Yuille A.L. DeepLab: Semantic Image Segmentation with Deep Convolutional Nets, Atrous Convolution, and Fully Connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2018;40:834–848. doi: 10.1109/TPAMI.2017.2699184.

49. Ma J., Wang Y., An X., Ge C., Yu Z., Chen J., Zhu Q., Dong G., He J., He Z. Towards Data-Efficient Learning: A Benchmark for COVID-19 CT Lung and Infection Segmentation. Med. Phys. 2021;48:1197–1210. doi: 10.1002/mp.14676.

50. Goodfellow I., Bengio Y., Courville A. Deep Learning. MIT Press; Cambridge, MA, USA: 2016. p. 800.

51. Khan S.H., Alahmadi T.J., Alsahfi T., Alsadhan A.A., Mazroa A.A., Alkahtani H.K., Albanyan A., Sakr H.A. COVID-19 infection analysis framework using novel boosted CNNs and radiological images. Sci. Rep. 2023;13:21837. doi: 10.1038/s41598-023-49218-7.

52. Asif H.M., Khan S.H., Alahmadi T.J., Alsahfi T., Mahmoud A. Malaria parasitic detection using a new Deep Boosted and Ensemble Learning framework. Complex Intell. Syst. 2024;10:4835–4851. doi: 10.1007/s40747-024-01406-2.

53. Harmon S.A., Sanford T.H., Xu S., Turkbey E.B., Roth H., Xu Z., Yang D., Myronenko A., Anderson V. Artificial intelligence for the detection of COVID-19 pneumonia on chest CT using multinational datasets. Nat. Commun. 2020;11:4080. doi: 10.1038/s41467-020-17971-2.

54. Hu S., Gao Y., Niu Z., Jiang Y., Li L., Xiao X., Wang M., Fang E.F., Menpes-Smith W., Xia J. Weakly Supervised Deep Learning for COVID-19 Infection Detection and Classification From CT Images. IEEE Access. 2020;8:118869–118883. doi: 10.1109/ACCESS.2020.3005510.

55. Amyar A., Modzelewski R., Li H., Ruan S. Multi-task deep learning based CT imaging analysis for COVID-19 pneumonia: Classification and segmentation. Comput. Biol. Med. 2020;126:104037. doi: 10.1016/j.compbiomed.2020.104037.

56. Zhou T., Canu S., Ruan S. An automatic COVID-19 CT segmentation network using spatial and channel attention mechanism. arXiv. 2020; arXiv:2004.06673. doi: 10.1002/ima.22527.

57. Fan D.P., Zhou T., Ji G.P., Zhou Y., Chen G., Fu H., Shen J., Shao L. Inf-Net: Automatic COVID-19 Lung Infection Segmentation from CT Images. IEEE Trans. Med. Imaging. 2020;39:2626–2637. doi: 10.1109/TMI.2020.2996645.
