Abstract

Introduction

Medical misinformation, which contributes to vaccine hesitancy, poses challenges to health professionals. Health professions students, while capable of addressing and advocating for vaccination, may lack the confidence to engage with vaccine-hesitant individuals influenced by medical misinformation.

Methods

An interprofessional in-person simulation activity (90 minutes) using standardized patients was developed and instituted for students in medicine, nursing, pharmacy, and public health programs. Student volunteers were recruited from classes approximately halfway through their respective degree programs (i.e., second or third year of a 4-year program). Online simulation was used as a method to prepare for in-person simulation. Impact on students was assessed primarily through a postprogram student self-assessment.

Results

A total of 220 students participated in the program; 206 (94%) had paired data available to analyze. Following program participation, self-assessed abilities increased from pre to post, from 2.8 out of 5 (good) to 3.9 out of 5 (very good; p < .001). Ninety-eight percent of students felt that their ability to address medical misinformation was somewhat/much better after the activity, compared to before, and that their ability to address vaccine hesitancy was somewhat/much better. The overall program was rated highly, with mean scores for each program evaluation item >4 out of 5 (very good).

Discussion

An interprofessional cohort of students demonstrated improvement in self-assessed skills to participate in a conversation with an individual with hesitancy to receive vaccines and/or beliefs informed by misinformation. Students felt that this program was relevant and important to their professional development.

Keywords: Medical Misinformation, Vaccination Hesitancy, Communication Skills, Computer-Based Simulation, Simulation, Standardized Patient, Interprofessional Education, Misinformation

Educational Objectives

By the end of this activity, learners will be able to:

1. Describe how to actively listen to an individual's concerns related to vaccines.

2. Describe how to assess an individual's readiness for change to encourage discussion around vaccine decision-making.

3. List ways to respond appropriately to an individual's level of resistance to vaccination.

Introduction

The World Health Organization (WHO) recognized vaccine hesitancy as a top 10 public health threat in 2019.1 This hesitancy is fueled by various factors, including an individual's confidence in the safety and effectiveness of vaccines, perceived need for vaccination, and access to vaccines.2 Misinformation and disinformation, often propagated through social networks, can significantly influence an individual's confidence and perceived need for vaccination.3,4 Navigating conversations to address misinformation and promote vaccination can prove difficult, particularly when individuals hold deep emotional attachments to their beliefs. Although health professionals and students are adept at addressing factual gaps in vaccine-hesitant individuals, they may lack the tools to influence those entrenched in misinformation-driven beliefs. This underscores the importance of an empathetic and inquisitive approach that goes beyond mere correction of facts.5

According to Kolb, experiential learning is a continuous four-step cycle including concrete experience, reflective observation, abstract conceptualization, and active experimentation.6 This cycle can initiate from any point but commonly commences with a concrete experience where the student actively engages in or feels a real-life situation. Subsequently, the student engages in reflection to gain insights into their actions. The third step involves grasping the overarching principles underlying those actions. Finally, active experimentation permits the student to apply newfound knowledge to novel situations. Simulation enables students to complete the entire experiential learning cycle, and research indicates that simulation can yield positive outcomes in terms of learning, skill development, learner satisfaction, critical thinking, and self-confidence.7 This approach to learning can be leveraged when aiming to improve learner confidence and skill in a complex domain like vaccine hesitancy.

We hypothesize that an experiential, interprofessional education (IPE) activity could be a novel approach to helping prepare a diverse group of students to address misinformation and vaccine hesitancy. An interprofessional approach aligns with the WHO's interprofessional collaborative practice framework.8 Furthermore, an IPE approach allows students to explore how they can work in complementary ways to address shared challenges.9 By developing health professions students’ confidence in engaging in these challenging conversations, we aim to equip them with the skills necessary to effectively address misinformation, advocate for vaccination, and, ultimately, influence individual behaviors towards a healthier future.

Previous studies have focused on uniprofessional approaches and impact on students’ or residents’ confidence in addressing vaccine hesitancy.10–13 Norton, Olson, and Sanguino developed a vaccine curriculum for medical residents that includes didactic instruction and standardized patient (SP) simulations.14 Similarly, Morhardt and colleagues combined SP simulated encounters with didactic instruction of medical residents while performing pre- and postcurriculum assessments of resident self-confidence and performance.15 Our educational program is similar in that it includes SP simulated encounters; however, we have incorporated two unique elements. First, our program is designed to be interprofessional, with student learners from multiple health professions schools extending beyond medical resident training. Second, we have incorporated online simulations as a method to prepare learners for in-person simulation. Data from our pilot program (n = 51) have been previously published and indicate that the program had a positive impact on students’ self-assessed abilities and that the program overall was rated highly.16 These data are encouraging and support our efforts to scale up the program to a larger cohort of students.

Methods

An interprofessional team of faculty from the schools of medicine, nursing, pharmacy, and public health designed the educational program. These faculty had prior experience with facilitating IPE and simulation-based learning following the health care simulation standards of best practices.17 The educational program included two major elements: online simulation and in-person simulation.

Participants

Student volunteers from the schools of medicine, nursing, pharmacy, and public health were invited to participate. Due to the varying length of each degree program, no single class year of students was targeted. We sought to recruit students who had some professional awareness and consequently did not recruit first-year students. Otherwise, there was no restriction on participation.

Prework

Prework was made available to students but was optional. Using our university's learning management system, participating students were enrolled in an administrative (i.e., non-credit-bearing) course where they accessed the simulation prework. To facilitate readiness for simulation, all students were encouraged to explore information related to vaccine misinformation, vaccine hesitancy, and how to address vaccine misinformation. While information related to vaccine misinformation could change rapidly, organizations like the Centers for Disease Control and Prevention provided high-quality, regularly updated information and training tools. The “Vaccine Recipient Education” page offered helpful information to trainees that was relevant to our simulations.18 More specifically, “How to Address COVID-19 Vaccine Misinformation”19 and “Talking With Patients About COVID-19 Vaccination”20 were helpful in giving students generalized background information regarding communication strategies. Future educators can consider these, or other similar resources, as prework for this simulation.

Online Simulations

Students had the option to engage in online simulations accessed through our university's learning management system. These simulations, lasting 20 minutes each, allowed students to practice navigating an encounter with individuals with varying levels of vaccine hesitancy (Appendices A–D). Designed according to best practices,21–24 the simulations involved observing health worker interactions with vaccine-hesitant individuals. Students received real-time feedback based on their choices during the simulations. At the end of each online simulation, students were guided through a short self-reflection (Appendix E). Completing all four online simulations and the short self-reflection was estimated to take approximately 100 minutes.

In-Person Simulations

The in-person simulation scenarios were required. Approximately 2 weeks after gaining access to the optional prework and online simulations, students were assigned to attend a single, 90-minute, in-person simulation session. Four in-person simulation scenarios were designed to allow students the opportunity to actively experiment and debrief. Like the online simulations, each in-person simulation was designed to represent an encounter between a health worker and an individual hesitant to receive vaccines and/or an individual with beliefs informed by misinformation. The in-person simulation scenarios took place in our school of medicine's simulation center. The center consisted of 18 exam rooms with digital audio and video systems in each room that transmitted live feeds to faculty and staff in monitoring rooms. Faculty and staff could operate the cameras to change the angle and zoom and record each session. We created case summary files for each of the four in-person simulation scenarios (Appendices F–I). Most scenarios were designed to occur as a brief interaction in a community setting between a health worker (or student learner) and a community member; therefore, the SP cases did not require many of the clinical history components typically discussed in a health care encounter.

When students arrived at their assigned session, they gathered in a large classroom where they received prebriefing and met their partner for simulation (30 minutes). Prebriefing was intended to establish psychological safety by situating learners in a common mental model and conveying ground rules for the simulation activity.25 To promote interprofessional collaboration, students completed the in-person simulation scenarios in pairs. We aimed to pair each student with one from a different health professions program. However, based on scheduling, there were select instances where students from the same professional program were paired together. Both students were expected to speak with the SP during the encounter. Facilitators followed a standard prebriefing script (Appendix J). Students were reminded that this was an ungraded experience and an opportunity to apply what they had learned during the prework.
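The pairing goal described above can be sketched in code for programs that want to automate it. This is a minimal illustration, not the procedure the authors used: the function names `pair_students` and `count_mixed`, the roster format, and the greedy rule (repeatedly pair one student from each of the two professions with the most unpaired students) are all assumptions. Same-profession pairs are formed only when a single profession remains, which mirrors the scheduling-driven exceptions noted above.

```python
import heapq
from collections import defaultdict


def pair_students(roster):
    """Pair students, preferring partners from different professions.

    roster: list of (name, profession) tuples for one session (hypothetical format).
    Returns (pairs, unpaired); each pair holds two (name, profession) tuples.
    """
    by_profession = defaultdict(list)
    for name, profession in roster:
        by_profession[profession].append(name)

    # Max-heap on remaining head count (negated, because heapq is a min-heap).
    heap = [(-len(names), prof) for prof, names in by_profession.items()]
    heapq.heapify(heap)

    pairs = []
    while len(heap) >= 2:
        # Take one student from each of the two largest remaining groups.
        count_a, prof_a = heapq.heappop(heap)
        count_b, prof_b = heapq.heappop(heap)
        pairs.append(((by_profession[prof_a].pop(), prof_a),
                      (by_profession[prof_b].pop(), prof_b)))
        for count, prof in ((count_a + 1, prof_a), (count_b + 1, prof_b)):
            if count < 0:  # this group still has unpaired students
                heapq.heappush(heap, (count, prof))

    # Only one profession is left: fall back to same-profession pairs.
    unpaired = []
    if heap:
        _, prof = heap[0]
        names = by_profession[prof]
        while len(names) >= 2:
            pairs.append(((names.pop(), prof), (names.pop(), prof)))
        unpaired = [(name, prof) for name in names]
    return pairs, unpaired


def count_mixed(pairs):
    """Number of pairs whose two members come from different professions."""
    return sum(1 for a, b in pairs if a[1] != b[1])


if __name__ == "__main__":
    # Illustrative roster only; not study data.
    demo = [("s1", "pharmacy"), ("s2", "pharmacy"), ("s3", "pharmacy"),
            ("s4", "pharmacy"), ("s5", "medicine"), ("s6", "nursing")]
    demo_pairs, demo_unpaired = pair_students(demo)
    for pair in demo_pairs:
        print(pair)
    print("mixed pairs:", count_mixed(demo_pairs), "unpaired:", demo_unpaired)
```

An odd-sized roster leaves one student in `unpaired`; how to place that student (for example, in a group of three) is left to the facilitator, since the source does not describe that case.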

After prebriefing, the student pairs proceeded to their first simulation scenario. On the door of each simulation room, a brief description of the encounter was available for students to review prior to beginning the simulation (Appendix K). When all students were ready, an announcement to begin the simulation was made on an overhead speaker. Student pairs had 7 minutes to complete their conversation, with an overhead prompt when 2 minutes remained. At the end of the scenario, student teams exited the room, moved to their next simulation room, and read the next brief. Participants completed three of the four scenarios. The total time to complete all three scenarios and move between rooms was approximately 30 minutes. When the simulations were complete, students returned to the original classroom for debriefing. The faculty led a 30-minute, reflection-based debriefing (Appendix L) session following the plus-delta and Debriefing With Good Judgment debriefing frameworks.26,27 The purpose of using the plus-delta framework was to encourage constructive feedback, highlight successes, and identify areas for growth or enhancement.26 It helped with continuous improvement by capturing both strengths and weaknesses. The primary purpose of using Debriefing With Good Judgment was to promote learning from experience by analyzing both successes and failures through a lens of good judgment.26 It helped participants develop better decision-making skills, enhance situational awareness, and foster a culture of continuous learning and improvement.
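For educators adapting the session to a different number of scenarios or a different room schedule, the time budget described above can be checked with a short script. This is a rough planning sketch only: the names `Block` and `build_session` are hypothetical, and the transition time is simply whatever remains of the roughly 30-minute scenario block after the 7-minute conversations.

```python
from dataclasses import dataclass


@dataclass
class Block:
    label: str
    minutes: int


def build_session(n_scenarios=3, scenario_min=7, scenario_block_min=30,
                  prebrief_min=30, debrief_min=30):
    """Return the session as a list of timed blocks.

    Defaults follow the durations reported in the text; the split of the
    scenario block into conversation and transition time is derived here.
    """
    conversation_total = n_scenarios * scenario_min
    transition_total = scenario_block_min - conversation_total
    if transition_total < 0:
        raise ValueError("Scenarios do not fit in the scenario block")
    return [
        Block("Prebriefing and partner introductions", prebrief_min),
        Block("SP conversations", conversation_total),
        Block("Room changes and reading door briefs", transition_total),
        Block("Debriefing", debrief_min),
    ]


if __name__ == "__main__":
    session = build_session()
    for block in session:
        print(f"{block.minutes:>3} min  {block.label}")
    print(f"{sum(b.minutes for b in session):>3} min  total")
```

With the default values, the blocks sum to the 90-minute session length, leaving 9 minutes across the three scenarios for moving between rooms and reading the next brief.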

SP Training

An SP playing the role of the vaccine-hesitant individual was the most important resource. The SPs were recruited from the pool of SPs utilized consistently by the school of medicine. These SPs were familiar with the school of medicine simulation center and therefore did not require orientation to the facilities. The SPs were provided with the case summary files (Appendices F–I) in advance and then met with the authors and school of medicine simulation staff for 60 minutes to ask questions about the cases. The SPs were not given a script but were encouraged to ad-lib so long as they were consistent with the information provided in the case summary files. We emphasized with the SPs that there was not an end outcome that needed to be achieved (i.e., agreeing to receive a vaccine). Rather, the students would be expected to engage in a brief, respectful conversation with the SP about their hesitancy to receive vaccines. The SPs were instructed to allow the students to lead the conversation and that their responses could be guided by how the student was performing. For example, if the student was respectful and empathetic, the SP could conclude the scenario with a positive statement such as “You've given me a lot to think about, and I'd be interested in speaking with you again.” If the student was judgmental or dismissive, then the SP could conclude the scenario with a statement such as “I'm not interested in any more information right now.”

Data Collection and Assessment

To evaluate the overall educational program, we asked students to complete a 26-item, locally developed survey (Appendix M). We developed a communication rubric to be used by faculty observers as a standard objective evaluation tool (Appendix N) and piloted it with a small group of students. To assess the accuracy of the rubric, two faculty observers rated student pairs, and revisions to the communication rubric were made based on feedback from the faculty observers. The communication rubric was not completed for each student pair enrolled in our program but is included here as a suggested tool for those interested in a mechanism to provide objective feedback to learners.

Results

A total of 220 students participated in this IPE program, and 206 (94%) completed paired datasets and were included in the primary analyses. Of these 206 students, 51 came from our pilot study, which has been described previously.16 Following the pilot, we were able to expand our program to include additional participants while maintaining the same sources of data collection (i.e., student self-assessments and surveys); these data were combined and are presented here. The 206 students included in the primary analyses represented medicine (n = 32), nursing (n = 41), pharmacy (n = 115), and public health (n = 18). In general, these students were at a point in their degree programs where they had developed an awareness of their professional roles and had some clinical/field experience; however, they were not in the final (clinical) years of their training (e.g., year 3 of a 4-year program).

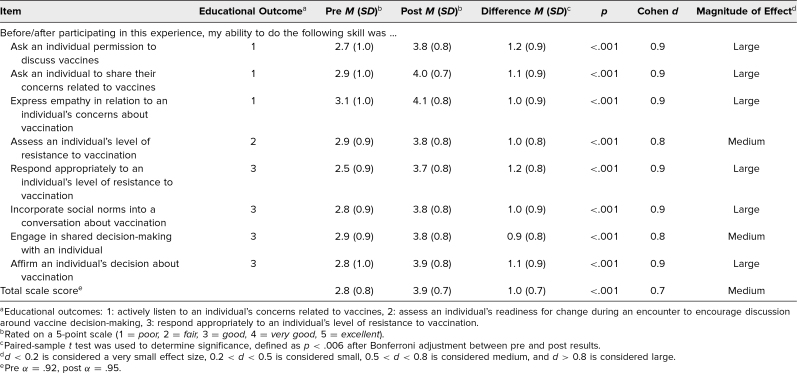

A comparison of students’ self-assessed abilities is summarized in Table 1. A significant increase was observed for all individual items and for the total scale score from pre- to postexperience, with moderate to large effect sizes. Mean preexperience scores were significantly different (p = .01) across professions due to a difference between nursing (3.1, SD = 0.9) and pharmacy (2.7, SD = 0.7; p = .03). Mean postexperience scores were significantly different (p < .001) across professions due to the difference between medicine (4.1, SD = 0.5) and pharmacy (3.7, SD = 0.7; p < .001) and between nursing (4.1, SD = 0.7) and pharmacy (3.7, SD = 0.7; p < .006). No difference (p = .08) existed between professions in magnitude of change from pre- to postexperience.

Table 1. Comparison of Retrospective Pre- and Postexperience Self-Assessed Abilities by Student Participants (N = 206).

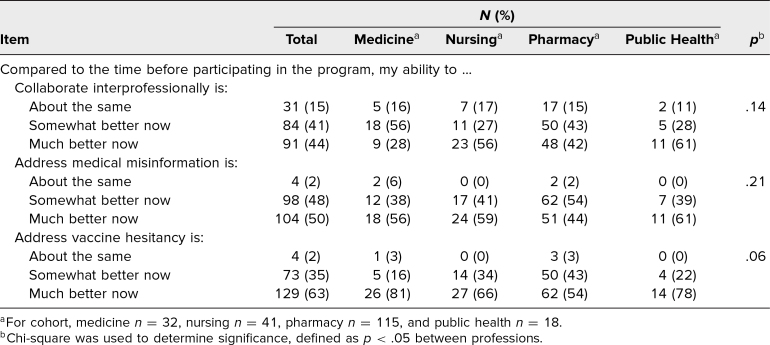
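Programs replicating this evaluation may want to compute a paired pre/post comparison and an effect size from their own survey data. The sketch below is illustrative only. The text here does not state which statistical test or effect-size measure was used, so the paired t statistic and the paired-samples Cohen's d (mean difference divided by the standard deviation of the differences) are assumptions, as are the function name `paired_summary` and the toy ratings, which are not study data.

```python
import math
import statistics


def paired_summary(pre, post):
    """Summarize paired ratings (e.g., 1-5 self-assessment scores).

    Returns the mean change, the paired-samples Cohen's d, and the
    paired t statistic with n - 1 degrees of freedom.
    """
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("Need at least two matched pre/post ratings")
    diffs = [after - before for before, after in zip(pre, post)]
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample standard deviation
    if sd_diff == 0:
        raise ValueError("All differences are identical; d and t are undefined")
    cohens_d = mean_diff / sd_diff
    t_stat = mean_diff / (sd_diff / math.sqrt(len(diffs)))
    return {"mean_diff": mean_diff, "cohens_d": cohens_d,
            "t": t_stat, "df": len(diffs) - 1}


if __name__ == "__main__":
    # Hypothetical ratings for six students; not data from this program.
    toy_pre = [3, 2, 3, 3, 2, 4]
    toy_post = [4, 4, 4, 3, 3, 5]
    print(paired_summary(toy_pre, toy_post))
```

A p value can then be obtained from the t statistic and degrees of freedom using a statistics package. Because Likert-type ratings are ordinal, a nonparametric paired test may also be worth considering.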

Students’ evaluation of their abilities to collaborate interprofessionally, address medical misinformation, and address vaccine hesitancy pre- and postexperience are summarized in Table 2. Overall, most students reported that their abilities were somewhat to much better now than before the activity. No differences existed between professions.

Table 2. Comparison of Self-Assessed Abilities After Completing Educational Experience Between Professions (N = 206).

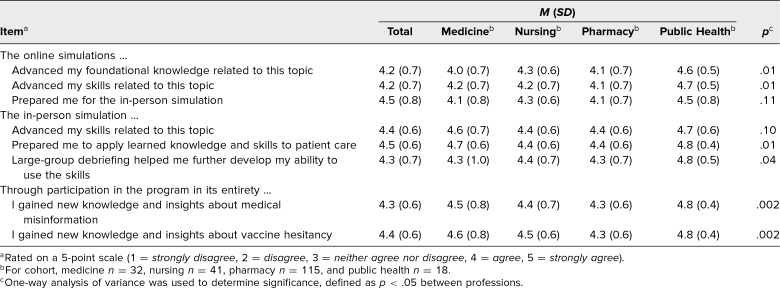

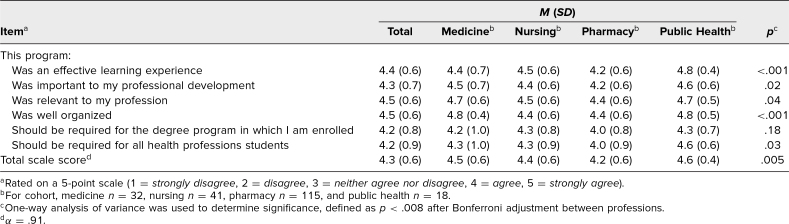

Mean responses to the programmatic evaluation items are summarized in Table 3. Overall, the individual elements of the program were rated highly. Table 4 summarizes the overall program evaluation data. Again, a significant difference (p = .005) existed between professions due to a difference between pharmacy (4.2, SD = 0.6) and public health (4.6, SD = 0.4; p = .03).

Table 3. Comparison of Ratings of Individual Components and Overall Experience Between Professions (N = 206).

Table 4. Comparison of Overall Evaluation of Program Between Professions (N = 206).

Student comments provided insight into the overall quality of the program and its impact. One student commented, “The in-person simulations were very well organized and extremely helpful in preparing me for future real-world conversations with patients.” Another student wrote, “This blew my expectations out of the water. This should be a part of the curriculum for medical students because we see it daily!” Regarding the interprofessional collaboration component, a student commented, “I think working in pairs was a great idea. I learned new information and different ways to approach these realistic challenges. This was a very valuable experience and one that I would certainly recommend to fellow students.”

Discussion

We sought to develop, implement, and assess an IPE program to enhance health professions students’ confidence in engaging in conversations with individuals with vaccine hesitancy and/or beliefs influenced by medical misinformation. Our data indicate that this program was successful in improving students’ self-assessed skills. It addressed an important need within each of the health professions’ curricula and complemented our university's existing IPE program. These data provide useful information to health professions educators on how a hybrid instructional design strategy can positively impact a student's self-assessed skills in having these challenging conversations.

We observed a significant difference in mean self-assessment scores between professions at pre- and postexperience. In general, pharmacy students rated themselves lower than their colleagues at both pre- and postexperience. As mentioned, the individual health professions curricula lack application-based activities related to misinformation and/or vaccine hesitancy, and so, this difference, particularly at preexperience, is likely not related to the didactic content in each curriculum. Previous studies have indicated that a difference in perceived self-assessed skills may exist across students of health professions programs and may be related to gender or their understanding of their role as health professionals.28 We did not collect data to evaluate this; however, it underscores the importance of IPE in cultivating a team-based approach to patient/population health. IPE promotes collaboration among health care professionals, fostering a team-based approach that leads to enhanced patient care quality, shorter hospital stays, cost savings, and fewer medical errors.29,30 The magnitude of change from pre- to postexperience was similar across all professions, indicating that the program impacted students to a similar magnitude despite the observed differences at baseline.

While we included students from medicine, nursing, pharmacy, and public health programs, further development could expand this activity to reach students in other health professions programs. The online and in-person simulation scenarios were developed so that they would not be profession-centric but rather provide a common challenge that could present in multiple environments. Programs can edit or develop other contextually relevant in-person simulation scenarios emphasizing more profession-specific situations if desired. While not necessarily realistic that students, upon entering practice, will engage in these conversations in pairs with another health professional, as a learning experience it is helpful for students to learn about, with, and from each other so that they appreciate how other members of the health care team can complement them even if they are not physically in the same space.

The quantitative data from 206 participants presented here are similar to those of the 51 participants from the pilot study.16 The larger sample size in the present analyses strengthens our findings that the educational program had a positive impact on student self-assessed abilities. In the pilot program, we also assessed student knowledge, which demonstrated modest improvement, but we moved away from that assessment as we felt measuring knowledge was not necessarily in line with the objectives of the program; rather, practicing skills was the focus. A larger sample size also allowed us to gain further insight into the program. Specifically, during debriefing, faculty gained valuable insights into student learning. First, we learned how unique this experience was in the students’ training and how important they felt it was to day-to-day clinical practice or field work. Students often commented that the SPs made the simulations feel more real and applicable. This emphasizes not only the value of simulation but also the importance of SP training. Students appreciated having a partner from a different profession to collaborate with. We often observed students providing insight and advice to each other based on either past personal or professional experiences, which we think helped to provide each student with a different perspective and an appreciation for how another profession can address a shared challenge. While all the in-person simulation scenarios involved interactions with a patient or community member, students were asked to reflect on how their approach would differ if they were interacting with another health professional. Students often struggled with how their approach would be different if communicating with a peer. This revealed an opportunity for future directions of the in-person simulation activities by incorporating peer-to-peer cases.

Our educational program has several limitations. Our evaluation approach relied on student self-assessment. It is important to note that if student competency evaluation is desired, then programs should develop an objective assessment beyond student self-assessment.31 Items included in Table 2 were adopted from the language used in question 21 on the Interprofessional Collaborative Competency Attainment Survey.32 Note that we modified the response scale to set the floor to “stayed the same” as we felt that students’ abilities should not worsen because of participating in IPE. Although not an element of our educational program, the communication rubric we developed (Appendix N) may be helpful to those programs seeking to measure competency attainment. The cost associated with the use of a simulation center is a potential barrier for widespread adoption of this program. However, it is important to note that participants in our study felt the in-person simulations were valuable and impactful. Additionally, because the opportunity to apply and reflect upon skills is an important step in the experiential learning framework, in-person simulation is a critical piece of the program. The essential elements of the in-person simulations are the SPs. Therefore, if an institution is prioritizing how to spend resources, this educational program could be adapted to include SPs alone, without much of the technology or audiovisual recording equipment we used. The prework, including the online simulations, was optional, and completion was not verified prior to students attending the in-person simulations. Although the online simulations were rated favorably, we cannot evaluate the impact they had on performance because they likely were not completed by all participants. We felt it was important to provide participants with some information and an opportunity to practice prior to attending the in-person simulation. 
Future research should investigate the extent to which presimulation preparatory work impacts performance. As the landscape related to vaccine confidence and misinformation continues to evolve, educators will need to update this educational program to reflect contemporary issues. The student participants primarily came from pharmacy; however, we feel that our data (Table 4) support the applicability of this program to various health professions programs.

In addition to expanding the types of in-person case scenarios, future directions include creating an online platform to host live simulations. Rather than students physically presenting themselves at a simulation center, scenarios mirroring telehealth encounters could be developed. This direction would help students develop valuable communication skills in a digital environment. It would also expand the opportunity for students in online health professions degree programs to have access to all elements of the educational program. The program should be piloted with different health professions programs to expand reach. Furthermore, an objective assessment of student performance should be tested.

Vaccine hesitancy, often influenced by medical misinformation, is pervasive and will be an ongoing challenge for health care professionals and students of health professions programs. Our educational program provides health professions students an opportunity to learn, practice, and reflect upon communication skills that are important to their role in addressing this public health crisis.

Appendices

A. Addressing Vaccine Hesitancy at a Community Health Clinic folder

B. Addressing Vaccine Hesitancy at a Vaccine Clinic folder

C. Addressing Vaccine Hesitancy at an Office Visit folder

D. Addressing Vaccine Hesitancy in Pregnancy folder

E. Virtual Simulation Self-Assessment Rubric.docx

F. SP Jesse Fick.docx

G. SP Sam Sampson.docx

H. SP Jamie Wilcox.docx

I. SP Pat Smith.docx

J. In-Person Simulation Prebriefing Script.docx

K. In-Person Simulation Door Instructions.docx

L. In-Person Simulation Debriefing Guide.docx

M. Postprogram Survey.docx

N. In-Person Simulation Communication Rubric.docx

All appendices are peer reviewed as integral parts of the Original Publication.

Acknowledgments

The authors would like to acknowledge Karen Zinnerstrom, PhD, and Phillip Wade of the University at Buffalo Clinical Competency and Behling Human Simulation Centers for their support in offering this educational program.

Disclosures

None to report.

Funding/Support

This educational program was funded in part by a cooperative agreement between the Centers for Disease Control and Prevention and the Association of American Medical Colleges (AAMC) entitled “AAMC Improving Clinical and Public Health Outcomes Through National Partnerships to Prevent and Control Emerging and Re-Emerging Infectious Disease Threats” (FAIN: NU50CK000586).

Ethical Approval

The University at Buffalo Institutional Review Board approved this project.

Disclaimer

The Centers for Disease Control and Prevention (CDC) is an agency within the Department of Health and Human Services (HHS). The information in this educational program does not necessarily represent the policy of CDC or HHS and should not be considered an endorsement by the Federal government.

References

- 1.Ten threats to global health in 2019. World Health Organization. Accessed July 23, 2024. https://www.who.int/news-room/spotlight/ten-threats-to-global-health-in-2019

- 2.MacDonald NE; SAGE Working Group on Vaccine Hesitancy. Vaccine hesitancy: definition, scope and determinants. Vaccine. 2015;33(34):4161–4164. 10.1016/j.vaccine.2015.04.036 [DOI] [PubMed] [Google Scholar]

- 3.Garett R, Young SD. Online misinformation and vaccine hesitancy. Transl Behav Med. 2021;11(12):2194–2199. 10.1093/tbm/ibab128 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Brunson EK. The impact of social networks on parents’ vaccination decisions. Pediatrics. 2013;131(5):e1397–e1404. 10.1542/peds.2012-2452 [DOI] [PubMed] [Google Scholar]

- 5.Beckwith VZ, Beckwith J. Motivational interviewing: a communication tool to promote positive behavior change and optimal health outcomes. NASN Sch Nurse. 2020;35(6):344–351. 10.1177/1942602X20915715 [DOI] [PubMed] [Google Scholar]

- 6.Kolb DA. Experiential Learning: Experience as the Source of Learning and Development. Prentice-Hall; 1984. [Google Scholar]

- 7.Jeffries PR, ed. Simulation in Nursing Education: From Conceptualization to Evaluation. 2nd ed. National League for Nursing; 2012. [Google Scholar]

- 8.Framework for Action on Interprofessional Education & Collaborative Practice. World Health Organization; 2010. Accessed July 23, 2024. http://apps.who.int/iris/bitstream/handle/10665/70185/WHO_HRH_HPN_10.3_eng.pdf;jsessionid=8D63F97C874D45FB844B3D48A30FBD46?sequence=1 [Google Scholar]

- 9.IPEC Core Competencies for Interprofessional Collaborative Practice: Version 3. Interprofessional Education Collaborative. Accessed July 23, 2024. https://www.ipecollaborative.org/assets/core-competencies/IPEC_Core_Competencies_Version_3_2023.pdf [Google Scholar]

- 10.Vyas D, Galal SM, Rogan EL, Boyce EG. Training students to address vaccine hesitancy and/or refusal. Am J Pharm Educ. 2018;82(8):6338. 10.5688/ajpe6338 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Schnaith AM, Evans EM, Vogt C, et al. An innovative medical school curriculum to address human papillomavirus vaccine hesitancy. Vaccine. 2018;36(26):3830–3835. 10.1016/j.vaccine.2018.05.014 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Kelekar A, Rubino I, Kavanagh M, et al. Vaccine hesitancy counseling—an educational intervention to teach a critical skill to preclinical medical students. Med Sci Educ. 2022;32(1):141–147. 10.1007/s40670-021-01495-5 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Jackson J, Guirguis E, Sourial M, Pirmal S, Pinder L. Preparing student-pharmacists to utilize motivational interviewing techniques to address COVID-19 vaccine hesitancy in underrepresented racial/ethnic patient populations. Curr Pharm Teach Learn. 2023;15(8):742–747. 10.1016/j.cptl.2023.07.008 [DOI] [PubMed] [Google Scholar]

- 14.Norton ZS, Olson KB, Sanguino SM. Addressing vaccine hesitancy through a comprehensive resident vaccine curriculum. MedEdPORTAL. 2022;18:11292. 10.15766/mep_2374-8265.11292 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Morhardt T, McCormack K, Cardenas V, Zank J, Wolff M, Burrows H. Vaccine curriculum to engage vaccine-hesitant families: didactics and communication techniques with simulated patient encounter. MedEdPORTAL. 2016;12:10400. 10.15766/mep_2374-8265.10400 [DOI] [Google Scholar]

- 16. Fusco NM, Foltz-Ramos K, Kruger JS, Vargovich A, Prescott WA Jr. Interprofessional simulation to prepare students to address medical misinformation and vaccine hesitancy. J Interprof Educ Pract. 2023;32:100644. 10.1016/j.xjep.2023.100644

- 17. INACSL Standards Committee. INACSL Standards of Best Practice: Simulation℠ simulation design. Clin Simul Nurs. 2016;12(suppl):S5–S12. 10.1016/j.ecns.2016.09.005

- 18. Vaccine recipient education. Centers for Disease Control and Prevention. Updated April 14, 2022. Accessed July 23, 2024. https://www.cdc.gov/vaccines/covid-19/hcp/index.html

- 19. How to address COVID-19 vaccine misinformation. Centers for Disease Control and Prevention. Updated November 3, 2021. Accessed July 23, 2024. https://www.cdc.gov/vaccines/covid-19/health-departments/addressing-vaccine-misinformation.html

- 20. Talking with patients about COVID-19 vaccination. Centers for Disease Control and Prevention. Updated November 3, 2021. Accessed July 23, 2024. https://www.cdc.gov/vaccines/covid-19/hcp/engaging-patients.html

- 21. INACSL Standards Committee. Healthcare Simulation Standards of Best Practice simulation-enhanced interprofessional education. Clin Simul Nurs. 2021;58:49–53. 10.1016/j.ecns.2021.08.015

- 22. Keys E, Luctkar-Flude M, Tyerman J, Sears K, Woo K. Developing a virtual simulation game for nursing resuscitation education. Clin Simul Nurs. 2020;39:51–54. 10.1016/j.ecns.2019.10.009

- 23. Luctkar-Flude M, Tyerman J, Tregunno D, et al. Designing a virtual simulation game as presimulation preparation for a respiratory distress simulation for senior nursing students: usability, feasibility, and perceived impact on learning. Clin Simul Nurs. 2021;52:35–42. 10.1016/j.ecns.2020.11.009

- 24. Tyerman J, Luctkar-Flude M, Chumbley L, et al. Developing virtual simulation games for presimulation preparation: a user-friendly approach for nurse educators. J Nurs Educ Pract. 2021;11(7):10–18. 10.5430/jnep.v11n7p10

- 25. INACSL Standards Committee. Healthcare Simulation Standards of Best Practice prebriefing: preparation and briefing. Clin Simul Nurs. 2021;58:9–13. 10.1016/j.ecns.2021.08.008

- 26. Cheng A, Eppich W, Epps C, Kolbe M, Meguerdichian M, Grant V. Embracing informed learner self-assessment during debriefing: the art of plus-delta. Adv Simul (Lond). 2021;6:22. 10.1186/s41077-021-00173-1

- 27. Rudolph JW, Simon R, Rivard P, Dufresne RL, Raemer DB. Debriefing with good judgment: combining rigorous feedback with genuine inquiry. Anesthesiol Clin. 2007;25(2):361–376. 10.1016/j.anclin.2007.03.007

- 28. Peterson E, Keehn MT, Hasnain M, et al. Exploring differences in and factors influencing self-efficacy for competence in interprofessional collaborative practice among health professions students. J Interprof Care. 2024;38(1):104–112. 10.1080/13561820.2023.2241504

- 29. Institute of Medicine. Health Professions Education: A Bridge to Quality. National Academies Press; 2003.

- 30. Reeves S, Perrier L, Goldman J, Freeth D, Zwarenstein M. Interprofessional education: effects on professional practice and healthcare outcomes. Cochrane Database Syst Rev. 2013;(3):CD002213. 10.1002/14651858.CD002213.pub3

- 31. Gabbard T, Romanelli F. The accuracy of health professions students’ self-assessments compared to objective measures of competence. Am J Pharm Educ. 2021;85(4):8405. 10.5688/ajpe8405

- 32. Schmitz CC, Radosevich DM, Jardine P, MacDonald CJ, Trumpower D, Archibald D. The Interprofessional Collaborative Competency Attainment Survey (ICCAS): a replication validation study. J Interprof Care. 2017;31(1):28–34. 10.1080/13561820.2016.1233096

Supplementary Materials

- Addressing Vaccine Hesitancy at a Community Health Clinic folder

- Addressing Vaccine Hesitancy at a Vaccine Clinic folder

- Addressing Vaccine Hesitancy at an Office Visit folder

- Addressing Vaccine Hesitancy in Pregnancy folder

- Virtual Simulation Self-Assessment Rubric.docx

- SP Jesse Fick.docx

- SP Sam Sampson.docx

- SP Jamie Wilcox.docx

- SP Pat Smith.docx

- In-Person Simulation Prebriefing Script.docx

- In-Person Simulation Door Instructions.docx

- In-Person Simulation Debriefing Guide.docx

- Postprogram Survey.docx

- In-Person Simulation Communication Rubric.docx

All appendices are peer reviewed as integral parts of the Original Publication.