Abstract

Dynamic mode decomposition (DMD) and its variants, such as extended DMD (EDMD), are broadly used to fit simple linear models to dynamical systems known from observable data. As DMD methods work well in several situations but perform poorly in others, a clarification of the assumptions under which DMD is applicable is desirable. Upon closer inspection, existing interpretations of DMD methods based on the Koopman operator are not quite satisfactory: they justify DMD under assumptions that hold only with probability zero for generic observables. Here, we give a justification for DMD as a local, leading-order reduced model for the dominant system dynamics under conditions that hold with probability one for generic observables and non-degenerate observational data. We achieve this for autonomous and for periodically forced systems of finite or infinite dimensions by constructing linearizing transformations for their dominant dynamics within attracting slow spectral submanifolds (SSMs). Our arguments also lead to a new algorithm, data-driven linearization (DDL), which is a higher-order, systematic linearization of the observable dynamics within slow SSMs. We show by examples how DDL outperforms DMD and EDMD on numerical and experimental data.

Supplementary Information

The online version contains supplementary material available at 10.1007/s11071-024-10026-x.

Introduction

In recent years, there has been an overwhelming interest in devising linear models for dynamical systems from experimental or numerical data (see the recent review by Schmid [51]). This trend was largely started by the dynamic mode decomposition (DMD), put forward in seminal work by Schmid [50]. The original exposition of the method has been streamlined by various authors, most notably by Rowley et al. [49] and Kutz et al. [32].

To describe DMD, we consider an autonomous dynamical system

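$$\dot{x} = f(x), \qquad x \in \mathbb{R}^n, \qquad f \in C^r, \tag{1}$$

for some $r \ge 1$. Trajectories $x(t; x_0)$ of this system evolve from initial conditions $x(0) = x_0$. The flow map $\mathbf{F}^t$ is defined as the mapping taking the initial trajectory positions at time $t = 0$ to their current positions at time $t$, i.e.,

$$\mathbf{F}^t \colon \mathbb{R}^n \to \mathbb{R}^n, \qquad x_0 \mapsto \mathbf{F}^t(x_0) = x(t; x_0). \tag{2}$$

As observations of the full state space variable $x$ of system (1) are often not available, one may try to explore the dynamical system (1) by observing d smooth scalar functions $\phi_1(x), \dots, \phi_d(x)$ along trajectories of the system. We order these scalar observables into the observable vector

$$\phi(x) = \left(\phi_1(x), \dots, \phi_d(x)\right)^{\mathrm{T}} \in \mathbb{R}^d. \tag{3}$$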

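The basic idea of DMD is to approximate the observed evolution of $\phi(x(t))$ of the dynamical system with the closest fitting autonomous linear dynamical system

$$\dot{\phi} = B\phi, \qquad \phi \in \mathbb{R}^d, \qquad B \in \mathbb{R}^{d\times d}, \tag{4}$$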

based on available trajectory observations.

This is a challenging objective for multiple reasons. First, the original dynamical system (1) is generally nonlinear whose dynamics cannot be well approximated by a single linear system on a sizable open domain. For instance, one may have several isolated, coexisting attracting or repelling stationary states (such as periodic orbits, limit cycles or quasiperiodic tori), which linear systems cannot have. Second, it is unclear why the dynamics of d observables should be governed by a self-contained autonomous dynamical system induced by the original system (1), whose dimension is n. Third, the result of fitting system (4) to observable data will clearly depend on the initial conditions used, the number and the functional form of the observables chosen, as well as on the objective function used in minimizing the fit.

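Despite these challenges, we may proceed to find an appropriately defined closest linear system (4) based on available observable data. We assume that for some fixed time step $\Delta t > 0$, discrete observations of m initial conditions, $\phi(x_1), \dots, \phi(x_m)$, and their images $\phi(\mathbf{F}^{\Delta t}(x_1)), \dots, \phi(\mathbf{F}^{\Delta t}(x_m))$ under the sampled flow map are available in the data matrices

$$X = \left[\phi(x_1), \dots, \phi(x_m)\right] \in \mathbb{R}^{d\times m}, \qquad Y = \left[\phi(\mathbf{F}^{\Delta t}(x_1)), \dots, \phi(\mathbf{F}^{\Delta t}(x_m))\right] \in \mathbb{R}^{d\times m}, \tag{5}$$

respectively. We seek the best-fitting linear system of the form (4) whose time-$\Delta t$ map $T$ satisfies

$$Y \approx T X, \qquad T \in \mathbb{R}^{d\times d}. \tag{6}$$

The eigenvalues of such a $T$ are usually called DMD eigenvalues, and their corresponding eigenvectors are called the DMD modes.

Various norms can be chosen with respect to which the difference of $Y$ and $TX$ is to be minimized. The most straightforward choice is the Euclidean (Frobenius) matrix norm $\|\cdot\|$, which leads to the minimization principle

$$T = \underset{\tilde{T} \in \mathbb{R}^{d\times d}}{\arg\min} \left\| Y - \tilde{T} X \right\|. \tag{7}$$

An explicit solution to this problem is given by

$$T = Y X^{\dagger}, \tag{8}$$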

with the dagger referring to the pseudo-inverse of a matrix (see, e.g., Kutz et al. [32] for details). We note that the original formulation of Schmid [50] is for discrete dynamical processes and assumes observations of a single trajectory (see also Rowley et al. [49]).
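A minimal NumPy sketch of the least-squares solution (8) is given below. It assumes the snapshot pairs are already arranged column-wise as in (5); the function name `dmd` and the synthetic test data are illustrative and not taken from any particular DMD package.

```python
import numpy as np


def dmd(X: np.ndarray, Y: np.ndarray):
    """Least-squares DMD: T = Y X^+ minimizes the Frobenius norm ||Y - T X||.

    X, Y: d x m arrays whose j-th columns hold phi(x_j) and its image
    after one sampling step. Returns T, the DMD eigenvalues and DMD modes.
    """
    T = Y @ np.linalg.pinv(X)
    eigvals, modes = np.linalg.eig(T)
    return T, eigvals, modes


# Synthetic data from an exactly linear map x -> T_true x (d = 2, m = 20).
rng = np.random.default_rng(0)
T_true = np.array([[0.9, 0.1],
                   [0.0, 0.5]])
X = rng.standard_normal((2, 20))
Y = T_true @ X
T, lam, modes = dmd(X, Y)
print(np.sort(lam.real))  # approximately [0.5, 0.9]
```

Because X has full row rank here, $XX^{\dagger} = I$ and the generating matrix is recovered exactly; for data from a nonlinear system, (8) only returns the best linear fit in the sense of (7).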

Among several later variants of DMD surveyed by Schmid [51], the most broadly used one is the Extended Dynamic Mode Decomposition (EDMD) of Williams et al. [58]. This procedure seeks the best-fitting linear dynamics for an a priori unknown set of functions $\Psi(\phi)$ of $\phi$, rather than for $\phi$ itself. In practice, one often chooses $\Psi$ as an N(d, k)-dimensional vector of d-variate scalar monomials of order k or less, where $N(d,k) = \binom{d+k}{k}$ is the total number of all such monomials. The underlying assumption of EDMD is that a self-contained linear dynamical system of the form

$$\dot{\Psi} = G\Psi, \qquad \Psi \in \mathbb{R}^{N(d,k)}, \qquad G \in \mathbb{R}^{N(d,k)\times N(d,k)}, \tag{9}$$

can be obtained on the feature space by optimally selecting $\Psi$. For physical systems, the N(d, k)-dimensional ODE (9) defined on the feature space can be substantially higher-dimensional than the d-dimensional ODE (4). In fact, N(d, k) may even exceed the dimension n of the phase space of the original nonlinear system (1).

Once the function library used in EDMD is fixed, one again seeks to choose the time-$\Delta t$ map $K \in \mathbb{R}^{N(d,k)\times N(d,k)}$ of (9) so that

$$\Psi(Y) \approx K\,\Psi(X),$$

with $\Psi$ acting column-wise on the data matrices X and Y in (5).

This again leads to a linear optimization problem that can be solved using linear algebra tools. For higher-dimensional systems, a kernel-based version of EDMD was developed by Williams et al. [59]. This method computes inner products necessary for EDMD implicitly, without requiring an explicit representation of (polynomial) basis functions in the space of observables. As a result, kernel-based EDMD operates at computational costs comparable to those of the original DMD.
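The sketch below illustrates EDMD with the monomial library described above. The helper names, the dictionary ordering, and the test map are hypothetical choices; the dictionary size is checked against the standard count $\binom{d+k}{k}$ of d-variate monomials of degree at most k.

```python
import itertools
from math import comb

import numpy as np


def monomial_exponents(d: int, k: int):
    """Exponent tuples of all d-variate monomials of total degree <= k."""
    return [e for e in itertools.product(range(k + 1), repeat=d) if sum(e) <= k]


def monomial_features(Z: np.ndarray, k: int) -> np.ndarray:
    """Evaluate every monomial z**e on each column z of the d x m array Z."""
    exps = monomial_exponents(Z.shape[0], k)
    return np.array([np.prod(Z ** np.array(e)[:, None], axis=0) for e in exps])


def edmd(X: np.ndarray, Y: np.ndarray, k: int) -> np.ndarray:
    """Least-squares EDMD matrix K = Psi(Y) Psi(X)^+ on the monomial dictionary."""
    return monomial_features(Y, k) @ np.linalg.pinv(monomial_features(X, k))


# The dictionary size equals N(d, k) = binom(d + k, k).
assert len(monomial_exponents(2, 3)) == comb(5, 3)  # N(2, 3) = 10

# Hypothetical map: x1 -> a x1, x2 -> b x2 + c x1**2, observed with phi = x.
a, b, c = 0.8, 0.5, 0.3
rng = np.random.default_rng(1)
X = rng.uniform(-1.0, 1.0, (2, 200))
Y = np.vstack([a * X[0], b * X[1] + c * X[0] ** 2])
exps = monomial_exponents(2, 2)
K = edmd(X, Y, 2)
row = K[exps.index((0, 1))]   # update rule fitted for the monomial x2
print(row[exps.index((0, 1))], row[exps.index((2, 0))])  # approximately b and c
```

Only rows whose image stays in the span of the dictionary (here the update of x2) are reproduced exactly; rows for higher monomials such as x2² are merely least-squares fits, which reflects the closure assumption behind (9).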

Prior justifications for DMD methods

Available justifications for DMD (see [49]) and EDMD (see [58]) are based on the Koopman operator, whose basics we review in Appendix A.1 for completeness. The argument starts with the observation that special observables falling in invariant subspaces of this operator in the space of all observables obey linear dynamics. Consequently, DMD should recover the Koopman operator restricted to this subspace if the observables are taken from such a subspace.

In this sense, DMD is viewed as an approximate, continuous immersion of a nonlinear system into an infinite-dimensional linear dynamical system. While such an immersion is not possible for typical nonlinear systems with multiple limit sets (see [38, 39]), one still hopes that this approximate immersion is attainable via DMD or EDMD for nonlinear systems with a single attracting steady state that satisfies appropriate nondegeneracy conditions (see [33]). In that case, unlike classic local linearization near fixed points, the linearization via DMD or EDMD is argued to be non-local, as it covers the full domain of definition of Koopman eigenfunctions spanning the underlying Koopman-invariant subspace.

However, Koopman eigenfunctions, whose existence, domain of definition and exact form are a priori unknown for general systems, are notoriously difficult, if not impossible, to determine accurately from data. More importantly, even if Koopman-invariant subspaces of the observable space were known, any countable set of generically chosen observables would lie outside those subspaces with probability one. As a consequence, DMD eigenvectors (which are generally argued to be approximations of Koopman eigenfunctions and can be used to compute Koopman modes¹) would also lie outside Koopman-invariant subspaces, given that such eigenvectors are just linear combinations of the available observables. Consequently, practically observed data sets would fall under the realm of Koopman-based explanation for DMD with probability zero. This is equally true for EDMD, whose flexibility in choosing the function set of observables also introduces further user-dependent heuristics beyond the choice of the dimension d in DMD.

One may still hope that by enlarging the dimension d of observables in DMD and enlarging the function library in EDMD, the optimization involved in these methods brings DMD and EDMD eigenvectors closer and closer to Koopman eigenfunctions. The required enlargements, however, may mean hundreds or thousands of dimensions even for dynamical systems governed by simple, low-dimensional ODEs [59]. These enlargements succeed in fitting linear systems closely to sets of observed trajectories, but they also unavoidably lead to overfits that give unreliable predictions for initial conditions not used in the training of DMD or EDMD. Indeed, the resulting large linear systems can perform substantially worse in prediction than much lower dimensional linear or nonlinear models obtained from other data-driven techniques (see, e.g., Alora et al. [1]).

Similar issues arise in justifying the kernel-based EDMD of Williams et al. [59] based on the Koopman operator. Additionally, the choice of the kernel function that represents the inner product of the now implicitly defined polynomial basis functions remains heuristic and problem-dependent. Again, the accuracy of the procedure is not guaranteed, as available observed data generically do not lie in a Koopman eigenspace. As Williams et al. [59] write: “Like most existing data-driven methods, there is no guarantee that the kernel approach will produce accurate approximations of even the leading eigenvalues and modes, but it often appears to produce useful sets of modes in practice if the kernel and truncation level of the pseudoinverse are chosen properly.”

Finally, a lesser known limitation of the Koopman-based approach to DMD is the limited domain in the phase space over which Koopman eigenfunctions (and hence their corresponding invariant subspaces) are defined in the observable space. Specifically, at least one principal Koopman eigenfunction necessarily blows up near basin boundaries of attracting and repelling fixed points and periodic orbits (see Proposition 1 of our Appendix A.4 for a precise statement and Theorem 3 of Kvalheim and Arathoon [33] for a more general related result).

Expansions of observables in terms of such blowing-up eigenfunctions have even smaller domains of convergence, as was shown explicitly in a simple example by Page and Kerswell [44]. This is a fundamental obstruction to the often envisioned concept of global linear models built of different Koopman eigenfunctions over multiple domains of attraction (see, e.g., Williams et al. [58], p. 1309). While it is broadly known that such models would be discontinuous along basin boundaries [33, 38, 39], it is rarely noted (see Kvalheim and Arathoon [33] for a rare exception) that such models would also generally blow up at those boundaries and hence would become unmanageable even before reaching the boundaries.

For these reasons, an alternative mathematical foundation for DMD is desirable. Ideally, such an approach should be defined on an equal or lower dimensional space, rather than on higher or even infinite-dimensional spaces, as suggested by the Koopman-based view on DMD. This should help in avoiding overfitting and computational difficulties. Additionally, an ideal treatment of DMD should also provide specific non-degeneracy conditions on the underlying dynamical system, on the available observables, and on the specific data to be used in the DMD procedure.

In this paper, we develop a treatment of DMD that satisfies these requirements. This enables us to derive conditions under which DMD approximates the dominant linearized observable dynamics near hyperbolic fixed points and periodic orbits of finite- and infinite-dimensional dynamical systems.

Our approach to DMD also leads to a refinement of DMD which we call data-driven linearization (or DDL, for short). DDL effectively carries out exact local linearization via nonlinear coordinate changes on a lower-dimensional attracting invariant manifold (spectral submanifold) of the dynamical system. We illustrate the increased accuracy and domain of validity of DDL models relative to those obtained from DMD and EDMD on examples of autonomous and forced dynamical systems.

A simple justification for the DMD algorithm

Here we give an alternative interpretation of DMD and EDMD as approximate models for a dynamical system known through a set of observables. The main idea (to be made more precise shortly) is to view DMD executed on d observables as a model reduction tool that captures the leading-order dynamics of phase space variables along a d-dimensional slow manifold in terms of .

Such manifolds arise as slow spectral submanifolds (SSMs) under weak non-degeneracy assumptions on the linearized spectrum at stable hyperbolic fixed points of the n-dimensional dynamical system (see [10, 12, 22]). Specifically, a slow SSM is tangent to the real eigenspace E spanned by the d slowest decaying linearized modes at the fixed point. If m sample trajectories are released from a set of initial conditions at time , then due to their fast decay along the remaining fast spectral subspace F , the trajectories will become exponentially close to by some time , and closely synchronize with its internal dynamics, as seen in Fig. 1.

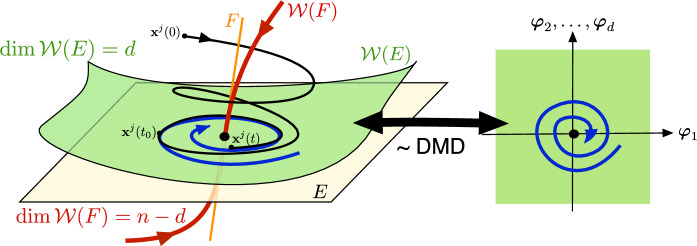

Fig. 1.

The geometric meaning of DMD performed on d observables, , after initial fast transients die out at an exponential rate in the data. DMD then identifies the leading-order (linear) dynamics on a d-dimensional attracting spectral submanifold (SSM) tangent to the d-dimensional slow spectral subspace E. These linearized dynamics can be expressed in terms of the SSM-restricted observables . Also shown is the spectral subspace F of the faster decaying modes and its associated nonlinear continuation, the fast spectral subspace

If admits a non-degenerate parametrization with respect to the observables , then one can pass to these observables as new coordinates in which to approximate the leading order, linearized dynamics inside . Specifically, DMD executed over time the interval provides the closest linear fit to the reduced dynamics of from the available observable histories for , as illustrated in Fig. 1. This fit is a close representation of the actual linearized dynamics on the SSM if the trajectory data is sufficiently diverse for . The resulting DMD model with coefficient matrix will then be smoothly conjugate to the linearized reduced dynamics on with an error of the order of the distance of from between the times and .

The slow SSM may also contain unstable modes in applications. Similar results hold for that case as well, provided that we select a small enough , ensuring that the trajectories are not ejected from the vicinity of the fixed point origin for . As DMD will show sensitivity with respect to the choice of and in this case, we will not discuss the justification of DMD near unstable fixed points beyond Remarks 1 and 7.

In the following sections, we make this basic idea more precise both for finite and infinite-dimensional dynamical systems. This approach also reveals explicit, previously undocumented non-degeneracy conditions on the underlying dynamical system, on the available observable functions and on the specific data set used, under which DMD should give meaningful results.
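A toy check of this picture, assuming a hypothetical planar system $\dot x = -x$, $\dot y = -10y + x^2$ whose trajectories and slow SSM $y = x^2/8$ are known in closed form. DMD on a single observable ($d = 1$) should approximate the slow linearized time-$\Delta t$ map $e^{-\Delta t}$ only after the fast transients along F have decayed.

```python
import numpy as np

# Hypothetical planar example with a stable hyperbolic fixed point at 0:
#   x' = -x,  y' = -10 y + x**2.
# E is the x-axis (rate -1), F is the y-axis (rate -10), and the slow SSM
# is the parabola y = x**2 / 8, on which the linearized rate is -1.


def solution(t, x0, y0):
    """Closed-form trajectory through (x0, y0) at time t."""
    x = x0 * np.exp(-t)
    y = (y0 - x0**2 / 8) * np.exp(-10 * t) + x0**2 * np.exp(-2 * t) / 8
    return x, y


def phi(x, y):
    """A single observable (d = 1) mixing slow and fast coordinates."""
    return x + y


def dmd_eigenvalue(t_start, x0, y0, dt):
    """One-observable DMD on snapshot pairs taken at t_start and t_start + dt."""
    X = phi(*solution(t_start, x0, y0))[None, :]
    Y = phi(*solution(t_start + dt, x0, y0))[None, :]
    return (Y @ np.linalg.pinv(X)).item()


dt = 0.1
rng = np.random.default_rng(0)
x0, y0 = rng.uniform(-0.5, 0.5, (2, 10))      # m = 10 initial conditions
exact = np.exp(-dt)                            # slow linearized time-dt map
err_early = abs(dmd_eigenvalue(0.0, x0, y0, dt) - exact)  # fast transients present
err_late = abs(dmd_eigenvalue(3.0, x0, y0, dt) - exact)   # after transients (t0 = 3)
print(err_early, err_late)
```

With data taken after $t_0 = 3$, the remaining error stems from the nonlinearity of the observable along the SSM (the $x^2/8$ term) and shrinks as the data approach the origin.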

Justification of DMD for continuous dynamical systems

We start by assuming that the observed dynamics take place in a domain containing a fixed point, which we assume, without loss of generality, to be at the origin, i.e.,

$$f(0) = 0. \tag{10}$$

We can then rewrite the dynamical system (1) in the more specific form

$$\dot{x} = Ax + o(|x|), \qquad A = Df(0) \in \mathbb{R}^{n\times n}, \tag{11}$$

where the classic notation $o(|x|)$ refers to the fact that $\lim_{x \to 0} |f(x) - Ax|/|x| = 0$.

Most expositions of DMD methods and their variants do not state assumption (10) explicitly and hence may appear less restrictive than our treatment here. However, all of them implicitly assume the existence of such a fixed point, as all of them end up returning homogeneous linear ODEs or mappings with a fixed point at the origin. Indeed, all known applications of these methods that produce reasonable accuracy target the dynamics of ODEs or discrete maps near their fixed points.

Assumption (10) can be replaced with the existence of a limit cycle in the original system (1), in which case the first return map (or Poincaré map) defined near the limit cycle will have a fixed point. We give a separate treatment on justifying DMD as a linearization for such a Poincaré map in Sect. 3.2.

We do not advocate, however, the often-used procedure of applying DMD to fit a linear system to the flow map (rather than the Poincaré map) near a stable limit cycle. Such a fit only produces the desired limiting periodic behavior if the user artificially constrains one or more of the DMD eigenvalues to lie on the complex unit circle. This renders the DMD model both structurally unstable and conceptually inaccurate for prediction. Indeed, the model will approximate the originally observed limit cycle and convergence to it only within a measure-zero, cylindrical set of its phase space. Outside this set, all trajectories of the DMD model will converge to some other member of the infinite family of periodic orbits or invariant tori within the center subspace corresponding to the unitary eigenvalues. These periodic orbits or tori have a continuous range of locations and amplitudes, and hence represent spurious asymptotic behaviors that are not seen in the original dynamical system (1).

In the special case of $d = n$ and for the special observable $\phi(x) = x$, the near-linear form of Eq. (11) motivates the DMD procedure because a linear approximation to the system near $x = 0$ seems feasible. It is a priori unclear, however, to what extent the nonlinearities distort the linear dynamics and how DMD would account for that. Additionally, in a data-driven analysis, choosing the full phase space variable $x$ as the observable is generally unrealistic. For these reasons, a mathematical justification of DMD requires further assumptions, as we discuss next.

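Let $\lambda_1, \dots, \lambda_n$ denote the eigenvalues of $A$ and let $e_1, \dots, e_n$ denote the corresponding generalized eigenvectors. We assume that at least one of the modes of the linearized system at $x = 0$ decays exponentially and that at least one other mode decays more slowly or even grows. More specifically, for some positive integer $d < n$, we assume that the spectrum of A can be partitioned as

$$\operatorname{Re}\lambda_n \le \dots \le \operatorname{Re}\lambda_{d+1} < \operatorname{Re}\lambda_d \le \dots \le \operatorname{Re}\lambda_1, \qquad \operatorname{Re}\lambda_{d+1} < 0. \tag{12}$$

This guarantees the existence of a d-dimensional, normally attracting slow spectral subspace

$$E = \operatorname{span}\left\{\operatorname{Re} e_1, \operatorname{Im} e_1, \dots, \operatorname{Re} e_d, \operatorname{Im} e_d\right\} \subset \mathbb{R}^n \tag{13}$$

for the linearized dynamics, with the linear decay rate towards E strictly dominating all decay rates inside E. Note that the set of vectors $\{\operatorname{Re} e_j, \operatorname{Im} e_j\}_{j=1}^{d}$ is, in general, not linearly independent, but these vectors span a d-dimensional subspace. We also define the (real) spectral subspace of faster decaying linear modes:

$$F = \operatorname{span}\left\{\operatorname{Re} e_{d+1}, \operatorname{Im} e_{d+1}, \dots, \operatorname{Re} e_n, \operatorname{Im} e_n\right\} \subset \mathbb{R}^n. \tag{14}$$

We will also use matrices containing the left and right eigenvectors of the operator $A$ and of its restrictions, $A_E = A|_E$ and $A_F = A|_F$, to its spectral subspaces E and F, respectively. Specifically, we let

$$V = \left[V_E,\; V_F\right], \qquad W = \begin{bmatrix} W_E \\ W_F \end{bmatrix}, \tag{15}$$

where the columns of $V_E$ are the real and imaginary parts of the generalized right eigenvectors of $A_E$, and the columns of $V_F$ are defined analogously for $A_F$. Similarly, the rows of $W_E$ are the real and imaginary parts of the generalized left eigenvectors of $A_E$, and the rows of $W_F$ are defined analogously for $A_F$. Under assumption (12), F is always a fast spectral subspace, containing all trajectories of the linearized system that decay to the origin faster than any trajectory in E.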
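As an illustration of (13)-(15), the following NumPy sketch assembles real bases for E and F from a numerical eigendecomposition. It assumes A is diagonalizable and that the spectral gap (12) does not split a complex-conjugate pair; W is simply taken as the inverse of $[V_E, V_F]$, whose rows span the same subspaces as the real and imaginary parts of the left eigenvectors (possibly with a different normalization). The function name and the test matrix are hypothetical.

```python
import numpy as np


def real_spectral_bases(A: np.ndarray, d: int, tol: float = 1e-12):
    """Real bases V_E (n x d) and V_F (n x (n - d)) of the slow and fast
    spectral subspaces of A, plus dual rows W_E, W_F with W V = I.

    Assumes A is diagonalizable and that the d eigenvalues with the largest
    real parts do not split a complex-conjugate pair.
    """
    lam, vecs = np.linalg.eig(A)
    order = np.argsort(-lam.real)          # slowest (largest real part) first
    lam, vecs = lam[order], vecs[:, order]

    def real_basis(values, vectors):
        cols = []
        for value, v in zip(values, vectors.T):
            if abs(value.imag) < tol:      # real eigenvalue: one real vector
                cols.append(v.real)
            elif value.imag > 0:           # complex pair: take Re and Im once
                cols.extend([v.real, v.imag])
        return np.column_stack(cols)

    V_E = real_basis(lam[:d], vecs[:, :d])
    V_F = real_basis(lam[d:], vecs[:, d:])
    W = np.linalg.inv(np.hstack([V_E, V_F]))
    return V_E, V_F, W[:d], W[d:]


A = np.array([[-0.1, 1.0, 0.0],
              [-1.0, -0.1, 0.0],
              [0.0, 0.0, -5.0]])
V_E, V_F, W_E, W_F = real_spectral_bases(A, d=2)
print(np.linalg.eigvals(W_E @ A @ V_E))   # approximately -0.1 +/- 1j
```

The matrix $W_E A V_E$ is a real $d\times d$ representation of $A_E$; its eigenvalues are the slow eigenvalues of A.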

We will use the notation

$$\hat{X} = \left[x_1, \dots, x_m\right] \in \mathbb{R}^{n\times m}, \qquad \hat{Y} = \left[\mathbf{F}^{\Delta t}(x_1), \dots, \mathbf{F}^{\Delta t}(x_m)\right] \in \mathbb{R}^{n\times m} \tag{16}$$

for the trajectory data in the underlying dynamical system (1) from which the observable data matrices $X$ and $Y$ defined in (5) are computed. In truly data-driven applications, the matrices $\hat{X}$ and $\hat{Y}$ are not known. We will nevertheless use them to make precise statements about a required dominance of the slow linear modes of E in the available data. Such a dominance will arise for generic initial conditions if one selects the initial conditions after initial fast transients along F have died out. In practice, this can be achieved by choosing the initial time $t_0$ only after a linear spectral analysis of the observable data matrix $X$ returns a number of dominant frequencies consistent with a d-dimensional SSM.

We now state a theorem that provides a general justification for the DMD procedure under explicit non-degeneracy conditions and with specific error bounds. Specifically, we give a minimal set of conditions under which DMD can be justified as an approximate, leading-order, d-dimensional reduced-order model for a nonlinear system of dimension $n > d$ near its fixed point. Given their relevance for applications, we state Theorem 1 only for stable hyperbolic fixed points, but subsequently discuss its extension to unstable fixed points in Remark 1.

Theorem 1

(Justification of DMD for ODEs with stable hyperbolic fixed points) Assume that

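(A1) The origin, $x = 0$, is a stable hyperbolic fixed point of system (11) with a spectral gap, i.e., the spectrum satisfies Eq. (12).

(A2) $f$ is smooth enough in a neighborhood of the origin for the linearization theorem of Hartman [23] to apply.

(A3) For some integer $d < n$, a d-dimensional observable function $\phi$ and the d-dimensional slow spectral subspace E of the hyperbolic fixed point of system (11) satisfy the non-degeneracy condition

$$\operatorname{rank}\left[D\phi(0)\, V_E\right] = d. \tag{17}$$

(A4) The data matrices $X$ and $Y$ are non-degenerate and the initial conditions in $\hat{X}$ and $\hat{Y}$ have been selected after fast transients from the modes outside E have largely died out, i.e.,

$$\operatorname{rank} X = \operatorname{rank} Y = d, \qquad \left\|W_F \hat{X}\right\| \le \varepsilon \left\|W_E \hat{X}\right\|, \qquad \left\|W_F \hat{Y}\right\| \le \varepsilon \left\|W_E \hat{Y}\right\| \tag{18}$$

for some $0 < \varepsilon \ll 1$.

Then the DMD computed from $X$ and $Y$ yields a matrix $T$ that is locally topologically conjugate with $\mathcal{O}(\varepsilon)$ error to the linearized dynamics on a d-dimensional, slow attracting spectral submanifold $\mathcal{W}(E)$ tangent to E at $x = 0$. Specifically, we have

$$T = D\phi(0)\big|_E \, e^{A_E \Delta t} \left[D\phi(0)\big|_E\right]^{-1} + \mathcal{O}(\varepsilon). \tag{19}$$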

Proof

Under assumptions (A1) and (A2), any trajectory in a neighborhood of the origin in the nonlinear system (11) converges at an exponential rate to a d-dimensional attracting spectral submanifold $\mathcal{W}(E)$ tangent to the d-dimensional attracting slow spectral subspace E of the linearized system at the origin. This follows from the linearization theorem of Hartman [23], which is applicable to dynamical systems with a stable hyperbolic fixed point. Under assumption (A3), the d-dimensional observable function $\phi$ restricted to $\mathcal{W}(E)$ can be used to parametrize $\mathcal{W}(E)$ near the origin, and hence a d-dimensional, self-contained nonlinear dynamical system can be written down for the restricted observable $\phi|_{\mathcal{W}(E)}$ along $\mathcal{W}(E)$. Under the first assumption in (A4), the available observational data matrices $X$ and $Y$ are rich enough to characterize the reduced dynamics on $\mathcal{W}(E)$. Under the second assumption in (A4), transients from the faster modes outside E have largely died out before the selection of the initial conditions in $\hat{X}$, so that the linear part of the dynamics on $\mathcal{W}(E)$ can be approximately inferred from $X$ and $Y$. In that case, up to an error proportional to the distance of the training data from $\mathcal{W}(E)$, the matrix $T$ returned by DMD is similar to the time-$\Delta t$ flow map of the linearized flow of the underlying dynamical system restricted to $\mathcal{W}(E)$. This linearized flow then acts as a local reduced-order model with which nearby trajectory observations synchronize exponentially fast in the observable space. We give a more detailed proof of the theorem in Appendix B.

Remark 1

In Theorem 1, we can replace assumption (A1) with

| 20 |

This means that the fixed point is only assumed to be hyperbolic with a spectral gap and to have an attracting d-dimensional spectral subspace E that possibly contains some instabilities, i.e., eigenvalues with positive real parts. Then the statements of Theorem 1 still hold, but the spectral submanifold $\mathcal{W}(E)$ and its reduced dynamics are then only guaranteed to be differentiable at $x = 0$ and Hölder-continuous at other points near the fixed point. This follows by replacing the linearization theorem of Hartman [23] with that of van Strien [56], which still enables us to use Eq. (79) in the proof. Therefore, slow subspaces E containing a mixture of stable and unstable modes can also be allowed, as long as F contains only fast modes consistent with the splitting assumed in Eq. (12). In that case, however, the sampling times must be chosen carefully to ensure that the data used in DMD still sample a neighborhood of the origin.

Remark 2

In related work, Bollt et al. [7] construct the transformation relating a pair of conjugate dynamical systems based on a limited set of matching Koopman eigenfunctions, which are either known explicitly or constructed from EDMD with dictionary learning (EDMD-DL; see [36]). In principle, this could be used to construct linearizing transformations as well. However, even when the eigenfunctions are approximated from data, the approach assumes that the linearized system, as well as a linearized trajectory and its preimage under the linearization, are available. As these assumptions are not satisfied in practice, only very simple and low-dimensional analytic examples are treated by Bollt et al. [7].

In Appendix B, Remarks 4 and 5 summarize technical points on the application and possible further extensions of Theorem 1. In practice, Theorem 1 provides previously unspecified non-degeneracy conditions on the linear part of the dynamical system to be analyzed via DMD (assumption (A1)), on the regularity of the nonlinear part of the system (assumption (A2)), on the type of observables available for the analysis (assumption (A3)) and on the specific observable data used in the analysis (assumption (A4)). The latter assumption requires at least as many independent observations in time as observables (i.e., $m \ge d$ and $\operatorname{rank} X = d$). This specifically excludes the popular use of tall observable data matrices (with more rows than columns), which provide more free parameters to pattern-match observational data but also lead to an overfit that diminishes the predictive power of the DMD model on initial conditions not used in its training.

To illustrate these points, we demonstrate the necessity of assumptions (A2)–(A4) of Theorem 1 in Appendix C on simple examples.
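The rank part of assumption (A4) can be checked before running DMD. The sketch below uses a relative singular-value tolerance `rtol` chosen arbitrarily for illustration; the second, transient-decay part of (A4) involves the unknown full-state data and is not tested. The function name is hypothetical.

```python
import numpy as np


def check_dmd_data(X: np.ndarray, Y: np.ndarray, rtol: float = 1e-10) -> bool:
    """Heuristic test of the rank part of assumption (A4): at least as many
    snapshot pairs as observables (m >= d) and numerical rank d for X and Y.
    The transient-decay part of (A4) cannot be tested from X and Y alone.
    """
    d, m = X.shape
    if Y.shape != (d, m) or m < d:        # 'tall' data: fewer snapshots than observables
        return False

    def numerical_rank(M: np.ndarray) -> int:
        # Singular values below rtol * (largest singular value) count as zero.
        return int(np.linalg.matrix_rank(M, tol=rtol * np.linalg.norm(M, 2)))

    return numerical_rank(X) == d and numerical_rank(Y) == d


rng = np.random.default_rng(0)
X, Y = rng.standard_normal((3, 10)), rng.standard_normal((3, 10))
print(check_dmd_data(X, Y))                    # generic data with m >= d
print(check_dmd_data(X[:, :2], Y[:, :2]))      # tall: m = 2 < d = 3
```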

Justification of DMD for discrete and for time-periodic continuous dynamical systems

The linearization results we have applied to deduce Theorem 1 are equally valid for discrete dynamical systems defined by iterated mappings. Such mappings are of the form

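$$x \mapsto \mathbf{F}(x) = Ax + o(|x|), \qquad x \in \mathbb{R}^n, \qquad \mathbf{F}(0) = 0. \tag{21}$$

We will use a similar ordering for the eigenvalues $\lambda_1, \dots, \lambda_n$ of $A$ as in the continuous-time case:

$$|\lambda_n| \le \dots \le |\lambda_{d+1}| < |\lambda_d| \le \dots \le |\lambda_1| < 1. \tag{22}$$

As in the continuous-time case, we will use the observable data matrices

$$X = \left[\phi(x_1), \dots, \phi(x_m)\right], \qquad Y = \left[\phi(\mathbf{F}(x_1)), \dots, \phi(\mathbf{F}(x_m))\right], \tag{23}$$

with the initial conditions for the map stored in $\hat{X} = \left[x_1, \dots, x_m\right]$ and their images in $\hat{Y} = \left[\mathbf{F}(x_1), \dots, \mathbf{F}(x_m)\right]$.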

With these ingredients, we need only minor modifications in the assumptions of the theorems that account for the usual differences between the spectrum of an ODE and a map.

Theorem 2

(Justification of DMD for maps with stable hyperbolic fixed points) Assume that

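(A1) $x = 0$ is a stable hyperbolic fixed point of system (21), i.e., assumption (22) holds.

(A2) In Eq. (21), $\mathbf{F}$ is smooth enough in a neighborhood of the origin for the discrete version of the linearization theorem of Hartman [23] to apply.

(A3) For some integer $d < n$, a d-dimensional observable function $\phi$ and the d-dimensional slow spectral subspace E of the hyperbolic fixed point of system (21) satisfy the non-degeneracy condition

$$\operatorname{rank}\left[D\phi(0)\, V_E\right] = d. \tag{24}$$

(A4) The data matrices $X$ and $Y$ collected from iterations of system (21) are non-degenerate and are dominated by data near E, i.e.,

$$\operatorname{rank} X = \operatorname{rank} Y = d, \qquad \left\|W_F \hat{X}\right\| \le \varepsilon \left\|W_E \hat{X}\right\|, \qquad \left\|W_F \hat{Y}\right\| \le \varepsilon \left\|W_E \hat{Y}\right\| \tag{25}$$

for some $0 < \varepsilon \ll 1$.

Then the DMD computed from $X$ and $Y$ yields a matrix $T$ that is locally topologically conjugate with $\mathcal{O}(\varepsilon)$ error to the linearized dynamics on a d-dimensional attracting spectral submanifold $\mathcal{W}(E)$ tangent to E at $x = 0$. Specifically, we have

$$T = D\phi(0)\big|_E \, A_E \left[D\phi(0)\big|_E\right]^{-1} + \mathcal{O}(\varepsilon). \tag{26}$$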

The spectral submanifold and its reduced dynamics are of class at the origin, and at least Hölder continuous in a neighborhood of the origin.

Proof

The proof is identical to the proof of Theorem 1 but uses the discrete version of the linearization result by Hartman [23] for stable hyperbolic fixed points of maps.
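A discrete counterpart of the same check, for a hypothetical planar map $(x, y) \mapsto (0.9x,\, 0.2y + x^2)$ observed through a single observable: after enough iterations, the DMD eigenvalue should approach the slow linearized multiplier 0.9, in line with Theorem 2. The map, the observable, and the iteration counts are illustrative choices.

```python
import numpy as np


def F_map(x, y):
    """Hypothetical planar map with a stable hyperbolic fixed point at 0.
    Slow multiplier 0.9 (x-direction), fast multiplier 0.2 (y-direction);
    the slow SSM is the parabola y = x**2 / 0.61.
    """
    return 0.9 * x, 0.2 * y + x**2


def phi(x, y):
    return x + y                          # single observable, d = 1


def dmd_after(k0, x0, y0):
    """One-observable DMD on snapshot pairs taken after k0 iterations."""
    x, y = x0, y0
    for _ in range(k0):
        x, y = F_map(x, y)
    x_next, y_next = F_map(x, y)
    X, Y = phi(x, y)[None, :], phi(x_next, y_next)[None, :]
    return (Y @ np.linalg.pinv(X)).item()


rng = np.random.default_rng(1)
x0, y0 = rng.uniform(-0.5, 0.5, (2, 10))
err_early = abs(dmd_after(0, x0, y0) - 0.9)    # data still far from the SSM
err_late = abs(dmd_after(50, x0, y0) - 0.9)    # fast component decayed like 0.2**50
print(err_early, err_late)
```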

Theorem 2 can be immediately applied to justify DMD as a linearization tool for period-one maps (or Poincaré maps) of time-periodic, non-autonomous dynamical systems near their periodic orbits. This requires the data matrices $X$ and $Y$ to contain trajectories of such a Poincaré map. Remark 8 on the treatment of slow spectral subspaces E containing possible instabilities also applies here under the modified assumption

| 27 |

which only requires the fixed point to be hyperbolic and to have a d-dimensional normally attracting subspace.

Justification of DMD for infinite-dimensional dynamical systems

Most data sets of interest arguably arise from infinite-dimensional dynamical systems of fluids and solids. Examples include experimental or numerical data describing fluid motion, continuum vibrations, climate dynamics or salinity distribution in the ocean. In the absence of external forcing, these problems are governed by systems of autonomous nonlinear partial differential equations that can often be viewed as evolutionary differential equations in a form similar to Eq. (1), but defined on an appropriate infinite-dimensional Banach space. Accordingly, time-sampled solutions of these equations can be viewed as iterated mappings of the form (21) but defined on Banach spaces.

Our approach to justifying DMD generally carries over to this infinite-dimensional setting, as long as the observable vector remains finite-dimensional, and both the Banach space and the discrete or continuous dynamical system defined on it satisfy appropriate regularity conditions. These regularity conditions tend to be technical, but when they are satisfied, they do guarantee the extension of Theorems 1 and 2 to Banach spaces. This offers a justification to use DMD to obtain an approximate finite-dimensional linear model for the dynamics of the underlying continuum system on a finite-dimensional attracting slow manifold (or inertial manifold) in the neighborhood of a non-degenerate stationary solution.
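A hypothetical illustration of this reduction: a semilinear heat equation $u_t = u_{xx} + u^2$ on $(0, 1)$ with $u(0) = u(1) = 0$, discretized on a grid (a finite-dimensional stand-in for the Banach-space setting) and observed only through the value of u at a single grid point. Once the faster Fourier modes have decayed, one-observable DMD should return approximately $e^{-\pi^2\Delta t}$, the time-$\Delta t$ map of the slowest linear mode. Grid size, time steps, and initial profiles are arbitrary choices.

```python
import numpy as np

# Hypothetical example: u_t = u_xx + u**2 on (0, 1) with u = 0 at both ends,
# discretized by central differences (N interior points) and explicit Euler.
N = 50
h = 1.0 / (N + 1)
grid = np.linspace(h, 1.0 - h, N)
lap = (np.diag(-2.0 * np.ones(N)) + np.diag(np.ones(N - 1), 1)
       + np.diag(np.ones(N - 1), -1)) / h**2

dt_sample, n_sub = 0.01, 100
dt = dt_sample / n_sub                    # 1e-4 < h**2 / 2 keeps Euler stable


def advance(u):
    """Advance the discretized PDE by one sampling step dt_sample."""
    for _ in range(n_sub):
        u = u + dt * (lap @ u + u**2)
    return u


probe = N // 3                            # observable: u at one grid point (d = 1)
rng = np.random.default_rng(2)
pairs = []
for _ in range(5):                        # five random initial profiles
    coeffs = rng.uniform(-1.0, 1.0, 5)
    u = 0.1 * sum(c * np.sin((j + 1) * np.pi * grid) for j, c in enumerate(coeffs))
    for _ in range(30):                   # discard transients up to t0 = 0.3
        u = advance(u)
    for _ in range(10):                   # then record ten snapshot pairs
        u_next = advance(u)
        pairs.append((u[probe], u_next[probe]))
        u = u_next

X = np.array([[p[0] for p in pairs]])
Y = np.array([[p[1] for p in pairs]])
T = (Y @ np.linalg.pinv(X)).item()
print(T, np.exp(-np.pi**2 * dt_sample))  # DMD vs. slowest linear mode of u_xx
```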

To avoid major technicalities, we only state here a generalized version of Theorem 1 to justify the use of DMD for observables defined on Banach spaces for a discrete evolutionary process with a stable hyperbolic stationary state. We consider mappings of the form

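$$x \mapsto \mathbf{F}(x) = Ax + o(\|x\|), \qquad x \in U \subset \mathcal{X}, \qquad \mathbf{F}(0) = 0, \tag{28}$$

where $\mathcal{X}$ is a Banach space, U is an open set in $\mathcal{X}$, and $A$ is an invertible linear operator that is bounded in the norm defined on $\mathcal{X}$. The function $\mathbf{F}$ can be the time-sampled version of an infinite-dimensional flow map of an autonomous evolutionary PDE or the Poincaré map of a time-periodic evolutionary PDE. We assume that for some $\alpha \in (0, 1]$, $\mathbf{F} \in C^{1,\alpha}(U)$ holds, i.e., $\mathbf{F}$ is (Fréchet-) differentiable in U and its derivative, $D\mathbf{F}$, is Hölder-continuous in U with Hölder exponent $\alpha$.

The spectral radius of A is defined as

$$r(A) = \sup\left\{|\lambda| : \lambda \in \sigma(A)\right\}.$$

We recall that in the special case $\mathcal{X} = \mathbb{R}^n$ treated in Sect. 3.2, we have $r(A) = \max_{1 \le j \le n} |\lambda_j|$. For some , the linear operator $A$ is called -contracting if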

| 29 |

which can only hold if (see [41]). Therefore, in the simple case of , A is -contracting if it is a contraction (i.e., all its eigenvalues are less than one in norm) and

showing that the spectrum of is confined to an annulus of outer radius and inner radius . We can now state our main result on the justification of DMD for infinite-dimensional discrete dynamical systems.

Theorem 3

(Justification of DMD for infinite-dimensional maps with stable hyperbolic fixed points) Assume that

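(A1) The linear operator $A$ is contracting in the sense of Eq. (29) (and hence the fixed point $x = 0$ of system (28) is linearly stable).

(A2) In Eq. (28), $\mathbf{F} \in C^{1,\alpha}(U)$ holds in a neighborhood U of the origin.

(A3) For some integer $d \ge 1$, there is a splitting $\mathcal{X} = E \oplus F$ of $\mathcal{X}$ into two A-invariant subspaces such that E is d-dimensional and slow, i.e.,

$$\sup_{\lambda \in \sigma(A|_F)} |\lambda| < \inf_{\lambda \in \sigma(A|_E)} |\lambda|. \tag{30}$$

Furthermore, a d-dimensional observable function $\phi\colon \mathcal{X} \to \mathbb{R}^d$ satisfies the non-degeneracy condition

$$\operatorname{rank}\left[D\phi(0)\big|_E\right] = d. \tag{31}$$

(A4) The data matrices $X$ and $Y$ collected from iterations of system (28) are non-degenerate and are dominated by data near E, i.e.,

| 32 |

for some $0 < \varepsilon \ll 1$.

Then the DMD computed from $X$ and $Y$ yields a matrix $T$ that is locally topologically conjugate with $\mathcal{O}(\varepsilon)$ error to the linearized dynamics on a d-dimensional attracting spectral submanifold $\mathcal{W}(E)$ tangent to E at $x = 0$. Specifically, we have

$$T = D\phi(0)\big|_E \, A|_E \left[D\phi(0)\big|_E\right]^{-1} + \mathcal{O}(\varepsilon). \tag{33}$$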

The spectral submanifold and its reduced dynamics are of class in a neighborhood of the origin.

Proof

The proof follows the steps in the proof of Theorem 2 but uses an infinite-dimensional linearization result, Theorem 3.1 of Newhouse [41], for stable hyperbolic fixed points of maps on Banach spaces. Specifically, if $A$ is contracting in the sense of Eq. (29), then Newhouse [41] shows the existence of a near-identity linearizing transformation $h$ for the discrete dynamical system (28) such that $h \circ \mathbf{F} = A \circ h$ holds on a small enough ball centered at $x = 0$. Using this linearization theorem instead of its finite-dimensional version from Hartman [23], we can follow the same steps as in the proof of Theorem 2 to conclude the statement of the theorem.

Remarks 6 and 7 in Appendix B discuss possible further extensions of Theorem 3.
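The spectral annulus that appears with the contraction condition (29) rests on a general fact that is easy to check numerically: for any invertible matrix A and any induced norm, every eigenvalue λ satisfies 1/‖A⁻¹‖ ≤ |λ| ≤ ‖A‖, because the eigenvalues of A⁻¹ are the reciprocals of those of A. The minimal NumPy sketch below checks this bound on a hypothetical finite-dimensional contraction (a random matrix rescaled to spectral norm 0.9). It only illustrates the annulus and does not test condition (29) itself.

```python
import numpy as np

def spectral_annulus(A):
    """Inner and outer radii of an annulus that contains the spectrum of invertible A.

    Uses the spectral (2-)norm: |lambda| <= ||A|| and |lambda| >= 1 / ||A^{-1}||,
    since the eigenvalues of A^{-1} are the reciprocals of those of A.
    """
    outer = np.linalg.norm(A, 2)                        # largest singular value of A
    inner = 1.0 / np.linalg.norm(np.linalg.inv(A), 2)   # smallest singular value of A
    return inner, outer

# Hypothetical contraction: a random 6x6 matrix rescaled to ||A||_2 = 0.9.
rng = np.random.default_rng(0)
A = rng.standard_normal((6, 6))
A *= 0.9 / np.linalg.norm(A, 2)

inner, outer = spectral_annulus(A)
moduli = np.abs(np.linalg.eigvals(A))
print(f"{inner:.3f} <= |lambda| <= {outer:.3f}")
print("eigenvalue moduli:", np.round(np.sort(moduli), 3))
```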

Data-driven linearization (DDL)

Theoretical foundation for DDL

Based on the results of the previous section, we now refine the first-order approximation to the linearized dynamics yielded by DMD near a hyperbolic fixed point. Specifically, we construct the nonlinear coordinate change that linearizes the restricted dynamics on the attracting spectral submanifold illustrated in Fig. 1. This classic notion of linearization on yields a d-dimensional linear reduced model, which can be of significantly lower dimension than the original n-dimensional nonlinear system. This is to be contrasted with the broadly pursued Koopman embedding approach (see, e.g., [8, 40, 49]), which seeks to immerse nonlinear systems into linear systems of dimensions substantially higher (or even infinite) relative to n.

The following result gives the theoretical basis for our subsequent data-driven linearization (DDL) algorithm. We will use the notation for the class of real analytic functions. We also use the notation to denote the integer part of x.

Theorem 4

(DDL principle for ODEs with a stable hyperbolic fixed point) Assume that the origin is a stable hyperbolic fixed point of system (11) and the spectrum of has a spectral gap as in Eq. (12). Assume further that for some , the following conditions are satisfied:

- and the nonresonance conditions (34) hold for the eigenvalues of .

- For some integer , a d-dimensional observable function and the d-dimensional slow spectral subspace E of the stable fixed point of system (11) satisfy the non-degeneracy condition (35).

Then the following hold:

- (i) On the unique d-dimensional attracting spectral submanifold tangent to E at , the reduced observable vector can be used to describe the reduced dynamics in the form (36).

- (ii) There exists a unique change of coordinates (37) that transforms the reduced dynamics on to its linearization (38) inside the domain of attraction of the fixed point within the spectral submanifold.

- (iii) The transformation (37) satisfies the d-dimensional system of nonlinear PDEs (39). If , solutions of this PDE can locally be approximated as in (40). If , then the local approximation (40) can be refined to a convergent Taylor series (41) in a neighborhood of the origin. In either case, the coefficients can be determined by substituting the expansion for into the PDE (39), equating coefficients of equal monomials and solving the corresponding recursive sequence of d-dimensional linear algebraic equations for increasing .

Proof

The proof builds on the existence of the d-dimensional spectral submanifold guaranteed by Theorem 1. For a dynamical system with , is also smooth based on the linearization theorems of Poincaré [46] and Sternberg [52], as long as the nonresonance condition (34) holds. Condition (35) then ensures that can be parametrized locally by the restricted observable vector and hence its reduced dynamics can be written as a nonlinear ODE for . This ODE can again be linearized by a near-identity coordinate change (37) using the appropriate one of the two linearization theorems cited above. The result is the restricted linear system (38) to which the dynamics is conjugate within the whole domain of attraction of the fixed point inside . The invariance PDE (39) can be obtained by substituting the linearizing transformation (37) into the reduced dynamics on . This PDE can then be solved via a Taylor expansion up to order r. We give a more detailed proof in Appendix D.
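A scalar analogue shows the recursive structure of statement (iii). The sketch below is an illustration under our own assumptions (a scalar ODE with a known polynomial nonlinearity, and the transformation written as y = h(x) from the state to the linear coordinate); it is not the d-dimensional DDL code. For ẋ = λx + Σ_{m≥2} f_m x^m, requiring ẏ = λy for y = h(x) = x + Σ_{n≥2} h_n x^n gives the invariance equation h′(x)(λx + f(x)) = λh(x), and matching powers of x yields (n − 1)λh_n = −Σ_{k=1}^{n−1} k h_k f_{n−k+1}. In one dimension, the nonresonance conditions reduce to λ ≠ 0.

```python
def linearizing_coefficients(lam, f, order):
    """Taylor coefficients h[n], n = 1..order, of y = h(x) with dy/dt = lam * y.

    The scalar ODE is dx/dt = lam * x + sum_{m >= 2} f[m] * x**m, where f is a
    dict {m: f_m}. Matching x**n in h'(x) * (lam*x + f(x)) = lam * h(x) gives
        (n - 1) * lam * h_n = -sum_{k=1}^{n-1} k * h_k * f_{n-k+1},
    which is solvable for every n >= 2 as long as lam != 0.
    """
    if lam == 0:
        raise ValueError("lam must be nonzero (hyperbolic fixed point)")
    h = {1: 1.0}                      # near-identity: h(x) = x + O(x^2)
    for n in range(2, order + 1):
        s = sum(k * h[k] * f.get(n - k + 1, 0.0) for k in range(1, n))
        h[n] = -s / ((n - 1) * lam)
    return h

# Hypothetical example: dx/dt = -x + 0.5 x^2. Its exact linearization is
# h(x) = x / (1 + (a/lam) x), so h_n = (-a/lam)**(n-1) = 0.5**(n-1).
lam, a = -1.0, 0.5
h = linearizing_coefficients(lam, {2: a}, order=8)
print([round(h[n], 6) for n in range(1, 9)])
```

In this example the series converges only for |x| < |λ/a| = 2, because h has a pole at x = −λ/a = 2, which is exactly the repelling fixed point bounding the domain of attraction of the origin.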

Note that 1:1 resonances are not excluded by the condition (34), and hence repeated eigenvalues arising from symmetries in physical systems are still amenable to DDL. Also of note is that the non-resonance conditions (34) do not exclude frequency-type resonances among imaginary parts of oscillatory eigenvalues. Rather, they exclude simultaneous resonances of the same type between the real and the imaginary parts of the eigenvalues. Such resonances will be absent in data generated by generic oscillatory systems.

Assuming hyperbolicity is essential for Theorem 4 to hold, since in this case the linearization is the same as transforming the dynamics to the Poincaré normal form. For a non-hyperbolic fixed point, this normal form transformation results in nonlinear dynamics on the center manifold. This would, however, only arise in highly non-generic systems, precisely tuned to be at criticality. Since this is unlikely to happen in experimentally observed or numerically simulated systems, the hyperbolicity assumption is not restrictive.

Finally, under the conditions of Theorem 3, the DDL results of Theorem 4 also apply to data from infinite-dimensional dynamical systems, such as the fluid sloshing experiments we will analyze using DDL in Sect. 5.4. In practice, the most restrictive condition of Theorem 3 is (A1), which requires the solution operator to have a spectrum uniformly bounded away from zero. Such uniform boundedness is formally violated in important classes of infinite-dimensional evolution equations, presenting a technical challenge for the direct application of SSM results to certain delay-differential equations (see [54]) and partial differential equations (see, e.g., [9, 30]). However, this challenge only concerns rigorous conclusions on the existence and smoothness of a finite-dimensional, attracting SSM. If the existence of such an SSM is convincingly established from an alternative mathematical theory (as in [9]) or inferred from data (as in [54]), then the DDL algorithm based on Theorem 4 can be used to obtain a data-driven linearization of the dynamics on that SSM.

DDL versus EDMD

Here we examine whether there is a possible relationship between DDL and the extended DMD (or EDMD) algorithm of Williams et al. [58]. For simplicity, we assume analyticity for the dynamical system () and hence we can write the inverse of the linearizing transformation (40) behind the DDL algorithm as a convergent Taylor expansion of the form

| 42 |

We then differentiate this equation in time to obtain from the linearized equation (38) a d-dimensional system of equations

that the restricted observable and its monomials must satisfy. This last equation can be rewritten as a d-dimensional autonomous linear system of ODEs,

| 43 |

for the reduced observable and the infinite-dimensional vector of all nonlinear monomials of . Here denotes the d-dimensional identity matrix and contains all coefficients as column vectors starting from order .

If we truncate the infinite-dimensional vector of monomials to the vector of nonlinear monomials up to order k, then Eq. (43) becomes

| 44 |

This is a d-dimensional implicit system of linear ODEs for the dependent variable vector whose dimension is always larger than d. Consequently, the operator is never invertible and hence, contrary to the assumption of EDMD, there is no well-defined linear system of ODEs that governs the evolution of an observable vector and the monomials of its components.
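The dimension mismatch behind this conclusion is easy to quantify. In d variables, the number of monomials of total degree 2 through k is C(d+k, k) − d − 1. Assuming, as the definitions of the identity matrix and of the coefficient matrix above suggest, that the operator in (44) has the block form [I H], it has d rows but d + N columns and hence rank at most d. The NumPy sketch below checks this for d = 2 and k = 3, with an arbitrary (randomly chosen, hypothetical) coefficient matrix H.

```python
from math import comb
import numpy as np

def n_nonlinear_monomials(d, k):
    """Number of monomials of total degree 2..k in d variables."""
    # comb(d + k, k) counts all monomials of degree 0..k; drop the constant and the d linear ones.
    return comb(d + k, k) - d - 1

d, k = 2, 3
N = n_nonlinear_monomials(d, k)           # x^2, xy, y^2, x^3, x^2 y, x y^2, y^3
rng = np.random.default_rng(1)
H = rng.standard_normal((d, N))           # hypothetical coefficient matrix
M = np.hstack([np.eye(d), H])             # assumed block form [I H], shape d x (d + N)
print(N, M.shape, np.linalg.matrix_rank(M))
```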

The above conclusion remains unchanged even if one attempts to optimize with respect to the choice of the coefficients in the matrix .

Implementation and applications of DDL

Basic implementation of DDL for model reduction and linearization

Theorem 4 allows us to define a numerical procedure to construct a linearizing transformation on the d-dimensional attracting slow manifold systematically from data. From Eq. (44), the matrices and are to be determined, given a set of observed trajectories. In line with the notation used in Sect. 4.2, let the data matrix contain monomials (from order 2 to order k) of the observable vector and let the shifted data matrix contain the same monomials evaluated one time step later. Passing to the discrete version of the invariance equation (44), we obtain

for some matrices and . Moreover, the inverse transformation of the linearization on the SSM is well-defined, and hence with an appropriate matrix , we can write

This allows us to define the cost functions

| 45 |

where measures the invariance error along the observed trajectories and measures the error due to the computation of the inverse. We aim to jointly minimize and . To this end, we define the combined cost function

| 46 |

for some . In our examples, we choose , which puts the same weight on both terms in the cost function (46). Minimizers of provide optimal solutions to the DDL principle and can be written as

| 47 |

or, equivalently, as solutions of the system of equations

| 48 |

| 49 |

The optimal solution (47) does not necessarily coincide with the Taylor coefficients of the linearizing transformation (41). Instead of giving the best local approximation, approximates the linearizing transformation and the linear dynamics in a least-squares sense over the domain of the training data. This means that DDL is not hindered by the convergence properties of the analytic linearization. Note that for , one can estimate the radius of convergence of (41), for example, by constructing the Domb–Sykes plot (see [15]), or by finding the radius of the circle in the complex plane onto which the roots of the truncated expansion accumulate under increasing orders of truncation (see [25, 47]). For , such analysis is more difficult, since multivariate Taylor series have more complicated domains of convergence. In our numerical examples, we estimate the domain of convergence of such analytic linearizations as the domain on which holds to a good approximation. As we will see, this domain of convergence may be substantially smaller than the domain of validity of transformations determined in a fully data-driven way.
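For d = 1, the Domb–Sykes estimate amounts to a linear fit. If the nearest singularity x₀ of Σ aₙxⁿ is of algebraic type, the ratios aₙ/aₙ₋₁ approach a straight line in 1/n whose intercept at 1/n = 0 is 1/x₀, so the radius of convergence is the reciprocal of the absolute intercept. The sketch below is a minimal NumPy version; fitting only the highest n_fit available orders is our own choice. It is checked on log(1 + x), whose coefficient ratios are exactly −1 + 1/n.

```python
import numpy as np

def domb_sykes_radius(coeffs, first_power=1, n_fit=10):
    """Radius of convergence of sum_n a_n x^n from a Domb-Sykes fit.

    coeffs[i] is the coefficient of x**(first_power + i); all must be nonzero.
    Fits a_n / a_{n-1} = intercept + slope / n over the last n_fit ratios and
    returns 1 / |intercept|.
    """
    a = np.asarray(coeffs, dtype=float)
    n = first_power + np.arange(len(a))      # power of x for each coefficient
    ratios = a[1:] / a[:-1]                  # a_n / a_{n-1} for n = n[1], n[2], ...
    slope, intercept = np.polyfit(1.0 / n[1:][-n_fit:], ratios[-n_fit:], 1)
    return 1.0 / abs(intercept)

# log(1 + x) = sum_{n>=1} (-1)**(n+1) x**n / n has its singularity at x = -1.
coeffs_log = [(-1.0) ** (n + 1) / n for n in range(1, 30)]
print(domb_sykes_radius(coeffs_log))
```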

Since the cost function (45) is not convex, the optimization problem (47) has to be solved iteratively starting from an initial guess . For the examples presented in the paper, we use the Levenberg–Marquardt algorithm (see [4]), but other nonlinear optimization methods, such as gradient descent or Adam (see [29]), could also be used. For our implementation, which is available from the repository [28], we used the SciPy and PyTorch libraries (Virtanen et al. [57]; Paszke et al. [45]). In summary, we will use the following Algorithm 1 in our examples for model reduction via DDL.
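Because the explicit form of (45)–(46) is not reproduced here, the following is only a minimal sketch under stated assumptions, not the implementation in the repository [28]. We assume that the linearizing coordinates are γ = y + Hψ(y), with ψ(y) collecting all monomials of degree 2 to k of the reduced observable y, that the inverse is modeled as y ≈ γ + Kψ(γ), that both cost terms carry equal weight, and that B, H and K are fitted with SciPy's `scipy.optimize.least_squares` using its Levenberg–Marquardt option, starting from the DMD guess with H = K = 0. The function names and the test data are hypothetical.

```python
import numpy as np
from itertools import combinations_with_replacement
from scipy.optimize import least_squares

def monomials(Z, k):
    """Rows: all monomials of degree 2..k of the rows of Z (Z has shape d x m)."""
    d = Z.shape[0]
    return np.array([np.prod(Z[list(idx), :], axis=0)
                     for deg in range(2, k + 1)
                     for idx in combinations_with_replacement(range(d), deg)])

def ddl_fit(X, Y, k):
    """Fit B, H, K so that gamma = y + H psi(y) evolves linearly, gamma_+ = B gamma."""
    d = X.shape[0]
    PX, PY = monomials(X, k), monomials(Y, k)
    N = PX.shape[0]

    def unpack(p):
        B = p[:d * d].reshape(d, d)
        H = p[d * d:d * d + d * N].reshape(d, N)
        K = p[d * d + d * N:].reshape(d, N)
        return B, H, K

    def residuals(p):
        B, H, K = unpack(p)
        GX, GY = X + H @ PX, Y + H @ PY            # assumed linearizing coordinates
        r_inv = GY - B @ GX                        # one-step invariance error
        r_back = X - (GX + K @ monomials(GX, k))   # error of the assumed inverse map
        return np.concatenate([r_inv.ravel(), r_back.ravel()])   # equal weights

    B0 = Y @ np.linalg.pinv(X)                     # DMD initial guess, H = K = 0
    p0 = np.concatenate([B0.ravel(), np.zeros(2 * d * N)])
    cost_dmd = 0.5 * np.sum(residuals(p0) ** 2)    # same scaling as least_squares' cost
    res = least_squares(residuals, p0, method="lm")
    return unpack(res.x), res.cost, cost_dmd

# Hypothetical data: gamma_{n+1} = 0.8 gamma_n, observed through y with y + 0.3 y^2 = gamma.
c = 0.3
gamma = 0.8 ** np.arange(31)
y = (-1.0 + np.sqrt(1.0 + 4.0 * c * gamma)) / (2.0 * c)
X, Y = y[None, :-1], y[None, 1:]
(B, H, K), cost_ddl, cost_dmd = ddl_fit(X, Y, k=3)
print(f"DMD cost {cost_dmd:.2e}, DDL cost {cost_ddl:.2e}, B = {B[0, 0]:.4f}")
```

Starting from the DMD solution guarantees that the fitted cost is never worse than the DMD cost, since the Levenberg–Marquardt iteration only accepts cost-reducing steps.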

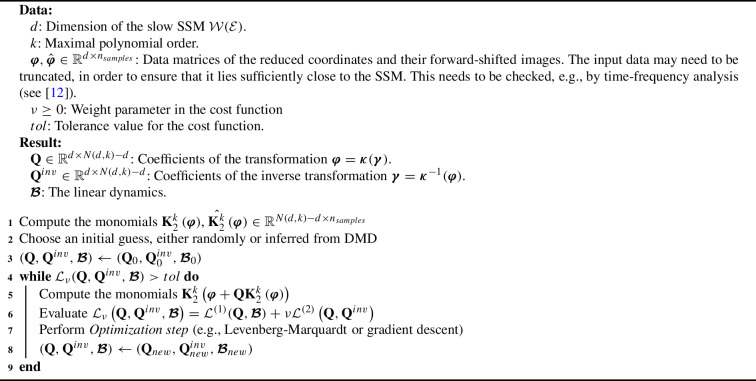

Algorithm 1.

Model reduction with DDL

Remark 3

The expressions (45)–(46) define one of the possible choices for the cost function. With , (46) simply corresponds to a one-step-ahead prediction with the linearized dynamics. Alternatively, a multi-step prediction can also be enforced. For a training trajectory the invariance

could be required, where is a tensor composed of powers of the linear map . Optimizing over the entire trajectory is, however, more costly than simply minimizing (45), and we found no noticeable improvement in accuracy in our numerical examples.
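The multi-step variant of Remark 3 can be sketched as a residual that compares the linearized coordinates along a whole training trajectory with powers of the linear map applied to its first point. The function below is a hypothetical illustration in which the tensor of powers is built implicitly by repeated multiplication. Its residual could replace the one-step invariance term in a least-squares fit, at the higher cost noted in the remark.

```python
import numpy as np

def multistep_residual(B, Gamma):
    """Residuals Gamma[:, j] - B**j @ Gamma[:, 0] along one trajectory (columns = time steps)."""
    pred = np.empty_like(Gamma)
    g = Gamma[:, 0].copy()
    for j in range(Gamma.shape[1]):
        pred[:, j] = g            # B^j applied to the initial linearized state
        g = B @ g                 # advance one more step
    return Gamma - pred

# Hypothetical check: a trajectory generated by B itself has zero multi-step residual.
B = np.array([[0.9, -0.1], [0.1, 0.9]])
Gamma = np.empty((2, 25))
Gamma[:, 0] = [1.0, 0.0]
for j in range(1, 25):
    Gamma[:, j] = B @ Gamma[:, j - 1]
print(np.abs(multistep_residual(B, Gamma)).max())
print(np.abs(multistep_residual(1.01 * B, Gamma)).max())
```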

Relationship with DMD implementations

Note that setting in the optimization problem (47) turns the problem into DMD. In this case, the usual DMD algorithm surveyed in the Introduction returns

which is a good initial guess for the non-convex optimization problem (47). More importantly, since Theorem 4 guarantees the existence of a near-identity linearizing transformation, we expect that the true minimizer is close to the DMD solution. Therefore, we may explicitly expand the cost function (45) around the DMD solution as

where and are the Jacobian and the Hessian of the cost function evaluated at the DMD solution, respectively. Provided the Hessian is nonsingular at the DMD solution, the minimum of the quadratic approximation of the cost function satisfies the linear equation

| 50 |

This serves as the first-order correction to the DMD solution in the DDL procedure. Equation (50) is explicitly solvable and is equivalent to performing a single Levenberg–Marquardt step on the non-convex cost function (45), with the DMD solution serving as an initial guess.
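Equation (50) uses the exact Hessian of the cost. A common and cheaper variant, which we swap in here purely for illustration, replaces that Hessian by JᵀJ, where J is the Jacobian of the residual vector. This is the Gauss–Newton step, i.e., a Levenberg–Marquardt step with zero damping. The sketch below (hypothetical names, forward-difference Jacobian) applies one such correction to an initial guess p0, which in the DDL setting would be the DMD solution. For residuals that are linear in the parameters, a single step already lands on the least-squares minimizer.

```python
import numpy as np

def gauss_newton_step(residual, p0, eps=1e-7):
    """One Gauss-Newton correction of p0 for the cost 0.5 * ||residual(p)||^2."""
    r0 = residual(p0)
    J = np.empty((r0.size, p0.size))
    for i in range(p0.size):                  # forward-difference Jacobian, column by column
        dp = np.zeros_like(p0)
        dp[i] = eps
        J[:, i] = (residual(p0 + dp) - r0) / eps
    # Solves J^T J delta = -J^T r0 in the least-squares sense (Hessian ~ J^T J).
    delta, *_ = np.linalg.lstsq(J, -r0, rcond=None)
    return p0 + delta

# Hypothetical linear residual r(p) = M p - b: one step from p0 = 0 gives the minimizer.
rng = np.random.default_rng(3)
M, b = rng.standard_normal((10, 3)), rng.standard_normal(10)
p1 = gauss_newton_step(lambda p: M @ p - b, np.zeros(3))
p_star, *_ = np.linalg.lstsq(M, b, rcond=None)
print(np.round(p1 - p_star, 8))
```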

Minimization of (45) leads to a non-convex optimization problem. Besides computing the leading-order approximation (50), a possible workaround to this challenge is to carry out the linearization in two steps. First, one can fit a polynomial map to the reduced dynamics by linear regression. Then, if the reduced dynamics are non-resonant, they can be analytically linearized. Axås et al. [3] follow this approach to automatically find the extended normal form style reduced dynamics on SSMs using the implementation of SSMTool by Jain et al. [24]. Although this procedure does convert the DDL principle into a convex problem, the drawback is that the linearization is obtained as a Taylor expansion, with possibly limited convergence properties.
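The first, convex step of this two-step procedure is an ordinary linear regression on monomials. The sketch below is our own minimal NumPy illustration, not the SSMTool implementation: it fits y_{n+1} ≈ Ay_n + Cψ(y_n), with ψ the monomials of degree 2 to k, and recovers the coefficients of a hypothetical quadratic map exactly from noise-free samples. The second step would then linearize the fitted map analytically by solving the corresponding homological equations order by order.

```python
import numpy as np
from itertools import combinations_with_replacement

def poly_features(Z, k):
    """Linear terms followed by all monomials of degree 2..k of the rows of Z (d x m)."""
    d = Z.shape[0]
    nonlinear = [np.prod(Z[list(idx), :], axis=0)
                 for deg in range(2, k + 1)
                 for idx in combinations_with_replacement(range(d), deg)]
    return np.vstack([Z, np.array(nonlinear)])

def fit_polynomial_map(X, Y, k):
    """Least-squares W = [A | C] with Y ~ W @ poly_features(X, k)."""
    F = poly_features(X, k)
    W, *_ = np.linalg.lstsq(F.T, Y.T, rcond=None)
    return W.T

# Hypothetical 2D map y -> A y + (0.1 y1^2, -0.2 y1 y2), sampled at random points.
A = np.array([[0.8, 0.1], [-0.1, 0.7]])
rng = np.random.default_rng(2)
X = rng.uniform(-1.0, 1.0, size=(2, 50))
Y = A @ X + np.vstack([0.1 * X[0] ** 2, -0.2 * X[0] * X[1]])
W = fit_polynomial_map(X, Y, k=2)     # columns: y1, y2, y1^2, y1*y2, y2^2
print(np.round(W, 6))
```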

Using DDL to construct spectral foliations

The mathematical foundation of SSM-reduced modeling is that any trajectory converging to a slow SSM is guaranteed to synchronize up to an exponentially decaying error with one of the trajectories on the SSM. This follows from the general theory of invariant foliations by Fenichel [18], when applied to the d-dimensional normally hyperbolic invariant manifold .2 The main result of the theory is that off-SSM initial conditions synchronizing with the same on-SSM trajectory turn out to form a class smooth, -dimensional manifold, denoted , which intersects in a unique point . The manifold is called the stable fiber emanating from the base point . Fenichel proves that any off-SSM trajectory with initial condition converges to the specific on-SSM trajectory with initial condition faster than any other nearby trajectory might converge to it. Recently, Szalai [55] studied this foliation in more detail under the name “invariant spectral foliation”, discussed its uniqueness in an appropriate smoothness class and proposed its use in model reduction.

To predict the evolution of a specific, off-SSM initial condition up to time t from an SSM-based model, we first need to relate that initial condition to the base point of the stable fiber . Next, we need to run the SSM-based reduced model up to time t to obtain . Based on the exponentially fast convergence of the full solution to the SSM-reduced solution we obtain an accurate longer-term prediction for using this procedure. Such a longer-term prediction is helpful, for instance, when we wish to predict steady states, such as fixed points and limit cycles, from the SSM-reduced dynamics.

Constructing this spectral foliation directly from data, however, is challenging for nonlinear systems. Indeed, one would need a very large number of initial conditions that cover uniformly a whole open neighborhood of the fixed point in the phase space. For example, while one or two training trajectories are generally sufficient to infer accurate SSM-reduced models even for very high-dimensional systems (see e.g., [3, 12, 13]), thousands of uniformly distributed initial conditions in a whole open set of a fixed point are required to infer accurate spectral foliation-based models even for low-dimensional systems (see [55]). The latter number and distribution of initial conditions is unrealistic to acquire in a truly data-driven setting.

To avoid constructing the full foliation, one may simply project an initial condition orthogonally to an observed spectral submanifold to obtain , but this may result in large errors if E and F are not orthogonal. In that case, may deviate substantially from E (see [42, 43, 48] for a discussion of the limitations of this projection for general invariant manifolds).

A better solution is to project orthogonally to the slow spectral subspace E over which is a graph in an (often large) neighborhood of the fixed point. This approach assumes that E and F are nearly normal and is nearly flat. As the latter is typically the case for delay-embedded observables [2], orthogonal projection onto E has been the choice so far in data-driven SSM-based reduction via the SSMLearn algorithm [11]. This approach has produced highly accurate reduced-order models in a number of examples (see [3, 12, 13]). There are nevertheless examples in which the linear part of the dynamical system is significantly non-normal and hence E and F are not close to being orthogonal (see [6]).

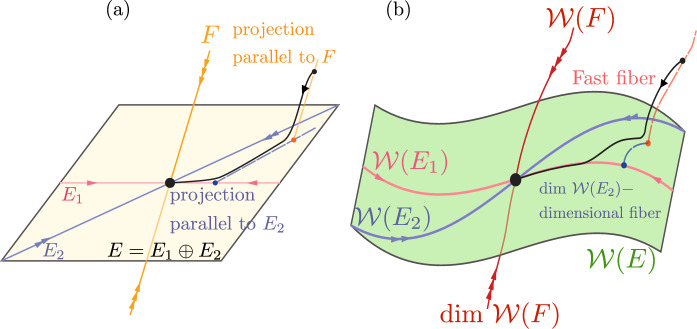

Near hyperbolic fixed points, the use of DDL eliminates the need to construct involved nonlinear spectral foliations. Indeed, let us assume that the slow spectral subspace E in Theorem 4 can be decomposed into a direct sum , where denotes the slowest spectral subspace with dim and denotes the second-slowest spectral subspace with dim , as sketched in Fig. 2. Reducing the dynamics to the SSM is accurate for transient times given by the decay rate of . This initial reduction can be done by a normal projection onto E. Inside E, one can simply locate spectral foliations of the DDL-linearized system explicitly and map them back to the original nonlinear system under the DDL transformation . The unique class foliation of a linear system within E is the family of stable fibers forming the affine space

where . The trajectories started inside all synchronize with . The linear projection onto along directions parallel to , when applied to an initial condition , returns the base point

| 51 |
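In the DDL-linearized coordinates, the base-point formula (51) is a linear (oblique) projection onto the slowest subspace along the second-slowest one. Writing a point as V₁c₁ + V₂c₂, with the columns of V₁ and V₂ spanning these two subspaces, the base point is V₁c₁. The NumPy sketch below, with hypothetical, strongly non-orthogonal basis vectors, contrasts this with the orthogonal projection: a point lying on the faster direction through the origin is correctly mapped to the origin by the oblique projection, but not by the orthogonal one.

```python
import numpy as np

def oblique_projection(V1, V2, g):
    """Project g onto span(V1) along span(V2); the columns of [V1 V2] form a basis."""
    c = np.linalg.solve(np.hstack([V1, V2]), g)
    return V1 @ c[:V1.shape[1]]

def orthogonal_projection(V1, g):
    """Orthogonal projection of g onto span(V1), for comparison."""
    c, *_ = np.linalg.lstsq(V1, g, rcond=None)
    return V1 @ c

V1 = np.array([[1.0], [0.0]])      # hypothetical slowest direction
V2 = np.array([[1.0], [0.2]])      # hypothetical second-slowest, strongly non-orthogonal
g = np.array([0.5, 0.1])           # lies on the fiber through the origin (g = 0.5 * V2)
p_obl = oblique_projection(V1, V2, g)
p_orth = orthogonal_projection(V1, g)
print(p_obl, p_orth)
```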

In the nonlinear system (1), the leaves of the smooth foliation within are given by

where is the image of under the mapping defined in (37). The SSM can then be parametrized via the foliation

Fig. 2.

a The linearized phase space geometry governed by the slow spectral subspace and the slow invariant foliation within E. b Phase space geometry in the original coordinates

Using DDL to predict nonlinear forced response from unforced data

We now discuss how DDL performed near the fixed point of an autonomous dynamical system can be used to predict nonlinear forced response under additional weak periodic forcing in the domain of DDL. The addition of such small forcing is frequent in structural vibration problems in which the unforced structure (e.g., a beam or disk) is rigid enough to react with small displacements under practically relevant excitation levels (see, e.g., [12, 13] for specific examples).

We append system (11) with a small, time-periodic forcing term to obtain the system

| 52 |

with for some period . If the conditions of Theorem 4 hold for the system (52) for , then, for small enough, there exists a unique d-dimensional, T-periodic, attracting spectral submanifold of a locally unique attracting T-periodic orbit perturbing from (see, e.g., [10, 22]). The manifold is -close to and hence its reduced dynamics can be parametrized using the reduced observable vector in the form

| 53 |

where we have relegated the details of this calculation to Appendix E.

Then the unique change of coordinates,

| 54 |

guaranteed by statement (iii) of Theorem 4 transforms the reduced dynamics (53) to its final form

| 55 |

The transformation is valid on trajectories of (52) as long as they remain in the domain of definition of the coordinate change (54).

Note that Eq. (55) is a weakly perturbed, time-periodic nonlinear system. The matrix and the nonlinear terms can be determined using data from the unforced () system. As a result, nonlinear time-periodic forced response can be predicted solely from unforced data by applying numerical continuation to system (55) for . This is not expected to be as accurate as SSM-based forced response prediction (see, e.g., [2, 3, 12, 13]), but nevertheless offers a way to make predictions for non-linearizable forced response based solely on DDL performed on unforced data. These predictions are valid for forced trajectories that stay in the domain of convergence of DDL carried out on the unforced system. We will illustrate such predictions using actual experimental data from fluid sloshing in Sect. 5.4.

Setting in formula (55) enables us to carry out a forced-response prediction based on DMD as well. Such a prediction will be fundamentally linear with respect to the forcing and can only be reasonably accurate for very small forcing amplitudes, as we will indeed see in examples. There is no systematic way to model the addition of non-autonomous forcing in the EDMD procedure, and hence EDMD will not be included in our forced response prediction comparisons.
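The linearity of the DMD-based forced response is easy to see in a sketch. Assuming, for illustration, a continuous-time model ż = Bz + εf cos(Ωt) as a simplified stand-in for the DMD version of (55), with hypothetical B and f, the T-periodic response is z(t) = Re(Z e^{iΩt}) with (iΩI − B)Z = εf. The response amplitude therefore scales linearly with ε, and for stable B there is exactly one periodic response at each Ω, so no overhangs or coexisting branches can appear.

```python
import numpy as np

def linear_forced_amplitude(B, f, eps, Omega):
    """Complex amplitude Z of the periodic response z(t) = Re(Z * exp(i Omega t)) of
    dz/dt = B z + eps * f * cos(Omega t), for real B with no eigenvalue at i * Omega."""
    n = B.shape[0]
    return np.linalg.solve(1j * Omega * np.eye(n) - B, eps * f)

B = np.array([[-0.05, 1.0], [-1.0, -0.05]])    # hypothetical lightly damped mode
f = np.array([0.0, 1.0])                       # hypothetical forcing direction
Omegas = np.linspace(0.8, 1.2, 401)
for eps in (0.01, 0.02):
    amps = [abs(linear_forced_amplitude(B, f, eps, Om)[0]) for Om in Omegas]
    k = int(np.argmax(amps))
    print(f"eps = {eps}: peak amplitude {amps[k]:.4f} at Omega = {Omegas[k]:.3f}")
```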

We also note that one might be tempted to solve an approximate version of (55) by assuming

| 56 |

This assumption simplifies the computation of the forced response of the nonlinear system (55) to those of a simple linear system. Although the forced response computed using this approximate DDL method turns out to be more accurate than DMD on our example, we do not recommend this approach. This is because neglecting the nonlinear effects of the coordinate change in (55) is, in general, inconsistent with . We give more detail on this approximation in Appendix F of the Supplementary Information.

Examples

In this section, we compare the DMD, EDMD and DDL algorithms on specific examples. When applicable, we also compute the exact analytic linearization of the dynamical system near its fixed point as a benchmark. On a slow SSM , an observer trajectory , starting from a selected initial condition , will be tracked as the image of the linearized reduced observer trajectory under the linearizing transformation (54):

| 57 |

When model reduction has also taken place, i.e., when the observable vector is not defined on the full phase space, we will nevertheless provide a prediction in the full phase space via the parametrization of the slow SSM.

By Theorem 1, DMD can be interpreted as setting in (57) and finding the linear operator as a best fit from the available data. In contrast, DDL finds the linear operator , the transformation , and its inverse simultaneously. As we explained in Sect. 4.2, EDMD cannot quite be interpreted in terms of the linearizing transformation (57) as it is an attempt to immerse the dynamics into a higher dimensional space. For our EDMD tests, we will use monomials of the observable vector .

1D nonlinear system with two isolated fixed points

Consider the one-dimensional ODE obtained as the radial component of the Stuart–Landau equation, i.e.,

which can be rescaled to

| 58 |

For , the system has a repelling fixed point at and an attracting one at . Page and Kerswell [44] show that local expansion of observables in terms of the Koopman eigenfunctions computed near each fixed point are possible, but the expansions at the two fixed points are not compatible with each other and both diverge at . This is a consequence of the more general result that the Koopman eigenfunctions themselves inevitably blow up near basin boundaries (see our Proposition 1 in Appendix A of the Supplementary Information). Both DMD and EDMD can nevertheless be computed from data, even for a trajectory crossing the turning point at , but the resulting models cannot have any connection to the Koopman operator.

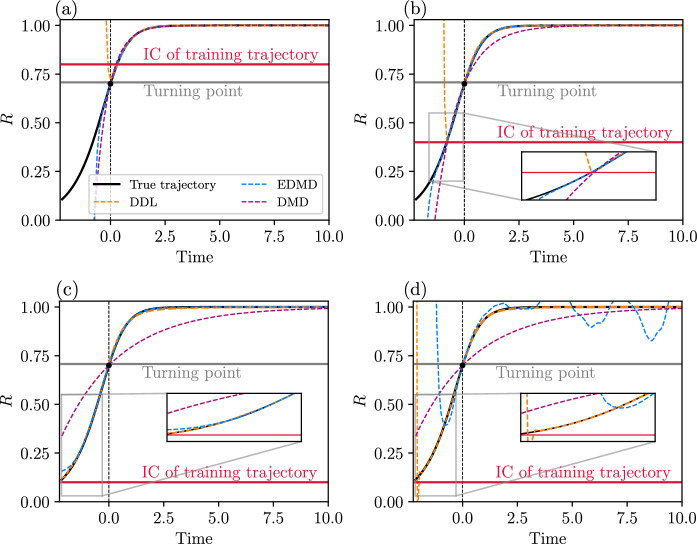

In each comparison performed on system (58), we generate a single trajectory in the domain of attraction of the fixed point and use it as training data for DMD, EDMD and DDL. In each subplot of Fig. 3, the single training trajectory starts from the intersection of the red horizontal line “IC of training trajectory” with the dashed line. We then also generate a new test trajectory (black) with its initial condition denoted with a black dot over the line . We place this initial condition slightly outside the domain of linearization for system (58) (under the grey line labeled “Turning point”). We use DMD, order- EDMD, and DDL trained on a single training trajectory to make predictions for the black testing trajectory (not used in the training).

Fig. 3.

Predictions of DMD, EDMD and DDL on trajectories of (58). a Training trajectory starts inside the domain of convergence of the linearization, i.e. (see [44]). For both DDL and EDMD the order of the monomials used is . b Same for a different training trajectory with and c same for and d same for and

Figure 3a shows DDL to be the most accurate of the three methods when applied to forward-time () segments of the test trajectory. If we try to predict the backward-time () segment of the same trajectory as it leaves the training domain, DDL diverges immediately upwards, whereas DMD and EDMD diverge more gradually downwards. As we increase the training domain in Fig. 3b, DDL continues to be the most accurate in both forward and backward time until it reaches the boundary of its training range in backward time. At that point, it diverges quickly upwards, while DMD and EDMD diverge more slowly downwards.

Importantly, increasing the approximation order for DDL first to then to (see Fig. 3c, d), makes DDL predictions more and more accurate in backward time inside the training domain. At the same time, the same increase in order makes EDMD less and less accurate inside the same domain. This is not surprising for EDMD because it seeks to approximate the dynamics within a Koopman-invariant subspace for increasing k, and Koopman mode expansions blow up at the “Turning point line”, as shown both analytically and numerically by Page and Kerswell [44]. Interestingly, however, EDMD becomes less accurate even within the domain of linearization under increasing k. This is clearly visible in Fig. 3d which shows spurious, growing oscillations in the EDMD predictions close to the fixed point.

In summary, of the three methods tested, DDL makes the most accurate predictions in forward time. This remains true in backward time as long as the trajectory remains in the training range used for the three methods, even if this range is larger than the theoretical domain of linearization. Inside the training range, an increase of the order k of the monomials used increases the accuracy of DDL but introduces growing errors in EDMD.

3D linear system studied via nonlinear observables

Wu et al. [60] studied the ability of DMD to recover a 3D linear system based on the time history of three nonlinear observables evaluated on the trajectories of the system. To define the linear system, they use a block-diagonal matrix and a basis transformation matrix of the form

| 59 |

to define the linear discrete dynamical system

| 60 |

The linear change of coordinates rotates the real eigenspace of corresponding to the eigenvalue c and hence introduces non-normality in system (60). This system is then assumed to be observed via a 3D nonlinear observable vector

| 61 |

Ideally, DMD should closely approximate the linear dynamics of system (60) because the observable function defined in Eq. (61) is close to the identity and has only weak nonlinearities. Wu et al. [60] find, however, that this system poses a challenge for DMD, which produced inaccurate predictions for the spectrum of .

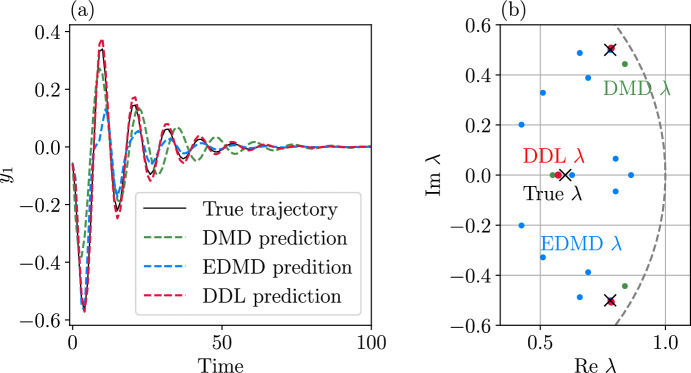

Following one of the parameter settings of Wu et al. [60], we set , , , , and . We generate three training trajectories, each containing 100 iterations of system (60). We then compute the predictions of an order- DDL model and compare them to those of DMD and EDMD on a separate test trajectory not used in training these three methods. The predictions and the spectrum obtained from the three methods are shown in Fig. 4.

Fig. 4.

Predictions by DMD, EDMD, and DDL on the discrete dynamical system (59)–(61). a Predicted and true components of a test trajectory. b Spectra identified by DMD, EDMD, and DDL superimposed on the true spectrum (marked by crosses). The dashed line represents the unit circle. (Color figure online)

The predictions of DMD and EDMD can only be considered accurate for very low amplitude oscillations, while DDL returns accurate predictions throughout the whole trajectory. This example consists of linear dynamics and monomial observables of the state, and hence should be an ideal test case for EDMD. Yet, EDMD is inaccurate in identifying the spectrum of system (60). Indeed, as seen in Fig. 4b, a number of spurious eigenvalues arise from EDMD, both real and complex. DMD performs clearly better but it is still markedly less accurate than DDL. These inaccuracies in the predictions of EDMD and DMD spectra are also reflected by considerable errors in their predictions for trajectories, as seen in Fig. 4a. In contrast, DDL produces the most accurate prediction for the test trajectory.

Damped and periodically forced Duffing equation

We consider the damped and forced Duffing equation

| 62 |

with damping coefficient , forcing frequency and forcing amplitude . We perform a change of coordinates that moves the stable focus at to the origin and makes the linear part block-diagonal. The resulting system is of the form

| 63 |

where

| 64 |

and is the transformed image of the physical forcing vector in (62). We first consider the unforced system with . In this case, the 2D slow SSM of the fixed point coincides with the phase space and hence no further model reduction is possible. However, since the non-resonance conditions (34) hold for the linear part (64), the system is analytically linearizable near the origin. The linearizing transformation and its inverse can both be computed from Eq. (63), as outlined in Eq. (41). For reference, we carry out this linearization analytically up to order . The Taylor series of the linearization is estimated to converge for . The details of the calculation can be found in the repository [28].
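The block-diagonalizing change of coordinates used above can be sketched generically. For a 2×2 real Jacobian with eigenvalues α ± iβ (β > 0) and eigenvector u + iw for α + iβ, the real basis T = [u w] gives T⁻¹AT = [[α, β], [−β, α]]. Equation (62) is not reproduced here, so purely for illustration we assume the unforced double-well Duffing oscillator ẍ + cẋ − x + x³ = 0, whose Jacobian at the stable focus (x, ẋ) = (1, 0) is [[0, 1], [−2, −c]]; this choice of model and of c is hypothetical.

```python
import numpy as np

def real_block_form(A):
    """Return T and T^{-1} A T for a 2x2 real A with complex eigenvalues alpha +/- i beta.

    With A (u + i w) = (alpha + i beta)(u + i w), the real basis T = [u, w]
    gives T^{-1} A T = [[alpha, beta], [-beta, alpha]].
    """
    lam, V = np.linalg.eig(A)
    j = int(np.argmax(lam.imag))          # eigenvalue with beta > 0
    v = V[:, j]
    T = np.column_stack([v.real, v.imag])
    return T, np.linalg.solve(T, A @ T)

c = 0.1                                   # hypothetical damping coefficient
A = np.array([[0.0, 1.0], [-2.0, -c]])    # Jacobian of x'' + c x' - x + x^3 = 0 at x = 1
T, Lam = real_block_form(A)
print(np.round(Lam, 6))
```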

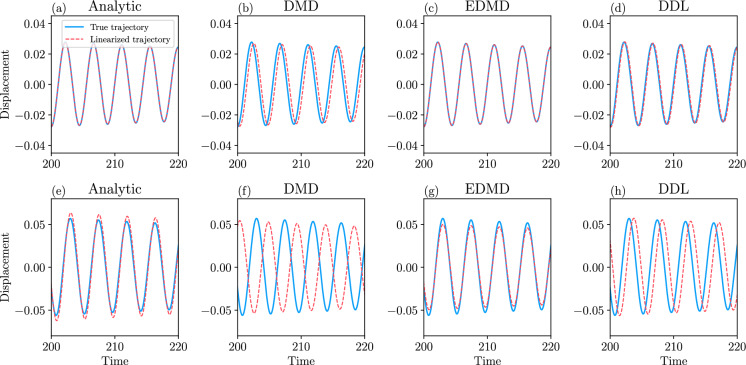

We now compare the analytic linearization with DMD, EDMD and DDL, with all three trained on the same three trajectories, launched both inside and outside the domain of convergence of the analytic linearization. The polynomial order of approximation is for both the EDMD and the DDL algorithms. The performance of the various methods is compared in Fig. 5. Close to the fixed point, in the domain of convergence of the analytic linearization, all three methods perform well. Moving away from the fixed point, the analytic linearization is no longer possible. Both DMD and EDMD perform worse, while DDL continues to accurately linearize the system even outside the domain of convergence of the analytic linearization.

Fig. 5.

Comparison of the time evolution of the linearized trajectories (red) and the full trajectories of the nonlinear system (63) (blue). a–d Analytic linearization, DMD, EDMD and DDL models trained and evaluated on trajectories inside the domain of convergence. e–h Same as (a)–(d) but outside the domain of convergence of the analytic linearization. (Color figure online)

Using formula (55) and our DDL-based model, we can also predict the response of system (63) for the forcing term of the form

| 65 |

without using any data from the forced system. As the forced DDL model (55) is nonlinear, it can capture non-linearizable phenomena such as coexisting stable and unstable periodic orbits arising under the forcing. We can also make a forced response prediction from DMD simply by setting in Eq. (55). As an inhomogeneous linear system of ODEs, however, this forced DMD model cannot predict coexisting stable and unstable periodic orbits.

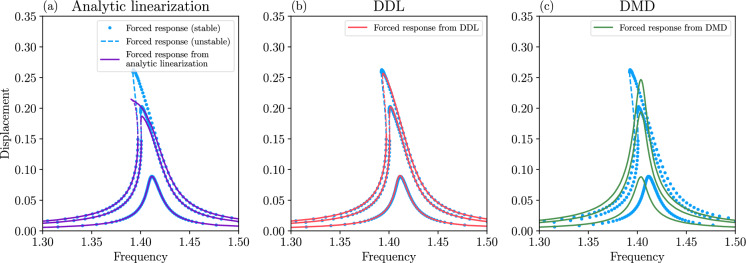

In Fig. 6, we compare the forced predictions of the analytic linearization, DMD, and DDL to those computed from the nonlinear system directly via the continuation software COCO of Dankowicz and Schilder [14]. Since the forced versions of the linearized models are also nonlinear, we use the same continuation software to determine their stable and unstable branches of periodic orbits.

Fig. 6.

Periodic response of the Duffing oscillator under the forcing (65). The three distinctly colored forced response curves correspond to . a Actual nonlinear forced response from numerical continuation (blue) and prediction for it from analytic linearization (purple) b forced response predictions from DDL (red) c forced response predictions from DMD (green). The training data for panels b and c is the same unforced trajectory data set as the one used in Fig. 5. (Color figure online)

As expected, the analytic linearization is accurate while the forced response is inside the domain of convergence but deteriorates quickly for larger amplitudes. DMD gives good predictions for the peaks of the forced response diagrams, but cannot account for any of the nonlinear softening behavior, i.e., the overhangs in the curves that signal multiple coexisting periodic responses at the same forcing frequency. In contrast, while the DDL model of order starts becoming inaccurate for peak prediction at larger amplitudes outside the domain of analytic linearization, it continues to capture accurately the overhangs arising from non-linearizable forced response away from the peaks. Notably, DDL even identifies the unstable branches (in dashed lines) of the periodic response accurately. For completeness, Appendix F also shows results of the approximate DDL obtained by assuming (56).

Water sloshing experiment in a tank

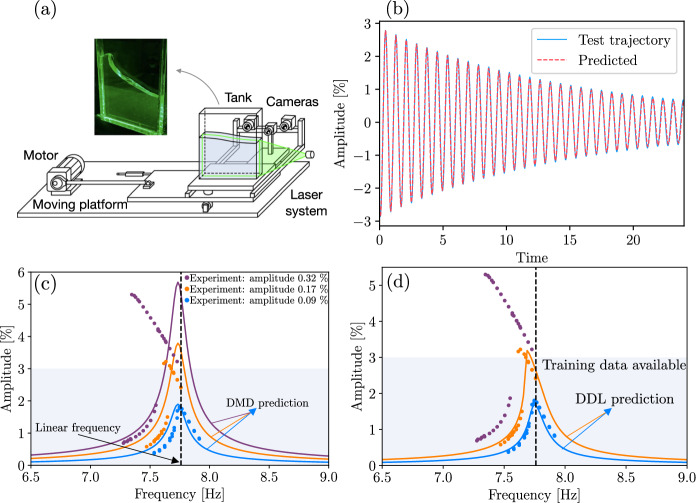

In this section, we analyze experimental data generated by Bäuerlein and Avila [5] for forced and unforced fluid sloshing in a tank. Previous studies of this data set used nonlinear SSM-reduction to predict forced response [2, 3, 12]. Here we will use DMD and DDL to extract and compare linear reduced-order models from unforced trajectory data, then use them to predict and verify forced response curves obtained from forced trajectory data. Neither DMD nor DDL is expected to outperform the fully nonlinear approach of SSM reduction, so we will only compare them against each other.

The tank in the experiments is mounted on a platform that is displaced sinusoidally in time with various forcing amplitudes and frequencies (Fig. 7a). To train DMD and DDL, we use unforced sloshing data obtained by freezing the movement of the tank near a resonance and recording the ensuing decaying oscillations of the water surface with a camera until they die out. The resulting videos serve as input data to our analysis. Specifically, the horizontal position of the center of mass of the fluid is extracted from each video frame, tracked over time, and used as the single scalar observable.
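The image processing behind this observable is not detailed here. As a hedged sketch only, assuming each frame has already been segmented into a binary water mask and that every water pixel carries equal weight (a uniform-density, depth-averaged approximation), the horizontal center of mass is a weighted mean over pixel columns. The thresholding step and the function names are hypothetical.

```python
import numpy as np


def horizontal_center_of_mass(fluid_mask, pixel_width=1.0):
    """Horizontal centroid of the fluid region in one frame.

    fluid_mask: 2D boolean/0-1 array (rows: vertical, columns: horizontal)
    marking pixels classified as water; each water pixel has equal weight."""
    mask = np.asarray(fluid_mask, dtype=float)
    x = np.arange(mask.shape[1]) * pixel_width     # horizontal pixel positions
    column_mass = mask.sum(axis=0)                 # water pixels per column
    return float(x @ column_mass / column_mass.sum())


def observable_from_frames(frames, threshold):
    """One scalar per frame; thresholding is a hypothetical segmentation step."""
    return np.array([horizontal_center_of_mass(frame > threshold) for frame in frames])


# Synthetic 4 x 6 frame with water piled up on the right-hand side.
frame = np.array([[0, 0, 0, 0, 9, 9],
                  [0, 0, 9, 9, 9, 9],
                  [9, 9, 9, 9, 9, 9],
                  [9, 9, 9, 9, 9, 9]])
print(observable_from_frames([frame], threshold=5))   # right of the center 2.5
```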

Fig. 7.

a Schematic representation of the experimental setup (adopted from [12]). b Prediction of the decay of a test trajectory with order- DDL. c Prediction of the forced response from DMD. d Prediction of the forced response from DDL. Light shading indicates the domain in which training data for DMD and DDL were available.

During such a resonance decay experiment, the system approaches its stable unforced equilibrium via oscillations that are dominated by a single mode. In terms of the phase space geometry, this means an approach to a stable fixed point along its 2D slow SSM tangent to the slowest 2D real eigenspace E. As we only have a single observable from the videos, we use delay embedding to generate a higher-dimensional observable space that can accommodate the 2D manifold . As discussed by Cenedese et al. [12], the Takens embedding theorem requires an observable space of dimension at least 2d + 1 = 5 (with d = 2) for this purpose. In this space, turns out to be nearly flat for short delays (see [2]), which allows us to use a linear approximation for its parametrization. The reduced coordinates on can then be identified via a singular value decomposition of the data after removing initial transients from the experimental data. The end of the transients can be identified as the point beyond which a frequency analysis of the data shows only one dominant frequency, the imaginary part of the eigenvalue corresponding to E.
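The embedding and coordinate-identification steps can be sketched as follows. This is a minimal illustration under stated assumptions, not the SSMLearn implementation: the scalar signal is assumed to be measured relative to the equilibrium and already stripped of transients, the delay is one sample, and a synthetic single-mode decay stands in for the experimental data. For such a signal the 5D delay vectors lie in a 2D plane, so the flat (SVD-based) approximation of the slow SSM is exact.

```python
import numpy as np


def delay_embed(y, dim=5, lag=1):
    """Delay-embed a scalar series: column j is (y[j], y[j+lag], ..., y[j+(dim-1)*lag])."""
    y = np.asarray(y, dtype=float)
    n = len(y) - (dim - 1) * lag
    return np.stack([y[i * lag: i * lag + n] for i in range(dim)])


def flat_ssm_coordinates(Y, d=2):
    """Flat (linear) approximation of a d-dim slow SSM: the d leading left
    singular vectors V of the embedded data and the coordinates V^T Y."""
    U, _, _ = np.linalg.svd(Y, full_matrices=False)
    V = U[:, :d]
    return V, V.T @ Y


def dominant_frequency(y, dt):
    """Angular frequency of the largest FFT peak, excluding the mean."""
    spectrum = np.abs(np.fft.rfft(y - np.mean(y)))
    freqs = np.fft.rfftfreq(len(y), d=dt)
    return 2 * np.pi * freqs[1 + np.argmax(spectrum[1:])]


# Synthetic post-transient decay: a single damped mode with frequency 2.
dt = 0.05
t = np.arange(2000) * dt
y = np.exp(-0.05 * t) * np.cos(2.0 * t)

Y = delay_embed(y, dim=5)                 # 5 x 1996 observable data
V, eta = flat_ssm_coordinates(Y, d=2)     # 2D reduced coordinates
print(dominant_frequency(y, dt))          # close to 2
```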

All this analysis has been carried out using the publicly available SSMLearn package of Cenedese et al. [11]. With identified, we use the DDL method with order to find the linearizing transformation and the linearized dynamics on . In Fig. 7b, we show the prediction of the model on a decaying trajectory reserved for testing. The displacements are reported as percentages of the tank depth. In Fig. 7c and d, we show predictions from DMD and DDL models for the forced response, compared with the experimentally observed response. Since the exact forcing function is unknown, we follow the calibration procedure outlined by Cenedese et al. [12] to find an equivalent forcing amplitude in the reduced-order model.

We present data for three forcing amplitudes. The DDL predictions are accurate up to amplitude, even capturing the softening trend. The largest-amplitude forcing resulted in a response significantly outside the range of the training data; in this range, we were unable to find a converged forced response from DDL. We also show the corresponding DMD predictions in Fig. 7c. Although the linear response can formally be evaluated for any forcing amplitude, DMD shows no trace of the softening trend and is inaccurate even for low forcing amplitudes.

Model reduction and foliation in a nonlinear oscillator chain

As a final example, we consider the dynamics of a chain of nonlinear oscillators, which has been analyzed in the SSMLearn package [11]. Denoting the positions of the oscillators as for , we assume that the springs and dampers are linear, except for the first oscillator. The non-dimensionalized equations of motion can be written as

| 66 |

where ; the springs have the same linear stiffness which is encoded in via nearest-neighbor coupling. The damping is assumed to be proportional, i.e., we specifically set .
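The linear part of such a chain and its slowest modes can be sketched numerically. The boundary conditions, the number of oscillators, the unit masses and the damping coefficients below are hypothetical choices for illustration; they are not the parameters of Eq. (66). With proportional damping C = αI + βK, each mode with natural frequency ω_j satisfies λ² + (α + βω_j²)λ + ω_j² = 0, so for α = 0 it decays at rate βω_j²/2 and the lowest-frequency modes are the slowest.

```python
import numpy as np


def chain_linear_part(n=5, stiffness=1.0, alpha=0.0, beta=0.01):
    """First-order matrix of the linear part of a chain of n unit masses.

    Illustrative assumptions: both chain ends attached to walls, equal
    nearest-neighbor stiffness, proportional damping C = alpha*I + beta*K."""
    K = stiffness * (2 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1))
    C = alpha * np.eye(n) + beta * K
    A = np.block([[np.zeros((n, n)), np.eye(n)],
                  [-K, -C]])
    return A, K


A, K = chain_linear_part()
lam = np.linalg.eigvals(A)
lam = lam[np.argsort(-lam.real)]   # least negative real part (slowest decay) first

# The first four eigenvalues (two complex-conjugate pairs) span the 4D spectral
# subspace of the two slowest modes.
print(lam[:4])
```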

Three numerically generated training trajectories show decay to the fixed point, as expected from the damped nature of the linear part of the system. In this example, we also seek to capture some of the transients, which motivates us to select the slow SSM to be 4D, tangent to the spectral subspace spanned by the slowest mode ( and the second slowest mode (). As the mode corresponding to does disappear over time from the decaying signal, there is no resonance between the eigenvalues and hence Theorem 4 is applicable. As already noted, numerical data from a generic physical system described by Eq. (66) will be free from resonances. An exception is a resonance arising from a perfect symmetry, but such a resonance is not excluded by Theorem 4 and hence is amenable to DDL.
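Whether a given set of SSM eigenvalues is resonant can be screened numerically. The sketch below checks the standard Poincaré-type condition λ_j ≠ Σ_k m_k λ_k for nonnegative integer multi-indices m with 2 ≤ |m| ≤ N; we assume this is the relevant form of the condition, and the precise statement of Theorem 4 should be taken from the paper. The example spectra and the tolerance are hypothetical.

```python
import itertools

import numpy as np


def inner_resonances(lam, max_order=5, tol=1e-8):
    """List (j, m) with lam[j] ~= sum_k m[k]*lam[k] for nonnegative integer
    multi-indices m with 2 <= |m| <= max_order."""
    lam = np.asarray(lam, dtype=complex)
    found = []
    for order in range(2, max_order + 1):
        for combo in itertools.combinations_with_replacement(range(len(lam)), order):
            m = np.bincount(combo, minlength=len(lam))   # multi-index of this combination
            value = m @ lam
            for j, lj in enumerate(lam):
                if abs(lj - value) < tol:
                    found.append((j, tuple(m)))
    return found


# A single decaying complex pair has no inner resonances; a second pair at
# exactly twice the first one is in 1:2 resonance with it.
print(inner_resonances([-0.1 + 1j, -0.1 - 1j]))
print(inner_resonances([-0.1 + 1j, -0.1 - 1j, -0.2 + 2j, -0.2 - 2j], max_order=3))
```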

Within the 4D SSM , we also demonstrate how to optimally reduce the dynamics to its 2D slowest SSM . As explained in Sect. 4.3.3, to find the trajectory in with which a given trajectory close to ultimately synchronizes, we need to project along a point of the full trajectory first onto orthogonally to obtain a point . We then need to identify the stable fiber in for which holds. Finally, one has to project along to locate its base point . The trajectory through in will then be the one with which the full trajectory will synchronize faster than with any other trajectory. As noted in Sect. 4.3.3, computing the full nonlinear stable foliation

| 67 |

of is simple in the linearized coordinates, in which it can be achieved via a linear projection along the faster eigenspace .
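In linearized coordinates the dynamics are linear, so the fibers of the stable foliation are translates of the faster eigenspace, and the base point of the fiber through a point is obtained by the spectral (oblique) projection onto the slow eigenspace along the fast one. The sketch below illustrates this on a hypothetical 4D linear system written in a non-orthogonal basis; mapping the base point back through the inverse of the linearizing transformation is not shown. The trajectory through the base point and the original trajectory converge to each other at the fast rate.

```python
import numpy as np


def slow_projector(A, d):
    """Projector onto the span of the d slowest eigenvectors of A along the span
    of the remaining, faster ones. d must cover complete conjugate pairs."""
    lam, V = np.linalg.eig(A)
    order = np.argsort(-lam.real)              # slowest (least negative) first
    V = V[:, order]
    W = np.linalg.inv(V)                       # rows: left eigenvectors
    return np.real(V[:, :d] @ W[:d, :])


def flow(A, x0, t):
    """exp(A t) x0 for a diagonalizable A."""
    lam, V = np.linalg.eig(A)
    return np.real(V @ (np.exp(lam * t) * np.linalg.solve(V, x0)))


# Hypothetical 4D linear dynamics: slow pair -0.05 +/- 1i, fast pair -0.5 +/- 2i,
# expressed in a non-orthogonal basis T.
Lam = np.array([[-0.05, 1.0, 0.0, 0.0],
                [-1.0, -0.05, 0.0, 0.0],
                [0.0, 0.0, -0.5, 2.0],
                [0.0, 0.0, -2.0, -0.5]])
T = np.array([[1.0, 0.3, 0.0, 0.1],
              [0.0, 1.0, 0.2, 0.0],
              [0.1, 0.0, 1.0, 0.4],
              [0.0, 0.2, 0.0, 1.0]])
A = T @ Lam @ np.linalg.inv(T)

P = slow_projector(A, d=2)
x0 = np.ones(4)
base = P @ x0                      # base point of the fiber through x0
t = 20.0
gap = np.linalg.norm(flow(A, x0, t) - flow(A, base, t))
print(gap / np.linalg.norm(flow(A, x0, t)))   # small: the solutions synchronize
```

An orthogonal projection onto the slow eigenspace would generally pick a different base point, whose trajectory converges to the full one only at the slow rate.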

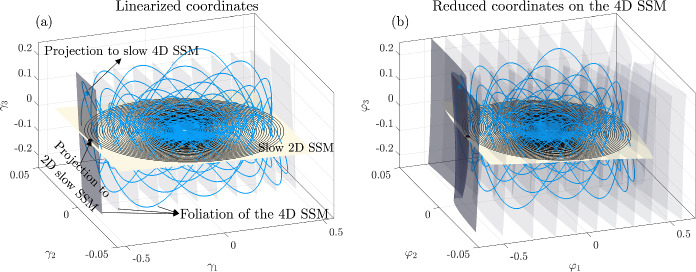

We use a third-order polynomial approximation for based on the three training trajectories. The polynomials depend on the reduced coordinates we introduce along E using a singular value decomposition of the trajectory data. These reduced coordinates are shown in Fig. 8, where we show a representative training trajectory, the 2D slow SSM , as well as the foliation (67) computed from DDL for this specific problem.
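A polynomial parametrization of this kind can be fitted by ordinary least squares on monomials of the reduced coordinates. The sketch below is a generic regression under stated assumptions (fixed point at the origin, hence no constant term; function names are hypothetical), not the SSMLearn routine. It is checked on synthetic data lying exactly on a cubic graph over 2D coordinates.

```python
import itertools

import numpy as np


def monomials(eta, order=3):
    """Rows: all monomials of the reduced coordinates eta (d x N) of degree 1..order."""
    d = eta.shape[0]
    rows = [np.prod(eta[list(c), :], axis=0)
            for deg in range(1, order + 1)
            for c in itertools.combinations_with_replacement(range(d), deg)]
    return np.array(rows)


def fit_parametrization(Y, eta, order=3):
    """Least-squares coefficients W such that Y ~ W @ monomials(eta, order)."""
    Phi = monomials(eta, order)
    W, *_ = np.linalg.lstsq(Phi.T, Y.T, rcond=None)
    return W.T


# Synthetic check: data lying exactly on a cubic graph over 2D coordinates.
rng = np.random.default_rng(0)
eta = rng.uniform(-1.0, 1.0, size=(2, 200))
Y = np.vstack([eta, eta[0] ** 2 - eta[1] ** 3])
W = fit_parametrization(Y, eta)
print(np.max(np.abs(W @ monomials(eta) - Y)))   # round-off level
```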

Fig. 8.

Reduced coordinates of the 4D SSM for the oscillator chain. The slow 2D SSM , a typical trajectory and its projection to the slow SSM along the fibers are also shown. Panel a shows the linearized coordinates computed from DDL, and b shows their image under the inverse of the linearizing transformation. The order of approximation used in DDL is 3

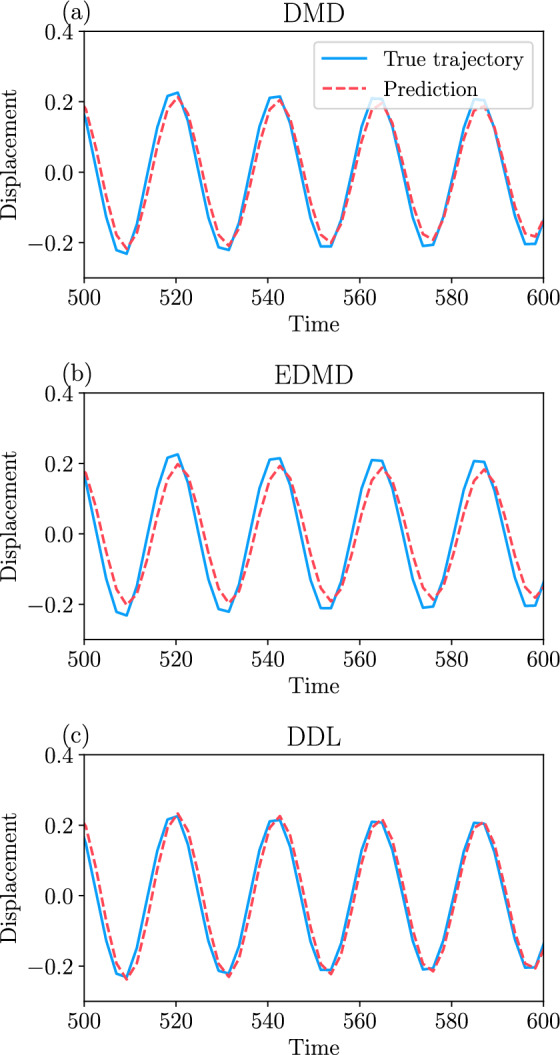

We also evaluate the DDL-based predictions on and by comparing them to predictions from DMD and EDMD. Performing DMD and EDMD with the data first projected to E can be interpreted as finding the linear approximation to the dynamics in . Similarly, performing DMD and EDMD with the data first projected to can be interpreted as finding the linear approximation to the dynamics in . These are to be contrasted with performing DDL that finds the linearized reduced dynamics within , which in turn contains the linearized reduced dynamics within . Figure 9 shows that DMD and EDMD both perform similarly to DDL on . However, the 2D DMD and EDMD results obtained for are noticeably less accurate than the DDL results.
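For reference, the DMD step applied to already-projected coordinates reduces to a least-squares fit of a linear map between consecutive snapshots. The sketch below shows this without rank truncation, together with the conversion of the discrete-time eigenvalues μ to continuous-time ones via log(μ)/Δt; it is checked on synthetic snapshots from a 2D linear system with eigenvalues −0.1 ± i. EDMD would replace the snapshot matrix by a dictionary of nonlinear observables, which is not shown.

```python
import numpy as np


def dmd(X, dt):
    """Least-squares linear map A with X[:, 1:] ~ A @ X[:, :-1] (DMD without rank
    truncation) and its continuous-time eigenvalues log(mu)/dt."""
    X0, X1 = X[:, :-1], X[:, 1:]
    A = X1 @ np.linalg.pinv(X0)
    mu = np.linalg.eigvals(A).astype(complex)
    return A, np.log(mu) / dt


# Synthetic snapshots of 2D linear dynamics with eigenvalues -0.1 +/- 1i,
# e.g. data already projected onto a 2D subspace.
dt = 0.1
M = np.exp(-0.1 * dt) * np.array([[np.cos(dt), np.sin(dt)],
                                  [-np.sin(dt), np.cos(dt)]])
X = np.empty((2, 100))
X[:, 0] = [1.0, 0.0]
for k in range(99):
    X[:, k + 1] = M @ X[:, k]

A, lam = dmd(X, dt)
print(lam)   # approximately -0.1 +/- 1i
```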

Fig. 9.

Predictions of a DMD, b EDMD, and c DDL models on a test trajectory of the oscillator chain. The order of approximation for DDL and EDMD is

Conclusions

We have given a new mathematical justification for the broadly used DMD procedure that eliminates the shortcomings of previously proposed justifications. Specifically, we have shown that under specific non-degeneracy conditions on the n-dimensional dynamical system, on observable functions defined for that system, and on the actual data from these observables, DMD gives a leading-order approximation to the observable dynamics on an attracting d-dimensional spectral submanifold (SSM) of the system.

This result covers both discrete and continuous dynamical systems, even for . Our Theorem 1 makes only explicit non-degeneracy assumptions on the observables, which hold with probability one in practical applications. This is to be contrasted with prior approaches to DMD and its variants based on the Koopman operator, whose assumptions on the observables fail with probability one for generic observables.

Our approach also yields a systematic procedure that gradually refines the leading-order DMD approximation of the reduced observable dynamics on SSMs to higher orders. This procedure, which we call data-driven linearization (DDL), builds a nonlinear coordinate transformation under which the observable dynamics become linear on the attracting SSM. We have shown on several examples that DDL indeed outperforms DMD and extended DMD (EDMD), as expected. In addition to this performance increase, DDL also enables the prediction of truly nonlinear forced response from unforced data within its training range. Although we have only illustrated this for periodically forced water sloshing experiments in a tank, recent results on aperiodically time-dependent SSMs by Haller and Kaundinya [19] allow us to predict more general forced response using DDL trained on unforced observable data.

Despite all these advantages, DDL (as any linearization method) remains applicable only in parts of the phase space where the dynamics are linearizable. Yet SSMs continue to exist across basin boundaries and hence are able to carry characteristically nonlinear dynamics with multiple coexisting attractors. For such nonlinearizable dynamics, data-driven nonlinear SSM-reduction algorithms, such as SSMLearn and fastSSM, are preferable and have shown high accuracy and predictive ability in a growing number of physical settings (see, e.g., [1, 3, 12, 13, 26, 27, 37]).


Acknowledgements

We are grateful to Matthew Kvalheim and Shai Revzen for several helpful comments on an earlier version of this manuscript.

Funding

Open access funding provided by Swiss Federal Institute of Technology Zurich. This work was supported by the Swiss National Science Foundation.

Data availability

All data and codes used in this work are downloadable from the repository https://github.com/haller-group/DataDrivenLinearization.

Declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Footnotes

Assuming that each coordinate component of the full phase space vector of system (1) falls in a span of the same set of Koopman eigenfunctions , one defines the Koopman mode associated with as the vector for which (see, e.g., Williams et al. [58]).

More precisely, Fenichel’s foliation results become applicable after the wormhole construct in Proposition B1 of Eldering et al. [17] is applied to extend smoothly into a compact normally attracting invariant manifold without boundary. This is needed because Fenichel’s results only apply to compact normally attracting invariant manifolds with an empty or overflowing boundary, whereas the boundary of is originally inflowing.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Alora, J.I., Cenedese, M., Schmerling, E., Haller, G., Pavone, M.: Practical deployment of spectral submanifold reduction for optimal control of high-dimensional systems. IFAC PapersOnLine 56–2, 4074–4081 (2023)

- 2. Axås, J., Haller, G.: Model reduction for nonlinearizable dynamics via delay-embedded spectral submanifolds. Nonlinear Dyn. (2023). 10.1007/s11071-023-08705-2

- 3. Axås, J., Cenedese, M., Haller, G.: Fast data-driven model reduction for nonlinear dynamical systems. Nonlinear Dyn. (2022). 10.1007/s11071-022-08014-0

- 4. Bates, D.M., Watts, D.G.: Nonlinear Regression Analysis and Its Applications. Wiley, Hoboken (1988)

- 5. Bäuerlein, B., Avila, K.: Phase lag predicts nonlinear response maxima in liquid-sloshing experiments. J. Fluid Mech. (2021). 10.1017/jfm.2021.576

- 6. Bettini, L., Kaszás, B., Zybach, B., Dual, J., Haller, G.: Model reduction to spectral submanifolds via oblique projection. Preprint (2024)

- 7. Bollt, E.M., Li, Q., Dietrich, F., Kevrekidis, I.: On matching, and even rectifying, dynamical systems through Koopman operator eigenfunctions. SIAM J. Appl. Dyn. Syst. 17(2), 1925–1960 (2018)

- 8. Budišić, M., Mohr, R., Mezić, I.: Applied Koopmanism. Chaos Interdiscip. J. Nonlinear Sci. 22, 047510 (2012)

- 9. Buza, G.: Spectral submanifolds of the Navier–Stokes equations. SIAM J. Appl. Dyn. Syst. 23(2), 1052–1089 (2024)