Abstract

OBJECTIVES

Programmatic assessment approaches can be extended to the design of allied health professions training to enhance trainees' learning. The Australasian College of Physical Scientists and Engineers in Medicine worked with assessment specialists at the Australian Council for Educational Research and Amplexa Consulting to revise its medical physics and radiopharmaceutical science training programs. A central aim of the revisions was to provide registrars with standardized training support throughout the 3 years, helping them complete the program within the expected timeframe by giving timely and constructive feedback on their progression.

METHODS

We used the principles of programmatic assessment to revise the assessment methods and progression decisions in the 3 training programs.

RESULTS

We revised the 3-year training programs for diagnostic imaging medical physics, radiation oncology medical physics and radiopharmaceutical science in Australia and New Zealand, incorporating clear stages of training and associated progression points.

CONCLUSIONS

We discuss the advantages and difficulties that have arisen with this implementation. We found 5 key elements necessary for implementing programmatic assessment in these specialized contexts: embracing blurred boundaries between assessment of and for learning, adapting the approach to each specialized context, change management, engaging subject matter experts, and clear communication to registrars/trainees.

Keywords: programmatic assessment, training, medical, physics, radiopharmaceutical

Introduction

The Australasian College of Physical Scientists and Engineers in Medicine (ACPSEM) certifies and registers physicists, scientists, and engineers working in medicine in Australia and New Zealand. In 2020, the ACPSEM Board initiated the reform of the 3-year Radiation Oncology Medical Physics (ROMP) Training, Education and Assessment Program (TEAP) curriculum. This was followed by a request to reform the 3-year Diagnostic Imaging Medical Physics (DIMP) TEAP curriculum and the 3-year Radiopharmaceutical Sciences (RPS) TEAP curriculum. The Board required the revised curricula to be flexible enough to accommodate changing technology and to allow full-time trainees to complete training feasibly within a 3-year timeframe, while producing competent professionals who could work independently. The curricula and assessment programs for ROMP and DIMP were designed to align with the Australian Medical Council accreditation standards for Specialist Medical Programs, 1 despite this not being a formal requirement.

The original ROMP TEAP was established in 2003 and was structured around competency-based training. 2 Registrars (learners in training) needed to demonstrate not just clinical competency in treatment planning and advanced technologies such as brachytherapy, but also “domain-independent” generic and professional skills, such as the ability to conduct research and to communicate with a variety of stakeholders at the required standard. A registrar's competency was assessed both by their supervisor throughout training and by a certification panel through a final examination with written, oral, and practical components.

The DIMP TEAP was designed in 2011 and followed a similar structure to the ROMP program. 3 The radiopharmaceutical science program was established in 2016 but differed from the other 2 programs in that it was designed under the guidance of the Australian Council for Educational Research in collaboration with a small number of radiopharmaceutical science professionals. The radiopharmaceutical science TEAP implemented a progressive assessment program in which knowledge, skills, and professional capabilities were assessed through the submission of evidence and reports, which were reviewed by subject matter experts.

Registrars were typically taking 3 to 5 years to complete the TEAP. There was a lack of clarity about the expected graduate outcomes of the program, a lack of training support for supervisors, and a lack of timely and detailed feedback, and the ongoing assessments were found to be too onerous. 2 There were concerns about the lack of checkpoints to ensure progression, and about “over-assessment.” Some skills and content knowledge were assessed multiple times unnecessarily, while other skills required more observation and evaluation so that progressive judgments could be made about the registrar's preparedness for specialist practice.

The ACPSEM Board initiated the reform of the TEAP to address these issues. Many elements of the TEAP are essential for demonstrating readiness to work as a medical physicist and/or radiopharmaceutical scientist, but a new approach was needed to support registrars and supervisors toward timely completion, to give registrars opportunities to demonstrate progression in their skills over time, and to provide feedback so that registrars and supervisors could target and improve learning.4,5 A programmatic assessment approach was implemented to achieve these goals.

This article discusses the development of 3-year training programs for professional medical physicists and radiopharmaceutical scientists, how programmatic approaches to assessment were implemented, and some of the challenges of adapting programmatic assessment to these specialist contexts.

Methods

What is Programmatic Assessment?

Programmatic assessment is an approach that uses multiple complementary assessment methods to give a more holistic understanding of a learner's performance throughout a training program or course. No single assessment method can cover all elements of competence; however, when a variety of assessment methods are selected and used together, the combination can be optimized to give a broad, detailed picture of competence. 6

Traditionally, assessments are placed at the end of a training program to measure the learner's competence upon completion. However, these assessments give a picture of competence only at a particular point in training, on a particular day and time. In programmatic assessment, rich assessment data are collected over time to provide a clear picture of performance across multiple aspects of the curriculum being taught.6,7

There are 12 principles of programmatic assessment, outlined in the 2020 Ottawa Consensus Statement5,8:

1. Every assessment is but a data-point,

2. Every data-point is optimized for learning by giving meaningful feedback to the learner,

3. Pass/fail decisions are not given on a single data-point,

4. There is a mix of methods of assessment,

5. The method chosen should depend on the educational justification for using that method,

6. The distinction between summative and formative is replaced by a continuum of stakes,

7. Decision-making on learner progress is proportionally related to the stake,

8. Assessment information is triangulated across data-points towards an appropriate framework,

9. High-stakes decisions are made in a credible and transparent manner, using a holistic approach,

10. Intermediate review is made to discuss and decide with the learner on their progression,

11. Learners have recurrent learning meetings with mentors/coaches using a self-analysis of all assessment data,

12. Programmatic assessment seeks to gradually increase the learner's agency and accountability for their own learning through the learning being tailored to support individual learning priorities.

To ensure regular, timely feedback throughout the program and to support learning, it is ideal to have a series of low-stakes and high-stakes assessments that provide feedback on a registrar's strengths and weaknesses and guide both the registrar and supervisor along the training journey.6,7 As stated in principles 1 and 2, every assessment is a data-point that should be optimized for learning by giving meaningful feedback to the learner, in this case the registrar. Many “domain-independent skills,” such as communication, collaboration, and leadership, develop progressively over time and are better suited to assessments that provide longitudinal data about this progress. 6

Any high-stakes progress decision in the program should be made by triangulating this rich assessment data as an evidence base, as stated in principles 8 and 9. Multiple checkpoints over time, each drawing on this evidence base for high-stakes decision-making, are necessary to provide feedback, prompt further learning, and help maintain progress throughout the program. However, the types and number of low- and high-stakes assessments must be chosen carefully so that they are not too onerous for examiners, supervisors, and registrars.

Programmatic assessment is more than just a series of different assessments. It is imperative to plan the timing of low- and high-stakes assessments across the program so that there are multiple points at which assessment data are aggregated. Having multiple decision points throughout training not only ensures timely feedback to registrars and supervisors but also allows decisions about registrar progression to be made in a transparent and holistic manner, as outlined in principle 9.

The Rationale for Using Programmatic Assessment in Medical Physics and Radiopharmaceutical Science Training

Programmatic assessment is common in undergraduate medical education,8-11 and many specialist training programs in Australasia and overseas are moving to programmatic approaches in postgraduate education and training.7,11,12 Programmatic assessment works well in postgraduate settings, especially where training occurs in the workplace. The approach ensures that the assessment types selected best suit the learning outcomes being assessed, as discussed in principle 5, and where those outcomes relate directly to demonstrating a workplace-based skill, supervisors can assess a candidate's ability during regular workplace activities.

The rationale for adopting programmatic assessment in this specialist context was to increase assessment standardization, facilitate tracking of registrar progress, and reduce unnecessary, non-meaningful, and burdensome assessment. The approach provides a holistic view of performance across multiple assessment data points, with information gathered progressively over time from multiple assessors, giving a well-rounded view of how the registrar's competency develops. Assessing complex competencies, such as those needed for specialist medical physics and radiopharmaceutical science practice, requires a range of assessments and learning activities. 6

Programmatic assessment can be implemented in a range of ways, with assessment data points ranging from activities such as reflective reports and annotated treatment plans to the more traditional high-stakes examination. Each assessment method has its own strengths and weaknesses; implementing the methods holistically, in a way that supports learning and provides valuable feedback to registrars,4,5 helps support registrars and supervisors as they progress through the training program. Whatever assessment formats and number of assessments are chosen, it remains important that the assessment is reliable and valid, has educational impact and benefit for the registrar and supervisor, is acceptable in terms of timing and practicality, and has a reasonable cost. 13

The ACPSEM had already partially implemented a programmatic assessment approach in its RPS TEAP and, as part of this reform, wanted all 3 programs (ROMP, DIMP, and RPS) to share the same overarching programmatic assessment framework. As in any application of programmatic assessment to postgraduate medical education, it is important to think pragmatically about how the approach will work in practice in that training context. Assessments should be timed so that registrars receive timely and constructive feedback, and their number should not be too onerous, while still covering the breadth of the program's intended learning outcomes.

Design and Development of the Programmatic Systems

The ROMP curriculum revisions began in 2020. The Australian Council for Educational Research was requested to provide a desktop review of the program, identify any gaps in meeting the Australian Medical Council standards, and highlight any recent trends in assessment research and practice. The review was followed by stakeholder consultation which was conducted using targeted online questionnaires. From these 2 processes it was found that the original TEAP program required:

A re-structure of the Clinical Training Guide to meet Standard 3.1: Curriculum Framework;

Clearly identified program outcomes to meet Standard 2.2: Program Outcomes;

New program content to meet Standard 3.2: The content of the curriculum;

A new assessment process, programmatic assessment, to meet Standard 5.1: Assessment approach.

To address these revisions, working groups were formed consisting of ROMP specialists and Australian Council for Educational Research assessment experts. These groups reviewed content, defined program outcome statements, and designed a standardized model of assessment using programmatic assessment principles. As a result, the single ROMP Clinical Training Guide was replaced by 2 new documents: the ROMP TEAP Curriculum Framework and the ROMP TEAP Handbook. 14

In 2022, the Diagnostic Imaging Medical Physics (DIMP) TEAP curriculum revision began. Like ROMP, the DIMP TEAP developed program outcome statements and adopted a programmatic assessment approach, and working groups of DIMP specialists and assessment experts were formed to review content and design a standardized model of assessment. Through the revision process, the working groups were able to:

Reduce their 69 modules down to 10 key areas of education and remove duplication;

Develop their program outcome statements with defined learning outcomes;

Include the teaching of communication, leadership, health advocacy, professionalism and collaboration;

Develop a framework that introduces stages of training guiding registrars and supervisors to build knowledge in a sequential, scaffolded manner while still allowing flexibility for individual registrars and training sites.

As with ROMP, the single DIMP Clinical Training Guide was replaced by 2 new documents: the DIMP TEAP Curriculum Framework and the DIMP TEAP Handbook. 15

The RPS TEAP was originally established in 2016 and already contained some elements of programmatic assessment. The curriculum review for the RPS TEAP began toward the end of 2022 and was able to incorporate recent advances in evidence-based programmatic assessment practices as well as build on the programmatic assessment approaches being discussed in ROMP and DIMP.

All 3 programs have adopted the programmatic approach to assessment. The educational assessment framework for the ROMP, DIMP, and RPS TEAP was built upon the following needs of the program:

A program that builds a culture which promotes high-quality feedback for learning through ongoing training, support, and engagement with all stakeholders.

A program that supports a process of mentoring registrars through TEAP and has the flexibility to allow for personalized remediation for registrars that are experiencing difficulties.

The ability to enhance and improve the training and assessment resources based on feedback from stakeholders.

Successful completion of the revised TEAPs hinges on supervisors and assessors providing high-quality feedback and registrars using this feedback to improve their learning. Communication between the different groups needs to be effective to ensure difficulties are picked up as they arise. 5

The first step in the process was to define the Program Outcome Statements for the 3 TEAPs and build the curriculum framework around them. These statements describe the attributes that graduates of the TEAPs are expected to display once certified and to continue developing throughout their professional careers. The Program Outcome Statements fall under the following 7 categories 14:

Safety

Knowledge

Critical thinking/problem-solving

Communication and teamwork

Patient-focused

Educator

Continuing Professional Development

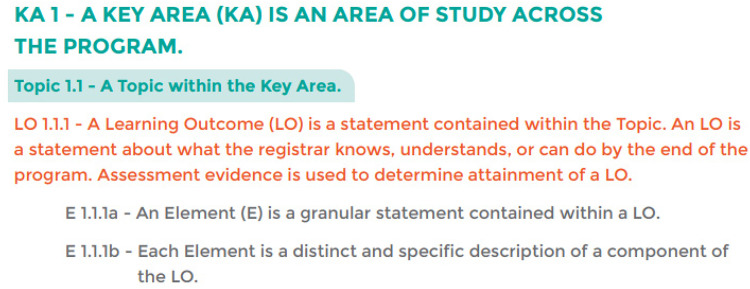

The curriculum framework contains Key Areas, Topics, Learning Outcomes and Elements (Figure 1). The Key Areas were determined by small working groups of specialists in each of the 3 areas of ROMP, DIMP, and RPS. They differ by program but represent overarching areas of study that split into smaller topics. For example, Key Area 1 of DIMP, “Fundamental Radiation Physics,” splits into 3 topics: “Radioactive decay and the interaction of ionising radiation with matter,” “Ultrasound physics,” and “Nuclear magnetic resonance physics.” 15

Figure 1.

Curriculum framework structure of Key Areas, Topics, Learning Outcomes and Elements. 15
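The 4-level structure of Key Areas, Topics, Learning Outcomes and Elements can be pictured as nested records, which may help anyone maintaining a curriculum framework in a structured, machine-readable form. The Python sketch below is purely illustrative and is not an ACPSEM data format: the class names `KeyArea`, `Topic`, and `LearningOutcome`, and the topic codes 1.1 to 1.3, are hypothetical; only the Key Area and topic titles come from the DIMP example above. Learning outcomes and elements are left empty because they are not reproduced here.

```python
from dataclasses import dataclass, field


@dataclass
class LearningOutcome:
    """A learning outcome for a topic, with the elements that demonstrate it."""
    statement: str
    elements: list[str] = field(default_factory=list)


@dataclass
class Topic:
    """A smaller unit of study within a Key Area."""
    code: str  # hypothetical numbering, e.g. "1.1"
    title: str
    learning_outcomes: list[LearningOutcome] = field(default_factory=list)


@dataclass
class KeyArea:
    """An overarching area of study that splits into topics."""
    number: int
    title: str
    topics: list[Topic] = field(default_factory=list)


# Key Area 1 of the DIMP curriculum with the 3 topic titles quoted in the text.
# Topic codes are hypothetical; learning outcomes are left empty because they
# are not reproduced in this article.
dimp_key_area_1 = KeyArea(
    number=1,
    title="Fundamental Radiation Physics",
    topics=[
        Topic("1.1", "Radioactive decay and the interaction of ionising radiation with matter"),
        Topic("1.2", "Ultrasound physics"),
        Topic("1.3", "Nuclear magnetic resonance physics"),
    ],
)

# Walk the hierarchy and print one line per topic.
for topic in dimp_key_area_1.topics:
    print(f"Key Area {dimp_key_area_1.number} > Topic {topic.code}: {topic.title}")
```

Nesting each level inside its parent mirrors the containment shown in Figure 1: a learning outcome and its elements always belong to exactly one topic, and a topic to exactly one Key Area.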

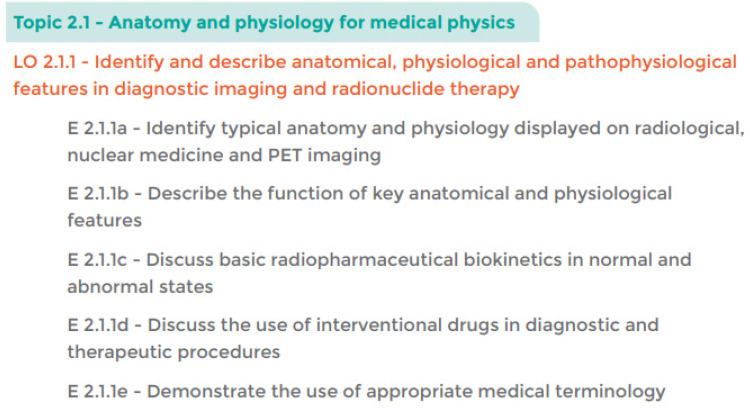

Learning outcomes and elements were then defined for each topic so that both supervisors and registrars knew the desired outcome of studying that topic and how registrars were to demonstrate their understanding. These learning outcomes were developed by the working groups in collaboration with education specialists at the Australian Council for Educational Research and Amplexa Consulting. An example of the learning outcome for Topic 2.1 and its associated elements is shown in Figure 2. The curriculum was then sent to stakeholders for consultation.

Figure 2.

Diagnostic Imaging Medical Physics Curriculum Framework, Topic 2.1. 15

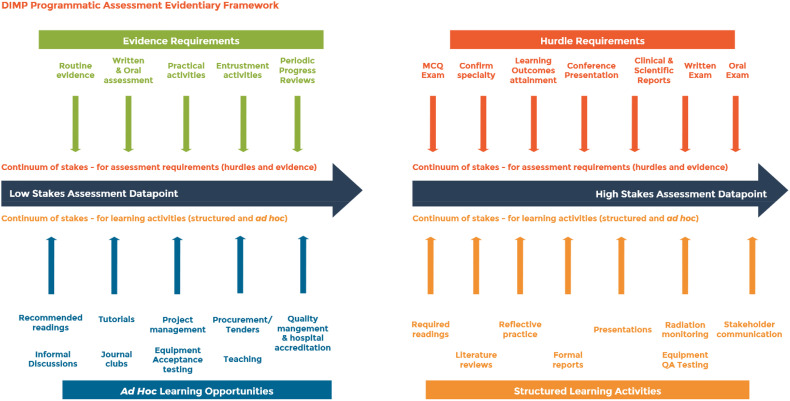

The curriculum in all 3 programs is implemented using a programmatic assessment approach. A Programmatic Assessment Evidentiary Framework was developed for each of the 3 TEAPs; the one for DIMP is shown in Figure 3. To achieve the learning outcomes of the TEAP, it is important that registrars are given opportunities to learn and progress in the necessary skills. The working groups and educational consultants worked together to devise structured learning activities and ad hoc learning opportunities that support learning across the Key Areas.

Figure 3.

Diagnostic Imaging Medical Physics Programmatic Assessment Evidentiary Framework. 15

These learning opportunities range from low stakes to high stakes: low-stakes opportunities include activities such as journal clubs and recommended reading lists, while high-stakes opportunities involve the submission of formal reports and literature reviews. As discussed in principle 6, the distinction between formative and summative assessment has been replaced by a continuum of stakes. Evidentiary and hurdle requirements likewise range from lower to higher stakes, from routine evidence to written and oral exams. Figure 3 aims to make the relative stakes of all datapoints explicit for learners. 16

Evidence generated from these assessments and learning activities forms a progression of low-stakes to high-stakes assessment datapoints, and decisions about the registrar's progression are proportional to the stakes, as outlined in principle 7. The lower-stakes assessments are designed as both an assessment and a teaching tool, enabling rich feedback to the registrar as they progress through the program. The evidence accumulated as the registrar progresses gives certification committees and supervisors rich data with which to evaluate the registrar's progress and support them in areas needing improvement. This ensures the committees can make evidence-based decisions in a holistic manner, as outlined in principle 9.

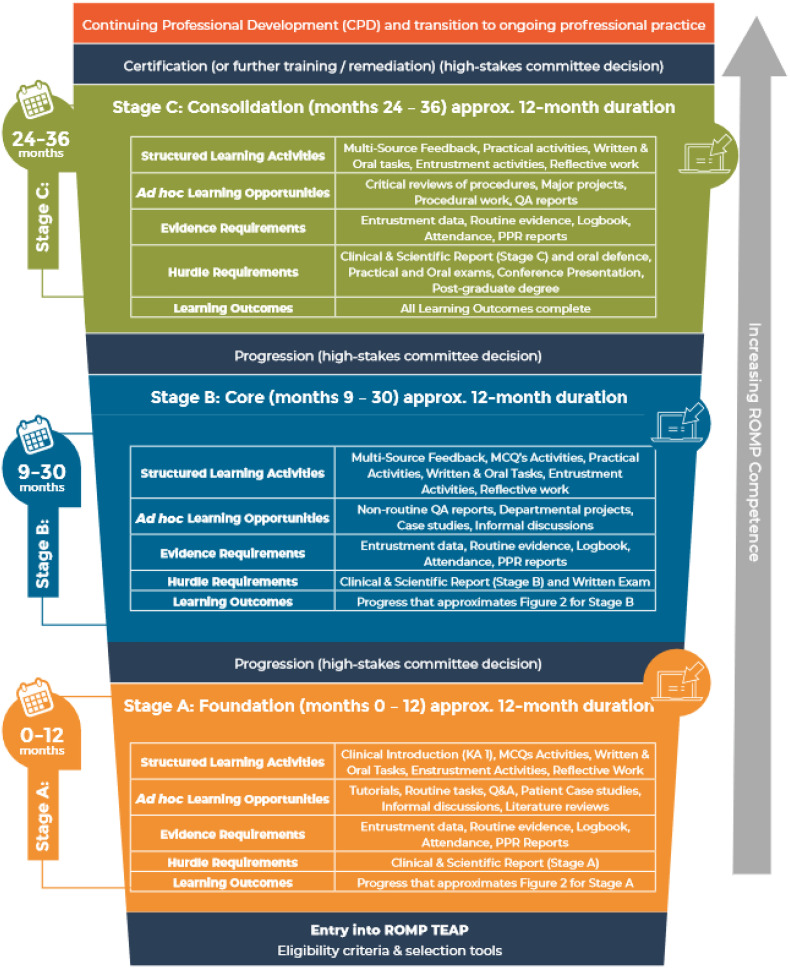

It is important to ensure timely and detailed feedback throughout the 3-year program. The working groups and education consultants split the program into 3 stages, with a hurdle at the end of each stage and a range of learning activities within each stage, so that registrars receive feedback during the stage and not just at the hurdle points. The committees then make the high-stakes decisions at the end of each stage. The TEAP Summary for ROMP is shown in Figure 4.

Figure 4.

Radiation Oncology Medical Physics Training, Education and Assessment Program Summary. 14

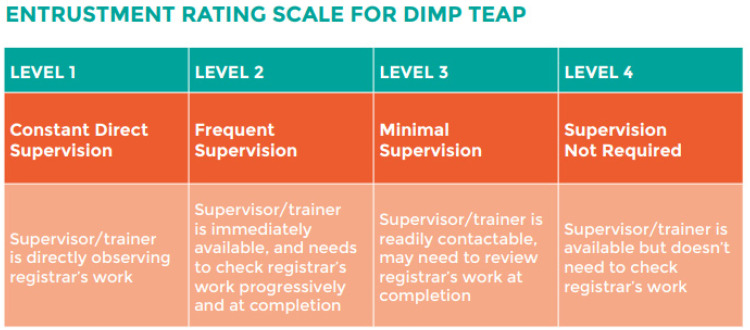

One challenge of having a large suite of learning activities, each generating its own evidence, is over-assessment. Where possible, assessments take the form of entrustment scale ratings, in which supervisors simply select their registrar's entrustment level for routine day-to-day tasks aligned to the learning outcomes (Figure 5). The entrustment scale was designed for ACPSEM and informed by the entrustment literature in health professions education. 17 The levels range from level 1 (needing constant direct supervision) to level 4 (supervision not required).

Figure 5.

Diagnostic Imaging Medical Physics Entrustment Rating Scale. 15

The Challenges of Making Programmatic Assessment “Digestible” in a Clinical Setting

Clinical training and assessment had long been part of the development and structure of the TEAP. The final assessment methods for major milestones balanced demonstration of fundamental knowledge, practical application, and analytical and problem-solving skills, via written, practical, and oral examinations. The milestones were a strong representation of the breadth of skills and knowledge required to perform the required tasks competently. However, the steps throughout the training program relied on the discretion of the supervisor and their choice of assessment. Clinical physicists and radiopharmaceutical scientists are not trained in assessment techniques and have often come from an undergraduate university environment that requires vastly different skills. As a result, registrars' training experiences varied significantly, with different registrars receiving different opportunities to learn, demonstrate their skills, and receive feedback before sitting the milestone examinations.

The move to programmatic assessment aligns each skill the registrar must demonstrate with both an appropriate method for learning that skill and an assessment of that learning in context. It also reduces the burden on registrars of providing evidence of learning that is not intrinsically linked to their tasks. The process now relies on supervisors and workplace assessors to provide meaningful feedback tied to well-described expectations and standards.

Although physical scientists have a natural culture of education, many have no training in providing meaningful feedback or in assessing in a clinical setting. Therefore, part of the implementation phase included presenting the new assessment framework, including the milestones and stages of training, as well as individual training sessions on each type of assessment. Ongoing training, in monthly sessions, has been a core part of the changing assessment landscape. Supervisors also have the opportunity for ongoing direct feedback with ACPSEM training coordinators, and their feedback is integrated to ensure they clearly understand their new requirements.

Many supervisors in the RPS TEAP have found the implementation of entrustment activities and progression interviews a useful tool for ensuring that important feedback conversations between supervisors and registrars take place. The wording of the entrustment scale has also helped support supervisors in these feedback conversations.

For many supervisors, the change in the assessment model has been significant compared with the methods they used previously. Although many can see the benefit of a model of assessment that is more tailored to learning and acknowledge that the approach makes sense, some supervisors have commented on the increased requirement for their engagement in learning via feedback. Previously, supervisor feedback was encouraged but not embedded in the learning model, and many supervisors recorded little to no feedback. The use of rubrics is also a new concept to many supervisors, and education on how to read and apply a rubric has been an important phase of the implementation. Registrars, by contrast, are taking to the new assessment model extremely well and generally have no issue with the types of assessment required; their questions relate mainly to clarification with their supervisors. In the RPS TEAP in particular, registrars have commented on the ease of use of some of the new assessment methods, such as records. Records are generated during normal work activities, annotated by the registrar, and submitted as evidence of learning. These types of assessment, which can be easily completed as part of day-to-day activities, have been viewed favorably by many registrars.

Conclusions

Considerations for Future Use of Programmatic Assessment in Other Areas of Allied Medical Education

From the revision of the ROMP, DIMP, and RPS TEAPs, 5 key elements became apparent in the use of programmatic assessment in these specialized clinical contexts. Many of these elements align with the barriers and enablers described by Torre et al for programmatic assessment in undergraduate and postgraduate Health Profession Education programmes. 18

Embracing Blurred Boundaries

When developing training programs, it is imperative to consider training and assessment together. Implementing the curriculum through a programmatic assessment approach requires careful thought about the types of training activities, the evidence requirements, and the timing of the activities. The formative/summative distinction should be blurred and replaced by a continuum of stakes, while fostering an understanding that these activities are a source of valuable feedback to registrars and supervisors. However, in doing so, communicating the assessment stakes clearly to registrars is critical, as is building a supportive learning environment for all.

Adaptability to Contexts

The overall model is the same across all 3 TEAP programs, but each has a slightly different instantiation. These differences arise partly from idiosyncrasies of each profession and partly from how the TEAP is applied in varied clinical departments. There is no “one-size-fits-all” approach to programmatic assessment, so it is important to apply the fundamental principles in ways that work in context.

Change Management Considerations

This type of reform is a massive undertaking. Such changes cannot be made quickly, as they require thorough consultation, drafting and iterative development, and stakeholder engagement and management. Most importantly, there needs to be a cultural change in thinking about the purpose and use of assessment, so that all stakeholders understand and, ideally, embrace the assessment and learning intentions. A shift in the culture of assessment, strong support from leadership, and organizational commitment to change were highlighted as necessary elements for implementing programmatic assessment in undergraduate and postgraduate Health Profession Education Programmes. 18

Engagement of Subject Matter Experts

Training programs are the tools to train future experts. These programs cannot be designed without the input of subject matter experts in the field who often also act as supervisors, and new training program structures should not be imposed on them. Any development of curriculum and evidentiary assessment frameworks requires their collaboration and input to be practically and successfully implemented and aligned with current practice.

Importance of Communication With Registrars

Finally, training programs are developed to support registrars, and it is important to communicate the benefit and necessity of changes clearly to them. It is imperative that any changes are transparent, that the reasons for the changes are highlighted, and that the ways in which they benefit the training program are emphasized. This finding mirrors the need to communicate clear expectations about the roles of assessment to students in undergraduate and postgraduate Health Profession Education Programmes. 18

The use of programmatic assessment approaches in workplace-based training programs provides the opportunity to give registrars and supervisors feedback throughout training, ensuring assessment is not just of learning but for learning. However, implementation requires a large cultural shift in how specialist training organisations, registrars, and supervisors view the purpose of assessment. If successful, such implementation holds the promise of ensuring timely and constructive feedback and enabling training organisations to provide early remediation to registrars who require further support, and should help ensure timely completion of the training program.

Acknowledgments

The authors would like to acknowledge fellow ACPSEM Fellows and Australian Council for Educational Research researchers for their feedback and support.

Footnotes

Author Contribution: All authors contributed significantly to the manuscript writing and implementation of programmatic assessment approaches to the training programs. All authors read and approved the manuscript.

The authors declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Funding: ACPSEM thanks the Australian Commonwealth Government for their financial support toward the TEAP reforms.

ORCID iD: Kristy C. Osborne https://orcid.org/0000-0002-1808-7453

References

- 1. Australian Medical Council. Standards for Assessment and Accreditation of Specialist Medical Programs and Professional Development Programs by the Australian Medical Council. Australian Medical Council Limited; 2015.

- 2. Perkins A. Reflections on TEAP: training for the future of medical physics. Australasian Physical and Engineering Sciences in Medicine. 2013;36(2):143-145.

- 3. Cormack J. Editorial: the ACPSEM diagnostic imaging TEAP project. Australasian Physical and Engineering Sciences in Medicine. 2011;34:173-178.

- 4. Sadler DR. Making competent judgements of competence. In: Blömeke S, Zlatkin-Troitschanskaia O, Kuhn C, Fege J, eds. Modeling and measuring competencies in higher education: tasks and challenges. Sense Publishers; 2013:13-27.

- 5. van der Vleuten CP, Schuwirth LWT, Driessen EW, Govaerts MJB, Heeneman S. Twelve tips for programmatic assessment. Med Teach. 2015;37(7):641-646.

- 6. van der Vleuten C, Heeneman S, Schuwirth L. Programmatic assessment. In: Dent J, Harden RM, Hunt D, eds. A practical guide for medical teachers. Elsevier Health Sciences; 2017:295-303.

- 7. Pearce J, Reid K, Chiavaroli N, Hyam D. Incorporating aspects of programmatic assessment into examinations: aggregating rich information to inform decision-making. Med Teach. 2021;43(5):567-574.

- 8. Heeneman S, de Jong LH, Dawson LJ, et al. Ottawa 2020 Consensus statement for programmatic assessment – 1. Agreement on the principles. Med Teach. 2021;43(10):1139-1148.

- 9. van der Vleuten CP. Revisiting ‘assessing professional competences; from methods to programmes’. Med Educ. 2016;50(9):885-888.

- 10. Roberts C, Khanna P, Bleasel J, et al. Student perspectives on programmatic assessment in a large medical programme: a critical realist analysis. Med Educ. 2022;56(9):901-914.

- 11. Schut S, Driessen E, van Tartwijk J, van der Vleuten C, Heeneman S. Stakes in the eye of the beholder: an international study of learners’ perceptions within programmatic assessment. Med Educ. 2018;52(6):654-663.

- 12. Schut S, Heeneman S, Bierer B, Driessen E, van Tartwijk J, van der Vleuten C. Between trust and control: teachers assessment conceptualisations and relationships within programmatic assessment. Med Educ. 2020;54(6):528-537.

- 13. van der Vleuten CPM. The assessment of professional competence: developments, research and practical implications. Advances in Health Sciences Education. 1996;1:41-67.

- 14. Australasian College of Physical Scientists and Engineers in Medicine. Radiation oncology medical physics (ROMP) training, education and assessment program (TEAP) Handbook, v1.0. Australasian College of Physical Scientists and Engineers in Medicine; 2022:8-9.

- 15. Australasian College of Physical Scientists and Engineers in Medicine. Diagnostic Imaging Medical Physics (DIMP) Training, Education and Assessment Program (TEAP) Handbook, v1.0. Australasian College of Physical Scientists and Engineers in Medicine; 2023:22-23.

- 16. Kinnear B, Warm EJ, Caretta-Weyer H, et al. Entrustment unpacked: aligning purposes, stakes, and processes to enhance learner assessment. Acad Med. 2021;96(7S):S56-S63.

- 17. Ten Cate O. When I say … entrustability. Med Educ. 2020;54(2):103-104.

- 18. Torre D, Rice NE, Ryan A, et al. Ottawa 2020 Consensus statement for programmatic assessment – 2. Implementation and practice. Med Teach. 2021;43(10):1149-1160.