Keywords: Seed phenotype, Optical sensor, Digital technique, Artificial intelligence, Spectroscopy, Imaging processing

Highlights

- Optical sensors are highly promising for seed phenotype digitization.
- Spectroscopy, digital imaging, and 3D reconstruction are the primarily used digital techniques.
- Optical sensors can detect both visible external and invisible internal phenotypes.
- Matched optical sensors can effectively reduce resource loss in seed phenotype evaluation.
- Future research should focus on phenotype equipment, platform, and data processing algorithms for automatic, integrated, and intelligent evaluation.

Abstract

Background

The breeding of high-quality, high-yield, and disease-resistant varieties is closely related to food security. The investigation of breeding results relies on the evaluation of seed phenotype, which is a key step in the process of breeding. In the global digitalization trend, digital technology based on optical sensors can perform the digitization of seed phenotype in a non-contact, high throughput way, thus significantly improving breeding efficiency.

Aim of review

This paper provides a comprehensive overview of the principles, characteristics, data processing methods, and bottlenecks associated with three types of optical-sensor-based digital techniques: spectroscopy, digital imaging, and three-dimensional (3D) reconstruction. In addition, the applicability and adaptability of these techniques to maize seed phenotype traits, namely external visible phenotype (EVP) and internal invisible phenotype (IIP), are investigated. Furthermore, trends in future equipment, platforms, phenotype data, and processing algorithms are discussed. This review offers conceptual and practical support for seed phenotype digitization based on optical sensors and provides reference and guidance for future research.

Key scientific concepts of review

The digital techniques based on optical sensors can perform non-contact and high-throughput seed phenotype evaluation. Due to the distinct characteristics of optical sensors, matching suitable digital techniques according to seed phenotype traits can greatly reduce resource loss, and promote the efficiency of seed evaluation as well as breeding decision-making. Future research in phenotype equipment and platform, phenotype data, and processing algorithms will make digital techniques better meet the demands of seed phenotype evaluation, and promote automatic, integrated, and intelligent evaluation of seed phenotype, further helping to lessen the gap between digital techniques and seed phenotyping.

Introduction

Quality seed development remains the primary goal of today’s breeding programs since it is considered one of agriculture’s most essential and fundamental components [1]. Over time, breeding has evolved drastically from breeding 1.0 to breeding 4.0. Breeding 1.0 is based on farmers’ experience, which is essential for the subjective selection of seeds; breeding 2.0 places a greater emphasis on field statistics and genetic analysis. Breeding 3.0 (the current state of the art) is concerned with establishing the relationship between genes and crop traits, and breeding 4.0 emphasizes interdisciplinary research (such as life science and information science) and data material [2]. Regardless of this evolution, seed phenotypes have consistently been the most direct expressions of breeding [3].

Seed phenotypes are primarily composed of the original apparent traits such as weight, color, size, shape, and number [4]. In addition, there are physiological and biochemical traits, including protein, moisture, and oil content in seed variety, fungal infection in food safety, and internal structure in seed damage [5]. The evaluation of seed phenotypes (or seed testing) is the main procedure by which breeders obtain accurate seed quality information, and it constantly faces the challenge of becoming more efficient, accurate, and automatic [6]. Numerous external phenotypes are typically evident to the naked eye. Thus, traditional seed testing procedures are mainly based on manual measurement techniques and sensory evaluations of color, shape, and quantitative factors. However, these evaluation criteria are frequently inconsistent, and the procedures require considerable time and labor.

Over the last decades, with breakthroughs in information and communication technologies, digital techniques such as artificial intelligence (AI), machine vision, and big data have developed tremendously [7], especially in agriculture. Digital techniques now span the entire life cycle of crops, effectively assisting automated detection and tracking [8], with the aim of establishing a complete information-processing network and promoting food security and human health [9]. Optical sensors, as one of the representative digital techniques, have been rapidly adopted in seed phenotype analysis owing to their non-contact and high-throughput measurement characteristics [8], [10]. Compared with traditional manual phenotype evaluation, optical-sensor-based techniques can decompose complex compound phenotypes through non-destructive testing and eliminate the subjective deviation introduced by naked-eye inspection [2]. Combined with advanced data processing algorithms, these advantages can reduce seed loss and yield high-quality digital phenotype information. Moreover, the significant improvement in seed phenotype data quality reduces the resource expenditure of critical breeding decisions [11]. It also facilitates rapid mining of the genes that regulate crop traits, providing a basis for improving target traits and accurately predicting phenotypes [12]. Digital techniques based on optical sensors can thus assist in detecting different seed phenotypes, promoting the efficiency of seed evaluation and breeding decision-making.

Because of the diversity of optical sensors and the complexity of seed phenotypes, it is essential to match suitable techniques according to the characteristics of seed phenotype traits. In this review, we first illustrate the principles of optical sensors commonly used in seed phenotype digitization, summarize the data processing methods, and emphasize the bottlenecks of different digital techniques. Secondly, taking maize ear as a typical case, we explore the applicability of relevant digital techniques according to the phenotype characteristics. Finally, challenges and trends are discussed to promote the development of seed phenotype digitization for providing guidance and reference to future research.

Review methodology

A thorough literature search was conducted using electronic databases such as Web of Science, ScienceDirect, and IEEE Xplore, as well as Google Scholar, to find studies reporting on digital techniques for seed phenotyping. Since this review focuses on the development and application of these techniques, the year of publication was not limited.

The search included titles, abstracts, and keywords of articles. The search keywords of the study were divided into three groups. The first group included keywords related to the objects being detected, specifically “seed” and “corn or maize”, corresponding to the research in the third and fourth sections of the review. The second group consisted of digital technique keywords, including “spectroscopy”, “imaging or RGB”, and “three-dimension (3D) reconstruction”. The third group included application object keywords, such as “content”, “disease”, “damage”, “quality”, “viability”, “variety”, “purity”, and “microstructure”. We established multiple combinations of these groups of keywords to complete the comprehensive search of this study. After conducting a general search, research articles were carefully selected based on the following inclusion criteria: (1) relevance to seed phenotype, including traits such as original apparent, exogenous infection, biochemical composition, and physiological structure, (2) detailed data processing methods and evaluation performance, and (3) prioritized selection of newly published literature for similar research. Additionally, the impact factor of journals and the number of citations each paper received up to the studied period were considered.

Seed phenotype digitization techniques and algorithms based on optical sensors

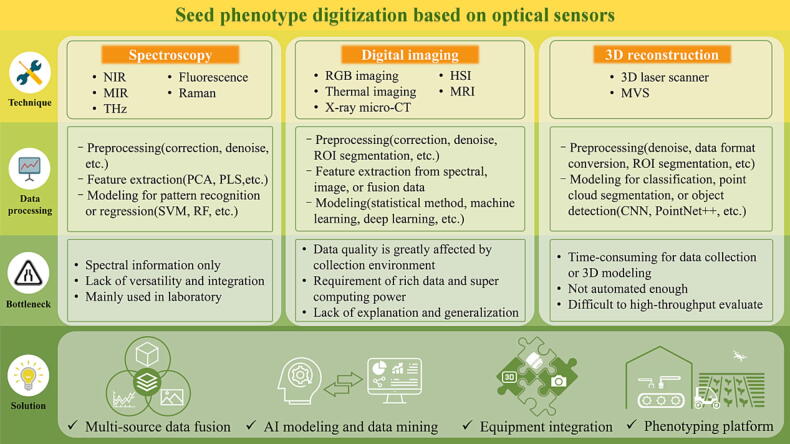

The commonly used optical sensors for seed phenotype include spectroscopy for reflecting chemical components, digital imaging for morphology and component visualization, and 3D reconstruction for restoring seed morphology from a 3D level. These techniques have different advantages in digitizing seed phenotypes according to their characteristics. In this section, we introduce the principles and characteristics of different optical sensors, summarize the common processing pipelines or algorithms of the obtained data, and discuss the current development bottlenecks and existing solutions (Fig. 1).

Fig. 1.

Seed phenotype digitization based on optical sensors. The process of seed phenotype digitization based on optical sensors is to record and obtain the seed phenotype through spectroscopy, digital imaging, 3D reconstruction techniques, and then digitize the seed phenotype through various data processing methods. Various optical sensors have different characteristics and limitations, affecting seed phenotype digitization development. Through multi-source data fusion, AI modeling and data mining, equipment integration, and multi-scale phenotyping platforms, efficient, accurate, and high-throughput evaluation of seed phenotypes can be achieved. Abbreviations: NIR, near-infrared; MIR, mid-infrared; THz, Terahertz; RGB, red–green–blue; HSI, hyperspectral imaging; MRI, magnetic resonance imaging; X-ray micro-CT, X-ray micro-computed tomography; 3D, three-dimensional; MVS, multi-view stereo; PCA, principal component analysis; PLS, partial least squares; SVM, support vector machine; RF, random forest; ROI, region of interest; CNN, convolution neural network; AI, artificial intelligence. (For interpretation of the references to color in this figure legend, the reader is referred to the web version of this article.)

Spectroscopy techniques and data processing algorithms

Three types of energy transfer occur when light interacts with objects: reflection, absorption, and transmission. However, due to the difference in seed apparent morphology, texture, and internal biochemical components, the optical signal attenuates with different intensities [13]. The signals can be collected by optical sensors and visualized into spectral lines with computers. Integrated with chemometric methods, the internal composition, variety, and microstructure of seeds can be determined [14]. A basic spectral system typically comprises an optical platform and a detecting unit [15]. As the system’s central component, different optical sensors can reflect the optical properties of seeds in various regions of the electromagnetic spectrum. Near-infrared (NIR), mid-infrared (MIR), fluorescence, Raman, and Terahertz (THz) spectroscopy are commonly used optical sensors for seed phenotype digitization.

Different spectroscopies offer distinct benefits for detecting specific characteristics. NIR spectroscopy mainly reflects the overtone and combination bands of molecular vibrations, i.e., the information of hydrogen-containing groups such as O–H, N–H, C–O, and C–H in organic compounds [16]. MIR spectroscopy measures the fundamental frequency signal, and its spectral peak information is relatively rich, which places higher demands on data processing and model optimization [17]. In fluorescence spectroscopy, the substance is excited to emit characteristic lines that are related to its internal structure and composition, enabling the substance to be identified rapidly and accurately [18]. Compared with other techniques, Raman spectroscopy is more sensitive to the symmetrical vibration of covalent bonds in nonpolar molecular groups and is not disturbed by water molecules [19]. THz radiation lies between microwave and infrared light in the electromagnetic spectrum; as the fingerprint spectrum of substances, THz spectroscopy can reflect the structure and properties of organic biomolecules both qualitatively and quantitatively. Many molecules, especially organic molecules, show strong absorption and dispersion characteristics in the THz frequency band [20]. These spectroscopy techniques can complement one another and be integrated according to their detection characteristics to obtain precise and comprehensive information. Applying spectroscopy techniques to the digitization of seed phenotype offers convenience, speed, precision, and environmental friendliness. Spectroscopy techniques have therefore become a research hotspot that attracts a growing number of scholars. Table 1 summarizes the applications of spectroscopy techniques for distinct phenotypes of diverse seeds.

Table 1.

Application of spectroscopy techniques for seed phenotype digitization. Abbreviations: DA, discriminant analysis; DBN, deep belief network; iPLS, interval partial least squares; LDA, linear discriminant analysis; LOD, limit of detection; MPLS, modified partial least squares; MPLSR, modified partial least squares regression; NIR, near-infrared; PCA-SVM, principal component analysis-support vector machine; PLS-DA, partial least squares discriminant analysis; PLSR, partial least squares regression; SKNIR, single-kernel near-infrared.

| Optical sensor | Pros and cons | Seed | Application | Method and performance | Ref |
|---|---|---|---|---|---|
| NIR | Pros: affordable price; high penetration depth. Cons: poor sensitivity for low concentrations; broad and overlapping absorption bands | Soybean | Protein and oil content determination | SAS, NIR analyzer | [21] |
| NIR | | Soybean | Amino and fatty acid determination | ISI program, PLSR, R² = 0.06 ∼ 0.85 | [22] |
| NIR | | Sunflower | Oleic acid determination | Regression analysis, R² = 0.983 | [23] |
| NIR | | Brassica napus | Tocopherol content determination | MPLS, R² = 0.74 | [24] |
| NIR | | Wheat | Fusarium-damaged kernels and deoxynivalenol identification | SAS, SKNIR, R = 0.72 | [25] |
| NIR | | Sesame | Origin discrimination | IBM SPSS Statistics, DA, accuracy = 89.4 % | [26] |
| NIR | | Maize | Provitamin A carotenoids content determination | WinISI III Software, Bayesian and MPLSR, R² = 0.22 ∼ 0.75 | [27] |
| NIR | | Maize | Viability evaluation | Matlab, PLS-DA, accuracy > 98 % | [28] |
| NIR | | Forage grass | Seed germination and vigor evaluation | R software, PLS-DA, accuracy = 61 ∼ 82 % | [29] |
| MIR | Pros: high specificity; few overlaps. Cons: low sensitivity for quantitative analysis; high requirements for analysis models | Soybean | Isoflavones and oligosaccharides determination | Matlab, PLSR, R² = 0.72, 0.80 | [30] |
| MIR | | Pea | Total protein, starch, fiber, phytic acid, and carotenoids determination | Orange, PLSR, R > 0.71 | [17] |
| MIR | | Brown rice | Aflatoxin contamination identification | Matlab, DA, accuracy = 90.6 % | [31] |
| MIR | | Peanut | Fungal contamination levels identification | TQ Analyst, PLSR, R² = 0.9157 | [32] |
| Fluorescence | Pros: high sensitivity; high specificity. Cons: cluttered and weak signal; background interference | Pea | Mineral nutrient (K, Ca, Mn, Cu, Zn, and Se) analysis | PyMca, R > 0.85 | [33] |
| Fluorescence | | Maize | Aflatoxin contamination identification | IBM SPSS Statistics, LDA, accuracy = 100 % | [34] |
| Fluorescence | | Rice | Seed germination and vigor evaluation | DBN, R = 0.9792 | [35] |
| Fluorescence | | Brassica oleracea | Seed maturity and quality evaluation | – | [36] |
| Raman | Pros: high specificity; good signal-to-noise ratio; hardly disturbed by water molecules. Cons: expensive experiment materials; unsuitable for fluorescent samples | Soybean | Crude protein and oil content determination | Matlab, PLSR, R² = 0.916, 0.872 | [37] |
| Raman | | Rapeseed | Iodine value determination | OPUS IDENT, R = 0.9904 | [38] |
| Raman | | Kidney beans | Deoxynivalenol identification | Gaussian 03 package, LOD = 10⁻⁶ M | [39] |
| Raman | | Maize | Aflatoxin contamination identification | SAS, PLSR, R² = 0.941 ∼ 0.957 | [40] |
| Raman | | Maize | Transgenic maize discrimination | Matlab, LDA, accuracy = 87.5 % | [41] |
| Raman | | Maize | Viability evaluation | Matlab, PLS-DA, accuracy > 95 % | [42] |
| THz | Pros: fingerprint spectrum; high sensitivity. Cons: mostly used for solution detection; hardly nondestructive detection | Maize | Moisture content determination | PLSR, R = 0.9969 | [43] |
| THz | | Maize | Viability evaluation | – | [44] |
| THz | | Sugar beet | Seed quality identification | Python, accuracy = 87 % | [45] |
| THz | | Wheat | Seed quality identification | PCA-SVM, accuracy = 95 % | [46] |
| THz | | Wheat | Variety discrimination | Matlab, iPLS, R = 0.992 | [47] |
| THz | | Rice | Transgenic rice discrimination | TQ Analyst, DA, accuracy = 89.4 % | [48] |

Data processing methods such as preprocessing, feature extraction, and modeling are necessary after obtaining spectral data to perform qualitative or quantitative chemometric analysis of the measured data and extract sufficient information from it [49]. Multiple sampling is often needed for spectral data to improve the signal-to-noise ratio (SNR). Then, preprocessing methods (such as normalization, smoothing algorithm, and wavelet transform) are applied for noise reduction [24]. Due to the large volume of spectral data, in order to further eliminate irrelevant information and noise, feature extraction methods (such as principal component analysis, partial least squares, and linear discriminant analysis) are used to extract essential information to prepare for subsequent modeling [29]. Finally, depending on the phenotype requirements, the models are built based on the extracted features employing pattern recognition (such as K-means clustering, Fisher discriminant, and support vector machine) or regression (such as multiple linear regression, logistic regression, random forest, and artificial neural network) [31], [37].
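The preprocessing, feature extraction, and modeling chain described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the method of any cited study: the spectra are synthetic, the function names are hypothetical, and principal component regression (PCA followed by least squares) is used as a simple stand-in for the PLS-type models listed in Table 1.

```python
import numpy as np

def make_synthetic_spectra(n_samples=60, n_bands=200, seed=0):
    """Hypothetical data: a fixed baseline plus one absorption peak whose
    height scales with a made-up trait value (e.g. moisture in %)."""
    rng = np.random.default_rng(seed)
    x = np.linspace(0.0, 1.0, n_bands)
    baseline = 1.0 + 0.5 * x
    peak = np.exp(-((x - 0.6) / 0.05) ** 2)
    trait = rng.uniform(5.0, 15.0, n_samples)
    noise = rng.normal(0.0, 0.005, (n_samples, n_bands))
    return baseline + 0.02 * trait[:, None] * peak + noise, trait

def moving_average(spectra, window=5):
    """Preprocessing: smooth every spectrum (row) with a moving average."""
    kernel = np.ones(window) / window
    return np.apply_along_axis(
        lambda s: np.convolve(s, kernel, mode="same"), 1, spectra)

def snv(spectra):
    """Preprocessing: standard normal variate, i.e. center and scale each row."""
    mean = spectra.mean(axis=1, keepdims=True)
    std = spectra.std(axis=1, keepdims=True)
    return (spectra - mean) / std

def pca_fit(X, n_components):
    """Feature extraction: PCA via SVD; returns band means and loadings."""
    mean = X.mean(axis=0)
    _, _, vt = np.linalg.svd(X - mean, full_matrices=False)
    return mean, vt[:n_components]

def run_pipeline(spectra, trait, n_train=40, n_components=3):
    """Train on the first n_train samples, return R^2 on the remaining ones."""
    X = snv(moving_average(spectra))
    mean, loadings = pca_fit(X[:n_train], n_components)
    scores = (X - mean) @ loadings.T
    # Modeling: ordinary least squares on the PCA scores (with intercept).
    A = np.column_stack([np.ones(len(scores)), scores])
    coef, *_ = np.linalg.lstsq(A[:n_train], trait[:n_train], rcond=None)
    pred = A[n_train:] @ coef
    y_test = trait[n_train:]
    ss_res = np.sum((y_test - pred) ** 2)
    ss_tot = np.sum((y_test - y_test.mean()) ** 2)
    return 1.0 - ss_res / ss_tot

if __name__ == "__main__":
    spectra, trait = make_synthetic_spectra()
    print("hold-out R^2:", run_pipeline(spectra, trait))
```

Fitting the PCA loadings and regression coefficients on the training samples only, and reporting performance on held-out samples, keeps the reported R² from being inflated by information leaking from the test set.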

For breeding, seed phenotype evaluation usually consists of many steps, such as weighing, size measurement, purity, quality detection, and biochemical component analysis [50]. Spectroscopy techniques are generally used to obtain the spectral information of seeds, detect biochemical components, and evaluate the quality of samples. Many spectral instruments are often utilized to detect a single or specific trait and a complete assessment always requires adding the samples to different equipment, which may result in complicated evaluation steps and unavoidable seed waste. Therefore, it is necessary to improve the versatility or integration of spectral instruments. Moreover, environmental conditions have a great impact on the detection results, and some instruments are employed in laboratory detection, which also limits the potential of spectroscopy techniques for field detection.

Digital imaging techniques and data processing algorithms

The fast development of optical sensors has led to the emergence and evolution of digital imaging techniques. To examine the apparent morphology of seeds, red–green–blue (RGB) imaging is frequently substituted for the naked eye. Typically, spectroscopy imaging techniques, including hyperspectral imaging (HSI), thermal imaging, X-ray micro-computed tomography (X-ray micro-CT), and magnetic resonance imaging (MRI), are used to observe the internal composition and structure of seeds. Table 2 lists the applications of digital imaging techniques on various seed phenotypes.

Table 2.

Application of digital imaging techniques for seed phenotype digitization. Abbreviations: BPNN, back propagation neural network; CNN, convolutional neural networks; FNN, feed-forward neural network; ICA-ANN, imperialist competitive algorithm and artificial neural networks; JMBoF, jointly multi-modal bag-of-feature; KNN, k-nearest neighbor; LS-SVM, least squares–support vector machines; MLP, multilayer perceptron; QDA, quadratic discriminant analysis; RBGM, row-by-row gradient based method; SVM, support vector machine.

| Optical sensor | Pros and cons | Seed | Application | Method and performance | Ref |
|---|---|---|---|---|---|
| RGB imaging | Pros: low equipment cost; wide application. Cons: only apparent analysis; rely on image processing algorithms | Maize | Variety discrimination | Matlab, neuro-fuzzy, accuracy = 94 % | [51] |
| RGB imaging | | Maize | Kernel counting | Multi-threshold segmentation and RBGM, accuracy = 96.4 % | [52] |
| RGB imaging | | Maize | Size measurement | – | [53] |
| RGB imaging | | Maize | Peeling damage identification | Matlab, accuracy = 95.35 % | [54] |
| RGB imaging | | Maize | Haploid seed sorting | Python, CNN, accuracy = 96.8 % | [55] |
| RGB imaging | | Soybean | Quality grading | SVM, accuracy > 97 % | [56] |
| RGB imaging | | Soybean | Appearance quality discrimination | JMBoF and SVM, accuracy = 82.1 % | [57] |
| RGB imaging | | Soybean | Seed germination and vigor evaluation | Matlab, accuracy = 92.6 ∼ 98.8 % | [58] |
| RGB imaging | | Rapeseed | Kernel counting | Visual Studio, accuracy = 89.33 % | [59] |
| RGB imaging | | Rapeseed | Variety discrimination | Python, SVM, accuracy = 99.24 % | [60] |
| RGB imaging | | Rice | Quality grading | LabVIEW, image processing algorithm | [61] |
| RGB imaging | | Rice | Variety discrimination | Visual C++, FNN, accuracy = 86.65 % | [62] |
| RGB imaging | | Wheat | Damage identification (mold, black tip, and sprout) | SAS, LDA and KNN, accuracy = 91 ∼ 94 % | [63] |
| RGB imaging | | Wheat | Purity discrimination | ICA-ANN, accuracy = 96.25 % | [64] |
| RGB imaging | | Pepper | Variety discrimination | Matlab, MLP, accuracy = 84.94 % | [65] |
| RGB imaging | | Areca nuts | Disease identification | Visual C++, BPNN, accuracy = 90.9 % | [66] |
| HSI | Pros: rich spatial and spectral information; internal and external inspection. Cons: high equipment cost; complex data processing | Soybean | Crude protein and fat content determination | PLSR, R > 0.9 | [67] |
| HSI | | Peanut | Fungal contamination identification | Matlab, SVM, accuracy > 94 % | [68] |
| HSI | | Peanut | Seed quality identification | PCA and watershed algorithm, accuracy = 98.73 % | [69] |
| HSI | | Rice | Origin discrimination | Matlab and Unscrambler, SVM, accuracy = 91.67 % | [70] |
| HSI | | Canola | Fungal contamination identification | Matlab and SAS, quadratic and Mahalanobis classifiers, accuracy = 91.7 ∼ 100 % | [71] |
| HSI | | Maize | Variety discrimination | LS-SVM, accuracy > 90 % | [72] |
| HSI | | Maize | Seed vigor and aging degree evaluation | SVM, accuracy = 61 ∼ 100 % | [73] |
| HSI | | Wheat | Viability evaluation | Matlab, PLS-DA, accuracy > 89.5 % | [74] |
| HSI | | Cucumber | Viability evaluation | Matlab and Unscrambler, PLS-DA, accuracy > 99 % | [75] |
| HSI | | Grape | Seed maturity evaluation | Unscrambler, PLSR, R > 0.95 | [76] |
| Thermal imaging | Pros: non-contact; no lighting required. Cons: poor spatial resolution and repeatability; highly affected by environmental factors | Wheat | Fungal contamination identification | SAS, LDA and QDA, accuracy > 96 % | [77] |
| Thermal imaging | | Wheat | Cryptolestes ferrugineus infestation identification | Matlab and SAS, QDA and LDA, accuracy > 80 % | [78] |
| Thermal imaging | | Wheat | Variety discrimination | SAS, QDA, accuracy = 95 % | [79] |
| X-ray micro-CT | Pros: 3D microstructure; sensitive. Cons: time consuming for imaging; negative impact on seed germination | Maize | Internal microstructure analysis | Scan IP, region growing, R > 0.8 | [80] |
| X-ray micro-CT | | Maize | Hardness classification | VGStudio MAX and Statistica, PCA, accuracy > 75 % | [81] |
| X-ray micro-CT | | Maize | Mechanical damage detection | NRecon software | [82] |
| X-ray micro-CT | | Kidney bean | Internal crack detection | Matlab, histogram thresholding and morphological operations | [83] |
| X-ray micro-CT | | Muskmelon | Seed germination and vigor evaluation | LDA, accuracy = 98.9 % | [84] |
| X-ray micro-CT | | Tomato | Seed germination and vigor evaluation | Visual Studio | [85] |
| MRI | Pros: high resolution; ensure germination vigor. Cons: long imaging time; high cost | Wheat | Sugar allocation determination | Matlab | [86] |
| MRI | | Soybean | Water distribution observation | Stanford Graphics and PV-Wave programs | [87] |
| MRI | | Jatropha curcas | Fungal contamination identification | – | [88] |
| MRI | | Rice | Seed germination and vigor evaluation | IBM SPSS Statistics | [89] |

To extract external seed phenotypes by machine vision instead of the naked eye, a digital camera first acquires an RGB image, and the required phenotype traits are then mined from that image. An RGB image records the apparent information of objects, such as color, morphology, and texture [90]. Over the past decades, researchers have invested significant effort in RGB image processing algorithms to extract as much information as possible, driving the rapid development of machine vision. Based on the features of different classes, classification algorithms can achieve seed variety recognition [62], obvious damage detection [54], and seed purity analysis [51]. Based on the discontinuity and similarity between pixels, segmentation algorithms can perform rapid seed counting [52], quality grading [56], and appearance size measurement [53]. Combined with object recognition and localization, detection algorithms can accurately identify how each seed differs, along with its position and shape [50], [55], which provides decision support for seed sorting and promotes the automation of the whole seed phenotype evaluation process. On this basis, machine vision based on RGB imaging has been used to extract external seed information such as size, area, and quantity with high throughput and automation, which has significantly improved the efficiency of seed testing.
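As a concrete illustration of segmentation-based counting, the sketch below thresholds a grayscale image and labels connected bright regions, reporting the number of seeds and their pixel areas. It is a simplified example under stated assumptions: the image is synthetic (bright disks on a dark background), seeds do not touch, and the threshold and `min_area` values are hypothetical. It does not reproduce the multi-threshold or RBGM methods cited in Table 2.

```python
import numpy as np
from collections import deque

def make_synthetic_image(shape=(60, 80), radius=6,
                         centers=((15, 15), (15, 50), (40, 30), (45, 65))):
    """Hypothetical test image: bright disks ("seeds") on a dark background,
    plus one isolated bright pixel that imitates sensor noise."""
    rows, cols = np.indices(shape)
    image = np.full(shape, 0.1)
    for r0, c0 in centers:
        image[(rows - r0) ** 2 + (cols - c0) ** 2 <= radius ** 2] = 0.9
    image[5, 70] = 0.9  # single-pixel speck
    return image

def label_regions(mask):
    """Label 4-connected foreground regions with a breadth-first flood fill."""
    labels = np.zeros(mask.shape, dtype=int)
    current = 0
    for start in zip(*np.nonzero(mask)):
        if labels[start]:
            continue  # pixel already belongs to a labeled region
        current += 1
        labels[start] = current
        queue = deque([start])
        while queue:
            r, c = queue.popleft()
            for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                nr, nc = r + dr, c + dc
                inside = 0 <= nr < mask.shape[0] and 0 <= nc < mask.shape[1]
                if inside and mask[nr, nc] and not labels[nr, nc]:
                    labels[nr, nc] = current
                    queue.append((nr, nc))
    return labels, current

def count_seeds(image, threshold=0.5, min_area=20):
    """Segment by a global threshold, then drop regions smaller than min_area."""
    mask = image > threshold
    labels, _ = label_regions(mask)
    areas = np.bincount(labels.ravel())[1:]  # index 0 is the background
    kept = areas[areas >= min_area]
    return len(kept), kept

if __name__ == "__main__":
    count, areas = count_seeds(make_synthetic_image())
    print("seeds:", count, "areas (pixels):", areas)
```

The area filter is what separates seeds from small noise regions here; touching or overlapping seeds would need an additional splitting step such as a watershed transform.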

HSI is a high-resolution spectroscopy imaging technique that provides data covering a spectral range from 350 to 2,500 nm, containing dozens to hundreds of bands [91]. Compared with spectroscopy and RGB images, HSI technique can fuse spatial information and spectral information simultaneously and create 3D “hypercube” datasets composed of two spatial dimensions and a single spectral dimension [10]. Therefore, HSI data is generally analyzed using a combination of image processing and spectral analysis techniques. A typical HSI system consists of a hyperspectral optical sensor, a light source, and a control unit for measuring and analyzing images [91]. After the system obtains the image of the object in hundreds of wavelengths, the hyperspectral image is processed by several procedures, including calibration, noise reduction, region of interest (ROI) selection, segmentation, and spectral or spatial feature extraction [92]. In addition, the images from some spectra can reflect information that is difficult to observe with RGB images or the naked eye, especially in the infrared range [93]. HSI has excellent potential and performance in seed grading, vigor evaluation, disease identification, composition discrimination, and quality-related detection.
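The hypercube layout and the first processing steps (calibration, ROI selection, spectral feature extraction) can be illustrated as follows. This is a sketch under assumptions that are not taken from the source: the cube is synthetic, the array layout is (rows, columns, bands), and calibration uses the common white and dark reference formula `(raw - dark) / (white - dark)`. All function names are hypothetical.

```python
import numpy as np

def calibrate_reflectance(raw, white, dark):
    """Band-wise calibration with white and dark reference spectra."""
    return (raw - dark) / (white - dark)

def select_roi(cube, band, threshold):
    """ROI selection: threshold the image of one band to separate seed pixels."""
    return cube[:, :, band] > threshold

def mean_roi_spectrum(cube, roi_mask):
    """Spectral feature: average spectrum over all pixels inside the ROI."""
    return cube[roi_mask].mean(axis=0)

def make_synthetic_cube(rows=20, cols=30, n_bands=50):
    """Hypothetical scene: a 10 x 10 pixel "seed" with an absorption dip,
    on a flat background, recorded under uneven illumination."""
    bands = np.arange(n_bands)
    seed_spectrum = 0.6 - 0.2 * np.exp(-((bands - 30) / 5.0) ** 2)
    reflectance = np.full((rows, cols, n_bands), 0.2)
    reflectance[5:15, 10:20, :] = seed_spectrum
    dark = np.full(n_bands, 0.05)                    # sensor offset per band
    white = dark + np.linspace(0.8, 1.0, n_bands)    # lamp response per band
    raw = reflectance * (white - dark) + dark
    return raw, white, dark, seed_spectrum

if __name__ == "__main__":
    raw, white, dark, _ = make_synthetic_cube()
    cube = calibrate_reflectance(raw, white, dark)   # shape: rows x cols x bands
    roi = select_roi(cube, band=0, threshold=0.4)
    print("ROI pixels:", int(roi.sum()))
    print("mean ROI spectrum (first 5 bands):", mean_roi_spectrum(cube, roi)[:5])
```

Indexing the cube with a two-dimensional boolean mask returns one spectrum per selected pixel, which is why the spatial and spectral analyses can be combined in a single step.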

All objects emit infrared radiation, and differences in the internal structure or composition of seeds lead to changes in heat transfer. The thermal imaging technique records the infrared radiation emitted by seeds using infrared detectors, without the need for light sources [94]. Therefore, seed variety and quality can be evaluated intuitively, quickly, and non-destructively from a thermal image. X-ray micro-CT and MRI techniques are gradually being explored for precise evaluation of seed phenotypes, such as distinguishing seed quality and variety based on differences in internal structure and composition. In X-ray micro-CT, a highly sensitive sensor measures the signal that results from differences in X-ray absorption and transmittance within the object and transmits it to a computer for data processing. The resulting cross-sectional or 3D images directly reflect internal defects and changes in density and structure [80]. Nuclear magnetic resonance (NMR) is often used for the non-destructive detection of water content in objects. By detecting the resonance signal of hydrogen nuclei in objects and forming images through computer processing, MRI can show the change and distribution of water content in seeds based on differences in signal intensity [95].

Traditional image processing pipeline involves steps such as image preprocessing, image segmentation, image enhancement, and feature mining, requiring professional knowledge and complex parameter adjustment processes [96]. With the increasing growth of data information, these steps are time-consuming, especially for the HSI data. Usually, the processing flow of the HSI data includes data preprocessing (correction, noise reduction, ROI segmentation, etc.), feature extraction (feature of spectral scale, image scale, or fusion data), and model establishment for tasks (statistical method, machine learning, deep learning, etc.) [93], [97]. Commonly used analysis software includes ImageJ (https://imagej.nih.gov/ij/) and Fiji (https://fiji.sc/) for image processing, and ENVI (https://www.nv5geospatialsoftware.com/Products/ENVI) for spectral imagery processing. With the improvement of computer performance, researchers focus on the computer’s understanding and learning of data, using computing power to extract effective information and train a robust model with better performance. Deep learning is popularly used to automatically extract image features, making the computer more intelligent [98]. Before model building, the preprocessing of standardized data is necessary. As deep learning has high requirements for data, data augmentation methods are often used to avoid overfitting [99]. Subsequently, the network architecture is defined or selected according to the target task. Then, researchers train the model by setting up the hyperparameters, including learning rate, optimizer, training epoch, etc., to obtain optimal results. Apart from model training, deploying the model into practical applications to reach a wide range of users is also involved. Many researchers have paid attention to the practical application of deep learning and put forward a series of lightweight networks designed for mobile devices, such as MobileNet [100], [101], [102] and ShuffleNet [103], [104]. 
These lightweight networks reduce model size while largely retaining model performance, which makes them portable to mobile terminals and further accelerates the application of deep learning models and the digital extraction of field phenotypes.
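The data augmentation step mentioned above can be sketched without any deep learning framework. The example below is a minimal illustration, assuming images are stored as height x width x channel NumPy arrays; the function names are hypothetical, and only label-preserving geometric transforms (flips and right-angle rotations) are shown.

```python
import numpy as np

def augment(image, rng):
    """Return a randomly flipped and rotated copy of an H x W x C image."""
    if rng.random() < 0.5:
        image = np.flip(image, axis=1)   # horizontal flip
    if rng.random() < 0.5:
        image = np.flip(image, axis=0)   # vertical flip
    height, width = image.shape[:2]
    if height == width:
        k = int(rng.integers(0, 4))      # 0, 90, 180, or 270 degrees
    else:
        k = 2 * int(rng.integers(0, 2))  # keep the shape: 0 or 180 degrees
    return np.rot90(image, k=k, axes=(0, 1)).copy()

def augment_batch(images, copies, seed=0):
    """Create `copies` augmented variants of every image in the list."""
    rng = np.random.default_rng(seed)
    return [augment(img, rng) for img in images for _ in range(copies)]

if __name__ == "__main__":
    seed_image = np.arange(8 * 8 * 3, dtype=float).reshape(8, 8, 3)
    batch = augment_batch([seed_image], copies=4)
    print(len(batch), batch[0].shape)
```

Flips and rotations suit seed images because seed orientation on the imaging surface usually carries no label information; color or brightness jitter would be a further option when lighting varies.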

The application of digital imaging techniques to seed phenotype digitization can be discussed in two parts: hardware and models. As the cost of RGB cameras has fallen and sensors have improved, acquiring high-quality RGB images has become more convenient, making RGB imaging a widely used method for acquiring seed traits. HSI cameras, on the other hand, are costly and generally have lower image quality than RGB cameras. In addition, images collected by HSI cameras often need complex preprocessing steps, which require suitable computing support. Therefore, improving the efficiency of HSI cameras for obtaining seed phenotypes in the field is worth further exploration [105], for example by integrating small devices and developing end-to-end models. Thermal infrared images also have low resolution and are easily disturbed by ambient temperature. A thermal infrared camera can only detect defects or abnormal states that cause noticeable temperature differences, which makes it difficult to detect damage on a single tiny seed. As for X-ray micro-CT and MRI instruments, their application to seed phenotyping is still mainly exploratory because of high cost and complex operation. Even though deep learning has achieved significant advances in image processing, a model’s excellent performance depends on rich datasets and powerful computing capabilities. Its application in agriculture therefore faces concerns about high hardware cost and the difficulty of online detection in the field [106]. In addition, the results of deep learning models often lack interpretability, which makes it difficult to identify the underlying cause of a problem.

3D reconstruction techniques and data processing algorithms

The 3D reconstruction technique is used to replicate 3D structures of real objects in computers and is gaining increasing attention [107]. The principles of 3D reconstruction are divided into active vision and passive vision. The active vision method transmits energy sources such as laser and electromagnetic waves to the target object and obtains the depth information of the object through the returned signal, which can be represented by the time-of-flight (TOF) method [108] and structured light method [109]. The passive vision approach collects the multi-directional projection image of the target object using cameras and then reconstructs the object’s 3D information by image measurement and the correlation of feature information, which can be represented by multi-view stereo (MVS) [110]. Based on the above methods and theories, researchers have developed a variety of 3D reconstruction instruments and platforms. Among the four main output and presentation forms (i.e., depth image, point cloud, mesh, and volumetric grid) of 3D models, the point cloud is the preferred representation for extracting model structure parameters from 3D data [111]. In the field of agriculture, 3D laser scanners, MVS, and TOF cameras are commonly employed to obtain 3D point cloud data of plants, extract the plant skeleton, and achieve morphological feature measurement (e.g., stem major axis, stem minor axis, stem height, leaf length, and leaf angle) [112], [113], [114], [115].

The 3D laser scanner adopts an active light source and can quickly obtain high-precision, high-resolution point cloud data without being limited by the lighting environment [116]. A 3D laser scanner usually comes with a matching platform that supports real-time construction and viewing of the model. Calibration and repeated scanning are necessary to obtain high-precision point cloud data. The MVS method directly acquires projected images of an object from multiple camera views and then reconstructs the model through several procedures, including camera calibration, feature extraction, and feature matching [117]. Some open-source platforms, such as COLMAP [118], already integrate these steps and support automatic 3D reconstruction. The MVS method has the advantages of low cost, simple image acquisition, and high point cloud precision. It should be noted that MVS depends heavily on object features and is unsuitable for smooth or textureless objects. A TOF camera measures the distance to an object from the round-trip travel time of light by continuously sending laser pulses to the object [119]. This method is highly resistant to interference. Moreover, it is not affected by the object’s surface characteristics and can calculate the depth information of the object in real time.
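The TOF principle mentioned above reduces to a single relation: the distance equals the speed of light multiplied by half the round-trip time. The sketch below is only an illustration of that relation; the function names and the example distances are assumptions, and real TOF cameras add modulation, calibration, and noise handling that are not shown.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # exact by definition of the metre


def tof_distance_m(round_trip_time_s):
    """Distance to the target from the round-trip travel time of a light pulse."""
    if round_trip_time_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0


def round_trip_time_s(distance_m):
    """Inverse relation: time for light to reach the target and return."""
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_PER_S


# A target 0.30 m from the camera returns the pulse after roughly 2 ns.
t = round_trip_time_s(0.30)
print(f"round trip for 0.30 m: {t:.3e} s")
print(f"recovered distance:    {tof_distance_m(t):.3f} m")

# Resolving a 1 mm depth difference corresponds to a timing difference
# of only a few picoseconds.
print(f"round trip for 1 mm:   {round_trip_time_s(0.001):.3e} s")
```

The last line shows why millimetre-scale depth detail is demanding for time-based ranging: the timing differences involved are on the order of picoseconds.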

Because some seeds, such as rapeseed, rice, and Arabidopsis, are very small, 3D reconstruction techniques designed for large scenes lack the resolution needed to depict seed details [120]. As indicated in Table 3, current research on 3D seed reconstruction focuses mainly on the 3D laser scanner and MVS [121], [122], [123], [124]. Compared with an image, a 3D model offers a better understanding of the real object [111] and makes it more convenient to obtain the object’s size, volume, surface area, and other external properties. In addition, 3D models can be used to build 3D seed phenotype databases, providing more references for breeding.

Table 3.

Application of 3D reconstruction techniques for seed phenotype digitization.

| Optical sensor | Pros and cons | Seed | Application | Method and performance | Ref |
|---|---|---|---|---|---|
| 3D laser scanner | Pros: high precision and high resolution; not limited by lighting environment. Cons: cumbersome to operate; time-consuming for data collection | Rice | 3D geometric characteristic modeling | Geomagic Studio | [122] |
| | | Rice | Surface shape feature measurement | Geomagic Studio, accuracy > 98 % | [125] |
| | | Maize | 3D geometric characteristic modeling | – | [126] |
| | | Legume | 3D modeling and trait measurement | Visual Studio | [121] |
| | | Grape | Variety discrimination | Cluster analysis and PCA | [123] |
| MVS | Pros: convenient image acquisition operation; high color reproduction. Cons: time-consuming for calculation; not suitable for texture-lacked objects | Maize | 3D geometric characteristic modeling | Visual Studio, Matlab and Python, relative error = 0.1 % | [120] |
| | | Arabidopsis, rapeseed, barley | 3D modeling and trait measurement | Matlab, R² = 0.492 ∼ 0.994 | [124] |

The processing of 3D data should take the types and properties of the data into account. For example, a point cloud can contain much information about the object, including the 3D coordinates of each point, the color and normal vector information obtained with photogrammetry, and the laser reflection intensity obtained with laser measurement. Noise and outliers are unavoidable in data acquisition and modeling; therefore, noise must be removed from the raw 3D data before analysis [127], [128]. 3D data processing software such as CloudCompare (https://www.cloudcompare.org/) and MeshLab (http://www.meshlab.net/) is commonly employed to perform essential processing steps, including noise removal, point cloud segmentation, and apparent information extraction. These steps play a crucial role in enhancing the accuracy and comprehensiveness of 3D data analysis. Driven by the pursuit of evaluation efficiency and automation, the development of deep learning has also promoted research on point cloud processing algorithms. The goals of processing point cloud data are similar to those of 2D images, including 3D shape classification, point cloud segmentation, and 3D object detection [111]. Given the unstructured and unordered nature of the 3D point cloud, indirect and direct processing methods have been developed for extracting information. The indirect method first converts the point cloud data into a structured voxel grid [129] or multi-view 2D images [130]. The converted data are then fed into a relatively standard and mature convolutional neural network (CNN). The indirect method works around the inability of standard CNNs to process raw point clouds, reduces the dimensionality of the data, and can achieve greater accuracy at a lower computational cost. The direct method retains the complete geometric information by inputting the point cloud directly into the network. 
Representative frameworks include PointNet++ [131] and DCCNN [132], which can fully learn the characteristics of each point and minimize the loss of spatial information.
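The conversion step of the indirect method can be sketched as a simple voxelization. This is a minimal illustration under stated assumptions: the synthetic ellipsoid "seed", its semi-axes, the grid size, and the function name `voxelize` are all hypothetical, and published voxel-based networks use their own conversion details.

```python
import numpy as np


def voxelize(points, grid_size=16):
    """Convert an (N, 3) point cloud into a binary occupancy grid.

    The cloud is scaled isotropically so that its longest side spans the grid,
    which preserves the aspect ratio of the object.
    """
    points = np.asarray(points, dtype=float)
    mins = points.min(axis=0)
    extent = float((points.max(axis=0) - mins).max())
    if extent == 0.0:          # degenerate cloud: all points identical
        extent = 1.0
    idx = np.floor((points - mins) / extent * (grid_size - 1)).astype(int)
    grid = np.zeros((grid_size, grid_size, grid_size), dtype=bool)
    grid[idx[:, 0], idx[:, 1], idx[:, 2]] = True
    return grid


# Synthetic "seed": 5000 points on an ellipsoid surface (semi-axes are made up).
rng = np.random.default_rng(0)
directions = rng.normal(size=(5000, 3))
directions /= np.linalg.norm(directions, axis=1, keepdims=True)
points = directions * np.array([4.0, 2.5, 2.0])

grid = voxelize(points, grid_size=16)
print("grid shape:", grid.shape)
print("points:", len(points), "occupied voxels:", int(grid.sum()))
```

Many points fall into the same voxel, so the grid holds fewer occupied cells than the cloud has points. This is a small-scale view of the information loss that the conversion introduces, and a finer grid reduces it at the price of memory and computation.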

Compared with the digital imaging technique, 3D reconstruction can provide a more accurate representation of the seed phenotype, but the small size of seeds may demand high-resolution 3D reconstruction instruments, which increases the cost. When a point cloud is collected, repeated scanning or multi-view data acquisition is often needed to ensure high-quality reproduction of the object in 3D. Such a delicate collection procedure makes it challenging to evaluate the phenotypes of a large number of seeds, so the technique is currently more suitable for single-seed testing. As for 3D data processing algorithms, both indirect and direct methods have disadvantages. The indirect method cannot avoid losing crucial information in the conversion process, which may reduce the model’s performance. The direct method has obvious limitations, such as complex network models and heavy computation, which need to be continuously optimized. In addition, full automation from 3D modeling of seeds to phenotype extraction has not yet been achieved, which further hinders the application of the 3D reconstruction technique in seed phenotype digitization [107]. In recent years, 3D reconstruction research for seed phenotyping in agriculture has advanced, but additional effort is needed to develop equipment and algorithms with adequate performance and cost-effectiveness for practical applications.

Generally, digitizing seed phenotypes with optical sensor-based techniques involves two main parts: capturing and recording phenotypes through optical sensors, and analyzing and mining the phenotypes using relevant algorithms. Therefore, improving the efficiency of phenotype acquisition and the accuracy of analysis is the primary research objective of seed phenotype digitization. To date, many studies have used multi-source data fusion to obtain diverse phenotype data [133] and have enhanced seed phenotype mining by combining state-of-the-art algorithms [55], [134]. Moreover, sensors and algorithms have been integrated into equipment to achieve automated seed phenotype acquisition [124]. Phenotyping platforms have been adopted to perform multi-scale and high-throughput seed phenotype evaluation [135], [136]. These studies provide valuable solutions for overcoming the bottlenecks in seed phenotype digitization and offer more precise references for breeding decision-making.

Seed phenotype digitization – A case of maize ear

As one of the three major food crops in the world, maize (Zea mays L.) is an essential source of food and industrial raw materials. The evaluation of maize ear phenotype plays a vital role in maize breeding and yield determination, leading to numerous studies. In this section, the applications of digital techniques based on optical sensors in seed phenotype are summarized and discussed according to the studies on maize ear and kernel, aiming to provide a reference for the overall development of seed phenotype digitization.

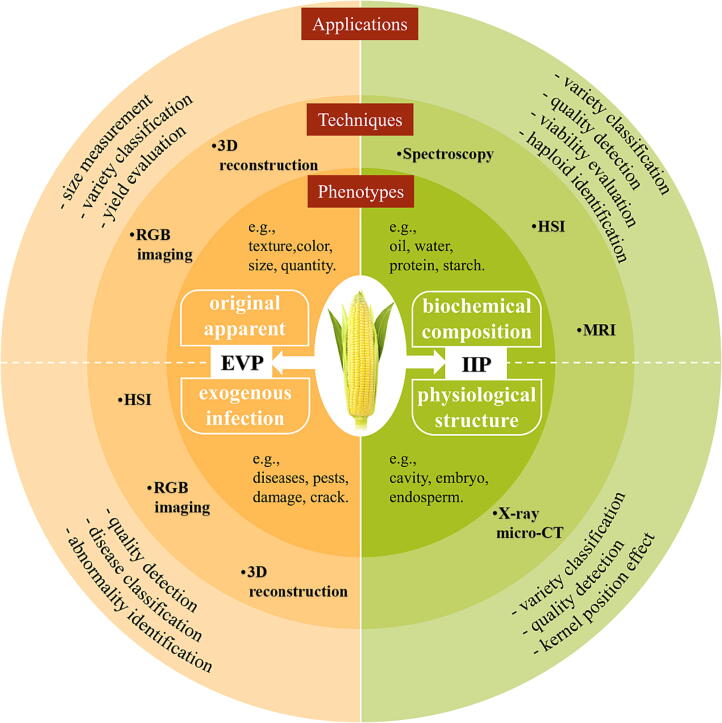

Maize seed testing includes the evaluation of maize ear, maize ear cross-section, and maize kernel/seed phenotype; since maize ear includes maize ear cross-section and maize kernel, their phenotypes are grouped as maize ear phenotype. The seed phenotype traits can be generally divided into external visible phenotype (EVP) and internal invisible phenotype (IIP) according to the phenotype properties of the maize ear.

The EVP traits of maize ear are related to original apparent and exogenous infection traits. Original apparent traits refer to physical morphological indexes of maize ear, such as apparent color, weight, shape (e.g., roundness, flatness), size (e.g., ear length, width, projected area, volume), and number traits (e.g., the number of rows, maize kernels). Exogenous infection traits refer to the obvious external state changes of maize ears affected by diseases, insect pests, and mechanical damage [50]. The digitization of EVP can assist in evaluating apparent size characters, variety classification, quality detection, and disease classification. Since the EVP traits of the maize ear have prominent surface characteristics, which are usually visible to the naked eye, RGB imaging, hyperspectral/multispectral imaging, and 3D reconstruction techniques are popular in analyzing seed EVP traits due to their different imaging/modeling principles.

The IIP traits of maize ear concern the biochemical composition and physiological structure of seeds. Biochemical composition traits include protein, water, starch, oil content, fatty acids, etc., and physiological structure traits refer to the microstructure of the maize seed, including the embryo, endosperm, cavity, etc. [19], [137]. Through the digitization of IIP, the nutrient content, seed vigor, pesticide residues, and internal damage of maize ears can be measured to guarantee maize seed quality, food safety, and breeding efficiency. IIP traits are not visible and are difficult to infer from appearance. Traditional biochemical analysis methods for evaluating the IIP of maize ears are slow and require complex pretreatment. Furthermore, these methods destroy the seed, which may leave no seed to sow in the next season, so they are not advisable for quality identification and selection of early-generation materials in breeding [73]. Currently, spectroscopy and spectral imaging techniques are the primary methods used for digitizing IIP traits of maize ears.

By dividing the phenotypes into external visible and internal invisible, we can efficiently match the appropriate phenotype digitization techniques in terms of the characteristics of these two phenotypes and promote the development of the whole process automation of seed testing. Fig. 2 depicts the primary matching digital techniques and applications for different maize ear phenotypes.

Fig. 2.

Techniques and applications for maize ear phenotype digitization. Maize ear phenotype traits can be divided into external visible phenotype (including original apparent and exogenous infection traits) and internal invisible phenotype (including biochemical composition and physiological structure traits). The refined phenotype can be effectively extracted and evaluated by matching digital techniques to promote the whole process of digitizing the seed phenotype. Abbreviations: EVP, external visible phenotype; IIP, internal invisible phenotype.

Original apparent traits digitization of maize ear

Digital imaging and 3D reconstruction techniques can record the color, shape, texture, size, and number information of maize ears. Numerous digital and automatic tools based on RGB cameras are on the market for evaluating the original apparent features of maize ear. Most of them serve laboratories and seed companies. They can measure basic parameters, such as maize ear weight, ear length, ear diameter, bald tip length, ear rows, kernel number per row, kernel number, kernel length, kernel width, kernel thickness, and 100-seed weight [53], [138]. Specific indicators required by the evaluation can be calculated from these basic parameters, for example, the length–width ratio, volume, area, perimeter, and bald tip ratio of maize ear [139]. The digitization process of original apparent traits on maize ear involves placing the maize ear into the seed testing instrument, RGB image acquisition, parameter extraction, ear weighing, and ear threshing, followed by RGB image acquisition for the maize kernel, kernel weighing, and packaging [140]. This process can obtain most EVP traits of maize ears in high throughput. In addition, specific size traits of a maize ear can also be calculated with professional image analysis software, such as ImageJ [50], [141], which can extract the statistical information of an ROI. The weight of a seed is determined by both its volume and density, yet imaging tools can only estimate its size from the number of pixels. Makanza et al. [141] tried to estimate the weight of maize ears directly from RGB images, but because moisture content differs among maize seeds, the weight estimation model is neither universal nor as reliable as an electronic scale.
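How derived indicators follow from the basic parameters, and how a pixel count becomes a physical size, can be illustrated with a short sketch. The class and function names, the example measurements, and the pixel scale are all hypothetical; commercial seed testing instruments may define these indicators differently.

```python
from dataclasses import dataclass


@dataclass
class EarMeasurement:
    """Basic parameters of one ear (illustrative values, in millimetres)."""
    ear_length_mm: float
    ear_diameter_mm: float
    bald_tip_length_mm: float


def derived_indicators(m):
    """Indicators computed from basic parameters rather than measured directly."""
    return {
        "length_width_ratio": m.ear_length_mm / m.ear_diameter_mm,
        "bald_tip_ratio": m.bald_tip_length_mm / m.ear_length_mm,
    }


def projected_area_mm2(pixel_count, mm_per_pixel):
    """Projected area from the number of ROI pixels and the image scale."""
    return pixel_count * mm_per_pixel ** 2


ear = EarMeasurement(ear_length_mm=180.0, ear_diameter_mm=45.0, bald_tip_length_mm=9.0)
indicators = derived_indicators(ear)
print(indicators)

# 2000 ROI pixels at an assumed scale of 0.1 mm per pixel
area = projected_area_mm2(pixel_count=2000, mm_per_pixel=0.1)
print(f"projected area: {area:.1f} mm^2")
```

The sketch also makes the weight problem visible: every quantity here is geometric, so weight cannot be obtained without an additional density or moisture measurement.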

Accurately and quickly counting the kernels of a maize ear is crucial for estimating maize yield in the current season [142]. With the improvement of camera image quality, researchers have applied newly developed image processing algorithms to count maize kernels in situ. Khaki et al. [143] took images of one side of maize ears under uncontrolled lighting conditions, then used a sliding window and CNN classifier to complete the counting task, achieving an accuracy of 91.84 % within 5.79 s per ear. Shi et al. [144] used a network they developed and a row mask generator to generate density maps from a single image and inferred kernels per ear, rows per ear, and kernels per row, achieving mean absolute errors of 7.48, 0.32, and 1.07, respectively. Different maize cultivars and field management practices lead to differences in the appearance of mature maize ears. When the arrangement of kernels is irregular, estimating the total number of kernels from a single-side image can produce a large error. To count kernels accurately and quickly, it is more practical to use multi-side images that cover the whole ear surface.
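Density-map counting and the mean absolute error used to report it can be sketched in a few lines. This is an illustration of the general idea only, not the network of Shi et al. [144]: the density map is built by hand from normalized Gaussian blobs at made-up kernel positions, and the predicted and actual counts are invented for the example.

```python
import numpy as np


def gaussian_blob(shape, center, sigma=1.5):
    """A 2D Gaussian normalized to sum to 1, so each kernel contributes one count."""
    rows, cols = np.indices(shape)
    blob = np.exp(-((rows - center[0]) ** 2 + (cols - center[1]) ** 2) / (2 * sigma ** 2))
    return blob / blob.sum()


def count_from_density_map(density_map):
    """The estimated object count is the integral (sum) of the density map."""
    return float(np.sum(density_map))


def mean_absolute_error(predicted, actual):
    predicted = np.asarray(predicted, dtype=float)
    actual = np.asarray(actual, dtype=float)
    return float(np.mean(np.abs(predicted - actual)))


# Three hypothetical kernel centres on a 20 x 20 image patch.
centers = [(5, 5), (5, 14), (14, 9)]
density = sum(gaussian_blob((20, 20), c) for c in centers)
print("estimated kernels:", round(count_from_density_map(density), 6))

# Made-up per-ear counts, used only to show how the metric is computed.
mae = mean_absolute_error(predicted=[498, 512, 530], actual=[500, 505, 540])
print(f"MAE: {mae:.2f} kernels per ear")
```

Because the count is a sum over the map, kernels hidden on the far side of the ear contribute nothing, which is consistent with the case made above for multi-side imaging.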

The evaluation performance of maize ear’s original apparent traits correlates strongly with imaging resolution. High-resolution images provide clear texture and shape information. However, compared with RGB images, HSI data have low spatial resolution, and HSI cameras are less competitive in cost, portability, and operability. These limitations may explain why few studies have applied HSI cameras to digitizing the original apparent features of maize ears.

Research on 3D reconstruction techniques for the digitization of maize ear phenotype has emerged in recent years. Wen et al. [126] used a 3D scanner to obtain high-resolution 3D point cloud data of ear and seed by rotating the maize ear in three directions. Whereas a 2D image reduces the dimensionality of the actual object and therefore deviates from it, a 3D model preserves precise details, such as object type, morphological parameters, connection, and spatial coordinates [115]. Moreover, it shows the appearance characteristics of the measured object more intuitively and stereoscopically. However, judging from the small number of relevant publications, the application of the 3D reconstruction technique to maize ear phenotype is still in the exploratory stage. Jahnke et al. [124] introduced a device named phenoSeeder, in which MVS 3D reconstruction was used to model seeds, determine the seed volume, and calculate the seed’s length, width, and height. The results showed good repeatability for both rapeseed and barley seeds. Therefore, the 3D reconstruction technique has great potential and research value for the digitization of EVP.
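One way to derive length, width, and height from a seed point cloud is to measure its extent along its principal axes. The sketch below assumes this approach for illustration and is not the method implemented in phenoSeeder [124]; the ellipsoid volume formula is likewise only a rough approximation, and the test object is a synthetic rotated box.

```python
import numpy as np


def principal_extents(points):
    """Length, width, and height as extents along the principal axes."""
    points = np.asarray(points, dtype=float)
    centered = points - points.mean(axis=0)
    # Rows of vt are the principal axes, ordered by decreasing variance.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    projected = centered @ vt.T
    return projected.max(axis=0) - projected.min(axis=0)


def ellipsoid_volume(length, width, height):
    """Volume of an ellipsoid with the given full axis lengths (an approximation)."""
    return np.pi / 6.0 * length * width * height


def rotation_z(deg):
    a = np.deg2rad(deg)
    return np.array([[np.cos(a), -np.sin(a), 0.0], [np.sin(a), np.cos(a), 0.0], [0.0, 0.0, 1.0]])


def rotation_x(deg):
    a = np.deg2rad(deg)
    return np.array([[1.0, 0.0, 0.0], [0.0, np.cos(a), -np.sin(a)], [0.0, np.sin(a), np.cos(a)]])


# Synthetic object: a 6 x 4 x 2 box sampled on a grid, then rotated and shifted
# to imitate an arbitrary pose on the scanning stage.
xs, ys, zs = np.meshgrid(np.linspace(-3, 3, 13), np.linspace(-2, 2, 9),
                         np.linspace(-1, 1, 5), indexing="ij")
box = np.column_stack([xs.ravel(), ys.ravel(), zs.ravel()])
rotated = box @ (rotation_z(30) @ rotation_x(20)).T + np.array([10.0, -5.0, 2.0])

length, width, height = principal_extents(rotated)
print(f"L = {length:.3f}, W = {width:.3f}, H = {height:.3f}")
print(f"ellipsoid volume estimate: {ellipsoid_volume(length, width, height):.2f}")
```

The extents are recovered regardless of the pose of the object, which is the practical benefit of working in 3D: a single 2D projection of the same rotated object would give pose-dependent dimensions.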

Exogenous infection traits digitization of maize ear

The apparent exogenous infection traits reveal the health state of maize ears. The original apparent traits of a maize ear, such as color, shape, and texture, change in cases of infection (diseases and pests), mechanical damage, storage, and marking. These traits can be extracted from RGB images of maize ears and used as features to detect and sort obvious aberrant states or differences. Examples include variety classification based on morphological and color differences of maize seeds [51], detection of defective seeds/ears caused by unavoidable apparent damage during mechanical harvest [54], [145], identification of moldy seeds based on color differences caused by mold infection during storage [146], and tracking of mutation gene transfer based on visible endosperm markers [147]. By combining the differences in EVP traits with image processing algorithms, the detection and marking of maize variety, quality, and abnormality can be completed rapidly and automatically.

Compared to RGB imaging, which only captures three broad color channels, HSI can reflect more information from the spectral dimension, providing additional references for identifying exogenous infection traits [105]. This is particularly useful for the early detection of fungi in maize kernels. Williams et al. [148] assessed the feasibility of using near-infrared hyperspectral imaging (NIR-HSI) and hyperspectral image analysis models to detect fungal contamination and activity in maize kernels before the appearance of visual symptoms. Chu et al. [149] showed that both object-wise and pixel-wise methods based on NIR-HSI can be used to classify fungi-infected maize kernels. Furthermore, pixel-wise methods are useful for visualizing the extent of disease infection. By combining sparse auto-encoders and CNN algorithms, Yang et al. [150] distinguished four grades of moldy kernels using hyperspectral imaging and achieved high correct recognition rates of 99.47 % and 98.94 % for the training and testing sets, respectively. Compared with HSI, a multispectral imaging (MSI) camera captures images only at wavelengths selected for a specific application, which yields a lower data volume and higher processing efficiency while retaining good detection capability [10]. Wang et al. [151] applied an MSI camera to form RGB and NIR images of maize seeds for defect detection. The result showed that with a two-pathway CNN model, the average detection accuracy could be improved by 1.83 % compared with using only RGB images. Nevertheless, HSI/MSI data inevitably need more processing steps and computing resources to mine principal component features. Considering the low cost-effectiveness of an HSI camera, the balance between cost and benefit must be weighed carefully when using the HSI technique.
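The difference between object-wise and pixel-wise analysis can be made concrete with a toy example. Everything here is an assumption for illustration: the two-band cube, the kernel labels, and in particular the band-ratio rule standing in for a trained classifier. It is not the model of Chu et al. [149].

```python
import numpy as np


def object_wise_features(cube, labels):
    """One mean spectrum per labelled kernel (label 0 is background)."""
    return {int(i): cube[labels == i].mean(axis=0) for i in np.unique(labels) if i != 0}


def toy_pixel_classifier(cube, ratio_threshold=0.8):
    """Placeholder rule: flag a pixel when band 1 / band 0 drops below a threshold."""
    return (cube[:, :, 1] / cube[:, :, 0]) < ratio_threshold


def infected_fraction(pixel_pred, labels):
    """Share of flagged pixels within each kernel, i.e. the extent of infection."""
    return {int(i): float(pixel_pred[labels == i].mean()) for i in np.unique(labels) if i != 0}


# Synthetic 4 x 6 image with 2 bands and two kernels side by side.
cube = np.ones((4, 6, 2))
labels = np.zeros((4, 6), dtype=int)
labels[:, 0:3] = 1            # kernel 1: healthy everywhere
labels[:, 3:6] = 2            # kernel 2: upper half altered
cube[0:2, 3:6, 1] = 0.5

features = object_wise_features(cube, labels)
pixel_pred = toy_pixel_classifier(cube)
fractions = infected_fraction(pixel_pred, labels)

print("object-wise mean spectra:", features)
print("pixel-wise infected fraction per kernel:", fractions)
```

The object-wise mean spectrum of kernel 2 blends healthy and altered pixels into a single sample, whereas the pixel-wise map keeps their location and yields an infected-area fraction, which is why pixel-wise methods suit visualization of infection extent.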

When the 3D reconstruction technique is employed to analyze the EVP traits of maize ears, the phenotype results are more detailed and reliable [152]. Compared to the RGB-based evaluation of exogenous infection traits, the 3D reconstruction technique can record the complete arrangement and features of maize ears and seeds, yielding more comprehensive results. In addition, existing 3D point cloud processing algorithms need to be explored and adapted for evaluating maize ear EVP traits, which will promote the development of maize ear digitization and automation [153]. Developing 3D assessment indexes based on 3D processing methods to replace traditional estimation will also support more accurate maize ear quality analysis, for example, by using the proportion of diseased area instead of a simple disease degree.

Biochemical composition traits digitization of maize ear

Spectroscopy techniques have been widely used in the qualitative and quantitative analysis of various chemical components in maize seeds. NIR spectroscopy is often used to analyze the concentration of components or the quality parameters of compounds and their mixtures. It has many applications in determining nutritional substances such as maize kernel protein [154], starch [155], oil [154], [156], fatty acid [157], and carotenoid [158]. Compared with NIR spectroscopy, small Raman spectrometers combined with chemometrics can provide fast, low-cost, in-field detection of maize kernel components (e.g., endosperm, germ, and peel) [19]. The sensitivity and specificity of detection can be further improved by surface-enhanced Raman spectroscopy (SERS) based on electromagnetic and chemical enhancement mechanisms [159]. SERS is often used for the rapid determination of trace residues in agricultural products; for example, the detection limit of fenitrothion in maize could reach 0.48 μg·mL⁻¹ with SERS, which is lower than the maximum residue limit of China in crops and suggests the high sensitivity of SERS [160]. THz spectroscopy is a highly competitive emerging detection technique that has been studied for component determination, toxin detection, and viability analysis of maize seeds [161]. However, since most current research objects are solutions or powders, non-destructive detection is not yet easy to perform [20]. Fluorescence spectroscopy can also detect the concentration of toxins in maize seeds, but it is easily disturbed by the background because many substances emit fluorescence. Laser-induced fluorescence spectroscopy (LIFS) can enhance the sensitivity of aflatoxin measurements [162], promoting the application of fluorescence spectroscopy. The nuclear magnetic resonance (NMR) technique can infer the water and oil content of maize seed from the resonance signal of hydrogen protons. 
This technique neither damages the seed nor impairs its germination vigor, which makes it especially suitable for detecting living seeds [51], [163]. Low-field NMR can also reflect how tightly seed moisture is bound to macromolecular substances, which further distinguishes bound water, semi-bound water, and free water. It has been widely used to study the phase state, distribution, and migration of water in food and crops [164].
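Quantitative spectroscopy ultimately rests on a calibration that maps a measured signal to a concentration, and a detection limit is derived from that calibration. The sketch below uses a univariate linear calibration and the common convention of three blank standard deviations divided by the slope. The data are synthetic, the units are arbitrary, and the cited studies may use multivariate chemometric models and other detection limit definitions.

```python
import numpy as np


def fit_calibration(concentrations, signals):
    """Least-squares line: signal = slope * concentration + intercept."""
    slope, intercept = np.polyfit(concentrations, signals, deg=1)
    return float(slope), float(intercept)


def predict_concentration(signal, slope, intercept):
    """Invert the calibration line for an unknown sample."""
    return (signal - intercept) / slope


def limit_of_detection(blank_std, slope, k=3.0):
    """Detection limit as k times the blank standard deviation over the slope.

    k = 3 is a widely used convention and an assumption of this sketch.
    """
    return k * blank_std / slope


# Synthetic standards (arbitrary units), generated from signal = 50 * c + 10.
concentrations = np.array([0.0, 1.0, 2.0, 4.0, 8.0])
signals = 50.0 * concentrations + 10.0

slope, intercept = fit_calibration(concentrations, signals)
unknown = predict_concentration(210.0, slope, intercept)
lod = limit_of_detection(blank_std=5.0, slope=slope)

print(f"slope = {slope:.2f}, intercept = {intercept:.2f}")
print(f"unknown sample concentration = {unknown:.2f}")
print(f"limit of detection = {lod:.2f}")
```

A steeper calibration slope lowers the detection limit for the same noise level, which is the sense in which signal enhancement improves sensitivity.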

Because it adds the spatial dimension, the spectral imaging technique can also reflect the distribution and change of chemical components. For instance, the moisture change of maize seed during drying can be predicted by the NIR-HSI technique [165], the distribution of components in maize kernel can be visualized by the Raman HSI technique [166], and the distribution and migration of water in maize seeds during storage can be demonstrated by the MRI technique [95]. The THz spectral imaging technique can extract the spectral information of different tissues of maize seeds [161], which compensates for the limitation of THz spectroscopy. In addition to measuring the internal components, HSI and fluorescence imaging techniques also perform well in the early and rapid detection of aflatoxin infections in maize seeds [167], [168], [169]. Based on differences in internal composition and distribution, and combined with data processing algorithms, the variety, freshness, hardness, viability, and damage of maize seed can be further detected and classified. Because the internal components of new and old seeds differ, maize seeds from different years can be distinguished [170], [171]. Heating changes the physical morphology and chemical composition of maize seeds, which enables automatic detection of heat-damaged seeds [172]. The hardness of maize seeds can be classified because hardness differs with endosperm composition [173], and seed viability can be detected from changes in starch and protein content after seed aging [174]. In addition, rapid sorting of haploid maize kernels can rely on the significant difference in oil content between diploid and haploid kernels produced with high-oil inducers [163].

Physiological structure traits digitization of maize ear

The microscopic 3D structure of the maize kernel is an essential part of the IIP of the maize ear and reflects its inner characteristics at the tissue level [80]. As a typical 3D tomography technique, X-ray micro-CT reconstructs cross-sections or 3D images of tissue from the differences in X-ray attenuation as the beam passes through plant tissue, which makes it possible to identify and analyze the 3D structure and function of maize seed from a microscopic perspective [175]. Guelpa et al. [81] determined the density of vitreous and floury endosperm of maize kernels by micro-CT and achieved non-destructive grading of kernel hardness with an accuracy of 88 %. Kernel density and subcutaneous cavity volume are crucial factors affecting kernel breakage rate; both can be obtained by X-ray micro-CT and then used to predict the breakage rate of maize varieties [176]. Gargiulo et al. [177] suggested that X-ray exposure would lead to abnormal seedlings; consequently, it is essential to pay attention to possible side effects when studying living seeds with X-ray micro-CT. In addition, existing micro-CT image processing involves a large amount of manual interaction, which is time-consuming and inefficient, so it is challenging to examine maize kernels in the field and to acquire microscopic internal phenotype traits in high throughput. Even so, micro-CT has great value in providing technical support for research on variety classification, quality detection, and kernel position effect, because it enables precise identification of seed phenotype at the micro-level through the analysis of maize kernel traits such as embryo, endosperm, cavity, subcutaneous cavity, embryo cavity, and endosperm cavity [80], [137].
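Once a micro-CT volume has been reconstructed and the kernel has been segmented, structural traits such as cavity volume reduce to voxel counting. The sketch below assumes that a kernel mask is already available and that a single gray-value threshold separates cavities from tissue. Both are simplifications, and the gray values, voxel size, and function name are invented for the example.

```python
import numpy as np


def cavity_metrics(volume, kernel_mask, air_threshold, voxel_size_mm):
    """Kernel volume, cavity volume, and cavity fraction from a CT gray-value volume.

    volume        : 3D array of reconstructed gray values
    kernel_mask   : boolean 3D array, True inside the kernel (assumed given)
    air_threshold : gray value below which a voxel is treated as cavity
    voxel_size_mm : edge length of a cubic voxel
    """
    cavity = kernel_mask & (volume < air_threshold)
    voxel_volume = voxel_size_mm ** 3
    kernel_volume = float(kernel_mask.sum()) * voxel_volume
    cavity_volume = float(cavity.sum()) * voxel_volume
    return {
        "kernel_volume_mm3": kernel_volume,
        "cavity_volume_mm3": cavity_volume,
        "cavity_fraction": cavity_volume / kernel_volume,
    }


# Synthetic scan: an 8 x 8 x 8 voxel "kernel" of tissue (gray value 200)
# containing a 2 x 2 x 2 voxel cavity (gray value 20), surrounded by air (0).
volume = np.zeros((10, 10, 10))
kernel_mask = np.zeros((10, 10, 10), dtype=bool)
kernel_mask[1:9, 1:9, 1:9] = True
volume[kernel_mask] = 200.0
volume[4:6, 4:6, 4:6] = 20.0

metrics = cavity_metrics(volume, kernel_mask, air_threshold=100.0, voxel_size_mm=0.05)
print(metrics)
```

The counting itself is trivial. The manual effort noted above lies mostly in producing a reliable kernel mask and choosing thresholds for each scan, which is where automation would be needed for high-throughput use.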

In summary, RGB cameras are mainly applied to the digitization of EVP traits of maize ears because of their low cost, convenient acquisition, and the rapid development of image processing algorithms. The HSI technique can provide richer information than RGB images because it fuses spectral and spatial information, which may improve performance in evaluating exogenous infection traits. The 3D reconstruction technique provides a convenient and promising way to record and store ear morphology so that researchers can access it at any time. 3D reconstruction and data processing algorithms are urgently needed to meet the demands of digitizing maize ear morphological traits [153]. The digitization of IIP traits of maize ears, in turn, is mainly based on spectroscopy and spectral imaging techniques, which can obtain the internal chemical components of maize ears quickly and non-destructively. The HSI technique is popular for digitizing IIP traits because of its rich information and its applicability to various IIP traits. Raman, THz, and NMR spectroscopy/imaging and X-ray micro-CT each have particular advantages, according to their detection characteristics, for tasks such as water and oil content measurement, trace pesticide residue detection, in vivo detection, and internal structure measurement. Given the diversity and specific advantages of these techniques, precision, efficiency, and cost-effectiveness should be considered comprehensively when selecting detection methods for the requirements of phenotype digitization.

Concluding remarks and prospects

The richness of crop resources worldwide is reflected in the wide range of seed varieties, resulting in a need to evaluate complex seed phenotypes. Although plentiful phenotype digitization techniques have been employed for the research of seed phenotypes, there is still much work to be done to match the requirements of various seed phenotypes. This review aimed to lessen the gap by providing an overview of standard optical sensors and related data processing algorithms for the digital evaluation of seed phenotype. In addition, it discusses corresponding digital techniques for each set of seed phenotype characteristics, using maize ear as a case study. In order to make the digital techniques better meet the demands of seed phenotype in practical applications, further support and research can be considered in phenotype digitization equipment and platform, phenotype data, and processing algorithms.

To apply seed phenotype digitization techniques effectively in practical settings and derive direct benefits for seed phenotype evaluation, it is essential to develop phenotype digitization devices and platforms that offer high performance and cost-effectiveness. These devices should be easy to operate, have stable performance, support high-throughput acquisition, and be portable for field testing. The miniaturization of spectrometers is therefore one of the development trends [178]. At the same time, phenotype devices need to be integrated to support the detection of multiple phenotypes, reducing the seed waste and time cost caused by frequent replacement of devices or repeated detections. In addition, much attention has been paid to the field detection of seed phenotypes based on unmanned aerial vehicles (UAVs) [135], which can further promote the efficient acquisition and multi-scale analysis of seed phenotypes. Considering the widespread use of mobile phones and the superior ability of RGB images to record EVP, it is necessary to consider how to obtain seed appearance, texture, quantity, and status information in the field through mobile phones or other mobile devices, which also involves deploying lightweight models and microspectrometer systems on mobile devices.

The high-throughput phenotype digitization techniques make the phenotype data expand rapidly. Establishing a standard, high-quality, and shared phenotype database can effectively promote the analysis and mining of phenotype data and maximize the utilization of phenotype data resources. On the other hand, various phenotype digitization techniques produce different formats of phenotype data. Therefore, research on the fusion of heterogeneous data can also enhance the systematic evaluation of seed phenotypes and has the potential to achieve the integrated evaluation of seed external phenotypes, internal components, and structures.

With the emergence of data-driven processing algorithms, it is important to focus on the interpretability and universality of evaluation models in the agricultural field. Taking seed variety classification as an example, examining the classification features learned by deep learning algorithms, and what the models attend to for the task, can improve researchers’ understanding of the inherent differences among seeds of different varieties. Meanwhile, the classification weights and scores of the various classes can further refine the variety differences [179]. As for universality, an effective model should be compatible with complex and diverse application scenarios, particularly in agricultural environments. It is important to note that many models perform well only on the datasets used in the studies that introduced them. When these models are applied to new locations or different varieties, they often require retraining from scratch, which is inefficient and wastes computational resources. To address this issue, dataset construction should account for the diversity of data likely to be encountered in the task, so that the model remains stable and robust in practical applications. Additionally, transfer learning can be employed to reuse pre-trained parameters from similar tasks, reducing model fitting time and improving training efficiency [180].
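
The effect of reusing pre-trained parameters can be illustrated with a deliberately small Python sketch. It assumes a toy linear model fitted by gradient descent rather than a deep network, and two made-up, noise-free tasks whose true weights are close to each other. All names and numbers are hypothetical, and the point is only that starting from parameters learned on a similar task can need fewer training steps than starting from zero.

```python
def mse(weights, samples):
    """Mean squared error of a linear model on (features, target) pairs."""
    return sum(
        (sum(w * x for w, x in zip(weights, feats)) - target) ** 2
        for feats, target in samples
    ) / len(samples)


def fit(samples, init_weights, lr=0.1, tol=1e-6, max_steps=1000):
    """Plain gradient descent from init_weights; returns (weights, steps used)."""
    weights = list(init_weights)
    steps = 0
    while mse(weights, samples) > tol and steps < max_steps:
        grads = [0.0] * len(weights)
        for feats, target in samples:
            err = sum(w * x for w, x in zip(weights, feats)) - target
            for j, x in enumerate(feats):
                grads[j] += 2 * err * x / len(samples)
        weights = [w - lr * g for w, g in zip(weights, grads)]
        steps += 1
    return weights, steps


def make_task(true_weights):
    """Noise-free toy task on a fixed, balanced set of inputs (an assumption)."""
    design = [(1.0, 1.0), (1.0, -1.0), (-1.0, 1.0), (-1.0, -1.0)]
    return [(x, sum(w * v for w, v in zip(true_weights, x))) for x in design]


source_task = make_task([2.0, -1.0])  # stands in for data seen during pre-training
target_task = make_task([2.2, -0.9])  # stands in for a similar new variety or site

pretrained, _ = fit(source_task, [0.0, 0.0])
_, steps_scratch = fit(target_task, [0.0, 0.0])   # training from scratch
_, steps_transfer = fit(target_task, pretrained)  # warm start from source weights
print(steps_scratch, steps_transfer)
```

In this toy setting the warm-started run reaches the tolerance in fewer steps because its starting point is already close to the target solution. With deep networks the same idea is usually applied by reusing a pre-trained feature extractor and fine-tuning part or all of the model, which this sketch does not attempt to reproduce.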

It is worth highlighting that the approach of dividing seed phenotypes and evaluating them with optical sensor-based digital techniques is broadly applicable. These methodologies extend beyond maize ears and can be employed to digitize diverse seed phenotypes, including those of rice seeds, wheat seeds, rapeseeds, and more. We believe that the continuous development of optical sensors and data processing methods will help to understand seeds better and pave the way for the digitization, standardization, and automation of seed phenotype evaluation.

CRediT authorship contribution statement

Fei Liu: Conceptualization, Methodology, Writing – review & editing. Rui Yang: Writing – original draft, Investigation, Visualization. Rongqin Chen: Writing – review & editing, Visualization. Mahamed Lamine Guindo: Writing – review & editing. Yong He: Conceptualization, Resources. Jun Zhou: Investigation, Validation. Xiangyu Lu: Investigation, Validation. Mengyuan Chen: Investigation, Validation. Yinhui Yang: Conceptualization, Writing – review & editing. Wenwen Kong: Supervision, Writing – review & editing.

Declaration of Competing Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Acknowledgements

This work was supported by the Science and Technology Department of Zhejiang Province (2022C02034, 2021C02023) and the Science and Technology Department of Shenzhen (CJGJZD20210408092401004).

Biographies

Fei Liu received his Bachelor degree in Agricultural Mechanization and Automation from China Agricultural University, Beijing, China, in 2006, and Ph.D. degree in Biosystems Engineering from Zhejiang University, Hangzhou, China, in 2011. He is currently a professor at Zhejiang University and the deputy director of the Institute of Agricultural Information Technology, Zhejiang University. His work focuses mainly on sensing technology and equipment development for smart agriculture, including agricultural IoT, remote sensing using UAS, hyperspectral imaging, and LIBS for soil-plant-agricultural products information detection.

Rui Yang received the Bachelor degree in Agricultural Architectural Environment and Energy Engineering from China Agricultural University, Beijing, China, in 2020. She is currently a Ph.D. candidate in the College of Biosystems Engineering and Food Science, Zhejiang University. Her research interests include nondestructive detection of plant phenotype and remote sensing in agriculture.

Rongqin Chen received the Bachelor degree in Food Science and Engineering from Fujian Agriculture and Forestry University, Fuzhou, China, in 2019. She is currently a Ph.D. candidate in the College of Biosystems Engineering and Food Science, Zhejiang University. Her research focuses on nondestructive detection of the quality and safety of agricultural products using spectroscopy techniques.

Mahamed Lamine Guindo received a Bachelor degree in Information Technology from Dakar University, Senegal, in 2017, and a Master degree in Mechanical Engineering from Zhejiang University of Science and Technology in 2019. He received the Ph.D. degree in Agricultural Electrification and Automation from Zhejiang University, Hangzhou, China, in 2023. His research interests include machine learning and multi-spectral and spectral imaging technologies for plant and soil information detection.

Yong He received the Bachelor and Master degrees in Agricultural Engineering from Zhejiang Agricultural University, Hangzhou, China, in 1984 and 1987, respectively. He received the Ph.D. degree in Biosystems Engineering from Zhejiang University, Hangzhou, China, in 1998. He is currently a professor at Zhejiang University. He is the director of the Key Laboratory of Spectroscopy, Ministry of Agriculture and Rural Affairs, a national prestigious teacher, and one of the hundred thousands of national talents. He was selected as a Clarivate Analytics Global Highly Cited Researcher in 2016-2018. He is the Editor-in-Chief of Computers and Electronics in Agriculture and an editorial board member of Food and Bioprocess Technology.

Jun Zhou received the Bachelor degree in 2012 and the Master degree in 2015 from the College of Mechanical Engineering and Traffic, Xinjiang Agricultural University, Urumqi, China. He received the Ph.D. degree in Biosystems Engineering from Zhejiang University, Hangzhou, China, in 2023. His research interests include remote sensing in agriculture and agricultural informatization.

Xiangyu Lu received the Bachelor degree in Agricultural Mechanization and Automation from Northwest A&F University, Yangling, China, in 2020. He is currently a Ph.D. candidate in the College of Biosystems Engineering and Food Science, Zhejiang University. His research interests include image processing and interpretation, direct geolocation of targets in drone images, and automatic data processing workflows.

Mengyuan Chen received the Bachelor degree in Agricultural Engineering from Zhejiang University, Hangzhou, China, in 2021. She is currently a Master's student in the College of Biosystems Engineering and Food Science, Zhejiang University. Her research interests include forestry remote sensing and detection of forest phenotype.

Yinhui Yang received his Bachelor degree in Electronic Information Science and Technology and Master degree in Biophysics from China Agricultural University, Beijing, China, in 2006 and 2009, respectively. He received the Ph.D. degree in Computer Science and Technology from Zhejiang University, Hangzhou, China, in 2017. He is currently a lecturer at Zhejiang A&F University. His work focuses mainly on image-based 3D reconstruction technology and its applications in digital agriculture and forestry.

Wenwen Kong received her Bachelor degree in Agricultural Engineering from China Agricultural University, Beijing, China, in 2009, and Ph.D. degree in Biosystems Engineering from Zhejiang University, Hangzhou, China, in 2015. She is currently an associate professor at Zhejiang A&F University. Her work focuses mainly on digital information detection and sensing technology for plants.

Contributor Information

Fei Liu, Email: fliu@zju.edu.cn.

Wenwen Kong, Email: wwkong16@zafu.edu.cn.

References

- 1.Zheng Y., Yuan F., Huang Y., Zhao Y., Jia X., Zhu L., et al. Genome-wide association studies of grain quality traits in maize. Sci Rep. 2021;11:9797. doi: 10.1038/s41598-021-89276-3.

- 2.Wang X., Cai Z. Era of maize breeding 4.0. Journal of Maize Sciences. 2019;27:1–9. doi: 10.13597/j.cnki.maize.science.20190101.

- 3.Wallace J.G., Rodgers-Melnick E., Buckler E.S. On the road to breeding 4.0: unraveling the good, the bad, and the boring of crop quantitative genomics. Annu Rev Genet. 2018;52:421–444. doi: 10.1146/annurev-genet-120116-024846.

- 4.Wang X., Qiu L., Jing R., Ren G., Li Y., Li C., et al. Evaluation on phenotypic traits of crop germplasm: status and development. Journal of Plant Genetic Resources. 2022;23:12–20. doi: 10.13430/j.cnki.jpgr.20210802001.

- 5.Rahman A., Cho B.K. Assessment of seed quality using non-destructive measurement techniques: a review. Seed Sci Res. 2016;26:285–305. doi: 10.1017/S0960258516000234.

- 6.Araus J.L., Cairns J.E. Field high-throughput phenotyping: the new crop breeding frontier. Trends Plant Sci. 2014;19:52–61. doi: 10.1016/j.tplants.2013.09.008.

- 7.Budd J., Miller B.S., Manning E.M., Lampos V., Zhuang M., Edelstein M., et al. Digital technologies in the public-health response to COVID-19. Nat Med. 2020;26:1183–1192. doi: 10.1038/s41591-020-1011-4.

- 8.Sun D., Robbins K., Morales N., Shu Q., Cen H. Advances in optical phenotyping of cereal crops. Trends Plant Sci. 2022;27:191–208. doi: 10.1016/j.tplants.2021.07.015.

- 9.Rotz S., Duncan E., Small M., Botschner J., Dara R., Mosby I., et al. The politics of digital agricultural technologies: a preliminary review. Sociol Rural. 2019;59:203–229. doi: 10.1111/soru.12233.

- 10.Xia Y., Xu Y., Li J., Zhang C., Fan S. Recent advances in emerging techniques for non-destructive detection of seed viability: a review. Artificial Intelligence in Agriculture. 2019;1:35–47. doi: 10.1016/j.aiia.2019.05.001.

- 11.Clohessy J.W., Pauli D., Kreher K.M., Buckler V.E.S., Armstrong P.R., Wu T., et al. A low-cost automated system for high-throughput phenotyping of single oat seeds. The Plant Phenome Journal. 2018;1 doi: 10.2135/tppj2018.07.0005.

- 12.Nordborg M., Weigel D. Next-generation genetics in plants. Nature. 2008;456:720–723. doi: 10.1038/nature07629.

- 13.Lu R., Van Beers R., Saeys W., Li C., Cen H. Measurement of optical properties of fruits and vegetables: a review. Postharvest Biol Tec. 2020;159 doi: 10.1016/j.postharvbio.2019.111003.

- 14.Kokot S., Grigg M., Panayiotou H., Phuong T.D. Data interpretation by some common chemometrics methods. Electroanal. 1998;10:1081–1088. doi: 10.1002/(SICI)1521-4109(199811)10:16<1081::AID-ELAN1081>3.0.CO;2-X.

- 15.Jin X., Zarco-Tejada P.J., Schmidhalter U., Reynolds M.P., Hawkesford M.J., Varshney R.K., et al. High-throughput estimation of crop traits: a review of ground and aerial phenotyping platforms. IEEE Geosc Rem Sen M. 2021;9:200–231.

- 16.Zhou L., Zhang C., Qiu Z., He Y. Information fusion of emerging non-destructive analytical techniques for food quality authentication: a survey. TrAC-Trend Anal Chem. 2020;127 doi: 10.1016/j.trac.2020.115901.

- 17.Karunakaran C., Vijayan P., Stobbs J., Bamrah R.K., Arganosa G., Warkentin T.D. High throughput nutritional profiling of pea seeds using Fourier transform mid-infrared spectroscopy. Food Chem. 2020;309 doi: 10.1016/j.foodchem.2019.125585.

- 18.Smeesters L., Meulebroeck W., Raeymaekers S., Thienpont H. Optical detection of aflatoxins in maize using one- and two-photon induced fluorescence spectroscopy. Food Control. 2015;51:408–416. doi: 10.1016/j.foodcont.2014.12.003.

- 19.Ildiz G.O., Kabuk H.N., Kaplan E.S., Halimoglu G., Fausto R. A comparative study of the yellow dent and purple flint maize kernel components by raman spectroscopy and chemometrics. J Mol Struct. 2019;1184:246–253. doi: 10.1016/j.molstruc.2019.02.034.

- 20.Ge H., Jiang Y., Lian F., Zhang Y., Xia S. Quantitative determination of aflatoxin B1 concentration in acetonitrile by chemometric methods using terahertz spectroscopy. Food Chem. 2016;209:286–292. doi: 10.1016/j.foodchem.2016.04.070.

- 21.Jiang G. Comparison and application of non-destructive NIR evaluations of seed protein and oil content in soybean breeding. Agronomy. 2020;10:77. doi: 10.3390/agronomy10010077.

- 22.Pazdernik D.L., Killam A.S., Orf J.H. Analysis of amino and fatty acid composition in soybean seed, using near infrared reflectance spectroscopy. Agron J. 1997;89:679–685. doi: 10.2134/agronj1997.00021962008900040022x.

- 23.Hutsalo I., Mank V., Kovaleva S. Determination of oleic acid in the samples of sunflower seeds by method of NIR-spectroscopy. Ukr Food j. 2017:6. doi: 10.24263/2304-974X-2017-6-1-6.

- 24.Xu J., Nwafor C.C., Shah N., Zhou Y., Zhang C. Identification of genetic variation in Brassica napus seeds for tocopherol content and composition using near-infrared spectroscopy technique. Plant Breed. 2019;138:624–634. doi: 10.1111/pbr.12708.

- 25.Jin F., Bai G., Zhang D., Dong Y., Ma L., Bockus W., et al. Fusarium-damaged kernels and deoxynivalenol in Fusarium-infected U.S. winter wheat. Phytopathology. 2014;104:472–478. doi: 10.1094/PHYTO-07-13-0187-R.

- 26.Choi Y.H., Hong C.K., Park G.Y., Kim C.K., Kim J.H., Jung K., et al. A nondestructive approach for discrimination of the origin of sesame seeds using ED-XRF and NIR spectrometry with chemometrics. Food Sci Biotechnol. 2016;25:433–438. doi: 10.1007/s10068-016-0059-x.

- 27.Rosales A., Crossa J., Cuevas J., Cabrera-Soto L., Dhliwayo T., Ndhlela T., et al. Near-infrared spectroscopy to predict provitamin a carotenoids content in maize. Agronomy. 2022;12:1027. doi: 10.3390/agronomy12051027.

- 28.Qiu G., Lv E., Lu H., Xu S., Zeng F., Shui Q. Single-kernel FT-NIR spectroscopy for detecting supersweet corn (Zea mays L. saccharata sturt) seed viability with multivariate data analysis. Sensors. 2018;18:1010. doi: 10.3390/s18041010.