Abstract

Background

Mobile health (mHealth) technologies are increasingly used in contact tracing and case finding, enhancing and replacing traditional methods for managing infectious diseases such as Ebola, tuberculosis, COVID-19, and HIV. However, the variations in their development approaches, implementation scopes, and effectiveness introduce uncertainty regarding their potential to improve public health outcomes.

Objective

We conducted this systematic review to explore how mHealth technologies are developed, implemented, and evaluated. We aimed to deepen our understanding of mHealth’s role in contact tracing in order to improve both implementation and overall health outcomes.

Methods

We searched the PubMed, Scopus, Web of Science, and Google Scholar databases for studies conducted in Africa that focused on tuberculosis, Ebola, HIV, and COVID-19 and were published between 1990 and 2023. We followed the PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-Analyses) guidelines to review, synthesize, and report the findings from articles that met our criteria.

Results

We identified 11,943 articles, but only 19 (0.16%) met our criteria, revealing a large gap in technologies specifically aimed at case finding and contact tracing of infectious diseases. These technologies addressed a broad spectrum of diseases, with a predominant focus on Ebola and tuberculosis. The type of technologies used ranged from mobile data collection platforms and smartphone apps to advanced geographic information systems (GISs) and bidirectional communication systems. Technologies deployed in programmatic settings, often developed using design thinking frameworks, were backed by significant funding and often deployed at a large scale but frequently lacked rigorous evaluations. In contrast, technologies used in research settings, although providing more detailed evaluation of both technical performance and health outcomes, were constrained by scale and insufficient funding. These challenges not only prevented these technologies from being tested on a wider scale but also hindered their ability to provide actionable and generalizable insights that could inform public health policies effectively.

Conclusions

Overall, this review underscored a need for organized development approaches and comprehensive evaluations. A significant gap exists between the expansive deployment of mHealth technologies in programmatic settings, which are typically well funded and rigorously developed, and the more robust evaluations necessary to ascertain their effectiveness. Future research should consider integrating the robust evaluations often found in research settings with the scale and developmental rigor of programmatic implementations. By embedding advanced research methodologies within programmatic frameworks at the design thinking stage, mHealth technologies can potentially become technically viable and effectively meet specific contact tracing health outcomes to inform policy effectively.

Keywords: mobile health, mHealth, design thinking, tuberculosis, Ebola, HIV, COVID-19, infectious diseases, contact tracing, mobile phone

Introduction

Background

Mobile health (mHealth) technologies have become increasingly popular tools for facilitating data collection and delivery of health services worldwide [1,2]. The emergence of COVID-19 in 2020 increased interest in using mHealth technologies for contact tracing due to their ability to achieve case finding goals without the need for physical contact with an infected person [1,2]. Before COVID-19, mHealth technologies had already been used in Africa for contact tracing of infectious diseases such as Ebola [3-7], tuberculosis [8-11], and HIV, albeit with limited success. Compared to paper-based systems, the most apparent advantages of mHealth include its ability to reduce repetitive tasks and errors, systematic delivery of services, and improved monitoring due to efficient data processing in databases. Therefore, at face value, mHealth technologies have the potential to overcome challenges encountered when using traditional paper-based systems for contact tracing, thereby improving outcomes.

Contact tracing is a strategy for actively and systematically screening for symptoms among individuals exposed to someone with a transmissible disease to determine whether they require further diagnostic evaluation [12-14]. A key advantage of contact tracing is that, in principle, it reduces the time from when an individual falls ill with an infectious disease to when they are diagnosed, preventing further transmission to healthy persons. A contact, defined as any person living with or someone in a social circle who has regular contact with an individual with a transmissible disease, is at the highest risk of contracting the same disease due to their proximity to the infected person. Therefore, contacts are defined as a high-risk priority group for contact tracing [12]. The World Health Organization has long recommended and supported contact tracing for tuberculosis and Ebola [15,16] and promotes contact tracing as a critical intervention for tuberculosis control in high-burden countries [16].

Tuberculosis contact tracing can be seen as a cascade of activities that begins with finding an individual with the disease of interest, referred to as the index patient, and collecting information about their close contacts [12]. These activities are followed by a household visit to screen the enumerated persons for tuberculosis symptoms and may include collecting sputum samples from symptomatic contacts for laboratory testing—anyone testing positive for tuberculosis is referred to health facilities for linkage to care [12]. During household visits, contact tracing also serves as a pathway for accessing household contacts eligible for and initiating tuberculosis preventive therapy [14]. There are variations to contact tracing, with recent modalities using portable chest x-rays for identifying individuals eligible for tuberculosis testing [17,18] and oral swabs as an alternative to sputum samples [19,20]. In South Africa, contact tracing has now evolved from testing only symptomatic persons to universal testing of all household contacts and, in the process, initiating tuberculosis preventive therapy in eligible contacts [21].

The process for conducting contact tracing is rigorous. Each step in the contact tracing cascade requires documentation to enable contact follow-up, communication of results, linkage to care, and program monitoring and evaluation. Contact tracing programs rely significantly on efficient data collection to inform decision-making and patient management; in the absence of this, the process loses its cost-effectiveness and may become unattractive to national tuberculosis programs. Within Africa, countries have relied on inefficient paper-based data collection that not only overburdens outreach workers responsible for tracing household contacts but also results in poor data quality due to inevitable human error and inadequate accountability due to the manual processes required to collate field data [12,22-25]. These systems could be improved or overhauled by introducing mHealth technologies to optimize the documentation of activities in each step of the cascade.

Objectives

Despite the potential of mHealth to overcome traditional paper-based system challenges, there is evidence suggesting that improvements in contact tracing outcomes using mHealth technologies remain insufficient and “largely unproven” [24,26-28]. To address the evidence gaps, this systematic review assessed technologies for tuberculosis, Ebola, HIV, and COVID-19. While our focus remains predominantly on tuberculosis, we included these additional diseases due to similarities in contact tracing methods, which widens the applicability of our findings. We aimed to synthesize information on the development, implementation, and evaluation of these technologies to better guide future work and enhancements. We hypothesized that synthesizing this existing information would reveal valuable insights into the continuum of mHealth apps and their benefits and limitations, thereby shaping improvements in future contact tracing efforts.

Methods

Literature Search

We conducted a systematic review of mHealth technologies used for contact tracing for selected infectious diseases and reported the results using the PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-Analyses) guidelines. We conducted an iterative search of all studies published in or translated into English from January 1, 1990, to December 31, 2023, in electronic databases, including PubMed (MEDLINE), Scopus, Web of Science, and Google Scholar (Multimedia Appendix 1). The year 1990 was chosen because it represents the earliest period during which the literature suggests that digital phones were introduced and available for use in health [29,30]. In addition, the 2023 cutoff allowed for the inclusion of technologies that may have been used during the COVID-19 pandemic. The following search terms were applied within the selected databases: tuberculosis, mHealth or mobile health, telehealth or telemedicine and case finding or contact tracing/investigation or tuberculosis screening or COVID-19 or Ebola or HIV. We used Medical Subject Heading (MeSH) terms to search in PubMed. We used subject headings and keywords in databases that do not use MeSH terms, such as Scopus, Web of Science, and Google Scholar. The search terms are presented in Multimedia Appendix 1. The Boolean operators “AND,” “OR,” and “NOT” were used to join words in all databases to improve the accuracy and relevance of the literature.
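As a rough illustration of how the Boolean operators combine the search terms, the following Python sketch joins synonyms with OR inside each concept group and joins the groups with AND. The grouping of terms into three concepts and the `build_query` helper are assumptions made for illustration only; the exact search strings used in each database are those given in Multimedia Appendix 1.

```python
def build_query(groups):
    """Join synonyms with OR inside each group and join the groups with AND."""
    clauses = []
    for terms in groups:
        # Quote multi-word phrases so they are searched as exact phrases.
        quoted = [f'"{term}"' if " " in term else term for term in terms]
        clauses.append("(" + " OR ".join(quoted) + ")")
    return " AND ".join(clauses)


# Term groups paraphrased from the text; this grouping is an assumption.
technology_terms = ["mHealth", "mobile health", "telehealth", "telemedicine"]
activity_terms = [
    "case finding",
    "contact tracing",
    "contact investigation",
    "tuberculosis screening",
]
disease_terms = ["tuberculosis", "COVID-19", "Ebola", "HIV"]

query = build_query([technology_terms, activity_terms, disease_terms])
print(query)
```

Database-specific syntax (for example, MeSH field tags in PubMed) would still need to be added by hand for each database.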

Inclusion and Exclusion Criteria

The following study inclusion criteria were applied: (1) studies conducted in Africa and published between 1990 and 2023, (2) studies that included an mHealth technology either as an intervention or as part of the procedures for contact tracing, and (3) studies in which the technology was used to screen for tuberculosis, COVID-19, Ebola, or HIV or to find and screen contacts of people infected with these diseases.

The following studies were excluded: (1) mHealth modeling studies; (2) mHealth protocols and proposals; and (3) mHealth systematic reviews, commentaries, and scoping reviews.
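The inclusion and exclusion criteria above can be read as a single screening rule. The sketch below expresses that rule in Python; the `Record` fields and the labels in `EXCLUDED_TYPES` are hypothetical names chosen for illustration and do not reflect the reviewers' actual screening tool.

```python
from dataclasses import dataclass

TARGET_DISEASES = {"tuberculosis", "COVID-19", "Ebola", "HIV"}
EXCLUDED_TYPES = {
    "modeling",
    "protocol",
    "proposal",
    "systematic review",
    "commentary",
    "scoping review",
}


@dataclass
class Record:
    # Hypothetical fields for illustration; not the authors' extraction form.
    conducted_in_africa: bool
    year: int
    uses_mhealth_for_tracing: bool
    disease: str
    study_type: str


def is_eligible(record: Record) -> bool:
    """Apply the exclusion criteria first, then all three inclusion criteria."""
    if record.study_type in EXCLUDED_TYPES:
        return False
    return (
        record.conducted_in_africa
        and 1990 <= record.year <= 2023
        and record.uses_mhealth_for_tracing
        and record.disease in TARGET_DISEASES
    )


example = Record(True, 2016, True, "tuberculosis", "cross-sectional")
print(is_eligible(example))
```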

Screening of the Literature, Extraction, and Analysis

The initial database search was conducted by DLM, and all references were uploaded to a reference management software library (EndNote version 20; Clarivate Analytics) for abstract and title screening. In total, 2 reviewers (DLM and HC) performed the initial review of all articles according to the inclusion and exclusion criteria. Where there was disagreement on the classification of a reference, a third reviewer (JN) conducted an additional review and confirmed the final classification.

A web-based data extraction tool was developed on Microsoft Office 365 Forms (Microsoft Corp; Multimedia Appendix 2). Extracted data elements included the study title, year of publication, country, location where the technology was implemented (community, facility, or both), study design, target disease, and type of technology used. The same form also contained sections to capture qualitative data about how the technologies were developed and their implementation processes, including challenges and the outcomes to measure effectiveness.

We summarized and synthesized the systematic review results using the Joanna Briggs Institute approach [31], and the data were presented in tables and narrative text. The themes used in the analysis were predetermined: development, implementation, and outcomes. The development theme described the steps taken in the development of the technologies. The implementation theme described how the technologies were deployed, what and how they collected contact tracing data, and challenges and successes. The final theme focused on the contact tracing outcomes measured when mHealth technologies were used.

Quality Assessments and Risk of Bias

The included studies were assessed for risk of bias using tools appropriate for each study type. The steps followed in assessing risk are detailed in Multimedia Appendix 3 [6,8,27,32-48]. The Cochrane Risk of Bias 2 tool was used for randomized clinical trials [49]. Technologies used in programmatic settings and pretest-posttest studies were assessed using the Quality Assessment Tool for Before-After (pretest-posttest) Studies With No Control Group developed by the US National Heart, Lung, and Blood Institute [50]. Finally, cross-sectional studies were assessed using the Quality Assessment Tool for Observational Cohort and Cross-Sectional Studies also from the National Heart, Lung, and Blood Institute [50].

Definitions

The World Health Organization broadly defines eHealth as “the use of information and communications technology (ICT) in support of health and health-related fields, including health care services, health surveillance, health literature, health education, knowledge and research” [51].

mHealth is a branch of eHealth that refers to mobile wireless technologies to support public health objectives [51,52]. mHealth technologies are mainly used on portable devices such as phones and tablets [52,53] and allow for the ubiquitous provision of services such as public health surveillance, sharing of clinical information, data collection, health behavior communication, and the use of mobile technologies to reach health goals [54].

“Programmatic pretest-posttest” describes the use of technologies in programmatic settings to deliver interventions to large populations targeting specific outputs and outcomes.

“In-house software platform” refers to bespoke technologies built without using existing platforms. For example, many public health projects build forms on platforms such as Open Data Kit (ODK), Epi Info, and REDCap (Research Electronic Data Capture; Vanderbilt University); in this review, technologies built in this way were not considered in-house software platforms.

“The effectiveness of an mHealth technology used in programmatic settings” is defined in the context of a project or program designed to implement an intervention with a target and without the use of research principles. Effectiveness in this sense is the achievement of a set target, such as the number of people screened using an mHealth technology.

“Effectiveness in a research project of an mHealth technology” is defined as the improvement in a contact tracing outcome between the periods before and after its introduction, such as the number of people diagnosed when screened using an mHealth technology.

Results

Literature Search

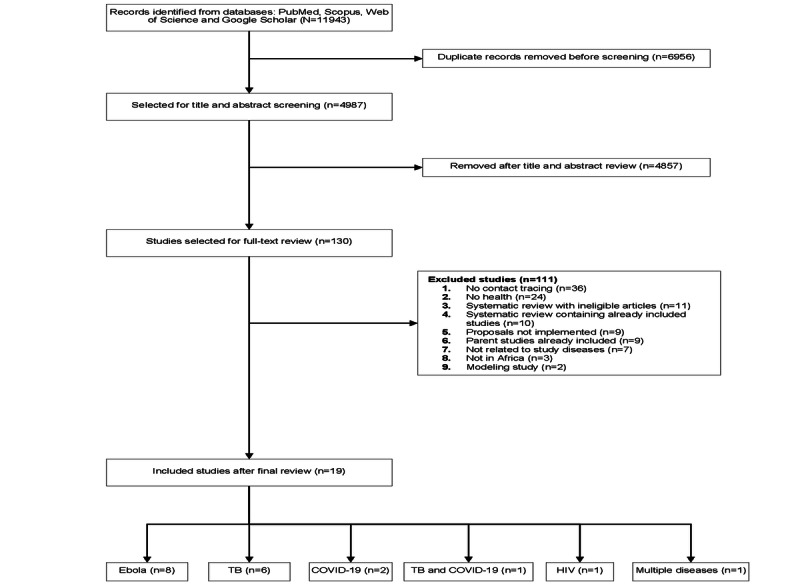

Figure 1 provides a PRISMA flowchart for the literature search and review process. The initial literature search yielded 11,943 articles. After removing 6956 (58.24%) duplicates, 4987 (41.76%) of the 11,943 articles remained for screening. A review of titles and abstracts led to the exclusion of 97.39% (4857/4987) of these articles, resulting in 130 articles being selected for full-text review. Only 19 studies were retained after the full-text review [6,8,27,32-47]. Of these 19 studies, 8 (42%) were on Ebola [32-39], 6 (32%) were on tuberculosis [6,27,40-43], 2 (11%) were on COVID-19 [44,45], 1 (5%) was on HIV [8], 1 (5%) was on tuberculosis and COVID-19 [46], and 1 (5%) was on multiple diseases in humans and animals [47]. No study needed translation.

Figure 1.

The PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-Analyses) flow diagram for the systematic review. TB: tuberculosis.
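The screening counts reported for the PRISMA flow can be cross-checked with simple arithmetic. The Python sketch below recomputes each stage from the reported numbers; the variable names are illustrative, and the count of articles excluded at full-text review is derived here by subtraction rather than quoted from the text.

```python
# Counts reported in the text for each stage of the PRISMA flow.
identified = 11_943
duplicates_removed = 6_956
excluded_on_title_abstract = 4_857
included = 19

# Counts derived by subtraction.
screened = identified - duplicates_removed
full_text_reviewed = screened - excluded_on_title_abstract
excluded_at_full_text = full_text_reviewed - included  # derived, not stated in the text


def pct(part: int, whole: int) -> float:
    """Percentage of `whole` represented by `part`, rounded to 2 decimals."""
    return round(100 * part / whole, 2)


print(f"Duplicates removed: {duplicates_removed} ({pct(duplicates_removed, identified)}%)")
print(f"Screened: {screened} ({pct(screened, identified)}%)")
print(f"Excluded on title/abstract: {pct(excluded_on_title_abstract, screened)}%")
print(f"Full-text reviewed: {full_text_reviewed}")
print(f"Included: {included} ({pct(included, identified)}% of identified)")
```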

Description of the Studies

Table 1 provides an overview of the key characteristics of the 19 studies that formed the basis of this systematic review. The studies covered a range of diseases, including Ebola, tuberculosis, COVID-19, HIV, and multiple diseases in humans and animals. In total, 74% (14/19) of the included studies were about technologies used for contact tracing of Ebola and tuberculosis. Ebola had the most studies with 42% (8/19), whereas tuberculosis had 32% (6/19). One other technology was used for both tuberculosis and COVID-19. The remaining 4 studies discussed technologies for the contact tracing of HIV (n=1, 25%), COVID-19 (n=2, 50%), and multiple diseases among humans and animals (n=1, 25%). Multimedia Appendix 4 [6,8,27,32-47,55-75] provides a detailed summary of each technology.

Table 1.

Description of all retained studies.

| Disease | Target users | Technology type | Study | Country | Study design | Location |
| --- | --- | --- | --- | --- | --- | --- |
| Ebola | Health workers | Smartphone app, GIS<sup>a</sup>, and BI<sup>b</sup> dashboard | Tom-Aba et al [34], 2015 | Nigeria | Programmatic pretest-posttest | Community |
| Ebola | Health workers | Smartphone app and BI dashboard | Sacks et al [32], 2015 | Guinea | Programmatic pretest-posttest | Community |
| Ebola | Contacts | SMS text messaging and phone calls | Jia and Mohamed [33], 2015 | Sierra Leone | Programmatic pretest-posttest | Community |
| Ebola | Health workers | Smartphone app | Adeoye et al [37], 2017 | Nigeria | Programmatic pretest-posttest | Community |
| Ebola | Contacts | Phone calls | Alpren et al [36], 2017 | Sierra Leone | Programmatic pretest-posttest | Community |
| Ebola | Health workers | Mobile tracking | Wolfe et al [35], 2017 | Liberia | Programmatic pretest-posttest | Community |
| Ebola | Health workers | Smartphone app | Danquah et al [38], 2019 | Sierra Leone | Cross-sectional | Community |
| Ebola | Health workers | Smartphone app | Whitesell et al [39], 2021 | DRC<sup>c</sup> | Programmatic pretest-posttest | Community |
| Tuberculosis | Health workers | Smartphone app and GIS | Chisunkha et al [6], 2016 | Malawi | Cross-sectional | Community |
| Tuberculosis | Health workers | Smartphone app | Ha et al [40], 2016 | Botswana | Pretest-posttest | Community |
| Tuberculosis | Contacts | USSD<sup>d</sup> | Diaz and Moturi [42], 2019 | Tanzania | Programmatic pretest-posttest | Community |
| Tuberculosis | Health workers | Smartphone app | Davis et al [27], 2019 | Uganda | Trials | Used or intended for use in both the facility and community |
| Tuberculosis | Health workers | Smartphone app | Szkwarko et al [41], 2021 | Kenya | Cross-sectional | Facility |
| Tuberculosis | Both | Smartphone app | URC<sup>e</sup> [43], 2020 | South Africa | Programmatic pretest-posttest | Used or intended for use in both the facility and community |
| COVID-19 | Contacts | Web application and USSD | Owoyemi et al [45], 2021 | Nigeria | Programmatic pretest-posttest | Community |
| COVID-19 | Contacts | Smartphone app | Mugenyi et al [44], 2021 | Uganda | Pretest-posttest | Community |
| Tuberculosis and COVID-19 | Contacts | USSD and WhatsApp | Praekelt.org [46], 2021 | South Africa | Programmatic pretest-posttest | Community |
| HIV | Health workers | Smartphone app | Rajput et al [8], 2012 | Kenya | Programmatic pretest-posttest | Community |
| Multiple diseases | Health workers | Smartphone app | Karimuribo et al [47], 2017 | Tanzania | Programmatic pretest-posttest | Community |

<sup>a</sup>GIS: geographic information system.

<sup>b</sup>BI: business intelligence.

<sup>c</sup>DRC: Democratic Republic of the Congo.

<sup>d</sup>USSD: unstructured supplementary service data.

<sup>e</sup>URC: University Research Co.
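The per-disease shares and the number of programmatic studies quoted in this section can be reproduced by tallying the rows of Table 1. The following Python sketch transcribes the disease and study design of each row and counts them; the `rows` list and variable names are illustrative and are not part of the authors' analysis.

```python
from collections import Counter

PROGRAMMATIC = "Programmatic pretest-posttest"

# (disease, study design) transcribed from the 19 rows of Table 1.
rows = (
    [("Ebola", PROGRAMMATIC)] * 7
    + [("Ebola", "Cross-sectional")]
    + [
        ("Tuberculosis", "Cross-sectional"),
        ("Tuberculosis", "Pretest-posttest"),
        ("Tuberculosis", PROGRAMMATIC),
        ("Tuberculosis", "Trials"),
        ("Tuberculosis", "Cross-sectional"),
        ("Tuberculosis", PROGRAMMATIC),
        ("COVID-19", PROGRAMMATIC),
        ("COVID-19", "Pretest-posttest"),
        ("Tuberculosis and COVID-19", PROGRAMMATIC),
        ("HIV", PROGRAMMATIC),
        ("Multiple diseases", PROGRAMMATIC),
    ]
)

by_disease = Counter(disease for disease, _ in rows)
programmatic = sum(1 for _, design in rows if design == PROGRAMMATIC)

total = len(rows)
for disease, n in by_disease.most_common():
    print(f"{disease}: {n}/{total} ({n / total:.0%})")
print(f"Programmatic pretest-posttest: {programmatic}/{total} ({programmatic / total:.0%})")
```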

The type of mHealth technology varied across the selected studies. In total, 26% (5/19) used communications platforms, with SMS text messaging and phone calls accounting for 11% (2/19) [33,36] and unstructured supplementary service data or WhatsApp accounting for 16% (3/19) [42,45,46]. Smartphone apps were the most common, appearing in 68% (13/19) of the studies [6,8,27,32,34,37-41,43,44,47]. Among these 13 smartphone apps, 3 (23%) incorporated geographic information systems [6,34] or a business intelligence dashboard [32,34]. Only 1 (5%) of the 19 studies used a mobile tracking system managed through cell phone tower technologies [35].

Most of the technologies (16/19, 84%) [6,8,32-40,42,44-47] were designed for use in the community during outreach visits, whereas 11% (2/19) were used in both the community and the health facility [27,43] and only 5% (1/19) were designed for use in a health facility alone [41]. Of all 19 technologies, 11 (58%) were designed for use by outreach workers to capture data during contact tracing [6,8,27,32,34,37-41,43,47], whereas 7 (37%) were designed for self-screening of disease symptoms by the contacts [33,35,36,42,44-46] and only 1 (5%) could be used by both patients and outreach workers [43]. In contact-targeted technologies, contacts interacted with the system by sending messages, responding to built-in prompts, and making or receiving phone calls for contact tracing, whereas outreach workers used the technologies to facilitate data collection. Only 5% (1/19) of the studies used a technology to facilitate linkage to care of household contacts, a distal outcome from contact tracing [27].

Regarding study designs, 68% (13/19) of the studies implemented technologies in programmatic settings and used pretest-posttest designs. In these cases, the technology served as an intervention aimed at achieving specific contact tracing or case finding objectives within broad population programs, often without the application of stringent research methodologies. The remaining 6 studies used various designs: 3 (50%) were cross-sectional studies, 2 (33%) were pretest-posttest studies, and 1 (17%) was a randomized trial.

The Uses of Technologies in the Included Studies

The uses of the technologies in the included studies are also presented in Table 1. As predetermined in the inclusion criteria, all technologies were designed to assist in contact tracing and infectious disease case finding. However, some were primarily designed for disease surveillance incorporating contact-tracing functionality [41,45,47,76]. The predominant contact tracing modality in the technologies involved identifying index patients, finding their contacts, and collecting data using mobile technologies instead of paper.

Other studies used unconventional contact tracing methods that did not necessarily require initial contact with index patients or arrangements with household contacts. For instance, in a study conducted in Malawi by Chisunkha et al [6], Google Earth, a publicly available software, was used to identify and visit households for recruitment into a chronic airway disease and tuberculosis trial even in the absence of previous knowledge of index patients. This approach involved the use of global positioning technology to locate households within a disease hot spot area on a map. Subsequently, data collectors were dispatched to these identified locations to recruit participants for the trial. While the system was not exclusively designed as a contact tracing project but rather as a tool to facilitate the location of participants within a study, the authors proposed that it could also serve contact tracing purposes in remote settings. This approach allowed for the swift identification of households before actual visits, thereby reducing costs and enhancing efficiency [6]. In Monrovia, Liberia, Wolfe et al [35] described an Ebola contact tracing system in which the government issued subpoenas to cell phone companies forcing them to provide locations of contacts that outreach workers could not locate. The authors reported that these subpoenas assisted in successfully locating 29 missing contacts [35]. In Sierra Leone, Alpren et al [36] described a repurposed call center, also for Ebola, in which the public acted as primary informants to authorities to report details and locations of known or suspected patients with Ebola (live alerts) and deaths (death alerts). The study showed >10,000 weekly alerts at the peak of the Ebola outbreak in October 2014. Cumulatively, between 2014 and 2016, the call center received 248,789 death alerts and 95,136 live alerts [36]. 
Finally, Mugenyi et al [44] developed and implemented the Wetaase app in a small pilot study to monitor the symptoms and movement of household members during the COVID-19 outbreak in Uganda. Unique to this technology was that household members downloaded the app and self-reported daily symptoms through it and, if symptomatic, were tested for COVID-19 by workers visiting the household. Only 101 participants were enrolled, and out of 8949 expected daily reports over 90 days, the app received 6617 reports, a use rate of 74%. Of these 6617 reports, only 57 (0.9%) contained self-reported COVID-19 symptoms, and no cases were diagnosed.

Development of mHealth Technologies for Contact Tracing

Type of Platform for Development

In total, 84% (16/19) of the technologies in the included studies were digital technologies developed to work on mobile phones. Of these 16 technologies, 11 (69%) were developed by customizing existing platforms such as ODK and CommCare, while 5 (31%) were developed as in-house software platforms. Of the 11 customized technologies, 5 (45%) were developed using ODK, 3 (27%) using CommCare [27,32,38], 2 (18%) using unstructured supplementary service data, and only 1 (9%) using KoboToolbox. The remaining 16% (3/19) of the technologies used existing cellular network infrastructure such as SMS text messaging and phone calls. Of these 3 technologies, 2 (67%) used phone calls, while 1 (33%) used cell phone tower technology.
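The platform breakdown can be checked for internal consistency by summing the reported subgroups. In the Python sketch below, the per-platform counts are taken from the text, while the in-house count is derived as the remainder of the 16 mobile phone technologies once the 11 customized ones are subtracted; the variable names are illustrative.

```python
total = 19

# Counts reported in the text.
customized = {"ODK": 5, "CommCare": 3, "USSD": 2, "KoboToolbox": 1}
cellular = {"phone calls": 2, "cell phone tower": 1}

n_customized = sum(customized.values())
n_cellular = sum(cellular.values())
n_mobile = total - n_cellular
n_in_house = n_mobile - n_customized  # derived as the remainder


def share(part: int, whole: int) -> int:
    """Whole-number percentage of `whole` represented by `part`."""
    return round(100 * part / whole)


print(f"Mobile phone technologies: {n_mobile}/{total} ({share(n_mobile, total)}%)")
print(f"Customized platforms: {n_customized}/{n_mobile} ({share(n_customized, n_mobile)}%)")
print(f"In-house platforms: {n_in_house}/{n_mobile} ({share(n_in_house, n_mobile)}%)")
for name, n in customized.items():
    print(f"  {name}: {n}/{n_customized} ({share(n, n_customized)}%)")
```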

Scope and Scale of Implementation

The scope and implementation scale of the technologies in the included studies varied depending on the intended use, that is, in programmatic or research settings. Technologies used in programmatic settings of contact tracing activities had a larger scope aimed at targeting large sections of the population to meet contact tracing targets of the program. Large-scope technologies in the selected studies included the Surveillance Outbreak Response Management and Analysis System (SORMAS) in Nigeria, the Academic Model Providing Access to Healthcare (AMPATH) in Kenya, Tanzania’s Tambua tuberculosis and Afyadata, tuberculosis HealthCheck in South Africa, and the Ebola contact tracing app in Guinea. For example, AMPATH in Kenya was developed to facilitate screening among >2 million individuals in 3 years [8]. Similarly, HealthCheck in South Africa was initially designed for population-wide self-screening of COVID-19 and expanded to tuberculosis at a national level [46]. In Tanzania, Afyadata, which veterinary specialists conceptualized, had a large scope for screening and reporting diseases in animals and humans [47,55]. Among humans, Afyadata has been used for finding diseases such as Ebola [47,77,78], COVID-19 [56,79], cholera [55,80,81], brucellosis [82,83], and an impetigo-like outbreak [47,55,84].

In contrast, technologies developed for research studies had a narrow scope, mainly built to demonstrate the value of mHealth in research settings or as research data collection tools, and had no evidence of uptake beyond the research. In Botswana, Ha et al [40] demonstrated that mHealth was better than paper by developing a mobile technology using ODK, but only 376 contacts were screened before the technology was apparently retired. Chisunkha et al [6] developed a mobile technology using ODK to enumerate households for a tuberculosis contact tracing trial in a small population in Malawi. We did not find any further information about the tenure of this mHealth technology after the enumeration of the households. In Kenya, Szkwarko et al [41] developed PPTBMAPP to facilitate tuberculosis screening of 276 children in a health facility and demonstrated its superiority over paper, but no further use is documented. In Uganda, a randomized trial used an mHealth technology developed using CommCare as a data collection and screening tool and then evaluated the effectiveness of SMS text messaging to complete tuberculosis evaluation within 2 weeks. This technology screened only 919 contacts in the intervention and control arms [27].

Funding for Developing mHealth Technologies

Large-scale technologies developed for programmatic settings often received substantial funding for extensive development and implementation processes. For instance, SORMAS received €850,000 (US $929,509) from the European Union [85], Afyadata obtained US $450,000 from the Skoll Global Threats Fund [55], and the CommCare app for HIV screening was supported by a US $74.9–million US Agency for International Development–AMPATH program [86]. Tambua tuberculosis received government backing through Tanzania’s National Tuberculosis and Leprosy Programme, which facilitated support and acceptance from end users [42].

In contrast, many research projects included in this review did not report their funding sources. This lack of reported funding highlights the resource disparities between technologies used in programmatic settings and research studies. Research projects often operated with limited financial resources, which impacted the scope and scale of their technology development processes. For example, in Botswana, Ha et al [40] evaluated a pragmatic mHealth technology for tuberculosis contact tracing. While the technology demonstrated promising outcomes compared to traditional paper-based methods, its application was limited to a pilot study across 7 urban and semiurban health facilities, and there is no record of its broader implementation, underscoring a challenge of limited scope in assessing the technology’s full potential and scalability [40].

Participatory Development Processes as an Indicator of Successful Implementation

In total, 21% (4/19) of the technologies, namely SORMAS [57,76], Afyadata [47,55], AMPATH [8], and Tambua tuberculosis [42], documented the use of design thinking frameworks in their development processes. Design thinking frameworks increase the likelihood of producing technologies that are fit for their intended purposes by using participatory processes to capture all the requirements, the outcomes the technology must deliver and how it must deliver them, and the context in which the technology will be used [57,58]. Design thinking also involves extensive consultation with relevant stakeholders such as public health experts, government policy makers, health workers, and information technologists.

For example, Afyadata was intended to be a surveillance technology for use among humans and animals to monitor common diseases in the 2 fields. Professionals from human and animal health, as well as software developers, extensively collaborated to elicit project needs and goals. Relevant government officials were also involved and endorsed the technology throughout all its development and implementation stages. As a result, Afyadata is now widely used for surveillance purposes in Tanzania and has been used in the surveillance of cholera cases, monitoring of hygiene and sanitation practices, early detection of Ebola in neighboring Democratic Republic of the Congo, and surveillance of diseases occurring among animals. Afyadata was also adopted for the screening of COVID-19 in neighboring Mozambique.

Similarly, Nigeria’s SORMAS followed a design thinking framework as a response to its precursor, the Sense Follow-up app. The Sense Follow-up app was an Android mobile technology rapidly developed in an emergency to support data collection during home visits in which contacts of index patients with Ebola were followed up for 21 days during the first Ebola outbreak in Nigeria in 2014 [34,87]. However, the development was not sufficiently consultative because of time constraints imposed by the quick response time required to control Ebola. It was later deemed to have failed to meet the needs of the Ebola control team, specifically that it did not sufficiently support the bidirectional exchange of information; did not address case finding [57]; and was complex to manage because of its modular architecture, with separate systems for data storage, functions, format, and interface [34,87]. The same team that developed the Sense Follow-up app then teamed up with philanthropists and public health and IT experts in designing SORMAS following a design thinking approach. The design thinking framework guided the SORMAS development team to identify system, user, and technical requirements and addressed all the shortcomings of the Sense Follow-up app. Although there is limited evidence of its evaluation, SORMAS is now a widely used technology for contact tracing of various diseases in Nigeria. In addition, because it is easily customizable, SORMAS was central to the response to COVID-19.

Similar to Afyadata and SORMAS, the technology for HIV case finding in the AMPATH program was developed with clear objectives and was able to screen thousands of people, as had been originally planned [8]. It was designed to be reliable in resource-constrained settings, scalable to allow for the screening of >2 million people in 3 years, open source, easy to integrate with other systems and devices, and GPS capable. Public health experts, software technologists, and government stakeholders contributed to successfully developing a technology to screen >1 million HIV contacts.

However, in Guinea, the Ebola contact tracing technology was developed using participatory processes and design thinking but lacked government policy maker buy-in, which led to suboptimal implementation, and most of the intended results were not achieved [32]. Tuberculosis HealthCheck and COVID-19 alert technology in South Africa did not have documented participatory development, but government support led to their nationwide rollout [46].

All technologies used in research studies were developed by research teams and did not have any documented use of design thinking frameworks or participatory development. These technologies were used to reach research objectives, and their tenure did not extend beyond the project. However, the research team in Uganda that implemented the trial by Davis et al [27] conducted a post hoc analysis of the trial using an implementation science framework, the Consolidated Framework for Implementation Research, to understand the reasons for the poor performance of the mHealth technology on their main outcome [59]. While not explicitly mentioning design thinking as a framework to follow, the researchers conceded that the lack of consultation with local stakeholders was a critical gap in development that may have deprived them of a robust outer setting, which is a critical indicator for successful implementation even in smaller research studies.

Effectiveness of the mHealth Technologies and Reporting of Outcomes

Table 2 provides an overview of outcome reporting and technology development in the included studies. Technologies used in programmatic settings with a pretest-posttest setup were designed to reach specific targets and mainly reported outputs without comparisons, whereas research studies designed to answer a specific question reported both outputs and outcomes. Hence, effectiveness in the 2 approaches was defined differently. In technologies used for programmatic settings, effectiveness was defined as rolling out the technology to a large population and producing output. In research studies, effectiveness was defined as improving outcomes.

Table 2. Outcomes and app development.

| Study title (design) | Output or outcomes | Development and evaluation |
| --- | --- | --- |
| “Home-based tuberculosis contact investigation in Uganda: a household randomised trial” [27] (randomized study) | | The app was developed by public health professionals with experience in implementing and evaluating mHealth^a technologies and by Dimagi CommCare specialists. |
| “Use of a mobile technology for Ebola contact tracing and monitoring in Northern Sierra Leone: a proof-of-concept study” [38] (cross-sectional study) | | Developed by an information technologist in the United States working with the study team in Sierra Leone. |
| “Implementation of digital technology solutions for a lung health trial in rural Malawi” [6] (cross-sectional study) | | No app development; Google Earth was used to identify households. |
| “Using a mobile technology to Improve paediatric presumptive Tuberculosis identification in western Kenya” [41] (cross-sectional study) | | The app was developed in Bangladesh and customized for use in this study. |
| “Evaluation of a Mobile Health Approach to Tuberculosis Contact Tracing in Botswana” [40] (pretest-posttest study) | | Researchers partnered with an IT firm to produce the contact tracing app. |
| “Feasibility of using a mobile app to monitor and report COVID-19-related symptoms and people’s movements in Uganda” [44] (pretest-posttest study) | | Developed by a technology designer in consultation with officials from the Ministry of Health. No further details of the development process are given. |
| “The 117-call alert system in Sierra Leone: from rapid Ebola notification to routine death reporting” [36] (programmatic pretest-posttest) | | No app development, and a call center was repurposed to report suspected Ebola cases. |
| “A Smartphone App (AfyaData) for Innovative One Health Disease Surveillance from Community to National Levels in Africa: Intervention in Disease Surveillance” [47] (programmatic pretest-posttest) | | Designed through a collaboration between public health and ICT^b specialists and government personnel. They developed a theory of change to guide development and implementation. |
| “Ebola virus disease contact tracing activities, lessons learned and best practices during the Duport Road outbreak in Monrovia, Liberia, November 2015” [35] (programmatic pretest-posttest) | | No app development. The program used subpoenas to cell phone companies to provide location details of suspected Ebola contacts. |
| “Introduction of Mobile Health Tools to Support Ebola Surveillance and Contact Tracing in Guinea” [32] (programmatic pretest-posttest) | | An IT team developed it in the United States with local public health specialists in Guinea, local UN^c partners, and the government. |
| “USAID/South Africa Tuberculosis South Africa Project (TBSAP) Midterm Evaluation Report” [43] (programmatic pretest-posttest) | | Not reported |
| “Development and implementation of the Ebola Exposure Window Calculator: A tool for Ebola virus disease outbreak field investigations” [39] (programmatic pretest-posttest) | | Not reported |
| “Innovative Technological Approach to Ebola Virus Disease Outbreak Response in Nigeria Using the Open Data Kit and Form Hub Technology” [34] (programmatic pretest-posttest) | | CDC^d, WHO^e, and DRC^f field teams and Johns Hopkins teams developed the technology, and the government endorsed its use. |
| “Evaluation of an Android-based mHealth system for population surveillance in developing countries” [8] (programmatic pretest-posttest) | | Members of the AMPATH^g project team developed the app in collaboration with staff from the Ministry of Health in Kenya. They had clear objectives for their scope and what they wanted the app to achieve. |
| “Evaluating the use of cell phone messaging for community Ebola syndromic surveillance in high-risk settings in Southern Sierra Leone” [33] (programmatic pretest-posttest) | | No app development |
| “Implementing Surveillance and Outbreak Response Management and Analysis System (SORMAS) for Public Health in West Africa- Lessons Learnt and Future Direction” [37] (programmatic pretest-posttest) | | Design thinking workshops were held in Nigeria and Germany to assess software requirements. Nigeria Centre for Disease Control and Prevention, Port Health, and other stakeholders were also involved in the design thinking methodology. Contact tracing requirements for other diseases, not just Ebola, were also considered in the design process. |
| “Using mHealth to self-screen and promote Tuberculosis awareness in Tanzania” [42] (programmatic pretest-posttest) | | Designed by the government and other implementors using the Ministry of Health mHealth platforms in a participatory process. |
| “TB HealthCheck puts tuberculosis self-screening in everyone’s hands ahead of World TB Day” [46] (programmatic pretest-posttest) | | Not clearly stated |
| “Mobile health approaches to disease surveillance in Africa; Wellvis COVID triage tool” [45] (programmatic pretest-posttest) | | The development process is not described in detail. |

^a mHealth: mobile health.

^b ICT: information and communications technology.

^c UN: United Nations.

^d CDC: Centers for Disease Control and Prevention.

^e WHO: World Health Organization.

^f DRC: Democratic Republic of the Congo.

^g AMPATH: Academic Model Providing Access to Healthcare.

Of all the included studies, only the trial from Uganda randomly assigned participants to different groups, allowing an objective comparison of outcomes. The primary outcome of the trial was completion of tuberculosis evaluation by contacts within 14 days, and the secondary outcomes were treatment initiation and linkage of patients to care [27]. Contacts were assigned at the household level to either the control arm or the SMS text messaging–facilitated intervention arm. The trial showed that the mHealth technology had no effect on the primary outcome.

In addition, although they did not randomize participants or calculate sample size as in the randomized trial, the cross-sectional surveys and pretest-posttest studies included in this systematic review attempted to evaluate the performance of the mHealth technologies by reporting outcomes such as proportions of contacts diagnosed, and some compared performance between groups. For example, a study in Sierra Leone used a convenience sample to compare the number of Ebola contacts identified and traced using the Ebola contact tracing app with the number identified and traced using a paper-based data collection system [38]. The researchers also compared completion, the proportion of cases detected, and implementation feasibility between the 2 arms. Similarly, the pretest-posttest study by Ha et al [40] compared outcomes, expressed as proportions, before and after implementation of a contact tracing app with those of a paper-based system, including the number of contacts screened, the time to complete an evaluation, and data quality. Other cross-sectional studies reported outcomes in only one convenience sample [6,41], and one pretest-posttest study reported only outputs [44].

Programmatic setting studies predominantly reported immediate outputs or implementation experiences of the mHealth technologies. SORMAS, AMPATH, and Afyadata, which were the largest and most comprehensively developed technologies, reported only absolute numbers of people screened and implementation experiences rather than outcomes that could be used to determine the performance of the mHealth technology. In addition, Tambua tuberculosis in Tanzania reported immediate outputs on tuberculosis screening but was not evaluated for implementation outcomes. In South Africa, ConnecTB and tuberculosis HealthCheck, both used to facilitate tuberculosis screening, did not provide sufficient details on the outputs or evaluation of the technologies. Nevertheless, these technologies in programmatic settings reached large populations for contact tracing.

Discussion

Principal Findings

We reviewed the continuum of mHealth technologies used for contact tracing and case finding. We synthesized this information to improve understanding of mHealth’s value in contact tracing and inform how future projects can develop or implement it efficiently to improve contact tracing or implementation outcomes. Only 19 studies met the criteria and were included in the review. The technologies were developed by either customizing existing platforms or creating original software. Some technologies used to trace contacts did not require software development but used existing cellular network infrastructure. Technologies developed using design thinking frameworks with participatory activities had a higher likelihood of implementation fidelity. However, the effectiveness of these technologies was not sufficiently evaluated, and the outcomes of most technologies were only reported in small research studies.

Our search yielded a greater number of articles on studies using mHealth technologies for contact tracing than those obtained in previous reviews, likely reflecting the growing adoption of such technologies during the search period. In addition, our iterative search strategy, which featured relaxed criteria and encompassed a variety of infectious diseases, may have played a significant role in identifying this increased volume of articles. The review has also presented the entire pathway of using these technologies from the development stage until deployment and evaluation of outcomes. Our findings also confirm that mHealth outperforms traditional paper-based contact tracing regarding the timeliness of screening, data accuracy, and streamlining of the screening process. However, little evidence exists of mHealth’s impact or incremental value on contact tracing outcomes compared to paper because of underreporting. Despite this challenge, our study underscores the potential of using validated design frameworks during the developmental phase, which is likely to enhance the overall effectiveness of such solutions. Future public health projects that intend to develop and implement mHealth technologies can consider these insights as a guide into some of the prerequisites for implementing useful technologies that can meaningfully improve outcomes.

Our systematic review suggests that a more systematic approach to development using design thinking frameworks with participatory development, including buy-in from policy makers, could help technologies achieve the intended case finding outputs and outcomes when compared to an approach that does not use these frameworks. For example, SORMAS was developed in Nigeria following design thinking and participatory processes involving public health experts, information technologists, and government officials. SORMAS was developed in response to an earlier mHealth app, Sense Follow-up, which had failed at the implementation stage because poor development left it unable to meet user requirements. Similarly, Afyadata in Tanzania and AMPATH in Kenya both involved extensive consultative processes, increasing their outputs. In contrast, even a collaboratively built technology may fail at implementation without government backing. For example, in Guinea, Sacks et al [32] reported poor outputs from the Ebola contact tracing program, partly due to the lack of government officials’ commitment to supporting the program. In South Africa, ConnecTB did not document any design thinking frameworks or participatory development and was discontinued due to technical challenges despite having the policy makers’ support. None of the research studies documented the use of a design thinking framework, which may have affected their results, most of which were suboptimal, with the technology failing to show an impact on case finding. A post hoc analysis of the trial by Davis et al [27] also recommended using design thinking frameworks even in research studies to ensure that they are impactful [59].

In addition to using established frameworks in the development stage, financial resources were a significant factor in determining the extent of development and implementation. For example, among the technologies used in programmatic settings, SORMAS received an initial funding of €850,000 (US $929,509) from the European Union [85], Afyadata received US $450,000 from the Skoll Global Threats Fund [55], and the CommCare app for HIV screening was developed under a US $74.9–million US Agency for International Development–AMPATH program [86]. In addition, Tambua tuberculosis was government backed through Tanzania’s National Tuberculosis and Leprosy Programme [42]. Government involvement also assisted in obtaining buy-in from the ultimate end users of the technologies. In contrast, most research studies included in this review did not have the financial resources to execute the extensive development processes required for a technology to succeed. This creates a dilemma: well-funded programs tend to develop and implement large and sustainable technologies but often overlook comprehensive evaluations and, hence, fail to meaningfully inform future work, whereas research studies, despite restricted resources, tend to use more robust evaluation methods but undergo a suboptimal development process due to limited funding. For example, in Botswana, Ha et al [40] evaluated a pragmatic mHealth technology for tuberculosis contact tracing, but it was only implemented in a few settings, possibly due to limited funding, and the researchers could not conduct a large study to fully evaluate the program. Therefore, nesting evaluations in large programs as implementation research processes may be a way to improve evidence of mHealth technologies’ utility for contact tracing.

The primary focus of contact tracing programs is on improving case finding. However, some studies also expect improved case finding to translate into better outcomes further down the tuberculosis care and treatment cascade, such as treatment initiation and completion [88,89]. On the basis of current evidence, it can be argued that, unless they were planned for and incorporated in the design process, outcomes further down the cascade are inappropriate measures of the success of a contact tracing program and of the effectiveness of mHealth technologies. Only 1 study in this review, a randomized trial, reported treatment initiation further down the contact tracing cascade [24,27], and the rest focused on outputs immediately after screening, such as numbers screened and testing positive. When using mHealth, immediate implementation outcomes such as technology development, implementation fidelity, feasibility, and acceptability could more appropriately measure effectiveness. This is because mHealth technologies can only measure what they are designed for. For instance, all technologies used in programmatic setting studies were designed to find and screen contacts but did not have modules developed for linkage to care, which would have allowed outcomes further down the cascade to be measured. Although this narrow focus on immediate outputs may limit the tenure of the technologies because they cannot demonstrate any value for the desired contact tracing outcomes, these outcomes are not determined only by finding the people with the disease. Instead, additional steps are required to optimize care linkage and treatment adherence. Small research studies with a limited scope cannot include all the steps, from finding contacts to linking them to care and showing a reduction in disease incidence, but without these steps, the technologies may be too expensive and less likely to be adopted. Therefore, as discussed in the previous section, a participatory developmental process must be used to include such capabilities for mHealth and, where possible, develop the technology within a large well-funded program.

A cost analysis of the mHealth technology for contact tracing in Uganda found that this technology was underused because of its limited scope despite substantial investment in the development stages and initial implementation [90]. The authors suggested expanding the scope by increasing the volume of contacts served or expanding the use of the technology to the later stages of the contact tracing cascade. In this review, most technologies used to support Ebola contact tracing became defunct after the outbreak subsided and, thus, ultimately cost health programs because they were not used long enough to absorb or justify the costs of their development. Only SORMAS, which had an expanded scope beyond a single disease and activities beyond tracing, survived and continues to receive funding. Afyadata in Tanzania also has a large scope of contact tracing and surveillance activities in humans and animals and continues to be valuable to both health systems. Therefore, health programs should consider using technologies for contact tracing and case finding more broadly to capitalize on synergies and bring down costs to make them more sustainable. Expanding their scope to include multiple diseases or higher volumes of patients, or expanding the use of mHealth to all stages of the cascade, would increase the likelihood of the interventions’ sustainability. Investment cases for future mHealth technologies may be supported if there is evidence of their cost-effectiveness, and this may be achieved by exploiting economies of scale and scope. Health economics studies and models have also shown that unit costs of interventions may decrease and be optimized for cost-effectiveness by implementing at the appropriate scale, within the right scope [91,92], and without compromising quality by exceeding these bounds [93].
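The economies-of-scale argument can be illustrated with a simple average-cost expression. This is only an illustrative sketch and is not a model taken from the cited health economics studies; the symbols $F$ (fixed development and setup cost), $v$ (variable cost per contact served), and $n$ (number of contacts served) are assumptions introduced here for illustration.

```latex
% Illustrative average (unit) cost per contact served.
% F, v, and n are hypothetical symbols, not quantities reported in the review.
\[
  c(n) \;=\; \frac{F + v\,n}{n} \;=\; \frac{F}{n} + v ,
  \qquad
  \frac{dc}{dn} \;=\; -\frac{F}{n^{2}} \;<\; 0 \quad \text{for } F > 0 .
\]
% If one platform serves k diseases with volumes n_1, ..., n_k,
% the fixed cost is shared across all of them (economies of scope):
\[
  c(n_1,\dots,n_k) \;=\; \frac{F}{\sum_{i=1}^{k} n_i} + v .
\]
```

Under these assumptions, the unit cost falls toward $v$ as volume grows or as additional diseases share the same platform, which is consistent with the observation that a technology retired after a single outbreak has little volume over which to spread its development cost. The sketch ignores the quality constraints at very large scale noted above.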

Limitations and Strengths of the Study

The primary limitation of our study stems from the context in which most of the reviewed mHealth technologies were deployed. Predominantly used within programmatic settings rather than through rigorous formal research, these technologies often lacked comprehensive outcome evaluations. Consequently, without these evaluations, our review faces challenges in definitively assessing the impact of mHealth technologies on enhancing contact tracing efforts. Despite this, the deployment of these technologies in well-funded programmatic settings offers valuable insights into the foundational requirements for successful contact tracing technologies, demonstrating how strategic funding can facilitate robust development and implementation.

In addition, our review faces limitations due to the unavailability of some key information, such as tenure of technology use beyond the initial studies, especially for technologies evaluated in research settings. The scarcity of detailed data in both primary literature and supplementary documents makes it difficult to assess whether the technologies were continued or discontinued after the research. This gap underscores the vital need for comprehensive documentation and rigorous reporting standards in the mHealth domain.

Recommendations and Conclusions

The essential ingredients for developing functional and impactful mHealth technologies, whether for contact tracing or other health care interventions, include using a participatory design thinking framework, securing adequate funding, and establishing a clear plan for evaluating the implementation outcomes of the technology.

Many projects, especially research projects, have limited funds to fully develop and implement their technologies. In such cases, an alternative approach is to integrate the development of mHealth technologies within larger programmatic projects. This integration can provide the necessary resources and infrastructure to sufficiently develop and efficiently evaluate the technology. However, such ideal scenarios may not always be feasible, in which case the use of participatory design thinking frameworks becomes a prerequisite for developing an effective mHealth technology. This approach emphasizes collaboration with end users, stakeholders, and experts to ensure that the technology aligns with user needs, is inclusive, and considers the context-specific requirements.

Abbreviations

- AMPATH: Academic Model Providing Access to Healthcare

- MeSH: Medical Subject Heading

- mHealth: mobile health

- ODK: Open Data Kit

- PRISMA: Preferred Reporting Items for Systematic Reviews and Meta-Analyses

- REDCap: Research Electronic Data Capture

- SORMAS: Surveillance Outbreak Response Management and Analysis System

Search terms.

Systematic review data extraction tool.

Quality assessment of the included studies.

Detailed explanation of each article in the systematic review.

PRISMA checklist.

Footnotes

Authors' Contributions: The conception of this work was presented by SC and KV as part of DLM’s PhD studies. Manuscript reviews were diligently conducted by HC and JN. JLD provided invaluable senior scientific guidance based on his extensive experience in contact tracing. TN collaborated with DLM to structure the systematic review chapters and review the written content drawing insights from their involvement in the Community and Universal Testing for tuberculosis project—a contact tracing cluster randomized trial—in which both are researchers dedicated to the development of a mobile health technology to support tuberculosis contact tracing.

Conflicts of Interest: None declared.

References

- 1. Asadzadeh A, Kalankesh LR. A scope of mobile health solutions in COVID-19 pandemics. Inform Med Unlocked. 2021;23:100558. doi: 10.1016/j.imu.2021.100558. https://linkinghub.elsevier.com/retrieve/pii/S2352-9148(21)00048-4

- 2. Tilahun B, Gashu KD, Mekonnen ZA, Endehabtu BF, Angaw DA. Mapping the role of digital health technologies in prevention and control of COVID-19 pandemic: review of the literature. Yearb Med Inform. 2021 Aug;30(1):26–37. doi: 10.1055/s-0041-1726505. http://www.thieme-connect.com/DOI/DOI?10.1055/s-0041-1726505

- 3. Brinkel J, Krämer A, Krumkamp R, May J, Fobil J. Mobile phone-based mHealth approaches for public health surveillance in sub-Saharan Africa: a systematic review. Int J Environ Res Public Health. 2014 Nov 12;11(11):11559–82. doi: 10.3390/ijerph111111559. https://www.mdpi.com/resolver?pii=ijerph111111559

- 4. Falzon D, Raviglione M, Bel EH, Gratziou C, Bettcher D, Migliori GB. The role of eHealth and mHealth in tuberculosis and tobacco control: a WHO/ERS consultation. Eur Respir J. 2015 Aug;46(2):307–11. doi: 10.1183/09031936.00043315. https://air.unimi.it/handle/2434/817577

- 5. Falzon D, Timimi H, Kurosinski P, Migliori GB, Van Gemert W, Denkinger C, Isaacs C, Story A, Garfein RS, do Valle Bastos LG, Yassin MA, Rusovich V, Skrahina A, Van Hoi L, Broger T, Abubakar I, Hayward A, Thomas BV, Temesgen Z, Quraishi S, von Delft D, Jaramillo E, Weyer K, Raviglione MC. Digital health for the End TB Strategy: developing priority products and making them work. Eur Respir J. 2016 Jul;48(1):29–45. doi: 10.1183/13993003.00424-2016. https://air.unimi.it/handle/2434/627461

- 6. Chisunkha B, Banda H, Thomson R, Squire SB, Mortimer K. Implementation of digital technology solutions for a lung health trial in rural Malawi. Eur Respir J. 2016 Jun;47(6):1876–9. doi: 10.1183/13993003.00045-2016. http://erj.ersjournals.com/cgi/pmidlookup?view=long&pmid=27076597

- 7. Babirye D, Shete PB, Farr K, Nalugwa T, Ojok C, Nantale M, Oyuku D, Ayakaka I, Katamba A, Davis JL, Nadunga D, Joloba M, Moore D, Cattamanchi A. Feasibility of a short message service (SMS) intervention to deliver tuberculosis testing results in peri-urban and rural Uganda. J Clin Tuberc Other Mycobact Dis. 2019 Aug;16:100110. doi: 10.1016/j.jctube.2019.100110. https://linkinghub.elsevier.com/retrieve/pii/S2405-5794(19)30018-X

- 8. Rajput ZA, Mbugua S, Amadi D, Chepngeno V, Saleem JJ, Anokwa Y, Hartung C, Borriello G, Mamlin BW, Ndege SK, Were MC. Evaluation of an android-based mHealth system for population surveillance in developing countries. J Am Med Inform Assoc. 2012;19(4):655–9. doi: 10.1136/amiajnl-2011-000476. https://europepmc.org/abstract/MED/22366295

- 9. Cherutich P, Golden M, Betz B, Wamuti B, Ng'ang'a A, Maingi P, Macharia P, Sambai B, Abuna F, Bukusi D, Dunbar M, Farquhar C. Surveillance of HIV assisted partner services using routine health information systems in Kenya. BMC Med Inform Decis Mak. 2016 Jul 20;16:97. doi: 10.1186/s12911-016-0337-9. https://bmcmedinformdecismak.biomedcentral.com/articles/10.1186/s12911-016-0337-9

- 10. Coleman J, Bohlin KC, Thorson A, Black V, Mechael P, Mangxaba J, Eriksen J. Effectiveness of an SMS-based maternal mHealth intervention to improve clinical outcomes of HIV-positive pregnant women. AIDS Care. 2017 Jul;29(7):890–7. doi: 10.1080/09540121.2017.1280126.

- 11. Abdulrahman S, Ganasegeran K. Chapter 11 - m-Health in public health practice: a constellation of current evidence. In: Jude HD, Balas VE, editors. Telemedicine Technologies: Big Data, Deep Learning, Robotics, Mobile and Remote Applications for Global Healthcare. Cambridge, MA: Academic Press; 2019. pp. 171–82.

- 12. Fox GJ, Barry SE, Britton WJ, Marks GB. Contact investigation for tuberculosis: a systematic review and meta-analysis. Eur Respir J. 2013 Jan;41(1):140–56. doi: 10.1183/09031936.00070812. http://erj.ersjournals.com/cgi/pmidlookup?view=long&pmid=22936710

- 13. Baxter S, Goyder E, Chambers D, Johnson M, Preston L, Booth A. Interventions to improve contact tracing for tuberculosis in specific groups and in wider populations: an evidence synthesis. Health Serv Deliv Res. 2017 Jan;5(1):1–102. doi: 10.3310/hsdr05010.

- 14. Velen K, Shingde RV, Ho J, Fox GJ. The effectiveness of contact investigation among contacts of tuberculosis patients: a systematic review and meta-analysis. Eur Respir J. 2021 Dec;58(6):2100266. doi: 10.1183/13993003.00266-2021. http://erj.ersjournals.com/cgi/pmidlookup?view=long&pmid=34016621

- 15. Contact tracing during an outbreak of Ebola virus disease. World Health Organization Regional Office for Africa. 2014. [2024-07-26]. https://www.icao.int/safety/CAPSCA/PublishingImages/Pages/Ebola/contact-tracing-during-outbreak-of-ebola.pdf

- 16. Recommendations for investigating contacts of persons with infectious tuberculosis in low- and middle-income countries. World Health Organization. 2012 Apr 11. [2024-07-26]. https://www.who.int/publications/i/item/9789241504492

- 17. Breuninger M, van Ginneken B, Philipsen RH, Mhimbira F, Hella JJ, Lwilla F, van den Hombergh J, Ross A, Jugheli L, Wagner D, Reither K. Diagnostic accuracy of computer-aided detection of pulmonary tuberculosis in chest radiographs: a validation study from sub-Saharan Africa. PLoS One. 2014 Sep 5;9(9):e106381. doi: 10.1371/journal.pone.0106381. https://dx.plos.org/10.1371/journal.pone.0106381

- 18. Murphy K, Habib SS, Zaidi SM, Khowaja S, Khan A, Melendez J, Scholten ET, Amad F, Schalekamp S, Verhagen M, Philipsen RH, Meijers A, van Ginneken B. Computer aided detection of tuberculosis on chest radiographs: an evaluation of the CAD4TB v6 system. Sci Rep. 2020 Mar 26;10(1):5492. doi: 10.1038/s41598-020-62148-y.

- 19. Cox H, Workman L, Bateman L, Franckling-Smith Z, Prins M, Luiz J, Van Heerden J, Ah Tow Edries L, Africa S, Allen V, Baard C, Zemanay W, Nicol MP, Zar HJ. Oral swab specimens tested with Xpert MTB/RIF ultra assay for diagnosis of pulmonary tuberculosis in children: a diagnostic accuracy study. Clin Infect Dis. 2022 Dec 19;75(12):2145–52. doi: 10.1093/cid/ciac332. https://europepmc.org/abstract/MED/35579497

- 20. Kang YA, Koo B, Kim OH, Park JH, Kim HC, Lee HJ, Kim MG, Jang Y, Kim NH, Koo YS, Shin Y, Lee SW, Kim SH. Gene-based diagnosis of tuberculosis from oral swabs with a new generation pathogen enrichment technique. Microbiol Spectr. 2022 Jun 29;10(3):e0020722. doi: 10.1128/spectrum.00207-22. https://journals.asm.org/doi/10.1128/spectrum.00207-22?url_ver=Z39.88-2003&rfr_id=ori:rid:crossref.org&rfr_dat=cr_pub0pubmed

- 21. National guidelines on the treatment of tuberculosis infection. National Department of Health, Republic of South Africa. 2023. [2024-04-19]. https://knowledgehub.health.gov.za/elibrary/national-guidelines-treatment-tuberculosis-infection

- 22. Thind D, Charalambous S, Tongman A, Churchyard G, Grant AD. An evaluation of 'Ribolola': a household tuberculosis contact tracing programme in North West Province, South Africa. Int J Tuberc Lung Dis. 2012 Dec;16(12):1643–8. doi: 10.5588/ijtld.12.0074.

- 23. Deery CB, Hanrahan CF, Selibas K, Bassett J, Sanne I, Van Rie A. A home tracing program for contacts of people with tuberculosis or HIV and patients lost to care. Int J Tuberc Lung Dis. 2014 May;18(5):534–40. doi: 10.5588/ijtld.13.0587. https://europepmc.org/abstract/MED/24903789

- 24. Armstrong-Hough M, Turimumahoro P, Meyer AJ, Ochom E, Babirye D, Ayakaka I, Mark D, Ggita J, Cattamanchi A, Dowdy D, Mugabe F, Fair E, Haberer JE, Katamba A, Davis JL. Drop-out from the tuberculosis contact investigation cascade in a routine public health setting in urban Uganda: a prospective, multi-center study. PLoS One. 2017 Nov 6;12(11):e0187145. doi: 10.1371/journal.pone.0187145. https://dx.plos.org/10.1371/journal.pone.0187145

- 25. Chetty-Makkan CM, deSanto D, Lessells R, Charalambous S, Velen K, Makgopa S, Gumede D, Fielding K, Grant AD. Exploring the promise and reality of ward-based primary healthcare outreach teams conducting TB household contact tracing in three districts of South Africa. PLoS One. 2021 Aug 13;16(8):e0256033. doi: 10.1371/journal.pone.0256033. https://dx.plos.org/10.1371/journal.pone.0256033

- 26. Meyer AJ, Babirye D, Armstrong-Hough M, Mark D, Ayakaka I, Katamba A, Haberer JE, Davis JL. Text messages sent to household tuberculosis contacts in Kampala, Uganda: process evaluation. JMIR Mhealth Uhealth. 2018 Nov 20;6(11):e10239. doi: 10.2196/10239. https://mhealth.jmir.org/2018/11/e10239/

- 27.Davis JL, Turimumahoro P, Meyer AJ, Ayakaka I, Ochom E, Ggita J, Mark D, Babirye D, Okello DA, Mugabe F, Fair E, Vittinghoff E, Armstrong-Hough M, Dowdy D, Cattamanchi A, Haberer JE, Katamba A. Home-based tuberculosis contact investigation in Uganda: a household randomised trial. ERJ Open Res. 2019 Jul;5(3):00112-2019. doi: 10.1183/23120541.00112-2019. https://europepmc.org/abstract/MED/31367636 .00112-2019 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28.Anglemyer A, Moore TH, Parker L, Chambers T, Grady A, Chiu K, Parry M, Wilczynska M, Flemyng E, Bero L. Digital contact tracing technologies in epidemics: a rapid review. Cochrane Database Syst Rev. 2020 Aug 18;8(8):CD013699. doi: 10.1002/14651858.CD013699. https://europepmc.org/abstract/MED/33502000 . [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29.Skiba DJ. Intellectual property issues in the digital health care world. Nurs Adm Q. 1997;21(3):11–20. [PubMed] [Google Scholar]

- 30.Fiordelli M, Diviani N, Schulz PJ. Mapping mHealth research: a decade of evolution. J Med Internet Res. 2013 May 21;15(5):e95. doi: 10.2196/jmir.2430. https://www.jmir.org/2013/5/e95/ v15i5e95 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 31.Santos WM, Secoli SR, Püschel VA. The Joanna Briggs Institute approach for systematic reviews. Rev Lat Am Enfermagem. 2018 Nov 14;26:e3074. doi: 10.1590/1518-8345.2885.3074. https://www.scielo.br/scielo.php?script=sci_arttext&pid=S0104-11692018000100701&lng=en&nrm=iso&tlng=en .S0104-11692018000100701 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 32.Sacks JA, Zehe E, Redick C, Bah A, Cowger K, Camara M, Diallo A, Gigo AN, Dhillon RS, Liu A. Introduction of mobile health tools to support Ebola surveillance and contact tracing in Guinea. Glob Health Sci Pract. 2015 Nov 12;3(4):646–59. doi: 10.9745/GHSP-D-15-00207. http://www.ghspjournal.org/lookup/pmidlookup?view=long&pmid=26681710 .GHSP-D-15-00207 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33.Jia K, Mohamed K. Evaluating the use of cell phone messaging for community Ebola syndromic surveillance in high risked settings in Southern Sierra Leone. Afr Health Sci. 2015 Sep;15(3):797–802. doi: 10.4314/ahs.v15i3.13. https://europepmc.org/abstract/MED/26957967 .jAFHS.v15.i3.pg797 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 34.Tom-Aba D, Olaleye A, Olayinka AT, Nguku P, Waziri N, Adewuyi P, Adeoye O, Oladele S, Adeseye A, Oguntimehin O, Shuaib F. Innovative technological approach to Ebola virus disease outbreak response in Nigeria using the open data kit and form hub technology. PLoS One. 2015 Jun 26;10(6):e0131000. doi: 10.1371/journal.pone.0131000. https://dx.plos.org/10.1371/journal.pone.0131000 .PONE-D-14-53622 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35.Wolfe CM, Hamblion EL, Schulte J, Williams P, Koryon A, Enders J, Sanor V, Wapoe Y, Kwayon D, Blackley DJ, Laney AS, Weston EJ, Dokubo EK, Davies-Wayne G, Wendland A, Daw VT, Badini M, Clement P, Mahmoud N, Williams D, Gasasira A, Nyenswah TG, Fallah M. Ebola virus disease contact tracing activities, lessons learned and best practices during the Duport Road outbreak in Monrovia, Liberia, November 2015. PLoS Negl Trop Dis. 2017 Jun;11(6):e0005597. doi: 10.1371/journal.pntd.0005597. https://dx.plos.org/10.1371/journal.pntd.0005597 .PNTD-D-16-02179 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Alpren C, Jalloh MF, Kaiser R, Diop M, Kargbo S, Castle E, Dafae F, Hersey S, Redd JT, Jambai A. The 117 call alert system in Sierra Leone: from rapid Ebola notification to routine death reporting. BMJ Glob Health. 2017;2(3):e000392. doi: 10.1136/bmjgh-2017-000392. https://gh.bmj.com/lookup/pmidlookup?view=long&pmid=28948044 .bmjgh-2017-000392 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37.Adeoye OO, Tom-Aba D, Ameh CA, Ojo OE, Ilori EA, Gidado SO, Waziri EN, Nguku PM, Mall S, Denecke K, Lamshoeft M, Schwarz NG, Krause G, Poggensee G. Implementing surveillance and outbreak response management and analysis system (SORMAS) for public health in west Africa: lessons learnt and future direction. Intl J Tropical Dis Health. 2017 Jan 10;22(2):1–17. doi: 10.9734/IJTDH/2017/31584. [DOI] [Google Scholar]

- 38.Danquah LO, Hasham N, MacFarlane M, Conteh FE, Momoh F, Tedesco AA, Jambai A, Ross DA, Weiss HA. Use of a mobile application for Ebola contact tracing and monitoring in northern Sierra Leone: a proof-of-concept study. BMC Infect Dis. 2019 Sep 18;19(1):810. doi: 10.1186/s12879-019-4354-z. https://bmcinfectdis.biomedcentral.com/articles/10.1186/s12879-019-4354-z .10.1186/s12879-019-4354-z [DOI] [PMC free article] [PubMed] [Google Scholar]

- 39.Whitesell A, Bustamante ND, Stewart M, Freeman J, Dismer AM, Alarcon W, Kofman A, Ben Hamida A, Nichol ST, Damon I, Haberling DL, Keita M, Mbuyi G, Armstrong G, Juang D, Dana J, Choi MJ. Development and implementation of the Ebola exposure window calculator: a tool for Ebola virus disease outbreak field investigations. PLoS One. 2021 Aug 5;16(8):e0255631. doi: 10.1371/journal.pone.0255631. https://dx.plos.org/10.1371/journal.pone.0255631 .PONE-D-21-09987 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 40.Ha YP, Tesfalul MA, Littman-Quinn R, Antwi C, Green RS, Mapila TO, Bellamy SL, Ncube RT, Mugisha K, Ho-Foster AR, Luberti AA, Holmes JH, Steenhoff AP, Kovarik CL. Evaluation of a mobile health approach to tuberculosis contact tracing in Botswana. J Health Commun. 2016 Oct 26;21(10):1115–21. doi: 10.1080/10810730.2016.1222035. https://europepmc.org/abstract/MED/27668973 . [DOI] [PMC free article] [PubMed] [Google Scholar]

- 41.Szkwarko D, Amisi JA, Peterson D, Burudi S, Angala P, Carter EJ. Using a mobile application to improve pediatric presumptive TB identification in western Kenya. Int J Tuberc Lung Dis. 2021 Jun 01;25(6):468–74. doi: 10.5588/ijtld.20.0890. [DOI] [PubMed] [Google Scholar]

- 42.Diaz N, Moturi E. Using mHealth to self-screen and promote TB awareness in Tanzania. Challenge TB. 2019. [2024-07-26]. https://www.challengetb.org/publications/tools/briefs/CTB_Tanzania_mHealth.pdf .

- 43.USAID/South Africa: Tuberculosis South Africa Project (TBSAP) midterm evaluation report. United States Agency for International Development. 2020. Oct 15, [2024-07-26]. https://pdf.usaid.gov/pdf_docs/PA00XB1Q.pdf .

- 44.Mugenyi L, Nsubuga RN, Wanyana I, Muttamba W, Tumwesigye NM, Nsubuga SH. Feasibility of using a mobile app to monitor and report COVID-19 related symptoms and people's movements in Uganda. PLoS One. 2021;16(11):e0260269. doi: 10.1371/journal.pone.0260269. https://dx.plos.org/10.1371/journal.pone.0260269 .PONE-D-21-09286 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 45.Owoyemi A, Ikpe R, Toye M, Rewane A, Abdullateef M, Obaseki E, Mustafa S, Adeosun W. Mobile health approaches to disease surveillance in Africa; Wellvis COVID triage tool. Digit Health. 2021 Feb 20;7:2055207621996876. doi: 10.1177/2055207621996876. https://journals.sagepub.com/doi/10.1177/2055207621996876?url_ver=Z39.88-2003&rfr_id=ori:rid:crossref.org&rfr_dat=cr_pub0pubmed .10.1177_2055207621996876 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 46.TB HealthCheck puts self-screening in everyone’s hands ahead of World TB Day. Rising Sun. 2021. Mar 24, [2024-07-26]. https://risingsunnewspapers.co.za/lnn/1151916/tb-healthcheck-puts-self-screening-in-everyones-hands-ahead-of-world-tb-day/

- 47.Karimuribo ED, Mutagahywa E, Sindato C, Mboera L, Mwabukusi M, Kariuki Njenga M, Teesdale S, Olsen J, Rweyemamu M. A smartphone app (AfyaData) for innovative one health disease surveillance from community to national levels in Africa: intervention in disease surveillance. JMIR Public Health Surveill. 2017 Dec 18;3(4):e94. doi: 10.2196/publichealth.7373. https://publichealth.jmir.org/2017/4/e94/ v3i4e94 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 48.Kangbai JB. Social network analysis and modeling of cellphone-based syndromic surveillance data for Ebola in Sierra Leone. Asian Pac J Trop Med. 2016 Sep;9(9):851–5. doi: 10.1016/j.apjtm.2016.07.005. https://linkinghub.elsevier.com/retrieve/pii/S1995-7645(16)30142-0 .S1995-7645(16)30142-0 [DOI] [PubMed] [Google Scholar]

- 49.Sterne JA, Savović J, Page MJ, Elbers RG, Blencowe NS, Boutron I, Cates CJ, Cheng HY, Corbett MS, Eldridge SM, Emberson JR, Hernán MA, Hopewell S, Hróbjartsson A, Junqueira DR, Jüni P, Kirkham JJ, Lasserson T, Li T, McAleenan A, Reeves BC, Shepperd S, Shrier I, Stewart LA, Tilling K, White IR, Whiting PF, Higgins JP. RoB 2: a revised tool for assessing risk of bias in randomised trials. BMJ. 2019 Aug 28;366:l4898. doi: 10.1136/bmj.l4898. https://eprints.whiterose.ac.uk/150579/ [DOI] [PubMed] [Google Scholar]

- 50.Study quality assessment tools. National Institutes of Health National Heart, Lung, and Blood Institute. [2022-05-15]. https://www.nhlbi.nih.gov/health-topics/study-quality-assessment-tools .

- 51.WHO guideline: recommendations on digital interventions for health system strengthening. World Health Organization. 2019. [2024-07-26]. https://iris.who.int/bitstream/handle/10665/311941/9789241550505-eng.pdf?ua=1 . [PubMed]

- 52.mHealth: use of mobile wireless technologies for public health. World Health Organization. 2016. May 27, [2024-07-26]. https://apps.who.int/gb/ebwha/pdf_files/EB139/B139_8-en.pdf .

- 53.Khandpur RS. Telemedicine: Technology and Applications (mHealth, TeleHealth and eHealth). Delhi, India: PHI Learning; 2017. [Google Scholar]

- 54.Crico C, Renzi C, Graf N, Buyx A, Kondylakis H, Koumakis L, Pravettoni G. mHealth and telemedicine apps: in search of a common regulation. Ecancermedicalscience. 2018 Jul 11;12:853. doi: 10.3332/ecancer.2018.853. https://air.unimi.it/handle/2434/587365 .can-12-853 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 55.The DODRES project: revolutionizing human and animal disease surveillance in Tanzania. Ending Pandemics. 2017. [2022-06-20]. https://endingpandemics.org/wp-content/uploads/2017/04/EP_DODRES_CaseStudy.pdf .

- 56.Divi N, Smolinski M. EpiHacks, a process for technologists and health experts to cocreate optimal solutions for disease prevention and control: user-centered design approach. J Med Internet Res. 2021 Dec 15;23(12):e34286. doi: 10.2196/34286. https://www.jmir.org/2021/12/e34286/ v23i12e34286 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 57.Fähnrich C, Denecke K, Adeoye OO, Benzler J, Claus H, Kirchner G, Mall S, Richter R, Schapranow MP, Schwarz N, Tom-Aba D, Uflacker M, Poggensee G, Krause G. Surveillance and Outbreak Response Management System (SORMAS) to support the control of the Ebola virus disease outbreak in West Africa. Euro Surveill. 2015 Mar 26;20(12):21071. doi: 10.2807/1560-7917.es2015.20.12.21071. http://www.eurosurveillance.org/ViewArticle.aspx?ArticleId=21071 .21071 [DOI] [PubMed] [Google Scholar]

- 58.Grainger C. A software for disease surveillance and outbreak response: insights from implementing SORMAS in Nigeria and Ghana. Federal Ministry for Economic Cooperation and Development, Germany. 2020. Mar, [2024-07-26]. https://health.bmz.de/studies/a-software-for-disease-surveillance-and-outbreak-response/

- 59.Meyer AJ, Armstrong-Hough M, Babirye D, Mark D, Turimumahoro P, Ayakaka I, Haberer JE, Katamba A, Davis JL. Implementing mHealth interventions in a resource-constrained setting: case study from Uganda. JMIR Mhealth Uhealth. 2020 Jul 13;8(7):e19552. doi: 10.2196/19552. https://mhealth.jmir.org/2020/7/e19552/ v8i7e19552 [DOI] [PMC free article] [PubMed] [Google Scholar]