Abstract

Transferring and replicating predictive algorithms across healthcare systems constitutes a unique yet crucial challenge that needs to be addressed to enable the widespread adoption of machine learning in healthcare. In this study, we explored the impact of important differences across healthcare systems and the associated Electronic Health Records (EHRs) on machine-learning algorithms to predict mental health crises, up to 28 days in advance. We evaluated both the transferability and replicability of such machine learning models, and for this purpose, we trained six models using features and methods developed on EHR data from the Birmingham and Solihull Mental Health NHS Foundation Trust in the UK. These machine learning models were then used to predict the mental health crises of 2907 patients seen at the Rush University System for Health in the US between 2018 and 2020. The best one was trained on a combination of US-specific structured features and frequency features from anonymized patient notes and achieved an AUROC of 0.837. A model with comparable performance, originally trained using UK structured data, was transferred and then tuned using US data, achieving an AUROC of 0.826. Our findings establish the feasibility of transferring and replicating machine learning models to predict mental health crises across diverse hospital systems.

Subject terms: Predictive markers, Psychiatric disorders

Introduction

Recent decades have witnessed a consistent increase in the proportion of mental health-related visits to emergency departments1–3, a trend further accelerated by the COVID-19 pandemic4. These visits stem primarily from individuals who are suffering mental health crises. In general, a mental health crisis, also called a mental health emergency, is a broad term that encompasses any situation in which a person’s actions, feelings, and behaviors put them at risk of hurting themselves or others, and/or prevent them from being able to care for themselves or function effectively in the community in a healthy manner5. Some examples include suicide attempts, psychosis, self-harm, or mental breakdowns. Accurate foresight or prediction of such crises would open new avenues for healthcare providers to not only manage their limited resources but to also proactively intervene. This proactive approach could mitigate the adverse effects on individuals while reducing the burden on healthcare infrastructures6,7.

The evolution of machine learning (ML) techniques, along with research on their applicability across different medical domains, has paved the way for predictive analytics of critical events in areas such as cardiovascular disorders, circulatory failure, and diabetes8–12. In psychiatry, predictive models leveraging electronic health records (EHRs) are emerging as a promising avenue for forecasting mental health crises13,14, thus enabling a long-awaited shift from reactive to proactive healthcare. Moreover, these predictive tools hold the potential to alleviate the global burden of mental disorders, which ranked as the second leading cause of years lived with disability in 201915. However, a notable gap in the current predictive analytics landscape is the robustness of the algorithms across diverse healthcare systems, which limits their adoption and practical use. The predominant focus of research has been placed on developing and evaluating models within single test-bed settings, thereby leaving us ill-informed about the universal applicability and scalability of ML in psychiatry.

In this regard, the ability to transfer and replicate predictive algorithms across healthcare systems is one of the key enablers of the widespread adoption of machine learning, not only in psychiatry but across healthcare more broadly. This entails unique and vital challenges, because data collection processes, data architectures, operational protocols, and patient demographics vary considerably across systems. These challenges appear both when transferring algorithms (where models trained on one specific dataset are implemented and evaluated in a different setting) and when replicating them (where the approach and findings should be reproduced under new conditions). How well the model performs when transferred or replicated is another critical aspect of successful implementation. This is typically referred to as the model’s generalizability, which describes the extent to which a model’s metrics remain consistent beyond the specific conditions and data used when training the algorithm.

Despite the pivotal role of understanding whether a model can be transferred or replicated and how well it would perform, only a handful of studies have touched upon the replication of machine learning models16 or their transfer17–24 across different hospitals. An even smaller fraction of these studies attempted to replicate their findings internationally25–28. In particular, the successful transferability of algorithms appears to be highly domain-specific. However, within the mental health domain, especially in the context of predicting significant events and crises, these vital aspects of transferability and applicability remain largely uncharted. Moreover, clinical prediction models have been increasingly criticized for their illusory generalizability and for underperforming when applied out-of-sample29.

In this study, we directly address this gap by exploring whether and how a UK-derived predictive algorithm can be effectively transferred and replicated in a US hospital context. Our prior research13,14, in a single test-bed, has demonstrated the efficacy of ML-based prediction of mental health crises 28 days ahead, utilizing EHRs from the Birmingham and Solihull Mental Health NHS Foundation Trust, a UK-based public healthcare provider. Herein, we investigate the feasibility of applying a UK model within a US healthcare environment, assessing its performance, and exploring potential enhancements through algorithm calibration to new data unique to the US. Given the multitude of approaches when defining a mental health crisis and the lack of global consensus across hospitals30, the target variable needs to be defined locally with each clinical provider. To thoroughly explore the transferability and replicability of our approach, we deploy and evaluate six discrete models, each exploring a different combination of calibration strategy and feature set (structured data and language-based features). These models and features were created following standard ML techniques; advancing those techniques is outside the scope of this study.

This manuscript targets the challenges and resolutions inherent in replicating and transferring ML models across different healthcare systems, pushing for a shift towards a more global application of ML in psychiatry. Our work aims to bridge the theoretical, often siloed, AI potential and its practical utility in diverse, real-world healthcare settings transcending geographical or operational boundaries.

Results

Overview of the transfer and replication pipelines

Machine learning models using features and methods developed on EHR data from the Birmingham and Solihull Mental Health NHS Foundation Trust (UK) were transferred, and their performance was evaluated on EHR data from the Rush University System for Health (US).

The dataset from the UK healthcare provider included data from both inpatient and outpatient visits, as well as various patient interactions, such as telephone calls. In contrast, the EHR dataset from the US healthcare provider solely comprised inpatient data. These differences significantly impact the level of data granularity available within each setting, posing a considerable challenge to the replicability of features and prediction models. Figure 1 provides a visual representation of these disparities, offering an illustrative example of how a patient’s data may differ depending on which healthcare provider collected it.

Fig. 1. Patient timelines example.

Example of patient timelines as collected by the UK and US healthcare systems, including dates and type of EHR. Dots and crosses represent inpatient and outpatient events, respectively.

The process of transferring and replicating machine learning models across the two healthcare settings is depicted in Fig. 2. Two machine learning models trained in the UK healthcare system, UK Original and UK Tuned, were transferred and evaluated for crisis prediction on US data, with the latter being further tuned on US data before evaluation. Another model, UK Retrained, was trained on US data using a set of features designed for the UK system, which were then replicated using the US dataset. Finally, three new models were trained using novel feature sets tailored to the US dataset, replicating the methods used in the UK model development. Note that the replicated methods encompass the definition of the modeling setup, aimed at predicting a mental health crisis in the following 28 days, as well as the feature creation, both in terms of the features’ outer structure (weekly basis) and the aspects of the patient’s journey that they cover (e.g., diagnosis, hospitalization, etc.). Supplementary Table 1 details, for each machine learning model, whether it was transferred or replicated and, if the latter, the level of replication.

Fig. 2. Transfer and replication pipelines diagram.

Diagram of the transfer and replication pipelines of machine learning models from a UK healthcare provider to a US healthcare provider.

Overview of the study cohort

The study cohort comprised patients hospitalized at the Rush University System for Health (USA) during 2018, 2019, and 2020. Patients aged 16 and older with a history of mental health crises (at least one crisis episode) were included in this study. This yielded a study cohort containing records from 2907 unique patients aged between 16 and 100 years, with both genders well represented (54.5% male, 45.5% female). Patient and crisis episode distributions by age, gender, and race are described in Table 1, for both the US study cohort and the UK cohort originally used for the algorithm’s development. Crisis episodes were defined as a sequence of crisis events preceded by one full week of stability, without any crisis occurring.

Table 1.

US and UK study cohorts’ demographics

| | US cohort: Patients | US cohort: Crisis episodes | UK cohort: Patients | UK cohort: Crisis episodes |
|---|---|---|---|---|
| Total | 2907 | 3614 | 17,122 | 55,051 |
| Age (%) | | | | |
| <18 | 11 (0.4) | 11 (0.3) | 291 (1.7) | 701 (1.3) |
| 18–34 | 1005 (34.6) | 1264 (35.0) | 6512 (38.0) | 20,334 (36.9) |
| 35–64 | 1404 (48.3) | 1784 (49.4) | 8679 (50.7) | 29,472 (53.5) |
| 65+ | 487 (16.8) | 555 (15.4) | 1640 (9.6) | 4544 (8.3) |
| Gender (%) | | | | |
| Female | 1324 (45.5) | 1599 (44.2) | 8321 (48.6) | 26,694 (48.5) |
| Male | 1583 (54.5) | 2015 (55.8) | 8789 (51.3) | 28,312 (51.4) |
| Race (%) | | | | |
| Black/African American | 1044 (35.9) | 1310 (36.2) | 1528 (8.9) | 4879 (8.9) |
| White | 1324 (45.5) | 1663 (46.0) | 11,321 (66.1) | 37,247 (67.7) |
| Asian | 44 (1.5) | 49 (1.4) | 2529 (14.8) | 7930 (14.4) |
| American Indian/Alaska Native | 10 (0.3) | 15 (0.4) | N/A | N/A |
| Native Hawaiian/Other Pacific Islander | 4 (0.1) | 7 (0.2) | N/A | N/A |
| Mixed | N/A | N/A | 1161 (6.8) | 3680 (6.7) |
| Other/not known | 421 (14.5) | 506 (15.0) | 583 (3.4) | 1315 (2.4) |

Machine learning modeling and features

Six machine learning models were developed to predict mental health crises up to 4 weeks in advance, either relying on a set of features developed in the UK (UK Original, UK Retrained, UK Tuned models) or on an extended set of features, some of which derived from medical Concept Unique Identifiers (CUIs), developed in the US (US Structured, US CUI tf-idf, US CUI LDA models). The models using UK features were either trained on UK data (UK Original model), on US data (UK Retrained model), or on a combination of both (UK Tuned model). The models using the extended set of features were solely trained on US data using either structured features (US Structured model) or a combination of structured and unstructured features (US CUI tf-idf, US CUI LDA models).

The set of features developed in the UK consisted of 30 features describing mental health diagnosis, the most recent crisis and hospitalization, contacts, referrals, and demographic information. These were replicated on the US dataset, with 22 of them being computed exactly, 4 of them being approximated, and 4 of them not having the necessary input to be computed and were left as missing values during the modeling phase (Supplementary Table 2).

An extended set of features was created from US data, with 87 structured features describing diagnosis, the most recent crisis and hospitalization, interventions and discharge information, and demographics (Supplementary Table 3). Additionally, model-specific unstructured features were used in the predictions by US CUI tf-idf and US CUI LDA.

Models’ performance

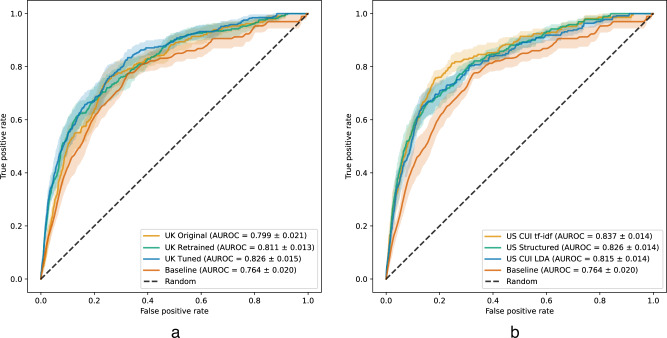

Mean ranking metrics over the test set weeks for each of the models considered are presented in Table 2. All models were statistically significantly better than the baseline, which determines patients’ risk scores based on the number of weeks since the most recent crisis episode, according to the area under the receiver operating characteristic curve (AUROC), with all comparisons yielding p-values <0.001. Moreover, the models that rely on US data for training outperform the UK Original model, trained solely on UK data. The model with the highest AUROC and area under the precision-recall curve (AUPRC) is US CUI tf-idf, showing statistically significant improvements in AUROC against all other models (p-values < 0.05). All pairwise statistical comparisons between models are in Supplementary Table 4. The receiver operating characteristic (ROC) curves for UK-based models and US-based models can be found in Fig. 3.

Table 2.

Summary of ranking metrics, the best model for each metric in bold

| Model | AUROC | AUPRC | Precision@top100 | Recall@top100 |
|---|---|---|---|---|
| Baseline | 0.764 ± 0.020 | 0.037 ± 0.006 | 0.045 ± 0.009 | 0.193 ± 0.032 |
| UK Original | 0.799 ± 0.021 | 0.053 ± 0.012 | 0.049 ± 0.001 | 0.212 ± 0.036 |
| UK Retrained | 0.811 ± 0.013 | 0.070 ± 0.008 | 0.074 ± 0.001 | 0.324 ± 0.010 |
| UK Tuned | 0.826 ± 0.015 | 0.077 ± 0.012 | **0.079 ± 0.013** | **0.343 ± 0.048** |
| US Structured | 0.826 ± 0.014 | 0.074 ± 0.013 | 0.077 ± 0.012 | 0.338 ± 0.051 |
| US CUI tf-idf | **0.837 ± 0.014** | **0.081 ± 0.019** | 0.071 ± 0.015 | 0.316 ± 0.063 |
| US CUI LDA | 0.815 ± 0.014 | 0.074 ± 0.010 | 0.074 ± 0.013 | 0.327 ± 0.057 |

The errors correspond to standard deviation values.
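The ranking metrics in Table 2 can be illustrated with a minimal sketch. The function names, the toy data, and the direction of the baseline score (fewer weeks since the last crisis means higher risk) are assumptions made for illustration; they are not taken from the study's code, and the per-week averaging over the test set is omitted.

```python
from typing import List, Sequence, Tuple


def baseline_scores(weeks_since_last_crisis: Sequence[float]) -> List[float]:
    """Assumed baseline: a more recent crisis yields a higher risk score."""
    return [-w for w in weeks_since_last_crisis]


def auroc(y_true: Sequence[int], scores: Sequence[float]) -> float:
    """Probability that a random positive outranks a random negative (ties count 0.5)."""
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative label")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def precision_recall_at_k(
    y_true: Sequence[int], scores: Sequence[float], k: int
) -> Tuple[float, float]:
    """Precision and recall among the k highest-scored patients."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    hits = sum(y_true[i] for i in order[:k])
    total_pos = sum(y_true)
    recall = hits / total_pos if total_pos else 0.0
    return hits / k, recall


# Toy patient-week snapshot (hypothetical values).
labels = [1, 0, 0, 0, 0, 1]
weeks = [1, 30, 2, 50, 10, 4]
print(auroc(labels, baseline_scores(weeks)))
print(precision_recall_at_k(labels, baseline_scores(weeks), k=2))
```

In the study the top-k cutoff is 100 patients; the toy example uses k=2 only because the list is short.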

Fig. 3. Receiver operating characteristic curves.

a UK-based models; b US-based models. Solid lines and lighter-colored envelopes around each line were derived from the test evaluations (n = 17) as the mean and 95% confidence intervals, respectively. Values in the legend correspond to the mean ± standard deviation.

If we consider only the models trained exclusively on structured data, UK Tuned has the highest performance in all ranking metrics (tying with US Structured on AUROC), although US Structured’s metrics are comparable throughout. UK Tuned also tops the metrics that only consider the top 100 patients.

As the AUPRC can also be interpreted as the average ranking precision, one can use the target prevalence as a useful benchmark. The overall prevalence of the target variable, which indicates a patient will have a crisis in the next 4 weeks, was 1.10%. The prevalence was 1.24%, 1.05%, and 0.91% in the training, validation, and test sets, respectively. Because the prevalence of the target in the test set is less than 1%, a random ranking of the patients would yield an AUPRC of less than 0.01, which is 5 to 8 times smaller than the AUPRC of the trained models. If we consider the values of Precision@top100, then we expect to flag 7 to 8 patients (except with UK Original) who will have a crisis in the next 4 weeks, in comparison with a single patient if 100 patients are selected at random. The precision-recall curves for UK-based models and US-based models can be found in Fig. 4.
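The comparison against a random ranking can be reproduced as a short calculation. The sketch below uses the mean AUPRC and Precision@top100 values from Table 2 and the test-set prevalence of 0.91%; the variable names are illustrative.

```python
# Test-set prevalence of the target, as reported in the text (0.91%).
test_prevalence = 0.0091

# Mean AUPRC and Precision@top100 per model, copied from Table 2.
auprc = {
    "UK Original": 0.053,
    "UK Retrained": 0.070,
    "UK Tuned": 0.077,
    "US Structured": 0.074,
    "US CUI tf-idf": 0.081,
    "US CUI LDA": 0.074,
}
precision_at_100 = {
    "UK Original": 0.049,
    "UK Retrained": 0.074,
    "UK Tuned": 0.079,
    "US Structured": 0.077,
    "US CUI tf-idf": 0.071,
    "US CUI LDA": 0.074,
}

# A random ranking has an expected AUPRC equal to the prevalence,
# so the ratio below is the lift of each model over chance.
lift = {name: value / test_prevalence for name, value in auprc.items()}

# Expected number of true crisis cases among 100 flagged patients.
expected_hits = {name: round(p * 100, 1) for name, p in precision_at_100.items()}
random_hits = round(test_prevalence * 100, 1)

for name in auprc:
    print(f"{name}: lift {lift[name]:.1f}x, "
          f"{expected_hits[name]} expected hits vs {random_hits} at random")
```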

Fig. 4. Precision-recall curves.

a UK-based models; b US-based models. Solid lines and lighter-colored envelopes around each line were derived from the test evaluations (n = 17) as the mean and 95% confidence intervals, respectively. Values in the legend correspond to the mean ± standard deviation.

Classification metrics are presented in Table 3. The cutoff point was selected in each case to be around 85% specificity in order to maintain a 15% false positive rate across models while comparing their sensitivity, precision, and F1 scores. The US CUI tf-idf model performed the best according to all classification metrics at 85% specificity, achieving almost 70% sensitivity.

Table 3.

Summary of classification metrics at a specificity level of about 85%, the best model for each metric in bold

| Model | Sensitivity | Specificity | Precision | F1 score |
|---|---|---|---|---|
| Baseline | 0.51 | 0.850 | 0.030 | 0.058 |
| UK Original | 0.554 | 0.848 | 0.032 | 0.061 |
| UK Retrained | 0.631 | 0.852 | **0.040** | 0.075 |
| UK Tuned | 0.615 | 0.850 | 0.039 | 0.073 |
| US Structured | 0.659 | 0.850 | 0.039 | 0.074 |
| US CUI tf-idf | **0.676** | 0.850 | **0.040** | **0.076** |
| US CUI LDA | 0.658 | 0.850 | 0.039 | 0.074 |
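Fixing the operating point at a target specificity, as done for Table 3, can be sketched as follows. This is a minimal illustration under stated assumptions: the threshold is taken as an empirical quantile of the scores of non-crisis patient-weeks, a patient is flagged when the score is strictly above it, and the function names and toy data are hypothetical.

```python
import math
from typing import Dict, Sequence


def threshold_for_specificity(
    y_true: Sequence[int], scores: Sequence[float], target: float = 0.85
) -> float:
    """Smallest threshold keeping at least `target` of the negatives unflagged."""
    neg = sorted(s for y, s in zip(y_true, scores) if y == 0)
    if not neg:
        raise ValueError("need at least one negative label")
    # Small epsilon guards against floating-point noise in target * len(neg).
    idx = max(math.ceil(target * len(neg) - 1e-9) - 1, 0)
    return neg[idx]


def classification_metrics(
    y_true: Sequence[int], scores: Sequence[float], threshold: float
) -> Dict[str, float]:
    """Sensitivity, specificity, precision, and F1 when flagging score > threshold."""
    tp = fp = tn = fn = 0
    for y, s in zip(y_true, scores):
        flagged = s > threshold
        if y == 1 and flagged:
            tp += 1
        elif y == 1:
            fn += 1
        elif flagged:
            fp += 1
        else:
            tn += 1
    sensitivity = tp / (tp + fn) if tp + fn else 0.0
    specificity = tn / (tn + fp) if tn + fp else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    denom = precision + sensitivity
    f1 = 2 * precision * sensitivity / denom if denom else 0.0
    return {"sensitivity": sensitivity, "specificity": specificity,
            "precision": precision, "f1": f1}


# Toy data: 20 non-crisis and 4 crisis patient-weeks (hypothetical scores).
labels = [0] * 20 + [1] * 4
scores = list(range(20)) + [18, 25, 10, 30]
cutoff = threshold_for_specificity(labels, scores, target=0.85)
print(classification_metrics(labels, scores, cutoff))
```

With a target prevalence near 1%, even a small false positive rate produces many more false positives than true positives, which is why precision stays low in Table 3 despite sensitivity above 60%.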

Most predictive features

We used SHAP values31 to assess the contribution of each feature to the models’ output and find the most important features. The features that had the greatest influence on the predictions across all models were “Weeks since last crisis” and “Number of crisis episodes”. Moreover, patient’s “Age” and some diagnosis features were found to be important features in all models, particularly “F0 organic disorders” and “F1 substance abuse.”

Among the top 20 features of the UK Original model, 4 exhibited a constant value on US data: “Never hospitalized”, “Weeks since last referral from acute services”, “Weeks since last discharge category internal” and “Weeks since last contact not attended”. Regardless, the model is using them for making predictions on the new dataset because it was trained in a different system where the features were useful predictors of mental health crises. By fine-tuning the model with the new data, new trees are learned to correct the bias carried from the previous system that does not apply to the new system’s data. For instance, the second most important feature of the UK Tuned model becomes “Number of crisis episodes” (which was the fifth most predictive for UK Original), and some new features show up as highly predictive, such as “Week sine” and “Week cosine”, which encode information about the time of the year, while the impact of the less relevant features on US data is diminished. Nevertheless, the model does not “unlearn” the trees that were trained on the original dataset, which preserves the generalizability of the model to both datasets.
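Features such as “Week sine” and “Week cosine” are commonly built with a cyclical encoding of the week of the year. The sketch below shows one such encoding; the 52-week period and the function name are assumptions, since the exact construction used for these features is not given here.

```python
import math
from typing import Tuple


def week_cyclical(week_of_year: int, period: int = 52) -> Tuple[float, float]:
    """Map a week index onto the unit circle so that the last week of one
    year and the first week of the next end up close together."""
    angle = 2.0 * math.pi * (week_of_year % period) / period
    return math.sin(angle), math.cos(angle)


for week in (0, 13, 26, 39):
    sine, cosine = week_cyclical(week)
    print(week, round(sine, 3), round(cosine, 3))
```

A raw week number would place week 52 and week 1 far apart; the sine and cosine pair removes that artificial jump, which matters for tree splits that rely on seasonal patterns.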

When US-specific features were incorporated, “Weeks since last crisis” and “Number of crisis episodes” remained at the top 5 most important features, but a large portion of the most predictive features are specific to the US-based models. Concretely, the other structured features that showed up as highly predictive include the patient’s primary and secondary diagnosis and the number of weeks since the last discharge. Importantly, when the CUIs were included, the model took them into account and they replaced most of the diagnosis features in terms of relevance. A potential reason for this is that the CUIs bring information about the treatment and symptoms that are related to a mental health crisis. For instance, “Hallucinations, Auditory” and “Aripiprazole” are related to schizophrenia, and “Depakote” or “Lithium” are medications given to patients with bipolar disorder. Supplementary Figures 1 to 6 show the complete distribution of SHAP values for the top 20 most predictive features for each of the models.

Discussion

To our knowledge, this is the first study to demonstrate the transferability and replicability of machine learning models to predict mental health crises from EHRs across two very distinct healthcare systems. Moreover, the trained models demonstrated that this transfer or replication can be done in various settings, depending on the availability of a previously trained model, historical data, and resources for EHR analysis.

In a setting where historical EHR data is unavailable for a particular hospital system, a pretrained model can be transferred (UK Original). If historical EHR data is available but no pretrained model exists (e.g., due to contractual reasons), one can replicate the feature set and train a new model (UK Retrained). If both historical EHR data and a pretrained model exist, it is possible to combine them (UK Tuned) for increased performance. This method can potentially yield further performance improvements by leveraging data from more than two hospital systems.

Furthermore, it is possible to replicate the methods used to create new features and models (US Structured, US CUI tf-idf, US CUI LDA) for a new hospital system, provided the resources exist for this analysis. Replicating at the methodology level led to the best-performing model (US CUI tf-idf) by leveraging clinical notes, but this approach consumes more resources and requires expert knowledge.

The inclusion of textual features computed from CUIs brought additional predictive power overall (AUROC = 0.837, AUPRC = 0.081) and achieved the highest classification metrics at 85% specificity. This shows that textual features have potential for predicting mental health crises, and it is plausible that a model incorporating these features, if trained on an even larger set of patient data and with hospital-specific tuning, could offer clinically relevant improvements. The preprocessing step that converts clinical notes into CUIs simplifies the process of replicating the model from one hospital to another since it standardizes textual data into a set of concepts that are uniquely identified.

Overall, ranking metrics for all models (AUROC 0.799–0.837) replicated the results from previous studies on the prediction of mental health crises13,14,32,33 (AUROC 0.702–0.865). As for the classification metrics, we opted to set the specificity level at 85% and obtained sensitivity values in the range 55.4–67.6%, which are in line with the sensitivity of 58% from our previous work13, and higher than the sensitivity level of 14.0–29.3% reported in the prediction of crisis associated with depression32 (albeit at a higher specificity level). The precision values, and consequently the F1 score values, obtained are lower than those in other studies32,33 (Precision 0.10–0.65, F1 score 0.15–0.65), likely due to the high imbalance in our dataset.

The low precision values should not hinder the clinical use of our models as they are driven by the high number of false positives, in comparison to the number of true positives. Monitoring or contacting a patient that ends up being a false positive (i.e., does not have a crisis in 4 weeks) won’t be harmful for the patient and can, in fact, be beneficial for their mental health condition. The main drawback is the allocation of resources that could have been used toward a higher-risk patient.

The main limitation of this work concerns the definition of a mental health crisis. Depending on the hospital system, this may or may not already exist, and even when it does exist, it can vary from one system to another. If a definition does not yet exist, then it needs to be created based on the EHR data available at that hospital and the inputs from clinical practitioners locally. Another limitation is related to the variability of EHR datasets. EHRs can vary in terms of granularity, level of detail, length of patient history, etc., and the transferability or replicability of a prediction model might be more variable if the two systems differ more significantly. In this study, one significant difference between the EHR systems was their granularity. While the UK dataset included both inpatient and outpatient records, the US dataset only included inpatient records. It is paramount that these sorts of differences are evaluated in practice, both during and after the implementation of a model such as the one described in our work34. Finally, we note that the study period overlapped with the COVID-19 pandemic, in particular for the periods of time used in the validation and test sets. During the COVID-19 pandemic, there was a decrease in acute medical service use that was not directly connected to the pandemic, including the utilization of mental health services.

Importantly, the results achieved by UK Tuned indicate that a model trained with a carefully selected set of features on a large pool of patients can be brought to a smaller hospital and, with moderate tuning, can achieve comparable or even better results than starting the feature extraction process from scratch. This opens the door to scaling the model into multiple hospitals while reducing the time dedicated to feature extraction and modeling. Moreover, the use of various EHR data sets, with different characteristics, for modeling can also help uncover those underlying patterns that are common to mental health crises across hospital systems while minimizing the effect of spurious correlations.

The findings from this paper have significant clinical implications. As we move toward precision medicine approaches for psychiatric spectrum conditions, there is significant potential value and cost savings in crisis prediction and the potential for early intervention in crisis pathways. Imagine a scenario where a patient is getting more depressed and at risk for suicide, and the algorithms alert a care management team to reach out to this patient as the trajectory begins rather than waiting for the event to take place.

This approach has already been validated via a prospective study in a hospital setting13, with clinically valuable outcomes. In practice, a ranked list of patients is provided to a clinical team on a regular basis (e.g., weekly or monthly), sorted according to the risk provided by the machine learning model. Benefits of such a tool include better caseload management and clinical decision-making, which may lead to better patient outcomes and reduce a health provider’s costs35,36.

This project also highlights the value of data embedded within EHR systems. The next frontier will move toward the integration of this data with personal and mobile data sources that have shown important potential to track depressive symptoms37–41. The convergence of these approaches has the potential to help us create systemic approaches to population management of high-risk and high-cost conditions like depression. Moreover, on the machine learning aspect, the integration of multiple EHR systems can be leveraged by using privacy-preserving approaches that can train a single model while keeping data decentralized, including federated learning42,43.

Methods

Dataset

The study examined anonymized EHRs collected from patients hospitalized at the Rush University System for Health (USA) between January 2018 and December 2020. The EHRs contained demographic information, hospitalization records, and anonymized patient notes. The model and methods were originally developed on EHRs by the Birmingham and Solihull Mental Health NHS Foundation Trust (UK).

Unstructured data (i.e., progress notes, reports, consultations) were processed through a data pipeline using Apache Clinical Text Analysis and Knowledge Extraction System (cTAKES)44 indexed against the National Library of Medicine’s Metathesaurus. This reduced all of the vocabulary to a set of Concept Unique Identifiers (CUIs) that link to medical terms and thereby removed Protected Health Information (PHI) like patient names. The temporality of these CUIs relative to the notes was retained for analysis. Structured data elements were extracted, and dates were shifted to remove PHI.

The EHR data were processed into features at a weekly level, per patient, resulting in a total of 237,424 patient-weeks. Patient-weeks during which a patient was in crisis were excluded since the patient was already being appropriately monitored at the hospital, and deceased patients were excluded once their death was recorded. Moreover, the first 6 weeks and last 6 weeks of the dataset were also excluded, because the date shifts applied as part of PHI removal left the information from those weeks potentially incomplete.

The processed dataset was split into training, validation and test sets, chronologically, as follows: the training set contains the weeks between week 7 of 2018 and week 48 of 2019; the validation set contains weeks 1 to 18 of 2020; and the test set contains weeks 23 to 40 of 2020. The 4-week gaps between the sets were chosen to avoid data leakage, since the prediction target has information about the following four weeks of a patient.
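The chronological split with its 4-week gaps can be expressed as a simple lookup on (year, week) pairs. The sketch below is an illustration only: the function name and the tuple representation of weeks are assumptions, while the boundaries are those stated above.

```python
from typing import Optional, Tuple

Week = Tuple[int, int]  # (year, week of year)

# Inclusive boundaries taken from the text.
SPLITS = {
    "train": ((2018, 7), (2019, 48)),
    "validation": ((2020, 1), (2020, 18)),
    "test": ((2020, 23), (2020, 40)),
}


def assign_split(year: int, week: int) -> Optional[str]:
    """Return the split a patient-week belongs to, or None if it falls in a
    gap (dropped so that 4-week-ahead targets cannot leak across splits)."""
    key: Week = (year, week)
    for name, (start, end) in SPLITS.items():
        if start <= key <= end:
            return name
    return None


for year, week in [(2019, 48), (2019, 50), (2020, 18), (2020, 20), (2020, 23)]:
    print((year, week), assign_split(year, week))
```

Patient-weeks that return `None` are simply discarded; because the target looks 4 weeks ahead, a training row from the last gap week would otherwise encode outcomes observed during the validation period.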

Crisis episodes and crisis risk prediction

Crisis events were defined as either an admission (or transfer) to a psychiatric unit, an intervention due to suicide, or a specific psychiatric diagnosis (Supplementary Table 5). Note that these diagnoses were recorded and associated with a hospitalization, where the diagnosis served as a descriptor of a crisis event. Moreover, changes in diagnosis were prompted by additional events that led doctors to establish a new diagnosis, often accompanied by a transfer to another service within the hospital. The list of relevant diagnoses was defined in a comprehensive manner: it covers diagnoses that would either merit inpatient hospitalization or require a one-to-one sitter in the hospital setting due to their acute nature.

Crisis events tend to occur in quick succession, and patients are often closely monitored after one happens. For example, in New York, state guidelines recommend that patients have a psychiatric aftercare appointment scheduled within seven calendar days following discharge45. Therefore, we cluster related crisis events into a single crisis episode, defined as a set of crisis events preceded by one full week of stability, without any such events. Correspondingly, the target of our analysis is to predict whether the onset of a crisis episode, which is the initial event of the episode, will occur during the following 4 weeks.
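The clustering of crisis events into episodes and the construction of the 4-week target can be sketched as follows. This is a minimal illustration under stated assumptions: "one full week of stability" is read as more than 7 days between consecutive events, the prediction window is taken as the 28 days strictly after a reference date, and the function names and dates are hypothetical.

```python
from datetime import date, timedelta
from typing import List, Sequence


def episode_onsets(event_dates: Sequence[date], stable_days: int = 7) -> List[date]:
    """Return the first event of each crisis episode for one patient.

    An event opens a new episode when more than `stable_days` days have
    passed since the previous crisis event (assumed reading of the rule).
    """
    onsets: List[date] = []
    previous = None
    for current in sorted(event_dates):
        if previous is None or (current - previous).days > stable_days:
            onsets.append(current)
        previous = current
    return onsets


def crisis_in_horizon(
    onsets: Sequence[date], reference: date, horizon_days: int = 28
) -> int:
    """1 if an episode onset falls within `horizon_days` after `reference`."""
    end = reference + timedelta(days=horizon_days)
    return int(any(reference < onset <= end for onset in onsets))


# Hypothetical crisis events for a single patient.
events = [date(2019, 1, 1), date(2019, 1, 3), date(2019, 1, 20),
          date(2019, 1, 22), date(2019, 3, 1)]
onsets = episode_onsets(events)
print(onsets)
print(crisis_in_horizon(onsets, date(2019, 1, 5)))
```

Only onsets count toward the target, so follow-up events inside an ongoing episode do not generate additional positive labels.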

Ethical approval and consent

The study was approved by Rush University’s Office of Research Affairs’s institutional review board. The need to obtain consent was waived due to the exclusive use of anonymized data that cannot be linked to any individual patient.

Features

Two types of features were extracted from the EHRs: structured features, from five data tables (Supplementary Table 6), and unstructured features, from patient notes.

To build the structured features, a set of 30 features developed in the UK was replicated using US data (UK feature set), and then expanded with the information available in the US dataset. A total of 87 structured features were obtained and, after feature selection during the modeling phase, 57 of them were selected (Supplementary Table 3). This set of features (US structured feature set) can be broadly categorized as follows (with the number of features in each category in parentheses):

Primary Diagnosis (17): Describing patients’ existing primary diagnoses using the ICD-10 code system46, grouped by the first two characters of the code (e.g., F3 for mood (affective) disorders), as well as the time elapsed since the most recent diagnosis in each group;

Secondary Diagnosis (16): Similar to the ones in the Primary Diagnosis set but calculated with respect to patients’ secondary diagnosis;

Additional Diagnosis (9): Including features about dual diagnosis as well as statistics on the number of previous diagnoses, both primary and secondary (e.g., the total number of previous primary diagnoses);

Last Crisis/Hospitalization (6): Describing the most recent crisis/hospitalization (e.g., length of hospitalization during the most recent crisis);

Intervention (4): Describing interventions done during previous hospitalizations (e.g., number of days with an intervention due to risk of suicide);

Demographics (3): Including gender and age features; and

Discharge (2): Comprising features that describe the time elapsed since the most recent hospital discharge and discharge from a psychiatric unit.
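As an illustration of how the diagnosis features in the list above could be derived, the following Python sketch groups ICD-10 codes by their first two characters and computes the days elapsed since the most recent diagnosis in each group. The record layout (date and code pairs) and the function names are assumptions made for the example.

```python
from datetime import date


def diagnosis_group(icd10_code):
    """Group an ICD-10 code by its first two characters (e.g. 'F32.1' -> 'F3')."""
    return icd10_code.strip().upper()[:2]


def days_since_last_diagnosis(diagnoses, as_of):
    """Map each diagnosis group to the days elapsed since its latest diagnosis.

    `diagnoses` is an iterable of (date, icd10_code) pairs (hypothetical layout).
    Records dated after `as_of` are ignored so that no future information is used.
    """
    latest = {}
    for when, code in diagnoses:
        if when > as_of:
            continue
        group = diagnosis_group(code)
        if group not in latest or when > latest[group]:
            latest[group] = when
    return {group: (as_of - when).days for group, when in latest.items()}


history = [
    (date(2019, 1, 10), "F32.1"),
    (date(2019, 3, 1), "F31.9"),
    (date(2019, 2, 1), "F20.0"),
    (date(2019, 6, 1), "F41.1"),  # after the reference date, therefore ignored
]
features = days_since_last_diagnosis(history, as_of=date(2019, 3, 11))
```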

Patient notes were anonymized before analysis using the Apache cTAKES pipeline, in which Concept Unique Identifiers (CUIs) describing each note were automatically generated. Unstructured features based on CUIs were then extracted using two different statistical methods: term frequency-inverse document frequency (tf-idf) and Latent Dirichlet Allocation (LDA).

The tf-idf method assigns a weight to each CUI, per document (corresponding to a hospital visit), depending on the frequency of the CUI in the document and on the number of documents containing that CUI. In total, 70,259 unique CUIs were extracted from patient notes. To select the most important CUIs for the study cohort, a three-step approach was used: first, the 3,000 most common CUIs were selected and a tf-idf model was trained on the whole hospital cohort; second, the 10 most important CUIs per patient in the study cohort were identified, and the 100 most common of these across the study cohort were retained (Supplementary Table 7); finally, the tf-idf algorithm was applied to the patient notes in the study cohort, restricted to the CUIs selected in the second step.
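The three-step selection can be sketched in plain Python as follows. This is a simplified illustration, not the study code: it uses a smoothed idf of the kind found in common libraries, counts "most common" by total occurrences, and treats each element of `study_docs` as the pooled CUIs of one patient. All function and parameter names are hypothetical.

```python
import math
from collections import Counter


def fit_idf(documents, vocabulary):
    """Smoothed inverse document frequency for each CUI in `vocabulary`."""
    n_docs = len(documents)
    doc_freq = Counter(cui for doc in documents for cui in set(doc) if cui in vocabulary)
    return {cui: math.log((1 + n_docs) / (1 + doc_freq[cui])) + 1 for cui in vocabulary}


def tfidf(document, idf):
    """tf-idf weights for one document, restricted to the CUIs present in `idf`."""
    counts = Counter(cui for cui in document if cui in idf)
    return {cui: count * idf[cui] for cui, count in counts.items()}


def select_cuis(hospital_docs, study_docs, n_common=3000, per_patient=10, n_final=100):
    # Step 1: keep the most common CUIs and fit idf on the whole hospital cohort.
    totals = Counter(cui for doc in hospital_docs for cui in doc)
    vocabulary = {cui for cui, _ in totals.most_common(n_common)}
    idf = fit_idf(hospital_docs, vocabulary)
    # Step 2: take the highest-weighted CUIs per patient, then keep the CUIs
    # that were selected for the largest number of patients.
    votes = Counter()
    for doc in study_docs:
        weights = tfidf(doc, idf)
        votes.update(sorted(weights, key=lambda c: (-weights[c], c))[:per_patient])
    return sorted(votes, key=lambda c: (-votes[c], c))[:n_final]


hospital_docs = [["C1", "C2", "C2"], ["C1", "C3"], ["C1", "C4"], ["C2", "C3"]]
study_docs = [["C1", "C2", "C2"], ["C2", "C3"]]
selected = select_cuis(hospital_docs, study_docs, n_common=3, per_patient=2, n_final=2)

# Step 3: refit tf-idf on the study cohort, restricted to the selected CUIs.
study_idf = fit_idf(study_docs, set(selected))
study_features = [tfidf(doc, study_idf) for doc in study_docs]
```

Ties are broken alphabetically here only to make the toy example deterministic.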

Using the LDA method, a list of twenty topics was constructed by grouping the CUIs that most commonly appear together in a patient note. A topic contains a series of CUIs, and a CUI may be present in more than one topic (Supplementary Table 8). Each patient note is represented by the matching weights between the note and each topic. The number of topics was selected to optimize a coherence metric based on the cv measure47.

Crisis prediction models

All crisis prediction models are ensemble models trained using the eXtreme Gradient Boosting (XGBoost)48 algorithm, an implementation of Gradient Boosting Machines49. In addition, a baseline model was constructed by scoring the patients on a weekly basis, based on the last time they had a crisis episode. Below are the descriptions of all models analyzed in this study:

Baseline: Heuristic model that scores patients based on the number of weeks passed since the last crisis episode, the risk score assigned is equal to , where M is the maximum number of weeks since the last crisis episode, taken over all patients;

UK Original: Trained on the UK dataset using the UK feature set;

UK Retrained: Trained on the US dataset using the UK feature set. Hyperparameters tuned using the validation set;

UK Tuned: Trained on the UK dataset using the UK feature set, subsequently tuned on the US dataset. Hyperparameters tuned using the validation set;

US Structured: Trained on the US dataset using the US structured feature set. Hyperparameters tuned and feature selection performed using the validation set;

US CUI tf-idf: Trained on the US dataset using the US structured feature set combined with tf-idf features. Hyperparameters tuned using the validation set; and

US CUI LDA: Trained on the US dataset using the US structured feature set combined with LDA features. Hyperparameters tuned using the validation set.

All models except UK Original were trained (or tuned) on the training set, had their hyperparameters tuned on the validation set, and were ultimately trained a final time on the training and validation sets combined, using the best set of hyperparameters. UK Original is a model trained on the UK dataset and tested directly on US data. A particular case is the UK Tuned model, which is an instance of UK Original that was further tuned on US data using the same hyperparameters as UK Retrained. Every model was evaluated on the test set, and its output is a predicted risk score (between 0 and 1) indicating the likelihood of a crisis in the following four weeks.
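To make the Baseline heuristic concrete, the Python sketch below scores patients from the number of weeks since their last crisis episode. The specific form used, a score of 1 − w/M that decreases linearly from 1 (crisis this week) to 0 (longest time since a crisis), is an assumption chosen for illustration so that the output lies between 0 and 1; the function name is likewise hypothetical.

```python
def baseline_scores(weeks_since_last_crisis):
    """Heuristic risk score per patient from weeks since the last crisis episode.

    ASSUMPTION: score = 1 - w / M, where w is the patient's number of weeks
    since the last crisis episode and M is the maximum of w over all patients.
    This linear form is illustrative and not confirmed by the text.
    """
    m = max(weeks_since_last_crisis.values())
    if m == 0:
        # Every patient had a crisis episode this week.
        return {patient: 1.0 for patient in weeks_since_last_crisis}
    return {patient: 1.0 - w / m for patient, w in weeks_since_last_crisis.items()}


scores = baseline_scores({"a": 0, "b": 5, "c": 10})
```

Any score that decreases monotonically with the time since the last crisis would produce the same weekly ranking of patients, so ranking metrics do not depend on the exact form assumed here.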

The Tree-structured Parzen Estimator (TPE)50, a Bayesian optimization algorithm implemented in the Python library Hyperopt51, was used to select the best set of hyperparameters. For each of the models, 50 rounds of hyperparameter search were run, optimizing the AUROC on the validation set. As part of this process, for the US Structured model, we performed feature selection by grouping the features into categories and adding binary parameters to the hyperparameter space provided to Hyperopt that determine whether the corresponding features are selected. The hyperparameter space explored is given in Supplementary Table 9, and the final set of hyperparameters selected for each model in Supplementary Table 10.

Evaluation metrics

Two distinct sets of metrics were used to evaluate the models’ performance on US data: ranking metrics and classification metrics. The ranking metrics were the primary metrics, as the prediction model is meant to provide, each week, a list of patients ranked by their likelihood of having a crisis in the near future. These metrics include the AUROC, AUPRC, precision@top100, and recall@top100, where the precision and recall of the model are computed based on the top 100 patients of the ranked list. The AUROC and AUPRC provide an aggregate measure of performance across all possible classification thresholds, based respectively on the tradeoff between sensitivity (how accurately crisis is predicted) and specificity (how accurately non-crisis is detected), and on the tradeoff between precision and recall. As secondary metrics, we considered four classification metrics: sensitivity, specificity, precision, and F1 score.

All metrics were computed on a weekly basis, and the reported results correspond to the average across all weeks within the test set. To compare the models, statistical significance was assessed using the DeLong test52.
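The weekly ranking metrics can be illustrated with a short, dependency-free Python sketch. It computes precision and recall over the top-k ranked patients and the AUROC as the probability that a randomly chosen crisis case is ranked above a randomly chosen non-crisis case, then averages a metric across weeks. The function names and the data layout (one pair of score and label lists per week) are assumptions for the example; the pairwise AUROC is quadratic in cohort size and is meant for clarity rather than efficiency.

```python
def precision_recall_at_k(scores, labels, k=100):
    """Precision and recall among the k highest-scored patients of one week."""
    top = sorted(range(len(scores)), key=lambda i: -scores[i])[:k]
    hits = sum(labels[i] for i in top)
    positives = sum(labels)
    precision = hits / len(top) if top else 0.0
    recall = hits / positives if positives else 0.0
    return precision, recall


def auroc(scores, labels):
    """Probability that a positive outranks a negative (ties count one half)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("AUROC needs at least one positive and one negative")
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def weekly_average(metric, weeks):
    """Average a per-week metric over a list of (scores, labels) pairs."""
    values = [metric(scores, labels) for scores, labels in weeks]
    return sum(values) / len(values)


week_1 = ([0.9, 0.8, 0.3, 0.1], [1, 0, 1, 0])
week_2 = ([0.9, 0.1], [1, 0])
mean_auroc = weekly_average(auroc, [week_1, week_2])
```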

Model interpretation

SHAP values31 were used to measure the contribution of each feature to the models. In particular, we applied the TreeExplainer algorithm, a technique developed to interpret tree-based machine learning models at a local level through additive feature attribution53. This method was run on each trained model, and the feature attributions were computed for each prediction in the test set, assigning a numerical score per feature corresponding to the feature’s influence on the predicted value. The most predictive features for each model were selected based on the average absolute SHAP values across predictions in the test set. The distribution of SHAP values on the test set corresponding to the 20 most predictive features is illustrated in Supplementary Figs. 1–6.
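The ranking step can be sketched in Python as follows, assuming the per-prediction attributions are already available as a matrix with one row per test-set prediction and one column per feature (for instance, as returned by a tree explainer). The function name and the toy feature names are hypothetical.

```python
def rank_features(shap_values, feature_names, top_n=20):
    """Rank features by mean absolute SHAP value across predictions.

    `shap_values` is a list of rows (one per prediction), each holding one
    attribution per feature, in the same order as `feature_names`.
    """
    n_rows = len(shap_values)
    mean_abs = [
        sum(abs(row[j]) for row in shap_values) / n_rows
        for j in range(len(feature_names))
    ]
    ranked = sorted(zip(feature_names, mean_abs), key=lambda pair: -pair[1])
    return ranked[:top_n]


# Toy attributions for two predictions and three hypothetical features.
toy_shap = [[0.2, -0.5, 0.0], [-0.4, 0.3, 0.1]]
toy_names = ["age", "weeks_since_last_crisis", "n_previous_diagnoses"]
ranking = rank_features(toy_shap, toy_names, top_n=2)
```

Taking the absolute value before averaging matters: a feature that pushes some predictions up and others down would otherwise appear unimportant.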

Reporting summary

Further information on research design is available in the Nature Research Reporting Summary linked to this article.

Acknowledgements

The authors wish to thank Drs. Ranga Krishnan, Hale Thompson, and Ali Keshavarzian at Rush University Medical Center, and Elise Krob and Ollie Smith at Koa Health, who helped and supported this project by hosting aspects of the work and assisting with institutional and organizational processes. We sincerely thank Dr. Oliver Harrison (CEO, Koa Health) for his visionary leadership that led to this project, and for his ongoing advice on clinical matters, expert feedback, and innovative ideas since the inception of this work. The authors are thankful to Vaibhav Narayan and Peter Hoehn for their invaluable insights, feedback, and expert consultation, which were instrumental in making this project possible and enhancing the quality of our research. This study was funded by Janssen Pharmaceuticals. The funder played no role in the study design, data collection, analysis, and interpretation of data, or the writing of this manuscript. It reviewed the manuscript prior to submission.

Author contributions

JG, RG, NSK, and AM designed the study. BS accessed the raw data and removed Protected Health Information. JG, RG, and TLB analyzed the data and developed the software for training and evaluation. JG and RG wrote the first draft of this paper, which was reviewed and edited by TLB, BS, NSK, and AM. All authors were responsible for the decision to submit the paper for publication.

Data availability

Electronic health records analyzed in this study contain sensitive information about vulnerable populations and as such, cannot be publicly shared. Any request to access the data will need to be reviewed and approved by the Rush University Medical Center’s Chief Research Informatics Officer.

Code availability

The code developed in this study is property of Koa Health and cannot be publicly shared. Data processing and modeling were conducted using Apache cTAKES and Python 3.6.7 using publicly available libraries (pandas, numpy, scipy, scikit-learn, xgboost, matplotlib, seaborn, gensim and hyperopt).

Competing interests

The authors declare no competing non-financial interests but the following competing financial interests: Koa Health has provided financial resources to support the realization of this project. JG, RG, and AM are employees of Koa Health. NSK and BS declare no competing interests.

Footnotes

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

The online version contains supplementary material available at 10.1038/s41746-024-01203-8.

References

- 1. Larkin, G. L., Claassen, C. A., Emond, J. A., Pelletier, A. J. & Camargo, C. A. Trends in US emergency department visits for mental health conditions, 1992 to 2001. Psychiatr. Serv. 56, 671–677 (2005). 10.1176/appi.ps.56.6.671

- 2. Santillanes, G., Axeen, S., Lam, C. N. & Menchine, M. National trends in mental health-related emergency department visits by children and adults, 2009–2015. Am. J. Emerg. Med. 38, 2536–2544 (2020). 10.1016/j.ajem.2019.12.035

- 3. Bommersbach, T. J., McKean, A. J., Olfson, M. & Rhee, T. G. National trends in mental health–related emergency department visits among youth, 2011–2020. J. Am. Med. Assoc. 329, 1469–1477 (2023). 10.1001/jama.2023.4809

- 4. Holland, K. M. et al. Trends in US emergency department visits for mental health, overdose, and violence outcomes before and during the COVID-19 pandemic. JAMA Psychiatry 78, 372–379 (2021). 10.1001/jamapsychiatry.2020.4402

- 5. National Alliance of Mental Illness. Navigating a Mental Health Crises (2018).

- 6. Heyland, M. & Johnson, M. Evaluating an alternative to the emergency department for adults in mental health crisis. Issues Ment. Health Nurs. 38, 557–561 (2017). 10.1080/01612840.2017.1300841

- 7. Miller, V. & Robertson, S. A role for occupational therapy in crisis intervention and prevention. Aust. Occup. Ther. J. 38, 143–146 (1991). 10.1111/j.1440-1630.1991.tb01710.x

- 8. Hyland, S. et al. Early prediction of circulatory failure in the intensive care unit using machine learning. Nat. Med. 26, 1–10 (2020). 10.1038/s41591-020-0789-4

- 9. Arcadu, F. et al. Deep learning algorithm predicts diabetic retinopathy progression in individual patients. npj Digit. Med. 2, 92 (2019). 10.1038/s41746-019-0172-3

- 10. He, Z. et al. Early sepsis prediction using ensemble learning with deep features and artificial features extracted from clinical electronic health records. Crit. Care Med. 48, e1337–e1342 (2020). 10.1097/CCM.0000000000004644

- 11. Li, X. et al. A time-phased machine learning model for real-time prediction of sepsis in critical care. Crit. Care Med. 48, e884–e888 (2020). 10.1097/CCM.0000000000004494

- 12. Ye, C. et al. Prediction of incident hypertension within the next year: prospective study using statewide electronic health records and machine learning. J. Med. Internet Res. 20, e22 (2018). 10.2196/jmir.9268

- 13. Garriga, R. et al. Machine learning model to predict mental health crises from electronic health records. Nat. Med. 28, 1240–1248 (2022). 10.1038/s41591-022-01811-5

- 14. Garriga, R. et al. Combining clinical notes with structured electronic health records enhances the prediction of mental health crises. Cell Rep. Med. 4, 101260 (2023). 10.1016/j.xcrm.2023.101260

- 15. GBD 2019 Mental Disorders Collaborators. Global, regional, and national burden of 12 mental disorders in 204 countries and territories, 1990–2019: a systematic analysis for the Global Burden of Disease Study 2019. Lancet Psychiatry 9, 137–150 (2022). 10.1016/S2215-0366(21)00395-3

- 16. Nunez, J.-J. et al. Replication of machine learning methods to predict treatment outcome with antidepressant medications in patients with major depressive disorder from STAR*D and CAN-BIND-1. PLoS ONE 16, 1–15 (2021). 10.1371/journal.pone.0253023

- 17. Curth, A. et al. Transferring clinical prediction models across hospitals and electronic health record systems. In Machine Learning and Knowledge Discovery in Databases: International Workshops of ECML PKDD 2019, Würzburg, Germany, September 16–20, 2019, Proceedings, Part I, 605–621 (Springer, 2020).

- 18. Barak-Corren, Y. et al. Validation of an electronic health record–based suicide risk prediction modeling approach across multiple health care systems. JAMA Netw. Open 3, e201262–e201262 (2020). 10.1001/jamanetworkopen.2020.1262

- 19. Chin, Y. P. H. et al. Assessing the international transferability of a machine learning model for detecting medication error in the general internal medicine clinic: Multicenter preliminary validation study. JMIR Med. Inform. 9, e23454 (2021). 10.2196/23454

- 20. Song, X. et al. Cross-site transportability of an explainable artificial intelligence model for acute kidney injury prediction. Nat. Commun. 11, 5668 (2020). 10.1038/s41467-020-19551-w

- 21. Kamran, F. et al. Early identification of patients admitted to hospital for COVID-19 at risk of clinical deterioration: model development and multisite external validation study. Br. Med. J. 376, e068576 (2022). 10.1136/bmj-2021-068576

- 22. Wardi, G. et al. Predicting progression to septic shock in the emergency department using an externally generalizable machine-learning algorithm. Ann. Emerg. Med. 77, 395–406 (2021). 10.1016/j.annemergmed.2020.11.007

- 23. Churpek, M. M. et al. Internal and external validation of a machine learning risk score for acute kidney injury. JAMA Netw. Open 3, e2012892–e2012892 (2020). 10.1001/jamanetworkopen.2020.12892

- 24. Yang, J., Soltan, A. A. S. & Clifton, D. A. Machine learning generalizability across healthcare settings: insights from multi-site COVID-19 screening. NPJ Digit. Med. 5, 69 (2022). 10.1038/s41746-022-00614-9

- 25. Kwong, J. C. et al. Development, multi-institutional external validation, and algorithmic audit of an artificial intelligence-based side-specific extra-prostatic extension risk assessment tool (sepera) for patients undergoing radical prostatectomy: a retrospective cohort study. Lancet Digit. Health 5, e435–e445 (2023). 10.1016/S2589-7500(23)00067-5

- 26. Wagner, S. K. et al. Development and international validation of custom-engineered and code-free deep-learning models for detection of plus disease in retinopathy of prematurity: a retrospective study. Lancet Digit. Health 5, e340–e349 (2023). 10.1016/S2589-7500(23)00050-X

- 27. Sjoding, M. W. et al. Deep learning to detect acute respiratory distress syndrome on chest radiographs: a retrospective study with external validation. Lancet Digit. Health 3, e340–e348 (2021). 10.1016/S2589-7500(21)00056-X

- 28. Roggeveen, L. et al. Transatlantic transferability of a new reinforcement learning model for optimizing haemodynamic treatment for critically ill patients with sepsis. Artif. Intell. Med. 112, 102003 (2021). 10.1016/j.artmed.2020.102003

- 29. Chekroud, A. M. et al. Illusory generalizability of clinical prediction models. Science 383, 164–167 (2024). 10.1126/science.adg8538

- 30. Paton, F. et al. Improving outcomes for people in mental health crisis: a rapid synthesis of the evidence for available models of care. Health Technol. Assess. 20, 1–162 (2016).

- 31. Lundberg, S. M. & Lee, S.-I. A unified approach to interpreting model predictions. In Proceedings of the 31st international conference on neural information processing systems, 4768–4777 (2017).

- 32. Msosa, Y. J. et al. Trustworthy data and AI environments for clinical prediction: Application to crisis-risk in people with depression. IEEE J. Biomed. Health Inform. 27, 5588–5598 (2023). 10.1109/JBHI.2023.3312011

- 33. Saggu, S. et al. Prediction of emergency department revisits among child and youth mental health outpatients using deep learning techniques. BMC Med. Inf. Decis. Mak. 24, 42 (2024). 10.1186/s12911-024-02450-1

- 34. Youssef, A. et al. External validation of AI models in health should be replaced with recurring local validation. Nat. Med. 29, 2686–2687 (2023). 10.1038/s41591-023-02540-z

- 35. Horwitz, L. I., Kuznetsova, M. & Jones, S. A. Creating a learning health system through rapid-cycle, randomized testing. N. Engl. J. Med. 381, 1175–1179 (2019). 10.1056/NEJMsb1900856

- 36. Graham, A. K. et al. Implementation strategies for digital mental health interventions in health care settings. Am. Psychol. 75, 1080 (2020). 10.1037/amp0000686

- 37. Auerbach, R. P., Srinivasan, A., Kirshenbaum, J. S., Mann, J. J. & Shankman, S. A. Geolocation features differentiate healthy from remitted depressed adults. J. Psychopathol. Clin. Sci. 131, 341–349 (2022). 10.1037/abn0000742

- 38. Ilyas, Y. et al. Geolocation Patterns, Wi-Fi Connectivity Rates, and Psychiatric Symptoms Among Urban Homeless Youth: Mixed Methods Study Using Self-report and Smartphone Data. JMIR Form. Res. 7, e45309 (2023). 10.2196/45309

- 39. Kathan, A. et al. Personalised depression forecasting using mobile sensor data and ecological momentary assessment. Front. Digit. Health 4, 964582 (2022). 10.3389/fdgth.2022.964582

- 40. Saeb, S. et al. Mobile phone sensor correlates of depressive symptom severity in daily-life behavior: an exploratory study. J. Med. Internet Res. 17, e175 (2015). 10.2196/jmir.4273

- 41. Saeb, S., Lattie, E. G., Schueller, S. M., Kording, K. P. & Mohr, D. C. The relationship between mobile phone location sensor data and depressive symptom severity. PeerJ 4, e2537 (2016). 10.7717/peerj.2537

- 42. Rieke, N. et al. The future of digital health with federated learning. npj Digit. Med. 3, 1–7 (2020). 10.1038/s41746-020-00323-1

- 43. Antunes, R. S., André da Costa, C., Küderle, A., Yari, I. A. & Eskofier, B. Federated learning for healthcare: systematic review and architecture proposal. ACM Trans. Intell. Syst. Technol. 13, 1–23 (2022). 10.1145/3501813

- 44. Savova, G. K. et al. Mayo clinical text analysis and knowledge extraction system (cTAKES): architecture, component evaluation and applications. J. Am. Med. Inform. Assoc. 17, 507–513 (2010). 10.1136/jamia.2009.001560

- 45. Office of Mental Health. Guidance on evaluation and discharge practices for comprehensive psychiatric emergency programs (CPEP) and §9.39 emergency departments (ed). Tech. Rep., New York State Department of Health (2023).

- 46. World Health Organization. ICD-10: International Statistical Classification of Diseases and Related Health Problems: Tenth Revision (2004).

- 47. Röder, M., Both, A. & Hinneburg, A. Exploring the space of topic coherence measures. In Proceedings of the Eighth ACM International Conference on Web Search and Data Mining, WSDM ’15, 399–408 (Association for Computing Machinery, New York, NY, USA, 2015).

- 48. Chen, T. & Guestrin, C. XGBoost: A scalable tree boosting system. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, KDD ’16, 785–794 (Association for Computing Machinery, New York, NY, USA, 2016).

- 49. Friedman, J. H. Greedy function approximation: a gradient boosting machine. Ann. Stat. 29, 1189–1232 (2001). 10.1214/aos/1013203451

- 50. Bergstra, J. S., Bardenet, R., Bengio, Y. & Kégl, B. Algorithms for hyper-parameter optimization. In (eds Shawe-Taylor, J., Zemel, R. S., Bartlett, P. L., Pereira, F. & Weinberger, K. Q.) Advances in Neural Information Processing Systems 24, 2546–2554 (Curran Associates, Inc., 2011).

- 51. Dasgupta, S. & McAllester, D. (eds.). Making a Science of Model Search: Hyperparameter Optimization in Hundreds of Dimensions for Vision Architectures, vol. 28 of Proceedings of Machine Learning Research (PMLR, Atlanta, Georgia, USA, 2013).

- 52. DeLong, E. R., DeLong, D. M. & Clarke-Pearson, D. L. Comparing the areas under two or more correlated receiver operating characteristic curves: a nonparametric approach. Biometrics 44, 837–845 (1988). 10.2307/2531595

- 53. Lundberg, S. M. et al. From local explanations to global understanding with explainable AI for trees. Nat. Mach. Intell. 2, 56–67 (2020). 10.1038/s42256-019-0138-9
