Abstract

Diabetes is one of the leading causes of morbidity and mortality in the United States and worldwide. Traditionally, diabetes detection from retinal images has relied solely on signs of diabetic retinopathy. This research aimed to develop an artificial intelligence (AI) machine learning model that can detect the presence of diabetes from fundus images of eyes without any diabetic eye disease. A machine learning algorithm was trained on the EyePACS dataset, consisting of 47,076 images. Patients were also divided into cohorts based on disease duration, each cohort consisting of patients diagnosed within the timeframe in question (e.g., 15 years) and healthy participants. The algorithm achieved an area under the receiver operating characteristic curve (AUC) of 0.86 in detecting diabetes per patient visit when averaged across camera models, and an AUC of 0.83 in detecting diabetes per image. The results suggest that diabetes may be diagnosed non-invasively using fundus imagery alone. This may enable diabetes diagnosis at the point of care and in other accessible venues, facilitating the diagnosis of many people with undiagnosed diabetes.

Keywords: Diabetes, Artificial intelligence, Machine learning

1. Introduction

The prevalence and incidence of diabetes, one of the leading causes of morbidity and mortality in the United States, are rapidly increasing and projected to continue climbing. Worldwide, an estimated 536.6 million adults were living with diabetes in 2021, with an anticipated 46 % increase by 2045 [1]. As of 2019, an estimated 37.3 million Americans (11.3 %) had diabetes [2], and projections place the prevalence of diabetes in 2031 at roughly 14 % [3,4]. Patients with diabetes report much higher rates of medical disability than the general American population, including disabilities resulting from or comorbid with diabetes [2]. Among these are vision disabilities, cardiovascular disease, lower-extremity amputation, and chronic kidney disease [2,5]. While many of these complications are preventable or may be mitigated with proper care, they are rarely reversible and may develop before treatment begins [6]. Early diagnosis is therefore critical to disease management and to the continuing health of many Americans. Fasting plasma glucose and glycated hemoglobin (HbA1c) blood tests are currently the most established and accepted tools for diagnosing diabetes. Although both have been shown to be accurate [7,8], an estimated 23 % of adults with diabetes in the United States and 44.7 % of the global adult population with diabetes remain undiagnosed [2,9].

One of the key hallmarks of diabetes is its effect on the microvasculature; the eye, and the retina in particular, is one of the few places where neurovascular circulation can be accessed, imaged, and analyzed non-invasively. For many decades, the retina has been a focal point for monitoring diabetic complications, as advanced diabetic disease, such as non-proliferative and proliferative diabetic retinopathy, tends to cause significant retinal changes. More recently, with the advent of machine learning and AI, there has been increasing interest in otherwise “normal”, non-diseased retinal fundus images that can reveal systemic pathology. AI algorithms have shown the ability to interpret subclinical information from retinal anatomy in order to make predictions about systemic indications, such as cardiovascular risk factors [10], biomarkers including muscle mass and height [11], and chronic kidney disease (CKD) and diabetes [12,13]. These predictions extend to the future development of disease, such as diabetic retinopathy [14]. Automated algorithms have also shown increasing accuracy and reliability in diagnosing clinical conditions in recent years. A pivotal FDA study by our group reported clinical validation for the diagnosis of diabetic retinopathy from a single image per eye, with sensitivity and specificity both significantly above 90 % using both tabletop and portable cameras [15,16].

One of the key arguments in favor of using AI in healthcare is scalability [17–19]. Records may be reviewed, data aggregated, and patients diagnosed in the time it would typically take a doctor to see a single patient. Additionally, the portability of many AI systems gives patients access to healthcare in areas that are typically underserved or unvisited by specialists. Although a lack of stable computational infrastructure and resources remains a challenge in particularly low-resource settings, AI systems that use smartphones and handheld cameras have been shown to be effective [12,20,21]. Two studies in particular reported strong results using fundus images to detect type 2 diabetes mellitus (T2DM) [12,13].

The first, performed on a Chinese population, used both fundus cameras and smartphones to diagnose diabetes from fundus images with an AUC of 0.91 [12]. The second used the Qatar Biobank dataset with a novel neural network design to do the same, with an AUC of 0.95 [13]. However, these studies were limited by their demographically narrow populations and by not excluding patients with diabetic eye disease, limitations which this study aims to address. Additionally, the previous studies did not differentiate between disease durations, whereas the current study selected and analyzed patients by disease duration, including recently diagnosed patients with short disease duration. The current study shows that diabetes is significantly easier and more accurate to diagnose in patients with longer disease duration, presumably due to cumulative vascular changes in the eye: diagnostic accuracy improved in correlation with disease duration.

This study used retinal fundus images of patients with and without diabetes, none of whom had evidence of diabetic eye disease. It aimed to develop an AI system for the early diagnosis of diabetes from retinal fundus images showing no evidence of diabetic retinopathy. Furthermore, this study differentiated between disease durations.

2. Materials and methods

2.1. Dataset

We used a dataset of retinal fundus images compiled and provided by EyePACS [22]. The data consisted of 51,394 images from 7,606 patients who visited the clinics involved in the project between 2016 and 2021. Of the patients with reported gender, 38 % were male and 62 % were female or other; the mean age was 56.51 years (Table 1). All images and data were de-identified according to the Health Insurance Portability and Accountability Act (HIPAA) “Safe Harbor” method before being transferred to the researchers. Institutional Review Board exemption was obtained from the Sterling Independent Review Board under a category 4 exemption (DHHS).

Table 1.

Key dataset characteristics – general.

| Characteristic | Value |
|---|---|
| Number of patients | 7,606 |
| Number of images | 51,394 |
| Age: mean, years (SD) | 56.51 (12.42), n = 7,605 |
| Gender (% male) | 38.3 %, n = 7,322 |
| HbA1c: mean, % (SD) | 7.82 (2.60), n = 4,585 |
| Ethnicity | 52.2 % Latin American, 13.2 % ethnicity not specified, 12.7 % Caucasian, 8.8 % African Descent, 6.5 % Indian subcontinent origin, 3.7 % Asian, 1.5 % Other, n = 7,449 |

The dataset contained up to six images per patient per visit: one macula-centered image, one disc-centered image, and one centered image per eye. Patients’ metadata contained a self-reported measure of the time elapsed since receiving a diabetes diagnosis, or the absence of such a diagnosis; EyePACS discretized these values into the categories shown in Table 3 (for demographic data of the two groups, see Table 2). Images lacking this metadata, or deemed ungradable by EyePACS doctors following study protocols [23], were excluded. Indications of diabetic eye disease were provided by professional ophthalmologists as part of the EyePACS dataset metadata, and such images were excluded as well. In the resulting cohort, the nondiabetic category contained the fewest patients. Data from all patients without diabetes were therefore used: the number of visits by patients without diabetes was calculated, and an equivalent number of visits was then randomly sampled from each disease duration category (for demographic data by disease duration, see Appendix A).
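The visit-balancing step above can be sketched as follows. This is a minimal illustration, not the authors' pipeline: the function name `balance_visits`, the category labels, and the visit IDs are hypothetical, and it assumes visits are already grouped by disease duration category. Every visit of the smallest (healthy) category is kept, and the same number of visits is drawn without replacement from each other category.

```python
import random

def balance_visits(visits_by_category, reference="Healthy", seed=0):
    """Keep every visit in the reference category and randomly sample the same
    number of visits, without replacement, from each other category.
    (Hypothetical helper illustrating the sampling described in the text.)"""
    rng = random.Random(seed)
    n_ref = len(visits_by_category[reference])
    balanced = {reference: list(visits_by_category[reference])}
    for category, visits in visits_by_category.items():
        if category == reference:
            continue
        # random.sample raises ValueError if a category has fewer visits than n_ref;
        # per the text, the nondiabetic category was the smallest, so this should not occur.
        balanced[category] = rng.sample(list(visits), n_ref)
    return balanced

# Hypothetical visit IDs grouped by disease duration category.
visits = {
    "Healthy": ["h1", "h2", "h3"],
    "<=1": ["a1", "a2", "a3", "a4", "a5"],
    "2": ["b1", "b2", "b3", "b4"],
}
balanced = balance_visits(visits)
print({k: len(v) for k, v in balanced.items()})  # {'Healthy': 3, '<=1': 3, '2': 3}
```

Fixing the random seed makes the sampled subset reproducible across runs, which matters when the same balanced cohort is reused for all cross-validation folds.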

Table 2.

Key dataset characteristics divided by diagnosis.

| Characteristic | Patients with diabetes | Healthy participants |
|---|---|---|
| Number of patients | 6,907 | 699 |
| Number of images | 46,948 | 4,446 |
| Age: mean in years (SD) | 56.58 (12.32), n = 6907 | 55.85 (13.34), n = 698 |
| Gender (% male) | 38.4 %, n = 6696 | 37.0 %, n = 626 |
| HbA1c: mean % (SD) | 7.93 (2.52), n = 4330 | 5.88 (3.08), n = 255 |
| Ethnicity | 51.3 % Latin American, 13.2 % Caucasian, 12.2 % ethnicity not specified, 9.2 % African Descent, 7.1 % Indian subcontinent origin, 4.0 % Asian, 1.4 % Other, n = 6765 | 60.5 % Latin American, 23.4 % ethnicity not specified, 7.7 % Caucasian, 4.7 % African Descent, 2.5 % Other, n = 684 |

Table 3.

Cohort distribution by disease duration.

| Disease duration (years) | Patients |
|---|---|
| Healthy | 699 |
| ≤1 | 826 |
| 2 | 781 |
| 3 | 762 |
| 4 | 741 |
| 5 | 732 |
| 6–10 | 777 |
| 11–15 | 777 |
| 16–20 | 763 |
| >20 | 748 |

2.2. Algorithm development

The model was a convolutional neural network (CNN) (for the full architecture, see Appendix E) trained with the Adam optimizer, a learning rate of 0.001, and cross-entropy loss on two NVIDIA RTX 2080 Ti graphics cards. The training hyperparameters were chosen in advance and not changed, to prevent overfitting.
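To make the stated optimizer and loss concrete, here is a minimal standard-library sketch of Adam minimizing cross-entropy at the stated learning rate of 0.001. It is an illustration under assumptions, not the study's model: a two-feature logistic regression stands in for the CNN (whose architecture is only given in Appendix E), binary cross-entropy stands in for the two-class cross-entropy loss, and the values `beta1=0.9`, `beta2=0.999`, and `eps=1e-8` are common Adam defaults assumed here because the text does not state them. The toy data are hypothetical.

```python
import math

def _sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def bce_loss_and_grad(params, xs, ys):
    """Mean binary cross-entropy of a logistic model and its gradient.
    params holds the weights followed by the bias; for a sigmoid output
    the per-example gradient is (p - y) * x."""
    *w, b = params
    loss = 0.0
    grad = [0.0] * len(params)
    for x, y in zip(xs, ys):
        p = _sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
        p = min(max(p, 1e-12), 1 - 1e-12)  # clamp to avoid log(0)
        loss -= y * math.log(p) + (1 - y) * math.log(1 - p)
        for i, xi in enumerate(x):
            grad[i] += (p - y) * xi
        grad[-1] += p - y
    n = len(xs)
    return loss / n, [g / n for g in grad]

def train_adam(xs, ys, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8, steps=2000):
    """Minimize the loss with Adam (bias-corrected first and second moment estimates)."""
    params = [0.0] * (len(xs[0]) + 1)
    m = [0.0] * len(params)
    v = [0.0] * len(params)
    for t in range(1, steps + 1):
        _, grad = bce_loss_and_grad(params, xs, ys)
        for i, g in enumerate(grad):
            m[i] = beta1 * m[i] + (1 - beta1) * g       # running mean of gradients
            v[i] = beta2 * v[i] + (1 - beta2) * g * g   # running mean of squared gradients
            m_hat = m[i] / (1 - beta1 ** t)
            v_hat = v[i] / (1 - beta2 ** t)
            params[i] -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return params

xs = [[0.0, 1.0], [1.0, 0.0], [0.2, 0.9], [0.9, 0.1]]  # hypothetical toy features
ys = [0, 1, 0, 1]
initial_loss, _ = bce_loss_and_grad([0.0, 0.0, 0.0], xs, ys)
params = train_adam(xs, ys)
final_loss, _ = bce_loss_and_grad(params, xs, ys)
print(round(initial_loss, 3), round(final_loss, 3))
```

Fixing these values before training, as the text describes, avoids tuning them against the validation folds.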

Given the size of the dataset, performance was measured using 10-fold cross-validation: the dataset was divided into tenths, and each of ten models was trained on nine tenths and validated on the remaining tenth, with a different tenth held out for each model. In this way, the model was validated on the entire dataset, with each tenth evaluated by a model that had not been trained on it.
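A minimal sketch of the 10-fold split follows. One assumption is labeled explicitly: the text only says the data were divided into tenths, and here all images of a patient are kept in the same fold (a common way to avoid patient-level leakage between training and validation). The helper names and the patient IDs are hypothetical.

```python
import random

def patient_level_folds(image_patient_ids, k=10, seed=0):
    """Assign each image a fold number in 0..k-1, keeping all images of a patient together.
    (Grouping by patient is an assumption, not stated in the text.)"""
    patients = sorted(set(image_patient_ids))
    random.Random(seed).shuffle(patients)
    fold_of_patient = {p: i % k for i, p in enumerate(patients)}
    return [fold_of_patient[p] for p in image_patient_ids]

def cross_validation_splits(fold_ids, k=10):
    """Yield (train_indices, validation_indices); each fold is held out exactly once."""
    for fold in range(k):
        train = [i for i, f in enumerate(fold_ids) if f != fold]
        validation = [i for i, f in enumerate(fold_ids) if f == fold]
        yield train, validation

# Hypothetical example: 20 patients with 3 images each.
image_patient_ids = [f"p{i // 3}" for i in range(60)]
fold_ids = patient_level_folds(image_patient_ids, k=10)
splits = list(cross_validation_splits(fold_ids, k=10))
# Pooling the ten validation folds covers every image exactly once.
pooled = sorted(i for _, validation in splits for i in validation)
print(len(splits), len(pooled))  # 10 60
```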

Additionally, models were trained on cohorts of patients divided according to disease duration (for the exact model analysis pipeline, see Appendix F). Each cohort consisted of patients diagnosed within the timeframe in question (e.g., 15 years) and healthy participants, and 10-fold cross-validation was performed on these cohorts in the same way. Patient images were additionally balanced by camera type, meaning that equal numbers of patients with and without diabetes were used for each camera type, and were age-adjusted so that the two groups were comparable in age. The cohort distribution is shown in Table 3.
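The cohort construction with camera balancing can be sketched as below, assuming each patient record carries a single camera model and a duration label (hypothetical keys `id`, `camera`, `duration`). For each camera, the larger of the diabetic and healthy groups is randomly downsampled to the size of the smaller one. The age adjustment is omitted because its method is not described in the text; this is an illustrative sketch, not the authors' pipeline.

```python
import random
from collections import defaultdict

def camera_balanced_cohort(patients, duration, seed=0):
    """Return patient IDs for one disease-duration cohort in which, for every
    camera model, diabetic and healthy counts are equal (hypothetical helper)."""
    rng = random.Random(seed)
    groups = defaultdict(lambda: {"diabetes": [], "healthy": []})
    for p in patients:
        if p["duration"] == "Healthy":
            groups[p["camera"]]["healthy"].append(p["id"])
        elif p["duration"] == duration:
            groups[p["camera"]]["diabetes"].append(p["id"])
    cohort = []
    for camera in sorted(groups):
        pos, neg = groups[camera]["diabetes"], groups[camera]["healthy"]
        n = min(len(pos), len(neg))  # downsample the larger class to the smaller one
        cohort += rng.sample(pos, n) + rng.sample(neg, n)
    return cohort

# Hypothetical patients; "x0" belongs to another duration category and is excluded.
patients = (
    [{"id": f"c{i}", "camera": "Canon CR2", "duration": "<=1"} for i in range(5)]
    + [{"id": f"h{i}", "camera": "Canon CR2", "duration": "Healthy"} for i in range(3)]
    + [{"id": f"t{i}", "camera": "Topcon NW400", "duration": "<=1"} for i in range(2)]
    + [{"id": f"u{i}", "camera": "Topcon NW400", "duration": "Healthy"} for i in range(4)]
    + [{"id": "x0", "camera": "Canon CR2", "duration": "2"}]
)
cohort = camera_balanced_cohort(patients, "<=1")
print(len(cohort))  # 10: 3 + 3 for the Canon CR2, 2 + 2 for the Topcon NW400
```

Balancing per camera prevents the model from using the camera model itself as a proxy for diagnosis when one device happens to image more patients with diabetes.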

2.3. Statistical analysis

Results were analyzed in two ways: by image and by visit. For the image analysis, statistical measures were calculated from the model’s prediction for each individual image. For the visit analysis, the model’s predictions were averaged over all images taken during the same patient visit.
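The visit-level aggregation amounts to grouping image scores by visit and taking their mean. A minimal sketch, where `visit_level_scores` and the visit IDs are hypothetical names for illustration:

```python
from collections import defaultdict
from statistics import mean

def visit_level_scores(image_scores):
    """image_scores: iterable of (visit_id, score) pairs, one per image.
    Returns {visit_id: mean score over that visit's images}."""
    by_visit = defaultdict(list)
    for visit_id, score in image_scores:
        by_visit[visit_id].append(score)
    return {visit_id: mean(scores) for visit_id, scores in by_visit.items()}

# Hypothetical per-image model outputs for two visits.
scores = visit_level_scores([("v1", 0.9), ("v1", 0.7), ("v1", 0.8), ("v2", 0.2), ("v2", 0.4)])
print(scores)
```

Averaging over up to six images per visit smooths out image-level noise (for example, a single poorly focused field), which is one plausible reason the per-visit AUC exceeds the per-image AUC.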

To ensure robust statistical inferences, confidence intervals were determined by resampling the results 10,000 times using the bias-corrected and accelerated (BCa) bootstrap method [24]. Results were additionally analyzed separately for ethnic and device subgroups, provided these subgroups were large enough. A subgroup was considered large enough if a resample had less than a 0.01 % chance of containing only one class (e.g., only healthy or only diabetic participants), which corresponds to one resample in 10,000, our resampling rate.
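The BCa interval and the subgroup-size rule can be sketched with the standard library alone. This follows the textbook BCa construction (bias correction z0 from the share of bootstrap replicates below the estimate, acceleration a from the jackknife) applied to a rank-based AUC. Assumptions: resamples containing a single class are redrawn because AUC is undefined for them, ties with the estimate count as one half in z0, and the function names and synthetic data are hypothetical. It is not the authors' code.

```python
import random
from statistics import NormalDist

def auc(scores, labels):
    """Rank-based (Mann-Whitney) AUC; tied scores receive their average rank."""
    order = sorted(range(len(scores)), key=lambda i: scores[i])
    ranks = [0.0] * len(scores)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and scores[order[j + 1]] == scores[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1  # average 1-based rank of the tie block
        i = j + 1
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    rank_sum = sum(r for r, y in zip(ranks, labels) if y == 1)
    return (rank_sum - n_pos * (n_pos + 1) / 2) / (n_pos * n_neg)

def bca_interval(scores, labels, stat=auc, n_boot=10_000, alpha=0.05, seed=0):
    """Point estimate and BCa bootstrap (1 - alpha) confidence interval."""
    rng = random.Random(seed)
    n = len(scores)
    theta = stat(scores, labels)
    boots = []
    while len(boots) < n_boot:
        idx = [rng.randrange(n) for _ in range(n)]
        ys = [labels[i] for i in idx]
        if 0 < sum(ys) < n:  # single-class resamples have no AUC; draw again
            boots.append(stat([scores[i] for i in idx], ys))
    boots.sort()
    nd = NormalDist()
    # Bias correction z0: how far the bootstrap distribution is shifted from theta.
    below = sum(b < theta for b in boots) + 0.5 * sum(b == theta for b in boots)
    z0 = nd.inv_cdf(min(max(below / n_boot, 1e-6), 1 - 1e-6))
    # Acceleration a: skewness of the jackknife (leave-one-out) estimates.
    jack = [stat(scores[:i] + scores[i + 1:], labels[:i] + labels[i + 1:]) for i in range(n)]
    mean_jack = sum(jack) / n
    num = sum((mean_jack - t) ** 3 for t in jack)
    den = 6 * sum((mean_jack - t) ** 2 for t in jack) ** 1.5
    a = num / den if den > 0 else 0.0

    def adjusted_quantile(q):
        z = nd.inv_cdf(q)
        return nd.cdf(z0 + (z0 + z) / (1 - a * (z0 + z)))

    lo = boots[int(adjusted_quantile(alpha / 2) * (n_boot - 1))]
    hi = boots[int(adjusted_quantile(1 - alpha / 2) * (n_boot - 1))]
    return theta, lo, hi

def subgroup_large_enough(n_pos, n_neg, threshold=1 / 10_000):
    """True if a with-replacement resample of the subgroup has less than `threshold`
    probability of containing only one class (all positive or all negative)."""
    n = n_pos + n_neg
    p = n_pos / n
    return p ** n + (1 - p) ** n < threshold

rng = random.Random(1)
labels = [1] * 30 + [0] * 30  # hypothetical subgroup: 30 with diabetes, 30 healthy
scores = [rng.gauss(1.0, 1.0) for _ in range(30)] + [rng.gauss(0.0, 1.0) for _ in range(30)]
estimate, lower, upper = bca_interval(scores, labels, n_boot=2000)
print(f"AUC {estimate:.2f} (95 % CI {lower:.2f}-{upper:.2f})")
print(subgroup_large_enough(2, 3), subgroup_large_enough(30, 30))  # False True
```

The subgroup rule links the two parts: with 10,000 resamples, a subgroup failing `subgroup_large_enough` would be expected to produce at least one undefined, single-class resample.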

2.4. Data and resource availability

Data may be obtained from a third party and are not publicly available. De-identified data used in this study are not publicly available at present. Parties interested in data access should contact JC (jcuadros@eyepacs.com) for queries related to EyePACS. Applications will need to undergo ethical and legal approvals by the respective institutions.

No applicable resources were generated or analyzed during the current study.

3. Results

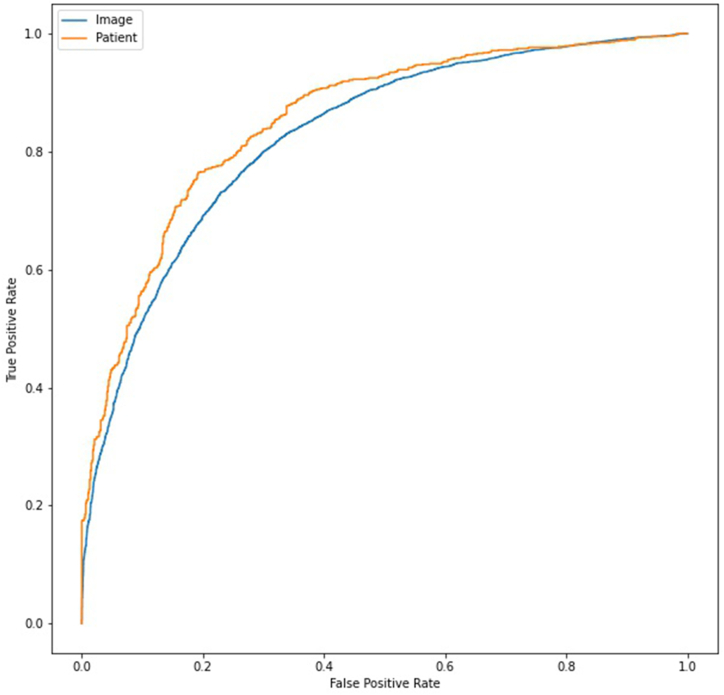

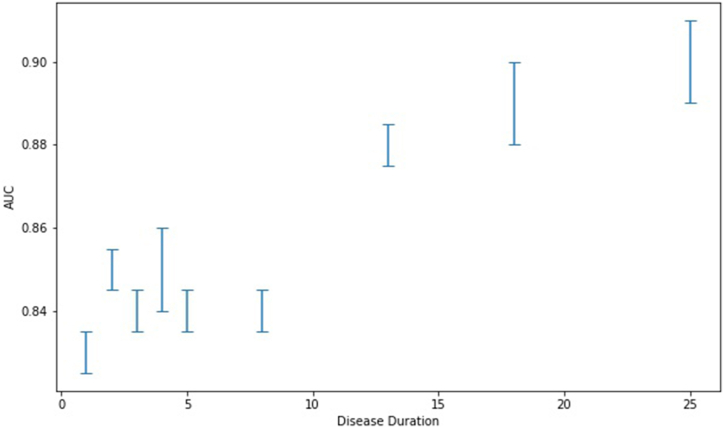

When predictions were averaged across images per patient visit, the model achieved an AUC of 0.86 (95 % CI 0.84–0.88), sensitivity of 79 % (95 % CI 76 %–81 %), specificity of 80 % (95 % CI 77 %–82 %), and accuracy of 79 % (95 % CI 77 %–81 %) (see Fig. 1). Performance was largely consistent across ethnic groups (see Table 4) but varied by camera model, with lower performance for cameras with little available data (see Table 5). For the two cameras with the most images, the Canon CR2 and the Centervue DRS, the model achieved AUCs of 0.86 (95 % CI 0.84–0.89) and 0.89 (95 % CI 0.85–0.92), respectively (see Table 5). Extended versions of these tables are provided in Appendices C and D. Model performance also improved with disease duration (correlation 0.92, p < 0.001; see Fig. 2). In single-image analysis, the model achieved an AUC of 0.83 (95 % CI 0.83–0.84), sensitivity of 76 % (95 % CI 75 %–77 %), specificity of 75 % (95 % CI 74 %–76 %), and accuracy of 76 % (95 % CI 75 %–76 %). Full model performance statistics by disease duration are given in Appendix B.
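Sensitivity, specificity, and accuracy depend on the decision threshold applied to the model's scores, unlike the AUC. The sketch below shows how the three figures follow from a confusion matrix. The threshold of 0.5 and the example scores are assumptions for illustration; the operating point used in the study is not stated.

```python
def threshold_metrics(scores, labels, threshold=0.5):
    """Sensitivity, specificity and accuracy when scores >= threshold are called 'diabetes'.
    The 0.5 default is an assumption; the study's operating point is not stated."""
    tp = sum(s >= threshold and y == 1 for s, y in zip(scores, labels))
    fn = sum(s < threshold and y == 1 for s, y in zip(scores, labels))
    tn = sum(s < threshold and y == 0 for s, y in zip(scores, labels))
    fp = sum(s >= threshold and y == 0 for s, y in zip(scores, labels))
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "accuracy": (tp + tn) / len(labels),
    }

# Hypothetical scores: 3 patients with diabetes (label 1) and 3 healthy participants (label 0).
metrics = threshold_metrics([0.9, 0.8, 0.4, 0.3, 0.6, 0.2], [1, 1, 1, 0, 0, 0])
print(metrics)  # all three metrics equal 2/3 here
```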

Fig. 1.

ROC curves for diabetes diagnosis per image (AUC 0.83) and per patient visit (AUC 0.86).

Table 4.

Ethnicity distribution and associated AUC for patients with reported ethnicity: healthy participants vs. patients diagnosed less than a year ago.

| Ethnicity | Patients | AUC (95 % CI) | Sensitivity (95 % CI) | Specificity (95 % CI) |
|---|---|---|---|---|
| Ethnicity not specified | 288 | 0.85 (0.80, 0.89) | 0.81 (0.73, 0.87) | 0.70 (0.63, 0.77) |
| Latin American | 901 | 0.84 (0.82, 0.87) | 0.87 (0.84, 0.90) | 0.65 (0.61, 0.70) |
| Caucasian | 163 | 0.87 (0.79, 0.92) | 0.93 (0.87, 0.97) | 0.52 (0.39, 0.64) |
| Other | 37 | 0.70 (0.49, 0.85) | 0.88 (0.62, 1.00) | 0.50 (0.26, 0.70) |
| African Descent | 95 | 0.85 (0.75, 0.91) | 0.90 (0.80, 0.96) | 0.50 (0.32, 0.66) |

Table 5.

Camera distribution and associated AUC: healthy participants vs. patients diagnosed less than a year ago.

| Device | Images | AUC (95 % CI) | Sensitivity (95 % CI) | Specificity (95 % CI) |
|---|---|---|---|---|
| Canon CR2 | 1129 | 0.86 (0.84, 0.88) | 0.90 (0.87, 0.92) | 0.67 (0.62, 0.71) |
| Centervue DRS | 308 | 0.89 (0.85, 0.92) | 0.91 (0.85, 0.94) | 0.73 (0.65, 0.80) |
| Crystalvue | 84 | 0.55 (0.42, 0.67) | 0.62 (0.46, 0.76) | 0.38 (0.24, 0.53) |
| Topcon NW400 | 112 | 0.63 (0.52, 0.73) | 0.77 (0.65, 0.86) | 0.29 (0.18, 0.43) |

Fig. 2.

Model accuracy as a function of disease duration.

These results improve on previous findings [12] by demonstrating that neural networks can not only infer the presence of T2DM with high sensitivity and specificity from fundus images, but can also do so from images that lack clinically significant signs of the disease. Moreover, detection is possible at an early stage of the disease, within one year of diagnosis. This capability suggests that the model could serve as an effective screening tool for early T2DM detection. Accuracy also clearly depends on disease duration: the model’s performance exceeded previous results for patients with longer disease duration and fell short of them for newly diagnosed patients.

4. Conclusions

This research demonstrates that diabetes may be detected from fundus images without diabetic retinopathy using machine learning methods. The model demonstrated high sensitivity and specificity, surpassing previous results in patients with longer disease duration and addressing limitations of previous studies. The study also revealed that the impact of T2DM on the fundus becomes more pronounced, and easier to detect, as the disease progresses. Future research should account for this phenomenon when reporting accuracy, given that recently diagnosed patients are the relevant cohort in real-world screening. These findings suggest that fundus imaging could serve as an effective, inexpensive, and non-invasive screening tool for T2DM.

Future research should further investigate the nature of these changes and their potential to predict the development of diabetic retinopathy or other T2DM complications. Additionally, exploring the model's predictive capabilities for pre-diabetes could enable early screening and prevention of disease onset. By addressing these areas, we can enhance our understanding of T2DM progression and improve early detection and intervention strategies.

5. Discussion

This work proposed a method for diagnosing diabetes from fundus images without diabetic retinopathy, improving on previous works that did not differentiate between disease stages or account for the presence of diabetic eye disease [12,13]. As such, this work is more applicable to newly diagnosed patients and to patients in early stages of diabetes, who are most likely to need diagnosis. Diabetes is currently one of the leading causes of morbidity and mortality, and many patients remain undiagnosed. Although diabetes is often diagnosed from fundus imagery only after related retinal complications develop, we hypothesized that a machine learning model could be trained to accurately diagnose patients from images of fundi without diabetic eye disease, before an ophthalmologist could detect the disease. The model performed as expected and improved with disease duration, apparently due to the growing impact of the disease on the ocular microvasculature over time. As noted above, AI-based screening for various conditions offers a low-cost, accessible, and scalable tool with great potential. For diabetes specifically, AI-based retinal screening may increase testing because it is comfortable and easy, and for the same reasons may also increase early testing, which is critical for the reasons discussed above.

Currently, the standard blood tests used for screening require a physician’s referral. The added burden of follow-up, or a lack of concern on the patient’s part, may well be a major cause of under-detection, especially in presumably healthy individuals. Many patients remain undiagnosed until vision impairment prompts an ophthalmological exam [25].

As a whole, novel non-invasive technologies may be of high value as alternative screening tools, facilitating early detection and diagnosis of diabetes. A handful of devices currently allow non-invasive detection of diabetes, such as the Scout DS [26,27], which uses light to detect advanced glycation end-products (AGEs) and other biomarkers in the skin. To our knowledge, the clinical use of these technologies is limited.

Novel methods will not necessarily replace traditional ones, but their adoption will enable parallel, physician-independent screening processes. Integrating these technologies will make screening possible at accessible sites such as workplaces, pharmacies, shopping malls, or even at home using portable devices. Increasing screening rates, particularly at earlier stages of disease, may decrease long-term microvascular complications [28] and may prove to be cost-effective.

There may, however, be legal and ethical issues concerning the use of these new technologies. Community screenings independent of an established healthcare setting are currently not generally encouraged by the American Diabetes Association (ADA). There are questions of liability regarding test results, follow-up testing, and the provision of proper explanations and treatment to patients. Lastly, there are economic issues regarding the cost of these tests, coverage by medical insurance companies for both the test itself and further diagnostic tests, and the ability of existing healthcare systems to accommodate the increase in referrals of newly diagnosed patients.

There are numerous ways to mitigate the above-mentioned risks of the “democratization of screening”. Enabling this shift properly requires a comprehensive, interdisciplinary approach. While further risk-benefit analysis is needed, the benefits may well outweigh the risks, enabling the diagnosis and proper care of millions.

Limitations and further research

This study’s main limitation, which we hope to address in the future, is its retrospective design: both prospective research and external validation are lacking. Furthermore, results varied by camera model, probably due to differences in data availability in the training set. An additional limitation is that patients’ diabetes status was self-reported, which may have lowered the model’s final AUC. Given the high proportion of undiagnosed diabetes, there is a substantial likelihood that people with diabetes are present among the images designated as healthy participants; the model’s true performance may therefore be higher than reported. Similarly, the majority of patients with diabetes are under medical treatment for their diabetes, and we expect that the model would be more accurate in detecting unmanaged diabetes. This research also does not differentiate between type 1 diabetes (T1DM) and type 2 diabetes. Given the relatively low prevalence of T1DM [1], this distinction is unlikely to change the results substantially, but it is still worth exploring. These issues may be addressed in further research, including prospective studies or studies using different datasets. It should be noted, however, that research differentiating between T1DM and T2DM would require a much wider pool of patients, including children, in order to include patients with similarly short disease durations in both groups.
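The argument that self-reported labels may understate performance can be made explicit with a short calculation. Assume, hypothetically, that a fraction π of the participants labeled healthy actually have undiagnosed diabetes, and that the model detects them with the same sensitivity Se as diagnosed patients; both are assumptions, not quantities measured in this study. The observed specificity then mixes the true specificity Sp with the miss rate on these hidden cases:

$$
\mathrm{Sp}_{\mathrm{obs}} = (1-\pi)\,\mathrm{Sp} + \pi\,(1-\mathrm{Se}),
\qquad
\mathrm{Sp}_{\mathrm{obs}} - \mathrm{Sp} = -\pi\,(\mathrm{Se} + \mathrm{Sp} - 1).
$$

For any better-than-chance model (Se + Sp > 1), the observed specificity therefore underestimates the true specificity. For example, with hypothetical values π = 0.10 and Se = Sp = 0.80, the observed specificity would be 0.90 × 0.80 + 0.10 × 0.20 = 0.74.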

The diagnosis of pre-diabetes is outside the scope of the current research but will be included in future validation of the model.

Our work raises an additional clinical question: assuming that machine learning models for the detection of diabetes from retinal images are mainly based on subclinical changes in the vasculature of an end organ, further research would be needed to determine whether medical treatment should differ for patients with positive retinal screening tests, or based on the model’s degree of certainty in the diagnosis.

Ethics Statement

Institutional Review Board exemption was obtained from the Sterling Independent Review Board under a category 4 exemption (DHHS).

Funding

Employees and board members of AEYE Health designed and carried out the study; managed, analyzed and interpreted the data; prepared, reviewed, and approved the article; and were involved in the decision to submit the article. This study was fully funded by AEYE Health, Inc.


CRediT authorship contribution statement

Yovel Rom: Methodology, Investigation, Formal analysis. Rachelle Aviv: Writing – review & editing, Writing – original draft. Gal Yaakov Cohen: Writing – original draft, Supervision. Yehudit Eden Friedman: Writing – original draft. Tsontcho Ianchulev: Writing – review & editing, Supervision. Zack Dvey-Aharon: Supervision, Funding acquisition, Conceptualization.

Declaration of competing interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Footnotes

Supplementary data to this article can be found online at https://doi.org/10.1016/j.heliyon.2024.e36592.


References

1. Sun H., Saeedi P., Karuranga S., Pinkepank M., Ogurtsova K., Duncan B.B., Stein C., Basit A., Chan J.C.N., Mbanya J.C., Pavkov M.E., Ramachandaran A., Wild S.H., James S., Herman W.H., Zhang P., Bommer C., Kuo S., Boyko E.J., Magliano D.J. IDF Diabetes Atlas: global, regional and country-level diabetes prevalence estimates for 2021 and projections for 2045. Diabetes Res. Clin. Pract. 2022;183. doi: 10.1016/j.diabres.2021.109119.

2. Centers for Disease Control and Prevention. National diabetes statistics report. 2022. https://www.cdc.gov/diabetes/data/statistics-report/index.html

3. Lin J., Thompson T.J., Cheng Y.J., Zhuo X., Zhang P., Gregg E., Rolka D.B. Projection of the future diabetes burden in the United States through 2060. Popul. Health Metrics. 2018;16:9. doi: 10.1186/s12963-018-0166-4.

4. Mainous A.G., Baker R., Koopman R.J., Saxena S., Diaz V.A., Everett C.J., Majeed A. Impact of the population at risk of diabetes on projections of diabetes burden in the United States: an epidemic on the way. Diabetologia. 2007;50:934–940. doi: 10.1007/s00125-006-0528-5.

5. Nathan D.M. Long-term complications of diabetes mellitus. N. Engl. J. Med. 1993;328:1676–1685. doi: 10.1056/NEJM199306103282306.

6. UK Prospective Diabetes Study (UKPDS) Group. Intensive blood-glucose control with sulphonylureas or insulin compared with conventional treatment and risk of complications in patients with type 2 diabetes (UKPDS 33). Lancet. 1998;352:837–853. doi: 10.1016/S0140-6736(98)07019-6.

7. Ghazanfari Z., Haghdoost A.A., Alizadeh S.M., Atapour J., Zolala F. A comparison of HbA1c and fasting blood sugar tests in general population. Int. J. Prev. Med. 2010;1:187–194.

8. Nathan D.M. Diabetes: advances in diagnosis and treatment. JAMA. 2015;314:1052–1062. doi: 10.1001/jama.2015.9536.

9. Ogurtsova K., Guariguata L., Barengo N.C., Ruiz P.L.-D., Sacre J.W., Karuranga S., Sun H., Boyko E.J., Magliano D.J. IDF Diabetes Atlas: global estimates of undiagnosed diabetes in adults for 2021. Diabetes Res. Clin. Pract. 2022;183. doi: 10.1016/j.diabres.2021.109118.

10. Poplin R., Varadarajan A.V., Blumer K., Liu Y., McConnell M.V., Corrado G.S., Peng L., Webster D.R. Prediction of cardiovascular risk factors from retinal fundus photographs via deep learning. Nat. Biomed. Eng. 2018;2:158–164. doi: 10.1038/s41551-018-0195-0.

11. Rim T.H., Lee G., Kim Y., Tham Y.-C., Lee C.J., Baik S.J., Kim Y.A., Yu M., Deshmukh M., Lee B.K. Prediction of systemic biomarkers from retinal photographs: development and validation of deep-learning algorithms. The Lancet Digital Health. 2020;2:e526–e536. doi: 10.1016/S2589-7500(20)30216-8.

12. Zhang K., Liu X., Xu J., Yuan J., Cai W., Chen T., Wang K., Gao Y., Nie S., Xu X. Deep-learning models for the detection and incidence prediction of chronic kidney disease and type 2 diabetes from retinal fundus images. Nat. Biomed. Eng. 2021;5:533–545. doi: 10.1038/s41551-021-00745-6.

13. Al-Absi H.R.H., Pai A., Naeem U., Mohamed F.K., Arya S., Sbeit R.A., Bashir M., El Shafei M.M., El Hajj N., Alam T. DiaNet v2 deep learning based method for diabetes diagnosis using retinal images. Sci. Rep. 2024;14:1595. doi: 10.1038/s41598-023-49677-y.

14. Rom Y., Aviv R., Ianchulev T., Dvey-Aharon Z. Predicting the future development of diabetic retinopathy using a deep learning algorithm for the analysis of non-invasive retinal imaging. BMJ Open Ophthalmology. 2022;7. doi: 10.1136/bmjophth-2022-001140.

15. Food and Drug Administration (FDA). FDA device regulation: 510(k). 2022. https://www.accessdata.fda.gov/scripts/cdrh/cfdocs/cfpmn/pmn.cfm?ID=K221183

16. Food and Drug Administration (FDA). FDA device regulation: 510(k). 2024. https://www.accessdata.fda.gov/scripts/cdrh/cfdocs/cfpmn/pmn.cfm?ID=K240058

17. Panch T., Szolovits P., Atun R. Artificial intelligence, machine learning and health systems. J. Glob. Health. 2018;8:020303. doi: 10.7189/jogh.08.020303.

18. Rajkomar A., Oren E., Chen K., Dai A.M., Hajaj N., Hardt M., Liu P.J., Liu X., Marcus J., Sun M. Scalable and accurate deep learning with electronic health records. NPJ Digital Medicine. 2018;1:1–10. doi: 10.1038/s41746-018-0029-1.

19. Sierra-Sosa D., Garcia-Zapirain B., Castillo C., Oleagordia I., Nuno-Solinis R., Urtaran-Laresgoiti M., Elmaghraby A. Scalable healthcare assessment for diabetic patients using deep learning on multiple GPUs. IEEE Trans. Ind. Inf. 2019;15:5682–5689.

20. Liu T.A. Smartphone-based, artificial intelligence–enabled diabetic retinopathy screening. JAMA Ophthalmology. 2019;137:1188–1189. doi: 10.1001/jamaophthalmol.2019.2883.

21. AEYE Health. AEYE Health reports pivotal clinical trial results of its AI algorithm for the autonomous screening and detection of more-than-mild diabetic retinopathy. PR Newswire. https://www.prnewswire.com/news-releases/aeye-health-reports-pivotal-clinical-trial-results-of-its-ai-algorithm-for-the-autonomous-screening-and-detection-of-more-than-mild-diabetic-retinopathy-301476299.html (accessed February 28, 2022).

22. Eye Picture Archive Communication System (EyePACS). 2010. eyepacs.com.

23. EyePACS L.L.C. EyePACS protocol narrative: grading procedures and rules. 2020. https://www.eyepacs.org/consultant/Clinical/grading/EyePACS-DIGITAL-RETINAL-IMAGE-GRADING.pdf

24. DiCiccio T.J., Efron B. Bootstrap confidence intervals. Stat. Sci. 1996;11:189–228. doi: 10.1214/ss/1032280214.

25. American Public Health Association. A call to improve patient and public health outcomes of diabetes through an enhanced integrated care approach. 2021. https://www.apha.org/Policies-and-Advocacy/Public-Health-Policy-Statements/Policy-Database/2022/01/07/Call-to-Improve-Patient-and-Public-Health-Outcomes-of-Diabetes

26. Maynard J.D., Barrack A., Murphree P., Lathouris P., Tentolouris N., Londou S., Paley C., Swanepoel M., Pope R. Comparison of SCOUT DS, the ADA diabetes risk test and random capillary glucose for diabetes screening in at-risk populations. Can. J. Diabetes. 2013;37:S78. doi: 10.1016/j.jcjd.2013.08.239.

27. Ediger M.N., Olson B.P., Maynard J.D. Noninvasive optical screening for diabetes. J. Diabetes Sci. Technol. 2009;3:776–780. doi: 10.1177/193229680900300426.

28. Perreault L., Pan Q., Aroda V.R., Barrett-Connor E., Dabelea D., Dagogo-Jack S., Hamman R.F., Kahn S.E., Mather K.J., Knowler W.C., Diabetes Prevention Program Research Group. Exploring residual risk for diabetes and microvascular disease in the Diabetes Prevention Program Outcomes Study (DPPOS). Diabet. Med. 2017;34:1747–1755. doi: 10.1111/dme.13453.
