Abstract

Episodic memory (EM) allows us to remember and relive past events and experiences and has been linked to cortical-hippocampal reinstatement of encoding activity. While EM is fundamental to establish a sense of self across time, this claim and its link to the sense of agency (SoA), based on bodily signals, has not been tested experimentally. Using real-time sensorimotor stimulation, immersive virtual reality, and fMRI we manipulated the SoA and report stronger hippocampal reinstatement for scenes encoded under preserved SoA, reflecting recall performance in a recognition task. We link SoA to EM showing that hippocampal reinstatement is coupled with reinstatement in premotor cortex, a key SoA region. We extend these findings in a severe amnesic patient whose memory lacked the normal dependency on the SoA. Premotor-hippocampal coupling in EM describes how a key aspect of the bodily self at encoding is neurally reinstated during the retrieval of past episodes, enabling a sense of self across time.

Subject terms: Long-term memory, Consciousness, Hippocampus

This study describes a premotor-hippocampal coupling in episodic memory, revealing how the bodily sensory context and in particular the sense of agency of the observer at encoding is neurally reinstated during the retrieval of past episodes.

Introduction

Episodic memory (EM) refers to a form of long-term declarative memory associated with the recall of the sensory, perceptual and emotional details of an event1,2. EM retrieval is linked to the event’s encoding context and is modulated by various parameters such as the emotional state of the observer and the sensory stimuli of the event during encoding (i.e., visual, auditory, or olfactory cues)3–6. A key neural mechanism in EM is the reactivation of brain regions involved at encoding during retrieval, a process which has been called reinstatement and has been observed in several cortical areas7–10. For example, studies reported the activation of primary and extrastriate visual cortex during the retrieval of events containing visual stimuli as well as the reactivation of auditory brain regions during retrieval of events containing auditory stimuli6,11. Reinstatement has also been observed in the medial temporal lobe (i.e., in hippocampus, parahippocampus, perirhinal cortex)12–15. Moreover, such hippocampal reinstatement has been associated with memory performance: hippocampal reinstatement was stronger for correctly compared to incorrectly retrieved items15 and was more similar to hippocampal activity at encoding when participants successfully retrieved items. Accordingly, it has been proposed that the hippocampus is a key node necessary for EM recollection, mediating the retrieval of sensory information stored in respective sensory cortical regions16–18, further supported by findings that hippocampal activity at encoding19,20 and retrieval9,15,21,22 predicts the reinstatement of cortical areas at retrieval.

However, the sensory context of the observer’s body at encoding and its potential reinstatement during the retrieval process has only received scant attention. Thus, although previous authors have speculated about the importance of the observer’s body in episodic memory23,24, there are only very few laboratory-based empirical studies that have tested whether sensory bodily inputs - such as tactile, proprioceptive, or vestibular stimuli and their integration with motor signals of the observer’s body at encoding and retrieval - impact EM. This neglect is surprising because the body provides a rich set of sensory-motor inputs during encoding and may provide cues that aid memory formation for visual and auditory stimuli. The few studies that have been carried out revealed that congruent body posture between encoding and retrieval facilitates retrieval of words25 and personal events24,26. However, none of these studies investigated whether neural reinstatement, as described for the visual and auditory context6,11, applies to the bodily sensory context.

Beyond their importance in body representation, certain sensory bodily signals of the observer’s body are also critical for a bodily form of self-consciousness, termed bodily self-consciousness (BSC27–30). BSC is based on the integration of multisensory bodily inputs and motor signals31–37 and includes the sense of agency (SoA), body ownership, the first-person perspective (1PP), and self-location27,29. Specific components of BSC, such as self-location and 1PP, can be altered experimentally using virtual reality (VR), for example by exposing participants to conflicting multisensory visuotactile or visuomotor stimulation38,39 from either a first-person28,36 or a third-person perspective (3PP)36,40,41. Visuomotor and perspectival incongruencies have also been shown to modulate SoA42–46. However, despite prominent proposals that self-consciousness is an essential part of EM, as argued by Endel Tulving1,47,48, the impact of experimental alterations of BSC during encoding on later retrieval processes has only recently been investigated. Thus, behavioral evidence demonstrated that the modulation of BSC, using conflicting multisensory and sensorimotor stimulation, influences EM and spatial memory49–54. Although not focusing on the BSC framework, the work of St. Jacques and colleagues provided preliminary evidence that BSC may impact EM by showing that the retrieval of an event from a 3PP (compared to a 1PP) led to poorer recollection of the sensory and perceptual details experienced at encoding, characterized at the neural level by changes in posterior parietal regions49,50,53–55. More recently, other studies showed that events seen from a natural 1PP56–59 or with higher body ownership60,61, during encoding, were associated with more vivid memories and better memory performance compared to events encoded from the 3PP or without a body view56–59. Collectively, these studies suggest that the modulation of BSC during the encoding of an event affects the later retrieval of that event.

Even fewer studies investigated the underlying brain mechanisms56,59,61. Such research recorded brain activity either only during encoding or only during retrieval, and focused on the hippocampus and medial temporal lobe structures, thus leaving out the investigation of the coupling of the medial temporal lobe regions with BSC-sensitive areas in fronto-parietal cortex56,59,61. For example, one study related hippocampal activity during the retrieval of an event to the vividness of the recollection and showed that this depends on the perspective (1PP and 3PP) participants had adopted at encoding56. Another study observed functional connectivity changes between the right hippocampus and the right parahippocampus, depending on whether participants saw their body from their habitual perspective (1PP) or not, again during encoding59. Therefore, it is currently unknown how the neural networks mediating the bodily sensory context and BSC are coupled with EM networks and whether this is based on neural reinstatement.

Here, we investigated how changes in BSC during the encoding of virtual scenes impact EM and neural reinstatement using behavioral experiments, virtual reality (VR), and fMRI. We carried out a series of four VR experiments in a total of 75 healthy subjects as well as in a rare amnesic patient with severe autobiographical memory loss (but preserved BSC). Based on previous work57–59, we designed a VR paradigm and tested EM one hour after the encoding session. For this we combined fully immersive VR with motion tracking and fMRI, allowing us to modulate BSC, by using different levels of visuomotor and perspectival congruency, during the encoding of objects presented in three different virtual scenes. Each scene was associated with a specific experimental condition differing in visuomotor and perspectival congruency (first-person synchronous avatar, first-person asynchronous avatar, and third-person asynchronous avatar). Our participants’ level of BSC modulation was assessed in a separate session (i.e., in a fourth immersive virtual scene). EM was tested one hour after the encoding session using a scene recognition task, critically immersing our participants in the same virtual scenes but without the avatar and the related BSC manipulation. In healthy participants (experiments 1-3), we expected to find higher BSC and better recognition performance for the scenes encoded under visuomotor and perspectival congruency, and higher hippocampal reinstatement in the recognition task for scenes encoded under visuomotor and perspectival congruency, for successful trials. We report that reinstatement-related activity in the hippocampus, activity that we associated with our participants’ performance in scene recognition, is explained by the activation of an independently defined BSC network, including the left dorsolateral premotor cortex (dPMC) contralateral to the moving right hand. 
Moreover, such premotor-hippocampal coupling of reinstatement was modulated by visuomotor and perspectival conditions, being stronger for visuomotor and perspectival congruency, linking BSC with the recognition of objects in complex three-dimensional scenes. These data were confirmed and extended by clinical and experimental findings (using the same VR paradigms) in a rare patient, with severe amnesia caused by damage to bilateral hippocampi and adjacent medial temporal structures, who had a normal BSC but was unable to relive and re-experience our complex 3D scenes when she encoded the scenes with visuomotor and perspectival congruency, compatible with a disruption of normal BSC-EM coupling.

Results

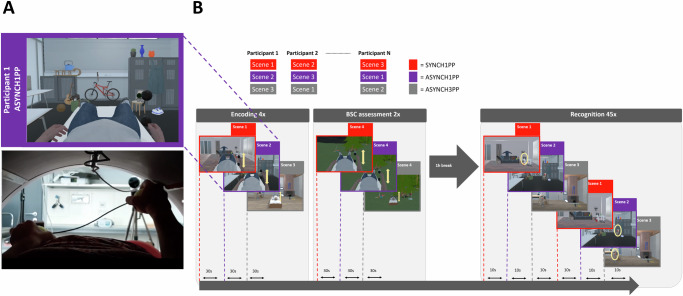

To investigate the effects of BSC on EM, as manipulated by visuomotor and perspectival congruency during encoding, we immersed participants in different 3D virtual environments. The VR paradigm and the visual stimuli were inspired by and adapted from previous EM research using immersive VR50,57,59. Because immersive VR during fMRI is challenging, all participants were first carefully familiarized with the setup and the different virtual environments and were embodied with a virtual body representation. For Experiments 1 and 3, we used a head-mounted display and for Experiment 2 an MRI-compatible system (see Methods). Participants were lying down in a mock MR scanner (Experiments 1 and 3) or in the MRI scanner (Experiment 2) and their movements were tracked with the motion tracking system as described in ref. 62. VR paradigms and experimental procedures were implemented using our custom-built system ExVR (https://github.com/BlankeLab/ExVR), which performed real-time control and visual rendering with realistic lighting and shading and, for Experiment 2, synchronized MRI acquisition with VR experimental data.

We tested memory for complex indoor scenes in three experimental conditions, differing in levels of BSC, manipulated by visuomotor and perspectival congruency. In each condition, participants were immersed and observed the 3D scene and their avatar while moving their right hand. In the first condition, the scene and the avatar were observed from a 1PP and moved synchronously with participants’ upper limb movement (SYNCH1PP; visuomotor and perspectival congruency, no manipulation of BSC; Supplementary Video 1). In the second condition, the avatar was observed from a 1PP but the avatar movement was delayed with respect to the participants’ upper limb movement (ASYNCH1PP; visuomotor mismatch, altered BSC, Fig. 1A). Finally, in the third condition, participants observed an avatar from a 3PP, and the movement was delayed as well (ASYNCH3PP; visuomotor and perspectival mismatch, strong alteration of BSC). Memory for the scenes was tested one hour after the encoding session in a recognition task where participants were immersed back in the three different virtual scenes (Fig. 1B; randomized across participants). Critically, during the recognition task, no avatar or body view was implemented, thus, all scenes across conditions were observed from the same location and viewpoint as during encoding, but without any avatar and without manipulation of visuomotor and perspectival congruency. The VR scene was either a scene (Fig. 1B) containing the same objects (as shown during the encoding session) or a scene that contained the same number of objects, of which one was changed compared to the encoding session. Healthy participants were asked to report if the scene had changed compared to the encoding session or not. Encoding was incidental in Experiments 1 and 2, that is, participants were not told during encoding that their memory was later tested for the scenes (see Methods). 
In Experiments 1 and 3, the task was only behavioral, and in Experiment 2, we also recorded brain activity with fMRI during the encoding, BSC assessment, and recognition sessions. Since Experiments 1 and 2 were performed under similar instructions, we combined both samples for behavioral analysis. Because our primary interest was to understand how BSC, as manipulated by visuomotor and perspectival congruency, modulates EM, we compared the synchronous condition seen from the congruent first-person viewpoint (visuomotor and perspectival congruency; SYNCH1PP) with the two conditions in which visuomotor and perspectival congruency was altered (ASYNCH1PP, ASYNCH3PP). BSC and its modulation across the three conditions were assessed using a standard questionnaire with continuous ratings between 0 and 1 (see Methods) and, importantly, we used a different complex outdoor scene, to avoid any interference with the encoding of the three scenes used during encoding and recognition sessions (Fig. 1B). More specifically, we used a fourth scene for the BSC assessment so that participants would not focus differently on the body during encoding (thereby avoiding potential interference with the encoding process), and to minimize their awareness of the different experimental conditions concerning the bodily manipulations. The sense of agency (SoA) was used to quantify BSC and its modulation across conditions, as the delay is the major parameter varying between the preserved BSC condition (SYNCH1PP) and the two other conditions (ASYNCH1PP and ASYNCH3PP). However, to further quantify the overall effect of the experimental manipulation on BSC, we also asked participants to rate their body ownership with respect to the avatar, as well as their level of fear when the avatar was threatened with a virtual knife (see Methods). In the following section, we first describe the behavioral results of Experiments 1 to 3 and then focus on the imaging results of Experiment 2.

Fig. 1. Experimental design: alteration of visuomotor synchrony and perspective in immersive virtual reality.

A Scene snapshot as presented to participants (upper panel) depicts the ASYNCH1PP condition, corresponding to the altered condition of BSC in which the participant was shown the avatar with a first-person perspective (1PP), and with delayed arm movements (lower panel). B Encoding of three immersive virtual scenes was associated with three different levels of visuomotor and perspectival congruency. One hour after the encoding session, a recognition task assessed scene memory. Note that conditions were attributed solely based on encoding association and the recognition task was always performed without any avatar. BSC sensitivity was tested for each condition in an independent session (with a fourth virtual scene to avoid memory interference). BSC, Bodily self-consciousness.

Higher SoA during incidental encoding when immersed with visuomotor and perspectival congruency (behavior, Experiments 1 and 2)

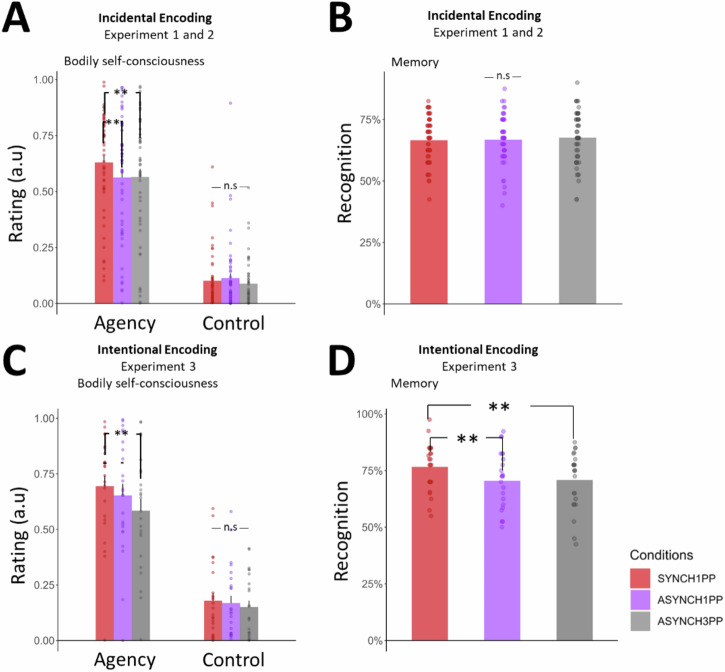

To quantify the difference in SoA under the different conditions we applied a linear mixed model for each of the questions asked during the BSC assessment (see Methods). As predicted, SoA was higher in SYNCH1PP as compared to both other conditions with visuomotor and perspectival mismatch (ASYNCH1PP: estimate = −0.07, t = −2.93, p = 0.003; ASYNCH3PP: estimate = −0.07, t = −2.84, p = 0.005; Fig. 2A). Ratings were much lower and close to zero for the control questions (Fig. 2A; SYNCH1PP compared to ASYNCH1PP: estimate = 0.01, t = 0.94, p = 0.35; SYNCH1PP compared to ASYNCH3PP: estimate = −0.01, t = −1.04, p = 0.3) and differed from the SoA ratings (estimate = −0.47, t = −26.17, p < 0.0001). Applying the same approach to the items about body ownership and threat, we found that participants rated their body ownership significantly higher in SYNCH1PP than ASYNCH3PP (estimate = −0.09, t = −2.9, p = 0.004). Threat was also rated as stronger in SYNCH1PP compared to ASYNCH3PP (estimate = −0.18, t = −4.45, p < 0.0001). The ratings were not significantly different when comparing SYNCH1PP with ASYNCH1PP (body ownership: estimate = 0.01, t = 0.39, p = 0.7; Threat: estimate = −0.037, t = −0.94, p = 0.35; Fig. S1A). There was no difference between Experiments 1 and 2 when adding Experiment as a variable in the model (see Table S1 for the details of the model).
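As a reading aid, the condition contrasts reported above can be written as a linear mixed model. The dummy coding with SYNCH1PP as the reference level and the participant-level random intercept $u_i$ are assumptions made here for illustration only; the model that was actually fitted is specified in Methods and Table S1.

```latex
% Illustrative sketch only: reference level and random-effects structure are assumed.
% i indexes participants, j indexes ratings within a participant.
\begin{equation*}
\mathrm{SoA}_{ij} = \beta_0
  + \beta_1\,\mathbb{1}[\mathrm{ASYNCH1PP}]_{ij}
  + \beta_2\,\mathbb{1}[\mathrm{ASYNCH3PP}]_{ij}
  + u_i + \varepsilon_{ij},
\qquad u_i \sim \mathcal{N}(0,\sigma_u^2),\quad
\varepsilon_{ij} \sim \mathcal{N}(0,\sigma^2)
\end{equation*}
```

Under this assumed coding, $\beta_0$ is the mean SoA rating in SYNCH1PP, and $\beta_1$ and $\beta_2$ would correspond to the reported estimates for ASYNCH1PP and ASYNCH3PP (both about −0.07), that is, ratings roughly 0.07 lower on the 0 to 1 scale than in SYNCH1PP.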

Fig. 2. Higher SoA is associated with higher recognition performance under visuomotor and perspectival congruency in intentional encoding.

A When using incidental encoding, participants have a higher SoA in SYNCH1PP compared to the two other conditions. Plot of average SoA per participant (dots) and across participants (bar), N = 50. B During incidental encoding there is no difference in recognition performance between conditions, N = 48. C During intentional encoding participants have a higher SoA in SYNCH1PP compared to ASYNCH3PP, N = 25. D During intentional encoding, participants better recognized the scenes in SYNCH1PP compared to the other conditions, N = 24. a.u. = arbitrary unit. SoA = Sense of Agency. ** indicates significance level with p value < 0.01 as tested with a linear mixed model using SoA as a dependent variable and conditions as fixed factor. Error bars represent the standard error of the mean.

Incidental encoding. Visuomotor and perspectival congruency for incidental encoding does not modulate object recognition (behavior, Experiments 1 and 2)

To investigate the effect of visuomotor and perspectival congruency during encoding on recognition performance, we tested participants with a recognition task one hour after the encoding session. Critically, although participants were immersed in the same virtual scenes during the recognition task, they did not see an avatar and there was no manipulation of visuomotor or perspectival congruency. During incidental encoding, participants showed no significant difference in recognition performance (accuracy) between the three experimental conditions (Fig. 2B, SYNCH1PP vs. ASYNCH1PP estimate = 0.03, z = 0.4, p = 0.73; SYNCH1PP vs. ASYNCH3PP estimate = 0.06, z = 0.93, p = 0.35). There was no difference in recognition performance between Experiments 1 and 2 (estimate = −0.02, z = −0.24, p = 0.8, see Table S2).

Intentional encoding. Higher SoA and better recognition performance for intentional encoding when immersed with visuomotor and perspectival congruency (behavior, Experiment 3)

Experiment 3 was similar in all aspects, except that participants were told before the encoding session that their memory for the scenes would be tested subsequently (intentional encoding). As in Experiments 1 and 2, participants’ SoA was higher in SYNCH1PP compared to ASYNCH3PP (Fig. 2C; estimate = −0.11, t = −3.16, p = 0.002). The comparison between the SYNCH1PP and the ASYNCH1PP condition was not significant, but similar in direction compared to Experiments 1 and 2 (estimate = −0.04, t = −1.2, p = 0.23). To investigate whether the SoA effect was comparable to what was observed under incidental encoding, we compared the effect size of Experiments 1 and 2 with the one of Experiment 3 and ran additional analysis, confirming a similar SoA effect across all three experiments (for detail see Supplementary Note 1). The average ratings for the control items were significantly lower than SoA ratings (estimate = −0.47, t = −17.6, p < 0.0001) and not significantly different between conditions (SYNCH1PP compared to ASYNCH1PP: estimate = −0.01, t = −0.56, p = 0.58; SYNCH1PP compared to ASYNCH3PP: estimate = −0.028, t = −1.5, p = 0.13). We also showed higher body ownership and threat ratings in SYNCH1PP versus ASYNCH3PP (body ownership: estimate = −0.2, t = −4.6, p < 0.0001; threat: estimate = −0.22, t = −3.13, p = 0.002; Fig. S1B), similar to what was found in Experiments 1 and 2. There was no significant difference in body ownership and threat ratings when SYNCH1PP was compared with ASYNCH1PP (body ownership: estimate = −0.06, t = −1.23, p = 0.22; threat: estimate = −0.04, t = −0.5, p = 0.61, Table S3).

For the one-hour delayed recognition task, we found that participants had significantly higher performance in the SYNCH1PP condition compared to ASYNCH1PP (Fig. 2D, estimate = −0.33, z = −3.06, p = 0.002) and ASYNCH3PP (estimate = −0.32, z = −2.95, p = 0.003, Table S4).

To summarize, these three experiments demonstrate that the SYNCH1PP condition, in which participants viewed objects embedded in a 3D VR scene under visuomotor and perspectival congruency, induces a higher SoA under both intentional and incidental encoding. Concerning memory, one-hour delayed recognition was boosted for scenes encoded in the SYNCH1PP condition for intentional, but not incidental, encoding.

Better recognition performance for objects presented in the right visual field (behavior, Experiments 1-3)

Because participants moved their right upper limb during encoding, we investigated whether the laterality of objects (right versus left) impacted recognition performance. Such right-handed movements, which were shown in the immersive scenes during the encoding sessions, could have decreased recognition performance due to visual occlusion of the objects placed on the right side or may have, on the contrary, improved recognition performance due to motor facilitation (right-sided upper limb movements) or enhanced attention towards the right side. Results from Experiments 1 and 2 show that participants had better recognition performance for right-sided objects. This was found irrespective of the three conditions (Fig. S2A; Table S5; estimate = 0.288, z = 2.028, p = 0.043). We found no such effect of object laterality in Experiment 3 (estimate = 0.037, z = 0.168, p = 0.867; Fig. S2B) and no interaction with condition (Table S6), showing that attention or movement-related processes did not differently impact recognition performance in Experiment 3. These data suggest that processes related to attention and/or hand movements may enhance recognition performance for right-sided versus left-sided objects, but only during incidental encoding. Critically, this did not differ between our three experimental conditions in any of the three experiments.

fMRI (Experiment 2)

Experiment 2 (fMRI, 29 participants) was identical to Experiment 1 (mock scanner, 26 participants), and we recorded fMRI during the three critical periods of the experiment: the encoding session, the BSC assessment session, and the 1-hour delayed recognition session. During all three sessions, participants were exposed to different immersive VR conditions (see Fig. 1). We carried out the following fMRI analyses. First, we performed a searchlight representational similarity analysis (RSA) to identify brain areas that were modulated by visuomotor and perspectival congruency and, critically, similarly modulated during both encoding and recognition. Because RSA did not allow us to quantify the level of similarity for each condition separately, we computed the encoding-recognition similarity scores (ERS) for each condition and participant and each of the brain regions identified with the RSA. Finally, we investigated if ERS for regions showing significant ERS score differences was associated with participants’ recognition performance.

RSA analysis. Visuomotor and perspectival congruency impact the reinstatement of encoding activity during recognition

We first explored the condition-dependent reinstatement of encoding activity across the whole brain, to identify reinstatement of brain activity similarly modulated by visuomotor and perspectival congruency. For this, we applied a searchlight RSA procedure (Fig. 3A) and identified four regions where brain activity showed similar differences that depended on the level of visuomotor and perspectival congruency when comparing encoding with recognition. These regions were left hippocampus, left middle temporal gyrus (MTG), visual cortex, and orbitofrontal cortex (Fig. 3B) (Npermutation = 1000, p < 0.05, cluster size > 500 voxels).
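The idea of comparing the between-condition pattern at encoding with that at recognition can be sketched for a single searchlight sphere as follows. This is a minimal illustration and not the pipeline used in the study: the use of 1 − Pearson r as the dissimilarity measure, the second-order correlation between the two dissimilarity vectors, the function names, and the example voxel values are all assumptions introduced here; the searchlight loop and the permutation test are omitted.

```python
from itertools import combinations
from math import sqrt


def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length sequences with at least 2 values")
    mx, my = sum(x) / len(x), sum(y) / len(y)
    dx = [a - mx for a in x]
    dy = [b - my for b in y]
    denom = sqrt(sum(a * a for a in dx) * sum(b * b for b in dy))
    if denom == 0:
        raise ValueError("constant input: correlation undefined")
    return sum(a * b for a, b in zip(dx, dy)) / denom


def condition_rdm(patterns, conditions):
    """Dissimilarity (1 - r) for every pair of conditions, in a fixed order.

    `patterns` maps a condition name to the voxel pattern of one sphere.
    """
    return [1.0 - pearson(patterns[a], patterns[b])
            for a, b in combinations(conditions, 2)]


def encoding_recognition_rsa(encoding, recognition, conditions):
    """Second-order similarity: do conditions differ in the same way
    at encoding and at recognition? (assumed formulation)"""
    return pearson(condition_rdm(encoding, conditions),
                   condition_rdm(recognition, conditions))


# Hypothetical voxel patterns for one sphere (made-up numbers).
CONDITIONS = ["SYNCH1PP", "ASYNCH1PP", "ASYNCH3PP"]
encoding = {
    "SYNCH1PP": [1.0, 2.0, 3.0, 4.0],
    "ASYNCH1PP": [1.0, 2.0, 4.0, 3.0],
    "ASYNCH3PP": [4.0, 1.0, 3.0, 2.0],
}
recognition = {
    "SYNCH1PP": [1.2, 1.9, 3.1, 3.8],
    "ASYNCH1PP": [0.9, 2.2, 3.9, 3.1],
    "ASYNCH3PP": [3.7, 1.3, 2.8, 2.1],
}
score = encoding_recognition_rsa(encoding, recognition, CONDITIONS)
```

A sphere whose between-condition structure is preserved from encoding to recognition yields a score close to 1. With only three conditions the dissimilarity vector has just three entries, which is one reason a group-level permutation test, as reported above, is needed to assess significance.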

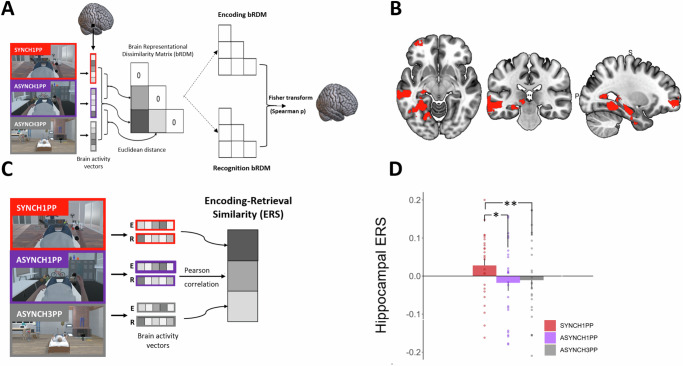

Fig. 3. Left hippocampal ERS is higher under visuomotor and perspectival congruency.

A RSA identified brain regions with the same differential pattern between conditions during encoding and recognition sessions in Experiment 2. B RSA identified four regions: left hippocampus, visual cortex, left middle temporal, and left frontal superior orbital gyrus (permutation test, Npermutations = 1000, p < 0.05, cluster size > 500). C ERS was computed for the four regions identified by RSA, by applying a Pearson correlation between the voxel activity at encoding and at recognition in Experiment 2. D Hippocampal ERS is significantly higher under visuomotor and perspectival congruency (SYNCH1PP, red) compared to the two other conditions. Error bars represent the standard error of the mean. * and ** indicate significance level with p-value < 0.05 and < 0.01, respectively. ERS Encoding recognition similarity score. RSA Representational Similarity Analysis, N = 24.

ERS analysis. Reinstatement in the left hippocampus is higher for visuomotor and perspectival congruency and indexes recognition memory

Although the RSA identified four regions that showed a similar pattern of activity between encoding and recognition, it does not provide information about the condition-dependent similarity of activity between encoding and recognition. To further quantify the similarity of activity of these four brain regions between encoding and recognition, we computed their ERS for each of the three conditions separately19,22,63. This analysis revealed that ERS in the left hippocampus was significantly higher in SYNCH1PP compared to the two conditions with visuomotor and perspectival incongruency (Fig. 3D; SYNCH1PP vs. ASYNCH1PP estimate = −0.045, t = −2.57, p = 0.01; SYNCH1PP vs. ASYNCH3PP estimate = −0.045, t = −2.6, p = 0.009, Table S7). There was also a higher ERS in SYNCH1PP compared to ASYNCH1PP in the left MTG (SYNCH1PP vs. ASYNCH1PP estimate = −0.048, t = −2.77, p = 0.006, for other comparisons see Table S8). No such ERS differences were observed in visual cortex or in orbitofrontal cortex (see Tables S9 and S10 for detailed results).
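The ERS described here is a Pearson correlation between the voxel activity of a region at encoding and at recognition (Fig. 3C). A minimal sketch of that computation for one region and one participant is given below. The function names, the dictionary layout, and the voxel values are hypothetical and only serve as an illustration; preprocessing, trial averaging, and any transformation of the correlation values follow the Methods and are not reproduced here.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length sequences with at least 2 values")
    mx, my = sum(x) / len(x), sum(y) / len(y)
    dx = [a - mx for a in x]
    dy = [b - my for b in y]
    denom = sqrt(sum(a * a for a in dx) * sum(b * b for b in dy))
    if denom == 0:
        raise ValueError("constant pattern: correlation undefined")
    return sum(a * b for a, b in zip(dx, dy)) / denom


def ers_per_condition(encoding, recognition):
    """Encoding-recognition similarity score for each condition.

    Both arguments map a condition name to the voxel pattern of one
    region of interest (same voxel order in both sessions).
    """
    return {cond: pearson(encoding[cond], recognition[cond])
            for cond in encoding}


# Hypothetical voxel patterns of one ROI (made-up numbers).
encoding = {
    "SYNCH1PP": [0.2, 1.1, -0.4, 0.9, 0.3],
    "ASYNCH1PP": [0.5, -0.2, 0.8, 0.1, -0.6],
    "ASYNCH3PP": [-0.3, 0.4, 0.7, -0.9, 0.2],
}
recognition = {
    "SYNCH1PP": [0.3, 1.0, -0.5, 0.7, 0.4],
    "ASYNCH1PP": [0.1, 0.3, 0.2, -0.4, 0.5],
    "ASYNCH3PP": [0.6, -0.1, 0.2, 0.3, -0.7],
}
ers = ers_per_condition(encoding, recognition)
```

The resulting per-condition scores are the kind of values that would then enter the linear mixed model comparing SYNCH1PP with ASYNCH1PP and ASYNCH3PP.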

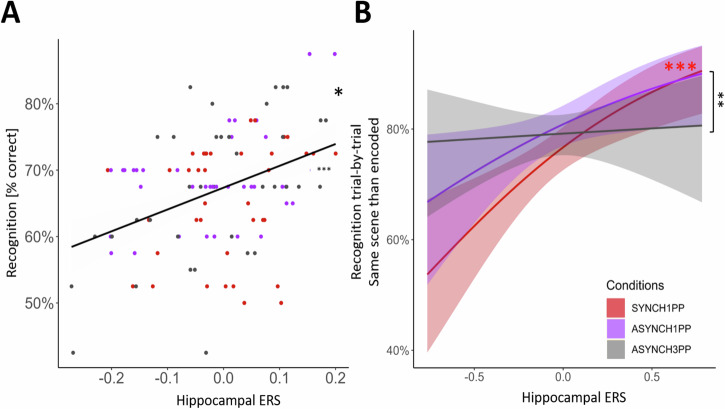

Based on previous studies showing that hippocampal ERS is a predictor of recognition memory15,22, we investigated whether the ERS of the left hippocampus and of the left MTG correlated with participants’ recognition performance. A linear mixed model predicting recognition performance using hippocampal ERS (see Supplementary Note 2 and Table S11 for more details about model selection) revealed that ERS of the left hippocampus predicted recognition performance, irrespective of condition (Fig. 4A; estimate = 0.29, t = 2.72, p = 0.006 < pcorr = 0.0125, Table S12), such that higher ERS was associated with better recognition performance. We found a similar relationship between hippocampal ERS and performance in a trial-by-trial model (see Methods), reaching significance only in the condition characterized by visuomotor and perspectival congruency (Fig. 4B, Supplementary Note 3 and Table S13). The same ERS analysis for the left MTG did not detect a significant association with recognition performance (see Table S14).

Fig. 4. Hippocampal reinstatement of hippocampal encoding activity predicts recognition performance.

A Hippocampal ERS is positively correlated with participants’ recognition performance irrespective of condition (Experiment 2, p = 0.006; pcorrected = 0.013; linear mixed model). The mean recognition rate (black line) is plotted for each condition (SYNCH1PP: red dots, ASYNCH1PP: purple dots, ASYNCH3PP: gray dots). B Recognition performance of the original scene is predicted by hippocampal ERS, only in the condition with visuomotor and perspectival congruency. *, ** and *** indicate significance level with p < 0.05, p < 0.01 and p < 0.001, respectively (mixed effect logistic regression). ERS Encoding recognition similarity score, N = 24.

Hippocampal-neocortical interactions revealed by ERS are modulated by visuomotor and perspectival congruency

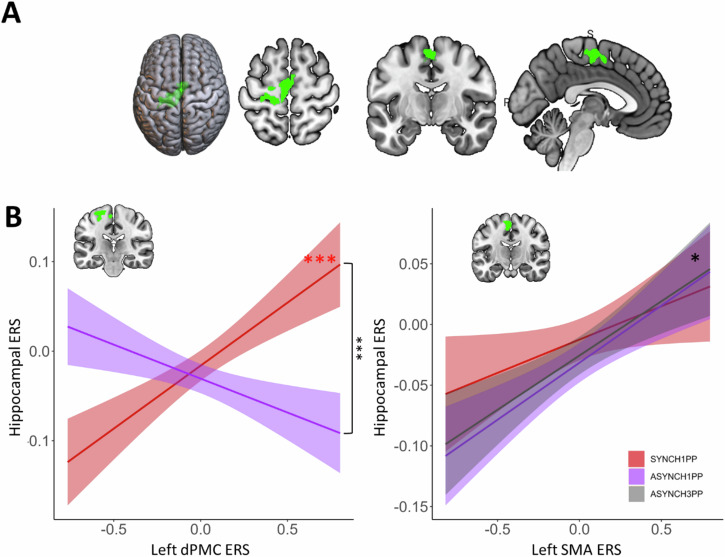

Next, we identified brain activity linked to the SoA and investigated whether these SoA regions were associated with the condition-dependent reinstatement of encoding activity. To do so, we first used a univariate GLM to identify brain regions modulated by visuomotor and perspectival congruency during the BSC session and then quantified the relationship between ERS of these regions and ERS of the left hippocampus. By contrasting SYNCH1PP with ASYNCH1PP and ASYNCH3PP (SYNCH1PP > ASYNCH1PP + ASYNCH3PP; second level within-subject ANOVA) during the BSC session, we identified an SoA network (Fig. 5A) composed of left dorsal premotor cortex (dPMC, MNI coordinate −18, −24, 62) and bilateral supplementary motor area (SMA, MNI coordinate right SMA 4, −4, 55, left SMA −4, −11, 56, Table S15). Post-hoc analysis showed that activity in these regions correlated with participants’ SoA ratings (Supplementary Note 4). Second, we analyzed the interaction between these three brain regions (left dPMC and bilateral SMA) and the left hippocampus (as revealed by RSA and ERS analysis). For this, we applied a linear mixed model investigating how the hippocampal ERS (reflecting recognition performance; dependent variable) was related to the ERS of the left dPMC and bilateral SMA (SoA-sensitive regions), for each level of visuomotor and perspectival congruency. This analysis revealed a significant difference in the coupling of the left hippocampal ERS and left dPMC ERS that depended on the experimental condition (Fig. 5B; i.e., significant interaction between SYNCH1PP and ASYNCH1PP; ERS dPMC estimate = −0.19, t = −5.2, p < 0.0001, Table S16). These data show that reinstatement in a key memory region (hippocampus) is differently linked with reinstatement in a key SoA region (dPMC), depending on visuomotor and perspectival congruency.

Fig. 5. Neural reinstatement of encoding brain activity in SoA-related regions correlates with left hippocampal reinstatement only under visuomotor and perspectival congruency.

A Left dPMC and bilateral SMA activity is higher in the condition with visuomotor and perspectival congruency (SYNCH1PP, red), as compared to the two other conditions. B Trial-by-trial ERS of the dPMC correlates positively with trial-by-trial hippocampal ERS under visuomotor and perspectival congruency (SYNCH1PP, red) (linear mixed model). Trial-by-trial ERS of the left SMA was found to correlate positively with hippocampal ERS irrespective of condition (p = 0.01, linear mixed model). dPMC dorsal premotor cortex, SMA Supplementary motor area, ERS Encoding recognition similarity score, SoA Sense of Agency. * and *** indicate significance level with p value < 0.05 and < 0.001, respectively, N = 24.

This was further extended by post-hoc analysis, revealing that the dPMC ERS was significantly positively related to hippocampal ERS in SYNCH1PP (estimate = 0.18, t = 6.4, p < 0.0001), but not significantly related to hippocampal ERS for scenes encoded under visuomotor and perspectival mismatch (for details see Table S16). This shows that higher similarity between encoding and retrieval in the hippocampus (hippocampal ERS) is linked to higher similarity between encoding and retrieval in an SoA sensitive region, dPMC, only in the condition with visuomotor and perspectival congruency and characterized by the highest SoA in the present experiments.
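The logic of a condition-dependent coupling can be illustrated with a deliberately simplified stand-in for the mixed model: an ordinary least-squares slope of hippocampal ERS on dPMC ERS, fitted separately per condition. This is not the analysis reported above, because it ignores the participant-level random effects and provides no significance test; the function names and the trial values are hypothetical.

```python
def ols_slope(x, y):
    """Least-squares slope of y regressed on x (intercept included)."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length sequences with at least 2 values")
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxx = sum((a - mx) ** 2 for a in x)
    if sxx == 0:
        raise ValueError("predictor is constant: slope undefined")
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return sxy / sxx


def coupling_by_condition(trials):
    """Slope of hippocampal ERS on dPMC ERS within each condition.

    `trials` is a list of (condition, dpmc_ers, hippocampal_ers) tuples.
    """
    grouped = {}
    for cond, dpmc, hipp in trials:
        xs, ys = grouped.setdefault(cond, ([], []))
        xs.append(dpmc)
        ys.append(hipp)
    return {cond: ols_slope(xs, ys) for cond, (xs, ys) in grouped.items()}


# Hypothetical trial-by-trial ERS values (made-up numbers).
trials = [
    ("SYNCH1PP", 0.10, 0.05), ("SYNCH1PP", 0.30, 0.09),
    ("SYNCH1PP", 0.50, 0.12), ("SYNCH1PP", 0.70, 0.17),
    ("ASYNCH1PP", 0.10, 0.08), ("ASYNCH1PP", 0.30, 0.07),
    ("ASYNCH1PP", 0.50, 0.09), ("ASYNCH1PP", 0.70, 0.08),
]
slopes = coupling_by_condition(trials)
# The interaction corresponds to a difference between condition-wise slopes.
slope_difference = slopes["ASYNCH1PP"] - slopes["SYNCH1PP"]
```

In this simplified picture, a positive slope in SYNCH1PP together with a near-zero slope in the mismatch conditions is the pattern that the interaction term of the mixed model captures.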

We performed the same analysis for SMA and left hippocampus. Hippocampal ERS was also associated with the left SMA (estimate = 0.06, t = 7.4, p = 0.014, Fig. 5B, Table S17). Such hippocampal-SMA coupling was characterized by a positive relation but, unlike hippocampal-dPMC coupling, did not differ between conditions. The same analysis applied to the right SMA did not show any coupling with hippocampal ERS (Table S18).

To summarize, we found that reinstatement-related activity in the left hippocampus, activity that we linked with performance in scene recognition, was systematically related to activity within a cortical SoA network consisting of left dPMC (contralateral to the moving right hand) and bilateral SMA. Whereas hippocampal-SMA coupling was present in all three conditions (reflecting a more general coupling), premotor-hippocampal coupling in the left hemisphere was found for reinstatement-related activity that was stronger under visuomotor and perspectival congruency, suggesting a neural mechanism linking SoA and recognition of objects in complex three-dimensional scenes.
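For readers unfamiliar with encoding-retrieval similarity, a minimal sketch of how a single-trial ERS score might be computed for one region is given here. It assumes that ERS is the Fisher z-transformed Pearson correlation between the voxel pattern at encoding and the voxel pattern at recognition, a common choice in representational similarity analysis; the exact metric and preprocessing used in this study are described in the Methods and may differ. The function names and the toy voxel values are hypothetical.

```python
import math


def pearson(x, y):
    """Pearson correlation between two equal-length sequences of numbers."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("patterns must have the same length (at least 2 voxels)")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("a constant pattern has no defined correlation")
    return sxy / math.sqrt(sxx * syy)


def ers(encoding_pattern, retrieval_pattern):
    """Assumed ERS: Fisher z-transform of the encoding-retrieval correlation."""
    r = pearson(encoding_pattern, retrieval_pattern)
    # Clip so that atanh stays finite for perfectly correlated toy data.
    r = max(min(r, 0.999999), -0.999999)
    return math.atanh(r)


# Toy example: one trial, five voxels of a region of interest (made-up values).
encoding = [0.2, 1.1, -0.4, 0.9, 0.3]
retrieval = [0.1, 0.8, -0.2, 1.2, 0.0]
print(ers(encoding, retrieval))
```

Computing one such score per trial and per region yields the trial-by-trial values that a coupling analysis between two regions could then relate to each other.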

Amnestic patient with bilateral hippocampal damage is impaired in recognizing objects encoded with visuomotor and perspectival congruency

Would damage to the left hippocampus and its connections with dPMC and SMA impair the present SoA effects on recognition performance, mediated by visuomotor and perspectival congruency during encoding? We had the unique opportunity to investigate this question in a patient suffering from a severe deficit in autobiographical EM following a fungal brain infection.

The patient is a 62-year-old, right-handed woman, who worked as a secretary. She was hospitalized for an epileptic seizure with secondary generalization, followed by several focal epileptic seizures (of temporal origin) with secondary generalizations and status epilepticus (necessitating antiepileptic quadritherapy). The patient showed severe retrograde amnesia (e.g., for her daughter’s wedding, holidays, and other important family events) and participated in an intensive neuropsychological rehabilitation program. Despite being able to relearn key facts about her life prior to the infection, the patient is to this day not able to re-experience these key events of her life and her amnesia also extends to new memories following her hospitalization. Repeated neuropsychological examinations revealed a severe EM deficit affecting retrograde events (early childhood to adulthood without temporal gradient) and a moderate anterograde EM deficit. Learning for verbal memory was normal, but deficient for delayed recall (normal after indication). Visuo-spatial memory was at the inferior limit of the norm. There was a mild-to-moderate semantic memory deficit (i.e., public events and celebrities; see Table S19 for a summary of the performance on the neuropsychological tests). At the moment of the present investigation (9 months after hospitalization), EM for autobiographical events remained severely deficient with only slight improvements in anterograde EM. A mild executive deficit persisted, but attention was normal.

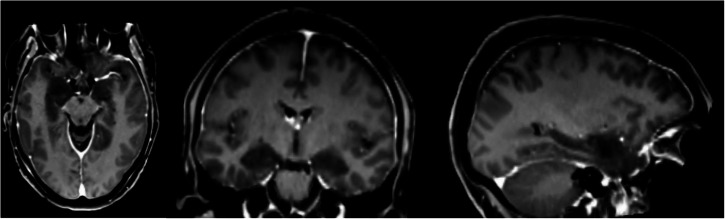

Seizures were caused by a meningoencephalitis, following a right sphenoidal sinusitis with right sphenoidal bone loss and meningeal contact. Initial MRI (December 2021) showed prominent lesions in the bilateral medial temporal lobe involving both hippocampi, parahippocampi, and amygdalae (Figs. 6 and S3). Three smaller lesions (all below 1 cm) were also seen in the right middle frontal gyrus, the left inferior parietal gyrus, and right lingual gyrus. Subsequent examinations showed progressive improvement with persistence of lesions in the medial temporal lobe and parietal cortex and disappearance of lesions on MRI in the lingual gyrus and frontal cortex (January 2022). In March 2022 there were no more lesions visible, but the MRI showed bilateral hippocampal atrophy (Fig. 7A). This latter finding was further corroborated by volumetric analysis revealing bilateral atrophy affecting both hippocampi (Fig. 7B, D, Table S20). Hippocampal atrophy affected the four cornu ammonis subfields (CA1 to CA4), the dentate gyrus, the subiculum and the stratum (lacunosum SL; radiatum SR; and moleculare SM). The volumes of amygdala, parahippocampus and entorhinal cortex were in the normal range (Table S20).

Fig. 6. Medial temporal inflammation early during hospitalization of patient.

Patient’s structural MRI (T1 MPRAGE, voxel size 1x1x1, 200 slices, 3 T MR scanner) during the first week of hospitalization. Dark regions in bilateral medial temporal lobe indicate site of inflammation.

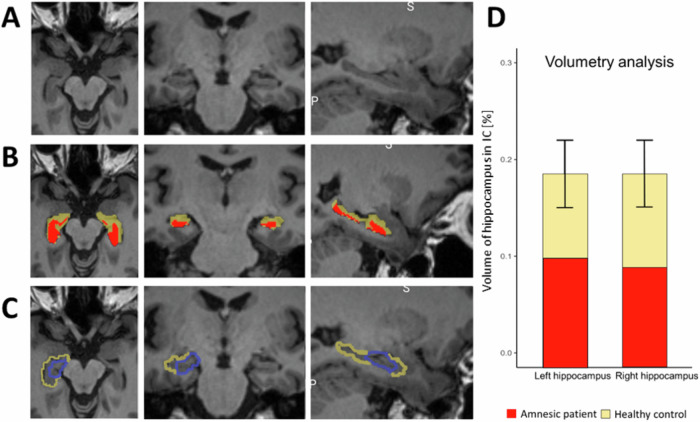

Fig. 7. Bilateral hippocampal damage of patient.

A Coronal, axial, and sagittal view of the patient’s anatomical MRI eight months after hospitalization. B Patient’s hippocampus (red) is shown with the delineation of a normal hippocampus (yellow), registered in the patient’s native space. C Hippocampus from the automated anatomical labeling atlas (yellow), and the hippocampal activity, as identified by RSA (blue) in healthy participants, transformed in the patient’s native space and overlaid on the patient’s anatomical MRI eight months after hospitalization. D Volume of the patient’s right and left hippocampi (red bars) compared to the normal range for neurologically healthy age- and gender-matched controls (yellow bars). The volume is expressed as the percentage of the hippocampal volume, compared to the total intracranial volume.

The patient’s hippocampal damage involved the left hippocampal region, homologous to the region detected by the present RSA and ERS analysis in Experiment 2, in healthy participants (Fig. 7C). Five months after her hospitalization, we tested the patient in the same immersive VR paradigms as tested in healthy participants, adapted to patient comfort. We tested the patient with the same immersive VR scenes (as Experiments 1 and 3), in the same three conditions (SYNCH1PP, ASYNCH1PP, ASYNCH3PP), and with the same number of trials. She managed to perform all three sessions: the encoding session, the BSC session, and a one-hour delayed recognition session. The patient sat on a chair, with her legs resting on a second chair in front of her, approximating as much as possible the position and field of view of the scenes as tested in healthy participants (Experiments 1-3). We used the same VR setup and head-mounted display. A different motion tracking system was used (LEAP motion; Leap Motion Controller®) because, for her comfort, the patient was tested at a hospital closer to her home.

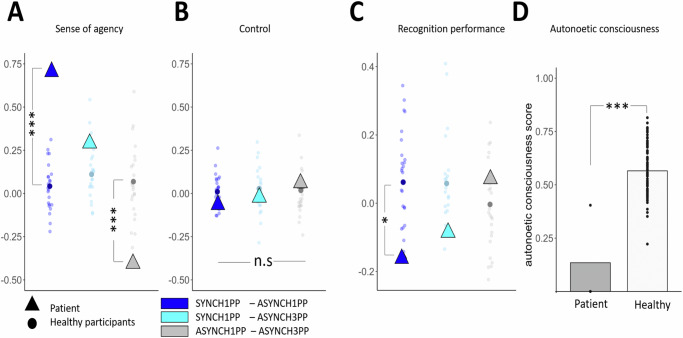

As predicted, the patient had preserved SoA, with SoA ratings comparable with those observed in healthy participants in the BSC assessment of Experiments 1–3: she had higher SoA ratings in the SYNCH1PP condition compared to both ASYNCH1PP and ASYNCH3PP conditions (Fig. 8A) (Crawford test to compare the patient’s ratings with respect to healthy participants: SYNCH1PP compared to ASYNCH1PP: mean = 0.05, sd ± = 0.16, p < 0.001; ASYNCH1PP compared to ASYNCH3PP: mean = 0.03, sd ± = 0.16, p = 0.004). The SoA difference between SYNCH1PP and ASYNCH3PP was not significantly different from that of healthy participants but went in the same direction (Crawford test: SYNCH1PP-ASYNCH3PP: mean = 0.08, sd ± = 0.18, p = 0.134). Importantly, the patient’s ratings on control items were low and did not differ from those of healthy participants (Fig. 8B) (for a detailed comparison between the patient’s and healthy participants’ SoA ratings see Supplementary Note 5). These data show that the patient was sensitive to our experimental manipulation during encoding, showing a similar modulation of the SoA as healthy participants.

Fig. 8. Patient has normal SoA, but abnormally low recognition performance, which does not increase with visuomotor and perspectival congruency.

A Patient has normal SoA (higher SoA for conditions with visuomotor and perspectival congruency versus conditions with visuomotor and perspectival incongruency). The SoA rating differences SYNCH1PP-ASYNCH1PP (dark blue) and ASYNCH1PP-ASYNCH3PP (gray) are significantly bigger when compared to healthy participants (N = 24) (Crawford test). B Patient also has normal and low ratings for control questions, which did not differ between conditions and were not different from healthy participants. C Patient’s recognition performance is lower under visuomotor and perspectival congruency compared to visuomotor and perspectival incongruency, differing from healthy participants (colored dots; Experiment 3; Crawford test). D The patient was able to remember only the scene encoded under the strongest visuomotor and perspectival incongruency (ASYNCH3PP). The autonoetic consciousness of the patient (dark gray) was lower compared to healthy participants (gray; Experiment 3; N = 24) (Crawford test). *, **, and *** indicate significance levels with p-value of <0.05, <0.01 and <0.001, respectively.

The patient was well aware of her memory deficits, for which she had been tested repeatedly during her neuropsychological examinations and memory rehabilitation sessions. Although we initially tested the patient under incidental encoding, she admitted she was expecting this experiment to test her memory. Therefore, we compared her performance with participants who performed the task under intentional encoding instruction (Experiment 3). Inspection of Fig. 8C shows that, despite her preserved SoA, she displayed the opposite pattern compared to healthy participants. She showed the lowest recognition performance in the SYNCH1PP condition (58% correct responses) compared with ASYNCH1PP (73% correct) and ASYNCH3PP (66% correct) conditions. The accuracy difference the patient showed between SYNCH1PP and ASYNCH1PP was significantly different from that observed in healthy participants (Experiment 3) (mean = 0.06, sd ± = 0.11, p = 0.036); the comparison SYNCH1PP-ASYNCH3PP was not significantly different compared to healthy participants (mean = 0.06, sd ± = 0.13, p = 0.148). Of our 24 participants, only 7 showed slightly better performance in the ASYNCH1PP condition compared to SYNCH1PP. Five of these participants had only a very minor ASYNCH1PP > SYNCH1PP difference of 0.075. Two participants did show a slightly bigger difference of 0.1, which was still smaller than the patient’s difference between conditions (0.16). Thus, no participant showed a performance difference that was larger or comparable with the patient’s, supporting the results of the Crawford test.
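The single-case comparisons above can be illustrated with a short sketch. The text names only a "Crawford test"; the code below assumes the Crawford-Howell modified t-test, which compares one case with a small control sample using t = (x − m) / (s · sqrt((n + 1) / n)) with n − 1 degrees of freedom. The function names, the numerical integration of the t density (used here only to avoid external dependencies), and the sign convention for the patient's difference score are assumptions of this sketch, and it is not guaranteed to reproduce the reported p-values.

```python
import math


def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    log_norm = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
                - 0.5 * math.log(df * math.pi))
    return math.exp(log_norm - (df + 1) / 2 * math.log1p(x * x / df))


def t_two_sided_p(t, df, steps=2000):
    """Two-sided p-value, integrating the density from 0 to |t| (Simpson's rule)."""
    a = abs(t)
    if a == 0:
        return 1.0
    h = a / steps  # steps must be even for Simpson's rule
    total = t_pdf(0.0, df) + t_pdf(a, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    area = total * h / 3
    return max(0.0, 1.0 - 2.0 * area)


def crawford_howell(case_score, control_mean, control_sd, n_controls):
    """Return (t, df, two-sided p) for one case versus a control sample."""
    if n_controls < 2 or control_sd <= 0:
        raise ValueError("need at least 2 controls and a positive control sd")
    scale = control_sd * math.sqrt((n_controls + 1) / n_controls)
    t = (case_score - control_mean) / scale
    df = n_controls - 1
    return t, df, t_two_sided_p(t, df)


# Illustrative input taken from the text: accuracy difference
# SYNCH1PP - ASYNCH1PP for the patient (0.58 - 0.73) against the control
# mean and sd of the same difference (N = 24).
t, df, p_two_sided = crawford_howell(0.58 - 0.73, 0.06, 0.11, 24)
print(t, df, p_two_sided, p_two_sided / 2)  # last value: one-tailed p
```

Because the standard error is inflated by sqrt((n + 1) / n), the test treats the control mean and standard deviation as sample estimates rather than population parameters, which matters with a control group of this size.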

To provide further evidence for altered EM, depending on visuomotor and perspectival congruency, we investigated the patient’s autonoetic consciousness, that is, her ability to re-experience the sensory and perceptual details of an event1,64,65. For this, we asked several questions from the memory characteristics questionnaire (MCQ)66, the episodic autobiographical memory interview67, and the “affected limb intentional feeling questionnaire” (ALEFq)68. Although autonoetic consciousness is predominantly tested for autobiographical real-life events, we here tested her autonoetic consciousness for the three virtual 3D scenes into which she was immersed during the encoding session. In particular, we were interested in testing whether her autonoetic consciousness would differ across the three encoding conditions. Based on her lower recognition performance in the SYNCH1PP condition, we predicted that she would indicate lower autonoetic consciousness scores in this condition. Autonoetic consciousness was tested one week after encoding and confirmed this prediction. The patient was able to remember the scene encoded in 3PP (ASYNCH3PP: “the cabin”), even without showing her the picture of the scene, and was able to recollect the requested information. However, her overall rating scores in the autonoetic consciousness questionnaire for this scene were still low (Fig. 8D). Her memory for the global vividness of the scene was vague (second last choice on a scale from 1 to 7) and global re-experience was low (25%, second last choice on the scale). The averaged ratings significantly differed from the scores of healthy participants as tested with a Crawford test (mean = 0.57, sd = 0.12, p < 0.001). 
More strikingly, she was not able to recall either of the two scenes encoded with a 1PP at all (i.e., SYNCH1PP: “the living room”; ASYNCH1PP: “the changing room”), even after we showed her pictures of these scenes, and was not able to answer and rate the different questions of the autonoetic consciousness (ANC) questionnaire. The patient reported: “I can remember seeing the cabin, all in wood, and my arm moving in the scene, but I have never seen this living room nor this changing room”. The patient showed easier recall for the scene encoded under visuomotor and perspectival mismatch (ASYNCH3PP).

To sum up, damage to bilateral hippocampi and adjacent structures, followed by later atrophy of both hippocampi, led to severe amnesia observed in the present patient. She had a normal SoA, consistent with the fact that dPMC and SMA were not affected by her brain damage. Critically, she remembered fewer objects encoded under visuomotor and perspectival congruency, contrary to healthy controls. Moreover, she was not able to relive or re-experience the VR scenes that she encoded from a 1PP, but strikingly could only do so for some elements when these were encoded under maximal visuomotor and perspectival mismatch. Further analysis revealed that her brain damage included the left hippocampal region as found in our fMRI analysis in healthy participants (Fig. 3B and Fig. 7C) and that we linked to visuomotor and perspectival congruency, using RSA and ERS analysis. These clinical-imaging findings further support our previous experimental-imaging findings, that the left hippocampal region and/or its connections with dPMC mediate embodiment effects in EM.

Discussion

Leveraging the combination of immersive VR and fMRI and accessing brain activity during both encoding and retrieval of episodic memories, we found that (1) the hippocampal reinstatement of encoding-related brain activity during retrieval was higher for scenes encoded with normal visuomotor and perspectival congruency and that (2) such hippocampal reinstatement, critically, reflected recognition performance. We further (3) linked the SoA to hippocampal reinstatement by showing that hippocampal reinstatement was (4) coupled with dPMC reinstatement, a key region of the SoA, that we defined in an independent experimental session and that (5) dPMC activity was also modulated by visuomotor and perspectival congruency, but at encoding. These observations were corroborated and extended in a rare patient with severe amnesia caused by damage and atrophy to bilateral hippocampi, including the hippocampal area critical for reinstatement and dPMC coupling. Although the patient’s SoA was normally modulated by visuomotor and perspectival congruency, she showed worse memory and larger re-experiencing impairments in conditions of normal visuomotor and perspectival congruency. Collectively, these data describe premotor-hippocampal coupling in EM and reveal how the bodily sensory context and the related level of SoA of the observer at encoding is neurally reinstated during the retrieval of past episodes.

Although averaged reinstated hippocampal activity across conditions and average recognition performance across conditions were not associated, more fine-grained analysis (correlation analysis, single trial analysis) linked recognition performance with hippocampal reinstatement. We found that the hippocampal reinstatement of encoding activity across the experimental conditions was correlated with participants’ recognition performance, consistent with previous work using visual or auditory stimuli12,15. Thus, hippocampal reinstatement - reflected by the average hippocampal activity across all trials - correlated with average recognition performance (Fig. 4A). This is consistent with the idea that successful EM retrieval depends on the degree of remobilization of activity observed during encoding15,19, i.e., reactivation of the hippocampal engram69. Neural reactivation of the hippocampus during EM retrieval and its link with memory performance has been demonstrated previously10,15,19,22. Yet this earlier work presented single or paired stimuli at encoding (i.e., pictures, word cue), whereas the present results report hippocampal reinstatement in a richer sensory context with action-embedded 3D scenes using immersive VR in fMRI with incidental encoding, closer to encoding conditions in our everyday life. We did not expect necessarily a difference between conditions in this latter analysis, as it has been shown previously that hippocampal reinstatement is linked with recognition performance, more generally. Our finding is compatible with previous data on hippocampal reinstatement and recognition performance in a range of tasks10,15,19,22. 
Although there was no condition-dependent effect for the relationship between hippocampal reinstatement and memory performance on average, these findings were extended by our additional trial-by-trial analyses showing that hippocampal reinstatement reflects the successful recognition of the original scene presented during encoding but only when analyzed for single trials. Moreover, this was found only when encoding was done with visuomotor and perspectival congruency in the SYNCH1PP condition (Fig. 4B). This suggests that the hippocampal activity during recall is more similar to the hippocampal activity at encoding for scenes encoded with preserved SoA, compared to scenes encoded under disrupted visuomotor and perspectival congruency (as in ASYNCH1PP and ASYNCH3PP). This could be due to better reinstatement of the hippocampal encoding activity at retrieval in the preserved visuomotor and perspectival congruency condition compared to the conditions with visuomotor and perspectival mismatch (i.e., ref. 70), or to the participation of other brain regions in reinstatement when the scenes were encoded in conditions with disrupted visuomotor and perspectival congruency (ASYNCH1PP; ASYNCH3PP). Hence, the successful recognition of the scene observed at encoding is critically linked to the reactivation of the hippocampal encoding activity during retrieval, extending previous evidence about the hippocampus’ role in pattern separation to discriminate between previously encoded events and new events14,71–73.

These findings further indicate that EM retrieval not only depends on stronger similarity between the encoding and retrieval patterns in the hippocampus, but also on preserved visuomotor and perspectival congruency at encoding. Thus, hippocampal reinstatement depends on the bodily sensory context of the observer during encoding, because only the neural pattern of episodes encoded from a first-person perspective and with synchronous movements helped distinguish between the events presented at encoding and new events at retrieval.

We further show that hippocampal reinstatement of the averaged activity (across all trials) also depends on the level of the SoA at encoding, differing between the three experimental conditions. Critically, we show that the highest level of hippocampal reinstatement was found for scenes encoded under visuomotor and perspectival congruency. This is in line with a recent study linking hippocampal reinstatement with the modulation of body ownership during encoding61, although the latter work did not observe reinstatement using full brain analysis (but rather used a region of interest approach) and did not associate hippocampal reinstatement with EM performance or the modulated BSC level.

In addition to EM-related reinstatement in the hippocampus, we also observed reinstatement activity in the left MTG that was further modulated by the SoA level. Although this region has been reported in previous EM studies (using video stimuli)8, MTG reinstatement in the present study was not correlated with recognition performance, showing that reinstatement functionally differs between MTG and hippocampus and that only hippocampal reinstatement reflected SoA as well as EM performance. We did not find any relationship between recognition performance and cortical reinstatement in other regions such as visual cortex, which was detected by RSA analysis. Although several studies provided evidence of remobilization of visual cortex during retrieval11,74, our results show that this reinstatement does not reflect visuomotor and perspectival congruency.

We argue that the present data provide evidence for embodied hippocampal reinstatement by showing that the sensorimotor context of the observer’s body at encoding impacts encoding- and retrieval-related hippocampal activity. The finding of embodied hippocampal reinstatement (i.e., reinstatement that depends on visuomotor and perspectival congruency) extends previous reinstatement observations that investigated the visual or auditory information of the encoded scene, to the bodily sensorimotor context of the observer during the encoding of the scene.

By showing that the dPMC exhibited higher activation under visuomotor and perspectival congruency, the present data support the PMC’s well-known role in motor control and motor-related cognition, consistent with previous brain imaging studies on the SoA75–77. We note that the hippocampal reinstatement (that was associated with EM performance and depended on visuomotor and perspectival congruency) as well as the dPMC activity (depending on visuomotor and perspectival congruency) were both found only in the left hemisphere, that is in the hemisphere contralateral to the participants’ right upper limb movements during encoding78, suggesting a further functional link of the bodily sensory context during encoding on premotor and hippocampal activity in the present experiment. Several reinstatement studies have observed left hippocampal reinstatement8,22,56,61. While some studies found that especially left hippocampal activity was linked with memory performance8,22 and associated with the perspective during encoding, others did not report hemispheric specificity of hippocampal reinstatement13,19. Further work is needed to investigate the lateralization of hippocampal activity during reinstatement processes and how this depends on the lateralization of sensory stimuli and movements of the participant.

Several studies have demonstrated that hippocampal activity during encoding is coupled with the reactivation of other cortical regions during the retrieval process14,21. In particular, activation of visual cortex has been linked with hippocampal activity, at encoding and retrieval, and it has been suggested that hippocampal reinstatement mediates the reinstatement of cortical areas during retrieval14,21. We did not find any difference of hippocampal activity between conditions during the encoding session alone, suggesting that neural processes between encoding and retrieval, and not during the encoding process itself, mediated this effect. In our study, we found that the reinstatement of the left hippocampus was linked to left dPMC reinstatement, at the single trial level (Fig. 5B). Together with past experimental work, this observation extends the widely accepted theory that the hippocampus indexes sensory information of EM stored in visual and auditory regions17,18,79–81 to indexing sensorimotor information stored in dPMC. Thus, the present results show that hippocampal-cortical coupling varies as a function of the sensory encoding context and that, in the case of different bodily sensory contexts and SoA levels during encoding, activity in dPMC is coupled with the hippocampal activity. Moreover, the strongest premotor-hippocampal coupling was found in the present study for scenes encoded under preserved SoA. Accordingly, our results provide further evidence for embodied reinstatement, showing that not only hippocampal reinstatement, but also the coupling between hippocampus and sensorimotor cortices depends on preserved normal SoA levels, as induced here by visuomotor and perspectival congruency.

We also report bilateral SMA-hippocampal coupling. The SMA is a brain region involved in movement selection and motor preparation and has also been implicated in the SoA75,77,82. Bilateral SMA was more activated under visuomotor and perspectival congruency (independent of the reinstatement analysis) and, critically, characterized by a trial-by-trial coupling between left hippocampal reinstatement and SMA reinstatement. However, while the premotor-hippocampal coupling (i.e., with left dPMC) was specific to the condition with preserved visuomotor and perspectival congruency, the coupling between the reinstatement of the left SMA and the left hippocampus was independent of our experimental conditions. These data show that SMA-hippocampal coupling also contributes to reinstatement, but that the reinstatement mechanism is a more general one that does not reflect visuomotor and perspectival congruency and the related SoA (compared to the embodied reinstatement that is mediated by premotor-hippocampal coupling).

As expected, the disruption of visuomotor and perspectival congruency applied in the ASYNCH1PP and ASYNCH3PP conditions significantly reduced the SoA under incidental encoding (Experiments 1 and 2), similar to what is observed and reported in the literature45,75,83. Although the difference in SoA between SYNCH1PP and ASYNCH1PP was not significant under intentional encoding (Experiment 3), the SoA was again higher in SYNCH1PP than in ASYNCH1PP, showing that participants felt a higher SoA with preserved visuomotor and perspectival congruency across all experiments (see Supplementary material).

We note that we did not find a difference between conditions in recognition performance under incidental encoding instructions (Experiments 1 and 2). Although we did expect such a difference (as found in Experiment 3), we speculate that this may have resulted from different processes associated with the different instructions given at the beginning of Experiments 1-2 versus Experiment 3. Under incidental encoding, the participants were not instructed to pay particular attention to the scene and thus may have been more likely to focus on the avatar and its movements. This interpretation is supported by the fact that we found an effect of object laterality under incidental encoding (Experiments 1 and 2), but not under intentional encoding (Experiment 3). Thus, participants were better at recognizing scenes in which the change occurred on the right side (i.e., the same side where their avatar’s limb was moving). However, as our participants were above chance level in all three experiments, the fact that the attention towards the avatar was most likely emphasized during incidental encoding did not prevent them from performing the task. Moreover, we observed a reduced SoA under visuomotor and perspectival incongruency across the three experiments. Hence, even if there may have been a different focus of attention between experiments performed under incidental versus intentional encoding, the SoA was manipulated in the same way in all three experiments. Furthermore, as we observe differences in hippocampal reinstatement (Fig. 3D) between the conditions in our imaging results in Experiment 2, we assume that these differences are due to our experimental SoA manipulation at encoding, but that the incidental instruction gave rise to a smaller difference in performance, which may explain the absence of a behavioral effect in the present study. Future studies should further test differences in episodic memory, depending on SoA and incidental versus intentional encoding.

Severe EM deficits including autobiographical EM have been described previously in several patients with bilateral hippocampal damage65,84–86. Here, we investigated the impact of the bodily sensory context and of BSC on EM, using the paradigm of Experiments 1-3 in an amnestic patient, with a severe retrograde and a moderate anterograde EM deficit. Her recognition performance in all three conditions was significantly lower than that of healthy participants in Experiment 3 (Fig. 8C) and so was her autonoetic consciousness (Fig. 8D). Concerning the SoA, the patient had normal levels of BSC and showed normal modulation of her SoA ratings that depended on visuomotor and perspectival congruency, comparable to healthy participants (Fig. 8A) and comparable to previous studies in healthy subjects75,83,87,88. Critically, the patient’s EM performance was lowest in conditions when her SoA was increased (i.e., the condition with visuomotor and perspectival congruency) (Fig. 8C), thus showing the opposite behavioral pattern as observed in healthy participants, especially in Experiment 3. This selective condition-dependent modulation was further confirmed when testing her autonoetic consciousness one week after the encoding session, when the patient was only able to re-experience the scene that she had encoded with the weakest SoA and thus the strongest visuomotor and perspectival incongruency (i.e., ASYNCH3PP) (Fig. 8D).

The present patient suffered from bilateral damage and later atrophy of both hippocampi (Fig. 7A), causing her memory and autonoetic consciousness deficits. Other regions in the medial temporal lobe (i.e., amygdala, entorhinal cortex) were not significantly reduced in volume compared to a healthy age-matched control group. Her lesion overlapped with the left hippocampal region identified in this study (Fig. 7C), but did not involve the left dPMC nor the SMA. This damage changed the way the bodily sensory context and BSC during encoding impact her EM, while keeping her SoA preserved. We argue that the altered modulation of EM by visuo-motor and perspectival congruency is caused either by damage to the left hippocampus or by structural or functional changes in premotor-hippocampal coupling in the present patient. This is compatible with animal work showing that silencing hippocampal activity at retrieval prevents the reactivation of cortical areas involved during encoding processes7,89–91. Although there are many reports of single case patients with amnesia due to lesions in the medial temporal and frontal lobe65,84,86,92, to our knowledge, this study is the first to assess the effect of BSC manipulation in an amnesic patient and therefore provides novel clinical insight into the neural association between BSC and EM.

This study did not aim to separate the specific mechanisms associated with the first-person perspective or with visuo-motor synchrony, but to provide initial evidence on the neural mechanisms linking BSC and EM. Therefore, we tested the effects of graded conditions, from preserved BSC (SYNCH1PP), to moderate (ASYNCH1PP), to strong BSC alterations (ASYNCH3PP). Future studies may investigate the specific effects of perspective and congruency on the behavioral, neural, and clinical mechanisms leading to the present coupling of BSC and EM. Additionally, this study tested memory using a recognition task at a one-hour delay, whereas long-term episodic memories extend over much longer time periods. We used a one-hour delay as it corresponds to the onset of the hippocampal consolidation process associated with long-term memory16,93–95. A recognition task was chosen as it allowed us to obtain many repeated trials that were critical to obtain sufficient data for the fMRI analysis (Experiment 2). We encourage future studies to measure memory with longer delays (days, weeks, etc.) and with autobiographically relevant stimulus material presented in immersive VR scenarios. We also note that observations in single patients should be regarded with caution. The present neuropsychological-behavioral effects need to be confirmed in future clinical studies in amnesic patients and compared with age-matched healthy control groups.

In conclusion, we report that hippocampal reinstatement of encoding-related brain activity during retrieval was higher for scenes encoded with normal visuomotor and perspectival congruency and reflected recognition performance. We further linked hippocampal reinstatement with dPMC reinstatement, a key region of the SoA. Premotor-hippocampal coupling thus appears as a mechanism for the reinstatement of the bodily sensory context and the SoA during the retrieval of a past episode. These observations were corroborated and extended in a rare patient with severe amnesia caused by damage and atrophy to bilateral hippocampi, including the area of hippocampal reinstatement and coupling. Together, our study provides behavioral, imaging and clinical evidence of the involvement of bodily sensory context and BSC in the neural bases of EM.

Methods

Experimental design

All experiments consisted of three separate main sessions. The first session was a memory encoding session, which was followed by an assessment of bodily self-consciousness (BSC; see below). The last session was a recognition session carried out one hour later (Fig. 1). We also assessed autonoetic consciousness one week after the encoding session. Before the experiment, all participants underwent a familiarization session with VR for each of the four scenes (the three encoding scenes and the BSC assessment scene) that were used during the task. For Experiments 1 to 3, participants were lying in supine position (in a mock replicate of an MR scanner for Experiments 1 and 3 and in the MR scanner for Experiment 2) and equipped with VR headsets. They held a tennis ball in each hand, equipped with response buttons and a reflective marker to track their movements. The following sections explain the detailed setup for each session and experiment.

Familiarization

We performed the familiarization in two steps. First, immediately after participants entered the mock scanner (Experiments 1 and 3) or the MR scanner (Experiment 2), we made sure they could hear the instructions given through the headset (Experiments 1 and 3) or the headphones (Experiment 2). We also asked participants to perform the arm movement while an empty outdoor scene was displayed and gave feedback if the movements were too fast. This part was important for participants to get used to moving their arm inside the scanner and to make sure they would not touch the boundaries of the scanner with their arm during the experiment.

Second, prior to the encoding session, participants were immersed in the four scenes (three encoding scenes and the BSC scene), emptied of all objects that were part of the later recognition task. They were instructed to move their hands and observe the scene for 15 s, after which they were asked one binary question (“2 plus 2 equal 4”), for which they had to answer whether the statement was correct or not, followed by a second question (“How confident are you about your answer?”), to train for the two types of questions that would be asked during the experiment. Finally, they were asked to move their right arm for 30 s to train them for the rest of the experiment. During this time, when necessary, we interacted with participants to tell them if the movement was too fast or its amplitude too small. We gave the instructions in the participant’s mother tongue when possible (French or English); otherwise we used English. Each participant started the familiarization in the BSC scene in the SYNCH1PP condition. The familiarization with the encoding scenes was performed in the same conditions as those in which participants would encode them during the experiment.

Encoding session

During the encoding session, participants were instructed to keep moving their right upper limb while observing a virtual avatar animated in real-time. Upper limb movements were instructed to occur between two virtual black spheres that were displayed in the visual scene. The black spheres were aligned vertically, to the right of the virtual body, and placed at the level of the avatar’s hip (Fig. 1). We manipulated the sense of agency (SoA75,83,96) of participants by exposing them to three different scenes corresponding to the three different experimental conditions, which were characterized by different levels of sensorimotor synchrony. For this, participants were exposed to different levels of visuomotor and perspectival congruency between the movement of their right upper limb and the shown movements of the avatar’s upper limb in the virtual scene (i.e., ref. 45). In the SYNCH1PP condition, there was no visuomotor manipulation; thus, the virtual avatar was seen from a first-person perspective (1PP) and the right virtual upper limb was moving synchronously with the participant’s upper limb movements. In the ASYNCH1PP condition, the virtual avatar was also seen from a 1PP, but was moving with a visuomotor delay that varied between 800 and 1000 ms relative to the movement of the participant. In a third, control, condition (ASYNCH3PP), the avatar was seen from a third-person perspective (3PP) and was moving with a visuomotor delay that varied between 800 and 1000 ms with respect to the movement of the participant. For the 3PP, the body of the avatar was moved forward in the virtual scene to maintain the same visual angle of all objects in the scene as in the other experimental conditions. Based on previous work on SoA97–99, we expected stronger SoA in the condition with no visuomotor manipulation and naturalistic perspective (SYNCH1PP) compared to the conditions with visuomotor manipulation (ASYNCH1PP and ASYNCH3PP).
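The visuomotor delay described above can be illustrated with a minimal sketch. It is not the rendering code used in the study: the names `DELAY_RANGE_MS`, `draw_delay`, and `delayed_sample` are hypothetical, and drawing the delay uniformly from the 800 to 1000 ms range is an assumption, since the text only states that the delay varied within that range.

```python
import bisect
import random

# Delay range per condition in milliseconds (the 800-1000 ms range is from the text;
# the uniform draw below is an assumption).
DELAY_RANGE_MS = {
    "SYNCH1PP": (0.0, 0.0),
    "ASYNCH1PP": (800.0, 1000.0),
    "ASYNCH3PP": (800.0, 1000.0),
}

def draw_delay(condition, rng):
    """Draw a visuomotor delay (ms) for the given condition."""
    low, high = DELAY_RANGE_MS[condition]
    return rng.uniform(low, high)

def delayed_sample(timestamps_ms, positions, now_ms, delay_ms):
    """Return the tracked position recorded at or just before now_ms - delay_ms.

    timestamps_ms must be sorted in ascending order. If no sample is old
    enough yet, the earliest sample is returned.
    """
    idx = bisect.bisect_right(timestamps_ms, now_ms - delay_ms) - 1
    return positions[max(idx, 0)]

# Usage: tracked samples every 100 ms; positions are placeholder values.
timestamps = list(range(0, 2001, 100))
positions = list(range(len(timestamps)))
rng = random.Random(0)
delay = draw_delay("ASYNCH1PP", rng)
avatar_position = delayed_sample(timestamps, positions, 1500, delay)
```

In the synchronous condition the delay is zero, so the avatar simply displays the most recent tracked sample.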

Each of the three encoded scenes contained eighteen objects (see Supplementary Note 6 for the list of objects) and was associated with a specific experimental condition (SYNCH1PP, ASYNCH1PP, ASYNCH3PP) for each participant. This association between the encoded scene and experimental condition was pseudo-randomized across participants. For each experimental condition, each scene was presented for 30 s and repeated four times. An inter-trial interval consisting of a fixation cross appeared for five seconds in between each scene presentation to avoid potential carry-over effects from one condition to another.

Encoding was incidental in Experiments 1, 2, and 4. Thus, participants were not told that they were participating in a memory experiment or that their object recognition was going to be tested (participants were told that they were participating in a technical feasibility study testing a new VR environment). This was different in Experiment 3, where encoding was intentional. We instructed the participants to pay close attention to the scene during encoding and told them they would be tested on the scene one hour later. We did not specify which type of memory test would be performed or what kind of questions would be asked. The rest of the experimental design was the same across Experiments 1–4.

BSC assessment

Immediately after the encoding session, participants were immersed in a different outdoor scene containing eighteen new objects to avoid any memory interference with the encoding of the 3 scenes associated with the 3 experimental conditions. They were instructed to perform the same right upper limb movements and observed the same avatar in SYNCH1PP, ASYNCH1PP, and ASYNCH3PP, but now in the outdoor environment (duration 30 s). Based on previous BSC work, in Experiments 1–3, we also included a response to a threat stimulus directed towards the avatar (i.e., after 30 s, an unexpected event consisting of a virtual knife seen approaching the avatar’s trunk)51,100.

Participants had to rate their agreement with five statements regarding different aspects of BSC: (Q1) “I felt that I was controlling the virtual body” to rate their SoA toward the movement of the virtual avatar; (Q2) “I felt that the virtual body was mine” to rate their level of body ownership toward the virtual avatar; (Q3, only in Experiments 1, 2 and 3) “I was afraid to be hurt by the knife” to rate their threat response as a proxy of the subjective measure of their BSC as in ref. 51. We also included two control statements for experimental bias: (Q4) “I felt like I had more than three bodies” and (Q5) “I felt like the trees were my body”. The five statements were presented successively and in a randomized order. For each statement, a cursor was programmed to move between the two extreme points of the presented agreement scale (from 0 to 1, with an increment of 0.001) at a constant speed. The participant had to stop it at the desired position with a left button press and then validate their response with a right button press. Before validation, the participant was free to retry indefinitely with a left button press until they were satisfied with the answer. The BSC assessment was repeated twice per condition.
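The cursor-stopping response scheme can be sketched as follows. This is an illustration under stated assumptions, not the task code: the sweep duration (`sweep_s`), the back-and-forth motion of the cursor, and the fact that the cursor keeps moving after a retry are assumptions, and the function names are hypothetical. Only the 0 to 1 scale, the 0.001 increment, the constant speed, and the left-press/right-press logic come from the text.

```python
N_STEPS = 1000  # scale from 0 to 1 in increments of 0.001

def cursor_position(elapsed_s, sweep_s=4.0):
    """Cursor position on the 0-1 scale after elapsed_s seconds.

    The cursor moves at constant speed and reverses direction at each end
    of the scale (assumed); one end-to-end sweep takes sweep_s seconds.
    """
    step = int(elapsed_s / sweep_s * N_STEPS)
    phase = step % (2 * N_STEPS)
    if phase > N_STEPS:
        phase = 2 * N_STEPS - phase
    return phase / N_STEPS

def collect_rating(events, sweep_s=4.0):
    """Return the validated rating from a list of (time_s, button) events.

    A left press stops the cursor at its current position (and may be
    repeated to retry); a right press validates the last stopped position.
    Returns None if no rating was validated.
    """
    candidate = None
    for time_s, button in events:
        if button == "left":
            candidate = cursor_position(time_s, sweep_s)
        elif button == "right" and candidate is not None:
            return candidate
    return None

# Usage: the participant retries once, then validates.
rating = collect_rating([(1.0, "left"), (2.0, "left"), (3.0, "right")])
```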

Break

At the end of the encoding and BSC session, participants left the MR/mock scanner environment and had a one-hour break during which they were free, but asked to stay in the buildings of our research campus (we asked them not to consume any alcohol or drugs). After the break, they returned to the MR scanner (Experiment 2) or the mock replicate of the scanner (Experiments 1 and 3) to start the recognition session.

Recognition session

One hour after the encoding session, participants were presented with the encoded scenes again. Participants were exposed to each tested scene for 10 s, after which a white square appeared on a gray background. They were then asked to respond yes (right button press) or no (left button press) with their index finger to the question: “Is there any change in the room compared to the first time you saw it?”. Once they had answered, the white square disappeared and another scene was shown for 10 s. They were instructed just before the start of the recognition session that they would have to answer with respect to the original scenes seen during the encoding session. Some of these scenes were identical to the encoded scenes (original scene), and others were modified (changed scene). Participants performed 45 trials per condition. Among those 45 trials, 20 trials corresponded to the presentation of the original scene and 20 trials corresponded to a modified version of the original scene in which one single object was changed in either color or shape. There were 5 additional attentional trials in which two to three objects changed shape, color, or position in the scene. The attentional trials were used to encourage participants to carefully observe the entire scene instead of simply trying to spot a single object change. Attentional trials were not included in the analysis. Importantly, during the recognition session, participants did not move their arms and no avatar was shown, so as not to modulate BSC. In Experiment 1, following the first question, participants had to answer an additional question: “How confident are you about your answer?”. They had to answer using the same cursor-stopping scheme as in the BSC assessment following the encoding session. In Experiments 2, 3 and 4, the second question was replaced by a 3-second fixation cross, to reduce experimental time as well as to avoid carry-over effects.
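The composition of the recognition session can be made concrete with a short sketch. The trial counts per condition (20 original, 20 changed, 5 attentional) are taken from the text; the function name `build_recognition_trials`, the dictionary layout, and the fully shuffled trial order are assumptions for illustration, as the ordering of trials is not specified here.

```python
import random

CONDITIONS = ("SYNCH1PP", "ASYNCH1PP", "ASYNCH3PP")
TRIALS_PER_TYPE = {"original": 20, "changed": 20, "attentional": 5}

def build_recognition_trials(seed=0):
    """Build a shuffled list of recognition trials (45 per condition)."""
    trials = [
        {"condition": condition, "trial_type": trial_type}
        for condition in CONDITIONS
        for trial_type, count in TRIALS_PER_TYPE.items()
        for _ in range(count)
    ]
    random.Random(seed).shuffle(trials)  # assumed ordering scheme
    return trials

trials = build_recognition_trials()
# Attentional trials are presented but excluded from the analysis.
analysed = [t for t in trials if t["trial_type"] != "attentional"]
```

With three conditions this yields 135 presented trials, of which 120 (40 per condition) enter the analysis.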

Autonoetic consciousness session

We also tested the autonoetic consciousness of the patient and the healthy participants for each condition (and therefore each encoded scene), one week after encoding. Autonoetic consciousness was assessed using a combination of questions from well-established questionnaires for a total of 31 questions, including questions from the “Memory characteristic questionnaire” (19 questions)66, part B of the “Episodic autobiographic memory interview” (EAMI; 8 questions)67, two questions from the “affected limb intentional feeling questionnaire” (ALFq)68 and one additional question related to our research question (“I remember the movement and gesture I was doing with my body during the event,” ordinal scale, see Table S21 for a list of all the questions). In Experiments 1, 2 and 3, participants answered the questionnaire by phone (to minimize the drop-out rate and to minimize close contact with participants due to the COVID-19 pandemic). In Experiment 4, there were no pandemic-related restrictions and the patient filled in the questionnaire in the experimenter’s presence, also to ensure that all questions were well understood. We measured autonoetic consciousness for each condition for each participant and the patient.

Participants

In Experiment 1, 26 participants (7 male; mean age 23 ± 3.4 years) took part in the study, in Experiment 2, 29 participants (11 male, 3 gender-nonconforming, mean age 24 ± 3.4 years) and in Experiment 3, 27 participants (10 male, mean age 27 ± 3.5 years). All participants were right-handed as tested by the Flinders Handedness Survey (FLANDERS101) and reported no history of neurological or psychiatric disorder and no drug consumption in the 48 h preceding the experiment. All participants were compensated for their participation and provided written informed consent following the local ethical committee (Cantonal Ethical Committee of Geneva: 2015-00092, and Vaud and Valais: 2016-02541) and the declaration of Helsinki (2013). All ethical regulations relevant to human research participants were followed. We based our sample size on previously published studies with a similar experimental design or a similar research question57–60. These studies recruited between 16 and 33 participants. We aimed to recruit 24 participants in each study to ensure that we would have four participants encoding the scenes with the same scene-condition association. For the fMRI study, we recruited 5 more participants, in case we had to exclude participants based on motion or other imaging artifacts.
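The target of 24 participants follows from the number of possible scene-condition associations: three scenes paired one-to-one with three conditions give 3! = 6 associations, so 24 participants allow four per association. The sketch below illustrates this arithmetic. The scene labels and the cyclic assignment rule are hypothetical; the text only states that the association was pseudo-randomized across participants.

```python
from collections import Counter
from itertools import permutations

CONDITIONS = ("SYNCH1PP", "ASYNCH1PP", "ASYNCH3PP")
SCENES = ("scene_A", "scene_B", "scene_C")  # hypothetical scene labels

def scene_condition_assignments():
    """All one-to-one pairings of the three scenes with the three conditions."""
    return [dict(zip(SCENES, perm)) for perm in permutations(CONDITIONS)]

def assign(participant_index):
    """Cycle through the six pairings (assumed counterbalancing rule)."""
    options = scene_condition_assignments()
    return options[participant_index % len(options)]

# With 24 participants, each of the six pairings is used by four participants.
counts = Counter(tuple(assign(i).items()) for i in range(24))
```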

Immersive virtual reality

The VR paradigm and the visual stimuli were inspired by and adapted from previous EM research using immersive VR50,57,59. Particular care was given to the progressive VR immersion procedure to build a strong experience of presence in the virtual environments102,103 and to maintain it throughout the experiment. Because the immersion in VR of participants lying in an MRI scanner is particularly challenging, we based our approach on the work of ref. 62, including the methods of familiarization with the virtual environment and embodiment into a virtual body representation104.

To improve the reproducibility of our paradigm, all instructions were fully automated and provided as audio recordings through headphones. We ensured that instructions were both heard and understood during the familiarization session.

VR display

Participants were visually immersed in VR using either a head-mounted display (Oculus Rift S, refresh rate 80 Hz, resolution 1280 x 1440 per eye, 660 ppi; Experiments 1, 3 and 4) or MRI-compatible goggles composed of two full-HD resolution displays (1920 x 1200, 16:10 WUXGA) allowing stereoscopic rendering at 60 Hz with a diagonal field of view of 60° (Experiment 2; Visual System HD, NordicNeuroLab, Bergen, Norway).

VR scenes