Abstract

Since its public release on November 30, 2022, ChatGPT has shown promising potential in diverse healthcare applications despite ethical challenges, privacy issues, and possible biases. The aim of this study was to identify and assess the most influential publications in the field of ChatGPT utility in healthcare using bibliometric analysis. The study employed an advanced search on three databases, Scopus, Web of Science, and Google Scholar, to identify ChatGPT-related records in healthcare education, research, and practice between November 27 and 30, 2023. The ranking was based on the retrieved citation count in each database. The additional alternative metrics that were evaluated included (1) Semantic Scholar highly influential citations, (2) PlumX captures, (3) PlumX mentions, (4) PlumX social media and (5) Altmetric Attention Scores (AASs). A total of 22 unique records published in 17 different scientific journals from 14 different publishers were identified in the three databases. Only two publications were in the top 10 list across the three databases. Variable publication types were identified, with the most common being editorial/commentary publications (n=8/22, 36.4%). Nine of the 22 records had corresponding authors affiliated with institutions in the United States (40.9%). The range of citation count varied per database, with the highest range identified in Google Scholar (1019–121), followed by Scopus (242–88), and Web of Science (171–23). Google Scholar citations were correlated significantly with the following metrics: Semantic Scholar highly influential citations (Spearman’s correlation coefficient ρ=0.840, p<0.001), PlumX captures (ρ=0.831, p<0.001), PlumX mentions (ρ=0.609, p=0.004), and AASs (ρ=0.542, p=0.009). In conclusion, despite several acknowledged limitations, this study showed the evolving landscape of ChatGPT utility in healthcare. 
There is an urgent need for collaborative initiatives by all stakeholders involved to establish guidelines for ethical, transparent, and responsible use of ChatGPT in healthcare. The study revealed the correlation between citations and alternative metrics, highlighting the usefulness of alternative metrics as a supplement to gauge the impact of publications, even in a rapidly growing research field.

Keywords: ChatGPT in healthcare, bibliometric analysis, citation metric, publication impact, generative AI in healthcare

Introduction

The accelerated advancement in generative artificial intelligence (AI) could have a transformative impact on different scientific and societal aspects [1-3]. In particular, the utility of AI-based conversational chatbots can be paradigm-shifting in healthcare [4-6]. Consequently, the integration of generative AI models in healthcare education, research, and practice offers unique and unprecedented transformative opportunities [7,8]. For example, AI-based models can help in data analysis, refinement of clinical decision-making, and improving personalized medicine and health literacy [7,9-11]. Additionally, integration of the generative AI models in healthcare settings can help streamline the workflow with subsequent efficient and cost-effective delivery of timely care [7,9,12]. In healthcare education, AI-based conversational chatbots can offer personalized learning tailored to individual student needs and simulate complex medical scenarios for training purposes at lower costs [7,13-15]. The growing prevalence of generative AI use among university students and educators illustrates the expanding opportunities presented by this technology [16-18]. In healthcare-related research, AI-based models can aid in organizing and analyzing massive datasets with expedited novel insights, besides the ability to aid in medical writing [7,19,20].

Since its public release on November 30, 2022, ChatGPT, developed by OpenAI (San Francisco, California, US), has emerged as the most prominent and widely used example of AI-based conversational models. The wide use of ChatGPT was highlighted in various studies that investigated its utility and applications in healthcare [7,21]. ChatGPT demonstrated considerable potential in various healthcare-related applications based on its perceived usefulness and ease of use [7,16,21]. Applications of ChatGPT in healthcare that have been identified so far include facilitating health professional-patient interactions, helping in medical documentation, assisting in various research aspects, and offering medical education support [7,9,22-24].

The recent rapid increase in the number of studies exploring the potential of ChatGPT in healthcare demonstrates its potential positive impact in this research field [7,9,25,26]. However, several studies highlighted valid concerns and weaknesses that should be addressed for the successful and responsible use of ChatGPT in healthcare [7,9,20]. These limitations are mainly related to ethics, privacy, cybersecurity issues, and potential biases in ChatGPT algorithms [7,9,27]. Therefore, it is crucial to address ChatGPT-related concerns to ensure the safe, responsible, ethical, and effective utilization of this generative AI model in healthcare [7,9,28].

Bibliometric analysis is a helpful and widely used approach to assess the impact and trends of academic literature [29-31]. The investigation of bibliographic data involves tracking several metrics of scientific records, such as citation counts, authorship features, and publication outreach; thereby, bibliometric analysis can provide valuable insights into the impact and trends of research within a specific scientific field [32]. Several bibliometric measures are currently used to assess the impact and outreach of publications [29]. For example, the Semantic Scholar (SS) highly influential citations (HICs) tool can be used to highlight references that have a significant impact on the citing publication [33,34]. Another measure is PlumX from Plum Analytics (Philadelphia, Pennsylvania, US), which offers the following metrics to highlight a publication’s impact [35-39]: (1) the “PlumX Captures” metric, which measures engagement with a publication by tracking publication downloads, saves, and bookmarks; (2) the “PlumX Mentions” metric, which shows the publication’s relevance in society as reflected by the frequency of its use by various digital media platforms; and (3) the “PlumX Social Media” metric, which assesses social media interactions [40]. In addition, the Altmetric Attention Score (AAS) (Altmetric Limited, London, UK) aggregates attention across diverse platforms, weighting sources differently, to indicate the publication’s social and news impact [41-43].

The use of bibliometric analysis can be a valuable tool to systematically map the landscape of research tackling ChatGPT applications in healthcare [25]. The potential insights of bibliometric analysis can provide an overview of the key research themes and influential publications within an emerging and swiftly evolving research subject, namely AI in healthcare [44]. Additionally, bibliometric analysis can help to identify gaps in research and shape the trajectory of ongoing and future studies addressing the utility of ChatGPT in healthcare [25,45,46].

Therefore, the aim of this study was to conduct a bibliometric analysis to identify and assess the most influential publications addressing ChatGPT utility in healthcare. To achieve this aim, this study relied on a systematic search across prominent and widely used scientific databases (i.e., Scopus, Web of Science, and Google Scholar), with the search process coinciding with the first anniversary of ChatGPT’s public release [47,48]. A robust bibliometric analysis of publications can offer valuable insights into the research trends involving ChatGPT applications and challenges in healthcare. Bibliometric analysis can also help to identify the topics that received the most attention from researchers, media, and the general public. Additionally, the identification of the most influential publications in this growing field can help delineate the current and future research priorities, which in turn can help facilitate the successful integration of AI technologies, including ChatGPT, in healthcare.

Methods

Study design

This descriptive bibliometric analysis study was designed to identify and analyze the top publications addressing ChatGPT utility in healthcare that were published over a period of one year. The classification was based on the citation counts in three academic databases (Scopus, Web of Science, and Google Scholar). These scientific databases were selected based on their extensive coverage of scholarly literature, including healthcare and technology [47,48]. While PubMed/MEDLINE is considered a significant and widely-used academic database in healthcare research, the decision to exclude this relevant database from the search process was based on the lack of a clear feature for direct retrieval of citation counts in PubMed/MEDLINE. The search process concluded on November 30, 2023, ensuring the inclusion of all relevant publications up to the first anniversary of ChatGPT’s public release [21].

Detailed search strategies

In Scopus, the search strategy focused on the article title, abstract, and keywords. The exact search string was as follows: (TITLE-ABS-KEY (“ChatGPT” OR “GPT-3” OR “GPT-3.5” OR “GPT-4”) AND TITLE-ABS-KEY (“healthcare” OR “medical” OR “health care”)). The search in Scopus was conducted at 11:08 GMT on November 27, 2023. For the Web of Science database, the search was conducted using the topic search (TS) field. The exact search was as follows: TS=(“ChatGPT” OR “GPT-3” OR “GPT-3.5” OR “GPT-4”) AND TS=(“healthcare” OR “health care”). This search was completed at 11:27 GMT on November 27, 2023. The Google Scholar search was conducted using the Publish or Perish software (Version 8) [49]. The search covered the years 2022–2023 and was concluded at 10:36 GMT on November 27, 2023. In the “Title words” function of the software, the following search terms were used: (“ChatGPT” OR “GPT-3” OR “GPT-3.5” OR “GPT-4”) AND (“healthcare” OR “health care”).

The data from the three databases were retrieved separately as comma-separated values (CSV) files, and the results were sorted based on citation counts in descending order. Then, the top 10 records in each database were identified based on the screening of the title and abstract. For inclusion in this study, the record must have evaluated any aspect of ChatGPT applications in healthcare education, research, or practice [7].
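The ranking step described above can be sketched in a few lines of Python. This is only a minimal illustration under stated assumptions: the column names (`title`, `citations`), the helper `top_cited`, and the sample rows are hypothetical, and the manual title and abstract screening performed in the study is not modeled.

```python
import csv
import io


def top_cited(csv_text, n=10, citation_column="citations"):
    """Return the n rows with the highest citation counts, in descending order."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    # Convert the citation field to int so the sort is numeric, not lexicographic.
    for row in rows:
        row[citation_column] = int(row[citation_column])
    rows.sort(key=lambda row: row[citation_column], reverse=True)
    return rows[:n]


# Hypothetical export; a real database export would contain many more columns.
sample_export = """title,citations
Record A,12
Record B,242
Record C,88
Record D,7
"""

for row in top_cited(sample_export, n=3):
    print(row["citations"], row["title"])
```

In practice, each database export uses its own column names, so the `citation_column` argument would need to be adjusted per source.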

Data on the 2022 journal impact factor was obtained via the Clarivate Journal Citation Reports [50], while the 2022 CiteScore data were obtained directly from Scopus [51].

Alternative metrics retrieval

For the top ten records identified in each database, a manual search for the alternative metrics was conducted. These alternative metrics included (1) the highly influential citations (SS HICs) identified through Semantic Scholar [52]; (2) the PlumX metrics sourced from Scopus [40,51]; and (3) the Altmetric Attention Scores (AASs) obtained directly from each respective record, if available [41]. The SS HICs are citations characterized by having a significant impact on the citing record. The determination of HICs was performed by a machine-learning model that analyzed multiple factors, including the frequency of citations and the context in which the reference was used [52,53]. Each unique record title was manually entered into the Semantic Scholar search tool, and the corresponding SS HICs metric was retrieved directly for each title as of November 30, 2023.

For the PlumX metrics, the “PlumX Captures” tracks and aggregates the frequency of downloads, saves, or bookmarks of a record, giving an indication of engagement in the scientific community [40]. The “PlumX Mentions” is a metric that assesses the frequency with which a publication is being mentioned or referenced in news media, blogs, and Wikipedia, reflecting the broader societal engagement [40]. The “PlumX Social Media” metric assesses social media engagement via tracking shares, likes, posts, and other forms of social media interactions to measure the publication visibility and impact in social media (e.g., Vimeo, Facebook, Amazon, Goodreads, SourceForge, YouTube, and Figshare) [40]. The PlumX metrics were retrieved manually through individual entry of each unique record title into the Scopus search tool. This was followed by a manual inspection of the PlumX metrics for each record under the Scopus sub-title “Metrics” for each record on November 30, 2023. The AAS is a composite metric by Altmetric Limited (London, UK) that measures the attention received by a publication across various social media and digital platforms, including news media, social media, policy documents, and online forums, reflecting broad visibility [41,43]. The AAS for each unique record was manually retrieved from the central position of the Altmetric donut on each publication page on November 30, 2023.

To unify the final comparisons, Google Scholar citations, as of November 30, 2023, were used for the final included publications, with data retrieved directly from Google Scholar for each publication approximately between 11:00 and 12:00 GMT. This decision was made since all the retrieved records were available on Google Scholar with the exception of a single reference, for which the citation count was obtained directly through Crossref (Lynnfield, Massachusetts, US) on the publication website [54].

Statistical and data analysis

The statistical analysis was conducted using IBM SPSS Statistics for Windows, Version 26.0 (IBM Corp, New York, US). The level of statistical significance was set at 0.05. Correlations between the citation counts and the alternative metrics were assessed using Kendall’s tau-b (τb) correlation coefficient and Spearman’s rank-order correlation coefficient (ρ), given the non-normal distribution of the majority of variables as assessed using the Shapiro-Wilk test. The association between publication metrics, treated as scale variables, and the region of the corresponding authors was assessed using the Kruskal-Wallis H (K-W) test.

Results

Top 10 records in Scopus, Web of Science, and Google Scholar by citation count

The top 10 identified records in Scopus varied in citation count from 242 to 88 citations (Table 1). Based on the first affiliations of the corresponding authors, the records were mostly US-based (n=5, 50%). Record types included editorial/comment (n=3, 30%), special/brief report or perspective (n=3, 30%), original article/investigation (n=2, 20%), and review (n=2, 20%). The 10 records were published in eight different scientific journals, with 2022 CiteScores ranging from 0.9 to 134.4, and the journals were published by eight different publishers (Table 1).

Table 1.

Top ten ChatGPT records in healthcare in the Scopus database

| Authors | Title | Scopus citation count | Record type | Affiliation and country of the corresponding author | Journal, (CiteScore), publisher |
|---|---|---|---|---|---|
| Sallam [7] | ChatGPT utility in healthcare education, research, and practice: Systematic review on the promising perspectives and valid concerns | 242 | Review | The University of Jordan, Jordan | Healthcare (Switzerland), (2.7), MDPI |
| Gilson et al. [55] | How does ChatGPT perform on the United States Medical Licensing Examination? The implications of large language models for medical education and knowledge assessment | 225 | Article | Yale University, US | JMIR Medical Education, (5.0), JMIR Publications Inc. |
| Lee et al. [56] | Benefits, limits, and risks of GPT-4 as an AI chatbot for medicine | 174 | Special report | Microsoft Research, US | New England Journal of Medicine, (134.4), Massachusetts Medical Society |
| Shen et al. [57] | ChatGPT and other large language models are double-edged swords | 157 | Editorial | New York University, US | Radiology, (34.2), Radiological Society of North America Inc. |
| Patel and Lam [22] | ChatGPT: The future of discharge summaries? | 145 | Comment | St Mary’s Hospital, UK | The Lancet Digital Health, (33.1), Elsevier Ltd |
| Liebrenz et al. [58] | Generating scholarly content with ChatGPT: Ethical challenges for medical publishing | 131 | Comment | University of Bern, Switzerland | The Lancet Digital Health, (33.1), Elsevier Ltd |
| Ayers et al. [59] | Comparing physician and artificial intelligence chatbot responses to patient questions posted to a public social media forum | 129 | Original Investigation | University of California, US | JAMA Internal Medicine, (43.2), American Medical Association |
| Biswas [60] | ChatGPT and the future of medical writing | 124 | Perspective | University of Tennessee, US | Radiology, (34.2), Radiological Society of North America Inc. |
| Cascella et al. [61] | Evaluating the feasibility of ChatGPT in healthcare: An analysis of multiple clinical and research scenarios | 112 | Brief report | University of Parma, Italy | Journal of Medical Systems, (11.8), Springer |
| Ray [62] | ChatGPT: A comprehensive review on background, applications, key challenges, bias, ethics, limitations and future scope | 88 | Review | Sikkim University, India | Internet of Things and Cyber-Physical Systems, (0.9), KeAi Communications Co. |

AI: artificial intelligence

The top 10 identified records in Web of Science varied in citation count from 171 to 23 citations (Table 2). Based on the first affiliations of the corresponding authors, the records were varied, with two being US-based (n=2/9, 22.2%) and two being India-based (n=2/9, 22.2%) records. Record types varied and included editorial/comment (n=4, 40%), review (n=3, 30%), original article (n=2, 20%), and a brief report (n=1, 10%). The 10 records were published in 8 different scientific journals with a 2022 impact factor ranging from 1.2 to 82.9, and the journals were published by 6 different publishers.

Table 2.

Top ten ChatGPT records in healthcare in the Web of Science database

| Authors | Title | Web of Science Core citation count | Record type | Affiliation and country of the corresponding author | Journal, (impact factor), publisher |
|---|---|---|---|---|---|
| Sallam [7] | ChatGPT utility in healthcare education, research, and practice: Systematic review on the promising perspectives and valid concerns | 171 | Review | The University of Jordan, Jordan | Healthcare (Switzerland), (2.8), MDPI |
| Alkaissi and McFarlane [63] | Artificial hallucinations in ChatGPT: Implications in scientific writing | 102 | Editorial | Kings County Hospital Center, US | Cureus Journal of Medical Science, (1.2), Springer |
| Cascella et al. [61] | Evaluating the feasibility of ChatGPT in healthcare: An analysis of multiple clinical and research scenarios | 78 | Brief report | University of Parma, Italy | Journal of Medical Systems, (5.3), Springer |
| Nature Medicine Editorial [54] | Will ChatGPT transform healthcare? | 48 | Editorial | NA | Nature Medicine, (82.9), Nature portfolio |
| Korngiebel and Mooney [64] | Considering the possibilities and pitfalls of Generative Pre-trained Transformer 3 (GPT-3) in healthcare delivery | 45 | Comment | The Hastings Center, Garrison, US | npj Digital Medicine, (15.2), Nature Research |
| Dave et al. [23] | ChatGPT in medicine: An overview of its applications, advantages, limitations, future prospects, and ethical considerations | 34 | Review | Bukovinian State Medical University, Ukraine | Frontiers in Artificial Intelligence, (4.0), Frontiers Media SA |
| Vaishya et al. [65] | ChatGPT: Is this version good for healthcare and research? | 31 | Article | Indraprastha Apollo Hospitals, India | Diabetes and Metabolic Syndrome-Clinical Research and Reviews, (10.0), Oxford University Press |
| Hopkins et al. [66] | Artificial intelligence chatbots will revolutionize how cancer patients access information: ChatGPT represents a paradigm-shift | 26 | Commentary | Flinders University, Australia | JNCI Cancer Spectrum, (4.4), Oxford University Press |
| Sinha et al. [67] | Applicability of ChatGPT in assisting to solve higher order problems in pathology | 24 | Article | All India Institute of Medical Sciences, India | Cureus Journal of Medical Science, (1.2), Springer |
| Temsah et al. [68] | Overview of early ChatGPT’s presence in medical literature: Insights from a hybrid literature review by ChatGPT and human experts | 23 | Review | Universiti Sains Malaysia, Malaysia | Cureus Journal of Medical Science, (1.2), Springer |

The top 10 identified records in Google Scholar varied in citation count from 1019 to 121 citations (Table 3). Based on the first affiliations of the corresponding authors, the records were variable, with three US-based (30%) and two Italy-based (20%) records. Record types varied, including editorial/comment (n=4, 40%), brief report/perspective/special communication (n=3, 30%), original article (n=2, 20%), and a review (n=1, 10%). The 10 records were published in 8 different scientific journals, and the journals were published by 8 different publishers.

Table 3.

Top ten ChatGPT records in healthcare in the Google Scholar database

| Authors | Title | GS citation count | Record type | Affiliation and country of the corresponding author | Journal, publisher |
|---|---|---|---|---|---|
| Kung et al. [69] | Performance of ChatGPT on USMLE: Potential for AI-assisted medical education using large language models | 1019 | Article | AnsibleHealth, Inc., Mountain View, US | PLOS Digital Health, PLOS |
| Sallam [7] | ChatGPT utility in healthcare education, research, and practice: Systematic review on the promising perspectives and valid concerns | 523 | Review | The University of Jordan, Jordan | Healthcare (Switzerland), MDPI |
| Gilson et al. [55] | How does ChatGPT perform on the United States Medical Licensing Examination? The implications of large language models for medical education and knowledge assessment | 430 | Article | Yale University, US | JMIR Medical Education, JMIR Publications Inc. |
| Shen et al. [57] | ChatGPT and other large language models are double-edged swords | 309 | Editorial | New York University, US | Radiology, Radiological Society of North America Inc. |
| Patel and Lam [22] | ChatGPT: The future of discharge summaries? | 255 | Comment | St Mary’s Hospital, UK | The Lancet Digital Health, Elsevier Ltd |
| Liebrenz et al. [58] | Generating scholarly content with ChatGPT: Ethical challenges for medical publishing | 255 | Comment | University of Bern, Switzerland | The Lancet Digital Health, Elsevier Ltd |
| Cascella et al. [61] | Evaluating the feasibility of ChatGPT in healthcare: An analysis of multiple clinical and research scenarios | 249 | Brief report | University of Parma, Italy | Journal of Medical Systems, Springer |
| Khan et al. [70] | ChatGPT - reshaping medical education and clinical management | 180 | Special Communication | PharmEvo (Pvt) Ltd, Pakistan | Pakistan Journal of Medical Sciences, Professional Medical Publications |
| Eysenbach [14] | The role of ChatGPT, generative language models, and artificial intelligence in medical education: A conversation with ChatGPT and a call for papers | 179 | Editorial | JMIR Publications, Canada | JMIR Medical Education, JMIR Publications Inc. |
| De Angelis et al. [71] | ChatGPT and the rise of large language models: The new AI-driven infodemic threat in public health | 121 | Perspective | University of Pisa, Italy | Frontiers in Public Health, Frontiers Media SA |

AI: artificial intelligence; GS: Google Scholar; USMLE: United States Medical Licensing Examination

Compiled list of top unique records across the three databases and emerging topics for future research

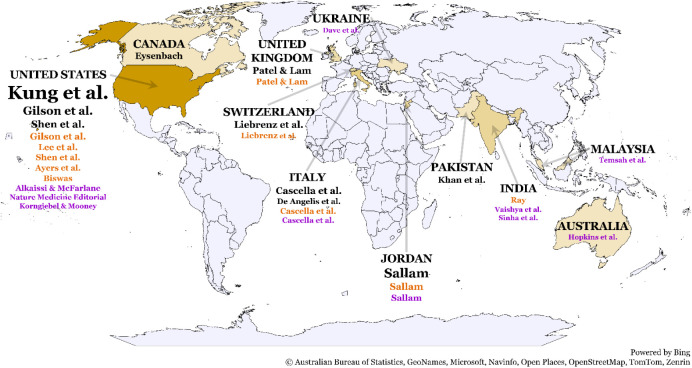

The number of unique records identified in the three databases was 22. Only two of the 22 records appeared in the top ten list in all three databases (9.1%) [7,61], while four appeared in two databases (18.2%) [22,55,57,58]. The geographic distribution of the top records across the three databases based on the affiliations of the corresponding authors varied, with the most common being US-based (Figure 1).
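The overlap counts reported above can be re-derived from Tables 1 to 3 with simple set operations. The sketch below is illustrative only: keying each record by its first-listed author label is an assumption that works here because no label is shared by two different records, and the variable names are hypothetical.

```python
from collections import Counter

# First-listed author labels of the top 10 records, copied from Tables 1-3.
scopus = {"Sallam", "Gilson", "Lee", "Shen", "Patel and Lam", "Liebrenz",
          "Ayers", "Biswas", "Cascella", "Ray"}
web_of_science = {"Sallam", "Alkaissi and McFarlane", "Cascella",
                  "Nature Medicine Editorial", "Korngiebel and Mooney", "Dave",
                  "Vaishya", "Hopkins", "Sinha", "Temsah"}
google_scholar = {"Kung", "Sallam", "Gilson", "Shen", "Patel and Lam",
                  "Liebrenz", "Cascella", "Khan", "Eysenbach", "De Angelis"}

# Count in how many top-10 lists each record appears.
appearances = Counter(
    name for database in (scopus, web_of_science, google_scholar) for name in database
)
unique_records = set(appearances)
in_all_three = sorted(name for name, count in appearances.items() if count == 3)
in_two = sorted(name for name, count in appearances.items() if count == 2)

print(len(unique_records))  # 22
print(in_all_three)         # ['Cascella', 'Sallam']
print(in_two)               # ['Gilson', 'Liebrenz', 'Patel and Lam', 'Shen']
print(f"{len(in_all_three) / len(unique_records):.1%}")  # 9.1%
print(f"{len(in_two) / len(unique_records):.1%}")        # 18.2%
```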

Figure 1.

The top 10 healthcare-related ChatGPT records based on citation count across Scopus, Web of Science, and Google Scholar databases. Records in Scopus are shown in orange, Web of Science in violet, and Google Scholar in black. The font size of the authors is relative to the citation count. The map was generated in Microsoft Excel, powered by Bing, ©GeoNames, Microsoft, Navinfo, TomTom, and Wikipedia.

Six themes emerged from the final list of 22 ChatGPT healthcare-related influential publications. The first theme is the enhancement of healthcare education, which involves the exploration of ChatGPT’s potential to improve academic performance and cost-effectiveness in education [7,55,69]. The second theme is assistance in academic editorial and review processes, which includes the investigation of ChatGPT’s utility as an academic editor or peer reviewer in healthcare research and developing policies for its ethical integration to ensure scientific integrity [7,57,58,61,63,65]. The third theme focuses on improving patient engagement and interactions, entailing the evaluation of the effectiveness of ChatGPT in patient communications, understanding patient preferences for AI versus human support, and assessing the impact of ChatGPT on improving health literacy [57,62,66]. The fourth theme addresses the proactive mitigation of misinformation, which involves addressing the risks of misinformation from ChatGPT and developing comprehensive guidelines for its responsible use in healthcare [58,71]. The fifth theme, benchmarking the performance of ChatGPT in healthcare, involves establishing standard methodologies for the evaluation of ChatGPT performance in various tasks in different healthcare settings [66]. The last theme is the effective integration of ChatGPT in healthcare settings, which involves establishing best practices for effective integration of ChatGPT with human expertise in healthcare, including training for ethical and judicious use, developing quality standards, and engaging stakeholders to maximize ChatGPT benefits while mitigating its risks [54,64,71].

Correlation between Google Scholar citation count and alternative metrics

The full bibliometrics for the final 22 influential records retrieved across the three scientific databases are presented in Table 4.

Table 4.

Full bibliometrics of the 22 ChatGPT-related healthcare influential records

| Authors | Title | GS citation count | SS HICs | PlumX captures | PlumX mentions | PlumX social media | AAS |
|---|---|---|---|---|---|---|---|
| Kung et al. [69] | Performance of ChatGPT on USMLE: Potential for AI-assisted medical education using large language models | 1032 | 35 | - | - | - | 1541 |
| Sallam [7] | ChatGPT utility in healthcare education, research, and practice: Systematic review on the promising perspectives and valid concerns | 540 | 17 | 675 | 3 | 13 | 51 |
| Gilson et al. [55] | How does ChatGPT perform on the United States Medical Licensing Examination? The implications of large language models for medical education and knowledge assessment | 441 | 14 | 550 | 113 | 52 | 478 |
| Lee et al. [56] | Benefits, limits, and risks of GPT-4 as an AI chatbot for medicine | 381 | 8 | 278 | 60 | 0 | 775 |
| Alkaissi and McFarlane [63] | Artificial hallucinations in ChatGPT: Implications in scientific writing | 334 | 5 | 319 | 18 | 0 | 212 |
| Shen et al. [57] | ChatGPT and other large language models are double-edged swords | 317 | 5 | 229 | 9 | 0 | 95 |
| Ray [62] | ChatGPT: A comprehensive review on background, applications, key challenges, bias, ethics, limitations and future scope | 306 | 10 | 734 | 20 | 0 | 7 |
| Ayers et al. [59] | Comparing physician and artificial intelligence chatbot responses to patient questions posted to a public social media forum | 284 | 4 | 327 | 573 | 0 | 6086 |
| Patel and Lam [22] | ChatGPT: The future of discharge summaries? | 261 | 1 | 218 | 10 | 37 | 143 |
| Liebrenz et al. [58] | Generating scholarly content with ChatGPT: Ethical challenges for medical publishing | 255 | 6 | 288 | 3 | 34 | 50 |
| Cascella et al. [61] | Evaluating the feasibility of ChatGPT in healthcare: An analysis of multiple clinical and research scenarios | 253 | 4 | 394 | 2 | 0 | 19 |
| Biswas [60] | ChatGPT and the future of medical writing | 253 | 5 | 198 | 12 | 0 | 389 |
| Eysenbach [14] | The role of ChatGPT, generative language models, and artificial intelligence in medical education: A conversation with ChatGPT and a call for papers | 188 | 4 | 384 | 109 | 9 | 463 |
| Khan et al. [70] | ChatGPT - reshaping medical education and clinical management | 184 | 4 | - | - | - | 2 |
| De Angelis et al. [71] | ChatGPT and the rise of large language models: The new AI-driven infodemic threat in public health | 124 | 5 | 138 | 1 | 0 | 18 |
| Dave et al. [23] | ChatGPT in medicine: An overview of its applications, advantages, limitations, future prospects, and ethical considerations | 121 | 0 | 213 | 1 | 18 | 94 |
| Korngiebel and Mooney [64] | Considering the possibilities and pitfalls of Generative Pre-trained Transformer 3 (GPT-3) in healthcare delivery | 111 | 1 | 137 | 4 | 0 | 94 |
| Vaishya et al. [65] | ChatGPT: Is this version good for healthcare and research? | 92 | 2 | 139 | 1 | 24 | 14 |
| Hopkins et al. [66] | Artificial intelligence chatbots will revolutionize how cancer patients access information: ChatGPT represents a paradigm-shift | 78 | 1 | 113 | 7 | 1 | 73 |
| Nature Medicine Editorial [54] | Will ChatGPT transform healthcare? | 70 | 0 | 93 | 10 | 0 | 134 |
| Sinha et al. [67] | Applicability of ChatGPT in assisting to solve higher order problems in pathology | 64 | 0 | 75 | 0 | 0 | 3 |
| Temsah et al. [68] | Overview of early ChatGPT’s presence in medical literature: Insights from a hybrid literature review by ChatGPT and human experts | 49 | 3 | 96 | 0 | 0 | 10 |

AAS: altmetric attention score; AI: artificial intelligence; GS: Google Scholar; SS HICs: Semantic Scholar highly influential citations; USMLE: United States Medical Licensing Examination

To determine the possible correlations between the latest Google Scholar citations as of November 30, 2023 and the alternative metrics (PlumX, SS HICs, and AASs), Kendall’s tau-b (τb) correlation coefficient and Spearman’s rank-order correlation coefficient (ρ) were used. Significant positive correlations were detected between the Google Scholar citations and SS HICs (τb=0.696, ρ=0.840, p<0.001 for both), PlumX captures (τb=0.670, ρ=0.831, p<0.001 for both), PlumX mentions (τb=0.456, p=0.006, ρ=0.609, p=0.004), and AASs (τb=0.396, p=0.010, ρ=0.542, p=0.009) (Table 5). The PlumX mentions and AAS were significantly associated with the region of the corresponding author’s affiliation, with the highest being in the United States or Canada (Table 6).
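As an illustration of how the two rank correlation coefficients are obtained, the following sketch recomputes ρ and τb for the Google Scholar citation counts and SS HICs listed in Table 4. It is a plain-Python approximation of the textbook definitions rather than the SPSS procedure used in the study; the function and variable names are hypothetical, and p-values are not computed.

```python
from math import sqrt


def average_ranks(values):
    """Rank values from 1 to n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # sorted positions i..j are 0-based
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Spearman's rho: Pearson correlation of the tie-averaged ranks."""
    rx, ry = average_ranks(x), average_ranks(y)
    mean_x, mean_y = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(rx, ry))
    var_x = sum((a - mean_x) ** 2 for a in rx)
    var_y = sum((b - mean_y) ** 2 for b in ry)
    return cov / sqrt(var_x * var_y)


def kendall_tau_b(x, y):
    """Kendall's tau-b: pair counting with a correction for ties in x and y."""
    concordant = discordant = tied_x = tied_y = 0
    n = len(x)
    for i in range(n):
        for j in range(i + 1, n):
            dx, dy = x[i] - x[j], y[i] - y[j]
            if dx == 0:
                tied_x += 1
            if dy == 0:
                tied_y += 1
            if dx * dy > 0:
                concordant += 1
            elif dx * dy < 0:
                discordant += 1
    total_pairs = n * (n - 1) // 2
    return (concordant - discordant) / sqrt(
        (total_pairs - tied_x) * (total_pairs - tied_y)
    )


# Values copied from Table 4, in table order (Kung et al. first, Temsah et al. last).
gs_citations = [1032, 540, 441, 381, 334, 317, 306, 284, 261, 255, 253,
                253, 188, 184, 124, 121, 111, 92, 78, 70, 64, 49]
ss_hics = [35, 17, 14, 8, 5, 5, 10, 4, 1, 6, 4,
           5, 4, 4, 5, 0, 1, 2, 1, 0, 0, 3]

rho = spearman_rho(gs_citations, ss_hics)
tau_b = kendall_tau_b(gs_citations, ss_hics)
print(f"rho = {rho:.3f}")      # rho = 0.840
print(f"tau-b = {tau_b:.3f}")  # tau-b = 0.696
```

Both values agree with the coefficients reported for Google Scholar citations versus SS HICs. For the PlumX metrics, the two records without PlumX data would need to be excluded before applying the same functions.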

Table 5.

Correlation between Google Scholar citation count and alternative metrics

| Metric | Statistic | GS citation count | SS HICs | PlumX captures | PlumX mentions | PlumX social media | AAS |
|---|---|---|---|---|---|---|---|
| GS citation count | Coefficient | - | 0.696** | 0.670** | 0.456** | 0.144 | 0.396* |
| | p-value | | <0.001 | <0.001 | 0.006 | 0.418 | 0.010 |
| SS HICs | Coefficient | 0.840** | - | 0.554** | 0.295 | 0.034 | 0.190 |
| | p-value | <0.001 | | 0.001 | 0.081 | 0.853 | 0.231 |
| PlumX captures | Coefficient | 0.831** | 0.739** | - | 0.406* | 0.195 | 0.237 |
| | p-value | <0.001 | <0.001 | | 0.013 | 0.269 | 0.144 |
| PlumX mentions | Coefficient | 0.609** | 0.411 | 0.547* | - | 0.007 | 0.745** |
| | p-value | 0.004 | 0.072 | 0.013 | | 0.971 | <0.001 |
| PlumX social media | Coefficient | 0.169 | 0.056 | 0.244 | 0.005 | - | 0.072 |
| | p-value | 0.476 | 0.813 | 0.299 | 0.984 | | 0.685 |
| AAS | Coefficient | 0.542** | 0.270 | 0.287 | 0.805** | 0.092 | - |
| | p-value | 0.009 | 0.225 | 0.219 | <0.001 | 0.699 | |

Values above the diagonal are Kendall’s tau-b (τb) correlation coefficients; values below the diagonal are Spearman’s correlation coefficients (ρ).

AAS: altmetric attention score; GS: Google Scholar; SS HICs: Semantic Scholar highly influential citations

*Correlation is significant at p=0.05

**Correlation is significant at p=0.01

Table 6.

Association of publication metrics with the region of the affiliation of the corresponding author

| Metric | US or Canada (mean±SD) | Australia, Italy, UK, Switzerland, or Ukraine (mean±SD) | India, Jordan, Malaysia, or Pakistan (mean±SD) | p-value a |
|---|---|---|---|---|
| GS citation count | 341.1±268.83 | 182±83.08 | 205.83±189.66 | 0.214 |
| SS HICs | 8.1±10.2 | 2.83±2.48 | 6±6.36 | 0.456 |
| PlumX captures | 279.44±137.93 | 227.33±102.77 | 343.8±330.74 | 0.884 |
| PlumX mentions | 100.89±182.22 | 4±3.69 | 4.8±8.58 | 0.007 |
| PlumX social media | 6.78±17.22 | 15±17.32 | 7.4±10.85 | 0.291 |
| AAS | 1026.7±1830.26 | 66.17±48.02 | 14.5±18.43 | <0.001 |

AAS: altmetric attention score; GS: Google Scholar; SS HICs: Semantic Scholar highly influential citations

a: Analyzed using the Kruskal-Wallis H (K-W) test
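For readers unfamiliar with the Kruskal-Wallis H test used in Table 6, the following is a minimal pure-Python sketch of the statistic for three groups. It is not the software used in the study, and the three region lists are hypothetical values, not the study data. The function name `kruskal_wallis_three_groups` is illustrative. The sketch assumes exactly three groups, because the chi-square approximation with two degrees of freedom then has the closed form p = exp(-H/2).

```python
from collections import Counter
from math import exp


def average_ranks(values):
    """Return 1-based ranks, giving tied values the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        mean_rank = (start + end) / 2 + 1
        for k in range(start, end + 1):
            ranks[order[k]] = mean_rank
        start = end + 1
    return ranks


def kruskal_wallis_three_groups(g1, g2, g3):
    """Return (H, p) for three independent groups, with tie correction.

    The p-value uses the chi-square approximation with df = 2, whose
    survival function is exp(-H / 2).
    """
    groups = [list(g1), list(g2), list(g3)]
    pooled = [v for g in groups for v in g]
    n = len(pooled)
    ranks = average_ranks(pooled)

    # Sum of (rank sum squared / group size) over the three groups.
    total = 0.0
    start = 0
    for g in groups:
        rank_sum = sum(ranks[start:start + len(g)])
        total += rank_sum ** 2 / len(g)
        start += len(g)
    h = 12 / (n * (n + 1)) * total - 3 * (n + 1)

    # Tie correction: divide H by 1 - sum(t^3 - t) / (n^3 - n).
    tie_term = sum(t ** 3 - t for t in Counter(pooled).values())
    correction = 1 - tie_term / (n ** 3 - n)
    if correction == 0:
        raise ValueError("all observations are identical; H is undefined")
    h /= correction
    return h, exp(-h / 2)


# Hypothetical attention scores for three regions (NOT data from this study).
region_a = [950, 40, 1200, 310]
region_b = [60, 75, 20]
region_c = [5, 12, 30]

h_stat, p_value = kruskal_wallis_three_groups(region_a, region_b, region_c)
print("H =", round(h_stat, 3), "p =", round(p_value, 4))
```

Because the test compares ranks rather than means, it suits metrics such as the AAS, where the standard deviation exceeds the mean and the distribution is clearly skewed.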

Discussion

In the current study, bibliometric analysis was used to examine the growing literature that addressed the utility of ChatGPT in healthcare over a single year. Bibliometric analysis used in the current study involved a systematic search across three prominent academic databases, with a ranking of influential publications based on the frequency of citations received by the retrieved publications [72-74]. The use of bibliometric analysis in this study was justified by the previous evidence highlighting the valuable role of this approach in enhancing the collective understanding of scientific research dynamics, especially in growing research topics [75-77].

The major finding in this study was the demonstration of the rapid growth of literature addressing ChatGPT in healthcare and the swift impact of publications on this emerging research topic. Marking the first anniversary of ChatGPT’s public release and its recognition as the platform with the fastest-growing user base on record [78], the current study pointed to the intricate interplay between AI and healthcare. This dynamic interaction promises major improvements in medical science and patient care, but it also requires careful handling of the expected technical, ethical, and regulatory issues [79].

Another major finding in this study was the identification of the seminal study by Kung et al., which highlighted the impressive performance of ChatGPT in the United States Medical Licensing Examination (USMLE), as the most influential publication [69]. In less than a year, the study by Kung et al. received more than 1,000 citations in Google Scholar, underlining the potential of ChatGPT in medical education, a topic that is gaining considerable momentum [7,20,55,69,80-82]. Notably, the publication by Kung et al. was not identified in either the Scopus or the Web of Science database. The absence of this publication from these prominent academic databases can be attributed to its publication in the newly established, yet-to-be-indexed scientific journal, PLOS Digital Health [69]. This result suggests the necessity of including Google Scholar in bibliometric analyses and systematic reviews, considering its comprehensive coverage and immediate indexing of various scholarly sources [83].

Additionally, a systematic review that explored the applications of ChatGPT in healthcare education, research, and practice was identified in this study as one of the most frequently cited publications across the three databases, being the most cited publication in both Web of Science and Scopus [7]. Despite being published in a journal with a relatively modest impact factor (2.8 in 2022) and CiteScore (2.7 in 2022), the aforementioned review accumulated a large number of citations within a short period of time. This result suggests that influential research can transcend the traditional metrics of journal impact [84,85].

Geographical analysis based on the affiliations of the corresponding authors of the top publications in this study revealed a wide range of contributing countries, despite the relative predominance of US-based publications [54-57,59,60,63,64,69]. This result may reflect the leading role and influence of US-based research, which benefits from advanced research infrastructure and funding opportunities [86]. Nevertheless, the presence of an additional ten countries contributing to influential healthcare-related ChatGPT research points to the global interest in this emerging scientific field. This diversity appears valuable since the utility of ChatGPT in healthcare should be guided by the consideration of varied healthcare systems and patient demographics worldwide.

The current study identified 22 unique records in the top healthcare-related ChatGPT publications list, a figure that exceeded the 10 publications that would be expected had the top 10 lists of the three searched databases fully overlapped. This result demonstrates the notable variation in citation counts across different scientific databases [87]. Therefore, variability in citations per database highlights the necessity for reliance on multiple databases in bibliometric analysis to avoid biases in publication impact evaluation [88]. Additionally, it is important to emphasize that while a high citation count can be indicative of a high impact, the current study showed clear discrepancies in citation counts per database. This result suggests that sole dependence on conventional citation metrics to assess the influence of publications is an inadequate approach, particularly in emerging research topics such as ChatGPT utility in healthcare [89].
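The relationship between per-database top lists and the number of unique records can be sketched with simple set operations. The record identifiers below are hypothetical placeholders, not the publications ranked in this study, and the function name `summarize_overlap` is illustrative. The lists are shortened to three entries each to keep the example readable.

```python
def summarize_overlap(top_lists):
    """Return (number of unique records, number of records shared by all lists)."""
    record_sets = [set(records) for records in top_lists.values()]
    unique_records = set().union(*record_sets)
    shared_records = set.intersection(*record_sets)
    return len(unique_records), len(shared_records)


# Hypothetical top lists per database (placeholders, NOT the study records).
top_lists = {
    "Scopus": ["A", "B", "C"],
    "Web of Science": ["A", "B", "D"],
    "Google Scholar": ["A", "E", "F"],
}

n_unique, n_shared = summarize_overlap(top_lists)
print(n_unique, "unique records;", n_shared, "shared by all three databases")
```

With three top 10 lists, the number of unique records can range from 10 (identical lists) to 30 (no overlap), so a count of 22 indicates limited agreement between the databases.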

A notable finding of this study was the identification of a wide range of influential publications on the role of ChatGPT in healthcare, encompassing editorials, commentaries, perspectives, original research articles, and reviews. This result reflects the dynamic nature of scholarly communication on ChatGPT’s role in healthcare. Importantly, the vast majority of top-ranked publications found in this study were published in open access journals, suggesting that open access policies might influence publication impact, although further evidence is needed to confirm this tentative link [90-92].

Another interesting finding in this study was the significant positive correlation between Google Scholar citation counts and several alternative metrics (Semantic Scholar HICs, PlumX captures, PlumX mentions, and AASs), whereas PlumX social media was not significantly correlated with citation counts. This result emphasizes the potential use of alternative publication metrics to refine the assessment of the scholarly and societal influence of scientific publications [42]. Thus, alternative publication metrics are important to complement the citation count in assessing the outreach and influence of publications involving ChatGPT in healthcare, similar to their use across diverse academic disciplines [93,94].

Finally, the influential publications identified in the current study pointed to three primary application areas of ChatGPT in healthcare. The first area is enhancing healthcare practice through improved workflows and patient engagement [7,22,56,57,62]. The second is augmenting healthcare education with personalized learning and clinical simulations [7,55,69,70]. The third is supporting medical research in areas such as academic writing and data management [7,58,61,62,65]. However, these applications should be considered in light of challenges, including the generation of inaccurate content, ethical concerns, and potential biases [7,54,62]. Additionally, future research should prioritize establishing standard methodologies for the design and reporting of generative AI applications in healthcare to ensure the reliability and credibility of assessing AI performance in various healthcare settings [46,66,95-97]. Future research should also focus on multidisciplinary approaches involving AI developers, computer scientists, healthcare professionals, experts in healthcare education, and ethicists [46,98,99].

Limitations

It is important to clearly and explicitly point out that the use of citation counts or alternative metrics is by no means a direct measure of the quality of publications ranked in this study or a reflection of their direct impact. These metrics can only be viewed as a surrogate marker of the publication trends in this newly emerging research field, namely ChatGPT applications in healthcare.

Several other caveats in this study should be highlighted clearly and taken into consideration before any attempt to interpret the study results. First, this study used Scopus, Web of Science, and Google Scholar as the databases for publication selection. Despite the extensive coverage of these databases, it is important to consider that this approach might overlook publications in less prominent or regional journals due to differing indexing criteria and inherent coverage biases. Google Scholar, characterized by comprehensive and immediate indexing, was incorporated as an additional source for retrieving publications to mitigate this limitation.

Second, the search strategy focused on the titles and abstracts of the records. This approach may have resulted in inadvertent exclusion of publications that addressed ChatGPT utility in healthcare in the main text but not explicitly in the title/abstract.

Third, the geographic allocation of publications based on the affiliation of the corresponding authors could be viewed as a source of selection bias since this approach might not be fully representative of the authorship and collaboration networks, potentially causing bias in the interpretation of publication sources and subsequent geographic analysis.

Fourth, it is important to reiterate that the use of citation counts and alternative metrics, such as Semantic Scholar HICs, PlumX metrics, and AASs, for publication ranking is influenced by a variety of factors such as the perceived impact of the scientific journal, journal visibility, the date of publication, and the time to indexing of the records in various databases. For example, more recently published articles might have lower citation counts because they have had less time to accumulate citations. Thus, the ranking approach in this study might not represent a direct reflection of the scientific quality or impact of the included publications.

Finally, based on the descriptive nature of the current study, the results were confined to descriptive and subjective identification of trends and correlations, without the ability to elucidate the underlying reasons for such observed attributes of the included publications.

Conclusions

The bibliometric analysis conducted in this study highlighted the dynamic nature of ChatGPT-related research in healthcare. The range of publication types and the variability in citation patterns across the three searched databases reflected the complexity of the scholarly discourse addressing ChatGPT applications in healthcare. The current study identified 22 influential publications that studied ChatGPT utility in healthcare in Scopus, Web of Science, and Google Scholar. The findings revealed clear correlations between GS citations and various alternative metrics, such as SS HICs, PlumX captures and mentions, and AASs, demonstrating the discernible impact of the identified publications and the usefulness of alternative metrics as an approach for gauging publication impact. However, affiliation of the corresponding author with institutions in the U.S. or Canada was associated with higher PlumX mentions and AASs, suggesting the possible influence of research origin on news coverage and public visibility.

The study identified three key emerging themes regarding ChatGPT’s utility in healthcare. ChatGPT has the potential to enhance clinical efficiency, personalize education, and support research. However, it is important to address the emerging challenges of ChatGPT in healthcare, including possible content inaccuracy, ethical issues, and biases. The study calls for standardized methodologies and multidisciplinary collaboration to ensure effective and ethical ChatGPT integration in healthcare.

Acknowledgments

None.

Ethics approval

Not applicable.

Competing interests

The authors declare that there are no conflicts of interest.

Funding

This research received no external funding.

Underlying data

The datasets analyzed during the current study are available in the original records included in the study.

How to cite

Sallam M. Bibliometric top ten healthcare-related ChatGPT publications in the first ChatGPT anniversary. Narra J 2024; 4 (2): e917 - http://doi.org/10.52225/narra.v4i2.917.

References

- 1. Gruetzemacher R, Whittlestone J. The transformative potential of artificial intelligence. Futures 2022;135:102884.

- 2. Xu Y, Liu X, Cao X, et al. Artificial intelligence: A powerful paradigm for scientific research. Innovation (Camb) 2021;2:100179.

- 3. Mijwil M, Doshi R, Hiran K, et al. The effect of human-computer interaction on new applications by exploring the use case of ChatGPT in healthcare services. In: Hiran KK, Doshi R, Patel M, editors. Modern technology in healthcare and medical education: Blockchain, IoT, AR, and VR. Hershey, PA: IGI Global; 2024.

- 4. Sallam M, Salim NA, Al-Tammemi AB, et al. ChatGPT output regarding compulsory vaccination and COVID-19 vaccine conspiracy: A descriptive study at the outset of a paradigm shift in online search for information. Cureus 2023;15:e35029.

- 5. Zhang J, Oh YJ, Lange P, et al. Artificial intelligence chatbot behavior change model for designing artificial intelligence chatbots to promote physical activity and a healthy diet: Viewpoint. J Med Internet Res 2020;22:e22845.

- 6. Mijwil M, Hiran K, Doshi R, et al. ChatGPT and the future of academic integrity in the artificial intelligence era: A new frontier. Al-Salam J Eng Technol 2023;2:116–127.

- 7. Sallam M. ChatGPT utility in healthcare education, research, and practice: Systematic review on the promising perspectives and valid concerns. Healthcare (Basel) 2023;11:887.

- 8. Egger J, Sallam M, Luijten G, et al. Medical ChatGPT – A systematic meta-review. medRxiv 2024;2024.2004.2002.24304716.

- 9. Li J, Dada A, Puladi B, et al. ChatGPT in healthcare: A taxonomy and systematic review. Comput Methods Programs Biomed 2024;245:108013.

- 10. Shahsavar Y, Choudhury A. User intentions to use ChatGPT for self-diagnosis and health-related purposes: Cross-sectional survey study. JMIR Hum Factors 2023;10:e47564.

- 11. Hu JM, Liu FC, Chu CM, et al. Health care trainees’ and professionals’ perceptions of ChatGPT in improving medical knowledge training: Rapid survey study. J Med Internet Res 2023;25:e49385.

- 12. Mesko B. The ChatGPT (generative artificial intelligence) revolution has made artificial intelligence approachable for medical professionals. J Med Internet Res 2023;25:e48392.

- 13. Preiksaitis C, Rose C. Opportunities, challenges, and future directions of generative artificial intelligence in medical education: Scoping review. JMIR Med Educ 2023;9:e48785.

- 14. Eysenbach G. The role of ChatGPT, generative language models, and artificial intelligence in medical education: A conversation with ChatGPT and a call for papers. JMIR Med Educ 2023;9:e46885.

- 15. Sallam M, Salim NA, Barakat M, et al. ChatGPT applications in medical, dental, pharmacy, and public health education: A descriptive study highlighting the advantages and limitations. Narra J 2023;3:e103.

- 16. Abdaljaleel M, Barakat M, Alsanafi M, et al. A multinational study on the factors influencing university students’ attitudes and usage of ChatGPT. Sci Rep 2024;14:1983.

- 17. Ibrahim H, Liu F, Asim R, et al. Perception, performance, and detectability of conversational artificial intelligence across 32 university courses. Sci Rep 2023;13:12187.

- 18. Sallam M, Salim NA, Barakat M, et al. Assessing health students’ attitudes and usage of ChatGPT in Jordan: Validation study. JMIR Med Educ 2023;9:e48254.

- 19. Noorbakhsh-Sabet N, Zand R, Zhang Y, et al. Artificial intelligence transforms the future of health care. Am J Med 2019;132:795–801.

- 20. Gödde D, Nöhl S, Wolf C, et al. A SWOT (strengths, weaknesses, opportunities, and threats) analysis of ChatGPT in the medical literature: Concise review. J Med Internet Res 2023;25:e49368.

- 21. OpenAI. ChatGPT. 2023. Available from: https://openai.com/chatgpt. Accessed: 30 November 2023.

- 22. Patel SB, Lam K. ChatGPT: The future of discharge summaries? Lancet Digit Health 2023;5:e107–e108.

- 23. Dave T, Athaluri SA, Singh S. ChatGPT in medicine: An overview of its applications, advantages, limitations, future prospects, and ethical considerations. Front Artif Intell 2023;6:1169595.

- 24. Schopow N, Osterhoff G, Baur D. Applications of the natural language processing tool ChatGPT in clinical practice: Comparative study and augmented systematic review. JMIR Med Inform 2023;11:e48933.

- 25. Barrington NM, Gupta N, Musmar B, et al. A bibliometric analysis of the rise of ChatGPT in medical research. Med Sci (Basel) 2023;11:61.

- 26. Ruksakulpiwat S, Kumar A, Ajibade A. Using ChatGPT in medical research: Current status and future directions. J Multidiscip Healthc 2023;16:1513–1520.

- 27. Li J. Security implications of AI chatbots in health care. J Med Internet Res 2023;25:e47551.

- 28. Kostick-Quenet KM, Gerke S. AI in the hands of imperfect users. npj Digit Med 2022;5:197.

- 29. Ellegaard O, Wallin JA. The bibliometric analysis of scholarly production: How great is the impact? Scientometrics 2015;105:1809–1831.

- 30. Pears M, Konstantinidis S. Bibliometric analysis of chatbots in health-trend shifts and advancements in artificial intelligence for personalized conversational agents. Stud Health Technol Inform 2022;290:494–498.

- 31. Yang K, Hu Y, Qi H. Digital health literacy: Bibliometric analysis. J Med Internet Res 2022;24:e35816.

- 32. Wallin JA. Bibliometric methods: Pitfalls and possibilities. Basic Clin Pharmacol Toxicol 2005;97:261–275.

- 33. Jones N. Artificial-intelligence institute launches free science search engine. Nature 2015;1–2.

- 34. Fricke S. Semantic scholar. J Med Libr Assoc 2018;106:145–147.

- 35. Champieux R. PlumX. J Med Libr Assoc 2015;103:63–64.

- 36. Wong EY, Vital SM. PlumX: A tool to showcase academic profile and distinction. Digit Libr Perspect 2017;33:305–313.

- 37. Lindsay JM. PlumX from Plum analytics: Not just altmetrics. J Electron Resour Med Libr 2016;13:8–17.

- 38. Torres-Salinas D, Gumpenberger C, Gorraiz J. PlumX as a potential tool to assess the macroscopic multidimensional impact of books. Front Res Metr Anal 2017;2:5.

- 39. Torres-Salinas D, Robinson-Garcia N, Gorraiz J. Filling the citation gap: Measuring the multidimensional impact of the academic book at institutional level with PlumX. Scientometrics 2017;113:1371–1384.

- 40. Plum Analytics. About PlumX Metrics. 2023. Available from: https://plumanalytics.com/learn/about-metrics. Accessed: 1 December 2023.

- 41. Altmetric. Guide for describing Altmetric data in publications. 2023. Available from: https://help.altmetric.com/support/solutions/articles/6000242693-guide-for-describing-altmetric-data-in-publications. Accessed: 1 December 2023.

- 42. Elmore SA. The altmetric attention score: What does it mean and why should I care? Toxicol Pathol 2018;46:252–255.

- 43. Rosenkrantz AB, Ayoola A, Singh K, et al. Alternative metrics (“Altmetrics”) for assessing article impact in popular general radiology journals. Acad Radiol 2017;24:891–897.

- 44. Williams K. What counts: Making sense of metrics of research value. Sci Public Policy 2022;49:518–531.

- 45. de Oliveira OJ, da Silva FF, Juliani F, et al. Bibliometric method for mapping the state-of-the-art and identifying research gaps and trends in literature: An essential instrument to support the development of scientific projects. In: Kunosic S, Zerem E, editors. Scientometrics Recent Advances. Rijeka: IntechOpen; 2019.

- 46. Sallam M, Al-Farajat A, Egger J. Envisioning the future of ChatGPT in healthcare: Insights and recommendations from a systematic identification of influential research and a call for papers. Jordan Med J 2024;58:95–108.

- 47. Pranckutė R. Web of Science (WoS) and Scopus: The titans of bibliographic information in today’s academic world. Publications 2021;9:12.

- 48. Vine R. Google Scholar. J Med Libr Assoc 2006;94:97.

- 49. Harzing AW. Publish or perish. 2016. Available from: https://harzing.com/resources/publish-or-perish. Accessed: 27 November 2023.

- 50. Clarivate. Journal citation reports. 2023. Available from: https://clarivate.com/products/scientific-and-academic-research/research-analytics-evaluation-and-management-solutions/journal-citation-reports/. Accessed: 1 December 2023.

- 51. Elsevier. Scopus sources. 2023. Available from: https://www.scopus.com/sources.uri?zone=TopNavBar&origin=searchbasic. Accessed: 1 December 2023.

- 52. Semantic Scholar. Semantic Scholar frequently asked questions: What are highly influential citations? 2023. Available from: https://www.semanticscholar.org/faq#influential-citations. Accessed: 1 December 2023.

- 53. Valenzuela M, Ha V, Etzioni O. Identifying meaningful citations. In: Caragea C, Giles C, Bhamidipati D, editors. Scholarly big data: AI perspectives, challenges, and ideas. Menlo Park, CA: AAAI Press; 2015.

- 54. Nature Medicine Editorial. Will ChatGPT transform healthcare? Nat Med 2023;29:505–506.

- 55. Gilson A, Safranek CW, Huang T, et al. How does ChatGPT perform on the United States medical licensing examination? The implications of large language models for medical education and knowledge assessment. JMIR Med Educ 2023;9:e45312.

- 56. Lee P, Bubeck S, Petro J. Benefits, limits, and risks of GPT-4 as an AI chatbot for medicine. N Engl J Med 2023;388:1233–1239.

- 57. Shen Y, Heacock L, Elias J, et al. ChatGPT and other large language models are double-edged swords. Radiology 2023;307:e230163.

- 58. Liebrenz M, Schleifer R, Buadze A, et al. Generating scholarly content with ChatGPT: Ethical challenges for medical publishing. Lancet Digit Health 2023;5:e105–e106.

- 59. Ayers JW, Poliak A, Dredze M, et al. Comparing physician and artificial intelligence chatbot responses to patient questions posted to a public social media forum. JAMA Intern Med 2023;183:589–596.

- 60. Biswas S. ChatGPT and the future of medical writing. Radiology 2023;307:e223312.

- 61. Cascella M, Montomoli J, Bellini V, et al. Evaluating the feasibility of ChatGPT in healthcare: An analysis of multiple clinical and research scenarios. J Med Syst 2023;47:33.

- 62. Ray PP. ChatGPT: A comprehensive review on background, applications, key challenges, bias, ethics, limitations and future scope. Internet of Things and Cyber-Physical Systems 2023;3:121–154.

- 63. Alkaissi H, McFarlane SI. Artificial hallucinations in ChatGPT: Implications in scientific writing. Cureus 2023;15:e35179.

- 64. Korngiebel DM, Mooney SD. Considering the possibilities and pitfalls of generative pre-trained transformer 3 (GPT-3) in healthcare delivery. npj Digit Med 2021;4:93.

- 65. Vaishya R, Misra A, Vaish A. ChatGPT: Is this version good for healthcare and research? Diabetes Metab Syndr 2023;17:102744.

- 66. Hopkins AM, Logan JM, Kichenadasse G, et al. Artificial intelligence chatbots will revolutionize how cancer patients access information: ChatGPT represents a paradigm-shift. JNCI Cancer Spectr 2023;7:pkad010.

- 67. Sinha RK, Deb Roy A, Kumar N, et al. Applicability of ChatGPT in assisting to solve higher order problems in pathology. Cureus 2023;15:e35237.

- 68. Temsah O, Khan SA, Chaiah Y, et al. Overview of early ChatGPT’s presence in medical literature: Insights from a hybrid literature review by ChatGPT and human experts. Cureus 2023;15:e37281.

- 69. Kung TH, Cheatham M, Medenilla A, et al. Performance of ChatGPT on USMLE: Potential for AI-assisted medical education using large language models. PLoS Digit Health 2023;2:e0000198.

- 70. Khan RA, Jawaid M, Khan AR, et al. ChatGPT - reshaping medical education and clinical management. Pak J Med Sci 2023;39:605–607.

- 71. De Angelis L, Baglivo F, Arzilli G, et al. ChatGPT and the rise of large language models: The new AI-driven infodemic threat in public health. Front Public Health 2023;11:1166120.

- 72. King DA. The scientific impact of nations. Nature 2004;430:311–316.

- 73. Cai L, Tian J, Liu J, et al. Scholarly impact assessment: A survey of citation weighting solutions. Scientometrics 2019;118:453–478.

- 74. Larsen PO, von Ins M. The rate of growth in scientific publication and the decline in coverage provided by science citation index. Scientometrics 2010;84:575–603.

- 75. Wiyono N, Yudhani RD, Wasita B, et al. Exploring the therapeutic potential of functional foods for diabetes: A bibliometric analysis and scientific mapping. Narra J 2024;4:e382.

- 76. Sofyantoro F, Kusuma HI, Vento S, et al. Global research profile on monkeypox-related literature (1962–2022): A bibliometric analysis. Narra J 2022;2:e96.

- 77. Ahmad T, Dhama K, Tiwari R, et al. Bibliometric analysis of the top 100 most cited studies in apolipoprotein e (Apoe) research. Narra J 2021;1:e2.

- 78. Reuters, Hu K. ChatGPT sets record for fastest-growing user base. 2023. Available from: https://www.reuters.com/technology/chatgpt-sets-record-fastest-growing-user-base-analyst-note-2023-02-01/. Accessed: 14 June 2024.

- 79. Xu R, Wang Z. Generative artificial intelligence in healthcare from the perspective of digital media: Applications, opportunities and challenges. Heliyon 2024;10:e32364.

- 80. Abd-Alrazaq A, AlSaad R, Alhuwail D, et al. Large language models in medical education: Opportunities, challenges, and future directions. JMIR Med Educ 2023;9:e48291.

- 81. Karabacak M, Ozkara BB, Margetis K, et al. The advent of generative language models in medical education. JMIR Med Educ 2023;9:e48163.

- 82. Spallek S, Birrell L, Kershaw S, et al. Can we use ChatGPT for mental health and substance use education? Examining its quality and potential harms. JMIR Med Educ 2023;9:e51243.

- 83. Halevi G, Moed H, Bar-Ilan J. Suitability of Google Scholar as a source of scientific information and as a source of data for scientific evaluation - review of the literature. J Informetr 2017;11:823–834.

- 84. Brembs B, Button K, Munafò M. Deep impact: Unintended consequences of journal rank. Front Hum Neurosci 2013;7:291.

- 85. Ari MD, Iskander J, Araujo J, et al. A science impact framework to measure impact beyond journal metrics. PLoS One 2020;15:e0244407.

- 86. Wang X, Liu D, Ding K, et al. Science funding and research output: A study on 10 countries. Scientometrics 2012;91:591–599.

- 87. Aksnes DW, Langfeldt L, Wouters P. Citations, citation indicators, and research quality: An overview of basic concepts and theories. SAGE Open 2019;9:2158244019829575.

- 88. Kumpulainen M, Seppänen M. Combining Web of Science and Scopus datasets in citation-based literature study. Scientometrics 2022;127:5613–5631.

- 89. Bornmann L, Marx W. Methods for the generation of normalized citation impact scores in bibliometrics: Which method best reflects the judgements of experts? J Informetr 2015;9:408–418.

- 90. Craig ID, Plume AM, McVeigh ME, et al. Do open access articles have greater citation impact?: A critical review of the literature. J Informetr 2007;1:239–248.

- 91. Dorta-González P, Dorta-González MI. Citation differences across research funding and access modalities. J Acad Librariansh 2023;49:102734.

- 92. Langham-Putrow A, Bakker C, Riegelman A. Is the open access citation advantage real? A systematic review of the citation of open access and subscription-based articles. PLoS One 2021;16:e0253129.

- 93. Tonia T, Van Oyen H, Berger A, et al. If I tweet will you cite? The effect of social media exposure of articles on downloads and citations. Int J Public Health 2016;61:513–520.

- 94. Jeong JW, Kim MJ, Oh HK, et al. The impact of social media on citation rates in coloproctology. Colorectal Dis 2019;21:1175–1182.

- 95. Meskó B. Prompt engineering as an important emerging skill for medical professionals: Tutorial. J Med Internet Res 2023;25:e50638.

- 96. Sallam M, Barakat M, Sallam M. Pilot testing of a tool to standardize the assessment of the quality of health information generated by artificial intelligence-based models. Cureus 2023;15:e49373.

- 97. Sallam M, Barakat M, Sallam M. A preliminary checklist (METRICS) to standardize the design and reporting of studies on generative artificial intelligence–based models in health care education and practice: Development study involving a literature review. Interact J Med Res 2024;13:e54704.

- 98. Veras M, Labbé DR, Furlano J, et al. A framework for equitable virtual rehabilitation in the metaverse era: Challenges and opportunities. Front Rehabil Sci 2023;4:1241020.

- 99. Pratama R, Suhanda R, Aini Z, et al. Application of artificial intelligence technology in monitoring students’ health: Preliminary results of Syiah Kuala Integrated Medical Monitoring (SKIMM). Narra J 2024;4:e644.