This systematic review assesses the quality of evidence from scientific literature and registration databases for machine learning algorithms implemented in primary care to predict patient outcomes.

Key Points

Question

Which machine learning (ML) predictive algorithms have been implemented in primary care, and what evidence is publicly available for supporting their quality?

Findings

In this systematic review of 43 predictive ML algorithms in primary care from scientific literature and the registration databases of the US Food and Drug Administration and Conformité Européene, there was limited publicly available evidence across all artificial intelligence life cycle phases from development to implementation. While the development phase (phase 2) was most frequently reported, most predictive ML algorithms did not meet half of the predefined requirements of the Dutch artificial intelligence predictive algorithm guideline.

Meaning

Findings of this study underscore the urgent need to facilitate transparent and consistent reporting of the quality criteria in literature, which could build trust among end users and facilitate large-scale implementation.

Abstract

Importance

The aging and multimorbid population and health personnel shortages pose a substantial burden on primary health care. While predictive machine learning (ML) algorithms have the potential to address these challenges, concerns include a lack of transparency and insufficient reporting of model validation and of the effectiveness of implementation in the clinical workflow.

Objectives

To systematically identify predictive ML algorithms implemented in primary care from peer-reviewed literature and US Food and Drug Administration (FDA) and Conformité Européene (CE) registration databases and to ascertain the public availability of evidence, including peer-reviewed literature, gray literature, and technical reports across the artificial intelligence (AI) life cycle.

Evidence Review

PubMed, Embase, Web of Science, Cochrane Library, Emcare, Academic Search Premier, IEEE Xplore, ACM Digital Library, MathSciNet, AAAI.org (Association for the Advancement of Artificial Intelligence), arXiv, Epistemonikos, PsycINFO, and Google Scholar were searched for studies published between January 2000 and July 2023, with search terms that were related to AI, primary care, and implementation. The search extended to CE-marked or FDA-approved predictive ML algorithms obtained from relevant registration databases. Three reviewers gathered subsequent evidence involving strategies such as product searches, exploration of references, manufacturer website visits, and direct inquiries to authors and product owners. The extent to which the evidence for each predictive ML algorithm aligned with the Dutch AI predictive algorithm (AIPA) guideline requirements was assessed per AI life cycle phase, producing evidence availability scores.

Findings

The systematic search identified 43 predictive ML algorithms, of which 25 were commercially available and CE-marked or FDA-approved. The predictive ML algorithms spanned multiple clinical domains, but most (27 [63%]) focused on cardiovascular diseases and diabetes. Most (35 [81%]) were published within the past 5 years. The availability of evidence varied across different phases of the predictive ML algorithm life cycle, with evidence being reported the least for phase 1 (preparation) and phase 5 (impact assessment) (19% and 30%, respectively). Twelve (28%) predictive ML algorithms achieved approximately half of their maximum individual evidence availability score. Overall, predictive ML algorithms from peer-reviewed literature showed higher evidence availability compared with those from FDA-approved or CE-marked databases (45% vs 29%).

Conclusions and Relevance

The findings indicate an urgent need to improve the availability of evidence regarding the predictive ML algorithms’ quality criteria. Adopting the Dutch AIPA guideline could facilitate transparent and consistent reporting of the quality criteria, which could foster trust among end users and facilitate large-scale implementation.

Introduction

In most high-income countries, primary health care is affected by the increasing burden of illness experienced by aging and multimorbid populations along with personnel shortages.1 Primary care generates large amounts of routinely collected coded and free-text clinical data, which flexible and powerful machine learning (ML) techniques can use to facilitate early diagnosis, enhance treatment, and prevent adverse effects and outcomes.2,3,4,5 Therefore, primary care is a promising domain for implementing predictive ML algorithms in daily clinical practice.6,7,8

Nevertheless, scientific literature describes the implementation of artificial intelligence (AI), especially predictive ML algorithms, in health care as limited and lagging far behind other sectors in adopting data-driven technology. Predictive ML algorithms in health care are often criticized for their lack of comprehensibility and transparency for health care professionals and patients, as well as their lack of explainability and interpretability.8,9,10,11 Additionally, the reporting of peer-reviewed evidence is limited, and the utility of predictive ML algorithms in clinical workflows is often unclear.8,12,13,14 In response to these challenges, the Dutch Ministry of Health, Welfare, and Sports commissioned the development and validation of a Dutch guideline for high-quality diagnostic and prognostic applications of AI in health care. Published in 2022, the Dutch Artificial Intelligence Predictive Algorithm (AIPA) guideline is applicable to predictive ML algorithms.15,16 The guideline encourages the collection of data and evidence for the requirements defined in each of the 6 phases of the AI life cycle, providing a comprehensive overview of the aspects covered by existing research guidelines across that life cycle.

In this systematic review, we aimed to (1) systematically identify predictive ML algorithms implemented in primary care from peer-reviewed literature and US Food and Drug Administration (FDA) and Conformité Européene (CE) registration databases and (2) ascertain the public availability of evidence, including peer-reviewed literature, gray literature, and technical reports, across the AI life cycle. For this purpose, the Dutch AIPA guideline was adapted into a practical evaluation tool to assess the quality criteria of each predictive ML algorithm.

Methods

We conducted the systematic review in 2 steps. First, we systematically identified predictive ML algorithms by searching peer-reviewed literature and FDA and CE registration databases. Second, we ascertained the availability of evidence for the identified algorithms across the AI life cycle by systematically searching literature databases and technical reports, examining references in relevant studies, conducting product searches, visiting manufacturer websites, and contacting authors and product owners. We followed the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) reporting guideline.17

Eligibility Criteria

Peer-reviewed studies were included if they met all of the following eligibility criteria: (1) published between January 2000 and July 2023; (2) written in English; (3) published as original results; (4) concerned a predictive ML algorithm intended for primary care; and (5) focused on the implementation of the predictive ML algorithm in a research setting or clinical practice with, for example, pilot, feasibility, implementation, or clinical validation study designs. This review examined ML techniques (eg, [deep] neural networks, support vector machines, and random forests), including those developed for tasks such as computer vision and natural language processing, that generated predictions of health outcomes in individuals. We classified a study as applying ML if it used a nonregression statistical technique to develop or validate a prediction model, similar to Andaur Navarro et al,9 excluding traditional statistical approaches, such as expert systems and decision trees based on expert knowledge. Studies that addressed predictive ML algorithm development or external validation without implementation in primary care were excluded. Given that over 60% of CE-marked AI tools are not found in electronic research databases,12 this review also included CE-marked or FDA-approved predictive ML algorithms listed in FDA and CE registration databases.18,19,20

Data Sources and Search Strategy

Searches were conducted using the following electronic databases: PubMed, Embase, Web of Science, Cochrane Library, Emcare, Academic Search Premier, IEEE Xplore, ACM Digital Library, MathSciNet, AAAI.org (Association for the Advancement of Artificial Intelligence), arXiv, Epistemonikos, PsycINFO, and Google Scholar. All databases were searched in July 2023 for entries from January 2000 to July 2023. The search terms, derived from the National Library of Medicine MeSH (Medical Subject Headings) Tree Structures and the review team’s expertise, combined concepts related to AI, primary care,21 and implementation22 (defined in Box 1). The full search strategy is provided in eAppendix 1 in Supplement 1.

Box 1. Definitions.

Predictive Algorithm

“An algorithm leading to a prediction of a health outcome in individuals. This includes, but is not limited to, predicting the probability or classification of having (diagnostic or screening predictive algorithm) or developing over time (prognostic or prevention predictive algorithm) desirable or undesirable health outcomes.”16

Implementation

Primary Care

“Universal access to essential healthcare in communities, facilitated by practical, scientifically sound, socially acceptable methods and technology, sustainably affordable at all developmental stages, fostering self-reliance and self-determination.”21

Selection Process

Three of us (M.M.R., M.M.vB., and S.K.) independently conducted the selection process and resolved disagreements through discussion with a senior reviewer (H.J.A.vO.). The full selection process is detailed in eAppendix 2 in Supplement 1.

Data Extraction

Five strategies were used to gather publicly available evidence for all identified predictive ML algorithms: (1) searches of PubMed and Google Scholar using product, company, and author names; (2) searches of technical reports from the FDA and CE registration online databases; (3) exploration of references within selected studies; (4) visits to predictive ML algorithm manufacturer websites; and (5) solicitation of information from authors and product owners via email or telephone, with a request to complete an online questionnaire about the reported evidence (eAppendix 3 in Supplement 1). Accepted data sources included original, peer-reviewed articles in English as well as posters, conference papers, and data management plans (DMPs).

The availability of evidence was categorized according to the life cycle phases (Box 2) and the requirements per phase set forth by the Dutch AIPA guideline.15,16 These requirements (Table 1) are defined as aspects necessary to address during the AI predictive algorithm life cycle. Therefore, developers, researchers, or owners of predictive ML algorithms should ideally provide data and evidence regarding these aspects.

Box 2. Summary of the 6 Life Cycle Phases

Phase 1: Preparation and Verification of the Data

A DMP should be used to prepare for the collection and management of the data needed for phases 2 to 5. This plan captures the agreements and established procedures for collecting, processing, storing, and managing data. During implementation, the DMP should be updated continuously to reflect any changes.

Phase 2: Development of the AI Model

The AI model results from the analysis of the training data; its development entails building the algorithm and the set of algorithm-specific data structures.

Phase 3: Validation of the AI Model

The AI model undergoes external validation, which involves evaluating its performance using data not used in phase 2. The validation process assesses the statistical or predictive value of the model and examines issues related to fairness and algorithmic bias.

Phase 4: Development of the Necessary Software Tool

The focus shifts to developing the necessary software tool around the AI model. This phase encompasses designing, developing, conducting user testing, and defining the system requirements for the software.

Phase 5: Impact Assessment of the AI Model in Combination With the Software

This phase determines the impact or added value of integrating the AI model and software within the intended medical practice or context. It evaluates how these advancements affect medical actions and the health outcomes of the target group, such as patients, clients, or citizens. Additionally, conducting a health technology assessment is part of this phase.

Phase 6: Implementation and Use of the AI Model With Software in Daily Practice

The AI model and software are implemented, monitored, and incorporated into daily practice. Efforts are made to ensure smooth integration, continuous monitoring, and appropriate education and training related to their use.

Table 1. Overview of Requirements Within the Dutch Artificial Intelligence Predictive Algorithm Guideline for High-Quality Diagnostic and Prognostic Applications of Artificial Intelligence in Health Care.

| Artificial intelligence life cycle phase | Requirement | Maximum availability score per phase |
|---|---|---|
| Phase 1: Preparation | Data management plan | 2 |
| Phase 2: Development | Definition of target use | 14 |
| | Analysis and modeling steps | |
| | Internal validation | |
| | Robustness | |
| | Size of the dataset for AI model development | |
| | Reproducibility and replicability | |
| Phase 3: Validation | Evaluation of statistical characteristics of the artificial intelligence model | 12 |
| | Fairness and algorithmic bias | |
| | Determining the outcome variable | |
| | Reproducibility and replicability | |
| | Size of the dataset for external validation | |
| Phase 4: Software application | Explainability, transparency, design, and information | 4 |
| | Required standards and regulations | |
| Phase 5: Impact assessment | Impact assessment | 10 |
| | Health technology assessment | |
| Phase 6: Implementation | Implementation plan | 6 |
| | Monitoring | |
| | Education | |
| Total availability score | Not applicable | 48 |

Statistical Analysis

The extent to which the evidence of each predictive ML algorithm aligned with the requirements of the Dutch AIPA guideline was assessed per life cycle phase (Table 1; Box 2; eTable 1 in Supplement 1), using availability scores (2 for complete, 1 for partial, and 0 for none). Two analyses were conducted. First, the availability of evidence per requirement was expressed as a percentage, grouped by life cycle phase. The availability of evidence per life cycle phase was also reported as a percentage, calculated by dividing the sum of scores for that life cycle phase by the maximum possible score. Second, the evidence availability per predictive ML algorithm was calculated as the sum of scores for all requirements divided by the maximum attainable score for the applicable requirements, excluding requirements that were not applicable because of the life cycle phase of the algorithm (eTable 1 in Supplement 1; these requirements are shaded in orange); this denominator was at most 48 points. These availability scores provide an overview of the implemented predictive ML algorithms and the evidence available per life cycle phase.
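The scoring arithmetic described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' analysis (which was performed in Microsoft Excel): the `ScoredItem` type and all function names are hypothetical, each scored item is assumed to contribute at most 2 points, and non-applicable items are assumed to be dropped from both the numerator and the denominator.

```python
from __future__ import annotations

from dataclasses import dataclass

# Availability levels used in the review: 2 = complete, 1 = partial, 0 = none.
VALID_SCORES = {0, 1, 2}
MAX_PER_ITEM = 2


@dataclass
class ScoredItem:
    """One scored item for one algorithm (hypothetical structure)."""
    phase: int               # AI life cycle phase, 1-6
    score: int               # 0, 1, or 2
    applicable: bool = True  # False if excluded because of the algorithm's life cycle phase


def _applicable(items: list[ScoredItem]) -> list[ScoredItem]:
    # Validate scores, then keep only applicable items (assumption: excluded
    # items leave both the numerator and the denominator).
    for item in items:
        if item.score not in VALID_SCORES:
            raise ValueError(f"invalid availability score: {item.score}")
    return [item for item in items if item.applicable]


def phase_availability(items: list[ScoredItem], phase: int) -> float:
    """Percentage of the maximum possible score reached in one phase,
    pooled over every item passed in (eg, items from all algorithms)."""
    scoped = [i for i in _applicable(items) if i.phase == phase]
    if not scoped:
        raise ValueError(f"no applicable items for phase {phase}")
    return 100 * sum(i.score for i in scoped) / (MAX_PER_ITEM * len(scoped))


def algorithm_availability(items: list[ScoredItem]) -> tuple[int, int, float]:
    """Points obtained, points attainable, and percentage for one algorithm."""
    scoped = _applicable(items)
    if not scoped:
        raise ValueError("no applicable items")
    obtained = sum(i.score for i in scoped)
    attainable = MAX_PER_ITEM * len(scoped)
    return obtained, attainable, 100 * obtained / attainable


# Hypothetical example: one algorithm that has not reached phase 6.
example = [
    ScoredItem(phase=1, score=0),
    ScoredItem(phase=2, score=2),
    ScoredItem(phase=2, score=1),
    ScoredItem(phase=6, score=0, applicable=False),
]
print(phase_availability(example, 2))   # 75.0
print(algorithm_availability(example))  # (3, 6, 50.0)
```

Pooling the items of all algorithms before calling `phase_availability` mirrors the per-phase analysis, whereas calling `algorithm_availability` on one algorithm's items mirrors the per-algorithm analysis, whose denominator shrinks as requirements are excluded.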

The analysis was conducted independently by 3 of us (M.M.R., M.M.vB., and S.K.), who resolved discrepancies through discussion with another author (H.J.A.vO.). Data were analyzed using Microsoft Excel for Windows 11 (Microsoft Corp).

Results

Predictive ML Algorithms Implemented in Primary Care

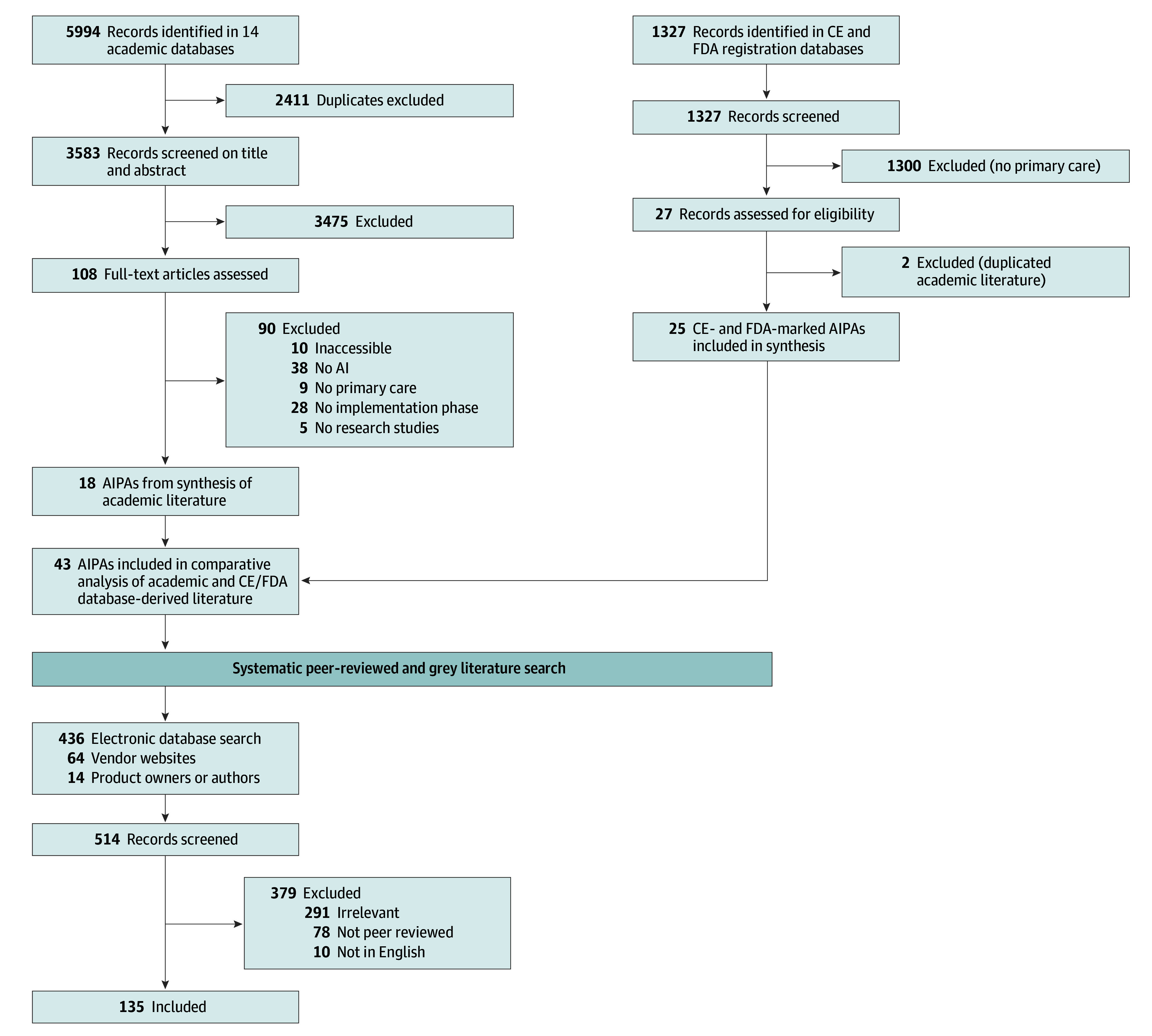

Of the 5994 studies identified initially, 20 (comprising 19 predictive ML algorithms) met the inclusion criteria and were included in this systematic review. One algorithm was excluded after personal communication confirmed that the tool used was a rule-based expert system.23 Additionally, 25 commercially available CE-marked or FDA-approved predictive ML algorithms in primary care were included.18 Only 2 predictive ML algorithms were found in both the FDA or CE registration databases and the literature databases searched.24,25,26,27 Forty-three AIPAs were included in the analysis (Figure 1).24,25,26,27,28,29,30,31,32,33,34,35,36,37,38,39,40,41,42,43,44,45,46,47,48,49,50,51,52,53,54,55,56,57,58,59,60,61,62,63,64,65

Figure 1. PRISMA Flowchart.

AI indicates artificial intelligence; AIPA, artificial intelligence predictive algorithm; CE, Conformité Européene; FDA, US Food and Drug Administration.

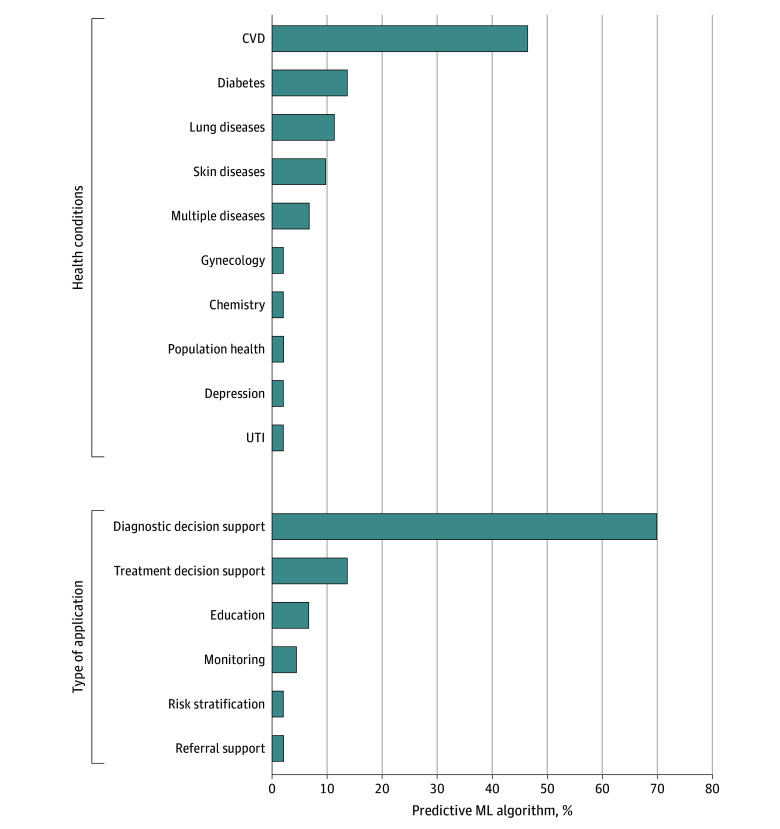

Table 2 provides an overview of the key characteristics of the 43 predictive ML algorithms included in this review.24,25,26,27,28,29,30,31,32,33,34,35,36,37,38,39,40,41,42,43,44,45,46,47,48,49,50,51,52,53,54,55,56,57,58,59,60,61,62,63,64,65,66,67 Most studies (35 [81%]) were published in the past 5 years (2018-2023).24,26,27,28,29,30,31,32,33,34,35,36,40,41,42,43,44,45,46,50,51,52,53,54,55,57,58,59,60,61,62,64,65,66,68 Most predictive ML algorithms (36 [84%]) fell under the category of clinical decision support systems for either diagnosis or treatment indication (Figure 2).24,25,26,27,28,29,31,32,33,34,35,37,38,39,40,41,42,44,46,47,49,51,52,53,55,56,57,58,59,60,61,63,64,65,66,67 Twenty-seven predictive ML algorithms (63%) focused on cardiovascular diseases and diabetes (Figure 2).24,25,26,27,28,32,33,34,37,38,39,40,41,42,43,44,45,46,47,50,57,60,62,64,66,68 Furthermore, 9 AIPAs (21%) mentioned the use of AI in their product descriptions but did not offer any specific details about the AI technique applied to develop the model.37,38,39,42,45,47,48,50,54 All 9 of these predictive ML algorithms were FDA approved or CE marked. Twelve of 43 (28%) predictive ML algorithms were implemented (life cycle phase 6) in a research setting but not in practice.26,31,32,33,34,58,61,62,63,64,66,67

Table 2. Key Characteristics of the 43 Predictive Machine Learning Algorithms in Primary Care Included From Scientific Literature and US Food and Drug Administration and Conformité Européene Registration Databases.

| Name of predictive ML algorithm | Source | Country of origin | Types of applications | Health condition | AI model | Study design |
|---|---|---|---|---|---|---|
| From scientific literature | | | | | | |
| Aifred | Benrimoh et al,58 2021 | Canada | Treatment decision | Major depression | DL | Usability or acceptability testing |
| VisualDx | Breitbart et al,61 2020 | Germany | Diagnostic decision | Skin lesions | ML | Randomized feasibility study |
| DSS framework | Frontoni et al,66 2020 | Italy | Diagnostic decision support | Type 2 diabetes | ML | Development of a novel framework |
| ECG-enabled stethoscope | Bachtiger et al,26 2022 | UK | Diagnostic decision support | LVEF | DL | Observational, prospective, multicenter study |
| AI systems to identify diabetic retinopathy | Kanagasingam et al,62 2018 | Australia | Referral support | Diabetic retinopathy | ML | Case-control |
| Automated diabetic retinopathy screening system | Liu et al,24 2021 | Canada | Diagnostic decision support | Diabetic retinopathy | DL | Prospective cohort study |
| Medication reconciliation, iPad-based software tools | Long et al,63 2016 | US | Education | Adverse drug events | NLP | Development and observational study |
| AI for risk prediction glycemic control | Romero-Brufau et al,64 2020 | US | Treatment decision support | Diabetes | ML | Usability testing or surveys |
| A-GPS | Seol et al,65 2021 | US | Treatment decision support | Asthma | NLP and ML | A single-center pragmatic RCT with stratified randomization |
| PULsE-AI | Hill et al,28 2020 | UK | Diagnostic decision support | AF | ML | Prospective RCT |
| ML-based decision support for UTIs | Herter et al,29 2022 | The Netherlands | Treatment decision support | UTIs | ML | A routine practice-based prospective observational study design |
| Risk stratification AI tool | Bhatt et al,30 2021 | US | Risk stratification | Population health | ML | Preimplementation and postdeployment evaluation and monitoring |
| CUHAS-ROBUST | Herman et al,31 2021 | Indonesia | Diagnostic decision support | TB screening | DL | A qualitative approach with content analysis |
| Risk-stratification AI tool AF | Wang et al,32 2019 | US | Treatment decision support | AF | ML | Stepped wedge RCT |
| Personalized BP and lifestyle identification | Chiang et al,33 2021 | US | Treatment decision support | BP | ML | Prospective cohort study |
| EAGLE | Yao et al,34 2021 | US | Diagnostic decision support | Low ejection fraction | ML | Pragmatic RCT |
| MEDO-Hip | Jaremko et al,35 2023 | Canada | Diagnostic decision support | Hip dysplasia | ML | Implementation study |
| Firstderm | Escalé-Besa et al,67 2023 | Spain | Diagnostic decision support | Skin lesions | ML | Prospective, multicenter, observational feasibility study |
| From CE and FDA registration databases | | | | | | |
| Tyto Scope | TytoCare,37 2016 | US | Diagnostic decision support | Cardiovascular | Unknown | NA |
| Peerbridge Health | Peerbridge Health,38 2017 | US | Diagnostic decision support | Cardiovascular | Unknown | NA |
| RootiRx | Rooti Labs Limited,39 2016 | Taiwan | Diagnostic decision support | Cardiovascular | Unknown | NA |
| LumineticsCore (formerly IDx-DR) | Digital Diagnostics,40 2018 | US | Diagnostic decision support | Diabetic retinopathy | DL | NA |
| FibriCheck | FibriCheck,41 2018 | Belgium | Diagnostic decision support | Cardiovascular | DL | NA |
| Cardio-HART | Cardio-HART,42 2018 | US | Diagnostic decision support | Cardiovascular | Unknown | NA |
| eMurmur | eMurmur,43 2019 | Austria | Diagnostic decision support | Cardiovascular | ML | NA |
| Smartho-D2 electronic stethoscope | Minttihealth,44 2019 | China | Diagnostic decision support | Cardiovascular | DL | NA |
| Biosticker system | BioIntelliSense,45 2019 | US | Monitoring | Cardiovascular | Unknown | NA |
| EKO analysis software | EKO,27 2020 | US | Diagnostic decision support | Cardiovascular | DL | NA |
| EchoNous (formerly KOSMOS) | EchoNous Inc,46 2020 | US | Diagnostic decision support | Cardiovascular | DL | NA |
| EyeArt | EyeNuk Inc,25 2015 | US | Diagnostic decision support | Diabetic retinopathy | DL | NA |
| Coala heart monitor | Coala,47 2016 | Sweden | Diagnostic decision support | Cardiovascular | Unknown | NA |
| MyAsthma | my mhealth,48 2017 | England | Education | Respiratory | Unknown | NA |
| eMed (formerly Babylon) | eMed,49 2017 | England | Diagnostic decision support | General hospital | ML | NA |
| MedoPad | MedoPad,50 2018 | England | Monitoring | Cardiovascular | Unknown | NA |
| DERM | Skin Analytics,51 2018 | England | Diagnostic decision support | Dermatology | DL | NA |
| ResAppDx-EU | ResApp Health,52 2019 | Australia | Diagnostic decision support | Respiratory | ML | NA |
| AVE (formerly AVEC) | MobileODT,53 2019 | Israel | Diagnostic decision support | Obstetrics/Gynecology | ML | NA |
| Kata | Kata,54 2019 | Germany | Education | Respiratory | Unknown | NA |
| SkinVision | SkinVision,55 2020 | The Netherlands | Diagnostic decision support | Dermatology | ML | NA |
| DeepRhythmAI | Medicalgorithmics,56 2022 | Poland | Diagnostic decision support | Cardiovascular | DL | NA |
| IRNF app | Apple,57 2021 | US | Diagnostic decision support | Cardiovascular | ML | NA |
| Minuteful Kidney | Healthy.io,59 2022 | Israel | Diagnostic decision support | Chemistry | ML | NA |
| Zeus | iRhythm Technologies,60 2022 | US | Diagnostic decision support | Cardiovascular | DL | NA |

Abbreviations: AF, atrial fibrillation; AI, artificial intelligence; BP, blood pressure; CE, Conformité Européene; DL, deep learning; DSS, decision support system; ECG, electrocardiogram; FDA, US Food and Drug Administration; LVEF, left ventricular ejection fraction; ML, machine learning; NA, not applicable; NLP, natural language processing; RCT, randomized clinical trial; TB, tuberculosis; UTI, urinary tract infection.

Figure 2. Overview of the Type of Application and Health Conditions Targeted by the Included Predictive Machine Learning (ML) Algorithms.

CVD indicates cardiovascular disease; UTI, urinary tract infection.

Availability of Evidence of the Predictive ML Algorithms

The search for public availability of evidence on the 43 predictive ML algorithms resulted in 1541 hits, of which 33 were duplicates. Eighty-two publications met the inclusion criteria. Additionally, 80 publications were provided by the product owners or authors or obtained from vendors’ websites. A total of 162 publications were included in the study, which included peer-reviewed articles, technical reports, posters, conference papers, and DMPs (eFigure in Supplement 1). Nine authors and product owners responded and completed the online questionnaire about the reported evidence of the predictive ML algorithm. An overview of the publication characteristics per predictive ML algorithm is provided in eTable 2 in Supplement 1. An overview of the availability of evidence per predictive ML algorithm is provided in eTable 3 in Supplement 1.

Availability of Evidence per Requirement

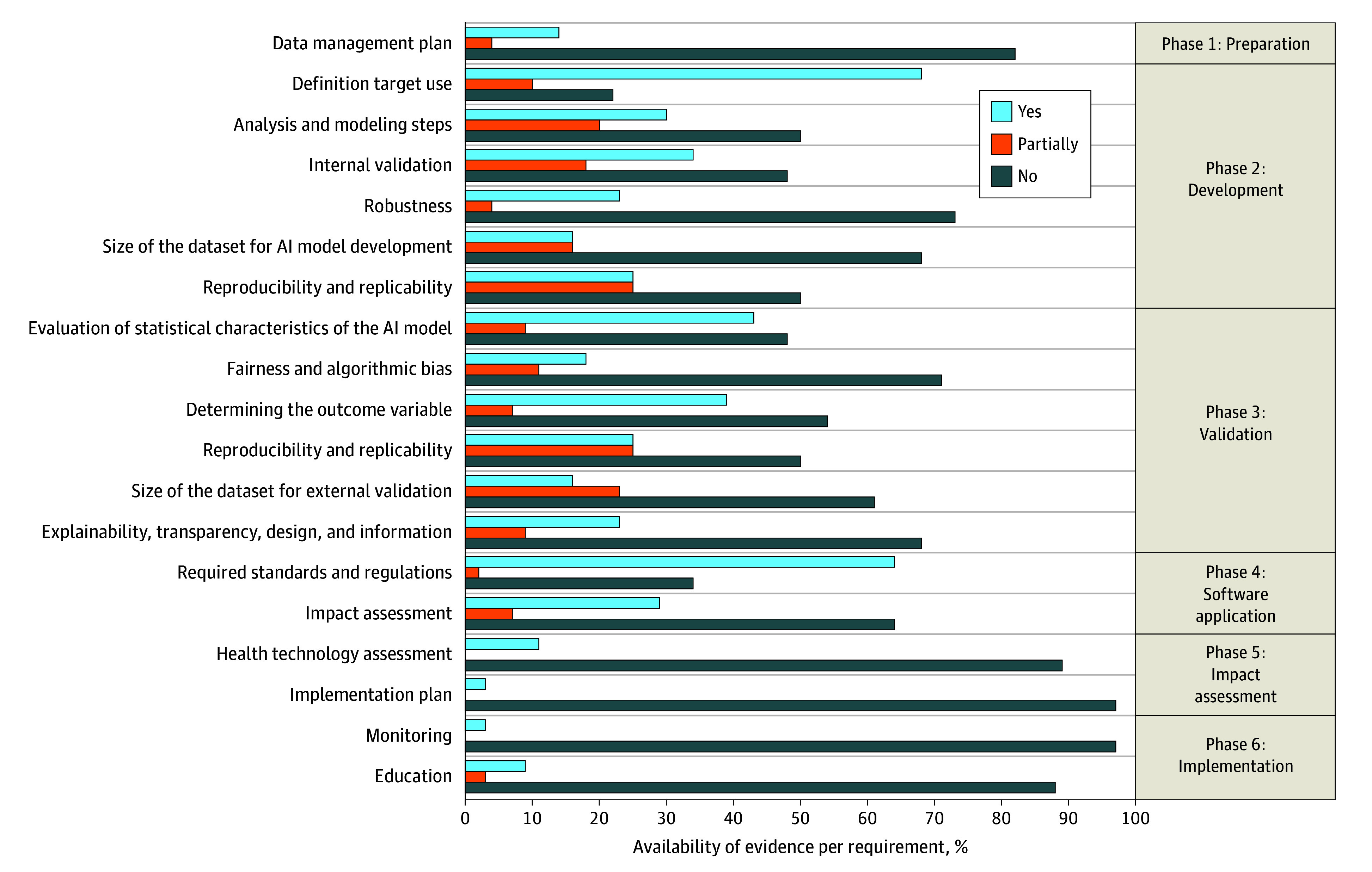

An overview of the availability of evidence per requirement and according to the life cycle phase is shown in Figure 3. The 3 most commonly available types of evidence per requirement were a clear definition of the target use of the AI model, adherence to software standards and regulations, and evaluation of the AI model’s statistical characteristics (78%, 66%, and 47% availability, respectively). Conversely, the least available evidence pertained to the implementation plan, monitoring, and health technology assessment (2%, 2%, and 14% availability, respectively), largely owing to a lack of information on FDA-approved and CE-marked predictive ML algorithms.

Figure 3. Percentage of Peer-Reviewed Requirements per Phase.

Light blue indicates the public availability of evidence. Orange indicates that the available evidence partially covered the requirement. Dark blue indicates no evidence was publicly available. AI indicates artificial intelligence.

The life cycle phase with the most comprehensive evidence was phase 2 (development), with 46% evidence availability for the relevant requirements, followed by phase 3 (validation), with 39%. The life cycle phases with the most limited availability of evidence were phase 1 (preparation), at 19%, and phase 5 (impact assessment), at 30%. Commercially available CE-marked and FDA-approved AIPAs offered less evidence across all life cycle phases than AIPAs identified in the literature database search (29% vs 48% of the maximum attainable overall score).

Availability of Evidence per Predictive ML Algorithm

For 5 predictive ML algorithms (12%), evidence was available only for 2 requirements: definition of the target use and required standards and regulations (availability per predictive ML algorithm score: 4 of 48 possible points).37,38,47,48,69 Twelve (28%) predictive ML algorithms obtained approximately half of their individual maximum attainable evidence availability score.24,26,27,28,30,31,33,34,36,41,43,65 Twelve (28%) did not reach life cycle phase 6 (implementation).26,31,32,33,34,36,58,61,62,63,64,66 The predictive ML algorithms that reported the highest availability of evidence per predictive ML algorithm score were a risk-prediction algorithm for identifying undiagnosed atrial fibrillation28 (36 of 48 possible points) and an AI-powered clinical decision support tool that enabled early diagnosis of low ejection fraction34 (37 of 42 possible points). Both predictive ML algorithms were neither CE-marked nor FDA-approved at the time of publication. Overall, predictive ML algorithms identified through the peer-reviewed literature database search yielded more publicly available evidence24,26,28,29,30,31,32,33,34,35,36,58,61,62,63,64,65,66 compared with the predictive ML algorithms identified solely from FDA-approved or CE-marked databases25,27,37,38,39,40,41,42,43,44,45,46,47,48,49,50,51,52,53,54,55,57,59,60,68 (45% vs 29%) (eTable 2 in Supplement 1).
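As a worked illustration of the per-algorithm normalization described in Statistical Analysis, dividing each of the two highest point totals by its own maximum attainable score (computed here from the reported totals, not reported by the study) gives:

$$\frac{36}{48} = 75\%, \qquad \frac{37}{42} \approx 88.1\%$$

The raw point totals are therefore comparable only after normalization, because excluding nonapplicable requirements lowered the second algorithm's denominator from 48 to 42 points.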

Discussion

To our knowledge, this systematic review provides the most comprehensive overview to date of predictive ML algorithms implemented in primary care and reveals insufficient public availability of evidence on a broad set of predictive ML algorithm quality criteria. The availability of evidence was highly inconsistent across the included predictive ML algorithms, life cycle phases, and individual quality criteria. Predictive ML algorithms identified from peer-reviewed literature generally provided more publicly available evidence than predictive ML algorithms identified solely from FDA or CE registration databases.

The results align with those of previously published research. The scarcity of evidence is particularly pronounced among predictive ML algorithms that have received FDA approval or CE marking.9,12,13,70,71,72 Many AI developers in the health care sector are known not to disclose information in the literature about the development, validation, evaluation, or implementation of AI tools.12,19,73 There may be tension between protecting intellectual property and being transparent.74 Moreover, not all evidence undergoes peer review; in regulatory processes such as obtaining a CE mark, notified bodies assess the evidence of high-risk medical devices for compliance. However, given the methodological complexity of reporting on effectiveness in a clinical setting, a peer-review process may be preferable so that evaluation does not rely solely on notified bodies.75 Although the FDA, the European Union Medical Device Regulation, and, more recently, the AI Act have introduced new initiatives to enhance transparency, disclosure of evidence was not mandatory at the time of writing this systematic review.76,77,78,79,80 Future research should assess the impact of these new regulations.

The availability of evidence fosters transparency and trust among end users, allowing other investigators to scrutinize the data and methods used and thus helping to ensure ethical and unbiased research and development practices.81,82 By making evidence available, researchers enable others to build on previous work and advance scientific knowledge. If studies lack the necessary details, subsequent researchers may be more likely to create a new AI model instead of validating or updating an existing one. In addition, as human factors research has shown, transparent reporting of predictive ML algorithms encourages vigilance among users and increases the level of trust humans have in AI.82 Conversely, failing to provide evidence can compromise patient safety, for example, when algorithmically generated outcomes, interpretations, and recommendations unfairly advantage or disadvantage specific individuals or groups.83

The results show that evidence was among the scarcest regarding the availability of, or reference to, a DMP. Although a DMP is not necessarily required to be publicly accessible, it is critical to preparing for the collection, management, and processing of data. The DMP plays an overarching role in the entire trajectory toward structurally implementing and using the AI model in daily practice.84 It forms an essential component of every stage of the predictive ML algorithm life cycle and can ensure and safeguard data quality, reproducibility, and transparency while striving for Findable, Accessible, Interoperable, and Reusable (FAIR) data.85,86,87,88 The FAIR principles aim to support the reuse of scholarly data, including algorithms, and focus on making data findable and reusable by humans and machines.87 Although FAIR principles have been widely adopted in academic contexts, the response of the industry has been less consistent.89

Evidence was also limited regarding impact assessments and health technology assessments of predictive ML algorithms. The lack of accessible evaluations of outcomes and implementation in everyday clinical practice may hinder the translation of research findings into practical health care applications.90 It may also impede adoption, because medical professionals need robust evidence to trust these technologies and consistently integrate them into their everyday workflow. Medical professionals stress the importance of adhering to legal, ethical, and quality standards. They also express a need for training in interpreting the available evidence on the safety and effectiveness of AI systems, including predictive ML algorithms.8,81 Without this information, it is difficult to ascertain whether the success of a predictive ML algorithm is attributable to the model itself, to elements of its implementation, or to both. As a result, it is challenging to inform stakeholders about which predictive ML algorithms are most effective, how, and for whom.

Implications for Research and Practice

Applying the Dutch AIPA guideline requirements to structure the availability of evidence, as demonstrated in this systematic review, can serve as a blueprint for demonstrating the reliability, transparency, and advancement of predictive ML algorithms to policymakers, primary care practitioners, and patients. The guideline may also accelerate regulatory compliance.16 Although not legally binding, the guideline can be used by developers and researchers as a basis for self-assessment. Furthermore, in Dutch primary care, general practitioners often work in small organizations, and limited resources may impede their ability to evaluate complex AI models effectively.91 Therefore, comprehensive tools for assessing the availability of evidence on predictive ML algorithms, such as the Dutch AIPA guideline and the practical evaluation tool we developed, are valuable to primary care professionals and may aid large-scale adoption of predictive ML algorithms in practice. Because primary care worldwide is under substantial pressure, removing barriers to implementing innovations such as predictive ML algorithms is essential from a health systems perspective.

Limitations

This study has methodological limitations that should be taken into account. First, the scope of the systematic review excluded regression-based predictive models and simple rule-based systems. Although these approaches can be of substantial value in primary care, focusing on predictive ML algorithms enabled us to provide an in-depth overview of model complexity and interpretability. Most accepted definitions of ML do not exclude simple regression. Nevertheless, this scope helped us maintain a manageable overview of more complex models, which pose unique challenges for standardized reporting of model development, validation, and implementation. Second, we restricted the systematic review to articles published in English. We believe this restriction does not substantially affect the generalizability of the results, since previous research has found no evidence of systematic bias due to English-language restrictions.92 Third, we could not formally compare predictive validity across predictive ML algorithms because of substantial variation in the types of AI models and heterogeneous methods between studies. Additionally, the availability scores presented in this study should be seen as an approximation of the degree of public availability of evidence, in line with the study objectives. Fourth, the Dutch AIPA guideline is a local norm and is not legally binding. Several international AI guidelines apply to predictive ML algorithms. These include TRIPOD+AI (Transparent Reporting of a Multivariable Prediction Model for Individual Prognosis or Diagnosis) for development and validation and DECIDE-AI (Developmental and Exploratory Clinical Investigations of Decision Support Systems Driven by Artificial Intelligence) for feasibility studies. SPIRIT-AI (Standard Protocol Items: Recommendations for Interventional Trials–Artificial Intelligence) and CONSORT-AI (Consolidated Standards of Reporting Trials–Artificial Intelligence) cover impact assessments.93,94,95,96,97 These international guidelines, however, are aimed primarily at researchers. We chose to build on the Dutch AIPA guideline because it provides a complete, structured, and pragmatic quality assessment derived from existing guidelines. It covers the entire AI life cycle and is specific to predictive algorithms, which are considered to have great potential in the medical field.15 It emphasizes implementation aspects and practical application in clinical practice and may therefore be useful for primary care professionals.

Conclusions

In this systematic review, we comprehensively assessed the availability of evidence for predictive ML algorithms implemented in primary care, using the Dutch AIPA guideline as a reference. We found a scarcity of evidence across the AI life cycle phases for implemented predictive ML algorithms, particularly for FDA-approved or CE-marked algorithms identified in the registration databases. Adopting guidelines such as the Dutch AIPA guideline could improve the availability of evidence on the quality criteria of predictive ML algorithms. It could also facilitate transparent and consistent reporting of these criteria in the literature, potentially fostering trust among end users and facilitating large-scale implementation.

eAppendix 1. Search Strategies Used Up to July 7, 2023

eAppendix 2. Selection Process

eAppendix 3. Online Questionnaire for Information From Authors and Commercial Product Owners

eTable 1. The Evidence Requirements Established per Life Cycle Phase as Described in the Dutch AIPA Guideline

eFigure. Flowchart of Literature Inclusion for Assessment of the Six Phases

eTable 2. Overview of Publication Characteristics per Predictive ML Algorithm

eTable 3. Overview of the Availability of Evidence per Predictive ML Algorithm

Data Sharing Statement

References

- 1. Boerma W, Bourgueil Y, Cartier T, et al. Overview and future challenges for primary care. 2015. Accessed October 20, 2023. https://www.ncbi.nlm.nih.gov/books/NBK458729/

- 2. Smeets HM, Kortekaas MF, Rutten FH, et al. Routine primary care data for scientific research, quality of care programs and educational purposes: the Julius General Practitioners’ Network (JGPN). BMC Health Serv Res. 2018;18(1):735. doi: 10.1186/s12913-018-3528-5

- 3. Kuiper JG, Bakker M, Penning-van Beest FJA, Herings RMC. Existing data sources for clinical epidemiology: the PHARMO Database Network. Clin Epidemiol. 2020;12:415-422. doi: 10.2147/CLEP.S247575

- 4. Shilo S, Rossman H, Segal E. Axes of a revolution: challenges and promises of big data in healthcare. Nat Med. 2020;26(1):29-38. doi: 10.1038/s41591-019-0727-5

- 5. Moons KGM, Kengne AP, Woodward M, et al. Risk prediction models: I. development, internal validation, and assessing the incremental value of a new (bio)marker. Heart. 2012;98(9):683-690. doi: 10.1136/heartjnl-2011-301246

- 6. Babel A, Taneja R, Mondello Malvestiti F, Monaco A, Donde S. Artificial intelligence solutions to increase medication adherence in patients with non-communicable diseases. Front Digit Health. 2021;3:669869. doi: 10.3389/fdgth.2021.669869

- 7. Hazarika I. Artificial intelligence: opportunities and implications for the health workforce. Int Health. 2020;12(4):241-245. doi: 10.1093/inthealth/ihaa007

- 8. Liyanage H, Liaw ST, Jonnagaddala J, et al. Artificial intelligence in primary health care: perceptions, issues, and challenges. Yearb Med Inform. 2019;28(1):41-46. doi: 10.1055/s-0039-1677901

- 9. Andaur Navarro CL, Damen JAA, Takada T, et al. Risk of bias in studies on prediction models developed using supervised machine learning techniques: systematic review. BMJ. 2021;375:n2281. doi: 10.1136/bmj.n2281

- 10. Shaw J, Rudzicz F, Jamieson T, Goldfarb A. Artificial intelligence and the implementation challenge. J Med Internet Res. 2019;21(7):e13659. doi: 10.2196/13659

- 11. Norori N, Hu Q, Aellen FM, Faraci FD, Tzovara A. Addressing bias in big data and AI for health care: a call for open science. Patterns (N Y). 2021;2(10):100347. doi: 10.1016/j.patter.2021.100347

- 12. van Leeuwen KG, Schalekamp S, Rutten MJCM, van Ginneken B, de Rooij M. Artificial intelligence in radiology: 100 commercially available products and their scientific evidence. Eur Radiol. 2021;31(6):3797-3804. doi: 10.1007/s00330-021-07892-z

- 13. Andaur Navarro CL, Damen JAA, Takada T, et al. Completeness of reporting of clinical prediction models developed using supervised machine learning: a systematic review. BMC Med Res Methodol. 2022;22(1):12. doi: 10.1186/s12874-021-01469-6

- 14. Daneshjou R, Smith MP, Sun MD, Rotemberg V, Zou J. Lack of transparency and potential bias in artificial intelligence data sets and algorithms: a scoping review. JAMA Dermatol. 2021;157(11):1362-1369. doi: 10.1001/jamadermatol.2021.3129

- 15. de Hond AAH, Leeuwenberg AM, Hooft L, et al. Guidelines and quality criteria for artificial intelligence-based prediction models in healthcare: a scoping review. NPJ Digit Med. 2022;5(1):2. doi: 10.1038/s41746-021-00549-7

- 16. van Smeden M, Moons KG, Hooft L, Chavannes NH, van Os HJ, Kant I. Guideline for high-quality diagnostic and prognostic applications of AI in healthcare. OSFHome. November 14, 2022. Accessed August 6, 2024. http://OSF.IO/TNRJZ

- 17. Page MJ, McKenzie JE, Bossuyt PM, et al. The PRISMA 2020 statement: an updated guideline for reporting systematic reviews. Syst Rev. 2021;10(1):89. doi: 10.1186/s13643-021-01626-4

- 18. Muehlematter UJ, Daniore P, Vokinger KN. Approval of artificial intelligence and machine learning-based medical devices in the USA and Europe (2015-20): a comparative analysis. Lancet Digit Health. 2021;3(3):e195-e203. doi: 10.1016/S2589-7500(20)30292-2

- 19. Zhu S, Gilbert M, Chetty I, Siddiqui F. The 2021 landscape of FDA-approved artificial intelligence/machine learning-enabled medical devices: an analysis of the characteristics and intended use. Int J Med Inform. 2022;165:104828. doi: 10.1016/j.ijmedinf.2022.104828

- 20. US Food and Drug Administration. Artificial intelligence and machine learning (AI/ML)-enabled medical devices. Accessed August 23, 2023. https://www.fda.gov/medical-devices/software-medical-device-samd/artificial-intelligence-and-machine-learning-aiml-enabled-medical-devices#resources

- 21. Rifkin SB. Alma Ata after 40 years: primary health care and health for all-from consensus to complexity. BMJ Glob Health. 2018;3(suppl 3):e001188. doi: 10.1136/bmjgh-2018-001188

- 22. Gama F, Tyskbo D, Nygren J, Barlow J, Reed J, Svedberg P. Implementation frameworks for artificial intelligence translation into health care practice: scoping review. J Med Internet Res. 2022;24(1):e32215. doi: 10.2196/32215

- 23. Tenhunen H, Hirvonen P, Linna M, Halminen O, Hörhammer I. Intelligent patient flow management system at a primary healthcare center - the effect on service use and costs. Stud Health Technol Inform. 2018;255:142-146.

- 24. Liu J, Gibson E, Ramchal S, et al. Diabetic retinopathy screening with automated retinal image analysis in a primary care setting improves adherence to ophthalmic care. Ophthalmol Retina. 2021;5(1):71-77. doi: 10.1016/j.oret.2020.06.016

- 25. Eyenuk, Inc. Harnessing deep learning to prevent blindness. Accessed August 5, 2024. https://www.eyenuk.com/en/

- 26. Bachtiger P, Petri CF, Scott FE, et al. Point-of-care screening for heart failure with reduced ejection fraction using artificial intelligence during ECG-enabled stethoscope examination in London, UK: a prospective, observational, multicentre study. Lancet Digit Health. 2022;4(2):e117-e125. doi: 10.1016/S2589-7500(21)00256-9

- 27. EKO. Unlock AI murmur & afib detection with Eko+. Accessed August 5, 2024. https://www.ekohealth.com/

- 28. Hill NR, Arden C, Beresford-Hulme L, et al. Identification of undiagnosed atrial fibrillation patients using a machine learning risk prediction algorithm and diagnostic testing (PULsE-AI): study protocol for a randomised controlled trial. Contemp Clin Trials. 2020;99:106191. doi: 10.1016/j.cct.2020.106191

- 29. Herter WE, Khuc J, Cinà G, et al. Impact of a machine learning-based decision support system for urinary tract infections: prospective observational study in 36 primary care practices. JMIR Med Inform. 2022;10(5):e27795. doi: 10.2196/27795

- 30. Bhatt S, Cohon A, Rose J, et al. Interpretable machine learning models for clinical decision-making in a high-need, value-based primary care setting. NEJM Catal Innov Care Deliv. 2021;2(4). doi: 10.1056/CAT.21.0008

- 31. Herman B, Sirichokchatchawan W, Nantasenamat C, Pongpanich S. Artificial intelligence in overcoming rifampicin resistant-screening challenges in Indonesia: a qualitative study on the user experience of CUHAS-ROBUST. J Health Res. 2021;36(6):1018-1027. doi: 10.1108/JHR-11-2020-0535

- 32. Wang SV, Rogers JR, Jin Y, et al. Stepped-wedge randomised trial to evaluate population health intervention designed to increase appropriate anticoagulation in patients with atrial fibrillation. BMJ Qual Saf. 2019;28(10):835-842. doi: 10.1136/bmjqs-2019-009367

- 33. Chiang PH, Wong M, Dey S. Using wearables and machine learning to enable personalized lifestyle recommendations to improve blood pressure. IEEE J Transl Eng Health Med. 2021;9:2700513. doi: 10.1109/JTEHM.2021.3098173

- 34. Yao X, Rushlow DR, Inselman JW, et al. Artificial intelligence-enabled electrocardiograms for identification of patients with low ejection fraction: a pragmatic, randomized clinical trial. Nat Med. 2021;27(5):815-819. doi: 10.1038/s41591-021-01335-4

- 35. Jaremko JL, Hareendranathan A, Ehsan S, et al. AI aided workflow for hip dysplasia screening using ultrasound in primary care clinics. Sci Rep. 2023;13(1):9224. doi: 10.1038/s41598-023-35603-9

- 36. Escalé-Besa A, Fuster-Casanovas A, Börve A, et al. Using artificial intelligence as a diagnostic decision support tool in skin disease: protocol for an observational prospective cohort study. JMIR Res Protoc. 2022;11(8):e37531. doi: 10.2196/37531

- 37. TytoCare. TytoCare. Accessed August 5, 2024. https://www.tytocare.com/

- 38. Peerbridge Health. Home. Accessed August 5, 2024. https://peerbridgehealth.com/

- 39. Rooti Labs Limited. RootiCare: dependable, continuous monitoring. Accessed August 5, 2024. https://www.rootilabs.com/doctor

- 40. Digital Diagnostics. LumineticsCore. Accessed August 5, 2024. https://www.digitaldiagnostics.com/products/eye-disease/idx-dr/

- 41. FibriCheck. Advanced monitoring of your heart rhythm for detection and treatment of atrial fibrillation. Accessed August 5, 2024. https://www.fibricheck.com/nl/

- 42. Cardio-Phoenix. Cardio-HART. Accessed August 5, 2024. https://www.cardiophoenix.com/

- 43. eMURMUR. Join the world’s first enterprise-level, open platform for advanced digital auscultation. Accessed August 5, 2024. https://emurmur.com/

- 44. Minttihealth. Home. Accessed August 5, 2024. https://minttihealth.com/

- 45. BioIntelliSense, Inc. BioIntelliSense. Accessed August 5, 2024. https://biointellisense.com/

- 46. EchoNous Inc. EchoNous. Accessed August 5, 2024. https://echonous.com/

- 47. Coala. COALA heart monitoring system. Accessed August 5, 2024. https://www.coalalife.com/us/

- 48. my mhealth. Empowering patients to manage their asthma for a lifetime. Accessed August 5, 2024. https://mymhealth.com/myasthma

- 49. eMed. eMed weight loss programme. Accessed August 5, 2024. https://www.emed.com/uk

- 50. Huma. Longer, fuller lives with digital-first care and research. Accessed August 8, 2024. https://medopad.com/

- 51. Skin Analytics. Skin analytics. Accessed August 5, 2024. https://skin-analytics.com/

- 52. ResApp Health. ResAppDx-EU. Accessed August 8, 2024. https://digitalhealth.org.au/wp-content/uploads/2020/06/ResAppDx-EU-flyer.pdf

- 53. MobileODT. Automated Visual Evaluation (AVE) explained: everything you need to know about the new AI for cervical cancer screening. January 14, 2019. Accessed June 29, 2022. https://www.mobileodt.com/blog/everything-you-need-to-know-about-ave-automated-visual-examination-for-cervical-cancer-screening/

- 54. Kata. Inhale correctly, live better. Accessed August 5, 2024. https://kata-inhalation.com/en/

- 55. SkinVision. Skin cancer melanoma tracking app. Accessed August 5, 2024. https://www.skinvision.com/nl/

- 56. Medicalgorithmics. The most effective technology solutions for cardiology. Accessed August 25, 2023. https://www.medicalgorithmics.com/

- 57. Apple. IRN Global 2.0 instructions for use. 2021. Accessed August 25, 2023. https://www.apple.com/legal/ifu/irnf/2-0/irn-2-0-en_US.pdf

- 58. Benrimoh D, Tanguay-Sela M, Perlman K, et al. Using a simulation centre to evaluate preliminary acceptability and impact of an artificial intelligence-powered clinical decision support system for depression treatment on the physician-patient interaction. BJPsych Open. 2021;7(1):e22. doi: 10.1192/bjo.2020.127

- 59. Healthy.io Ltd. Increase ACR testing by up to 50%. Accessed August 5, 2024. https://healthy.io/services/kidney/

- 60. Zio by iRhythm Technologies, Inc. iRhythm gains FDA clearance for its clinically integrated ZEUS system. July 22, 2022. Accessed August 25, 2023. https://www.irhythmtech.com/company/news/irhythm-gains-fda-clearance-for-its-clinically-integrated-zeus-system

- 61. Breitbart EW, Choudhury K, Andersen AD, et al. Improved patient satisfaction and diagnostic accuracy in skin diseases with a visual clinical decision support system-a feasibility study with general practitioners. PLoS One. 2020;15(7):e0235410. doi: 10.1371/journal.pone.0235410

- 62. Kanagasingam Y, Xiao D, Vignarajan J, Preetham A, Tay-Kearney ML, Mehrotra A. Evaluation of artificial intelligence-based grading of diabetic retinopathy in primary care. JAMA Netw Open. 2018;1(5):e182665. doi: 10.1001/jamanetworkopen.2018.2665

- 63. Long J, Yuan MJ, Poonawala R. An observational study to evaluate the usability and intent to adopt an artificial intelligence-powered medication reconciliation tool. Interact J Med Res. 2016;5(2):e14. doi: 10.2196/ijmr.5462

- 64. Romero-Brufau S, Wyatt KD, Boyum P, Mickelson M, Moore M, Cognetta-Rieke C. A lesson in implementation: a pre-post study of providers’ experience with artificial intelligence-based clinical decision support. Int J Med Inform. 2020;137:104072. doi: 10.1016/j.ijmedinf.2019.104072

- 65. Seol HY, Shrestha P, Muth JF, et al. Artificial intelligence-assisted clinical decision support for childhood asthma management: a randomized clinical trial. PLoS One. 2021;16(8):e0255261. doi: 10.1371/journal.pone.0255261

- 66. Frontoni E, Romeo L, Bernardini M, et al. A decision support system for diabetes chronic care models based on general practitioner engagement and EHR data sharing. IEEE J Transl Eng Health Med. 2020;8:3000112. doi: 10.1109/JTEHM.2020.3031107

- 67. Escalé-Besa A, Yélamos O, Vidal-Alaball J, et al. Exploring the potential of artificial intelligence in improving skin lesion diagnosis in primary care. Sci Rep. 2023;13(1):4293. doi: 10.1038/s41598-023-31340-1

- 68. VivaQuant. Introducing the world’s smallest one-piece MCT: RX-1 mini. Accessed August 5, 2024. https://rhythmexpressecg.com/

- 69. Kaia Health. Digitale therapien bei COPD und rückenschmerzen. Accessed June 29, 2022. https://kaiahealth.de/

- 70. Zuckerman D, Brown P, Das A. Lack of publicly available scientific evidence on the safety and effectiveness of implanted medical devices. JAMA Intern Med. 2014;174(11):1781-1787. doi: 10.1001/jamainternmed.2014.4193

- 71. Andaur Navarro CL, Damen JAA, van Smeden M, et al. Systematic review identifies the design and methodological conduct of studies on machine learning-based prediction models. J Clin Epidemiol. 2023;154:8-22. doi: 10.1016/j.jclinepi.2022.11.015

- 72. Lu JH, Callahan A, Patel BS, et al. Assessment of adherence to reporting guidelines by commonly used clinical prediction models from a single vendor: a systematic review. JAMA Netw Open. 2022;5(8):e2227779. doi: 10.1001/jamanetworkopen.2022.27779

- 73. Lin SY, Mahoney MR, Sinsky CA. Ten ways artificial intelligence will transform primary care. J Gen Intern Med. 2019;34(8):1626-1630. doi: 10.1007/s11606-019-05035-1

- 74. Gerke S, Minssen T, Cohen G. Ethical and legal challenges of artificial intelligence-driven healthcare. Artif Intell Healthc. 2020:295-336. doi: 10.1016/B978-0-12-818438-7.00012-5

- 75. Fraser AG, Nelissen RGHH, Kjærsgaard-Andersen P, Szymański P, Melvin T, Piscoi P; CORE-MD Investigators. Improved clinical investigation and evaluation of high-risk medical devices: the rationale and objectives of CORE-MD (Coordinating Research and Evidence for Medical Devices). EFORT Open Rev. 2021;6(10):839-849. doi: 10.1302/2058-5241.6.210081

- 76. US Food and Drug Administration. Fostering transparency to improve public health. Accessed May 15, 2023. https://www.fda.gov/news-events/speeches-fda-officials/fostering-transparency-improve-public-health

- 77. US Food and Drug Administration. Public access to results of FDA-funded research. Accessed August 8, 2024. https://www.fda.gov/science-research/about-science-research-fda/public-access-results-fda-funded-scientific-research

- 78. Wu E, Wu K, Daneshjou R, Ouyang D, Ho DE, Zou J. How medical AI devices are evaluated: limitations and recommendations from an analysis of FDA approvals. Nat Med. 2021;27(4):582-584. doi: 10.1038/s41591-021-01312-x

- 79. MDR-Eudamed. Welcome to EUDAMED. Accessed September 7, 2023. https://webgate.ec.europa.eu/eudamed/landing-page#/

- 80. Gasser U. An EU landmark for AI governance. Science. 2023;380(6651):1203. doi: 10.1126/science.adj1627

- 81. Markus AF, Kors JA, Rijnbeek PR. The role of explainability in creating trustworthy artificial intelligence for health care: a comprehensive survey of the terminology, design choices, and evaluation strategies. J Biomed Inform. 2021;113:103655. doi: 10.1016/j.jbi.2020.103655

- 82. Zerilli J, Bhatt U, Weller A. How transparency modulates trust in artificial intelligence. Patterns (N Y). 2022;3(4):100455. doi: 10.1016/j.patter.2022.100455

- 83. Kordzadeh N, Ghasemaghaei M. Algorithmic bias: review, synthesis, and future research directions. Eur J Inf Syst. 2022;31(3):388-409. doi: 10.1080/0960085X.2021.1927212

- 84. Smale N, Unsworth K, Denyer G, Barr D. A review of the history, advocacy and efficacy of data management plans. International Journal of Digital Curation. 2020;15(1):30-58. doi: 10.2218/ijdc.v15i1.525

- 85. Michener WK. Ten simple rules for creating a good data management plan. PLoS Comput Biol. 2015;11(10):e1004525. doi: 10.1371/journal.pcbi.1004525

- 86. Williams M, Bagwell J, Nahm Zozus M. Data management plans: the missing perspective. J Biomed Inform. 2017;71:130-142. doi: 10.1016/j.jbi.2017.05.004

- 87. Wilkinson MD, Dumontier M, Aalbersberg IJ, et al. The FAIR Guiding Principles for scientific data management and stewardship. Sci Data. 2016;3:160018. doi: 10.1038/sdata.2016.18

- 88. Kanza S, Knight NJ. Behind every great research project is great data management. BMC Res Notes. 2022;15(1):20. doi: 10.1186/s13104-022-05908-5

- 89. European Commission. H2020 Programme Guidelines on FAIR Data Management in Horizon 2020. 2016. Accessed March 20, 2023. https://ec.europa.eu/research/participants/data/ref/h2020/grants_manual/hi/oa_pilot/h2020-hi-oa-data-mgt_en.pdf

- 90. Matheny M, Israni ST, Ahmed M, Whicher D, eds. Artificial Intelligence in Health Care: The Hope, the Hype, the Promise, the Peril. The National Academies Press; 2019. doi: 10.17226/27111

- 91. Terry AL, Kueper JK, Beleno R, et al. Is primary health care ready for artificial intelligence? What do primary health care stakeholders say? BMC Med Inform Decis Mak. 2022;22(1):237. doi: 10.1186/s12911-022-01984-6

- 92. Morrison A, Polisena J, Husereau D, et al. The effect of English-language restriction on systematic review-based meta-analyses: a systematic review of empirical studies. Int J Technol Assess Health Care. 2012;28(2):138-144. doi: 10.1017/S0266462312000086

- 93. Collins GS, Moons KGM. Reporting of artificial intelligence prediction models. Lancet. 2019;393(10181):1577-1579. doi: 10.1016/S0140-6736(19)30037-6

- 94. Vasey B, Nagendran M, Campbell B, et al; DECIDE-AI Expert Group. Reporting guideline for the early-stage clinical evaluation of decision support systems driven by artificial intelligence: DECIDE-AI. BMJ. 2022;377:e070904. doi: 10.1136/bmj-2022-070904

- 95. Liu X, Cruz Rivera S, Moher D, Calvert MJ, Denniston AK; SPIRIT-AI and CONSORT-AI Working Group. Reporting guidelines for clinical trial reports for interventions involving artificial intelligence: the CONSORT-AI extension. BMJ. 2020;370:m3164. doi: 10.1136/bmj.m3164

- 96. Eldridge SM, Chan CL, Campbell MJ, et al; PAFS Consensus Group. CONSORT 2010 statement: extension to randomised pilot and feasibility trials. BMJ. 2016;355:i5239. doi: 10.1136/bmj.i5239

- 97. Collins GS, Dhiman P, Andaur Navarro CL, et al. Protocol for development of a reporting guideline (TRIPOD-AI) and risk of bias tool (PROBAST-AI) for diagnostic and prognostic prediction model studies based on artificial intelligence. BMJ Open. 2021;11(7):e048008. doi: 10.1136/bmjopen-2020-048008
