Abstract

Recent progress in artificial intelligence is largely attributed to the rapid development of machine learning, especially in algorithms and neural network models. However, it is the performance of the hardware, in particular the energy efficiency of a computing system, that sets the fundamental limit on the capability of machine learning. Data-centric computing requires a revolution in hardware systems, since traditional digital computers based on transistors and the von Neumann architecture were not purposely designed for neuromorphic computing. A hardware platform based on emerging devices and new architectures is the hope for future computing with dramatically improved throughput and energy efficiency. Building such a system nevertheless faces a number of challenges, including materials selection, device optimization, circuit fabrication, and system integration, to name a few. The aim of this Roadmap is to present a snapshot of emerging hardware technologies that are potentially beneficial for machine learning, providing the readers of Nanotechnology with a perspective on the challenges and opportunities in this burgeoning field.

Introduction

It is believed that hardware is on the critical path for the future of artificial intelligence in the big data era [1]. State-of-the-art hardware for machine learning, such as the central processing unit (CPU), graphics processing unit (GPU) and tensor processing unit (TPU), is built upon complementary metal oxide semiconductor (CMOS) transistors. Although superior computing capability has been demonstrated with such hardware, the end of transistor scaling and the separation of logic and memory units in the von Neumann architecture limit the performance, in particular the energy efficiency, for data-centric tasks. Inspired by the extremely low power consumption of the human brain, neuromorphic hardware, such as that based on emerging solid-state devices, has been an intensive research topic.

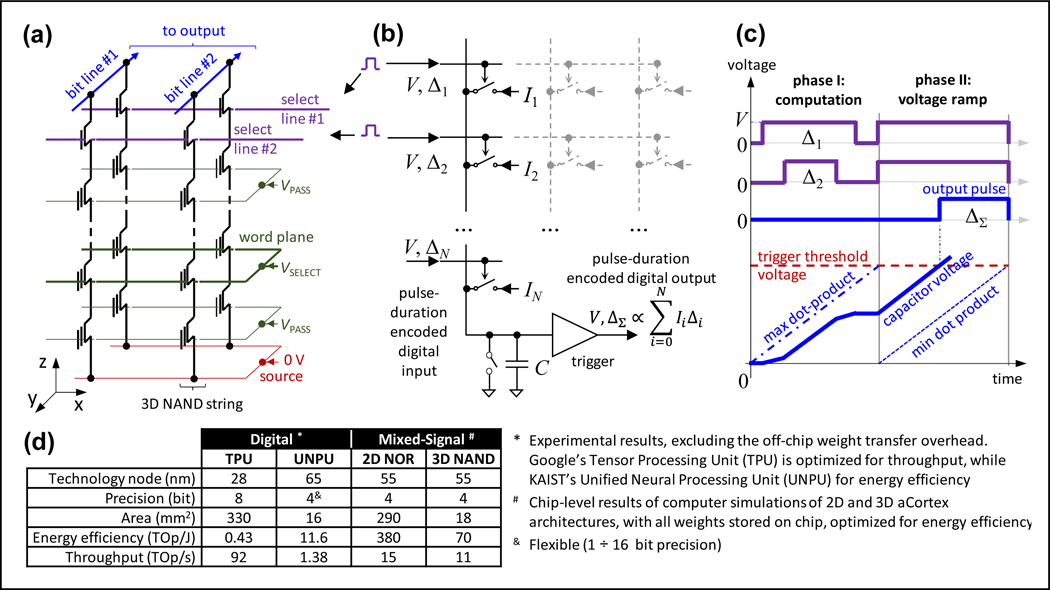

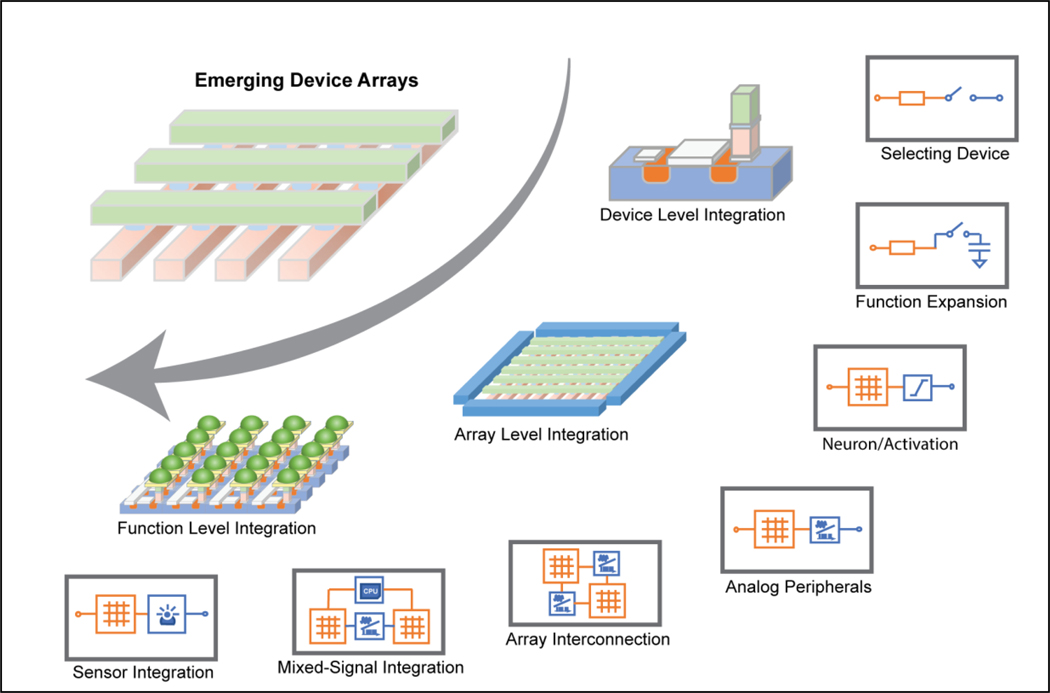

Emerging non-volatile devices can store information without dissipating power. When organized into a computing system, they can implement a so-called ‘in-memory computing’ (IMC) paradigm, in which computation takes place where the data is stored. IMC avoids the time and energy spent on shuttling data between memory and logic units in a traditional digital computer, and is especially suitable for tasks in which the data to be computed are naturally collocated in the physical memory. Taking advantage of physical laws, such as Ohm’s law for multiplication and Kirchhoff’s current law for summation, IMC with a large-scale array of emerging devices offers massive parallelism as well. Furthermore, the physical computing is analog in nature, and the hardware could interface with analog data acquired directly from sensor arrays, reducing the energy overhead from analog/digital conversions. Depending on the properties of the device, such hardware is suitable for three types of applications [2]. Devices with excellent stability can be used to build an inference system where the synaptic weights have already been trained somewhere else. Devices with high enough endurance may be incorporated into a training system for scalable algorithms such as backpropagation. Devices with intrinsic dynamic behavior similar to that of biological synapses and neurons may be promising building blocks for spiking neural networks that take advantage of the timing of electrical pulses for computing.
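As a rough illustration of how Ohm’s law and Kirchhoff’s current law together yield a matrix-vector product, the following minimal sketch models an idealized crossbar in which each cross-point device is a pure conductance and every column wire is held at virtual ground. The function name `crossbar_mvm` and the conductance and voltage values are assumptions chosen for this example; wire resistance, sneak paths, device nonlinearity and noise are all ignored.

```python
# Hypothetical, idealized crossbar model (illustrative assumptions only):
# devices are linear conductances and columns sit at virtual ground.

def crossbar_mvm(conductances, voltages):
    """Return the column currents (amperes) of an ideal crossbar.

    conductances[i][j]: conductance in siemens of the device joining
                        row i to column j (the stored weight).
    voltages[i]:        voltage in volts applied to row i (the input).
    """
    n_rows = len(conductances)
    n_cols = len(conductances[0])
    if len(voltages) != n_rows:
        raise ValueError("one input voltage per row is required")

    currents = [0.0] * n_cols
    for i in range(n_rows):
        for j in range(n_cols):
            # Ohm's law: each device contributes I = G * V (multiplication).
            # Kirchhoff's current law: currents flowing into the same
            # column wire add up (summation).
            currents[j] += conductances[i][j] * voltages[i]
    return currents


# Example values (assumed): a 2-row by 3-column array.
G = [[1e-6, 2e-6, 0.0],
     [3e-6, 0.0, 4e-6]]
V = [0.2, 0.1]
column_currents = crossbar_mvm(G, V)
```

In this idealized picture, every column current is produced at the same time in the physical array; the nested loops above only emulate sequentially what the hardware would do in parallel.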

Extensive simulation has shown that neural networks built with emerging non-volatile memories can deliver orders of magnitude higher speed-energy efficiency [3]. However, experimental demonstration of large systems that can solve real-world problems has had limited success to date, in part because of the lack of ideal devices that can efficiently implement machine learning algorithms or faithfully emulate the essential properties of synapses and neurons. In addition, the heterogeneous integration of the devices into massively parallel networks is a major technical obstacle.

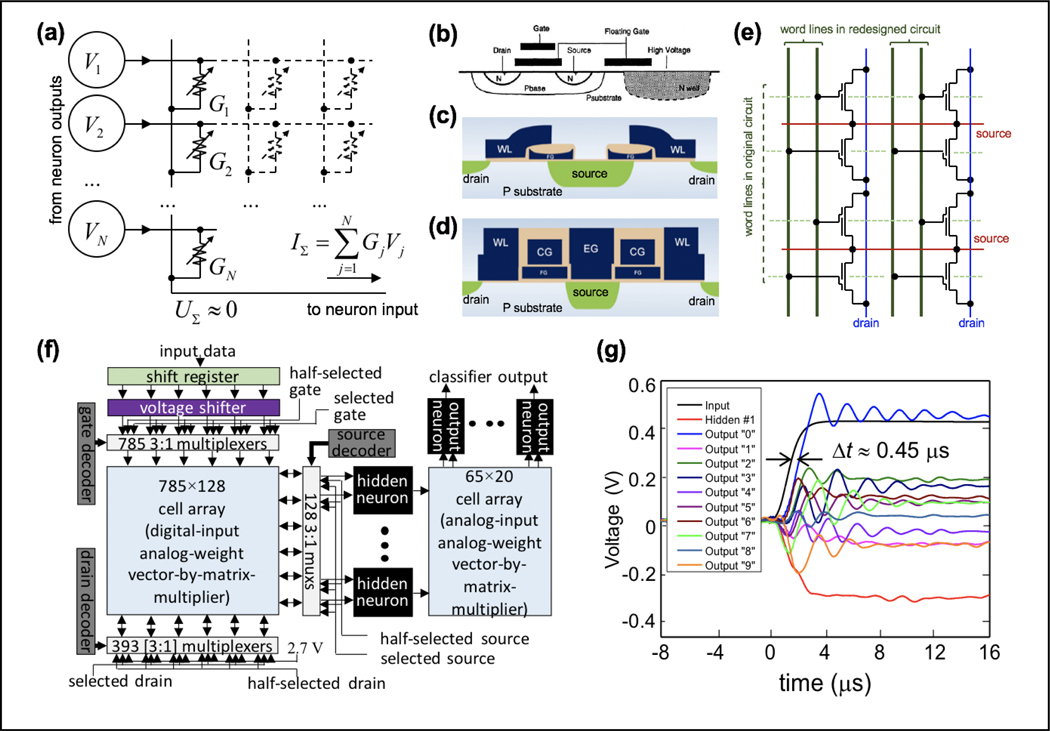

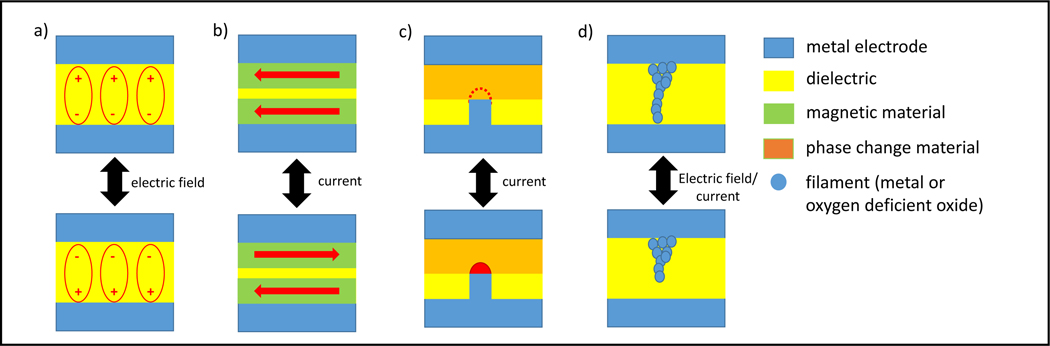

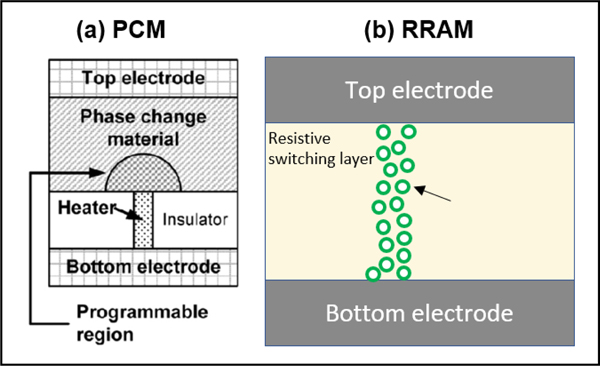

In the present Roadmap article, we cover several topics in emerging neuromorphic hardware and technology for machine learning applications that we think are particularly appealing to the readers of Nanotechnology, a community that is more interested in devices and technology than in machine learning algorithms and neural network architectures. The Roadmap starts with FLASH-based hardware that uses non-volatile transistors and targets inference systems. We believe this is a good segue from CMOS to emerging devices. Devices based on several resistance switching phenomena, including phase change, memristor, magnetoresistance and ferroelectric devices, are the subject of the next few sections, which address materials selection, electrical property optimization, device fabrication, circuit integration, and metrology control. In addition, new materials (such as 2D materials and organic materials) and technologies (for example, self-assembly) are also covered. Finally, novel concepts, such as using photons, quantum phenomena, superconductors, and the timing of electrical spikes for computing, are introduced.

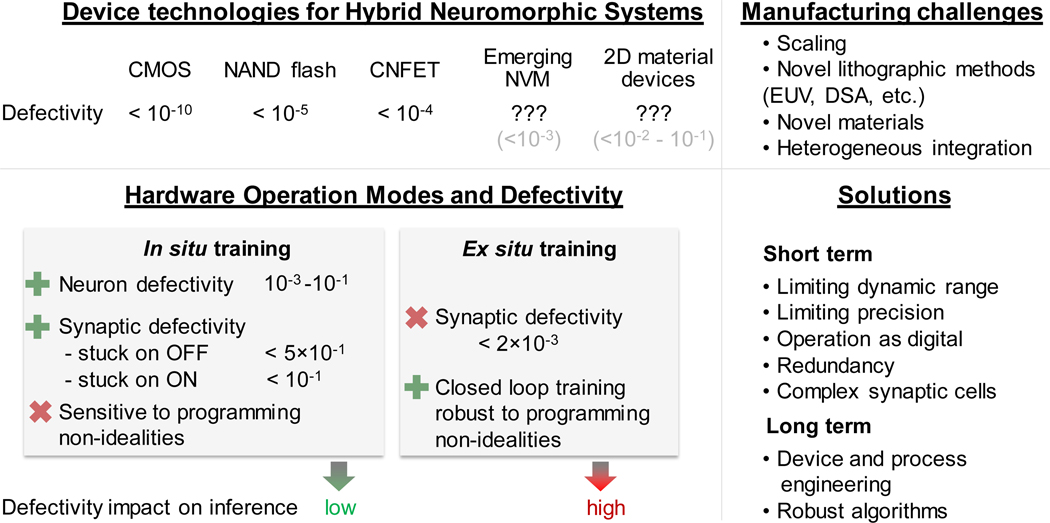

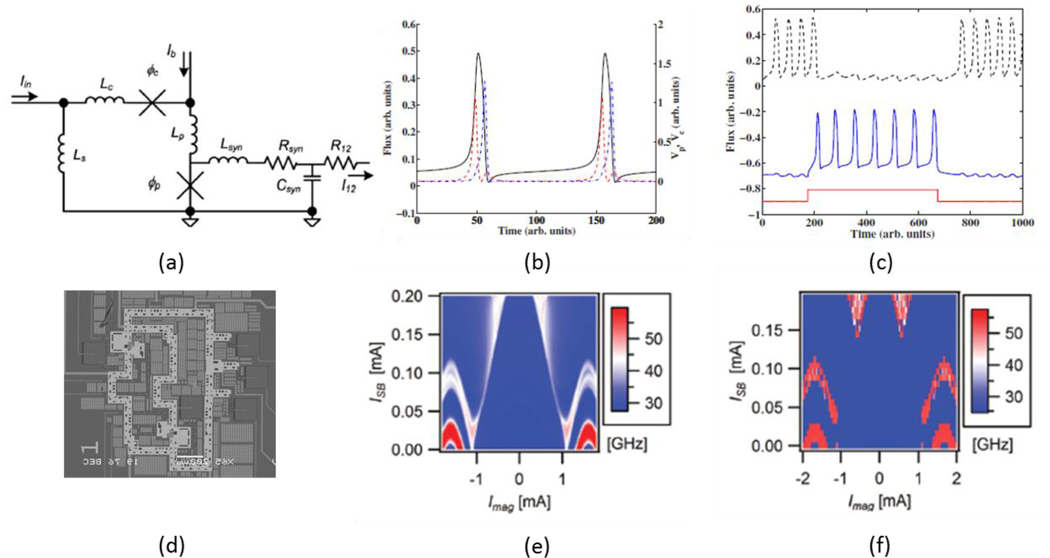

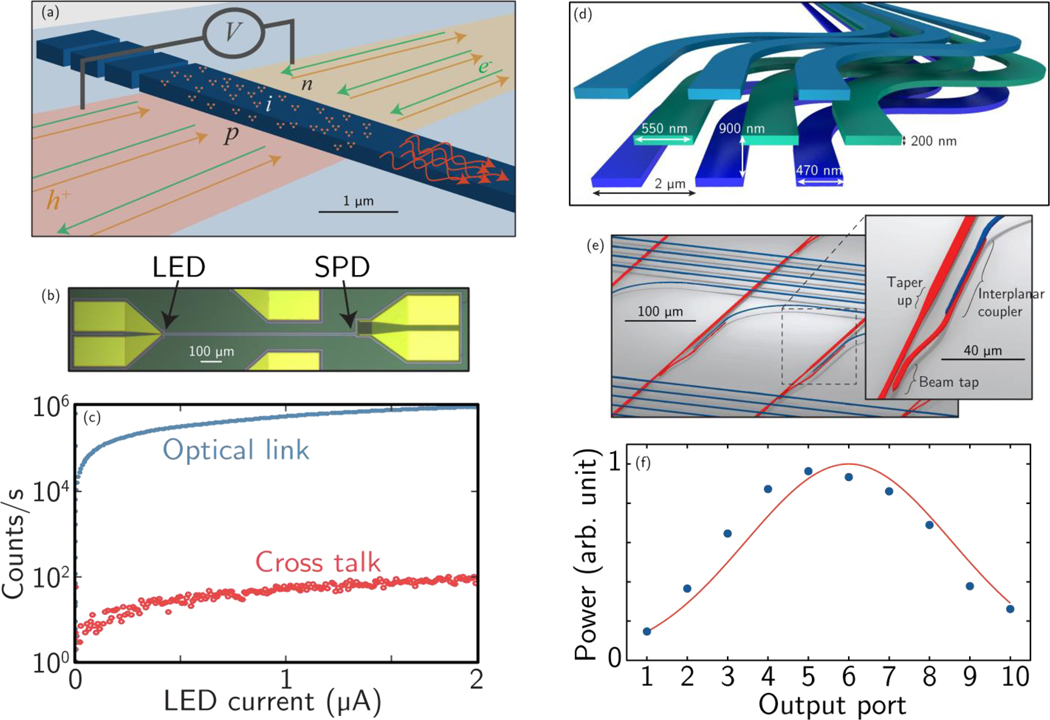

Figure 1. Defectivity ranges, tolerances, and mitigation actions for different operational modes. Defectivity ranges are approximate and sensitive to the degree of redundancy, network design (for networks) and the precise technology and fabrication routes (for device technologies).

References:

- 1. Big data needs a hardware revolution. Nature 554, 145–146 (2018). doi: 10.1038/d41586-018-01683-1

- 2. Xia Q. and Yang JJ. Nature Materials 18, 309–323 (2019). doi: 10.1038/s41563-019-0291-x

- 3. Hu M. et al. Proceedings of the 53rd Annual Design Automation Conference, Art. No. 19, Austin, Texas, June 5–9, 2016. doi: 10.1145/2897937.2898010