Abstract

Objective:

To assess the accuracy, quality, and readability of patient-focused breast cancer websites using expert evaluation and validated tools.

Background:

Access to accurate, high-quality, and readable online health information supports informed decision-making and health equity, but such information has not been recently evaluated.

Methods:

A qualitative analysis of 50 websites was conducted; the first 10 eligible websites for the following search terms were included: “breast cancer,” “breast surgery,” “breast reconstructive surgery,” “breast chemotherapy,” and “breast radiation therapy.” Websites were required to be in English and not intended for healthcare professionals. Accuracy was evaluated by 5 breast cancer specialists. Quality was evaluated through the DISCERN questionnaire. Readability was measured using 9 standardized tests. Mean readability was compared with the American Medical Association and National Institutes of Health 6th grade recommendation.

Results:

Nonprofit hospital websites had the highest accuracy (mean = 4.06, SD = 0.38); however, no statistically significant differences were observed in accuracy by website affiliation (P = 0.08). The overall mean quality score was 50.8 (“fair”/“good” quality) with no significant differences among website affiliations (P = 0.10). Mean readability was at the 10th grade reading level; commercial websites had the lowest mean reading level, at the 9th grade (SD = 2.38). On average, websites exceeded the American Medical Association- and National Institutes of Health-recommended reading level by 4.4 grade levels (P < 0.001). Websites with higher accuracy tended to be harder to read, whereas those with lower accuracy tended to be easier to read.

Conclusion:

As breast cancer treatment has become increasingly complex, improving online quality and readability while maintaining high accuracy is essential to promote health equity and empower patients to make informed decisions about their care.

Keywords: breast, breast cancer, breast surgery, breast surgical oncology, internet, online information, readability

INTRODUCTION

Online medical information is easily accessible, and an increasing number of patients are turning to the internet for health information, usually before seeking evaluation by a healthcare provider.1 In the United States alone, approximately 72% of adults reported searching online for medical information.2 This shift toward accessing online health information can empower patients and potentially enhance patient-physician relationships, as patients who seek information online tend to take a more proactive role in managing their health and are more likely to engage in shared decision-making with physicians.3,4 Thus, it is critical for online medical information to be accurate, accessible, high quality, and easily readable, especially when discussing complex malignant conditions, such as breast cancer, for which treatment is individualized.

A new breast cancer diagnosis can be overwhelming, and women with a cancer diagnosis commonly search online for information on their diagnosis, treatment options, potential side effects, and coping strategies.5 Since breast cancer is the most commonly diagnosed cancer in women in the United States,6 online breast cancer information has the potential to significantly influence patients’ understanding of their diagnosis and care plan. However, the quality and readability of online medical information may be suboptimal, and 9 out of 10 adults struggle with health literacy.7 Accordingly, both the American Medical Association (AMA) and the National Institutes of Health (NIH) recommend that patient health information be written at no greater than a 6th grade reading level.4,8

Ensuring that breast cancer patients have access to accurate, high-quality, and readable health information can support their journey toward informed decision-making with their multidisciplinary care team. This study aimed to assess the accuracy, quality, and readability of online breast cancer information using expert evaluation and validated tools.

METHODS

A qualitative analysis was conducted using validated tools and an expert review of 50 patient-focused breast cancer websites (see Supplementary Appendix, http://links.lww.com/AOSO/A380). Searches were conducted in the Midwestern United States in June 2022 using the following 5 search terms: 1) breast cancer, 2) breast cancer surgery, 3) breast cancer reconstruction surgery, 4) breast cancer chemotherapy, and 5) breast cancer radiation. The first 10 eligible websites appearing for each search term were included in the final count of 50 websites. Websites were categorized into five affiliation categories for comparative analyses: nonprofit hospitals, nonprofit organizations, government, commercial, and media.

Website Selection

Online searches were conducted using Google, the most widely used search engine in the United States.9 To be included in this analysis, websites were required to be written in English, provide text on breast cancer and/or breast cancer treatments, and be written for public use/not intended for healthcare professionals. The exclusion criteria were sponsored websites, news reports, duplicate websites, and video- and/or picture-only websites. Browser and cookie information was erased before conducting the search to ensure objectivity.

Accuracy Evaluation

Accuracy was defined as whether the information was based on current medical knowledge and accepted clinical practices. Accuracy was evaluated by a panel of 5 board-certified clinical breast cancer specialists, including 3 fellowship-trained breast surgical oncologists, 1 fellowship-trained medical oncologist, and 1 radiation oncologist. Blind website assessment was achieved by extracting plain text from individual websites with any website identifiers removed. The panel was directed to evaluate the accuracy of therapy descriptions, goals, and adverse therapy effects. Each member independently evaluated all 50 websites using a 5-point scale: 1 for ≤25% accuracy, 2 for 26–50% accuracy, 3 for 51–75% accuracy, 4 for 76–99% accuracy, and 5 for 100% accuracy. The mean accuracy scores for each website were calculated and compared among the affiliation categories and search term results.
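The 5-point accuracy scale described above can be sketched as a simple mapping. This is an illustration only, not the panel's actual procedure: the function names and the example ratings are hypothetical, and the sketch assumes that a reviewer's judgment can be expressed as a percentage and that fractional values between two bands (for example, 25.5%) fall into the higher band.

```python
from statistics import mean


def accuracy_score(percent_accurate: float) -> int:
    """Map an estimated percentage of accurate content to the 5-point scale."""
    if not 0 <= percent_accurate <= 100:
        raise ValueError("percentage must be between 0 and 100")
    if percent_accurate == 100:
        return 5  # 100% accuracy
    if percent_accurate > 75:
        return 4  # 76-99% accuracy
    if percent_accurate > 50:
        return 3  # 51-75% accuracy
    if percent_accurate > 25:
        return 2  # 26-50% accuracy
    return 1      # <=25% accuracy


def website_accuracy(panel_scores):
    """Mean of the independent panel scores for one website."""
    return mean(panel_scores)


# Hypothetical scores from a 5-member panel for a single website.
example_scores = [4, 4, 5, 3, 4]
example_mean = website_accuracy(example_scores)
```

Averaging the five independent scores per website yields the website-level value that is then compared across affiliation categories and search terms.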

Quality Evaluation

Websites that were included in the study were evaluated for quality by 1 person using the DISCERN instrument, a tool developed for critically appraising written health information for consumers.10 The DISCERN instrument has been validated to reliably distinguish between low- and high-quality publications.11 DISCERN comprises 16 questions, each graded on a 5-point scale ranging from 1 = none to 5 = entirely, and the item scores are summed to give a final score. The total score ranges from 16 to 80, with a higher score indicating greater quality. Score categories were adapted from Nghiem et al12: 63–80 “excellent”, 51–62 “good”, 39–50 “fair”, 27–38 “poor”, and 16–26 “very poor.”
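The DISCERN scoring described above can be sketched as follows. This is a minimal illustration rather than the reviewer's actual workflow: the function names and example item scores are hypothetical, and the sketch assumes that a non-integer value (such as a group mean) stays in the lower band until it reaches the next band's lower bound.

```python
# Lower bound of each quality band, from highest to lowest.
DISCERN_BANDS = [
    (63, "excellent"),
    (51, "good"),
    (39, "fair"),
    (27, "poor"),
    (16, "very poor"),
]


def discern_total(item_scores):
    """Sum the 16 DISCERN item scores (each from 1 to 5)."""
    if len(item_scores) != 16:
        raise ValueError("DISCERN has 16 items")
    if any(not 1 <= score <= 5 for score in item_scores):
        raise ValueError("each item is scored from 1 to 5")
    return sum(item_scores)


def discern_category(total):
    """Return the quality band for a total (or mean total) score."""
    if not 16 <= total <= 80:
        raise ValueError("total must be between 16 and 80")
    for lower_bound, label in DISCERN_BANDS:
        if total >= lower_bound:
            return label


# Hypothetical item scores for one website.
example_total = discern_total([3] * 16)
example_label = discern_category(example_total)
```

Under this banding, a mean score just below 51 is labeled “fair” even though it sits at the border of “good”.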

Readability Evaluation

Readability was evaluated using Readability Studio Software, Professional Edition version 2021 (Oleander Software, Ltd.). Nine readability formulas commonly used in the healthcare setting were used to analyze the websites: Coleman-Liau Index, Flesch-Kincaid Grade Level, FORCAST Readability Formula, Fry Readability Graph, Gunning Fog Index, New Dale-Chall Readability, New Fog Count (Kincaid), Raygor Readability Estimate, and Simple Measure of Gobbledygook Readability Formula. The use of multiple standardized readability formulas has been shown to improve accuracy.13 The results from the individual tests were used to calculate the average readability of each website. These averages were compared among affiliation categories, specific search terms, and the 6th grade recommended reading level for healthcare information set forth by both the AMA and NIH.8
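The averaging step above can be illustrated with a short sketch. It is not the Readability Studio implementation: only the standard Flesch-Kincaid Grade Level formula is computed here, the word, sentence, and syllable counts are hypothetical, and the Gunning Fog and SMOG grades are placeholder values chosen for illustration.

```python
from statistics import mean

RECOMMENDED_GRADE = 6  # AMA/NIH recommended reading level cited in the text


def flesch_kincaid_grade(words: int, sentences: int, syllables: int) -> float:
    """Flesch-Kincaid Grade Level computed from raw text counts."""
    if words <= 0 or sentences <= 0:
        raise ValueError("words and sentences must be positive")
    return 0.39 * (words / sentences) + 11.8 * (syllables / words) - 15.59


def mean_grade_level(grades_by_formula: dict) -> float:
    """Average the grade levels returned by several readability formulas."""
    return mean(list(grades_by_formula.values()))


# Hypothetical results for one website (placeholder values, not study data).
example_grades = {
    "Flesch-Kincaid": flesch_kincaid_grade(words=100, sentences=5, syllables=150),
    "Gunning Fog": 11.2,
    "SMOG": 10.6,
}
average_grade = mean_grade_level(example_grades)
excess_over_recommendation = average_grade - RECOMMENDED_GRADE
```

Averaging across formulas dampens the effect of any single formula's assumptions, since each weights sentence length and word difficulty differently.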

Statistical Analysis

Accuracy, DISCERN scores, and readability levels were tested for normality using the Shapiro-Wilk test. After determining that the data were normally distributed, one-way analysis of variance (ANOVA) tests were performed to determine whether accuracy, quality, or readability differed significantly among the search terms and affiliation groups. Post hoc pairwise comparisons were performed using Tukey’s honestly significant difference (HSD) test to further evaluate any significant differences found in the initial ANOVA. An unpaired t test was used to compare the mean readability scores with the NIH- and AMA-recommended reading level. Inter-rater reliability was calculated using Kendall’s coefficient of concordance (W). Statistical analyses were performed using R and RStudio.
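For readers unfamiliar with the inter-rater statistic, Kendall’s W can be sketched in a few lines. This is an illustrative implementation with the usual correction for tied ranks, not the R code used in this study; the function names and the example ratings are hypothetical.

```python
def average_ranks(values):
    """Rank values from 1 to n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared_rank = (i + j) / 2 + 1  # mean of 1-based positions i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = shared_rank
        i = j + 1
    return ranks


def kendalls_w(ratings):
    """Kendall's W, where ratings[r][s] is rater r's score for subject s."""
    m = len(ratings)       # number of raters
    n = len(ratings[0])    # number of subjects (websites)
    rank_rows = [average_ranks(row) for row in ratings]
    rank_totals = [sum(row[s] for row in rank_rows) for s in range(n)]
    mean_total = m * (n + 1) / 2
    s_stat = sum((total - mean_total) ** 2 for total in rank_totals)
    # Tie correction: sum of (t^3 - t) over each group of tied scores per rater.
    tie_term = 0
    for row in ratings:
        for value in set(row):
            t = row.count(value)
            tie_term += t ** 3 - t
    return 12 * s_stat / (m ** 2 * (n ** 3 - n) - m * tie_term)


# Hypothetical scores: 3 raters (rows) scoring 4 websites (columns).
example_ratings = [
    [4, 3, 5, 2],
    [4, 2, 5, 3],
    [5, 3, 4, 2],
]
example_w = kendalls_w(example_ratings)
```

W ranges from 0 (no agreement) to 1 (complete agreement). The sketch does not handle the degenerate case in which every rater gives every website the same score, where the denominator is zero.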

RESULTS

Websites Included

A total of 55 websites were screened for study inclusion and 5 were excluded: 2 were duplicates, 1 contained solely video information, 1 was a scientific manuscript, and 1 lacked patient educational information. Fifty websites (N = 50) were eligible for evaluation: 21 (42%) were from nonprofit organizations, 17 (34%) were from nonprofit hospitals, 5 (10%) were from government organizations, 5 (10%) were from commercial organizations, and 2 (4%) were from media outlets. The content on the included websites typically provided a broad overview of the search term as well as descriptions of what the patient can expect from the treatment modality searched. This included typical risks, side effects, and recovery.

Accuracy

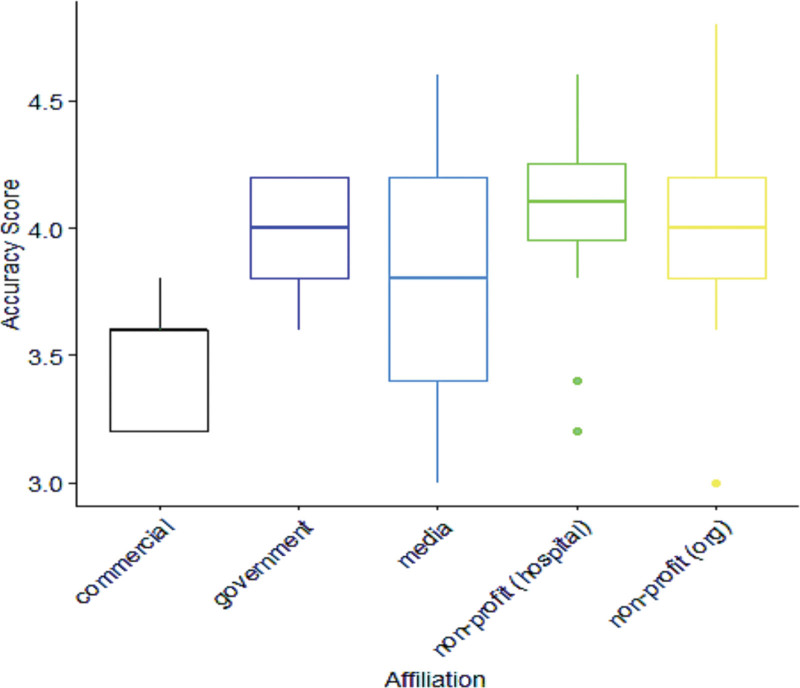

The mean (M) accuracy score for all 50 websites was 3.97 with a standard deviation (SD) of 0.42, corresponding to 76%–99% accuracy. Inter-rater reliability was calculated to be 0.4512 (P < 0.0001), which corresponds to moderate agreement. Among the different website affiliations, the highest accuracy was observed for nonprofit hospital websites (M = 4.06, SD = 0.38) and nonprofit organization websites (M = 4.03, SD = 0.40), whereas the lowest accuracy was found for commercial websites (M = 3.48, SD = 0.27). No significant differences were found when comparing accuracy according to website affiliation (P = 0.08) or search terms (P = 0.98) (Fig. 1) (Table 1).

FIGURE 1.

Accuracy scores and website affiliations.

TABLE 1.

Accuracy of Online Resources by Website Affiliation

| Website Affiliation | Count | Accuracy Mean (SD) [Range] | Statistically Significant Comparisons* |
|---|---|---|---|
| Commercial | 5 | 3.48 (0.27) [3.2, 3.8] | None |
| Government | 5 | 3.96 (0.26) [3.6, 4.2] | None |
| Media | 2 | 3.80 (1.13) [3.0, 4.6] | None |
| Nonprofit (hospital) | 16 | 4.06 (0.38) [3.2, 4.6] | None |
| Nonprofit (organization) | 22 | 4.03 (0.40) [3.0, 4.8] | None |

*One-way ANOVA test with overall model P value of 0.08.

Quality

The mean DISCERN quality score rating among all 50 websites was 50.8 (SD = 5.86), which corresponds to “fair”/borderline “good” quality, with a range of 38 to 62. The highest-quality information was found on nonprofit hospital websites (M = 52.6, SD = 5.25), while the lowest-quality information was found on government websites (M = 45.2, SD = 6.8). No significant differences were found when comparing the quality among website affiliations (P = 0.1) or search terms (P = 0.58) (Table 2).

TABLE 2.

Quality (DISCERN) of Online Resources by Website Affiliation

| Website Affiliation | Count | Quality (DISCERN) Mean (SD) [Range] | Statistically Significant Comparisons* |
|---|---|---|---|
| Commercial | 5 | 47.80 (6.30) [41, 54] | N/A |
| Government | 5 | 45.20 (6.80) [38, 53] | N/A |
| Media | 2 | 50.00 (2.83) [48, 52] | N/A |
| Nonprofit (hospital) | 16 | 52.56 (5.25) [44, 62] | N/A |
| Nonprofit (organization) | 22 | 51.55 (5.56) [43, 61] | N/A |

*One-way ANOVA test with overall model P value of 0.10.

Readability

There was a significant difference when comparing readability among website affiliations; nonprofit hospital websites were found to be significantly harder to read than commercial websites (P = 0.036). Nonprofit hospital websites had a mean 11th grade reading level (SD = 0.98), whereas commercial websites had a mean 9th grade reading level (SD = 2.38) (Table 3). The mean reading-grade level among all 50 websites was at the 10th grade level (SD = 1.19), which exceeds the AMA- and NIH-recommended 6th grade level by 4.4 levels (P < 0.001).
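The size of the gap between the observed and recommended reading levels can be checked roughly from the summary statistics. The sketch below assumes a one-sample comparison against the fixed 6th grade benchmark and an overall mean of 10.4 (the recommended level plus the reported 4.4-level excess); both are assumptions made for illustration and may differ from the exact test and unrounded values used in the analysis.

```python
from math import sqrt


def one_sample_t(sample_mean: float, sample_sd: float, n: int, benchmark: float) -> float:
    """t statistic for comparing a sample mean with a fixed benchmark value."""
    if n < 2 or sample_sd <= 0:
        raise ValueError("need n >= 2 and a positive standard deviation")
    standard_error = sample_sd / sqrt(n)
    return (sample_mean - benchmark) / standard_error


# Summary values taken from the text; 10.4 is inferred as 6 + 4.4 (assumption).
t_statistic = one_sample_t(sample_mean=10.4, sample_sd=1.19, n=50, benchmark=6.0)
degrees_of_freedom = 50 - 1
```

With a standard error of roughly 0.17 grade levels, a 4.4-level difference yields a very large t statistic, which is consistent with the reported P < 0.001.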

TABLE 3.

Readability Level of Online Resources by Website Affiliation

| Website Affiliation | Count | Readability Level Mean (SD) [Range] | Statistically Significant Comparisons* | P† |
|---|---|---|---|---|
| Commercial | 5 | 9.08 (2.38) [7.6, 13.3] | Nonprofit (hospital) | 0.04 |
| Government | 5 | 9.82 (1.50) [8.4, 12.0] | None | N/A |
| Media | 2 | 10.45 (0.64) [10.0, 10.9] | None | N/A |
| Nonprofit (hospital) | 16 | 11.08 (0.98) [9.7, 13.0] | Commercial | 0.04 |
| Nonprofit (organization) | 22 | 10.44 (1.20) [8.3, 12.9] | None | N/A |

*One-way ANOVA test with overall model P value of 0.049.

†Tukey multiple comparisons of means.

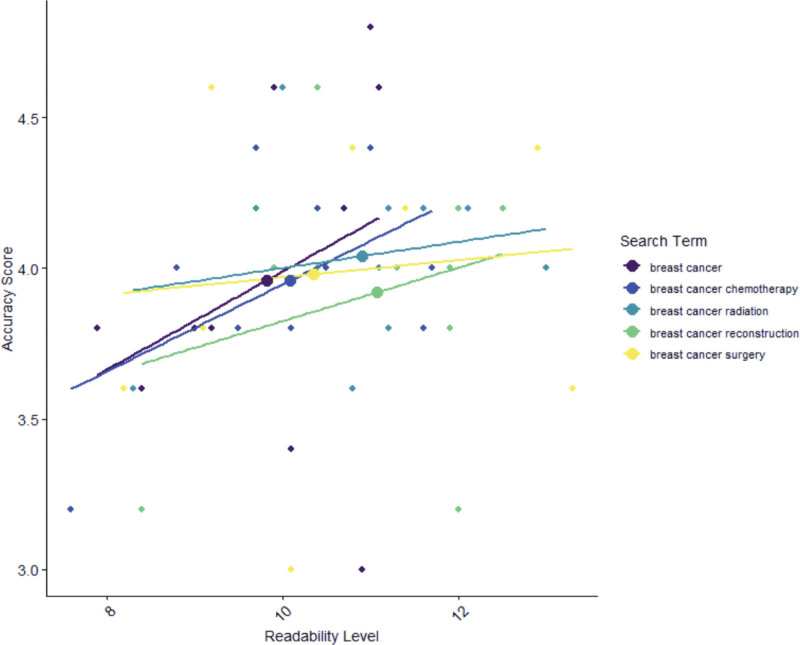

When analyzing accuracy, quality, and readability simultaneously, nonprofit hospital websites were the hardest to read (M = 11th grade, SD = 0.98) but had the highest quality (M = 52.6, “good”; SD = 5.25) and accuracy (M = 4.06, SD = 0.38). In contrast, commercial websites had the lowest accuracy (M = 3.48, SD = 0.27) but were the most readable (M = 9th grade, SD = 2.38) (Fig. 2). No significant differences were found when comparing reading-grade levels among the search terms (P = 0.98).

FIGURE 2.

Readability level and website accuracy by search term.

DISCUSSION

In our evaluation of online breast cancer information, most websites were found to be reasonably accurate; however, information quality was fair/good, and the mean reading grade level was found to be at the 10th grade level, which was significantly higher than the AMA- and NIH-recommended 6th grade level. These findings demonstrate a significant opportunity for improving patient-focused breast cancer websites.

As many patients turn to the internet as their first source of health information, and breast cancer accounts for approximately one-third of diagnosed malignancies in women, it is critical that online patient-focused breast cancer information is accurate, high-quality, and digestible for the average reader.1,5,6 Patients often seek information online to prepare themselves for healthcare appointments; online resources can inform patients what may be discussed during their upcoming visit and prepare them to ask appropriate and relevant questions.1 Objective data have shown that when patients have access to accurate and high-quality information, they are better equipped to understand their conditions, follow treatment plans, and recognize warning signs of complications.14 Breast cancer website quality has been evaluated previously, and our findings are consistent with those of other authors. One study conducted in 2016 by Nghiem et al used the DISCERN instrument to evaluate 20 breast cancer patient websites. The authors similarly found that although website quality is generally good, there is significant variability across websites. Another study conducted by Arif et al in 2018 found similar results when analyzing 200 websites discussing breast cancer treatment options using the Journal of the American Medical Association criteria scores.12,15–17

Few studies have assessed the accuracy of online information on breast cancer. However, a 2010 study analyzed the accuracy of 289 breast cancer websites. This study compared website data to the National Institute for Health and Clinical Excellence guidelines on breast cancer,18 and websites were considered inaccurate if they contained information not mentioned in the guidelines. The authors found that educational facility websites were more accurate, whereas interest group websites were less accurate, which contrasts with our findings as we identified no statistical difference among website affiliations. However, this study was conducted over a decade ago and may not reflect the subsequent expansion of online health information and changes in breast cancer treatment.

In our accuracy evaluation, we adapted the methodology of a previous study on pancreatic cancer by Storino et al,13 in which board-certified experts personally reviewed the website information. Using readability software and expert panel evaluation, the authors concluded that online information on pancreatic cancer overestimated reading ability and was reasonably accurate. Similar to Storino et al,13 we found that online information on breast cancer was reasonably accurate and had higher than recommended reading levels, suggesting that high reading levels are not isolated to a specific cancer type but may be a widespread issue in online oncologic information.

A significant challenge exists for online health materials in providing complex medical information while maintaining adequate readability. Nearly 9 out of 10 adults struggle with understanding health information, which has led the AMA and NIH to recommend that patient health information be written at no greater than the 6th grade reading level.4,8 Despite this recommendation, we found that the reading level of online breast cancer health information greatly surpasses the recommended NIH/AMA level. A previous study assessing the quality of online breast cancer resources used multiple readability tests to analyze 100 websites and found that readability worsened over time.17 This lack of readable health information can contribute to health inequities, as patients with lower health literacy are more likely to have inferior healthcare outcomes.19,20 Low health literacy disproportionately affects certain demographic groups, particularly those with lower educational attainment, limited English proficiency, or lower socioeconomic status.8,19,20 As breast cancer treatment has become increasingly complex and individualized,21 the readability of such information for the general public, and especially for marginalized populations, requires significant improvement.

Patient-focused breast cancer websites require a balance to ensure that information is of high quality and accuracy, while simultaneously maintaining adequate readability. This poses a significant challenge; methods for achieving this task can be gleaned from prior efforts to make health information more readable. Sheppard et al22 and Williams et al23 improved the readability of institutional patient handouts by following evidence-based guidelines from the NIH, such as shortening all sentences to fewer than 15 words, using bullet points, and writing shorter paragraphs. Another strategy is to incorporate visual aids such as diagrams, charts, and infographics to illustrate key points and concepts. Visuals can convey information more effectively than text alone and can help clarify complex ideas. Interactive learning tools such as videos, animations, or interactive modules that visually demonstrate concepts can enhance comprehension and retention. Additionally, input can be obtained from patient focus groups, patient advocacy groups, and experts in healthcare education/communication to assess comprehension and identify areas for improvement. The quality of content on these websites can be improved by including reliable sources of information and providing references. Providing additional resources can improve credibility while allowing readers to access more in-depth information if desired; however, reference quality and readability must also be considered. Providing plain language summaries or interpretations of complex research findings alongside technical references could aid comprehension; these summaries could distill key information into clear, accessible language without sacrificing accuracy.

The limitations of this study include the fact that the readability software used does not consider the use of graphics or website text organization, both of which may improve patient comprehension, and it does not factor in prior knowledge of the material or the level of education of the reader. It was also presumed that the Google search engine was the most used source of information by patients; however, this may not be the case for all patients, who may also use social media, online forums, or other online sources. Nevertheless, providers can still use these findings to guide their patients toward reliable online resources and help them navigate the vast amount of information available on the Internet.

CONCLUSIONS

While most breast cancer websites were reasonably accurate, information quality requires improvement, and the mean reading grade level was found to be at the 10th grade level, which was significantly higher than the AMA- and NIH-recommended 6th grade level. These findings demonstrate the need for online breast cancer information that is not only accurate but also of high quality and readable for all patients. Online breast cancer information often exceeds recommended reading levels, raising concerns about its potential as a source of health inequities. Future research should investigate methods to improve the quality and readability of online information while maintaining its accuracy. Ultimately, improving these aspects of online breast cancer information is not only critical for patient outcomes but also a step toward promoting health equity and empowering patients to make informed decisions about their breast cancer care.

ACKNOWLEDGMENTS

We thank Bethany Canales, MPH for her role in statistical data analysis.

E.A.V.: study design, data collection, data interpretation, writing of article. C.S.C.: data collection, data interpretation, writing of article, critical review, project supervision. A.N.C., L.N.C., T.K.: data collection, critical review. A.L.K.: study design, data collection, data interpretation, writing of article, critical review, project supervision.

Footnotes

Disclosure: The authors declare that they have nothing to disclose.

Funding for this project was provided by the Medical College of Wisconsin.

This project was reviewed by the Medical College of Wisconsin Institutional Review Board and did not meet the criteria for human subject research.

Data can be accessed by contacting the corresponding author.

Supplemental digital content is available for this article. Direct URL citations appear in the printed text and are provided in the HTML and PDF versions of this article on the journal’s Web site (www.annalsofsurgery.com).

REFERENCES

1. Tan SS, Goonawardene N. Internet health information seeking and the patient-physician relationship: a systematic review. J Med Internet Res. 2017;19:e9.

2. Pew Research Center. Information Triage. Available at: https://www.pewresearch.org/internet/2013/01/15/information-triage. Accessed June 3, 2022.

3. Bylund CL, Gueguen JA, D’Agostino TA, et al. Cancer patients’ decisions about discussing Internet information with their doctors. Psychooncology. 2009;18:1139–1146.

4. Holden CE, Wheelwright S, Harle A, et al. The role of health literacy in cancer care: a mixed studies systematic review. PLoS One. 2021;16:e0259815.

5. Sedrak MS, Soto-Perez-De-Celis E, Nelson RA, et al. Online health information-seeking among older women with chronic illness: analysis of the women’s health initiative. J Med Internet Res. 2020;22:e15906.

6. Siegel R, Miller K, Fuchs H, et al. Cancer statistics, 2022. CA Cancer J Clin. 2022;72:7–33.

7. Network of the National Library of Medicine. An Introduction to Health Literacy. National Institutes of Health, 2021. Available at: https://www.nnlm.gov/guides/intro-health-literacy. Accessed June 20, 2022.

8. Weiss BD. Health literacy: a manual for clinicians: part of an educational program about health literacy. American Medical Association/American Medical Association Foundation; 2003.

9. Pew Research Center. Search Engine Use Over Time. 2012. Available at: https://www.pewresearch.org/internet/2012/03/09/main-findings-11. Accessed June 20, 2022.

10. Charnock D, Shepperd S, Needham G, et al. DISCERN: an instrument for judging the quality of written consumer health information on treatment choices. J Epidemiol Community Health. 1999;53:105–111.

11. Rees CE, Ford JE, Sheard CE. Evaluating the reliability of DISCERN: a tool for assessing the quality of written patient information on treatment choices. Patient Educ Couns. 2002;47:273–275.

12. Nghiem AZ, Mahmoud Y, Som R. Evaluating the quality of internet information for breast cancer. Breast. 2016;25:34–37.

13. Storino A, Castillo-Angeles M, Watkins AA, et al. Assessing the accuracy and readability of online health information for patients with pancreatic cancer. JAMA Surg. 2016;151:831–837.

14. Murray M, Tu W, Wu J, et al. Factors associated with exacerbation of heart failure include treatment adherence and health literacy skills. Clin Pharmacol Ther. 2009;85:651–658.

15. Arif N, Pietro G. Quality of online information on breast cancer treatment options. Breast. 2018;37:6–12.

16. Scullard P, Peacock C, Davies P. Googling children’s health: reliability of medical advice on the internet. Arch Dis Child. 2010;95:580–582.

17. Killow V, Lin J, Ingledew P. The past and present of breast cancer resources: a re-evaluation of the quality of online resources after eight years. Cureus. 2022;14:e28120.

18. Quinn EM, Corrigan MA, McHugh SM, et al. Breast cancer information on the internet: analysis of accessibility and accuracy. Breast. 2012;21:514–517.

19. Kutner M, Greenberg E, Jin Y, Paulsen C. The health literacy of America’s adults: Results from the 2003 National Assessment of Adult Literacy. 2006. Available at: https://nces.ed.gov/pubs2006/2006483.pdf. Accessed September 9, 2023.

20. Hasnain-Wynia R, Wolf M. Promoting health care equity: is health literacy a missing link? Health Serv Res. 2010;45:897–903.

21. Moo T, Sanford R, Dang C, et al. Overview of breast cancer therapy. PET Clin. 2018;13:339–354.

22. Sheppard ED, Hyde Z, Florence MN, et al. Improving the readability of online foot and ankle patient education materials. Foot Ankle Int. 2014;35:1282–1286.

23. Williams AM, Muir KW, Rosdahl JA. Readability of patient education materials in ophthalmology: a single-institution study and systematic review. BMC Ophthalmol. 2016;16:133.