Abstract

The rapid evolution of Artificial Intelligence (AI) and its widespread adoption have created a critical need to understand the factors that shape users' behavioral intentions. The main objective of this study is therefore to explain users' behavioral intention and use behavior regarding AI technologies for academic purposes in a developing country. The study adopts the unified theory of acceptance and use of technology (UTAUT) model and extends it with two dimensions: trust and privacy. Data were collected from 310 AI users, including teachers, researchers, and students. The study finds that users' behavioral intention is positively and significantly associated with trust, social influence, effort expectancy, and performance expectancy. Privacy, on the other hand, has a negative yet significant relationship with behavioral intention, indicating that privacy concerns can deter users from intending to use AI technologies, which is a valuable insight for developers and educators. In determining use behavior, facilitating conditions, behavioral intention, and privacy have a significant positive impact. The study did not find a significant relationship between trust and use behavior, suggesting that service providers should maintain an unwavering focus on security measures, credible endorsements, and transparency to build user confidence. In an era dominated by the fourth industrial revolution, this research underscores the pivotal roles of trust and privacy in technology adoption. In addition, the study sheds light on users' perspectives so that AI-based technologies can be aligned effectively with the education systems of developing countries. The practical implications encompass insights for service providers, educational institutions, and policymakers, facilitating the smooth adoption of AI technologies in developing countries while emphasizing the importance of trust, privacy, and ongoing refinement.

Keywords: Artificial intelligence, UTAUT, Extended UTAUT, Behavioral intention, Academia, Technology adoption

1. Introduction

Artificial intelligence (AI), as a concept, first emerged in the 1940s and 1950s, when researchers from diverse fields, including philosophers, scientists, economists, and mathematicians, began to discuss the possibility of building an artificial human brain that could think on its own and solve problems [1]. In the 20th century, the idea of AI spread widely through science fiction. In the 21st century, however, the concept is no longer an abstract idea. Instead, it has taken form and is being used in diverse fields [[1], [2], [3], [4]]. AI has permeated nearly every facet of modern life, finding applications across diverse sectors [[5], [6], [7]] such as healthcare, business operations, and educational systems. In the education sector, the adoption of AI can be seen in personalized learning, intelligent tutoring systems, simulations and virtual reality, AI-based career counseling, and other applications [[8], [9], [10], [11], [12], [13], [14]]. Most applications of artificial intelligence in education (AIEd) are found in the academic disciplines of science, mathematics, engineering, and technology [15,16]. Prior research in AIEd suggests that a holistic and dynamic system can help in planning pedagogic strategies and in unleashing the true potential of the learning management systems (LMS) that diverse educational institutions use for teaching and learning [17,18].

Compared with developed countries, the ratio of students taking higher education is much higher in developing countries such as Bangladesh thanks to the low cost of tertiary-level education [19]. The use of AI in the education sector is most visible at higher educational institutions in developed countries, where universities are integrating diverse AI-driven facilities for students, such as virtual laboratories, AI tutoring systems, and AI-based student support [20]. However, universities in developing countries are yet to integrate AI into their educational systems because of inadequate infrastructure, insufficient finances, lack of expertise, and lack of government support [21]. Another factor discouraging universities in developing countries from integrating AI into their systems is the perception that AI is still in a nascent and experimental phase of development [19]. Because there are only a handful of collaborations between higher educational institutions and companies that provide AI technologies in developing countries, there is a gap in integrating and adopting such technologies [22,23]. Consequently, prior studies on this topic are primarily based on the context of developed countries, where most higher educational institutions have integrated AI into their education systems [20]. There also remain avenues for research on the adoption of AI technologies by universities, students, and researchers [5,24,25]. In resource-constrained environments, where countries face unique challenges in adopting new technologies, such as social or cultural perceptions, limited infrastructure, and financial constraints, AI can play a revolutionary role in improving access to information and the quality of education [8,26]. In addition, global priorities have now shifted towards equal opportunities for technology-driven learning to promote inclusive educational advancement [27,28]. 
Also, investigating AI adoption can help developing nations take proactive measures to keep pace with technological advancement and harness the potential of AI in education. This study, therefore, addresses the following research questions:

RQ1. What are the key factors that influence the behavioral intention and use behavior of AI technologies for academic purposes in developing countries like Bangladesh?

RQ2. How do trust and privacy concerns affect the adoption of AI technologies in academia within a developing country context?

RQ3. To what extent does the extended UTAUT model, incorporating trust and privacy, explain the behavioral intention and use behavior related to AI adoption in developing countries' academia?

This paper, therefore, explores the factors that shape AI users' behavioral intention to adopt AI and their current usage behavior, drawing on responses from students, academicians, teachers, and researchers. This area remains underexplored, as there are very few prior studies on the adoption of AI for academic purposes, especially in developing countries. By shedding light on the patterns and factors influencing AI adoption in this context, the research offers insights that can help AI developers, educational administrators, teachers, and policymakers design and apply AI-driven education systems that are effective for university students, faculty members, and researchers. These insights can also inform educational policies, technology integration strategies, and future developments in AI education, ultimately facilitating the advancement of technology-driven learning in developing nations. Additionally, this study may offer broader implications for the global educational landscape as AI continues to play an increasingly prominent role in modern education systems.

This research represents a novel exploration of AI adoption, as it incorporates and extends the UTAUT framework in a developing-country academic setting, contributing to the fields of educational technology and technology acceptance. UTAUT is a well-established framework that integrates different technology acceptance theories and explains key drivers of technology acceptance in different research settings. Developed by Venkatesh et al. [29], it states that four primary dimensions, namely effort expectancy, facilitating conditions, social influence, and performance expectancy, strongly affect users' behavioral intentions and use behavior. The UTAUT framework provides a robust lens through which the adoption of AI technologies can be examined [[30], [31], [32], [33], [34]]. Since AI uses personal data, it is also imperative to assess users' perceptions of the privacy and trust issues involved in adopting AI [[35], [36], [37]]. Therefore, this study provides valuable insights into the critical role played by privacy and trust in shaping users' adoption decisions, which have received limited attention in previous work. It also paves the way for future studies, advancing the understanding of technology diffusion in the educational domain, particularly in developing nations.

This paper is structured as follows: Section 2 presents a comprehensive review of the relevant literature, covering AI applications and their adoption in academia, with a focus on developing countries. Section 3 outlines the research methodology, including data collection procedures, sampling techniques, and the analytical approaches employed. Section 4 presents the results of the data analysis, validating the proposed hierarchical model. Section 5 offers an in-depth discussion of the findings, whereas Section 6 underscores the key contributions of the research and delineates future research directions to further advance knowledge in this domain. Finally, Section 7 concludes the paper by summarizing the main findings and emphasizing the significance of the study in facilitating the adoption of AI technologies in developing countries' academia.

2. Literature review

2.1. Artificial intelligence (AI)

AI is a broad term whose definitions invoke notions such as “algorithms,” “rational performance,” and “computational intelligent system.” Artificial intelligence remained a largely theoretical concept from the mid-20th century until its practical application in the 21st century [[38], [39], [40]]. With the advent of the internet, AI-mediated technologies can practically learn, reason, correct, perceive, and understand language [41]. The advancement of computer-based technology shows that computers are now used to imitate human psychology and minds to solve problems, make decisions, and think critically [27]. Present-day advances in expert systems, speech recognition, and machine learning have changed the overall landscape of AI [42]. AI technologies have diverse applications, ranging from education to supporting decision-making and creative processes [[43], [44], [45]]. In healthcare, AI has revolutionized diagnostic, treatment, and patient care services [[46], [47], [48]]. In education, it has enhanced the productivity not only of students but also of academicians [49,50]. AI could also appraise how students' capabilities are measured and developed [51].

2.2. Adoption of AI in education

According to Wang [52], AI adoption in education brings several key benefits, including (a) institutional aptitude, in which AI can extract information from numerous systems; (b) learning and instruction, wherein AI supports students and academicians with pertinent resources to flourish; and (c) student concerns, whereby AI delivers customized degree planning and provides supplementary advice and training. The use of AI is growing in almost every sector, and the educational sector is no exception, as AI opens new prospects for learning in higher education, staff support, and student selection [53,54]. At the university level, the adoption of AI is driven in large part by people's enthusiasm, and a greater aptitude for AI gives students greater potential for achievement [55,56].

In the view of Winkler-Schwartz et al. [48], AI has transformed the education system by improving learning outcomes, systematizing administrative tasks, and creating opportunities for personalizing education. AI fosters student engagement by supporting collaboration, delivering rapid feedback, and enriching study materials with collaborative elements [57,58]. AI-based applications adapt to students' and teachers' preferences and learning styles, thus developing the knowledge base [59,60]. Rahman & Watanobe [61] illustrated the benefits ChatGPT offers to researchers, educators, and learners, including interactive conversations, personalized feedback, increased accessibility, lesson preparation, evaluation, and advanced methods for teaching complex ideas. According to their research, ChatGPT also poses several threats, including the risk of cheating in online exams, the generation of human-like text that may diminish critical thinking skills, and difficulty in assessing the information ChatGPT generates. Abaddi [62] highlights the transformative potential of Generative Pretrained Transformers (GPTs) in enhancing digital entrepreneurship among students and suggests that GPTs can meaningfully influence students' intentions and perceptions regarding starting digital ventures. That study offers valuable insight for digital entrepreneurship and emphasizes the significance of integrating advanced AI technologies like GPTs into support structures and entrepreneurial education. Fuchs [63] examines the complex role of NLP (Natural Language Processing) models like Google Bard and ChatGPT in higher education, weighing both the challenges and the advantages they present. These AI tools could revolutionize learning and teaching, enabling more interactive and customized educational experiences. 
Beyond its role in advancing learning and teaching, AI is modernizing administrative tasks in educational institutions and making teaching more fulfilling by solving complex tasks, offering virtual guidance, and supporting effective engagement. Alongside these advantages, Saheb and Saheb [64] identify several significant disadvantages of AI, including privacy risks, algorithmic bias, and ethical concerns. According to Perrotta and Selwyn [65], AI has a limited understanding of individual learning needs, as it may fail to grasp the nuanced requirements of individual students. Additionally, when teachers generate their lesson plans and instructional strategies through AI-driven technology, their creativity and autonomy may be reduced [66,67]. This loss may not only demoralize educators but also weaken the quality of the learning experience. According to Baker and Hawn [68], most students use AI algorithms for their academic tasks, and there is a significant risk of algorithmic bias and unfair treatment. Sun et al. [69] show that dependency on AI may introduce the risk of technical failures such as software bugs, system crashes, and connectivity issues. Interruptions of AI-driven educational platforms may impede learning progress and activities [70,71]. The automation of the educational sector by AI-driven technology could lead to the displacement of educational professionals such as tutors, support staff, and teachers [72,73]. Extensive adoption of AI-driven systems may thus undermine the quality of education and result in loss of expertise and jobs. Moreover, the erosion of human presence in the educational sector could adversely affect learning outcomes and student engagement [68]. 
The darker side of AI that demands attention, marked by social and ethical implications, includes (a) information bias, in which AI hinders learners' ability to develop a well-rounded understanding [74,75]; (b) plagiarism, which undervalues originality and critical thinking in learning and undermines the integrity of the educational system [76]; (c) hindered social skills, because interactions occur mostly through digital interfaces rather than face-to-face communication, so that learners struggle to develop interpersonal skills such as conflict resolution, empathy, collaboration, and networking [77,78]; and (d) user privacy and data security, because students' academic records, learning behaviors, and personal information may be vulnerable to unauthorized access, breaches, or misuse, compromising their confidentiality and privacy [79].

AI offers enormous prospects for supporting governance with greater efficiency and effectiveness [80]. AI is anticipated to help researchers, administrative staff, teachers, and students in higher education [54,81]. It has been described as a better service provider than conventional teacher-centric educational systems [80]. The adoption of AI has become an emerging field in educational technology, as it provides supplementary resources and learning tools based on the provided guidelines [57]. It provides appropriate guidance and timely assistance that enable effective learning. In addition, AI delivers insight into learners' knowledge development, so that instructors can actively offer guidance and support when learners are in need [82]. Moreover, computer-based adaptive education systems support learners' intellectual and affective states, improving learning outcomes and helping learners complete academic tasks on time [82].

In almost all developing countries, a central government concern is to improve educational quality, and this might be accomplished by implementing contemporary technology like AI [83]. To expand the reach of higher education, most governments are increasing their investment in AI-based technology [83]. AI has had an immediate impact on decision-makers in academia and on AI courses with applied components covering major topics such as machine learning, planning, and data structures [84]. Educational institutions need to examine their value systems closely before introducing AI-driven systems. Although AI is gaining popularity, higher educational institutions in developing countries have still not adopted this sophisticated technology.

AI, along with cloud computing, data analytics, and blockchain, has brought the advances and changes of the fourth industrial revolution to education, and there is growing demand for innovative education and a personalized learning environment [85]. Demand in the higher education system depends on diverse issues such as adaptation, resource availability, potential benefits, and acceptance of change [86]. AI enables more meaningful real-time learning, improved outcomes, and an appropriate learning setting at a time when educational assessment is advancing more swiftly than ever before [66,87]. The use of AI in educational assessment is becoming more extensive, and learners must understand the principles behind the technology to uphold academic honesty and prevent cheating [59,88,89].

2.3. Models related to technology adoption

Prominent researchers have articulated diverse theories and models related to technology adoption, such as: Innovation Diffusion Theory (IDT), developed by Rogers in 1962 [90]; the Technology Acceptance Model (TAM), developed by Davis in 1989 [91]; the Model of PC Utilization (MPCU), proposed by Thompson, Higgins, and Howell in 1991 [92]; the Theory of Planned Behavior (TPB), introduced by Ajzen in 1991 [93], which underlies the combined TAM-TPB model; and the Unified Theory of Acceptance and Use of Technology (UTAUT), proposed by Venkatesh et al. in 2003 [29]. TAM holds that perceived ease of use and perceived usefulness are the significant predictors of the intention to use technologies. According to Ref. [94], IDT demonstrates that innovative individuals are more likely to accept new ideas and can cope with higher levels of uncertainty. By considering a wide variety of factors, including Social Factors, Complexity, Job-fit, and Long-term Consequences, the MPCU creates a framework for the study of innovation in a wide variety of application situations. In theory, the combined TAM-TPB model is able to provide precise estimates of users' intentions to adopt new technologies. Venkatesh et al. [29] emphasized understanding human acceptance behavior in different domains through four constructs: Social Influence (SI), Facilitating Condition (FC), Effort Expectancy (EE), and Performance Expectancy (PE). PE, EE, and SI are directly related to behavioral intention, whereas the final factor, FC, is directly associated with actual usage. This model is prevalent in technology acceptance research and concentrates on the technological components needed to implement information systems successfully.
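As an illustrative sketch only, the baseline UTAUT relationships described above can be written as a pair of linear structural equations. The path coefficients and error terms are notation assumed here for exposition; they are not taken from Venkatesh et al. [29], and the moderating variables of the original model are omitted.

```latex
\begin{align}
BI &= \beta_{1}\,PE + \beta_{2}\,EE + \beta_{3}\,SI + \zeta_{BI} \\
UB &= \beta_{4}\,FC + \beta_{5}\,BI + \zeta_{UB}
\end{align}
```

Here $BI$ denotes behavioral intention and $UB$ use behavior, each $\beta_{i}$ is an assumed path coefficient, and $\zeta_{BI}$ and $\zeta_{UB}$ are assumed residual terms. The first equation reflects the three constructs that act on intention; the second reflects the direct association of FC, together with intention, with actual usage.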

2.4. An extended UTAUT model

This study assesses the adoption of AI technologies for accomplishing academic tasks in developing countries. The UTAUT model explains users' intention to use an information system and their subsequent usage behavior; because of its multi-dimensional factors, it offers the highest explanatory power among the standard models and thus helps to support the technology development process. The fundamental constructs of UTAUT, namely FC, SI, EE, and PE, are treated as the direct determinants in assessing the adoption of AI technologies for academic purposes [29]. In the view of researchers such as [52,[95], [96], [97]], trust and privacy play a role in shaping users’ behavior in a data-driven economy. As technology continues to advance and the collection and analysis of personal data become more pervasive, individuals have become increasingly aware of the importance of safeguarding their privacy and ensuring that their data is handled responsibly [98,99].

Performance Expectancy (PE) is defined as the degree to which potential users believe that using a new system will help them substantially improve their existing performance [100]. PE is a strong predictor of Behavioral Intention (BI) in technology acceptance. It is considered an essential component that affects users’ intention to use a technology [101]. Attitude plays a fundamental part and is expected to be affected by PE, which is considered a specific characteristic affecting the behavior of adopting AI [102]. AI technology helps to simplify the collective learning process and facilitates team-based learning activities [103,104]. AI provides continuous assessment and immediate feedback to improve learning effectiveness and teaching progress [67,105]. Moreover, individuals are more likely to learn a technology rapidly if they consider it convenient in their daily lives [54]. The integration of AI technologies in educational settings is predicated on the belief that AI tools may significantly enhance administrative efficiency, learning outcomes, and teaching methodology [106]. In the setting of AI in education, PE manifests through numerous avenues: improved accessibility of educational resources [107]; personalized learning that adapts to specific student needs [108]; and teaching strategies informed by data-driven insights [109,110]. Additionally, the ability of AI to automate administrative work promises to streamline processes and operations and presents a clear performance advantage to institutional stakeholders [111]. Nevertheless, the assumption of a straightforward path from PE to AI adoption overlooks several critical determinants. The expected performance benefits of AI may not be realized equitably across different socio-economic groups and regions, as the digital divide presents a noteworthy challenge [112]. 
Technological determinism fails to account for the institutional and socio-cultural barriers that might obstruct technology integration. The ethical considerations and privacy concerns surrounding AI use [113], along with the fear of fostering an overreliance on technology at the expense of critical thinking skills [114], further complicate the narrative supporting unbridled AI adoption in education. Addressing these apprehensions necessitates a balanced approach to AI integration in academic environments. Initiatives that provide equitable access to digital literacy and AI resources can help narrow the digital divide and ensure that the benefits of AI are widely accessible. Therefore, we may state our first hypothesis as follows:

H1

PE significantly and positively impacts the intention to adopt AI for Academic Purposes

Effort Expectancy (EE) denotes the degree of ease associated with using a new system [91]. The constructs of complexity and perceived ease of use found in other models capture concepts similar to EE [115]. The perception of EE is similar to the notion of ease of use in Diffusion of Innovation Theory [116,117]. The usage of technology relies mostly on the characteristics of individual behavior [102]. Because AI adoption from an academic perspective requires certain skills and knowledge levels, EE plays a decisive role in determining users' intent to use such technologies. Previous studies have shown that EE is crucial since it helps people determine how much work is involved in using a given piece of technology [118,119]. The positive impact of effort expectancy indicates that users are more inclined to embrace technologies that are easy to learn and user-friendly [120]. In academic settings, both students and educators are more likely to accept AI tools that integrate seamlessly into prevailing workflows and minimize disruptions [89,121]. AI tools offer straightforward functionality and intuitive interfaces that help to enhance learning and teaching experiences, thus encouraging their adoption [51]. Additionally, AI technology that requires minimal effort to use and is easily accessible can significantly benefit users with varying levels of tech-savviness [122]. This inclusive nature helps to extend the potential user base within educational institutions, driving higher acceptance rates [123]. Critics argue that concentrating heavily on EE may overshadow other critical factors such as ethical considerations, cost, and pedagogical effectiveness [124]. This standpoint suggests that ease of use alone might not be adequate to drive the intention to adopt AI in academic contexts [125,126]. 
According to Biesta [114], there is a concern that prioritizing ease of use may lead to the simplification of AI tools, potentially limiting their capabilities and the depth of the educational experiences they offer. To reconcile these arguments and counterarguments, it is necessary to recognize that while EE is a critical determinant of AI adoption in educational settings, it operates within a multifaceted ecosystem of influencing factors. Hence, we may assume,

H2

EE positively impacts the intention to adopt AI for Academic Purposes

Social influence (SI) is defined as the degree to which an individual perceives that important others believe he or she should adopt the new system [100]. A range of studies has shown the impact of SI on behavioral acceptance at the individual level [127]. Social influence is comparable with the social factors, image, and subjective norm constructs used in TPB, TRA, IDT, and MPCU, in that each reflects how individuals adjust their behavior to how they believe others perceive them. Individuals use technology not only out of personal preference but also to meet compliance requirements [115]. SI concerns the views of influential individuals and of students regarding the use of technology, as well as the social atmosphere, all of which have a major impact on individual activities [128]. Within academic settings, the collective endorsement or rejection of new technologies by peers might create a normative pressure that influences individual behaviors and attitudes towards AI adoption [129]. This effect is especially pronounced in environments that value collective decision-making and conformity. Support and advocacy from authority figures such as department heads, educators, and academic assessors play a crucial role in shaping faculty members' and learners' willingness to engage with AI tools [130,131]. Their encouragement may signal the applicability and value of AI in enhancing educational outcomes, thus motivating adoption [66]. According to social learning theory, individuals learn not only through direct experience but also by observing the behavior of others and its outcomes [132]. In the context of AI in educational settings, witnessing a successful implementation by influential figures may significantly affect an individual's intention to adopt similar technologies [77]. Critics may argue that an overemphasis on SI risks diminishing the importance of individual agency and the critical evaluation of AI technologies [133]. 
This might lead to a situation where individuals adopt AI tools based on authority directives or peer pressure rather than an informed assessment of their benefits and drawbacks [134]. According to Flanagin and Metzger [134], the effect of SI in more individualistic academic settings might be weaker than in collectivistic settings, where communal consensus plays a more vital role in decision-making procedures [135]. SI is thus considered an important determinant of behavioral intention, capturing the external influence on individuals' perceptions of adopting AI technologies for educational purposes. Therefore,

H3

SI positively impacts the intention to adopt AI for Academic Purposes

Facilitating conditions (FC) refer to the degree to which users believe that the organizational and technical infrastructure needed to support use of the new system is available [100]. FC determines the acceptance and use of innovative technology [47]. This factor is critical in influencing user behavior towards technology [102,136]. Delivering introductory instruction to users and improving the quality of the technical infrastructure involved in adopting new technology both fall under FC and may help users understand the system clearly. Dwivedi et al. [136] show how FC affects AI-specific perspectives in tailored technical infrastructure. According to Blau and Shamir-Inbal [137], studies in the context of educational technology have shown that FC, such as access to AI tools and technical support, significantly enhances educators’ and students' ability and willingness to integrate AI into academic activities. Their study indicates that FC not only removes barriers to technology but also positively affects attitudes towards its utilization. When users believe that they have the necessary support and resources, they are more likely to experiment with AI functionalities, leading to a deeper understanding and more innovative applications in educational contexts [66,138]. Critics argue that an overemphasis on FC may lead to over-reliance on technological support [139]. Such over-reliance could stifle the development of independent critical thinking and problem-solving skills among educators and students. The assumption that FC affects user behavior uniformly across diverse demographics overlooks potential disparities in access to technology [140]. Factors such as geographic location, socioeconomic status, and institutional resources may create a digital divide and affect the equitable use of AI for academic purposes [141]. 
Even so, providing users with sufficient resources, assistance, and time could encourage them to adopt AI-based programs. Hence,

H4

FC significantly and positively impacts the use behavior (UB) of AI for Academic Purposes

The term “Trust” has been defined as the assurance users gain in the ability of an AI-based learning system to provide an efficient and reliable service [95,98]. Studies of educational technology adoption have demonstrated that trust in AI systems, encompassing trust in their fairness, reliability, and accuracy, meaningfully predicts students' and educators' intention to incorporate AI into the learning and teaching process [142,143]. This suggests that trust may act as a crucial facilitator in the initial adoption phases. Trust (TR) in AI also incorporates trust in data security and privacy measures [95,144]. With growing awareness of privacy concerns and data breaches, the secure handling of sensitive and personal information is paramount for AI adoption in education [145,146]. The use of AI is considered controversial [147] and antagonistic [148] in many decision-making instances. Critics argue that blind trust in AI technology may overshadow critical assessment and lead to the adoption of tools that might not be beneficial or suitable for educational purposes [149,150]. At the same time, in educational environments where AI tools are perceived as reliable, transparent, and ethically designed, there is a higher possibility of those tools being integrated into daily academic activities, emphasizing the positive impact of trust on use behavior [151,152]. On the contrary, an overemphasis on AI might lead to reliance on automated processes and reduced human interaction, possibly detracting from the educational experience, which thrives on critical thinking and personal engagement [153]. Despite the accuracy of algorithms, prominent practitioners and researchers have observed people's unwillingness to use them [154]. A lack of trust in AI-based technologies [95] and poor comprehension of how to use AI-based programs discourage people from using AI. 
To gain trust, the AI service provider should propose higher quality services by implementing them securely and effectively [155]. On the contrary, lack of trust might lead to an intensification in the amount of resistance to use AI based services [95]. The trust factor might meaningfully contribute to AI usage and acceptance success. Trust has a substantial influence in not only usage behavior (UB) but also on Performance Expectance (PE) [156]. We can predict,

H5

TR positively impacts the intention to adopt AI for Academic Purposes

H6

TR positively impacts the use behavior (UB) of AI for Academic Purposes

AI in education relies heavily on data that are often sensitive and personal. Privacy (PV) concerns arise from misuse of personal information (PI), data breaches, and unauthorized access to confidential data [99,157]. The algorithms used in AI might hinder adoption and trust and lead to unintended violations of privacy, predominantly when they are manipulated or misused [158]. Although AI can outperform human co-workers, algorithm aversion and privacy concerns discourage users from accepting intelligent systems in professional settings [154]. Studies have found that in educational settings, where sensitive data about teachers and students are often processed, the assurance of robust privacy measures significantly boosts the willingness to adopt AI technologies [159]. On the other hand, even users with strong privacy concerns might still adopt AI technologies when perceived benefits outweigh perceived risks [160,161]. According to Liu and Tao [162], individuals' sensitivity to privacy may vary widely, and this variability may influence the relationship between privacy concerns and the intention to adopt AI. What one individual regards as adequate privacy protection may matter little to another, which complicates the generalizability of this study. For the continued use of AI tools in educational settings, sustained trust in privacy protection is paramount [144]. Users are more likely to continue using AI tools when they believe that their privacy is continually protected and respected [163]. According to Martin and Murphy [164], in the competitive market for educational technologies, AI solution providers that offer superior privacy protection not only attract a larger user base but also retain it over time. Critics argue that implementing comprehensive privacy protection in AI systems is technically complex and costly, which may hinder their widespread use and adoption [165].
Furthermore, excessively stringent privacy measures might restrict the effectiveness and functionality of AI tools, thus negatively affecting UB [166]. An AI-based system is only effective when users are enthusiastic about it and have confidence in it [167]. This confidence can be built on data security and privacy. For academic purposes, the increasing role of AI brings forth both promise and concern [99]. AI has the potential to revolutionize education by personalizing learning experiences, automating administrative tasks, and offering valuable insights into student performance [96]. However, these benefits are intricately tied to sensitive personal data, which, if mishandled, can lead to privacy breaches, identity theft, and algorithmic bias [157]. From the user's perspective, students could be deterred from using AI if they perceive that their personal data are not properly handled by the service provider. Thus, we develop the following two hypotheses:

H7

PV positively impacts the intention to adopt AI for Academic Purposes

H8

PV positively impacts the use behavior (UB) of AI for Academic Purposes

According to Ref. [100], "Behavioral Intention" (BI) refers to a user's plan to incorporate a new technology into their daily activities. Research across various educational technologies has consistently shown a positive relationship between the intention to use a technology and its actual usage [168,169]. BI may fluctuate over time due to new information, evolving perceptions, and changes in the educational environment [72]. As a result, initial positive intentions towards AI for academic purposes may not necessarily lead to sustained UB if the underlying conditions and attitudes change [170]. The progression from intention to behavior is therefore not always straightforward: changing intentions, the influence of external factors, and the intention-behavior gap represent a significant challenge to this linear relationship [171]. In the UTAUT model, PE, EE, and SI impact BI, whereas FC is the only one of these determinants that directly affects actual use of the technology; the others affect use indirectly, through BI. The final hypothesis of this study is stated as follows:

H9

Behavioral Intention (BI) positively impacts the use behavior (UB) of AI for Academic Purposes

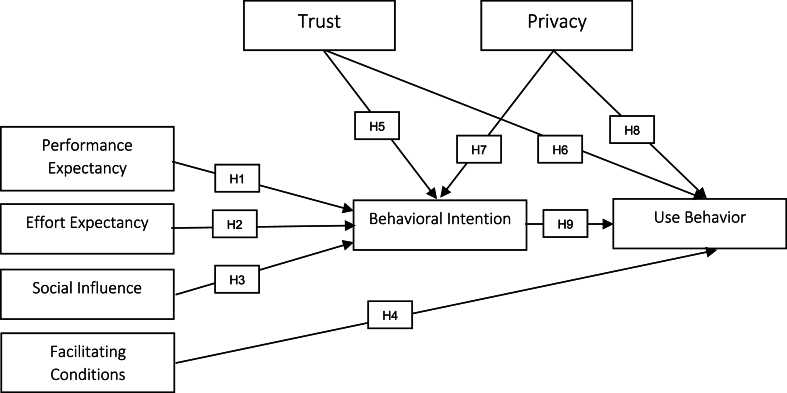

Therefore, based on the hypotheses formulated above, to evaluate the acceptance behavior of AI technology, the UTAUT model is used as the theoretical framework with an extended version comprising two new variables as shown in Fig. 1.

Fig. 1.

Conceptual framework.

3. Methodology

Holding a positivist philosophy, this study tries to explain the associated factors of AI adoption in developing countries. Therefore, the results can be generalized in similar contexts [172]. Since all the items are collected from relevant theories and literature, this study follows a deductive approach to theory development [173].

In terms of survey instrument, a questionnaire, composed in English, was developed utilizing a five-point Likert scale stretching from strongly disagree (1) to strongly agree (5). We have utilized subjective measurements, a practice widely recognized and accepted in the field of behavioral research [174,175]. It is worth noting that there were two screening questions to ascertain: (i) whether the respondents engaged with AI technologies for academic purposes and (ii) whether they had utilized these technologies within the preceding six months. To ensure the questionnaire's robustness, a preliminary study was conducted involving 30 graduate students. This pretest revealed that certain components within the original UTAUT model—precisely, one item each from FC and SI—were not congruent with the local context and were subsequently excluded. This is due to the nature of the original item and the context at hand. For instance, the FC item stating, ‘A specific person (or group) is available for assistance with system difficulties,’ and the SI item, ‘In general, the organization has supported the use of the system,’ were deemed irrelevant. This study focuses on individual user levels rather than organizational support or resources, highlighting the need for adjustments to ensure the questionnaire's relevance to the targeted user experience.

However, for the measurement of EE, BI, PE, and UB, all remaining items of the UTAUT model were retained and employed. The comprehensive questionnaire, comprising 27 questions, is outlined in Table 1. In addition, elements concerning trust and privacy were adapted and refined from established sources: [176,177], respectively.

Table 1.

Measurement items.

| Constructs | Code | Items | Sources |
|---|---|---|---|
| Performance Expectancy | PE1 | I find AI technologies useful in my academic work. | [100] |
| | PE2 | Using AI technologies enables me to accomplish tasks more quickly. | [100] |
| | PE3 | Using AI technologies increases my productivity. | [100] |
| | PE4 | If I use AI technologies, I believe it will enhance my academic performance. | [100] |
| Effort Expectancy | EE1 | My interaction with AI technologies is clear and understandable. | [100] |
| | EE2 | It would be easy for me to become skillful at using AI technologies. | [100] |
| | EE3 | I find AI technologies easy to use. | [100] |
| | EE4 | Learning to operate AI technologies is easy for me. | [100] |
| Social Influence | SI1 | People who influence my behavior think that I should use AI technologies. | [100] |
| | SI2 | People who are vital to me suggest that I should use AI technologies for some crucial academic and nonacademic tasks. | [100] |
| | SI3 | My classmates and coworkers have helped encourage me to use AI technologies. | [100] |
| Facilitating Condition | FC1 | I have the resources (e.g., phone or computer) to use AI technologies. | [100] |
| | FC2 | I know that it is necessary to use AI technologies. | [100] |
| | FC3 | The AI technologies are compatible with other systems I use. | [100] |
| Trust | TR1 | I trust that the AI technologies will function as promised. | [176] |
| | TR2 | AI technologies appear to be trustworthy. | [176] |
| | TR3 | I trust AI keeps customers' best interests in mind. | [176] |
| Privacy | PV1 | I feel that AI technologies respect my privacy. | [177] |
| | PV2 | AI technologies do not collect more information than they need. | [177] |
| | PV3 | AI technologies protect and do not disclose my personal information to other parties. | [177] |
| Behavioral Intention | BI1 | I intend to use AI technologies in the next six months. | [100] |
| | BI2 | I predict I will use AI technologies in the next six months. | [100] |
| | BI3 | I plan to use AI technologies in the next six months. | [100] |
| Use Behavior | UB1 | Using AI technologies is a good idea. | [100] |
| | UB2 | The AI technologies make work more enjoyable. | [100] |
| | UB3 | Working with AI technologies is fun. | [100] |
| | UB4 | I like working with AI technologies. | [100] |

We used G*Power (version 3.1.9.7) to determine the sample size. With a conventional medium effect size [178] of f² = 0.15, α = 0.05, power = 0.80, and six predictors, the minimum sample would have been 98. However, to ensure robustness in prediction, f² = 0.05 was specified, which increased the minimum sample size to 279.
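For reference, the quantities behind this a priori calculation can be written out as follows. This is a sketch that assumes G*Power's procedure for the overall F test in linear multiple regression (fixed model, R² deviation from zero) was used; the text does not name the procedure, and the symbols u, v, and λ are introduced here only for illustration.

```latex
f^2 = \frac{R^2}{1 - R^2}, \qquad
\lambda = f^2 N, \qquad
u = 6, \qquad
v = N - u - 1, \qquad
1 - \beta = \Pr\!\left[ F'_{u,\,v}(\lambda) > F_{1-\alpha;\,u,\,v} \right] \ge 0.80
```

Here F' denotes the noncentral F distribution with noncentrality parameter λ, and the required sample is the smallest N that satisfies the inequality at α = 0.05. Because λ grows in proportion to f², specifying a smaller effect size (0.05 instead of 0.15) requires a larger N to reach the same power, which is consistent with the increase from 98 to 279 reported above.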

The data collection process commenced in September 2023 and concluded in November 2023. The questionnaire was sent to 400 individuals, of whom 330 responded, giving a response rate of 83 %. However, due to incomplete information, 20 responses were removed. This study, therefore, includes responses from 310 valid respondents, including students, teachers, and researchers affiliated with various universities across Bangladesh. Data were collected through a cross-sectional online questionnaire survey using Google Forms. The decision to finalize the sample size at 310 respondents was influenced by the study of Budiarani and Nugroho [179] to ensure reliability and validity of our findings.

For any research, it is necessary to acknowledge and mitigate potential biases to make the findings valid and reliable [180]. In our study, we used existing scales, as mentioned above, to ensure clarity, relevance, and unbiasedness. We pretested the instrument on a sample akin to the population before beginning the final data collection. We ensured the confidentiality and anonymity of responses to reduce social desirability bias, which arises when respondents answer in a manner they perceive as more socially acceptable rather than truthfully [181,182]. We standardized the data collection procedure to minimize interviewer bias. All participants received the same instructions and survey instrument, which was administered in a consistent manner. This standardization helped to ensure that differences in responses were due to actual differences in perceptions and experiences rather than variations in the data collection process [182]. We also considered the cultural context of the study and ensured the appropriateness of the items to avoid misunderstanding or offense. In addition, we translated and back-translated the questionnaire to ensure its comprehensibility and accuracy. Moreover, we used Harman's single factor test and found no evidence of a single factor accounting for the majority of the variance, indicating that common method bias was not a significant concern [183].

After the successful collection of data, the subsequent stages of data cleaning and coding were meticulously conducted using Microsoft Excel. In the subsequent analytical phase, SmartPLS 4.0 was used to validate the research models. SmartPLS is a robust analytical software that can handle multiple dependent and independent variables regardless of the sample size. Given the nature of our research model, it was required to conduct a multivariate analysis and SmartPLS 4.0 stood out for its adeptness in partial least squares structural equation modeling (PLS-SEM). Moreover, the software's user-friendly interface and comprehensive output significantly contributed to its selection, ensuring that the analysis could be conducted with a high degree of precision and clarity, facilitating the derivation of meaningful conclusions relevant to the research questions and data characteristics. We employed SmartPLS 4.0 for validating both the measurement and structural models. Before testing the hypothesized relationships between the exogenous and endogenous variables, we first evaluated reliability metrics including VIF (Variance Inflation Factor), Cronbach's Alpha, and Composite Reliability, alongside measures of convergent and discriminant validity. Following this preliminary assessment, the structural model was rigorously examined, allowing us to confirm the hypothesized relationships.

4. Results

4.1. Respondents’ demographic characteristics

In this research, 310 respondents completed a valid questionnaire on AI adoption for academic purposes in a developing country. Among the respondents, 59 % were male and the remaining 41 % were female (Table 2). Regarding age, the largest share of participants were aged 18–26 years (61 %), while the 27–34, 35–42, 43–50, and over-50 age groups accounted for 18 %, 11 %, 8 %, and 2 %, respectively. Regarding occupation, 190 respondents were students (61 % of the total sample), and the remaining 39 % were teachers and researchers. Analysis of the duration of AI technology use revealed that the largest group of respondents (31 %) had used AI technology for six months to one year, indicating that they have substantial knowledge of and interaction with AI to use it for diverse academic purposes. Regarding AI services, respondents could report more than one service; ChatGPT was the most frequently reported (275 respondents, 29 % of all reported selections), followed by Grammarly (177 respondents, 19 %).

Table 2.

Respondents’ demographic characteristics.

| Variable | Categories | N | % |
|---|---|---|---|
| Gender | Male | 184 | 59 % |
| | Female | 126 | 41 % |
| | Total | 310 | 100 % |
| Age Group | 18–26 | 190 | 61 % |
| | 27–34 | 57 | 18 % |
| | 35–42 | 35 | 11 % |
| | 43–50 | 23 | 8 % |
| | More than 50 | 5 | 2 % |
| | Grand Total | 310 | 100 % |
| Occupation | Student | 190 | 61 % |
| | Teaching/Research | 120 | 39 % |
| | Total | 310 | 100 % |
| Duration of AI Technology Use | Six months - 1 year | 96 | 31 % |
| | Less than six months | 63 | 20 % |
| | 1–2 years | 58 | 19 % |
| | More than three years | 53 | 17 % |
| | 2–3 years | 40 | 13 % |
| | Grand Total | 310 | 100 % |
| Services Used | Google Assistant | 163 | 17 % |
| | Siri | 35 | 4 % |
| | Grammarly | 177 | 19 % |
| | ChatGPT | 275 | 29 % |
| | Quillbot | 176 | 18 % |
| | Gemini | 73 | 8 % |
| | Midjourney AI | 30 | 3 % |
| | Tome AI | 23 | 2 % |
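The "Services Used" percentages in Table 2 do not sum against the 310 respondents, since each respondent could report several services. The short sketch below recomputes them under the assumption (inferred from the reported figures, not stated in the text) that the base is the total number of service selections; the variable and function names are illustrative.

```python
# Counts copied from Table 2. The percentage base is an assumption
# inferred from the reported figures, not stated by the authors.
N_RESPONDENTS = 310

services = {
    "Google Assistant": 163,
    "Siri": 35,
    "Grammarly": 177,
    "ChatGPT": 275,
    "Quillbot": 176,
    "Gemini": 73,
    "Midjourney AI": 30,
    "Tome AI": 23,
}

# Total number of selections across all services (multi-response item).
total_selections = sum(services.values())


def pct(count, base):
    """Percentage of `base`, rounded to the nearest whole number."""
    return round(100 * count / base)


# Share of all selections (matches the % column of Table 2).
share_of_selections = {k: pct(v, total_selections) for k, v in services.items()}

# Share of the 310 respondents who reported each service.
share_of_respondents = {k: pct(v, N_RESPONDENTS) for k, v in services.items()}

for name in services:
    print(f"{name}: {share_of_selections[name]} % of selections, "
          f"{share_of_respondents[name]} % of respondents")
```

Read this way, the 29 % reported for ChatGPT is its share of all selections, while 275 of the 310 respondents reported using it.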

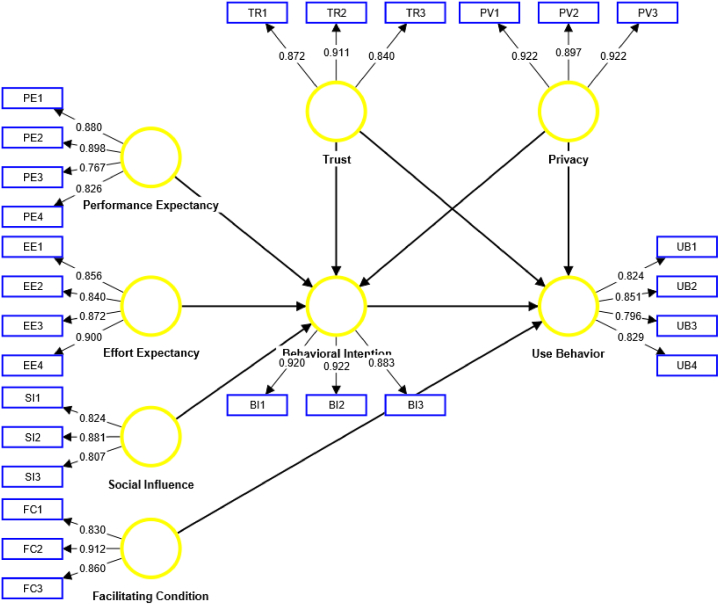

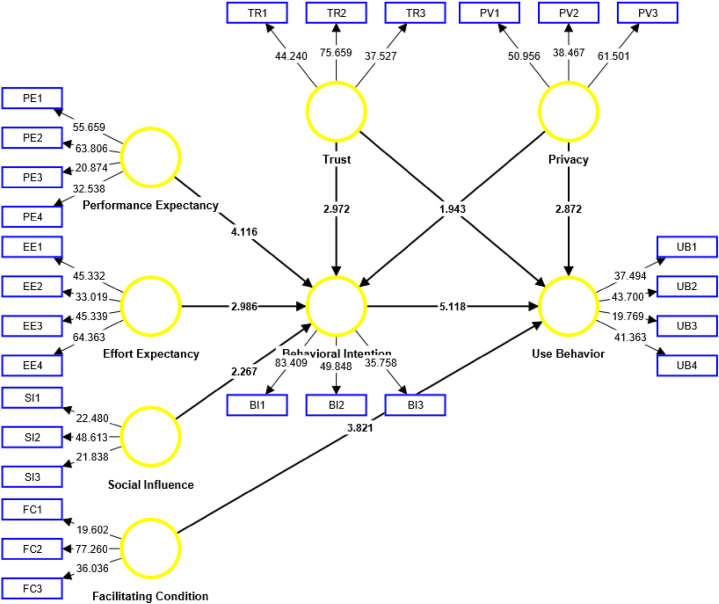

4.2. Measurement model

The study's measurement model was analyzed before the relationships among the variables were estimated. As illustrated in Fig. 2, the loadings of all items on their respective constructs are above the threshold of 0.7. Several questions were simplified based on the pretest results, and some items were eliminated at the pretest stage because their loadings were very poor. The VIF of every item ranges between 1.574 and 2.095, below the cutoff value of 5.0 [184,185].

Fig. 2.

Extended UTAUT measurement model.

4.2.1. Reliability and validity of constructs

Reliability measures the consistency of the items used to measure a construct [172]. It also indicates that the items are relatively free of random error. This study considers two measures of construct reliability: Cronbach's alpha and composite reliability. As recommended by Holmes-Smith et al. [186] and Hair et al. [184], the cutoff value for both measures is 0.70. All the constructs of this study satisfy this requirement, as Cronbach's alpha ranges between 0.788 and 0.907. Likewise, the composite reliabilities of all the constructs are between 0.876 and 0.938 (Table 3).

Table 3.

Reliability statistics.

| Construct | Composite reliability | Cronbach's alpha | AVE |
|---|---|---|---|
| Behavioral Intention | 0.934 | 0.894 | 0.825 |
| Effort Expectancy | 0.924 | 0.890 | 0.752 |
| Facilitating Condition | 0.901 | 0.836 | 0.753 |
| Performance Expectancy | 0.908 | 0.866 | 0.713 |
| Privacy | 0.938 | 0.901 | 0.835 |
| Social Influence | 0.876 | 0.788 | 0.702 |
| Trust | 0.907 | 0.846 | 0.765 |
| Use Behavior | 0.895 | 0.844 | 0.681 |
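To make the composite reliability and AVE columns of Table 3 concrete, the following minimal sketch shows how both statistics are commonly derived from standardized outer loadings. The loadings used are hypothetical, since the item-level loadings appear only in Fig. 2, and the function names are illustrative rather than part of SmartPLS.

```python
def composite_reliability(loadings):
    """Composite reliability from standardized outer loadings.

    Uses the common formula (sum of loadings)^2 divided by itself plus
    the summed error variances (1 - loading^2).
    """
    squared_sum = sum(loadings) ** 2
    error_variance = sum(1 - l ** 2 for l in loadings)
    return squared_sum / (squared_sum + error_variance)


def average_variance_extracted(loadings):
    """AVE: mean of the squared standardized loadings."""
    return sum(l ** 2 for l in loadings) / len(loadings)


# Hypothetical loadings for a three-item construct (not the study's data).
hypothetical_loadings = [0.90, 0.90, 0.90]

cr = composite_reliability(hypothetical_loadings)
ave = average_variance_extracted(hypothetical_loadings)

print(f"CR = {cr:.3f}, AVE = {ave:.3f}")
print("CR above 0.70:", cr > 0.70)
print("AVE above 0.50:", ave > 0.50)
```

Cronbach's alpha is not included because it requires the raw item responses rather than the loadings.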

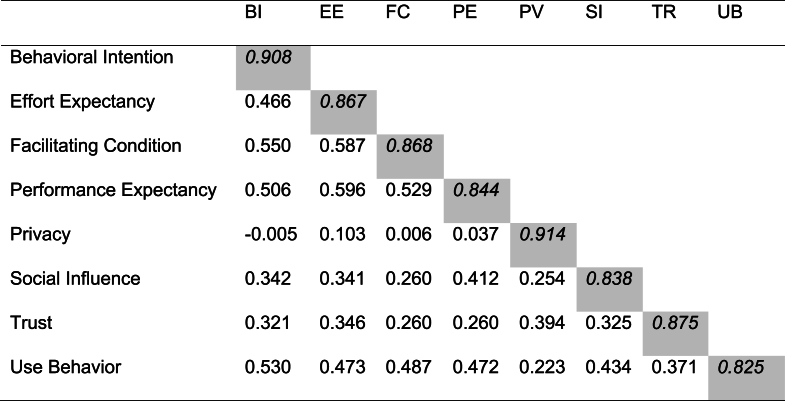

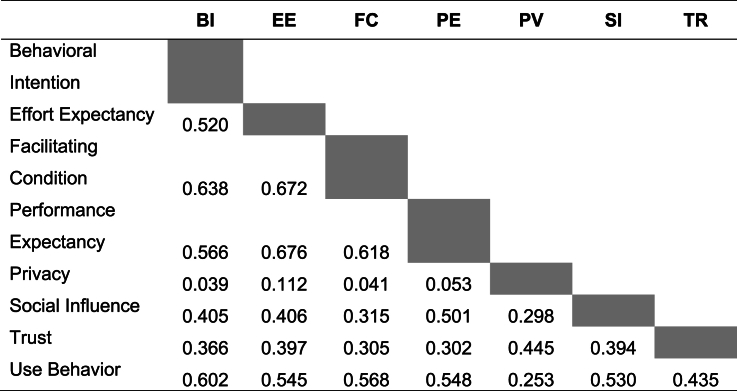

In terms of validity, this study considers both discriminant and convergent validity. The term “convergent validity” describes the level of agreement among several measures of the same concept. This implies that findings from several approaches to measuring the same construct should be consistent. Hair et al. [187] suggested Average Variance Extracted (AVE) as an influential determinant of convergent validity, for which the value should be higher than 0.5. As Table 3 illustrates, the AVEs of all the constructs are between 0.681 and 0.835. Discriminant validity, in contrast, pertains to how measurements related to distinct constructs remain separate and do not overlap in a research context [172,188]. It ensures that a concept does not correlate too strongly with different, unrelated constructs [185]. Comparing correlation estimates with the square root of AVE is an effective method to assess this measure. As Fornell and Larcker [189] mentioned, “if the square root of the AVE for any given construct is larger than the correlations involving that construct, discriminant validity is established”. In Table 4, no correlation between constructs is above 0.85, and the square roots of the AVEs exceed all the corresponding correlations; this study therefore confirms discriminant validity.
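The Fornell-Larcker comparison described above can be sketched as follows. The AVE values are taken from Table 3; the inter-construct correlations passed to the check are hypothetical, because the correlation matrix of Table 4 is not reproduced in the text, and the helper name `fornell_larcker_ok` is illustrative.

```python
import math

# AVE values as reported in Table 3.
ave = {
    "Behavioral Intention": 0.825,
    "Effort Expectancy": 0.752,
    "Facilitating Condition": 0.753,
    "Performance Expectancy": 0.713,
    "Privacy": 0.835,
    "Social Influence": 0.702,
    "Trust": 0.765,
    "Use Behavior": 0.681,
}

# Square root of AVE: the diagonal of a Fornell-Larcker table.
sqrt_ave = {construct: math.sqrt(value) for construct, value in ave.items()}


def fornell_larcker_ok(construct_a, construct_b, correlation):
    """True if the correlation is below the square root of both AVEs."""
    return abs(correlation) < min(sqrt_ave[construct_a], sqrt_ave[construct_b])


for construct, value in sqrt_ave.items():
    print(f"{construct}: sqrt(AVE) = {value:.3f}")

# Hypothetical correlations, for illustration only (not from Table 4).
print(fornell_larcker_ok("Trust", "Privacy", 0.60))
print(fornell_larcker_ok("Trust", "Privacy", 0.90))
```

The smallest square root of AVE belongs to Use Behavior, so any correlation involving that construct faces the strictest bound.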

Table 4.

Discriminant validity (Fornell-Larcker criterion).

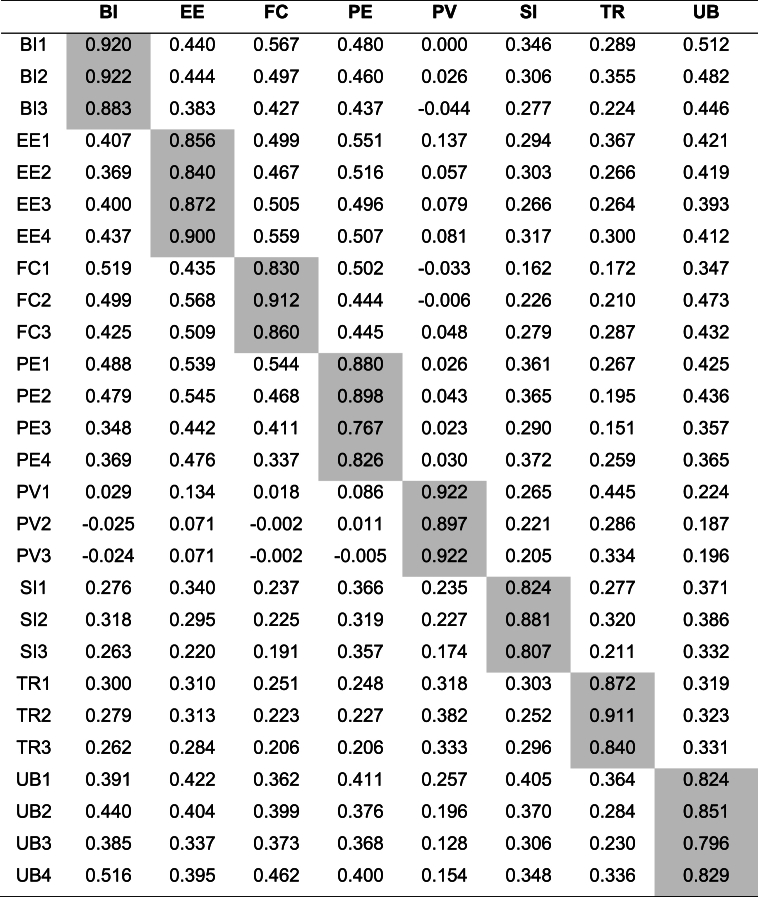

This study also considers the cross-loading statistics, as it is suggested that each item's loading on its own construct should be higher than its loadings on all other constructs [190]. In Table 5, all items load higher on their own constructs than on any other construct, which confirms discriminant validity.

Table 5.

Cross-loading.

The simulation study of Henseler et al. [191] shows that the Fornell-Larcker criterion, to some extent, fails to determine the discriminant validity of a measurement model. They therefore suggested the HTMT (Heterotrait-Monotrait) ratio as a useful measure for assessing the discriminant validity of a model. Table 6 reveals that the HTMT ratios of all the constructs are below the recommended threshold of 0.85. A bootstrapping test with 5000 subsamples (one-tailed, 5 % significance level) further confirmed that the ratios remained below 0.85, thereby ensuring sufficient discriminant validity among the constructs.

Table 6.

HTMT ratio.

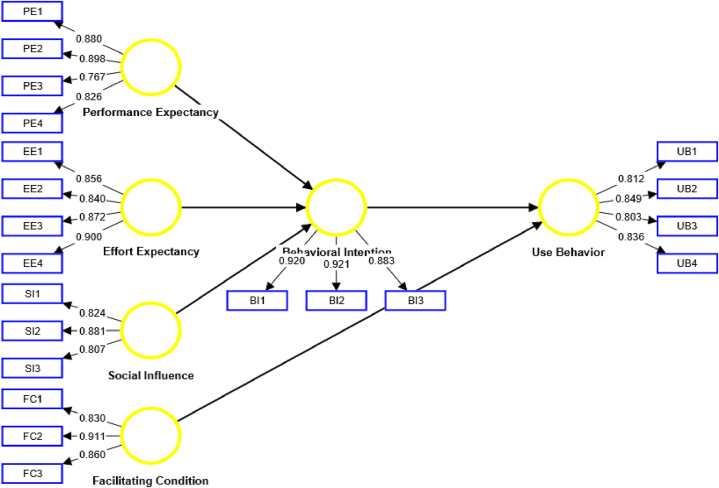
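As an illustration of how an HTMT ratio is formed for one pair of constructs, the sketch below averages the between-construct item correlations and divides by the geometric mean of the average within-construct item correlations. The item correlations are hypothetical (the study's item-level correlation matrix is not given in the text), each construct is cut down to two items for brevity, and the function names are illustrative.

```python
from itertools import combinations
from math import sqrt


def mean(values):
    """Arithmetic mean of an iterable of numbers."""
    values = list(values)
    return sum(values) / len(values)


def htmt(corr, items_a, items_b):
    """HTMT ratio for two constructs.

    `corr` maps frozenset({item_1, item_2}) to the item correlation.
    """
    # Heterotrait-heteromethod: correlations across the two constructs.
    hetero = mean(corr[frozenset((a, b))] for a in items_a for b in items_b)
    # Monotrait-heteromethod: correlations among items of one construct.
    mono_a = mean(corr[frozenset(pair)] for pair in combinations(items_a, 2))
    mono_b = mean(corr[frozenset(pair)] for pair in combinations(items_b, 2))
    return hetero / sqrt(mono_a * mono_b)


# Hypothetical item correlations, for illustration only.
trust_items = ["TR1", "TR2"]
privacy_items = ["PV1", "PV2"]
corr = {
    frozenset(("TR1", "TR2")): 0.64,
    frozenset(("PV1", "PV2")): 0.81,
    frozenset(("TR1", "PV1")): 0.36,
    frozenset(("TR1", "PV2")): 0.36,
    frozenset(("TR2", "PV1")): 0.36,
    frozenset(("TR2", "PV2")): 0.36,
}

ratio = htmt(corr, trust_items, privacy_items)
print(f"HTMT = {ratio:.2f}, below 0.85: {ratio < 0.85}")
```

With these illustrative numbers the ratio is about 0.50, comfortably under the 0.85 threshold used above.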

4.3. Structural model

Hair et al. [187] suggested using 5,000 bootstrap subsamples to determine the statistical relationships among the exogenous and endogenous variables. Fig. 3 presents the PLS-SEM results, in which both the outer and inner models show the t-values.

Fig. 3.

PLS-SEM results.

Table 7 reveals significant insights into the relationships between various factors. Behavioral intention is significantly predicted by three factors taken from the original UTAUT model: social influence (β = 0.130, p = 0.023), effort expectancy (β = 0.197, p = 0.003), and performance expectancy (β = 0.291, p < 0.001). Likewise, trust is significant (β = 0.192, p = 0.003) in predicting the behavioral intention of AI users. In contrast, privacy has a significant negative relationship with behavioral intention (β = −0.145, p = 0.008). The negative relationship might suggest that privacy concerns can deter individuals from intending to use AI technologies, a valuable insight for developers and educators. Regarding the R² value, these five variables explain 34.6 % of the variance in the behavioral intention of AI users for academic purposes.

Table 7.

Hypotheses testing result.

| Path | Estimates | T statistics | P values | Result |
|---|---|---|---|---|
| Privacy -> Use Behavior | 0.175 | 2.872 | 0.004 | Accepted |
| Facilitating Condition -> Use Behavior | 0.263 | 3.821 | 0.000 | Accepted |
| Trust -> Behavioral Intention | 0.192 | 2.972 | 0.003 | Accepted |
| Performance Expectancy -> Behavioral Intention | 0.291 | 4.116 | 0.000 | Accepted |
| Trust -> Use Behavior | 0.123 | 1.943 | 0.052 | Rejected |
| Privacy -> Behavioral Intention | −0.145 | 2.666 | 0.008 | Rejected |
| Behavioral Intention -> Use Behavior | 0.346 | 5.118 | 0.000 | Accepted |
| Social Influence -> Behavioral Intention | 0.130 | 2.267 | 0.023 | Accepted |
| Effort Expectancy -> Behavioral Intention | 0.197 | 2.986 | 0.003 | Accepted |
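The link between the T statistics and P values columns of Table 7 can be checked with a short calculation. The sketch below assumes a two-tailed test and a standard normal approximation to the bootstrap t distribution (reasonable with 5,000 subsamples, though the exact reference distribution used by the software is not stated in the text); the function name is illustrative.

```python
from math import erfc, sqrt


def two_tailed_p(t_stat):
    """Two-tailed p-value under a standard normal approximation."""
    return erfc(abs(t_stat) / sqrt(2))


# T statistics copied from Table 7.
t_statistics = {
    "Privacy -> Use Behavior": 2.872,
    "Facilitating Condition -> Use Behavior": 3.821,
    "Trust -> Behavioral Intention": 2.972,
    "Performance Expectancy -> Behavioral Intention": 4.116,
    "Trust -> Use Behavior": 1.943,
    "Privacy -> Behavioral Intention": 2.666,
    "Behavioral Intention -> Use Behavior": 5.118,
    "Social Influence -> Behavioral Intention": 2.267,
    "Effort Expectancy -> Behavioral Intention": 2.986,
}

p_values = {path: round(two_tailed_p(t), 3) for path, t in t_statistics.items()}

for path, p in p_values.items():
    flag = "significant" if p < 0.05 else "not significant"
    print(f"{path}: p = {p:.3f} ({flag} at the 5 % level)")
```

Under these assumptions, Trust -> Use Behavior is the only path whose p-value (0.052) falls above the 5 % level. Significance alone does not determine the Result column: Privacy -> Behavioral Intention is significant but is marked as rejected because its sign is opposite to the hypothesized direction.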

Conforming to the UTAUT model, the facilitating condition significantly affects use behavior (β = 0.263, p < 0.001). Correspondingly, privacy is significantly associated with use behavior (β = 0.175, p = 0.004); thus, the hypothesis is accepted. Trust, however, is not significantly associated with use behavior, as its p-value of 0.052 is above the 0.05 significance level. This suggests that trust affects AI users’ intentions but does not directly translate into actual use behavior. Behavioral intention significantly predicts use behavior (β = 0.346, p < 0.001). In summary, the study accepts hypotheses H1 to H5, H8, and H9 and rejects H6 and H7. The R² value was 34.6 % for behavioral intention and 39.6 % for use behavior. These R² values are acceptable, surpassing the 30 % threshold recommended in Refs. [185,192].

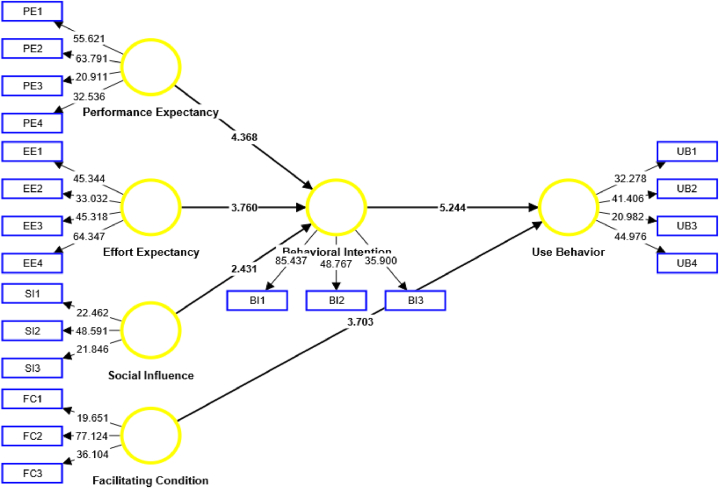

We also measured the power of the exogenous variables of the original UTAUT model to predict use behavior (UB) and behavioral intention (BI). Without the extended dimensions of trust and privacy, the R² values were 31.3 % for behavioral intention and 33.7 % for use behavior, compared with 34.6 % and 39.6 % in the extended model. This implies that trust and privacy are significant indicators in predicting use behavior and behavioral intention. Therefore, the research model gains explanatory power for use behavior and behavioral intention once trust and privacy are integrated with the original UTAUT dimensions.
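One common way to express such a gain in explained variance is Cohen's f² effect size, computed from the R² values with and without the added constructs. The sketch below applies it to the figures reported above; treating trust and privacy jointly as the omitted block is an assumption made here for illustration, not an analysis reported by the study.

```python
def f_squared(r2_included, r2_excluded):
    """Cohen's f^2 effect size for the change in R^2."""
    return (r2_included - r2_excluded) / (1 - r2_included)


# (R^2 of extended model, R^2 of original UTAUT) as reported in the text.
r2 = {
    "Behavioral Intention": (0.346, 0.313),
    "Use Behavior": (0.396, 0.337),
}

effect_sizes = {
    outcome: f_squared(included, excluded)
    for outcome, (included, excluded) in r2.items()
}

for outcome, value in effect_sizes.items():
    delta = r2[outcome][0] - r2[outcome][1]
    print(f"{outcome}: delta R^2 = {delta:.3f}, f^2 = {value:.3f}")
```

Against Cohen's conventional benchmarks (0.02 small, 0.15 medium), both values fall between small and medium, with the larger gain for use behavior.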

5. Discussion

Bangladesh, a developing country, has observed a drastic change in internet-mediated technology adoption with the advancement of high-speed internet and other related technological infrastructures [175,193,194]. As per the Data Reports (2022) statistics, there are 10.8 million internet users in Bangladesh. AI users, specifically for academic purposes, find it easy and convenient to accomplish their day-to-day activities ranging from creative writing to slide-making [11,195]. Therefore, this study's core aim is to assess why these AI technologies are adopted, using an extended UTAUT model with a focus on trust and privacy.

In accordance with the UTAUT model, our findings revealed a strong positive relationship between performance expectancy and behavioral intentions. As expected, those who believe their performance will be enhanced using AI technologies have adopted it and intend to use it further [196]. They also adopt AI technologies to enhance their productivity and speed [197,198].

This study demonstrates that users' effort expectancy, or the degree to which they perceive engaging with AI-driven platforms to be easy, significantly affects behavioral intention. This conforms to previous studies of the UTAUT model, which support the view that the perceived ease of a system enhances the formation of a positive intention to adopt and utilize it [29,199,200].

Our study reinforced the role of social influence in driving behavioral intentions, as posited by the UTAUT model. The positive relationship between social influence and behavioral intention highlights the significance of peer and societal pressures in shaping individuals' decisions to adopt AI technologies within academic settings. This finding resonates with previous studies such as [196,198,201], emphasizing the impact of social networks, friends, family, and educators on technology acceptance.

Facilitating conditions, which make a task easier to perform, include having the necessary resources, information, and compatibility with other systems [202]. The association between facilitating conditions and use behavior has been confirmed in this study, with a path estimate of 0.263. This result is consistent with prior studies demonstrating the impact of infrastructure, technical support, and organizational support on technology utilization [[203], [204], [205], [206]].

Interestingly, while trust significantly influenced behavioral intention (estimate 0.192), it was not significantly associated with use behavior (P-value 0.052). This suggests that while trust is essential in forming the intention to use AI technologies, it might not directly influence actual usage [207,208]. As a psychological state, trust entails accepting vulnerability based on optimistic expectations regarding another party's actions [97,209]. In the context of technology, trust can be defined as the conviction that the technology in question will carry out its functions in accordance with the user's favorable expectations [210]. As such, cultivating trust through credibility, safety, familiarity, and endorsements from reliable sources may be particularly crucial in fostering intentions to adopt AI technologies in academic settings [[211], [212], [213], [214]]. Furthermore, it suggests that trust may play a more significant part in the beginning phases of the adoption process [97,215].

In contrast, privacy showed an unexpected negative relationship with behavioral intention (estimate −0.145) but a positive relationship with use behavior (estimate 0.175). This outcome underscores the complex role privacy concerns play in the adoption of new technologies within academic environments [216]. Contrary to the initial expectation that higher privacy assurances would bolster the willingness to embrace AI tools, the findings suggest that heightened awareness and concerns about privacy dampen such intentions [217]. This could reflect apprehensions about the misuse of personal data or skepticism towards the adequacy of data protection measures in AI systems. Privacy is a multifaceted concept that often plays a crucial role in technology adoption, especially in AI, where data security and confidentiality are primary concerns [218]. It underscores the importance of effectively communicating privacy measures and providing transparency regarding handling personal information [218,219]. Service providers must take the necessary steps to assure users of the security of their data and must comply with ethical and legal standards concerning privacy [[220], [221], [222], [223]].

Finally, the robust relationship between behavioral intention and use behavior (estimate 0.346) validates the core premise of UTAUT and corroborates findings from numerous technology acceptance models [[224], [225], [226]]. This study sheds important light on the elements affecting the implementation of AI technology for educational purposes in Bangladesh. Our study contributes novel insights to the UTAUT literature, particularly in the context of AI adoption in developing country academia, and highlights the unique roles of trust and privacy considerations in shaping technology acceptance in this domain.

5.1. Theoretical contributions

There are some novel theoretical contributions worth noting. First, this study extends research on the adoption of AI-based technologies to a new context, i.e., a developing country. The knowledge domain is also novel, as the study examines adoption for academic purposes. While UTAUT has been widely applied in various technology domains, its application to AI adoption, especially in developing countries, remains underexplored. Second, the original UTAUT model explains 31.3 % of the variance in behavioral intention to adopt AI-based technologies for academic purposes and 33.7 % of the variance in use behavior. The extended model, which integrates privacy and trust, predicts adoption better, explaining 34.6 % of the variance in behavioral intention and 39.6 % in use behavior. Third, by integrating trust and privacy as key constructs within the UTAUT framework, our research offers valuable insights into the intricate roles these factors play in shaping AI adoption intentions and behaviors. The contrasting effects of trust (positively influencing intentions) and privacy (negatively impacting intentions but positively associated with use behavior) highlight the complex interplay between these factors and the adoption process. This finding challenges the conventional notion that higher privacy assurances universally bolster technology acceptance [227,228] and calls for a more nuanced theoretical conceptualization of privacy concerns within the UTAUT model [229]. Therefore, the model can be utilized to assess the adoption of other AI-based technologies and domains. Fourth, this study sheds light on users' perspectives to effectively align AI-based technologies with the education systems of developing countries.
Fifth, the unexpected negative relationship between privacy and behavioral intention challenges existing theoretical models and calls for further investigation of cross-cultural variations in privacy attitudes that shape users’ adoption behavior [230]. Finally, this study has adapted and modified several items to measure different constructs specifically in the AI context; future researchers can adopt these scales and verify their context-specific models.

5.2. Practical implications

From a practical standpoint, this study has revealed significant insights into the behavioral intention to use AI-based technologies among academics, researchers, and students in developing countries. Although using AI-based platforms is not encouraged for academic purposes, especially in developing countries [25], it can help reduce effort and provide information from different perspectives [205]. Because performance expectancy and effort expectancy are positively related to behavioral intention, service providers should communicate the benefits of AI through demonstrations, case studies, and tutorials. They should design user-friendly AI tools and ensure proper support and guidance to make AI adoption less demanding [21,25,231]. Social influence, a significant contributor to behavioral intention, highlights the role of academic influencers in promoting and endorsing AI tools, using testimonials and success stories to encourage others to adopt them [232]. Enhancing facilitating conditions must also be a priority, potentially through partnerships between educational institutions, the public sector, the corporate sector, internet service providers, etc., to improve infrastructure, technical assistance, and interoperability with current systems [231]. As trust is a pivotal factor that influences behavioral intention but does not directly affect use behavior, service providers should have an unwavering focus on security measures, credible endorsements, and transparency to build user confidence [229]. This study provides practical guidance for academic institutions, policymakers, and technology providers to facilitate successful AI adoption in developing countries. Academic institutions should foster trust through transparent data policies, cybersecurity protocols, educational campaigns, and leveraging social influence. Policymakers, on the other hand, should develop regulatory frameworks that balance AI innovation with robust data protection tailored to cultural nuances.
Technology providers should design user-centric AI platforms that prioritize transparency, privacy, and trust-building features such as intuitive interfaces and clear policies. By addressing trust, privacy, and contextual factors shaping AI adoption in developing country academia, this study offers novel insights to navigate implementation complexities and capitalize on opportunities in this evolving domain.

6. Limitations and future research directions

Although the study attempts to illustrate the actual scenario of AI adoption in developing countries, it has some limitations. First, the study collected data through Google Forms using a snowball sampling technique. This may limit the generalizability of the research model to similar contexts. Therefore, future studies can test the model using probabilistic sampling techniques. Second, most of the respondents (86 %) who participated in the research are students, whereas only 14 % use AI technologies in teaching or research activities. That said, this study paves the way for further investigation that includes stakeholders from different domains. Also, this study covers respondents using some of the prominent AI-based technologies for academic or research purposes, while other such technologies are yet to be studied. Third, the results of this research are based on a cross-sectional study, which may not reflect the behavioral intentions of AI users in the long term. Hence, longitudinal studies are needed for further understanding. Fourth, this study has leveraged the UTAUT model initially proposed by Venkatesh et al. [29], which was subsequently extended to UTAUT 2 in 2012 [233]. Recognizing the significance of privacy and trust in technology adoption, this study has taken a step further by augmenting the original UTAUT model with two additional variables: trust and privacy. By incorporating these variables, the extended model provides a more comprehensive framework for understanding the adoption of AI technologies for academic purposes in developing countries. However, further studies can apply other adoption models, for instance, TRI [234], TAM [91], TPB [235], TRA [236], and TAM 2 [199], to explain the adoption of AI in developing countries. 
Finally, this study has rigorously measured and validated the proposed research model, offering researchers a reliable framework to assess technology adoption behaviors and factors across diverse scenarios (e.g., technology adoption in different industries, contexts, or geographical regions). This contributes not only to the understanding of technology adoption but also to the improvement of the broader field of adoption research.

7. Conclusions

Artificial intelligence (AI) is considered to be the future of information science technologies, and studying the reasons for the adoption of this novel innovation is a fascinating area for researchers since the number of users is increasing geometrically every day [205,229,232,237,238]. This research recognized that it is essential to analyze the key factors that could reflect the behavioral intention and use behavior of AI technology adoption in developing countries, especially for academic purposes. In addition, the study required a theoretical foundation that could capture the most crucial aspects of the adoption of AI for academic purposes. Therefore, the UTAUT model of Venkatesh et al. [29] was adopted and extended with two crucial dimensions, i.e., privacy and trust, considering their significance in the study of AI-based technologies. The results of this study validate the research model, which explains 34.6 % of the variance in the behavioral intention of AI users and 39.6 % of the variance in use behavior. Performance Expectancy, Social Influence, Effort Expectancy, Trust, and Privacy are all significant predictors of users’ behavioral intention to adopt AI for academic purposes. On the other hand, Facilitating Conditions, Behavioral Intention, and Privacy have proven to be significant predictors of use behavior.

Ethics statement

This research was conducted in full compliance with ethical principles and policies. The study protocol was reviewed and approved by the Director of Master of Professional Human Resource Management Program (MPHRM), at the University of Dhaka (Reference: BST/202307P). All participants were provided detailed information about the study and gave their informed consent prior to participation. Measures were taken to ensure the anonymity and confidentiality of participant data throughout the research process. The research was carried out in an ethical manner, prioritizing the well-being, autonomy, and privacy of participants.

Data availability statement

Data will be available on request.

CRediT authorship contribution statement

Md. Masud Rana: Validation, Software, Data curation, Conceptualization. Mohammad Safaet Siddiqee: Writing – original draft, Visualization, Resources, Formal analysis. Md. Nazmus Sakib: Writing – review & editing, Supervision, Methodology, Conceptualization, Validation. Md. Rafi Ahamed: Writing – original draft, Visualization, Validation.

Declaration of competing interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Footnotes

Supplementary data to this article can be found online at https://doi.org/10.1016/j.heliyon.2024.e37569.

Appendices.

Fig. A1. UTAUT measurement model without privacy and trust.

Fig. A2. UTAUT structural model without privacy and trust.

References

- 1. Cubric M. Drivers, barriers and social considerations for AI adoption in business and management: a tertiary study. Technol. Soc. 2020;62 doi: 10.1016/j.techsoc.2020.101257.

- 2. Chong L., Zhang G., Goucher-Lambert K., Kotovsky K., Cagan J. Human confidence in artificial intelligence and in themselves: the evolution and impact of confidence on adoption of AI advice. Comput. Hum. Behav. 2022;127 doi: 10.1016/j.chb.2021.107018.

- 3. Madan R., Ashok M. AI adoption and diffusion in public administration: a systematic literature review and future research agenda. Govern. Inf. Q. 2023;40 doi: 10.1016/j.giq.2022.101774.

- 4. Rasheed H.M.W., Chen Y., Khizar H.M.U., Safeer A.A. Understanding the factors affecting AI services adoption in hospitality: the role of behavioral reasons and emotional intelligence. Heliyon. 2023;9 doi: 10.1016/j.heliyon.2023.e16968.

- 5. Abadie A., Roux M., Chowdhury S., Dey P. Interlinking organisational resources, AI adoption and omnichannel integration quality in Ghana's healthcare supply chain. J. Bus. Res. 2023;162 doi: 10.1016/j.jbusres.2023.113866.

- 6. Dastjerdi M., Keramati A., Keramati N. A novel framework for investigating organizational adoption of AI-integrated CRM systems in the healthcare sector; using a hybrid fuzzy decision-making approach. Telemat. Inform. Rep. 2023;11 doi: 10.1016/j.teler.2023.100078.

- 7. Dekker I., De Jong E.M., Schippers M.C., De Bruijn-Smolders M., Alexiou A., Giesbers B. Optimizing students' mental health and academic performance: AI-enhanced life crafting. Front. Psychol. 2020;11:1063. doi: 10.3389/fpsyg.2020.01063.

- 8. Ahmad S.F., Alam M.M., Rahmat M.K., Mubarik M.S., Hyder S.I. Academic and administrative role of artificial intelligence in education. Sustainability. 2022;14:1101.

- 9. Chen L., Chen P., Lin Z. Artificial intelligence in education: a review. IEEE Access. 2020;8:75264–75278.

- 10. Flogie A., Aberšek B. Artificial intelligence in education. Act, Learn.-Theory Pract. 2022 IntechOpen.

- 11. Huang J., Saleh S., Liu Y. A review on artificial intelligence in education. Acad. J. Interdiscip. Stud. 2021;10

- 12. Knox J. Artificial intelligence and education in China. Learn. Med. Tech. 2020;45:298–311.

- 13. Malik G., Tayal D.K., Vij S. Recent Find. Intell. Comput. Tech. Proc. 5th ICACNI. vol. 1. Springer; 2017. An analysis of the role of artificial intelligence in education and teaching; pp. 407–417. 2019.

- 14. McArthur D., Lewis M., Bishary M. The roles of artificial intelligence in education: current progress and future prospects. J. Educ. Technol. 2005;1:42–80.

- 15. Pedro F., Subosa M., Rivas A., Valverde P. 2019. Artificial Intelligence in Education: Challenges and Opportunities for Sustainable Development.

- 16. Quon R.J., Meisenhelter S., Camp E.J., Testorf M.E., Song Y., Song Q., Culler G.W., Moein P., Jobst B.C. AiED: artificial intelligence for the detection of intracranial interictal epileptiform discharges. Clin. Neurophysiol. 2022;133:1–8. doi: 10.1016/j.clinph.2021.09.018.

- 17. Quon R.J., Meisenhelter S., Camp E.J., Testorf M.E., Song Y., Song Q., Culler G.W., Moein P., Jobst B.C. AiED: artificial intelligence for the detection of intracranial interictal epileptiform discharges. Clin. Neurophysiol. 2022;133:1–8. doi: 10.1016/j.clinph.2021.09.018.

- 18. Tapalova O., Zhiyenbayeva N. Artificial intelligence in education: AIEd for personalised learning pathways. Electron. J. E-Learn. 2022;20:639–653.

- 19. Kshetri N. Artificial intelligence in developing countries. IT Prof. 2020;22:63–68.

- 20. Khosravi H., Shum S.B., Chen G., Conati C., Tsai Y.-S., Kay J., Knight S., Martinez-Maldonado R., Sadiq S., Gašević D. Explainable artificial intelligence in education. Comput. Educ. Artif. Intell. 2022;3

- 21. Luan H., Geczy P., Lai H., Gobert J., Yang S.J.H., Ogata H., Baltes J., Guerra R., Li P., Tsai C.-C. Challenges and future directions of big data and artificial intelligence in education. Front. Psychol. 2020;11 doi: 10.3389/fpsyg.2020.580820. https://www.frontiersin.org/articles/10.3389/fpsyg.2020.580820

- 22. Nye B.D. Intelligent tutoring systems by and for the developing world: a review of trends and approaches for educational technology in a global context. Int. J. Artif. Intell. Educ. 2015;25:177–203.

- 23. Ocaña-Fernández Y., Valenzuela-Fernández L.A., Garro-Aburto L.L. Artificial intelligence and its implications in higher education. J. Educ. Psychol.-Propos. Represent. 2019;7:553–568.

- 24. Dastjerdi M., Keramati A., Keramati N. A novel framework for investigating organizational adoption of AI-integrated CRM systems in the healthcare sector; using a hybrid fuzzy decision-making approach. Telemat. Inform. Rep. 2023;11 doi: 10.1016/j.teler.2023.100078.

- 25. Sharma H., Soetan T., Farinloye T., Mogaji E., Noite M.D.F. Re-Imagining Educ. Futur. Dev. Ctries. Lessons Glob. Health Crises. Springer; 2022. AI adoption in universities in emerging economies: prospects, challenges and recommendations; pp. 159–174.

- 26. Ocaña-Fernández Y., Valenzuela-Fernández L.A., Garro-Aburto L.L. Artificial intelligence and its implications in higher education. J. Educ. Psychol.-Propos. Represent. 2019;7:553–568.

- 27. Su J., Zhong Y., Ng D.T.K. A meta-review of literature on educational approaches for teaching AI at the K-12 levels in the Asia-Pacific region. Comput. Educ. Artif. Intell. 2022;3 doi: 10.1016/j.caeai.2022.100065.

- 28. Kizilcec R.F. To advance AI use in education, focus on understanding educators. Int. J. Artif. Intell. Educ. 2023 doi: 10.1007/s40593-023-00351-4.

- 29. Venkatesh V., Morris M.G., Davis G.B., Davis F.D. User acceptance of information technology: toward a unified view. MIS Q. 2003:425–478.

- 30. Tanantong T., Wongras P. A UTAUT-based framework for analyzing users' intention to adopt artificial intelligence in human resource recruitment: a case study of Thailand. Systems. 2024;12 doi: 10.3390/systems12010028.

- 31. Tian W., Ge J., Zhao Y., Zheng X. AI Chatbots in Chinese higher education: adoption, perception, and influence among graduate students: an integrated analysis utilizing UTAUT and ECM models. Front. Psychol. 2024;15 doi: 10.3389/fpsyg.2024.1268549.

- 32. Bokhari S.A.A., Myeong S. An analysis of artificial intelligence adoption behavior applying extended UTAUT framework in urban cities: the context of collectivistic culture. Eng. Proc. 2023;56 doi: 10.3390/ASEC2023-15963.