Abstract

Background

Data dashboards are published tools that present visualizations; they are increasingly used to display data about behavioral health, social determinants of health, and chronic and infectious disease risks to inform or support public health endeavors. Dashboards can be an evidence-based approach used by communities to influence decision-making in health care for specific populations. Despite widespread use, evidence on how to best design and use dashboards in the public health realm is limited. There is also a notable dearth of studies that examine and document the complexity and heterogeneity of dashboards in community settings.

Objective

Community stakeholders engaged in the community response to the opioid overdose crisis could benefit from the use of data dashboards for decision-making. As part of the Communities That HEAL (CTH) intervention, community data dashboards were created for stakeholders to support decision-making. We assessed stakeholders’ perceptions of the usability and use of the CTH dashboards for decision-making.

Methods

We conducted a mixed methods assessment between June and July 2021 on the use of CTH dashboards. We administered the System Usability Scale (SUS) and conducted semistructured group interviews with users in 33 communities across 4 states of the United States. The SUS comprises 10 five-point Likert-scale questions measuring usability, each scored from 0 to 4. The interview guides were informed by the technology adoption model (TAM) and focused on perceived usefulness, perceived ease of use, intention to use, and contextual factors.

Results

Overall, 62 users of the CTH dashboards participated in the interviews, and 58 of them completed the SUS. SUS scores (grand mean 73, SD 4.6) indicated that CTH dashboards were within the acceptable range for usability. From the qualitative interview data, we inductively created subthemes within the 4 dimensions of the TAM to contextualize stakeholders’ perceptions of the dashboard’s usefulness and ease of use, their intention to use, and contextual factors. These data also highlighted gaps in knowledge, design, and use, which could help focus efforts to improve the use and comprehension of dashboards by stakeholders.

Conclusions

We present a set of prioritized gaps identified by our national group and list a set of lessons learned for improved data dashboard design and use for community stakeholders. Findings from our novel application of both the SUS and TAM provide insights and highlight important gaps and lessons learned to inform the design of data dashboards for use by decision-making community stakeholders.

Trial Registration

ClinicalTrials.gov NCT04111939; https://clinicaltrials.gov/study/NCT04111939

Keywords: data visualizations, dashboards, public health, overdose epidemic, human-centered design

Introduction

Background

Data dashboards are tools, often published digitally on websites or dedicated apps, that present visualizations; they are increasingly used to display data about behavioral health, social determinants of health, chronic and infectious disease risks, and environmental risks to inform or support public health endeavors [1-4]. Dashboards can be an evidence-based approach used by communities to influence public awareness and decision-making and to focus the provision of resources and interventions in health care toward specific populations [2,3,5-8]. For example, local public health agencies across the nation have communicated health data about the COVID-19 pandemic through dashboards, using these as tools to generate awareness and motivate behavior change (eg, adherence to public health guidelines) [9].

Despite widespread use, evidence on how to best design and use dashboards in the public health realm is limited [1,9-12]. There is also a notable dearth of studies that examine and document the complexity and heterogeneity of dashboards in community settings. Experiences from the Healing Communities Study (HCS) provided an opportunity to empirically learn how dashboards and health data visualizations can be assessed to inform design and use, especially among community end users.

The HCS is implementing the Communities That HEAL (CTH) intervention aimed at reducing overdose deaths by working with community coalitions in selected counties and cities highly affected by opioid deaths in each state [13]. In January 2020, as part of the community engagement component of the CTH, 4 research sites followed a common protocol to develop community dashboards to support community coalitions in selecting evidence-based practices (EBPs) to reduce opioid overdose deaths in their respective communities. The protocol stipulated which key metrics to present to each community regarding opioid overdose deaths and associated factors that may contribute to their prevalence [14]. The CTH intervention protocol involved dashboard cocreation by HCS researchers and community stakeholders from each community that incorporated the principles of user-centered design [15].

We define community stakeholders for this analysis as individuals in the community who are engaged in fighting the overdose crisis, including coalition members (eg, county public health officials and behavioral health practitioners) and community research staff. The CTH intervention envisioned community-tailored dashboards as a tool that coalition members would use to discuss and understand baseline conditions and trends, to inform EBP selections, and to monitor research outcomes of interest to the community. Community research staff, often with preexisting ties to the community, were to support and lead coalitions through the use of data dashboards for decision-making and ongoing monitoring. These stakeholders were the anticipated dashboard end users who were not expected to have expertise in the use of dashboards, even though community research staff had additional training in community engagement and overall study protocols. Our research team oversaw the design, implementation, maintenance, and evolution of the dashboards used by these stakeholders.

The CTH dashboard cocreation involved, at a minimum, iterative show-and-tell sessions in which feedback from community stakeholders was provided on wireframes with the goal of refining the dashboard and its ability to align with specific objectives (eg, to address local challenges in fighting the opioid crisis and to highlight key performance indicators). Although the CTH intervention required the same specific core components of the dashboards (eg, use of predetermined metrics, annotations for metrics, and granularity of the data presented), communities could incorporate unique components based on preferences and resources, such as displaying local data (eg, county opioid overdose death rates) acquired by the HCS. Thus, dashboards varied in layout, interface, and content across the 4 sites.

Objectives

To elucidate lessons learned from providing dashboards to the coalitions and community stakeholders, we investigated the following questions on the CTH dashboards: Are the CTH dashboards usable and useful for community decision-making? Are the dashboards easy to use and understand? Will the dashboards be used in the long term, and, if yes, for what purposes will they be used? Our study is novel in 4 specific areas: (1) the use of a qualitative approach (instead of a quantitative approach) to expound on the technology adoption model (TAM) constructs, informing existing perspectives on dashboard usability; (2) the investigation of dashboard usability across 33 diverse communities; (3) the generation of themes and subthemes on the usability of a dashboard in the substance abuse domain; and (4) the identification of common themes on dashboard usability from a TAM and System Usability Scale (SUS) perspective [16,17] by comparing feedback from 4 unique applications of a dashboard that was tailored to the needs of end users. Our findings will be used to establish current perceptions of the dashboards and prioritize gaps in knowledge, design, and use that can be considered for future dashboard applications.

Methods

Ethical Considerations

Advarra Inc, the HCS’s single institutional review board, approved the study protocol (Pro00038088). The HCS study is a registered trial (ClinicalTrials.gov NCT04111939).

Research Setting

Data collection and analyses were conducted as part of the HCS, a 4-site, waitlisted community-level cluster-randomized trial seeking to significantly reduce opioid overdose deaths by implementing the CTH intervention in 67 communities across Kentucky, Massachusetts, New York, and Ohio [13,18]. For the first wave of the HCS, each site created interactive dashboards with community stakeholder input to support local data-driven decision-making using community-level metrics for 33 communities randomized to receive the intervention (ie, wave 1 communities) from January 2020 through June 2022 [14]. Our analysis involved all wave 1 communities: 8 communities from Kentucky, 8 communities from Massachusetts, 8 communities from New York, and 9 communities from Ohio.

The CTH Dashboards

Common Components Across the Dashboards

More details about the cocreation of the dashboards and required core components can be found elsewhere [14]. Briefly, each site was responsible for developing a secure portal for each community receiving the CTH intervention. The portals contained downloadable intervention materials (eg, information on EBPs, community profiles, and community landscape data). Each portal had a CTH dashboard with data visualizations (eg, bar and line graphs and tables) displaying metrics related to the HCS’s primary outcome of reducing opioid deaths (eg, opioid overdose rates) and secondary outcomes connected to EBPs (eg, number of naloxone kits distributed and number of buprenorphine prescriptions filled).

Unique Components of the CTH Dashboards

Each site developed its CTH dashboard using different software, including Power BI (Microsoft Corp), Tableau (Salesforce Inc), SharePoint (Microsoft Corp), D3.js (Mike Bostock and Observable, Inc; Data-Driven Documents), and Drupal (Drupal Community), and different types of visualizations, such as bar graphs, line plots, and tables with directional markers [14]. The 4 distinct dashboards displayed community-specific data that were accessible only to that community’s stakeholders. Data acquisition and display were limited by site-specific data use agreements, which informed the timing of the data (lags), data suppression, and granularity (eg, aggregation by state vs county), as well as the requirement of username- and password-protected access in some cases.
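To make the effect of data suppression on a dashboard display concrete, the following is a minimal sketch of small-count masking. The threshold of 10, the function names, and the community labels are hypothetical; the actual suppression rules were set by each site's data use agreement and are not specified here.

```python
from typing import Optional

# Hypothetical threshold: nonzero counts below this value are masked.
# The real rules came from site-specific data use agreements.
SUPPRESSION_THRESHOLD = 10


def suppress_small_counts(
    counts: dict[str, int], threshold: int = SUPPRESSION_THRESHOLD
) -> dict[str, Optional[int]]:
    """Replace nonzero counts below the threshold with None (suppressed)."""
    return {
        community: (None if 0 < value < threshold else value)
        for community, value in counts.items()
    }


def display_value(value: Optional[int]) -> str:
    """Render a cell the way a dashboard table might show it."""
    return "suppressed" if value is None else str(value)


if __name__ == "__main__":
    # Synthetic counts for illustration only.
    synthetic = {"Community A": 0, "Community B": 4, "Community C": 25}
    for name, value in suppress_small_counts(synthetic).items():
        print(f"{name}: {display_value(value)}")
```

A masked cell removes information that a stakeholder may have expected to see, which is one way suppression can contribute to sparse displays.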

Study Design, Sampling, and Recruitment

Between June and July 2021, we conducted a mixed methods assessment of the use of the CTH dashboards. This period reflects the postimplementation phase of the second version of the cocreated dashboards (Figures 1 and 2) and was a stable time during which no fundamental revisions were made to the dashboards across the HCS. We administered the SUS to community stakeholders involved in the HCS and conducted TAM-informed semistructured interviews with them. We collected sociodemographic data from participants to help characterize respondents. The interview data and SUS scores were concurrently examined to identify factors that influenced perceived dashboard use and usability.

Figure 1.

Mockups of the Communities That HEAL (CTH) dashboards: (A) CTH dashboard for Kentucky and (B) CTH dashboard for Ohio. Please note that any names of communities present are not real, and only synthetic data are used in the images. DAWN: deaths avoided with naloxone; EMS: emergency medical service; HCS: Healing Communities Study; MOUD: medication for opioid use disorder; OD: overdose; OUD: opioid use disorder; TBD: to be determined.

Figure 2.

Mockups of the Communities That HEAL (CTH) dashboards: (A) CTH dashboard for Massachusetts and (B) CTH dashboard for New York. Please note that any names of communities present are not real, and only synthetic data are used in the images. EMS: emergency medical service; HCS: Healing Communities Study; HEAL: Helping to End Addiction Long-term; MOUD: medication treatment for opioid use disorder; NIH: National Institutes of Health; OUD: opioid use disorder.

Each site sampled and recruited participants. The sample was drawn from community stakeholders in the HCS communities who had a community portal account (ie, active users). Researchers from each site generated a roster of eligible participants from site server audit log files. Our research team worked with field staff to achieve a diverse participant pool of community stakeholder active users within each community. The sample included coalition members, including stakeholders in the HCS communities responsible for championing the use of a specific CTH intervention component, and HCS community staff who had the role of coordinating the use of data for decision-making. These active users were invited via email to participate in a group interview via Zoom (Zoom Communications, Inc) with up to 6 participants, although a few interviews were conducted individually due to scheduling challenges.

Data Collection

Mixed methods evaluation data were collected by each site and then shared with the HCS data coordinating center (DCC) for analysis. Interviewers at each site first received common training on the research protocol, interview guide, and interviewing techniques. During the interview, participants verbally provided consent and then were asked to individually fill out a short survey on REDCap [19]. The survey included sociodemographic questions and the SUS. After participants completed the survey, the research team displayed the site-specific CTH dashboard with synthetic community data to reorient participants to the dashboard used in their community. Next, the research team asked participants semistructured, open-ended questions based on the TAM dimensions of perceived usefulness, perceived ease of use, intention to use, and contextual factors (Multimedia Appendix 1). Table 1 provides operational definitions for each of the TAM constructs used to develop our interview guide questions. We focused on 4 constructs to explore how the dashboards were used for community decision-making, their ease of use, the intent for future use, and the context around dashboard use. Our interviews lasted approximately 1 hour and were recorded and transcribed.

Table 1.

Technology adoption model themes and operationalized definitions<sup>a</sup>.

| Construct | Operationalized definition |
|---|---|
| Perceived usefulness | |
| Description | Alignment of the dashboard and its functions with the community stakeholder’s expectations for goals and tasks |
| Benefits | How the dashboard positively influences the work and decision-making expectations of the community stakeholder |
| Drawbacks | How the dashboard did not meet the work and decision-making expectations of the community stakeholder |
| Perceived ease of use | |
| Description | Alignment of the technical functionality of the dashboard with the community stakeholder’s workflow (needs and desires) |
| Barriers | Specific challenges faced with using the portal |
| Facilitators | Specific resources needed to support the use of the portal |
| Intention to use | |
| Description | The willingness of the community stakeholder to use the dashboard in the future, even if modified slightly |
| Acceptance | The approachability of the dashboard as a technological tool to accomplish work |
| Preference | Specific improvements, changes, or recommendations to the dashboard |
| Contextual factors | |
| Description | Circumstances (eg, social, cultural, and historical circumstances) that influence a community stakeholder’s use of the dashboard |
| Community data orientation | Collective perceptions about how the community connects and works with data, including community data tools and approaches for decision-making |

<sup>a</sup>Definitions derived from Davis [16].

Data Analysis

The validated SUS consisted of 10 five-point Likert scale questions. Each SUS item was scored (scale of 0 to 4), and their scores were summed and multiplied by 2.5 to assign a total score from 0 to 100 (lowest to highest usability). For the qualitative data, 1 research site constructed the codebook using an iterative constant comparative method [20]. Initially, the team created general codes using concepts from the TAM from the interview guide. Two researchers (RGO and YW) each coded an interview independently, compared coding, and discussed agreement and differences with a senior researcher (NF), creating a refined codebook with consensus codes and clarified definitions. The remaining interviews from this site were coded by these researchers, with review by the senior researcher. One researcher (RGO) working with the team inductively created subthemes for each TAM dimension, revised the codebook, and coded all the interviews from the site. The established codebook and coded interviews were then shared with the DCC. Two new coders from the DCC were trained by the experienced coders; each independently coded 6 test interviews and compared results in meetings with the experienced coders. Then, the DCC coders coded all remaining interviews (n=14) with weekly meetings to discuss differences and ensure alignment. The DCC coders then reviewed the original 7 interviews coded by the research site to confirm alignment across all data. After coding, the DCC conducted an exploratory analysis to better understand the emergent themes across different sites. Interview transcriptions were analyzed using NVivo (version 12; QSR International) [21].
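The SUS scoring step described above can be sketched as follows. This is a minimal illustration, not the study's analysis code: it assumes responses were recorded on a 1 to 5 scale and applies the standard SUS convention of reverse-scoring the even-numbered (negatively worded) items so that every item contributes 0 to 4 before the sum is multiplied by 2.5. The function names are hypothetical.

```python
from typing import Sequence

# Standard SUS convention: odd-numbered items are positively worded,
# even-numbered items are negatively worded and are reverse-scored.
POSITIVE_ITEMS = {1, 3, 5, 7, 9}


def sus_item_scores(responses: Sequence[int]) -> list[int]:
    """Convert ten raw 1-5 Likert responses into 0-4 item contributions."""
    if len(responses) != 10:
        raise ValueError("The SUS requires exactly 10 responses")
    scores = []
    for item, response in enumerate(responses, start=1):
        if not 1 <= response <= 5:
            raise ValueError(f"Item {item}: response must be between 1 and 5")
        if item in POSITIVE_ITEMS:
            scores.append(response - 1)   # higher agreement is more favorable
        else:
            scores.append(5 - response)   # higher agreement is less favorable
    return scores


def sus_total(responses: Sequence[int]) -> float:
    """Sum the 0-4 contributions and rescale to 0-100."""
    return sum(sus_item_scores(responses)) * 2.5


if __name__ == "__main__":
    # A respondent who answers "neutral" (3) to every item scores 50.
    print(sus_total([3] * 10))
```

Because each of the 10 items contributes at most 4 points, the maximum sum is 40, and the 2.5 multiplier maps it onto the 0 to 100 range.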

The reporting of our qualitative methods and results adheres to the COREQ (Consolidated Criteria for Reporting Qualitative Research) guidelines (Multimedia Appendix 2; Table 1) [22]. Altogether, scientific rigor was supported by the use of participant IDs and labels to ensure data were appropriately associated with participants across communities; encouraging consistency in data collection and the fidelity of the interview guide through mentorship and weekly meetings to ensure agreement and the reliability of codes and results; discussions with various experts in the data visualization field during the development of themes; and triangulation (data from multiple HCS communities and diverse roles) and parallel data collection (SUS and TAM) to achieve theoretical sufficiency for themes and diverse representation across sites [23,24].

Results

Descriptive Summary of Survey Respondents and Interview Participants

A total of 159 individuals from across all HCS sites were invited to participate in our study. Of them, 62 (39%) individuals enrolled and participated in interviews. We conducted a total of 17 group interviews (with an average of 3 participants per group) and 10 individual interviews, for an average of 7 interviews per site. Community coalition members represented 56% (35/62) of interviewees, with the remaining 44% (27/62) comprising community staff hired by the HCS to work with their specific community coalition (Multimedia Appendix 2; Table 2). At least 1 person was interviewed from every community. Participants from all sites typically had a master’s degree, and a majority of participants from Ohio and Massachusetts were younger. Participants also typically identified as non-Hispanic White and female across sites (Multimedia Appendix 2; Table 3).

Table 2.

Subthemes under perceived usefulness.

|  | Illustrative quotes |
|---|---|
| Benefits | |
| Knowledge dissemination | |
| Decision-making tool | |
| Access to more data | |
| Drawbacks | |
| Time constraints | |
| Misalignment of the dashboard as a tool | |
| Disutility of data | |

Table 3.

Subthemes under perceived ease of use.

|  | Illustrative quotes |
|---|---|
| Barriers | |
| Access to dashboard | |
| Data manageability | |
| Unable to locate usable data | |
| Presentation of numbers and labels | |
| Facilitators | |
| Additional support | |
| Data accessibility | |
| Navigability | |

aHCS: Healing Communities Study.

Perceived Usability and Acceptance of the CTH Dashboards

Of the 62 participants, 58 (94%; Kentucky: n=13, 21%; Massachusetts: n=14, 23%; New York: n=15, 24%; Ohio: n=16, 26%) completed the SUS survey. The grand mean for overall SUS scores across all HCS sites was 73 (SD 4.6), indicating that the CTH dashboards were between the 70th and 80th percentiles of SUS responses (Multimedia Appendix 2; Table 4). This score is recognized in the literature as being within the acceptable range [25,26]. The overall SUS score for the New York dashboard was the highest (mean 77, SD 11.4) and akin to the SUS scores of Microsoft Word and Amazon. The overall SUS score for the Ohio dashboard was the lowest (mean 67, SD 12.3), which was similar to the usability of a GPS. The overall SUS scores for the Kentucky and Massachusetts dashboards were 75.8 (SD 10.4) and 70.2 (SD 16.3), respectively.
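As a rough arithmetic check on how the site-level scores relate to the grand mean, the sketch below aggregates the 4 reported site means in 2 ways. How the grand mean and its SD were actually computed is not stated here, so both the unweighted and the respondent-weighted versions are assumptions, and the inputs are the rounded values reported above.

```python
from statistics import mean, stdev

# Site-level SUS means and respondent counts as reported in the text.
site_means = {"Kentucky": 75.8, "Massachusetts": 70.2, "New York": 77.0, "Ohio": 67.0}
site_n = {"Kentucky": 13, "Massachusetts": 14, "New York": 15, "Ohio": 16}

# Assumption 1: grand mean as the unweighted mean of the 4 site means,
# with the SD describing the spread between sites.
unweighted_mean = mean(site_means.values())
between_site_sd = stdev(site_means.values())

# Assumption 2: grand mean weighted by the number of SUS respondents per site.
weighted_mean = sum(site_means[s] * site_n[s] for s in site_means) / sum(site_n.values())

print(f"Unweighted mean of site means: {unweighted_mean:.1f}")
print(f"SD between site means: {between_site_sd:.1f}")
print(f"Respondent-weighted mean: {weighted_mean:.1f}")
```

With these rounded inputs, the unweighted version gives roughly 72.5 (SD about 4.7), which is close to the reported 73 (SD 4.6); small differences would be expected from rounding of the site means.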

Table 4.

Subthemes under intention to use.

|  | Illustrative quotes |
|---|---|
| Acceptance | |
| Alternate data uses | |
| Future strategy monitoring and modification | |
| Use conditional on data changes | |
| Preference | |
| Content | |
| Function | |
| Aesthetics | |

aHCS: Healing Communities Study.

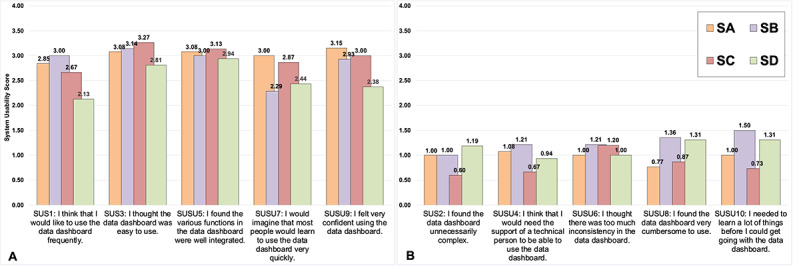

Figure 3 illustrates the breakdown of each SUS domain and contrasts the average scores by participants from each HCS site. The New York dashboard scored the highest across most of the SUS domains. Although Ohio participants did not score the dashboard as favorably as participants from the other sites across many of the domains, it should be noted that the responses were generally positive. The discussion that follows expounds on these SUS scores, with additional support from the data from our TAM-informed interviews. We link specific SUS dimensions around usability with closely associated TAM themes on usefulness. This novel approach highlights potential drivers of SUS scores based on design considerations elucidated by a specific set of TAM themes and subthemes.

Figure 3.

System Usability Scale (SUS) results by domain and study site (n=58). Raw SUS scores are presented. (A) SUS questions that were framed positively. (B) SUS questions that were framed negatively. Each SUS item is scored on a scale of 0 (strongly disagree) to 4 (strongly agree). A total of 4 participants did not respond to the SUS. SA: Kentucky; SB: Massachusetts; SC: New York; SD: Ohio.

Perceived Usefulness

Sites were generally positive in their reported perceptions about inconsistencies with the dashboards (SUS6); however, there was variability in the scores across states when it came to confidence in using the dashboards (SUS9). For example, participants from Ohio reported the least confidence, whereas participants from Kentucky scored the confidence domain the highest.

The alignment of the dashboards and community stakeholders’ expectations may have influenced perceptions of confidence in using the dashboards and consistency in using the dashboards. On the basis of the TAM-informed interviews, 3 subthemes emerged regarding the benefits of using the CTH dashboards: knowledge dissemination (the dashboard was used to increase the awareness of activities in a community [eg, sharing data and validating existing assumptions]); decision-making tool (the dashboard was used for choosing EBPs in a community and evaluating EBP strategies); and access to more data (broader and granular dashboard data about a community were available). In contrast, 3 subthemes emerged regarding the drawbacks of using the CTH dashboards: time constraints (the use of the dashboard felt time intensive, and other priorities distracted from its use); misalignment of the dashboard as a tool (the use of the dashboard was not aligned with the HCS’s goals and workflow); and disutility of data (dashboard data were not translatable to anything actionable). Table 2 provides illustrative quotes from the interviews for each of the primary themes under perceived usefulness.

Perceived Ease of Use

Around integration (SUS5), perceptions among all participants were generally positive. Participants from Ohio did not respond as positively about ease of use and complexity of use (SUS2 and SUS3). Participants from Massachusetts, New York, and Kentucky scored the dashboards higher in these areas. Participants from Ohio and Massachusetts also scored training, support, and the cumbersomeness of the system (SUS4, SUS7, and SUS8) less favorably.

The alignment of the technical functionality of the dashboards with users’ workflows may be linked to the users’ perceptions of the dashboards’ complexity and ease of use and the need for training and support. Our TAM-informed interviews revealed 4 subthemes around barriers to use: access to dashboard (participants faced challenges with accessing the dashboard); data manageability (accessing the data users needed was cumbersome); unable to locate usable data (data in the dashboard were not easy to find because of the lack of availability [due to lags in reporting or suppression rules] or because of navigation issues on the dashboard); and presentation of numbers and labels (the data were displayed in ways that made their use difficult for participants). However, we discovered 3 subthemes that facilitated the use of the dashboards: additional support (training or information that informed the use of the dashboard or data); data accessibility (the dashboard was useful because of aspects of the technology or the way it is displayed); and navigability (different ways in which the dashboard was easy to use and navigate and aspects of the dashboard that could be changed to make it better). Table 3 provides illustrative quotes for each of the primary themes under perceived ease of use.

Intention to Use

Scores of the SUS items on the frequency of planned use and the knowledge needed to use the dashboard (SUS1 and SUS10) were equivocal and lower across all the sites. Participants from Massachusetts reported that they wanted to use the dashboard frequently, but they needed to learn more about how to use it. Participants from Ohio provided the lowest score for the SUS item on the frequency of planned use of the dashboard and, similar to their Massachusetts counterparts, indicated the need to learn more about how to use the dashboard.

The willingness of community stakeholders to use the dashboard in the future may influence their desire to use the dashboard frequently and learn how to use it. During the TAM-informed interviews, participants provided several recommendations for improving the dashboard that could promote future use. A total of 3 subthemes emerged regarding the acceptance of the dashboards in the future: alternate data uses (data on the dashboard will be useful but for purposes not initially part of the focus of the HCS); future strategy monitoring and modification (the dashboard will be used as intended for HCS purposes, including for EBP strategy monitoring and data-driven decision-making about these strategies); and use conditional on data changes (the dashboard will be useful if some conditions are met [to help with its use]). In addition, 3 subthemes emerged regarding preferences for desirable use over time: content (participants desired changes to the data on the dashboard, including the provision of different data, to improve the usability of the data and their interpretation); function (participants desired additional tools or different ways to navigate the dashboard and use data); and aesthetics (participants described ways in which the appearance of the dashboard could be improved to effectively communicate information). Table 4 provides illustrative quotes on recommendations for each of the primary themes under intention to use.
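The pairing of SUS items with TAM dimensions used in the preceding subsections can be written down as a simple lookup. The sketch below assumes that per-item mean scores have already been converted so that higher values are more favorable; the dictionary keys, the function name, and the example values are hypothetical and synthetic.

```python
from statistics import mean

# SUS items grouped by the TAM dimension under which they are discussed.
SUS_ITEMS_BY_TAM_DIMENSION = {
    "perceived_usefulness": [6, 9],
    "perceived_ease_of_use": [2, 3, 4, 5, 7, 8],
    "intention_to_use": [1, 10],
}


def dimension_means(item_means: dict[int, float]) -> dict[str, float]:
    """Average per-item scores (0-4, higher is better) within each TAM dimension."""
    return {
        dimension: mean(item_means[item] for item in items)
        for dimension, items in SUS_ITEMS_BY_TAM_DIMENSION.items()
    }


if __name__ == "__main__":
    # Synthetic per-item means for one site, for illustration only.
    synthetic_item_means = {item: 2.0 for item in range(1, 11)}
    synthetic_item_means[6] = 3.0
    synthetic_item_means[9] = 1.0
    print(dimension_means(synthetic_item_means))
```

Grouping items this way makes it easier to see which interview subthemes are candidate explanations for a low or high score on a given set of SUS items.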

Contextual Factors

Collective circumstances may influence the dashboard’s perceived usability and use. A total of 2 subthemes emerged regarding how the community connects and works with data (community data orientation): comfort with data (collective perceptions of the comfort with using tools such as dashboards that contain data to make decisions) and established tools and approaches (perceptions that ranged from those of human-centered data sources [eg, existing relationship with a coroner’s office] to technology-based sources that were community preestablished substitutes to the HCS dashboard). Table 5 provides illustrative quotes for each of the primary themes under the community context that show how dashboards and their use may have been perceived when implemented.

Table 5.

Subthemes under contextual factors.

| Community data orientation | Illustrative quotes |
|---|---|
| Comfort with data | |
| Established tools and approaches | |

Discussion

Principal Findings

Our assessment indicated that the community stakeholders in the HCS found the CTH dashboards to be usable, as measured by the SUS, and easy to use and understand, as indicated by the themes identified through our TAM-informed interviews. Some respondents indicated the usefulness of the dashboards, with many indicating areas for improvement. From these findings, we have synthesized and prioritized the following gaps and lessons learned for future consideration, which are generalizable to community stakeholders engaged in dashboard use. In the spirit of Chen and Floridi [27], our lessons learned are specifically about different visualization pathways for use in dashboards among community stakeholders. Prior research has identified similar practices as supportive of the effective use (eg, greater engagement, cognitive alignment between end users, and decision aids) of dashboards. The lessons learned that we describe subsequently may help researchers design higher-fidelity dashboards that future scientific studies should consider when developing similar interventions and more general tools that integrate data visualizations for use among community stakeholders in community-oriented studies.

Prioritized Gaps in and Lessons Learned About Designing Dashboards for Community Stakeholders

Prioritized Gap: Cognitive Dissonance With the Dashboards

Many of the community stakeholders already had frontline knowledge of their community opioid crisis and its complexities. Hence, some community stakeholders may have seen the dashboards as tools simply providing hard data, which were secondary to their lived experiences within their communities and knowledge about contextual nuances with these communities. There may have been, arguably, a cognitive disconnect between what the community stakeholder expected from a dashboard and what the dashboard provided. This disconnect may have been exacerbated by factors such as adherence to the main HCS research protocol and purpose (eg, a brief timeline for development and programming before deployment and the prohibition of data downloads from visualization), use of suppression rules that masked values and contributed to the sparseness of data, lags in metrics due to reporting, and limited connections of the data with local resources for community stakeholders to act upon. Other challenges included balancing the need for “real-time” data with the validity of these data due to retrospective corrections.

Lesson 1: Use Storytelling via Dashboards

Cognitive and information science theories suggest the importance of aligning information representation formats provided by decision aids with mental representations required for tasks and cognitive styles of individuals [28-30]. We propose storytelling via dashboards as an effective, historically validated [31] approach to achieving this alignment. At a minimum, this involves providing basic data summaries in plain English, as done by the New York HCS site, and mixing and matching measures in graphs to allow the illustration of specific points, as adopted by the Ohio and Kentucky sites. Richer storytelling may involve multiple iterations or cocreations with community stakeholders that help craft the right set of qualitative contexts and information that situates quantitative data to evoke understandings that fit with internal mental models of the task and lived experiences [32,33]. Powerful stories could provide community stakeholders with structured templates to analyze, justify, and communicate data. Our findings suggested that there were indeed community stakeholders who were not comfortable with using data; individuals with such predispositions could find data presented as stories more meaningful, especially during decision-making processes.
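As an illustration of the minimal form of storytelling mentioned above (a basic data summary in plain English), the sketch below turns 2 values of a metric into a sentence. It is not the approach used by any HCS site; the function name, wording, and example numbers are hypothetical and synthetic.

```python
def plain_language_summary(metric: str, previous: int, current: int, period: str) -> str:
    """Describe the change in a metric between 2 time points in one sentence."""
    if current == previous:
        return f"{metric} held steady at {current} {period}."
    direction = "rose" if current > previous else "fell"
    change_word = "increase" if current > previous else "decrease"
    sentence = f"{metric} {direction} from {previous} to {current} {period}"
    if previous == 0:
        # A percentage change is undefined when the starting value is 0.
        return sentence + "."
    percent = abs(current - previous) / previous * 100
    return f"{sentence}, a {percent:.0f}% {change_word}."


if __name__ == "__main__":
    # Synthetic values for illustration only.
    print(plain_language_summary("Naloxone kits distributed", 120, 150, "between May and June"))
```

A sentence like this can sit beside the corresponding graph so that stakeholders who are less comfortable reading charts still receive the main point.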

Lesson 2: Link Actionable Insights to Useful Resources

Community stakeholders may require additional support with using insights garnered from dashboards. Dashboards can be seen as a mediator between data and a call to action to address specific issues. Moreover, dashboards can present structured sets of actions a community stakeholder can undertake, also known as an actionable impetus [34], as guidance on addressing a problem identified with the data [35-37]. For example, some community stakeholders in the HCS would have appreciated a set of recommendations for EBPs or resources within a local neighborhood that a coalition could have acted upon, given an outbreak of opioid deaths. The dashboard design could have included affordances (eg, alerts in the user interface) to create an impetus for action, an approach that has been described as an “actionable data dashboard” [34]. Such actionable dashboards could provide important links to specific issues with the opioid crisis, such as the linkage of distribution centers for naloxone kits to community hot spots where there is unequal access to these kits based on factors such as race and ethnicity [38].
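As one possible reading of such an affordance, the sketch below pairs a simple alert rule with a list of suggested actions. The measure name, the ratio threshold, the action texts, and all function and class names are hypothetical; this is an illustration of the idea under stated assumptions, not a feature of the CTH dashboards.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Alert:
    community: str
    message: str
    suggested_actions: List[str] = field(default_factory=list)


# Hypothetical mapping from a measure to actions a coalition could consider.
ACTIONS_BY_MEASURE: Dict[str, List[str]] = {
    "opioid_overdose_deaths": [
        "Review naloxone distribution sites near affected neighborhoods",
        "Share the coalition's list of local treatment resources",
    ],
}


def build_alert(
    community: str,
    measure: str,
    current: float,
    baseline: float,
    ratio_threshold: float = 1.5,  # assumed cutoff, for illustration only
) -> Optional[Alert]:
    """Return an Alert when the current value reaches the threshold ratio of baseline."""
    if baseline <= 0 or current / baseline < ratio_threshold:
        return None
    message = f"{measure} is {current / baseline:.1f}x the baseline in {community}"
    return Alert(community, message, list(ACTIONS_BY_MEASURE.get(measure, [])))


# Made-up values: 8 events this period against a baseline of 4.
alert = build_alert("Community A", "opioid_overdose_deaths", current=8, baseline=4)
```

The design choice here is that the alert carries its own suggested actions, so the user interface can show the data signal and the possible response together.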

Prioritized Gap: Gatekeeping of the Data and Dashboards

Access to the dashboards was a noteworthy barrier under perceived ease of use. Some study participants indicated that this barrier hindered the use of data to facilitate decision-making among community members. Restricting dashboard access to certain stakeholders was a study protocol requirement intended to prevent communities from benchmarking data across communities. However, the user and password verification requirements were seen as inconvenient, and access control was seen as a form of gatekeeping that limited information sharing with key stakeholders who did not have dashboard access and were critical to decision-making in communities.

Lesson 3: Use Processes and Tools That Promote Access and Sharing

Arguments to enhance the use of data include approaches that facilitate human-human interactions in the sensemaking of data within dashboards and visualizations [3,39,40]. In addition to storytelling, other practices include improving how study insights are shared with communities and how communities are encouraged to share insights; permissible sharing includes dashboard export features or embed codes so that data or narratives can be used in media, reports, presentations, websites, and social media content [41].

Prioritized Gap: Low Engagement With the Dashboards

Several themes (eg, data manageability, additional support, use conditional on data changes, and aesthetics) highlighted additional challenges to perceived ease of use and intention to use that could be represented by a broader concept used in the technological literature known as digital engagement [42-44]. The HCS missed opportunities to promote community stakeholder engagement with the CTH dashboards; enhancements could have been made in areas such as dashboard training and clearer protocols for how community stakeholders used the dashboards during coalition meetings.

Lesson 4: Use a Multisector and Interdisciplinary Approach to Understand and Improve Engagement With Data Visualizations

The use of audit log file data or eye tracking to monitor and characterize use and user preferences (eg, line chart vs bar graphs) can inform design decisions and tailored interventions to foster behavioral changes that support the effective use of data among community stakeholders [44-46]. Our study, while recognizing early on that log files can support engagement, faced challenges with implementing a standard data model to track visual preferences and visit frequencies among community stakeholders, a capability that could have supported systematic transformations to the dashboards. In addition, our findings demonstrated that the combined use of SUS scores and TAM-informed interview data was beneficial in obtaining a comprehensive perspective on how engagement, via usability, can be transformed. For example, prior research has indicated that barriers in usability may be overlooked if usability is measured by a single approach, such as exclusively using the SUS [47].
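A minimal sketch of how audit log data could be summarized into visit frequencies and chart preferences is shown below. The event layout (user identifier, visit date, and chart type), the function name, and the sample events are assumptions made for illustration; they do not describe the log format of the CTH dashboards.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, Set, Tuple

# Hypothetical event layout: (user_id, ISO date of visit, chart type viewed).
LogEvent = Tuple[str, str, str]


def summarize_log(events: Iterable[LogEvent]) -> Tuple[Dict[str, int], Counter]:
    """Count distinct visit days per user and total views per chart type."""
    visit_days: Dict[str, Set[str]] = defaultdict(set)
    chart_views: Counter = Counter()
    for user_id, day, chart_type in events:
        visit_days[user_id].add(day)   # several views on one day count as one visit
        chart_views[chart_type] += 1   # every view counts toward chart preference
    visits_per_user = {user: len(days) for user, days in visit_days.items()}
    return visits_per_user, chart_views


# Made-up events for two users.
events = [
    ("u1", "2021-06-01", "line"),
    ("u1", "2021-06-01", "bar"),
    ("u1", "2021-06-08", "line"),
    ("u2", "2021-06-03", "bar"),
    ("u2", "2021-06-03", "line"),
]

visits, views = summarize_log(events)
```

Summaries of this kind could be reviewed alongside SUS scores and interview data, so that observed use complements self-reported usability.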

The use of innovative training (eg, dashboard navigators and indexed videos) and workflow changes (eg, starting meetings with referencing insights from a dashboard) are 2 other practice-based examples that can foster engagement among community stakeholders by supporting the realignment of existing mental models and the building of new ones with visual representations to achieve specific tasks [48,49]. For example, the Massachusetts HCS site adopted “community data walks,” in which expert dashboard users provided active demonstrations to community stakeholders on how to use the CTH dashboards.

Our findings suggest that the fidelity of the cocreation process to develop the CTH dashboards had gaps. The HCS is one of the first large-scale applications of the cocreation process to design dashboards within the community context. The pragmatic, multisetting nature of the study reflects challenges in balancing a research agenda with the expectations of diverse communities. Interventions and strategies (eg, multiple plan-do-study-act cycles during the cocreation process) that can address gaps in fidelity in using cocreation could be adopted to ensure that the final outputs are indeed relevant to end users’ work and promote higher engagement. There were no “checklists” to support our implementation at this level of complexity, and we hope that the lessons we learned establish the foundations for such an approach for future endeavors.

Limitations

Our definition of community stakeholders may be idiosyncratic to our study and parent study research protocols. However, the 4-site design provides a diverse sample of individuals who represent different professional roles and social and demographic groups; the samples were not homogeneous across sites, including in terms of technology literacy, which was not assessed yet could shape end users’ experience with the dashboards [48]. The COVID-19 pandemic may have influenced perceptions of use of and intentions to use the CTH dashboards, given pandemic-driven competing demands, technology-mediated workflow, etc, experienced by the respondents. This may have resulted in conservative perceptions of dashboard usability; however, the public health domain is generally faced with competing demands and emerging situations, which might mitigate such notions. Our research study was relatively large in scope; hence, ensuring the fidelity of our assessment in general and the interviews specifically was challenging. However, as noted in the Methods section, we worked closely across sites to maintain rigor from study inception. We acknowledge that although the SUS is a validated tool, it may have lacked specificity for assessing CTH dashboards. Other TAM constructs (eg, external environment) and more updated versions of the TAM exist [50,51]. However, one of our original goals was to build a framework for dashboard use among community stakeholders; therefore, we chose to use a fundamental version of the TAM that both had the critical constructs for our focus and did not have other constructs that may have imposed a priori assumptions on dashboard usability from other fields and studies.

Conclusions

Data dashboards can be an evidence-based approach to support community-based public health decision-making. These technological interventions to visualize and interact with data can support transformations in community health, including during public health crises, such as the COVID-19 pandemic and the opioid epidemic. Our study is a novel assessment using both the SUS and TAM to examine the usability of dashboards among community stakeholders. Participants provided multifaceted perspectives on the usability of the CTH dashboards and their intention to use these dashboards. On the basis of our findings, we presented important gaps that motivated the consolidation of lessons learned regarding dashboard use among community stakeholders.

Acknowledgments

This research was supported by the National Institutes of Health (NIH) and the Substance Abuse and Mental Health Services Administration through the NIH HEAL (Helping to End Addiction Long-term) Initiative under the award numbers UM1DA049394, UM1DA049406, UM1DA049412, UM1DA049415, and UM1DA049417 (ClinicalTrials.gov Identifier: NCT04111939).

The authors wish to acknowledge the participation of the Healing Communities Study communities, community coalitions, and community advisory boards and state government officials who partnered with us on this study. The content is solely the responsibility of the authors and does not necessarily represent the official views of the NIH, the Substance Abuse and Mental Health Services Administration, or the NIH HEAL Initiative.

The authors are grateful to many individuals who were instrumental in the development of the Healing Communities Study. In particular, the authors acknowledge the work of those who supported coordination and the collection of data for this study.

Abbreviations

- COREQ: Consolidated Criteria for Reporting Qualitative Research

- CTH: Communities That HEAL

- DCC: data coordinating center

- EBP: evidence-based practice

- HCS: Healing Communities Study

- SUS: System Usability Scale

- TAM: technology adoption model

Semistructured group interview guide on dashboards.

Supplementary tables with additional data.

Footnotes

Authors' Contributions: All authors contributed to the design, writing, and review of the manuscript. NF led in the writing of the manuscript. RGO, JV, LG, EW, and MH contributed to the original draft. Formal analysis was conducted by NF, RGO, LG, YW, and MH. All authors reviewed and edited subsequent versions of the manuscript.

Conflicts of Interest: JV reports affiliation with the National Institute on Drug Abuse.

References

- 1. Lange SJ, Moore LV, Galuska DA. Data for decision-making: exploring the division of nutrition, physical activity, and obesity's data, trends, and maps. Prev Chronic Dis. 2019 Sep 26;16:E131. doi: 10.5888/pcd16.190043. https://europepmc.org/abstract/MED/31560645

- 2. Wu DT, Chen AT, Manning JD, Levy-Fix G, Backonja U, Borland D, Caban JJ, Dowding DW, Hochheiser H, Kagan V, Kandaswamy S, Kumar M, Nunez A, Pan E, Gotz D. Evaluating visual analytics for health informatics applications: a systematic review from the American Medical Informatics Association Visual Analytics Working Group Task Force on Evaluation. J Am Med Inform Assoc. 2019 Apr 01;26(4):314–23. doi: 10.1093/jamia/ocy190. https://europepmc.org/abstract/MED/30840080

- 3. Streeb D, El-Assady M, Keim DA, Chen M. Why visualize? Untangling a large network of arguments. IEEE Trans Vis Comput Graph. 2021 Mar;27(3):2220–36. doi: 10.1109/TVCG.2019.2940026.

- 4. Wahi MM, Dukach N. Visualizing infection surveillance data for policymaking using open source dashboarding. Appl Clin Inform. 2019 May;10(3):534–42. doi: 10.1055/s-0039-1693649. https://europepmc.org/abstract/MED/31340399

- 5. Fareed N, Swoboda CM, Lawrence J, Griesenbrock T, Huerta T. Co-establishing an infrastructure for routine data collection to address disparities in infant mortality: planning and implementation. BMC Health Serv Res. 2022 Jan 02;22:4. doi: 10.1186/s12913-021-07393-1. https://bmchealthservres.biomedcentral.com/articles/10.1186/s12913-021-07393-1

- 6. Fareed N, Swoboda CM, Lawrence J, Griesenbrock T, Huerta T. Co-establishing an infrastructure for routine data collection to address disparities in infant mortality: planning and implementation. BMC Health Serv Res. 2022 Jan 02;22(1):4. doi: 10.1186/s12913-021-07393-1. https://bmchealthservres.biomedcentral.com/articles/10.1186/s12913-021-07393-1

- 7. Hester G, Lang T, Madsen L, Tambyraja R, Zenker P. Timely data for targeted quality improvement interventions: use of a visual analytics dashboard for bronchiolitis. Appl Clin Inform. 2019 Jan;10(1):168–74. doi: 10.1055/s-0039-1679868. https://europepmc.org/abstract/MED/30841007

- 8. Kummer BR, Willey JZ, Zelenetz MJ, Hu Y, Sengupta S, Elkind MS, Hripcsak G. Neurological dashboards and consultation turnaround time at an academic medical center. Appl Clin Inform. 2019 Oct;10(5):849–58. doi: 10.1055/s-0039-1698465. https://europepmc.org/abstract/MED/31694054

- 9. Fareed N, Swoboda CM, Chen S, Potter E, Wu DT, Sieck CJ. U.S. COVID-19 state government public dashboards: an expert review. Appl Clin Inform. 2021 Mar;12(2):208–21. doi: 10.1055/s-0041-1723989. http://www.thieme-connect.com/DOI/DOI?10.1055/s-0041-1723989

- 10. Everts J. The dashboard pandemic. Dialogue Hum Geogr. 2020 Jun 17;10(2):260–4. doi: 10.1177/2043820620935355.

- 11. Vahedi A, Moghaddasi H, Asadi F, Hosseini AS, Nazemi E. Applications, features and key indicators for the development of Covid-19 dashboards: a systematic review study. Inform Med Unlocked. 2022;30:100910. doi: 10.1016/j.imu.2022.100910. https://linkinghub.elsevier.com/retrieve/pii/S2352-9148(22)00060-0

- 12. Dixon BE, Grannis SJ, McAndrews C, Broyles AA, Mikels-Carrasco W, Wiensch A, Williams JL, Tachinardi U, Embi PJ. Leveraging data visualization and a statewide health information exchange to support COVID-19 surveillance and response: application of public health informatics. J Am Med Inform Assoc. 2021 Jul 14;28(7):1363–73. doi: 10.1093/jamia/ocab004. https://europepmc.org/abstract/MED/33480419

- 13. Chandler RK, Villani J, Clarke T, McCance-Katz EF, Volkow ND. Addressing opioid overdose deaths: the vision for the HEALing communities study. Drug Alcohol Depend. 2020 Dec 01;217:108329. doi: 10.1016/j.drugalcdep.2020.108329. https://linkinghub.elsevier.com/retrieve/pii/S0376-8716(20)30494-4

- 14. Wu E, Villani J, Davis A, Fareed N, Harris DR, Huerta TR, LaRochelle MR, Miller CC, Oga EA. Community dashboards to support data-informed decision-making in the HEALing communities study. Drug Alcohol Depend. 2020 Dec 01;217:108331. doi: 10.1016/j.drugalcdep.2020.108331. https://linkinghub.elsevier.com/retrieve/pii/S0376-8716(20)30496-8

- 15. Mao AY, Chen C, Magana C, Caballero Barajas K, Olayiwola JN. A mobile phone-based health coaching intervention for weight loss and blood pressure reduction in a national payer population: a retrospective study. JMIR Mhealth Uhealth. 2017 Jun 08;5(6):e80. doi: 10.2196/mhealth.7591. https://mhealth.jmir.org/2017/6/e80/

- 16. Davis FD. Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Q. 1989 Sep;13(3):319–40. doi: 10.2307/249008.

- 17. Brooke J. Usability Evaluation In Industry. Boca Raton, FL: CRC Press; 1996. SUS: a 'quick and dirty' usability scale.

- 18. HEALing Communities Study Consortium. The HEALing (Helping to End Addiction Long-term SM) communities study: protocol for a cluster randomized trial at the community level to reduce opioid overdose deaths through implementation of an integrated set of evidence-based practices. Drug Alcohol Depend. 2020 Dec 01;217:108335. doi: 10.1016/j.drugalcdep.2020.108335. https://linkinghub.elsevier.com/retrieve/pii/S0376-8716(20)30500-7

- 19. Harris PA, Taylor R, Minor BL, Elliott V, Fernandez M, O'Neal L, McLeod L, Delacqua G, Delacqua F, Kirby J, Duda SN. The REDCap consortium: building an international community of software platform partners. J Biomed Inform. 2019 Jul;95:103208. doi: 10.1016/j.jbi.2019.103208. https://linkinghub.elsevier.com/retrieve/pii/S1532-0464(19)30126-1

- 20. Fram SM. The constant comparative analysis method outside of grounded theory. Qual Rep. 2013;18(1):1–25. doi: 10.46743/2160-3715/2013.1569.

- 21. NVivo (version 12) Lumivero. [2022-12-15]. https://www.qsrinternational.com/nvivo-qualitative-data-analysis-software/home

- 22. Tong A, Sainsbury P, Craig J. Consolidated criteria for reporting qualitative research (COREQ): a 32-item checklist for interviews and focus groups. Int J Qual Health Care. 2007 Dec;19(6):349–57. doi: 10.1093/intqhc/mzm042.

- 23. Gorbenko K, Mohammed A, Ezenwafor EI, Phlegar S, Healy P, Solly T, Nembhard I, Xenophon L, Smith C, Freeman R, Reich D, Mazumdar M. Innovating in a crisis: a qualitative evaluation of a hospital and Google partnership to implement a COVID-19 inpatient video monitoring program. J Am Med Inform Assoc. 2022 Aug 16;29(9):1618–30. doi: 10.1093/jamia/ocac081. https://europepmc.org/abstract/MED/35595236

- 24. Colorafi KJ, Evans B. Qualitative descriptive methods in health science research. HERD. 2016 Jul;9(4):16–25. doi: 10.1177/1937586715614171. https://europepmc.org/abstract/MED/26791375

- 25. Kortum PT, Bangor A. Usability ratings for everyday products measured with the system usability scale. Int J Hum Comput Interact. 2013 Jan 03;29(2):67–76. doi: 10.1080/10447318.2012.681221.

- 26. Bangor A, Kortum PT, Miller JT. An empirical evaluation of the system usability scale. Int J Hum Comput Interact. 2008 Jul 30;24(6):574–94. doi: 10.1080/10447310802205776.

- 27. Chen M, Floridi L. An analysis of information visualisation. Synthese. 2012 Sep 26;190:3421–38. doi: 10.1007/s11229-012-0183-y.

- 28. Luo W. User choice of interactive data visualization format: the effects of cognitive style and spatial ability. Decis Support Syst. 2019 Jul;122:113061. doi: 10.1016/j.dss.2019.05.001.

- 29. Vessey I. Cognitive fit: a theory-based analysis of the graphs versus tables literature. Decis Sci. 1991 Mar;22(2):219–40. doi: 10.1111/j.1540-5915.1991.tb00344.x.

- 30. Engin A, Vetschera R. Information representation in decision making: the impact of cognitive style and depletion effects. Decis Support Syst. 2017 Nov;103:94–103. doi: 10.1016/j.dss.2017.09.007.

- 31. Kosara R, Mackinlay J. Storytelling: the next step for visualization. Computer. 2013 May;46(5):44–50. doi: 10.1109/mc.2013.36.

- 32. Schuster R, Koesten L, Gregory K, Möller T. "Being simple on complex issues" -- accounts on visual data communication about climate change. arXiv. doi: 10.1109/TVCG.2024.3352282. Preprint posted online November 18, 2022.

- 33. Anderson KL. Analytical engagement - constructing new knowledge generated through storymaking within co-design workshops. In: Anderson KL, Cairns G, editors. Participatory Practice in Space, Place, and Service Design. Wilmington, DE: Vernon Press; 2022.

- 34. Verhulsdonck G, Shah V. Making actionable metrics “actionable”: the role of affordances and behavioral design in data dashboards. J Bus Tech Commun. 2021 Oct 29;36(1):114–9. doi: 10.1177/10506519211044502.

- 35. Cheng CK, Ip DK, Cowling BJ, Ho LM, Leung GM, Lau EH. Digital dashboard design using multiple data streams for disease surveillance with influenza surveillance as an example. J Med Internet Res. 2011 Oct 14;13(4):e85. doi: 10.2196/jmir.1658. https://www.jmir.org/2011/4/e85/

- 36. Fanzo J, Haddad L, McLaren R, Marshall Q, Davis C, Herforth A, Jones A, Beal T, Tschirley D, Bellows A, Miachon L, Gu Y, Bloem M, Kapuria A. The food systems dashboard is a new tool to inform better food policy. Nat Food. 2020 May 13;1:243–6. doi: 10.1038/s43016-020-0077-y.

- 37. Eckerson WW. Performance Dashboards: Measuring, Monitoring, and Managing Your Business. Hoboken, NJ: John Wiley & Sons; 2005.

- 38. Nolen S, Zang X, Chatterjee A, Behrends CN, Green TC, Linas BP, Morgan JR, Murphy SM, Walley AY, Schackman BR, Marshall BD. Evaluating equity in community-based naloxone access among racial/ethnic groups in Massachusetts. Drug Alcohol Depend. 2022 Dec 01;241:109668. doi: 10.1016/j.drugalcdep.2022.109668. https://europepmc.org/abstract/MED/36309001

- 39. van Wijk JJ. The value of visualization. Proceedings of the VIS 05. IEEE Visualization; VISUAL 2005; October 23-28, 2005; Minneapolis, MN. 2005.

- 40. Bruner J. Acts of Meaning: Four Lectures on Mind and Culture. Cambridge, MA: Harvard University Press; 1992.

- 41. Nelson LA, Spieker AJ, Mayberry LS, McNaughton C, Greevy RA. Estimating the impact of engagement with digital health interventions on patient outcomes in randomized trials. J Am Med Inform Assoc. 2021 Dec 28;29(1):128–36. doi: 10.1093/jamia/ocab254. https://europepmc.org/abstract/MED/34963143

- 42. Short CE, DeSmet A, Woods C, Williams SL, Maher C, Middelweerd A, Müller AM, Wark PA, Vandelanotte C, Poppe L, Hingle MD, Crutzen R. Measuring engagement in eHealth and mHealth behavior change interventions: viewpoint of methodologies. J Med Internet Res. 2018 Nov 16;20(11):e292. doi: 10.2196/jmir.9397. https://www.jmir.org/2018/11/e292/

- 43. Perski O, Blandford A, West R, Michie S. Conceptualising engagement with digital behaviour change interventions: a systematic review using principles from critical interpretive synthesis. Transl Behav Med. 2017 Jun;7(2):254–67. doi: 10.1007/s13142-016-0453-1. https://europepmc.org/abstract/MED/27966189

- 44. Fareed N, Walker D, Sieck CJ, Taylor R, Scarborough S, Huerta TR, McAlearney AS. Inpatient portal clusters: identifying user groups based on portal features. J Am Med Inform Assoc. 2019 Jan 01;26(1):28–36. doi: 10.1093/jamia/ocy147. https://europepmc.org/abstract/MED/30476122

- 45. Morgan E, Schnell P, Singh P, Fareed N. Outpatient portal use among pregnant individuals: cross-sectional, temporal, and cluster analysis of use. Digit Health. 2022 Jul 06;8:20552076221109553. doi: 10.1177/20552076221109553. https://journals.sagepub.com/doi/abs/10.1177/20552076221109553?url_ver=Z39.88-2003&rfr_id=ori:rid:crossref.org&rfr_dat=cr_pub0pubmed

- 46. Nadj M, Maedche A, Schieder C. The effect of interactive analytical dashboard features on situation awareness and task performance. Decis Support Syst. 2020 Aug;135:113322. doi: 10.1016/j.dss.2020.113322. https://europepmc.org/abstract/MED/32834262

- 47. Tieu L, Schillinger D, Sarkar U, Hoskote M, Hahn KJ, Ratanawongsa N, Ralston JD, Lyles CR. Online patient websites for electronic health record access among vulnerable populations: portals to nowhere? J Am Med Inform Assoc. 2017 Apr 01;24(e1):e47–54. doi: 10.1093/jamia/ocw098. https://europepmc.org/abstract/MED/27402138

- 48. Chrysantina A, Sæbø JI. Assessing user-designed dashboards: a case for developing data visualization competency. Proceedings of the 15th IFIP WG 9.4 International Conference on Social Implications of Computers in Developing Countries; ICT4D 2019; May 1-3, 2019; Dar es Salaam, Tanzania. 2019.

- 49. McAlearney AS, Walker DM, Sieck CJ, Fareed N, MacEwan SR, Hefner JL, Di Tosto G, Gaughan A, Sova LN, Rush LJ, Moffatt-Bruce S, Rizer MK, Huerta TR. Effect of in-person vs video training and access to all functions vs a limited subset of functions on portal use among inpatients: a randomized clinical trial. JAMA Netw Open. 2022 Sep 01;5(9):e2231321. doi: 10.1001/jamanetworkopen.2022.31321. https://europepmc.org/abstract/MED/36098967 [Retracted]

- 50. Venkatesh V, Davis FD. A theoretical extension of the technology acceptance model: four longitudinal field studies. Manag Sci. 2000 Feb;46(2):186–204. doi: 10.1287/mnsc.46.2.186.11926.

- 51. Venkatesh V, Morris MG, Davis GB, Davis FD. User acceptance of information technology: toward a unified view. MIS Q. 2003 Sep;27(3):425–78. doi: 10.2307/30036540.
