Abstract

Understanding the behavioral and neural dynamics of social interactions is a goal of contemporary neuroscience. Many machine learning methods have emerged in recent years to make sense of the complex video and neurophysiological data that result from these experiments. Less focus has been placed on understanding how animals process acoustic information, including social vocalizations. A critical step to bridge this gap is determining the senders and receivers of acoustic information in social interactions. While sound source localization (SSL) is a classic problem in signal processing, existing approaches are limited in their ability to localize animal-generated sounds in standard laboratory environments. Advances in deep learning methods for SSL are likely to help address these limitations; however, there are currently no publicly available models, datasets, or benchmarks to systematically evaluate SSL algorithms in the domain of bioacoustics. Here, we present the VCL Benchmark: the first large-scale dataset for benchmarking SSL algorithms in rodents. We acquired synchronized video and multi-channel audio recordings of 767,295 sounds with annotated ground-truth sources across 9 conditions. The dataset provides benchmarks that evaluate SSL performance on real data, simulated acoustic data, and a mixture of real and simulated data. We intend for this benchmark to facilitate knowledge transfer between the neuroscience and acoustic machine learning communities, which have had limited overlap.

1. Introduction

An ongoing renaissance of ethology in the field of neuroscience has shown the importance of conducting experiments in naturalistic contexts, particularly social interactions [38, 1]. Most experiments in social neuroscience have focused on relatively constrained contexts over short timescales; however, an emerging paradigm shift is leading laboratories to adopt longitudinal experiments in semi-natural or natural environments [49]. This shift brings significant data-analytic challenges (such as how to track individuals in groups of socially interacting animals) that necessitate collaboration between the fields of machine learning and neuroscience [10, 43].

Substantial progress has been made in applying machine vision to multi-animal pose tracking and action recognition [44, 31, 36, 55]; however, applications of machine audio to the analysis of animal-generated social sounds (e.g. vocalizations or footstep sounds) have only recently begun [48, 19]. To study the dynamics of vocal communication and its neural basis, ethologists and neuroscientists have developed a multitude of approaches to attribute vocal calls to individual animals within an interacting social group. However, many existing approaches to vocalization attribution require specialized experimental apparatuses and paradigms that hinder the expression of natural social behaviors. For example, invasive surgical procedures, such as affixing custom-built miniature sensors to each animal [16, 47, 60], are often needed to obtain precise measurements of which individual is vocalizing. In addition to being labor intensive and species specific, these surgeries are often not tractable in very small or young animals, may alter an animal’s natural behavioral repertoire, and are not scalable to large social groups. Thus, there is considerable interest in developing non-invasive vocal call attribution methods that work off the shelf in laboratory settings.

Sound source localization (SSL) is a decades-old problem in acoustical signal processing, and several neuroscience groups have adapted classical algorithms from this literature to localize animal sounds [40, 53, 62]. These approaches can work reasonably well in specialized, acoustically transparent environments; however, they tend to fail in the reverberant environments (see Supplement) that are required for next-generation naturalistic experiments.

Data-driven modeling approaches with fewer idealized assumptions may be expected to achieve greater performance [65]. Indeed, in the broader audio machine learning community, deep networks are commonly used to localize sounds [21]. Typically, these approaches have been targeted at much larger environments—e.g. localizing sounds across multi-room home environments [51]. To our knowledge, no benchmark datasets or deep network models have been developed for localizing sounds emitted by small animals (e.g. rodents) interacting in common laboratory environments (e.g. a spatial footprint less than one square meter). To address this, we present benchmark datasets for training and evaluating SSL techniques in reverberant conditions.

2. Background and Related Work

2.1. Existing Benchmarks

Acoustic engineers are interested in SSL algorithms for a variety of downstream applications. For example, localization can enable audio source separation [34] by disentangling simultaneous sounds emanating from different locations. Other applications include the development of smart home and assisted living technologies [18], teleconferencing [61], and human-robot interactions [32]. To facilitate these aims, several benchmark datasets have been developed in recent years including the L3DAS challenges [22, 23, 20], LOCATA challenge [14], and STARSS23 [51].

Notably, all of these applications and associated benchmarks are (a) focused on the range of sound frequencies audible to humans, and (b) focused on large environments such as offices and household rooms with relatively low reverberation. Our benchmark differs along both of these dimensions, which are important for neuroscience and ethology applications.

Many rodents vocalize and detect sounds in both sonic and ultrasonic ranges. For example, mice, rats, and gerbils collectively have hearing sensitivity that spans ~50–100,000 Hz with vocalizations spanning ~100–100,000 Hz [42]. Localizing sounds across a broad spectrum of frequencies introduces interesting complications to the SSL problem. Phase differences across microphones carry less reliable information for higher frequency sounds (see e.g. [27]). Moreover, a microphone’s spatial sensitivity profile will generally be frequency dependent (see microphone specifications for ultrasonic condenser microphone CM16-CMPA from Avisoft Bioacoustics). Therefore, sounds emanating from the same location with the same source volume but distinct frequencies can register with unique level difference profiles across microphones. Thus, different acoustical computations are required to perform SSL for high and low frequency sounds. Indeed, we find that deep networks trained on low frequency sounds in our benchmark fail to generalize when tested on high frequency sounds (see Supplement).
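One way to see why phase cues degrade at high frequencies is spatial aliasing. For a microphone pair separated by d, the time difference of arrival lies in [-d/c, d/c], so the phase difference 2πfτ stays within [-π, π] only for f ≤ c/(2d); above that, several source positions map to the same measured phase. The sketch below assumes free-field propagation, c = 343 m/s, and a hypothetical 0.3 m spacing (not a measured VCL microphone pair).

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, air at roughly 20 °C (assumed)


def max_unambiguous_freq(spacing_m: float, c: float = SPEED_OF_SOUND) -> float:
    """Highest frequency (Hz) at which the inter-microphone phase difference cannot wrap.

    The time difference of arrival lies in [-d/c, d/c], so the phase difference
    2*pi*f*tau stays within [-pi, pi] only if f <= c / (2 * d).
    """
    return c / (2.0 * spacing_m)


def phase_wraps(freq_hz: float, spacing_m: float, c: float = SPEED_OF_SOUND) -> int:
    """Full 2*pi cycles spanned by the worst-case (endfire) phase difference."""
    max_phase = 2.0 * math.pi * freq_hz * spacing_m / c
    return int(max_phase // (2.0 * math.pi))


d = 0.3  # hypothetical microphone spacing in metres
print(f"phase is unambiguous below {max_unambiguous_freq(d):.0f} Hz")
for f in (500.0, 5_000.0, 50_000.0):  # sonic to ultrasonic
    print(f"{f:>8.0f} Hz: {phase_wraps(f, d)} full phase wraps")
```

At ultrasonic frequencies the worst-case phase difference wraps dozens of times across such a pair, which is one reason phase alone is an unreliable cue there.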

Moreover, many model organisms (rodents, birds, and bats) are experimentally monitored in laboratory environments made of rigid, reverberant materials. These materials are necessary to prevent animals from escaping experimental arenas, which is of particular concern in longitudinal semi-natural experiments. For example, when we attempted to mitigate reverberation using specialized equipment such as anechoic foam and acoustically transparent mesh, we found that gerbils climbed or chewed through the material after a short time in the arena. Therefore, hard plastic materials are required, even at the expense of greater reverberation. Thus, the prevalence and character of sound reflections is a distinctive feature of the VCL benchmark. For variety, we also include benchmark data from an environment with sound-absorbent wall material (E3).

2.2. Classical work on SSL in engineering and neuroscience

Conventional methods for SSL from acoustic signal processing are summarized in [11]. These methods primarily use differences in arrival times or signal phase across microphones to estimate sources; differences in volume levels are often ignored as a source of information (but see [3]). We use the Mouse Ultrasonic Source Estimation (MUSE) tool [40, 62] as a representative stand-in for these classic approaches in our benchmark experiments. An alternative method based on arrival times was recently proposed by Sterling, Teunisse, and Englitz [54] (see also [41]).
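The arrival-time family of methods can be illustrated with the generalized cross-correlation with phase transform (GCC-PHAT), a standard way to estimate the time difference of arrival (TDOA) between two microphones. This is a minimal sketch of that textbook technique, not of MUSE or of the method in [54]; the synthetic white-noise signal and the 2 ms search window are assumptions.

```python
from typing import Optional

import numpy as np


def gcc_phat_tdoa(x: np.ndarray, y: np.ndarray, fs: float,
                  max_tau: Optional[float] = None) -> float:
    """Estimate how much later y arrives than x (in seconds) with GCC-PHAT."""
    n = len(x) + len(y)  # zero-pad so the correlation is linear, not circular
    cross = np.fft.rfft(y, n=n) * np.conj(np.fft.rfft(x, n=n))
    cross /= np.abs(cross) + 1e-12  # PHAT weighting: keep phase, drop magnitude
    cc = np.fft.irfft(cross, n=n)
    max_shift = n // 2
    if max_tau is not None:  # restrict the search to physically possible delays
        max_shift = min(int(fs * max_tau), max_shift)
    # Reorder so index 0 corresponds to lag -max_shift and the end to +max_shift
    cc = np.concatenate((cc[n - max_shift:], cc[: max_shift + 1]))
    lag = int(np.argmax(np.abs(cc))) - max_shift
    return lag / fs


fs = 125_000.0  # one of the VCL audio sampling rates
rng = np.random.default_rng(0)
x = rng.standard_normal(4096)
true_delay = 37  # samples; y is x delayed by this amount
y = np.concatenate((np.zeros(true_delay), x[:-true_delay]))
est = gcc_phat_tdoa(x, y, fs, max_tau=0.002)
print(f"estimated delay: {est * fs:.1f} samples")
```

Given TDOAs for several microphone pairs and known microphone positions, a source location can then be found by intersecting the corresponding hyperbolae or, equivalently, by a grid search over candidate positions.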

Neural circuit mechanisms of SSL have been extensively studied in model organisms like barn owls, which utilize exquisite SSL capabilities to hunt prey [29]. Neurons in the early auditory system represent both interaural timing and level differences in multiple animal species [9, 4, 7]. Behavioral studies in humans also establish the importance of both interaural timing and level differences [5], and the relative importance of these cues depends on sound frequency and the level of sound reverberation, among other factors [33, 27, 15]. Altogether, the neuroscience and psychophysics literature establishes that animals are adept at localizing sounds in reverberant environments. Moreover, in contrast to many classical SSL algorithms that leverage phase differences across audio waveforms, humans and animals use a complex combination of acoustical cues to localize sounds.

2.3. Deep learning approaches to SSL

SSL algorithms must account for a variety of event-specific factors, including sound frequency, volume, and reverberation. It is challenging to rationally engineer an algorithm that accounts for all of these factors, and the acoustic machine learning community has therefore increasingly turned to deep neural networks (DNNs) to perform SSL. Grumiaux et al. [21] provide a recent and comprehensive review of this literature, including popular architectures, datasets, and simulation methods. Researchers have prototyped a variety of preprocessing steps, such as converting raw audio to spectrograms. In our experiments, we apply DNNs with 1D convolutional layers directly to raw audio waveforms, which is a reasonable standard for benchmarking purposes (see e.g. [59]). Similar to the existing SSL benchmarks listed above, the vast majority of published DNN models have focused on large home or office environments, which differ substantially from our applications of interest.

2.4. Acoustic simulations

Across a variety of machine learning tasks, DNNs tend to require large amounts of training data [25]. This is problematic, since it is labor intensive to collect ground truth localization data and curate the result to ensure accurate labels. To overcome this limitation, there is recent interest in leveraging acoustic simulations to supplement DNN training sets. A popular simulation approach is the image source method (ISM) [2]. ISM is computationally inexpensive, relative to alternatives, and preserves much of the spatial information necessary for SSL by accurately modeling the early reflections of sound events. ISM-based approaches to SSL using white noise [8] as well as music, speech and other sound events [30] have shown success when evaluated on data also generated from the ISM.
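The core idea of the ISM for a rectangular ("shoebox") room fits in a few lines: each wall reflection is replaced by a mirrored copy of the source, and the RIR is a sum of delayed, attenuated impulses from all images up to some reflection order [2]. A minimal sketch, assuming one frequency-independent reflection coefficient `beta` for every wall, omnidirectional microphones, 1/r spreading, delays rounded to the nearest sample, and hypothetical source and microphone positions; it is not the simulation code released with VCL, which also accounts for microphone directivity and estimated absorption. General-purpose packages such as pyroomacoustics implement the full method.

```python
import itertools

import numpy as np


def image_sources(room, src, max_order):
    """Image-source positions and reflection orders for a shoebox room.

    room: (Lx, Ly, Lz) in metres, with walls at 0 and L on each axis.
    src: (x, y, z) source position inside the room.
    """
    room = np.asarray(room, dtype=float)
    src = np.asarray(src, dtype=float)
    positions, orders = [], []
    for n in itertools.product(range(-max_order, max_order + 1), repeat=3):
        for q in itertools.product((0, 1), repeat=3):
            n_arr, q_arr = np.array(n), np.array(q)
            # Reflections off the two walls of each axis: |n - q| + |n|
            order = int(np.sum(np.abs(n_arr - q_arr) + np.abs(n_arr)))
            if order > max_order:
                continue
            positions.append((1 - 2 * q_arr) * src + 2 * n_arr * room)
            orders.append(order)
    return np.array(positions), np.array(orders)


def shoebox_rir(room, src, mic, fs, beta=0.9, max_order=3, c=343.0):
    """Sum of delayed impulses, each scaled by beta**order / (4*pi*distance)."""
    pos, order = image_sources(room, src, max_order)
    dist = np.linalg.norm(pos - np.asarray(mic, dtype=float), axis=1)
    delays = np.round(dist / c * fs).astype(int)  # nearest-sample delays
    rir = np.zeros(delays.max() + 1)
    np.add.at(rir, delays, beta ** order / (4 * np.pi * dist))
    return rir


room = (0.615, 0.615, 0.425)  # E3 dimensions from Table 2
src, mic = (0.30, 0.20, 0.02), (0.60, 0.60, 0.40)  # hypothetical positions
rir = shoebox_rir(room, src, mic, fs=250_000)
print(len(image_sources(room, src, 1)[0]), "sources up to first order")
```

Convolving a dry vocalization with such an RIR (e.g. `np.convolve(call, rir)`) gives a simulated recording for one microphone; repeating this per microphone yields a multi-channel example.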

Recent work has shown that use of room simulations generated using the ISM can also benefit model performance on real-world data [26] and can improve robustness by simulating a wider range of acoustic conditions than is present in an existing training dataset [46], despite perceptual limitations of the ISM. Given these trends in the field, our dataset release includes simulated environments and code for performing ISM simulations.

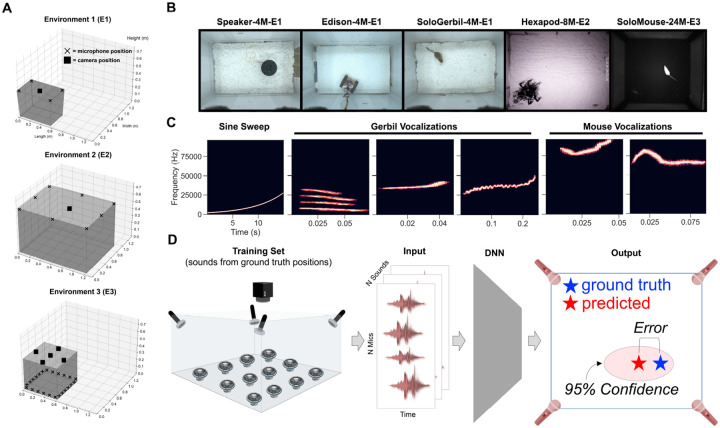

3. The VCL Dataset

The VCL Dataset consists of raw multi-channel audio and image data from 770,547 sound events, with the ground-truth 2D position of each sound source established by an overhead camera. We recorded synchronized audio (125 or 250 kHz sampling rate) and video (30 or 150 Hz frame rate) during sound-generating events from point sources, either a speaker or real rodents. Sound events were sampled across three environments that vary in size, microphone array geometry, and building material (Figure 1A–B, Tables 1–2). Ground-truth positions were extracted from the video stream using SLEAP [44] or OpenCV, and vocal events from real rodents were segmented from the audio stream using DAS [52]. For speaker-playback datasets, sound event timestamps were either recorded by a National Instruments data acquisition device or pre-computed and used to generate a WAV file with known sound event onset times (see Supplement for additional detail).

Figure 1:

Overview of VCL benchmark. (A) Schematics of three laboratory arenas summarized in Table 2 showing relative size and positions of mics (X’s) and cameras (squares). (B) Top-down views of different environments and training data generation modalities. (C) Examples of stimuli used for playback from Speaker, Edison, Earbud, and Hexapod datasets. (D) Schematic of pipeline depicting inputs (raw audio) and outputs (95% confidence interval).

Table 1:

Summary of datasets. Datasets marked in blue were used as training and test sets when benchmarking SSL (Task 1). Datasets marked in red were used as test sets when benchmarking sound attribution (Task 2).

| Name | # Samples | Benchmark use |
|---|---|---|
| Speaker-4M-E1 | 70,914 | Task 1 (blue) |
| Edison-4M-E1 | 266,877 | Task 1 (blue) |
| GerbilEarbud-4M-E1 | 7,698 | - |
| SoloGerbil-4M-E1 | 61,513 | Task 1 (blue) |
| DyadGerbil-4M-E1 | 653 | Task 2 (red) |
| Hexapod-8M-E2 | 156,900 | Task 1 (blue) |
| MouseEarbud-24M-E3 | 200,000 | Task 1 (blue) |
| SoloMouse-24M-E3 | 549 | - |
| DyadMouse-24M-E3 | 2,191 | Task 2 (red) |

Table 2:

Summary of environments. The final two characters of each dataset name (see Table 1) specify the environment in which it was collected.

| Name | # Mics | Top (m) | Bottom (m) | Height (m) |
|---|---|---|---|---|
| E1 | 4 | 0.61595 × 0.41275 | 0.5588 × 0.3556 | 0.3683 |
| E2 | 8 | 1.2182 × 0.9144 | 1.2182 × 0.9144 | 0.6096 |
| E3 | 24 | 0.615 × 0.615 | 0.615 × 0.615 | 0.425 |

For datasets that involved speaker playback, we primarily used rodent vocalizations as stimuli (Figure 1C). In addition, we played sine sweeps in each environment, which were used to compute a room impulse response (RIR; see Section 3.5).
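A common way to obtain an RIR from a sine-sweep measurement is to deconvolve the microphone recording by the played sweep in the frequency domain. The sketch below uses a linear sweep and regularized spectral division on a synthetic two-tap "room"; the sweep type, band, duration, and regularization constant `eps` are illustrative assumptions, and the measurement procedure used for VCL may differ (exponential sweeps with inverse filtering are also widely used).

```python
import numpy as np


def linear_sweep(f0, f1, duration, fs):
    """Linear sine sweep from f0 to f1 Hz over `duration` seconds."""
    t = np.arange(int(duration * fs)) / fs
    return np.sin(2 * np.pi * (f0 * t + 0.5 * (f1 - f0) / duration * t ** 2))


def deconvolve_rir(recording, sweep, eps=1e-3):
    """Estimate an RIR by regularized spectral division of recording by sweep."""
    n = len(recording) + len(sweep)
    R = np.fft.rfft(recording, n)
    S = np.fft.rfft(sweep, n)
    # Regularization keeps bins outside the sweep band from blowing up
    H = R * np.conj(S) / (np.abs(S) ** 2 + eps * np.max(np.abs(S)) ** 2)
    return np.fft.irfft(H, n)


fs = 250_000
sweep = linear_sweep(500.0, 100_000.0, 0.05, fs)
true_rir = np.zeros(2000)
true_rir[[100, 450]] = [1.0, 0.5]  # toy room: direct path plus one echo
recording = np.convolve(sweep, true_rir)  # what a microphone would capture
est_rir = deconvolve_rir(recording, sweep)
print(int(np.argmax(np.abs(est_rir))))
```

The estimate is a band-limited version of the true response, so only the frequency range covered by the sweep is recovered.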

3.1. Speaker Datasets

The Speaker Dataset (Speaker-4M-E1) was generated by repeatedly presenting five characteristic gerbil vocal calls and a white noise stimulus at three volume levels (18 total stimulus classes) through an overhead Fountek NeoCd1.0 1.5” Ribbon Tweeter speaker. Between every set of presentations, the speaker was manually shifted two centimeters to trace a grid of roughly 400 points along the cage floor. This procedure yielded a dataset of 70,914 presentations spanning the 18 stimulus classes. Gerbil vocalizations range in frequency from approximately 0.5 to 60 kHz, and different vocalizations correspond to different types of social interactions in nature [57]. In this study, we selected a diverse set of common vocal types that vary in frequency range and ethological meaning.

3.2. Robot Datasets

Generating the Speaker Dataset was labor intensive because the speaker had to be moved manually, which made this procedure impractical for generating additional training datasets at scale, both in number of samples and in spatial coverage. To get around this issue, we developed two robotic approaches for autonomous playback of sound events. The Edison and Hexapod Datasets (Edison-4M-E1, Hexapod-8M-E2) were generated by periodically playing vocalizations through miniature speakers affixed to the robots as they performed a pseudo-random walk around the environment. The vocalizations used were sampled from a longitudinal recording of gerbil families [45].

3.3. Earbud Datasets

Speaker and robotic playback of vocalizations may not accurately represent the spatial usage and direction of vocalizations in real animals. To address this, we acquired two “Earbud” datasets (GerbilEarbud-4M-E1, MouseEarbud-24M-E3), in which gerbils or mice freely explored their environment with an earbud surgically affixed to their skull. We then played species-typical vocalizations out of the earbud while animals exhibited a range of natural behaviors.

3.4. Solo/Dyad Gerbil & Mouse Datasets

Although isolated animals usually do not vocalize, we found that adolescent gerbils produce antiphonal responses to conspecific vocalizations played through a speaker. We leveraged this behavior to generate a large-scale dataset, SoloGerbil-4M-E1, containing real gerbil-generated vocalizations in isolation. In addition, we elicited solo vocalizations in male mice (SoloMouse-24M-E3) by allowing female mice in estrus to explore the environment prior to male exploration.

Our ultimate goal is to use sound source estimates to attribute vocalizations to individuals in a group of socially interacting animals. To this end, we acquired vocalizations from pairs of interacting gerbils and mice (DyadGerbil-4M-E1, DyadMouse-24M-E3). Although we are unable to determine the ground-truth position of vocalizations recorded from these interactions, we do know the locations of both potential sources and can therefore ascertain whether our model generates predictions with zero, one, or two animals within its confidence interval (see Task 2 below).

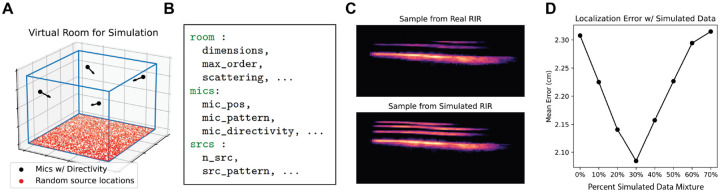

3.5. Synthetic Datasets

Since DNNs often require large training datasets and generation of datasets in the domain of SSL is laborious, we explored the use of acoustic simulations for supplementing real training data (Figure 2). We generated in silico models of our three environments accounting for physical measurements of the geometry, microphone placement, microphone directivity, and estimates of the material absorption coefficients (calculated via the inverse Sabine formula on room impulse response measurements with a sine sweep excitation). Code to reproduce these simulations and adapt them to new environments is included in our code package accompanying the VCL benchmark. In preliminary experiments, we found that training DNNs on mixtures of real and simulated data can benefit performance (Figure 2D), but we do not include simulated data in our benchmark experiments described below.
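The inverse Sabine step can be sketched as follows: estimate the reverberation time T60 from an RIR by Schroeder backward integration and a linear fit to the resulting energy decay curve, then solve Sabine's formula T60 = 0.161 V / (S ᾱ) for a single mean absorption coefficient ᾱ (V in m³, S in m²). Assumptions: a synthetic exponentially decaying RIR stands in for a measurement, the room is treated as a box with E3's dimensions, and the fit range is -5 to -25 dB; the per-surface coefficients used in the VCL simulations may have been derived differently.

```python
import numpy as np


def schroeder_t60(rir, fs, fit_db=(-5.0, -25.0)):
    """Estimate T60 (s) from an RIR via Schroeder backward integration.

    A line is fit to the energy decay curve between fit_db[0] and fit_db[1] dB
    and extrapolated to a 60 dB decay (a T20-style estimate).
    """
    energy = np.cumsum(rir[::-1] ** 2)[::-1]  # energy remaining after each sample
    edc_db = 10.0 * np.log10(energy / energy[0] + 1e-300)
    upper, lower = fit_db
    idx = np.flatnonzero((edc_db <= upper) & (edc_db >= lower))
    slope, _ = np.polyfit(idx / fs, edc_db[idx], 1)  # dB per second, negative
    return -60.0 / slope


def sabine_absorption(t60, volume, surface):
    """Invert Sabine's formula T60 = 0.161 V / (S * alpha) for mean alpha."""
    return 0.161 * volume / (surface * t60)


# Synthetic RIR: noise whose envelope decays by 60 dB (in energy) over true_t60
fs, true_t60 = 250_000, 0.05
t = np.arange(int(0.2 * fs)) / fs
rng = np.random.default_rng(0)
rir = rng.standard_normal(t.size) * 10.0 ** (-3.0 * t / true_t60)

lx, ly, lz = 0.615, 0.615, 0.425  # E3 dimensions (m) from Table 2
volume = lx * ly * lz
surface = 2.0 * (lx * ly + lx * lz + ly * lz)
t60 = schroeder_t60(rir, fs)
alpha = sabine_absorption(t60, volume, surface)
print(f"T60 = {t60 * 1000:.1f} ms, mean alpha = {alpha:.3f}")
```

Sabine's formula is most accurate for fairly reverberant rooms with diffuse sound fields, so in small or highly absorbent enclosures the resulting coefficient is best treated as a starting point.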

Figure 2:

(A) Visualization of the virtual room used for synthetic RIR generation via ISM. (B) Sample of a room configuration YAML file used to specify room geometry for simulations. (C) Spectrograms comparing vocalizations convolved with recorded RIRs and with simulated RIRs. (D) Localization error as a function of the amount of simulated data added to the training corpus.

4. Benchmarks on VCL

We established a benchmark on the VCL Dataset using two distinct tasks.

Task 1 - Sound Source Localization: Compare the performance of classical sound source localization algorithms with deep neural networks.

Task 2 - Vocalization Attribution: Assign vocalizations to individuals in a dyad.

We evaluated performance on Task 1 using datasets with a single sound source (marked in blue in Table 1). We calculated the error, in centimeters, between ground-truth and predicted positions. Our aim is to achieve errors less than or equal to ~1 cm, as this is the approximate resolution required to attribute sound events to individual animals. We also sought to benchmark the accuracy of model-derived confidence intervals. That is, for each prediction the model should produce a 2D set that contains the sound source with a specified confidence (e.g. a 95% confidence set should fail to contain the true sound source on only 5% of test-set examples). Following procedures from Guo et al. [24], we plot reliability diagrams and report the expected calibration error (ECE) and maximum calibration error (MCE).
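For Gaussian predictions, coverage can be checked in closed form. The smallest confidence level whose ellipse (upper level set) contains the true source is p = 1 − exp(−d²/2), where d² is the squared Mahalanobis distance, because d² follows a chi-squared distribution with two degrees of freedom. Comparing nominal levels with the observed fraction of test examples covered gives a reliability curve; averaging and maximizing the absolute gaps gives ECE- and MCE-style summaries. This is a sketch in the spirit of Guo et al. [24]; the grid of nominal levels and any bin weighting behind Table 4 are assumptions here.

```python
import numpy as np


def coverage_levels(mu, cov, target):
    """Smallest Gaussian confidence level whose ellipse contains each target.

    mu, target: (N, 2) positions; cov: (N, 2, 2) predicted covariances.
    For a 2D Gaussian the squared Mahalanobis distance is chi-squared with
    2 degrees of freedom, whose CDF is 1 - exp(-d2 / 2).
    """
    diff = target - mu
    d2 = np.einsum("ni,nij,nj->n", diff, np.linalg.inv(cov), diff)
    return 1.0 - np.exp(-0.5 * d2)


def calibration_errors(levels, n_levels=9):
    """ECE (mean gap) and MCE (max gap) between nominal and observed coverage."""
    nominal = np.arange(1, n_levels + 1) / (n_levels + 1)  # 0.1, 0.2, ..., 0.9
    observed = (levels[None, :] <= nominal[:, None]).mean(axis=1)
    gaps = np.abs(observed - nominal)
    return float(gaps.mean()), float(gaps.max())


rng = np.random.default_rng(0)
n = 20_000
mu = np.zeros((n, 2))
target = rng.standard_normal((n, 2))  # true positions scatter with unit variance
calibrated = np.tile(np.eye(2), (n, 1, 1))
overconfident = 0.25 * calibrated  # covariance too small by a factor of 4
print(calibration_errors(coverage_levels(mu, calibrated, target)))
print(calibration_errors(coverage_levels(mu, overconfident, target)))
```

An overconfident model covers far fewer targets than its nominal level promises, which shows up as a reliability curve below the diagonal and a large ECE.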

We evaluated performance on Task 2 using datasets with two potential sound sources (marked in red in Table 1). For Task 2, we report the number of animals inside the 95% confidence set of model predictions. For each sound event, the model can predict zero, one, or two animals within its confidence set. We report the frequency of each of these outcomes and interpret them as follows. First, if only one animal is within the confidence set, the model attributes the vocalization to that animal. We cannot verify whether this attribution is correct because (unlike the datasets used in Task 1) we do not have ground-truth measurements of the sound source. Second, if both animals are within the confidence set, the model is unable to reliably attribute the sound to an individual. This outcome is neither correct nor incorrect. Finally, if zero animals are within the confidence set, the model has attributed the sound to a region containing neither animal. This outcome is clearly incorrect and should ideally occur less than 5% of the time when using a 95% confidence set.
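Because the 95% upper level set of a 2D Gaussian is the ellipse where the squared Mahalanobis distance is at most −2 ln(1 − 0.95) ≈ 5.99, the Task 2 outcome for each vocalization reduces to counting how many tracked animals fall inside that ellipse. A minimal sketch; the function names, array shapes, and toy numbers are hypothetical.

```python
import numpy as np


def animals_in_confidence_set(mu, cov, animal_xy, level=0.95):
    """Count animals inside the `level` confidence ellipse of a 2D Gaussian.

    mu: (2,) predicted mean; cov: (2, 2) covariance; animal_xy: (K, 2) positions.
    """
    threshold = -2.0 * np.log(1.0 - level)  # chi-squared(2) quantile, ~5.99 at 95%
    diff = np.asarray(animal_xy, dtype=float) - mu
    d2 = np.einsum("ki,ij,kj->k", diff, np.linalg.inv(cov), diff)
    return int(np.sum(d2 <= threshold))


def outcome_frequencies(counts, k=2):
    """Fraction of sound events with 0, 1, ..., k animals in the confidence set."""
    counts = np.asarray(counts)
    return {c: float(np.mean(counts == c)) for c in range(k + 1)}


mu, cov = np.array([10.0, 20.0]), np.diag([4.0, 1.0])  # toy prediction (cm, cm^2)
print(animals_in_confidence_set(mu, cov, [[11.0, 20.0], [30.0, 5.0]]))  # one animal inside
```

Aggregating `outcome_frequencies` over all vocalizations in a dyad recording yields percentages of the kind reported in Table 5.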

4.1. Convolutional Deep Neural Network

The network consists of 1D convolutional blocks connected in series. It takes raw multi-channel audio waveforms as input and outputs the mean and covariance of a 2D Gaussian distribution over the environment. Intuitively, the mean represents the network’s best point estimate of the sound source, and the scale and shape of the covariance matrix correspond to an estimate of uncertainty. The network is trained on labeled 2D sound source positions to minimize a negative log likelihood criterion. This is a proper scoring rule [17], which encourages the model to accurately portray its confidence in the predicted covariance. That is, the 95% upper level set of the Gaussian density should ideally act as a 95% confidence set. However, in line with previous reports, we sometimes observe that DNN confidence intervals are overconfident. In these cases, we use a temperature scaling procedure to calibrate the confidence intervals [24]. Further details on data preprocessing, model architecture, and training procedure are provided in the Supplement.
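The training criterion and the calibration step can be written compactly. For a 2D Gaussian with mean μ and covariance Σ, the per-example negative log likelihood is ½(y − μ)ᵀΣ⁻¹(y − μ) + ½ log det Σ + log 2π. If temperature scaling is implemented as multiplying every predicted covariance by one scalar T fit on held-out data (an assumption about the parameterization; the authors' procedure is described in the Supplement), then, because det(TΣ) = T² det Σ in 2D, the mean NLL is ½·mean(d²)/T + log T + const, which is minimized at T = mean(d²)/2, where d² is the squared Mahalanobis distance under the unscaled covariance.

```python
import numpy as np


def mahalanobis_sq(mu, cov, y):
    """Squared Mahalanobis distance for batches: mu, y (N, 2); cov (N, 2, 2)."""
    diff = y - mu
    return np.einsum("ni,nij,nj->n", diff, np.linalg.inv(cov), diff)


def gaussian_nll(mu, cov, y):
    """Per-example negative log likelihood of y under N(mu, cov) in 2D."""
    _, logdet = np.linalg.slogdet(cov)
    return 0.5 * mahalanobis_sq(mu, cov, y) + 0.5 * logdet + np.log(2.0 * np.pi)


def fit_temperature(mu, cov, y):
    """Scalar T minimizing the mean NLL of N(mu, T * cov); closed form in 2D."""
    return float(np.mean(mahalanobis_sq(mu, cov, y)) / 2.0)


rng = np.random.default_rng(0)
n = 50_000
mu = np.zeros((n, 2))
y = 2.0 * rng.standard_normal((n, 2))  # true errors have variance 4 per axis
cov = np.tile(np.eye(2), (n, 1, 1))  # overconfident: predicted variance 1
T = fit_temperature(mu, cov, y)
print(f"T = {T:.2f}")
print(gaussian_nll(mu, cov, y).mean(), gaussian_nll(mu, T * cov, y).mean())
```

In practice T could also be found by a one-dimensional numerical search on a validation split, which extends to parameterizations without a closed form.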

4.2. MUSE Baseline Model

We compare the DNNs to a delay-and-sum beamforming approach used by neuroscientists called MUSE [40, 62]. MUSE computes the cross-correlation between all pairs of microphone signals across hypothesized sound source locations, using the distance from each hypothesized location to each microphone and the speed of sound to compute arrival time delays. The location that maximizes the summed response power over all microphones is then selected as a point estimate. We generate 95% confidence sets using a jackknife resampling technique proposed by Warren, Sangiamo, and Neunuebel [62].
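A simplified steered-response-power (SRP) grid search conveys the delay-and-sum idea: for every candidate floor location, predict each microphone pair's arrival-time difference from the candidate-to-microphone distances and the speed of sound, read each pair's cross-correlation at that lag, and sum. This sketch assumes integer-sample lags, unweighted cross-correlation, a planar candidate grid, and a hypothetical four-microphone layout with a low-pass noise source; it is not the MUSE implementation and omits MUSE's jackknife confidence sets [62].

```python
import itertools

import numpy as np


def srp_localize(signals, mic_xyz, grid_xy, fs, source_z=0.0, c=343.0):
    """Grid point that maximizes the summed pairwise cross-correlation.

    signals: (M, T) multi-channel audio; mic_xyz: (M, 3) microphone positions (m);
    grid_xy: (G, 2) candidate source positions on a plane at height source_z.
    """
    n_mics, n_samples = signals.shape
    cand = np.column_stack([grid_xy, np.full(len(grid_xy), source_z)])
    # Arrival time, in samples, from every candidate location to every microphone
    arrival = np.linalg.norm(cand[:, None, :] - mic_xyz[None, :, :], axis=2) / c * fs
    power = np.zeros(len(grid_xy))
    for i, j in itertools.combinations(range(n_mics), 2):
        # xc[k + n_samples - 1] = sum_n signals[j][n + k] * signals[i][n]
        xc = np.correlate(signals[j], signals[i], mode="full")
        lag = np.round(arrival[:, j] - arrival[:, i]).astype(int)
        power += xc[lag + n_samples - 1]
    return grid_xy[np.argmax(power)]


# Toy simulation: hypothetical mics at the corners of a 0.6 x 0.4 m box, 0.37 m up
fs = 125_000.0
mics = np.array([[0.0, 0.0, 0.37], [0.6, 0.0, 0.37], [0.0, 0.4, 0.37], [0.6, 0.4, 0.37]])
true_xy = np.array([0.20, 0.25])
rng = np.random.default_rng(0)
n_samples, pad = 4096, 400
source = np.convolve(rng.standard_normal(n_samples + 2 * pad), np.ones(16) / 16, mode="same")
dist = np.linalg.norm(mics - np.append(true_xy, 0.0), axis=1)
delays = np.round(dist / 343.0 * fs).astype(int)
signals = np.stack([source[pad - d: pad - d + n_samples] for d in delays])
gx, gy = np.meshgrid(np.arange(61) / 100, np.arange(41) / 100)  # 1 cm grid
grid = np.column_stack([gx.ravel(), gy.ravel()])
print(srp_localize(signals, mics, grid, fs))
```

In reverberant arenas, reflections add spurious correlation peaks, which is one intuition for why this family of methods degrades in the environments described above.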

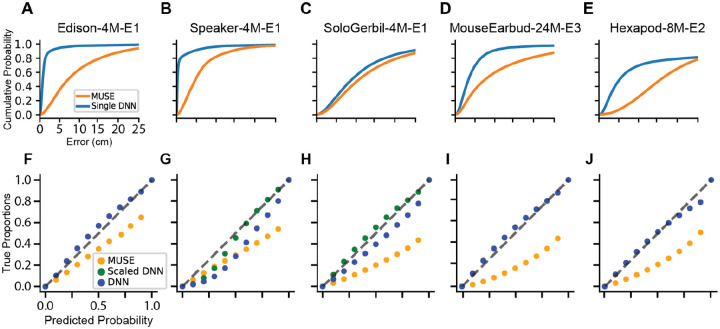

4.3. Task 1 Results

Deep neural networks consistently produced estimates closer to the ground-truth source than MUSE (Figure 3A–E, Table 3). DNN performance was particularly strong on the Speaker-4M-E1 and Edison-4M-E1 datasets, achieving <1 cm error on 80.6% and 66.0% of the respective test sets. As mentioned above, this level of resolution should enable attribution of most vocalizations in realistic social encounters in rodents [54]. DNNs also outperformed MUSE in mean and median error on the remaining three datasets; however, they achieved sub-centimeter errors on less than 10% of the test set in all three cases.
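The Table 3 summaries (and the cumulative error curves in Figure 3A–E) can be computed from paired predictions and ground-truth positions as below. A minimal sketch, assuming positions in centimetres and error measured as 2D Euclidean distance on the arena floor; the function name is hypothetical.

```python
import numpy as np


def error_summary(pred_cm, true_cm, threshold_cm=1.0):
    """Mean, median, and percentage below threshold of 2D Euclidean errors (cm)."""
    err = np.linalg.norm(np.asarray(pred_cm, float) - np.asarray(true_cm, float), axis=1)
    return {
        "mean": float(np.mean(err)),
        "median": float(np.median(err)),
        "pct_below": 100.0 * float(np.mean(err < threshold_cm)),
        # Plotting these against (rank / N) gives a cumulative error curve
        "sorted_errors": np.sort(err),
    }


summary = error_summary([[0.0, 0.0], [3.0, 4.0], [0.5, 0.0]], [[0.0, 0.0]] * 3)
print(summary["mean"], summary["median"], summary["pct_below"])
```

Reporting the median alongside the mean matters here: a few large errors can inflate the mean well above the typical error, as in the DNN results for Hexapod-8M-E2.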

Figure 3:

Benchmark performance. (A–E) Cumulative error distributions for MUSE and neural networks. (F–J) Reliability diagrams for MUSE (orange) and neural networks with (green) and without (blue) temperature scaling on held-out data from each dataset.

Table 3:

Summary of sound source localization errors for Task 1.

| Dataset | DNN mean (cm) | DNN median (cm) | DNN % <1 cm | MUSE mean (cm) | MUSE median (cm) | MUSE % <1 cm |
|---|---|---|---|---|---|---|
| Speaker-4M-E1 | 1.4 | 0.2 | 80.6% | 6.4 | 4.8 | 5.9% |
| Edison-4M-E1 | 1.4 | 0.7 | 66.0% | 9.5 | 7.1 | 3.1% |
| SoloGerbil-4M-E1 | 12.0 | 10.0 | 1.0% | 13.2 | 10.8 | 1.0% |
| Hexapod-8M-E2 | 12.9 | 5.2 | 4.8% | 18.1 | 15.6 | 0.3% |
| MouseEarbud-24M-E3 | 4.1 | 2.6 | 8.7% | 11.3 | 7.6 | 3.3% |

Moreover, we found that DNNs provide more accurate estimates of uncertainty relative to MUSE, as calculated by ECE and MCE (Table 4). This performance difference is visible in reliability diagrams, which show that MUSE predictions are over-confident (Figure 3F–J).

Table 4:

Expected Calibration Error (ECE) and Maximum Calibration Error (MCE) for Task 1.

| Dataset | DNN ECE | DNN MCE | Scaled DNN ECE | Scaled DNN MCE | MUSE ECE | MUSE MCE |
|---|---|---|---|---|---|---|
| Speaker-4M-E1 | 0.13 | 0.23 | 0.05 | 0.13 | 0.17 | 0.36 |
| Edison-4M-E1 | 0.03 | 0.07 | - | - | 0.12 | 0.25 |
| SoloGerbil-4M-E1 | 0.08 | 0.13 | 0.03 | 0.06 | 0.24 | 0.47 |
| Hexapod-8M-E2 | 0.03 | 0.11 | - | - | 0.22 | 0.40 |
| MouseEarbud-24M-E3 | 0.03 | 0.06 | - | - | 0.25 | 0.45 |

4.4. Task 2 Results

To test the ability of our DNNs to assign vocalizations to individuals in dyadic interactions, we used DNNs trained on the single-agent datasets MouseEarbud-24M-E3 and SoloGerbil-4M-E1 to compute confidence sets for vocalizations from the dyadic datasets DyadMouse-24M-E3 and DyadGerbil-4M-E1, respectively. As described above, we used temperature scaling to ensure that DNN confidence sets were well calibrated. Although we could assign 19–29% of these calls to a single animal, over half of the vocalizations in each interaction yielded a confidence set containing both animals (Table 5). Methods to resolve these shortcomings remain a focus of future work.

Table 5:

Vocalization attribution results. Number of animals captured within the 95% confidence set.

| # Animals captured | Gerbil dyad | Mouse dyad |
|---|---|---|
| 0 | 6.1% | 8.9% |
| 1 | 28.6% | 19.4% |
| 2 | 65.2% | 71.7% |

5. Limitations

Neuroscientists are interested in localizing sounds across a broad range of settings. We aimed to cover multiple rodent species (gerbils and mice), environment sizes, and microphone array geometries in this initial release. We also leveraged robots and head-mounted earbud speakers to collect sounds with known ground truth. However, this benchmark does not yet cover all use cases in neuroscience. Other commonly used model species (e.g. marmosets [13], bats [58], and various bird species [6]) are of great interest but are not covered by the current benchmark. Our experiments show that deep neural networks trained to localize sounds can fail to generalize across vocal call types (see Supplement). It would therefore be valuable to expand this benchmark to include a wider variety of animal species and call types, and to increase the number of training samples. To this end, we include additional datasets that were not used in Task 1 due to their relatively small size (GerbilEarbud-4M-E1, SoloMouse-24M-E3), which will aid future experiments assessing generalization performance across datasets (e.g. training on Speaker-4M-E1 and predicting on GerbilEarbud-4M-E1).

Our current benchmark only provides images from a single camera view, which can be used to localize sounds in 2D. While this agrees with current practices within the field [40, 53, 37] and is in line with the equipment readily available to most labs, it is insufficient to infer 3D body pose. One could imagine that knowing the 3D position and 3D heading direction of a vocalizing rodent would provide a richer and more effective supervision signal to train a deep network. A number of 3D pose tracking tools for animal models have been developed in recent years [64, 39, 28, 35, 12]. These tools could be leveraged if future benchmarks collect multiple camera views. Ultimately, it would be useful to compare performance across 3D and 2D benchmarks to ascertain whether the sound source localization problem is indeed easier in one setting or the other.

6. Discussion

SSL is a well-known and challenging problem. We collected a variety of datasets and developed benchmarks to assess these challenges in the context of neuroethological experiments in vocalizing rodents. This involves localizing sounds in reverberant environments across a very broad frequency range (including ultrasonic events), distinguishing our work from more standard SSL benchmarks and algorithms. Our experiments reveal that DNNs are a promising approach. In controlled settings (Speaker-4M-E1 and Edison-4M-E1 datasets), DNNs achieved sub-centimeter median error. In larger environments (Hexapod-8M-E2) and in datasets with uncontrolled 3D variation in sound emissions (SoloGerbil-4M-E1 and MouseEarbud-24M-E3), DNN performance was less impressive but still exceeded that of MUSE, a well-established algorithm in current use.

In addition to continuing to experiment with advances in machine vision/audio, we are also interested in exploring performance improvements due to hardware optimization. Parameters such as number of microphones, their positions/directivity, and environment reverberance can all affect SSL performance. Future experiments will leverage acoustic simulations to explore this parameter space. Initial results suggest that varying the amount of reverberation in an environment drastically affects SSL performance and that this effect is more pronounced in MUSE than DNNs (see Supplement).

The ultimate goal of most neuroscientists is to attribute vocal calls to individuals amongst an interacting social group. Accurate SSL would enable this, but it is also possible to reframe this problem as a direct prediction task. Specifically, given a video and audio recording of K interacting animals with ground truth labels for the source of each sound event, DNNs could be trained to perform K-way classification to identify the source. Future work should investigate this promising alternative approach, as it would enable DNNs to jointly leverage information from audio and video data as network inputs. On the other hand, we note several challenges that must be overcome. First, establishing ground truth in multi-animal recordings is non-trivial, though feasible in certain experiments [16, 47, 60]. Second, DNNs trained to process raw video can have trouble generalizing across recording sessions due to subtle changes in lighting or animal appearance [63, 50]. Finally, we note that at least K=2 animals are required to make the problem nontrivial (when K=1 the DNN could ignore the audio input to predict the source). It will be important to establish a flexible DNN architecture that can make accurate predictions even when the animal group size, K, is altered (see e.g. [66]). It is already possible to use the VCL datasets to explore these possibilities. For example, one could use audio and video data taken from the same or different sound events to train a DNN with a multimodal contrastive learning objective (see e.g. [56], for a related concept).

In summary, there are many promising, but under-investigated, machine learning methodologies for annotating vocal communication in rodents. The VCL benchmark is our attempt to spark a broader community effort to investigate the potential of these computational approaches. Indeed, collecting and curating these datasets is labor-intensive and in our case involved collaboration across multiple neuroscience labs. To our knowledge, very little (if any) comparable data containing raw audio and video from many thousands of rodent vocal calls currently exists in the public domain. Thus, we expect the VCL benchmark will enable new avenues of research within computational neuroscience.

Supplementary Material

Acknowledgements and Ethics Statement

We do not foresee any negative societal impacts arising from this work. We thank Megan Kirchgessner (NYU), Robert Froemke (NYU), and Marcelo Magnasco (Rockefeller) for discussions and suggestions regarding SSL applications in neuroscience. This work was supported by the National Institutes of Health R34-DA059513 (AHW, DHS, DMS), National Institutes of Health R01-DC020279 (DHS), National Institutes of Health 1R01-DC018802 (DMS, REP), National Institutes of Health Training Program in Computational Neuroscience T90DA059110 (REP), New York Stem Cell Foundation (DMS), CV Starr Fellowship (BM), EMBO Postdoctoral Fellowship (BM), National Science Foundation Award 1922658 (CI).

Footnotes

Submitted to the 38th Conference on Neural Information Processing Systems (NeurIPS 2024) Track on Datasets and Benchmarks. Do not distribute.

Data is available at: vclbenchmark.flatironinstitute.org

References

- [1] Adolphs Ralph. “Conceptual challenges and directions for social neuroscience”. In: Neuron 65.6 (2010), pp. 752–767.

- [2] Allen Jont B. and Berkley David A. “Image method for efficiently simulating small-room acoustics”. In: The Journal of the Acoustical Society of America 65.4 (Apr. 1979), pp. 943–950. ISSN: 0001-4966. DOI: 10.1121/1.382599.

- [3] Argentieri S., Danès P., and Souères P. “A survey on sound source localization in robotics: From binaural to array processing methods”. In: Computer Speech & Language 34.1 (2015), pp. 87–112. ISSN: 0885-2308. DOI: 10.1016/j.csl.2015.03.003. URL: https://www.sciencedirect.com/science/article/pii/S0885230815000236.

- [4] Ashida Go and Carr Catherine E. “Sound localization: Jeffress and beyond”. In: Current opinion in neurobiology 21.5 (2011), pp. 745–751.

- [5] Blauert Jens. Spatial hearing: the psychophysics of human sound localization. MIT Press, 1997.

- [6] Brainard Michael S and Doupe Allison J. “Translating birdsong: songbirds as a model for basic and applied medical research”. In: Annual review of neuroscience 36 (2013), pp. 489–517.

- [7] Carr Catherine E and Christensen-Dalsgaard Jakob. “Sound localization strategies in three predators”. In: Brain Behavior and Evolution 86.1 (2015), pp. 17–27.

- [8] Chakrabarty Soumitro and Habets Emanuel A. P. “Broadband DOA estimation using convolutional neural networks trained with noise signals”. In: 2017 IEEE Workshop on Applications of Signal Processing to Audio and Acoustics (WASPAA). IEEE, Oct. 2017. DOI: 10.1109/waspaa.2017.8170010.

- [9] Cohen Yale E and Knudsen Eric I. “Maps versus clusters: different representations of auditory space in the midbrain and forebrain”. In: Trends in neurosciences 22.3 (1999), pp. 128–135.

- [10] Datta Sandeep Robert et al. “Computational neuroethology: a call to action”. In: Neuron 104.1 (2019), pp. 11–24.

- [11] DiBiase Joseph H, Silverman Harvey F, and Brandstein Michael S. “Robust localization in reverberant rooms”. In: Microphone arrays: signal processing techniques and applications. Springer, 2001, pp. 157–180.

- [12] Dunn Timothy W et al. “Geometric deep learning enables 3D kinematic profiling across species and environments”. In: Nature methods 18.5 (2021), pp. 564–573.

- [13] Eliades Steven J and Miller Cory T. “Marmoset vocal communication: behavior and neurobiology”. In: Developmental neurobiology 77.3 (2017), pp. 286–299.

- [14] Evers Christine et al. “The LOCATA Challenge: Acoustic Source Localization and Tracking”. In: IEEE/ACM Transactions on Audio, Speech, and Language Processing 28 (2020), pp. 1620–1643. DOI: 10.1109/TASLP.2020.2990485.

- [15] Francl Andrew and McDermott Josh H. “Deep neural network models of sound localization reveal how perception is adapted to real-world environments”. In: Nature human behaviour 6.1 (2022), pp. 111–133.

- [16] Fukushima Makoto and Margoliash Daniel. “The effects of delayed auditory feedback revealed by bone conduction microphone in adult zebra finches”. In: Scientific Reports 5.1 (2015), p. 8800.

- [17] Gneiting Tilmann and Raftery Adrian E. “Strictly proper scoring rules, prediction, and estimation”. In: Journal of the American statistical Association 102.477 (2007), pp. 359–378.

- [18] Goetze Stefan et al. “Acoustic monitoring and localization for social care”. In: Journal of Computing Science and Engineering 6.1 (2012), pp. 40–50.

- [19] Goffinet Jack et al. “Low-dimensional learned feature spaces quantify individual and group differences in vocal repertoires”. In: Elife 10 (2021), e67855.

- [20] Gramaccioni Riccardo F et al. “L3DAS23: Learning 3D Audio Sources for Audio-Visual Extended Reality”. In: IEEE Open Journal of Signal Processing (2024).

- [21] Grumiaux Pierre-Amaury et al. “A survey of sound source localization with deep learning methods”. In: The Journal of the Acoustical Society of America 152.1 (2022), pp. 107–151.

- [22] Guizzo Eric et al. “L3DAS21 challenge: Machine learning for 3D audio signal processing”. In: 2021 IEEE 31st International Workshop on Machine Learning for Signal Processing (MLSP). IEEE. 2021, pp. 1–6.

- [23] Guizzo Eric et al. “L3DAS22 Challenge: Learning 3D Audio Sources in a Real Office Environment”. In: ICASSP 2022 – 2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). 2022, pp. 9186–9190. DOI: 10.1109/ICASSP43922.2022.9746872.

- [24] Guo Chuan et al. “On calibration of modern neural networks”. In: International conference on machine learning. PMLR. 2017, pp. 1321–1330.

- [25] Hestness Joel et al. “Deep learning scaling is predictable, empirically”. In: arXiv preprint arXiv:1712.00409 (2017).

- [26] Ick Christopher and McFee Brian. Leveraging Geometrical Acoustic Simulations of Spatial Room Impulse Responses for Improved Sound Event Detection and Localization. 2023. arXiv: 2309.03337 [eess.AS].

- [27] Ihlefeld Antje and Shinn-Cunningham Barbara G. “Effect of source spectrum on sound localization in an everyday reverberant room”. In: The Journal of the Acoustical Society of America 130.1 (2011), pp. 324–333.

- [28] Karashchuk Pierre et al. “Anipose: A toolkit for robust markerless 3D pose estimation”. In: Cell reports 36.13 (2021).

- [29] Knudsen Eric I. “Instructed learning in the auditory localization pathway of the barn owl”. In: Nature 417.6886 (2002), pp. 322–328. ISSN: 1476-4687. DOI: 10.1038/417322a.

- [30] Krause Daniel, Politis Archontis, and Kowalczyk Konrad. “Data Diversity for Improving DNN-based Localization of Concurrent Sound Events”. In: 2021 29th European Signal Processing Conference (EUSIPCO). 2021, pp. 236–240. DOI: 10.23919/EUSIPCO54536.2021.9616284.

- [31] Lauer Jessy et al. “Multi-animal pose estimation, identification and tracking with DeepLabCut”. In: Nature Methods 19.4 (2022), pp. 496–504.

- [32] Li Xiaofei et al. “Reverberant sound localization with a robot head based on direct-path relative transfer function”. In: 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE. 2016, pp. 2819–2826.

- [33] Macpherson Ewan A and Middlebrooks John C. “Listener weighting of cues for lateral angle: The duplex theory of sound localization revisited”. In: The Journal of the Acoustical Society of America 111.5 (2002), pp. 2219–2236.

- [34] Makino Shoji. Audio source separation. Vol. 433. Springer, 2018.

- [35] Marshall Jesse D et al. “Continuous whole-body 3D kinematic recordings across the rodent behavioral repertoire”. In: Neuron 109.3 (2021), pp. 420–437.

- [36] Marshall Jesse D et al. “The PAIR-R24M Dataset for Multi-animal 3D Pose Estimation”. In: Thirty-fifth Conference on Neural Information Processing Systems Datasets and Benchmarks Track (Round 1). 2021. URL: https://openreview.net/forum?id=-wVVl_UPr8.

- [37] Matsumoto Jumpei et al. “Acoustic camera system for measuring ultrasound communication in mice”. In: Iscience 25.8 (2022).

- [38] Miller Cory T et al. “Natural behavior is the language of the brain”. In: Current Biology 32.10 (2022), R482–R493.

- [39] Nath Tanmay et al. “Using DeepLabCut for 3D markerless pose estimation across species and behaviors”. In: Nature protocols 14.7 (2019), pp. 2152–2176.

- [40] Neunuebel Joshua P et al. “Female mice ultrasonically interact with males during courtship displays”. In: eLife 4 (2015). Ed. by Mason Peggy, e06203. ISSN: 2050-084X. DOI: 10.7554/eLife.06203.

- [41] Oliveira-Stahl Gabriel et al. “High-precision spatial analysis of mouse courtship vocalization behavior reveals sex and strain differences”. In: Scientific Reports 13.1 (2023), p. 5219.

- [42] Gleich Otto and Strutz Jürgen. “The Mongolian gerbil as a model for the analysis of peripheral and central age-dependent hearing loss”. In: Hearing Loss (2012).

- [43] Pereira Talmo D, Shaevitz Joshua W, and Murthy Mala. “Quantifying behavior to understand the brain”. In: Nature neuroscience 23.12 (2020), pp. 1537–1549.

- [44] Pereira Talmo D et al. “SLEAP: A deep learning system for multi-animal pose tracking”. In: Nature methods 19.4 (2022), pp. 486–495.

- [45] Peterson Ralph E et al. “Unsupervised discovery of family specific vocal usage in the Mongolian gerbil”. In: eLife (2023), e89892.1. DOI: 10.7554/eLife.89892.1.

- [46] Roman Iran R. et al. Spatial Scaper: A Library to Simulate and Augment Soundscapes for Sound Event Localization and Detection in Realistic Rooms. 2024. arXiv: 2401.12238 [eess.AS].

- [47] Rose Maimon C. et al. “Cortical representation of group social communication in bats”. In: Science 374.6566 (2021), eaba9584. DOI: 10.1126/science.aba9584. URL: https://www.science.org/doi/abs/10.1126/science.aba9584.

- [48] Sainburg Tim, Thielk Marvin, and Gentner Timothy Q. “Finding, visualizing, and quantifying latent structure across diverse animal vocal repertoires”. In: PLoS computational biology 16.10 (2020), e1008228.

- [49] Shemesh Yair and Chen Alon. “A paradigm shift in translational psychiatry through rodent neuroethology”. In: Molecular psychiatry 28.3 (2023), pp. 993–1003.

- [50] Shi Changhao et al. “Learning Disentangled Behavior Embeddings”. In: Advances in Neural Information Processing Systems. Ed. by Ranzato M. et al. Vol. 34. Curran Associates, Inc., 2021, pp. 22562–22573. URL: https://proceedings.neurips.cc/paper_files/paper/2021/file/be37ff14df68192d976f6ce76c6cbd15-Paper.pdf.

- [51] Shimada Kazuki et al. “STARSS23: An Audio-Visual Dataset of Spatial Recordings of Real Scenes with Spatiotemporal Annotations of Sound Events”. In: Advances in Neural Information Processing Systems. Ed. by Oh A. et al. Vol. 36. Curran Associates, Inc., 2023, pp. 72931–72957. URL: https://proceedings.neurips.cc/paper_files/paper/2023/file/e6c9671ed3b3106b71cafda3ba225c1a-Paper-Datasets_and_Benchmarks.pdf.

- [52] Steinfath Elsa et al. “Fast and accurate annotation of acoustic signals with deep neural networks”. In: Elife 10 (2021), e68837.

- [53] Sterling Max L, Teunisse Ruben, and Englitz Bernhard. “Rodent ultrasonic vocal interaction resolved with millimeter precision using hybrid beamforming”. In: eLife 12 (2023). Ed. by Bathellier Brice, e86126. ISSN: 2050-084X. DOI: 10.7554/eLife.86126.

- [54] Sterling Max L, Teunisse Ruben, and Englitz Bernhard. “Rodent ultrasonic vocal interaction resolved with millimeter precision using hybrid beamforming”. In: Elife 12 (2023), e86126.

- [55] Sun Jennifer J. et al. “The Multi-Agent Behavior Dataset: Mouse Dyadic Social Interactions”. In: Thirty-fifth Conference on Neural Information Processing Systems Datasets and Benchmarks Track (Round 1). 2021. URL: https://openreview.net/forum?id=NevK78-K4bZ.

- [56] Sun Weixuan et al. “Learning audio-visual source localization via false negative aware contrastive learning”. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2023, pp. 6420–6429.

- [57] Ter-Mikaelian Maria, Yapa Wipula B, and Rübsamen Rudolf. “Vocal behavior of the Mongolian gerbil in a seminatural enclosure”. In: Behaviour 149.5 (2012), pp. 461–492.

- [58] Ulanovsky Nachum and Moss Cynthia F. “What the bat’s voice tells the bat’s brain”. In: Proceedings of the National Academy of Sciences 105.25 (2008), pp. 8491–8498.

- [59] Vera-Diaz Juan Manuel, Pizarro Daniel, and Macias-Guarasa Javier. “Towards end-to-end acoustic localization using deep learning: From audio signals to source position coordinates”. In: Sensors 18.10 (2018), p. 3418.

- [60] Waidmann Elena N. et al. “Mountable miniature microphones to identify and assign mouse ultrasonic vocalizations”. In: bioRxiv (2024). DOI: 10.1101/2024.02.05.579003. URL: https://www.biorxiv.org/content/early/2024/02/06/2024.02.05.579003.

- [61] Wang Hong and Chu Peter. “Voice source localization for automatic camera pointing system in videoconferencing”. In: 1997 IEEE International Conference on Acoustics, Speech, and Signal Processing. Vol. 1. IEEE. 1997, pp. 187–190.

- [62] Warren Megan R, Sangiamo Daniel T, and Neunuebel Joshua P. “High channel count microphone array accurately and precisely localizes ultrasonic signals from freely-moving mice”. In: Journal of neuroscience methods 297 (2018), pp. 44–60.

- [63] Whiteway Matthew R. et al. “Partitioning variability in animal behavioral videos using semisupervised variational autoencoders”. In: PLOS Computational Biology 17.9 (Sept. 2021), pp. 1–50. DOI: 10.1371/journal.pcbi.1009439.

- [64] Wiltschko Alexander B et al. “Mapping sub-second structure in mouse behavior”. In: Neuron 88.6 (2015), pp. 1121–1135.

- [65] Woodward Sean F, Reiss Diana, and Magnasco Marcelo O. “Learning to localize sounds in a highly reverberant environment: Machine-learning tracking of dolphin whistle-like sounds in a pool”. In: PloS one 15.6 (2020), e0235155.

- [66] Zaheer Manzil et al. “Deep Sets”. In: Advances in Neural Information Processing Systems. Ed. by Guyon I. et al. Vol. 30. Curran Associates, Inc., 2017. URL: https://proceedings.neurips.cc/paper_files/paper/2017/file/f22e4747da1aa27e363d86d40ff442fe-Paper.pdf.
