Abstract

Surveys often estimate vaccination intentions using dichotomous ("Yes"/"No") or trichotomous ("Yes," "Unsure," "No") response options presented in different orders. Do survey results depend on these variations? This controlled experiment randomized participants to dichotomous or trichotomous measures of vaccine intentions (with “Yes” and “No” options presented in different orders). Intentions were measured separately for COVID-19, its booster, and influenza vaccines. Among a sample of U.S. adults (N = 4,764), estimates of vaccine intention varied as much as 37.5 ± 17.4 percentage points as a function of the dichotomous or trichotomous response set. Among participants who had not received the COVID-19 vaccine, the “Unsure” option was more likely to reduce the share of “No” (versus “Yes”) responses, whereas among participants who had received the COVID-19 vaccine, the “Unsure” option was more likely to reduce the share of “Yes” (versus “No”) responses. The “Unsure” category may increase doubt and decrease reliance on past vaccination behavior when forming intentions. The order of “Yes” and “No” responses had no significant effect. Future research is needed to further evaluate why the effects of including the “Unsure” option vary in direction and magnitude.

Subject terms: Immunology, Psychology, Diseases, Medical research

Introduction

The decision to delay or refuse inoculation is one of the most pressing global health threats1,2. Attempting to predict the proportion of a population that will receive a vaccine in a timely fashion, thousands of yearly surveys estimate intentions to immunize against COVID-19, influenza, or other infectious diseases. The survey questionnaires commonly measure vaccination intentions because behavioral intention is a strong (but rarely perfect) predictor of future behavior3,4. These survey results are of interest to public health leaders, elected officials, and others who seek to gauge the likely level of uptake for a vaccine5,6.

Although behavioral intention is known to be the most proximal psychological determinant of behavior, there is more than one commonly recommended approach to measuring intention and, currently, there is no consensus about the best approach7. When conducting survey research to predict vaccination uptake, some investigators measure intention to receive the vaccine of interest using a questionnaire item that asks respondents to choose between two answers: “Yes” or “No”8,9. Other investigators add a third option, often labelled “Unsure” or “Don’t Know,” to capture uncertainty10,11. Despite the common use of both dichotomous ("Yes," "No") and trichotomous ("Yes," "Unsure," "No") response options, we are not aware of empirical comparisons between these measurement approaches. However, it is important to know what bias these different instruments introduce.

Although not focused on vaccination, the literature on survey methods has shown that the number of response options can generate systematic differences in estimates, which may create challenges for interpreting and comparing survey results, or even bias these estimates12,13. The non-random response error caused by characteristics of the measurement approach is referred to as an instrumentation effect, which can explain up to 85% of variance in estimates14–16. Consequently, the measurement approach used in a survey can introduce inherent biases into estimates of vaccination intent.

In this study, we systematically test if and how survey responses differ when measuring vaccine intentions using either dichotomous (“Yes” and “No”) measures or trichotomous measures that include an additional “Unsure” option. These tests were conducted separately for the initial COVID-19 vaccine, COVID-19 booster vaccines, and the influenza vaccine. (Technically, a booster can be the same vaccine as the initial vaccine. However, initial and booster vaccinations are distinct behaviors and intentions are presumed to vary depending on the behavior of interest.) We hypothesized that the “Unsure” option could influence survey results, although the direction of the expected effect was unclear. Because people commonly use their past behavior to infer what they will do in the future17, their recent vaccination history may influence their vaccine intentions, but in one of two different ways. If the “Unsure” option primes respondents to feel less certain about their current intention, those who vaccinated in the recent past may use their past behavior as guidance and choose “Yes” more with the trichotomous (versus dichotomous) response set. Alternatively, if the “Unsure” option primes respondents to feel less certain about repeating their past behavior, the same vaccinated respondents may choose “No” more with the trichotomous (versus dichotomous) response set.

As a secondary objective, we varied the order of the response options, which could also affect reported intentions via a primacy effect18–20. The primacy effect has been observed in a variety of contexts, but we are not aware of testing relevant to vaccine surveys. For example, during elections, the order of candidates’ names on the ballot influences voting by disproportionately benefiting those listed first or early18,19. However, compared to instrumentation effects attributed to the number of response options, those attributed to the order of response options have been smaller14–16. In turn, we hypothesized that the order of response options would have a relatively minor instrumentation effect.

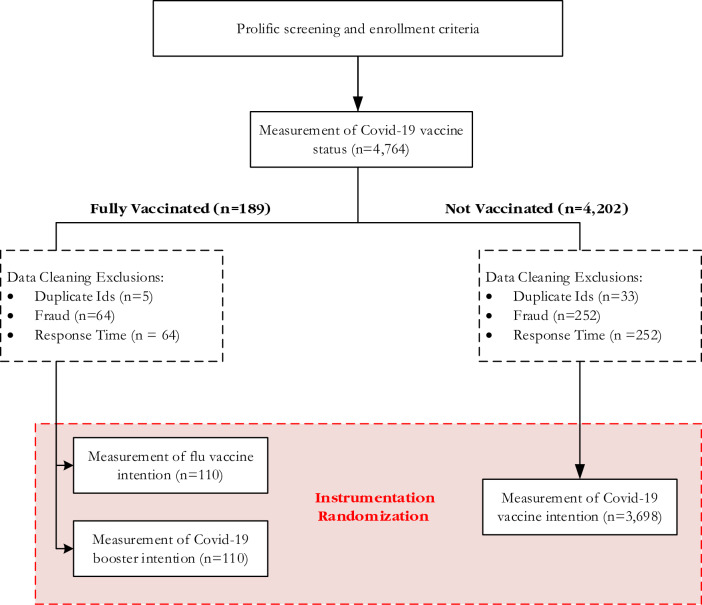

We pursued our two objectives by conducting a randomized, controlled experiment that was embedded within a survey. Among a national sample of adults, each participant received at least one item measuring intention to receive a specific vaccine: the COVID-19 vaccine, its booster, or the influenza vaccine. For the primary objective, each participant was randomized to response options that included only the dichotomous “Yes” and “No,” or options that included “Unsure.” For the secondary objective, the response order was randomized such that “Yes” appeared first for some respondents, while “No” appeared first for others. (In the three-choice option set, “Unsure” was never presented first because we are not aware of vaccine questionnaires that have offered this option first.) By varying the order and number of response options, we tested six response option sets: “Yes–No,” “No-Yes,” “Yes-Unsure-No,” “No-Unsure-Yes”, “Yes–No-Unsure,” and “No-Yes-Unsure.” Within Fig. 1, the box labeled “Instrumentation Randomization” refers to our manipulation of the order and number of response options that generated each of these six response option sets. The Methods section includes additional information.
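The instrumentation randomization described above can be illustrated with a minimal Python sketch. It is not the study's implementation (the experiment was hosted in Qualtrics); the equal allocation probability, the seed, and the names `assign_option_set`, `unsure`, and `no_first` are assumptions introduced only for illustration.

```python
import random

# The six response option sets named in the text.
OPTION_SETS = [
    ("Yes", "No"),
    ("No", "Yes"),
    ("Yes", "Unsure", "No"),
    ("No", "Unsure", "Yes"),
    ("Yes", "No", "Unsure"),
    ("No", "Yes", "Unsure"),
]

def assign_option_set(rng):
    """Draw one option set with equal probability (an assumption)."""
    options = rng.choice(OPTION_SETS)
    return {
        "options": options,
        "unsure": int("Unsure" in options),   # 1 if the set is trichotomous
        "no_first": int(options[0] == "No"),  # 1 if "No" is listed first
    }

rng = random.Random(2021)  # hypothetical seed, for reproducibility only
assignments = [assign_option_set(rng) for _ in range(6000)]
```

The two indicators derived here correspond to the manipulations of the number and the order of response options; "Unsure" never appears first in any of the six sets.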

Fig. 1.

Schematic representation of the survey experiment. The exclusion numbers reported are based on data cleaning for duplicate IDs, fraud, and response time, which are not mutually exclusive. Several respondents were excluded based on more than one of these exclusion criteria. The numbers reported in the figure correspond to the total number of responses that fail a given criterion.

Results

A survey company helped us identify a national sample of 4,764 adults who had reported no prior COVID-19 vaccination when pre-screened by the company (in mid-2021) several weeks prior to our study. The company routinely measures COVID-19 vaccination status and several other health variables for all individuals in their participant pool. In September 2021, our study questionnaire measured their COVID-19 vaccination status again, and after data cleaning, the analyses included 110 individuals who had received COVID-19 vaccination in the interim, plus 3698 individuals who still reported no prior COVID-19 vaccination.

The group that had not received COVID-19 vaccination was asked only to report their COVID-19 vaccine intentions. The group that had received COVID-19 vaccination was instead asked to report their intentions to receive the COVID-19 booster vaccine and the influenza vaccine. Therefore, with each study participant, we measured intentions to receive at least one vaccine, but the target vaccine(s) varied depending on whether an individual already had received COVID-19 vaccination.

The randomization to one of the six response option sets successfully balanced each measured socio-demographic variable with no statistically significant differences across conditions. (In turn, it is not necessary or appropriate for analyses to control demographics.) To illustrate the successful randomization, Table 1 reports average respondent age (and standard deviation) across each sample and treatment condition. In each case, the average age of participants was between 27.18 and 30.71 years. Similarly, there were no significant condition differences in gender, race, political affiliation, education levels, or financial circumstances.

Table 1.

Respondent demographic distributions across treatment conditions.

| Demographic variable | Estimation sample | “No” First = 0 [M (SD)] | “No” First = 1 [M (SD)] | Unsure = 0 [M (SD)] | Unsure = 1 [M (SD)] |
|---|---|---|---|---|---|
| Respondent age | COVID-19 vaccine | 28.42 (10.29) | 28.45 (10.16) | 28.77 (10.35) | 28.28 (10.16) |
| Respondent age | COVID-19 booster | 30.69 (10.58) | 27.88 (9.56) | 28.89 (9.81) | 29.56 (10.48) |
| Respondent age | Influenza vaccine | 30.71 (10.55) | 27.88 (9.38) | 27.18 (8.59) | 30.29 (10.58) |
| Female (%) | COVID-19 vaccine | 77.77 (41.59) | 79.33 (40.50) | 79.25 (40.57) | 78.23 (41.27) |
| Female (%) | COVID-19 booster | 69.23 (46.60) | 70.69 (45.91) | 71.05 (45.96) | 69.44 (46.39) |
| Female (%) | Influenza vaccine | 69.23 (46.60) | 70.69 (45.91) | 71.05 (45.96) | 69.44 (46.39) |
| White (%) | COVID-19 vaccine | 83.68 (36.96) | 84.78 (35.93) | 86.25 (34.45) | 83.29 (37.31) |
| White (%) | COVID-19 booster | 80.77 (39.80) | 82.76 (38.10) | 81.58 (39.29) | 81.94 (38.73) |
| White (%) | Influenza vaccine | 80.76 (39.79) | 82.76 (38.10) | 81.58 (39.29) | 81.94 (38.73) |

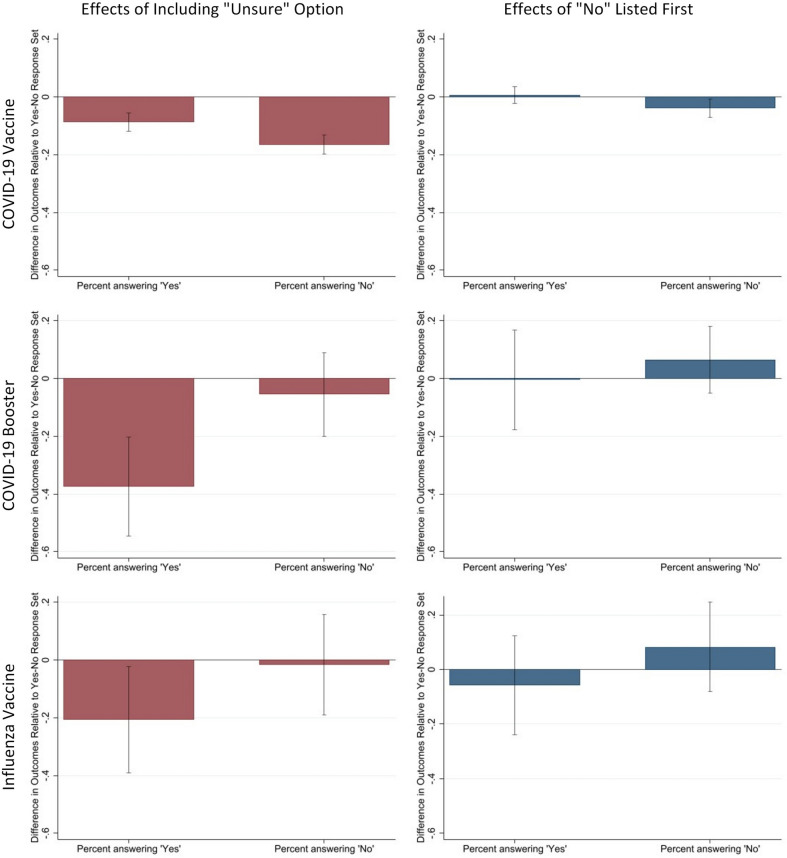

We present the effects separately for COVID-19 vaccination, COVID-19 boosters, and influenza vaccination. Table 2 focuses on the primary aim by reporting the share of respondents who select “Yes” or “No” when assigned the dichotomous response option or the trichotomous response option. The statistical comparisons across the conditions in Table 2 appear in Table 3 and the left column of Fig. 2. For the secondary aim, the right column of Fig. 2 displays response order effects.

Table 2.

Proportion of participants endorsing “Yes” and “No” for Dichotomous and Trichotomous Option Sets.

| Vaccine of interest | Dichotomous option set: Yes | Dichotomous option set: No | Trichotomous option set with “Unsure”: Yes | Trichotomous option set with “Unsure”: No |
|---|---|---|---|---|
| COVID-19 vaccine | 0.37 | 0.63 | 0.28 | 0.47 |
| COVID-19 booster | 0.84 | 0.16 | 0.51 | 0.08 |
| Influenza vaccine | 0.74 | 0.26 | 0.53 | 0.25 |

Table 3.

The total net change based on the percentage change in “Yes” and “No” responses when “Unsure” is a response option for a measure of vaccination intention.

| Vaccine | Change in responding “Yes” | Change in responding “No” | Total net change |
|---|---|---|---|
| COVID-19 initial vaccine | −8.7 ± 3.2 | −16.5 ± 3.3 | −12.6 ± 2.4% |
| COVID-19 booster vaccine | −37.5 ± 17.4 | −11.9 ± 12.9 | −20.3 ± 13.7% |
| Influenza vaccine | −20.7 ± 18.6 | −3.1 ± 15.9 | −11.2 ± 0.84% |

The numbers behind the “±” sign indicate the 95% confidence interval for the estimated effect. Coefficients for these contrasts appear in Fig. 2.
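As a rough arithmetic check, the raw differences implied by the proportions in Table 2 can be computed directly. The sketch below is illustrative only: the function name `raw_changes` is hypothetical, and the "implied Unsure" share assumes that the three trichotomous responses sum to one. Because the values in Table 3 are regression estimates, they need not equal these raw differences exactly.

```python
# Proportions copied from Table 2: (yes, no) under each option set.
TABLE_2 = {
    "COVID-19 vaccine": {"dichotomous": (0.37, 0.63), "trichotomous": (0.28, 0.47)},
    "COVID-19 booster": {"dichotomous": (0.84, 0.16), "trichotomous": (0.51, 0.08)},
    "Influenza vaccine": {"dichotomous": (0.74, 0.26), "trichotomous": (0.53, 0.25)},
}

def raw_changes(row):
    """Raw percentage-point changes when "Unsure" is offered."""
    yes_d, no_d = row["dichotomous"]
    yes_t, no_t = row["trichotomous"]
    return {
        "yes_change_pp": round(100 * (yes_t - yes_d), 1),
        "no_change_pp": round(100 * (no_t - no_d), 1),
        # Assumes Yes + No + Unsure = 1 in the trichotomous condition.
        "implied_unsure_pct": round(100 * (1 - yes_t - no_t), 1),
    }

summary = {vaccine: raw_changes(row) for vaccine, row in TABLE_2.items()}
for vaccine, values in summary.items():
    print(vaccine, values)
```

For the COVID-19 booster, for example, the raw change in “Yes” responses is −33 percentage points, with an implied “Unsure” share of 41%.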

Fig. 2.

Estimated instrumentation effects of “Yes” and “No” outcomes. For each of three different vaccines, Fig. 2 reports the estimated effects when varying the number of response options (left column) and the response order (right column). These estimates correspond to coefficients β̂Unsure and β̂NoFirst from Eq. (1), respectively. Initial COVID-19 vaccine intentions were reported by those without COVID-19 vaccination (n = 3,698). Those who were vaccinated against COVID-19 (n = 110) were asked to report their intentions to receive the COVID-19 booster and influenza vaccine. Note that the error bars represent the 95% confidence interval for the corresponding estimate.

Effects of the “Unsure” option when measuring COVID-19 vaccination intention among individuals who have not yet received this vaccine

When participants who reported not receiving the COVID-19 vaccine were asked about their intention to receive this vaccine, the response option set with “Unsure” had a large, significant effect on the share of “No” responses, reducing it from 63 to 47% (a difference of 16.5 ± 3.3 percentage points) (see Tables 2 and 3 and Fig. 2). In comparison, the “Unsure” option set had a significantly smaller effect on the share of “Yes” responses (reducing the share from 37 to 28%, a difference of 8.7 ± 3.2 percentage points), although the reduction in responses was significant as well (see Tables 2 and 3 and Fig. 2). Therefore, among the participants without COVID-19 vaccination, the “Unsure” option predominantly reduced “No” intention responses.

Effects of the “Unsure” option when measuring COVID-19 booster and influenza vaccination among individuals with COVID-19 vaccination

When participants reported that they had received the COVID-19 vaccine, they were asked about their intention to receive the COVID-19 booster, and the “Unsure” option reduced the share of “Yes” responses from 84 to 51% (Table 2), generating a significant 37.5 ± 17.4 percentage point net change (see Table 3 and Fig. 2). This effect was statistically larger than any other effects generated in this experiment. The “Unsure” option also reduced the share of “No” responses for the COVID-19 booster (see Table 2), but this effect was smaller than the effect on “Yes” responses and not statistically significant (i.e., -11.9 ± 12.9%; see Table 3).

Although the effects for the influenza vaccine were smaller in magnitude than those for the COVID-19 booster, their direction was similar, as shown in Tables 2 and 3 and Fig. 2. Specifically, introducing the “Unsure” option reduced the share of “Yes” responses from 74 to 53% (Table 2), a significant effect of 20.7 ± 18.6 percentage points (see Table 3 and Fig. 2). The “Unsure” response option did not have a statistically significant effect on the probability that respondents reported “No” (a difference of -3.1 ± 15.9 percentage points; see Table 3 and Fig. 2). Therefore, among those who had received the COVID-19 vaccine, the “Unsure” option predominantly reduced the “Yes” responses when reporting their intentions to receive the COVID-19 booster and influenza vaccine.

Response order effects

When measuring COVID-19 vaccination intentions among participants who reported no prior COVID-19 vaccination, respondents who had the “No” option presented first were 3.8 ± 3.2 percentage points less likely to select “No” than respondents who had “Yes” presented first (as shown in Fig. 2, right column). However, presenting “No” first had no significant effect on choosing “Yes” (point estimate: 0.7 ± 2.9). The response order also had no statistically significant effects on the intention to receive the COVID-19 booster or the influenza vaccine among participants who reported having COVID-19 vaccination (see Fig. 2).

Discussion

Different approaches are used commonly to estimate vaccine intentions and predict future vaccination rates. Some survey questionnaires use dichotomous response sets (“Yes” or “No”), while others rely on a trichotomous set that includes an “Unsure” option. In this study, our primary aim examined if the dichotomous and trichotomous approaches are interchangeable or if their estimates differ. As hypothesized, the results of our experiment documented substantial instrumentation effects when comparing the dichotomous and trichotomous response options and not when comparing the order of the “Yes” versus “No” options. In case the order effects may be larger in magnitude than estimated, with minimal cost, vaccine surveys can routinely guard against this potential source of bias by rotating whether response options start with “Yes” or “No” and counterbalancing any effects.

Implications of the substantial instrumentation effects

The results from the experiment indicate that a dichotomous (versus trichotomous) response option set generates reports of vaccine intention that are more consistent with participants’ recent decision to receive or avoid COVID-19 vaccination. In contrast, the “Unsure” option within the trichotomous response set may prompt uncertainty among respondents and reduce their tendency to formulate intentions consistent with their recent decision to receive or avoid COVID-19 vaccination. However, future research should measure respondents’ level of certainty that they will repeat or deviate from past vaccination behavior. Future research should also attempt to replicate these effects in other contexts, such as those concerning other vaccines and non-vaccination behaviors.

Because the estimates vary substantially depending on the measurement approach, a standardized approach will benefit vaccination survey research by allowing estimates to be pooled across studies and compared among different study populations or time periods. The methodological survey literature, which has not focused on vaccination, currently lacks consensus on whether an “Unsure” option improves data quality compared to the dichotomous (“Yes” or “No”) options. For example, Taylor et al.21 have advocated for the dichotomous response option set because the “Unsure” option “simply defers endorsing a decision.” Iyer et al.22 would also exclude the indecisive option because “vaccine uptake is a binary outcome” where “everyone in the population will either get vaccinated or they will not.”

However, some researchers have argued that an “Unsure” or similar option should be offered to capture actual uncertainty23,24. If respondents’ uncertainty is genuine25,26, and equally likely among those who will ultimately resolve their doubt by getting vaccinated or abstaining, the inclusion of the “Unsure” response option would not necessarily degrade predictive validity. Several scientists have argued that the “Unsure” option is often selected not because of true uncertainty, but because individuals avoid the cognitive effort required to decide23,24. In this case, the “Unsure” response option would reduce the data’s validity23,24. When the goal is to predict vaccination rates, future research is needed to determine whether dichotomous or trichotomous response options better predict actual vaccination.

In our experiment, the effect of being offered the “Unsure” option differed significantly in magnitude across vaccines. Among the same group of individuals, the “Unsure” instrumentation effects were larger in magnitude for the novel COVID-19 booster than for the traditional influenza vaccination. Potentially, the difference in magnitude could capture the level of genuine uncertainty about the benefits and risks of the inoculation. Levels of uncertainty could differ depending on how unfamiliar respondents are with a particular vaccine and the disease it prevents.

Although the study did not measure the perception of novelty, respondents probably considered influenza to be a familiar disease. Whereas the COVID-19 disease emerged recently, influenza is a well-known disease for which symptoms and risks are established. In contrast to the revolutionary RNA technology, the influenza vaccine relies on traditional vaccine technologies; although the formulation varies annually, it is a familiar type of immunization. Even though individuals had received the COVID-19 vaccine before, the benefits and risks of a booster dose were controversial, and this was the first booster to be offered.

Study limitations

We note several study limitations, including the potential for enrollment bias. To limit enrollment bias, the study was described to potential participants in general terms (as a survey of health preferences) and did not mention vaccination or instrumentation effects. This bias is potentially relevant because the current study used a convenience sample rather than probability samples. However, the randomization performed for this experiment helps reduce the likelihood that this sampling strategy would explain the study results. Namely, estimates of vaccination intention varied between study arms in an experiment where randomization appears to have evenly distributed socio-demographic factors. Still, future research should test the degree to which estimates differ when using a nationally representative sample.

Since assignment to each study arm was randomized, specific vaccination beliefs, such as perceived risks, are also likely to be evenly distributed across these arms and unlikely to bias the study results. Admittedly, this study was not designed to identify the specific beliefs, such as perceived risks and benefits, that may influence vaccination intentions. (There are many studies designed to learn why some people intend to vaccinate and others do not.) Instead, this study was designed to compare estimates of vaccine intention when using two different measurement approaches.

Because this is a methodological study comparing measurement approaches for self-reported data, it is also worthwhile to note the potential role of social desirability. When self-reporting intentions toward some behaviors, social desirability bias may increase the chance that respondents select the “Unsure” option, which can be considered an evasive response25,26. As a remedy, anonymous, self-administered questionnaires reduce the chance that an indecisive response option is selected because of social desirability bias. In the current experiment, all questionnaires were self-administered anonymously, making it unlikely that social desirability bias explains the magnitude and direction of our effects. Yet, additional explanations of the instrumentation effects should also be evaluated, as well as remedies for any sources of bias.

Given that the results document substantial instrumentation effects, we call for additional research that was beyond the scope of the current study. In particular, future research could further evaluate the degree to which the documented instrumentation effects apply to other vaccines, including new vaccines (such as those under development using RNA technology) versus older ones (such as those that prevent shingles or pneumococcal disease). Additional testing could explore potential patterns if, for example, the magnitude or direction of the instrumentation effects tend to differ for newer versus older vaccines.

Lastly, it is important to stress that the current study was not designed to test which measure of vaccination intention best predicts future vaccination. Because the current study documents substantial instrumentation effects, these results justify a line of research testing which measurement approach has the strongest predictive validity and whether this determination depends on the vaccine or populations of interest. For example, it is worth considering if the measurement approach that best predicts uptake of a new, unfamiliar vaccine is the same approach that best predicts uptake of older, more familiar vaccines. With a new vaccine, intentions may be less stable over time (as new information becomes available), and this level of instability is known to influence the strength of the intention-behavior relationship3,4. When vaccination intentions are relatively unstable, the trichotomous measure of intention may have stronger predictive validity than the dichotomous measure. The opposite could be true for familiar vaccines that inspire less uncertainty. Other known moderators of the intention-behavior relationship may or may not equally weaken the predictive validity of the trichotomous versus dichotomous measurement approaches.

Conclusions

To our knowledge, this is the first study to systematically investigate instrumentation effects by varying the measurement approach used to estimate vaccine intention. As hypothesized, estimates of vaccine intention varied substantially depending on whether they were measured using dichotomous or trichotomous response options. These instrumentation effects were documented repeatedly when measuring intentions towards each of three different vaccination behaviors.

Meanwhile, we found little evidence for concern with order effects. Consistent with our results, survey methodologists have concluded that the number of response options “is often the most important decision to assure good measurement properties”14. In our study, if the “Unsure” option was included, the share reporting that they intend to vaccinate decreased as much as 37.5 ± 17.4 percentage points. Additionally, we learned that, compared to trichotomous options, the dichotomous options substantially increase the share of participants who report they intend to vaccinate. Conversely, when measuring intentions to vaccinate, threats to public health may appear to be much larger if questionnaires use trichotomous options.

Our results have immediate implications because, currently, it is unclear whether surveys can best predict future vaccination when using the dichotomous or trichotomous measures of intention. Given the magnitude of the instrumentation effects, our results suggest that one of these measurement approaches is likely to substantially degrade our ability to predict future vaccination. Given that the direction of these effects also varies, future research is needed to further examine potential mechanisms. Until the measurement approach is standardized, it is also unclear how the results from vaccination surveys using different approaches can be pooled or compared. Ideally, future research will address the need for more methodological rigor. As others have cautioned, if measurement errors are ignored, “one runs the risk of very wrong conclusions”16.

Methods

Ethics

The University of Pennsylvania's Institutional Review Board (Section 8: Social and Behavioral Research) approved this study and waived formal consent because participation was determined to pose minimal risk. All methods were carried out in accordance with relevant guidelines and regulations.

Study design and sample

Using a national sample, we conducted a randomized, controlled experiment that was embedded within a larger survey study, described elsewhere5 and preregistered with ClinicalTrials.gov (NCT04747327). We recruited an online sample through Prolific (www.prolific.com), a survey company that was developed by behavioral scientists.

Using demographic filters provided by Prolific, we limited enrollment to adults (at least 18 years old) residing in the United States. Prolific also includes filters for screening by lifestyle and health behaviors, one of which measures COVID-19 vaccine status. For the purpose of the larger survey study, we selected those who were not vaccinated against COVID-19. Because Prolific conducted its screening months prior to our experiment, our survey questionnaire measured COVID-19 vaccine status again. At this point, those who now reported being COVID-19 vaccinated were not excluded. In turn, the current study enrolled a group that had been vaccinated against COVID-19 and a group that had not.

Specific vaccines of interest varied by participant’s vaccination history

Among those who had received COVID-19 vaccination, we measured their intention to receive a COVID-19 booster and influenza vaccine. Those individuals who did not receive COVID-19 vaccination were only asked about their intention to do so; they were not asked about their intention to receive the influenza vaccine.

The group of respondents who had not received a COVID-19 vaccine also participated in a separate randomized test comparing the effects of vaccine mandates and incentives, with results detailed elsewhere6. To summarize, in the separate test, participants were randomly assigned to imagine a hypothetical scenario or not. (For example, some were assigned to imagine a hypothetical vaccine mandate policy.) The presence and type of scenario were randomly assigned so as not to bias the current study’s estimates of instrumentation effects.

Measuring vaccination intentions

Among those who had been fully vaccinated against COVID-19, the study outcome was based on responses to the following questions:

When the yearly flu vaccine becomes available in the next four weeks, would you want to get the shot?

When a COVID-19 vaccine booster becomes available in the next four weeks, would you want to get the shot?

Those who had not been vaccinated against COVID-19 did not receive the above questions. Instead, the study outcome for this group was based on responses to the below:

The COVID-19 shot is available. Would you want to get vaccinated against COVID-19 in the next 4 weeks?

The above items specify a time period for the future behavior (i.e., vaccination within four weeks) because doing so improves the validity and reliability of measures of behavioral intention7. However, measures of intention are not assumed to perfectly predict future behavior3. When intending to vaccinate within any time period, people may not always succeed if they encounter logistical challenges or other barriers outside their control3.

We also recognize that people can have various reasons for not wanting to vaccinate in the specified time period of four weeks, including perhaps not being eligible for vaccination within this time period. If the measure instead asked about a longer period, it is still possible that some people would not be eligible for vaccination. (For example, some may have previously experienced allergic reactions that preclude vaccination, even during a longer time period.) Fortunately, regardless of the time period specified, the use of random assignment helps ensure that those who are eligible for vaccination are evenly distributed across study arms.

Vaccine distribution timelines and recommendations

At the time of our study, vaccines against COVID-19 and influenza were officially recommended for adults. COVID-19 vaccines had been available for about ten months and the first COVID-19 vaccine booster was expected to be available in a few weeks, which was the same time frame expected for the influenza vaccine. COVID-19 vaccine distribution began in December 2020, with limited availability initially. This study was conducted in September 2021, when access to the COVID-19 vaccine had been greatly expanded nationally.

Measuring standard socio-demographics

To characterize all participants, we also measured their current age, gender, race, educational level, degree of financial stress and political party affiliation.

Statistical analysis

We used Qualtrics, a web-based software platform, to host the online experiment, automate randomization, and collect data, and we used Stata statistical software to conduct the analyses. The primary analytic goal was to test the effects of including the “Unsure” response option compared to the dichotomous response set. Stata is available from StataCorp at https://www.stata.com/; the version of the software we used is Stata18-MP.

For the primary analyses, we constructed a regression model with a binary dependent variable (Vi) and two constructions of this outcome variable. First, the variable was defined as a “Yes” outcome variable, scored as 1 if a given respondent i responded that she/he wanted to receive the vaccine, otherwise Vi = 0. We also considered a “No” outcome dependent variable specification, where the dependent variable receives a 1 if the respondent indicated s/he did not want to receive the vaccine. If the respondent did want to receive the vaccine, or they were unsure, this variable received a 0.

Finally, we estimated the relative uncertainty in the decisions across the vaccine choices by comparing the effect size on the "Unsure" variable, versus either definitive response (“Yes” or “No”). To do this, we reconfigured the data, “stacking” the binary “Yes” and “No” outcome data to assess net changes in outcomes associated with instrumentation effects simultaneously (which is analogous to examining the absolute value of a change). The new variable takes the value “one” if the respondent gave a decisive response (i.e., either “Yes” or “No”) to the vaccine intention question and equals “zero” if the respondent gave an indecisive response (i.e., “Unsure”). We reported the net change associated with the inclusion of the "Unsure" option away from decisive responses, either in the affirmative ("Yes," I intend to receive the vaccine) or in the negative ("No," I do not intend to receive the vaccine).
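The outcome constructions described above can be summarized in a short Python sketch. The function name `code_outcomes` and the dictionary keys are hypothetical; the sketch covers only the three binary indicators and does not attempt to reproduce the stacking step itself.

```python
def code_outcomes(response):
    """Map one survey response to the binary outcome variables."""
    if response not in {"Yes", "No", "Unsure"}:
        raise ValueError(f"unexpected response: {response!r}")
    return {
        "yes": int(response == "Yes"),               # "Yes" outcome
        "no": int(response == "No"),                 # "No" outcome
        "decisive": int(response in {"Yes", "No"}),  # 0 only for "Unsure"
    }

coded = [code_outcomes(r) for r in ["Yes", "Unsure", "No"]]
```

Under this coding, an “Unsure” response scores 0 on both the “Yes” and the “No” outcome, which matches the definitions given for the two dependent variables.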

To examine potential order effects, we included an indicator for whether the respondent viewed a response option set in which the “No” response option was presented first (denoted “NoFirst”) and another for whether the response option set included an “Unsure” option (denoted “Unsure”). The regression is formally specified as follows:

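$$V_i = \beta_0 + \beta_{NoFirst}\,\mathrm{NoFirst}_i + \beta_{Unsure}\,\mathrm{Unsure}_i + Z_i\gamma + \varepsilon_i \tag{1}$$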

The coefficients βNoFirst and βUnsure measured the corresponding instrumentation effects. For respondents who had not received a COVID-19 vaccine, we included in Eq. (1) an additional vector of dummy variables, collectively referred to as Zi, indicating which of ten randomized, hypothetical scenarios the respondent viewed (as summarized above and detailed elsewhere5). The instrumentation effects NoFirst and Unsure were interpreted relative to a dichotomous “Yes–No” response option set. The variance–covariance matrix was estimated using White heteroskedasticity-robust standard errors.
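As a rough illustration of this kind of model, the sketch below fits a linear regression of a binary outcome on the NoFirst and Unsure indicators and computes White (HC0) standard errors using only the Python standard library. It assumes a linear probability form and omits the scenario dummies Zi. The helper names (`solve`, `matmul`, `lpm_hc0`) and the toy data are hypothetical, and statistical packages may apply a finite-sample scaling to the robust variance that is not included here.

```python
def solve(A, b):
    """Solve A x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(A)
    M = [list(map(float, row)) + [float(rhs)] for row, rhs in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("design matrix is singular")
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(n):
            if r != col:
                factor = M[r][col] / M[col][col]
                M[r] = [a - factor * c for a, c in zip(M[r], M[col])]
    return [M[i][n] / M[i][i] for i in range(n)]


def matmul(P, Q):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(p * q for p, q in zip(row, col)) for col in zip(*Q)] for row in P]


def lpm_hc0(X, y):
    """OLS coefficients and White (HC0) standard errors.

    X is a list of rows that already includes a constant column.
    """
    k = len(X[0])
    XtX = [[sum(r[a] * r[b] for r in X) for b in range(k)] for a in range(k)]
    Xty = [sum(r[a] * yi for r, yi in zip(X, y)) for a in range(k)]
    beta = solve(XtX, Xty)
    resid = [yi - sum(b * x for b, x in zip(beta, r)) for r, yi in zip(X, y)]
    # Invert X'X one column at a time (X'X is symmetric, so is its inverse).
    cols = [solve(XtX, [float(i == j) for i in range(k)]) for j in range(k)]
    inv = [[cols[j][i] for j in range(k)] for i in range(k)]
    # "Meat" of the sandwich: sum of e_i^2 * x_i x_i'.
    meat = [[sum(e * e * r[a] * r[b] for r, e in zip(X, resid))
             for b in range(k)] for a in range(k)]
    V = matmul(matmul(inv, meat), inv)
    return beta, [V[i][i] ** 0.5 for i in range(k)]


# Toy data (not study data): four respondents per arm; 3 of 4 say "Yes"
# without the "Unsure" option and 1 of 4 with it, regardless of order.
rows = []
for no_first in (0, 1):
    for unsure in (0, 1):
        n_yes = 1 if unsure else 3
        for j in range(4):
            rows.append((no_first, unsure, int(j < n_yes)))

X = [[1.0, nf, u] for nf, u, _ in rows]
y = [v for _, _, v in rows]
beta, se = lpm_hc0(X, y)  # beta is ordered: constant, NoFirst, Unsure
```

In a linear probability model of this form, each coefficient reads directly as a change in the share of respondents giving the coded response, relative to the dichotomous “Yes–No” set.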

Data cleaning and sample size

When cleaning the data, we eliminated data from respondents who had duplicate identification numbers or a fraud score > 0. Qualtrics assigns both the fraud scores and the identification numbers. A fraud score is a numerical value that indicates the risk that a survey participant is illegitimate. Using the fraud score helps survey researchers identify and remove data created by bots completing surveys en masse or by a person who participated multiple times on behalf of others.
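The cleaning rule can be sketched as follows. The field names `respondent_id` and `fraud_score` are hypothetical stand-ins for the exported Qualtrics fields, and the sketch assumes that every record sharing a duplicated identification number is dropped (rather than keeping one copy), which the text does not specify.

```python
from collections import Counter


def clean(records):
    """Drop records with a duplicated ID or a fraud score above zero."""
    id_counts = Counter(r["respondent_id"] for r in records)
    return [
        r for r in records
        if id_counts[r["respondent_id"]] == 1 and r["fraud_score"] <= 0
    ]


# Toy records for illustration only (not study data).
records = [
    {"respondent_id": "a", "fraud_score": 0},
    {"respondent_id": "b", "fraud_score": 0},
    {"respondent_id": "b", "fraud_score": 0},   # duplicated ID
    {"respondent_id": "c", "fraud_score": 30},  # flagged as possible fraud
    {"respondent_id": "d", "fraud_score": 0},
]
kept_ids = [r["respondent_id"] for r in clean(records)]
```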

Currently, it is unclear whether survey data quality can be improved by excluding respondents with unusually fast completion times. Some prior research suggests that instrumentation effects can be stronger for respondents whose completion times are the fastest27,28. Others have argued that speeding respondents only add random noise to the data and either do not change the results or attenuate correlations only slightly [31, 32]. We conducted the analyses after excluding data from respondents who were unlikely to have paid attention. Specifically, the fastest 5% of respondents were eliminated, a recommendation based on their completion times being more than 1.5 standard deviations below the mean [31, 32]. The screened data are shown in the tables, figures, and discussion. We also ran the model with the full sample, and the results did not change meaningfully.
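A minimal sketch of the speeder screen is shown below, assuming completion time is recorded in seconds. The field names `respondent_id` and `seconds` and the toy timings are hypothetical, and the way the cutoff count is rounded down is an assumption rather than the authors' documented procedure.

```python
def drop_fastest(records, percent=5):
    """Remove the fastest `percent` percent of respondents by completion time."""
    n_drop = len(records) * percent // 100  # integer count, rounded down
    ordered = sorted(records, key=lambda r: r["seconds"])
    fastest_ids = {r["respondent_id"] for r in ordered[:n_drop]}
    return [r for r in records if r["respondent_id"] not in fastest_ids]


# Toy timings for illustration only: respondent 7 finishes implausibly fast.
records = [{"respondent_id": i, "seconds": 30 + 10 * i} for i in range(20)]
records[7]["seconds"] = 5

screened = drop_fastest(records)
```

Running the same model on both `records` and `screened` mirrors the comparison between the full and screened samples described above.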

Acknowledgements

We thank Andy Tan for reviewing an early draft.

Author contributions

Study conception (DA, JF); data collection (KAS, DS, CR, JF); interpreting results (KAS, DA, DS, CR, JF); data analyses (KAS, DS); drafting manuscript (KAS, JF); reviewing and/or editing manuscript (KAS, DS, CR, JF).

Funding

Support was provided to JF by the Message Effects Lab and the Institute for RNA Innovation, both at the University of Pennsylvania.

Data availability

The de-identified, raw data for this study is provided in the Supplemental Materials.

Competing interests

The authors declare no competing interests.

Footnotes

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

The online version contains supplementary material available at 10.1038/s41598-024-69129-5.

References

- 1. MacDonald, N. E., SAGE Working Group on Vaccine Hesitancy. Vaccine hesitancy: Definition, scope and determinants. Vaccine 33, 4161–4164 (2015).

- 2. Akbar, R. World Health Organization. Ten threats to global health in 2019. https://www.who.int/news-room/spotlight/ten-threats-to-global-health-in-2019 (2019).

- 3. Sheeran, P. Intention—Behavior relations: A conceptual and empirical review. Eur. Rev. Soc. Psychol. 12(1), 1–36 (2002).

- 4. daCosta, D. M. & Chapman, G. B. Moderators of the intention-behavior relationship in influenza vaccinations: Intention stability and unforeseen barriers. Psychol. Health 20(6), 761–774 (2005).

- 5. Fishman, J., Mandell, D. S., Salmon, M. K. & Candon, M. Large and small financial incentives may motivate COVID-19 vaccination: A randomized, controlled survey experiment. PLoS ONE 18(3), e0282518 (2023).

- 6. Fishman, J., Salmon, M., Scheitrum, K., Schaefer, A. & Robertson, C. Comparative effectiveness of mandates and financial policies targeting COVID-19 vaccine hesitancy: A randomized, controlled survey experiment. Vaccine 40(51), 7451–7459 (2022).

- 7. Fishman, J., Lushin, V. & Mandell, D. S. Predicting implementation: comparing validated measures of intention and assessing the role of motivation when designing behavioral interventions. Implement. Sci. Commun. 1, 81 (2020).

- 8. Campos-Mercade, P. et al. Monetary incentives increase COVID-19 vaccinations. Science 374, 879–882 (2021).

- 9. Callaghan, T. et al. Correlates and disparities of intention to vaccinate against COVID-19. Soc. Sci. Med. 272, 113638 (2021).

- 10. Ung, C. O. L. et al. Investigating the intention to receive the COVID-19 vaccination in Macao: Implications for vaccination strategies. BMC Infect. Dis. 22, 218 (2022).

- 11. Latkin, C. A., Dayton, L., Yi, G., Colon, B. & Kong, X. Mask usage, social distancing, racial, and gender correlates of COVID-19 vaccine intentions among adults in the US. PLoS ONE 16(2), e0246970 (2021).

- 12. Saris, W. & Gallhofer, I. Design, Evaluation, and Analysis of Questionnaires for Survey Research (Wiley, 2014).

- 13. Peterson, R. A. Constructing Effective Questionnaires. Vol. 1 (Sage publications Thousand Oaks, 2000).

- 14. DeCastellarnau, A. A classification of response scale characteristics that affect data quality: A literature review. Qual. Quant. 52(4), 1523–1559 (2018).

- 15. Andrews, F. Construct validity and error components of survey measures: A structural modelling approach. Public Opin. Q. 48, 409–442 (1984).

- 16. Saris, W. E. & Revilla, M. Correction for measurement errors in survey research: Necessary and possible. Soc. Indic. Res. 127, 1005–1020 (2016).

- 17. Albarracín, D. & Wyer, R. S. Jr. The cognitive impact of past behavior: Influences on beliefs, attitudes, and future behavioral decisions. J. Personal. Soc. Psychol. 79(1), 5–22 (2000).

- 18. Miller, J. M. & Jon, A. K. The impact of candidate name order on election outcomes. Public Opin. Q. 291–330 (1998).

- 19. van Erkel, P. F. & Thijssen, P. The first one wins: Distilling the primacy effect. Electoral Stud. 44, 245–254 (2016).

- 20. Israel, G. D. & Taylor, C. L. Can response order bias evaluations? Eval. Program Plan. 13(4), 365–371 (1990).

- 21. Taylor, S. et al. A proactive approach for managing COVID-19: The importance of understanding the motivational roots of vaccination hesitancy for SARS-CoV2. Front. Psychol. 10.3389/fpsyg.2020.575950 (2020).

- 22. Iyer, G., Nandur, V. & Soberman, D. Vaccine hesitancy and monetary incentives. Humanit. Soc. Sci. Commun. 9, 81 (2022).

- 23. Krosnick, J. & Presser, S. Question and Questionnaire Design. Handbook of Survey Research. (2010).

- 24. Krosnick, J. et al. The impact of “no opinion” response options on data quality: Non-attitude reduction or an invitation to satisfice? Public Opin. Q. 66(3), 371–403 (2002).

- 25. Gordon, R. A. Social desirability bias: A demonstration and technique for its reduction. Teach. Psychol. 14, 40–42 (1987).

- 26. Paulhus, D. L. Two-component models of socially desirable responding. J. Personal. Soc. Psychol. 46, 598–609 (1984).

- 27. Malhotra, N. Completion time and response order effects in web surveys. Public Opin. Q. 72(5), 914–934 (2008).

- 28. Greszki, R. et al. Exploring the effects of removing ‘too fast’ responses and respondents from web surveys. Public Opin. Q. 79(2), 471–503 (2015).
