Abstract

Importance

Declining mortality in the field of pediatric critical care medicine has shifted practicing clinicians’ attention to preserving patients’ neurodevelopmental potential as a main objective. Earlier identification of critically ill children at risk for incurring neurologic morbidity would facilitate heightened surveillance that could lead to timelier clinical detection, earlier interventions, and preserved neurodevelopmental trajectory.

Objective

Develop machine-learning models for identifying acquired neurologic morbidity while hospitalized with critical illness and assess correlation with contemporary serum-based, brain injury-derived biomarkers.

Design

Retrospective cohort study.

Setting

Two large, quaternary children’s hospitals.

Exposures

Critical illness.

Main Outcomes and Measures

The outcome was neurologic morbidity, defined according to a computable, composite definition at the development site or an order for neurocritical care consultation at the validation site. Models were developed using varying time windows for temporal feature engineering and varying censored time horizons prior to identified neurologic morbidity. Optimal models were selected based on F1 scores, cohort sizes, calibration, and data availability for eventual deployment. A generalizable created at the development site was assessed at an external validation site and optimized with spline recalibration. Correlation was assessed between development site model predictions and measurements of brain biomarkers from a convenience cohort.

Results

After exclusions there were 14,222–25,171 encounters from 2010–2022 in the development site cohorts and 6,280–6,373 from 2018–2021 in the validation site cohort. At the development site, an extreme gradient boosted model (XGBoost) with a 12-hour time horizon and 48-hour feature engineering window had an F1-score of 0.54, area under the receiver operating characteristics curve (AUROC) of 0.82, and a number needed to alert (NNA) of 2. A generalizable XGBoost model with a 24-hour time horizon and 48-hour feature engineering window demonstrated an F1-score of 0.37, AUROC of 0.81, AUPRC of 0.51, and NNA of 4 at the validation site. After recalibration at the validation site, the Brier score was 0.04. Serum levels of the brain injury biomarker glial fibrillary acidic protein measurements significantly correlated with model output (rs=0.34; P=0.007).

Conclusions and Relevance

We demonstrate a well-performing ensemble of models for predicting neurologic morbidity in children with biomolecular corroboration. Prospective assessment and refinement of biomarker-coupled risk models in pediatric critical illness is warranted.

Introduction

An estimated 340,000 children are hospitalized with critical illness every year in the United States and brain injury has been cited as the proximate cause of death in approximately 90% of previously healthy children who do not survive their intensive care admission.1,2 Of children who survive critical illness, acquired neurologic morbidity can have long-lasting implications which range from mild impairments in cognition to profound debilitation. Declining mortality in the field of pediatric critical care has led to increased attention to the longer-term functional outcomes of children who survive an intensive care admission.3

Granular, time-series data harbored by the electronic health record (EHR) offer a rich training ground for probabilistic models of important patient outcomes. Implementing well-performing models as clinical decision support (CDS) systems is a promising approach for improving outcomes related to many different conditions and situations, though there are currently no established tools for identifying children at risk for new brain injury.4–7 Recently enacted federal mandates in the United States of America (USA) are promoting the development of EHRs that facilitate the deployment of interoperable decision support tools built to leverage a core dataset.8

The main objective of the present work was to construct and externally validate predictive models to support the identification of critically ill children at high risk for acquired neurologic morbidity, as a first step towards the development of a decision support tool that might be used to forewarn of neurologic morbidity amongst critically ill children, as well as to aid in the enrichment of prospective trials examining strategies to mitigate the risk of brain injury during pediatric critical illness. A second objective was to corroborate the biological underpinnings of the developed prediction models by assessing correlation with novel, brain-derived, serum-based biomarkers of brain injury obtained from a diagnostically diverse cohort of critically ill children.

Methods

Study Sites

Model development used data from all encounters to a quaternary pediatric intensive care unit (PICU) in a large, freestanding children’s hospital between January 1, 2010 and December 31, 2022. The development site PICU serves a region of approximately 5 million people, encompassing Western Pennsylvania and bordering states, and is a level 1 pediatric trauma center. External model validation occurred using data from encounters admitted between January 1, 2018 and December 31, 2023 to a quaternary PICU in a large, freestanding children’s hospital that serves as a referral center for the 5-state region of Washington, Wyoming, Alaska, Montana, and Idaho. Approval was granted by the institutional review boards of the University of Pittsburgh (Institutional Review Board [IRB] #17030743) and Seattle Children’s Hospital (IRB #STUDY00001374). Findings are reported according to the Transparent Reporting of a Multivariable Prediction Model for Individual Prognosis or Diagnosis (TRIPOD) statement (Supplemental Table 1).

Model Development Frameworks

Conceptualization of the model adhered to the Littenberg framework for the development of clinical decision support tools, which considers the clinical and technical plausibility of the tool, as well as the process outcomes, patient outcomes, and eventual societal outcomes addressed by the tool (Supplemental Table 2).9 The first 5 steps of the cross-industry standard process for data modeling (CRISP-DM) framework were followed for model design. CRISP-DM outlines six steps for data science projects that include 1) understanding the use case; 2) understanding the data; 3) data curation; 4) model development; 5) model evaluation; and 6) model deployment.10

Model Development Approach

Model development proceeded in 2 phases: 1) Development of models for use locally at the development site; 2) Development of generalizable models with external validation. The outcome of neurologic morbidity was defined using structured EHR data surrogates based on each study site’s clinical and electronic workflows. At the development site, the outcome was a previously validated, computable, composite definition of neurologic morbidity that incorporated orders for electroencephalography (EEG), brain computed tomography (CT), brain magnetic resonance imaging (MRI), or indicators of treated delirium within 72-hours of one another (Supplemental Table 3).11 This outcome has also been validated in a separate cohort of children with sepsis.12 At the validation site, orders for a neurocritical care service consultation were deemed to be the most reliable surrogate for neurologic morbidity during an episode of critical illness. Data for control cases (hospitalized children who did not meet the definition of a neurologic morbidity) were collected from a random period during the encounter with preference to a window following the first PICU admission.

Candidate data elements for model construction were selected based on clinical expertise and with attention to the United States Core Data for Interoperability (USCDI) requirements to facilitate eventual, interoperable deployment (Supplemental Tables 4 and 5).13 A ‘Biodigital Rapid Alert to Identify Neurologic morbidity, A-I bundle (BRAIN A-I)’ standard clinical vocabulary value set was filed with the National Library of Medicine’s Value Set Authority Center.14 Features were engineered with the dual aims of representing the temporality of the data while also preserving clinical interpretability of the features, using methods previously reported.15 Features were then discretized, or categorized into information bins, with missingness encoded as a feature. In addition to preserving possible information associated with missingness, discretization was performed to reduce the influence of outlier data, represent data nonlinearity in linear modeling processes such as logistic regression, further mitigate overfitting, and preserve clinical interpretability of the features. Additional details of data curation and model development are in the Supplemental Model Methods. Supplemental Figure 4 summarizes model construction at the development site and evaluation at the external validation site. At the development site, data were queried from an Oracle (Oracle Corp, Austin, TX) data warehouse containing a subset of transformed tables from the Cerner Millennium database (Oracle Cerner, Kansas City, MO). The model was developed and assessed using Python (version [v]3.10.11), Jupyter (v1.0.0), and the packages Pandas (v1.5.3), Numpy (v1.25.0), Matplotlib (v3.71.1), Sklearn (v1.1.1), XGBoost (v1.7.3), Seaborn (v0.11.2), SHAP (v0.41.0), and tqdm (v4.65.0).

Biomolecular Corroboration of the Model at the Development Site

Model predictions were compared to measured levels of 6 serum-based, brain-derived biomarkers of brain injury obtained from a previously assembled convenience cohort of 101 children hospitalized between 2012–2014 (IRB #19040172). The biomarkers were ubiquitin C-terminal hydrolase-L1 (UCH-L1), glial fibrillary acidic protein (GFAP), myelin basic protein (MBP), neuron-specific enolase (NSE), S100 calcium binding protein B (S100B), and spectrin breakdown product 150 (SBDP150). After prospective consent from a legal guardian, biomarker levels were collected for up to 7 consecutive days from critically ill children with preexisting central venous catheters or arterial catheters. Details of the assays are provided in the Supplemental Biomarker Methods. Maximum values of each biomarker for each encounter were assessed for correlation with the predicted probability of neurologic deterioration for that encounter. Patients were determined to have a neurologic complication by chart review if it occurred no more than 7 days after the last date a biomarker was collected.

Model Selection and Statistical Analysis

The top-performing model was selected based on F1 score, considering a clinically actionable time horizon, as well as the volume of available training data for feature engineering. Models with <0.15 difference in F1 scores were then compared both by visual inspection of calibration plots and Brier scores. Additional Fβ thresholds of 0.5, 2, and 3 were secondarily evaluated to identify whether there were any substantial differences in the optimal classifier based on the relative weight of recall compared to precision. Statistical performances of the top-performing models were evaluated at varied model outputs ranging from 0.025 to 0.9. Spline regression was performed on top-performing models to improve calibration. Normally distributed continuous data are presented as means and 95% confidence intervals, nonparametric continuous data are presented as medians with interquartile ranges (IQRs), and categorical data are presented as counts with corresponding proportions. Model discrimination was compared to the discrimination of the last Glasgow coma scale (GCS) score prior to the censored time horizon using DeLong’s method. For the biomolecular corroboration analysis, Spearman’s rank-order correlation was assessed between a chart-adjudicated neurologic morbidity outcome and the composite neurologic morbidity outcome, as well as between the probability output of top-performing models and the composite neurologic morbidity outcome. Correlation was then assessed between biomarker levels and the probability output of the top-performing models. Notched boxplots with overlying violin plots were constructed for significantly correlated biomarkers by dichotomizing predicted neurologic morbidity according to whether the probability was <0.5 or ≥0.5. The distributions of biomarker measurements were normalized for plotting using log transformation and significance testing was assessed using an independent t test. An α < 0.05 is considered significant. Statistical analyses not performed in Python were performed in R version 4.3.1 (R Foundation, Vienna, Austria).

Results

Development Site Models Performance

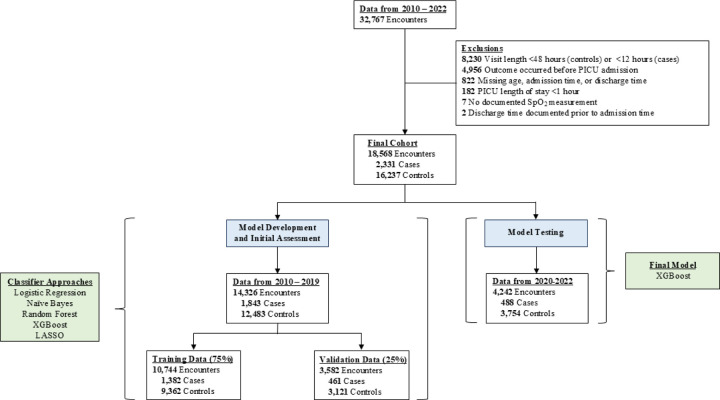

There were 32,702 encounters with a PICU stay. After exclusions, cohort sizes ranged from 14,222–25,171 encounters, with 18,568 encounters in the final model cohort (Supplemental Table 8; Figure 1A). Patients were slightly older, received less mechanical ventilation, and less sedative-analgesic medications in the final test dataset compared to the training and validation datasets (Table 1). The final models evaluated in the test dataset was the extreme gradient boosting (XGBoost) model with a 12-hour time horizon and 48-hour feature window. This model was determined by investigator agreement to be a reasonable balance of favorable F1 scores, calibration as assessed by a Brier score, visual inspection of the calibration plot, clinically actionable time horizon, and sufficient cohort size for the training, validation, and test datasets. Complete development site model performance characteristics, 1 for each of the combinations of a 6, 12, and 24-hour censored time horizons and 24, 48, and 72-hour feature windows selected based on F1 score, are detailed in Supplemental Table 9. Each approach generated 605 features prior to information gain feature selection. The F1 scores are reported in Supplemental Tables 10A and 10B. Additional Fβ scores largely agreed with the model assessments provided by F1 scores and are presented in Supplemental Table 11A–C.

Figure 1.

Cohort ascertainment for the final model at the development site, which included features engineered using 48 hours of preceding data and censoring 12-hours prior to the event for cases. Initial model development and validation proceeded using data from 2010–2019. The model was tested using data from 2020–2022. Abbreviations: LASSO, least absolute shrinkage and selection operator; PICU, pediatric intensive care unit; XGBoost, extreme gradient boosting.

Table 1.

Demographic characteristics of the entire cohort and the parsed training, validation, and final test datasets at the development site and the entire cohort, training, and validation datasets, as well as the generalizable model validation dataset at the external validation site.

| Development Site | Validation Site | ||||

|---|---|---|---|---|---|

| Characteristic | Entire Cohort | Training Dataset | Validation Dataset | Final Test Dataset | Generalizable Model Validation Dataset |

| N = 18,568 | n = 10,744 | n = 3,582 | n = 4,242 | N = 6,825 | |

| Age (months), median (IQR) | 70 (18, 161) | 67 (18, 158) | 68.5 (18, 160) | 77 (19, 167) | 96 (18,171) |

| Female, n (%) | 8,325 (45) | 4,748 (44) | 1,593 (44) | 1,984 (47) | 3,159 (46) |

| Glasgow Coma Scale Score, median (IQR)* | 15 (12, 15) | 15 (11, 15) | 15 (11, 15) | 15 (14, 15) | 14 (14,15) |

| Mechanical Ventilation, n (%) | 5,352 (29) | 3,322 (31) | 1,143 (32) | 887 (21) | 1,948(29) |

| Endotracheal Tube, n (%) | 1,759 (9) | 1,142 (11) | 370 (10) | 247 (6) | 1,283 (19) |

| Vasoactive Medication, n (%)** | 720 (4) | 393 (4) | 148 (4) | 179 (4) | 221 (3) |

| Sedative-Analgesic Medication, n (%)*** | 4,660 (25) | 2,948 (27) | 987 (27) | 727 (17) | 1,930 (28) |

The last recorded Glasgow coma scale score for the encounter prior to the censored time horizon

Vasoactive medications include dobutamine, dopamine, epinephrine, norepinephrine, or milrinone

Sedative-analgesic medications include fentanyl, hydromorphone, midazolam, or morphine

Abbreviations: IQR, interquartile range

The final model contained 352 features and had a number needed to alert (NNA) of 2 when considering a model prediction of greater than or equal to 0.5 as positive. At a model prediction threshold of 0.025 in the test dataset, sensitivity increased to 0.86 and NAA was 4. Statistical performance of the top-performing validation models and final test model at a range of output thresholds are in Supplemental Table 12A and 12B and Supplemental Figure 5. All development site models had a NNA of 2–3 at this prediction threshold. In the test dataset the final model had a sensitivity of 0.47 (range for all models in the validation dataset [range] 0.24–0.63), specificity of 0.98 (range 0.96–0.99), AUPRC 0.68 (range 0.39–0.78), and AUROC of 0.89 (range 0.80–0.87). The final model had significantly greater discrimination compared to the last GCS AUROC of 0.72 obtained prior to the censored time horizon, P<0.001. Calibration plots of models with comparable performance based on F1-scores are displayed in Supplemental Figure 6. The top 10 features of the final model are displayed in Supplemental Figure 7. Average hourly scores for cases and controls for the 12 hours preceding and 4 hours following an outcome event are displayed in Figure 2.

Figure 2.

Average hourly scores in the test dataset (encounters with a PICU stay in the year 2020 – 2022) for varied censored time horizon windows for the extreme gradient boosted model developed using a 12-hour time horizon and 48-hour feature window. The red dots are the average hourly scores 12 hours prior to an event and 4 hours after an event for the case encounters (encounters with an identified neurologic morbidity) and the shaded red region represents the 95% confidence interval. The black dots are the average hourly scores for the control encounters (encounters without an identified neurological morbidity). Confidence intervals for the control encounters are not discernable in the figure due to the large cohort size. The size of the dots is proportionate to the cohort size at that timepoint.

Biomolecular Corroboration at the Development Site

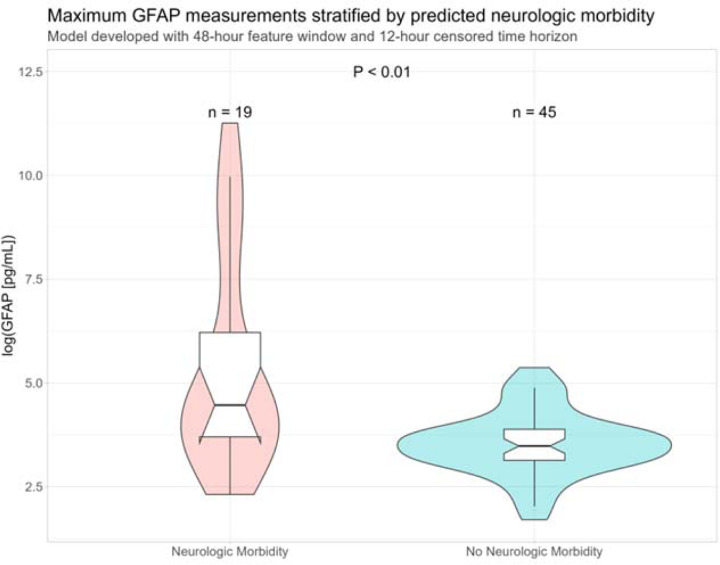

Of the 101 patients with available brain-derived biomarkers measured, 64 also had model predictions for the 12-hour time horizon and 48-hour feature window models. Chart-adjudicated neuromorbidity within 7 days of last biomarker collection was significantly correlated with the composite neurologic morbidity outcome, rs=0.38 (P=0.002). The extreme gradient boosting model was significantly correlated with the composite neurologic morbidity outcome, rs=0.80 (P<0.001). The logistic regression model had an F1 score that was nearly identical to the extreme gradient boosting model and was also significantly correlated with the composite neurologic morbidity outcome, rs=0.55 (P<0.001). Extreme gradient boosting predictions were significantly correlated with maximum GFAP measurements, rs=0.34 (P=0.007) (Figure 3).

Figure 3.

Log-transformed maximum GFAP measurements for a convenience cohort of 64 patients, stratified by predicted neurologic morbidity using the 12-hour time horizon 48-hour feature window extreme gradient boosting model. Abbreviations: GFAP, glial fibrillary acidic protein; mL, milliliter; pg, picogram.

Generalizable Model Performance

Cohort ascertainment for the generalizable model is reported for the 24-hour time horizon and 48-hour feature window model at the development site in Supplemental Table 8 and for the validation site in Supplemental Table 13. The generalizable model performance at the development and validation sites is reported in Table 2. Performance was comparable to earlier 24-hour time horizon 48-hour feature window models at the development. As the XGBoost and logistic regression models performed comparably, both were assessed at the validation site. There were 6,825 encounters in the external validation site final cohort (387 cases and 6,438 controls). As assessed by an F1 score of 0.37 at a threshold of 0.5, the top performing model was the XGBoost model, with an external validation AUROC of 0.81, AUPRC of 0.51, and an NNA of 4. Model performance characteristics across varied thresholds are displayed in Supplemental Table 14 and Supplemental Figure 8. Calibration was again excellent at the development site, initially poor at the external validation site, then substantially improved after spline recalibration at the validation site (Supplemental Figure 9). Feature importance analysis for the generalizable model was similar between the development and validation sites (Supplemental Figure 10). All models outperformed the GCS, P<0.001.

Table 2.

Performance of the generalizable model at the development and external validation sites.

| Development Site | Validation Site | |||||||

|---|---|---|---|---|---|---|---|---|

| Dataset | Development | Validation | Test | External Validation | ||||

| Encounters (Cases/Controls), N |

10,457 (1,095/9,362) |

3,486 (365/3,121) |

4,152 (398/3,754) |

6,825 (387/6,438) |

||||

| Model | XGB | LR | XGB | LR | XGB | LR | XGB | LR |

| Feature Selection | IG | IG | IG | IG | IG | IG | IG | IG |

| AUROC | 1.00 | 0.90 | 0.81 | 0.81 | 0.87 | 0.86 | 0.81 | 0.82 |

| AUPRC | 0.99 | 0.71 | 0.52 | 0.54 | 0.62 | 0.61 | 0.51 | 0.48 |

| PPV | 1.00 | 0.84 | 0.67 | 0.69 | 0.82 | 0.80 | 0.26 | 0.18 |

| NPV | 0.99 | 0.94 | 0.93 | 0.93 | 0.94 | 0.94 | 0.97 | 0.98 |

| Sensitivity | 0.91 | 0.47 | 0.35 | 0.41 | 0.35 | 0.38 | 0.61 | 0.70 |

| Specificity | 1.00 | 0.99 | 0.98 | 0.98 | 0.99 | 0.99 | 0.90 | 0.81 |

| F1 score | 0.95 | 0.61 | 0.46 | 0.51 | 0.49 | 0.52 | 0.37 | 0.29 |

| F2 score | 0.92 | 0.52 | 0.39 | 0.45 | 0.39 | 0.43 | 0.48 | 0.45 |

| F3 score | 0.92 | 0.50 | 0.37 | 0.43 | 0.37 | 0.41 | 0.54 | 0.55 |

| F0.5 score | 0.98 | 0.73 | 0.57 | 0.60 | 0.65 | 0.66 | 0.30 | 0.22 |

Abbreviations: AUROC, area under the receiver operating characteristics curve; AUPRC, area under the precision recall curve; CFS, correlation-based feature selection; IG, information gain; LR, logistic regression; NB, naïve Bayes; NPV, negative predictive value; PPV, positive predictive value; XGB, XGBoost (extreme gradient boosting)

Discussion

In this study, we constructed well-performing models for predicting neurologic morbidity among critically ill children using EHR data from 2 large children’s hospitals. These models were trained using more than 600 features engineered to capture nonlinear relationships between predictors and the outcome. The top performing model at the development site had 352 features and a NNA of 2, suggesting the utility of incorporating more features than can be accommodated by traditional, manually-tabulated clinical decision rules. A generalizable model demonstrated robust performance at both the development site and the external validation site. All models outperformed the GCS, supporting machine-learning-based methods to facilitate clinical activities including identification of high-risk patients for clinical intervention and for identifying an enriched population for enrollment in clinical trials.16 By largely adhering to data elements prioritized by USCDI, the developed models have a clearer path to implementation in modern informatics architectures capable of data transfer using standard clinical vocabularies and the fast healthcare interoperability resources (FHIR) standard.17 The generalizable model relies on 41 variables, 37 of which are included in USCDI versions 1 or 2 and are therefore expected to ease eventual work associated with deployment.

Many predictive models are constructed utilizing a snapshot of information from a discrete moment in time.18,19 For predictive models to more completely leverage the content of the EHR, the temporality of data must be incorporated into model features. The performance of the present models was likely bolstered by incorporating features engineered using vector space representations of patient state, resulting in performance metrics that surpass those of other commonly used critical care risk models.15 The Simplified Acute Physiology Score, a commonly used mortality prediction tool for critically ill adults, has reported AUPRCs between 0.2–0.3 for in-hospital and 30-day mortality.20 The sequential organ failure assessment (SOFA), quick SOFA, and systemic inflammatory response syndrome criteria have reported AUPRCs of 0.06, 0.1, and 0.09 predicting mortality at the time of sepsis onset, respectively.21 By comparison, our model ensemble had AUPRCs ranging from 0.39–0.78 at the development site and 0.2–0.42 at the validation site. We undertook the present work with an expectation that identification of impending neurologic deterioration requires examination of contextual elements of care and more subtle vital sign and laboratory signatures which may serve as a harbinger of unfavorable trajectory.

Correlation between a top-performing model and measurements of GFAP from a convenience cohort is compatible with our previous investigations of brain biomarkers in critically ill children.22 GFAP is found in astrocytes and plays a role responding to central nervous system injuries and related neurodegeneration.23 GFAP measurements from our convenience cohort were obtained for the first 7 days of the PICU stay and may have been obtained remote from an incurred brain injury, including one detected by the composite neurologic morbidity outcome. Notably, our composite neurologic morbidity outcome was significantly correlated with chart-adjudicated neurologic morbidity and an XGBoost model was significantly associated with GFAP levels. Most extensively studied in the context of traumatic brain injury, a growing body of evidence suggest GFAP may be useful to identify more subtle insults to the central nervous system, and that the ability to measure GFAP in the bloodstream in non-traumatic diseases might relate to its dispersion into the bloodstream via recently discovered glymphatic pathways.24,25 Our models may prove useful both to determine for which patients a GFAP level should be obtained, as well as coupled with the GFAP measurements to bolster model performance.

This work has some important limitations. Use of a composite definition of neurological morbidity intrinsically omits occult neurological morbidities that did not trigger clinical action and represents a source of potential bias in model development. While the computable composite definition of neurologic morbidity used in the present study has previously demonstrated high specificity, the modest sensitivity of the definition suggests that the present models may miss neurologic morbidities that do not warrant inpatient imaging, EEG, a mental health assessment or a medication directed at psychosis or delirium. This limitation, however, can be mitigated by assessing performance characteristics, including varied Fβ scores or sensitivities at different output thresholds, according to context and adjusting the model actionable threshold in a manner tailored to the clinical environment in which it is deployed. Moreover, while performance was robust at an external validation site relative to other established risk scores, statistical metrics did deteriorate compared to those observed in the test dataset at the development site. Notably, the GCS also had a lower AUROC at the validation site compared to the GCS AUROC at the development site, suggesting that the choice of neurocritical care consult as an outcome influenced the models’ performance characteristics.

In conclusion, we developed well-performing models for predicting children with critical illness at risk for neurologic morbidity. A flexible, distributed strategy for model development in partnership with an external validation site demonstrated the utility of adapting to varied informatics infrastructures and EHR deployments to generate well-performing predictive models for a common clinical goal. A generalizable model demonstrated robust performance in external validation. Prospective, multi-site assessment of a generalizable model coupled with brain-based biomarkers is warranted to assess the combined utility for identifying patients at high-risk for incurred neurologic morbidity and evaluating interventions to improve outcomes in this population.

Supplementary Material

Supplemental Table 1. TRIPOD checklist.

Supplemental Table 2. Littenberg framework for the assessment of medical technology as applied to BRAIN A-I.

Supplemental Table 3. Data curation steps for individual data elements for the BRAIN AI outcome.

Supplemental Table 4. Data curation steps for individual data elements for BRAIN A-I.

Supplemental Table 5. Standard vocabulary crosswalk for BRAIN-AI components.

Supplemental Table 6. Predictive performance of the XGBoost model with a 12-hour censor horizon and 48-hour feature window in the test dataset, after manual tuning, and after Bayesian tuning.

Supplemental Table 7. Predictive performance of the XGBoost model with a 12-hour censor horizon and 48-hour feature window in the validation dataset, after manual tuning, and after Bayesian tuning.

Supplemental Table 8. Cohort ascertainment and exclusions for varied censored time horizons and feature windows at the development site.

Supplemental Table 9. Performance of the optimal models in the validation dataset at the development and validation sites.

Supplemental Table 10 A) F1 scores of top performing models in the development site validation dataset. B) F1 scores of top performing models in the validation site test dataset.

Supplemental Table 11 A) Fβ (β =2) scores of top performing models in the development site validation dataset; B) Fβ (β =3) scores of top performing models in the development site validation dataset; C) Fβ (β =0.5) scores of top performing models in the development site validation dataset.

Supplemental Table 12 A) Statistical performance of the 12-hour time horizon, 48-hour feature window XGBoost and logistic regression models over a range of score thresholds in the development site validation dataset; B) Statistical performance of the 12-hour time horizon, 48-hour feature window XGBoost model over a range of score thresholds in the development site test dataset.

Supplemental Table 13. Cohort ascertainment and exclusions for varied feature windows for the validation site.

Supplemental Table 14 A) Statistical performance of the extreme gradient boosting (XGBoost) and logistic regression generalizable models at the development site across varied output thresholds. B) Statistical performance of the extreme gradient boosting (XGBoost) and logistic regression generalizable models at the external validation site across varied output thresholds.

Supplemental Figure 1. A representation of the time window and censor horizons used to define cases and controls as part of the development, validation, and test cohorts. The blue boxes in the top, ‘Cases’ box identify a time window that is also demarcated by a horizontal, gold, bidirectional arrow, the horizontal black lines represent length of stay for individual encounters, the vertical black line represents the occurrence of the neurological morbidity outcome, and the gold, horizontal, bidirectional arrow indicates the censor horizon, or period of time that data were not incorporated into the model. In the bottom, ‘Controls’ box, the blue boxes indicate the time window of data used for each stage of model development and evaluation.

Supplemental Figure 2. Data cleaning and feature engineering process.

Supplemental Figure 3. A representation of the feature engineering for continuous biomarker measurements. Temporal information is represented as a variety of summary measurements for discrete windows of time. Feature windows of 24-hours, 48-hours, and 72-hours are displayed in the figure. The models at the development site were trained with 6-hour, 12-hour, and 24-hour censored time horizons, with 12-hour and 24-hour horizons demonstrated in the figure. Definitions and related examples for a 24-hour window of data prior to the censor period are displayed in the table. Abbreviations: h, hours.

Supplemental Figure 4. The process of BRAIN A-I model development and external validation. Curated data at the development site were divided into a train cohort, validation cohort, and 2-years of holdout test data. The curated data were used to generate synthetic data with comparable single variable statistical distributions. The synthetic data were then distributed to the external validation site with model training code, facilitating local data curation by providing the necessary details of data structure. Finally, the working BRAIN A-I pipeline was applied to real-world data at the external validation site, applied separate training and validation datasets. Abbreviations: BRAIN A-I, Biodigital Rapid Alert to Identify Neuromorbidity A-I Bundle; Dev. Site, Development Site; Valid. Site, Validation Site.

Supplemental Figure 5. Plots the key statistical performance metrics sensitivity (gold line), positive predictive value (PPV, red line), F1 score (blue line), and F2 score (green line) with metric values on the y-axis and model output thresholds on the x-axis for A) the logistic regression model with a 12-hour time horizon and 48-hour feature window in the development site validation dataset; B) the extreme gradient boosting model with a 12-hour time horizon and 48-hour feature window in the development site validation dataset; and C) the extreme gradient boosting model with a 12-hour time horizon and 48-hour feature window in the development site test dataset.

Supplemental Figure 6. Calibration plots and associated Brier scores for the top performing models for varied time horizons and features windows in the validation dataset. A) 6-hour time horizon and 24-hour feature window; B) 6-hour time horizon and 48-hour feature window; C) 6-hour time horizon and 72-hour feature window; D) 12-hour time horizon and 24-hour feature window; E) 12-hour time horizon and 48-hour feature window; F) 12-hour time horizon and 72-hour feature window; G) 24-hour time horizon and 24-hour feature window; H) 24-hour time horizon and 48-hour feature window; I) 24-hour time horizon and 72-hour feature window. Abbreviations: LR, logistic regression; XGB, extreme gradient boosted.

Supplemental Figure 7. Top 10 biomarker feature categories based on Shap values for the 12-hour time horizon 48-hour feature window XGBoost model from the development site. Each category contains several features, e.g. Temperature contains maximum temperature, minimum temperature, average temperature, etc. The blue bars represent the sum of the mean Shap values for each category. Abbreviations: biomrkrs, biomarkers.

Supplemental Figure 8. Plots the key statistical performance metrics sensitivity (gold line), positive predictive value (PPV, red line), F1 score (blue line), and F2 score (green line) with metric values on the y-axis and model output thresholds on the x-axis for the generalizable A) logistic regression model in the development site test dataset; B) extreme gradient boosting model in the development site test data set; C) logistic regression model in the external validation dataset; D) extreme gradient boosting model in the external validation site dataset.

Supplemental Figure 9. Calibration plots for the generalizable model in the A) development site test dataset and B) the external validation site dataset.

Supplemental Figure 10. Feature importance analysis for the generalizable model in the A) development site test dataset and B) external validation site dataset.

Key Points.

Question

Can interoperable models for predicting neurological deterioration in critically ill children be developed, correlated with serum-based brain-derived biomarkers, and validated at an external site?

Findings

A development site model demonstrated an area under the receiver operating characteristics curve (AUROC) of 0.82 and a number needed to alert (NNA) of 2. Predictions correlated with levels of glial fibrillary acidic protein in a subset of children. A generalizable model demonstrated an AUROC of 0.81 and NNA of 4 at the validation site.

Meaning

Well performing prediction models coupled with brain biomarkers may help to identify critically ill children at risk for acquired neurological morbidity.

Acknowledgements

We would like to posthumously thank Dr. Ron Hayes (Banyan Biomarkers) for brain biomarker measurements; Dr. Pat Kochanek for helpful guidance; Dr. Henry Ogoe for helping to run an early version of the pipeline at UPMC Children’s Hospital of Pittsburgh; Mrs. Nassima Bouhenni for her initial efforts updating code; Mr. Dan Ricketts for his assistance setting up and maintaining virtual machines used for the final analyses; and Mr. Thomas Mathie for his assistance querying EHR data. United States Patent Application No. 17/760,558 and International Patent Application PCT/US2020/061985 have been filed related to this work.

Funding:

NINDS R01NS118716 and NLM 5T15LM007059-38

Funding Statement

NINDS R01NS118716 and NLM 5T15LM007059-38

Footnotes

Data Sharing Statement:

Data are available for specific use cases with investigator approval.

References

- 1.Au AK, Carcillo JA, Clark RSB, Bell MJ. Brain injuries and neurological system failure are the most common proximate causes of death in children admitted to a pediatric intensive care unit. Pediatr Crit Care Med 2011; 12: 566–71. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Bell JL, Saenz L, Domnina Y, et al. Acute Neurologic Injury in Children Admitted to the Cardiac Intensive Care Unit. Ann Thorac Surg 2019; 107: 1831–7. [DOI] [PubMed] [Google Scholar]

- 3.Pollack MM, Holubkov R, Funai T, et al. Simultaneous Prediction of New Morbidity, Mortality, and Survival Without New Morbidity From Pediatric Intensive Care: A New Paradigm for Outcomes Assessment. Crit Care Med 2015; 43: 1699–709. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Sepanski RJ, Godambe SA, Mangum CD, Bovat CS, Zaritsky AL, Shah SH. Designing a pediatric severe sepsis screening tool. Front Pediatr 2014; 2: 56. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Schlapbach LJ, MacLaren G, Festa M, et al. Prediction of pediatric sepsis mortality within 1 h of intensive care admission. Intensive Care Med 2017; 43: 1085–96. [DOI] [PubMed] [Google Scholar]

- 6.Ruiz VM, Saenz L, Lopez-Magallon A, et al. Early prediction of critical events for infants with single-ventricle physiology in critical care using routinely collected data. J Thorac Cardiovasc Surg 2019; 158: 234–243.e3. [DOI] [PubMed] [Google Scholar]

- 7.Sutherland SM. Electronic Health Record-Enabled Big-Data Approaches to Nephrotoxin-Associated Acute Kidney Injury Risk Prediction. Pharmacotherapy 2018; 38: 804–12. [DOI] [PubMed] [Google Scholar]

- 8.21st Century Cures Act: Interoperability, Information Blocking, and the ONC Health IT Certification Program. Federal Register. 2020; published online May 1. https://www.federalregister.gov/documents/2020/05/01/2020-07419/21st-century-cures-act-interoperability-information-blocking-and-the-onc-health-it-certification (accessed Nov 26, 2022).

- 9.Littenberg B. Technology assessment in medicine. Academic Medicine 1992; 67: 424–8. [DOI] [PubMed] [Google Scholar]

- 10.Schröer C, Kruse F, Gómez JM. A Systematic Literature Review on Applying CRISP-DM Process Model. Procedia Computer Science 2021; 181: 526–34. [Google Scholar]

- 11.Alcamo AM, Clark RSB, Au AK, et al. Factors Associated With Neurobehavioral Complications in Pediatric Abdominal Organ Transplant Recipients Identified Using Computable Composite Definitions. Pediatr Crit Care Med 2020; 21: 804–10. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Alcamo AM, Barren GJ, Becker AE, et al. Validation of a Computational Phenotype to Identify Acute Brain Dysfunction in Pediatric Sepsis. Pediatr Crit Care Med 2022; 23: 1027–36. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.United States Core Data for Interoperability (USCDI). https://www.healthit.gov/isa/united-states-core-data-interoperability-uscdi (accessed Aug 8, 2022). [DOI] [PMC free article] [PubMed]

- 14.Value Set Authority Center. https://vsac.nlm.nih.gov/ (accessed Nov 6, 2023).

- 15.Hauskrecht M, Batal I, Valko M, Visweswaran S, Cooper GF, Clermont G. Outlier detection for patient monitoring and alerting. Journal of Biomedical Informatics 2013; 46: 47–55. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Harrer S, Shah P, Antony B, Hu J. Artificial Intelligence for Clinical Trial Design. Trends in Pharmacological Sciences 2019; 40: 577–91. [DOI] [PubMed] [Google Scholar]

- 17.Shareable Clinical Decision Support. http://www.healthit.gov/isa/shareable-clinical-decision-support (accessed Nov 7, 2023).

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Supplementary Materials

Supplemental Table 1. TRIPOD checklist.

Supplemental Table 2. Littenberg framework for the assessment of medical technology as applied to BRAIN A-I.

Supplemental Table 3. Data curation steps for individual data elements for the BRAIN AI outcome.

Supplemental Table 4. Data curation steps for individual data elements for BRAIN A-I.

Supplemental Table 5. Standard vocabulary crosswalk for BRAIN-AI components.

Supplemental Table 6. Predictive performance of the XGBoost model with a 12-hour censor horizon and 48-hour feature window in the test dataset, after manual tuning, and after Bayesian tuning.

Supplemental Table 7. Predictive performance of the XGBoost model with a 12-hour censor horizon and 48-hour feature window in the validation dataset, after manual tuning, and after Bayesian tuning.

Supplemental Table 8. Cohort ascertainment and exclusions for varied censored time horizons and feature windows at the development site.

Supplemental Table 9. Performance of the optimal models in the validation dataset at the development and validation sites.

Supplemental Table 10 A) F1 scores of top performing models in the development site validation dataset. B) F1 scores of top performing models in the validation site test dataset.

Supplemental Table 11 A) Fβ (β =2) scores of top performing models in the development site validation dataset; B) Fβ (β =3) scores of top performing models in the development site validation dataset; C) Fβ (β =0.5) scores of top performing models in the development site validation dataset.

Supplemental Table 12 A) Statistical performance of the 12-hour time horizon, 48-hour feature window XGBoost and logistic regression models over a range of score thresholds in the development site validation dataset; B) Statistical performance of the 12-hour time horizon, 48-hour feature window XGBoost model over a range of score thresholds in the development site test dataset.

Supplemental Table 13. Cohort ascertainment and exclusions for varied feature windows for the validation site.

Supplemental Table 14 A) Statistical performance of the extreme gradient boosting (XGBoost) and logistic regression generalizable models at the development site across varied output thresholds. B) Statistical performance of the extreme gradient boosting (XGBoost) and logistic regression generalizable models at the external validation site across varied output thresholds.

Supplemental Figure 1. A representation of the time window and censor horizons used to define cases and controls as part of the development, validation, and test cohorts. The blue boxes in the top, ‘Cases’ box identify a time window that is also demarcated by a horizontal, gold, bidirectional arrow, the horizontal black lines represent length of stay for individual encounters, the vertical black line represents the occurrence of the neurological morbidity outcome, and the gold, horizontal, bidirectional arrow indicates the censor horizon, or period of time that data were not incorporated into the model. In the bottom, ‘Controls’ box, the blue boxes indicate the time window of data used for each stage of model development and evaluation.

Supplemental Figure 2. Data cleaning and feature engineering process.

Supplemental Figure 3. A representation of the feature engineering for continuous biomarker measurements. Temporal information is represented as a variety of summary measurements for discrete windows of time. Feature windows of 24-hours, 48-hours, and 72-hours are displayed in the figure. The models at the development site were trained with 6-hour, 12-hour, and 24-hour censored time horizons, with 12-hour and 24-hour horizons demonstrated in the figure. Definitions and related examples for a 24-hour window of data prior to the censor period are displayed in the table. Abbreviations: h, hours.

Supplemental Figure 4. The process of BRAIN A-I model development and external validation. Curated data at the development site were divided into a train cohort, validation cohort, and 2-years of holdout test data. The curated data were used to generate synthetic data with comparable single variable statistical distributions. The synthetic data were then distributed to the external validation site with model training code, facilitating local data curation by providing the necessary details of data structure. Finally, the working BRAIN A-I pipeline was applied to real-world data at the external validation site, applied separate training and validation datasets. Abbreviations: BRAIN A-I, Biodigital Rapid Alert to Identify Neuromorbidity A-I Bundle; Dev. Site, Development Site; Valid. Site, Validation Site.

Supplemental Figure 5. Plots the key statistical performance metrics sensitivity (gold line), positive predictive value (PPV, red line), F1 score (blue line), and F2 score (green line) with metric values on the y-axis and model output thresholds on the x-axis for A) the logistic regression model with a 12-hour time horizon and 48-hour feature window in the development site validation dataset; B) the extreme gradient boosting model with a 12-hour time horizon and 48-hour feature window in the development site validation dataset; and C) the extreme gradient boosting model with a 12-hour time horizon and 48-hour feature window in the development site test dataset.

Supplemental Figure 6. Calibration plots and associated Brier scores for the top performing models for varied time horizons and features windows in the validation dataset. A) 6-hour time horizon and 24-hour feature window; B) 6-hour time horizon and 48-hour feature window; C) 6-hour time horizon and 72-hour feature window; D) 12-hour time horizon and 24-hour feature window; E) 12-hour time horizon and 48-hour feature window; F) 12-hour time horizon and 72-hour feature window; G) 24-hour time horizon and 24-hour feature window; H) 24-hour time horizon and 48-hour feature window; I) 24-hour time horizon and 72-hour feature window. Abbreviations: LR, logistic regression; XGB, extreme gradient boosted.

Supplemental Figure 7. Top 10 biomarker feature categories based on Shap values for the 12-hour time horizon 48-hour feature window XGBoost model from the development site. Each category contains several features, e.g. Temperature contains maximum temperature, minimum temperature, average temperature, etc. The blue bars represent the sum of the mean Shap values for each category. Abbreviations: biomrkrs, biomarkers.

Supplemental Figure 8. Plots the key statistical performance metrics sensitivity (gold line), positive predictive value (PPV, red line), F1 score (blue line), and F2 score (green line) with metric values on the y-axis and model output thresholds on the x-axis for the generalizable A) logistic regression model in the development site test dataset; B) extreme gradient boosting model in the development site test data set; C) logistic regression model in the external validation dataset; D) extreme gradient boosting model in the external validation site dataset.

Supplemental Figure 9. Calibration plots for the generalizable model in the A) development site test dataset and B) the external validation site dataset.

Supplemental Figure 10. Feature importance analysis for the generalizable model in the A) development site test dataset and B) external validation site dataset.

Data Availability Statement

Data are available for specific use cases with investigator approval.