Abstract

Purpose

Coaching is a well-described means of providing real-time, actionable feedback to learners. We aimed to determine whether dual coaching from faculty physicians and real inpatients led to an improvement in history-taking skills of clerkship medical students.

Patients and Methods

Expert faculty physicians (on Zoom) directly observed 13 clerkship medical students as they obtained a history from 26 real, hospitalized inpatients (in person), after which students received immediate feedback from both the physician and the patient. De-identified audio-video recordings of all interviews were scored by independent judges using a previously validated clinical rating tool to assess for improvement in history-taking skills between each student’s first and second interviews. Finally, all participants completed a survey with Likert scale questions and free-text prompts.

Results

Independent judges’ scores on the validated rating tool showed no significant improvement in students’ history-taking skills, specifically in the domains of communication, medical knowledge and professional conduct, between their first and second patient interviews. However, students rated the dual coaching very favorably (average score of 1.43, with 1 being Excellent and 5 being Poor), with many appreciating the specificity and timeliness of the feedback. Patients also rated the experience very highly (average score of 1.23, with 1 being Excellent and 5 being Poor), noting that they gained new insights into medical training.

Conclusion

Students value receiving immediate and specific feedback, and real patients enjoy participating in the feedback process. Dual physician-patient coaching is a novel way to incorporate more direct observation into undergraduate medical education curricula.

Keywords: direct observation, immediate feedback, medical student education, real patients, virtual teaching

Introduction

A 2012 report by the Association of American Medical Colleges showed that up to one-third of medical student respondents felt they did not receive adequate feedback on their performance during the core clerkships.1 Research has shown that an important aspect of effective feedback is the timeliness of its delivery.2 Timely feedback grounds learners in the recent experience, links feedback to specific observations, and draws clear connections between the feedback and its future applications. A videotape analysis by Maguire and Rutter3 of histories obtained by 50 medical students revealed significant deficiencies in their history-taking skills. The authors suggested that additional opportunities for practice – for example, under direct observation followed by detailed feedback – may be more effective in equipping students with the skills necessary for clinical practice. However, studies have shown that direct observation is used relatively infrequently compared to other methods of evaluating students’ clinical skills.4,5 Thus, the question of how to provide more meaningful feedback to students in clinical settings remains open.

Coaching can be a powerful way to provide meaningful feedback because it is grounded in direct observation and occurs in real time. The importance of coaching in physician training has been described in both the medical literature and the lay press.6,7 In a study by Smith et al, trained faculty directly observed over 500 patient encounters in an outpatient pediatrics resident clinic and provided feedback within three prespecified domains.8 The majority (89%) of residents reported that they changed their approach to some aspects of patient care within each domain due to the feedback they received. Residents also felt that this program resulted in an increased frequency of feedback. In addition to physicians, patients are an important source of feedback for medical trainees, and the incorporation of patients (particularly standardized patients) in medical education has been widely described.9–11 However, our review of the literature has not identified studies involving real, hospitalized inpatients providing immediate feedback to learners. Ultimately, such real-time, targeted feedback is vital for improving learners’ clinical skills, and is an integral part of becoming an expert learner and developing a growth mindset.12,13

In this study, conducted during the COVID-19 pandemic at a large, urban academic medical center, we designed a novel method of coaching second-year internal medicine clerkship students in history-taking using direct observation and immediate feedback. Students were coached by both expert clinical physicians (virtually) and real hospitalized inpatients (at the bedside). We elected to focus on the history given its key role in cultivating a strong patient-physician relationship and reaching an accurate final diagnosis, and because a wide array of methods for teaching history-taking to students, including virtual patient cases, has already been demonstrated.14,15 Furthermore, the patient’s point of view has become increasingly important in gauging patient satisfaction with the hospital experience.16 As such, incorporating the patient’s perspective can give students additional insight into the history-taking and communication skills required for a successful career. To add to the existing literature on methods of teaching history-taking, the aim of this study was to evaluate whether dual physician-patient coaching improves medical student history-taking skills as measured by a previously validated clinical rating tool called the Minicard.17,18

Materials and Methods

This study was approved by the Institutional Review Board at Brigham and Women’s Hospital (BWH) and the Program in Medical Education Executive Committee at Harvard Medical School (HMS) in 2020. The study was conducted from October 2020 – May 2021. All study participants – students, faculty physicians and volunteer patients – were required to complete a standard audio-visual written consent form provided by BWH (Supplementary Item 1).

Recruitment of Study Participants

We recruited 13 medical student participants from HMS between October 2020 and April 2021, specifically while they were completing their internal medicine clerkship. All students who elected to participate were asked to verbally consent to the study protocol (Supplementary Item 2). Students were assigned a computer-generated random number for anonymity during analysis.

The faculty physicians in this study (RJA, CBB and WCT) had no advising or evaluative relationship with the students. All three are recognized for their longstanding excellence in undergraduate and graduate medical teaching, mentoring and educational leadership at Harvard Medical School or Brigham and Women’s Hospital. Prior to the first student-patient interview, the lead authors held a one-hour training session with the three faculty physicians, during which we reviewed each of the three history sections of the Minicard clinical rating tool. Faculty also watched a short video, created by the study team, depicting examples of both well-delivered and poorly delivered immediate feedback to medical students.

Adult inpatients (aged 18 years and older) admitted to medical-surgical floors at BWH were eligible for inclusion in this study and were recruited from hospital floors to which clerkship students were not assigned. Patients were excluded if they were on isolation precautions beyond the standard precautions required during the COVID-19 pandemic, had any change in mental status, or required an interpreter. All patients gave verbal consent (Supplementary Item 3) on the day they participated in the interview and coaching intervention. Immediately before the interview began, study staff gave each patient brief, one-on-one instruction and a handout with a series of guiding questions to assist them in coaching students (Supplementary Item 4).

Study Coaching Intervention

Student participants took a focused history from medical inpatients (in person), after which they received immediate feedback from the patients and faculty physicians. Feedback was provided directly at the bedside, so comments were heard by all members of the coaching team, including patients, students, and faculty. Students were not given access to patients’ electronic medical records during the intervention. Students performed two patient interviews during the study period: the first termed “pre-coaching” and the second “post-coaching”. Because of the students’ inpatient clerkship schedules, the interval between the first and second interview ranged from one to ten weeks. We felt that this interval was adequate for students to demonstrate improvements in their history-taking skills. Due to the COVID-19 pandemic, faculty physicians joined the student-patient encounters virtually via Zoom (Zoom Video Communications, Inc.). All patient interviews and feedback sessions were audio-video recorded using a secure, HIPAA-compliant, hospital-provided computer, monitor and microphone. Patients remained in their hospital beds, positioned at a 45-degree angle relative to the student interviewer. An audio-visual technician positioned the hospital-provided computer and microphone on a portable cart with a 32-inch television screen (Figure 1).

Figure 1.

Representative image of the setup used for the student-patient interviews. The faculty physician joined via Zoom on a laptop computer connected to a 32-inch monitor. A microphone (shown in blue) allowed faculty to hear students and patients clearly.

The first two students were given 20 minutes for the interview, while all remaining students were given 30 minutes, a more reasonable time frame. Post-interview feedback was provided first by the patients, who were given approximately five minutes, and then by the faculty physicians, who were given 25 minutes. All students were emailed a written summary of the patient and faculty feedback within 48 hours of the interview. All students, patients and coaches were asked to complete a three-question survey (Supplementary Item 5) after each interview and coaching intervention. A Likert scale was used for the survey, with a score of “1” being “Excellent”, “2” being “Very Good”, “3” being “Good”, “4” being “Fair”, and “5” being “Poor”.
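Because survey ratings are integers from 1 to 5, the summary statistics reported in Table 1 follow directly from the counts of each rating. As a minimal sketch in standard-library Python, the patient row of Table 1 (n = 13, range 1–2, mean 1.23) is consistent with 10 ratings of “Excellent” and 3 of “Very Good”, since 16/13 ≈ 1.23. These counts are deduced arithmetically from the reported summary, not taken from the study’s raw data, and the helper name `summarize_likert` is hypothetical.

```python
from statistics import mean, stdev


def summarize_likert(ratings):
    """Return (mean, sample SD, min, max) for 1-5 Likert ratings (1 = Excellent, 5 = Poor)."""
    if any(r not in (1, 2, 3, 4, 5) for r in ratings):
        raise ValueError("ratings must be integers from 1 to 5")
    # stdev() uses the n - 1 (sample) denominator.
    return mean(ratings), stdev(ratings), min(ratings), max(ratings)


# One distribution consistent with the patient row of Table 1 (deduced, not raw study data):
# 10 ratings of 1 ("Excellent") and 3 ratings of 2 ("Very Good").
patient_ratings = [1] * 10 + [2] * 3
mean_score, sd, lowest, highest = summarize_likert(patient_ratings)
print(f"{mean_score:.2f} ± {sd:.3f} (range {lowest}-{highest})")  # 1.23 ± 0.439 (range 1-2)
```

The sample standard deviation of these counts reproduces the reported 0.44, which suggests that summaries of this kind are straightforward to check by hand from the rating distribution.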

Scoring of Audio-Video Recordings

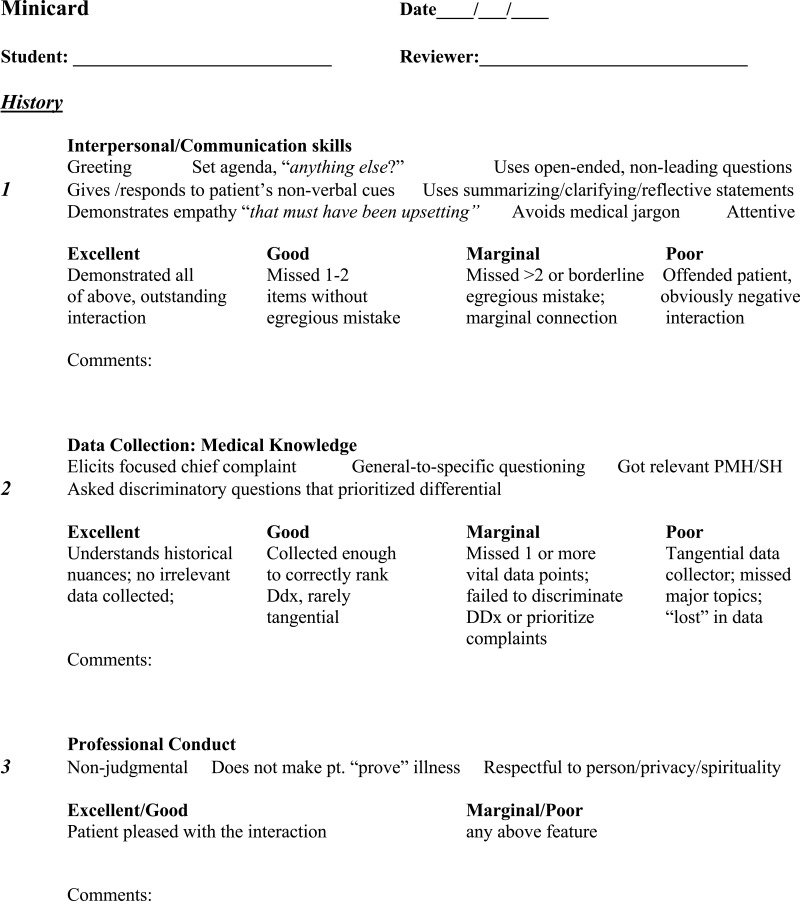

We used the previously validated and published Minicard tool18,19 – with permission from Dr. Anthony Donato – for evaluating students’ interview performance. All scoring was performed by independent, blinded judges. The study team made minor modifications to the original version of this tool, such that our tool focused solely on history-taking (Figure 2). In addition, we created a separate grading checklist (Supplementary Item 6), which listed the 15 specific skills contained in the Minicard, to facilitate the judges’ scoring.

Figure 2.

Modified Minicard tool – comprising three history domains, with a total of 15 skills for all domains – used by the study team to create the grading checklist for the independent judges.

We recruited two clinical faculty (NBA and BJN), who had no advising or evaluative relationship with students, to serve as independent, blinded judges. The judges reviewed de-identified audio-video recordings of each pre- and post-coaching interview and scored them using the grading checklist. Before recruiting the judges, the study team consulted with a separate set of clinical experts (AFL and ASV), who helped refine the scoring procedures, particularly the use of the grading checklist. The input from the clinical experts led to a more streamlined checklist and, ultimately, a more efficient and reproducible scoring procedure for the judges.

Training Session for Independent Judges

The study team conducted a 90-minute training session for the independent judges, during which we provided specific and concrete examples of how to use the grading checklist. The judges were shown a brief segment of a training video used by Donato et al in their original study18 (a copy of the full training video was provided to us by Dr. Donato). To further illustrate and standardize checklist scoring for medical student learners (as opposed to postgraduate trainees), the study team created and enacted four brief patient vignettes and asked each of the judges to provide their impressions. During the training session, judges were shown both the modified Minicard and the grading checklist; however, they were asked to use only the grading checklist to document their final scores. The statistician (SRP) used percent agreement on the three domains of the grading checklist to measure inter-rater reliability between the two independent, blinded judges.
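Percent agreement is the share of checklist items on which both judges recorded the same score. A minimal sketch in Python follows; the 15-item score vectors are hypothetical (1 = skill performed correctly, 0 = not performed correctly), and the function name `percent_agreement` is illustrative rather than part of the study’s analysis.

```python
def percent_agreement(judge_a, judge_b):
    """Proportion of checklist items on which two judges recorded the same score."""
    if len(judge_a) != len(judge_b) or not judge_a:
        raise ValueError("both judges must score the same, non-empty list of items")
    matches = sum(a == b for a, b in zip(judge_a, judge_b))
    return matches / len(judge_a)


# Hypothetical scores for one interview on a 15-item checklist
# (1 = performed correctly, 0 = not); illustrative only, not study data.
judge_1 = [1, 1, 1, 0, 1, 1, 1, 1, 0, 1, 1, 1, 1, 1, 1]
judge_2 = [1, 1, 0, 0, 1, 1, 1, 1, 1, 1, 1, 0, 1, 1, 1]
print(f"{percent_agreement(judge_1, judge_2):.0%}")  # 80%
```

Domain-specific agreement is the same calculation restricted to the items within one domain. Percent agreement does not adjust for agreement expected by chance, which is one reason it is easy to compute and interpret but can overstate reliability when most items are scored the same way.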

Data Preparation and Analysis

The independent judges were provided an abridged version of the audio-video recordings that included only the patient interview (up to 30 minutes); the coaching portion was removed. Audio-video recordings were identified only by random numbers; the date of the interview was not included. Each of the enrolled students’ pre- and post-coaching interviews was scored by the judges using the Minicard grading checklist.

Pre- and post-coaching survey data from students, faculty physicians and patients were analyzed by the statistician using a Wilcoxon signed-rank test, the non-parametric alternative to the paired-samples t-test. For the grading checklists, we first determined the percentage of participating students who performed each Minicard history-taking skill correctly, and then calculated the mean of the two independent judges’ values for both the pre- and post-coaching interviews. We analyzed these means in aggregate using Wilcoxon signed-rank tests to determine whether student performance differed from pre-coaching to post-coaching (in each of the three domains of the Minicard separately, and combined), thereby quantifying any effect of the coaching intervention between the first and second patient interview.
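The Wilcoxon signed-rank test ranks the absolute paired differences and asks whether the ranks attached to positive and negative differences are balanced. The sketch below writes out an exact two-sided version in standard-library Python to show the mechanics only. It assumes small samples (it enumerates all 2^n sign patterns), drops zero differences, assigns average ranks to ties, and uses hypothetical checklist counts rather than study data; the function name `signed_rank_test` is illustrative. In practice a library routine such as SciPy’s `scipy.stats.wilcoxon` would normally be used, and its default handling of ties and zero differences may differ from the choices made here.

```python
from itertools import product


def signed_rank_test(pre, post):
    """Exact two-sided Wilcoxon signed-rank test for paired observations.

    Returns (statistic, p_value), where the statistic is the smaller of the
    positive-rank and negative-rank sums. Zero differences are dropped and tied
    absolute differences receive average ranks. The p-value enumerates all 2**n
    sign patterns, so this is only practical for small n.
    """
    if len(pre) != len(post):
        raise ValueError("pre and post must be paired (same length)")
    diffs = [b - a for a, b in zip(pre, post) if b != a]
    n = len(diffs)
    if n == 0:
        return 0.0, 1.0  # no non-zero differences: no evidence of change

    # Rank |differences| from smallest to largest, averaging ranks within ties.
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    start = 0
    while start < n:
        end = start
        while end + 1 < n and abs(diffs[order[end + 1]]) == abs(diffs[order[start]]):
            end += 1
        average_rank = (start + end) / 2 + 1  # ranks are 1-based
        for k in range(start, end + 1):
            ranks[order[k]] = average_rank
        start = end + 1

    total = sum(ranks)
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    statistic = min(w_plus, total - w_plus)

    # Under the null hypothesis each rank is equally likely to carry a + or - sign.
    as_extreme = 0
    for signs in product((False, True), repeat=n):
        w = sum(r for r, positive in zip(ranks, signs) if positive)
        if min(w, total - w) <= statistic + 1e-9:
            as_extreme += 1
    return statistic, as_extreme / 2 ** n


# Hypothetical checklist counts (items correct out of 15) for six students;
# illustrative only, not data from this study.
pre = [9, 11, 12, 8, 13, 10]
post = [11, 12, 12, 10, 14, 9]
statistic, p_value = signed_rank_test(pre, post)
print(statistic, p_value)  # 2.0 0.25
```

With these hypothetical counts, one student’s difference is zero and is dropped, leaving five ranked differences; with so few pairs, even four improvements out of five yield a two-sided p-value of 0.25, which illustrates how limited the power of a small paired sample can be.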

Results

Enrollment and Coaching Intervention

A total of 13 clerkship students at BWH were enrolled between October 2020 and April 2021. All students completed both of their patient interviews (pre-coaching and post-coaching) between November 2020 and May 2021, providing a total of 26 patient interviews that were audio-video recorded and subsequently scored by the independent judges. The time between students’ first and second interviews ranged from one to ten weeks. A total of 26 different volunteer inpatients were enrolled in the study, corresponding to each of the 26 student interviews (13 pre-coaching interviews and 13 post-coaching interviews).

Participant Surveys

The mean score of the 26 different patients who rated the patient interview and Zoom coaching session was “Excellent” (1.23 ± 0.439) (Table 1 and Supplementary Table 1). Multiple patients expressed gratitude for being able to help future doctors and added that they gained unique perspectives into medical training. Specifically, they noted that the intervention was “a fascinating insight into medical training”, and “a great learning experience and nice approach from a patient’s point of view”. One patient commented on the real-life nature of the study intervention, specifically that “It was in an actual setting/double room. A lot of things going on, yet student remained focused. Real life situation!” Several patients noted that they wished they had more time with students during the interview, whereas only one patient commented that the interview was longer than expected.

Table 1.

Survey Rating from Patients, Students, and Faculty, Where “1st” Refers to the Pre-Coaching Interview and “2nd” Refers to the Post-Coaching Interview

| Rater | N (1st/2nd) | Mean 1st (SD)ᵃ | Mean 2nd (SD) | Range (1st/2nd) |
|---|---|---|---|---|
| Patient Rating | (13/13) | 1.23 (0.44) | 1.23 (0.44) | (1–2/1–2) |
| Student Rating | (13/13) | 1.54 (0.66) | 1.31 (0.48) | (1–3/1–2) |
| Faculty Rating | (13/13) | 1.69 (1.11) | 1.69 (0.85) | (1–4/1–3) |

Notes: ᵃAll surveys utilized a Likert scale rating, from 1–5, where 1 = “Excellent”, and 5 = “Poor”.

The students’ overall impression of the coaching intervention was overwhelmingly positive. There was a slight trend towards a more positive view of the coaching experience the second time around (mean score 1.31 ± 0.480) when compared to the first (mean score 1.54 ± 0.660) (Table 1 and Supplementary Table 1). A primary strength, in the view of several students, was that they received “specific” and “targeted” feedback from the faculty physicians. Students added that they were grateful to have been able to apply feedback from their first interview to the second, with one noting,

The session was well structured with clear expectations for all parties involved, which were met…I was particularly grateful to have incorporated the feedback of the patient and the attending and for them to relate to each other in the process.

Another student wished the coaching intervention could have been in person, while recognizing that the COVID-19 pandemic prevented this, and a third remarked on interruptions during the patient interview from other hospital personnel. No student gave a score of “3” after the second round of patient interviews, whereas at least one student did so after the first (Table 1).

The three faculty members rated the interview and feedback sessions with an average score of 1.69 for both the pre- (±1.109) and post-coaching (±0.854) interviews (Table 1 and Supplementary Table 1). Faculty commented on the benefits of patient feedback, finding it useful for students’ learning, with one stating, “Previously skeptical, I have been persuaded by experience that the patient’s presence during my feedback enriches the feedback discussion…”. Furthermore, physician coaches were impressed by the effectiveness of the technology (namely, the monitor setup with microphone) in facilitating the student-patient interview during the COVID-19 pandemic. One physician noted that the time limit for the interview may have posed an added challenge to students in gathering the past medical history as well as the history of the present illness. Another faculty member commented that they would have preferred if all students reviewed the audio-video recording of the interview prior to the pre- and post-coaching feedback sessions.

Scoring of Student Interviews by Independent Judges

The overall inter-rater agreement in Minicard scores between the two judges across all three domains (interpersonal/communication skills, data collection/medical knowledge and professional conduct) was 81%. Agreement varied by domain: 81%, 67% and 97% for interpersonal/communication skills, data collection/medical knowledge and professional conduct, respectively. To adhere as closely as possible to the format of the original Minicard, we elected to emphasize the combined inter-rater agreement for all three domains.

When considering the independent judges’ Minicard scores for all 13 students, we found no significant difference between pre- and post-coaching interview scores for any of the three Minicard domains individually or for the weighted mean of all domains (Table 2). However, there was a small trend towards improvement from pre- to post-coaching interviews in students’ interpersonal/communication skills (p = 0.08). When stratified by the time (in days) between students’ pre- and post-coaching interviews, Minicard scores did not differ across three intervals (≤14 days; 15–21 days; and ≥22 days).

Table 2.

Mean Percentage of Students Who Completed Each of the Grading Checklist Skills Correctly for the Pre and Post Interviews (as Rated by Blinded, Independent Judges), Reported for the Three Domains, and as a Weighted Meanᵃ

| Minicard Domain | Mean (pre) (SD) N=26 | Mean (post) (SD) N=26 | P-value | Effect Size (Cohen’s d) |
|---|---|---|---|---|
| Interpersonal/Communication Skills | 0.81 (0.17) | 0.88 (0.11) | 0.08 | 0.14 |
| Data Collection/Medical Knowledge | 0.78 (0.24) | 0.76 (0.26) | 0.79 | 0.25 |
| Professional Conduct | 0.97 (0.09) | 1.0 (0) | 0.16 | 0.06 |
| Weighted Mean (all domains) | 0.83 (0.14) | 0.87 (0.12) | 0.06 | 0.13 |

Notes: ᵃThe weighted mean places relatively more importance on the first two domains (interpersonal/communication skills and data collection/medical knowledge) compared to the third domain (professional conduct) given a greater number of individual criteria in the first two domains (see Supplementary Item 6 for the Minicard Grading Checklist, which shows all criteria under each domain).
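Assuming the weights are simply the number of checklist criteria in each domain (the note does not state the exact weighting, and the per-domain counts appear only in Supplementary Item 6), the weighted mean can be written as follows, where $n_d$ is the number of criteria in domain $d$ and $\bar{p}_d$ is the domain mean proportion reported in Table 2:

$$
\bar{p}_{w} = \frac{\sum_{d=1}^{3} n_d \, \bar{p}_d}{\sum_{d=1}^{3} n_d}, \qquad \sum_{d=1}^{3} n_d = 15
$$

Under this assumption, the weighted mean equals the proportion of all 15 checklist items performed correctly, so a domain with more criteria moves the combined score proportionally more.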

Discussion

In this study, we describe the use of a novel coaching intervention through which clerkship medical students received immediate feedback on their history-taking after being directly observed by both medical inpatients (in person) and expert faculty (virtually). To our knowledge, this is the first study to incorporate hospitalized patients in the feedback process for medical students. We found that while students’ scores were not significantly improved post-coaching, students found the dual coaching intervention to be highly effective and beneficial for their learning.

Prior studies have shown that direct observation and immediate feedback can have a meaningful impact on the development of clinical skills.20–23 Many of the student comments from our study focused on how the feedback from faculty helped identify specific areas within the history of present illness that could have been improved. Students frequently cited the immediate and focused nature of the feedback as helping them identify deficits in their history-taking. Killion discusses attributes of effective feedback, specifically noting the importance of timely, frequent, and process-based feedback as some of the features of “learning-focused feedback”, as compared to “traditional feedback”, which tends to be past-focused, untimely and occasional.24 Several studies in the medical education literature have also underscored the importance of immediate and specific feedback as having a greater effect on personal and professional development of trainees.25–27 Furthermore, our students remarked positively on the organization of the study intervention, specifically the clear expectations and instructions received at the start of the interview, and the opportunity to apply feedback from the first session to the second patient interview. Both of these aspects were highlighted by Ramani and Krackov as components of effective feedback in clinical environments.28 Our study adds to the growing body of literature on the benefits of immediate feedback in medical education, particularly when that feedback occurs directly after observation of clinical encounters with patients.

An innovative aspect of our study was the incorporation of real, volunteer hospitalized patients in the feedback process. The patients in our study were overwhelmingly enthusiastic regarding their participation as coaches for the students, commenting on the insight they gained through this unique role. Likewise, students found the feedback they received from patients to be helpful in several respects. Simek-Downing et al29 examined the benefits of both standardized patients and real patients in teaching interviewing skills and found that standardized patients were of most value in teaching verbal interviewing skills, such as summarization, while real patients were most helpful in teaching psychosocial skills such as body posture, eye contact and the use of appropriate patient-centered language. Another study reported that, while students found real patients to be more authentic compared to standardized patients, the real patients in that study were ill-prepared regarding the purpose of the encounter, something we attempted to address by preparing patients in advance with guiding questions.30 In another study, medical students practiced a variety of communication skills with real, volunteer patients in a classroom-based workshop, and rated the exercise as highly useful to their learning.31 Students in that study commented on the interpersonal skills they learned from their patients, such as the importance of “confidence and body language”, and “when and how to engage family”. This work highlights the idea that real patients can play a valuable role in teaching clinical skills – particularly interpersonal communication and bedside manner – to early learners.

We had to make several changes to the methods due to the ongoing COVID-19 pandemic during the study period. One of the most significant was the incorporation of the monitor and microphone setup, which allowed faculty to provide their feedback virtually rather than in person. This was a unique way of connecting faculty members to the student-patient interview, which occurred in person in the hospital room. A 2022 study describes the use of telehealth patient encounters for the Objective Structured Clinical Examination, in which medical students were directly observed by in-person faculty as they interviewed standardized patients who were located remotely.32 This is one example demonstrating the feasibility and effectiveness of incorporating virtual encounters into undergraduate medical education. An important consideration with respect to the use of virtual platforms is the specific technology that is incorporated and how various components, such as equipment positioning, room lighting and individual body movements, factor into the effectiveness of the encounter.33 In this study, we had the assistance of expert audio-visual technicians when setting up the monitor and microphone, thus ensuring proper positioning to optimize the interview and feedback process.

We chose to use the grading checklist, which was derived from the Minicard rating tool, for the independent, blinded judges’ ratings of the first and second patient interviews. While the judges’ overall inter-rater agreement of 81% is reasonable,34 we would have preferred higher overall and domain-specific agreement. A possible explanation for the variability in domain-specific agreement is the complexity of the grading criteria for both the medical knowledge and interpersonal and communication skills domains.35 In addition, students’ medical knowledge and communication skills are recognized to develop throughout medical school and beyond. In contrast, the professional conduct domain contains criteria (non-judgmental, does not make patient prove illness, and respectfulness to person, privacy, and spirituality) that are more straightforward for judges to score. These qualities are also typically present in medical students at the outset of their medical school careers.36

This study has several limitations. Because this was a pilot intervention conducted exclusively during the core clerkship period, students could fit in only two coaching sessions during the study. We believe that two coaching sessions were not sufficient to produce a meaningful change in history-taking skills. Although the time between the two coaching sessions varied widely among students, our analyses did not reveal any effect of the time interval. Furthermore, students’ approaches to history-taking may have been relatively fixed by habits established during the first year of medical school, potentially blunting the effects of a small number of coaching sessions. The content of the coaching itself may also have been inconsistent with the skills emphasized on the Minicard; additional faculty development may help to mitigate this. Of note, online distance coaching may not have produced the degree of change in history-taking skills that we expected, possibly because of the learning curve associated with the technology. Video conferencing may not have adequately conveyed the body language and facial expressions that often play an important role in traditional, in-person coaching, which may have diminished the impact of the faculty physicians’ suggestions and recommendations. Finally, the number of enrolled students (13) was small, which likely decreased our ability to detect a significant change in student skills.

We believe a key next step in building on this pilot of dual physician-patient coaching is more frequent, regular, and longitudinally scheduled feedback sessions (perhaps throughout the entire clerkship year), an approach that has been shown to positively impact student learning.37–39 In addition, having students review the video recording together with patients and coaches immediately after the interview may foster a deeper feedback discussion, including an opportunity for student self-assessment.40–42 Our technique of dual physician-patient coaching could also be applied to teaching physical examination and communication skills.31,43

Conclusion

In this study, we asked whether a dual coaching model, incorporating feedback from faculty physicians and admitted inpatients, improved medical student history-taking skills as measured by a validated clinical rating tool. While our analysis showed that students’ history-taking skills did not measurably improve after the coaching intervention, students nonetheless benefited not only from the timely, specific, and actionable feedback provided by the faculty, but also from the unique perspective provided by real patients. A small sample size, single time point and focus on only one aspect of the patient encounter (ie, the history) may have contributed to this lack of improvement. Overall, this study provides initial evidence for the utility of a dual coaching model for providing feedback to medical students and highlights the important role inpatients can play in the feedback process, which may also serve to improve the overall patient experience. We believe these findings open the door for larger, multi-site studies investigating dual coaching in medical education.

Acknowledgments

We thank the BWH Internal Medicine Leadership including Marshall Wolf, MD, Joseph Loscalzo, MD, PhD, and Joel Katz, MD, for their strong support of the Medical Education Fellowship for internal medicine residents at BWH.

We acknowledge our debt to Anthony A Donato, MD for generously giving us permission to use the validated Minicard workplace-based assessment tool in this study.

We thank Subha Ramani, MBBS, PhD, for her expert advice regarding faculty development in 2020.

We greatly appreciate the assistance of Angel Ayala, Anilton Gomes, Peter Linck, and all the audio-visual technicians at BWH who helped us with the individual audio-video recordings at the bedside.

RJA, CBB and WCT are equal co-second authors. AFL, ASV, NBA and BJN are equal co-third authors.

An abstract of this work was submitted to the BWH Department of Medicine Resident Research Day (May 2021) and was awarded the K. Frank Austen Award for Disruption of Scientific Thinking.

Ethics Approval and Informed Consent

This study was approved by the Institutional Review Board at Mass General Brigham (formerly Partners Healthcare), protocol #2020P000990, which was deemed Exempt by the IRB. Patients, students, and coaching faculty were all asked to sign the formal BWH audio-visual written consent form. Patients and students also gave verbal consent to participate in the study before the student-patient interview took place (see Supplementary Items 2 and 3).

Disclosure

KSP reports consulting fees from Medlearnity, Inc. CBB receives compensation from Wolters Kluwer for authoring chapters for UpToDate. ASV reports consulting fees from Broadview Ventures, Hippocratic AI, and Baim Clinical Research Institute. All of these are outside of the submitted work. The authors report no other potential conflicts of interest in this work.

References

1. Weinstein DF. Feedback in clinical education: untying the Gordian knot. Acad Med. 2015;90(5):559–561. doi: 10.1097/ACM.0000000000000559

2. Chowdhury RR, Kalu G. Learning to give feedback in medical education. Obstet Gynaecol. 2004;6(4):243–247. doi: 10.1576/toag.6.4.243.27023

3. Maguire GP, Rutter DR. History-taking for medical students. I. Deficiencies in performance. Lancet. 1976;2(7985):556–558. doi: 10.1016/s0140-6736(76)91804-3

4. Howley LD, Wilson WG. Direct observation of students during clerkship rotations: a multiyear descriptive study. Acad Med. 2004;79(3):276–280. doi: 10.1097/00001888-200403000-00017

5. Kumar A, Gera R, Shah G, Godambe S, Kallen DJ. Student evaluation practices in pediatric clerkships: a survey of the medical schools in the United States and Canada. Clin Pediatr. 2004;43(8):729–735. doi: 10.1177/000992280404300807

6. Deiorio NM, Carney PA, Kahl LE, Bonura EM, Juve AM. Coaching: a new model for academic and career achievement. Med Educ Online. 2016;21:33480. doi: 10.3402/meo.v21.33480

7. Gawande A. Personal best: the coach in the operating room. The New Yorker; 2011. Available from: https://www.newyorker.com/magazine/2011/10/03/personal-best. Accessed August 6, 2023.

8. Smith J, Jacobs E, Li Z, Vogelman B, Zhao Y, Feldstein D. Successful implementation of a direct observation program in an ambulatory block rotation. J Grad Med Educ. 2017;9(1):113–117. doi: 10.4300/JGME-D-16-00167.1

9. Björklund K, Stenfors T, Nilsson G, Leanderson C. Learning from patients' written feedback: medical students' experiences. Int J Med Educ. 2022;13:19–27. doi: 10.5116/ijme.61d5.8706

10. Barr J, Ogden K, Robertson I, Martin J. Exploring how differently patients and clinical tutors see the same consultation: building evidence for inclusion of real patient feedback in medical education. BMC Med Educ. 2021;21(1):246. doi: 10.1186/s12909-021-02654-3

11. Bokken L, Linssen T, Scherpbier A, van der Vleuten C, Rethans JJ. Feedback by simulated patients in undergraduate medical education: a systematic review of the literature. Med Educ. 2009;43(3):202–210. doi: 10.1111/j.1365-2923.2008.03268.x

12. Ericsson KA, Krampe RT, Tesch-Römer C. The role of deliberate practice in the acquisition of expert performance. Psychol Rev. 1993;100(3):363–406. doi: 10.1037/0033-295X.100.3.363

13. Osman NY, Sloane DE, Hirsh DA. When I say … growth mindset. Med Educ. 2020;54(8):694–695. doi: 10.1111/medu.14168

14. Keifenheim KE, Teufel M, Ip J, et al. Teaching history taking to medical students: a systematic review. BMC Med Educ. 2015;15:159. doi: 10.1186/s12909-015-0443-x

15. Raafat N, Harbourne AD, Radia K, Woodman MJ, Swales C, Saunders KEA. Virtual patients improve history-taking competence and confidence in medical students. Med Teach. 2024;46(5):682–688. doi: 10.1080/0142159X.2023.2273782

16. Rockey NG, Ramos GP, Romanski S, Bierle D, Bartlett M, Halland M. Patient participation in medical student teaching: a survey of hospital patients. BMC Med Educ. 2020;20(1):142. doi: 10.1186/s12909-020-02052-1

17. Kogan JR, Holmboe ES, Hauer KE. Tools for direct observation and assessment of clinical skills of medical trainees: a systematic review. JAMA. 2009;302(12):1316–1326. doi: 10.1001/jama.2009.1365

18. Donato AA, Pangaro L, Smith C, et al. Evaluation of a novel assessment form for observing medical residents: a randomised, controlled trial. Med Educ. 2008;42(12):1234–1242. doi: 10.1111/j.1365-2923.2008.03230.x

19. Donato AA, Park YS, George DL, Schwartz A, Yudkowsky R. Validity and feasibility of the MiniCard direct observation tool in 1 training program. J Grad Med Educ. 2015;7(2):225–229. doi: 10.4300/JGME-D-14-00532.1

20. Luo P, Shen J, Yu T, Zhang X, Zheng B, Yang J. Formative objective structured clinical examination with immediate feedback improves surgical clerks' self-confidence and clinical competence. Med Teach. 2023;45(2):212–218. doi: 10.1080/0142159X.2022.2126755

21. Junod Perron N, Louis-Simonet M, Cerutti B, Pfarrwaller E, Sommer J, Nendaz M. Feedback in formative OSCEs: comparison between direct observation and video-based formats. Med Educ Online. 2016;21:32160. doi: 10.3402/meo.v21.32160

22. Thompson Buum H, Dierich M, Adam P, Hager KD. Implementation of a direct observation and feedback tool using an interprofessional approach: a pilot study. J Interprof Care. 2021;35(4):641–644. doi: 10.1080/13561820.2019.1640190

23. Cohen SN, Farrant PB, Taibjee SM. Assessing the assessments: U.K. dermatology trainees' views of the workplace assessment tools. Br J Dermatol. 2009;161(1):34–39. doi: 10.1111/j.1365-2133.2009.09097.x

24. Killion J. The Feedback Process: Transforming Feedback for Professional Learning. Learning Forward; 2015.

25. Murdoch-Eaton D, Sargeant J. Maturational differences in undergraduate medical students' perceptions about feedback. Med Educ. 2012;46(7):711–721. doi: 10.1111/j.1365-2923.2012.04291.x

26. Watling CJ, Kenyon CF, Zibrowski EM, et al. Rules of engagement: residents' perceptions of the in-training evaluation process. Acad Med. 2008;83(10 Suppl):S97–S100. doi: 10.1097/ACM.0b013e318183e78c

27. Garner MS, Gusberg RJ, Kim AW. The positive effect of immediate feedback on medical student education during the surgical clerkship. J Surg Educ. 2014;71(3):391–397. doi: 10.1016/j.jsurg.2013.10.009

28. Ramani S, Krackov SK. Twelve tips for giving feedback effectively in the clinical environment. Med Teach. 2012;34(10):787–791. doi: 10.3109/0142159X.2012.684916

29. Simek-Downing L, Quirk ME, Letendre AJ. Simulated versus actual patients in teaching medical interviewing. Fam Med. 1986;18(6):358–360.

30. Bokken L, Rethans JJ, Jöbsis Q, Duvivier R, Scherpbier A, van der Vleuten C. Instructiveness of real patients and simulated patients in undergraduate medical education: a randomized experiment. Acad Med. 2010;85(1):148–154. doi: 10.1097/ACM.0b013e3181c48130

31. Ali NB, Pelletier SR, Shields HM. Innovative curriculum for second-year Harvard-MIT medical students: practicing communication skills with volunteer patients giving immediate feedback. Adv Med Educ Pract. 2017;8:337–345. doi: 10.2147/AMEP.S135172

32. Farrell SE, Junkin AR, Hayden EM. Assessing clinical skills via telehealth objective standardized clinical examination: feasibility, acceptability, comparability, and educational value. Telemed J E Health. 2022;28(2):248–257. doi: 10.1089/tmj.2021.0094

33. Newcomb AB, Duval M, Bachman SL, Mohess D, Dort J, Kapadia MR. Building rapport and earning the surgical patient's trust in the era of social distancing: teaching patient-centered communication during video conference encounters to medical students. J Surg Educ. 2021;78(1):336–341. doi: 10.1016/j.jsurg.2020.06.018

34. Stemler SE. A comparison of consensus, consistency, and measurement approaches to estimating interrater reliability. Pract Assess Res Eval. 2004;9(4). doi: 10.7275/96jp-xz07

35. Patel R. Enhancing history-taking skills in medical students: a practical guide. Cureus. 2023;15(7):e41861. doi: 10.7759/cureus.41861

36. Bore M, Munro D, Powis D. A comprehensive model for the selection of medical students. Med Teach. 2009;31(12):1066–1072. doi: 10.3109/01421590903095510

37. Bakke BM, Sheu L, Hauer KE. Fostering a feedback mindset: a qualitative exploration of medical students' feedback experiences with longitudinal coaches. Acad Med. 2020;95(7):1057–1065. doi: 10.1097/ACM.0000000000003012

38. Bates J, Konkin J, Suddards C, Dobson S, Pratt D. Student perceptions of assessment and feedback in longitudinal integrated clerkships. Med Educ. 2013;47(4):362–374. doi: 10.1111/medu.12087

39. Voyer S, Cuncic C, Butler DL, MacNeil K, Watling C, Hatala R. Investigating conditions for meaningful feedback in the context of an evidence-based feedback programme. Med Educ. 2016;50(9):943–954. doi: 10.1111/medu.13067

40. Ozcakar N, Mevsim V, Guldal D, et al. Is the use of videotape recording superior to verbal feedback alone in the teaching of clinical skills? BMC Public Health. 2009;9(1):474. doi: 10.1186/1471-2458-9-474

41. Hammoud MM, Morgan HK, Edwards ME, Lyon JA, White C. Is video review of patient encounters an effective tool for medical student learning? A review of the literature. Adv Med Educ Pract. 2012;3:19–30. doi: 10.2147/AMEP.S20219

42. Pinsky LE, Wipf JE. A picture is worth a thousand words: practical use of videotape in teaching. J Gen Intern Med. 2000;15(11):805–810. doi: 10.1046/j.1525-1497.2000.05129.x

43. Shields HM, Fernandez-Becker NQ, Flier SN, et al. Volunteer patients and small groups contribute to abdominal examination's success. Adv Med Educ Pract. 2017;8:721–729. doi: 10.2147/AMEP.S146500