Abstract

We propose an improved superpixel segmentation algorithm based on visual saliency and color entropy for online color detection of printed products. The method addresses the low accuracy and slow speed of color deviation detection in print quality control. The improved algorithm consists of three main steps. First, human visual perception is simulated to obtain the visually salient regions of the image, enabling region-based superpixel segmentation. Second, the superpixel size within the salient regions is determined adaptively from the color information entropy. Finally, the clustering is optimized with a chromaticity-based hue angle distance, yielding a region-based adaptive superpixel segmentation algorithm. For color detection, the mean colors of post-printing images under the same superpixel labels are compared, and labels with color deviations are output to identify areas of color difference. The experimental results show that introducing the hue angle distance gives the improved superpixel algorithm better segmentation accuracy and that, combined with human visual perception, it better reproduces the color information of printed materials. Applying the method to printing color quality inspection reduces computation, quickly detects and marks color difference areas, and reports the degree of color deviation.

Keywords: Improved superpixel segmentation, Visual saliency, Color printing, Color difference detection

Subject terms: Engineering, Mechanical engineering

Introduction

With the advancement of modern printing technology, printed products exhibit unprecedented levels of color richness and subtlety. The color performance and color consistency of printed images have always been key factors in quality assessment, while traditional manual color inspection methods suffer from inefficiency and poor accuracy1. Similarly, color detection methods relying on colorimeters and spectrophotometers are limited by their costly equipment, operational complexity, and lack of real-time capability, hindering their ability to provide timely feedback alongside printing processes. The application of machine vision technology has made real-time color inspection of color printed images a practical reality. By integrating machine vision with image processing techniques, color defects occurring during the printing process can be promptly detected and feedback can be provided to adjust the output devices in real time, thereby enhancing production efficiency and ensuring improved print quality2.

Currently, there have been numerous advancements in research on image quality detection based on machine vision. Ma et al.3 have utilized a quadratic template matching algorithm to segment the ROI (Region of Interest) in images and detect defect positions using grayscale information. The team led by Li4 has employed an improved cosine similarity for secondary gradient matching to identify defects in printed labels. Zhang5 has adopted a Codebook-based background subtraction method to detect foreground and characterize defect locations. Ha Quang Thinh Ngo6 has employed convolutional neural networks to learn features of scratches, punctures, and uneven fabric surfaces for textile defect detection.

In related research, machine vision applications for inspecting shape defects in printed images are more diverse, while studies on color defects in printed materials are relatively scarce. Japanese scholars Ichirou Ishimaru and Seiji Hata7 were among the first to distinguish between shape and color defects in printed images. Research on shape defects focuses on the shape features of linear defects, whereas research on color defects emphasizes color changes and other color features; both are crucial for printing quality. Nussbaum et al.8 used color data and characterization datasets of printed materials for color comparison in the quality inspection of newspaper printing. C. Sodergard et al.9 employed CCD machine vision to obtain printing quality tables based on indicators such as color difference, gray balance, dot gain, and uniformity, and compared them with standard color tables. The PressSIGN10 software developed by the British company BodoniSystems integrates densitometric and colorimetric measurement functions to inspect the color quality of printed materials. It guides workers in correcting the color standards of printing equipment through multiple stages, including print control strip measurement, solid density adjustment, and curve compensation. These methods, however, cannot directly detect defects in the printed image itself. Other researchers11 have found that three-dimensional color histograms, as information-rich representations of color images, can effectively reflect pixel information. J. Luo12 used the color distribution in the color histogram of an image as input to a neural network classifier and achieved automatic color detection by calculating and comparing the output feature vectors.
Considering the time cost of neural network training, researchers13 extracted color features from images in different color spaces, quantized them, reconstructed them into one-dimensional feature vectors, and then compared these vectors. Hamdani et al.14 classified images into eight categories based on the histograms of the image color channels and extracted features in multiple color spaces; they then used PCA to extract principal components, forming new features to train neural networks for color difference detection. Histogram-based color analysis neglects the spatial relationships between pixels, and quantization makes color difference comparison more ambiguous. Moreover, these methods perform global detection on printed images and only verify whether color differences exist. Our method, by contrast, locates the color differences and quantifies the degree of deviation.

Image segmentation enables computers to perceive and understand image information in a way similar to human perception15. Segmentation preserves the relationships between pixels within a region by grouping similar pixels together. In the study by Zhang et al.16, similar pixels are clustered into the same superpixel to represent local color information, enabling faster detection. Considering the complexity of spectrophotometric measurements of colorful patterned fabrics, Nian Xiong17 proposed an imaging method that clusters pixels by color similarity to save subsequent detection time. Because superpixels are built from low-level image information, they preserve region consistency and make color segmentation more efficient and stable18. These studies motivated us to apply superpixels to printing color quality detection.

Regional saliency indicates the relative importance of areas within an image, since salient regions carry more information. Salient regions are identified with methods based on regional contrast, such as color contrast, which make the segmented boundaries more stable in terms of color distinction19. In addition, the human visual system is highly sensitive to salient regions20. Our improved superpixel algorithm takes human visual perception into account and segments visually salient regions more finely. The segmentation size is determined adaptively from the information entropy of the region and the initial size, and the clustering process is optimized using the hue angle distance between pixels, which yields better segmentation.

Our contributions relative to other state-of-the-art approaches are summarized as follows:

- An improved Simple Linear Iterative Clustering (SLIC) superpixel algorithm for color detection in printed images, built on two new ideas:

  - Using visual saliency and color information entropy, the image is segmented into regions through superpixel segmentation, with the superpixel size adapted in salient regions.

  - The similarity measure in the traditional clustering process is optimized by incorporating the hue angle distance between pixels, improving label assignment.

- Color detection in printed images: based on the improved superpixel algorithm, the superpixel labels and positions of the reference image are obtained. The color information of corresponding superpixel regions in the test image and the standard image is compared, and superpixel regions exceeding the threshold are marked. The method performs global color detection on the color image and displays the superpixels of the color deviation regions at their positions in the image.

Method

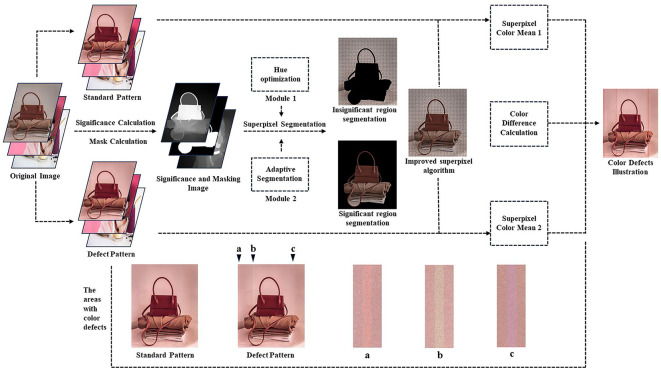

The relevant process of the algorithm is shown in Fig. 1. The pre-press image undergoes saliency computation to obtain the saliency map, which segments the foreground and background, resulting in a saliency mask. The original superpixel segmentation algorithm is optimized using the hue-optimized pixel clustering algorithm in Module 1 and the color entropy-based adaptive superpixel size control algorithm in significant regions in Module 2. The improved superpixel algorithm performs superpixel segmentation on the pre-press image, saving the pixel positions and corresponding superpixel label information.

Fig. 1.

Workflow diagram of printing color detection method based on improved superpixel segmentation algorithm.

For post-press standard images, color correction is performed, and for post-press defect images, color correction and registration are carried out. The post-press standard image is a sample image of a qualified print. Pixels at corresponding positions in the post-press image are obtained based on superpixel labels, and the color mean in the LAB color space is calculated for the region. The color difference of the same superpixel block is computed using the color difference formula, and superpixel labels with differences exceeding the threshold are output. The corresponding positions in the post-press image are marked based on the output superpixel labels, and the related color difference information and occurrence positions are saved as a file.

Superpixel clustering

Since 2009, superpixel technology has been widely applied in computer vision because it extracts useful features that support subsequent tasks. It is used extensively in saliency detection, object detection, semantic segmentation, and image detection. Superpixel segmentation algorithms can be based on watershed, density, graph theory, clustering, or energy optimization. Research by David Stutz26 indicates that algorithms based on graph theory, clustering, and energy optimization tend to yield better segmentation results. Graph-based algorithms segment images using edge weights, merging pixels bottom-up according to color differences, although the resulting superpixels may vary in size. Energy-optimization algorithms perform best in boundary recall and under-segmentation error but exhibit lower uniformity and compactness than clustering-based methods. Because the subsequent task requires comparing region colors, this paper adopts the mainstream clustering-based algorithm, Simple Linear Iterative Clustering (SLIC)27. The steps of the SLIC algorithm are as follows:

Step 1: Initialization of Cluster Centers. Determine the number of cluster centers from the preset number of superpixels. Suppose the desired number of superpixels is K and the total number of pixels in the image is N. To ensure that the superpixels are of similar size, set the superpixel size to $N/K$ pixels, and distribute the cluster centers uniformly on a grid with step size $S = \sqrt{N/K}$.
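As a minimal illustration of Step 1 (not the authors' implementation), the following Python sketch computes the grid step S = sqrt(N/K) and places the initial centers. The function name `init_grid_centers` is hypothetical, and offsetting the first center by S/2 from the image border is a common convention assumed here rather than something stated in the text.

```python
import math


def init_grid_centers(width, height, k):
    """Place roughly k cluster centers on a regular grid (SLIC Step 1).

    Returns the grid step S = sqrt(N / K) and a list of (x, y) centers.
    The S/2 offset from the border is an assumption of this sketch.
    """
    n = width * height
    step = math.sqrt(n / k)  # S = sqrt(N / K)
    centers = []
    y = step / 2.0
    while y < height:
        x = step / 2.0
        while x < width:
            centers.append((int(x), int(y)))
            x += step
        y += step
    return step, centers


step, centers = init_grid_centers(100, 100, 25)
print(step, len(centers))  # 20.0 25
```

For a 100 × 100 image with K = 25, S = 20 and the centers form a 5 × 5 grid starting at (10, 10).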

Step 2: Gradient-Based Adjustment of Cluster Centers. Calculate the gradient values of pixels in the Moore neighborhood of each cluster center. Adjust the position of the cluster center to the pixel location with the minimum gradient value within this neighborhood. This correction ensures that the initial positions of the cluster centers do not fall on image texture locations.
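Step 2 can be sketched as below, assuming the image is a nested list of (L, a, b) tuples and using the gradient measure of the original SLIC paper27, G(x, y) = ‖I(x+1, y) − I(x−1, y)‖² + ‖I(x, y+1) − I(x, y−1)‖². The helper names are hypothetical, and excluding border pixels as candidates is a simplification of this sketch.

```python
import math


def gradient(img, x, y):
    """SLIC-style gradient at (x, y); border pixels get +inf so they are never chosen."""
    h, w = len(img), len(img[0])
    if x <= 0 or y <= 0 or x >= w - 1 or y >= h - 1:
        return math.inf
    gx = sum((p - q) ** 2 for p, q in zip(img[y][x + 1], img[y][x - 1]))
    gy = sum((p - q) ** 2 for p, q in zip(img[y + 1][x], img[y - 1][x]))
    return gx + gy


def perturb_center(img, cx, cy):
    """Move a center to the lowest-gradient pixel in its 3x3 (Moore) neighborhood."""
    best_g, best_x, best_y = gradient(img, cx, cy), cx, cy
    for dy in (-1, 0, 1):
        for dx in (-1, 0, 1):
            g = gradient(img, cx + dx, cy + dy)
            if g < best_g:
                best_g, best_x, best_y = g, cx + dx, cy + dy
    return best_x, best_y


# 5x5 test image with a vertical edge between columns 2 and 3.
img = [[(0.0 if x < 3 else 100.0, 0.0, 0.0) for x in range(5)] for y in range(5)]
print(perturb_center(img, 2, 2))  # (1, 1): the center moves off the edge
```

A center that starts on the edge at (2, 2) is moved to a flat neighbor, which is exactly the purpose of Step 2: keeping initial centers off texture and edge pixels.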

Step 3: Similarity Search and Label Assignment. Search within a $2S \times 2S$ region around each cluster center. Construct the Euclidean distance metric from the LAB color values and the spatial positions of the pixels, and compare the similarity between each pixel in the region and the cluster center. Each pixel is assigned the label of its most similar cluster center, and each cluster center is then updated to the mean color and position of the pixels assigned to it23.

$$ d_c = \sqrt{(l_i - l_k)^2 + (a_i - a_k)^2 + (b_i - b_k)^2} \tag{1} $$

$$ d_s = \sqrt{(x_i - x_k)^2 + (y_i - y_k)^2} \tag{2} $$

$$ D' = \sqrt{\left(\frac{d_c}{N_c}\right)^2 + \left(\frac{d_s}{N_s}\right)^2} \tag{3} $$

where $(l_i, a_i, b_i)$ are the color values of a pixel i in the region, $(l_k, a_k, b_k)$ are the color values of the cluster center, and $(x_i, y_i)$ and $(x_k, y_k)$ are the spatial coordinates of pixel i and the cluster center, respectively. $d_c$ is the color distance and $d_s$ is the spatial distance. $N_c$ represents the maximum color distance in the region, and $N_s$ represents the maximum spatial distance in the region.

Iterate Steps 2 and 3 above so that the cluster centers converge to better positions. Set the number of iterations to $t$.

In image detection, the choice of the number of superpixels is crucial for reflecting image information. Because image sizes vary, K must be adjusted from image to image. In practice, the size of the superpixels is of greater concern, so it is more direct to specify the grid step and set $N_s = S$. The color normalization $N_c$ is replaced by a parameter $m$, which controls the influence of color during clustering and can be adjusted for different images. The optimized distance formula $D$ is as follows:

$$ D = \sqrt{\left(\frac{d_c}{m}\right)^2 + \left(\frac{d_s}{S}\right)^2} \tag{4} $$

In the formula, $m$ is a weight that controls the relative importance of color similarity and spatial proximity during clustering.
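To tie Steps 1 to 3 and Eq. (4) together, here is a compact pure-Python SLIC loop. It is a sketch under simplifying assumptions rather than the implementation used in the experiments: the image is a nested list of (L, a, b) tuples, the Step 2 gradient adjustment and the connectivity-enforcement pass of standard SLIC are omitted, and each center is updated to the mean [l, a, b, x, y] of its assigned pixels. The distance is D = sqrt((d_c/m)² + (d_s/S)²).

```python
import math


def slic(lab, k, m=10.0, iters=10):
    """Minimal SLIC: returns a label map (list of rows) for a LAB image."""
    h, w = len(lab), len(lab[0])
    S = math.sqrt(h * w / k)  # grid step from Step 1
    centers = []
    y = S / 2.0
    while y < h:
        x = S / 2.0
        while x < w:
            xi, yi = int(x), int(y)
            centers.append(list(lab[yi][xi]) + [xi, yi])  # [l, a, b, x, y]
            x += S
        y += S

    labels = [[-1] * w for _ in range(h)]
    for _ in range(iters):
        dist = [[math.inf] * w for _ in range(h)]
        # Step 3: compare each center with the pixels in its 2S x 2S window.
        for idx, (cl, ca, cb, cx, cy) in enumerate(centers):
            for py in range(max(0, int(cy - S)), min(h, int(cy + S) + 1)):
                for px in range(max(0, int(cx - S)), min(w, int(cx + S) + 1)):
                    l, a, b = lab[py][px]
                    d_c = math.sqrt((l - cl) ** 2 + (a - ca) ** 2 + (b - cb) ** 2)
                    d_s = math.sqrt((px - cx) ** 2 + (py - cy) ** 2)
                    D = math.sqrt((d_c / m) ** 2 + (d_s / S) ** 2)  # Eq. (4)
                    if D < dist[py][px]:
                        dist[py][px] = D
                        labels[py][px] = idx
        # Update every center to the mean [l, a, b, x, y] of its pixels.
        sums = [[0.0] * 5 for _ in centers]
        counts = [0] * len(centers)
        for py in range(h):
            for px in range(w):
                idx = labels[py][px]
                if idx < 0:
                    continue  # pixel not reached by any window
                l, a, b = lab[py][px]
                for j, v in enumerate((l, a, b, px, py)):
                    sums[idx][j] += v
                counts[idx] += 1
        for idx, cnt in enumerate(counts):
            if cnt:
                centers[idx] = [v / cnt for v in sums[idx]]
    return labels


# 4 x 8 test image: dark left half, bright right half, K = 2.
img = [[(0.0 if x < 4 else 100.0, 0.0, 0.0) for x in range(8)] for _ in range(4)]
for row in slic(img, k=2):
    print(row)  # [0, 0, 0, 0, 1, 1, 1, 1]
```

Raising m makes the spatial term dominate and the superpixels more compact; lowering it lets color boundaries dominate.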

Calculation of saliency map based on convex hull center

Print patterns are complex and diverse, including both natural images and artificially created non-natural images, and lack regularity. A bottom-up, data-driven approach to salient object detection is therefore more suitable for print image inspection28. This paper uses the saliency region prediction algorithm proposed by Yang et al.29 to determine the visually salient regions in printed images; its implementation is briefly described below, following Ref.29. First, the image is segmented into superpixels using the SLIC algorithm to capture the underlying structure of the print pattern. Then the contrast-based saliency value $S_{co}(i)$ of each superpixel region i is computed in the LAB color space:

$$ S_{co}(i) = \sum_{j \neq i} \lVert c_i - c_j \rVert \exp\left(-\frac{\lVert p_i - p_j \rVert^2}{2\sigma_p^2}\right) \tag{5} $$

where j ranges over all other superpixel regions, $c_i$ is the mean color feature of superpixel i in the color space, $p_i$ is the normalized mean spatial coordinate of the region, and $\sigma_p$ is the weight controlling the influence of spatial position.
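A sketch of the color-contrast prior follows, assuming it takes the form S_co(i) = Σ_{j≠i} ‖c_i − c_j‖ exp(−‖p_i − p_j‖² / (2σ_p²)), with c_i the mean LAB color and p_i the normalized mean position of superpixel i. The exact expression and normalization in Ref.29 may differ; the function name and the default σ_p are illustrative assumptions.

```python
import math


def contrast_prior(colors, positions, sigma_p=0.25):
    """Color-contrast saliency of each superpixel, weighted by spatial proximity.

    colors:    mean LAB color (c_i) of each superpixel
    positions: normalized mean (x, y) position (p_i) of each superpixel
    The default sigma_p is an illustrative value, not one from the paper.
    """
    n = len(colors)
    s_co = []
    for i in range(n):
        total = 0.0
        for j in range(n):
            if j == i:
                continue
            color_diff = math.dist(colors[i], colors[j])
            spatial_w = math.exp(-math.dist(positions[i], positions[j]) ** 2 / (2 * sigma_p ** 2))
            total += color_diff * spatial_w
        s_co.append(total)
    return s_co


colors = [(50.0, 0.0, 0.0), (50.0, 30.0, 40.0), (50.0, 0.0, 0.0)]
positions = [(0.5, 0.5), (0.5, 0.5), (0.9, 0.9)]
print(contrast_prior(colors, positions))  # the colored region (index 1) scores highest
```

Distant regions contribute little because of the Gaussian spatial weight, so a region is salient mainly when it contrasts with its neighborhood.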

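Using the convex hull that encloses the detected points of interest, the centroid of the convex hull is taken as the center. This corrects the error that the conventional center prior incurs when the target deviates from the image center, and yields the center-prior saliency value $S_{ce}(i)$:

$$ S_{ce}(i) = \exp\left(-\frac{(x_i - x_0)^2}{2\sigma_x^2} - \frac{(y_i - y_0)^2}{2\sigma_y^2}\right) \tag{6} $$

where $(x_i, y_i)$ is the mean position of superpixel i, $(x_0, y_0)$ is the centroid of the convex hull, and $\sigma_x$ and $\sigma_y$ control the spread of the prior.

Combining the contrast prior map and the center prior map yields the initial saliency map $S_{in}$:

$$ S_{in}(i) = S_{co}(i) \cdot S_{ce}(i) \tag{7} $$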
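The convex-hull center prior can be sketched as follows. The sketch assumes the points of interest are already detected (the detector of Ref.29 is not reproduced), assumes the Gaussian form S_ce(i) = exp(−(x_i − x_0)²/(2σ_x²) − (y_i − y_0)²/(2σ_y²)) around the hull centroid (x_0, y_0), and assumes a multiplicative combination with the contrast prior. Andrew's monotone chain for the hull, the shoelace formula for the centroid, and all function names are choices made here for illustration.

```python
import math


def convex_hull(points):
    """Andrew's monotone chain; returns hull vertices in counterclockwise order."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts

    def cross(o, a, b):
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]


def polygon_centroid(poly):
    """Area centroid via the shoelace formula; falls back to the vertex mean if degenerate."""
    area2 = cx = cy = 0.0
    for (x0, y0), (x1, y1) in zip(poly, poly[1:] + poly[:1]):
        cr = x0 * y1 - x1 * y0
        area2 += cr
        cx += (x0 + x1) * cr
        cy += (y0 + y1) * cr
    if area2 == 0.0:
        return (sum(p[0] for p in poly) / len(poly), sum(p[1] for p in poly) / len(poly))
    return (cx / (3.0 * area2), cy / (3.0 * area2))


def center_prior(pos, center, sigma_x, sigma_y):
    """Gaussian center prior around the convex-hull centroid (assumed form)."""
    (x, y), (x0, y0) = pos, center
    return math.exp(-(x - x0) ** 2 / (2 * sigma_x ** 2) - (y - y0) ** 2 / (2 * sigma_y ** 2))


interest_points = [(20, 20), (60, 20), (60, 60), (20, 60), (40, 40)]
hull = convex_hull(interest_points)
x0, y0 = polygon_centroid(hull)
print(hull)      # [(20, 20), (60, 20), (60, 60), (20, 60)]
print((x0, y0))  # (40.0, 40.0)

s_co = [0.8, 0.8]                  # contrast prior of two superpixels
positions = [(40, 40), (90, 90)]   # their mean pixel positions
s_in = [c * center_prior(p, (x0, y0), 20.0, 20.0) for c, p in zip(s_co, positions)]
print(s_in)  # the superpixel near the hull centroid keeps its score
```

Because the prior is centered on the hull rather than the image center, an off-center object is not penalized merely for its position.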

A sparse connectivity graph $G = (V, E)$ is constructed to obtain the final saliency map, where V is the set of superpixel nodes and E is the set of undirected links between adjacent superpixels that share a boundary. The edge weight $w_{ij}$ between adjacent superpixels is computed as a smoothness prior that expresses the similarity between neighbors, with $\sigma$ as the controlling weight:

$$ w_{ij} = \exp\left(-\frac{\lVert c_i - c_j \rVert^2}{2\sigma^2}\right) \tag{8} $$

We define the saliency cost function $E(S)$. Minimizing $E(S)$ with graph regularization highlights salient objects and suppresses the background:

$$ E(S) = \frac{1}{2}\sum_{i,j} w_{ij}\left(s_i - s_j\right)^2 + \lambda \sum_{i} \left(s_i - S_{in}(i)\right)^2 \tag{9} $$

where $s_i$ and $s_j$ are the saliency values of regions i and j, respectively, and $\lambda$ is the regularization parameter. The optimal saliency vector $S^*$ is obtained by setting the derivative of $E(S)$ to zero. $D$ is a diagonal matrix whose diagonal elements are $d_{ii} = \sum_j w_{ij}$, $W = [w_{ij}]$ is the affinity matrix, and $I$ is the identity matrix:

$$ S^* = \lambda \left(D - W + \lambda I\right)^{-1} S_{in} \tag{10} $$
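The closed-form refinement can be sketched in plain Python. The sketch assumes the edge weight w_ij = exp(−‖c_i − c_j‖²/(2σ²)) on adjacent superpixels and the solution S* = λ(D − W + λI)⁻¹ S_in obtained by setting the derivative of the cost to zero; a small Gaussian-elimination solver stands in for a linear-algebra library. Function names and default parameter values are illustrative.

```python
import math


def solve_linear(A, b):
    """Gaussian elimination with partial pivoting for a small dense system A x = b."""
    n = len(A)
    M = [list(row) + [rhs] for row, rhs in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


def graph_regularized_saliency(colors, edges, s_in, lam=1.0, sigma=10.0):
    """Solve (D - W + lam*I) s = lam * s_in on the superpixel adjacency graph."""
    n = len(colors)
    W = [[0.0] * n for _ in range(n)]
    for i, j in edges:  # edges between superpixels that share a boundary
        W[i][j] = W[j][i] = math.exp(-math.dist(colors[i], colors[j]) ** 2 / (2 * sigma ** 2))
    A = [[(sum(W[i]) + lam if i == j else 0.0) - W[i][j] for j in range(n)]
         for i in range(n)]
    return solve_linear(A, [lam * s for s in s_in])


# Two identical neighbors: the salient one shares part of its score with the other.
print(graph_regularized_saliency([(0, 0, 0), (0, 0, 0)], [(0, 1)], [1.0, 0.0]))  # about [0.667, 0.333]
```

For λ > 0 the matrix D − W + λI is strictly diagonally dominant, so the system always has a unique solution; an isolated superpixel simply keeps its initial saliency.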

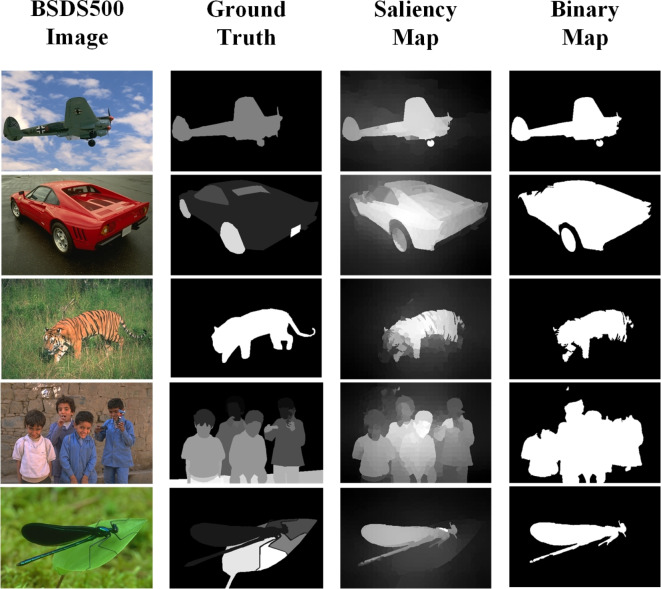

The visually salient regions of the image obtained in this way are shown in the third column of Fig. 2. We then apply Otsu's method30, which selects the threshold automatically by minimizing the intra-class variance (equivalently, maximizing the inter-class variance), to separate the foreground from the background and obtain a binary image of the salient regions, as shown in the fourth column of Fig. 2. The first column shows images from the BSDS500 dataset, and the second column shows the corresponding ground-truth segmentations provided with the dataset.

Fig. 2.

Image saliency detection illustration. The first column contains images from the BSDS500 dataset, the second column shows the manually generated saliency segmentation of the corresponding images, the third column displays the saliency detection results obtained by the algorithm, and the fourth column presents the binary saliency map of the saliency image.
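Otsu's thresholding step can be sketched on a saliency map quantized to integer levels 0 to 255; this quantization is an assumption, since the saliency values from the graph regularization are real-valued and would first be rescaled. The sketch maximizes the between-class variance w0·w1·(μ0 − μ1)², which is equivalent to minimizing the within-class variance, and treats values above the returned threshold as salient foreground. The function name is hypothetical.

```python
def otsu_threshold(values, levels=256):
    """Return the level t maximizing the between-class variance; foreground is value > t."""
    hist = [0] * levels
    for v in values:
        hist[v] += 1
    total = len(values)
    sum_all = sum(i * h for i, h in enumerate(hist))
    w0, sum0 = 0, 0.0
    best_t, best_var = 0, -1.0
    for t in range(levels):
        w0 += hist[t]          # pixels with value <= t (background)
        if w0 == 0:
            continue
        w1 = total - w0        # pixels with value > t (foreground)
        if w1 == 0:
            break
        sum0 += t * hist[t]
        mu0 = sum0 / w0
        mu1 = (sum_all - sum0) / w1
        between = w0 * w1 * (mu0 - mu1) ** 2
        if between > best_var:
            best_t, best_var = t, between
    return best_t


saliency = [10] * 50 + [200] * 50      # toy 8-bit saliency values
t = otsu_threshold(saliency)
mask = [v > t for v in saliency]
print(t, sum(mask))                    # 10 50
```

Any threshold between the two modes separates them equally well here; the sketch keeps the first maximizer it finds.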

Hue optimization clustering

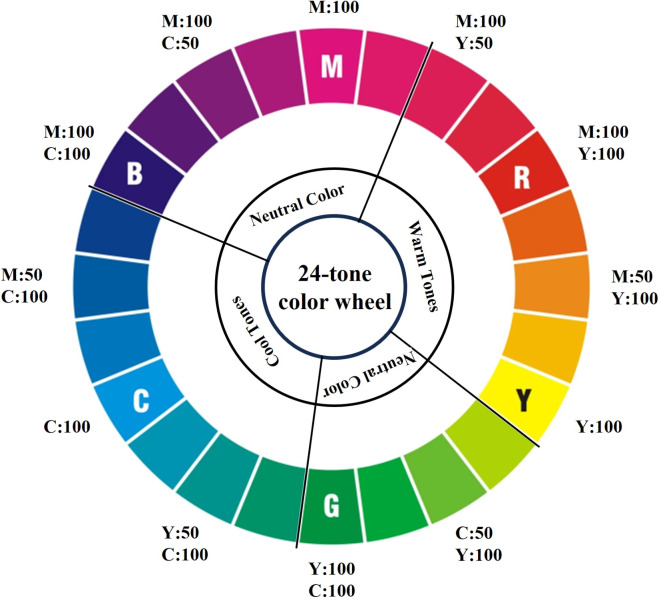

The LAB color space is a uniform, device-independent color space whose three components L, A, and B represent lightness, the green-red opponent axis, and the blue-yellow opponent axis, respectively. Researchers have found that human color perception is nonlinear: sensitivity differs across color directions, and there is a critical region for color discrimination by the human eye31. When merging pixels of similar color, SLIC considers only the Euclidean distance in LAB space and ignores the influence of inter-pixel hue differences on similarity. Points at the same Euclidean distance in LAB space are often perceived as more different along the red-green and blue-yellow diagonals than at non-diagonal positions. When a set of colors transitions across opposite sides of the color wheel, the change is typically more visually significant32, as shown in Fig. 3. Nian Xiong emphasized the importance of hue variation in the distribution of pixels within a sample, suggesting that hue variation has a greater impact than brightness18. Hue changes therefore strongly influence pixel clustering, and we improve the SLIC algorithm by incorporating the degree of hue variation into the pixel similarity measure used during clustering.

Fig. 3.

The image shows a 24-tone color wheel. Color differences are more pronounced between opposite positions on the color wheel.

The color wheel, also known as the hue circle, places each color along its edge. The visual color difference between cluster centers and pixels is quantified by measuring their distance on the hue circle. This approach aims to better distinguish pixels with the same distance in the original similarity measurement but with hue differences, thereby improving clustering quality and optimizing segmentation boundaries. By obtaining the green-red and yellow-blue components of cluster centers and other pixels in the LAB color space, the hue value discrepancy is calculated.

$$ h_k = \operatorname{atan2}(b_k, a_k), \qquad h_i = \operatorname{atan2}(b_i, a_i) \tag{11} $$

In the equation, $h_k$ represents the hue angle of the cluster center, $a_k$ is the green-red component of the cluster center, and $b_k$ is its blue-yellow component. $h_i$ represents the hue angle of a non-central pixel in the search space, and likewise $a_i$ and $b_i$ represent the corresponding color components of that pixel. The quadrant-aware arctangent of $b/a$ is used so that hue angles cover the full circle, expressed in degrees in $[0^\circ, 360^\circ)$.

The difference is quantified by the angular distance between the two hue angles. Because hue angles are circular, the distance between them can be measured in two directions; the shorter of the clockwise and counterclockwise distances is taken as the actual hue angle distance. This distance is normalized so that a larger hue angle distance gives a larger hue difference $d_h$ between pixels:

$$ d_h = \omega_h \cdot \frac{\min\left(\lvert h_k - h_i \rvert,\; 360^\circ - \lvert h_k - h_i \rvert\right)}{180^\circ} \tag{12} $$

where $\omega_h$ is the weight controlling the hue difference. Incorporating the hue difference between cluster centers and non-central pixels into the color and spatial distance measures yields a new similarity metric $D_h$ for clustering, in which the parameter $m_h$ regulates the impact of the hue difference. $D_h$ replaces $D$ from Eq. (4) to optimize clustering between pixels; the remaining parameters are as defined in Eq. (4).

|

13 |

Increasing the importance of spatial proximity in the clustering process may weaken color similarity. The control parameter $m_h$ strengthens the control over pixel color similarity, which helps balance color against spatial proximity. $m_h$ should therefore be increased in proportion to $m$, and at a greater rate than $m$.
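The hue angle and its circular distance can be sketched as follows. The sketch assumes hue angles in degrees computed with the quadrant-aware arctangent atan2(b, a), and normalizes the shorter arc by 180° so the result lies in [0, 1] before multiplying by a hue weight, named `omega_h` here. How this hue difference enters the combined metric of Eq. (13) is not reproduced; the function names are hypothetical.

```python
import math


def hue_angle(a, b):
    """Hue angle in degrees, in [0, 360), from the a (green-red) and b (blue-yellow) components."""
    return math.degrees(math.atan2(b, a)) % 360.0


def hue_difference(h_k, h_i, omega_h=1.0):
    """Shortest circular distance between two hue angles, normalized to [0, 1] and weighted."""
    diff = abs(h_k - h_i) % 360.0
    shortest = min(diff, 360.0 - diff)  # clockwise vs counterclockwise arc
    return omega_h * shortest / 180.0


print(hue_angle(0.0, 10.0))         # approximately 90 degrees (pure +b, the yellow direction)
print(hue_difference(350.0, 10.0))  # hues 20 degrees apart across 0, i.e. 20/180
```

Taking the shorter arc is what makes 350° and 10° count as close hues; a plain absolute difference would report them as 340° apart.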

Color information entropy adaptive control of salient regions

Information entropy is a measure of uncertainty of a random variable. A higher entropy indicates greater uncertainty of the random variable and, consequently, more information contained within it33. The richness of colors in an image, as conveyed through color variations, to some extent reflects the amount of information contained in the image. The color entropy can assess the complexity of an image, which is useful in determining the complexity of significant regions of the image content.

The richness of region content is determined by the color information contained in significant regions and influences the size of segmented superpixels. Regions with a richer color variety signify richer content, requiring smaller superpixels to cluster pixels with higher similarity. The number of grayscale levels in an image represents the possible colors in the image and is commonly used to describe the color depth or grayscale depth of the image. Grayscale levels can effectively reflect the color richness of an image, serving as a measure of color variety in place of the dimension of color space, thereby reducing computational complexity. The expression for region color entropy E is as follows:

$$ E = -\sum_{i=1}^{G} p_i \log_2 p_i \tag{14} $$

where $G$ is the number of grayscale levels contained in the significant region, and $p_i$ is the normalized histogram value at the i-th grayscale level, that is, the number of pixels in the region with grayscale value i divided by the total number of pixels in the region (levels with $p_i = 0$ contribute nothing). The less color information a significant region contains, the closer E is to 0; the more color information it contains, the higher E becomes.
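The region color entropy can be computed from a grayscale histogram as in the sketch below. It assumes a base-2 logarithm and takes the salient region as a flat list of integer gray levels (for example, the grayscale values of the pixels inside the saliency mask); the function name is hypothetical.

```python
import math
from collections import Counter


def region_color_entropy(gray_values):
    """Entropy (bits) of the grayscale histogram of a region; base-2 logarithm assumed."""
    n = len(gray_values)
    entropy = 0.0
    for count in Counter(gray_values).values():  # only levels that actually occur
        p = count / n
        entropy -= p * math.log2(p)
    return entropy


flat_region = [128] * 64                    # a single gray level
rich_region = list(range(0, 256, 4)) * 4    # 64 gray levels, equally frequent
print(region_color_entropy(flat_region))   # 0.0
print(region_color_entropy(rich_region))   # 6.0
```

A flat region gives 0 bits, while 64 equally frequent gray levels give log2(64) = 6 bits, so richer regions would be assigned smaller superpixels.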

The size of a significant region also affects the required superpixel size. Significant regions that occupy a small proportion of the image but contain rich color information need smaller superpixels to capture the pixel relationships within them. The density of significant regions is therefore determined from their proportion of the whole image: when this proportion is high, the reduction in superpixel size is moderated. As the number of grayscale levels increases, indicating richer content, smaller superpixels are required. The superpixel size A in significant regions is given by:

|

15 |

In the equation, n is the number of pixels in the significant regions, r is the number of horizontal pixels in the image, c is the number of vertical pixels in the image, and $\beta$ is the weight controlling the superpixel size, used to adjust the influence of the color information entropy.

In the actual segmentation process, the superpixel size of non-salient regions is set manually, while the superpixel size of salient regions is adjusted adaptively:

|

16 |

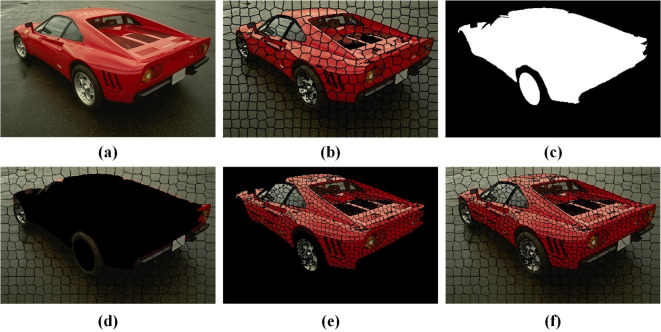

Figure 4 illustrates an adaptive superpixel segmentation of images based on color information entropy combined with significant regions. In non-significant regions, larger superpixels are utilized for segmentation, while in significant regions, finer segmentation is carried out.

Fig. 4.

Adaptive segmentation of images based on color information entropy. (a) Displays the original image from the BSDS500 dataset; (b) shows the SLIC segmentation image; (c) depicts the visual saliency map; (d) represents the non-significant region segmentation image; (e) showcases the significant region segmentation image; and (f) displays the adaptive superpixel segmentation image based on color information entropy.

Improved superpixel segmentation for color difference detection in printing images

For the main objects in the printing industry, such as advertisements and posters, the distinction between foreground and background content in printed images is obvious. The foreground area is characterized by rich color contrasts, complex textures, and constitutes the image content. In contrast, the background area primarily consists of solid colors and gradients. The differences between the two areas make the conventional SLIC algorithm inadequate for printing detection. Using the same superpixel size throughout the whole image poses challenges for color representation. Superpixels represent color homogeneity within regions; larger superpixels may lead to unclear segmentation of foreground content, while smaller superpixels may result in wastage of computational resources for the background.

To apply different superpixel segmentation to different regions of an image, and in keeping with the characteristics of human visual attention, the detection accuracy of significant regions is enhanced during color difference detection. After the saliency region M of the image is obtained, a fixed superpixel size is set for non-significant regions, and the superpixel size for significant regions is controlled adaptively using the color information entropy. Because non-salient regions have low complexity, the number of iterations $t$ for superpixel computation in these regions can be reduced to improve running speed. The improved superpixel segmentation image $I$ is constructed as follows:

$$ I(p) = \begin{cases} l_s(p), & p \in M \\ l_n(p), & p \notin M \end{cases} \tag{17} $$

where $I_n$ is the superpixel segmentation image of the non-significant region and $I_s$ is the superpixel segmentation image of the significant region, p denotes a pixel of the image ($p \in M$ for each pixel in the salient region M), and $l_n(p)$ and $l_s(p)$ are the label values of p in $I_n$ and $I_s$, respectively.
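Composing the two label maps into one segmentation image, as in Eq. (17), can be sketched as below. Shifting the non-salient labels past the largest salient label is an assumption made here so that the two label sets cannot collide; the function name is hypothetical.

```python
def merge_label_maps(mask, salient_labels, background_labels):
    """Compose one label map: salient labels inside M, shifted background labels outside.

    mask is a 2-D list of booleans (True for pixels in the salient region M).
    Shifting background labels past the largest salient label is an assumption
    of this sketch, used so the two label sets stay disjoint.
    """
    inside = [lbl for mrow, lrow in zip(mask, salient_labels)
              for in_m, lbl in zip(mrow, lrow) if in_m]
    offset = max(inside) + 1 if inside else 0
    return [[s if in_m else b + offset for in_m, s, b in zip(mrow, srow, brow)]
            for mrow, srow, brow in zip(mask, salient_labels, background_labels)]


mask = [[True, False], [False, True]]
salient = [[0, 9], [9, 1]]       # 9 marks entries ignored outside M
background = [[9, 0], [1, 9]]    # 9 marks entries ignored inside M
print(merge_label_maps(mask, salient, background))  # [[0, 2], [3, 1]]
```

Each pixel takes its label from exactly one of the two segmentations, selected by the saliency mask.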

Pixel labels are assigned by minimizing an objective function that combines color similarity and spatial compactness, so that each pixel receives the label of its closest cluster center:

|

18 |

This formula computes $l_s(p)$, the label of the superpixel region to which pixel p belongs. $C_k$ represents a cluster center, $(x_p, y_p)$ are the coordinates of a pixel in the salient region, $(x_k, y_k)$ are the coordinates of the cluster center, and $\mu$ is the parameter that balances similarity against spatial continuity. $l_n(p)$ is obtained in the same way by replacing M with its complement $\bar{M}$. The label values of $l_n$ are assumed to be connected to those of $l_s$.

Different images yield different superpixel segmentation results, and because clustering is iterative, even two segmentations of the same image may differ slightly. Therefore, the standard printing image is segmented once and its superpixel labels and color information are retained as a template; for each test image, the color information of the corresponding regions is compared with the template. The differences reflect the degree of printing color deviation and where it occurs during printing.

To compare the two images, the mean color of each superpixel in the LAB color space is computed, and a feature vector E is constructed for the standard image and F for the test image: $E = [e_1, e_2, \dots, e_n]$ and $F = [f_1, f_2, \dots, f_n]$, where each $e_i$ and $f_i$ is a three-dimensional vector holding the mean color of the corresponding superpixel. The CIELAB color difference formula34 is used to compare $e_i$ and $f_i$, and the difference $d_i$ between the two feature vectors is calculated as follows:

$$e_i = \frac{1}{N_i^{E}} \sum_{x \in S_i^{E}} x \tag{19}$$

$$f_i = \frac{1}{N_i^{F}} \sum_{y \in S_i^{F}} y \tag{20}$$

$$d_i = \lVert e_i - f_i \rVert \tag{21}$$

$$\mathbb{1}(d_i) = \begin{cases} 1, & d_i > T \\ 0, & \text{otherwise} \end{cases} \qquad i = 1, 2, \dots, n \tag{22}$$

where $N_i^{E}$ is the number of pixels in the i-th superpixel $S_i^{E}$ of E and $x$ is any pixel within that superpixel; similarly, $N_i^{F}$ is the number of pixels in the i-th superpixel $S_i^{F}$ of F and $y$ is any pixel within that superpixel. $\lVert \cdot \rVert$ denotes the norm, i is the position in the feature vector, and n is the length of the feature vector. $\mathbb{1}(\cdot)$ is an indicator function that outputs 1 if $d_i$ exceeds the given threshold T at position i and 0 otherwise. The positions i at which the indicator equals 1 give the labels of the deviating superpixels, and the edges of these superpixels are marked to indicate the problematic positions in the image.
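Eqs. (19)–(22) amount to a per-label mean followed by a thresholded distance. The sketch below assumes that both images are already converted to LAB as float arrays of the same size, that the labels are consecutive integers starting at 0, that the norm is Euclidean (the CIE76 $\Delta E^*_{ab}$), and that the per-channel deviation is reported as test minus standard. The function names are hypothetical; the default threshold of 6 follows Step 3 below.

```python
import numpy as np


def superpixel_means(lab, labels, n):
    """Mean L, a, b of each superpixel (Eqs. (19)-(20)); returns an (n, 3) array."""
    flat = labels.ravel()
    counts = np.bincount(flat, minlength=n).astype(float)  # N_i for each label
    sums = np.stack(
        [np.bincount(flat, weights=lab[..., c].ravel(), minlength=n) for c in range(3)],
        axis=1,
    )
    # Guard against labels with no pixels to avoid division by zero.
    return sums / np.maximum(counts, 1.0)[:, None]


def flag_deviations(std_lab, test_lab, labels, threshold=6.0):
    """Per-superpixel color difference d_i and indicator (Eqs. (21)-(22))."""
    n = int(labels.max()) + 1
    e = superpixel_means(std_lab, labels, n)   # feature vector E (standard image)
    f = superpixel_means(test_lab, labels, n)  # feature vector F (test image)
    diff = f - e                               # per-channel deviation, as in Table 1
    d = np.linalg.norm(diff, axis=1)           # Euclidean (CIE76) color difference
    flagged = np.flatnonzero(d > threshold)    # labels where the indicator equals 1
    return d, diff, flagged
```

Because the same label map is applied to both images, only n three-dimensional means are compared instead of every pixel, which is where the computational saving of the superpixel approach comes from.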

Experiment

Creating datasets and experimental environments

The experiments described in this paper were conducted on a computer with an Intel Core i7-12700H processor (14 cores, 2.7 GHz) and 16 GB of DDR4 memory. The datasets used are the BSDS500 dataset35 and a custom dataset of 200 digitally printed images. The pre-press images in the printing dataset were obtained from the open-source websites Unsplash and Pexels, both of which provide images free for commercial use. The printed images were acquired with an Epson 12000XL scanner. Images for color detection may be in JPG or PNG format, but the test image and the standard image must use the same format; JPG is used for both in this article. The algorithms were implemented in C++ and Python, using Visual Studio 2022 Preview and PyCharm Community Edition, respectively.

Inkjet printing image color difference detection

Algorithm 1 is an improved superpixel segmentation algorithm that optimizes segmentation by introducing salient regions, information entropy, and hue angle distance, resulting in more accurate region color segmentation. The color detection steps for inkjet printed images based on this algorithm are as follows:

Step 1: Use the improved superpixel segmentation algorithm to obtain the superpixel label file of the pre-press image and interpolate it to match the size of the post-press image, ensuring that each pixel corresponds to a label value.

Step 2: Extract the mean color values of the corresponding superpixel regions in both the standard printed image and the test printed image.

Step 3: Calculate the color difference using Eq. (22). The threshold discernible by the human eye is set to 6, following Ref.34, and the superpixel labels whose color difference exceeds this threshold are identified.

Step 4: Based on the label values, highlight the boundaries of the superpixels with significant color differences, as shown in Fig. 5. The color differences in the L, A, and B components of these superpixel regions are output, as illustrated in Table 1.
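Steps 1 and 4 involve two small image operations: resizing the pre-press label map to the size of the post-press image, and outlining the flagged superpixels. A minimal sketch follows. It assumes nearest-neighbour interpolation, so that no fractional or mixed label values are created, and 4-neighbour connectivity for boundaries; the paper does not state which interpolation or connectivity it uses, and the helper names are hypothetical.

```python
import numpy as np


def resize_labels_nearest(labels, out_h, out_w):
    """Nearest-neighbour resize of an integer label map (labels are never blended)."""
    in_h, in_w = labels.shape
    rows = (np.arange(out_h) * in_h) // out_h  # source row for each output row
    cols = (np.arange(out_w) * in_w) // out_w  # source column for each output column
    return labels[rows[:, None], cols[None, :]]


def flagged_boundaries(labels, flagged):
    """Boolean mask of pixels on the boundary of any flagged superpixel."""
    mask = np.isin(labels, flagged)
    edge = np.zeros_like(mask)
    # A pixel is a boundary pixel if one of its 4-neighbours has a different label.
    edge[:-1, :] |= labels[:-1, :] != labels[1:, :]
    edge[1:, :] |= labels[1:, :] != labels[:-1, :]
    edge[:, :-1] |= labels[:, :-1] != labels[:, 1:]
    edge[:, 1:] |= labels[:, 1:] != labels[:, :-1]
    return edge & mask
```

The resulting mask can be used to paint outlines onto the test image, in the manner of the highlighted regions in Fig. 5.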

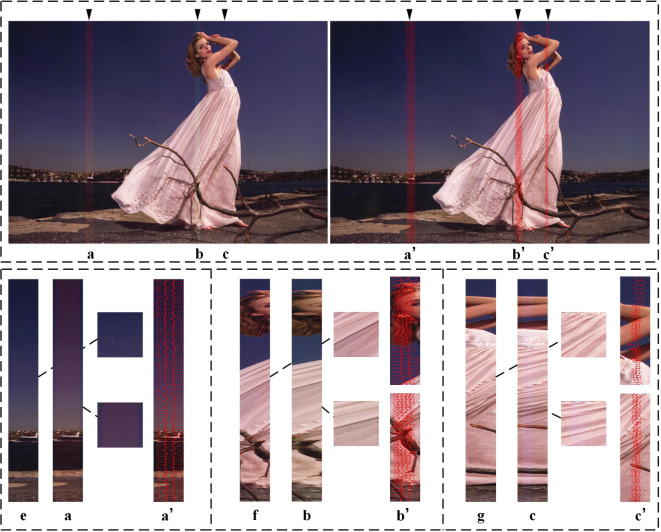

Fig. 5.

Diagram of Inkjet Printing Image Color Difference Detection Results. The top row displays an inkjet printing color defect image (left) and the color difference detection output image (right). The bottom row compares the color difference regions of defect images a, b, and c with the corresponding regions of the standard images e, f, and g, alongside superpixel segmentation highlight images aʹ, bʹ, cʹ. In regions bʹ and cʹ, more detailed comparisons of color differences in significant regions are demonstrated.

Table 1.

Examples of color deviation in superpixel regions.

| Label | ΔL | ΔA | ΔB |
|---|---|---|---|
| 31 | 2.57143 | 3.25155 | −21.3447 |
| 85 | −2.27056 | −0.2626 | −1.28647 |
| 197 | −1.03352 | −6.36871 | −0.79889 |
| 402 | 0.694237 | −0.14536 | −2.76441 |
| 812 | −1.16988 | −3.67761 | −1.00193 |
| 1100 | 0.526581 | −0.01519 | −2.29873 |

The proposed detection method is intuitive and provides a quantitative description of color deviations in printed images.

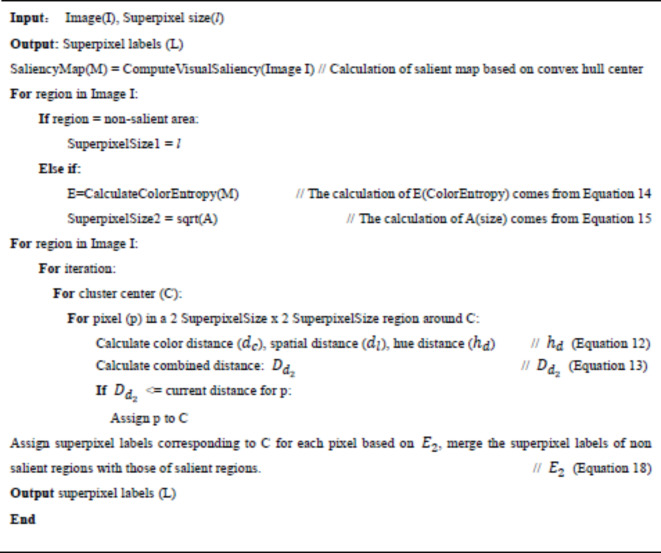

Algorithm 1.

Improved superpixel algorithm based on visual saliency and color entropy.

Our method detects and marks the color difference areas in the post-press test image, effectively identifying both significant and subtle color differences. The image is compared at different precision levels based on the importance of each region. Figure 5 shows the detection results, verifying the effectiveness of the method.

Our method also records the degree of color deviation in each color difference area. In the printed image shown in Fig. 5, 1,211 superpixel regions exhibit color differences. Identifying these regions supports subsequent color compensation and correction. Table 1 lists the LAB deviations of a randomly selected subset of these superpixels.

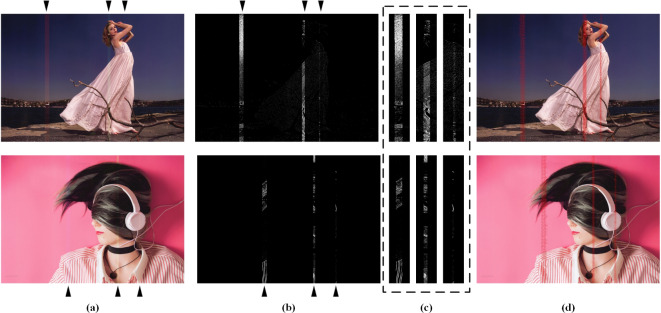

The goal of color difference detection in inkjet-printed images is to locate post-press defects that are visible to the human eye, using the maximum discernible threshold, which helps control printing costs. In Fig. 6, the color difference in the pink region is difficult to see with the naked eye, and this area is not flagged as a color difference region in (c). By avoiding excessive detection, which would increase costs, the proposed color difference detection method demonstrates its accuracy.

Fig. 6.

Color difference area detection diagram. (a) Represents the post-press standard sample, (b) shows the post-press defect sample, and (c) displays the color difference detection sample. The middle section between samples (a) and (b) provides a zoomed-in view of both images, as does the middle section between samples (b) and (c) for sample (c).

Figure 7 compares the results of the proposed color defect detection algorithm with those of the recent printing defect detection algorithm from Ref.4. When applied to color difference detection over a full color image, the algorithm in Ref.4 is less accurate and misses some regions. In contrast, our method detects color difference areas more accurately and identifies all observable differences. The algorithm in Ref.14 provides only a similarity score between the test and reference images, whereas our method shows the location and magnitude of the color differences directly.

Fig. 7.

Comparison experiment diagram of color difference detection. (a) Shows the color difference image, (b) shows the defect detection implemented by the method in Ref.4, and (c) is the enlarged schematic of the detected color difference area in (b). (d) Shows the color difference area marked by our algorithm.

When detecting a 2000 × 3000-pixel image, our algorithm takes about 5 s, compared with approximately 960 s for pixel-by-pixel detection and around 16 s for the method from Ref.14. Our algorithm is therefore roughly 190 times faster than pixel-by-pixel detection and about 3 times faster than the method from Ref.14.

Ablation experiment

To validate the feasibility and reliability of the proposed algorithm, we conducted experiments that verify both the results of hue-based pixel clustering and the effectiveness of adaptive superpixel segmentation of salient regions using color information entropy.

Feasibility analysis of hue optimization

Superpixels used in print detection and post-processing must provide uniformity, boundary compactness, and faithful color reproduction. This study integrates hue optimization into superpixel segmentation and names the optimized algorithm OurSLIC, in order to verify the method's feasibility and reliability. Because hue optimization modifies only the color-space term, the weight balancing color and spatial position is kept at a fixed value, and, based on an empirical formula, the corresponding parameter is set to 20. The number of iterations is fixed at 10 in all experiments.

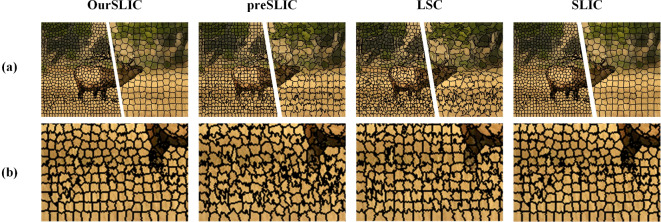

The optimized superpixel clustering based on the hue angle distance in colorimetry demonstrates a more natural performance in regions with gradient and transitional colors. Figure 8 shows the segmentation results of four superpixel segmentation methods, with images on either side of row (a) representing segmentations with different superpixel sizes and row (b) showcasing a local display of the superpixels.

Fig. 8.

Local schematic diagram of superpixel segmentation. The first column presents the superpixel segmentation results obtained using the OurSLIC algorithm. The second column shows the segmentation results from the preSLIC algorithm, the third column displays results from the LSC algorithm, and the fourth column provides the segmentation results from the SLIC algorithm.

To validate the feasibility of the proposed hue optimization algorithm, the segmented images obtained by the superpixel segmentation algorithm are evaluated on four metrics26: Boundary Recall (Rec), Undersegmentation Error (UE), Explained Variation (EV), and Achievable Segmentation Accuracy (ASA), and compared with three other algorithms (LSC36, preSLIC37, SLIC27).

Boundary Recall38 evaluates how well superpixel boundaries adhere to manually annotated boundaries. $\mathrm{FN}(G, S)$ and $\mathrm{TP}(G, S)$ denote the numbers of false-negative and true-positive boundary pixels in the superpixel segmentation S relative to the ground-truth segmentation G, respectively. A high Rec means that most ground-truth boundary pixels are recovered by superpixel boundaries, so a higher Rec is better.

$$\mathrm{Rec}(G, S) = \frac{\mathrm{TP}(G, S)}{\mathrm{TP}(G, S) + \mathrm{FN}(G, S)} \tag{23}$$
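Eq. (23) can be computed directly from two label maps. The sketch below assumes that a boundary pixel is one whose right or lower neighbour carries a different label, and counts a ground-truth boundary pixel as a true positive if a superpixel boundary lies within a tolerance of r pixels. The default r = 2 and the helper names are assumptions of this sketch, not values taken from the paper.

```python
import numpy as np


def boundary_map(labels):
    """True where a pixel's right or lower neighbour has a different label."""
    b = np.zeros(labels.shape, dtype=bool)
    b[:, :-1] |= labels[:, :-1] != labels[:, 1:]
    b[:-1, :] |= labels[:-1, :] != labels[1:, :]
    return b


def dilate(mask, r):
    """Square dilation by r pixels, implemented with padding and shifted slices."""
    h, w = mask.shape
    padded = np.pad(mask, r)  # pads with False
    out = np.zeros_like(mask)
    for dy in range(2 * r + 1):
        for dx in range(2 * r + 1):
            out |= padded[dy:dy + h, dx:dx + w]
    return out


def boundary_recall(gt_labels, sp_labels, r=2):
    """Rec = TP / (TP + FN) over ground-truth boundary pixels (Eq. (23))."""
    gt_b = boundary_map(gt_labels)
    sp_b = dilate(boundary_map(sp_labels), r)
    tp = np.count_nonzero(gt_b & sp_b)   # GT boundary pixels near a superpixel boundary
    fn = np.count_nonzero(gt_b & ~sp_b)  # GT boundary pixels that were missed
    return tp / (tp + fn) if tp + fn else 1.0
```

The tolerance matters: with r = 0, a superpixel boundary that is off by a single pixel counts as a miss.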

Undersegmentation Error (UE)38 measures the extent to which superpixels leak across the boundaries of the ground-truth segments. It is the ratio of the number of superpixel pixels lying outside the intersection with their best-matching ground-truth segment to the total number of pixels N in the image, so a smaller UE(S) value is better. Here, $S_j$ represents a superpixel and $G_i$ represents each ground-truth segment. The argmax operator returns the index of the ground-truth segment with the largest overlap, whereas max would provide only the size of that overlap.

$$\mathrm{UE}(S) = \frac{1}{N} \sum_{j} \left| S_j \setminus G_{i^*} \right|, \qquad i^* = \arg\max_{i} \left| S_j \cap G_i \right| \tag{24}$$
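Following the argmax description above, UE can be computed from a superpixel-by-segment overlap table: for each superpixel, the pixels outside its best-overlapping ground-truth segment are counted, and the total is divided by N. This minimal sketch assumes both label maps contain consecutive non-negative integers; the function name is hypothetical.

```python
import numpy as np


def undersegmentation_error(sp_labels, gt_labels):
    """UE: pixels of each superpixel outside its best-overlapping GT segment, over N."""
    sp = sp_labels.ravel()
    gt = gt_labels.ravel()
    n_sp, n_gt = sp.max() + 1, gt.max() + 1
    # overlap[j, i] = |S_j ∩ G_i|, counted in one pass with a combined index.
    overlap = np.bincount(sp * n_gt + gt, minlength=n_sp * n_gt).reshape(n_sp, n_gt)
    sizes = overlap.sum(axis=1)                                    # |S_j|
    best = overlap[np.arange(n_sp), overlap.argmax(axis=1)]        # |S_j ∩ G_i*|
    return (sizes - best).sum() / sp.size
```

A single superpixel covering two equal ground-truth segments leaves half of the image outside its best match, giving UE = 0.5.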

Explained Variation (EV)26 quantifies the quality of a superpixel segmentation without relying on manual annotations: it evaluates whether superpixel boundaries capture the color and structural changes in the image. In the formula, $\mu(S_j)$ represents the average color of superpixel $S_j$, $\mu(I)$ represents the average color of the entire image I, and $x_n$ is the feature value of the n-th pixel. A higher EV(S) indicates better segmentation.

$$\mathrm{EV}(S) = \frac{\sum_{j} |S_j| \, \lVert \mu(S_j) - \mu(I) \rVert^2}{\sum_{n} \lVert x_n - \mu(I) \rVert^2} \tag{25}$$
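Eq. (25) needs no ground truth, only the image and the label map. A minimal sketch, assuming one feature vector per pixel (for example its LAB color) and squared Euclidean distances; the function name is hypothetical.

```python
import numpy as np


def explained_variation(image, sp_labels):
    """EV(S): between-superpixel color variance divided by total color variance."""
    x = image.reshape(-1, image.shape[-1]).astype(float)  # x_n, one row per pixel
    lab = sp_labels.ravel()
    n = lab.max() + 1
    counts = np.bincount(lab, minlength=n).astype(float)  # |S_j|
    sums = np.stack(
        [np.bincount(lab, weights=x[:, c], minlength=n) for c in range(x.shape[1])],
        axis=1,
    )
    mu_s = sums / np.maximum(counts, 1.0)[:, None]  # mu(S_j)
    mu_i = x.mean(axis=0)                           # mu(I)
    between = (counts * ((mu_s - mu_i) ** 2).sum(axis=1)).sum()
    total = ((x - mu_i) ** 2).sum()
    return between / total if total > 0 else 1.0
```

EV reaches 1 when every superpixel is internally uniform in color, which is why it tracks how faithfully superpixel means reproduce the image colors used in color detection.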

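Achievable Segmentation Accuracy (ASA)38 is based on the intersection of each superpixel $S_j$ with the ground-truth segments $G_i$: it measures the fraction of pixels that would be labeled correctly if every superpixel were assigned to the ground-truth segment it overlaps most. Higher values are better.

$$\mathrm{ASA}(S) = \frac{1}{N} \sum_{j} \max_{i} \left| S_j \cap G_i \right| \tag{26}$$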
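Eq. (26) can be computed from the same kind of overlap table as UE. A minimal sketch, assuming both label maps contain consecutive non-negative integers; the function name is hypothetical.

```python
import numpy as np


def achievable_segmentation_accuracy(sp_labels, gt_labels):
    """ASA: fraction of pixels in the best-overlapping GT segment of their superpixel."""
    sp = sp_labels.ravel()
    gt = gt_labels.ravel()
    n_sp, n_gt = sp.max() + 1, gt.max() + 1
    # overlap[j, i] = |S_j ∩ G_i|
    overlap = np.bincount(sp * n_gt + gt, minlength=n_sp * n_gt).reshape(n_sp, n_gt)
    return overlap.max(axis=1).sum() / sp.size
```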

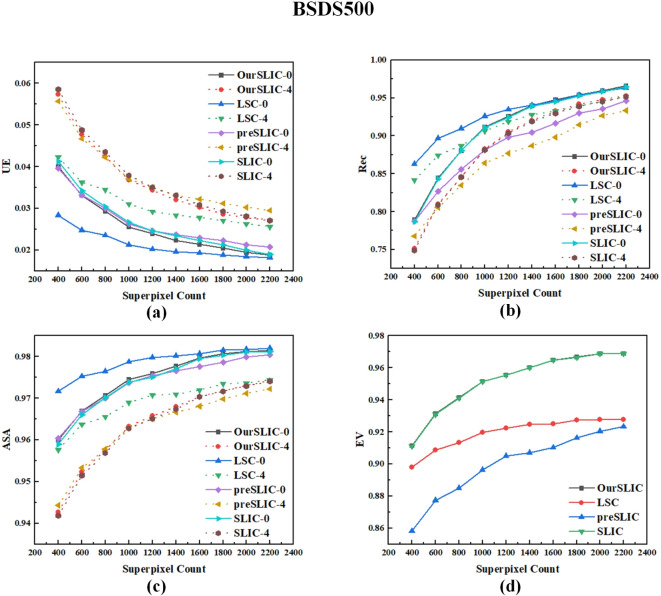

The BSDS500 dataset provides multiple user-annotated boundary maps for each of its 500 natural images. In Fig. 9, the suffixes −0 and −4 indicate that the coarse and fine annotated boundary maps, respectively, are used as the ground truth for comparison. The proposed OurSLIC algorithm outperforms the original SLIC algorithm on all four metrics. Because OurSLIC is built on SLIC, the gap between the two narrows as the number of superpixels increases, and their metrics converge. When the number of superpixels exceeds 1000, OurSLIC performs better than preSLIC, and the advantage grows as the number increases. The LSC algorithm segments images based on the local contrast and similarity of pixels, placing superpixels along image edges and textures, and does not strictly follow the requested number of superpixels. In practice, LSC produces more superpixel blocks than requested, which makes the comparison less fair to the other three algorithms. This characteristic allows LSC to achieve better scores in Fig. 9(a), (b), and (c). However, as the number of superpixels increases, LSC is overtaken by the proposed algorithm on these metrics, and on the EV metric it performs far below OurSLIC. Because superpixel color is a concentrated representation of regional color and the main criterion in color detection, EV is the metric on which the proposed algorithm's advantage matters most. These results demonstrate the reliability of hue angle optimization. Table 2 provides supplementary data for Fig. 9(d).

Fig. 9.

The evaluation results of the superpixel segmentation images obtained by different superpixel algorithms under the BSDS500 dataset across four metrics are presented. (a) Shows the evaluation results under the Undersegmentation Error (UE) metric for the largest boundary annotation (−0) and the smallest boundary annotation (−4). (b) Presents the corresponding evaluation results under the Boundary Recall (Rec) metric. (c) Displays the corresponding evaluation results under the Achievable Segmentation Accuracy (ASA) metric. (d) Illustrates the corresponding evaluation results under the Explained Variation (EV) metric.

Table 2.

EV comparison between OurSLIC and SLIC for different numbers of superpixels.

| Number of superpixels | 400 | 600 | 800 | 1000 | 1200 | 1400 | 1600 | 1800 | 2000 | 2200 |
|---|---|---|---|---|---|---|---|---|---|---|
| OurSLIC | 0.91139 | 0.93128 | 0.94134 | 0.95153 | 0.95555 | 0.96012 | 0.96475 | 0.96665 | 0.968787 | 0.968795 |
| SLIC | 0.91109 | 0.93102 | 0.94118 | 0.95142 | 0.95540 | 0.96002 | 0.96473 | 0.966647 | 0.96878 | 0.968788 |

Adaptive segmentation of salient regions based on color information entropy

When detecting color differences in printed images, comparing the color means of uniformly sized superpixels can lead to inaccuracies or wasted computation: superpixels that are too coarse average out local detail, whereas uniformly fine superpixels spend computation on flat regions. To improve detection accuracy in visually salient regions, superpixels must be partitioned more finely in colorful, high-contrast areas. This experiment verifies that adaptive superpixel segmentation based on color information entropy yields better segmentation performance.

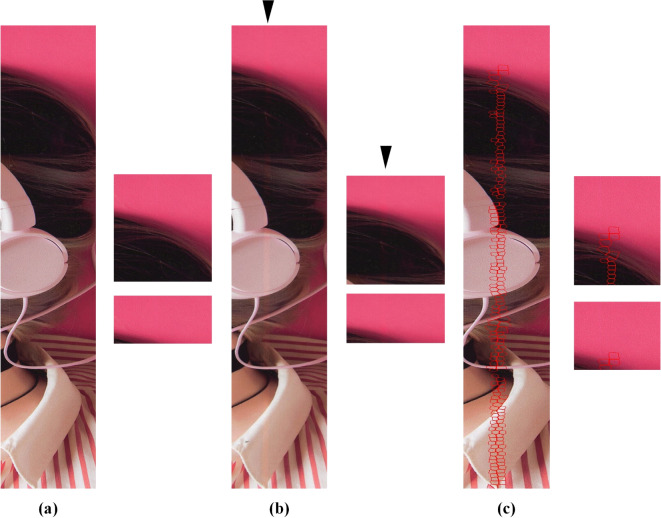

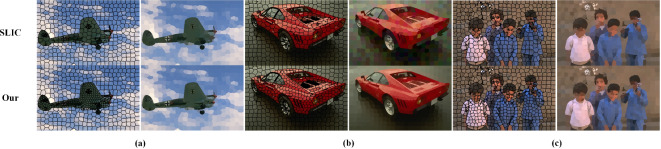

Figure 10 displays the segmentation results of two algorithms. The upper row shows the original superpixel segmentation images (left) and the color mean of the segmented superpixels (right). The lower row illustrates the adaptive segmentation images and their superpixel color means. From the three sets of images (a, b, c), it is evident that adaptive segmentation better preserves the color and details of the original image, which is crucial for superpixel-based color difference detection.

Fig. 10.

Adaptive superpixel segmentation based on salient region content.

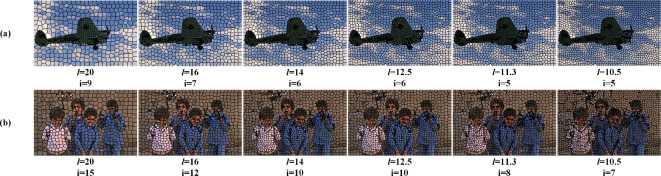

Figure 11 demonstrates how color information entropy adaptively determines the superpixel size in salient regions for different initial superpixel sizes. In the figure, l denotes the initial superpixel step size, which sets the superpixel size in non-salient regions. The step size i for salient regions is determined adaptively from l by the entropy-based formula, so it changes as l changes; it also adapts to the color richness and the size of each salient region.
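The color information entropy that drives this adaptation is defined in an earlier section and is not reproduced here. As a rough illustration only, the sketch below assumes a Shannon entropy over a uniformly quantized LAB histogram with 8 bins per channel and assumed channel ranges; it does not reproduce how the paper maps entropy to the step size i. The function name and bin count are hypothetical.

```python
import numpy as np


def color_entropy(lab_region, bins=8):
    """Shannon entropy (bits) of a quantized LAB histogram (assumed definition)."""
    pixels = lab_region.reshape(-1, 3).astype(float)
    # Assumed LAB ranges: L in [0, 100], a and b in [-128, 127].
    lo = np.array([0.0, -128.0, -128.0])
    hi = np.array([100.0, 127.0, 127.0])
    q = np.clip(((pixels - lo) / (hi - lo) * bins).astype(int), 0, bins - 1)
    codes = (q[:, 0] * bins + q[:, 1]) * bins + q[:, 2]  # one bin index per pixel
    p = np.bincount(codes, minlength=bins ** 3) / len(codes)
    p = p[p > 0]  # empty bins contribute nothing (0 * log 0 = 0)
    return float(-(p * np.log2(p)).sum())
```

A uniform region yields an entropy of 0, while a region split evenly between two distinct colors yields 1 bit; richer regions score higher and, under the approach described above, would receive smaller superpixels.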

Fig. 11.

The superpixel size in salient regions is adjusted automatically based on the superpixel size in non-salient regions.

Partitioning salient regions effectively improves the ability of superpixels to represent color and detail in images. To provide objective comparison metrics, the Peak Signal-to-Noise Ratio (PSNR)39 and the Structural Similarity Index (SSIM)40 are used to compare the color mean images obtained by the two algorithms.

$$\mathrm{PSNR} = 10 \log_{10} \frac{\mathrm{MAX}^2}{\mathrm{MSE}}, \qquad \mathrm{MSE} = \frac{1}{HW} \sum_{u=1}^{H} \sum_{v=1}^{W} \left( x(u, v) - y(u, v) \right)^2 \tag{27}$$

MAX is the maximum possible pixel value in the image, determined by the image bit depth (255 for 8-bit images). MSE is the mean of the squared differences between corresponding pixel values of the two H × W images x and y.

$$\mathrm{SSIM}(x, y) = \frac{(2\mu_x \mu_y + C_1)(2\sigma_{xy} + C_2)}{(\mu_x^2 + \mu_y^2 + C_1)(\sigma_x^2 + \sigma_y^2 + C_2)} \tag{28}$$

$\mu_x$ and $\mu_y$ are the mean intensity values of images x and y and represent luminance, while $\sigma_x^2$ and $\sigma_y^2$ are their intensity variances. $\sigma_{xy}$ is the covariance between the images, and $C_1$ and $C_2$ are small constants that stabilize the division.
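Eqs. (27) and (28) can be sketched as follows. This is a simplification under stated assumptions: SSIM is evaluated once over the whole image, whereas standard SSIM implementations average the index over local (typically Gaussian-weighted) windows, and the constants use the common choice $C_1 = (0.01\,\mathrm{MAX})^2$ and $C_2 = (0.03\,\mathrm{MAX})^2$. The function names are hypothetical.

```python
import numpy as np


def psnr(x, y, max_val=255.0):
    """PSNR in dB (Eq. (27)); returns inf for identical images."""
    mse = np.mean((x.astype(float) - y.astype(float)) ** 2)
    return float("inf") if mse == 0 else 10.0 * np.log10(max_val ** 2 / mse)


def global_ssim(x, y, max_val=255.0):
    """SSIM of Eq. (28) evaluated once over the whole image (no sliding window)."""
    x = x.astype(float).ravel()
    y = y.astype(float).ravel()
    c1, c2 = (0.01 * max_val) ** 2, (0.03 * max_val) ** 2  # common default constants
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = ((x - mu_x) * (y - mu_y)).mean()
    return ((2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)) / (
        (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    )
```

Assuming each color mean image is compared against the original image, a ratio metric such as `psnr(original, adaptive_mean) / psnr(original, slic_mean)` exceeds 1 when the adaptive segmentation reproduces the original more faithfully.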

In the experiment, the initial superpixel step size was set to a fixed value. The PSNR and SSIM of the color mean image obtained with the SLIC algorithm are denoted PSNR1 and SSIM1, and those obtained with the adaptive segmentation algorithm are denoted PSNR2 and SSIM2. We construct two new evaluation metrics, NPSNR and NSSIM, to assess our method; a value greater than 1 indicates that the proposed algorithm performs better than the traditional SLIC method.

$$\mathrm{NPSNR} = \frac{\mathrm{PSNR}_2}{\mathrm{PSNR}_1} \tag{29}$$

$$\mathrm{NSSIM} = \frac{\mathrm{SSIM}_2}{\mathrm{SSIM}_1} \tag{30}$$

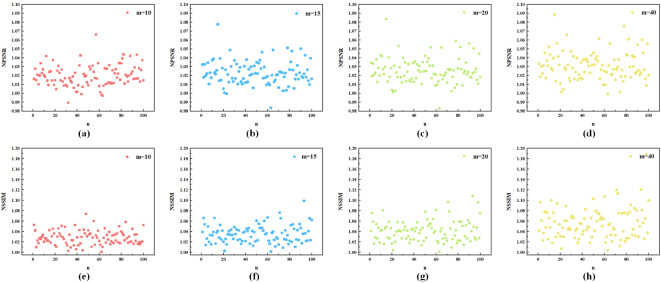

A random selection of 100 sample images from the dataset was used to evaluate superpixel segmentation quality. As shown in Fig. 12, NPSNR and NSSIM are generally greater than 1, indicating that our algorithm outperforms the original method in both image quality and the preservation of texture and detail. The parameter m in Eq. (13) controls the relative importance of color and spatial position during clustering. As m increases, segmentation quality improves, and the distribution of sample values in the figure shifts upward.

Fig. 12.

Image-quality improvement from adaptive superpixel segmentation for different values of the weight m, which controls the relative importance of color and spatial position. Increasing m generally increases the improvement. However, because of differences in image content, the degree of improvement becomes less consistent as m increases, and the sample distribution becomes more uneven.

Conclusion

The improved superpixel segmentation algorithm based on saliency and color entropy proposed in this article offers better reliability in printing color quality detection. Replacing pixels with superpixels reduces the computational load on image data while maintaining color fidelity consistent with human visual perception. The detected color difference areas are shown directly at the corresponding locations in the original image, and the measured color shifts support subsequent color compensation and correction. Future work will focus on improving the algorithm's speed and integrating additional functionality.

Acknowledgements

Funded by the National Key R&D Plan (2018YFB1309404). Author unit: Zhejiang University of Technology, Special Equipment Manufacturing and Advanced Processing Technology Key Laboratory of the Ministry of Education.

Author contributions

H. Z. wrote the main manuscript text. Y. S. collected the data and implemented the proposed approach. F. X. guided the manuscript. H. Z., Y. S., L. W., F. X. and L. Z. reviewed the manuscript. All authors discussed the results and approved the manuscript.

Funding

This research is supported by the National Key Research and Development Plan subproject 2018YFB1309404.

Data availability

The data presented in this study are available on request from the corresponding author in order to protect the integrity and accuracy of the research. The BSDS500 dataset is available online at https://paperswithcode.com/dataset/bsds500.

Declarations

Competing interests

The authors declare no competing interests.

Footnotes

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1.Vans, M. et al. Automatic visual inspection and defect detection on variable data prints. J. Electron. Imaging 20, 013010 (2011).

- 2.Abdelfatah, E., Abdelmajid, E. & Abdeljebar, M. AI and computer vision-based real-time quality control: A review of industrial applications. Procedia Comput. Sci. 231, 212–220 (2024).

- 3.Ma, B. et al. The defect detection of personalized print based on template matching. in IEEE International Conference on Unmanned Systems (ICUS) 266–271 (IEEE, 2017). 10.1109/ICUS.2017.8278352

- 4.Li, D. et al. Printed label defect detection using twice gradient matching based on improved cosine similarity measure. Expert Syst. Appl. 204, 117372 (2022).

- 5.Zhang, L., Chen, M. & Zou, W. A codebook based background subtraction method for image defects detection. in Tenth International Conference on Computational Intelligence and Security 704–706 (IEEE, 2014). 10.1109/CIS.2014.154

- 6.Ha, Q. T. N. Design of automated system for online inspection using the convolutional neural network (CNN) technique in the image processing approach. Results Eng. 19, 101346 (2023).

- 7.Ishimaru, I., Hata, S. & Hirokari, M. Color-defect classification for printed-matter visual inspection system. in 4th World Congress on Intelligent Control and Automation (WCICA) 3261–3265 (2002). 10.1109/WCICA.2002.1020137

- 8.Nussbaum, P. & Hardeberg, J. Y. Print quality evaluation and applied colour management in coldset offset newspaper print. Color Res. Appl. 37, 82–91 (2012).

- 9.Södergård, C., Raimo, L. & Juuso, A. Inspection of colour printing quality. Int. J. Pattern Recognit. Artif. Intell. 10, 115–128 (1996).

- 10.Zhang, D. & Tang, W. The new testing and standardized solutions of printing quality—PressSIGN intelligent printing production. in IEEE International Conference on Intelligent Control, Automatic Detection and High-End Equipment 181–185 (IEEE, 2012). 10.1109/ICADE.2012.6330123

- 11.Chauveau, J., Rousseau, D. & Chapeau-Blondeau, F. Multifractal analysis of three-dimensional histogram from color images. Chaos Solitons Fractals 43, 57–67 (2010).

- 12.Luo, J. Automatic colour printing inspection by image processing. J. Mater. Process. Technol. 139, 373–378 (2003).

- 13.Kikuchi, H. et al. Color-tone similarity on digital images. in Proceedings of the 2012 Asia Pacific Signal and Information Processing Association Annual Summit and Conference 1–4 (IEEE, 2012).

- 14.Hamdani, H., Septiarini, A., Sunyoto, A., Suyanto, S. & Utaminingrum, F. Detection of oil palm leaf disease based on color histogram and supervised classifier. Optik 245, 167753 (2021).

- 15.Tang, M. Image segmentation technology and its application in digital image processing. in 2020 Int. Conf. Adv. Ambient Comput. Intell. (ICAACI) 158–160 (IEEE, 2020). 10.1109/ICAACI50733.2020.00040

- 16.Zhang, Y., Pu, J. & Liang, L. On-line detection methods for printing image chromatic aberration based on super-pixel. Int. J. Sci. 4, 67–75 (2017).

- 17.Xiong, N. Multicolored Pattern Segmentation and its Application in Color Quality Control (North Carolina State University, 2023).

- 18.Zhou, M., Xu, Z. & Tong, R. K. Y. Superpixel-guided class-level denoising for unsupervised domain adaptive fundus image segmentation without source data. Comput. Biol. Med. 162, 107061 (2023).

- 19.Zhang, M. et al. Saliency detection via local structure propagation. J. Vis. Commun. Image Represent. 52, 131–142 (2018).

- 20.Cheng, M. M., Mitra, N. J., Huang, X., Torr, P. H. & Hu, S. M. Global contrast based salient region detection. IEEE Trans. Pattern Anal. Mach. Intell. 37, 569–582 (2014).

- 21.Romero-Zaliz, R. & Reinoso-Gordo, J. F. An updated review on watershed algorithms. in Soft Comput. Sustain. Sci. 235–258 (2018).

- 22.Shen, J. et al. Real-time superpixel segmentation by DBSCAN clustering algorithm. IEEE Trans. Image Process. 25, 5933–5942 (2016).

- 23.Jia, X. et al. Fast and automatic image segmentation using superpixel-based graph clustering. IEEE Access 8, 211526–211539 (2020).

- 24.Chen, Z., Guo, B., Li, C. & Liu, H. Review on superpixel generation algorithms based on clustering. in 2020 IEEE 3rd International Conference on Information Systems and Computer Aided Education (ICISCAE) 532–537 (IEEE, 2020). 10.1109/ICISCAE51034.2020.9236851

- 25.Sindeev, M., Konushin, A. & Rother, C. Alpha-flow for video matting. in Computer Vision – ACCV 2012: 11th Asian Conference on Computer Vision 438–452 (2012). https://link.springer.com/chapter/10.1007/978-3-642-37431-9_34#citeas

- 26.Stutz, D., Hermans, A. & Leibe, B. Superpixels: An evaluation of the state-of-the-art. Comput. Vis. Image Underst. 166, 1–27 (2018).

- 27.Achanta, R. et al. SLIC superpixels compared to state-of-the-art superpixel methods. IEEE Trans. Pattern Anal. Mach. Intell. 34, 2274–2282 (2012).

- 28.Wang, L. et al. Learning to detect salient objects with image-level supervision. in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 136–145 (2017).

- 29.Yang, C., Zhang, L. & Lu, H. Graph-regularized saliency detection with convex-hull-based center prior. IEEE Signal Process. Lett. 20, 637–640 (2013).

- 30.Xu, X., Xu, S., Jin, L. & Song, E. Characteristic analysis of Otsu threshold and its applications. Pattern Recognit. Lett. 32, 956–961 (2011).

- 31.Bora, D. J., Gupta, A. K. & Khan, F. A. Comparing the performance of L* A* B* and HSV color spaces with respect to color image segmentation. arXiv preprint (2015). 10.48550/arXiv.1506.01472

- 32.Hubbard, E. M., Manohar, S. & Ramachandran, V. S. Contrast affects the strength of synesthetic colors. Cortex 42, 184–194 (2006).

- 33.Shen, J., Chang, S., Wang, H. & Zheng, Z. Optimal illumination for visual enhancement based on color entropy evaluation. Opt. Express 24, 19788–19800 (2016).

- 34.Hill, B., Roger, T. & Vorhagen, F. W. Comparative analysis of the quantization of color spaces on the basis of the CIELAB color-difference formula. ACM Trans. Graph. 16, 109–154 (1997).

- 35.Arbelaez, P., Maire, M., Fowlkes, C. & Malik, J. Contour detection and hierarchical image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 33, 898–916 (2010).

- 36.Li, Z. & Chen, J. Superpixel segmentation using linear spectral clustering. in IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 1356–1363 (2015).

- 37.Neubert, P. & Protzel, P. Compact watershed and preemptive SLIC: On improving trade-offs of superpixel segmentation algorithms. in 22nd International Conference on Pattern Recognition 996–1001 (IEEE, 2014). 10.1109/ICPR.2014.181

- 38.Wang, M. et al. Superpixel segmentation: A benchmark. Signal Process. Image Commun. 56, 28–39 (2017).

- 39.Tanchenko, A. Visual-PSNR measure of image quality. J. Vis. Commun. Image Represent. 25, 874–878 (2014).

- 40.Hore, A. & Ziou, D. Image quality metrics: PSNR vs. SSIM. in 20th International Conference on Pattern Recognition 2366–2369 (IEEE, 2010). 10.1109/ICPR.2010.579
