Abstract

Objective

To test and evaluate software dedicated to the follow-up of oncological CT scans for potential use in the Radiology department.

Materials and methods

In this retrospective study, the baseline and follow-up CT scans of 37 oncology patients were reinterpreted using dedicated software. Baseline target lesions were selected according to the imaging reports available in our PACS (picture archiving and communication system). Follow-up examinations were then independently reassessed with the software. We evaluated the target lesion sums and the tumor response based on RECIST 1.1 (Response Evaluation Criteria in Solid Tumors).

Results

There was no significant difference in the target lesion sums or in the tumor response assessments between the PACS data and the imaging software, and the software neither over- nor underestimated the disease. There was a major discrepancy (progression versus stability) in three cases. For two patients, this discrepancy was related to the evaluation of the response of non-target lesions. In the third patient, it was due to comparison with a previous CT scan rather than with the baseline exam. There was a calculation error in 13 % of the reports, and in 28 % of cases the examination was compared to the previous CT scan rather than to the baseline. Finally, the tumor response was not specified in 43 % of the follow-up reports.

Conclusion

The use of dedicated oncology follow-up software could help reduce interpretation time and limit human errors.

Keywords: Dedicated software, RECIST, Oncology, Follow-up, Tumor assessment

1. Introduction

The advent of cross-sectional imaging in the 1970s made early cancer detection and monitoring feasible. The development of anticancer chemotherapies rapidly reinforced the need for objective information on the disease to manage and treat these patients [1].

The need to standardize criteria for evaluating chemotherapy efficacy and estimating tumor response triggered international collaboration [2,3].

In 1981, the World Health Organization (WHO) published the first draft for estimating tumor burden over time based on morphological criteria. The draft introduced the concepts of the initial CT scan (baseline), measurable and non-measurable lesions, and size thresholds, which allowed conclusions to be drawn about tumor evolution [4]. Because these guidelines were considered imprecise, an International Working Group replaced them in 2000 with the Response Evaluation Criteria in Solid Tumors (RECIST). These updated guidelines defined target lesions (maximum of 10 in total, no more than five per organ) and non-target lesions, and provided specifications on acquisition protocols [5]. The criteria were updated again in 2009 (RECIST 1.1) by the RECIST Working Group, reducing the number of target lesions to a maximum of five, with no more than two per organ, and adding details on the measurement of lymph nodes and on response criteria [6].
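The selection limits quoted above lend themselves to a simple automated check. The sketch below is an illustrative assumption, not any vendor's implementation: it encodes only the total and per-organ limits stated for RECIST 1.0 and RECIST 1.1, does not model measurability thresholds or lymph node rules, and the lesion labels and sizes are hypothetical.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class TargetLesion:
    organ: str           # hypothetical organ label, e.g. "lung"
    diameter_mm: float


# (maximum target lesions in total, maximum per organ), as stated in the text.
LIMITS = {"RECIST 1.0": (10, 5), "RECIST 1.1": (5, 2)}


def selection_violations(lesions, version="RECIST 1.1"):
    """Return rule violations for a baseline target-lesion selection (empty if compliant)."""
    max_total, max_per_organ = LIMITS[version]
    problems = []
    if len(lesions) > max_total:
        problems.append(f"{len(lesions)} target lesions > {max_total} allowed")
    # Count the selected lesions per organ and compare with the per-organ limit.
    for organ, count in sorted(Counter(les.organ for les in lesions).items()):
        if count > max_per_organ:
            problems.append(f"{count} target lesions in {organ} > {max_per_organ} allowed")
    return problems


selection = [TargetLesion("lung", 21), TargetLesion("lung", 15),
             TargetLesion("lung", 12), TargetLesion("adrenal", 18)]
print(selection_violations(selection, "RECIST 1.0"))  # []
print(selection_violations(selection))                # ['3 target lesions in lung > 2 allowed']
```

The same four-lesion selection is compliant under RECIST 1.0 but not under RECIST 1.1, which illustrates why baselines read under the older criteria may need to be re-selected.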

Although RECIST tumor evaluation was validated and is widely used, the criteria remained open to interpretation and raised many questions. Thus, in 2016, the RECIST 1.1 committee published an update and clarification to address these concerns [7]. Nevertheless, the criteria still have some limitations: the subjective choice of target lesions entails high interobserver variability and may account for differing tumor response classifications in up to one third of cases [8]. Tumor evaluation with RECIST criteria is also subject to intraobserver variability [9]. The subjective assessment of the maximum diameter, the sharpness or blurring of tumor boundaries, and the quality of the acquisition and contrast injection are further sources of variability [10]. Finally, the RECIST 1.1 criteria are not suitable for monitoring certain pathologies (mesothelioma, hepatocellular carcinoma, bone metastases, etc.) or for evaluating new local treatments (thermoablation, chemoembolization) and increasingly used systemic treatments (targeted therapies, immunotherapies, etc.) [11,12]. This has led to the development of modified RECIST criteria tailored to each situation.

Lesion measurement remains time-consuming while the radiologist's workload continues to increase [13]. Radiologists can now benefit from the help of trained medical electroradiology (MEM) technologists [14] or from dedicated diagnostic assistance software progressively developed by manufacturers and start-ups. Such software saves interpretation time [15] and reduces interobserver variability [16]. Additionally, these programs increasingly incorporate artificial intelligence [17]. Since the RECIST criteria are used for a large proportion of oncology patients in our institution, we aimed to assess the impact of dedicated software on imaging interpretation.

2. Materials and methods

The institutional review board approved this retrospective study and waived the requirement for informed consent owing to its retrospective nature. This study includes a control group.

2.1. Patient reports

Filtered searches on our radiology information system (RIS) and picture archiving and communication system (PACS) enabled us to select 37 adult patients followed for metastatic solid tumors between April 2016 and May 2021. The selected patients had an initial CT exam (baseline) and at least two follow-up CTs. Baseline was defined as a CT exam performed within the 4 weeks before the first session of chemotherapy treatment.

A resident (1 to 5 years' experience) or a senior radiologist (more than 10 years' experience) wrote the imaging reports according to RECIST 1.1 criteria. At least three target lesions were measured, either manually or with the dedicated Myrian software (Intrasense). Patients presenting a new lesion during follow-up were excluded from the study because, in that case, progression is established by the new lesion, independently of our endpoint, the sum of the target lesions.

2.2. Imaging protocol

All follow-up CT scans were performed in APHM university hospital centers, except for pre-treatment CT exams performed in external centers, which were imported into the PACS. Patients received between 70 cm3 and 120 cm3 of iodinated contrast medium (Omnipaque 350 mg I/ml or Xenetix 350 mg I/ml), except for two scans performed in the context of acute renal failure. All acquisitions analysed had a slice thickness of less than 2 mm.

2.3. Data collection

Age and tumor type were collected from our institution's computerized patient records (Axigate). The dates of the examinations, the number of target lesions, and the sums of the target lesions were extracted from the reports available in our RIS (Xplore, EDL). The tumor response assessment was also collected when specified; when it was not, each follow-up was assigned a response assessment based on the information in the conclusion. No additional measurements were performed on the CT scans, so that the reported measurements could be compared directly with those obtained using the software.

Irregularities were defined as: 1) human calculation errors, 2) reports comparing measurements to a previous CT rather than to the baseline CT, and 3) tumor response not specified in the conclusion. The sums of the target lesions were recalculated for each report. Only follow-ups #2 and #3 were included in the analysis of comparisons to the baseline CT, since at follow-up #1 the previous CT is the baseline itself. Reports were considered non-compliant if they were compared to a previous CT scan, or if the sums of the target lesions used for comparison corresponded to those of a previous scan. Finally, the conclusion was considered non-compliant if the tumor response was not explicitly stated.
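The first two irregularities can be illustrated with a simplified sketch of RECIST 1.1 target-lesion response, assuming sums in millimetres. Partial response (PR) is judged against the baseline sum (decrease of at least 30 %), and progression (PD) against the nadir, i.e. the smallest sum recorded so far including the baseline (increase of at least 20 % and of at least 5 mm). Complete response, non-target lesions, and new lesions are deliberately left out. The function names, the 0.5 mm tolerance for flagging calculation errors, and the example sums are hypothetical.

```python
def calculation_error(reported_sum_mm, diameters_mm, tol_mm=0.5):
    """Irregularity 1: reported sum differs from the recomputed sum (tolerance is an assumption)."""
    return abs(reported_sum_mm - sum(diameters_mm)) > tol_mm


def target_response(current, baseline, nadir):
    """Simplified RECIST 1.1 target-lesion response; complete response is not handled."""
    # PD: increase of at least 5 mm and at least 20 % over the nadir
    # (5 * increase >= nadir is the same as increase / nadir >= 0.20, without float rounding).
    if current - nadir >= 5 and 5 * (current - nadir) >= nadir:
        return "PD"
    # PR: decrease of at least 30 % from the baseline sum.
    if 10 * (baseline - current) >= 3 * baseline:
        return "PR"
    return "SD"


def classify_series(sums):
    """sums[0] is the baseline; each follow-up uses the baseline and the running nadir."""
    baseline = nadir = sums[0]
    responses = []
    for current in sums[1:]:
        responses.append(target_response(current, baseline, nadir))
        nadir = min(nadir, current)  # nadir includes every sum measured so far
    return responses


def classify_vs_previous(sums):
    """Irregularity 2: each follow-up compared only with the immediately preceding scan."""
    return [target_response(cur, prev, prev) for prev, cur in zip(sums, sums[1:])]


print(calculation_error(45, [12, 18, 14]))  # True (recomputed sum is 44 mm)
print(classify_series([50, 56, 61]))        # ['SD', 'PD']
print(classify_vs_previous([50, 56, 61]))   # ['SD', 'SD']
```

In this hypothetical series, the last follow-up is 11 mm (22 %) above the nadir and is therefore progressive, whereas a comparison with the previous scan alone (+5 mm, about +9 %) would report stable disease.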

2.4. Analysis with the imaging software

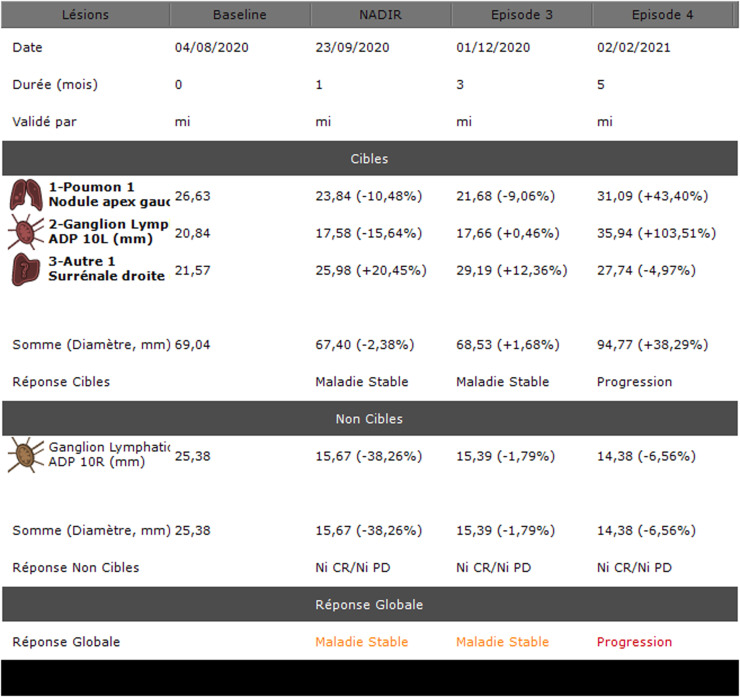

The Intrasense company allowed us to use a demonstration version of Myrian XL Onco version 2.8 on a dedicated console. This oncology follow-up application automatically retrieves the patient's history from the PACS, co-registers the series using 3D elastic registration, and calculates the response to treatment according to the chosen criteria (RECIST, Choi, etc.). An example of tumor response assessment with the software is presented in Fig. 1.

Fig. 1.

Testing and evaluation of software for the follow-up of oncological CT scans in APHM’s Radiology department: Example of a summary table of tumor burden evolution.

Lésions: lesions; Durée (mois): duration (months); Validé par: validated by; Cibles: targets; 1-Poumon 1 Nodule apex gauche: 1-lung 1, left apical nodule; 2-Ganglion Lymph ADP (adénopathie) 10L (mm): 2-lymph node 10L (mm); 3-Autre 1 Surrénale droite: 3-other 1, right adrenal gland; Somme (Diamètre, mm): sum (diameter, mm); Réponse Cibles: target response; Maladie Stable: stable disease; Progression: progressive disease; Ganglion Lymphatic ADP 10R (mm): lymph node 10R (mm); Réponse Non Cibles: non-target response; Réponse Globale: overall response.

All CT scans were exported from the PACS and anonymized in the application. A radiologist with five years' experience was trained by Intrasense staff and then reprocessed each baseline CT exam with the software, selecting the same target lesions and performing nearly identical measurements. All follow-ups were processed under conditions close to routine practice. All target lesions were measured manually. Non-target lesions were labelled or measured manually. The tumor response was computed automatically for target lesions and assessed manually for non-target lesions, which were classified as complete response, unequivocal progression, or neither complete response nor progression.
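How target and non-target assessments combine into the overall response (the "Réponse Globale" row of Fig. 1) can be sketched from the RECIST 1.1 combination rules for patients with target disease. This is a minimal sketch, not Myrian's implementation; the category labels are hypothetical, and the new-lesion flag, which led to exclusion in this study, is included only for completeness.

```python
def overall_response(target, non_target, new_lesion=False):
    """Combine target and non-target responses into an overall time-point response.

    target: "CR", "PR", "SD", "PD" or "NE" (not evaluable)
    non_target: "CR", "NON_CR_NON_PD", "PD" or "NE" (hypothetical labels)
    """
    # Any progression, or any new lesion, makes the overall response PD.
    if new_lesion or target == "PD" or non_target == "PD":
        return "PD"
    # Target CR is only an overall CR if non-target lesions have also disappeared.
    if target == "CR":
        return "CR" if non_target == "CR" else "PR"
    # Non-target lesions are non-PD at this point, so PR and SD carry over unchanged.
    if target in ("PR", "SD"):
        return target
    return "NE"


print(overall_response("SD", "PD"))             # PD
print(overall_response("SD", "NON_CR_NON_PD"))  # SD
print(overall_response("CR", "NON_CR_NON_PD"))  # PR
```

The first call corresponds to a situation such as the first discordant case reported in the Results: stable target lesions, but unequivocal progression of non-target lesions, yield an overall PD.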

2.5. Statistical analysis

To estimate the interexam agreement of target lesion sums (RIS report versus software), the intraclass correlation coefficient (ICC [95 % confidence interval]) was estimated using a two-way mixed-effects, absolute-agreement, single-measure model. To estimate the agreement between manual and software-assisted tumor response assessments, a weighted kappa coefficient was calculated.
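The ICC described above can be sketched as follows. This is a minimal pure-Python version of the single-measure, absolute-agreement ICC, ICC(A,1) in McGraw and Wong's notation, computed from two-way ANOVA mean squares. It is not SPSS's code, it omits the 95 % confidence interval, and the example sums are hypothetical.

```python
def icc_a1(data):
    """Single-measure, absolute-agreement ICC, ICC(A,1).

    data: one row per subject, one column per method, e.g. [RIS_sum, software_sum].
    """
    n, k = len(data), len(data[0])
    grand = sum(sum(row) for row in data) / (n * k)
    row_means = [sum(row) / k for row in data]
    col_means = [sum(row[j] for row in data) / n for j in range(k)]

    ss_rows = k * sum((m - grand) ** 2 for m in row_means)  # between subjects
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)  # between methods (systematic bias)
    ss_total = sum((x - grand) ** 2 for row in data for x in row)
    ss_err = ss_total - ss_rows - ss_cols                   # residual

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_err = ss_err / ((n - 1) * (k - 1))

    # Absolute agreement: the systematic method effect (ms_cols) lowers the ICC.
    return (ms_rows - ms_err) / (ms_rows + (k - 1) * ms_err + k * (ms_cols - ms_err) / n)


# Hypothetical target lesion sums (mm): [RIS report, software]
sums = [[40, 42], [55, 57], [70, 72]]
print(round(icc_a1(sums), 3))  # 0.991
```

Here a constant 2 mm offset between the two methods keeps the ICC just below 1, whereas a consistency-type ICC would ignore such an offset; this is why the absolute-agreement form suits a comparison of two measurement methods.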

Paired t tests (target lesion sums) and paired Wilcoxon tests (tumor response assessment) were used to assess whether one method over- or underestimated the disease compared with the other. For all two-sided analyses, p < 0.05 was considered statistically significant. Statistical analyses were performed using IBM SPSS Statistics 20.0 (IBM Inc, New York, USA), except for the weighted kappa, which was calculated using the irr package of R 4.0 (R Foundation for Statistical Computing, Vienna, Austria).

3. Results

3.1. Study Population

Thirty-seven patients (23 men, 62 %) with a total of 121 CT exams were included in this study. The mean age at the time of the pretreatment exam (baseline CT scan) was 61 ± 11 years (range: 22-79 years). The most represented pathology was bronchial adenocarcinoma (13/37, 35 %) (Table 1).

Table 1.

Testing and evaluation of software for the follow-up of oncological CT scans in APHM’s Radiology department: Patient demographics.

| Characteristics | Value |
|---|---|
| Mean age (years)* | 61 ± 11 (22-79) |
| Gender (%) | |
| Women | 14 (38) |
| Men | 23 (62) |
| Primary neoplasia (%) | |
| Bronchial adenocarcinoma | 13 (35) |
| Bronchial squamous cell carcinoma | 4 (11) |
| Poorly differentiated bronchial carcinoma | 4 (11) |
| Small cell bronchial carcinoma | 3 (8) |
| Clear cell renal carcinoma | 2 (5) |
| Invasive ductal breast carcinoma | 2 (5) |
| Melanoma | 2 (5) |
| Non-small cell bronchial carcinoma | 1 (2.7) |
| Small cell neuroendocrine carcinoma | 1 (2.7) |
| Pulmonary large cell neuroendocrine carcinoma | 1 (2.7) |
| Sarcomatoid lung carcinoma | 1 (2.7) |
| Thymic carcinoma | 1 (2.7) |
| Liposarcoma | 1 (2.7) |
| Nasopharyngeal UCNT | 1 (2.7) |
| Number of target lesions (%) | |
| 3 | 18 (48.6) |
| 4 | 11 (29.7) |
| 5 | 8 (21.6) |
| Number of follow-ups (%) | |
| 2 | 27 (73) |
| 3 | 10 (27) |

* Mean ± standard deviation (range)

Values correspond to the number of patients (n = 37) unless specified. The numbers in parentheses are percentages. UCNT: undifferentiated carcinoma of nasopharyngeal type.

Three target lesions were selected on the baseline CT in almost half of the patients (18/37, 48.6 %). Most of the patients had two follow-up CTs (27/37, 73 %).

3.2. Comparison of target lesion sums at baseline and follow-up CT scans

There was no significant difference between the target lesion sums at baseline CTs collected on RIS and those obtained using the software (p = 0.74). The interexam agreement (ICC) was estimated to be 0.995 [0.99–1.00] for the baseline.

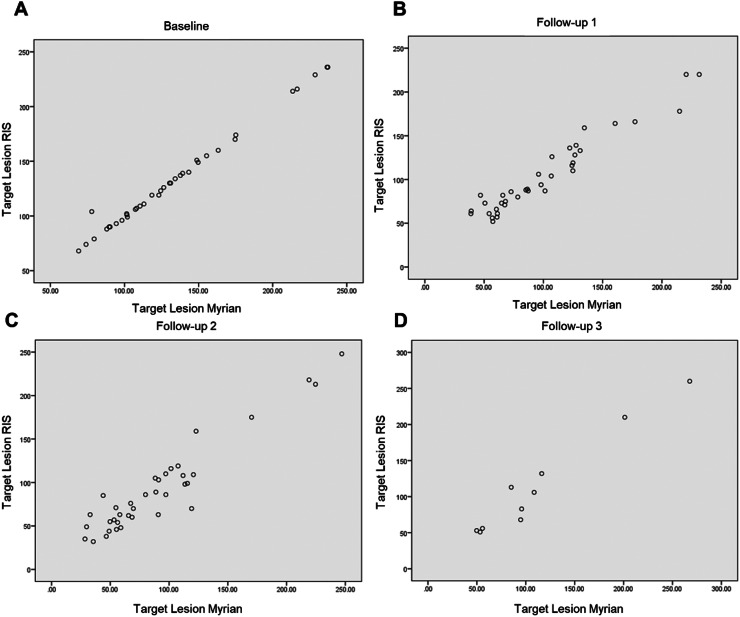

There was no significant difference in target lesion sums between the two methods during follow-ups #1, #2, and #3, with respective p-values of 0.10, 0.62, and 0.94 (Fig. 2).

Fig. 2.

Testing and evaluation of software for the follow-up of oncological CT scans in APHM’s Radiology department: Agreement between the target lesion sums obtained on RIS and with the software at baseline (A) and follow-ups (B, C, and D).

The interexam agreement (ICC) was 0.96 [0.92-0.98] at follow-up #1, 0.95 [0.91-0.97] at follow-up #2, and 0.98 [0.92-1] at follow-up #3.

3.3. Tumor response assessment

There was significant agreement between the tumor responses in the RIS records and those obtained with the software (weighted kappa = 0.64, p < 0.001) (Table 2).

Table 2.

Testing and evaluation of software for the follow-up of oncological CT scans in APHM’s Radiology department: Agreement of tumor response assessment between RIS and the Myrian software (84 CT scans).

| RECIST RIS (rows) \ RECIST Myrian (columns) | PD | SD | PR |
|---|---|---|---|
| PD | 3 | 0 | 1 |
| SD | 2 | 34 | 6 |
| PR | 0 | 8 | 30 |

Weighted kappa = 0.64

PD: progressive disease; SD: stable disease; PR: partial response
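As a consistency check on these counts, the sketch below computes Cohen's weighted kappa directly from the 3 × 3 table, with categories ordered PD, SD, PR. The text does not state which weighting scheme was used; assuming linear weights (which we take to correspond to the "equal" weighting of the irr package), the counts reproduce the reported 0.64, while quadratic weights would give about 0.66. The function name is hypothetical.

```python
# Table 2 counts: rows = RIS report, columns = software; category order PD, SD, PR.
TABLE_2 = [
    [3, 0, 1],
    [2, 34, 6],
    [0, 8, 30],
]


def weighted_kappa(matrix, scheme="linear"):
    """Cohen's weighted kappa for a square contingency table of ordered categories."""
    k = len(matrix)
    n = sum(sum(row) for row in matrix)
    row_tot = [sum(row) for row in matrix]
    col_tot = [sum(matrix[i][j] for i in range(k)) for j in range(k)]

    def weight(i, j):
        # Agreement weight: 1 on the diagonal, decreasing with the distance between categories.
        d = abs(i - j) / (k - 1)
        return 1 - d if scheme == "linear" else 1 - d ** 2

    p_obs = sum(weight(i, j) * matrix[i][j] for i in range(k) for j in range(k)) / n
    p_exp = sum(weight(i, j) * row_tot[i] * col_tot[j]
                for i in range(k) for j in range(k)) / n ** 2
    return (p_obs - p_exp) / (1 - p_exp)


disagreements = sum(TABLE_2[i][j] for i in range(3) for j in range(3) if i != j)
print(disagreements, round(weighted_kappa(TABLE_2), 2))  # 17 0.64
```

The 17 off-diagonal reports (17/84, 20 %) are the discordant assessments discussed below.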

There was major discordance in three cases. In the first case, the patient was classified as having stable disease by manual measurement but as progressive on non-target lesions with the software (progression of non-target lesions >20 % and a trend toward an increase in the sum of target lesions). Conversely, another patient was classified as progressive on non-target lesions with the manual method, but the progression criteria were not met with the imaging software. Finally, one patient was classified as having stable disease by the manual method but progressive disease with the software; this was because the scan had been compared to a previous CT scan rather than to the baseline scan.

3.4. Irregularities

Recalculation of the target lesion sums for each report revealed errors in 13 % of the reports (16/121) (Table 3).

Table 3.

Testing and evaluation of software for the follow-up of oncological CT scans in APHM’s Radiology department: Types of assessment errors.

| Type of error | Number of reports |
|---|---|
| Calculation error | 8 |
| Lymph node < 10 mm short axis not measured | 5 |
| Omission of a target lesion | 3 |
| Total | 16 |

The measurements were compared to the previous scan in 30 % of the cases (11/37) at follow-up #2 and in 20 % (2/10) at follow-up #3.

The tumor response was not explicitly stated in 36 of the 84 follow-up reports (43 %).

4. Discussion

Our study showed no significant difference between the usual interpretation method (manual lesion measurement) and the automatic registration method with the dedicated software Myrian (Intrasense). We overcame the intra- and interobserver variability inherent in the selection of target lesions [18,19] by using the data from the initial CT scans to establish almost identical baseline examinations.

The sums of the target lesions in the follow-up CT scans were similar between the imaging software and the RIS reports (manual measurement), although assessment errors were found in nearly 13 % of the manual reports. Reviewing the reports showed that half of these errors were calculation errors of a few millimeters, probably related to mental calculation of the target lesion sums. In addition, a target lesion measurement was omitted in three reports. We hypothesize that all these errors are due to inattention related to a work environment that is not conducive to concentration [20].

In five reports, target lymph nodes were not measured at follow-up once their short axis had fallen below 10 mm. This reflects a misapplication of the RECIST 1.1 criteria, under which such nodes must still be measured and included in the sum, and may lead to an underestimation of the overall tumor burden [6,21]. It underlines how complex the routine use of RECIST criteria can be for radiologists whose practice is not primarily oncologic.
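The effect of this error on the sum can be sketched with hypothetical values. Under RECIST 1.1, a node selected as a target at baseline keeps contributing its short-axis measurement at every follow-up, even after it has shrunk below 10 mm; dropping it lowers the sum and can turn stable disease into an apparent partial response. The lesion names, measurements, and the simplified 30 % check are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Measurement:
    name: str        # hypothetical lesion label
    value_mm: float  # long axis for non-nodal lesions, short axis for lymph nodes
    is_node: bool


def target_sum(lesions):
    # Correct practice: every baseline target lesion contributes, whatever its size.
    return sum(m.value_mm for m in lesions)


def sum_dropping_small_nodes(lesions):
    # Error pattern reported in Table 3: nodes below 10 mm short axis left out.
    return sum(m.value_mm for m in lesions if not (m.is_node and m.value_mm < 10))


baseline = [Measurement("lung nodule", 20, False), Measurement("node 10R", 18, True)]
follow_up = [Measurement("lung nodule", 22, False), Measurement("node 10R", 8, True)]

base = target_sum(baseline)  # 38 mm
for label, s in [("correct", target_sum(follow_up)),
                 ("node omitted", sum_dropping_small_nodes(follow_up))]:
    change = 100 * (s - base) / base
    # Simplified check: a decrease of at least 30 % from baseline suggests PR.
    print(label, s, f"{change:.0f} %", "PR" if change <= -30 else "not PR")
```

With the node included, the sum falls by about 21 % (no partial response); omitting the 8 mm node makes it fall by about 42 %, an apparent partial response.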

Comparison of manual measurement and the software showed a difference in tumor response assessment in 20 % (17/84) of the reports. This was mainly because some follow-ups were compared to a previous examination rather than to the baseline CT or the nadir. In other cases, the difference in classification was due to measurement variability.

The use of dedicated software can provide a better overview of the evolution of non-target lesions. It is recommended not to measure non-target lesions, since they are often ill-defined, infiltrative, or consist of effusions. However, for nodular lesions or lymph nodes, measurements are more reliable than subjective assessment. This is particularly true when concluding on unequivocal progression, which can then be defined in the same way as for target lesions [22].

The use of dedicated software for oncologic follow-up has many advantages. It takes into account the dimensional evolution of non-target lesions, providing additional information for a more accurate assessment of tumor burden. In this study, we observed two cases in which the evolution of non-target lesions could play a discriminating role between stability and progression.

Dedicated software reduces interpretation time and decreases the risk of human error. Myrian, which automates baseline retrieval, slice registration, and tumor response assessment, has been shown to reduce reading time by an average of 63.4 % and 36.1 % for the assessment of lung and liver lesions, respectively [15].

Dedicated software can allow junior radiologists and residents to interpret an examination according to the chosen protocol (RECIST, mRECIST, irRECIST), especially since such protocols are likely to multiply and may come to integrate other imaging techniques such as MRI and nuclear medicine [12]. It presents the results in an easy-to-interpret format, including tables and curves, which standardizes oncology reports and facilitates communication between radiologists and oncologists. The software could be learned in less than 30 min of training and can interact with the RIS and PACS. These tools also support new advances such as radiomics, which has the potential to change practice in oncology.

This study had several limitations. First, the manual measurements were performed by a single radiologist and were not blinded. Second, the small number of patients was probably insufficient to reveal a subtle difference between the two methods. Third, in routine practice, most scans are interpreted by a different radiologist from one follow-up to the next, which can lead to an over- or underestimation of the tumor response [23]. Fourth, longer follow-up would be needed to assess tumor response. Finally, volume measurements were not assessed, since they are still not performed routinely.

5. Conclusion

The RECIST 1.1 criteria are progressively being modified and adapted to the various types of cancers and cancer treatments. Whether in clinical research or in routine practice, radiologists can use software dedicated to oncology follow-up that allows them to work in a standardized environment. Its ergonomics save time when interpreting the ever-increasing number of examinations. It speeds up the measurement of target and non-target lesions, allowing radiologists to focus on detecting new lesions, and limits human error in tasks that are now automated. The use of these tools will be further reinforced by the development of dedicated programs (cerebral perfusion, hepatic or vascular segmentation, etc.) and diagnostic aids (lesion detection and characterization).

Funding

This research did not receive any specific grant from funding agencies in the public, commercial, or not-for-profit sectors.

Data statement

The data underlying this article will be shared on reasonable request to the corresponding authors.

Ethical Statements

This retrospective study was conducted at La Timone Hospital (Assistance Publique Hôpitaux de Marseille, Marseille, France) and complied with the General Data Protection Regulation (GDPR) and the French MR-004 reference methodology.

All patients attending our university hospital are informed that imaging data may be used for scientific purposes. This information is displayed in the department's waiting room and included in all radiological reports. Patients have the right to object to the use of their data. Since these data are obtained in routine medical practice in our center, no dedicated consent form was needed.

CRediT authorship contribution statement

Mathias Illy: Investigation, Writing – original draft, Visualization. Axel Bartoli: Investigation. Julien Mancini: Formal analysis. Florence Duffaud: Validation. Vincent Vidal: Conceptualization, Validation, Resources, Supervision. Farouk Tradi: Conceptualization, Methodology.

Declaration of competing interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Acknowledgements

We acknowledge Sabrina Murgan PhD, medical writer at the Radiology Department of the Marseille university hospital (APHM) for writing support.

References

- 1. World Health Organization. WHO handbook for reporting results of cancer treatment. WHO offset publication no. 48. Geneva, Switzerland: World Health Organization; 1979. Available at: https://apps.who.int/iris/bitstream/handle/10665/37200/WHO_OFFSET_48.pdf?sequence=1&isAllowed=y

- 2. Tonkin K, Tritchler D, Tannock I. Criteria of tumor response used in clinical trials of chemotherapy. J Clin Oncol. 1985;3(6):870-5. doi: 10.1200/JCO.1985.3.6.870.

- 3. Folio LR, Nelson CJ, Benjamin M, Ran A, Engelhard G, Bluemke DA. Quantitative radiology reporting in oncology: survey of oncologists and radiologists. Am J Roentgenol. 2015;205(3):W233-43. doi: 10.2214/AJR.14.14054.

- 4. Miller AB, Hoogstraten B, Staquet M, Winkler A. Reporting results of cancer treatment. Cancer. 1981;47(1):207-14. doi: 10.1002/1097-0142(19810101)47:1<207::aid-cncr2820470134>3.0.co;2-6.

- 5. Therasse P, Arbuck SG, Eisenhauer EA, Wanders J, Kaplan RS, Rubinstein L, et al. New guidelines to evaluate the response to treatment in solid tumors. J Natl Cancer Inst. 2000;92(3):205-16. doi: 10.1093/jnci/92.3.205.

- 6. Eisenhauer EA, Therasse P, Bogaerts J, Schwartz LH, Sargent D, Ford R, et al. New response evaluation criteria in solid tumours: revised RECIST guideline (version 1.1). Eur J Cancer. 2009;45(2):228-47. doi: 10.1016/j.ejca.2008.10.026.

- 7. Schwartz LH, Litière S, de Vries E, Ford R, Gwyther S, Mandrekar S, et al. RECIST 1.1 – Update and clarification: from the RECIST committee. Eur J Cancer. 2016;62:132-7. doi: 10.1016/j.ejca.2016.03.081.

- 8. Zimmermann M, Kuhl CK, Engelke H, Bettermann G, Keil S. Factors that drive heterogeneity of response-to-treatment of different metastatic deposits within the same patients as measured by RECIST 1.1 analyses. Acad Radiol. 2021;28(8):e235-e239. doi: 10.1016/j.acra.2020.05.029.

- 9. Suzuki C, Torkzad MR, Jacobsson H, Åström G, Sundin A, Hatschek T, et al. Interobserver and intraobserver variability in the response evaluation of cancer therapy according to RECIST and WHO-criteria. Acta Oncol. 2010;49(4):509-14. doi: 10.3109/02841861003705794.

- 10. Thiesse P, Ollivier L, Di Stefano-Louineau D, Négrier S, Savary J, Pignard K, et al. Response rate accuracy in oncology trials: reasons for interobserver variability. Groupe Français d’Immunothérapie of the Fédération nationale des centres de lutte contre le cancer. J Clin Oncol. 1997;15(12):3507-14. doi: 10.1200/JCO.1997.15.12.3507.

- 11. Grimaldi S, Terroir M, Caramella C. Advances in oncological treatment: limitations of RECIST 1.1 criteria. Q J Nucl Med Mol Imaging. 2018;62(2):129-39. doi: 10.23736/S1824-4785.17.03038-2.

- 12. Ko C-C, Yeh L-R, Kuo Y-T, Chen J-H. Imaging biomarkers for evaluating tumor response: RECIST and beyond. Biomark Res. 2021;9(1):52. doi: 10.1186/s40364-021-00306-8.

- 13. Chokshi FH, Hughes DR, Wang JM, Mullins ME, Hawkins CM, Duszak R. Diagnostic radiology resident and fellow workloads: a 12-year longitudinal trend analysis using national Medicare aggregate claims data. J Am Coll Radiol. 2015;12(7):664-9. doi: 10.1016/j.jacr.2015.02.009.

- 14. Beaumont H, Bertrand AS, Klifa C, Patriti S, Cippolini S, Lovera C, et al. Radiology workflow for RECIST assessment in clinical trials: can we reconcile time-efficiency and quality? Eur J Radiol. 2019;118:257-63. doi: 10.1016/j.ejrad.2019.07.030.

- 15. René A, Aufort S, Mohamed S, Daures J, Chemouny S, Bonnel C, et al. How using dedicated software can improve RECIST readings. Informatics. 2014;1(2):160-73. doi: 10.3390/informatics1020160.

- 16. Folio LR, Sandouk A, Huang J, Solomon JM, Apolo AB. Consistency and efficiency of CT analysis of metastatic disease: semiautomated lesion management application within a PACS. Am J Roentgenol. 2013;201(3):618-25. doi: 10.2214/AJR.12.10136.

- 17. Tang Y, Yan K, Xiao J, Summers RM. One click lesion RECIST measurement and segmentation on CT scans. In: Martel AL, Abolmaesumi P, Stoyanov D, Mateus D, Zuluaga MA, Zhou SK, et al., editors. Medical Image Computing and Computer Assisted Intervention – MICCAI 2020. Lecture Notes in Computer Science. Cham: Springer International Publishing; 2020. p. 573-83.

- 18. Schwartz LH, Mazumdar M, Brown W, Smith A, Panicek DM. Variability in response assessment in solid tumors: effect of number of lesions chosen for measurement. Clin Cancer Res. 2003;9(12):4318-23. Available at: https://clincancerres.aacrjournals.org/content/9/12/4318

- 19. Kuhl CK, Alparslan Y, Schmoee J, Sequeira B, Keulers A, Brümmendorf TH, et al. Validity of RECIST version 1.1 for response assessment in metastatic cancer: a prospective, multireader study. Radiology. 2019;290(2):349-56. doi: 10.1148/radiol.2018180648.

- 20. Bartoli A. Avant l'IA: en quoi des mesures d'ergonomie avancées et les techniques de lecture rapide peuvent améliorer les performances diagnostiques humaines en imagerie oncologique [Before AI: how advanced ergonomic measures and speed-reading techniques can improve human diagnostic performance in oncologic imaging]. Medical thesis dissertation. Marseille: Aix Marseille Université; 2019. Available at: https://dumas.ccsd.cnrs.fr/dumas-02361246

- 21. Schwartz LH, Bogaerts J, Ford R, Shankar L, Therasse P, Gwyther S, et al. Evaluation of lymph nodes with RECIST 1.1. Eur J Cancer. 2009;45(2):261-7. doi: 10.1016/j.ejca.2008.10.028.

- 22. Morse B, Jeong D, Ihnat G, Silva AC. Pearls and pitfalls of response evaluation criteria in solid tumors (RECIST) v1.1 non-target lesion assessment. Abdom Radiol. 2019;44(2):766-74. doi: 10.1007/s00261-018-1752-4.

- 23. Yoon SH, Kim KW, Goo JM, Kim D-W, Hahn S. Observer variability in RECIST-based tumour burden measurements: a meta-analysis. Eur J Cancer. 2016;53:5-15. doi: 10.1016/j.ejca.2015.10.014.