Abstract

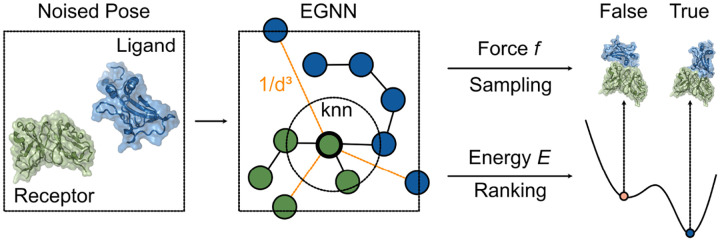

Diffusion models have shown promise in addressing the protein docking problem. Traditionally, these models are used solely for sampling docked poses, with a separate confidence model for ranking. We introduce DFMDock (Denoising Force Matching Dock), a diffusion model that unifies sampling and ranking within a single framework. DFMDock features two output heads: one for predicting forces and the other for predicting energies. The forces are trained using a denoising force matching objective, while the energy gradients are trained to align with the forces. This design enables our model to sample using the predicted forces and rank poses using the predicted energies, thereby eliminating the need for an additional confidence model. Our approach outperforms the previous diffusion model for protein docking, DiffDock-PP, with a sampling success rate of 44% compared to its 8%, and a Top-1 ranking success rate of 16% compared to 0% on the Docking Benchmark 5.5 test set. In cases where decoys are successfully sampled, the DFMDock Energy forms a binding funnel similar to that of the physics-based Rosetta Energy, suggesting that DFMDock can capture the underlying energy landscape.

1. Introduction

1.1. Classical docking methods

Protein-protein docking predicts the structure of a protein complex from the structures of its individual unbound partners [1]. Classical methods involve two key components: (1) sampling algorithms, such as exhaustive global searches, local shape matching, and Monte Carlo algorithms, which generate candidate docked structures, and (2) scoring functions, which evaluate these structures based on physical energy, structural compatibility, or empirical data [2]. An alternative approach is template-based docking, which leverages sequence similarity and evolutionary conservation [3]. However, these methods are time-consuming due to the extensive search and evaluation processes involved. Hence, this work aims to develop a fast and accurate deep-learning method for sampling and scoring protein complexes.

1.2. Related work

Co-folding models.

Co-folding models, which predict protein complex structure from sequences, have emerged as powerful tools for addressing protein docking. By leveraging large datasets of protein sequences [4] and structures [5], these models have shown remarkable accuracy in predicting protein complex structures. AlphaFold2 [6] and RoseTTAFold [7] marked a significant breakthrough in protein structure prediction. Although both were originally developed for monomer structure prediction, multimer extensions were released soon after, making these models the preferred approach for most protein complex predictions. However, challenges remain, such as time-consuming multiple sequence alignment (MSA) searches and lower accuracy in predicting antibody-antigen interactions [8]. Recently, AlphaFold3 [9] replaced the previous structure module with a diffusion module and expanded its capabilities to predict interactions not only between proteins but also with DNA, RNA, and small molecules. Nevertheless, AlphaFold3 still fails in 40% of antibody-antigen cases.

Regression-based models.

Unlike co-folding models, regression-based models generally take the individual protein structures as input, either in 3D or as distance matrices, without relying on MSAs. EquiDock [10] was the first model to apply equivariant neural networks to rigid protein docking. While its theoretical framework is robust, its success rate is lower than those of traditional and co-folding models. Following a similar approach, ElliDock [11] introduced elliptic-paraboloid interface representations but did not significantly improve performance. In contrast, GeoDock [12] and DockGPT [13] adopted architectures resembling AlphaFold2, using individual protein structures without MSAs while allowing flexible backbones. Although they outperform EquiDock, these methods still underperform co-folding approaches. The limitations of regression-based models are that (1) they generate only a single prediction per model, and (2) they are less accurate than co-folding models with MSAs at predicting protein interactions beyond the training data.

Diffusion models.

Diffusion models [14–16] have been applied to protein docking [17]. Unlike regression-based models, DiffDock [18] reformulates docking as a generative process, training the model through denoising score matching [19] on the translation, rotation, and torsion spaces of small molecules. DiffDock-PP [20] adapts DiffDock for protein-protein docking and diffuses only along the translation and rotation spaces (rigid docking). DiffMaSIF [21] follows a similar framework but incorporates additional protein interface embeddings. LatentDock [22] applies diffusion in a latent space, first training a variational autoencoder [23] and then diffusing over the encoder's outputs, akin to Stable Diffusion [24]. All these models comprise a sampling model, which generates diverse poses through reverse diffusion steps, and a confidence model, which ranks these poses by their confidence scores.

Energy-based models.

Energy-based models [25] train neural networks to approximate the underlying energy function of the training data. DockGame [26] introduces a framework that trains energy functions either supervised by physics-based models or self-supervised through denoising score matching. EBMDock [27] employs a statistical potential as its energy function and uses Langevin dynamics to sample docking poses. Arts et al. [28] developed diffusion model-based force fields for coarse-grained molecular dynamics by parameterizing the energy of an atomic system and training the gradient of the energy to match the denoising force. With this learned force field, they can both sample from the distribution and perform molecular dynamics simulations. This approach rests on the idea that a diffusion model learns the underlying training data distribution, which, if well approximated, relates to the energy of the generated samples. DSMBind [29] adopts a similar framework for protein-protein interactions, demonstrating that the learned energy function correlates more strongly with binding energy than previous methods.

Building on these approaches, we propose DFMDock, a diffusion generative model for protein docking. To our knowledge, it is the first model to integrate sampling and ranking within a single framework, utilizing forces and energies learned from diffusion models. Our results show that DFMDock outperforms DiffDock-PP in both sampling and Top-1 ranking success rates. Furthermore, the learned energy function exhibits a binding funnel similar to the physics-based Rosetta energy function [30], suggesting that DFMDock can capture the underlying energy landscape for some protein-protein interactions.

2. Methods

2.1. Model

According to statistical physics, a docking pose is a random state $\mathbf{x}$ sampled from the Boltzmann distribution $p(\mathbf{x}) = \frac{1}{Z}\exp\left(-\frac{E(\mathbf{x})}{k_B T}\right)$, where $E$ is the energy, $k_B$ is the Boltzmann constant, $T$ is the temperature, and $Z$ is the partition function. For rigid docking between a “receptor” protein and a “ligand” protein, the search space spans all possible translations and rotations of the ligand, with the receptor fixed. The per-residue forces on the ligand are given by:

$$
\mathbf{F}_i = -\nabla_{\mathbf{x}_i} E(\mathbf{x}) = k_B T\, \nabla_{\mathbf{x}_i} \log p(\mathbf{x}) \tag{1}
$$

where $\mathbf{x} = \{\mathbf{x}_i\}$ are the coordinates of the ligand residues relative to their center. Our goal is to learn $\mathbf{F}_i$ and $E$, using $\mathbf{F}_i$ for sampling docking poses and $E$ for ranking.
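Taking the logarithm of the Boltzmann distribution makes explicit why the partition function drops out of the force: because $Z$ does not depend on the coordinates, the force on each ligand residue is proportional to the gradient of the log-density (the score), which is the quantity that score-based diffusion models learn to approximate at each noise level:

$$
\log p(\mathbf{x}) = -\frac{E(\mathbf{x})}{k_B T} - \log Z
\quad\Longrightarrow\quad
\nabla_{\mathbf{x}_i} \log p(\mathbf{x}) = -\frac{\nabla_{\mathbf{x}_i} E(\mathbf{x})}{k_B T}
\quad\Longrightarrow\quad
-\nabla_{\mathbf{x}_i} E(\mathbf{x}) = k_B T\, \nabla_{\mathbf{x}_i} \log p(\mathbf{x})
$$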

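We use an equivariant graph neural network (EGNN) [31] to predict the energy and the forces on each residue:

$$
\mathbf{h}', \mathbf{x}' = \mathrm{EGNN}(\mathbf{h}, \mathbf{x}, \mathbf{e}) \tag{2}
$$

$\mathbf{h}$ represents the node embeddings, which at the input concatenate the amino acid sequence one-hot encoding with the ESM2 (650M) embeddings [32]. $\mathbf{x}$ is the set of coordinates, and $\mathbf{e}$ is an edge embedding of trRosetta [33] geometry and relative positional encoding [34]. To balance short- and long-range interactions, we construct graphs of 20 nearest-neighbor edges and 40 edges selected randomly using an inverse cubic distance weighting [35]. The predicted energy $E$ is computed as the average output of a multi-layer perceptron (MLP) that takes as input the concatenated node representations of ligand residue $i$ ($\mathbf{h}'_i$) and receptor residue $j$ ($\mathbf{h}'_j$), where the distance between them, $d_{ij}$, is within a cutoff distance $d_c$:

$$
E = \frac{1}{N_p} \sum_{i \in \mathcal{L}} \sum_{\substack{j \in \mathcal{R} \\ d_{ij} < d_c}} \mathrm{MLP}\left([\mathbf{h}'_i \,\|\, \mathbf{h}'_j]\right) \tag{3}
$$

where $\mathcal{L}$ and $\mathcal{R}$ are the ligand and receptor residues, $[\cdot \,\|\, \cdot]$ denotes concatenation, and $N_p$ is the number of residue pairs within the cutoff distance. The predicted forces on each ligand residue are derived as the displacement vectors between the input coordinates and the updated coordinates:

$$
\mathbf{F}_i = \mathbf{x}'_i - \mathbf{x}_i \tag{4}
$$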
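The graph construction and the readouts in Eqs. (3) and (4) can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the DFMDock implementation: the function names, the 10 Å cutoff, the toy two-layer MLP with random weights, returning zero energy when no pair lies within the cutoff, and drawing the random neighbors only from residues outside the k-nearest-neighbor set are all hypothetical choices.

```python
import numpy as np


def build_edges(x, k_nn=20, k_rand=40, rng=None):
    """Directed edges (i, j): the k_nn nearest neighbours of each residue plus up
    to k_rand further neighbours drawn without replacement with p ~ 1 / d**3."""
    rng = np.random.default_rng(0) if rng is None else rng
    d = np.linalg.norm(x[:, None, :] - x[None, :, :], axis=-1)
    edges = []
    for i in range(len(x)):
        order = np.argsort(d[i])
        order = order[order != i]                  # drop the self-edge
        knn, rest = order[:k_nn], order[k_nn:]
        picked = np.empty(0, dtype=int)
        if k_rand > 0 and len(rest) > 0:
            w = 1.0 / d[i, rest] ** 3              # inverse cubic distance weighting
            picked = rng.choice(rest, size=min(k_rand, len(rest)),
                                replace=False, p=w / w.sum())
        edges.extend((i, int(j)) for j in np.concatenate([knn, picked]))
    return np.array(edges)


def make_mlp(d_in, d_hidden=32, seed=0):
    """Hypothetical two-layer ReLU MLP with random weights (stand-in for a trained head)."""
    rng = np.random.default_rng(seed)
    w1 = rng.normal(size=(d_in, d_hidden)) / np.sqrt(d_in)
    w2 = rng.normal(size=(d_hidden, 1)) / np.sqrt(d_hidden)
    return lambda z: np.maximum(z @ w1, 0.0) @ w2


def interface_energy(h_lig, h_rec, x_lig, x_rec, mlp, cutoff=10.0):
    """Eq. (3): mean MLP output over ligand-receptor pairs closer than the cutoff."""
    d = np.linalg.norm(x_lig[:, None, :] - x_rec[None, :, :], axis=-1)
    i, j = np.nonzero(d < cutoff)
    if len(i) == 0:                                # no interface pairs: assumed E = 0
        return 0.0
    pairs = np.concatenate([h_lig[i], h_rec[j]], axis=-1)
    return float(np.mean(mlp(pairs)))


def displacement_forces(x_in, x_out):
    """Eq. (4): per-residue force = updated minus input ligand coordinates."""
    return x_out - x_in


# Toy usage with random embeddings and coordinates.
rng = np.random.default_rng(1)
x_lig = rng.normal(scale=8.0, size=(50, 3))
x_rec = rng.normal(scale=8.0, size=(60, 3)) + np.array([12.0, 0.0, 0.0])
h_lig, h_rec = rng.normal(size=(50, 16)), rng.normal(size=(60, 16))
energy = interface_energy(h_lig, h_rec, x_lig, x_rec, make_mlp(d_in=32))
edges = build_edges(np.vstack([x_lig, x_rec]))
print(edges.shape)  # (6600, 2): 110 residues x (20 k-NN + 40 random) edges
```

Averaging over interface pairs, rather than summing, keeps the energy scale roughly independent of interface size; the normalization by $N_p$ in Eq. (3) plays the same role.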

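The translational force on the ligand is obtained by averaging the per-residue forces $\mathbf{F}_i$ over the $N_L$ ligand residues: $\mathbf{F}_{\text{tr}} = \frac{1}{N_L}\sum_{i=1}^{N_L} \mathbf{F}_i$. In the Appendix, we show that the gradient of the energy with respect to a rotation vector $\boldsymbol{\theta}$, averaged over residues, is given by $\frac{1}{N_L}\nabla_{\boldsymbol{\theta}} E = \frac{1}{N_L}\sum_{i=1}^{N_L} \mathbf{x}_i \times \nabla_{\mathbf{x}_i} E$, where $\mathbf{x}_i$ is the position of residue $i$ relative to the ligand center. Since $\mathbf{F}_i = -\nabla_{\mathbf{x}_i} E$, the rotational force on the ligand is: $\mathbf{F}_{\text{rot}} = \frac{1}{N_L}\sum_{i=1}^{N_L} \mathbf{x}_i \times \mathbf{F}_i$.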
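The two averages above take only a few lines of NumPy. This is an illustrative sketch, not the released code; the function name is hypothetical, and the re-centering step is included only so the function also accepts uncentered coordinates (for coordinates already relative to the ligand center it is a no-op).

```python
import numpy as np


def rigid_body_forces(x, f):
    """x: (N, 3) ligand residue coordinates; f: (N, 3) per-residue forces.
    Returns the translational and rotational forces (F_tr, F_rot)."""
    x_c = x - x.mean(axis=0)                  # positions relative to the ligand center
    f_tr = f.mean(axis=0)                     # F_tr = mean_i F_i
    f_rot = np.cross(x_c, f).mean(axis=0)     # F_rot = mean_i x_i x F_i
    return f_tr, f_rot


# A uniform force field pushes the ligand without turning it.
rng = np.random.default_rng(0)
x = rng.normal(size=(40, 3))
f_tr, f_rot = rigid_body_forces(x, np.tile([1.0, -2.0, 0.5], (40, 1)))
```

Because the coordinates are centered, $\sum_i \mathbf{x}_i = \mathbf{0}$, so any uniform force contributes nothing to $\mathbf{F}_{\text{rot}}$; conversely, a purely rotational field $\mathbf{F}_i = \boldsymbol{\omega} \times \mathbf{x}_i$ averages to zero translational force.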

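To improve numerical stability [36], we normalize the translational force $\mathbf{F}_{\text{tr}}$ and rotational force $\mathbf{F}_{\text{rot}}$ and use two MLPs that take the unnormalized magnitude and timestep $t$ as input to learn the scaling factors:

$$
\hat{\mathbf{F}}_{\text{tr}} = \frac{\mathbf{F}_{\text{tr}}}{\|\mathbf{F}_{\text{tr}}\|}\, \mathrm{MLP}_{\text{tr}}\left(\|\mathbf{F}_{\text{tr}}\|, t\right) \tag{5}
$$

$$
\hat{\mathbf{F}}_{\text{rot}} = \frac{\mathbf{F}_{\text{rot}}}{\|\mathbf{F}_{\text{rot}}\|}\, \mathrm{MLP}_{\text{rot}}\left(\|\mathbf{F}_{\text{rot}}\|, t\right) \tag{6}
$$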
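The normalize-then-rescale step in Eqs. (5) and (6) can be sketched as below. The architecture of the two scale MLPs is not specified here, so the two-layer `tanh` network with random weights and the `softplus` output (used to keep the learned magnitude positive) are assumptions for illustration; `eps` is a hypothetical guard against division by zero for a vanishing force.

```python
import numpy as np


def softplus(z):
    """Numerically stable log(1 + exp(z))."""
    return np.logaddexp(0.0, z)


def make_scale_mlp(d_hidden=16, seed=0):
    """Hypothetical scale head: maps (|F|, t) to a positive scalar magnitude."""
    rng = np.random.default_rng(seed)
    w1 = rng.normal(size=(2, d_hidden))
    w2 = rng.normal(size=(d_hidden,)) / np.sqrt(d_hidden)
    return lambda mag, t: float(softplus(np.tanh(np.array([mag, t]) @ w1) @ w2))


def rescale_force(f, t, scale_mlp, eps=1e-8):
    """Keep the direction of f, replace its magnitude with a learned one."""
    mag = float(np.linalg.norm(f))
    return f / (mag + eps) * scale_mlp(mag, t)


scale_tr = make_scale_mlp(seed=0)   # one head for translation...
scale_rot = make_scale_mlp(seed=1)  # ...and a separate one for rotation
f_hat = rescale_force(np.array([3.0, 4.0, 0.0]), t=0.5, scale_mlp=scale_tr)
```

Decoupling direction from magnitude means the EGNN only has to get the direction right, while the scale head can absorb the large variation in force magnitude across noise levels.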

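We train the model using denoising force matching for both translation and rotation:

$$
\mathcal{L}_{\text{tr}} = \mathbb{E}_{t,\,\boldsymbol{\tau}_0,\,\boldsymbol{\tau}_t}\left[\left\| \hat{\mathbf{F}}_{\text{tr}} - \nabla_{\boldsymbol{\tau}_t} \log p_t(\boldsymbol{\tau}_t \mid \boldsymbol{\tau}_0) \right\|^2\right] \tag{7}
$$

$$
\mathcal{L}_{\text{rot}} = \mathbb{E}_{t,\,\mathbf{R}_0,\,\mathbf{R}_t}\left[\left\| \hat{\mathbf{F}}_{\text{rot}} - \nabla_{\mathbf{R}_t} \log p_t(\mathbf{R}_t \mid \mathbf{R}_0) \right\|^2\right] \tag{8}
$$

where $\hat{\mathbf{F}}_{\text{tr}}$ and $\hat{\mathbf{F}}_{\text{rot}}$ are the rescaled predicted translational and rotational forces, $p_t(\boldsymbol{\tau}_t \mid \boldsymbol{\tau}_0)$ denotes the conditional probability distribution of the noised translation $\boldsymbol{\tau}_t$ given the true translation $\boldsymbol{\tau}_0$, modeled as a Gaussian distribution in $\mathbb{R}^3$, and $p_t(\mathbf{R}_t \mid \mathbf{R}_0)$ represents the conditional probability distribution of the noised rotation $\mathbf{R}_t$ given the true rotation $\mathbf{R}_0$, modeled as an isotropic Gaussian distribution on SO(3) [37–39].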
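For the translational term in Eq. (7), the Gaussian conditional has a closed-form score, so the regression target is cheap to compute. A minimal sketch, assuming $\boldsymbol{\tau}_t = \boldsymbol{\tau}_0 + \sigma_t \boldsymbol{\epsilon}$ with $\boldsymbol{\epsilon} \sim \mathcal{N}(\mathbf{0}, \mathbf{I})$, a single noise scale `sigma` standing in for the (unspecified) schedule at timestep $t$, and $k_B T = 1$ so that force and score coincide. The function names are hypothetical. The IGSO(3) score needed for Eq. (8) is usually evaluated through a truncated series expansion of the density and is omitted here.

```python
import numpy as np


def translation_force_target(tau_t, tau_0, sigma):
    """Score of N(tau_t; tau_0, sigma^2 I) w.r.t. tau_t; it points back toward tau_0."""
    return -(tau_t - tau_0) / sigma**2


def translation_dfm_loss(f_pred, tau_t, tau_0, sigma):
    """Squared error between a predicted translational force and the denoising target."""
    return float(np.sum((f_pred - translation_force_target(tau_t, tau_0, sigma)) ** 2))


rng = np.random.default_rng(0)
tau_0 = np.zeros(3)                           # true ligand translation
sigma = 5.0                                   # hypothetical noise scale at timestep t
tau_t = tau_0 + sigma * rng.normal(size=3)    # noised translation
target = translation_force_target(tau_t, tau_0, sigma)
```

Since $\boldsymbol{\tau}_t - \boldsymbol{\tau}_0 = \sigma_t\boldsymbol{\epsilon}$, the target equals $-\boldsymbol{\epsilon}/\sigma_t$, which is why score-matching implementations often predict the noise and rescale.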

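To train the energy function for ranking, the energy conservation loss [40] is calculated as the mean squared error between the predicted forces and the negative gradient of the predicted energy with respect to the coordinates of the $N_L$ ligand residues:

$$
\mathcal{L}_{\text{EC}} = \frac{1}{N_L} \sum_{i=1}^{N_L} \left\| \mathbf{F}_i + \nabla_{\mathbf{x}_i} E \right\|^2 \tag{9}
$$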
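Eq. (9) only needs the gradient of the predicted energy with respect to the ligand coordinates. In practice this would come from automatic differentiation of the network; to keep the sketch dependency-free, the version below substitutes central finite differences (a swapped-in technique, not what a trained model would use) and checks the loss on a toy harmonic energy, for which the conservative force is exactly $-\mathbf{x}$. The function names are hypothetical.

```python
import numpy as np


def numerical_grad(energy, x, h=1e-5):
    """Central-difference gradient of a scalar energy w.r.t. (N, 3) coordinates.
    A real model would use automatic differentiation instead."""
    g = np.zeros_like(x)
    for idx in np.ndindex(*x.shape):
        step = np.zeros_like(x)
        step[idx] = h
        g[idx] = (energy(x + step) - energy(x - step)) / (2 * h)
    return g


def conservation_loss(forces, energy, x):
    """Mean over residues of ||F_i + dE/dx_i||^2, i.e. the MSE between F and -grad E."""
    g = numerical_grad(energy, x)
    return float(np.mean(np.sum((forces + g) ** 2, axis=-1)))


x = np.random.default_rng(0).normal(size=(10, 3))
harmonic = lambda z: 0.5 * np.sum(z ** 2)     # toy energy; its gradient is z itself
loss_conservative = conservation_loss(-x, harmonic, x)               # close to 0
loss_zero_force = conservation_loss(np.zeros_like(x), harmonic, x)   # mean |x_i|^2
```

Driving this loss toward zero makes the force head and the energy head mutually consistent, which is what allows the energy trained alongside the sampler to be used for ranking.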

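To ensure that the global energy minimum aligns with the ground truth [41, 42], the energy contrastive loss is defined as:

$$
\mathcal{L}_{\text{CL}} = -\log \frac{\exp(-E_{+})}{\exp(-E_{+}) + \exp(-E_{-})} \tag{10}
$$

where $E_{+}$ and $E_{-}$ are the energies of the ground truth and noised structures, respectively. The final loss function combines these components:

$$
\mathcal{L} = \mathcal{L}_{\text{tr}} + \mathcal{L}_{\text{rot}} + \mathcal{L}_{\text{EC}} + \mathcal{L}_{\text{CL}} \tag{11}
$$

that is, the translational and rotational force matching losses (Eqs. 7 and 8), the energy conservation loss (Eq. 9), and the energy contrastive loss (Eq. 10).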
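Assuming the contrastive loss takes the standard two-class softmax form $-\log\frac{e^{-E_{+}}}{e^{-E_{+}} + e^{-E_{-}}}$, it reduces to $\log\left(1 + e^{E_{+} - E_{-}}\right)$ for a single noised pose and can be evaluated stably with log-sum-exp. The sketch below also accepts several noised poses as negatives and forms a combined objective; both the multi-negative generalization and the unit loss weights are assumptions, not settings taken from this work, and the function names are hypothetical.

```python
import numpy as np


def energy_contrastive_loss(e_pos, e_neg):
    """-log( exp(-E+) / (exp(-E+) + sum_k exp(-E-_k)) ), computed with log-sum-exp.
    e_pos: energy of the ground truth; e_neg: energy (or energies) of noised poses."""
    logits = np.concatenate([[-e_pos], -np.atleast_1d(e_neg)])
    return float(np.logaddexp.reduce(logits) + e_pos)


def total_loss(l_tr, l_rot, l_ec, l_contrast, weights=(1.0, 1.0, 1.0, 1.0)):
    """Weighted sum of the four loss terms; unit weights are an assumption."""
    return float(np.dot(weights, [l_tr, l_rot, l_ec, l_contrast]))


# Equal energies give log(2): the model cannot tell the native from the decoy.
print(np.isclose(energy_contrastive_loss(1.0, 1.0), np.log(2.0)))  # True
```

The loss only depends on energy differences, so it fixes the ordering of native and noised poses without pinning the absolute energy scale, which is the property needed for ranking.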

2.2. Data

We trained our model on DIPS-hetero, a subset of DIPS [43, 44] with approximately 11k heterodimers. For testing, we evaluated it on 25 targets from the Docking Benchmark 5.5 (DB5.5) [45], a widely used dataset for assessing docking performance, using the same target selection as the EquiDock report [10].

3. Results and Discussion

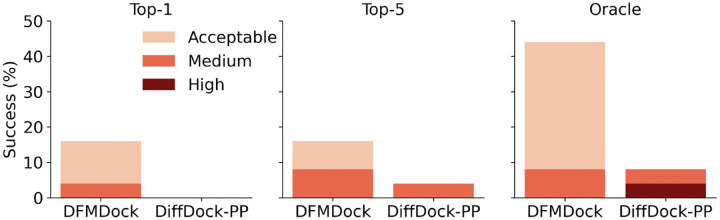

3.1. DFMDock achieves higher success rate than DiffDock-PP

We compared DFMDock to DiffDock-PP (trained on DIPS) on the DB5.5 test set. Both models generated 120 samples per target, each initialized from a different starting position, using 40 diffusion steps. DFMDock samples were ranked using the model's energy function (DFMDock Energy), and DiffDock-PP samples were ranked using its confidence model. As shown in Figure 2, DFMDock consistently outperforms DiffDock-PP across all settings, with the largest margin observed in the Oracle setting, where Oracle refers to the highest DockQ among all samples per target. Although DiffDock-PP achieved state-of-the-art success on the DIPS test set, its performance on DB5.5 dropped significantly (an 8% sampling success rate), likely due to data leakage between the DIPS training and test sets [12, 47]. In contrast, DFMDock generalizes better to the protein-protein interactions in DB5.5.

Figure 2:

Success rates of DFMDock and DiffDock-PP in Top-1, Top-5, and Oracle settings, categorized by Acceptable (DockQ > 0.23), Medium (DockQ > 0.49), and High (DockQ > 0.80) accuracy ratings [46].
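The Top-k and Oracle success rates in Figure 2 follow directly from per-target DockQ scores and a ranking score. A minimal sketch, assuming that a lower DFMDock Energy means a better rank and that a target counts as a success when at least one of its k best-ranked samples exceeds the DockQ threshold; the function names and the toy arrays are illustrative, not data from this study.

```python
import numpy as np

THRESHOLDS = {"acceptable": 0.23, "medium": 0.49, "high": 0.80}


def top_k_success(dockq, scores, k, threshold):
    """True if any of the k lowest-scoring (best-ranked) samples clears the threshold."""
    best = np.argsort(scores)[:k]
    return bool(np.any(dockq[best] > threshold))


def success_rates(targets, k_values=(1, 5), threshold=THRESHOLDS["acceptable"]):
    """targets: list of (dockq, scores) array pairs, one pair per target.
    Returns Top-k success rates and the Oracle rate (best DockQ over all samples)."""
    rates = {f"top{k}": np.mean([top_k_success(d, s, k, threshold) for d, s in targets])
             for k in k_values}
    rates["oracle"] = np.mean([np.max(d) > threshold for d, _ in targets])
    return {name: float(r) for name, r in rates.items()}


toy = [(np.array([0.1, 0.5, 0.3]), np.array([-5.0, -1.0, 0.0])),   # best-ranked is incorrect
       (np.array([0.6, 0.1, 0.0]), np.array([-3.0, 2.0, 1.0]))]    # best-ranked is medium
print(success_rates(toy))  # {'top1': 0.5, 'top5': 1.0, 'oracle': 1.0}
```

The Oracle rate ignores the ranking entirely, so the gap between Oracle and Top-1 isolates how much is lost in scoring rather than in sampling.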

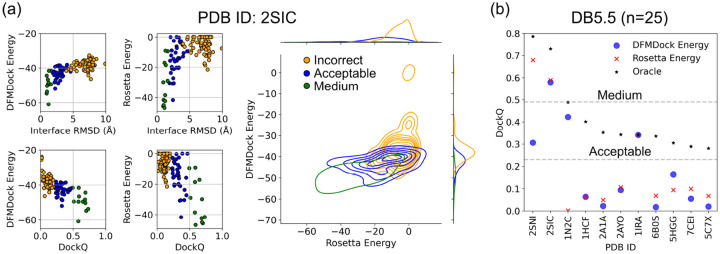

3.2. DFMDock learns physics-like energy

To evaluate our model's energy function, we plotted both the DFMDock Energy and the Rosetta Energy against the Interface RMSD and DockQ (two measures of docking accuracy) for the 120 DFMDock-generated samples per target. Figure 3a shows similar binding funnels under both scoring methods for an example target (PDB ID: 2SIC), suggesting that DFMDock captures the physical energy landscape of protein docking. This funnel-like behavior indicates that the model can distinguish between near-native and non-native docking poses, making it valuable for ranking docking decoys. The contour plot of Rosetta Energy vs DFMDock Energy shows that DFMDock ranks medium- and acceptable-quality predictions above incorrect predictions. Figure 3b shows that DFMDock Energy slightly outperforms Rosetta Energy in identifying acceptable-quality poses (4 vs 3 targets) but underperforms in identifying medium-quality structures (1 vs 2 targets). In 7 of the 11 cases where DFMDock succeeded in sampling, both scoring methods failed to rank the poses correctly, suggesting that the sampled poses may be of lower quality or contain steric clashes, and that the model requires further development.

Figure 3:

Comparison of ranking methods between DFMDock and Rosetta. (a) Binding funnels (Interface RMSD and DockQ) and contour plot (DFMDock Energy vs Rosetta Energy) for a target from the DB5.5 test set (PDB ID: 2SIC), colored by DockQ range: Incorrect (orange), Acceptable (blue), and Medium (green). (b) Comparison of Top-1 ranked DockQ scores for different PDB IDs: DFMDock Energy (blue circles), Rosetta Energy (red crosses), and Oracle (black stars). The dashed lines at DockQ = 0.23 and DockQ = 0.49 indicate the Acceptable and Medium thresholds, respectively. Only cases with successful decoy sampling from DB5.5 (11 of 25 targets) are shown.

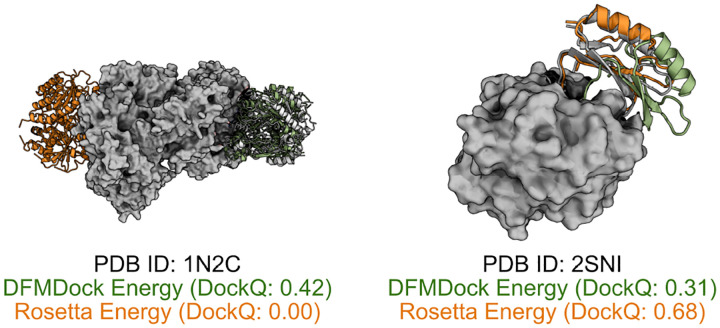

Figure 4 shows a structural comparison between the top-ranked predictions of DFMDock and Rosetta for two targets. For PDB ID 1N2C, DFMDock identifies an acceptable-quality pose (DockQ = 0.42), whereas Rosetta identifies an incorrect pose (DockQ = 0.00). However, for 2SNI, DFMDock is less effective than Rosetta Energy at identifying medium-quality structures, suggesting that DFMDock's energy function is less accurate in this case. Incorporating all-atom details into DFMDock's energy function could help address this issue, enhancing its ability to distinguish between acceptable- and medium-quality docking poses and improving its overall reliability across diverse protein-protein interactions.

Figure 4:

Top-1 predictions ranked by DFMDock Energy (green) and Rosetta Energy (orange) aligned to the ground truth structures (grey).

4. Conclusion

DFMDock is a generative diffusion model that integrates sampling and ranking for protein docking. DFMDock outperforms DiffDock-PP on the DB5.5 test set and generates binding funnels comparable to those from the Rosetta interface score, highlighting its ability to mimic physical interactions. However, in many cases where DFMDock succeeded in sampling, it failed to rank poses accurately, indicating the need for further development. Additionally, its accuracy in identifying medium-quality structures can be further optimized. Currently, the model is trained on a limited dataset, which may constrain its generalization. With the availability of larger and more comprehensive datasets [48], future work will incorporate all-atom details to enhance DFMDock’s precision and reliability across a broader range of protein interactions.

Figure 1:

DFMDock model overview.

Acknowledgements

This work was supported by National Institutes of Health grant R35-GM141881 and by Moderna. Computational resources were provided by the Advanced Research Computing at Hopkins (ARCH). The authors thank Jeremias Sulam for valuable discussions and insightful feedback.

Appendix

Gradient of energy with respect to a rotation vector

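Consider a rigid body with points labeled $i = 1, \dots, N$, where each $\mathbf{r}_i$ represents the position of point $i$ relative to the center of mass $\mathbf{r}_{\text{cm}}$. When the rigid body undergoes a small rotation $\delta\boldsymbol{\theta}$, where $\boldsymbol{\theta}$ is a rotation vector, the displacement of each point is given by:

$$
\delta\mathbf{r}_i = \delta\boldsymbol{\theta} \times \mathbf{r}_i
$$

The energy $E$ of the system depends on the positions $\mathbf{r}_i$. The change in energy due to small displacements of the points is:

$$
\delta E = \sum_{i=1}^{N} \nabla_{\mathbf{r}_i} E \cdot \delta\mathbf{r}_i
$$

where $\nabla_{\mathbf{r}_i} E$ is the gradient of the energy with respect to the position of point $i$. (In this work we assume the direct energy dependence on rotation angle of each residue is small.)

Substituting the expression for the displacement $\delta\mathbf{r}_i$, we get:

$$
\delta E = \sum_{i=1}^{N} \nabla_{\mathbf{r}_i} E \cdot \left(\delta\boldsymbol{\theta} \times \mathbf{r}_i\right)
$$

Using the scalar triple product identity:

$$
\mathbf{a} \cdot (\mathbf{b} \times \mathbf{c}) = \mathbf{b} \cdot (\mathbf{c} \times \mathbf{a})
$$

we can simplify the expression for $\delta E$:

$$
\delta E = \delta\boldsymbol{\theta} \cdot \sum_{i=1}^{N} \left(\mathbf{r}_i \times \nabla_{\mathbf{r}_i} E\right)
$$

Thus, the gradient of energy with respect to the rotation vector is:

$$
\nabla_{\boldsymbol{\theta}} E = \sum_{i=1}^{N} \mathbf{r}_i \times \nabla_{\mathbf{r}_i} E
$$

where $\mathbf{r}_i$ is the vector from the center of mass to the point $i$, and $\nabla_{\mathbf{r}_i} E$ is the gradient of the energy with respect to the position of point $i$.

If we have a finite number of points $N$, we can normalize the sum by the number of points to get an average gradient:

$$
\frac{1}{N}\nabla_{\boldsymbol{\theta}} E = \frac{1}{N}\sum_{i=1}^{N} \mathbf{r}_i \times \nabla_{\mathbf{r}_i} E
$$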

This provides an averaged estimate of the gradient of energy with respect to the rotation vector when dealing with a finite set of points on the rigid body.
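The identity $\nabla_{\boldsymbol{\theta}} E = \sum_i \mathbf{r}_i \times \nabla_{\mathbf{r}_i} E$ can be checked numerically by rotating a centered point set by a small angle about each coordinate axis and differencing the energy. The sketch below is illustrative only: the toy energy, the Rodrigues-formula helper, and the step size are assumptions chosen for the check, not part of DFMDock.

```python
import numpy as np


def rotation_matrix(theta):
    """Rodrigues' formula: rotation by |theta| about the axis theta / |theta|."""
    angle = np.linalg.norm(theta)
    if angle < 1e-12:
        return np.eye(3)
    k = theta / angle
    K = np.array([[0.0, -k[2], k[1]], [k[2], 0.0, -k[0]], [-k[1], k[0], 0.0]])
    return np.eye(3) + np.sin(angle) * K + (1.0 - np.cos(angle)) * K @ K


def toy_energy(r):
    """Arbitrary smooth energy of an (N, 3) point set that is not rotation-invariant."""
    return float(np.sum(np.sin(r[:, 0]) * r[:, 1] ** 2 + 0.3 * r[:, 2] ** 3))


def toy_energy_grad(r):
    """Analytic gradient of toy_energy with respect to each point."""
    g = np.zeros_like(r)
    g[:, 0] = np.cos(r[:, 0]) * r[:, 1] ** 2
    g[:, 1] = 2.0 * np.sin(r[:, 0]) * r[:, 1]
    g[:, 2] = 0.9 * r[:, 2] ** 2
    return g


rng = np.random.default_rng(0)
r = rng.normal(size=(20, 3))
r -= r.mean(axis=0)                           # positions relative to the center of mass

# Result of the derivation: grad_theta E = sum_i r_i x grad_{r_i} E
analytic = np.sum(np.cross(r, toy_energy_grad(r)), axis=0)

# Central differences of E(R(theta) r) around theta = 0 (rows r_i become R r_i)
h = 1e-6
numeric = np.array([
    (toy_energy(r @ rotation_matrix(h * e).T) - toy_energy(r @ rotation_matrix(-h * e).T)) / (2 * h)
    for e in np.eye(3)
])
print(np.allclose(analytic, numeric, atol=1e-5))  # True
```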

Footnotes

Code Availability

The inference code, model weights, and test set are available at https://github.com/Graylab/DFMDock.

References

- [1] Vakser Ilya A. Protein-protein docking: From interaction to interactome. Biophysical Journal, 107(8):1785–1793, 2014.

- [2] Huang Sheng-You. Search strategies and evaluation in protein–protein docking: principles, advances and challenges. Drug Discovery Today, 19(8):1081–1096, 2014.

- [3] Szilagyi Andras and Zhang Yang. Template-based structure modeling of protein–protein interactions. Current Opinion in Structural Biology, 24:10–23, 2014.

- [4] The UniProt Consortium. UniProt: the universal protein knowledgebase. Nucleic Acids Research, 46(5):2699, 2018.

- [5] Berman Helen M, Battistuz Tammy, Bhat Talapady N, Bluhm Wolfgang F, Bourne Philip E, Burkhardt Kyle, Feng Zukang, Gilliland Gary L, Iype Lisa, Jain Shri, et al. The Protein Data Bank. Acta Crystallographica Section D: Biological Crystallography, 58(6):899–907, 2002.

- [6] Jumper John, Evans Richard, Pritzel Alexander, Green Tim, Figurnov Michael, Ronneberger Olaf, Tunyasuvunakool Kathryn, Bates Russ, Žídek Augustin, Potapenko Anna, et al. Highly accurate protein structure prediction with AlphaFold. Nature, 596(7873):583–589, 2021.

- [7] Baek Minkyung, DiMaio Frank, Anishchenko Ivan, Dauparas Justas, Ovchinnikov Sergey, Lee Gyu Rie, Wang Jue, Cong Qian, Kinch Lisa N, Schaeffer R Dustin, et al. Accurate prediction of protein structures and interactions using a three-track neural network. Science, 373(6557):871–876, 2021.

- [8] Yin Rui, Feng Brandon Y, Varshney Amitabh, and Pierce Brian G. Benchmarking AlphaFold for protein complex modeling reveals accuracy determinants. Protein Science, 31(8):e4379, 2022.

- [9] Abramson Josh, Adler Jonas, Dunger Jack, Evans Richard, Green Tim, Pritzel Alexander, Ronneberger Olaf, Willmore Lindsay, Ballard Andrew J, Bambrick Joshua, et al. Accurate structure prediction of biomolecular interactions with AlphaFold 3. Nature, pages 1–3, 2024.

- [10] Ganea Octavian-Eugen, Huang Xinyuan, Bunne Charlotte, Bian Yatao, Barzilay Regina, Jaakkola Tommi, and Krause Andreas. Independent SE(3)-equivariant models for end-to-end rigid protein docking. arXiv preprint arXiv:2111.07786, 2021.

- [11] Yu Ziyang, Huang Wenbing, and Liu Yang. Rigid protein-protein docking via equivariant elliptic-paraboloid interface prediction. arXiv preprint arXiv:2401.08986, 2024.

- [12] Chu Lee-Shin, Ruffolo Jeffrey A, Harmalkar Ameya, and Gray Jeffrey J. Flexible protein–protein docking with a multitrack iterative transformer. Protein Science, 33(2):e4862, 2024.

- [13] McPartlon Matt and Xu Jinbo. Deep learning for flexible and site-specific protein docking and design. bioRxiv, pages 2023–04, 2023.

- [14] Sohl-Dickstein Jascha, Weiss Eric, Maheswaranathan Niru, and Ganguli Surya. Deep unsupervised learning using nonequilibrium thermodynamics. In International Conference on Machine Learning, pages 2256–2265. PMLR, 2015.

- [15] Ho Jonathan, Jain Ajay, and Abbeel Pieter. Denoising diffusion probabilistic models. Advances in Neural Information Processing Systems, 33:6840–6851, 2020.

- [16] Song Yang, Sohl-Dickstein Jascha, Kingma Diederik P, Kumar Abhishek, Ermon Stefano, and Poole Ben. Score-based generative modeling through stochastic differential equations. arXiv preprint arXiv:2011.13456, 2020.

- [17] Yim Jason, Stärk Hannes, Corso Gabriele, Jing Bowen, Barzilay Regina, and Jaakkola Tommi S. Diffusion models in protein structure and docking. Wiley Interdisciplinary Reviews: Computational Molecular Science, 14(2):e1711, 2024.

- [18] Corso Gabriele, Stärk Hannes, Jing Bowen, Barzilay Regina, and Jaakkola Tommi. DiffDock: Diffusion steps, twists, and turns for molecular docking. arXiv preprint arXiv:2210.01776, 2022.

- [19] Vincent Pascal. A connection between score matching and denoising autoencoders. Neural Computation, 23(7):1661–1674, 2011.

- [20] Ketata Mohamed Amine, Laue Cedrik, Mammadov Ruslan, Stärk Hannes, Wu Menghua, Corso Gabriele, Marquet Céline, Barzilay Regina, and Jaakkola Tommi S. DiffDock-PP: Rigid protein-protein docking with diffusion models. arXiv preprint arXiv:2304.03889, 2023.

- [21] Sverrisson Freyr, Akdel Mehmet, Abramson Dylan, Feydy Jean, Goncearenco Alexander, Adeshina Yusuf, Kovtun Daniel, Marquet Céline, Zhang Xuejin, Baugher David, et al. DiffMaSIF: Surface-based protein-protein docking with diffusion models. In Machine Learning in Structural Biology Workshop at NeurIPS 2023, 2023.

- [22] McPartlon Matt, Marquet Céline, Geffner Tomas, Kovtun Daniel, Goncearenco Alexander, Carpenter Zachary, Naef Luca, Bronstein Michael, and Xu Jinbo. LatentDock: Protein-protein docking with latent diffusion. MLSB, 2023.

- [23] Kingma Diederik P. Auto-encoding variational Bayes. arXiv preprint arXiv:1312.6114, 2013.

- [24] Rombach Robin, Blattmann Andreas, Lorenz Dominik, Esser Patrick, and Ommer Björn. High-resolution image synthesis with latent diffusion models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 10684–10695, 2022.

- [25] Du Yilun and Mordatch Igor. Implicit generation and modeling with energy based models. Advances in Neural Information Processing Systems, 32, 2019.

- [26] Somnath Vignesh Ram, Sessa Pier Giuseppe, Martinez Maria Rodriguez, and Krause Andreas. DockGame: Cooperative games for multimeric rigid protein docking. arXiv preprint arXiv:2310.06177, 2023.

- [27] Wu Huaijin, Liu Wei, Bian Yatao, Wu Jiaxiang, Yang Nianzu, and Yan Junchi. EBMDock: Neural probabilistic protein-protein docking via a differentiable energy model. In The Twelfth International Conference on Learning Representations, 2024.

- [28] Arts Marloes, Garcia Satorras Victor, Huang Chin-Wei, Zugner Daniel, Federici Marco, Clementi Cecilia, Noé Frank, Pinsler Robert, and van den Berg Rianne. Two for one: Diffusion models and force fields for coarse-grained molecular dynamics. Journal of Chemical Theory and Computation, 19(18):6151–6159, 2023.

- [29] Jin Wengong, Chen Xun, Vetticaden Amrita, Sarzikova Siranush, Raychowdhury Raktima, Uhler Caroline, and Hacohen Nir. DSMBind: SE(3) denoising score matching for unsupervised binding energy prediction and nanobody design. bioRxiv, pages 2023–12, 2023.

- [30] Alford Rebecca F, Leaver-Fay Andrew, Jeliazkov Jeliazko R, O’Meara Matthew J, DiMaio Frank P, Park Hahnbeom, Shapovalov Maxim V, Renfrew P Douglas, Mulligan Vikram K, Kappel Kalli, et al. The Rosetta all-atom energy function for macromolecular modeling and design. Journal of Chemical Theory and Computation, 13(6):3031–3048, 2017.

- [31] Garcia Satorras Victor, Hoogeboom Emiel, and Welling Max. E(n) equivariant graph neural networks. In International Conference on Machine Learning, pages 9323–9332. PMLR, 2021.

- [32] Lin Zeming, Akin Halil, Rao Roshan, Hie Brian, Zhu Zhongkai, Lu Wenting, Smetanin Nikita, Verkuil Robert, Kabeli Ori, Shmueli Yaniv, et al. Evolutionary-scale prediction of atomic-level protein structure with a language model. Science, 379(6637):1123–1130, 2023.

- [33] Yang Jianyi, Anishchenko Ivan, Park Hahnbeom, Peng Zhenling, Ovchinnikov Sergey, and Baker David. Improved protein structure prediction using predicted interresidue orientations. Proceedings of the National Academy of Sciences, 117(3):1496–1503, 2020.

- [34] Evans Richard, O’Neill Michael, Pritzel Alexander, Antropova Natasha, Senior Andrew, Green Tim, Žídek Augustin, Bates Russ, Blackwell Sam, Yim Jason, et al. Protein complex prediction with AlphaFold-Multimer. bioRxiv, pages 2021–10, 2021.

- [35] Ingraham John B, Baranov Max, Costello Zak, Barber Karl W, Wang Wujie, Ismail Ahmed, Frappier Vincent, Lord Dana M, Ng-Thow-Hing Christopher, Van Vlack Erik R, et al. Illuminating protein space with a programmable generative model. Nature, 623(7989):1070–1078, 2023.

- [36] Masters Matthew, Mahmoud Amr, and Lill Markus. FusionDock: Physics-informed diffusion model for molecular docking. In ICML 2023 CompBio Workshop, 2023.

- [37] Leach Adam, Schmon Sebastian M, Degiacomi Matteo T, and Willcocks Chris G. Denoising diffusion probabilistic models on SO(3) for rotational alignment. ICLR 2022 GTRL Workshop, 2022.

- [38] Yim Jason, Trippe Brian L, De Bortoli Valentin, Mathieu Emile, Doucet Arnaud, Barzilay Regina, and Jaakkola Tommi. SE(3) diffusion model with application to protein backbone generation. arXiv preprint arXiv:2302.02277, 2023.

- [39] Jagvaral Yesukhei, Lanusse Francois, and Mandelbaum Rachel. Unified framework for diffusion generative models in SO(3): applications in computer vision and astrophysics. In Proceedings of the AAAI Conference on Artificial Intelligence, 2024.

- [40] Duval Alexandre Agm, Schmidt Victor, Hernández-Garcia Alex, Miret Santiago, Malliaros Fragkiskos D, Bengio Yoshua, and Rolnick David. FAENet: Frame averaging equivariant GNN for materials modeling. In International Conference on Machine Learning, pages 9013–9033. PMLR, 2023.

- [41] Du Yilun, Mao Jiayuan, and Tenenbaum Joshua B. Learning iterative reasoning through energy diffusion. arXiv preprint arXiv:2406.11179, 2024.

- [42] Lee Changsoo, Won Jonghun, Ryu Seongok, Yang Jinsol, Jung Nuri, Park Hahnbeom, and Seok Chaok. GalaxyDock-DL: Protein-ligand docking by global optimization and neural network energy. Journal of Chemical Theory and Computation, 2024.

- [43] Townshend RJL, Bedi R, Suriana PA, and Dror RO. End-to-end learning on 3D protein structure for interface prediction. arXiv preprint arXiv:1807.01297, 2018.

- [44] Morehead Alex, Chen Chen, Sedova Ada, and Cheng Jianlin. DIPS-Plus: The enhanced database of interacting protein structures for interface prediction. Scientific Data, 10(1):509, 2023.

- [45] Vreven Thom, Moal Iain H, Vangone Anna, Pierce Brian G, Kastritis Panagiotis L, Torchala Mieczyslaw, Chaleil Raphael, Jiménez-García Brian, Bates Paul A, Fernandez-Recio Juan, et al. Updates to the integrated protein-protein interaction benchmarks: docking benchmark version 5 and affinity benchmark version 2. Journal of Molecular Biology, 427(19):3031–3041, 2015.

- [46] Basu Sankar and Wallner Björn. DockQ: a quality measure for protein-protein docking models. PLoS ONE, 11(8):e0161879, 2016.

- [47] Bushuiev Anton, Bushuiev Roman, Sedlar Jiri, Pluskal Tomas, Damborsky Jiri, Mazurenko Stanislav, and Sivic Josef. Revealing data leakage in protein interaction benchmarks. arXiv preprint arXiv:2404.10457, 2024.

- [48] Kovtun Daniel, Akdel Mehmet, Goncearenco Alexander, Zhou Guoqing, Holt Graham, Baugher David, Lin Dejun, Adeshina Yusuf, Castiglione Thomas, Wang Xiaoyun, et al. PINDER: The protein interaction dataset and evaluation resource. bioRxiv, pages 2024–07, 2024.