Abstract

Background

The main objectives of the study were to analyse the use of the Objective Structured Clinical Examination (OSCE) to evaluate the skills of medical students in paediatric basic life support (PBLS), to compare two resuscitation training models and to evaluate measures to improve the teaching programme.

Methods

Comparative, prospective, observational study with an intervention in two hospitals, one of which delivered a PILS (Paediatric Immediate Life Support) course and the other a PBLS course. The study was performed in three phases: (1) PBLS OSCE in 2022, three months after resuscitation training; (2) measures to improve the training programme in 2023; (3) PBLS OSCE in 2023. Overall results were analysed, and the two sites and the two years (2022 and 2023) were compared.

Results

A total of 210 and 182 students took part in the OSCE in 2022 and 2023, respectively. The overall mean score out of 100 (SD) was 83.2 (19); it was 77.8 (19.8) in 2022 and 89.5 (15.9) in 2023, P < 0.001. Overall cardiopulmonary resuscitation (CPR) effectiveness was adequate in 79.0% and 84.6% of students in 2022 and 2023, respectively. Students from the hospital that delivered a PILS course scored higher (86.4 (16.6)) than those from the hospital that delivered a PBLS course (80.2 (20.6)), P < 0.001. The results of both hospitals improved significantly in 2023.

Conclusions

The OSCE is a valid instrument to evaluate PBLS skills in medical students and to compare different training methods and programme improvements. Medical students who receive a PILS course attain better PBLS skills than those who undergo a PBLS course.

Keywords: Education, Medical students, Objective structured clinical examination, Paediatric life support courses, Resuscitation education, Resuscitation training, Undergraduate

Background

The Objective Structured Clinical Examination (OSCE) is a skills evaluation method proposed by Harden in 1975 [1, 2]. Candidates are observed as they rotate through a series of structured stations that simulate clinical situations, and their performance is scored against an objective checklist.

The OSCE can assess three levels of Miller's pyramid (knows, knows how and shows how) for a range of skills: history taking, physical examination, technical skills, communication, clinical judgement, diagnostic test planning, treatment planning, health education, report writing, interprofessional relations, and ethical and legal aspects. OSCE stations may use standardized patients, manikins, or computer or online simulators [3].

Many medical schools use the OSCE to evaluate clinical skills. It has replaced the examination with a real patient and complements the written examination of knowledge.

The OSCE has been shown to be objective and reliable in evaluating clinical and non-clinical skills in the health professions at both undergraduate and postgraduate level [4–8], and in comparing different teaching methods [9–11].

The curriculum of many medical schools includes cardiopulmonary resuscitation (CPR) training with highly varied theoretical and practical programmes. Most curricula include adult CPR training [12, 13], and some also include paediatric CPR training [14–17].

Many OSCEs include life support stations, generally for adults, performed with manikins. These make it possible to evaluate technical CPR skills in adults [18–21]. However, few studies have analysed the usefulness of the OSCE for evaluating Paediatric Basic Life Support (PBLS) skills in medical students [22] or neonatal CPR skills in paediatric residents [23].

Our main hypothesis is that the OSCE is a valid instrument to evaluate PBLS skills in medical students, to compare different training methods and to assess changes made to improve the training programme.

The aims of this study were, first, to evaluate the PBLS skills of medical students in an OSCE; second, to compare the PBLS skills of students from two hospitals who received different training in paediatric CPR; and third, to evaluate the usefulness of the OSCE for analysing the effect of changes made to improve the CPR programme on the PBLS skills attained by medical students.

Methods

Study design

A comparative, prospective, observational study was performed with a three-phase intervention.

Setting

The study was performed at the Hospital General Universitario Gregorio Marañón (HGM) and Hospital Clínico Universitario San Carlos (HCSC) of the Complutense University of Madrid, which is a public university. The study was carried out in accordance with The Code of Ethics of the World Medical Association (Declaration of Helsinki) for experiments involving humans. The study was approved by the local ethics committee (Proyecto Innova Docentia 332/2020). Students and teachers signed informed consent forms to take part in the OSCE and in the study.

The same core curriculum, including PBLS, is taught in all hospitals of the Complutense University in the fifth year of the six-year medical degree. However, the theoretical part of Paediatrics is taught independently in each hospital, and practical PBLS training differs between hospitals. At HCSC, a two-and-a-half-hour PBLS seminar is held. At HGM, by contrast, a structured, in-person, 8-hour theoretical and practical course in Paediatric Intermediate Life Support (PILS) is taught. The PILS course includes training in PBLS, ventilation, vascular access and intermediate CPR teamwork, and it is accredited by the Spanish Group for Paediatric and Neonatal CPR (SGPNCPR). Throughout the academic year, CPR recommendations and classes are available to students on the paediatrics online campus. At the end of the fifth year, a five-station OSCE is held jointly for HGM and HCSC students. The OSCE PBLS station takes place three months after PLS training.

The study was performed in three phases: (1) PBLS skills were evaluated in the 2022 OSCE, the results were analysed and the two hospitals were compared; (2) after this analysis, corrective measures for CPR training were introduced in both hospitals for 2023; (3) PBLS skills were evaluated in the 2023 OSCE, the results were analysed, and comparisons were made between the two hospitals and between 2022 and 2023.

Participants and study size: All students from HGM and HCSC who took the OSCE in 2022 and 2023 were included in the study. To prevent information about the case being passed from one year's cohort to the next, two similar but not identical cases of paediatric cardiac arrest (CA) were used to evaluate PLS skills in the two years: in the first year, a CA following trauma in an infant, and in the second year, a CA after intoxication in a child. Each student had seven minutes to: (1) read the case and instructions outside the room; (2) enter and question the teacher acting as the mother or father of the child in CA; (3) perform PBLS; and (4) explain what had happened to the emergency services personnel and the child's parent. After each performance, the evaluator held a brief debriefing with the student to reinforce the positive aspects and correct mistakes.

There were 14 evaluators, one in each station. The evaluators were paediatricians and nurses accredited as paediatric CPR instructors by the SGPNCPR, and they had received training in how the OSCE works. They were randomly distributed among the PBLS stations and did not know which hospital the students belonged to. They recorded the score for each item in a computerised database.

Variables

A checklist was prepared according to the SGPNCPR basic CPR evaluation criteria (Table 1) [14, 17]. The same items were evaluated in both cases (clinical history, clinical examination, technical skills, communication skills and interprofessional relationships). Each item was rated as suitable (5 points) or unsuitable (0 points), according to whether the action would have been effective in a real CA situation. The items for ordered CPR steps and overall CPR effectiveness carried a greater weight (20 points each) than the rest, giving a maximum total score of 100 points (Table 1). According to the SGPNCPR criteria, basic CPR skills were considered adequate if the student attained a total score greater than 70. The checklist also included an overall assessment of CPR effectiveness in which, as in CPR courses, the evaluator decided whether the student's CPR as a whole would have been sufficient to achieve the patient's recovery or to maintain the patient until the emergency services arrived.

Table 1.

Evaluation sheet for the paediatric basic CPR station

NAME:

| No. | Action | Score |
|---|---|---|
| 1 | Check whether the scene is safe | 5 |
| 2 | Detect unconsciousness | 5 |
| 3 | Shout for help | 5 |
| 4 | Open the airway | 5 |
| 5 | Check whether the patient is breathing | 5 |
| 6 | Give 5 rescue breaths (emergency ventilations), making sure they are effective | 5 |
| 7 | Check for vital signs or pulse | 5 |
| 8 | Start chest compressions at 100 compressions per minute, with adequate compression and decompression | 5 |
| 9 | Coordinate chest compressions with ventilation (15:2 or 30:2 compression-to-ventilation ratio) | 5 |
| 10 | After one minute, stop and activate the emergency system (only if not already done after the rescue breaths): call 112 and give the correct information | 5 |
| 11 | After two minutes, check whether the patient has recovered (breathing and vital signs) | 5 |
| 12 | Ordered CPR steps | 20 |
| 13 | Overall CPR effectiveness | 20 |
| 14 | Convey the correct CPR information to the emergency service (patient, situation on arrival, CPR performed, duration, outcome) and give the parents the correct information | 5 |

All items scored 5 points except "Ordered CPR steps" and "Overall CPR effectiveness", which scored 20 points because they are the most important items in CPR. The maximum total score was 100 points.
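The scoring rule in Table 1 can be expressed as a short function. The following is a minimal Python sketch, assuming the binary suitable/unsuitable ratings and item weights of Table 1 and the "total score greater than 70" cut-off stated in the text; the names `ITEM_WEIGHTS`, `station_score` and `is_adequate` are hypothetical and are not part of the computerised database used in the study.

```python
from typing import Mapping

# Item weights taken from Table 1: items 12 and 13 are worth 20 points,
# every other item 5 points, for a maximum of 100.
ITEM_WEIGHTS = {
    1: 5,    # Check whether the scene is safe
    2: 5,    # Detect unconsciousness
    3: 5,    # Shout for help
    4: 5,    # Open the airway
    5: 5,    # Check whether the patient is breathing
    6: 5,    # Five effective rescue breaths
    7: 5,    # Check for vital signs or pulse
    8: 5,    # Chest compressions
    9: 5,    # Compression-ventilation coordination
    10: 5,   # Activate the emergency system
    11: 5,   # Re-check after two minutes
    12: 20,  # Ordered CPR steps
    13: 20,  # Overall CPR effectiveness
    14: 5,   # Information to emergency services and parents
}

# Cut-off stated in the text: skills are adequate if the total is greater than 70.
ADEQUACY_CUTOFF = 70


def station_score(suitable: Mapping[int, bool]) -> int:
    """Return the total score: each item rated suitable earns its full weight,
    each item rated unsuitable earns 0 (no partial credit)."""
    missing = set(ITEM_WEIGHTS) - set(suitable)
    if missing:
        raise ValueError(f"unrated items: {sorted(missing)}")
    return sum(weight for item, weight in ITEM_WEIGHTS.items() if suitable[item])


def is_adequate(score: int) -> bool:
    """Apply the 'total score greater than 70' criterion."""
    return score > ADEQUACY_CUTOFF


# Example: a student who forgets to shout for help and to open the airway.
ratings = {item: True for item in ITEM_WEIGHTS}
ratings[3] = ratings[4] = False
print(station_score(ratings), is_adequate(station_score(ratings)))  # 90 True
```

Because the overall-effectiveness item is only one component of the total, a student rated unsuitable on item 13 alone would still score 80 points and pass the cut-off, which is consistent with reporting score adequacy and overall CPR effectiveness as separate outcomes.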

After evaluating the results of the first year, several measures were introduced to reinforce the aspects with the poorest results (for example, practising calling for help and opening the airway with the head tilt-chin lift manoeuvre). At HCSC, an in-person theoretical course was given (the previous year, students only had to review the theoretical documentation on the online platform). In addition, the duration of the practical classes was increased, and a theoretical evaluation was introduced before and after the seminar, as at HGM.

Statistical methods

An anonymous database was prepared, including the hospital of origin and the score obtained for each item. Statistical analysis was performed with SPSS v29.0 for OS X (IBM, Armonk, NY, USA). Continuous quantitative variables are shown as mean and standard deviation (mean ± SD), and categorical variables as n/N and percentage. The Kolmogorov–Smirnov test was used to check whether continuous variables followed a normal distribution. The Student t-test and the Mann–Whitney U test were used to compare means and medians, respectively, and the chi-squared test was used to compare proportions. P < 0.05 was considered statistically significant.
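For the comparisons of proportions, the uncorrected Pearson chi-squared test on a 2 × 2 table has a closed form. The following is a minimal standard-library Python sketch; it is not the SPSS procedure used in the study, the function names are hypothetical, and it assumes no Yates continuity correction. With one degree of freedom the p-value equals erfc(√(χ²/2)); `scipy.stats.chi2_contingency` with `correction=False` should give the same statistic if SciPy is available.

```python
import math


def pearson_chi2_2x2(a: int, b: int, c: int, d: int) -> tuple[float, float]:
    """Uncorrected Pearson chi-squared test for the 2 x 2 table [[a, b], [c, d]].

    Returns (chi2, p) with 1 degree of freedom (no Yates continuity correction).
    """
    n = a + b + c + d
    row1, row2, col1, col2 = a + b, c + d, a + c, b + d
    if 0 in (row1, row2, col1, col2):
        raise ValueError("a row or column total is zero")
    chi2 = n * (a * d - b * c) ** 2 / (row1 * row2 * col1 * col2)
    # A chi-squared variable with 1 df is Z**2, so P(X > chi2) = erfc(sqrt(chi2 / 2)).
    p = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p


def compare_proportions(x1: int, n1: int, x2: int, n2: int) -> tuple[float, float]:
    """Compare x1/n1 with x2/n2 (e.g. correct performances in two years)."""
    return pearson_chi2_2x2(x1, n1 - x1, x2, n2 - x2)


# Overall CPR effectiveness, 2022 (166/210) vs 2023 (154/182), from Table 2.
chi2, p = compare_proportions(166, 210, 154, 182)
print(f"chi2 = {chi2:.2f}, P = {p:.3f}")
```

This prints `chi2 = 2.02, P = 0.156`, which matches the P value reported for item 13 in Table 2.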

Results

OSCE 2022 results

The results of the PBLS station in 2022 are shown in Table 2. A total of 210 students took part, and the mean score was 77.8 ± 19.8; 77.6% of students attained an overall score equal to or higher than 70. CPR was effective in 79.0% of students. Fewer than 70% of students performed the first steps of CPR correctly: checking that the scene was safe (66.7%), detecting unconsciousness (45.7%), shouting for help (45.2%) and opening the airway (38.6%).

Table 2.

Overall results. Comparison between 2022 and 2023

| | Overall 2022 + 2023 | 2022 | 2023 | P |
|---|---|---|---|---|
| Students | 392 | 210 | 182 | |
| Mean score ± SD | 83.2 ± 19 | 77.8 ± 19.8 | 89.5 ± 15.9 | < 0.001 |
| Median score (IQR) | 90 (80–95) | 85 (70–90) | 95 (90–100) | < 0.001 |
| Items, n/N (%) | | | | |
| 1. Safety | 291/392 (74.2%) | 140/210 (66.7%) | 151/182 (83%) | < 0.001 |
| 2. Unconsciousness | 259/392 (66.1%) | 96/210 (45.7%) | 163/182 (89.6%) | < 0.001 |
| 3. Shout for help | 266/392 (67.9%) | 95/210 (45.2%) | 171/182 (94%) | < 0.001 |
| 4. Airway | 221/392 (56.4%) | 81/210 (38.6%) | 140/182 (76.9%) | < 0.001 |
| 5. Check respiration | 351/392 (89.5%) | 189/210 (90%) | 162/182 (89%) | 0.750 |
| 6. Ventilation | 345/392 (88%) | 175/210 (83.3%) | 170/182 (93.4%) | 0.002 |
| 7. Check vital signs | 328/392 (83.7%) | 161/210 (76.7%) | 167/182 (91.8%) | < 0.001 |
| 8. Chest compressions | 308/392 (78.6%) | 155/210 (73.8%) | 153/182 (84.1%) | 0.014 |
| 9. Ventilation and chest compressions coordination | 374/392 (95.4%) | 200/210 (95.2%) | 174/182 (95.6%) | 0.863 |
| 10. Activate EMS | 354/392 (90.3%) | 186/210 (88.6%) | 168/182 (92.3%) | 0.212 |
| 11. Check vital signs | 348/392 (88.8%) | 185/210 (88.1%) | 163/182 (89.6%) | 0.647 |
| 12. Ordered CPR steps | 358/392 (91.3%) | 184/210 (87.6%) | 174/182 (95.6%) | 0.005 |
| 13. Overall CPR effectiveness | 320/392 (81.6%) | 166/210 (79%) | 154/182 (84.6%) | 0.156 |
| 14. Communication | 368/392 (93.9%) | 204/210 (97.1%) | 164/182 (90.1%) | 0.004 |
| Over 70% | 329/392 (83.9%) | 163/210 (77.6%) | 166/182 (91.2%) | < 0.001 |

Overall score did not follow a normal distribution. The Student t-test and Mann–Whitney test were used to compare mean and median scores. The Chi-squared test was used to compare proportions

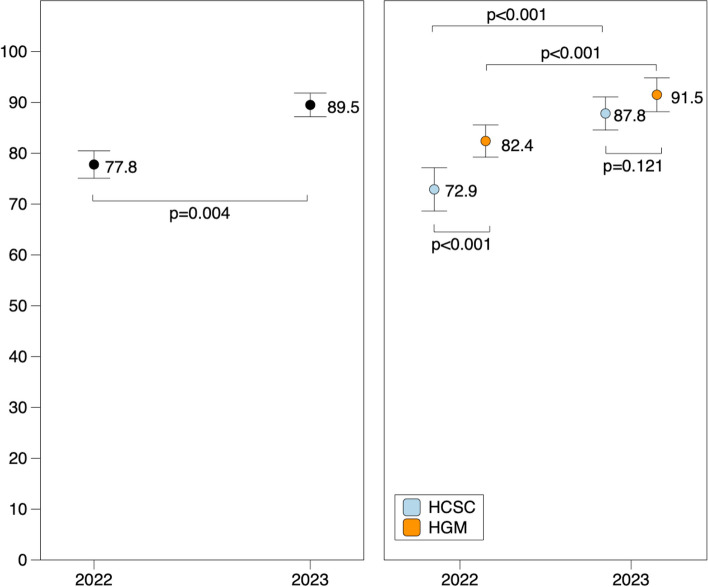

The mean score of HGM students (82.4 ± 16.6) was significantly higher than that of HCSC students (72.9 ± 21.7), P < 0.001 (Fig. 1). The percentage of students with a score greater than 70 was also significantly higher at HGM than at HCSC (84.3% vs 70.6%, P = 0.018), and 86.1% of HGM students performed adequate CPR versus 71.6% of HCSC students (P = 0.010). The percentage of students performing each manoeuvre correctly was higher at HGM for every item except activating the EMS and communication (Table 3).

Fig. 1.

Comparison of the mean score for all students in 2022 and 2023 (left). Comparison of the mean scores for Hospital Clínico San Carlos (HCSC) and Hospital Gregorio Marañón (HGM) in 2022 and 2023 and between both years (right). The bars represent the mean value and 95% confidence interval for this value

Table 3.

Comparison between hospitals

| | HCSC 2022 + 2023 | HGM 2022 + 2023 | P | HCSC 2022 | HGM 2022 | P | HCSC 2023 | HGM 2023 | P |
|---|---|---|---|---|---|---|---|---|---|
| Students | 201 | 191 | | 102 | 108 | | 99 | 83 | |
| Mean ± SD | 80.2 ± 20.6 | 86.4 ± 16.6 | < 0.001 | 72.9 ± 21.7 | 82.4 ± 16.6 | < 0.001 | 87.8 ± 16.4 | 91.5 ± 15.3 | 0.121 |
| Median score (IQR) | 85 (72.5–95) | 90 (80–100) | 0.002 | 80 (60–90) | 85 (80–95) | < 0.001 | 95 (85–100) | 95 (90–100) | 0.066 |
| Correct items, n/N (%) | | | | | | | | | |
| 1 | 149/201 (74.1%) | 142/191 (74.3%) | 0.961 | 67/102 (65.7%) | 73/108 (67.6%) | 0.770 | 82/99 (82.8%) | 69/83 (83.1%) | 0.957 |
| 2 | 125/201 (62.2%) | 134/191 (70.2%) | 0.096 | 41/102 (40.2%) | 55/108 (50.9%) | 0.119 | 84/99 (84.8%) | 79/83 (95.2%) | 0.023 |
| 3 | 137/201 (68.2%) | 129/191 (67.5%) | 0.985 | 41/102 (40.2%) | 54/108 (50%) | 0.154 | 96/99 (97%) | 75/83 (90.4%) | 0.062 |
| 4 | 104/201 (51.7%) | 117/191 (61.3%) | 0.058 | 32/102 (31.4%) | 49/108 (45.4%) | 0.037 | 72/99 (72.7%) | 68/83 (81.9%) | 0.142 |
| 5 | 173/201 (86.1%) | 178/191 (93.2%) | 0.021 | 87/102 (85.3%) | 102/108 (94.4%) | 0.027 | 86/99 (86.9%) | 76/83 (91.6%) | 0.313 |
| 6 | 170/201 (84.6%) | 175/191 (91.6%) | 0.032 | 78/102 (76.5%) | 97/108 (89.8%) | 0.010 | 92/99 (92.9%) | 78/83 (94%) | 0.777 |
| 7 | 159/201 (79.1%) | 169/191 (88.5%) | 0.012 | 68/102 (66.7%) | 93/108 (86.1%) | 0.001 | 91/99 (91.9%) | 76/83 (91.6%) | 0.931 |
| 8 | 151/201 (75.1%) | 157/191 (82.2%) | 0.088 | 69/102 (67.6%) | 86/108 (79.6%) | 0.048 | 82/99 (82.8%) | 71/83 (85.5%) | 0.618 |
| 9 | 190/201 (94.5%) | 184/191 (96.3%) | 0.393 | 97/102 (95.1%) | 103/108 (95.4%) | 0.926 | 93/99 (93.9%) | 81/83 (97.6%) | 0.231 |
| 10 | 183/201 (91%) | 171/191 (89.5%) | 0.612 | 92/102 (90.2%) | 94/108 (87%) | 0.472 | 91/99 (91.9%) | 77/83 (92.8%) | 0.830 |
| 11 | 174/201 (86.6%) | 174/191 (91.1%) | 0.155 | 85/102 (83.3%) | 100/108 (92.6%) | 0.038 | 89/99 (89.9%) | 74/83 (89.2%) | 0.870 |
| 12 | 178/201 (88.6%) | 180/191 (94.2%) | 0.046 | 84/102 (82.4%) | 100/108 (92.6%) | 0.024 | 94/99 (94.9%) | 80/83 (96.4%) | 0.638 |
| 13 | 152/201 (75.6%) | 168/191 (88%) | 0.002 | 73/102 (71.6%) | 93/108 (86.1%) | 0.010 | 79/99 (79.8%) | 75/83 (90.4%) | 0.049 |
| 14 | 191/201 (95%) | 177/191 (92.7%) | 0.331 | 102/102 (100%) | 102/108 (94.4%) | 0.016 | 89/99 (89.9%) | 75/83 (90.4%) | 0.917 |
| Over 70% | 160/201 (79.6%) | 169/191 (88.5%) | 0.017 | 72/102 (70.6%) | 91/108 (84.3%) | 0.018 | 88/99 (88.9%) | 78/83 (94%) | 0.227 |

HCSC (Hospital Clínico San Carlos). HGM (Hospital Gregorio Marañón)

1. Safety. 2. Unconsciousness. 3. Shout for help. 4. Open airway. 5. Check respiration. 6. Ventilation. 7. Check vital signs. 8. Chest compressions. 9. Ventilation and chest compressions coordination. 10. Activate EMS. 11. Check vital signs. 12. Ordered CPR steps. 13. Overall CPR effectiveness. 14. Communication

Overall score did not follow a normal distribution. The Student t-test and Mann–Whitney test were used to compare mean and median scores. The chi-squared test was used to compare proportions

OSCE 2023 Results

The results of the PBLS station in 2023 are shown in Table 2. A total of 182 students took part in the OSCE. The mean score was 89.5 ± 15.9, and 91.2% of students attained a score higher than 70. CPR was effective in 84.6% of students. All items were performed correctly by more than 75% of students; opening the airway was the manoeuvre with the poorest results (76.9%).

There was no statistically significant difference in mean score between HGM students (91.5 ± 15.3) and HCSC students (87.8 ± 16.4) (P = 0.121) (Fig. 1). However, CPR was effective in a significantly higher percentage of HGM students than HCSC students (90.4% vs 79.8%, P = 0.049). The percentage of students who performed each manoeuvre correctly was similar in both hospitals, except for detection of unconsciousness (item 2): 95.2% of HGM students vs 84.8% of HCSC students (P = 0.023) (Table 3).

Comparison between 2022 and 2023

Table 2 compares the results of the evaluation in 2022 and 2023. The mean score was significantly higher in 2023 than in 2022 (89.5 ± 15.9 vs 77.8 ± 19.8, P < 0.001) (Fig. 1). The percentage of students in whom CPR was effective was also higher in 2023 (84.6% vs 79%), although the difference was not statistically significant (P = 0.156). The percentage of students who performed a manoeuvre correctly was significantly higher in 2023 for scene safety, detection of unconsciousness, shouting for help, opening the airway, ventilation, checking vital signs (item 7), chest compressions and ordered CPR steps. There were no significant differences for the remaining items, except communication, which was performed correctly more often in 2022 (97.1% vs 90.1%, P = 0.004), although by more than 90% of students in both years (Table 2).

Tables 4 and 5 compare the scores between 2022 and 2023 for each hospital. In both hospitals the overall score was higher in 2023. The percentage of students in whom the overall effectiveness of CPR was adequate was also higher in 2023, but the differences did not attain statistical significance in any of the hospitals (Tables 4 and 5).
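The "Overall 2022 + 2023" columns in Tables 4 and 5 pool two independent yearly cohorts, so they can be cross-checked from the yearly summaries with the standard formula for combining group means and standard deviations (within-group plus between-group sums of squares). The following is a minimal Python sketch, assuming sample SDs with an n − 1 denominator; the function name `pool_groups` is hypothetical, and because the tabulated values are rounded, agreement is only expected to about one decimal place.

```python
import math
from typing import Iterable, Tuple

Summary = Tuple[int, float, float]  # (n, mean, sample SD)


def pool_groups(groups: Iterable[Summary]) -> Summary:
    """Combine independent group summaries into one (n, mean, SD)."""
    groups = list(groups)
    n_total = sum(n for n, _, _ in groups)
    mean = sum(n * m for n, m, _ in groups) / n_total
    # Total sum of squares = within-group part + between-group part.
    ss = sum((n - 1) * sd ** 2 + n * (m - mean) ** 2 for n, m, sd in groups)
    return n_total, mean, math.sqrt(ss / (n_total - 1))


# Yearly summaries (n, mean, SD) for 2022 and 2023, as given in Tables 4 and 5.
hcsc = pool_groups([(102, 72.9, 21.7), (99, 87.8, 16.4)])
hgm = pool_groups([(108, 82.4, 16.6), (83, 91.5, 15.3)])
for name, (n, mean, sd) in (("HCSC", hcsc), ("HGM", hgm)):
    print(f"{name}: n = {n}, {mean:.1f} ± {sd:.1f}")
```

Both pooled results (HCSC: n = 201, 80.2 ± 20.6; HGM: n = 191, 86.4 ± 16.6) reproduce the overall columns of Tables 4 and 5.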

Table 4.

Comparison between 2022 and 2023 at HCSC

| | Overall 2022 + 2023 | 2022 | 2023 | P |
|---|---|---|---|---|
| Number of students | 201 | 102 | 99 | |
| Mean ± SD | 80.2 ± 20.6 | 72.9 ± 21.7 | 87.8 ± 16.4 | < 0.001 |
| Median (IQR) | 85 (72.5–95) | 80 (60–90) | 95 (85–100) | < 0.001 |
| Correct items, n/N (%) | | | | |
| 1. Safety | 149/201 (74.1%) | 67/102 (65.7%) | 82/99 (82.8%) | 0.006 |
| 2. Unconsciousness | 125/201 (62.2%) | 41/102 (40.2%) | 84/99 (84.8%) | < 0.001 |
| 3. Shout for help | 137/201 (68.2%) | 41/102 (40.2%) | 96/99 (97%) | < 0.001 |
| 4. Airway | 104/201 (51.7%) | 32/102 (31.4%) | 72/99 (72.7%) | < 0.001 |
| 5. Check respiration | 173/201 (86.1%) | 87/102 (85.3%) | 86/99 (86.9%) | 0.747 |
| 6. Ventilation | 170/201 (84.6%) | 78/102 (76.5%) | 92/99 (92.9%) | 0.001 |
| 7. Check vital signs | 159/201 (79.1%) | 68/102 (66.7%) | 91/99 (91.9%) | < 0.001 |
| 8. Chest compressions | 151/201 (75.1%) | 69/102 (67.6%) | 82/99 (82.8%) | 0.013 |
| 9. Ventilation and chest compressions coordination | 190/201 (94.5%) | 97/102 (95.1%) | 93/99 (93.9%) | 0.718 |
| 10. Activate EMS | 183/201 (91%) | 92/102 (90.2%) | 91/99 (91.9%) | 0.669 |
| 11. Check vital signs | 174/201 (86.6%) | 85/102 (83.3%) | 89/99 (89.9%) | 0.172 |
| 12. Ordered CPR steps | 178/201 (88.6%) | 84/102 (82.4%) | 94/99 (94.9%) | 0.005 |
| 13. Overall CPR effectiveness | 152/201 (75.6%) | 73/102 (71.6%) | 79/99 (79.8%) | 0.174 |
| 14. Communication | 191/201 (95%) | 102/102 (100%) | 89/99 (89.9%) | < 0.001 |
| Over 70% | 160/201 (79.6%) | 72/102 (70.6%) | 88/99 (88.9%) | 0.001 |

Overall score did not follow a normal distribution. The Student t-test and Mann–Whitney test were used to compare mean and median scores. The chi-squared test was used to compare proportions

Table 5.

Comparison between 2022 and 2023 at HGM

| | Overall 2022 + 2023 | 2022 | 2023 | P |
|---|---|---|---|---|
| Number of students | 191 | 108 | 83 | |
| Mean ± SD | 86.4 ± 16.6 | 82.4 ± 16.6 | 91.5 ± 15.3 | < 0.001 |
| Median (IQR) | 90 (80–100) | 85 (80–95) | 95 (90–100) | < 0.001 |
| Correct items, n/N (%) | | | | |
| 1. Safety | 142/191 (74.3%) | 73/108 (67.6%) | 69/83 (83.1%) | 0.015 |
| 2. Unconsciousness | 134/191 (70.2%) | 55/108 (50.9%) | 79/83 (95.2%) | < 0.001 |
| 3. Shout for help | 129/191 (67.5%) | 54/108 (50%) | 75/83 (90.4%) | < 0.001 |
| 4. Airway | 117/191 (61.3%) | 49/108 (45.4%) | 68/83 (81.9%) | < 0.001 |
| 5. Check respiration | 178/191 (93.2%) | 102/108 (94.4%) | 76/83 (91.6%) | 0.434 |
| 6. Ventilation | 175/191 (91.6%) | 97/108 (89.8%) | 78/83 (94%) | 0.304 |
| 7. Check vital signs | 169/191 (88.5%) | 93/108 (86.1%) | 76/83 (91.6%) | 0.242 |
| 8. Chest compressions | 157/191 (82.2%) | 86/108 (79.6%) | 71/83 (85.5%) | 0.290 |
| 9. Ventilation and chest compressions coordination | 184/191 (96.3%) | 103/108 (95.4%) | 81/83 (97.6%) | 0.418 |
| 10. Activate EMS | 171/191 (89.5%) | 94/108 (87%) | 77/83 (92.8%) | 0.200 |
| 11. Check vital signs | 174/191 (91.1%) | 100/108 (92.6%) | 74/83 (89.2%) | 0.408 |
| 12. Ordered CPR steps | 180/191 (94.2%) | 100/108 (92.6%) | 80/83 (96.4%) | 0.265 |
| 13. Overall CPR effectiveness | 168/191 (88%) | 93/108 (86.1%) | 75/83 (90.4%) | 0.371 |
| 14. Communication | 177/191 (92.7%) | 102/108 (94.4%) | 75/83 (90.4%) | 0.283 |
| Over 70% | 169/191 (88.5%) | 91/108 (84.3%) | 78/83 (94%) | 0.037 |

Overall score did not follow a normal distribution. The Student t-test and Mann–Whitney test were used to compare mean and median scores. The chi-squared test was used to compare proportions

The percentage of students who exceeded a score of 70 was greater in 2023 (91.2%) than in 2022 (77.6%), P < 0.001. This was also the case in each hospital: at HCSC, 70.6% in 2022 and 88.9% in 2023 (P = 0.001); and at HGM, 84.3% in 2022 and 94% in 2023 (P = 0.037).

At HCSC, the percentage of students who performed most manoeuvres correctly was significantly higher in 2023 (Table 4). At HGM, most manoeuvres were also performed correctly more often in 2023 than in 2022, but the differences were significant only for scene safety, detection of unconsciousness, shouting for help and opening the airway (Table 5).

Discussion

Our study shows that the OSCE is a good method for assessing PBLS skills in medical students and for detecting the CPR manoeuvres with which they have most difficulty. These results suggest that the OSCE could be an appropriate method for monitoring and reinforcing CPR teaching. Furthermore, our study showed that three months after training, 10% of medical students were unable to perform adequate PBLS.

With prior preparation, the OSCE is an objective, fast, reproducible and simple evaluation method. It has been suggested that the stress of the OSCE may lower students' performance so that it does not properly reflect their skills [24]. However, the stress of a real CA situation is greater, so the stress of the test may even make the CPR station more useful for evaluation [24, 25]. Some authors have shown that prior preparation for the OSCE [26] and training with simulated clinical situations [27] reduce stress and improve performance.

Our results suggest that the most probable cause of the differences in results between the two hospitals was the difference in their theoretical and practical CPR teaching programmes (a 2.5-hour PBLS seminar versus an 8-hour PILS course). When the HCSC programme was reinforced, the differences diminished, but CPR training through a structured PILS course continued to achieve better results than training in PBLS alone. There is no clear consensus on the level of PLS training that medical students should receive, and in most universities paediatric training is only a complementary part of general CPR training [12, 14–17]. Training in PILS requires more time, resources and teaching staff. However, it is very highly rated by students and achieves a higher level of training. In our experience, PILS training for medical students is feasible and yields better skills [17].

On the other hand, our study shows that in the OSCE three months after training, 10% of medical students did not manage to perform adequate PBLS. These data agree with other studies showing that practical CPR skills decline quickly if they are not kept up to date and that, regardless of the level of training, refresher and maintenance activities are essential [28–30]. Repeating the OSCE several times during the undergraduate medical degree could serve to verify the results of these refresher activities.

Evaluation of improvement measures

The OSCE makes it possible to evaluate the efficacy of improvement measures in CPR teaching, as occurred in our study.

Our results show that improvement activities in CPR training, such as increasing practical exercise time and reinforcing the skills that students find hardest to learn or most often forget, achieve a significant improvement in skills. Therefore, the OSCE can be used to evaluate not only student skills but also the training model.

However, the OSCE should not only be an evaluation instrument; it should also have a training function [31]. For this reason, we included a brief debriefing with the student at the end of each station to correct and reinforce knowledge.

Limitations

First, one possible limitation of the OSCE evaluation is individual variability between evaluators. Ensuring homogeneous criteria among evaluators is not easy. Four evaluators took part in both years while the remainder differed, so bias cannot be ruled out. However, all evaluators were paediatric CPR instructors accredited by the SGPNCPR and received specific training in OSCE evaluation, which should reduce individual assessment bias. To limit individual variability, some authors have proposed having two evaluators at each station [32], although this requires a large number of evaluators, especially when many students take the OSCE simultaneously.

Yeates has devised a method, "Video-based Examiner Score Comparison and Adjustment" (VESCA), in which video recordings of station performances are scored by several examiners [33]. This may reduce individual variability, although it also means more work for evaluators.

Another limitation was that the participants were not the same in 2022 and 2023. We cannot exclude that the better results in 2023 were due to that year's students being better than those of the previous year rather than to the changes in teaching. However, given the number of students studied, this seems unlikely, since admission to the Complutense University Medical School is based on the score achieved in a national examination and the criteria did not change in those years.

In addition, the case studies used in the stations differed between the two years, which could partly account for the differences in results. Some manoeuvres, such as opening the airway, may be slightly more complicated in a trauma patient, as in the 2022 infant case, but the remaining manoeuvres are the same, and CPR manoeuvres in children are no more complicated than in infants.

The OSCE evaluation provided a score, but it is unclear whether this score corresponds to true competence in delivering CPR in a clinical setting. The checklist system used in the OSCE has the disadvantage that it only classifies each item as suitable or unsuitable and does not discriminate between different degrees of compliance. This scoring system was used for the entire OSCE and could not be changed for the PBLS station. In our opinion, a five-level scoring system (very good, 5 points; good, 4 points; sufficient, 3 points; poor, 2 points; very poor, 1 point; not performed, 0 points), which is the system the SGPNCPR recommends for PLS courses, discriminates students' skills better, although it takes more time and is likely to create greater discrepancies among evaluators. Other authors propose combining checklists and rating scales, mainly to evaluate complex skills [32].

Finally, we did not perform a long-term evaluation to see whether PBLS skills are maintained over time. However, as discussed, several studies have shown that without refresher courses these skills gradually decline, which underlines the importance of periodic refresher training [28–30].

Conclusions

The OSCE successfully identified differences in the performance of CPR skills between medical student populations exposed to different training programs, as well as score improvement following training program modifications.

Acknowledgements

UCM Paediatric CPR Training Group: Maria José Santiago, Angel Carrillo, Marta Cordero, Santiago Mencía, Laura Butragueño, Sara Alcón, Ana Belén García-Moreno, Raquel Cieza, Marta Paúl, María Escobar, Cristina Zapata, Cristina De Angeles, Gloria Herranz.

We are grateful to Francisco Cañizo and the fifth year OSCE coordinators.

Declaration of AI and AI-assisted technologies in the writing process

The authors hereby declare they did not use generative AI and AI-assisted technologies during the manuscript writing process.

Abbreviations

- CPR: Cardiopulmonary Resuscitation
- HGM: Hospital General Universitario Gregorio Marañón
- HCSC: Hospital Clínico Universitario San Carlos
- OSCE: Objective Structured Clinical Examination
- PBLS: Paediatric Basic Life Support
- PILS: Paediatric Immediate Life Support
- SGPNCPR: Spanish Group for Paediatric and Neonatal CPR
- VESCA: Video-based Examiner Score Comparison and Adjustment

Authors’ contributions

Jesús López-Herce (JLH): Conceptualization; Data curation; Formal analysis; Investigation; Methodology; Supervision; Writing—original draft; and Writing—review & editing. Esther Aleo (EA): Conceptualization; Formal analysis; Investigation; Methodology; Supervision; Writing—original draft; and Writing—review & editing. Rafael González (RG): Data curation; Formal analysis; Investigation; Methodology; Writing—original draft; and Writing—review & editing. UCM Paediatric CPR Training Group: Investigation; Methodology; Writing—review & editing.

Funding

This research did not receive any specific grant from funding agencies in the public, commercial, or not-for-profit sectors.

Availability of data and materials

The datasets used and/or analysed during the current study are available from the corresponding author on reasonable request.

Declarations

Ethics approval and consent to participate

The study was carried out in accordance with the Code of Ethics of the World Medical Association (Declaration of Helsinki) for experiments involving humans. The study was approved by the local Ethics Committee (Proyecto Innova Docentia 332/2020). Students and teachers signed informed consent forms to take part in the OSCE, and their data were analysed for the study.

Consent for publication

Not applicable.

Competing interests

The authors declare no competing interests.

Footnotes

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

Jesús López-Herce, Email: jesuslop@ucm.es.

Esther Aleo, Email: esther.aleo@salud.madrid.org.

Rafael González, Email: rafa_gonzalez_cortes@hotmail.com.

and UCM Paediatric CPR Training Group:

Maria José Santiago, Angel Carrillo, Marta Cordero, Santiago Mencía, Laura Butragueño, Sara Alcón, Ana Belén García-Moreno, Raquel Cieza, Marta Paúl, María Escobar, Cristina Zapata, Cristina De Angeles, and Gloria Herranz

References

- 1. Harden RM, Stevenson M, Downie WW, Wilson GM. Assessment of clinical competence using objective structured clinical examination (OSCE). Br Med J. 1975;1:447–51.

- 2. Harden RM, Gleeson FA. Assessment of clinical competence using an objective structured clinical examination (OSCE). Med Educ. 1979;13:41–54.

- 3. Onwudiegwu U. OSCE: design, development and deployment. J West Afr Coll Surg. 2018;8:1–22.

- 4. Ouldali N, Le Roux E, Faye A, et al. Early formative objective structured clinical examinations for students in the pre-clinical years of medical education: A non-randomized controlled prospective pilot study. PLoS One. 2023;18:e0294022.

- 5. Alizadeh S, Zamanzadeh V, Ostovar S, et al. The development and validation of a standardised eight-station OSCE for registration of undergraduate nursing students: A Delphi study. Nurse Educ Pract. 2023;73:103817.

- 6. Wollen JT, Gee JS, Nguyen KA, Surati DD. Development of a communication-based virtual patient counseling objective structured clinical examination (OSCE) for first year student pharmacists. PEC Innov. 2023;3:100215.

- 7. Régent A, Thampy H, Singh M. Assessing clinical reasoning in the OSCE: pilot-testing a novel oral debrief exercise. BMC Med Educ. 2023;23:718.

- 8. Nuzzo A, Tran-Dinh A, Courbebaisse M, University of Paris OSCE and SBT groups, et al. Improved clinical communication OSCE scores after simulation-based training: Results of a comparative study. PLoS One. 2020;15:e0238542.

- 9. Chokshi B, Battista A, Merkebu J, Hansen S, Blatt B, Lopreiato J. The SOAP feedback training program. Clin Teach. 2023;20:e13611.

- 10. Ortiz Worthington R, Sekar D, McNeil M, Rothenberger S, Merriam S. Development and pilot testing of a longitudinal skills-based feedback and conflict resolution curriculum for internal medicine residents. Acad Med. 2023. doi:10.1097/ACM.0000000000005560.

- 11. Martinez FT, Soto JP, Valenzuela D, González N, Corsi J, Sepúlveda P. Virtual clinical simulation for training amongst undergraduate medical students: a pilot randomised trial (VIRTUE-Pilot). Cureus. 2023;15:e47527.

- 12. García-Suárez M, Méndez-Martínez C, Martínez-Isasi S, Gómez-Salgado J, Fernández-García D. Basic life support training methods for health science students: a systematic review. Int J Environ Res Public Health. 2019;16:768.

- 13. de Ruijter PA, Biersteker HA, Biert J, van Goor H, Tan EC. Retention of first aid and basic life support skills in undergraduate medical students. Med Educ Online. 2014;19:24841.

- 14. Carrillo Álvarez A, López-Herce Cid J, Moral Torrero R, Sancho PL. The teaching of basic pediatric cardiopulmonary resuscitation in the degree course in medicine and surgery. An Esp Pediatr. 1999;50:571–5.

- 15. O’Leary F, Allwood M, McGarvey K, Howse J, Fahy K. Standardising paediatric resuscitation training in New South Wales, Australia: RESUS4KIDS. J Paediatr Child Health. 2014;50:405–10.

- 16. Thomson NM, Campbell DE, O’Leary FM. Teaching medical students to resuscitate children: an innovative two-part programme. Emerg Med Australas. 2011;23:741–7.

- 17. López-Herce J, Carrillo A, Martínez O, Morito AM, Pérez S, López J, Pediatric Cardiopulmonary Resuscitation Group. Basic and immediate paediatric cardiopulmonary resuscitation training in medical students. Educ Med. 2019;20:155–61.

- 18. Johnson G, Reynard K. Assessment of an objective structured clinical examination (OSCE) for undergraduate students in accident and emergency medicine. J Accid Emerg Med. 1994;11:223–6.

- 19. Mahling M, Münch A, Schenk S, et al. Basic life support is effectively taught in groups of three, five and eight medical students: a prospective, randomized study. BMC Med Educ. 2014;14:185.

- 20. Yang F, Zheng C, Zhu T, Zhang D. Assessment of life support skills of resident dentists using OSCE: cross-sectional survey. BMC Med Educ. 2022;22:710.

- 21. Rodríguez-Matesanz M, Guzmán-García C, Oropesa I, Rubio-Bolivar J, Quintana-Díaz M, Sánchez-González P. A new immersive virtual reality station for cardiopulmonary resuscitation objective structured clinical exam evaluation. Sensors (Basel). 2022;22:4913.

- 22. Stephan F, Groetschel H, Büscher AK, Serdar D, Groes KA, Büscher R. Teaching paediatric basic life support in medical schools using peer teaching or video demonstration: A prospective randomised trial. J Paediatr Child Health. 2018;54:981–6.

- 23. Farhadi R, Azandehi BK, Amuei F, Ahmadi M, Zazoly AZ, Ghorbani AA. Enhancing residents’ neonatal resuscitation competency through team-based simulation training: an intervention educational study. BMC Med Educ. 2023;23:743.

- 24. White K. To OSCE or not to OSCE? BMJ. 2023;381:1081.

- 25. Bartfay WJ, Rombough R, Howse E, Leblanc R. Evaluation. The OSCE approach in nursing education. Can Nurse. 2004;100:18–23.

- 26. Braier-Lorimer DA, Warren-Miell H. A peer-led mock OSCE improves student confidence for summative OSCE assessments in a traditional medical course. Med Teach. 2022;44:535–40.

- 27. Philippon AL, Truchot J, De Suremain N, et al. Medical students’ perception of simulation-based assessment in emergency and paediatric medicine: a focus group study. BMC Med Educ. 2021;21:586.

- 28. Au K, Lam D, Garg N, et al. Improving skills retention after advanced structured resuscitation training: A systematic review of randomized controlled trials. Resuscitation. 2019;138:284–96.

- 29. Patocka C, Cheng A, Sibbald M, et al. A randomized education trial of spaced versus massed instruction to improve acquisition and retention of paediatric resuscitation skills in emergency medical service (EMS) providers. Resuscitation. 2019;141:73–80.

- 30. Greif R, Bhanji F, Bigham BL, et al. Education, implementation, and teams: 2020 International consensus on cardiopulmonary resuscitation and emergency cardiovascular care science with treatment recommendations. Resuscitation. 2020;156:A188–239.

- 31. Chisnall B, Vince T, Hall S, Tribe R. Evaluation of outcomes of a formative objective structured clinical examination for second-year UK medical students. Int J Med Educ. 2015;6:76–83.

- 32. Rushforth HE. Objective structured clinical examination (OSCE): review of literature and implications for nursing education. Nurse Educ Today. 2007;27:481–90.

- 33. Yeates P, Maluf A, Cope N, et al. Using video-based examiner score comparison and adjustment (VESCA) to compare the influence of examiners at different sites in a distributed objective structured clinical exam (OSCE). BMC Med Educ. 2023;23:803.
