Abstract

Rapid and accurate point-of-care (POC) tuberculosis (TB) diagnostics are crucial to bridge the TB diagnostic gap. Leveraging recent advancements in COVID-19 diagnostics, we explored adapting commercially available POC SARS-CoV-2 tests for TB diagnosis in line with the World Health Organization (WHO) target product profiles (TPPs). A scoping review was conducted following PRISMA-ScR guidelines to systematically map POC antigen and molecular SARS-CoV-2 diagnostic tests potentially meeting the TPPs for TB diagnostic tests for peripheral settings. Data were gathered from PubMed/MEDLINE, bioRxiv, medRxiv, publicly accessible in vitro diagnostic test databases, and developer websites up to 23 November 2022. Data on developer attributes, operational characteristics, pricing, clinical performance, and regulatory status were charted using standardized data extraction forms and evaluated with a standardized scorecard. A narrative synthesis of the data is presented. Our search yielded 2003 reports, with 408 meeting eligibility criteria. Among these, we identified 66 commercialized devices: 22 near-POC antigen tests, 1 POC molecular test, 31 near-POC molecular tests, and 12 low-complexity molecular tests potentially adaptable for TB. The highest-scoring SARS-CoV-2 diagnostic tests were the near-POC antigen platform LumiraDx (Roche, Basel, Switzerland), the POC molecular test Lucira Check-It (Pfizer, New York, NY, USA), the near-POC molecular test Visby (Visby, San Jose, CA, USA), and the low-complexity molecular platform Idylla (Biocartis, Lausanne, Switzerland). We highlight a diverse landscape of commercially available diagnostic tests suitable for potential adaptation to peripheral TB testing. This work aims to bolster global TB initiatives by fostering stakeholder collaboration, leveraging SARS-CoV-2 diagnostic technologies for TB, and uncovering new commercial avenues to tackle longstanding challenges in TB diagnosis.

Keywords: tuberculosis, COVID-19, rapid diagnostic tests, point-of-care testing, missed diagnosis

1. Introduction

As healthcare systems gradually recover from the COVID-19 pandemic, tuberculosis (TB) remains the world’s leading infectious killer, with 10.6 million people falling ill and 1.3 million deaths in 2022 alone [1]. Despite a modest 8.7% reduction in TB incidence between 2015 and 2022, the World Health Organization (WHO) End TB Strategy’s target of a 50% reduction by 2025 remains distant [1]. To reach this milestone and curb community transmission, closing the TB diagnostic gap is essential [2]. Sputum smear microscopy, commonly used in low-resource settings because it is rapid and inexpensive, has low sensitivity, which leads to missed TB cases [3]. WHO-recommended rapid diagnostic tests (WRDs) offer higher accuracy and can detect drug resistance, even in decentralized settings. However, widespread adoption of WRDs is impeded by high costs and maintenance requirements [3]. As a result, only 47% of TB cases reported in 2022 were diagnosed using WRDs [4,5,6]. Bridging the TB diagnostic gap requires better access to, and use of, rapid, accurate, and affordable point-of-care (POC) diagnostic tests, together with better linkage to treatment [7].

To guide developers toward fit-for-purpose TB diagnostics, the WHO defined high-priority target product profiles (TPPs) in 2014 and published a revision in August 2024 [8,9]. Current WRDs fail to meet TPP requirements for peripheral settings because of their reliance on sputum, inadequate performance, high cost, and operational limitations [8,10,11]. Fit-for-purpose peripheral TB diagnostic tests meeting TPP criteria are needed to achieve the WHO’s goal of 100% global WRD coverage [6].

Increased funding and collaborative efforts, such as the Access to COVID-19 Tools Accelerator and RADx, have driven significant growth in diagnostic R&D, resulting in diverse diagnostic products for remote and at-home testing [12,13]. As the COVID-19 diagnostics market declines, developers are exploring new applications for their technologies [12]. TB is a promising choice due to its substantial disease burden, supportive government initiatives, and in-kind funding for validation through established research networks. Given the similarities between the two diseases in airborne transmission via infectious aerosols and droplets, primary pulmonary involvement, and replication sites, current COVID-19 diagnostic tests could potentially be applied to TB. Shared potential sample types, such as oral swabs, further support the feasibility of using similar diagnostic approaches [3,14]. These shared characteristics suggest that diagnostic technologies developed for COVID-19 might be adapted for TB detection, potentially enhancing diagnostic efficiency and accessibility.

This scoping review systematically maps commercially available POC antigen and molecular SARS-CoV-2 diagnostics that could meet the TPP for TB diagnostics [8]. It aims to identify promising innovations to facilitate interactions among device and assay developers and other key stakeholders, leveraging COVID-19-driven diagnostics to address the TB diagnostic gap.

2. Methods

This scoping review examined the scientific literature, SARS-CoV-2 test databases, and information from developers, following PRISMA Extension for Scoping Reviews (PRISMA-ScR) guidelines (see Table S1) and Levac et al.’s methodological framework [15,16]. We previously published the protocol for this review [17]. Because of the vast literature, we split the review into two parts: this publication focuses on commercialized diagnostics, while a forthcoming publication will focus on tests in development or pre-commercialization.

2.1. Definitions and Eligibility Criteria

Definitions and eligibility criteria are described in the protocol [17]. Because ‘minimal biosafety requirements’ were rarely reported, this parameter was omitted from data collection. We introduced the following sub-categories for peripheral in vitro diagnostic (IVD) tests [18]:

POC tests: Tests performed at or near the site of patient care and designed to be instrument-free, disposable, and independent of specific infrastructure (e.g., mains electricity, laboratory equipment, or a cold chain). They require no special skills to administer. Example: Determine™ TB LAM Ag Test (Abbott, Abbott Park, IL, USA).

Near-POC tests: Tests that may be instrument-free or instrument-based but require minimal infrastructure, such as mains electricity for operation or for recharging batteries. They can be used in healthcare settings without laboratories and require only basic technical skills to administer, such as simple pipetting and sample transfer steps that do not demand precise timing or volumes. Ideally, they come with pre-set volume transfer pipettes. Example: GeneXpert Edge (Cepheid, Sunnyvale, CA, USA).

Low-complexity tests: Instrument-based tests intended for use in healthcare settings with basic laboratory infrastructure and mains electricity. They require basic technical skills and laboratory equipment, including pipettes, vortex mixers, heating devices, freezers, and/or separate test tubes. Examples: Truenat (Molbio Diagnostics, Nagve, India), GeneXpert 6-/10-color platforms (Cepheid, Sunnyvale, CA, USA).

2.2. Information Sources

We initially searched PubMed/MEDLINE for the peer-reviewed literature and bioRxiv and medRxiv for pre-prints [17]. Additional information on tests identified through these databases was obtained from the IVD databases and developer websites listed in the protocol [17]. The China National Medical Products Administration and Indian Central Drugs Standard Control Organization databases were not searched because of limited search function and language barriers [19,20]. The European Database on Medical Devices was inaccessible for data search at the time of data collection [21].

2.3. Search

Table 1 in the protocol shows the PubMed/MEDLINE search string [17]. It was adapted for bioRxiv and medRxiv using the medrxivr package in R (version 4.0.5; R Foundation for Statistical Computing) (see Supplementary Methods, Section S1). No restrictions were imposed on publication date or language.
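Boolean literature searches of this kind are commonly structured as an AND of OR-groups: synonyms for one concept are alternatives, and every concept must be matched. The Python sketch below illustrates only that logic under stated assumptions; it is not the medrxivr API, the terms in the hypothetical `QUERY` are placeholders rather than the protocol's search string, and the record fields `title` and `abstract` are assumed.

```python
from typing import Iterable, List

# Hypothetical concept groups for illustration; NOT the protocol's search string.
# Terms within a group are OR-ed; the groups themselves are AND-ed.
QUERY: List[List[str]] = [
    ["sars-cov-2", "covid-19", "coronavirus"],
    ["point-of-care", "rapid", "near-patient"],
    ["antigen", "molecular", "pcr", "lamp"],
]


def matches(text: str, query: List[List[str]]) -> bool:
    """True if the text contains at least one term from every concept group.

    Uses naive case-insensitive substring matching (e.g. "lamp" would also
    match "clamp"); a real search would use word boundaries or regex.
    """
    lowered = text.lower()
    return all(any(term.lower() in lowered for term in group) for group in query)


def search(records: Iterable[dict], query: List[List[str]]) -> List[dict]:
    """Keep pre-print records whose title or abstract satisfies the query."""
    return [
        rec for rec in records
        if matches(f"{rec['title']} {rec['abstract']}", query)
    ]


if __name__ == "__main__":
    preprints = [
        {"title": "Rapid antigen test for SARS-CoV-2", "abstract": "Field evaluation."},
        {"title": "TB incidence trends", "abstract": "An epidemiological model."},
    ]
    print([rec["title"] for rec in search(preprints, QUERY)])
```

Expressing the query as a list of groups keeps each concept's synonyms together, so a concept can be broadened without touching the others.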

2.4. Selection of Sources of Evidence

Retrieved articles were collated using Covidence software (Veritas Health Innovation, Melbourne, Australia, available online: https://www.covidence.org/, accessed on 29 September 2024), which automatically removes duplicates [22]. Two reviewers (S.Y., L.H.) independently screened titles and abstracts against eligibility criteria, followed by full-text screening using the same software. Discrepancies were resolved through consensus.
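Covidence's internal deduplication algorithm is not described here. As a hypothetical illustration of the two steps above, the Python sketch below removes duplicates by DOI or normalized title and lists the records on which two independent reviewers disagreed, which are the ones that would go to consensus discussion. The field names (`doi`, `title`), the record IDs, and the matching rule are assumptions, not Covidence's method.

```python
import re
from typing import Dict, List


def normalize_title(title: str) -> str:
    """Lower-case and drop non-alphanumerics so trivially different titles match."""
    return re.sub(r"[^a-z0-9]", "", title.lower())


def deduplicate(records: List[dict]) -> List[dict]:
    """Keep the first occurrence of each record, matching on DOI or normalized title."""
    seen_dois, seen_titles = set(), set()
    unique = []
    for rec in records:
        doi = (rec.get("doi") or "").strip().lower()
        title = normalize_title(rec["title"])
        if (doi and doi in seen_dois) or title in seen_titles:
            continue  # duplicate of an earlier record
        if doi:
            seen_dois.add(doi)
        seen_titles.add(title)
        unique.append(rec)
    return unique


def screening_conflicts(reviewer_a: Dict[str, bool], reviewer_b: Dict[str, bool]) -> List[str]:
    """IDs screened by both reviewers whose include (True) / exclude (False) votes differ."""
    shared = reviewer_a.keys() & reviewer_b.keys()
    return sorted(rid for rid in shared if reviewer_a[rid] != reviewer_b[rid])


if __name__ == "__main__":
    records = [
        {"title": "Rapid TB test", "doi": "10.1000/ABC"},
        {"title": "Rapid TB Test.", "doi": None},        # same title, no DOI
        {"title": "Another study", "doi": "10.1000/abc"},  # same DOI, different case
    ]
    print(len(deduplicate(records)))
    print(screening_conflicts({"r1": True, "r2": False}, {"r1": True, "r2": True}))
```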

2.5. Data Charting

We used two Google Forms for data charting, developed by one reviewer (S.Y.) and iteratively revised by both reviewers (S.Y., L.H.). One reviewer (L.H.) charted information from peer-reviewed articles and pre-prints (see Table S2). For studies mentioning multiple tests, one record was charted per test. Additional information on tests identified in the included articles was collected from developer websites and IVD databases using the second form (see Table S3) [17]. Results tables were cross-checked by second reviewers (S.J., R.D.). Data charted from the various sources were collated in a separate Excel sheet for each diagnostic test.
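The one-record-per-test rule and the per-test collation amount to a simple reshaping of the charted data. The Python sketch below is hypothetical: the `study_id` and `tests` fields stand in for the actual charting form (Table S2), and the dictionary of lists plays the role of the separate per-test Excel sheets.

```python
from collections import defaultdict
from typing import Dict, List


def chart_records(studies: List[dict]) -> List[dict]:
    """Expand each study into one charted record per test it mentions."""
    return [
        {"study_id": study["study_id"], "test": test}
        for study in studies
        for test in study["tests"]
    ]


def collate_by_test(records: List[dict]) -> Dict[str, List[dict]]:
    """Group charted records by test, mirroring one spreadsheet per diagnostic test."""
    by_test: Dict[str, List[dict]] = defaultdict(list)
    for rec in records:
        by_test[rec["test"]].append(rec)
    return dict(by_test)


if __name__ == "__main__":
    studies = [
        {"study_id": "S1", "tests": ["LumiraDx", "Idylla"]},  # study mentioning two tests
        {"study_id": "S2", "tests": ["LumiraDx"]},
    ]
    sheets = collate_by_test(chart_records(studies))
    print({test: len(rows) for test, rows in sheets.items()})
```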

2.6. Variables

We abstracted data on test description, operational characteristics, pricing, performance, and commercialization status, as listed and defined in Table 2 in the study protocol [17].

2.7. Synthesis of Results

A narrative synthesis detailing major aspects of the included tests, such as developer information, test characteristics, and clinical performance, stratified by technology type (antigen and molecular) and test category (low-complexity, near-POC, and POC), is provided in the text and tables.

We adapted Lehe et al.’s standardized scorecard for evaluating the operational characteristics of POC diagnostic devices to the requirements of the 2014 TPPs, also taking into account the draft revised TPPs available at the time of data analysis [23]. Each test received an overall score of up to 110 points based on 22 scoring criteria across seven categories. Each criterion was assigned 1 to 5 points, with missing information scored as 1 point, so the lowest attainable overall score was 22. A simplified version of the adapted scorecard is shown in Table 1 (see Table S4 for the detailed scoring methodology). Two reviewers (L.H., S.J.) independently scored each device, and conflicts were resolved through discussion and consensus with a third reviewer (S.Y.). We present the characteristics and performance of the highest-scoring tests in tables, figures, and text.
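The aggregation described above (22 criteria scored 1 to 5, missing information scored 1, criterion points summed into category scores and category scores summed into an overall score out of 110) can be sketched in Python as follows. This is an illustrative sketch only: the criterion-to-category mapping follows Table 1, but the thresholds that convert raw specifications into points are defined in Table S4 and are not reproduced, so the points passed in are assumed to be already assigned.

```python
from typing import Dict, Optional, Tuple

# Criterion numbers per scoring category, following Table 1.
CATEGORY_CRITERIA: Dict[str, Tuple[int, ...]] = {
    "POC features of equipment": tuple(range(1, 11)),
    "POC features of test consumables": (11, 12, 13),
    "Ease of use": (14, 15),
    "Performance": (16, 17, 18),
    "Cost": (19, 20),
    "Platform versatility": (21,),
    "Parameters": (22,),
}
MIN_POINTS, MAX_POINTS = 1, 5
MISSING_POINTS = 1  # missing information is scored as one point


def score_test(points: Dict[int, Optional[int]]) -> Dict[str, Tuple[int, int]]:
    """Return (score, maximum) per category plus an 'Overall' entry.

    `points` maps criterion number (1-22) to 1-5 points; absent or None
    entries are treated as missing information.
    """
    result: Dict[str, Tuple[int, int]] = {}
    overall = 0
    for category, criteria in CATEGORY_CRITERIA.items():
        total = 0
        for criterion in criteria:
            value = points.get(criterion)
            if value is None:
                value = MISSING_POINTS
            if not MIN_POINTS <= value <= MAX_POINTS:
                raise ValueError(f"criterion {criterion}: {value} outside {MIN_POINTS}-{MAX_POINTS}")
            total += value
        result[category] = (total, MAX_POINTS * len(criteria))
        overall += total
    n_criteria = sum(len(c) for c in CATEGORY_CRITERIA.values())
    result["Overall"] = (overall, MAX_POINTS * n_criteria)
    return result


if __name__ == "__main__":
    # A test with no reported information at all receives the floor score.
    print(score_test({})["Overall"])
```

Scoring missing information as 1 rather than 0 is what sets the floor at 22 points; it also means that unreported costs or performance data pull a test down, as noted in the Limitations.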

Table 1.

Simplified version of the scorecard (adapted from Lehe et al.) [23].

| Scoring Category | Scoring Criteria | Scoring Variables |
|---|---|---|
| Category 1: POC features of equipment | Technical specifications | 1 Instrument size |
| | | 2 Instrument weight |
| | | 3 Power requirements |
| | | 4 Instrument-free |
| | | 5 Connectivity (data export options) |
| | Data analysis | 6 Integrated data analysis |
| | | 7 Integrated electronics and software |
| | Testing capacity | 8 Time-to-result |
| | | 9 Hands-on time |
| | | 10 Throughput capacity |
| Category 2: POC features of test consumables | Operating conditions | 11 Operating temperature |
| | | 12 Operating humidity |
| | Storage conditions | 13 Shelf life |
| Category 3: Ease of use | End-user requirements | 14 Potential end-user |
| | | 15 Number of manual sample processing steps |
| Category 4: Performance * | Analytical and clinical performance (COVID-19) | 16 Limit of detection (LoD) |
| | | 17 Clinical sensitivity |
| | | 18 Clinical specificity |
| Category 5: Cost | Upfront and user costs | 19 Capital cost of equipment |
| | | 20 Consumable cost |
| Category 6: Platform versatility | Multi-use ability | 21 Applicability of platform to pathogens other than SARS-CoV-2 |
| Category 7: Parameters | Test parameters | 22 Number of test parameters available for scoring |

Abbreviations: POC = point-of-care. Legend: * We only considered independently reported study estimates for scoring purposes. Developer-reported estimates were not used.

3. Results

3.1. Selection of Sources of Evidence

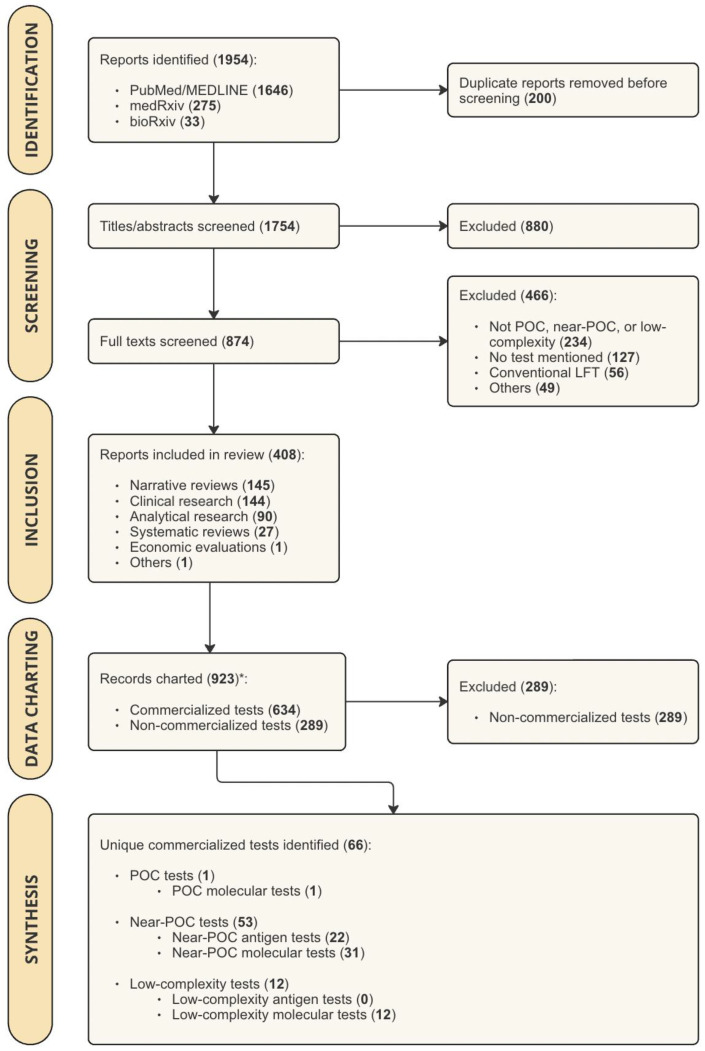

The literature search yielded 1954 results, which were imported into Covidence for screening. After removing 200 duplicates, 1754 articles underwent title/abstract screening. Of these, 874 were eligible for full-text screening. Common reasons for exclusion included assays and instruments not designed for peripheral settings (n = 234), no mention of specific tests (n = 127), and reporting on conventional lateral flow tests (LFTs) without reading devices or enhanced detection technologies (n = 56) (Figure 1). Ultimately, 408 articles were included, from which 66 commercialized diagnostic tests were identified.

Figure 1.

PRISMA Flow Chart showing the results of study search and screening procedures. Abbreviations: POC = point-of-care; LFT = Lateral Flow Test. Legend: * For studies that mentioned more than one test, multiple records were charted (one record per test).
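The screening counts reported above can be reconciled with simple arithmetic. The Python sketch below derives the intermediate flow-chart numbers from the totals given in the text; the "other reasons" value is only the remainder after the three most common full-text exclusion reasons, which the text does not itemize further.

```python
from typing import Dict


def prisma_flow(identified: int, duplicates: int, full_text: int, included: int,
                full_text_reasons: Dict[str, int]) -> Dict[str, int]:
    """Derive intermediate PRISMA counts from the reported totals."""
    screened = identified - duplicates
    excluded_full_text = full_text - included
    listed = sum(full_text_reasons.values())
    if listed > excluded_full_text:
        raise ValueError("listed exclusion reasons exceed full-text exclusions")
    return {
        "screened": screened,
        "excluded_title_abstract": screened - full_text,
        "excluded_full_text": excluded_full_text,
        "excluded_other_reasons": excluded_full_text - listed,
    }


flow = prisma_flow(
    identified=1954, duplicates=200, full_text=874, included=408,
    full_text_reasons={
        "not designed for peripheral settings": 234,
        "no specific test mentioned": 127,
        "conventional LFTs without reading devices": 56,
    },
)
# screened = 1754, excluded at title/abstract = 880,
# excluded at full text = 466, of which 49 for reasons not listed above
print(flow)
```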

3.2. Characteristics of Sources of Evidence

The main sources of evidence were clinical research papers, systematic reviews, and narrative reviews. Clinical performance data were charted from clinical research papers and systematic reviews. Narrative reviews provided details on platform and assay characteristics, occasionally including cost. Developer websites supplemented technical data with information on the test workflow and end-user requirements. The FIND COVID-19 Test Directory provided information on regulatory status, validated assay targets, sample types, and links to country-specific IVD databases for authorization documents such as Instructions for Use. Table S5 offers detailed information on the variables charted from all sources of evidence.

3.3. Synthesis of Results

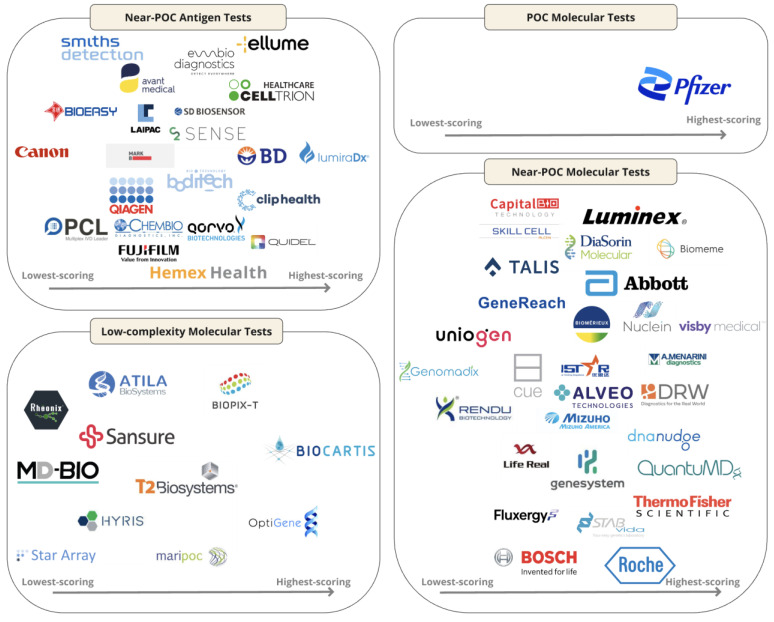

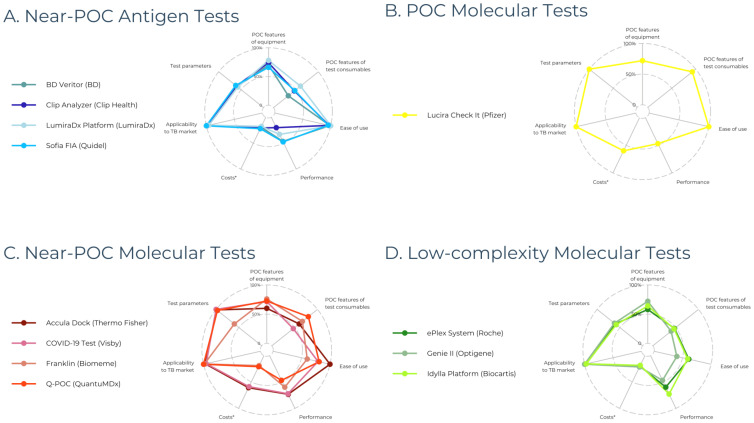

Among the 66 commercialized POC diagnostic tests for SARS-CoV-2, we identified 22 near-POC antigen tests, 1 POC molecular test, 31 near-POC molecular tests, and 12 low-complexity molecular tests. By definition, no POC antigen tests were included, as conventional instrument-free LFTs were excluded. The 63 manufacturers of included diagnostic tests are displayed in Figure 2. Developer and product characteristics, regulatory status, and clinical performance of included diagnostic tests are shown in Tables S6–S9. Table 2 presents the characteristics of the highest-scoring diagnostic test in each test category. Figure 3 displays scores across the seven categories for the three highest-scoring tests in each test category.

Figure 2.

Logo chart illustrating the 63 manufacturers of the 66 included diagnostic tests, sorted by scorecard score. Abbreviations: POC = point-of-care.

Figure 3.

Scorecard performance of the three highest-scoring diagnostic devices across the seven scoring categories, expressed as percentages. (A) Four near-POC antigen tests are displayed because BD Veritor, Clip Analyzer, and Sofia FIA received the same score. (B) Only one POC molecular test is shown because we identified only one test in this category. (C,D) The three highest-scoring near-POC and low-complexity molecular tests are shown, respectively. Abbreviations: POC = point-of-care. Legend: * costs (including capital costs and consumable costs) were not reported for most diagnostic tests, resulting in a score of 1/5 (displayed as 20%).

Table 2.

Characteristics of highest-scoring diagnostic devices stratified by test category.

| Technology classification | | Antigen test | Molecular test | Molecular test | Molecular test |
|---|---|---|---|---|---|
| Test classification | | Near-POC | POC | Near-POC | Low-complexity |
| Developer, product name | | LumiraDx, LumiraDx | Pfizer, Lucira Health | Visby, COVID-19 Test | Biocartis, Idylla™ |
| Overall score *,† | | 73/110 (66%) | 83/110 (75%) | 78/110 (71%) | 66/110 (60%) |
| Test summary: platform specifications | Dimensions (cm) | 21 × 9.7 × 7.3 | 19.1 × 8.0 × 5.2 | 13.8 × 6.7 × 4.4 | 30.5 × 19 × 50.5 |
| | Weight (g) | 1100 | 150 | NR | 18,600 |
| | Power supply | Integrated battery (20 tests) | AA batteries | Mains electricity (power adapter) | Standard electricity |
| | Connectivity | LumiraDx Connect cloud-based services; 2× USB ports; RFID reader; Bluetooth connectivity | None | None | USB port; direct RJ45 Ethernet cable; Idylla Visualizer (PDF viewer); Idylla Explore (cloud) |
| | Max. operating temperature (°C)/humidity (%) | 30/90 | 45/95 | 30/80 | 30/80 |
| | Multi-use ‡ | Yes | Yes | Yes | Yes |
| | Throughput capacity | 1 | 1 | 1 | 8 |
| | Costs (USD) | NR | Not applicable (instrument-free) | Not applicable (instrument-free) | NR |
| Test summary: COVID-19 assay specifications | Sample type | NS, NPS | NS | NS, NPS | NPS |
| | Hands-on time (min) | 1 | 1 | <2 | <2 |
| | Running time (min) | 12 | 30 | 30 | 90 |
| | Shelf life (months) | NR | 18 | NR | NR |
| | Costs per test (USD) | NR | >10 | NR | NR |
| | LoD (copies/mL) | NR | 900 | 100–1112 | 500 |
| | Sensitivity (%)/specificity (%) | 82.7/96.9 | 93.1/100.0 | 100.0/98.7 | 100.0/100.0 |
| POC features of equipment | Score | 39/50 (78%) | 36/50 (72%) | 36/50 (72%) | 32/50 (64%) |
| | Pros | | | | |
| | Cons | | | | |
| POC features of consumables | Score | 9/15 (60%) | 14/15 (93%) | 7/15 (47%) | 7/15 (47%) |
| | Pros | | | None | None |
| | Cons | | None | | |
| Ease of use | Score | 10/10 (100%) | 10/10 (100%) | 8/10 (80%) | 6/10 (60%) |
| | Pros | | | | |
| | Cons | None | None | None | |
| Performance | Score | 5/15 (33%) | 7/15 (47%) | 11/15 (73%) | 11/15 (73%) |
| | Pros | None | | | |
| | Cons | | | | |
| Cost | Score | 2/10 (20%) | 6/10 (60%) | 6/10 (60%) | 2/10 (20%) |
| | Pros | None | | | None |
| | Cons | | | | |
| Platform versatility | Score | 5/5 (100%) | 5/5 (100%) | 5/5 (100%) | 5/5 (100%) |
| | Pros | | | | |
| | Cons | None | None | None | None |

Abbreviations: POC = point-of-care; NR = not reported; NS = nasal swab; NPS = nasopharyngeal sample; LoD = limit of detection. Legend: * color coding: red = overall score/category score <33.3%; orange = overall score/category score ≥33.3% and <66.6%; green = overall score/category score ≥66.6%; † overall score: calculated by summing the seven category scores; category scores: calculated by aggregating the scores for each variable within the respective scoring category. Please refer to Table S4 for the detailed scoring methodology; ‡ multi-use is defined as the ability to analyze multiple biomarkers from one sample on a single diagnostic device (e.g., respiratory panels).
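The color bands in the legend can be expressed as a small function. The Python sketch below applies the legend's thresholds to the unrounded percentage; whether the original color coding used rounded or unrounded percentages is not stated, so that choice is an assumption. Boundary cases matter here: LumiraDx's overall score of 73/110 (66.4%) falls just below the green threshold, and a category score of 5/15 (33.3% when rounded, 33.33% unrounded) falls just above the red one.

```python
def color_band(score: float, max_score: float) -> str:
    """Map a score to the Table 2 color band via its unrounded percentage of the maximum."""
    if max_score <= 0:
        raise ValueError("max_score must be positive")
    pct = 100 * score / max_score
    if pct < 33.3:
        return "red"
    if pct < 66.6:
        return "orange"
    return "green"


# Overall scores of the highest-scoring test in each category (Table 2)
overall = {"LumiraDx": 73, "Lucira": 83, "Visby": 78, "Idylla": 66}
for name, score in overall.items():
    print(f"{name}: {100 * score / 110:.0f}% -> {color_band(score, 110)}")
```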

3.4. Near-POC Antigen Tests

We identified 22 near-POC antigen tests, including 9 reader-based LFTs, 11 automated immunoassays, and 2 biosensors. The LFT COVID-19 Home Test (Ellume Health, East Brisbane, Australia) was included in this category because result interpretation requires a mobile phone. The highest-scoring test, LumiraDx (LumiraDx, Stirling, UK, recently acquired by Roche Diagnostics, Basel, Switzerland) [24], is a multi-use, microfluidic immunofluorescence assay for detecting antigens in nasal swab (NS) and nasopharyngeal samples (NPS).

3.5. POC Molecular Tests

We identified one POC molecular test: the Lucira Check It COVID-19 Test (Pfizer, New York, NY, USA), a disposable test powered by two AA batteries that detects SARS-CoV-2 RNA in NS samples.

3.6. Near-POC Molecular Tests

This review includes 31 near-POC molecular tests: 25 based on PCR and 6 on isothermal amplification. Most were tabletop platforms, with some handheld platforms like Cue Reader (Cue, Walnut Creek, CA, USA), DoctorVida Pocket Test (STAB Vida, Coimbra, Portugal), and Accula Dock (Thermo Fisher Scientific, Waltham, MA, USA). One disposable molecular test, Visby COVID-19 Test (Visby, San Jose, CA, USA), was included in this category due to its requirement for mains electricity.

3.7. Low-Complexity Molecular Tests

The twelve identified low-complexity molecular tests were based on PCR (eight tests) or isothermal amplification technology (four tests). The highest-scoring test in this category was Idylla™ (Biocartis, Lausanne, Switzerland), a multi-use tabletop platform for detecting RNA in NPS that weighs 18.6 kg and runs on mains electricity.

4. Discussion

In this comprehensive scoping review, we identified 66 commercially available antigen and molecular tests for diagnosing SARS-CoV-2 at POC and assessed their applicability to TB. Our findings reveal a diverse array of diagnostic tests that hold potential for peripheral TB diagnostic testing.

4.1. Antigen Tests

The identified antigen detection platforms excel in compact design, portability, and rapid turnaround times, making them suitable for peripheral settings. The front-runner, LumiraDx, stands out for its quick turnaround, battery operation, and data export options, although its maximum operating temperature is 30 °C [25]. Developers must account for the high temperatures and humidity typical of TB-endemic countries: harsh environmental conditions may increase technical failures and the need for maintenance services, resulting in delayed or missed diagnoses in remote settings where technical staff may not be readily available. Additionally, many tests support multi-disease testing, which could help overcome siloed disease programs and facilitate differential diagnosis [3].

The tests included in this review may meet TPP sensitivity targets for TB detection by employing signal-amplifying technologies, such as reader-based LFTs and automated immunoassays that use sensitive fluorescence or electrochemical detection. LumiraDx shows promising performance, with a LoD of 2–56 PFU/mL for SARS-CoV-2 and a clinical sensitivity of 82.2% [26]. Overall, however, the clinical sensitivity of SARS-CoV-2 antigen assays varies widely and LoD data are limited, which complicates assessing their potential to detect low-abundance TB antigens such as lipoarabinomannan (LAM) in urine; detecting TB in all patient groups likely requires a LoD in the low pg/mL range [27]. Moreover, none of the identified platforms reported the use of urine samples. Successful application to TB will therefore require optimized sample pre-treatment, specific anti-LAM antibodies, and sensitive readout approaches [28,29].

4.2. Molecular Tests

The identified molecular tests feature novel assay technologies and platform attributes designed to enhance user-friendliness and testing capacity. These features include easy handling, self-testing options, rapid turnaround times, and the ability to detect multiple pathogens. For instance, at-home molecular tests such as the Visby COVID-19 Test, Lucira Check It, and Cue Reader show considerable promise for use in decentralized settings due to their compact size, quick results, and ease of use.

However, some operational limitations may hinder their widespread adoption in peripheral settings. For example, the Visby COVID-19 Test and Accula Dock require mains electricity, which may hamper implementation in areas with unstable power supplies [30]. Although Lucira Check It and Cue Reader run on AA batteries or smartphone power, reliance on smartphones may itself be a limitation. Further, most tests, including Lucira Check It and the Visby COVID-19 Test, lack adequate data export options or depend on Wi-Fi connectivity, increasing the risk of human error and data loss. Adapting these tests for areas with limited infrastructure is therefore essential [31]. As highlighted earlier, their performance under the high temperatures and humidity typical of TB-endemic regions should also be considered.

Most near-POC platforms have low daily sample throughput and limited multi-use capacity, which can hinder parallel sample analysis and potentially delay treatment. Among these, Franklin™ (Biomeme, Philadelphia, PA, USA) stands out with its ability to detect up to 27 targets in 9 samples per PCR run. Multi-disease panels are crucial for integrated public health interventions and should be prioritized in developing novel diagnostics [32]. The WHO’s essential diagnostics list strongly advocates co-testing for common comorbidities such as TB, HIV, and respiratory pathogens [33]. By incorporating priority diseases into multi-pathogen platforms, testing processes can be streamlined, reducing the costs associated with expanding disease coverage [34,35]. These platforms have the potential to significantly enhance disease surveillance and management, especially in resource-limited settings. By offering comprehensive diagnostic coverage, they can enable early detection and treatment of co-infections, ultimately improving overall health outcomes [34]. Alternatively, multiplex capacities could be leveraged to enhance drug susceptibility testing.

The low-complexity GENIE® II (Optigene, Horsham, UK) holds the potential to bridge gaps left by current WRDs [36]. It is battery-powered, operates at high temperatures, delivers results in 30 min for 16 samples, and supports USB data export. However, the need for extra equipment for sample pre-treatment limits its peripheral deployment, though simplifying this process could improve its usability. Conversely, other highly ranked low-complexity platforms, ePlex System (Roche Diagnostics, Switzerland) and IdyllaTM, do not require sample pre-treatment but need continuous power and only operate at temperatures up to 30 °C, with longer turnaround times (90 to 120 min) for 3 to 8 samples. Their multiplexing capacity and high throughput are better suited for urban centers with substantial test volumes and laboratory infrastructure, similar to GeneXpert Dx [28].
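To make the throughput differences discussed above more concrete, the Python sketch below estimates the number of samples processed per working day from the Table 2 figures. The 8-hour shift, strictly sequential runs with no overlap between hands-on and running time, the use of 2 min for the "<2 min" hands-on times, and the reading of "throughput capacity" as samples processed in parallel per run are all assumptions; running several instruments or disposable devices side by side would raise these numbers.

```python
def daily_throughput(run_min: float, hands_on_min: float, samples_per_run: int,
                     shift_min: float = 480) -> int:
    """Samples processed in one shift, assuming strictly sequential runs.

    Each cycle = hands-on time + running time; no overlap between
    consecutive runs is assumed, which is conservative for modular platforms.
    """
    cycle = run_min + hands_on_min
    if cycle <= 0:
        raise ValueError("cycle time must be positive")
    runs = int(shift_min // cycle)
    return runs * samples_per_run


# (running time, hands-on time, throughput capacity) taken from Table 2
platforms = {
    "LumiraDx": (12, 1, 1),
    "Visby COVID-19 Test": (30, 2, 1),
    "Idylla": (90, 2, 8),
}
for name, (run, hands_on, capacity) in platforms.items():
    print(f"{name}: ~{daily_throughput(run, hands_on, capacity)} samples per 8-h shift")
```

Under these assumptions, the long running time of a multi-sample platform is largely offset by its capacity, whereas single-sample devices depend on short cycles or on running several units in parallel.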

Adapting these molecular platforms for TB poses technical challenges owing to the complex cell wall of Mycobacterium tuberculosis (Mtb) and the low bacterial loads in clinical samples [10]. Moreover, sputum processing may not be compatible with these platforms and could affect the accuracy of Mtb DNA detection. However, recent research offers a promising alternative: tongue swabs, when paired with mechanical lysis and sensitive detection methods, can achieve nearly the same sensitivity as sputum in symptomatic patients with low to high sputum bacillary loads [37]. Most identified near-POC molecular tests support oral swabs, suggesting easier adaptability for TB. Other alternative sample types, such as exhaled breath aerosols (XBA) and, in children, stool samples, show potential but still fall short of sputum testing in sensitivity [38,39]. Although these sample types may be less sensitive than sputum, their ease of collection could improve diagnostic yield, enhance test accessibility and patient acceptability, and reduce overprescribing of empiric antibiotics [40,41,42,43].

However, even when non-sputum samples are used for TB detection, additional manual processing steps, such as dissolving filters from face masks or heating samples to inactivate pathogens, are still required, followed by mechanical lysis techniques such as bead beating or sonication to break open Mtb cells [37,43]. These steps could be difficult to integrate into the molecular platforms highlighted in this review, which primarily rely on enzymatic or chemical lysis to release SARS-CoV-2 nucleic acid [39,43,44]. One potential solution is a separate POC device for mechanical lysis of non-sputum samples, provided the overall sample-to-result workflow remains user-friendly [45,46]. With efficient cell lysis, nucleic acid extraction could be skipped for tongue swabs, thereby simplifying the workflow [37,44].

Given the complexities associated with TB sample processing and the potential need for additional devices for lysis, adapting SARS-CoV-2 platforms for TB self-testing may be difficult, despite the authorization of several molecular SARS-CoV-2 tests for home-based testing [47,48].

High-yield sample lysis must be paired with sensitive molecular detection methods. For instance, the front-runner Lucira Check It uses RT-LAMP, while the Visby COVID-19 Test employs RT-PCR. Currently available isothermal amplification-based TB assays show high sensitivity but are limited in peripheral settings by manual processes and by lysis and DNA extraction performed outside the assay [49,50]. Integrating these assays with sensitive POC platforms, such as those identified here, could streamline testing.

4.3. General Findings

Overall, we observed a lack of transparency in reporting instrument and test costs; where costs were reported, many exceeded WHO recommendations. Equitable access to WRDs remains elusive in LMICs despite large-scale investments and price negotiations [3,36,51]. Addressing global affordability and accessibility requires diversified manufacturing and minimized maintenance requirements [52]. However, most COVID-19 test manufacturers are based in high-income countries, hindering global access [52]. Translating these tests to TB will require companies to commit to global health and global access terms. SD Biosensor’s (Suwon, Republic of Korea) recent license agreement with the COVID-19 Technology Access Pool could serve as a model for TB diagnostics [53].

Many identified tests either lack independently reported clinical performance estimates or exhibit discrepancies between developer-reported and independently reported estimates, echoing recent findings on the overestimation of developer-reported sensitivity of SARS-CoV-2 antigen tests [54]. Moreover, standardized LoD reporting is necessary for meaningful comparisons between SARS-CoV-2 assays [55]. However, it is important to exercise caution when extrapolating LoD data from one pathogen to another.

4.4. Strengths

This scoping review has several strengths. Our systematic searches across various sources, including published studies, pre-prints, IVD databases, and manufacturers’ websites, ensured broad coverage of technologies from diverse developers, from start-ups to large IVD corporations. Data accuracy was supported by independent screening by two reviewers and cross-verification with developer-reported information. Our TPP-aligned scorecard reduces subjectivity in assessment and can readily be applied to evaluate whether novel diagnostic tests emerging in future pandemics could be adapted for TB. Additionally, focusing on commercially available, market-approved diagnostics may shorten the time-to-market for TB tests on these platforms.

4.5. Limitations

Several limitations should be noted. First, owing to the extensive dataset and time constraints, charted data were not cross-verified by a second reviewer, and early-stage, non-commercialized platforms were excluded from analysis, potentially overlooking promising POC technologies. Second, our search of IVD databases was confined to publicly accessible ones with English search functionality, which may introduce bias. Third, our scorecard needs further refinement, including weighting scores by their relevance to end-users and incorporating additional TPP parameters, such as the current state of test deployment. Arguably, established platforms might score lower yet be more readily adopted by end-users than new platforms. Also, missing information was scored down, which may have unfairly disadvantaged new platforms for which independently reported performance data were unavailable. Fourth, the scoring criteria were not specifically tailored to different technology classes. The scorecard, adapted to the 2014 TPPs for POC TB diagnostics, may be less applicable to low-complexity tests than to (near-)POC tests. Fifth, data limitations, such as opaque cost reporting and a lack of clinical and analytical performance data, could bias device scoring and weaken the potential of identified tests to be effectively repurposed for TB. Moreover, the recent shutdown of some identified companies may remove promising platforms from the pipeline [56].

Lastly, the revised TPPs were published only after our data analysis was completed. Although we considered the draft version alongside the 2014 version during our analysis, we did not account for the specific requirements of different technology classes in our scoring. The revised TPPs also differ in some minor respects, such as the reduction of the ‘maximum time-to-result’ from 120 to 60 min and a cap of USD 2000 on the ‘capital cost of equipment’. However, these changes do not affect the ranking of platforms within each technology class.

5. Conclusions

This scoping review highlights the potential for adapting SARS-CoV-2 POC diagnostic technologies for TB, identifying 66 commercially available antigen and molecular tests that may meet TPP criteria for peripheral settings. Platforms such as LumiraDx, Lucira Check-It, Visby, and Idylla, or those with similar features, should be prioritized for TB adaptation. The versatility of these technologies promises context-adapted tests that can be integrated into local TB diagnostic algorithms. This study serves as a stepping stone toward leveraging COVID-19 diagnostics to bridge the TB diagnostic gap and urges stakeholders to collaborate on developing impactful TB diagnostic solutions.

Acknowledgments

The abstract and short summary were partially generated by ChatGPT (powered by OpenAI’s language model, GPT-3.5; http://openai.com; accessed on 3 June 2024). The editing was performed by the human authors.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/jcm13195894/s1, Section S1. R code for the medrxivr package; Table S1. PRISMA-ScR Checklist; Table S2. Data Charting Form; Table S3. Product Information Form; Table S4. Scorecard to evaluate the potential application of SARS-CoV-2 diagnostic devices in peripheral TB diagnosis (adapted from Lehe et al.); Table S5. Characteristics of Included Sources of Evidence and Charted Data Variables; Table S6. Near-POC Antigen Tests; Table S7. POC Molecular Tests; Table S8. Near-POC Molecular Tests; Table S9. Low-complexity Molecular Tests.

Author Contributions

Conceptualization, S.Y.; methodology, S.Y. and L.M.L.H.; software, L.M.L.H. and S.Y.; validation, L.M.L.H., S.Y. and S.J.; formal analysis, L.M.L.H. and S.J.; investigation, S.Y., L.M.L.H. and S.J.; data curation, S.Y. and L.M.L.H.; writing—original draft preparation, S.Y., L.M.L.H. and S.J.; writing—review and editing, all co-authors; visualization, L.M.L.H.; supervision, S.Y. and C.M.D.; project administration, S.Y.; funding acquisition, C.M.D. All authors have read and agreed to the published version of the manuscript.

Institutional Review Board Statement

This scoping review did not require ethical approval because it does not involve individual patient data and uses sources that are in the public domain.

Informed Consent Statement

No patients were involved in this study’s design, planning, or conception.

Data Availability Statement

All relevant data have been included in the article or the Supplementary Materials. Additional raw data and analytical code can be accessed on OSF at https://osf.io/srwhj/?view_only=2e42118e87374da6b76e0344b0c17261 (accessed on 30 September 2024).

Conflicts of Interest

The authors declare no financial conflicts of interest. T.B. holds patents in the fields of lipoarabinomannan detection and aerosol collection and is a shareholder of Avelo Ltd., a Swiss diagnostics company. C.I. is the owner of the commercial company Connected Diagnostics Limited, which is incorporated in England (registration number 9445856), has worked for companies that sell medical devices, and provides consultancy services to them. G.T.’s institution has received funding and in-kind consumables from LumiraDx for COVID-19 and TB research. C.M.D. has received or is expecting to receive research grants from the US National Institutes of Health (GN: 1U01AI152087-01), the German Ministry of Education and Research (GN: 031L0298C, 01KX2121, 01KA2203A), the United States Agency for International Development (GN: 7200AA22CA00005), FIND, German Center for Infection Research and Global Health Innovative Technology Fund (GN: 8029802812, 8029802813, 8029802911, 8029802913, 8029903901, 8029903902), and WHO (GN: 2024/1440209-0, 2022/1253133-0, 2022/1237646-0). This includes grants to work with diagnostics developers. C.M.D. never received funding from diagnostic developers except for a payment from Roche Diagnostics, which she accepted because German law requires a manufacturer to pay for the use of data for regulatory purposes. The data were generated as part of an evaluation conducted by C.M.D. and team independently of Roche. C.M.D. is an academic editor of PLoS Medicine and sits on the WHO technical advisory group on tuberculosis diagnostics. S.Y. is a member of the consultant staff of the R2D2 network.

Funding Statement

This work was funded by the National Institute of Allergy and Infectious Diseases of the National Institutes of Health [grant number U01AI152087].

Footnotes

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

References

- 1. PAHO. Tuberculosis Response Recovering from Pandemic But Accelerated Efforts Needed to Meet New Targets. 2023. [(accessed on 30 September 2024)]. Available online: https://www.paho.org/en/news/7-11-2023-tuberculosis-response-recovering-pandemic-accelerated-efforts-needed-meet-new#:~:text=7%20November%202023%20(PAHO)%2D,19%20disruptions%20on%20TB%20services.

- 2. Sachdeva K.S., Kumar N. Closing the gaps in tuberculosis detection-considerations for policy makers. Lancet Glob. Health. 2023;11:e185–e186. doi: 10.1016/S2214-109X(23)00008-6.

- 3. Pai M., Dewan P.K., Swaminathan S. Transforming tuberculosis diagnosis. Nat. Microbiol. 2023;8:756–759. doi: 10.1038/s41564-023-01365-3.

- 4. WHO. WHO Standard: Universal Access to Rapid Tuberculosis Diagnostics. WHO; Geneva, Switzerland: 2023.

- 5. Ismail N., Nathanson C.M., Zignol M., Kasaeva T. Achieving universal access to rapid tuberculosis diagnostics. BMJ Glob. Health. 2023;8:e012666. doi: 10.1136/bmjgh-2023-012666.

- 6. WHO. Global Tuberculosis Report. WHO; Geneva, Switzerland: 2023.

- 7. Subbaraman R., Jhaveri T., Nathavitharana R.R. Closing gaps in the tuberculosis care cascade: An action-oriented research agenda. J. Clin. Tuberc. Other Mycobact. Dis. 2020;19:100144. doi: 10.1016/j.jctube.2020.100144.

- 8. WHO. WHO High-Priority Target Product Profiles for New Tuberculosis Diagnostics. WHO; Geneva, Switzerland: 2014.

- 9. WHO. Target Product Profile for Tuberculosis Diagnosis and Detection of Drug Resistance. WHO; Geneva, Switzerland: 2024.

- 10. Yerlikaya S., Broger T., Isaacs C., Bell D., Holtgrewe L., Gupta-Wright A., Nahid P., Cattamanchi A., Denkinger C.M. Blazing the trail for innovative tuberculosis diagnostics. Infection. 2024;52:29–42. doi: 10.1007/s15010-023-02135-3.

- 11. Chopra K.K., Singh S. Tuberculosis: Newer diagnostic tests: Applications and limitations. Indian J. Tuberc. 2020;67:S86–S90. doi: 10.1016/j.ijtb.2020.09.025.

- 12. Research TM. 2023. [(accessed on 15 June 2024)]. Available online: https://www.who.int/publications/i/item/9789240083851.

- 13. Research G.V. COVID-19 Diagnostics Market Size, Share & Trends Analysis Report by Product & Service (Instruments, Reagents & Kits, Services), by Sample Type, by Test Type, by Mode, by End Use, by Region, and Segment Forecasts, 2022–2030. 2024. [(accessed on 15 June 2024)]. Available online: https://www.grandviewresearch.com/industry-analysis/covid-19-diagnostics-market.

- 14. Cioboata R., Biciusca V., Olteanu M., Vasile C.M. COVID-19 and Tuberculosis: Unveiling the Dual Threat and Shared Solutions Perspective. J. Clin. Med. 2023;12:4784. doi: 10.3390/jcm12144784.

- 15. Levac D., Colquhoun H., O’Brien K.K. Scoping studies: Advancing the methodology. Implement. Sci. 2010;5:69. doi: 10.1186/1748-5908-5-69.

- 16. Tricco A.C., Lillie E., Zarin W., O’Brien K.K., Colquhoun H., Levac D., Moher D., Peters M.D.J., Horsley T., Weeks L., et al. PRISMA Extension for Scoping Reviews (PRISMA-ScR): Checklist and Explanation. Ann. Intern. Med. 2018;169:467–473. doi: 10.7326/M18-0850.

- 17. Yerlikaya S., Holtgrewe L.M., Broger T., Isaacs C., Nahid P., Cattamanchi A., Denkinger C.M. Innovative COVID-19 point-of-care diagnostics suitable for tuberculosis diagnosis: A scoping review protocol. BMJ Open. 2023;13:e065194. doi: 10.1136/bmjopen-2022-065194.

- 18. WHO. Public Consultation for the Target Product Profile TB Diagnostic Tests for Peripheral Settings. WHO; 2023. [(accessed on 15 June 2024)]. Available online: https://www.who.int/news-room/articles-detail/public-consultation-for-the-target-product-profile-tb-diagnostic-tests-for-peripheral-settings.

- 19. Daily C. National Medical Products Administration Database. Beijing; 2024. [(accessed on 24 June 2024)]. Available online: https://www.nmpa.gov.cn/datasearch/en/search-result-en.html?nmpaItem=%2082808081889a0b5601889a251e33005c.

- 20. India Go. Central Drugs Standard Control Organization. New Delhi; 2024. [(accessed on 24 June 2024)]. Available online: https://cdsco.gov.in/opencms/opencms/en/Medical-Device-Diagnostics/InVitro-Diagnostics/

- 21. Commission E. EUDAMED-European Database on Medical Devices: European Commission. 2021. [(accessed on 17 June 2024)]. Available online: https://ec.europa.eu/tools/eudamed/#/screen/home.

- 22. Innovation VH. Covidence [Computer Software]. Melbourne, Australia, 2024. [(accessed on 30 September 2024)]. Available online: https://www.covidence.org/

- 23. Lehe J.D., Sitoe N.E., Tobaiwa O., Loquiha O., Quevedo J.I., Peter T.F., Jani I.V. Evaluating operational specifications of point-of-care diagnostic tests: A standardized scorecard. PLoS ONE. 2012;7:e47459. doi: 10.1371/journal.pone.0047459.

- 24. 360Dx. Roche to Acquire LumiraDx Point-of-Care Testing Tech for Up to $350M. 2024. [(accessed on 5 April 2024)]. Available online: https://www.360dx.com/business-news/roche-acquire-lumiradx-point-care-testing-tech-350m?CSAuthResp=1712329219737%3A0%3A2731195%3A1911%3A24%3Asuccess%3AF7A508B010CAA6F6BDBA33CDA751578C&_ga=2.119195471.790131286.1712329165-1006766003.1711110768&adobe_mc=MCMID%3D26734529761092225790874085618419779486%7CMCORGID%3D138FFF2554E6E7220A4C98C6%2540AdobeOrg%7CTS%3D1712329173.

- 25. Twabi H.H. TB Diagnostics: Existing Platform and Future Direction. Am. J. Biomed. Sci. Res. 2022;15. doi: 10.34297/AJBSR.2022.15.002169.

- 26. Kruger L.J., Klein J.A.F., Tobian F., Gaeddert M., Lainati F., Klemm S., Schnitzler P., Bartenschlager R., Cerikan B., Neufeldt C.J., et al. Evaluation of accuracy, exclusivity, limit-of-detection and ease-of-use of LumiraDx: An antigen-detecting point-of-care device for SARS-CoV-2. Infection. 2022;50:395–406. doi: 10.1007/s15010-021-01681-y.

- 27. Broger T., Nicol M.P., Sigal G.B., Gotuzzo E., Zimmer A.J., Surtie S., Caceres-Nakiche T., Mantsoki A., Reipold E.I., Székely R., et al. Diagnostic accuracy of 3 urine lipoarabinomannan tuberculosis assays in HIV-negative outpatients. J. Clin. Investig. 2020;130:5756–5764. doi: 10.1172/JCI140461.

- 28. Broger T., Tsionksy M., Mathew A., Lowary T.L., Pinter A., Plisova T., Bartlett D., Barbero S., Denkinger C.M., Moreau E., et al. Sensitive electrochemiluminescence (ECL) immunoassays for detecting lipoarabinomannan (LAM) and ESAT-6 in urine and serum from tuberculosis patients. PLoS ONE. 2019;14:e0215443. doi: 10.1371/journal.pone.0215443.

- 29. Bulterys M.A., Wagner B.G., Redard-Jacot M., Suresh A., Pollock N.R., Moreau E., Denkinger C.M., Drain P.K., Broger T. Point-Of-Care Urine LAM Tests for Tuberculosis Diagnosis: A Status Update. J. Clin. Med. 2019;9:111. doi: 10.3390/jcm9010111.

- 30. Denkinger C.M., Nicolau I., Ramsay A., Chedore P., Pai M. Are peripheral microscopy centres ready for next generation molecular tuberculosis diagnostics? Eur. Respir. J. 2013;42:544–547. doi: 10.1183/09031936.00081113.

- 31. Andre E., Isaacs C., Affolabi D., Alagna R., Brockmann D., de Jong B.C., Cambau E., Churchyard G., Cohen T., Delmee M., et al. Connectivity of diagnostic technologies: Improving surveillance and accelerating tuberculosis elimination. Int. J. Tuberc. Lung Dis. 2016;20:999–1003. doi: 10.5588/ijtld.16.0015.

- 32. Dowell D., Gaffga N.H., Weinstock H., Peterman T.A. Integration of Surveillance for STDs, HIV, Hepatitis, and TB: A Survey of U.S. STD Control Programs. Public Health Rep. 2009;124(Suppl. 2):31–38. doi: 10.1177/00333549091240S206.

- 33. WHO. WHO Releases New List of Essential Diagnostics; New Recommendations for Hepatitis E Virus Tests, Personal Use Glucose Meters. WHO: Geneva, Switzerland, 2023. [(accessed on 14 March 2024)]. Available online: https://www.who.int/news/item/19-10-2023-who-releases-new-list-of-essential-diagnostics--new-recommendations-for-hepatitis-e-virus-tests--personal-use-glucose-meters.

- 34. FIND. Diagnostic Network Optimization. FIND; Geneva, Switzerland: 2024.

- 35. Girdwood S., Pandey M., Machila T., Warrier R., Gautam J., Mukumbwa-Mwenechanya M., Benade M., Nichols K., Shibemba L., Mwewa J., et al. The integration of tuberculosis and HIV testing on GeneXpert can substantially improve access and same-day diagnosis and benefit tuberculosis programmes: A diagnostic network optimization analysis in Zambia. PLOS Glob. Public Health. 2023;3:e0001179. doi: 10.1371/journal.pgph.0001179.

- 36. Ntinginya N.E., Kuchaka D., Orina F., Mwebaza I., Liyoyo A., Miheso B., Aturinde A., Njeleka F., Kiula K., Msoka E.F., et al. Unlocking the health system barriers to maximise the uptake and utilisation of molecular diagnostics in low-income and middle-income country setting. BMJ Glob. Health. 2021;6:e005357. doi: 10.1136/bmjgh-2021-005357.

- 37. Steadman A., Andama A., Ball A., Mukwatamundu J., Khimani K., Mochizuki T., Asege L., Bukirwa A., Kato J.B., Katumba D., et al. New manual qPCR assay validated on tongue swabs collected and processed in Uganda shows sensitivity that rivals sputum-based molecular TB diagnostics. Clin. Infect. Dis. 2024;78:1313–1320. doi: 10.1093/cid/ciae041.

- 38. Mesman A.W., Rodriguez C., Ager E., Coit J., Trevisi L., Franke M.F. Diagnostic accuracy of molecular detection of Mycobacterium tuberculosis in pediatric stool samples: A systematic review and meta-analysis. Tuberculosis. 2019;119:101878. doi: 10.1016/j.tube.2019.101878.

- 39. Hassane-Harouna S., Braet S.M., Decroo T., Camara L.M., Delamou A., Bock S., Ortuño-Gutiérrez N., Cherif G.-F., Williams C.M., Wisniewska A., et al. Face mask sampling (FMS) for tuberculosis shows lower diagnostic sensitivity than sputum sampling in Guinea. Ann. Clin. Microbiol. Antimicrob. 2023;22:81. doi: 10.1186/s12941-023-00633-8.

- 40. Aiano F., Jones S.E.I., Amin-Chowdhury Z., Flood J., Okike I., Brent A., Brent B., Beckmann J., Garstang J., Ahmad S., et al. Feasibility and acceptability of SARS-CoV-2 testing and surveillance in primary school children in England: Prospective, cross-sectional study. PLoS ONE. 2021;16:e0255517. doi: 10.1371/journal.pone.0255517.

- 41. Daniels B., Kwan A., Pai M., Das J. Lessons on the quality of tuberculosis diagnosis from standardized patients in China, India, Kenya, and South Africa. J. Clin. Tuberc. Other Mycobact. Dis. 2019;16:100109. doi: 10.1016/j.jctube.2019.100109.

- 42. Nooy A., Ockhuisen T., Korobitsyn A., Khan S.A., Ruhwald M., Ismail N., Kohli M., Nichols B.E. Trade-offs between clinical performance and test accessibility in tuberculosis diagnosis: A multi-country modelling approach for target product profile development. Lancet Glob. Health. 2024;12:e1139–e1148. doi: 10.1016/S2214-109X(24)00178-5.

- 43. Williams C.M., Abdulwhhab M., Birring S.S., De Kock E., Garton N.J., Townsend E., Pareek M., Al-Taie A., Pan J., Ganatra R., et al. Exhaled Mycobacterium tuberculosis output and detection of subclinical disease by face-mask sampling: Prospective observational studies. Lancet Infect. Dis. 2020;20:607–617. doi: 10.1016/S1473-3099(19)30707-8.

- 44. Williams C.M., Cheah E.S., Malkin J., Patel H., Otu J., Mlaga K., Sutherland J.S., Antonio M., Perera N., Woltmann G., et al. Face mask sampling for the detection of Mycobacterium tuberculosis in expelled aerosols. PLoS ONE. 2014;9:e104921. doi: 10.1371/journal.pone.0104921.

- 45. Ferguson T.M., Weigel K.M., Lakey Becker A., Ontengco D., Narita M., Tolstorukov I., Doebler R., Cangelosi G.A., Niemz A. Pilot study of a rapid and minimally instrumented sputum sample preparation method for molecular diagnosis of tuberculosis. Sci. Rep. 2016;6:19541. doi: 10.1038/srep19541.

- 46. Vandeventer P.E., Weigel K.M., Salazar J., Erwin B., Irvine B., Doebler R., Nadim A., Cangelosi G.A., Niemz A. Mechanical disruption of lysis-resistant bacterial cells by use of a miniature, low-power, disposable device. J. Clin. Microbiol. 2011;49:2533–2539. doi: 10.1128/JCM.02171-10.

- 47. FDA. Coronavirus (COVID-19) Update: FDA Authorizes First COVID-19 Test for Self-Testing at Home. 2020. [(accessed on 18 September 2024)]. Available online: https://www.fda.gov/news-events/press-announcements/coronavirus-covid-19-update-fda-authorizes-first-covid-19-test-self-testing-home.

- 48. FDA. FDA Permits Marketing of First COVID-19 At-Home Test Using Traditional Premarket Review Process. 2024. [(accessed on 28 June 2024)]. Available online: https://www.fda.gov/news-events/press-announcements/fda-permits-marketing-first-covid-19-home-test-using-traditional-premarket-review-process.

- 49. Jaroenram W., Kampeera J., Arunrut N., Sirithammajak S., Jaitrong S., Boonnak K., Khumwan P., Prammananan T., Chaiprasert A., Kiatpathomchai W. Ultrasensitive detection of Mycobacterium tuberculosis by a rapid and specific probe-triggered one-step, simultaneous DNA hybridization and isothermal amplification combined with a lateral flow dipstick. Sci. Rep. 2020;10:16976. doi: 10.1038/s41598-020-73981-6.

- 50. Shanmugakani R.K., Bonam W., Erickson D., Mehta S. An isothermal amplification-based point-of-care diagnostic platform for the detection of Mycobacterium tuberculosis: A proof-of-concept study. Curr. Res. Biotechnol. 2021;3:154–159. doi: 10.1016/j.crbiot.2021.05.004.

- 51. FIND. Accessible Pricing. FIND; 2024. [(accessed on 15 March 2024)]. Available online: https://www.finddx.org/what-we-do/cross-cutting-workstreams/market-innovations/accessible-pricing/

- 52. Hannay E., Pai M. Breaking the cycle of neglect: Building on momentum from COVID-19 to drive access to diagnostic testing. EClinicalMedicine. 2023;57:101867. doi: 10.1016/j.eclinm.2023.101867.

- 53. 360Dx. WHO, Medicines Patent Pool License Rapid Diagnostics Technology From SD Biosensor: 360Dx; 2024. [(accessed on 22 March 2024)]. Available online: https://www.360dx.com/business-news/who-medicines-patent-pool-license-rapid-diagnostics-technology-sd-biosensor?adobe_mc=MCMID%3D19782619068757078863840638341405804837%7CMCORGID%3D138FFF2554E6E7220A4C98C6%2540AdobeOrg%7CTS%3D1706883764&CSAuthResp=1%3A%3A2548842%3A273%3A24%3Asuccess%3A33042812B2DBBA2FDB7574A1C2C8E150.

- 54. Bigio J., MacLean E.L., Das R., Sulis G., Kohli M., Berhane S., Dinnes J., Deeks J.J., Brümmer L.E., Denkinger C.M., et al. Accuracy of package inserts of SARS-CoV-2 rapid antigen tests: A secondary analysis of manufacturer versus systematic review data. Lancet Microbe. 2023;4:e875–e882. doi: 10.1016/S2666-5247(23)00222-7.

- 55. Arnaout R., Lee R.A., Lee G.R., Callahan C., Yen C.F., Smith K.P., Arora R., Kirby J.E. SARS-CoV2 Testing: The Limit of Detection Matters. bioRxiv. 2020. doi: 10.1101/2020.06.02.131144.

- 56. Trang B. Cue Health, COVID-19 Testing Company, Is Shutting Down: STAT; 2024. [(accessed on 11 June 2024)]. Available online: https://www.statnews.com/2024/05/22/cue-health-covid-19-test-maker-layoffs/#:~:text=In%20an%20abrupt%20change,as%20of%20Friday%2C%20May%2024.
