Abstract

Background:

Pediatric anesthesia has evolved to a high level of patient safety, yet a small chance remains for serious perioperative complications, even in those traditionally considered at low risk. In practice, prediction of at-risk patients currently relies on the American Society of Anesthesiologists Physical Status (ASA-PS) score, despite reported inconsistencies with this method.

Aims:

The goal of this study was to develop predictive models that can classify children as low risk for anesthesia at the time of surgical booking and after anesthetic assessment on the procedure day.

Methods:

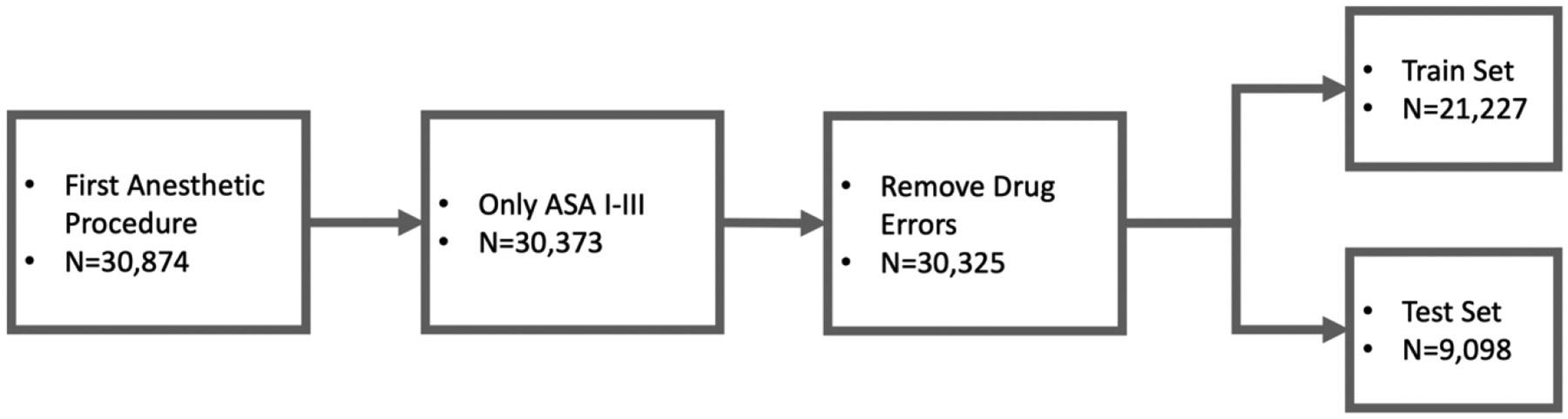

Our dataset was derived from APRICOT, a prospective observational cohort study conducted by 261 European institutions in 2014 and 2015. We included only the first procedure, ASA-PS classification I to III, and perioperative adverse events not classified as drug errors, reducing the total number of records to 30,325 with an adverse event rate of 4.43%. From this dataset, a stratified train:test split of 70:30 was used to develop predictive machine learning algorithms that could identify children in ASA-PS class I to III at low risk for severe perioperative critical events that included respiratory, cardiac, allergic, and neurological complications.

Results:

Our selected models achieved accuracies of >0.9, areas under the receiver operating characteristic curve of 0.6–0.7, and negative predictive values greater than 95%. Gradient boosting models were the best performing for both the booking phase and the day-of-surgery phase.

Conclusions:

This work demonstrates that prediction of patients at low risk of critical perioperative adverse events (PAEs) can be made on an individual, rather than population-based, level by using machine learning. Our approach yielded two models that accommodate wide clinical variability and, with further development, are potentially generalizable to many surgical centers.

Keywords: Machine learning, Artificial intelligence, Anesthesia, Pediatrics, Preoperative care

INTRODUCTION

Clinical practice has evolved to provide a high level of safety for children undergoing anesthesia. However, a small but clinically significant risk remains for complications that can lead to serious injury or even death. 1 Clinicians may stratify patient risk with the widely used but subjective American Society of Anesthesiologists Physical Status (ASA-PS) Classification System, although it is not designed for this purpose. 2 Most pediatric patients undergoing diagnostic and surgical procedures will be scored as ASA-PS I-III, with patients scored IV-V considered to be at the highest risk of perioperative events. Some guidelines for assignment of pediatric surgical patients to Ambulatory Surgery Centers (ASCs) and District General Hospitals (DGHs) use ASA-PS status I/II in their selection criteria3, but others advise against this and stress individual patient assessment. Some patients assigned ASA-PS III are potentially suitable for ambulatory care in both North America4 and Europe5,6. The decision is influenced by local variables and referral patterns7, and these children are still susceptible to critical perioperative adverse events (PAEs) that may require management at specialist in-patient facilities4.

Widely used methods to improve risk stratification center around isolated medical conditions such as sleep disordered breathing (the Snoring, Trouble Breathing, and Un-Refreshed (STBUR) questionnaire), 8 upper respiratory infections (COLDS score) 9, and perioperative mortality (Pediatric Risk Assessment (PRAm) score) 10,11. These systems are simple to use and have been validated for use in the perioperative period, but their performance is limited by class imbalance: low prevalence of the event of interest and a large preponderance of patients who experienced uneventful anesthesia. Class imbalance produces a model with a low positive predictive value (PPV). Optimizing models instead for negative predictive potential may offer clinical utility. Accurate models that can be personalized to an individual and used easily at the point of care have not been achievable to date with traditional biostatistical methods and tools, partly due to class imbalance. Large, varied, and complete datasets are therefore necessary to yield clinically meaningful results.

The APRICOT (Anaesthesia PRactice In Children Observational Trial) study12 focused on the incidence of severe perioperative critical events in children across Europe and, in so doing, created the largest dataset of its kind. The APRICOT group identified age, medical history, and physical condition as major risk factors for perioperative events and found that greater years of experience of the most senior anesthesia team member had a beneficial effect.

Machine learning is used increasingly for predicting outcomes in medicine13 because it can compute complex nonlinear relationships across multiple variables and generate tailored recommendations for individual patients on a precision medicine basis. 14 We leveraged the APRICOT dataset with machine learning techniques to develop models that can determine which pediatric patients in ASA-PS class I-III are at low risk for severe perioperative critical events. We identified two time points in the clinical pathway at which individualized risk stratification would have utility. The first of these—the time of surgical booking—would help identify the children who are safest to assign to a secondary care facility or ambulatory surgical center. The second time point—the day of surgery after anesthesia assessment—would assist with choices in care, such as level of supervision from the appropriate seniority of anesthesia provider.

METHODS

APRICOT dataset

APRICOT was a prospective observational multicenter cohort study conducted by 261 institutions in 33 European countries from April 1, 2014, to January 31, 2015. 12 The dataset includes 30,874 children aged 0-16 years who were undergoing emergency or elective surgery and diagnostic procedures. The primary endpoint used for model development was based on the original APRICOT study. This encompassed the occurrence of “respiratory, cardiac, allergic, or neurological complications requiring immediate intervention and that led (or could have led) to major disability or death”. Drug errors were excluded for this work as they do not relate to underlying patient condition. The dataset comprises 31,127 anesthetic procedures with a critical perioperative adverse event rate of 4.7%. It includes details of patient demographics, preoperative assessment, intraoperative management, postoperative recovery, and, where applicable, management and outcome of the critical event. Table 1 outlines data elements identified as clinically relevant and therefore used in model training. A small subset of patients (188) had multiple procedures. Full details of the original data collection process have been reported previously, 12 but the original APRICOT authors did not create a risk stratification tool using their dataset. For our study, use of the APRICOT dataset was considered non-human subjects research by the Johns Hopkins All Children’s Hospital Institutional Review Board. We report our findings using the Transparent Reporting of a multivariable prediction model for Individual Prognosis or Diagnosis (TRIPOD) guidelines. 15

Table 1.

Name and description of Input Features and the presence of these features in the reduced dataset along with the Pearson Correlation with Perioperative Adverse Events.

| Feature | Feature used in model | Description | Pearson Correlation with Target Feature |
|---|---|---|---|
| Wheezing | B, S | History of wheezing in the last 12 months (Yes, No, NA) | 0.041 |
| Prematurity | B, S | Was the child premature (less than 37 weeks)? (Yes, No, Unknown) | −0.050 |
| Family smokers | B, S | Do any family members smoke? (Yes, No, NA) | 0.003 |
| Anesthetic complications | B, S | History of previous anesthetic complications (Yes, No, NA) | 0.034 |
| Medication | B, S | Does the child take medications or natural remedies? (Yes, No, NA) | 0.037 |
| Handicap | B, S | Presence of metabolic/genetic disorder or neurological impairment (Yes, No, NA) | 0.023 |
| Age | B, S | Age of the patient (years) | −0.062 |
| Sex | B, S | Sex of the patient (Male, Female) | 0.007 |
| Weight | B, S | Weight of the patient (kg) | −0.052 |
| Surgical procedure | B, S | Is the procedure surgical? (Yes, No) | 0.039 |
| Nonsurgical procedure | B, S | Is the procedure nonsurgical? (Yes, No) | −0.039 |
| Anesthesia type | B, S | General anesthesia or sedation (Sedation, General Anesthesia, None) | 0.040 |
| Inpatient/outpatient | B, S | Inpatient or outpatient procedure | 0.07 |
| Procedure time scheduled | B, S | Is the procedure scheduled during regular working hours or after hours/weekend? (Regular hours, After-hours/weekend) | −0.011 |
| Asthma | B, S | History of any asthma diagnosis (Yes, No, NA) | 0.011 |
| Atopy | B, S | Symptoms of atopy in the last 12 months (Yes, No, NA) | 0.016 |
| Allergy | B, S | Any history of allergy (Yes, No, NA) | 0.004 |
| Snoring | B, S | Does the child snore? (Yes, No, NA) | 0.021 |
| ASA-PS score | S | ASA-PS score for the patient (I, II, III, IV, V) | 0.080 |
| Flu/cold | S | History of flu/cold in last 2 weeks (Yes, No, NA) | 0.043 |
| Fever | S | Presence of fever in last 24 hours (>38.5°C) (Yes, No, NA) | 0.004 |
| Urgency | S | Elective, urgent, or emergency surgery | 0.010 |
| Consultation | S | Face-to-face anesthesia consultation within 24 hours of procedure (Yes, No) | 0.027 |
| Type of anesthesia provider | S | Degree of specialization and role of provider (anesthesiologist with > 80% pediatric practice, anesthesiologist with 50% - 80% pediatric practice, anesthesiologist with < 50% pediatric practice, anesthesiologist in training, anaesthetic nurse, anaesthetic technician) | 0.017 |
| Experience | S | Years of experience of the anesthesia provider in charge of the case | −0.022 |
| Premedication | S | Sedative premedication given (Yes, No) | −0.003 |
| Parental presence at induction | S | Parent present during induction (Yes, No) | −0.039 |
| Monitoring | S | Type of monitoring (Standard (ECG, SpO2, anaesthetic agent, capnography, NIBP, temp), Standard + (arterial central line), Standard ++ (NIRS, EEG derived data), Standard minus (one equipment missing)) | −0.029 |
| Induction type | S | Inhalation, intravenous, or intramuscular | −0.012 |
| Airway interface type | S | Facemask, endotracheal tube (ETT), or supraglottic airway (SGAW) (Face Mask, SGAW, ETT, Other, None) | 0.005 |
| Fluids | S | Fluids given? (Yes, No) | 0.083 |
| Regional Anesthesia | S | Was regional anesthesia used? (Yes, No) | 0.001 |
| Ventilator Type | S | Type of ventilator (Spontaneous ventilation, pressure support ventilation, mechanical ventilation) | 0.007 |

B indicates that the feature was used in the booking model; S indicates that it was used in the day-of-surgery model.

ASA-PS: American Society of Anesthesiologists Physical Status.

Statistical methods and outcome

Scikit-learn and Microsoft Azure Machine Learning Studio (Redmond, WA) were used for model training and testing. 16 We utilized the APRICOT dataset to develop predictive machine learning algorithms that could help clinicians determine low-risk status in children using only the information that would be available at the time of model use. Model development consisted of three main stages: data pre-processing, model training using the training dataset, and model evaluation using the test dataset.

Further technical details of the data processing and model development process can be found in table 1 of the supporting materials.

Data pre-processing

Feature selection

We included only the first procedure in the dataset for each child, only children with ASA-PS scores I-III, and only PAEs not classified as drug errors. This selection reduced the total number of records to 30,325 with an adverse event rate of 4.43% (Fig 1). Two sets of features were selected with input from subject matter experts to ensure the clinical utility of the models (Table 1). The first set comprised features available at surgical booking. The second set added information available on the day of surgery. A train:test split of 70:30 was assigned in a stratified manner. Stratification is important to preserve the distribution of PAEs when fewer than 5% of records in the dataset have the outcome of interest. 17 The stratified approach preserved the PAE rate of approximately 4.4% in both the training and test sets.
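The stratified split can be illustrated with a minimal sketch in plain Python. It is not the code used in the study; the function name `stratified_split`, the seed, and the toy labels (10,000 records with a 4.4% event rate) are assumptions made for illustration only.

```python
import random
from collections import defaultdict


def stratified_split(labels, test_fraction=0.30, seed=0):
    """Return (train_idx, test_idx) so each class keeps its share in both sets."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    train_idx, test_idx = [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        # Split each class separately so the event rate is preserved.
        n_test = round(len(indices) * test_fraction)
        test_idx.extend(indices[:n_test])
        train_idx.extend(indices[n_test:])
    return sorted(train_idx), sorted(test_idx)


def event_rate(labels, indices):
    """Fraction of records in `indices` with the outcome of interest."""
    return sum(labels[i] for i in indices) / len(indices)


# Toy labels (illustrative, not APRICOT data): 440 events in 10,000 records.
labels = [1] * 440 + [0] * 9560
train_idx, test_idx = stratified_split(labels)
print(event_rate(labels, train_idx), event_rate(labels, test_idx))
```

Because each class is split separately, both subsets keep the same event rate as the full toy dataset, which a purely random split would only approximate.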

Figure 1.

Flow chart showing data selection from the original APRICOT dataset.

Data scaling

The presence of different ranges for data can introduce bias into a model, favoring features with larger numerical values. To correct for this bias, data scaling can be used. Two common approaches are Min-Max (Normalization) and standard scaler (Standardization). Standard scaling was chosen to preserve the original normal distributions.
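The two scaling approaches named above can be compared in a short sketch. The weight and age values are hypothetical, and the helper names `standardize` and `min_max` are introduced here for illustration; they are not taken from the study code.

```python
from statistics import mean, pstdev


def standardize(values):
    """Standard scaling: subtract the mean and divide by the standard deviation."""
    mu, sigma = mean(values), pstdev(values)
    return [(v - mu) / sigma for v in values]


def min_max(values):
    """Min-max normalization: map the observed range onto [0, 1]."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]


# Hypothetical features on very different numeric ranges.
weights_kg = [3.5, 12.0, 20.0, 35.0, 60.0]
ages_years = [0.1, 2.0, 5.0, 10.0, 16.0]

z_weights = standardize(weights_kg)
z_ages = standardize(ages_years)
print(z_weights)
print(min_max(weights_kg))
```

After standardization both features have mean 0 and unit standard deviation, so neither dominates purely because of its units.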

Multiple imputation

Missing data were imputed using Multiple Imputation with Chained Equations (MICE), a variation of multiple imputation. 18 Because each variable is independently iterated, Multiple Imputation with Chained Equations is more efficient than other multiple imputation methods and is generally preferred for large datasets. Additionally, this technique estimates missing values using the existing probability distributions for all available features, providing results superior to those of simpler approaches.

Class imbalance

With an incidence rate of 4.7% for severe perioperative critical events, the dataset had a high degree of class imbalance (~20:1). This imbalance can make it difficult for the model to learn the minority class, which is often the class of interest. Therefore, we used synthetic minority oversampling technique (SMOTE) to reduce the class imbalance. 17 SMOTE is a data generation technique that creates synthetic data using real data as input. By increasing the proportion of the minority class, algorithms are better able to learn that class. SMOTE was applied to the training set to reduce the majority:minority ratio to 10:1, and a static validation set was used. 17
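The core idea of SMOTE, interpolating between a minority record and one of its nearest minority neighbors, can be sketched in plain Python. This is a simplified illustration rather than the implementation used in the study: the function names, the choice of k = 5, and the two-feature toy data are all assumptions, and categorical features would need separate handling.

```python
import math
import random


def smote(minority, n_new, k=5, seed=0):
    """Create n_new synthetic points by interpolating between minority neighbours."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        # k nearest minority neighbours of the base point (excluding itself).
        neighbours = sorted(
            (p for p in minority if p is not base),
            key=lambda p: math.dist(base, p),
        )[:k]
        neighbour = rng.choice(neighbours)
        gap = rng.random()  # position along the segment from base to neighbour
        synthetic.append(tuple(b + gap * (n - b) for b, n in zip(base, neighbour)))
    return synthetic


def n_synthetic_needed(n_majority, n_minority, target_ratio):
    """Synthetic minority rows needed to reach majority:minority == target_ratio."""
    return max(0, math.ceil(n_majority / target_ratio) - n_minority)


# Toy training set at 20:1 (illustrative values only).
data_rng = random.Random(1)
majority = [(data_rng.gauss(0, 1), data_rng.gauss(0, 1)) for _ in range(2000)]
minority = [(data_rng.gauss(2, 1), data_rng.gauss(2, 1)) for _ in range(100)]

n_new = n_synthetic_needed(len(majority), len(minority), target_ratio=10)
minority_resampled = minority + smote(minority, n_new)
print(len(majority) / len(minority_resampled))
```

In this sketch only the training data are resampled; the evaluation data keep the original class distribution so that reported metrics reflect the real event rate.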

Model training

Along with synthetic data, several other approaches can improve learning on the minority class. These include the model type (algorithm) and optimization metric (F1 score). The F1 score is the harmonic mean of precision (PPV) and recall (true positive rate). Because the F1 metric is derived from both PPV and recall, both are optimized concurrently during training. Compared to other metrics, this better helps the model learn positive examples. Gradient boosting provided the best performance for both datasets and was chosen for further optimization. Gradient boosting iteratively builds multiple decision trees, known as weak learners, and combines their outputs into a final prediction. After the addition of each weak learner, a weight for that learner is calculated using the residuals. This helps improve performance when class imbalance is high, by more favorably weighting weak learners that perform better on the minority class.
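For reference, the F1 score can be written in terms of true positives (TP), false positives (FP), and false negatives (FN). The notation below is standard and is added here for illustration.

```latex
\[
F_1 = \frac{2 \cdot \mathrm{PPV} \cdot \mathrm{recall}}{\mathrm{PPV} + \mathrm{recall}}
    = \frac{2\,\mathrm{TP}}{2\,\mathrm{TP} + \mathrm{FP} + \mathrm{FN}},
\qquad
\mathrm{PPV} = \frac{\mathrm{TP}}{\mathrm{TP} + \mathrm{FP}},
\quad
\mathrm{recall} = \frac{\mathrm{TP}}{\mathrm{TP} + \mathrm{FN}}
\]
```

True negatives do not appear in the expression, which is why optimizing F1 directs the model toward the positive (minority) class rather than rewarding correct predictions of the majority class.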

Model evaluation

While the F1 score was chosen for optimization of the model during training, multiple metrics were used to evaluate model performance on the test set. These include AUROC, PPV, NPV, accuracy, sensitivity and specificity. Using multiple metrics provides a more complete approach to assessing model generalizability.

Feature importance

Feature ranking identifies elements that contribute the most to model decision-making and is an important step to providing clinical insights and an element of model transparency. We used permutation feature importance to assess the contribution of each feature to the prediction of a perioperative adverse event (PAE). In this approach, each column is randomly shuffled along the rows and the final metric (F1 score) is assessed. The feature ranking score is then determined by taking the difference between the original metric and the newly calculated metric. Positive feature importance scores indicate that the feature improved the prediction. Negative feature importance scores indicate that the feature was detrimental to the prediction. A score of zero indicates no effect on predicting the outcome. For each model, we performed five permutations with different random seeds for the shuffling and used the average for each.
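The permutation procedure described above can be sketched as follows. This is an illustrative plain-Python version, not the study code: the toy data, the threshold rule in `predict` (a hypothetical stand-in for a trained model), and the seeds are assumptions.

```python
import random


def f1_score(y_true, y_pred):
    """F1 computed from true positives, false positives, and false negatives."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return 2 * tp / (2 * tp + fp + fn) if tp else 0.0


def permutation_importance(predict, X, y, n_repeats=5, seed=0):
    """Mean drop in F1 after shuffling each column, averaged over n_repeats seeds."""
    baseline = f1_score(y, [predict(row) for row in X])
    importances = []
    for col in range(len(X[0])):
        drops = []
        for repeat in range(n_repeats):
            rng = random.Random(seed + repeat)
            shuffled = [row[col] for row in X]
            rng.shuffle(shuffled)
            # Rebuild each row with only the chosen column permuted.
            X_perm = [row[:col] + (value,) + row[col + 1:]
                      for row, value in zip(X, shuffled)]
            drops.append(baseline - f1_score(y, [predict(row) for row in X_perm]))
        importances.append(sum(drops) / n_repeats)
    return importances


# Toy data: feature 0 determines the label, feature 1 is pure noise.
data_rng = random.Random(42)
X = [(data_rng.random(), data_rng.random()) for _ in range(500)]
y = [1 if row[0] > 0.7 else 0 for row in X]


def predict(row):
    """Hypothetical stand-in for a trained model; it uses only feature 0."""
    return 1 if row[0] > 0.7 else 0


importances = permutation_importance(predict, X, y)
print(importances)
```

Shuffling the informative feature lowers F1 and yields a positive score, whereas shuffling the noise feature leaves the metric unchanged and yields a score of zero.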

RESULTS

Two machine learning models were produced that identify those children in ASA-PS class I-III who are at low risk for severe PAEs. The receiver operating characteristic curves for each top performing model are shown in Figure 3. The AUROC was 0.618 for the booking model and 0.722 for the day-of-surgery model. Gradient boosting models were the best performing for both the booking phase and the day-of-surgery phase and achieved accuracies of >0.9, AUROCs of 0.6–0.7, and negative predictive values (NPVs) greater than 95%. These models were built using data derived from European practice standards and would likely require additional calibration for use in non-European medical institutions.

Figure 3.

Receiver operating characteristic curves for each model. Diagonal gray line indicates a random selection (AUROC 0.5), and the curves above the line represent better performance.

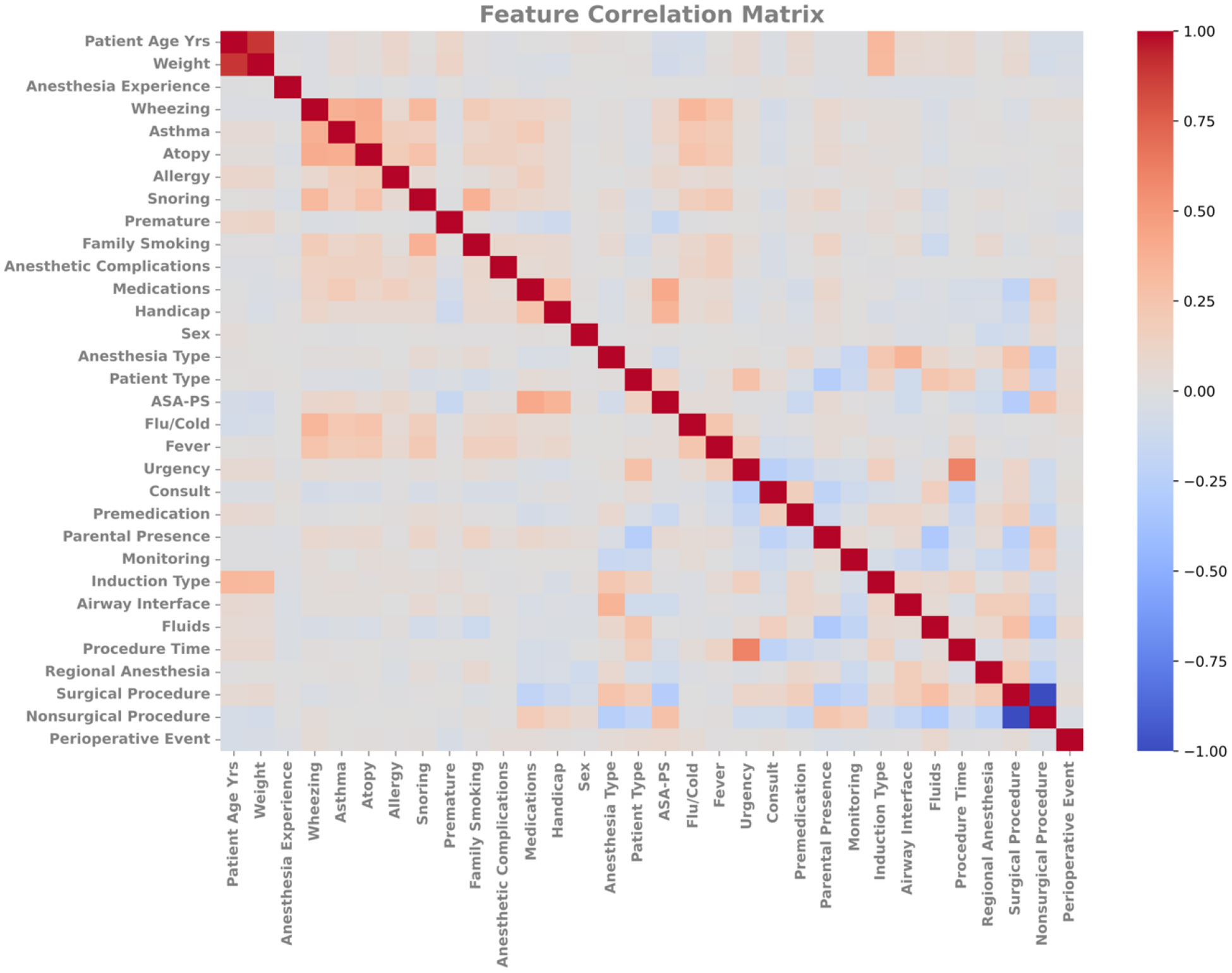

Pearson correlation coefficients were used to quantify feature interdependence for the entire subset of the APRICOT dataset (Figure 2, Supporting Materials 1). Data elements are described in Table 1. Snoring and wheezing were individually positively correlated with asthma, atopy, fever, flu/cold, and family smoking. Snoring and wheezing were also positively correlated with each other. Both asthma and atopy were individually positively correlated with allergy and anesthetic complications. Age and weight were both individually positively correlated with induction type. Urgency and prematurity were negatively correlated with one another. Negative correlations were also identified between fluids and parental presence at induction; snoring and prematurity; wheezing and prematurity; and family smoking and prematurity. Most of these correlations were not strong (<0.7), with only age/weight and surgical/nonsurgical being strongly correlated.

Figure 2.

Feature correlation matrix for all input features. Values are normalized between −1 and 1. Negative values indicate anticorrelation, meaning that changes in one feature tend to correlate with opposing changes in the other. Positive values indicate that changes in one feature tend to correlate with similar changes in the other. ASA, American Society of Anesthesiologists.

The confusion matrix results (Table 2) clearly show that the models can learn the negative (non-PAE) outcome better than the PAE outcome. Further detail can be found in table 2 of the supporting materials.

Table 2.

Confusion matrices for the time-of-booking and day-of-surgery models

| Model | Prediction | PAE (label) | No PAE (label) | Total | Accuracy | Sensitivity | Specificity | PPV | NPV |
|---|---|---|---|---|---|---|---|---|---|
| Booking | PAE | 5 | 37 | 42 | 95.2% | 1.2% | 99.6% | 11.9% | 95.6% |
| | No PAE | 398 | 8658 | 9056 | | | | | |
| | Total | 403 | 8695 | 9098 | | | | | |
| Day of surgery | PAE | 11 | 25 | 36 | 95.4% | 2.7% | 99.7% | 30.6% | 95.7% |
| | No PAE | 392 | 8670 | 9062 | | | | | |
| | Total | 403 | 8695 | 9098 | | | | | |

PAE: perioperative adverse event; NPV: negative predictive value; PPV: positive predictive value.
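The summary metrics in Table 2 follow directly from the four cell counts of each confusion matrix. The short sketch below recomputes them, treating rows as model predictions and columns as labels; the function name `confusion_metrics` is introduced here for illustration.

```python
def confusion_metrics(tp, fp, fn, tn):
    """Standard metrics from the four cells of a binary confusion matrix."""
    total = tp + fp + fn + tn
    return {
        "accuracy": (tp + tn) / total,
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "ppv": tp / (tp + fp),
        "npv": tn / (tn + fn),
    }


# Cell counts taken from Table 2 (PAE is the positive class).
booking = confusion_metrics(tp=5, fp=37, fn=398, tn=8658)
day_of_surgery = confusion_metrics(tp=11, fp=25, fn=392, tn=8670)

for name, metrics in (("Booking", booking), ("Day of surgery", day_of_surgery)):
    print(name, {key: f"{100 * value:.1f}%" for key, value in metrics.items()})
```

The recomputed values match the percentages reported in Table 2 and make explicit that the high accuracy and NPV are driven by the large number of true negatives.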

Booking Model

Permutation feature importance scores are shown in Figure 4. For the booking model, the top five predictors were patient age, family history of smoking, procedure time, snoring, and sex. Family smoking, use of any medications, previous anesthetic complications, history of handicap, and anesthesia type were also predictors. Surgical procedure, patient type, wheezing, atopy and asthma were not significant predictors. The most important predictors within standard deviation were procedure time, family history of smoking, patient age, anesthesia type, and snoring. That these well-known predictors carry most weighting for the models may reassure users in the early stages of future model implementation.

Figure 4.

Average feature ranking for both models, shown in descending order for the day-of-surgery model and taken from 5 different permutations with different random seeds. The black lines show standard deviations for feature scores. ASA, American Society of Anesthesiologists.

Day of Surgery Model

For the day-of-surgery model, patient age, ASA-PS score, anesthesia type, asthma, and nonsurgical procedure were the top five predictors. Urgency, parental presence at induction, procedure time, patient type, weight, and sex were all positive predictors. These findings are consistent with previous studies and indicate that the model is stratifying patients based on clinically relevant factors. This helps to further validate the model’s decision-making. The remaining features had negative feature permutation scores, indicating that they are not important predictors. The most important predictors within standard deviation were ASA-PS score, patient age, anesthesia type, procedure time, and nonsurgical procedure.

DISCUSSION

We developed machine-learning models to prospectively classify ASA-PS I-III pediatric patients aged 0-16 years as low risk for perioperative adverse events (PAEs). ASA-PS I-III was used as a surrogate marker for patients potentially suitable for care at ASCs and DGHs, or by providers who are not fellowship-trained pediatric anesthesiologists. These models were trained using only data that would be available at each of two time points in the clinical workflow: at surgical booking and on the day of surgery. The top performing models achieved accuracies of >0.9, AUROCs of 0.6–0.7, and negative predictive values (NPVs) greater than 95%. Generally, an AUROC of 0.7 is desirable as a benchmark for clinical performance. 19,20 Because of the high class imbalance, models can improve the optimization metric by favoring predictions of the majority class. This results in a much higher predictive value for the majority class and poses challenges in predicting the minority class.

Comparison to Current Risk Assessment Tools

ASA-PS classification is a standard tool that has been used globally for assessment of anesthesia risk, 21 but its reliability in both risk prediction and inter-observer variation has long been questioned. 22 Its poor reliability is more pronounced in children than in adults. 23-25 In addition, ASA-PS does not consider the impact of surgery. 26 No objective model to stratify anesthesia risk in children is in widespread clinical use, 27 although several have been developed.12 Malviya et al 28 developed the NARCO-SS tool using a “review of systems” approach, relying on clinical judgement rather than objective data analysis. The score places the patient on a four-point risk scale; however, Malviya et al did not report PPV, sensitivity, or specificity of the score as a screening tool for PAEs. Nasr et al29 developed and validated11 the Pediatric Risk Assessment score (PRAm), which uses multivariable logistic regression to predict perioperative mortality in children undergoing noncardiac surgery and achieved an impressive AUROC of 0.943. Because mortality is a specific and rare outcome, it is heavily imbalanced in a dataset. This led to reported PPVs for PRAm scores ≥6 of 2.57% to 6.03%. Faraoni and colleagues30 developed a risk stratification score for children with congenital heart disease undergoing noncardiac surgery and reported an AUROC of 0.831; however, no PPV is reported.

A tool with low PPV has limited clinical utility as a predictor of high-risk patients; however, a tool optimized for high NPV may help identify low-risk patients. The high NPVs of our model demonstrate a potential future clinical utility of the booking model, but its AUROC score of 0.626 currently falls short of the generally accepted performance of 0.7. With an AUROC of 0.722 and predictors that are well established, the day-of-surgery model demonstrates better performance. Our models incorporate more detailed information than is used to assign an ASA-PS score, delivering an objective risk assessment that is further individualized to the patient.

Feature Importance

Feature importance analysis (Figure 4) identifies patient age, ASA-PS score, anesthesia type, asthma and nonsurgical procedure as the strongest global predictors for PAEs in the day-of-surgery model. Among these, anesthesia type may be modifiable at the procedure level and asthma may be optimized. Additionally, given the importance of ASA-PS score for the surgery model, incorporating this into decision-making at the time of booking may be of further interest if the surgical team are appropriately trained in ASA-PS scoring. The feature importance analysis provides the global feature importance for the dataset but does not identify local feature importance values for individual patients. This means that the trends observed are not necessarily applicable to all patient subpopulations. Additionally, feature importance does not identify predictor correlation. For example, modifying the airway management technique may not lead to a change in perioperative risk for every child.

Model Limitations

Accurate reporting of preoperative history, ASA-PS classification, and inclusion of PAEs in the dataset are influenced by the individual anesthesiologist for each case. Errors or exclusions may lead to inaccurate input details, which could affect model performance. The APRICOT PAE criteria also led to the inclusion of adverse events that some anesthesiologists would consider nonsignificant. The inclusion of these “critical events” may produce a prediction that is overly sensitive for the needs of some clinicians, although this problem could be partially overcome by adjusting the model threshold. Each of the records in the APRICOT dataset documents the working practice, at the time of data collection, of the individual anesthesiologist managing the case. These practices may not align with current best practice, or the practice of an anesthesiologist in a given country, working culture, patient population, or center. Such mismatches may further limit the generalizability of the models to individual institutions; the models should therefore be locally validated before implementation at an institution to ensure that their performance meets clinical requirements. Finally, despite being the largest of its kind, the dataset is relatively small in machine learning terms, and, despite the use of synthetic data, this further limits model performance.

Effective prediction of high-risk patients (higher PPV) would require a larger, more granular dataset. The APRICOT dataset is valuable because of the scope of data collection. However, the hand-collected data are considered low fidelity when compared with current electronic record-keeping and automated data capture, and do not include important factors such as ethnicity and socioeconomic status, which are known to correlate with perioperative respiratory adverse events31. This, in part, contributes to the limitations of model performance. For example, height was missing from 41.6% of patient records and therefore was not included as an input to model development. This prohibited the use of BMI in model development, despite obesity being a common and known risk factor for PAEs. Obese and severely obese children are also frequently under-classified in the ASA-PS system 32 and may inappropriately be allocated to a non-tertiary center for surgery. Additional examples of more granular data that may improve the performance of a predictive model include details of acute symptoms, duration of fasting, liver and pancreatic diseases, diabetes, congenital heart disease, kidney injury, immunosuppression, and preoperative use of corticosteroids.

Future applications

Performance of our models is similar to that of the COLDS score (AUROC 0.69) used to risk-stratify patients with upper respiratory tract infections and could eventually serve in a similar capacity.9 Validation against a prospectively collected external dataset of similar patients would demonstrate the extent of model generalizability to new, similar, patient populations. If the population differs significantly from the data used for model training and evaluation, performance could decrease. If the model demonstrates good generalizability, then use of this model could serve as an additional clinical datapoint for traditionally lower risk patients and warrants further study. The model could be integrated into the clinical workflow to serve as decision support,33 with additional model calibration and revalidation for the specific clinical implementation as necessary.

The time-of-booking model, once refined, could be used as part of the assessment process for the surgical team, to support the allocation of patients to an ambulatory care center for their procedure. Identification of a patient as low risk by the model would provide additional reassurance to the surgical team that booking at an ASC is appropriate. Any patient not assessed as low risk by the model could be highlighted to the surgical team for additional consideration. This may lead to one of three outcomes: book the patient at the ASC as planned, book the patient at a tertiary center, or refer to an anesthesia preoperative service for further guidance.

On the day of surgery, a model output of “low risk” for an individual patient could provide additional reassurance to the perioperative team, informing their decision-making in areas that include degree of supervision of trainees, use of benzodiazepines for pre-medication, choice of airway adjunct, and deep vs. awake extubation.

The long-term aims of model development and implementation therefore might be (1) to provide additional information to support clinician decision-makers to designate children as low risk for treatment at non-tertiary surgical sites, such as ASCs, satellite sites, or DGHs, and (2) to support decision-making on the day of surgery. Several challenges to deploying this model exist. These include differences in population between the patients used to build the model and those present in the pipeline, differences in anesthesia practice including data collected preoperatively and the potential for changing populations over time (data drift).

A further utility of the models is in identification of potential factors for clinical study or practical considerations. Future work can consider individual predictors to further explain model decisions. This will build clinical trust and can be guided by examples such as the United States Food and Drug Administration Artificial Intelligence and Machine Learning in Software as a Medical Device Action Plan.34

Conclusion

This work demonstrates that prediction of patients at low risk of critical PAEs can be made on an individual level by using machine learning. Predictions generated based on individual patient factors can avoid the high variability and potentially misleading general population risk estimates generated by the ASA-PS. Our approach yielded two machine learning models that accommodate wide clinical variability and, with further development, are potentially generalizable to many surgical centers. Future work could focus on developing a more granular risk stratification capable of informing providers of an accurate perioperative risk level for all patients. Future models could be integrated into the clinical workflow.

Supplementary Material

ACKNOWLEDGEMENTS

The team would like to express its thanks to Medical Librarian Pamela Williams, MS, MLS, AHIP, for her expertise and assistance in performing medical literature searches. Claire F. Levine, MS, ELS, Department of Anesthesiology and Critical Care Medicine, Johns Hopkins University School of Medicine, and Yvonne Poindexter, MA, Editor, Department of Anesthesiology, Vanderbilt University Medical Center, edited the text of this article in manuscript form.

Glossary of Terms

- APRICOT: Anaesthesia PRactice In Children Observational Trial

- ASA: American Society of Anesthesiologists

- ASA-PS: American Society of Anesthesiologists Physical Status

- AUROC: area under the receiver operating curve

- FN: false negative

- FP: false positive

- MI: multiple imputation

- MICE: Multiple Imputation with Chained Equations

- NPV: negative predictive value

- PAE: perioperative adverse event

- PPV: positive predictive value

- SMOTE: synthetic minority oversampling technique

- TRIPOD: Transparent Reporting of a multivariable prediction model for Individual Prognosis or Diagnosis

- ESAIC: European Society of Anaesthesiology and Intensive Care

Footnotes

Disclosures: This study was funded internally by the Department of Anesthesia and Pain Medicine at Johns Hopkins All Children’s Hospital. The APRICOT dataset was used with approval from the APRICOT steering committee and the European Society of Anaesthesiology and Intensive Care (ESAIC) and was originally entirely sponsored by a grant from their clinical trial network. The ESAIC was not involved in this work or in the original study’s data analysis and interpretation.

Conflicts of interest: None

Data availability statement:

The data are not publicly available; requests for access should be made via the European Society of Anaesthesiology and Intensive Care.

REFERENCES

- 1. Kurth CD, Tyler D, Heitmiller E, Tosone SR, Martin L, Deshpande JK. National pediatric anesthesia safety quality improvement program in the United States. Anesth Analg. 2014;119(1):112–121.

- 2. Owens WD. American Society of Anesthesiologists Physical Status Classification System is not a risk classification system. Anesthesiology. 2001;94(2):378–378.

- 3. Hartley B, Powell S, Bew S. Safe delivery of paediatric ENT surgery in the UK: a national strategy 2019. Accessed [2023-02-14]. https://www.entuk.org/news_and_events/news/77/safe_delivery_of_paediatric_ent_surgery_in_the_uk_a_national_strategy/

- 4. Whippey A, Kostandoff G, Ma HK, Cheng J, Thabane L, Paul J. Predictors of unanticipated admission following ambulatory surgery in the pediatric population: a retrospective case–control study. Paediatr Anaesth. 2016;26(8):831–837.

- 5. Junger A, Klasen J, Benson M, et al. Factors determining length of stay of surgical day-case patients. Eur J Anaesthesiol. 2001;18(5):314–321.

- 6. Green Z, Woodman N, McLernon DJ, Engelhardt T. Incidence of paediatric unplanned day-case admissions in the UK and Ireland: a prospective multicentre observational study. Br J Anaesth. 2020;124(4):463–472.

- 7. Rabbitts JA, Groenewald CB, Moriarty JP, Flick R. Epidemiology of Ambulatory Anesthesia for Children in the United States: 2006 and 1996. Anesth Analg. 2010;111(4):1011–1015.

- 8. Tait AR, Voepel-Lewis T, Christensen R, O'Brien LM. The STBUR questionnaire for predicting perioperative respiratory adverse events in children at risk for sleep-disordered breathing. Paediatr Anaesth. 2013;23(6):510–516.

- 9. Lee LK, Bernardo MKL, Grogan TR, Elashoff DA, Ren WHP. Perioperative respiratory adverse event risk assessment in children with upper respiratory tract infection: Validation of the COLDS score. Paediatr Anaesth. 2018;28(11):1007–1014.

- 10. Nasr VG, DiNardo JA, Faraoni D. Development of a pediatric risk assessment score to predict perioperative mortality in children undergoing noncardiac surgery. Anesth Analg. 2017;124(5):1514–1519.

- 11. Valencia E, Staffa SJ, Faraoni D, DiNardo JA, Nasr VG. Prospective External Validation of the Pediatric Risk Assessment Score in Predicting Perioperative Mortality in Children Undergoing Noncardiac Surgery. Anesth Analg. 2019;129(4):1014–1020.

- 12. Habre W, Disma N, Virag K, et al. Incidence of severe critical events in paediatric anaesthesia (APRICOT): a prospective multicentre observational study in 261 hospitals in Europe. Lancet Respir Med. 2017;5(5):412–425.

- 13. Lonsdale H, Jalali A, Ahumada L, Matava C. Machine learning and artificial intelligence in pediatric research: current state, future prospects, and examples in perioperative and critical care. J Pediatr. 2020;221:S3–S10.

- 14. Lonsdale H, Gray GM, Ahumada LM, Yates HM, Varughese A, Rehman MA. The Perioperative Human Digital Twin. Anesth Analg. 2022;134(4):885–892.

- 15. Collins GS, Reitsma JB, Altman DG, Moons KG. Transparent reporting of a multivariable prediction model for Individual Prognosis or Diagnosis (TRIPOD): the TRIPOD statement. J Clin Epidemiol. 2015;68(2):134–143.

- 16. Pedregosa F, Varoquaux G, Gramfort A, et al. Scikit-learn: Machine learning in Python. J Mach Learn Res. 2011;12(Oct):2825–2830.

- 17. Chawla NV, Bowyer KW, Hall LO, Kegelmeyer WP. SMOTE: Synthetic minority oversampling technique. Journal of Artificial Intelligence Research. 2002;16:321–357.

- 18. White IR, Royston P, Wood AM. Multiple imputation using chained equations: Issues and guidance for practice. Stat Med. 2011;30(4):377–399.

- 19. Pierpont GL, Parenti CM. Physician risk assessment and APACHE scores in cardiac care units. Clin Cardiol. 1999;22(5):366–368.

- 20. Allaudeen N, Schnipper JL, Orav EJ, Wachter RM, Vidyarthi AR. Inability of providers to predict unplanned readmissions. J Gen Intern Med. 2011;26(7):771–776.

- 21. Saklad M. Grading of patients for surgical procedures. Anesthesiology. 1941;2(3):281–284.

- 22. Haynes SR, Lawler PG. An assessment of the consistency of ASA physical status classification allocation. Anaesthesia. 1995;50(3):195–199.

- 23. Jacqueline R, Malviya S, Burke C, Reynolds PI. An assessment of interrater reliability of the ASA physical status classification in pediatric surgical patients. Pediatr Anesth. 2006;16(9):928–931.

- 24. Burgoyne LL, Smeltzer MP, Pereiras LA, Norris AL, De Armendi AJ. How well do pediatric anesthesiologists agree when assigning ASA physical status classifications to their patients? Pediatr Anesth. 2007;17(10):956–962.

- 25. Tollinche LE, Yang G, Tan K-S, Borchardt R. Interrater variability in ASA physical status assignment: an analysis in the pediatric cancer setting. J Anesth. 2018;32(2):211–218.

- 26. Nasr VG, Staffa SJ, Zurakowski D, DiNardo JA, Faraoni D. Pediatric Risk Stratification Is Improved by Integrating Both Patient Comorbidities and Intrinsic Surgical Risk. Anesthesiology. 2019;130(6):971–980.

- 27. Aplin S, Baines D, De Lima J. Use of the ASA Physical Status Grading System in pediatric practice. Pediatr Anesth. 2007;17(3):216–222.

- 28. Malviya S, Voepel-Lewis T, Chiravuri SD, et al. Does an objective system-based approach improve assessment of perioperative risk in children? A preliminary evaluation of the ‘NARCO’. Br J Anaesth. 2011;106(3):352–358.

- 29. Nasr VG, DiNardo JA, Faraoni D. Development of a pediatric risk assessment score to predict perioperative mortality in children undergoing noncardiac surgery. Anesth Analg. 2017;124(5):1514–1519.

- 30. Faraoni D, Vo D, Nasr VG, DiNardo JA. Development and validation of a risk stratification score for children with congenital heart disease undergoing noncardiac surgery. Anesth Analg. 2016;123(4):824–830.

- 31. Tariq S, Syed M, Martin T, Zhang X, Schmitz M. Rates of Perioperative Respiratory Adverse Events Among Caucasian and African American Children Undergoing General Anesthesia. Anesth Analg. 2018;127(1):181–187.

- 32. Burton ZA, Lewis R, Bennett T, et al. Prevalence of PErioperAtive CHildhood obesitY in children undergoing general anaesthesia in the UK: a prospective, multicentre, observational cohort study. Br J Anaesth. 2021;127(6):953–961.

- 33. Hofer IS, Burns M, Kendale S, Wanderer JP. Realistically integrating machine learning into clinical practice: a road map of opportunities, challenges, and a potential future. Anesth Analg. 2020;130(5):1115.

- 34. Artificial intelligence and machine learning in software as a medical device action plan. Silver Spring: U.S. Food and Drug Administration. 2019. Accessed [2023-02-14]. https://www.fda.gov/medical-devices/software-medical-device-samd/artificial-intelligence-and-machine-learning-software-medical-device
