Abstract

Objective

The purpose of the present study was to verify the diagnostic performance of an AI system for the automatic detection of teeth, caries, implants, restorations, and fixed prostheses on panoramic radiographs.

Methods

This is a cross-sectional study. A dataset of 1000 panoramic radiographs from 500 adult patients was analyzed by an AI system, and its detections were compared with annotations provided by two oral and maxillofacial radiologists.

Results

A strong correlation (R > 0.5) was observed between the AI system and each of observers 1 and 2 for the number of carious teeth (R = 0.691–0.878), implants (R = 0.770–0.952), restored teeth (R = 0.773–0.834), teeth with fixed prostheses (R = 0.972–0.980), and missing teeth (R = 0.956–0.988).

Discussion

Panoramic radiographs are commonly used for diagnosis and treatment planning. However, they often suffer from artifacts, distortions, and superimpositions that can lead to misinterpretation, which motivates the development of automated detection systems. Artificial intelligence (AI) has transformed various fields, including dentistry, by enabling intelligent systems that can assist in complex tasks such as diagnosis and treatment planning.

Conclusion

The automatic detection by the AI system was comparable to that of oral radiologists, and the system may be useful for automatically identifying these findings on panoramic radiographs. These findings suggest that AI systems could enhance diagnostic accuracy and efficiency in dental practice, potentially reducing diagnostic errors made by inexperienced professionals.

Keywords: Artificial intelligence, panoramic radiographs, dentistry, diagnosis, caries, implants, fixed prosthesis, dental restoration, tooth numbering

Introduction

Artificial intelligence (AI) is the branch of computer science concerned with designing computer systems that can imitate intelligent human behavior to perform complex tasks such as problem-solving, decision-making, understanding human behavior, and reasoning.1,2 Since John McCarthy coined the term in 1956, AI has been widely used in numerous fields, including agriculture, the automotive sector, industry, and medicine.3–5 In medicine, its potential ranges from automatic disease diagnosis to intelligent systems for assisted surgery.6–9 Machine learning (ML) is a branch of AI in which a computer model identifies patterns in a dataset, learns from them, and makes predictions without explicit human instructions, with the aim of building systems capable of automated learning.10–13 Traditional ML techniques relied on features that required human intervention, which made them more error-prone and time-consuming.3,11 To overcome this drawback, a more autonomous, multilayered neural network approach called deep learning (DL) was developed.3,11,13–15 DL is a multilayered system that can detect hierarchical features such as lines, edges, textures, complex shapes, or even lesions and whole organs within an image.16 It attempts to predict outcomes by restructuring unlabeled and unstructured multilevel data.13 DL is composed of numerous interconnected, sequentially stacked processing units called neurons, which collectively form artificial neural networks (ANNs).3,11 An ANN comprises an input layer, an output layer, and multiple hidden layers in between.11,17 Inspired by the analytical processes of the human brain, such ANNs have remarkable information-processing, learning, and generalization capabilities.13,16 Because they involve many neurons, ANNs can solve complex real-world problems more effectively than conventional ML techniques.18

Convolutional neural networks (CNNs), the most widely used subclass of ANNs, use a mathematical operation called convolution to process digital signals such as sound, images, and videos.11 CNNs are primarily used for large and complex signals because they can recognize and classify patterns across broad inputs.11 To process such signals, a CNN slides a small window across the input, scanning from left to right and top to bottom.11 CNNs can be employed for automated feature detection in two-dimensional (2D) and three-dimensional (3D) images,19,20 including the automated detection, segmentation, and classification of complex patterns.20
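To make the sliding-window description concrete, the sketch below implements a single-channel 2D convolution in plain Python, assuming no padding and a stride of 1. As in most deep learning libraries, the kernel is not flipped, so strictly this is cross-correlation. The function name `conv2d_valid` and the toy edge kernel are illustrative assumptions and say nothing about the internals of the VELMENI system.

```python
from typing import List

Matrix = List[List[float]]


def conv2d_valid(image: Matrix, kernel: Matrix) -> Matrix:
    """Slide `kernel` over `image` left to right, top to bottom (stride 1, no padding).

    Like most CNN libraries, the kernel is not flipped, so this is
    technically cross-correlation.
    """
    ih, iw = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = ih - kh + 1, iw - kw + 1
    if out_h < 1 or out_w < 1:
        raise ValueError("kernel must not be larger than the image")

    output: Matrix = []
    for r in range(out_h):          # window moves top to bottom
        row = []
        for c in range(out_w):      # window moves left to right
            # Weighted sum of the image patch currently under the window.
            total = 0.0
            for i in range(kh):
                for j in range(kw):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        output.append(row)
    return output


if __name__ == "__main__":
    # Toy 4x4 image with a dark-to-bright vertical edge.
    img = [
        [0, 0, 1, 1],
        [0, 0, 1, 1],
        [0, 0, 1, 1],
        [0, 0, 1, 1],
    ]
    # Hypothetical 2x2 kernel that responds to left-to-right intensity increases.
    edge = [[-1, 1],
            [-1, 1]]
    for line in conv2d_valid(img, edge):
        print(line)  # [0.0, 2.0, 0.0]
```

The response peaks only where the window straddles the edge. In a trained CNN, kernel weights like these are learned from data rather than hand-set, and many such filters are stacked across layers to build up the hierarchical features described above.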

Radiographic examination is an integral part of the diagnosis, management, and treatment planning of most dental diseases.15,21,22 Panoramic radiography, a low-dose and cost-effective imaging modality, is routinely used in dental practice because it portrays all dentoalveolar structures in a single image.15 It can help dentists diagnose dental pathologies, lesions, anomalies, and fractures of the maxillofacial structures, and plan restorative and prosthetic rehabilitation.21 However, panoramic images may be affected by enlargement, geometric distortion, unequal magnification, and multiple superimpositions, which can lead to misinterpretation and misdiagnosis.21,23 Hence, an automatic detection system for evaluating panoramic radiographs is needed to overcome these challenges.

Applications of AI in dentistry span various specialties, including radiology, endodontics, periodontics, oral and maxillofacial surgery, and orthodontics. AI has demonstrated significant potential in dental disease diagnosis, treatment planning, and reducing errors in dental practice.13,14,16,22,24,25 It has previously shown promising results in numerous areas, including the detection of dental caries, identification of vertical root fractures (VRFs), diagnosis and classification of periodontal disease, classification of malocclusion, automatic identification of cephalometric landmarks, and assistance in treatment planning.

The purpose of the current study was to assess the diagnostic performance of an AI system developed by VELMENI Inc., which uses a CNN-based architecture, for automatically detecting teeth, caries, implants, restorations, and fixed prostheses on panoramic radiographs.

Materials and methods

Radiographic dataset

Panoramic radiographs from June 2022 to May 2023 were randomly selected from the EPIC (an electronic medical record system used mostly in hospitals) and MiPacs systems of the Department of Oral and Maxillofacial Radiology at the University of Mississippi Medical Center. A total of 1000 anonymized dental panoramic radiographs of 500 individuals aged 18 years or older were used to identify teeth, caries, implants, restorations, and fixed prostheses. Most patients identified themselves as Caucasian. The dataset comprised only panoramic radiographs acquired with exposure parameters as low as reasonably achievable and of diagnostically acceptable quality. Panoramic radiographs with artifacts caused by patient positioning, motion, or superimposition of foreign objects were excluded. The research protocol was approved by the IRB (2023–177). Panoramic radiographs were obtained using a Planmeca ProMax unit (Helsinki, Finland) at 66 kVp, 8 mA, and 15.8 s. The reporting of this cross-sectional study conforms to the STROBE guidelines.28

Image annotation

To minimize personal bias, two oral and maxillofacial radiologists, each with at least 5 years of experience, independently identified teeth, caries, implants, restorations (including amalgam and composite), and fixed prostheses. Each of the 1000 anonymized panoramic radiographs was analyzed, and the findings were recorded in an Excel® spreadsheet: the number of teeth with caries (including all types, such as enamel, dentin, secondary, and radicular caries), the number of implants, the number of teeth with fillings (including amalgam, composite, etc.), the number of teeth with fixed dental prostheses (FDPs), and the number of missing teeth.
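The per-radiograph counts listed above form a simple tabular record for each reader. The sketch below assumes each reader's spreadsheet was exported to CSV with one row per radiograph; the column names (`image_id`, `caries`, `implants`, `fillings`, `fdp_teeth`, `missing`), the helper names, and the toy rows are hypothetical and do not reflect the study's actual file layout or data.

```python
import csv
import io
from dataclasses import dataclass
from typing import Dict, List, Tuple

# Hypothetical column names for a per-reader export (not the study's actual layout).
FINDINGS = ("caries", "implants", "fillings", "fdp_teeth", "missing")


@dataclass(frozen=True)
class RadiographCounts:
    """Counts recorded by one reader for one panoramic radiograph."""
    image_id: str
    caries: int     # teeth with caries of any type
    implants: int   # number of implants
    fillings: int   # teeth with fillings (amalgam, composite, etc.)
    fdp_teeth: int  # teeth with fixed dental prostheses
    missing: int    # missing teeth


def load_counts(csv_text: str) -> Dict[str, RadiographCounts]:
    """Parse one reader's CSV export into records keyed by image_id."""
    records: Dict[str, RadiographCounts] = {}
    for row in csv.DictReader(io.StringIO(csv_text)):
        counts = {name: int(row[name]) for name in FINDINGS}
        records[row["image_id"]] = RadiographCounts(image_id=row["image_id"], **counts)
    return records


def paired_vectors(a: Dict[str, RadiographCounts],
                   b: Dict[str, RadiographCounts],
                   finding: str) -> Tuple[List[int], List[int]]:
    """Align two readers on the radiographs both annotated, for one finding."""
    if finding not in FINDINGS:
        raise KeyError(f"unknown finding: {finding}")
    shared = sorted(set(a) & set(b))
    return ([getattr(a[k], finding) for k in shared],
            [getattr(b[k], finding) for k in shared])


if __name__ == "__main__":
    header = "image_id,caries,implants,fillings,fdp_teeth,missing\n"
    # Toy rows for illustration only; not data from the study.
    reader1 = load_counts(header + "p001,2,0,5,0,3\np002,0,1,3,2,6\n")
    reader2 = load_counts(header + "p002,1,1,3,2,6\np001,2,0,4,0,3\n")
    print(paired_vectors(reader1, reader2, "fillings"))  # ([5, 3], [4, 3])
```

Aligning readers by `image_id` rather than by row order keeps the counts correctly paired even if the two spreadsheets are sorted differently, which matters for any paired statistic computed afterwards.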

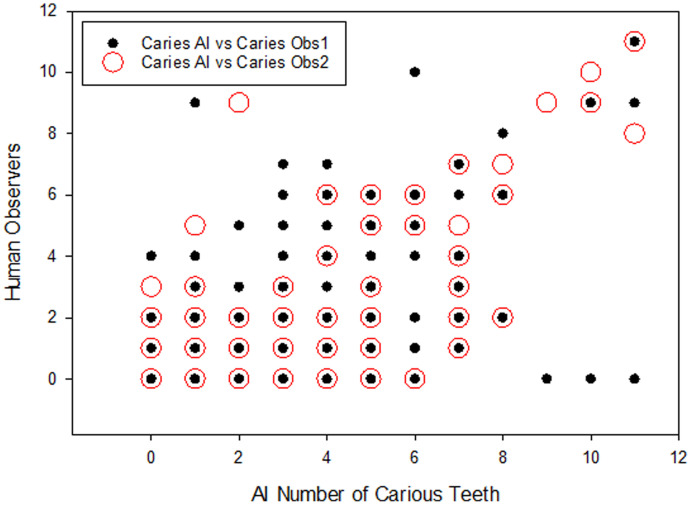

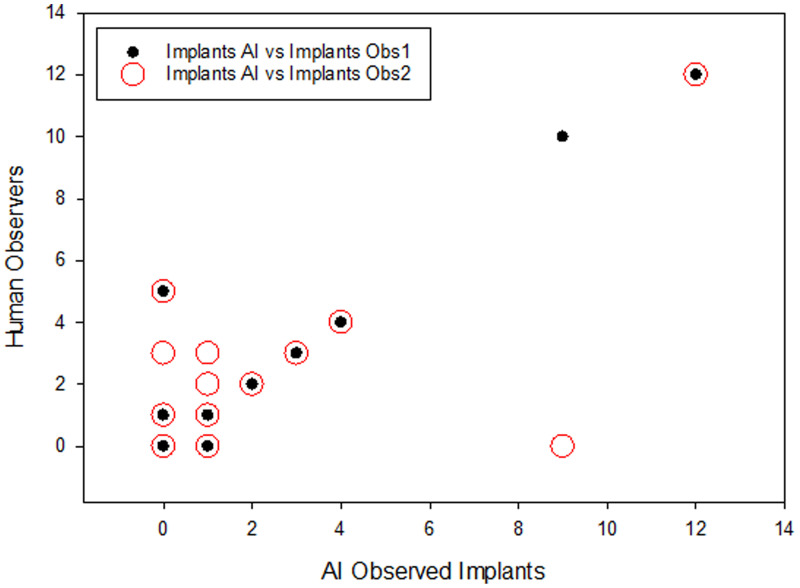

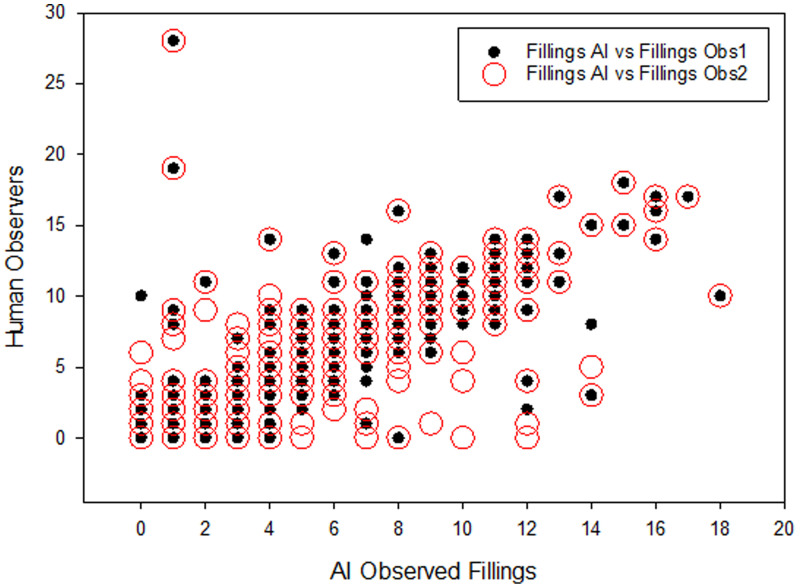

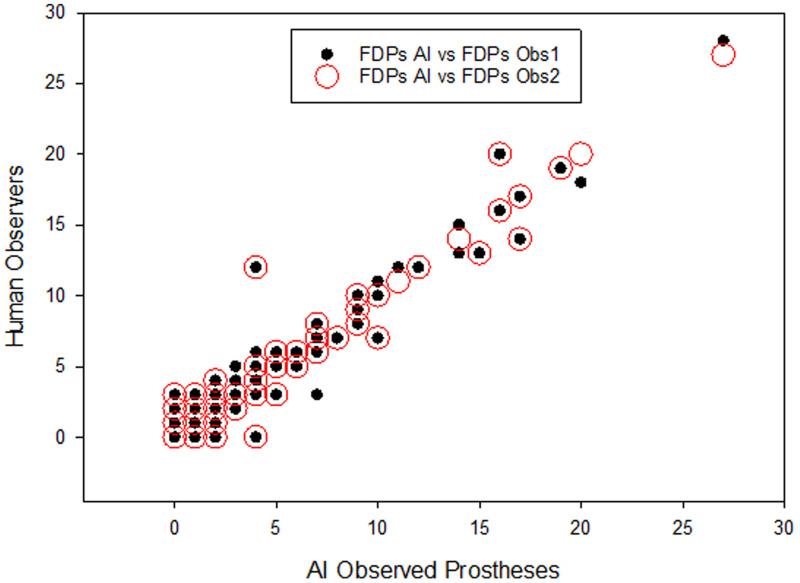

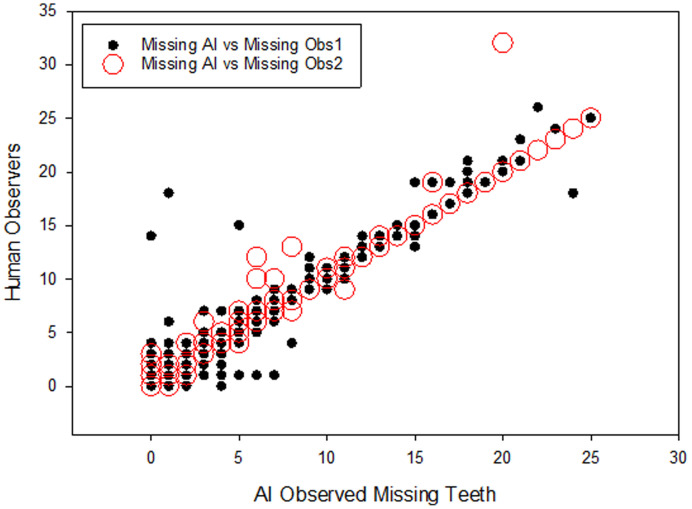

The same panoramic radiographs were then analyzed by the CNN-based AI system (VELMENI Inc., CA, USA) to detect the number of teeth with caries, fillings, and FDPs, and the number of implants. A dark filled circle was used to indicate agreement on the labeling of these findings between the AI system and observer 1, and a red empty circle was used to indicate agreement between the AI system and observer 2.

Statistical analysis

Pearson's product-moment correlation coefficient was used to compare the dental findings detected by the AI system with those recorded by observers 1 and 2.
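For reference, Pearson's coefficient for paired counts xᵢ (e.g., AI) and yᵢ (an observer) over n radiographs is R = Σ(xᵢ − x̄)(yᵢ − ȳ) / √[Σ(xᵢ − x̄)² · Σ(yᵢ − ȳ)²], and its significance is conventionally tested with t = R√(n − 2) / √(1 − R²) on n − 2 degrees of freedom. The sketch below computes both in plain Python; it is not the authors' analysis code, and turning t into a P value requires a t-distribution CDF (for example, SciPy's `scipy.stats.pearsonr` returns R and a two-sided P value directly).

```python
import math
from typing import Sequence, Tuple


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson product-moment correlation coefficient of paired samples x and y."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two equal-length samples with at least 2 pairs")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Cross-products and squared deviations from each sample mean.
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("R is undefined when one sample is constant")
    return sxy / math.sqrt(sxx * syy)


def t_statistic(r: float, n: int) -> Tuple[float, int]:
    """t statistic and degrees of freedom for testing the null hypothesis rho = 0."""
    df = n - 2
    if abs(r) >= 1:
        return math.copysign(math.inf, r), df  # perfect correlation: t is unbounded
    return r * math.sqrt(df / (1 - r * r)), df


if __name__ == "__main__":
    ai = [2, 0, 1, 3, 0]        # toy counts per radiograph, not study data
    observer = [2, 0, 1, 2, 1]
    r = pearson_r(ai, observer)
    t, df = t_statistic(r, len(ai))
    print(f"R = {r:.3f}, t = {t:.2f} on {df} df")
```

With n = 1000 radiographs, even moderate R values yield very large t statistics, which is consistent with every coefficient in the tables being reported at P < 0.001.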

Results

Pearson product-moment correlation analysis showed a strong correlation (R > 0.5) between the AI detections and those of Observer 1 and Observer 2 for all structures identified on the panoramic radiographs. The correlation coefficients between the AI system and the two observers ranged from 0.691 to 0.878 for the number of teeth with caries (Table 1 and Figure 1), from 0.770 to 0.952 for the number of implants (Table 2 and Figure 2), from 0.773 to 0.834 for the number of teeth with fillings (Table 3 and Figure 3), from 0.972 to 0.980 for the number of teeth with fixed prostheses (Table 4 and Figure 4), and from 0.956 to 0.988 for the number of missing teeth (Table 5 and Figure 5).

Table 1.

Interobserver agreement (Pearson product-moment correlation) for the number of teeth with caries.

| | Observer 1 | Observer 2 | AI |
|---|---|---|---|
| Observer 1 | | 0.730 (P < 0.001) | 0.691 (P < 0.001) |
| Observer 2 | 0.730 (P < 0.001) | | 0.878 (P < 0.001) |
| AI | 0.691 (P < 0.001) | 0.878 (P < 0.001) | |

Figure 1.

Interobserver agreement (Pearson product-moment correlation) for the number of teeth with caries, showing a strong correlation between the AI detections and those of observers 1 and 2.

Table 2.

Interobserver agreement (Pearson product-moment correlation) for the number of implants.

| | Observer 1 | Observer 2 | AI |
|---|---|---|---|
| Observer 1 | | 0.797 (P < 0.001) | 0.952 (P < 0.001) |
| Observer 2 | 0.797 (P < 0.001) | | 0.770 (P < 0.001) |
| AI | 0.952 (P < 0.001) | 0.770 (P < 0.001) | |

Figure 2.

Interobserver agreement (Pearson product-moment correlation) for the number of implants, showing a strong correlation between the AI detections and those of observers 1 and 2.

Table 3.

Interobserver agreement (Pearson product-moment correlation) for the number of teeth with fillings.

| | Observer 1 | Observer 2 | AI |
|---|---|---|---|
| Observer 1 | | 0.917 (P < 0.001) | 0.834 (P < 0.001) |
| Observer 2 | 0.917 (P < 0.001) | | 0.773 (P < 0.001) |
| AI | 0.834 (P < 0.001) | 0.773 (P < 0.001) | |

Figure 3.

Interobserver agreement (Pearson product-moment correlation) for the number of teeth with fillings, showing a strong correlation between the AI detections and those of observers 1 and 2.

Table 4.

Interobserver agreement (Pearson product-moment correlation) for the number of teeth with FDPs.

| | Observer 1 | Observer 2 | AI |
|---|---|---|---|
| Observer 1 | | 0.991 (P < 0.001) | 0.972 (P < 0.001) |
| Observer 2 | 0.991 (P < 0.001) | | 0.980 (P < 0.001) |
| AI | 0.972 (P < 0.001) | 0.980 (P < 0.001) | |

Figure 4.

Interobserver agreement (Pearson product-moment correlation) for the number of teeth with FDPs, showing a strong correlation between the AI detections and those of observers 1 and 2.

Table 5.

Interobserver agreement (Pearson product-moment correlation) for the number of missing teeth.

| | Observer 1 | Observer 2 | AI |
|---|---|---|---|
| Observer 1 | | 0.946 (P < 0.001) | 0.956 (P < 0.001) |
| Observer 2 | 0.946 (P < 0.001) | | 0.988 (P < 0.001) |
| AI | 0.956 (P < 0.001) | 0.988 (P < 0.001) | |

Figure 5.

Interobserver agreement (Pearson product-moment correlation) for the number of missing teeth, showing a strong correlation between the AI detections and those of observers 1 and 2.

Discussion

Accurate diagnosis is one of the most crucial steps in the dental office. Dental radiography, especially panoramic radiography, is therefore a common and essential tool for assessing patients and planning treatment. Its widespread use among professionals and acceptance by patients stem from its ability to visualize all orofacial structures in a single image, its simple technique, the low radiation dose absorbed by the patient, its low cost, and its painlessness.26,27 Despite these benefits, panoramic radiography has limitations, such as a lack of three-dimensionality, the potential presence of artifacts, and a lack of homogeneity in regions of interest, which may lead clinicians to incorrect interpretations.28 For these reasons, automatic detection methods that assist dentists and their teams in interpreting panoramic radiographs could contribute substantially to accurate diagnosis and treatment planning.

The purpose of the present study was to verify the diagnostic performance of the VELMENI Inc. AI system for the automatic detection of teeth, caries, implants, restorations, and fixed prostheses on panoramic radiographs. The results of the Pearson product-moment correlation analysis highlight key points regarding the agreement between the CNN-based VELMENI system and two human observers in interpreting panoramic radiographs.

A previous study that used a CNN for automated tooth detection and numbering found its performance comparable to that of experts, suggesting that such systems could aid in completing dental charts and save professionals time.29 For accurate tooth identification and numbering, the AI model should be trained on a large dataset.30 In the present study, the number of missing teeth yielded one of the best results, with a strong positive correlation between the AI and both human observers (R = 0.956 and R = 0.988).

The use of CNNs to identify dental implants is a widely researched topic in AI applied to implantology. Numerous studies have reported promising outcomes in identifying dental implants on panoramic and periapical radiographs.31–35 These findings align with the results of our study, in which a strong correlation was observed between the AI and the two human observers. Given the vast array of implant brands and types used worldwide, such results can help dentists identify existing implants when continuing prosthetic treatment or replacing previous treatments for which no record of the prior treatment is available.31

CNNs are AI models particularly well suited to image classification and are therefore widely used for caries detection.36 Early diagnosis of carious lesions is challenging because of low sensitivity and potential disagreement between examiners.36–38 However, studies show that CNNs achieve good accuracy in caries diagnosis.38–40 A literature review36 reported that the accuracy of AI models in caries detection ranges from 83.6% to 97.1%. Furthermore, AI assistance significantly increased dentists' detection of enamel-only caries, from 44.3% to 75.8%. The present study also found a strong correlation in caries detection between the AI and the two human observers, with Pearson correlation coefficients of R = 0.691 with Observer 1 and R = 0.878 with Observer 2.

A previous study employed the U-Net architecture to automatically segment amalgam and composite resin restorations in panoramic images and achieved highly accurate detection.41 In contrast, another study that used a CNN obtained good results for metallic restorations, with a sensitivity of 85.48%, but a sensitivity of only 41.11% for composite resin restorations.27 The present study found a strong correlation between the AI system and the two human observers for restorations, with correlation coefficients ranging from R = 0.773 to R = 0.834. These findings underscore the potential contribution of AI to detecting dental restorations and add to the existing knowledge in the field.
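The studies cited above report accuracy and sensitivity, which come from a confusion matrix of per-item detection decisions against a reference standard: sensitivity = TP / (TP + FN) and accuracy = (TP + TN) / (TP + TN + FP + FN). The sketch below computes both; the class name and counts are hypothetical and chosen only to show the arithmetic. The present study's per-radiograph count correlations do not by themselves yield these metrics, because they involve no lesion-level matching of true and false positives.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Confusion:
    """Counts of per-item detection decisions against a reference standard."""
    tp: int  # true positives: finding present and detected
    fn: int  # false negatives: finding present but missed
    fp: int  # false positives: detected but absent
    tn: int  # true negatives: absent and not detected

    def sensitivity(self) -> float:
        """Proportion of true findings that were detected (recall)."""
        return self.tp / (self.tp + self.fn)

    def accuracy(self) -> float:
        """Proportion of all decisions that were correct."""
        total = self.tp + self.fn + self.fp + self.tn
        return (self.tp + self.tn) / total


if __name__ == "__main__":
    # Made-up counts, chosen only to illustrate the arithmetic.
    c = Confusion(tp=45, fn=5, fp=10, tn=140)
    print(f"sensitivity = {c.sensitivity():.1%}")  # 45 / 50 = 90.0%
    print(f"accuracy    = {c.accuracy():.1%}")     # 185 / 200 = 92.5%
```

Sensitivity ignores false positives entirely, which is one reason a detector can score well on metallic restorations yet poorly on radiographically subtler composite ones.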

Counting teeth with fixed dental prostheses (FDPs) yielded the best results, along with counting missing teeth, as mentioned earlier. In our study, the AI correlated strongly with observers 1 and 2, with R = 0.972 and R = 0.980, respectively. Previous studies have used CNNs to detect fixed dental prostheses and full crowns with high precision and efficiency.42,43 These studies further emphasize the potential of CNNs in dental prosthesis detection, with accuracy and efficiency comparable to or even surpassing those of dentists with 3 to 10 years of experience.

Although the current study showed promising results in identifying common conditions on panoramic radiographs, it has limitations. The dataset was limited and lacked external data, which may restrict the generalizability of the results. Our patient population came exclusively from the state of Mississippi, so our findings may not generalize to other regions; variations in demographics, socioeconomic status, and healthcare access could affect their applicability to broader populations. To address these limitations, future studies should consider using larger and more diverse datasets, which could offer further insights and strengthen the validity of the findings presented here.

Conclusion

The findings of the present study indicate that AI systems based on deep learning may be useful in clinical practice for the automatic detection of teeth, caries, implants, restorations, and fixed prostheses on panoramic images, thereby helping to improve efficiency. Furthermore, machine learning applied to routine dental radiographs might complement clinical examinations and support the training of future dental practitioners.

Lastly, the strong correlation between the VELMENI Inc. AI system's detections and the radiologists' annotations highlights the clinical relevance of AI in dental diagnostics. This agreement indicates that AI may effectively assist in identifying dental conditions, potentially increasing diagnostic accuracy, reducing human error, and improving treatment planning. Integrating AI into clinical practice might improve efficiency, support dental professionals, and lead to better patient outcomes.

Acknowledgements

We thank the University of Mississippi Medical Center School of Dentistry Division of Oral and Maxillofacial Radiology Clinic.

Abbreviations

- AI: artificial intelligence
- ANN: artificial neural network
- CNN: convolutional neural network
- DL: deep learning
- ML: machine learning
- 2D: two-dimensional
- 3D: three-dimensional
- VRFs: vertical root fractures
- FDPs: fixed dental prostheses

Authors' contributions: R.J., A.S., and P.N. designed the study and performed data analysis, annotation, and coordination. T.V. collected data. A.P., P.T., and P.J. performed the literature review. J.G. performed the statistical analysis. A.P., P.T., and M.R. wrote the manuscript. A.S., M.S., R.J., and MBG edited the draft of the manuscript. R.J., M.F., and A.F. gave final approval of the manuscript. All authors read and approved the final manuscript.

Data availability statement: Data may be available upon request.

The authors declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Ethics approval: The University of Mississippi Medical Center Institutional Review Committee gave ethical approval (IRB approval number: IRB-2023–177).

Funding: The authors received no financial support for the research, authorship, and/or publication of this article.

ORCID iDs: Rohan Jagtap https://orcid.org/0000-0002-9115-7235

Avula Samatha https://orcid.org/0000-0003-1603-2253

References

- 1.Xu Y, Liu X, Cao X, et al. Artificial intelligence: a powerful paradigm for scientific research. The Innovation 2021; 2: 100179.

- 2.Hashimoto DA, Rosman G, Rus D, et al. Artificial Intelligence in Surgery: Promises and Perils. (1528-1140 (Electronic)).

- 3.Joudi NAE, Othmani MB, Bourzgui F, et al. Review of the role of artificial intelligence in dentistry: current applications and trends. Procedia Comput Sci 2022; 210: 173–180.

- 4.Al-Salman O, Mustafina J, Shahoodh G. A systematic review of artificial neural networks in medical science and applications. In: 2020 13th international conference on developments in eSystems engineering (DeSE). Liverpool, United Kingdom: IEEE, 2020, pp.279–282.

- 5.Poplin R, Varadarajan AV, Blumer K, et al. Prediction of cardiovascular risk factors from retinal fundus photographs via deep learning. Nature Biomedical Engineering 2018; 2: 158–164.

- 6.Rahimy E, Wilson J, Tsao TC, et al. Robot-assisted intraocular surgery: development of the IRISS and feasibility studies in an animal model. Eye 2013; 27: 972–978.

- 7.Yamada M, Saito Y, Imaoka H, et al. Development of a real-time endoscopic image diagnosis support system using deep learning technology in colonoscopy. Sci Rep 2019; 9: 14465.

- 8.Kalra S, Tizhoosh HR, Shah S, et al. Pan-cancer diagnostic consensus through searching archival histopathology images using artificial intelligence. npj Digital Medicine 2020; 3: 31.

- 9.McKinney SM, Sieniek M, Godbole V, et al. International evaluation of an AI system for breast cancer screening. (1476-4687 (Electronic)).

- 10.Carleo G, Cirac I, Cranmer K, et al. Machine learning and the physical sciences. Rev Mod Phys 2019; 91: 045002.

- 11.Nguyen TT, Larrivée N, Lee A, Bilaniuk O, Durand R. Use of Artificial Intelligence in Dentistry: Current Clinical Trends and Research Advances. (1488-2159 (Electronic)).

- 12.Schwendicke F, Samek W, Krois J. Artificial Intelligence in Dentistry: Chances and Challenges. (1544-0591 (Electronic)).

- 13.Ghods K, Azizi A, Jafari A, et al. Application of Artificial Intelligence in Clinical Dentistry, a Comprehensive Review of literature. 2023.

- 14.Ahmed N, Abbasi M, Zuberi F, et al. Artificial Intelligence Techniques: Analysis, Application, and Outcome in Dentistry-A Systematic Review. (2314-6141 (Electronic)).

- 15.Başaran M, Çelik Ö, Bayrakdar I, et al. Diagnostic charting of panoramic radiography using deep-learning artificial intelligence system. (1613-9674 (Electronic)).

- 16.Bindushree V, Sameen R, Vasudevan V, et al. Artificial intelligence: in modern dentistry. Journal of Dental Research and Review 2020; 7: 27–31.

- 17.Ossowska A, Kusiak A, Świetlik D. Artificial Intelligence in Dentistry-Narrative Review. (1660-4601 (Electronic)). Article 3449. doi:10.3390/ijerph19063449.

- 18.Fatima A, Shafi I, Afzal H, et al. Advancements in Dentistry with Artificial Intelligence: Current Clinical Applications and Future Perspectives. (2227-9032 (Print)). Article 2188. doi:10.3390/healthcare10112188.

- 19.Gan F, Liu H, Qin WG, et al. Application of artificial intelligence for automatic cataract staging based on anterior segment images: comparing automatic segmentation approaches to manual segmentation. (1662-4548 (Print)).

- 20.Hung K, Ai Q, Wong L, et al. Current Applications of Deep Learning and Radiomics on CT and CBCT for Maxillofacial Diseases. (2075-4418 (Print)). Article 110. doi:10.3390/diagnostics13010110.

- 21.White SC. Oral Radiology: Principles And Interpretation. 6th Edition. Amsterdam, The Netherlands: Elsevier (A Division of Reed Elsevier India Pvt. Limited), 2009.

- 22.Ding H, Wu J, Zhao W, et al. Artificial intelligence in dentistry—A review. Frontiers in Dental Medicine 2023; 4.

- 23.Perschbacher S. Interpretation of panoramic radiographs. (1834-7819 (Electronic)).

- 24.Agrawal P, Nikhade P. Artificial Intelligence in Dentistry: Past, Present, and Future. (2168-8184 (Print)).

- 25.Ari T, Sağlam H, Öksüzoğlu H, et al. Automatic feature segmentation in dental periapical radiographs. Diagnostics 2022; 12: 3081.

- 26.Yüksel AE, Gültekin S, Simsar E, et al. Dental enumeration and multiple treatment detection on panoramic X-rays using deep learning. Sci Rep 2021; 11: 12342.

- 27.Bonfanti-Gris M, Garcia-Cañas A, Alonso-Calvo R, et al. Evaluation of an artificial intelligence web-based software to detect and classify dental structures and treatments in panoramic radiographs. J Dent 2022; 126: 104301.

- 28.Vinayahalingam S, Goey R-S, Kempers S, et al. Automated chart filing on panoramic radiographs using deep learning. J Dent 2021; 115: 103864.

- 29.Tuzoff DV, Tuzova LN, Bornstein MM, et al. Tooth detection and numbering in panoramic radiographs using convolutional neural networks. Dentomaxillofacial Radiology 2019; 48: 20180051.

- 30.Gülüm S, Kutal S, Cesur Aydin K, et al. Effect of data size on tooth numbering performance via artificial intelligence using panoramic radiographs. Oral Radiol 2023; 39: 715–721.

- 31.da Mata Santos RP, Vieira Oliveira Prado HE, Soares Aranha Neto I, et al. Automated identification of dental implants using artificial intelligence. Int J Oral Maxillofac Implants 2021; 36: 918–923.

- 32.Kim H-S, Ha E-G, Kim YH, et al. Transfer learning in a deep convolutional neural network for implant fixture classification: a pilot study. Imaging Sci Dent 2022; 52: 219–224.

- 33.Sukegawa S, Yoshii K, Hara T, et al. Multi-Task deep learning model for classification of dental implant brand and treatment stage using dental panoramic radiograph images. Biomolecules 2021; 11.

- 34.Hadj Saïd M, Le Roux M-K, Catherine J-H, Lan R. Development of an Artificial Intelligence Model to Identify a Dental Implant from a Radiograph. The International journal of oral & maxillofacial implants 2020; 12/10: 1077–1082.

- 35.Lee J-H, Kim Y-T, Lee J-B, et al. A performance comparison between automated deep learning and dental professionals in classification of dental implant systems from dental imaging: a multi-center study. Diagnostics 2020; 10: 10.

- 36.Tabatabaian F, Vora SR, Mirabbasi S. Applications, functions, and accuracy of artificial intelligence in restorative dentistry: a literature review. J Esthet Restor Dent 2023; 35: 842–859.

- 37.Mohammad-Rahimi H, Motamedian SR, Rohban MH, et al. Deep learning for caries detection: a systematic review. J Dent 2022; 122: 104115.

- 38.Kunt L, Kybic J, Nagyová V, et al. Automatic caries detection in bitewing radiographs: part I—deep learning. Clin Oral Investig 2023; 27: 7463–7471.

- 39.Li S, Liu J, Zhou Z, et al. Artificial intelligence for caries and periapical periodontitis detection. J Dent 2022; 122: 104107.

- 40.Mertens S, Krois J, Cantu AG, et al. Artificial intelligence for caries detection: randomized trial. J Dent 2021; 115: 103849.

- 41.Oztekin F, Katar O, Sadak F, et al. Automatic semantic segmentation for dental restorations in panoramic radiography images using U-net model. Int J Imaging Syst Technol 2022; 32: 1990–2001.

- 42.Choi H-R, Siadari TS, Kim J-E, et al. Automatic detection of teeth and dental treatment patterns on dental panoramic radiographs using deep neural networks. Forensic Sciences Research 2022; 7: 456–466.

- 43.Zhu J, Chen Z, Zhao J, et al. Artificial intelligence in the diagnosis of dental diseases on panoramic radiographs: a preliminary study. BMC Oral Health 2023; 23: 58.