Significance

Fundamentals of statistical physics explain that systems in thermal equilibrium exhibit spontaneous order because orderly configurations have low energy. This fact is remarkable, and powerful, because energy is a “local” property of configurations. Nonequilibrium systems, including engineered and living systems, can also exhibit order, but there is no property analogous to energy that generally explains why orderly configurations of these systems often emerge. However, recent experiments suggest that a local property called “rattling” predicts which configurations are favored, at least for a broad class of nonequilibrium systems. We develop a theory of rattling that explains for which systems it works and why, and we demonstrate its application across scientific domains.

Keywords: nonequilibrium steady states, Markov chains, self-organization, Boltzmann distribution

Abstract

The global steady state of a system in thermal equilibrium exponentially favors configurations with lesser energy. This principle is a powerful explanation of self-organization because energy is a local property of configurations. For nonequilibrium systems, there is no such property for which an analogous principle holds, hence no common explanation of the diverse forms of self-organization they exhibit. However, a flurry of recent empirical results has shown that a local property of configurations called “rattling” predicts the steady states of some nonequilibrium systems, leading to claims of a far-reaching principle of nonequilibrium self-organization. But for which nonequilibrium systems is rattling accurate, and why? We develop a theory of rattling in terms of Markov processes that gives simple and precise answers to these key questions. Our results show that rattling predicts a broader class of nonequilibrium steady states than has been claimed and for different reasons than have been suggested. Its predictions hold to an extent determined by the relative variance of, and correlation between, the local and global “parts” of a steady state. We show how these quantities characterize the local-global relationships of various random walks on random graphs, spin-glass dynamics, and models of animal collective behavior. Surprisingly, we find that the core idea of rattling is so general as to apply to equilibrium and nonequilibrium systems alike.

Self-organization abounds in nature, from the spontaneous assembly of fractal protein complexes (1), to nests of army ants, or bivouacs, formed by entangling potentially millions of their own, living bodies (2), to “marching bands” of desert locusts that can span hundreds of square kilometers (3). These varied phenomena warrant a common explanation because each entails a system that occupies relatively few configurations* among an overwhelming number of alternatives. For physical systems in thermal equilibrium, the Boltzmann distribution provides such an explanation: Systems preferentially occupy configurations of lower energy. Specifically, the probability $\pi(x)$ of a configuration $x$ of a physical system in thermal equilibrium satisfies

$$\pi(x) \propto e^{-\beta E(x)} \tag{1}$$

in terms of a constant $\beta$ and the configuration’s energy $E(x)$ (4).

The Boltzmann distribution is remarkable because the energy of a configuration is “local” in the sense that it does not depend on the dynamics that connect other configurations. This fact makes it possible to understand the equilibrium configurations of many-body systems, like proteins, far more efficiently than is possible with methods that apply to nonequilibrium systems (5). In contrast, there can be no local property of configurations that generally determines their weight in the global, steady-state distribution of a nonequilibrium system (6–8). Recent empirical evidence, however, suggests that a local property called “rattling” predicts the configurational weights of a broad class of nonequilibrium steady states (9–15).

Rattling.

Chvykov et al. offer a compelling heuristic: If the motion of a system is “so complex, nonlinear, and high-dimensional that no global symmetry or constraint can be found for its simplification,” then it amounts to diffusion in an abstract state space (10). A function of the motion’s effective diffusivity in the vicinity of a configuration , called the rattling, then predicts the steady-state weight of .

As a simple example, consider a one-dimensional diffusion with variable diffusivity $D(x)$, which has a steady-state distribution that is proportional to $1/D(x)$. In this case, Chvykov et al. define the rattling to be $R(x) = \log D(x)$ and the heuristic is literally true: The steady-state distribution has the form of the Boltzmann distribution with $\gamma = 1$, written in terms of $R(x)$ as

$$\pi(x) \propto e^{-\gamma R(x)} \tag{2}$$

For more general dynamics, Chvykov et al. replace with an estimate of the rate at which the mean squared displacement from grows in each dimension and replace the rattling with , which is a higher-dimensional analogue of . Moreover, instead of the strict proportionality in Eq. 2, which implies that the logarithms of and are perfectly linearly correlated with a slope of , the heuristic vaguely predicts that their correlation is high and that the slope , which may vary by system, is “of order .”

Qualitatively, the rattling heuristic says that systems spend more time in configurations that they exit more slowly. This claim may seem trivial at first, but it is not—the steady-state distribution is global, while rattling is local—and it cannot be true in general. A theory of rattling should explain precisely for which systems Eq. 2 approximately holds, in terms of formulas for and .

Although the initial body of work on rattling does not identify the class of nonequilibrium systems to which the heuristic applies, it demonstrates that this class is broad. Examples include experiments with swarms of robots (10) and collectives of active microparticles (13); simulations of spin glasses (11) and stochastic dynamics with strong timescale separation (9); and numerical studies of the Lorenz equations (12) and mechanical networks (15). Strikingly, in many cases, the slope in Eq. 2 is nearly equal to (10).

Summary of Our Results.

The remarkable accuracy and scope of the rattling heuristic suggest that there is a simple, underlying mathematical theory of rattling. Our main results provide such a theory in terms of Markov chains, which serve as a general model of nonequilibrium steady states (16–21). Specifically, we derive formulas for Markov chain analogues of the correlation and slope in terms of two further quantities, denoted and , that respectively characterize the correlation between the stationary distribution’s local and global “parts” and the relative number of exponential scales over which these parts vary. The quantities and together determine the accuracy of rattling’s predictions for any Markov chain.†

Even when the rattling heuristic holds, in the sense that and are close to , the steady-state probabilities of some states can vastly differ from those predicted by Eq. 2. This discrepancy limits the value of the heuristic to some applications, like the estimation of observables. With this in mind, we also consider a stronger claim than the rattling heuristic makes: that the ratios of the steady-state probabilities and those in Eq. 2 are uniformly close to . Our results identify conditions on the rates of a Markov chain that guarantee that its stationary probabilities are within a small constant factor of those specified by an analogue of Eq. 2:

$$\pi(x) \propto \frac{1}{q(x)} \tag{3}$$

Here, $q(x)$ denotes the exit rate of state $x$, the logarithm of which is the Markov chain analogue of rattling (see SI Appendix, Text for details). When Eq. 3 holds, $\pi$ is akin to a Boltzmann distribution because, like the energy of a configuration, the exit rate of a state is “local” (Fig. 1). We show that for Eq. 3 to hold up to a small constant factor, it suffices for the global part of the stationary distribution to vary little.

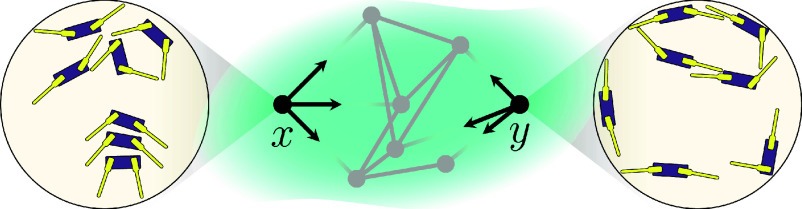

Fig. 1.

Exit rates are local. A state of a Markov chain abstracts a configuration of a physical system, like the robot swarm of Chvykov et al. (10). The relative probability of in the stationary distribution is purely a function of the rates of the chain. The sum of the rates leaving is experimentally accessible, because this sum is the reciprocal of the average amount of time that it takes the system to leave . This quantity is “local” to because it does not depend on the rates leaving any .

Applications.

We briefly highlight three important applications of our results: to explain self-organization in broad classes of nonequilibrium systems, to estimate the observables of some nonequilibrium steady states, and to analyze the local-global relationship in systems across domains.

First, if a nonequilibrium system has special states of low rattling, then rattling explains self-organization to the extent that the correlation is close to . Our results show that there are essentially two distinct classes of systems, defined in terms of the quantities and , for which . We later discuss how these quantities might be estimated from data.

Second, our results explain that, if the global part of a nonequilibrium steady state is hypothesized or inferred to vary little, then the configurational weights can be used to estimate observables, in the same way that Boltzmann weights are used to estimate observables of equilibrium steady states. For example, these weights could be used to efficiently generate one-shot samples from the nonequilibrium steady states of some many-body systems (5).

Third, our results are even useful for analyzing systems with known steady-state distributions, because they relate the local and global parts of arbitrary steady states. This is especially interesting when the rates of a Markov chain are functions of a parameter. (For example, this is the context of fundamental results in stochastic thermodynamics, like the Jarzynski equality (22) and the Crooks fluctuation theorem (23, 24), where the parameter models the influence of external forces.) Because we have not precisely defined and yet, we only explain the high-level idea. Every Markov chain defines a pair in the strip , and changing a parameter of the chain amounts to placing a dynamics on these pairs. Our results allow us to interpret certain regions of the strip as classes of steady states that have local weights. Later, we demonstrate how this idea can be used to analyze bifurcations in models of animal collective behavior.

Broader Perspective and Related Work.

Markov chains that satisfy detailed balance‡ are analogous to equilibrium systems because they are statistically time-reversible and have stationary distributions that can be expressed in the Boltzmann form (19). Accordingly, studies that model nonequilibrium steady states as Markov chains typically emphasize how and to what extent these chains violate detailed balance. For example, the conservation of probability requires that violations of detailed balance arise from net fluxes of probability around cycles of states. These circular fluxes form the basis of Hill’s thermodynamic formalism (25, 26), Schnakenberg’s network theory (16, 27), and cycle representations of Markov chains (28–31), and they appear in the Helmholtz–Hodge decomposition of Markov chains (32). Moreover, probability fluxes—and functions of them, like thermodynamic forces and entropy production—are the subject of nonequilibrium fluctuation theorems (33–36), thermodynamic uncertainty relations (37–39), and results concerning the response of nonequilibrium steady states to rate perturbations (40–43).

It may come as a surprise, then, that our results make no reference to detailed balance or the nature of its possible failure. Instead, we view the search for nonequilibrium analogues of the Boltzmann distribution as part of a broader effort to understand when local information about the rates of a Markov chain suffices to determine or predict its stationary distribution (Fig. 1). From this perspective, chains that satisfy detailed balance are not an ideal from which other chains depart; while their stationary distributions can be expressed in the Boltzmann form, the associated weights are nonlocal functions of the rates (19, 31) (Fig. 2).

Fig. 2.

The relationship between the rates of an irreducible Markov chain and its stationary distribution specializes for certain classes of rate matrices. In general, all of the rates are necessary to determine ; the Markov chain tree theorem specifies how to do so, by summing products of the rates along the spanning trees of the underlying adjacency graph (Eq. 4). However, if the chain satisfies detailed balance, then it suffices to know the ratio of the product of forward rates to the product of reverse rates along a path to from a fixed, reference state. If the chain has a doubly stochastic jump chain, then it suffices to know the exit rate of each state .

In general, if $Q$ is an irreducible rate matrix, then its stationary distribution $\pi$ is the unique solution to $\pi Q = 0$, which implicates all of the rates $Q(x,y)$. In fact, according to the Markov chain tree theorem (44), $\pi(x)$ is proportional to a sum over spanning trees of products of rates:

$$\pi(x) \propto \sum_{T} \prod_{(y,z) \in T_x} Q(y,z) \tag{4}$$

Here, the sum ranges over spanning trees $T$ of the chain’s transition graph and $T_x$ is the set of directed edges that results from “pointing” all of the edges of $T$ toward $x$ (Fig. 2). Our results identify special classes of chains for which relatively little and, importantly, exclusively local information about their rates suffices to determine their stationary distributions. For example, for chains that satisfy Eq. 3, it suffices to know the exit rates $q$ to determine $\pi$.
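To make the tree formula concrete, the following sketch evaluates it by brute force for a small chain and checks that the result is stationary. The function names and the 3-state rate matrix are illustrative assumptions, not taken from the paper, and the enumeration is exponential in the number of states, so it only suits tiny examples.

```python
from fractions import Fraction
from itertools import product

def reaches_root(state, successor, root):
    """Follow the chosen edges from `state`; True if the walk hits `root` without cycling."""
    seen = set()
    while state != root:
        if state in seen:
            return False
        seen.add(state)
        state = successor[state]
    return True

def tree_weights(Q):
    """Unnormalized stationary weights from the Markov chain tree theorem (Eq. 4)."""
    n = len(Q)
    weights = []
    for root in range(n):
        others = [y for y in range(n) if y != root]
        total = Fraction(0)
        # Every non-root state picks one outgoing edge; the choice is a
        # spanning tree pointed toward `root` iff every state reaches `root`.
        for targets in product(range(n), repeat=len(others)):
            successor = dict(zip(others, targets))
            if any(y == z or Q[y][z] == 0 for y, z in successor.items()):
                continue
            if all(reaches_root(y, successor, root) for y in others):
                weight = Fraction(1)
                for y, z in successor.items():
                    weight *= Q[y][z]
                total += weight
        weights.append(total)
    return weights

# Hypothetical 3-state rate matrix (rows sum to zero).
Q = [[-3, 1, 2],
     [3, -4, 1],
     [1, 2, -3]]
weights = tree_weights(Q)
pi = [w / sum(weights) for w in weights]
# Stationarity check: every entry of pi Q should vanish.
balance = [sum(pi[x] * Q[x][y] for x in range(3)) for y in range(3)]
```

For this matrix, each state has three spanning trees pointed toward it, and every one of the six off-diagonal rates enters the weights, which illustrates why the general formula is nonlocal.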

Results

We model the steady state of a physical system as a stationary, continuous-time Markov chain with a finite number of states (16, 18, 19).§ For simplicity, we refer to the chain by the matrix $Q$ of its transition rates. We assume that the chain is irreducible, meaning that it can eventually reach any state from any other state; this guarantees that $Q$ has a unique stationary distribution $\pi$.

Our results emphasize the relationship between $\pi$, the exit rates

$$q(x) = \sum_{y \neq x} Q(x,y),$$

and the stationary distribution of a chain that is closely related to $Q$, called the jump chain of $Q$. The jump chain is part of a standard construction of continuous-time Markov chains (45, Section 2.6). We define it to be the discrete-time Markov chain with transition probabilities

$$P(x,y) = \begin{cases} Q(x,y)/q(x) & y \neq x, \\ 0 & y = x. \end{cases}$$

The jump chain is irreducible because $Q$ is, hence it has a unique stationary distribution $\psi$.
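As a minimal sketch of these two definitions, the code below computes the exit rates and the jump chain of a small rate matrix with exact arithmetic. The helper names and the example matrix are assumptions made for illustration.

```python
from fractions import Fraction

def exit_rates(Q):
    """Exit rate of each state: the sum of its off-diagonal rates."""
    return [sum(rate for y, rate in enumerate(row) if y != x)
            for x, row in enumerate(Q)]

def jump_chain(Q):
    """Transition probabilities of the jump chain: off-diagonal rates divided by exit rates."""
    q = exit_rates(Q)
    n = len(Q)
    return [[Fraction(Q[x][y], q[x]) if y != x else Fraction(0)
             for y in range(n)]
            for x in range(n)]

# Hypothetical 3-state rate matrix (rows sum to zero).
Q = [[-3, 1, 2],
     [3, -4, 1],
     [1, 2, -3]]
q = exit_rates(Q)
P = jump_chain(Q)
row_sums = [sum(row) for row in P]
```

Each row of `P` sums to one and has a zero on the diagonal, so the jump chain records only where the chain goes next, while `q` records how long it waits before going.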

We view the logarithms of $1/q$ and $\psi$ as the local and global “parts” of $\pi$. In this view, Markov chain analogues of the Boltzmann distribution (Eq. 1) and the rattling heuristic (Eq. 2) would approximate $\pi$ by a distribution with weights that are a function of $q$, with an error that depends on the relationship between $q$ and $\psi$. Indeed, a simple heuristic suggests that the analogue of rattling is $\log q(x)$ (SI Appendix, Text). Eq. 2 therefore suggests that we approximate $\pi$ by

$$\nu_\gamma(x) \propto q(x)^{-\gamma}$$

for a real number $\gamma$. Informally, our results identify two distinct ways for $\nu_\gamma$ to approximate $\pi$ for some $\gamma$, i.e., for $\pi$ to have local weights: Either 1) the global part of $\pi$ is approximately uniform or 2) the local and global parts of $\pi$ are approximately collinear.

The first way for $\pi$ to have local weights is for $\psi$ to be close to uniform. To state this more precisely, we will say that two numbers $a$ and $b$ are within a factor of $k \geq 1$ if

$$k^{-1} b \leq a \leq k b.$$

Additionally, we will say that two vectors or matrices $u$ and $v$ of the same size are within a factor of $k$ if all of their corresponding entries $u_i$ and $v_i$ are within a factor of $k$.

Theorem 1

If is within a factor of of the uniform distribution, then and are within a factor of . In particular, if is uniform, then .

In fact, $\psi$ is uniform if and only if the jump chain is doubly stochastic, i.e., each of its rows and columns sums to $1$. Since small perturbations of $P$ produce correspondingly small perturbations of $\psi$ (46, 47), the conclusion of Theorem 1 applies whenever the original chain has a jump chain that is nearly doubly stochastic.
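The following sketch checks the content of Theorem 1 on one small chain. It rests on two assumptions that are not in the text above: the notation ($\pi$ for the chain's stationary distribution, $\psi$ for that of its jump chain, $q$ for the exit rates, and $\nu \propto 1/q$ for the local prediction), and the specific factor $k^2$, which follows from a short calculation with the relation $\pi(x) \propto \psi(x)/q(x)$ and may differ from the constant stated in the theorem. The rate matrix and the helper `smallest_factor` are hypothetical.

```python
from fractions import Fraction as F

def smallest_factor(u, v):
    """Smallest k >= 1 such that v[i]/k <= u[i] <= k*v[i] for all i (positive entries)."""
    return max(max(a / b, b / a) for a, b in zip(u, v))

# Hypothetical 3-state rate matrix and its exit rates.
Q = [[-3, 1, 2],
     [3, -4, 1],
     [1, 2, -3]]
q = [3, 4, 3]
n = len(Q)

# Stationary distributions of the chain (pi) and of its jump chain (psi),
# stated directly and verified below rather than solved for.
pi = [F(10, 26), F(7, 26), F(9, 26)]
psi = [F(30, 85), F(28, 85), F(27, 85)]
pi_is_stationary = all(sum(pi[x] * Q[x][y] for x in range(n)) == 0
                       for y in range(n))
psi_is_stationary = all(
    sum(psi[x] * F(Q[x][y], q[x]) for x in range(n) if x != y) == psi[y]
    for y in range(n))

# Local prediction: weights proportional to the reciprocal exit rates.
Z = sum(F(1, r) for r in q)
nu = [F(1, r) / Z for r in q]

uniform = [F(1, n)] * n
k = smallest_factor(psi, uniform)        # how far psi is from uniform
factor_pi_nu = smallest_factor(pi, nu)   # how far pi is from nu
bound_holds = factor_pi_nu <= k ** 2
```

Here the jump chain's stationary distribution is within a factor of about 1.06 of uniform, and the exit rates alone predict every stationary probability to within a comparable factor.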

Theorem 2

If there is a doubly stochastic matrix that is within a factor of of the jump chain’s transition probability matrix , then and are within a factor of . In particular, if is doubly stochastic, then .

Theorem 2 makes it easy to generate examples of nonequilibrium steady states with local weights (Fig. 3). Simply multiply the rows of a doubly stochastic matrix $D$ by any exit rates $q$, and replace the diagonal entries with $-q$. This yields a Markov chain $Q$ with local weights and the stationary distribution $\pi(x) \propto 1/q(x)$.
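The construction can be sketched in a few lines. The doubly stochastic matrix below (an equal mixture of two cyclic shifts on four states, with zero diagonal) and the exit rates are illustrative choices, and the function name is hypothetical.

```python
from fractions import Fraction as F

def rate_matrix_from(D, q):
    """Scale row x of D by q[x] and set the diagonal entry to -q[x]."""
    n = len(D)
    return [[-q[x] if x == y else q[x] * D[x][y] for y in range(n)]
            for x in range(n)]

n = 4
# Doubly stochastic with zero diagonal: jump ahead by 1 or 2 with probability 1/2 each.
D = [[F(0)] * n for _ in range(n)]
for x in range(n):
    D[x][(x + 1) % n] = F(1, 2)
    D[x][(x + 2) % n] = F(1, 2)

q = [F(1), F(2), F(5), F(10)]          # arbitrary positive exit rates
Q = rate_matrix_from(D, q)

# Local prediction: weights proportional to 1/q.
Z = sum(1 / r for r in q)
nu = [1 / (r * Z) for r in q]

# Stationarity check: every entry of nu Q should vanish.
balance = [sum(nu[x] * Q[x][y] for x in range(n)) for y in range(n)]

# Detailed balance fails: probability flows from state 0 to 1 but not back.
flux_01 = nu[0] * Q[0][1]
flux_10 = nu[1] * Q[1][0]
```

The resulting chain carries a net probability flux around its cycle, so it does not satisfy detailed balance, yet its stationary distribution is determined by the exit rates alone.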

Fig. 3.

A variety of Markov chains that satisfy . (Transition graphs with red edges depict doubly stochastic matrices; edge widths are proportional to transition probabilities.) Doubly stochastic matrices are varied, ranging from sparsely connected, directed cycles () to densely connected, undirected graphs (). Moreover, they include arbitrary convex combinations, like , which is a convex combination of and . According to Theorems 1 and 2, scaling the rows by any positive numbers , and subsequently choosing the diagonal entries to make the row sums equal zero, produces the transition rate matrix of a continuous-time Markov chain that has a stationary distribution . (The blue edges have widths proportional to the corresponding rates of .) This requires to have entries of zero on its diagonal (no “self-loops”) and an adjacency graph that is strongly connected. The variety of doubly stochastic matrices, and the freedom to scale their rows by any exit rates, means that an even greater variety of chains satisfy .

Even when is far from uniform, the exit rates can “predict” the stationary probabilities, in the sense that the logarithms of and are highly collinear, for a random state . This is true, for example, of many Markov chains with random rates (Fig. 4). Our next results explain why the correlations are high and the slopes are close to .

Fig. 4.

Extent to which exit rates predict the stationary distributions of Markov chains with random transition rates. Each panel includes a scatter plot of versus , paired with a corresponding plot of versus , for one of four Markov chains . Each Markov chain has states that correspond to the vertices of either the complete graph (circular marks in A and B) or the -dimensional hypercube (square marks in C and D). In (A and C), independent and identically distributed (i.i.d.) exponential rates and with mean connect every pair of states that are adjacent in the underlying graph (green). In (B and D), the rates instead have i.i.d. log-normal distributions with parameters and (blue). (A) Observe that, for exponential rates on the complete graph, the logarithms of and are highly collinear, while those of and are not. (B) The same is true of log-normal rates on the complete graph, but the collinearity of and is greater than in (A) because varies over relatively fewer exponential scales than in the case of log-normal rates. (In the language of Theorem 3, is smaller in B than in A.) (C and D) On the hypercube, and remain highly collinear, but to a lesser extent than in (A and B), because the variance of is greater relatively in (C and D). The slopes of the lines of best fit are nearly equal to in all cases, because the correlation between the local and global “parts” of is close to (see Theorem 4).

We measure the strength of collinearity using the linear correlation coefficient, defined for random variables $U$ and $V$ that have finite, positive variances as

$$\mathrm{Corr}(U, V) = \frac{\mathrm{Cov}(U, V)}{\sqrt{\mathrm{Var}(U)\,\mathrm{Var}(V)}}.$$

Specifically, we consider the correlation

$$\rho = \mathrm{Corr}\big({-\log q(X)}, \log \pi(X)\big) \tag{5}$$

While the value of $\rho$ depends on the distribution of the random state $X$, our results hold for any distribution that makes $\rho$ defined. In other words, we merely assume that the logarithms of $q(X)$ and $\pi(X)$ have finite, positive variances. For example, the distribution of $X$ could be the uniform distribution over states or the stationary distribution $\pi$.

Our next result is a formula for $\rho$ in terms of the correlation between the local and global parts of $\pi$,

$$r = \mathrm{Corr}\big({-\log q(X)}, \log \psi(X)\big),$$

and the relative number of exponential scales over which they vary,

$$\chi = \sqrt{\frac{\mathrm{Var}\big(\log \psi(X)\big)}{\mathrm{Var}\big(\log q(X)\big)}}.$$

Technically, $r$ is undefined when $\psi$ is constant. However, in this case, we will adopt the useful convention that $r = 0$.

Theorem 3

The correlation coefficient $\rho$ satisfies

$$\rho = \frac{1 + r\chi}{\sqrt{1 + 2 r \chi + \chi^2}} \tag{6}$$

According to Eq. 6, the correlation $\rho$ is strictly positive unless $r$ is at most $-1/\chi$, which is impossible if $\chi$ is less than $1$ because $r \geq -1$. Plots of the contours of $\rho$ in the strip of $(r, \chi)$ pairs (SI Appendix, Fig. S1) further show that $\rho$ is close to $1$ to the extent that $r$ is close to $1$ or $\chi$ is close to $0$. A simple lower bound of $\rho$, which makes no reference to $r$, reflects the latter fact (SI Appendix, Corollary S1).
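The formula of Theorem 3 can be checked numerically. The sketch below assumes the following reading of the setup: the logarithm of the stationary distribution is, up to an additive constant, the sum of a local part (the negative logarithm of the exit rate) and a global part (the logarithm of the jump chain's stationary distribution). Writing $r$ for the correlation of the two parts and $\chi$ for the ratio of their standard deviations (global over local), the correlation between the local part and the logarithm of the stationary distribution should equal $(1 + r\chi)/\sqrt{1 + 2r\chi + \chi^2}$. The symbols, the function names, and the numerical values are illustrative assumptions, and the random state is taken to be uniform.

```python
import math

def mean(u):
    return sum(u) / len(u)

def sd(u):
    """Population standard deviation."""
    m = mean(u)
    return math.sqrt(mean([(a - m) ** 2 for a in u]))

def corr(u, v):
    """Linear correlation coefficient of two equally long lists."""
    mu, mv = mean(u), mean(v)
    cov = mean([(a - mu) * (b - mv) for a, b in zip(u, v)])
    return cov / (sd(u) * sd(v))

def predicted_correlation(r, chi):
    """Right-hand side of the formula: (1 + r*chi) / sqrt(1 + 2*r*chi + chi^2)."""
    return (1 + r * chi) / math.sqrt(1 + 2 * r * chi + chi ** 2)

# Hypothetical exit rates and jump-chain stationary probabilities for 5 states.
q = [1.0, 2.0, 5.0, 10.0, 0.5]
psi = [0.1, 0.3, 0.2, 0.25, 0.15]

local_part = [-math.log(rate) for rate in q]
global_part = [math.log(p) for p in psi]
# log pi up to an additive constant, which does not affect correlations.
log_pi = [a + b for a, b in zip(local_part, global_part)]

rho_direct = corr(local_part, log_pi)
r = corr(local_part, global_part)
chi = sd(global_part) / sd(local_part)
rho_formula = predicted_correlation(r, chi)
```

As a sanity check on the formula itself, uncorrelated parts of equal variance ($r = 0$, $\chi = 1$) give a correlation of $1/\sqrt{2}$, roughly 0.71.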

Theorem 3 explains the relatively high correlations exhibited by Markov chains with random rates (Fig. 4). Specifically, when the random rates have exponential distributions, the local and global parts of are approximately uncorrelated and have nearly equal variances (Fig. 4 A and C), meaning that and , hence by Eq. 6. When the rates instead have log-normal distributions, the local and global parts remain approximately uncorrelated, but the local part varies relatively more, leading to a lesser and a correspondingly greater (Fig. 4 B and D). The difference between the cases of exponential and log-normal rates is not due to the exponential rates’ lesser variance; the key quantities in Fig. 4A remain constant as the variance of the exponential rates increases over hundreds of scales (SI Appendix, Fig. S2). Note that the correlations in Fig. 4 concern a uniformly random state . If is instead distributed according to , then the experiments in Fig. 4A–C have qualitatively similar results, but the case of log-normal rates on the hypercube (Fig. 4D) is markedly different: the correlation equals , and the linear relationship between the logarithms of and has a lesser slope of (SI Appendix, Fig. S3).

In contrast to the conclusions of Theorems 1 and 2, there may be no for which and are within a small factor, even when is close to . (See SI Appendix, Proposition S1 for a bound on this factor.) However, in this case, there is a for which accurately predicts the relative probabilities of states, in the sense of making the following, log-ratio error small:

| [7] |

Note that the exponent in the expectation modifies the logarithm, not its argument, and the expectation is with respect to and , which are independent and distributed according to . In fact, while is distinct from the mean squared error that is used to fit the lines in Fig. 4, its minimizer coincides with the slopes of these lines (See SI Appendix Proposition S2). Our final result therefore explains that these slopes are close to because is close to .

Theorem 4

The log-ratio error is minimized by and equals

Theorem 4 states that the local distribution that best approximates in the sense of preserving its relative probabilities is . While the Markov chains with random rates in Fig. 4 have slopes , many familiar Markov chains have and even . For example, consider the random energy model on the -dimensional hypercube, which assigns i.i.d. random energies to states (48, 49). These energies determine a Boltzmann probability distribution . The two Markov chains defined by the rates and are in detailed balance with , as are the chains determined by the weighted geometric means of these rates, defined for by . [Markov chains of this kind are known as Glauber dynamics (50).] As ranges from to , the correlation increases from to , while the slope remains nonnegative (Fig. 5, red points). In contrast, under the Sherrington–Kirkpatrick (SK) model, i.i.d. random couplings of the coordinates determine the energies of each state (51). Due to the dependence of nearby states’ energies, the correlations and slopes take negative values when is less than roughly (Fig. 5, blue points).
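The optimal exponent in Theorem 4 can be illustrated numerically under stated assumptions. The sketch below takes the log-ratio error of an exponent $\gamma$ to be the mean, over independent uniform pairs of states, of the squared difference between the log-ratio of stationary probabilities and the log-ratio of weights proportional to $q^{-\gamma}$. Under that reading, a direct calculation gives the minimizer $1 + r\chi$ and the minimum $2(1 - r^2)$ times the variance of the global part, where $r$ and $\chi$ are the correlation and relative scale of the local and global parts. This definition, the symbols, and the data are assumptions for illustration and may differ in detail from Eq. 7.

```python
import math

def mean(u):
    return sum(u) / len(u)

def cov(u, v):
    """Population covariance; cov(u, u) is the population variance."""
    mu, mv = mean(u), mean(v)
    return mean([(a - mu) * (b - mv) for a, b in zip(u, v)])

# Hypothetical exit rates and jump-chain stationary probabilities for 5 states.
q = [1.0, 2.0, 5.0, 10.0, 0.5]
psi = [0.1, 0.3, 0.2, 0.25, 0.15]

local_part = [-math.log(rate) for rate in q]
global_part = [math.log(p) for p in psi]
log_pi = [a + b for a, b in zip(local_part, global_part)]  # up to a constant

r = cov(local_part, global_part) / math.sqrt(
    cov(local_part, local_part) * cov(global_part, global_part))
chi = math.sqrt(cov(global_part, global_part) / cov(local_part, local_part))
gamma_star = 1 + r * chi

# Slope of the least-squares line of log pi against the local part.
ls_slope = cov(local_part, log_pi) / cov(local_part, local_part)

def log_ratio_error(gamma):
    """Mean squared log-ratio error over all ordered pairs of states (uniform X, Y)."""
    n = len(q)
    total = 0.0
    for x in range(n):
        for y in range(n):
            d_pi = log_pi[x] - log_pi[y]
            d_nu = gamma * (local_part[x] - local_part[y])  # log of nu-ratio
            total += (d_pi - d_nu) ** 2
    return total / n ** 2

min_error = log_ratio_error(gamma_star)
closed_form = 2 * (1 - r * r) * cov(global_part, global_part)
```

The agreement between `ls_slope` and `gamma_star` mirrors the remark that the minimizer of the log-ratio error coincides with the slope of the line of best fit.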

Fig. 5.

The relationships between exit rates and stationary probabilities for spin glasses with reversible dynamics. Each Markov chain has states corresponding to the vertices of a -dimensional hypercube, random rates satisfying detailed balance, and a Boltzmann stationary distribution given by , where the rates and stationary distribution depend on energies assigned to each state. In (A–C), these energies are given by the random energy model (REM)—namely, i.i.d. normal random variables with a mean of and a variance of . In (D–F), the energies are those of the Sherrington–Kirkpatrick (SK) model, which are correlated through random couplings , according to , with couplings that are i.i.d. normal random variables with a mean of and a variance of . (A) We can see that the first set of rates has exit rates that exactly satisfy a linear relationship between the logarithms of and . (B) The other extreme, transition rates that depend on the energy of the destination, produce no detectable linear relationship. In (C), the geometric mean of the first two rates produces high correlation, with a slope greater than . (D) For the SK model, the logarithms of and are exactly collinear, as in (A). (E) However, because adjacent states have correlated energies under the SK model, and can be highly negatively correlated, unlike in (B). (F) The geometric mean of the rates again produces high, positive correlation, but with an even greater slope. Finally, (G and H) show that transition from local-global anticorrelation to correlation occurs in the SK model when the weighting in the geometric mean of the extreme rates is roughly .
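The two extreme rate choices and their geometric means can be sketched on a small hypercube. The specific rate forms below are assumptions made for illustration: a rate $e^{E(x)}$ that depends only on the source energy, a rate $e^{-E(y)}$ that depends only on the destination energy, and the interpolation $e^{(1-\lambda)E(x) - \lambda E(y)}$, with inverse temperature set to one. The energies are arbitrary fixed numbers rather than samples from the REM or SK model.

```python
import math

d = 3                       # hypercube dimension
states = range(2 ** d)
# Illustrative energies, one per state (not sampled from any model).
E = [0.3, -1.2, 0.8, 0.1, -0.5, 1.4, -0.9, 0.6]

def neighbors(x):
    """States that differ from x in exactly one bit."""
    return [x ^ (1 << b) for b in range(d)]

def rate(x, y, lam):
    """Assumed interpolated rate: lam = 0 uses only E[x], lam = 1 uses only E[y]."""
    return math.exp((1 - lam) * E[x] - lam * E[y])

boltzmann = [math.exp(-e) for e in E]   # unnormalized Boltzmann weights

def detailed_balance_holds(lam):
    return all(
        math.isclose(boltzmann[x] * rate(x, y, lam),
                     boltzmann[y] * rate(y, x, lam))
        for x in states for y in neighbors(x))

def exit_rate(x, lam):
    return sum(rate(x, y, lam) for y in neighbors(x))

# With source-dependent rates (lam = 0), log weight + log exit rate is constant,
# so the logarithms of the weights and exit rates are exactly collinear.
offsets = [math.log(boltzmann[x]) + math.log(exit_rate(x, 0.0)) for x in states]
```

With source-dependent rates, every exit rate equals $d\,e^{E(x)}$, which is why the first extreme produces an exact linear relationship with slope one regardless of how the energies are chosen.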

Theorems 3 and 4 characterize the relationship between the exit rates and stationary probabilities of a Markov chain, in terms of and . We can use these results to better understand how the local-global relationship changes as the parameters of the chain vary. Fig. 6 demonstrates this idea by comparing two models of ant colony behavior that undergo stochastic bifurcations as colony size varies. The Föllmer–Kirman (FK) model describes the number of ants that choose one of two identical paths to a food source, under the effects of random switching and recruitment (52–54). It predicts that, as colony size increases, the colony abruptly transitions from alternately concentrating on one path to splitting evenly between the two. The Beekman–Ratnieks–Sumpter (BRS) model concerns the number of ants that follow and reinforce a pheromone trail, by spontaneously finding it or by being recruited to it (55, 56).¶ It predicts that there is a critical colony size above which the colony sustains a trail, but below which it does not. Despite the apparent similarities of the FK and BRS models, the relationships between their local and global parts change in entirely different ways as colony size increases, as the quantities and show.

Fig. 6.

Stochastic bifurcations in two models of ant collective behavior, characterized in terms of , , and . The Föllmer–Kirman (FK) and Beekman–Ratnieks–Sumpter (BRS) models are Markov chains with nearest-neighbor jumps on the state space . (A) The local and global parts of the FK model’s stationary distribution are essentially perfectly anticorrelated for every . However, as increases, the global part begins to vary more than the local part, which is reflected by going from below to above , and which causes the correlation between and to abruptly change from to , as shown in (B). (C) In contrast, the BRS model generally has much larger than , so the correlation between and is essentially determined by the correlation between the local and global parts. The correlation decreases as increases to the bifurcation point, but recovers to a moderate value for larger . The same is true of (B). (A–C) To make the bifurcation points of both models approximately , we used the parameters and .

Under the FK model, sharply decreases from to , as the colony size increases through the bifurcation point (Fig. 6B). The abrupt transition from correlation to anticorrelation in fact arises from a gradual increase in , the variance of the steady state’s global part relative to its local part (Fig. 6A). The slope decreases linearly during this transition, as Theorem 4 predicts (SI Appendix, Fig. S4A). The exit rates and steady state of the BRS model are also highly anticorrelated in the vicinity of the critical colony size (Fig. 6B). However, changes more gradually and the correlation recovers to a positive value at larger colony sizes. Fig. 6C shows that, in contrast to the FK model, the variation in drives the decrease in near the critical colony size. Since is much larger than for all but the smallest colonies, its variation matters little to , which is reflected by the flatness of the contours in Fig. 6C. Instead, the variation of greatly affects , which takes values as low as roughly and as high as roughly , as the colony size increases (SI Appendix, Fig. S4B). We note that the results in Fig. 6 concern a uniformly random state . The curves of the BRS model differ substantially when is distributed according to , but a sharp decrease in near the bifurcation point arises in largely the same way (SI Appendix, Figs. S5 and S6).

Discussion

The conceptual heart of rattling is the simple observation that many systems tend to spend more time in configurations that they exit more slowly. While systems can exhibit this tendency regardless of whether detailed balance holds, it fails to be universal because the time that a system spends in a configuration depends on both the duration and the frequency of its visits, which can oppose one another. The former is a local property of a configuration, while the latter is generally a global property of the system. In this sense, the failure of the rattling heuristic requires “adversarial global structure,” as Chvykov et al. claimed (10). Theorem 3 implies a precise version of this claim: is positive unless the local and global parts of the stationary distribution are sufficiently anticorrelated, that is, unless is at most . In particular, the rattling heuristic always holds when the local part varies more than the global part ().

Together, and determine the accuracy of the rattling heuristic as well as the form of the weights that it predicts (Theorems 3 and 4). We view each pair as defining a class of chains, say , for which a certain local-global principle applies (Fig. 2). The simplest class consists of chains with uniform global part, which therefore satisfy and . Theorem 2 explains that it is simple to make these chains by scaling the entries of doubly stochastic matrices (Fig. 3). More generally, the class consists of chains with uncorrelated local and global parts, hence they satisfy and , and the rattling heuristic predicts their weights to be . As Fig. 4 shows, many chains with random rates essentially belong to this class. The chains in are analogous to the systems that Chvykov et al. considered to be the domain of rattling (10). However, rattling also perfectly predicts the weights of chains in the class , with an exponent that is generally not of order and which can even be negative. Figs. 5 and 6 include relevant examples.

These key quantities depend on the distribution of the random state , which models how the system is observed. The preceding discussion and Figs. 4–6 emphasize the case when is uniformly random, primarily because then coincides with the empirical correlations that prior work observed and sought to explain (10, 13). However, Theorems 3 and 4 are far more general—they work for any distribution that makes defined (Eq. 5). For example, can be chosen according to the stationary distribution , so that emphasizes the correlation among states with the greatest steady-state weight. The comparison of Figs. 4D and 6 with SI Appendix, Figs. S3D and S5 shows that this choice can lead to qualitatively different results when is far from uniform.

While our results confirm the general sense that rattling should predict the steady states of a broad class of nonequilibrium systems (9, 10, 13, 15), they also show that the original rationale for its success was not entirely correct. Specifically, Chvykov et al. attributed the correlation in examples like Fig. 4A and B to the large number of transition rates into and out of each state and their independence (10). However, these properties fail to explain the similar correlations in Fig. 4C and D, where the states are sparsely connected, and those in Fig. 5, where the rates are strongly dependent. Theorem 3 reveals that these properties are neither necessary nor sufficient for high correlation.

To fully realize rattling’s promise as a principle of self-organization, future work should further develop its methodology, broaden its applications, and deepen its theoretical foundations. First, detecting the failure of the rattling heuristic, like detecting the failure of detailed balance, generally requires global information about the dynamics. Practical approaches to the latter are the focus of recent work (57–61), and we anticipate parallel developments for rattling. One possible approach is to estimate the local and global parts of the steady state by adapting, for example, the algorithm of ref. 62. Second, applications of rattling can take inspiration from Yang et al. (13), who engineered active microparticles that self-organize into a collective oscillator, using rattling to infer a key design parameter. Finally, theoretical efforts can address the properties of rattling that are relevant to practice, like its behavior under transformations of the state space, possible analogues of our formulas for systems with continuous state spaces, and the relation of rattling to central topics of Markov chain theory, like mixing times (63) and metastability (64).

Materials and Methods

We prove Theorems 1–4 in this section. These results stem from the standard fact that the fraction of time that a Markov chain spends in a given state in the long run is proportional to the fraction of visits that it makes to that state, multiplied by the expected duration of each visit. Throughout this section, we continue to assume that the Markov chain is irreducible and has a finite number of states.

Proposition 0.1

The stationary distributions of a Markov chain and its jump chain are related by

[8]

Proof: Since is the stationary distribution of , by the definition of the jump chain, satisfies

In other words, is an invariant measure for :

Normalizing therefore gives the stationary distribution .
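As a numerical illustration of the proposition, the following sketch checks the relation on a small chain with random rates. It assumes that Eq. 8 takes the standard form in which the stationary probability of a state is proportional to the jump chain's stationary probability of that state divided by the state's exit rate. The names `W`, `q`, `P`, `pi`, `psi`, and the helper `null_distribution` are illustrative choices, not notation taken from the paper.

```python
import numpy as np

def null_distribution(M):
    """Return the probability vector x that solves x @ M = 0.

    M is assumed to have zero row sums and to come from an irreducible
    chain, so the solution is unique.
    """
    n = M.shape[0]
    A = np.vstack([M.T, np.ones(n)])        # x @ M = 0 together with sum(x) = 1
    b = np.append(np.zeros(n), 1.0)
    x, *_ = np.linalg.lstsq(A, b, rcond=None)
    return x

rng = np.random.default_rng(0)
n = 6

# Hypothetical example: positive random transition rates, no self-loops.
W = rng.uniform(0.1, 2.0, size=(n, n))
np.fill_diagonal(W, 0.0)

q = W.sum(axis=1)                  # exit rates
Q = W - np.diag(q)                 # generator of the continuous-time chain
P = W / q[:, None]                 # transition matrix of the jump chain

pi = null_distribution(Q)                 # stationary distribution of the chain
psi = null_distribution(P - np.eye(n))    # stationary distribution of the jump chain

# Assumed form of Eq. 8: pi(x) is proportional to psi(x) / q(x).
pi_from_jump = (psi / q) / (psi / q).sum()
max_gap = np.max(np.abs(pi - pi_from_jump))
print(f"largest discrepancy: {max_gap:.2e}")
```

The two ways of computing the stationary distribution should agree up to floating-point error, whatever positive rates are used.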

Proof of Theorem 1.

Recall that we are given such that

[9]

for every state , and we must show that and are within a factor of :

For the upper bound, we use Eq. 8 to write in terms of and then use Eq. 9 twice to find that

[10]

The lower bound follows in a similar way.
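To make the chain of inequalities concrete, here is a sketch of the upper bound under assumptions that we state explicitly, since the text above does not fix them: $\psi$ denotes the stationary distribution of the jump chain, $q(x)$ the exit rate of state $x$, $N$ the number of states, $k \ge 1$ the given number, $\nu(x) = q(x)^{-1} / \sum_y q(y)^{-1}$ the normalized local part, and Eq. 9 is read as the two-sided bound $(kN)^{-1} \le \psi(x) \le k N^{-1}$. Under this reading, the resulting factor is $k^2$.

```latex
% Sketch under assumed notation (psi, q, N, nu, k are labels chosen here).
% Assumed form of Eq. 8: pi(x) = (psi(x)/q(x)) / sum_y (psi(y)/q(y)).
% Assumed form of Eq. 9: 1/(kN) <= psi(x) <= k/N for every state x.
\begin{align*}
\pi(x)
  &= \frac{\psi(x)\, q(x)^{-1}}{\sum_{y} \psi(y)\, q(y)^{-1}}
  && \text{(Eq. 8)} \\
  &\le \frac{k N^{-1}\, q(x)^{-1}}{(kN)^{-1} \sum_{y} q(y)^{-1}}
  && \text{(Eq. 9, once in the numerator and once in the denominator)} \\
  &= k^{2}\, \nu(x).
\end{align*}
```

Swapping the roles of the two bounds in Eq. 9 gives $\pi(x) \ge k^{-2}\, \nu(x)$ in the same way.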

The proof of Theorem 2 combines a perturbation bound for Markov chain stationary distributions with Theorem 1.

Proof of Theorem 2.

We are given a doubly stochastic matrix of the same size as , and a number such that and are within a factor of , and we must show that and are within a factor of . We use Theorem 1 of ref. 46 to relate the ratio of the entries of and to the ratio of their stationary probabilities. It states that, if irreducible stochastic matrices on states are within a factor of , then their stationary distributions are within a factor of . This result applies to and because we assumed that is irreducible and that and are within a factor of , hence is also irreducible. Since is doubly stochastic, it has a uniform stationary distribution. Theorem 1 of ref. 46 and the fact that and are within a factor of therefore imply that and the uniform distribution are within a factor of . Using Theorem 1, we conclude that and are within a factor of .
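The exact case of this argument, in which the jump chain is itself doubly stochastic, can be checked numerically. The sketch below builds a doubly stochastic matrix with zero diagonal as a random mixture of cyclic shifts, scales its rows by arbitrary exit rates, and confirms that the stationary distribution is proportional to the inverse exit rates. The construction and the names `D`, `q`, `W`, `pi`, and `predicted` are illustrative assumptions, not the paper's own example.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 5

# Cyclic shift by one position: a permutation matrix with zero diagonal.
shift = np.roll(np.eye(n), 1, axis=1)

# A convex combination of the shifts by 1, ..., n-1 is doubly stochastic,
# has zero diagonal, and is irreducible because every weight is positive.
weights = rng.dirichlet(np.ones(n - 1))
D = sum(w * np.linalg.matrix_power(shift, s)
        for s, w in enumerate(weights, start=1))

# Scale row x by an arbitrary positive exit rate q(x) to obtain rates.
q = rng.uniform(0.5, 5.0, size=n)
W = q[:, None] * D
Q = W - np.diag(W.sum(axis=1))           # generator; its jump chain is D

# Solve pi @ Q = 0 with sum(pi) = 1.
A = np.vstack([Q.T, np.ones(n)])
b = np.append(np.zeros(n), 1.0)
pi = np.linalg.lstsq(A, b, rcond=None)[0]

# With a uniform global part, the weights are the normalized inverse exit rates.
predicted = (1.0 / q) / (1.0 / q).sum()
print(np.allclose(pi, predicted))
```

Perturbing the entries of `D` while keeping its rows stochastic would move `pi` away from `predicted`, which is the regime that the perturbation bound of ref. 46 is used to control.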

The next proof uses the basic fact that, for any two random variables and with positive, finite variances, the correlation of and equals

With some algebra, we can rewrite this formula in terms of and as

[11]
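One reading of this step that is consistent with the proof of Theorem 3 is the elementary identity for the correlation between a random variable and its sum with another. The sketch below states it under assumed notation: $U$ and $V$ are the two random variables, $r = \operatorname{Corr}(U, V)$, and $s = \sqrt{\operatorname{Var}(V)/\operatorname{Var}(U)}$. These labels are chosen here for illustration and may differ from the parametrization used in Eq. 11. We also assume that $U + V$ has positive variance, so that the correlation is defined.

```latex
% Sketch under assumed notation: r = Corr(U, V), s = sqrt(Var(V) / Var(U)).
% Uses Cov(U, U + V) = Var(U) + Cov(U, V) and Cov(U, V) = r * sd(U) * sd(V).
\begin{align*}
\operatorname{Corr}(U, U + V)
  &= \frac{\operatorname{Var}(U) + \operatorname{Cov}(U, V)}
          {\sqrt{\operatorname{Var}(U)\,\bigl(\operatorname{Var}(U)
            + 2\operatorname{Cov}(U, V) + \operatorname{Var}(V)\bigr)}} \\
  &= \frac{1 + r s}{\sqrt{1 + 2 r s + s^{2}}}.
\end{align*}
```

The second line follows by dividing the numerator and the denominator by $\operatorname{Var}(U)$.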

Recall that denotes the correlation between the logarithms of and for a random state distributed according to . The proof of Theorem 3 is a direct calculation of , using Eq. 8.

Proof of Theorem 3.

By Eq. 8, the probability satisfies

Since the last term is constant, it does not affect the correlation , which therefore equals

in terms of and . We then obtain the formula for from Eq. 11, where and .
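The calculation can be illustrated numerically. The sketch below computes the correlation between the negative log exit rates and the log stationary probabilities directly, and compares it with the value obtained from the correlation and relative spread of the local and global parts. It does so for a uniformly random state and for a state drawn from the stationary distribution. It assumes that Eq. 8 has the standard form (stationary probability proportional to the jump chain's stationary probability divided by the exit rate). The names `r`, `s`, `local_part`, and `global_part` are illustrative and may not match the symbols of Theorem 3.

```python
import numpy as np

def weighted_corr(u, v, w):
    """Correlation of u(X) and v(X) when the state X has distribution w."""
    mu, mv = w @ u, w @ v
    cov = w @ ((u - mu) * (v - mv))
    return cov / np.sqrt((w @ (u - mu) ** 2) * (w @ (v - mv) ** 2))

def jump_chain_stationary(P):
    """Stationary distribution of an irreducible stochastic matrix P."""
    n = P.shape[0]
    A = np.vstack([P.T - np.eye(n), np.ones(n)])
    b = np.append(np.zeros(n), 1.0)
    return np.linalg.lstsq(A, b, rcond=None)[0]

rng = np.random.default_rng(2)
n = 8

# Hypothetical chain with log-normal random rates and no self-loops.
W = rng.lognormal(mean=0.0, sigma=1.0, size=(n, n))
np.fill_diagonal(W, 0.0)
q = W.sum(axis=1)
psi = jump_chain_stationary(W / q[:, None])
pi = (psi / q) / (psi / q).sum()      # assumed form of Eq. 8

local_part = -np.log(q)               # log of the local part
global_part = np.log(psi)             # log of the global part

def direct_and_formula(w):
    """Return the correlation computed directly and via r and s."""
    direct = weighted_corr(local_part, np.log(pi), w)
    r = weighted_corr(local_part, global_part, w)
    var_local = w @ (local_part - w @ local_part) ** 2
    var_global = w @ (global_part - w @ global_part) ** 2
    s = np.sqrt(var_global / var_local)
    return direct, (1 + r * s) / np.sqrt(1 + 2 * r * s + s ** 2)

uniform = np.full(n, 1.0 / n)
results = {
    "uniform": direct_and_formula(uniform),
    "stationary": direct_and_formula(pi),
}
for name, (direct, formula) in results.items():
    print(f"{name}: direct = {direct:.6f}, formula = {formula:.6f}")
```

The two values agree for either choice of distribution because the normalizing constant in the log stationary probabilities does not affect the correlation. The values themselves generally differ between the two distributions.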

Recall the definition of the log-ratio error in Eq. 7. The proof of Theorem 4 entails a calculation of , using Eq. 8.

Proof of Theorem 4.

The log-ratio error equals

in terms of the auxiliary variables

due to the linearity of expectation and because and are i.i.d. By Eq. 8, the variance of equals

where and . We write the variance as

and then identify factors of and , to find that

The error is minimized by , which then satisfies

A simple but tedious manipulation of this equation using Eqs. 6 and 8 shows that further equals . See SI Appendix for details.

Supplementary Material

Appendix 01 (PDF)

Acknowledgments

We thank Thomas Berrueta, Pavel Chvykov, and Jeremy England for their generous feedback on an early draft of this paper and for suggesting applications of our results. We further acknowledge them, along with Daniel Goldman, Todd Murphey, and Andréa Richa, for helpful discussions of the content of Chvykov et al. We thank James Holehouse for his feedback on the content of Fig. 6. Last, we thank the anonymous reviewers for valuable feedback. We acknowledge the support of the NSF award CCF-2106687 and the US Army Research Office Multidisciplinary University Research Initiative award W911NF-19-1-0233. Part of this work was completed while the second author was visiting the Simons Laufer Mathematical Sciences Institute in Berkeley, CA, in Fall 2023.

Author contributions

J.C. and D.R. designed research; J.C. performed research; and J.C. and D.R. wrote the paper.

Competing interests

The authors declare no competing interest.

Footnotes

*We use “configuration” to refer to what is typically called a “microstate,” to avoid confusion with the states of a Markov chain.

†Here and throughout, by “Markov chain,” we mean an irreducible, continuous-time Markov chain on a finite state space. Every such Markov chain has a unique stationary distribution.

‡A Markov chain satisfies detailed balance if there is no net probability flux between every pair of states, i.e., if for all and .

§In fact, with minor modifications, our results hold for Markov chains with countably infinite state spaces, semi-Markov processes, and discrete-time chains with self-loops.

¶The original BRS model is deterministic; we use a stochastic version.

This article is a PNAS Direct Submission.

Data, Materials, and Software Availability

There are no data underlying this work.

References

- 1. Sendker F. L., et al., Emergence of fractal geometries in the evolution of a metabolic enzyme. Nature 628, 894–900 (2024).

- 2. Kronauer D. J. C., Army Ants: Nature’s Ultimate Social Hunters (Harvard University Press, Cambridge, Massachusetts, 2020).

- 3. Buhl J., et al., From disorder to order in marching locusts. Science 312, 1402–1406 (2006).

- 4. Sethna J. P., Statistical Mechanics: Entropy, Order Parameters, and Complexity, Oxford Master Series in Physics (Oxford University Press, Oxford, New York, ed. 2, 2021).

- 5. Noé F., Olsson S., Köhler J., Wu H., Boltzmann generators: Sampling equilibrium states of many-body systems with deep learning. Science 365, eaaw1147 (2019).

- 6. Landauer R., Inadequacy of entropy and entropy derivatives in characterizing the steady state. Phys. Rev. A 12, 636–638 (1975).

- 7. Andresen B., Zimmermann E. C., Ross J., Objections to a proposal on the rate of entropy production in systems far from equilibrium. J. Chem. Phys. 81, 4676–4677 (1984).

- 8. Landauer R., Motion out of noisy states. J. Stat. Phys. 53, 233–248 (1988).

- 9. Chvykov P., England J., Least-rattling feedback from strong time-scale separation. Phys. Rev. E 97, 032115 (2018).

- 10. Chvykov P., et al., Low rattling: A predictive principle for self-organization in active collectives. Science 371, 90–95 (2021).

- 11. J. Gold, “Self-organized fine-tuned response in a driven spin glass,” PhD thesis, Massachusetts Institute of Technology (2021).

- 12. Jackson Z., Wiesenfeld K., Emergent, linked traits of fluctuation feedback systems. Phys. Rev. E 104, 064216 (2021).

- 13. Yang J. F., et al., Emergent microrobotic oscillators via asymmetry-induced order. Nat. Commun. 13, 5734 (2022).

- 14. England J. L., Self-organized computation in the far-from-equilibrium cell. Biophys. Rev. 3, 041303 (2022).

- 15. Kedia H., Pan D., Slotine J. J., England J. L., Drive-specific selection in multistable mechanical networks. J. Chem. Phys. 159, 214106 (2023).

- 16. Schnakenberg J., Network theory of microscopic and macroscopic behavior of master equation systems. Rev. Mod. Phys. 48, 571–585 (1976).

- 17. Crooks G. E., Path-ensemble averages in systems driven far from equilibrium. Phys. Rev. E Stat. Phys. Plasmas Fluids 61, 2361–2366 (2000).

- 18. Jiang D. Q., Qian M., Qian M. P., Mathematical Theory of Nonequilibrium Steady States (Springer, Berlin, Heidelberg, 2004), vol. 1833.

- 19. Zia R. K. P., Schmittmann B., Probability currents as principal characteristics in the statistical mechanics of non-equilibrium steady states. J. Stat. Mech. Theory Exp. 2007, P07012 (2007).

- 20. Esposito M., Van den Broeck C., Three faces of the second law. I. Master equation formulation. Phys. Rev. E 82, 011143 (2010).

- 21. Seifert U., Stochastic thermodynamics, fluctuation theorems and molecular machines. Rep. Prog. Phys. 75, 126001 (2012).

- 22. Jarzynski C., Equilibrium free-energy differences from nonequilibrium measurements: A master-equation approach. Phys. Rev. E 56, 5018–5035 (1997).

- 23. Crooks G. E., Nonequilibrium measurements of free energy differences for microscopically reversible Markovian systems. J. Stat. Phys. 90, 1481–1487 (1998).

- 24. Crooks G. E., Entropy production fluctuation theorem and the nonequilibrium work relation for free energy differences. Phys. Rev. E Stat. Phys. Plasmas Fluids 60, 2721–2726 (1999).

- 25. Hill T. L., Studies in irreversible thermodynamics IV. Diagrammatic representation of steady state fluxes for unimolecular systems. J. Theor. Biol. 10, 442–459 (1966).

- 26. Hill T. L., Free Energy Transduction in Biology: The Steady-State Kinetic and Thermodynamic Formalism (Academic Press, 1977).

- 27. Schnakenberg J., Thermodynamic Network Analysis of Biological Systems, Universitext (Springer, Berlin, Heidelberg, 1981).

- 28. MacQueen J., Circuit processes. Ann. Probab. 9, 604–610 (1981).

- 29. Minping Q., Min Q., Circulation for recurrent Markov chains. Z. Wahrscheinlichkeitstheorie und Verwandte Geb. 59, 203–210 (1982).

- 30. Kalpazidou S., Cycle Representations of Markov Processes, Stochastic Modelling and Applied Probability (Springer, New York, NY, 2006), vol. 28.

- 31. Altaner B., et al., Network representations of nonequilibrium steady states: Cycle decompositions, symmetries, and dominant paths. Phys. Rev. E 85, 041133 (2012).

- 32. A. Strang, “Applications of the Helmholtz-Hodge Decomposition to Networks and Random Processes,” PhD thesis, Case Western Reserve University (2020).

- 33. Lebowitz J. L., Spohn H., A Gallavotti-Cohen-type symmetry in the large deviation functional for stochastic dynamics. J. Stat. Phys. 95, 333–365 (1999).

- 34. Qian H., Nonequilibrium steady-state circulation and heat dissipation functional. Phys. Rev. E 64, 022101 (2001).

- 35. Andrieux D., Gaspard P., Fluctuation theorem for currents and Schnakenberg network theory. J. Stat. Phys. 127, 107–131 (2007).

- 36. Bertini L., Faggionato A., Gabrielli D., Flows, currents, and cycles for Markov chains: Large deviation asymptotics. Stochastic Process. Appl. 125, 2786–2819 (2015).

- 37. Barato A. C., Seifert U., Thermodynamic uncertainty relation for biomolecular processes. Phys. Rev. Lett. 114, 158101 (2015).

- 38. Gingrich T. R., Horowitz J. M., Perunov N., England J. L., Dissipation bounds all steady-state current fluctuations. Phys. Rev. Lett. 116, 120601 (2016).

- 39. Horowitz J. M., Gingrich T. R., Thermodynamic uncertainty relations constrain non-equilibrium fluctuations. Nat. Phys. 16, 15–20 (2020).

- 40. Owen J. A., Gingrich T. R., Horowitz J. M., Universal thermodynamic bounds on nonequilibrium response with biochemical applications. Phys. Rev. X 10, 011066 (2020).

- 41. Owen J. A., Horowitz J. M., Size limits the sensitivity of kinetic schemes. Nat. Commun. 14, 1280 (2023).

- 42. Fernandes Martins G., Horowitz J. M., Topologically constrained fluctuations and thermodynamics regulate nonequilibrium response. Phys. Rev. E 108, 044113 (2023).

- 43. Aslyamov T., Esposito M., Nonequilibrium response for Markov jump processes: Exact results and tight bounds. Phys. Rev. Lett. 132, 037101 (2024).

- 44. Leighton F., Rivest R., Estimating a probability using finite memory. IEEE Trans. Inf. Theory 32, 733–742 (1986).

- 45. Norris J. R., Markov Chains, Cambridge Series in Statistical and Probabilistic Mathematics (Cambridge University Press, Cambridge, 1997).

- 46. O’Cinneide C. A., Entrywise perturbation theory and error analysis for Markov chains. Numer. Math. 65, 109–120 (1993).

- 47. Thiede E., Van Koten B., Weare J., Sharp entrywise perturbation bounds for Markov chains. SIAM J. Matrix Anal. Appl. 36, 917–941 (2015).

- 48. Derrida B., Random-energy model: Limit of a family of disordered models. Phys. Rev. Lett. 45, 79–82 (1980).

- 49. Derrida B., Random-energy model: An exactly solvable model of disordered systems. Phys. Rev. B 24, 2613–2626 (1981).

- 50. Mathieu P., Convergence to equilibrium for spin glasses. Commun. Math. Phys. 215, 57–68 (2000).

- 51. Sherrington D., Kirkpatrick S., Solvable model of a spin-glass. Phys. Rev. Lett. 35, 1792–1796 (1975).

- 52. Kirman A., Ants, rationality, and recruitment. Q. J. Econ. 108, 137–156 (1993).

- 53. Biancalani T., Dyson L., McKane A. J., Noise-induced bistable states and their mean switching time in foraging colonies. Phys. Rev. Lett. 112, 038101 (2014).

- 54. Holehouse J., Pollitt H., Non-equilibrium time-dependent solution to discrete choice with social interactions. PLoS One 17, e0267083 (2022).

- 55. Beekman M., Sumpter D. J. T., Ratnieks F. L. W., Phase transition between disordered and ordered foraging in Pharaoh’s ants. Proc. Natl. Acad. Sci. U.S.A. 98, 9703–9706 (2001).

- 56. Sumpter D. J. T., Beekman M., From nonlinearity to optimality: Pheromone trail foraging by ants. Anim. Behav. 66, 273–280 (2003).

- 57. Battle C., et al., Broken detailed balance at mesoscopic scales in active biological systems. Science 352, 604–607 (2016).

- 58. Gnesotto F. S., Mura F., Gladrow J., Broedersz C. P., Broken detailed balance and non-equilibrium dynamics in living systems: A review. Rep. Prog. Phys. 81, 066601 (2018).

- 59. Li J., Horowitz J. M., Gingrich T. R., Fakhri N., Quantifying dissipation using fluctuating currents. Nat. Commun. 10, 1666 (2019).

- 60. Martínez I. A., Bisker G., Horowitz J. M., Parrondo J. M. R., Inferring broken detailed balance in the absence of observable currents. Nat. Commun. 10, 3542 (2019).

- 61. Lynn C. W., Cornblath E. J., Papadopoulos L., Bertolero M. A., Bassett D. S., Broken detailed balance and entropy production in the human brain. Proc. Natl. Acad. Sci. U.S.A. 118, e2109889118 (2021).

- 62. Lee C. E., Ozdaglar A., Shah D., “Computing the stationary distribution locally” in Advances in Neural Information Processing Systems, Burges C., Bottou L., Welling M., Ghahramani Z., Weinberger K., Eds. (Curran Associates, Inc., 2013), vol. 26.

- 63. Levin D. A., Peres Y., Wilmer E. L., Markov Chains and Mixing Times (American Mathematical Society, Providence, RI, 2009).

- 64. Bovier A., Den Hollander F., Metastability: A Potential-Theoretic Approach, Grundlehren der mathematischen Wissenschaften (Springer International Publishing, Cham, 2015), vol. 351.
