Abstract

In this work, we aim to predict the survival time (ST) of glioblastoma (GBM) patients undergoing different treatments based on preoperative magnetic resonance (MR) scans. Comparing the predicted ST across treatments can support personalized and precise treatment planning. It is well established that both the current status of the patient (as represented by the MR scans) and the choice of treatment influence ST. However, previous MR-based GBM ST studies have focused only on the direct mapping from MR scans to ST and have not modeled the underlying causal relationship between treatments and ST. To address this limitation, we propose a treatment-conditioned regression model for GBM ST that incorporates treatment information in addition to MR scans. Our approach allows us to use the data from all treatments in a unified manner, rather than training a separate model for each treatment. Furthermore, the treatment can be injected into each convolutional layer through the adaptive instance normalization we employ. We evaluate our framework on the BraTS 2020 ST prediction task. Three treatment options are considered: Gross Total Resection (GTR), Subtotal Resection (STR), and no resection. The evaluation results demonstrate the effectiveness of injecting the treatment for estimating GBM survival.

Keywords: Survival Prediction, Individualized Treatment Effect, Glioblastoma, Magnetic Resonance Imaging

1. INTRODUCTION

Glioblastoma (GBM) is one of the most aggressive malignant brain tumors, with a particularly poor survival rate. Despite significant advances in medical treatments for brain tumors, the median survival duration for GBM patients remains limited.1 The prognosis of GBM can vary widely depending on the patient’s individual status and treatment approach.

We propose to develop a data-driven deep learning (DL) model to answer the question of whether a GBM patient would have a longer survival time (ST) with alternative treatments, based on the preoperative magnetic resonance (MR) scans. Our goal is to enable treatment-specific ST prediction, allowing for a comprehensive comparison of different treatments for a given patient. This capability is highly desired in the field of precision medicine, as it provides valuable insights for informed treatment planning.

A comprehensive description of the patient status is the basis for an accurate prediction of ST. In addition to the tabular electronic health record (EHR),2 there is increasing evidence that multi-parametric MR scans hold great potential for GBM prognosis.3, 4 Richer information can be expected from multimodal imaging. However, previous studies in MR-based GBM survival prediction have primarily focused on modeling a direct mapping from GBM patient status (represented as MR scans) to ST with direct models,1 ignoring the underlying causal relationship between treatments and ST.

In practice, it is well established that both the current state of the patient and the choice of treatment influence the outcome.5, 6 However, the previous direct models either focused on a single treatment3, 4, 7 or did not incorporate the impact of the treatment.8, 9 In particular, without taking the treatment into account, it is not possible to compare treatments in order to guide subsequent treatment planning.10 Although a possible solution is to train a separate direct model for each treatment, only a limited amount of training data is then available for each model.

To the best of our knowledge, this is the first attempt at a DL-based multimodal medical imaging study for individualized multi-treatment advisory, which takes both comprehensive multi-parametric preoperative MR status depictions and treatment selection into consideration for GBM survival inference. By considering the patient’s MR scans and incorporating the treatment information in a unified framework, our proposed approach aims to unravel the intricate relationship between treatments and ST, thereby facilitating more accurate and personalized treatment decisions.

To this end, we propose a treatment-conditioned GBM survival regression model using preoperative MR scans. Instead of training independent models for each treatment, we are able to use all treatment data in a unified manner. Specifically, we adopt a multichannel convolutional backbone, as in the previous direct model,9 to aggregate the information in registered multiparametric MR scans and the corresponding segmentation map. Then, the treatment vector is integrated either by concatenation in the fully connected layer or by adaptive instance normalization (AdaIN), as in11–15, in each convolutional layer of the survival time prediction network. In particular, the mean and variance in the AdaIN layers are set to align with the treatment vector instead of the feature map itself. Injecting the treatment at earlier stages in a more interactive manner can potentially contribute to fine-grained treatment-conditioned modeling.

We evaluate the performance of our framework on the BraTS2020 survival prediction task,16 considering three potential types of tumor resection. Our evaluation results demonstrate the effectiveness of our approach in estimating GBM survival and the benefit of the introduction of treatment.

2. METHODS

In individualized treatment effect inference, the patient status and the treatment are used together to predict the effect. Specifically, in GBM survival analysis, multiparametric MR scans and the corresponding segmentation masks have been widely used to represent the status, which includes the location and radiomic features. In addition to the medical imaging status, the choice of treatment may also affect survival. For generality, we use a one-hot treatment vector over the possible treatment choices. Although it can be simplified to 0/1 for the binary treatment case, we aim to train a single unified model that covers all treatments.
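As a minimal sketch of the one-hot treatment encoding described above, the snippet below builds one treatment vector per option so that the same patient status can be queried under every treatment. The label names follow the three options evaluated in this work; their ordering and the helper names `one_hot_treatment` and `all_treatment_queries` are assumptions made for illustration only.

```python
# Treatment labels as used in this work; the index order is an assumption.
TREATMENTS = ("GTR", "STR", "NA")


def one_hot_treatment(name, treatments=TREATMENTS):
    """Return a one-hot list with 1.0 at the position of `name`."""
    if name not in treatments:
        raise ValueError(f"unknown treatment: {name!r}")
    return [1.0 if t == name else 0.0 for t in treatments]


def all_treatment_queries(treatments=TREATMENTS):
    """Build one treatment vector per option for a single patient."""
    return {t: one_hot_treatment(t, treatments) for t in treatments}


queries = all_treatment_queries()
```

Feeding each vector in `queries` to a unified model together with the same MR status would yield one ST estimate per treatment, which is the comparison the framework is meant to enable.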

For a fair comparison, we follow the previous work9 and adopt a four-layer convolutional neural network for 3D multi-modality information fusion. We then use three fully connected layers, each with a one-dimensional output unit, to predict the scalar value of survival days. In the previous work,9 the patient age and manually crafted radiomics features were concatenated with the first fully connected layer representations and then processed by either a neural network or conventional machine learning methods. In the present work, we focus solely on the neural network approach and do not include the manually crafted features, although these predictive features can be easily incorporated if needed.

Instead, our objective is to investigate whether injecting the treatment yields a more accurate ST estimate by modeling fine-grained, treatment-wise survival within a unified framework. Thus, a critical problem is how to seamlessly inject the treatment into the status feature extraction to model the conditional distribution. Previous conditional generative works11, 17 have shown that simple concatenation in a fully connected layer can be less effective at combining two features, because the preceding convolutional layers are not informed of the condition and the correlation is hard to learn with the limited number of subsequent parameters.

Therefore, following previous successful conditional modeling works,11, 17 we propose to adopt adaptive instance normalization (AdaIN)18 to induce treatment in each convolutional layer. Specifically, we process with three multi-layer perceptron (MLP) layers (all with 16-dim) to produce a 16-dim vector in the intermediate latent space , the learned affine transformations, i.e., the linear layers of each layer, then specialize to scalars of treatment-wise scale and bias in the convolutional layer to control the AdaIN operators. Note that the dimensionality of or should match the number of feature maps in that layer, which requires the dimension of the linear layer to be twice that of or . Specifically, we have the following AdaIN operation in each layer:

| (1) |

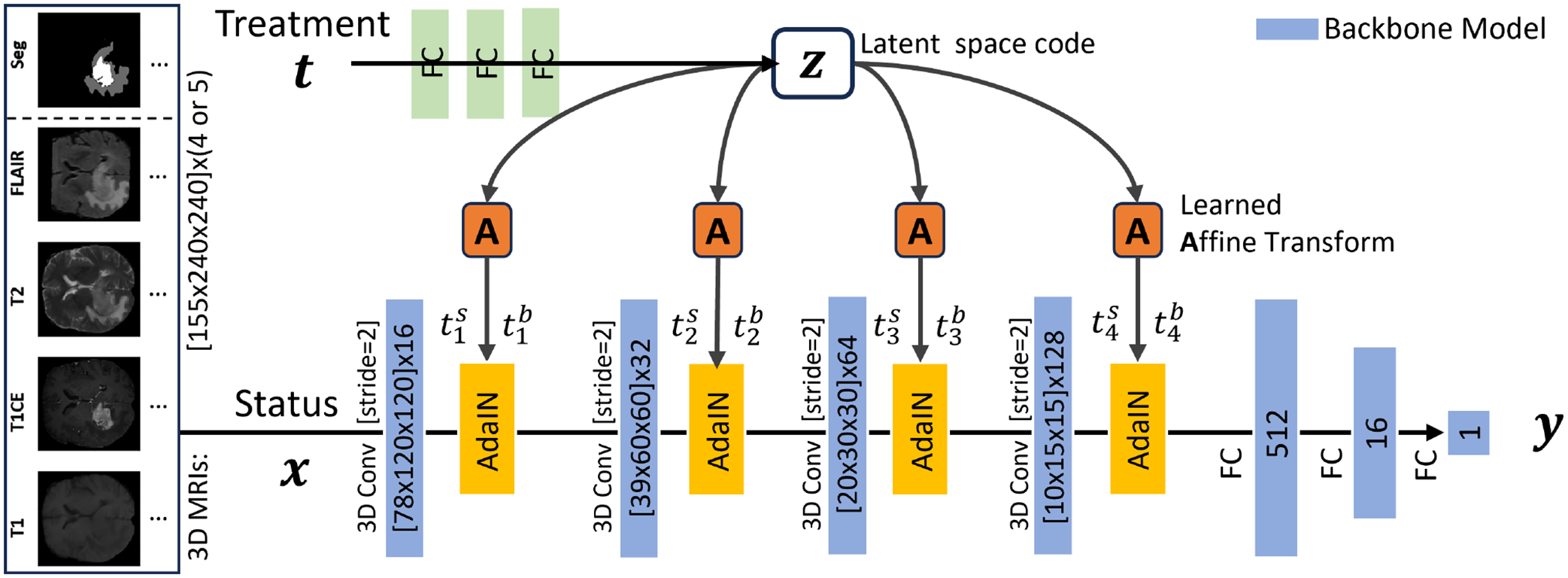

where is the extracted feature map after the -th convolutional layer. As a result, is individually normalized and subsequently scaled and biased using the corresponding scalar components from . By doing so, it injects a stronger inductive bias of into the DL model. The model is detailed in Fig. 1, which is trained by the mean absolute error (MAE), i.e., .
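The treatment injection described above can be sketched in PyTorch as follows. This is an illustrative sketch under stated assumptions, not the authors' implementation: the class names, channel widths, kernel size, stride, pooling, fully connected sizes, and the placement of the ReLU after AdaIN are all assumptions. Only the three 16-dimensional MLP layers, the four convolutional layers, the three fully connected layers, the per-layer linear map with twice the channel count as output, and the MAE loss follow the text.

```python
import torch
import torch.nn as nn


class TreatmentAdaIN(nn.Module):
    """Instance-normalize a 3D feature map, then scale and shift each
    channel with values predicted from the treatment latent code."""

    def __init__(self, latent_dim, num_channels):
        super().__init__()
        # affine=False: scale and bias come from the treatment instead.
        self.norm = nn.InstanceNorm3d(num_channels, affine=False)
        # Output is twice the channel count: one scale and one bias per map.
        self.affine = nn.Linear(latent_dim, 2 * num_channels)

    def forward(self, x, w):
        scale, bias = self.affine(w).chunk(2, dim=1)
        scale = scale[:, :, None, None, None]
        bias = bias[:, :, None, None, None]
        return scale * self.norm(x) + bias


class TreatmentConditionedRegressor(nn.Module):
    """Four conv layers, each followed by treatment-driven AdaIN."""

    def __init__(self, in_channels=5, num_treatments=3, latent_dim=16,
                 widths=(8, 16, 32, 64)):  # widths are assumed values
        super().__init__()
        # Three 16-dim MLP layers map the one-hot treatment to a latent code.
        self.mapping = nn.Sequential(
            nn.Linear(num_treatments, latent_dim), nn.ReLU(),
            nn.Linear(latent_dim, latent_dim), nn.ReLU(),
            nn.Linear(latent_dim, latent_dim),
        )
        self.convs = nn.ModuleList()
        self.adains = nn.ModuleList()
        prev = in_channels
        for width in widths:
            self.convs.append(
                nn.Conv3d(prev, width, kernel_size=3, stride=2, padding=1))
            self.adains.append(TreatmentAdaIN(latent_dim, width))
            prev = width
        self.pool = nn.AdaptiveAvgPool3d(1)
        # Three fully connected layers; hidden sizes are assumed.
        self.head = nn.Sequential(
            nn.Linear(prev, 32), nn.ReLU(),
            nn.Linear(32, 16), nn.ReLU(),
            nn.Linear(16, 1),
        )

    def forward(self, scans, treatment):
        w = self.mapping(treatment)
        x = scans
        for conv, adain in zip(self.convs, self.adains):
            x = torch.relu(adain(conv(x), w))
        return self.head(self.pool(x).flatten(1)).squeeze(1)


torch.manual_seed(0)
model = TreatmentConditionedRegressor()
scans = torch.randn(2, 5, 32, 32, 32)        # toy 4 MR channels + mask
treatment = torch.tensor([[1.0, 0.0, 0.0],   # toy one-hot treatments
                          [0.0, 0.0, 1.0]])
pred = model(scans, treatment)               # one survival value per subject
loss = nn.functional.l1_loss(pred, torch.tensor([300.0, 150.0]))  # MAE
```

Because the normalized feature map has zero mean per channel, the channel mean of the AdaIN output equals the treatment-dependent bias, which is one way to see how the treatment directly controls the feature statistics in every layer.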

Figure 1. Illustration of our framework for the treatment-wise GBM survival time estimation with multi-parametric preoperative MR scans and segmentation mask. The treatment is injected by the AdaIN to every convolutional layer.

3. RESULTS

In the BraTS 2020 dataset,16, 19 the preoperative T1-weighted (T1), T1-weighted with contrast enhancement (T1-CE), T2-weighted (T2), and fluid attenuation inversion recovery (FLAIR) MR scans and the segmentation mask are collected as the status. Survival days (5–1767 d) are reported for 236 subjects, of whom 119 were treated with Gross Total Resection (GTR), 10 with Subtotal Resection (STR), and 107 had no treatment (NA). We use subject-independent 5-fold cross-validation on the 236 subjects, in which both the training and test sets contain all three treatments. Note that the split is different from that of the previous direct model,9 which does not consider the distribution of treatments. In addition, it is difficult to independently train a separate model for STR with such limited samples. The training was performed on an NVIDIA A100 GPU for 200 epochs.
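One way to obtain folds in which every treatment appears in both the training and test sets is a treatment-stratified split. The sketch below is an assumption about how such a split could be built, not the split actually used here; the function name `stratified_folds` and the seed are hypothetical. It uses the treatment counts reported above and assigns each subject to exactly one fold.

```python
import random
from collections import Counter, defaultdict


def stratified_folds(treatments, k=5, seed=0):
    """Assign each subject index to one of k folds, spreading every
    treatment group as evenly as possible across the folds."""
    rng = random.Random(seed)
    by_treatment = defaultdict(list)
    for idx, label in enumerate(treatments):
        by_treatment[label].append(idx)
    folds = [[] for _ in range(k)]
    for label in sorted(by_treatment):
        members = by_treatment[label]
        rng.shuffle(members)
        # Deal the shuffled group members round-robin over the folds.
        for pos, idx in enumerate(members):
            folds[pos % k].append(idx)
    return folds


# Treatment counts as reported for the 236 subjects.
labels = ["GTR"] * 119 + ["STR"] * 10 + ["NA"] * 107
folds = stratified_folds(labels, k=5)
for fold_id, test_idx in enumerate(folds):
    counts = Counter(labels[j] for j in test_idx)
    print(fold_id, len(test_idx), dict(counts))
```

Each fold serves once as the test set while the remaining four form the training set, so the ten STR subjects are distributed two per fold rather than being concentrated in a single split.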

We input the status and the treatment to predict the survival days. We adopt the multi-channel 3D convolutional network of the previous direct model9 as our backbone to process the four-channel 3D MR scans, which can be extended to incorporate the segmentation mask as a fifth channel. Our framework was implemented using the PyTorch deep learning toolbox.20 For performance quantification, the MAE is reported in Table 1. We can see that adding the treatment to the survival estimation reduces the MAE. Furthermore, the AdaIN injection combines the patient status and treatment more effectively than simple concatenation at the first fully connected layer.
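For reference, the MAE metric and its aggregation over repeated trials can be computed as in the short sketch below. The function names are hypothetical, the numbers in the usage lines are toy values rather than results from this work, and the use of the sample standard deviation is an assumption.

```python
from statistics import mean, stdev


def mean_absolute_error(true_days, predicted_days):
    """Average absolute difference between true and predicted survival days."""
    if len(true_days) != len(predicted_days) or not true_days:
        raise ValueError("inputs must be non-empty and of equal length")
    return mean(abs(t - p) for t, p in zip(true_days, predicted_days))


def summarize_trials(trial_maes):
    """Return (mean, sample standard deviation) of the MAE across trials."""
    return mean(trial_maes), stdev(trial_maes)


# Toy values for illustration only.
toy_mae = mean_absolute_error([100, 200, 300], [110, 190, 330])
toy_summary = summarize_trials([1.0, 2.0, 3.0])
```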

Table 1. Numerical comparisons of different inputs and their combination methods. The best results are indicated in bold. The standard deviation is reported based on five random trials.

4. CONCLUSION

In this work, we introduced a survival estimation model for GBM that uses preoperative MRI data and segmentation maps to model the fine-grained treatment-dependent distribution. Our framework efficiently injects the treatment vector into the status representation extraction process. Compared with conventional direct models that do not consider treatment,9 or that simply concatenate the treatment vector into the FC layers, our model achieves a lower MAE. Moreover, our approach can serve as a simple add-on module to enhance more advanced backbones used in direct models, such as vision transformers. In future work, we will replace the manual segmentation map with the predictions of advanced DL-based tumor segmentation models21–25 to establish a fully automated analysis pipeline.

ACKNOWLEDGMENTS

This work is supported by R01CA165221 and P41EB022544.

REFERENCES

- [1] Kaur G, Rana PS, and Arora V, “State-of-the-art techniques using pre-operative brain mri scans for survival prediction of glioblastoma multiforme patients and future research directions,” Clinical and Translational Imaging 10(4), 355–389 (2022).

- [2] Lacroix M, Abi-Said D, Fourney DR, Gokaslan ZL, Shi W, DeMonte F, Lang FF, McCutcheon IE, Hassenbusch SJ, Holland E, et al., “A multivariate analysis of 416 patients with glioblastoma multiforme: prognosis, extent of resection, and survival,” Journal of Neurosurgery 95(2), 190–198 (2001).

- [3] Chang K, Zhang B, Guo X, Zong M, Rahman R, Sanchez D, Winder N, Reardon DA, Zhao B, Wen PY, et al., “Multimodal imaging patterns predict survival in recurrent glioblastoma patients treated with bevacizumab,” Neuro-oncology 18(12), 1680–1687 (2016).

- [4] Nie D, Lu J, Zhang H, Adeli E, Wang J, Yu Z, Liu L, Wang Q, Wu J, and Shen D, “Multi-channel 3d deep feature learning for survival time prediction of brain tumor patients using multi-modal neuroimages,” Scientific Reports 9(1), 1–14 (2019).

- [5] Athey S and Imbens G, “Recursive partitioning for heterogeneous causal effects,” Proceedings of the National Academy of Sciences 113(27), 7353–7360 (2016).

- [6] Shalit U, Johansson FD, and Sontag D, “Estimating individual treatment effect: generalization bounds and algorithms,” in [International Conference on Machine Learning], 3076–3085, PMLR (2017).

- [7] Chaddad A, Desrosiers C, Hassan L, and Tanougast C, “A quantitative study of shape descriptors from glioblastoma multiforme phenotypes for predicting survival outcome,” The British journal of radiology 89(1068), 20160575 (2016).

- [8] Baid U, Rane SU, Talbar S, Gupta S, Thakur MH, Moiyadi A, and Mahajan A, “Overall survival prediction in glioblastoma with radiomic features using machine learning,” Frontiers in Computational Neuroscience, 61 (2020).

- [9] Huang H, Zhang W, Fang Y, Hong J, Su S, and Lai X, “Overall survival prediction for gliomas using a novel compound approach,” Frontiers in Oncology 11, 724191 (2021).

- [10] Tan K, Huang W, Liu X, Hu J, and Dong S, “A multi-modal fusion framework based on multi-task correlation learning for cancer prognosis prediction,” Artificial Intelligence in Medicine 126, 102260 (2022).

- [11] Liu X, Che T, Lu Y, Yang C, Li S, and You J, “Auto3d: Novel view synthesis through unsupervisely learned variational viewpoint and global 3d representation,” in [European Conference on Computer Vision], 52–71, Springer (2020).

- [12] Xu X, Chen Y-C, and Jia J, “View independent generative adversarial network for novel view synthesis,” in [Proceedings of the IEEE International Conference on Computer Vision], 7791–7800 (2019).

- [13] Huang H, He R, Sun Z, Tan T, et al., “Introvae: Introspective variational autoencoders for photographic image synthesis,” in [Advances in Neural Information Processing Systems], 52–63 (2018).

- [14] Nguyen-Phuoc T, Li C, Theis L, Richardt C, and Yang Y-L, “Hologan: Unsupervised learning of 3d representations from natural images,” arXiv preprint arXiv:1904.01326 (2019).

- [15] Zakharov E, Shysheya A, Burkov E, and Lempitsky V, “Few-shot adversarial learning of realistic neural talking head models,” arXiv preprint arXiv:1905.08233 (2019).

- [16] Bakas S, Reyes M, Jakab A, Bauer S, Rempfler M, Crimi A, Shinohara RT, Berger C, Ha SM, Rozycki M, et al., “Identifying the best machine learning algorithms for brain tumor segmentation, progression assessment, and overall survival prediction in the brats challenge,” arXiv preprint arXiv:1811.02629 (2018).

- [17] Karras T, Laine S, and Aila T, “A style-based generator architecture for generative adversarial networks,” in [IEEE / CVF Computer Vision and Pattern Recognition Conference], 4401–4410 (2019).

- [18] Huang X and Belongie S, “Arbitrary style transfer in real-time with adaptive instance normalization,” in [Proceedings of the IEEE International Conference on Computer Vision], 1501–1510 (2017).

- [19] Bakas S, “Multimodal brain tumor segmentation challenge 2020: Data https://www.med.upenn.edu/cbica/brats2020/data.html,” (2020).

- [20] Paszke A, Gross S, Chintala S, Chanan G, Yang E, DeVito Z, Lin Z, Desmaison A, Antiga L, and Lerer A, “Automatic differentiation in pytorch,” (2017).

- [21] Liu X, Xing F, El Fakhri G, and Woo J, “Memory consistent unsupervised off-the-shelf model adaptation for source-relaxed medical image segmentation,” Medical image analysis 83, 102641 (2023).

- [22] Liu X, Xing F, Yang C, El Fakhri G, and Woo J, “Adapting off-the-shelf source segmenter for target medical image segmentation,” in [International Conference on Medical Image Computing and Computer-Assisted Intervention], 549–5059, Springer (2021).

- [23] Liu X, Xing F, El Fakhri G, and Woo J, “Self-semantic contour adaptation for cross modality brain tumor segmentation,” in [2022 IEEE 19th International Symposium on Biomedical Imaging], 1–5 (2022).

- [24] Liu X, Xing F, Shusharina N, Lim R, Kuo C, Fakhri GE, and Woo J, “Act: Semi-supervised domain-adaptive medical image segmentation with asymmetric co-training,” International Conference on Medical Image Computing and Computer Assisted Intervention (2022).

- [25] Liu X, Shih HA, Xing F, Santarnecchi E, El Fakhri G, and Woo J, “Incremental learning for heterogeneous structure segmentation in brain tumor mri,” in [International Conference on Medical Image Computing and Computer-Assisted Intervention], 46–56, Springer (2023).