Abstract

Because building structures shield satellite signals, mature positioning technologies such as the Global Positioning System (GPS) are suitable only for outdoor navigation and detection. However, many scenarios urgently require high-precision indoor positioning, such as indoor optical wireless communications (OWCs), navigation in large buildings, and warehouse management. Here, we propose a millimeter-precision indoor positioning technology based on a metalens-integrated camera, which determines the position of the device by imaging beacon LEDs. Thanks to the wide-angle imaging design of our metalens, the camera can accurately capture images of beacon LEDs even when it is located in distant corners. Consequently, our localization scheme achieves millimeter-level positioning accuracy across the majority of a wide-angle (∼120°) indoor area. Compared with traditional positioning schemes based on photodiodes (PDs), our imaging-based approach is more resistant to interference, which protects the positioning precision from the influence of external signals. Furthermore, the compact dimensions and high performance of the positioning device make it suitable for integration into highly portable devices, such as smartphones and drones, indicating broad potential for future applications.

Keywords: high-precision positioning, wide-angle imaging, integrated meta-device, image processing

1. Introduction

Location information is of great importance in many applications and services, because it determines the accuracy and efficiency of information transmission [1], [2], [3]. The widely used Global Positioning System (GPS) faces challenges in indoor or obstructed environments due to factors such as electromagnetic shielding, multipath fading, and obstacles blocking the signals [4], [5]. To address the indoor blind spots of GPS, various wireless positioning solutions have been developed, such as wireless local area networks (WLAN), radio frequency identification (RFID), and ultra-wideband (UWB) [6], [7], [8], [9], [10]. However, the development of these solutions is limited by electromagnetic interference, noise, stability, cost, and other factors [11]. With the significant advancement of light-emitting diodes (LEDs) as light sources, visible light communication (VLC) technology based on LEDs has been extensively researched in recent years [12], [13], [14], [15]. Positioning based on VLC is a green service that has attracted much attention, offering resistance to electromagnetic interference, system stability, and relatively high positioning accuracy [16], [17], [18], [19]. In addition, the positioning devices can be integrated with lighting systems, significantly reducing construction and operational costs.

VLC positioning technologies can be broadly divided into two types: geometric analysis methods based on intensity measurement, and imaging methods based on cameras [20], [21]. Geometric methods generally analyze the characteristics of VLC signals received by photodiodes (PDs) and establish geometric relationships for positioning using parameters such as time of arrival (ToA), angle of arrival (AoA), angle difference of arrival (ADoA), and received signal strength (RSS). However, some of these methods require strictly synchronized clocks, leading to higher equipment costs. Additionally, the measurement accuracy of this type is susceptible to noise generated by other external light sources [22], [23], [24], [25], [26]. In comparison, imaging methods extract the location information from the lens imaging relationship between objects and images. The large number of pixels on imaging sensors gives higher positioning accuracy than geometric analysis methods. However, high-quality imaging requires complex lens assemblies, which enlarge the overall volume of the positioning device and hinder integration into mobile devices. Furthermore, as the detection angle increases, not only does the detected intensity decrease significantly, but the original positioning analysis models also become ineffective, which severely restricts the detection range of both types of positioning technologies [27], [28].

In this work, we propose a three-dimensional (3D) positioning scheme based on a metalens-integrated camera, which takes full advantage of the ultra-light and ultra-thin properties of metasurfaces. Metasurfaces can effectively manipulate multidimensional properties of light on an ultra-thin surface, including amplitude [29], phase [30], [31], polarization [32], and orbital angular momentum [33]. Various compact optical functional devices have been realized based on metasurfaces, such as metasurface-based optical detectors, metalens-integrated cameras, and microscopes [34], [35], [36], [37], [38], [39], [40], [41], [42]. Distance measurement has also been realized using metasurface-based devices [43], [44]. However, these devices achieved only one-dimensional positioning, and three-dimensional positioning based on metasurfaces has not yet been proposed. The metalens-integrated positioning device (MPD) we designed is highly compact, facilitating easy integration into mobile devices. The scheme also exploits the flexible phase design of metasurfaces to realize a planar wide-angle imaging metalens that can accurately image LEDs over a large angular range (∼120°). Additionally, to address the reduced image intensity of LEDs at large viewing angles, we introduce an analysis algorithm for precisely determining the imaging positions of LEDs, which further reduces the impact of noise on positioning accuracy. The proposed positioning device combines compact size, high positioning accuracy, large detection range, and low cost, providing a revolutionary solution for future high-precision indoor positioning devices.

2. Results and discussion

2.1. Working principle and architecture of the MPD

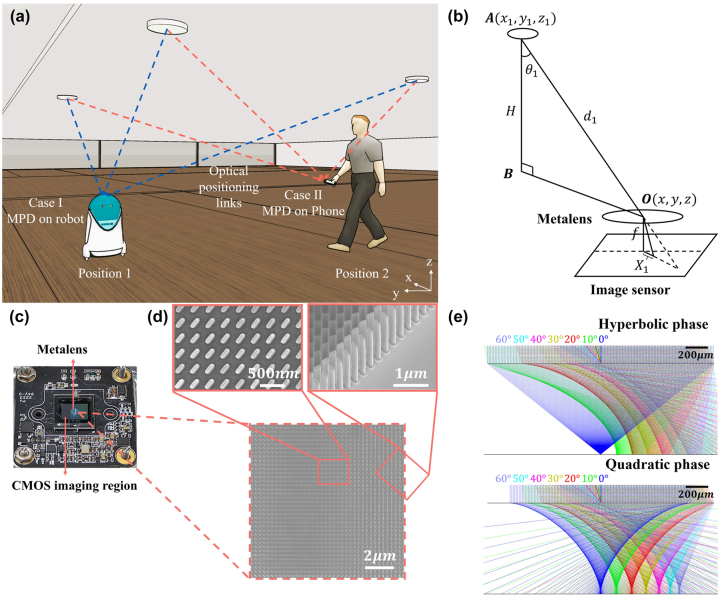

The application scenario of the MPD is shown in Figure 1(a). The MPD can be integrated into mobile phones or moving vehicles and images LED lights deployed at known positions on the ceiling. Through the geometric relationship of the metalens imaging process, shown in Figure 1(b), the position of the device can be deduced from the following equation,

| (1) |

where (x, y, z) are the coordinates of the mobile device to be determined, (x_i, y_i, z_i) are the coordinates of the i-th LED, and θ_i is the angle between the normal and the line connecting point (x_i, y_i, z_i) and point (x, y, z). X_i is the image position of the i-th LED on the CMOS sensor relative to the metalens center, and it depends on the angle θ_i. Equation (1) indicates that if the relationship between the imaging positions X_i and the angles θ_i is fixed in advance by design, the unknown (x, y, z) can be derived from the geometric relationships between three LEDs and their corresponding images.
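The geometric idea can be illustrated with a minimal sketch. It is not the authors' solver: it assumes the sensor plane is parallel to the ceiling with known axis alignment, that each image offset is available as a 2D vector (u, v) measured from the metalens center, that the offset obeys the X = f sinθ relation discussed later in the text, and that image inversion is already accounted for in the sign convention. Under these assumptions each image defines a ray toward its LED, and the device position is the least-squares intersection of the three rays. The function names `project`, `direction`, `solve3`, and `locate` are hypothetical.

```python
import math

F_UM = 450.0  # metalens focal length from the text, in micrometres


def project(led, device, f=F_UM):
    """Forward model (assumed): 2D image offset of one LED using X = f*sin(theta)."""
    dx, dy, dz = (led[k] - device[k] for k in range(3))
    d = math.sqrt(dx * dx + dy * dy + dz * dz)
    return (f * dx / d, f * dy / d)


def direction(image, f=F_UM):
    """Unit vector from device to LED recovered from an image offset (LED above device)."""
    a, b = image[0] / f, image[1] / f
    c = math.sqrt(max(0.0, 1.0 - a * a - b * b))
    return (a, b, c)


def solve3(A, b):
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(3):
        piv = max(range(col, 3), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, 3):
            factor = M[r][col] / M[col][col]
            for c in range(col, 4):
                M[r][c] -= factor * M[col][c]
    x = [0.0, 0.0, 0.0]
    for r in range(2, -1, -1):
        s = M[r][3] - sum(M[r][c] * x[c] for c in range(r + 1, 3))
        x[r] = s / M[r][r]
    return x


def locate(leds, images, f=F_UM):
    """Least-squares intersection of the rays through each LED along its direction."""
    A = [[0.0] * 3 for _ in range(3)]
    rhs = [0.0] * 3
    for led, img in zip(leds, images):
        u = direction(img, f)
        for r in range(3):
            for c in range(3):
                m = (1.0 if r == c else 0.0) - u[r] * u[c]  # projector I - u u^T
                A[r][c] += m
                rhs[r] += m * led[c]
    return solve3(A, rhs)


if __name__ == "__main__":
    leds = [(0.0, 0.14, 2.5), (-0.121, -0.07, 2.5), (0.121, -0.07, 2.5)]  # made-up layout
    truth = (0.3, 0.1, 0.3)
    print(locate(leds, [project(l, truth) for l in leds]))
```

The LED layout and device position in the usage lines are invented for illustration only. With noisy image positions the same linear system returns the point closest to all three rays in the least-squares sense.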

Figure 1:

The positioning device and its working principle. (a) Schematic of the MPD application scenario. The MPD can be integrated into a mobile phone or robot and then determines its own position by imaging the beacon LEDs at known locations on the ceiling. (b) Working principle of the MPD. The position of the device is related to the imaging positions of beacon LEDs on the CMOS sensor. f: metalens focal length, X_1: distance between the image of the i-th LED and the center point of the CMOS sensor, d_1: distance between the i-th LED and the center point of the metalens. (c) Photograph of the MPD, in which the CMOS imaging region and the metalens region are marked. (d) SEM images of the fabricated metalens structures. (e) Simulated focusing light rays of the hyperbolic phase metalens and the quadratic phase metalens. The different colors from left to right represent the light rays with incident angles of 0°, 10°, 20°, 30°, 40°, 50°, and 60°, respectively.

In the traditional geometric optics imaging model, the direction of light is assumed to remain unchanged when it passes through the lens center. Therefore, the relationship between the imaging position and the incident angle satisfies X_i = f tanθ_i. However, this condition holds only when the incident angle θ is small. When θ becomes larger, non-negligible imaging aberrations (mainly coma) appear [38], causing the imaging position to deviate significantly from the theoretical f tanθ. Using a traditional lens for positioning therefore leads to significant positioning errors beyond a certain viewing angle range. Although commercial fisheye lenses can enlarge the imaging viewing angle, their bulk and weight make integration into mobile devices such as smartphones challenging.

Benefitting from its ultra-thin, ultra-light, and flexible phase design, a metalens with only a single layer of structures can realize functions comparable to those of conventional bulk refractive lenses, providing a promising route to the miniaturization of positioning devices. In this work, we design a quadratic phase metalens that meets the requirements of wide-angle, aberration-free imaging, whose phase profile is expressed as

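$$\varphi(r) = -\frac{k r^{2}}{2f} \qquad (2)$$

where k is the wave number, f is the metalens focal length, and r is the radial distance from the metalens center. When an extra linear phase caused by oblique incidence at angle θ is imposed, the quadratic phase profile translates this linear phase into a spatial shift, written here along one transverse axis x as

$$\varphi(x) + k x \sin\theta = -\frac{k}{2f}\left(x - f\sin\theta\right)^{2} + \frac{k f \sin^{2}\theta}{2} \qquad (3)$$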

As a result, the effect of oblique incidence is a spatial translation of the focal spot equal to f sinθ, as shown in Figure 1(e). In this work, the metalens is designed with a diameter of 1 mm, a focal length of 450 μm, and a working wavelength of 470 nm, so that the theoretical viewing angle reaches ±90°.
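To make the difference between the two imaging models concrete, the following minimal sketch evaluates the ideal-lens relation X = f tanθ and the quadratic-phase relation X = f sinθ for the stated focal length of 450 μm. The function names are illustrative and not taken from the original work.

```python
import math

F_UM = 450.0  # metalens focal length from the text, in micrometres


def image_offset_quadratic(theta_deg, f_um=F_UM):
    """Focal-spot offset for the quadratic phase profile: X = f * sin(theta)."""
    return f_um * math.sin(math.radians(theta_deg))


def image_offset_ideal_lens(theta_deg, f_um=F_UM):
    """Offset predicted by the traditional geometric model: X = f * tan(theta)."""
    return f_um * math.tan(math.radians(theta_deg))


if __name__ == "__main__":
    for theta in range(0, 61, 10):
        print(f"{theta:2d} deg   f*sin = {image_offset_quadratic(theta):6.1f} um"
              f"   f*tan = {image_offset_ideal_lens(theta):6.1f} um")
```

Under the f sinθ relation the offset is bounded by f for any incident angle, whereas f tanθ grows without bound as θ approaches 90°.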

The metalens consists of silicon nitride (Si3N4) nano-posts on a SiO2 substrate and was fabricated using standard electron-beam lithography (EBL) and dry etching (see Methods for details). The meta-atoms are of the geometric-phase type, designed for a wavelength of 470 nm, and measure 240 nm in length, 80 nm in width, and 800 nm in height, with a period of 300 nm. The geometric phase metasurface can be used to filter environmental noise when the noise significantly interferes with the imaging of beacon LEDs (see Supplementary Note S1 for a detailed discussion). The phase imparted by each meta-atom is set by its rotation angle. Figure 1(c) shows a photograph of the MPD, in which the metalens is mounted upside down directly on the CMOS sensor (The Imaging Source, DMM 27UJ003-ML, pixel size: 1.67 μm) and fixed by an optically clear adhesive (OCA) tape (Tesa, 69402). The thickness of the OCA tape was chosen to equal the metalens focal length, so that far-field objects are clearly imaged on the CMOS sensor. The size of the entire device is 3 cm × 3 cm × 0.3 cm, while the main photosensitive area measures 1 cm × 1 cm, demonstrating a significant volume advantage over traditional imaging devices. Figure 1(d) shows a scanning electron microscope (SEM) image of part of the wide-angle metalens; the two zoomed-in pictures are the top view and side view of the structure details, respectively. The SEM images show that the fabricated structures closely match the design dimensions and have steep sidewalls.
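As a rough illustration of what the stated pixel size implies, the sketch below inverts X = f sinθ to convert a pixel offset into a viewing angle and estimates how far an LED must move laterally to shift its image by one pixel. It assumes an ideal f sinθ mapping, a flat LED plane at a vertical separation `height_m` from the device, and no sub-pixel interpolation; the function names are hypothetical.

```python
import math

F_UM = 450.0      # metalens focal length (from the text)
PIXEL_UM = 1.67   # CMOS pixel size (from the text)


def pixels_to_angle_deg(n_pixels):
    """Viewing angle for an image n_pixels away from the metalens centre (X = f*sin(theta))."""
    s = n_pixels * PIXEL_UM / F_UM
    if not 0.0 <= s <= 1.0:
        raise ValueError("offset lies outside the f*sin(theta) range")
    return math.degrees(math.asin(s))


def lateral_shift_per_pixel_mm(theta_deg, height_m):
    """Lateral displacement (mm) that moves the image by one pixel.

    With rho = h*tan(theta) and X = f*sin(theta), d(rho)/dX = h / (f * cos(theta)**3).
    """
    c = math.cos(math.radians(theta_deg))
    return height_m * 1e3 * PIXEL_UM / (F_UM * c ** 3)


if __name__ == "__main__":
    for theta in (0, 30, 60):
        print(theta, "deg:", round(lateral_shift_per_pixel_mm(theta, 0.3), 2), "mm per pixel")
```

Under these assumptions, one pixel corresponds to about 1.1 mm of lateral displacement near normal incidence and about 8.9 mm at 60° for a 0.3 m separation, which suggests why accurate estimation of the image center matters most at large viewing angles.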

Figure 1(e) shows the focusing performance of the hyperbolic phase metalens and the quadratic phase metalens at different incident angles, simulated with Zemax OpticStudio. The different colors from right to left represent the light rays with incident angles of 0°, 10°, 20°, 30°, 40°, 50°, and 60°, respectively. The simulation results reveal that the hyperbolic phase metalens perfectly focuses normally incident light, but its focusing performance deteriorates rapidly as the incident angle increases. In contrast, for the quadratic phase metalens, although some stray light may be present at each incident angle, the majority of the energy is still focused even for large-angle incident light. This is why our device achieves good-quality imaging of LED lights over a wide angle and exhibits high positioning accuracy.

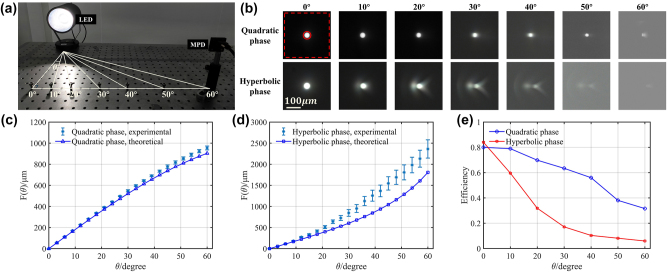

2.2. Characterization of the MPD imaging performance

To demonstrate the positioning performance of the MPD, we first characterized its imaging of a fixed beacon LED from different viewing angles. The experimental setup is shown in Figure 2(a). The LED was fixed at a specific position and height on the optical platform, while the MPD was placed on a line at the same longitudinal distance and height as the LED. The relative viewing angle between the MPD and the LED was altered by adjusting the lateral distance. For comparison, a metalens with a hyperbolic phase profile, which offers diffraction-limited imaging at normal incidence, was also used to image the LED at different viewing angles. Figure 2(b) compares the LED images captured by the MPD and the hyperbolic phase metalens at viewing angles of 0°, 10°, 20°, 30°, 40°, 50°, and 60°, respectively. The shapes and intensity of the MPD images are well preserved, whereas the hyperbolic metalens images exhibit noticeable coma aberrations. Moreover, as the viewing angle increases, the image intensity of the hyperbolic phase metalens decays rapidly. We quantify the intensity decrease using the focusing efficiency, defined as the ratio of the energy within the focusing region (solid red circle in Figure 2(b)) to the energy within the observed region (dashed red square in Figure 2(b)). The focusing efficiencies shown in Figure 2(e) indicate that the quadratic phase metalens focuses much better, especially for large-angle incident light. All these factors make it difficult to determine the positions of the imaging spots for the hyperbolic phase metalens, resulting in a significant localization error. Figure 2(c) and (d) compare the experimentally detected image positions with the theoretical model at different viewing angles for the MPD and the hyperbolic metalens, respectively. For the MPD, the experimental results align well with the theoretical model at all tested angles, whereas for the hyperbolic metalens the experimental results deviate strongly from the theoretical model as the viewing angle increases. This is why our MPD can achieve high-precision positioning across wide viewing angle ranges, while traditional lenses perform poorly over such extensive viewing angles.
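The focusing efficiency defined above can be computed from a captured frame as in the following minimal sketch. It assumes the image is a 2D list of non-negative intensities, that the focusing region is a circle and the observed region a square sharing the same center pixel, and that the radius and half-size are chosen by the user; these parameter names are illustrative and the actual region sizes are not given in the text.

```python
def focusing_efficiency(image, center, radius, half_size):
    """Energy inside a circle divided by energy inside a concentric square.

    image     : 2D list of pixel intensities
    center    : (row, col) of the focal spot
    radius    : radius of the focusing region, in pixels
    half_size : half side length of the observed square region, in pixels
    """
    cy, cx = center
    in_circle = 0.0
    in_square = 0.0
    for y in range(max(0, cy - half_size), min(len(image), cy + half_size + 1)):
        for x in range(max(0, cx - half_size), min(len(image[0]), cx + half_size + 1)):
            value = image[y][x]
            in_square += value
            if (y - cy) ** 2 + (x - cx) ** 2 <= radius ** 2:
                in_circle += value
    return in_circle / in_square if in_square else 0.0


if __name__ == "__main__":
    flat = [[1.0] * 5 for _ in range(5)]
    print(focusing_efficiency(flat, (2, 2), 1, 2))  # uniform background, low efficiency
```

A tightly focused spot yields a value close to 1, while a spot smeared by coma or buried in stray light yields a lower value.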

Figure 2:

Imaging performance comparison of the MPD and the hyperbolic phase metalens at different viewing angles. (a) Experimental setup for imaging of the LED while the imaging device is placed at different viewing angles. The viewing angles are marked below the position. (b) Quadratic phase and hyperbolic phase metalens imaging results corresponding to different incident viewing angles from 0° to 60°. The red circle and red square represent the focusing region and observed region, respectively. The measured and theoretical imaging positions of (c) quadratic phase metalens (the MPD) and (d) hyperbolic phase metalens at different viewing angles. The abscissa represents the LED viewing angles, while the ordinate (blue marked line & error bar) represents the distance from the imaging point to the CMOS center. (e) Focusing efficiency of quadratic phase and hyperbolic phase metalens at different viewing angles.

2.3. Indoor positioning detection system

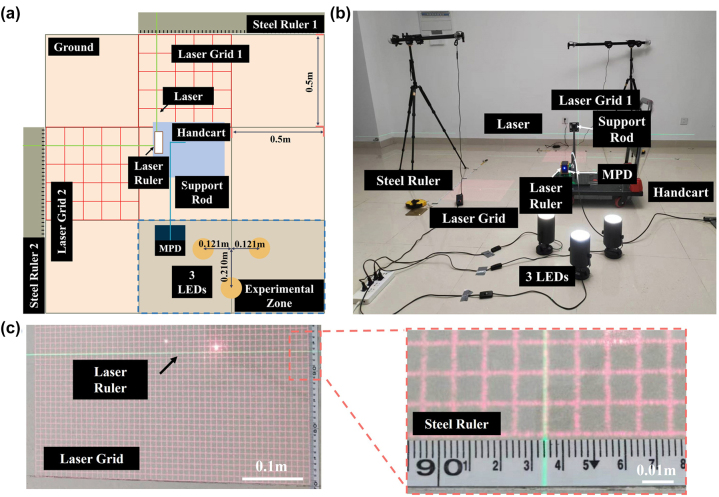

To experimentally assess the accuracy of our positioning scheme, it is essential to compare its test results with ground truth coordinates. However, determining ground truth coordinates within indoor spaces is not straightforward, as it requires expensive instruments such as ultra-high-definition motion capture cameras or sophisticated laser rangefinders. In the absence of such complex equipment, measurements using a ruler are necessary to precalibrate the coordinates of each position. However, this method not only consumes a significant amount of time and effort but also introduces considerable errors. Here, we propose a cost-effective and easily implementable solution capable of determining high-precision ground truth coordinates. The schematic of our solution is depicted in Figure 3(a), where each large square represents a tile area of 0.5 m × 0.5 m. The experimental testing area is outlined by the blue dashed rectangle in the lower right corner, covering an area of 1 m × 0.5 m, corresponding to imaging viewing angles exceeding ±60°. Three LEDs are arranged in an equilateral triangle configuration at the center of the experimental testing zone, with the distances between each LED illustrated in the figure. The MPD is affixed to a movable handcart (indicated by the blue rectangle) with a long support rod, enabling the MPD to move within the experimental testing zone while the handcart remains outside the zone.

Figure 3:

Indoor positioning detection system. (a) Schematic diagram of the positioning solution and the related setups. (b) The physical diagram of the experimental setups and their indoor layout. (c) Schematic diagram for reference position reading, reducing reading errors by aligning the laser ruler with the laser grid.

A laser ruler is also affixed to the handcart, emitting two perpendicular green laser beams directed toward steel rulers placed at the room edges, which allows the positions of the MPD and handcart in the XY plane to be read. To prevent testing errors due to handcart rotation, it is crucial to ensure that the green laser beams strike the steel rulers perpendicularly. Two sets of red laser grids with 50 × 50 square units are projected, aligned parallel to the steel rulers. During testing at each coordinate position, we verify the alignment of the green laser beams with the red laser grids to ensure perpendicular projection onto the steel rulers, enabling accurate MPD position readings. The physical layout of the experimental setup and its indoor arrangement is depicted in Figure 3(b). The MPD positions in the Z direction are adjusted using standard connecting rods, with heights measured using a ruler. Figure 3(c) illustrates the arrangement of the red grids, green laser, and steel ruler. The inset shows the precise alignment of the green laser with the red grids, with the green laser pointing to a definite reading on the steel ruler. The dimensions of the red laser grids can be adjusted through the projection height. In this experiment, the total size of the grids was adjusted to 50 cm × 50 cm, with each square unit measuring 1 cm on a side. By traversing the green laser over each grid line, a specific area can be tested, and by moving the grids, other areas can be tested subsequently.

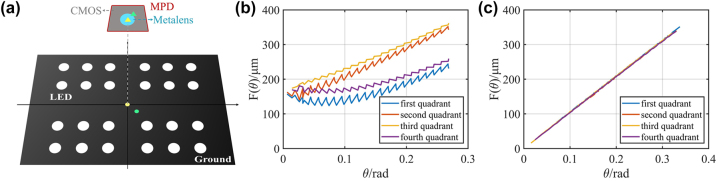

2.4. Determination of the MPD initial position

With the positioning detection system described above, precise relative movement can be achieved. However, the offset between the MPD and the laser ruler is difficult to measure directly and accurately, so the initial coordinate of the MPD must be determined precisely for subsequent position calibration. Benefitting from the relationship between the imaging position and the LED viewing angle expressed in Eq. (3), the MPD initial coordinate can be obtained by imaging multiple LEDs at different positions. We divided the experimental zone into four quadrants, with the center of the zone as the origin, and placed LEDs symmetrically in each quadrant, as shown in Figure 4(a). If the MPD initial position (shown as the blue triangle on the ground in Figure 4(a)) and the metalens center point (shown as the blue circle on CMOS in Figure 4(a)) are only coarsely determined, the experimentally measured curves relating the LED viewing angle θ to the image point distance F(θ) in the four quadrants are irregular, as shown in Figure 4(b). We traversed all points near the coarsely determined MPD initial position and metalens center point and calculated the θ and F(θ) curves in the four quadrants for each candidate. An objective function was then set to characterize the total difference among these four curves, expressed as

| (4) |

where F_i(θ) represents the image point distance corresponding to viewing angle θ in the i-th quadrant, and F̄(θ) represents the average image point distance of the four quadrants. We ultimately determined the accurate MPD initial position and the metalens center by finding the minimum value of the objective function O. Based on the accurate positions, the calculated curves of θ and F(θ) follow the f sinθ relation, and the four curves completely overlap, as shown in Figure 4(c). The distance between the object and the LED can be precisely determined using the magnification ratio, as the imaging distance is fixed at f. The object distance equals the imaging distance multiplied by the magnification ratio. The detailed process for determining the MPD initial position is given in Supplementary Note S2.
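The search described above can be sketched as a small grid search. This is only an illustration under explicit assumptions: the exact form of Eq. (4) is not reproduced here, so the objective below uses a sum of squared deviations from the four-quadrant mean; only the metalens center on the sensor is refined while the device position is held fixed; and the LED images in the four quadrants correspond to the same set of viewing angles. All function names are hypothetical.

```python
import math


def objective(curves):
    """Total spread of the quadrant curves about their mean (assumed squared-deviation form)."""
    total = 0.0
    for samples in zip(*curves):          # one tuple per viewing angle
        mean = sum(samples) / len(samples)
        total += sum((s - mean) ** 2 for s in samples)
    return total


def curves_for_center(points_by_quadrant, center):
    """Image point distances F_i(theta) measured from a candidate metalens centre."""
    cx, cy = center
    return [[math.hypot(px - cx, py - cy) for px, py in pts]
            for pts in points_by_quadrant]


def refine_center(points_by_quadrant, coarse_center, span=5, step=1.0):
    """Traverse candidates around the coarse centre and keep the one minimising the objective."""
    best, best_val = coarse_center, float("inf")
    for i in range(-span, span + 1):
        for j in range(-span, span + 1):
            cand = (coarse_center[0] + i * step, coarse_center[1] + j * step)
            val = objective(curves_for_center(points_by_quadrant, cand))
            if val < best_val:
                best, best_val = cand, val
    return best


if __name__ == "__main__":
    f_px = 450.0 / 1.67                    # focal length expressed in pixels
    true_center = (103.0, 98.0)            # synthetic ground truth for the demo
    diag = 1.0 / math.sqrt(2.0)
    quadrants = [(diag, diag), (-diag, diag), (-diag, -diag), (diag, -diag)]
    points = [[(true_center[0] + f_px * math.sin(math.radians(t)) * qx,
                true_center[1] + f_px * math.sin(math.radians(t)) * qy)
               for t in range(10, 61, 10)] for qx, qy in quadrants]
    print(refine_center(points, (100.0, 100.0)))
```

In the synthetic demo the four curves coincide only when the candidate equals the true center, so the minimum of the objective recovers it. A full implementation would search over the device position as well, as the text describes.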

Figure 4:

Determination of the MPD initial position. (a) Schematic diagram of determining the MPD initial position. The yellow and green circles on the ground represent the correct initial position and the coarsely determined initial position, respectively. The yellow and green triangles on CMOS represent the correct center of metalens and the coarsely determined center of metalens, respectively. (b) When the MPD position and the metalens center are not determined correctly, the curves of LED viewing angles θ and the image point distances F(θ) in four quadrants would be irregular. (c) When the MPD position and the metalens center are determined correctly, the curves of LED viewing angles θ and the image point distances F(θ) in four quadrants would completely overlap.

The arrangement of the three beacon LEDs is also important to the positioning performance. In Supplementary Note S3, we analyze in detail the relationship between the LED spacing and the positioning error, and find that the positioning error is minimized when the spacing between the three LEDs is set to 0.242 m.

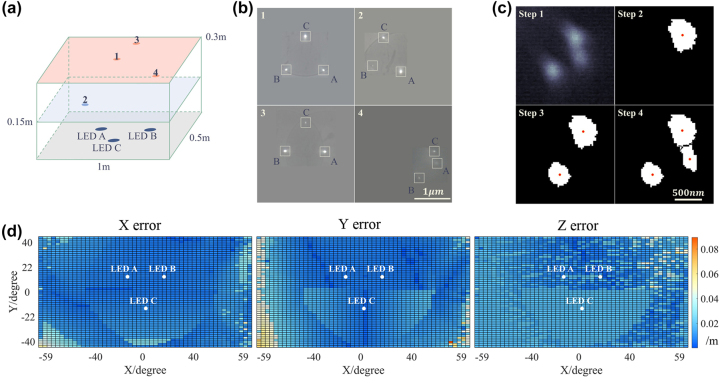

2.5. Positioning performances of the MPD

The positioning performance of the MPD is demonstrated by the positioning errors within the 1 m × 0.5 m experimental zone. Figure 5(a) shows the relative positions of four typical points in the experimental zone, three of which lie in the plane 0.3 m above the ground and one of which lies in the plane 0.15 m above the ground. The images of the three LEDs captured at these positions are shown in Figure 5(b), which indicates that different positions produce different distributions of LED images on the CMOS sensor. Figure 5(b) also shows that when the LED viewing angle is small (position 1), the LED image is relatively bright, making it easy to determine the image center. However, when the LED viewing angle is large (position 4, viewing angle near 60°), the image intensity is too weak for the center to be determined directly.

Figure 5:

Positioning performance of the MPD. (a) Schematic of four MPD locations in the room and (b) the corresponding captured images. (c) Steps for determining the image positions of large viewing angle LEDs, the images of which are too weak to be analyzed directly. The red points represent the determined center of these LED images. (d) The analyzed positioning error distributions of the 0.3 m height plane, in X, Y, and Z directions, respectively. The relative positions of the three LEDs are marked in white. The experimental zone covers a viewing angle region over ±60° in X direction and ±40° in Y direction.

To address this issue, we adopted a point-by-point threshold method for large viewing angle images. The purpose of this method is to binarize the image by applying different intensity thresholds, in order to facilitate the extraction of the contours and centers of the LED images. It is essential to set an appropriate binarization threshold for each LED image, because a threshold that is too high leaves the image contour undetermined, while a threshold that is too low introduces imaging noise into the contour.

For this reason, our method is referred to as the point-by-point threshold method. Figure 5(c) shows the steps of the method. First, the image was preprocessed to remove bad points. Then the threshold was increased until a readable target LED image appeared, shown as step 2 in Figure 5(c). After its center was determined, this LED image area was covered up so that it would not interfere with the determination of the other LED images as the threshold increased. By repeating the above steps, the contours and centers of all three LED images were determined, shown as step 4 in Figure 5(c). The detailed process of this method is given in Supplementary Note S4.
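The idea of choosing a separate threshold for each LED image and masking each image once it has been located can be sketched as follows. This is one possible reading and not the procedure of Supplementary Note S4: it assumes the threshold for each spot is a fixed fraction of the brightest remaining pixel, uses a 4-connected flood fill to extract the spot, takes the intensity-weighted centroid as the center, and covers a padded bounding box afterwards. Bad-pixel removal is omitted. The function name and the parameters `rel_threshold` and `pad` are hypothetical.

```python
def find_led_centers(image, n_leds=3, rel_threshold=0.5, pad=1):
    """Locate up to n_leds spot centres (x, y), brightest first, with a per-spot threshold."""
    h, w = len(image), len(image[0])
    masked = [[False] * w for _ in range(h)]
    centers = []
    for _ in range(n_leds):
        # Brightest pixel that has not been covered yet.
        peak, seed = 0.0, None
        for y in range(h):
            for x in range(w):
                if not masked[y][x] and image[y][x] > peak:
                    peak, seed = image[y][x], (y, x)
        if seed is None:
            break
        threshold = rel_threshold * peak      # threshold specific to this spot
        # Flood fill the connected region at or above the threshold.
        stack, blob = [seed], []
        masked[seed[0]][seed[1]] = True
        while stack:
            y, x = stack.pop()
            blob.append((y, x))
            for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                if (0 <= ny < h and 0 <= nx < w and not masked[ny][nx]
                        and image[ny][nx] >= threshold):
                    masked[ny][nx] = True
                    stack.append((ny, nx))
        # Intensity-weighted centroid of the region.
        total = sum(image[y][x] for y, x in blob)
        cx = sum(x * image[y][x] for y, x in blob) / total
        cy = sum(y * image[y][x] for y, x in blob) / total
        centers.append((cx, cy))
        # Cover the spot (plus a margin) so it is ignored in later passes.
        ys = [p[0] for p in blob]
        xs = [p[1] for p in blob]
        for y in range(max(0, min(ys) - pad), min(h, max(ys) + pad + 1)):
            for x in range(max(0, min(xs) - pad), min(w, max(xs) + pad + 1)):
                masked[y][x] = True
    return centers
```

Because each threshold is tied to the local peak, a dim spot at a large viewing angle is extracted with a much lower threshold than a bright spot near normal incidence, which a single global threshold cannot achieve.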

Positioning errors were calculated by comparing the experimentally obtained position coordinates with the ground truth position coordinates at each testing point. A total of 2,601 (51 × 51) positions were tested by the MPD on the 0.3 m height plane within the 1 m × 0.5 m experimental zone. This zone covers a wide-angle region spanning over ±60° in the X direction and ±40° in the Y direction, with sampling intervals of 2 cm and 1 cm, respectively. The calculated average positioning errors in the X, Y, and Z directions are 0.0095 m, 0.0116 m, and 0.0142 m, respectively, indicating a positioning accuracy approaching the millimeter level across the wide-angle area. However, because operational errors are inevitable when testing thousands of positions, certain points may exhibit significant errors. These points are included when averaging the positioning accuracy and can skew the overall assessment. To address this, we also calculated the positioning accuracy for 90 % and 80 % of the positions in the region, as detailed in Table 1. These results demonstrate that the positioning accuracy in the majority of the wide-angle region has indeed reached millimeter-level precision. The results also show that the method is slightly less accurate in the Z direction than in the X and Y directions. From the perspective of the imaging model shown in Figure 1(b), this discrepancy arises because movements in the X and Y directions are directly reflected in changes of the LED imaging positions on the CMOS sensor, whereas movements in the Z direction are reflected only indirectly, through a geometric projection, which introduces additional positioning errors.

Table 1:

Average positioning accuracies for different percentages of area.

| Area percentage | X error (m) | Y error (m) | Z error (m) |
|---|---|---|---|
| 100 % | 0.0095 | 0.0116 | 0.0142 |
| 90 % | 0.0078 | 0.0091 | 0.0129 |
| 80 % | 0.0073 | 0.0080 | 0.0124 |
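Statistics of the kind listed in Table 1 can be computed along the lines of the following minimal sketch. The text does not state how the 90 % and 80 % subsets were selected, so the sketch assumes that the positions with the largest absolute errors are discarded before averaging; the function name is hypothetical and the sample values are invented.

```python
def mean_error_of_best_fraction(errors, fraction):
    """Mean absolute error over the given fraction of positions with the smallest errors."""
    if not 0.0 < fraction <= 1.0:
        raise ValueError("fraction must be in (0, 1]")
    ranked = sorted(abs(e) for e in errors)
    keep = max(1, int(round(fraction * len(ranked))))
    return sum(ranked[:keep]) / keep


if __name__ == "__main__":
    sample_errors_m = [0.004, 0.006, 0.007, 0.008, 0.009, 0.010, 0.011, 0.012, 0.015, 0.040]
    for frac in (1.0, 0.9, 0.8):
        print(f"{int(frac * 100)} %: {mean_error_of_best_fraction(sample_errors_m, frac):.4f} m")
```

Applying the function separately to the X, Y, and Z error lists gives one row of such a table per fraction.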

The MPD's imaging performance is primarily influenced by the viewing angle rather than the object distance. As a result, the positioning accuracy of the MPD is mainly determined by the viewing angle. The millimeter-precision positioning performance of the MPD therefore remains applicable to larger areas, provided that the viewing angle stays within ±60°. This conclusion is experimentally supported by the positioning accuracy results across different height planes, as presented in Supplementary Note S5.

3. Discussion and conclusion

Our method can be extended to determine not only the three-dimensional coordinates (x, y, z) but also the attitude angles (ψ_x, ψ_y) of the MPD. In this case, however, five beacon LEDs are necessary. By establishing imaging relationships for these LEDs, five independent geometric equations can be formulated, which allows all position and orientation angles to be determined. The detailed derivation is provided in Supplementary Note S6. Furthermore, our approach scales well in terms of workspace expansion. By simply increasing the number of beacon LEDs, the working range can be extended to hundreds of meters. This makes our scheme applicable not only to positioning within single rooms but also to scenarios such as indoor parking lots, large shopping malls, and warehouses. The position information can be encoded into the on–off states of the LEDs and captured by the camera [45]. A detailed analysis of the workspace expansion is provided in Supplementary Note S7. When the viewing angle of an LED increases, the image intensity decreases, which reduces the positioning accuracy. One effective way to improve the imaging efficiency at large viewing angles is topological optimization, which takes the imaging efficiency as the optimization objective and uses the structure shapes as optimization parameters [46]. Through the optimization process, it is possible to obtain free-form structure units or nonperiodically arranged units with high modulation efficiency for large viewing angle imaging.

Compared to traditional lens positioning devices, the advantages of our metalens-based device are reflected in the following aspects. First, traditional lenses are typically bulk lenses with curved surfaces. In contrast, metalenses are designed and fabricated on a flat surface, with an ultra-thin thickness, making our device more compact. Second, thanks to the unique polarization characteristics of our geometric phase metalens, the imaging contrast of beacon LEDs can be effectively enhanced through polarization filtering when there is significant noise interference, thereby improving the positioning accuracy. Third, the metalens we designed possesses the capability for wide-angle imaging, which is difficult to achieve with a single traditional bulk lens (see Supplementary Note S8 for detailed analysis). Therefore, our positioning device based on the wide-angle metalens offers a much larger working range. In addition, the metasurface unit structure provides a vast space for parameter design and optimization, which offers a pathway to achieve more complex functions, such as high-efficiency wide-angle imaging.

In summary, using a metalens-integrated camera, we have achieved high-precision positioning in a wide-angle area by imaging beacon LEDs. The quadratic phase metalens ensures accurate imaging of beacon LEDs within a 120° viewing angle range, unaffected by external signals. Consequently, our method achieves millimeter-level positioning accuracy across most of the wide-angle experimental region. Moreover, we have introduced a point-by-point threshold binarization method to determine the imaging positions of the low-intensity, low-contrast LED images that occur at large viewing angles. This approach ensures high positioning accuracy within a 120° field of view, ultimately delivering millimeter-level accuracy for the majority of positions. Beyond its high-precision positioning capabilities, the ultra-compact size of our MPD makes it highly suitable for integration into the camera modules of smartphones, drones, and other devices, presenting a novel technical solution for future high-performance indoor communication, IoT, and related fields.

4. Experimental section

Metalens fabrication: In step 1, an undoped silicon nitride (Si3N4) layer with a thickness of 800 nm was deposited on a fused-silica (SiO2) substrate by plasma-enhanced chemical vapor deposition (PECVD). In step 2, a 200 nm PMMA A4 resist film was spin coated onto the silicon nitride surface and baked at 170 °C for 5 min. In step 3, a 42 nm thick layer of water-soluble conductive polymer (AR-PC 5090) was spin coated on the resist, and the metalens structure pattern was written using an E-beam writer (Elionix ELS-F125). The conductive polymer was then dissolved in water, and the resist was developed in a resist developer solution. In step 4, an electron-beam-evaporated chromium layer was used to reverse the generated pattern with a lift-off process and then served as a hard mask for dry etching the silicon nitride layer. In step 5, the dry etching was performed in a mixture of CHF3 and SF6 plasmas using an inductively coupled plasma reactive ion etching process (Oxford Instruments, PlasmaPro 100 Cobra300). In step 6, the chromium layer was removed with a stripping solution (Ce(NH4)2(NO3)6).

Supporting Information

See Supplementary 1 for supporting content.

This article contains supplementary material (https://doi.org/10.1515/nanoph-2024-0277).

Footnotes

Research funding: National Natural Science Foundation of China (Nos. 12104223, 61960206005 and 61871111), Jiangsu Key R&D Program Project (No. BE2023011-2), Young Elite Scientists Sponsorship Program by CAST (No. 2022QNRC001), the Fundamental Research Funds for the Central Universities (Nos. 2242022R10128 and 2242022k60001), Project of National Mobile Communications Research Laboratory (No. 2024A03), and Postgraduate Research & Practice Innovation Program of Jiangsu Province (No. KYCX24_0409).

Author contributions: JC and ZZ developed the idea. ML and YZ proposed the design and performed the numerical simulations. ML and YW conducted the optical testing experiments with assistance from Z-WZ, HL, and ZY. ML and YW fabricated the samples. JC and ZZ supervised the project. JC and ML analyzed the results and wrote the manuscript. All authors contributed to the discussion.

Conflict of interest: Authors state no conflicts of interest.

Data availability: The datasets generated and analyzed during the current study are available from the corresponding author upon reasonable request.

Contributor Information

Muyang Li, Email: 230238197@seu.edu.cn.

Yue Wu, Email: 230238218@seu.edu.cn.

Haobai Li, Email: 220225944@seu.edu.cn.

Zi-Wen Zhou, Email: 220230979@seu.edu.cn.

Yanxiang Zhang, Email: 18030138550@163.com.

Zhongyi Yuan, Email: 220221046@seu.edu.cn.

Zaichen Zhang, Email: zczhang@seu.edu.cn.

Ji Chen, Email: jichen@seu.edu.cn.

