Abstract

Recent advancements in age-related macular degeneration treatments necessitate precision delivery into the subretinal space, emphasizing minimally invasive procedures targeting the retinal pigment epithelium (RPE)-Bruch’s membrane complex without causing trauma. Even for skilled surgeons, the inherent hand tremors during manual surgery can jeopardize the safety of these critical interventions.

This has fostered the evolution of robotic systems designed to prevent such tremors. These robots are enhanced by fiber Bragg grating (FBG) sensors, which sense the small interaction forces between the surgical instruments and retinal tissue. To enable the community to design algorithms that take advantage of such force feedback, this paper provides a specialized dataset that integrates optical coherence tomography (OCT) imaging with the aforementioned force data.

We introduce a unique dataset of force sensing data synchronized with OCT B-scan images, recorded with a setup that combines robotic assistance and an OCT-integrated microscope. Furthermore, we present a neural network model for image-based force estimation to demonstrate the dataset’s applicability.

Keywords: Data Sets for Robotic Vision, Medical Robots and Systems, Data Sets for Robot Learning

I. Introduction

In recent years, age-related macular degeneration (AMD) treatment has seen significant advancements. Innovative approaches, such as gene vector therapies and stem cell transplants, offer substantial promise to overcome this condition. However, their effectiveness hinges on the delicate delivery of these treatments into the subretinal space, with a specific focus on the retinal pigment epithelium (RPE)-Bruch’s membrane complex.

During the delivery process, it becomes imperative to precisely puncture the superior retinal layers while protecting the integrity of the RPE layer and the critical retinal support cells situated below it. Any inadvertent damage inflicted upon these cells could result in irreversible harm to the patient, underscoring the utmost care and precision required in these procedures.

During manual surgery, the precision of surgery can be affected by natural hand tremors, even when highly skilled surgeons are involved. In procedures demanding the utmost precision, even minor tremors have the potential to inflict damage on critical tissues. To address this challenge, researchers have developed specialized robotic systems to mitigate these tremors [9], [12], [14], [15], [17], [20]. These robotic systems can mimic the surgeon’s movements while effectively filtering out any unintended tremors that might occur if the procedure were solely human-controlled. Furthermore, there have been significant advancements in augmenting these robotic systems with force-sensing capabilities. This enhancement involves the integration of Fiber Bragg Grating (FBG) sensors into the surgical equipment, allowing the robots to provide valuable feedback regarding the subtle forces involved in the interaction between the tool’s shaft and the sclera, as well as the interaction between the needle tip and the retinal tissue during surgery. These forces are so minuscule that they are imperceptible during manual surgery, making the robotic system’s ability to capture this data exceptionally valuable. For example, this knowledge is crucial if we aim to prevent the exposure of retinal tissue to excessive pressure. Additionally, recording and analyzing these forces are essential in gaining insights into various procedures that require high-frequency force measurements, such as analyzing potential variations in needle tip force during retinal punctures or detecting and averting any unwanted needle tip contact with the RPE layer.

Developing algorithms that address these challenges requires a suitable dataset. Such datasets are not publicly available, because generating them is very complex. Apart from the force sensing tools, one of the main required components in this data generation process is the Optical Coherence Tomography (OCT) device. In our case, OCT uses near-infrared light to capture high-resolution cross-sectional images of the different retinal layers at high frame rates. When these cross-sectional images are aligned with the surgical instrument during the procedure, the modality captures both the instrument position within the tissue and any deformation during the interaction. This has been used to visualize subretinal injection procedures and is an excellent option for acquiring ground truth information regarding the position of the instrument within the retina and the phase of the procedure.

When collecting both force interaction data and OCT imaging data, it is crucial to timestamp every data point so that the two streams can be synchronized later. An example of this can be seen in Figure 1: The blue curve in the graph shows the force data along the insertion direction, with some of the B-scans at key moments aligned below. Between the second and third B-scan, we can clearly identify a puncture, both from the drop in the force graph and from the relaxation of the retinal layers in the B-scans.

Fig. 1.

Synchronized B-scan and force sensor stream. The smoothed force data is plotted along two axes, together with the norm of the force. As a showcase, the B-scans from three noteworthy time stamps are visualized, from left to right: before touching the ILM (internal limiting membrane), during the insertion, and after the puncture of the retina.

Thus, when building datasets to develop algorithms for force sensors in retinal surgery, we need to integrate a force sensing tool, ideally mounted on a robotic system to guarantee controlled and repeatable data acquisition, and an imaging system such as OCT that can capture high-resolution imaging and assess the status of instrument insertion for ground truth generation.

In this paper, for the first time, we provide a dataset that integrates force sensing data at an update rate of 180 Hz with synchronized temporal OCT B-scan images collected at 20 frames a second. The dataset was generated using a complex system consisting of a force-sensing tool in conjunction with a robot platform and a microscope with an integrated OCT system. In the absence of any similar dataset, we expect that this dataset will help the community advance research in this field. The dataset is accessible through our public repository 1.

To validate the correlation between the measured force values and the imaging data and to provide an example of a potential application of our dataset, we developed a neural network that demonstrates the feasibility of image-based force estimation. The model is designed to process a video sequence of N consecutive OCT B-scans. For each input frame in this sequence, the network tries to estimate the corresponding force exerted on the needle tip, yielding N force values for N images. We use a time series approach because the forces applied to tissue do not depend solely on the deformation observed in a single frame; the sequential context of the prior frames is also essential, as it conveys information about movement direction and velocity. We argue that force increments may vary with the speed at which the needle traverses the tissue.

II. Related Works

As one of the significant challenges of moving from manual to robot-assisted surgery is the loss of perception of forces applied to the retina, researchers have worked on technologies to reintroduce this perception, for example, by using auditory feedback or adaptive force control instead. [4]-[6]

Direct force sensing is often not feasible in a surgical environment. Force sensors usually come at a very high cost, especially if they must be sensitive enough for the small forces we see during microsurgery. Sterilization is also a concern, as the tools are in direct contact with the patient. The tools need to be easily replaceable in case of material fault, and any increase in tool size due to the attached force sensor must not interfere with the surgical procedure. Because of these factors, researchers have tried integrating indirect force sensing into the surgical workflow.

In alignment with this, Aviles et al. proposed an innovative method focused on Vision-Based Force Measurement in 2014. The methodology captures a tissue deformation mapping and then uses RNNs to estimate the force by looking at kinematic variables and the extracted deformation mapping. [1]

In 2018, Gessert et al. used 3D CNNs to estimate the force between tissue and an instrument from OCT volume scans. They aimed to provide haptic feedback to surgeons during robot-assisted teleoperation using OCT scans. They used a Siamese 3D CNN architecture to compare the deformed sample to a reference image without deformation. [7] As this architecture will not look at a sequence of images, it is important to note that the model will not include the time of the deformation as a factor, even though this is also relevant for measuring the applied force.

The same group also researched embedding a single optical fiber into a needle. They then used the A-scan data over time to estimate the force on the needle tip. [8] Compared to the results of the paper mentioned before, the results of the single optical fiber seem to be much more reliable, with the MAE being consistently lower.

In 2023, Neidhardt et al. used shear wave elastography to observe local tissue properties and combined this with deformation detected using OCT. Their results indicate that elastic properties are critical for accurate image-based force estimation across tissue types. [16]

III. Experimental setup

A. OCT Microscope

This work uses Optical Coherence Tomography (OCT) to capture volumetric scans of the different retinal layers. An A-scan results from sending a single near-infrared beam into tissue and analyzing the different layers by looking at the reflection. We can concatenate multiple A-scans into a two-dimensional B-scan and multiple B-scans into one three-dimensional C-scan.

All microscope and OCT images were recorded using a Leica Proveo 8 microscope with an intraoperative OCT EnFocus™ device. Capturing a C-scan with this OCT takes multiple seconds (the exact time depends on the resolution). As we are interested in capturing B-scans in real time, using C-scans in our data collection is not feasible. By interfacing with the Leica API, we can collect B-scans with an average frame rate of around 20 frames a second, allowing us to use the images for real-time analysis. We argue that if the needle tip is aligned with the B-scan, most relevant information should still be captured. Each B-scan consists of 1000 A-scans, capturing a total length of 3 mm.
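As a quick check of the sampling figures stated above, and assuming that the 1000 A-scans are evenly spaced along the 3 mm scan length and that the frame rate is exactly 20 frames a second (neither is stated explicitly), the lateral sample spacing and the time between consecutive B-scans work out to roughly:

```latex
\Delta x \approx \frac{3\,\mathrm{mm}}{1000} = 3\,\mu\mathrm{m}\ \text{per A-scan},
\qquad
\Delta t \approx \frac{1}{20\,\mathrm{s}^{-1}} = 50\,\mathrm{ms}\ \text{per B-scan}
```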

B. Robotic Platform

For our insertions, we use the Steady-Hand Eye Robot version 2, the successor to the Eye Robot 1, developed by researchers at Johns Hopkins University. [21] The robot has 5 DoF and is a development and research platform for the field of microsurgery. During (retinal) microsurgery, surgeons must make incisions with sub-millimeter precision. As the name suggests, the Steady-Hand Eye Robot was developed to steady tool movement by following the hand movement of a surgeon while removing hand tremors during microsurgery. Apart from this feature, the robot can also be controlled by a computer using ROS [18], allowing for granular control that keeps any needle movement aligned with a B-scan plane while maintaining a consistent insertion angle.

C. FBG-based Force Sensing

FBG sensors capture the tiny forces between the needle tip and retinal tissue. The sensors rely on the bending of the tool along the tool shaft during insertion to reliably measure the forces applied to the needle. An interrogator device then converts the raw FBG data into force data using a calibration matrix, custom for each tool we use. This provides us with a ground truth during training and testing. In our experiments, we conducted experimental insertions with three different tools:

Tool A is a 25-gauge (OD=500 μm) sensorized instrument with optical fibers to detect tooltip forces [19]. Three fiber Bragg grating optical strain–sensing fibers were integrated into a 50-mm-long titanium wire of 0.5 mm diameter. A 3-mm-long 25-gauge bevel-tipped needle piece was attached to the titanium wire, which serves as the tool shaft, and the needle tip was bent at 30°.

Tool B is a dual force-sensing tool [10] that can measure the contact force at the tooltip and tool-to-sclera contact point. The needle tip consists of the distal end of a 36-gauge (OD=110 μm) beveled needle type NF36BV (World Precision Instruments, FL, USA).

Tool C is similar to tool A, but the tooltip consists of a distal portion (10 mm length) of a 36-gauge (OD=110 μm) micro-needle type INCYTO Needle-RNT (INCYTO Co., Ltd., Korea).

The sensors are monitored by an optical-sensing interrogator (Hyperion si115; Micron Optics, Inc.), which has four channels, a resolution of 0.001 nm, and a scan frequency of 2 kHz. It is important to note that even though we measure interaction forces during the insertions, the calibration of such tools is very sensitive, and even slight changes to a tool’s sharpness require re-calibration. The calibration matrix we used during our experiments was created for previous work, so the collected force information does not necessarily reflect real-world force values and should be seen as tool-specific force information. All instruments detect forces in 2 DoF; we do not account for axial forces. We argue that this captures the most important forces of the interaction given our insertion angle. Even though this might be a limitation for some future work, it is not an issue for most of our applications, as we are interested not in the absolute force values but in relative force changes over time.
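The conversion from raw FBG readings to forces described in this section can be sketched as a matrix-vector product. This is a minimal illustration, assuming a purely linear model that maps the wavelength shifts of three fibers to two transverse force components. The function name, the matrix values, and the example shifts are hypothetical and are not the calibration used for the dataset.

```python
from typing import List, Sequence


def wavelength_shifts_to_force(calibration: Sequence[Sequence[float]],
                               shifts_nm: Sequence[float]) -> List[float]:
    """Map FBG wavelength shifts (nm) to force components via a linear calibration matrix."""
    if any(len(row) != len(shifts_nm) for row in calibration):
        raise ValueError("each calibration row needs one entry per FBG channel")
    # One output force component per calibration row.
    return [sum(k * s for k, s in zip(row, shifts_nm)) for row in calibration]


# Hypothetical 2x3 calibration matrix (mN per nm); real matrices are tool-specific.
K = [[100.0, -50.0, -50.0],
     [0.0, 86.6, -86.6]]
shifts = [0.010, 0.002, 0.004]  # illustrative wavelength shifts in nm

fx, fy = wavelength_shifts_to_force(K, shifts)
force_norm = (fx ** 2 + fy ** 2) ** 0.5
```

Because the matrix is specific to each tool, the same wavelength shifts would yield different force values for tools A, B, and C.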

IV. Building the Data Set

A. Eye preparation

Our experiments used cadaveric pig eyes that were continuously cooled and used within 36 hours after harvesting. When the eyes are left intact, the lens fogs up over time, obstructing the view into the eye. Before performing any insertions, the eyes were cleaned of any unwanted tissue left over from harvesting and cut in half to expose the retina (open-sky procedure), providing a clear view into the eye even if the lens had fogged up previously. This also allows for easier access to the retinal tissue, as the needle is not restricted by a fixed entry point through a sclerotomy opening.

B. Performing insertions

When performing insertions, we tried to maintain a similar angle across all insertions while following the bend of the needle tip when moving through the tissue. Those two angles varied depending on which tool we were using when collecting data.

When looking at the data collected with tool B, we quickly noticed that the signal-to-noise ratio is too low to see any difference in force during an insertion, even after applying a low-pass filter to remove the noise. We saw an increase in force when interacting with the RPE. Still, as we are primarily interested in the interaction with the ILM and the puncture, we decided not to proceed with data collection using tool B.

During data collection with tool A, we identified the tooltip bend to be around 30° and adjusted the insertion direction accordingly. We tried to keep the insertion angle relatively shallow, between 40° and 60°, keeping the needle from interacting with the RPE too early and mainly capturing the interaction forces between the upper retinal layers and the needle tip.

When performing insertions with tool C, we were able to see the point of puncture, marked by a quick bounce-back of the upper retinal layers (see Figure 7), provided that the eyes were fresh enough, did not show any noticeable detachments of the retina, and the insertion angle was steep enough. We therefore adjusted the data collection to reproduce this bounce-back as often as possible, allowing us to mark the point of puncture for all insertions showing this behavior. For those reasons, we tried to keep the insertion angle as steep as possible, around 80°. A more vertical angle is not possible because the needle tip is bent and the tool handle would otherwise block the OCT image. The needle tip is bent by 20°, so we adjusted the robot movement to account for the altered angle.

Fig. 7.

Image sequence of needle insertion showing bounce-back of retinal tissue during an insertion using tool C. The orange arrow shows the direction of needle movement, the blue arrow the direction of the retinal layers. In the center image, the direction of the retina reverses, as it stops deforming further and starts to jump back.

C. Data processing

All data points are timestamped, as the B-scan images and the force information are captured on two different devices. To synchronize the two data sources, we assume that the clocks of both devices are synchronized. During data loading, we look up the force values closest to the timestamp of the current B-scan. Since the force values are captured at a higher frequency than the B-scans, we do not take only the single closest force value. Instead, we average the N values closest in time to the B-scan of interest, adjusting N to the sampling rate.
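The averaging step described above can be sketched as follows. This is an illustrative implementation under stated assumptions, not the loader shipped with the dataset: the function name is hypothetical, timestamps are assumed to be sorted and expressed in seconds on a shared clock, and N = 9 is chosen here only because 180 Hz force data and 20 frames a second give about nine force samples per B-scan.

```python
from bisect import bisect_left
from typing import List, Sequence


def average_force_near(frame_time: float, force_times: Sequence[float],
                       force_values: Sequence[float], n: int) -> float:
    """Average the n force samples whose timestamps are closest to frame_time.

    force_times must be sorted in ascending order.
    """
    if n < 1 or n > len(force_times):
        raise ValueError("n must be between 1 and the number of force samples")
    right = bisect_left(force_times, frame_time)  # first sample at or after the frame
    left = right - 1                              # last sample before the frame
    chosen: List[float] = []
    while len(chosen) < n:
        # Take the nearer of the two candidate neighbours, expanding outwards.
        take_left = right >= len(force_times) or (
            left >= 0
            and frame_time - force_times[left] <= force_times[right] - frame_time
        )
        if take_left:
            chosen.append(force_values[left])
            left -= 1
        else:
            chosen.append(force_values[right])
            right += 1
    return sum(chosen) / n


# Synthetic one-second force stream at 180 Hz; the value equals the sample index.
force_times = [i / 180 for i in range(180)]
force_values = [float(i) for i in range(180)]
frame_force = average_force_near(0.5, force_times, force_values, n=9)
edge_force = average_force_near(-1.0, [0.0, 1.0, 2.0], [10.0, 20.0, 30.0], n=2)
```

Averaging a symmetric window around the frame timestamp also acts as a mild smoothing step on the force signal.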

Since the second dataset (recorded with tool C) shows a clear point of puncture during the insertions due to the observed bounce-back, we decided to label three critical points during insertion for the collected data:

Traversing retina: This marks the point from which the needle touches the ILM and traverses the retina.

Retina punctured: Marks the point of puncture of the retina, identified by looking at the bounce-back of the ILM.

Traversing RPE: This marks the point at which the needle hits the RPE. In an optimal insertion, this would never happen, since touching the RPE causes irreversible damage to the eye. Since we had no way of stopping the needle at the correct point in time, this type of interaction is still contained in our dataset. For some of the pig eyes, the needle touches the RPE slightly before the puncture of the retina. We think this is because of a slight deterioration of the retinal layers, leading to a higher elasticity and, therefore, a later point of puncture. The insertion angle and the sharpness of the needle also influence when the puncture happens.

These labels can be used to classify or predict different phases during sub-retinal injections. One possible use-case could be puncture prediction: During insertion, we want to stop the robot in the critically small time window just when a puncture happens but before the needle hits the RPE and destroys the retina in that region.
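As a simple baseline against which the puncture labels could be compared, a puncture can be flagged when the force drops sharply relative to its recent peak. The sketch below is purely illustrative and is not a method evaluated in this paper. The function name, the window length, and the drop threshold are hypothetical and would need tuning per tool.

```python
from typing import Optional, Sequence


def detect_force_drop(force: Sequence[float], window: int,
                      min_drop: float) -> Optional[int]:
    """Return the first index at which the force lies at least min_drop below
    the maximum of the preceding `window` samples, or None if there is none."""
    if window < 1:
        raise ValueError("window must be at least 1")
    for i in range(1, len(force)):
        recent_peak = max(force[max(0, i - window):i])
        if recent_peak - force[i] >= min_drop:
            return i
    return None


# Synthetic insertion: force builds up, then drops at index 6.
trace = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0, 2.0, 2.1]
puncture_index = detect_force_drop(trace, window=3, min_drop=2.0)
no_puncture = detect_force_drop([0.0, 1.0, 2.0, 3.0], window=3, min_drop=2.0)
```

A detector like this only reacts after the drop has happened, so it addresses detection rather than prediction.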

V. Method

To demonstrate possible use cases of the dataset, we have employed a neural network to show the feasibility of image-based force estimation. The goal is to predict the force values by providing a sequence of N consecutive B-scans. If we feed N images into the network, we expect the network to return N force values we can compare to the ground truth force values from our dataset. We look at multiple frames in a row instead of the deformation of single frames only because the previous frames will also play a role in force estimation. The increase in force differs depending on the speed at which the needle traverses the tissue.

As the problem described above is a time series problem, it is reasonable to tackle it with an LSTM approach. In the first step, the network extracts the features of each image into a vector of length 512 using ResNet50 [11]. Those feature vectors are then fed into the LSTM, which estimates the force magnitude for every feature vector. Three LSTM layers with a hidden layer size of 1024 and a dropout of 0.15 are used for our demonstration. The LSTM output of length 1024 is then reduced to 128 and finally to a continuous force value using two fully connected layers.
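The architecture described above can be sketched in PyTorch as follows. This is a hedged illustration rather than the released model: the class name is hypothetical, the ReLU between the two fully connected layers is an assumption, and because the text does not describe how the ResNet50 features are reduced to length 512, the image encoder is left as a pluggable module. A tiny convolutional stand-in is used here so that the example runs without pretrained weights.

```python
import torch
from torch import nn


class ForceEstimator(nn.Module):
    """Per-frame image encoder followed by a 3-layer LSTM and two FC layers."""

    def __init__(self, encoder: nn.Module, feature_dim: int = 512):
        super().__init__()
        self.encoder = encoder  # maps (B, C, H, W) images to (B, feature_dim)
        self.lstm = nn.LSTM(input_size=feature_dim, hidden_size=1024,
                            num_layers=3, dropout=0.15, batch_first=True)
        # 1024 -> 128 -> 1; the ReLU in between is an assumption.
        self.head = nn.Sequential(nn.Linear(1024, 128), nn.ReLU(),
                                  nn.Linear(128, 1))

    def forward(self, frames: torch.Tensor) -> torch.Tensor:
        # frames: (batch, N, channels, height, width)
        batch, n = frames.shape[:2]
        features = self.encoder(frames.flatten(0, 1))        # (batch * N, feature_dim)
        hidden, _ = self.lstm(features.reshape(batch, n, -1))  # (batch, N, 1024)
        return self.head(hidden).squeeze(-1)                 # (batch, N) force values


# Hypothetical stand-in encoder producing 512-dimensional features.
stand_in_encoder = nn.Sequential(
    nn.Conv2d(1, 8, kernel_size=3, stride=2, padding=1),
    nn.ReLU(),
    nn.AdaptiveAvgPool2d(1),
    nn.Flatten(),
    nn.Linear(8, 512),
)

model = ForceEstimator(stand_in_encoder).eval()
with torch.no_grad():
    pred = model(torch.zeros(2, 5, 1, 32, 32))  # 2 sequences of N = 5 B-scans
```

The output has one force value per input frame, so a sequence of N B-scans yields N predictions that can be compared with the synchronized ground truth.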

For training, we used a smooth L1 loss:

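$$
L_{\beta}(\hat{f}, f) =
\begin{cases}
\dfrac{(\hat{f} - f)^2}{2\beta}, & \text{if } |\hat{f} - f| < \beta \\
|\hat{f} - f| - \dfrac{\beta}{2}, & \text{otherwise}
\end{cases}
\qquad (1)
$$

where $\hat{f}$ is the predicted force, $f$ is the ground-truth force, and $\beta$ is the threshold between the quadratic and the linear regime. The loss function penalizes errors less if they are small enough to be caused by noise. The final loss value is calculated by summing the error over every point in the sequence.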
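For illustration, the standard smooth L1 loss, summed over a sequence as described above, can be written in a few lines. This is a minimal sketch with hypothetical function names. The threshold `beta` is left as a parameter, and the value 1.0 used in the example is an arbitrary choice, not necessarily the setting used for training.

```python
from typing import Sequence


def smooth_l1(error: float, beta: float) -> float:
    """Standard smooth L1: quadratic for |error| < beta, linear otherwise."""
    abs_err = abs(error)
    if abs_err < beta:
        return 0.5 * abs_err ** 2 / beta
    return abs_err - 0.5 * beta


def sequence_loss(pred: Sequence[float], target: Sequence[float],
                  beta: float) -> float:
    """Sum of the per-frame smooth L1 errors over one sequence."""
    if len(pred) != len(target):
        raise ValueError("prediction and target must have the same length")
    return sum(smooth_l1(p - t, beta) for p, t in zip(pred, target))


small = smooth_l1(0.5, beta=1.0)  # quadratic regime
large = smooth_l1(2.0, beta=1.0)  # linear regime
total = sequence_loss([0.5, 3.0], [0.0, 1.0], beta=1.0)
```

Errors below `beta` contribute quadratically and are therefore weighted less, which is what makes the loss tolerant of sensor noise.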

VI. Materials

We collected 372 insertions on 23 different pig eyes for the first dataset recorded with tool A. Of those, 150 insertions were removed due to misalignment of the B-scans or inadequate force feedback due to deterioration of the pig eyes in that area, leaving 222 insertions.

For the second dataset recorded with tool C, we collected 183 insertions on 16 pig eyes. Of those, 68 insertions were removed due to misalignment of the B-scans or inadequate force feedback due to deterioration of the pig eyes in that area, leaving 115 insertions.

All pig eyes used were collected a maximum of 48 hours prior. When collecting insertion data for the second dataset, we used eyes harvested in the last 16 hours. If an eye showed signs of deterioration, such as major retinal detachments, it was not used for the data collection.

VII. Results

A. Image-based force estimation

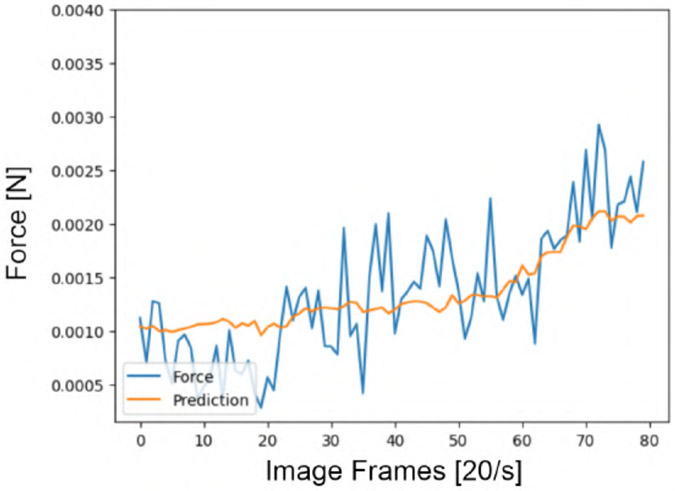

Figures 8 and 9 show the effectiveness of the trained LSTM in performing force estimation on the first dataset collected with tool A. The blue curve shows the raw force data for each insertion. The orange line shows the force prediction of the model, given only the B-scan series of the insertion as input.

Fig. 8.

Force estimation using the LSTM model, given a sequence of 80 images

Fig. 9.

Force estimation using the LSTM model, showing some underestimation at the end of the series

B. Discussion and Further Works

In this paper, we have explored the correlation between intraoperative OCT B-scans and the data from the force sensor mounted on the robot end-effector. Several issues remain for further investigation. The high noise in the force sensor increases the variance of the learned models, so the current system does not yet meet medical standards. However, the insights gained have substantial implications for optimizing future robotic setups. Such setups typically require a complicated design with multiple sensing and imaging devices, each needing per-sensor and pairwise calibration. Reducing the number of sensors would make the integration of robotic eye surgery more efficient. Learning to predict the essential features of the OCT data from the force data, and vice versa, identifies the areas where the two sensors can be used interchangeably. Both sensors are crucial in current setups, but our results suggest that, for specific tasks, one has the potential to replace the other. At the same time, new aspects of the underlying data could be revealed by learning the two modalities jointly in a multi-modal paradigm. Furthermore, this dataset captures the full interaction of the needle tip and the retina during subretinal insertions, including the deformation of the retinal layers coupled with the force feedback. Learning the deformations of the retinal layers from the force values, similar to the approach proposed in [3], helps in understanding the elastic properties of the retinal tissue, which is highly relevant for planning and simulation.

Another possible use case is training a model to perform puncture detection and prediction based on the images, the force values, or both. Puncture detection can be beneficial in a surgical environment, where an insertion can be terminated once a puncture is detected. Conversely, when a puncture is undesired, a puncture prediction model can stop the robotic movement when the risk of puncture increases. An extension of this work could also be estimating the needle-tip position in the subretinal regions. As discussed in section I, localizing the needle tip between the retinal layers is essential. In particular, the position of the instrument between the ILM and RPE layers is of interest; for the treatment of age-related macular degeneration (AMD), for instance, the therapeutic payload must be delivered safely into the space between the photoreceptors and the RPE-Bruch’s membrane complex [13]. Conventionally, this constraint is controlled using the OCT microscope. With needle-tip localization based on the force data, the need for an OCT microscope could be reduced.

In contrast to [7], which considers static 3D OCT scans, we tackle the problem using real-time 2D B-scans aligned with the tool over time. Both approaches can be relevant for different use cases, but since force is inherently time-dependent, it is reasonable to use time-resolved modalities. A further study should be done using 4D OCT, as proposed in [2]. A 4D OCT microscope provides a high frame rate, making time-dependent estimations more accurate compared to [7], and also covers a broader region than the tool-aligned B-scans we have proposed.

VIII. Conclusion

In this work, we have gathered a general dataset, capturing the force sensor data of the needle tip and the OCT B-scans while applying a robotic insertion on ex-vivo porcine eyes. Our analysis in section VII-A reveals a significant correlation between these two domains, suggesting the possibility of substituting one domain for the other when necessary. Our experimental setup enabled us to collect this dataset, and we have made it publicly accessible to encourage research groups with similar interests to explore the potential of this field. Our initial findings regarding force estimation from OCT B-scans exhibit exciting patterns in specific data fragments, hinting at the possibility of eventually replacing one modality with another in relevant applications.

Supplementary Material

Fig. 2.

General setup: Eye robot 2.0 performing an insertion into an ex-vivo open-sky pig eye, recorded by the Leica Proveo 8

Fig. 3.

Demonstration of the communication between the a) Leica Proveo 8, b) Needle with integrated FBG force sensor, c) Robotic Platform, d) Work Station, and e) Hyperion si115 Interrogator

Fig. 4.

Two dimensional (B-scan) Optical Coherence Tomography (OCT) image slice, showing the needle interaction between retinal tissue and needle tip during insertion.

Fig. 5.

These three FBG force sensing tools, A (4 channels, 25-gauge micro-needle), B (dual force-sensing, 36-gauge micro-needle), and C (4 channels, 36-gauge micro-needle), were used in the experiments and to build the datasets.

Fig. 6.

Cadaveric pig eye, prepared according to the open sky procedure, with a needle inserted into it

Acknowledgments

This work was supported by the U.S. National Institutes of Health under grant numbers 2R01EB023943-04A1 and 1R01 EB025883-01A1, and partially by JHU internal funds.

References

- [1] Aviles Angelica I., Marban Arturo, Sobrevilla Pilar, Fernandez Josep, and Casals Alicia. A recurrent neural network approach for 3d vision-based force estimation. In 2014 4th International Conference on Image Processing Theory, Tools and Applications (IPTA), pages 1–6, 2014.

- [2] Britten Anja, Matten Philipp, Weiss Jakob, Niederleithner Michael, Roodaki Hessam, Sorg Benjamin, Hecker-Denschlag Nancy, Drexler Wolfgang, Leitgeb Rainer A, and Schmoll Tilman. Surgical microscope integrated mhz ss-oct with live volumetric visualization. Biomedical Optics Express, 14(2):846–865, 2023.

- [3] Li YD, Tang Min, Yang Yun, Huang Zi, Tong RF, Yang Shuang Cai, Li Yao, and Manocha Dinesh. N-cloth: Predicting 3d cloth deformation with mesh-based networks. In Computer Graphics Forum, volume 41, pages 547–558. Wiley Online Library, 2022.

- [4] Ebrahimi Ali, He Changyan, Patel Niravkumar, Kobilarov Marin, Gehlbach Peter, and Iordachita Iulian. Sclera force control in robot-assisted eye surgery: Adaptive force control vs. auditory feedback. 2019 International Symposium on Medical Robotics (ISMR), 2019.

- [5] Ebrahimi Ali, He Changyan, Roizenblatt Marina, Patel Niravkumar, Sefati Shahriar, Gehlbach Peter, and Iordachita Iulian. Real-time sclera force feedback for enabling safe robot-assisted vitreoretinal surgery. 2018 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), 2018.

- [6] Ebrahimi Ali, Patel Niravkumar, He Changyan, Gehlbach Peter, Kobilarov Marin, and Iordachita Iulian. Adaptive control of sclera force and insertion depth for safe robot-assisted retinal surgery. 2019 International Conference on Robotics and Automation (ICRA), 2019.

- [7] Gessert Nils, Beringhoff Jens, Otte Christoph, and Schlaefer Alexander. Force estimation from oct volumes using 3d cnns. International Journal of Computer Assisted Radiology and Surgery, 13(7):1073–1082, 2018.

- [8] Gessert Nils, Priegnitz Torben, Saathoff Thore, Antoni Sven-Thomas, Meyer David, Hamann Moritz Franz, Jünemann Klaus-Peter, Otte Christoph, and Schlaefer Alexander. Needle tip force estimation using an oct fiber and a fused convgru-cnn architecture. Medical Image Computing and Computer Assisted Intervention – MICCAI 2018, pages 222–229, 2018.

- [9] Gijbels Andy, Vander Poorten EB, Gorissen Benjamin, Devreker Alain, Stalmans Peter, and Reynaerts Dominiek. Experimental validation of a robotic comanipulation and telemanipulation system for retinal surgery. In 2014 5th IEEE RAS EMBS Int. Conf. Biomed. Robot. Biomechatronics, pages 144–150. IEEE, 2014.

- [10] He C, Roizenblatt M, Patel N, Ebrahimi A, Yang Y, Gehlbach PL, and Iordachita I. Towards Bimanual Robot-Assisted Retinal Surgery: Tool-to-Sclera Force Evaluation. Proc IEEE Sens, 2018, Oct 2018.

- [11] He Kaiming, Zhang Xiangyu, Ren Shaoqing, and Sun Jian. Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 770–778, 2016.

- [12] He Xingchi, Roppenecker Daniel, Gierlach Dominikus, Balicki Marcin, Olds Kevin, Gehlbach Peter, Handa James, Taylor Russell, and Iordachita Iulian. Toward clinically applicable steady-hand eye robot for vitreoretinal surgery. In ASME International Mechanical Engineering Congress and Exposition, volume 45189, pages 145–153. American Society of Mechanical Engineers, 2012.

- [13] Karampelas Michael, Sim Dawn A, Keane Pearse A, Papastefanou Vasilios P, Sadda Srinivas R, Tufail Adnan, and Dowler Jonathan. Evaluation of retinal pigment epithelium–Bruch’s membrane complex thickness in dry age-related macular degeneration using optical coherence tomography. British Journal of Ophthalmology, 97(10):1256–1261, 2013.

- [14] Molaei Amir, Abedloo Ebrahim, de Smet Marc D, Safi Sare, Khorshidifar Milad, Ahmadieh Hamid, Khosravi Mohammad Azam, and Daftarian Narsis. Toward the art of robotic-assisted vitreoretinal surgery. Journal of ophthalmic & vision research, 12(2):212, 2017.

- [15] Nasseri MA, Eder Martin, Nair Saurabh, Dean EC, Maier Martin, Zapp D, Lohmann CP, and Knoll Aaron. The introduction of a new robot for assistance in ophthalmic surgery. In Eng. Med. Biol. Soc. (EMBC), 2013 35th Annu. Int. Conf. IEEE, pages 5682–5685. IEEE, 2013.

- [16] Neidhardt Maximilian, Mieling Robin, Bengs Marcel, and Schlaefer Alexander. Optical force estimation for interactions between tool and soft tissues. Scientific Reports, 13(1), 2023.

- [17] Rahimy E, Wilson J, Tsao TC, Schwartz S, and Hubschman JP. Robot-assisted intraocular surgery: development of the IRISS and feasibility studies in an animal model. Eye, 27(8):972–978, 2013.

- [18] Stanford Artificial Intelligence Laboratory et al. Robotic operating system. https://www.ros.org. Version: ROS Melodic Morenia. Date: 2018-May-23.

- [19] Sunshine S, Balicki M, He X, Olds K, Kang JU, Gehlbach P, Taylor R, Iordachita I, and Handa JT. A force-sensing microsurgical instrument that detects forces below human tactile sensation. Retina, 33(1):200–206, Jan 2013.

- [20] Ullrich Franziska, Bergeles Christos, Pokki Juho, Ergeneman Olgac, Erni Sandro, Chatzipirpiridis George, Páne Salvador, Framme Carsten, and Nelson Bradley J. Mobility experiments with microrobots for minimally invasive intraocular surgery. Investigative ophthalmology & visual science, 54(4):2853–2863, 2013.

- [21] Üneri Ali, Balicki Marcin A., Handa James, Gehlbach Peter, Taylor Russell H., and Iordachita Iulian. New steady-hand eye robot with micro-force sensing for vitreoretinal surgery. In 2010 3rd IEEE RAS & EMBS International Conference on Biomedical Robotics and Biomechatronics, pages 814–819, 2010.
