Abstract

Objective: Attention-deficit/hyperactivity disorder (ADHD) is a childhood-onset neurodevelopmental disorder with a prevalence ranging from 6.1 to 9.4%. The main symptoms of ADHD are inattention, hyperactivity, impulsivity, and even destructive behaviors that may have a long-term negative influence on learning performance or social relationships. Early diagnosis and treatment provide the best chance of reducing and managing symptoms. Currently, ADHD diagnosis relies on behavioral observations and ratings by clinicians and parents. Medical diagnosis of ADHD was reported to be delayed because of a global shortage of well-trained clinicians, the heterogeneous nature of ADHD, and combined comorbidities. Therefore, alternative ways to increase the efficiency of early diagnosis are needed. Previous studies used behavioral and neurophysiological data to assess patients with ADHD, yielding an accuracy range from 56.6% to 92%. Several factors were shown to affect the detection rate, including methods and tasks used and the number of electroencephalogram (EEG) channels. Given that children with ADHD have difficulty sustaining attention, in this study, we tested whether data from multiple tasks with different difficulties and prolonged experiment times can probe the levels of brain resources engaged during task performance and increase ADHD detection. Specifically, we proposed a Deep Neural Network-based (DNN) fusion model of multiple tasks to enhance the detection of ADHD. Methods & Results: Forty-nine children with ADHD and thirty-two typically developing children were recruited. Analytic results show that the fusion of multi-task neurophysiological data can increase the separation rate to 89%, whereas a single data type can only achieve a best accuracy of 81%. Moreover, the use of multiple tasks helps distinguish between children with ADHD and typically developing children. 
Our results suggest that different neurophysiological models from multiple tasks can provide essential information to assist in ADHD screening. In conclusion, the proposed model offers a more efficient and accurate alternative for early clinical diagnosis and management of ADHD. The application of artificial intelligence and multimodal neurophysiological data in clinical settings sets a precedent for digital health, paving the way for future advancements in the field.

Keywords: Attention-deficit/hyperactivity disorder, assessment, deep learning, multi-model fusion, virtual reality

Clinical and Translational Impact Statement– By employing a DNN-based fusion model that integrates data from multiple neurophysiological tasks, the study achieves an 89% accuracy in ADHD detection. The proposed model offers a more objective, efficient, and accurate alternative, facilitating early clinical diagnosis and management of ADHD. The application of artificial intelligence and multimodal neurophysiological data in clinical settings sets a precedent for digital health, paving the way for future advancements in the field.

I. Introduction

Attention-Deficit/Hyperactivity Disorder (ADHD) is a neurodevelopmental disorder that usually occurs in childhood. As of October 2020, the estimated worldwide prevalence of ADHD in children aged 3 to 12 years ranged from 6.1 to 9.4% [1]. Characterized by difficulty concentrating, hyperactivity, impulsivity, and even destructive behaviors, ADHD may be associated with other problems like learning difficulties and behavior disorders [2]. ADHD is usually diagnosed before school age, but symptoms can persist into adolescence and even adulthood if not treated effectively and in a timely manner [3]. Therefore, early diagnosis and treatment are critical, as they provide the best chance of reducing symptoms. Currently, the assessment of ADHD relies on behavioral observations and ratings by clinicians and parents. No standard objective laboratory tests (blood, urine, X-ray or psychological analysis) can be used to further support the diagnosis of ADHD [4]. As a result, medical diagnosis of ADHD was reported to be delayed because of the heterogeneous nature of ADHD, the presence of comorbidities with similar symptoms [5], and a global shortage of well-trained clinicians [6]. Children with ADHD may struggle with paying attention, get easily distracted, display impulsive or overly active behaviors, and appear restless compared to their age-matched peers [3]. These challenges can lead to academic, family, and life difficulties, so early diagnosis and treatment are crucial [7]. Furthermore, an increasing rate of ADHD misdiagnosis with other types of brain disorders was also reported [6], [8]. It was therefore suggested that developing alternative, objective ways to increase the efficiency of early diagnosis is important. Previous studies for objective ADHD assessment and detection employed neuropsychological tests, Behavioral Rating Scales (BRS), or Continuous Performance Test (CPT) [9], yielding an accuracy range from 56.6% [10] to 92% [11].
In 2020, we used neuro-behavioral data during a CPT in a virtual classroom to separate children with ADHD from typically developing children with an accuracy of 83.6% [12]. We recently demonstrated the use of EEG features during a virtual classroom-based CPT with irrelevant distractions to help diagnose ADHD and accuracy increased to 85.45% [13]. In this study, we examined whether a fusion of multi-modal data from multiple tasks can further enhance ADHD detection.

The paper is organized as follows: Section II discusses previous studies on physiological information assessment and their shortcomings, and then states the advantages and contributions of our work. Section III describes the flow of our experiment. Section IV presents the observations and experimental results, and Section V discusses them. Section VI concludes our work and informs future research.

II. Related Work

ADHD is a common neurodevelopmental disorder in children. Children with ADHD may exhibit structural immaturity in subcortical and cortical areas, such as the nucleus accumbens, amygdala, caudate, hippocampus, putamen, prefrontal cortex, parietotemporal cortex and cerebellum [14], [15], [16], [17]. Behavioral symptoms include inattention, impulsiveness, and hyperactivity [3]. To diagnose ADHD, clinicians use the reference guide known as the Diagnostic and Statistical Manual of Mental Disorders, 5th Edition (DSM-5). In addition to the above-mentioned medical diagnosis, behavioral or neuronal data have been used as a supportive measure in the evaluation of ADHD. Advantages include faster diagnostic decisions, shorter clinical consultation times and higher clinician confidence in the diagnosis, compared to standard clinical assessment [18]. The CPT, for instance, has been used in the assessment of ADHD based on behavioral task performance, including correct responses, reaction time, omission errors, and commission errors [19], [20], [21]. Importantly, with advances in VR techniques, it was reported that using a VR-based CPT can help diagnose ADHD with increased accuracy when compared with a traditional CPT [10], [22] and can even provide additional information for treatment. Mühlberger et al. [23] used a virtual classroom test to examine the influence of methylphenidate on task performance in children with ADHD and reported that children with ADHD who were off medication displayed a significantly higher omission-error rate and longer mean reaction time than those on medication and neurotypical children.

Additionally, EEG features in the time domain, i.e., event-related potentials (ERPs), and in the frequency domain, i.e., frequency-specific signal power, during different tasks were reported to provide an objective assessment of ADHD (see [24] for a review). For instance, an examination of two ERPs, N2 and P3, during a GO/NOGO task, a variation of the CPT, revealed that NOGO-P3 was most likely related to response inhibition and that NOGO-N2 may reflect central inhibition or response conflict [25]; both can be used in ADHD diagnosis. Specifically, it was reported that the N2/P2 amplitudes over the right centro-frontal regions [26] and the NOGO-P3 peak and latencies [27] significantly differed between children with ADHD and typically developing children, suggesting immature or impaired executive function associated with attention control and inhibition [24]. A meta-analysis suggested that the N2 and P3 reveal moderate deviations and heterogeneity in ADHD at the level of functional brain systems [28]. Regarding signal power, Alexander et al. [29] reported that in ADHD subjects, low-frequency wave activity was inversely related to clinical and behavioral measures of hyperactivity and impulsivity during CPT tasks. This inverse relationship normalized following treatment with stimulant medication. Clarke et al. [30] found that these oscillatory abnormalities during the eyes-closed resting state in ADHD groups can help distinguish between two subtypes of ADHD. Barry et al. [31] reviewed articles that used resting EEG to assess ADHD and concluded that “elevated relative theta power, and reduced relative alpha and beta, together with elevated theta/alpha and theta/beta ratios, are most reliably associated with AD/HD.” Furthermore, the power spectral density of delta, theta, alpha, beta and gamma oscillations can be used to assess the executive functions related to the cluster working memory and the training effects in ADHD (see [24]).

Recently, applying machine learning (ML) methods and artificial intelligence has enabled the analysis of multiple and massive variables and provided a more objective, reliable, and efficient evaluation of multifaceted complex clinical datasets with high accuracy. In ADHD studies, Chen et al. [32] and Sun et al. [33] used discriminant analysis to achieve 56.6% and 76.1% accuracy, respectively, in distinguishing ADHD from non-ADHD. In 2019, Kim and Kastner [34] used a deep learning model with features extracted from 60 channels of EEG during a time estimation task, achieving an accuracy of up to 86% in distinguishing ADHD from healthy subjects. In 2020, we used task performance and neuro-behavioral data, such as head rotation (HR) and eye movements (EM), during a CPT in a virtual classroom to separate children with ADHD from typically developing children (TDC) with an accuracy of 83.6% [12]. Recently, we used EEG features during a VR-based GO/NOGO task to achieve an improved accuracy of 85.45% in detecting ADHD [13]. In this study, we employed multi-task neurophysiological data and proposed a DNN-based fusion model to enhance the detection of ADHD.

III. Methodology

This section elaborates on the system architecture, VR environment, experimental procedure and feature analysis process.

A. System Architecture

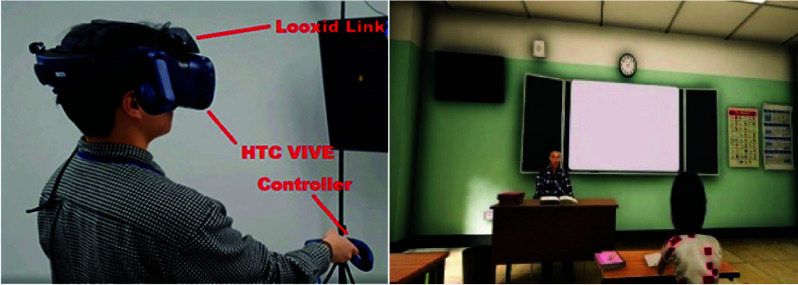

The proposed system is shown in Fig. 1. In our VR system, the game engine Unity3D was used to develop an environment that simulates a virtual classroom. Cognitive tasks, including visual, audio and visual + audio CPTs, were added to the game environment. The subjects used the HTC Vive™ controller to complete the tasks. In addition to presenting the test tasks, the HTC Vive™ headset provides information on the subject’s head rotation (HR) and eye movements (EM). Moreover, six EEG channels, Af3, Af4, Af7, Af8, Fp1 and Fp2 (Looxid Link), were integrated with the HTC Vive and recorded during the tasks at a sampling rate of 512 Hz. The mind index, which shows attention and relaxation levels, as well as left and right brain activity, raw signals from each sensor, and the distinct brain frequencies picked up by each sensor, can be visualized and recorded.

FIGURE 1.

The proposed system (left) and VR scheme (right).

B. Virtual Reality Game of Continuous Performance Test (CPT)

The CPT tasks were designed using the game engine Unity3D in a virtual classroom (Fig. 1; right). The CPT tasks were based on the classical GO/NOGO paradigm, and the visual CPT was used in our previous studies (see [12] for details). In summary, participants wore the HTC headset and were instructed to continually look at numbers on a blackboard directly in front of them during the experiment. The numbers ranged from 0 to 9 and appeared randomly on the blackboard with an interstimulus interval (ISI) of 2 seconds. Participants were instructed to respond when they saw a ‘0’ following a ‘1’ (GO condition) and to withhold their response when a ‘0’ did not follow a ‘1’ (NOGO condition). The GO and NOGO stimuli appeared randomly. Additionally, 25 trials (12 GO stimuli and 13 NOGO stimuli) included various task-irrelevant auditory and visual distractions. Ten different distractive events were introduced to affect the participants’ attention (see supplementary Table 1 for properties of those distractions). Trials with distractions were denoted as D trials, and those without any distractions were denoted as ND trials. In this study, we further converted the visual CPT into audio and visual + audio forms, in which the stimuli were delivered either audibly or both visually and audibly.
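The GO/NOGO rule described above can be sketched in a few lines. This is a minimal illustration, not the authors' Unity3D implementation: the function names `label_stimuli` and `score_trials` are hypothetical, and the sketch assumes that a commission error is a response to a NOGO stimulus and an omission error is a missing response to a GO stimulus.

```python
from typing import List, Optional


def label_stimuli(digits: List[int]) -> List[Optional[str]]:
    """Label each stimulus as 'GO', 'NOGO', or None (a non-target digit)."""
    labels: List[Optional[str]] = []
    for i, d in enumerate(digits):
        if d != 0:
            labels.append(None)      # only '0' is a candidate target
        elif i > 0 and digits[i - 1] == 1:
            labels.append("GO")      # '0' directly after '1'
        else:
            labels.append("NOGO")    # '0' not preceded by '1'
    return labels


def score_trials(digits: List[int], responded: List[bool]) -> dict:
    """Return omission and commission error rates for one stimulus stream."""
    labels = label_stimuli(digits)
    go = [r for lab, r in zip(labels, responded) if lab == "GO"]
    nogo = [r for lab, r in zip(labels, responded) if lab == "NOGO"]
    # Assumed definitions: omission = missed GO, commission = response to NOGO.
    omission = go.count(False) / len(go) if go else 0.0
    commission = nogo.count(True) / len(nogo) if nogo else 0.0
    return {"omission_rate": omission, "commission_rate": commission}


# Made-up stimulus stream and button presses, for illustration only.
digits = [3, 1, 0, 5, 0, 1, 0, 7, 0]
responded = [False, False, True, False, True, False, False, False, False]
result = score_trials(digits, responded)
```

In this toy stream, two stimuli are GO and two are NOGO; one GO is missed and one NOGO draws a response, so both error rates come out at one half.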

TABLE 1. Behavioral Results of Task Performance (*: P<0.016 After Correction for Multiple Comparisons).

| Task | Measure | TDC (n=32), D | TDC (n=32), ND | TDC, Within-group Significance | ADHD Children (n=49), D | ADHD Children (n=49), ND | ADHD Children, Within-group Significance | Between Group, D (p-value) | Between Group, ND (p-value) |
|---|---|---|---|---|---|---|---|---|---|
| Visual CPT | Correct (%) | 94.42% | 93.45% | 0.434 | 88.46% | 86.97% | 0.516 | 0.010* | 0.050 |
| Visual CPT | RT (ms) | 443.63±115.08 | 445.98±165.49 | 0.894 | 397.97±185.08 | 419.22±188.82 | 0.662 | 0.304 | 0.560 |
| Visual CPT | Omission errors | 0.18±0.23 | 0.10±0.22 | 0.064 | 0.47±0.31 | 0.22±0.32 | 0.0001* | 0.0001* | 0.090 |
| Visual CPT | Commission errors | 0.04±0.07 | 0.06±0.08 | 0.213 | 0.08±0.10 | 0.12±0.16 | 0.115 | 0.149 | 0.090 |
| Audio CPT | Correct (%) | 86.77% | 90.60% | 0.001* | 76.96% | 82.05% | 0.010* | 0.00002* | 0.010* |
| Audio CPT | RT (ms) | 263.74±221.89 | 367.96±300.31 | 0.065 | 304.83±233.08 | 382.41±356.90 | 0.259 | 0.482 | 0.866 |
| Audio CPT | Omission errors | 0.59±0.31 | 0.43±0.40 | 0.002* | 0.81±0.17 | 0.71±0.25 | 0.042 | 0.001* | 0.001* |
| Audio CPT | Commission errors | 0.01±0.02 | 0.03±0.05 | 0.008* | 0.08±0.14 | 0.08±0.18 | 1.000 | 0.012* | 0.159 |
| Visual + Audio CPT | Correct (%) | 74.12% | 64.44% | 0.0005* | 58.16% | 58.50% | 0.696 | 0.000001* | 0.033 |
| Visual + Audio CPT | RT (ms) | 631.54±79.43 | 683.04±97.46 | 0.010* | 717.69±45.09 | 733.27±85.11 | 0.278 | 0.000001* | 0.033 |
| Visual + Audio CPT | Omission errors | 0.49±0.28 | 0.68±0.28 | 0.0001* | 0.76±0.16 | 0.78±0.24 | 0.636 | 0.00001* | 0.149 |
| Visual + Audio CPT | Commission errors | 0.03±0.08 | 0.03±0.09 | 0.825 | 0.08±0.14 | 0.07±0.17 | 0.732 | 0.116 | 0.251 |

C. Participants

The study was approved by the institutional review board of Children’s Hospital of Fudan University (RESEARCH ETHICS BOARD APPROVAL [No.(2018) 02]) in accordance with the Declaration of Helsinki and Good Clinical Practice Guidelines. Eighty-one children aged 6 to 12 years participated in this study. Of these children, 49 had been diagnosed with ADHD (mean age = 7.4±1.6; 22 boys) and 32 were TDC (mean age = 8.0±1.5; 16 boys). The diagnosis of ADHD was based on the DSM-5 standard. Throughout the experiment, behavioral data were collected for the calculation of task performance, including correct detection, reaction times, omission errors, and commission errors.

D. Data Analysis

1). Feature Extraction

- Task Performance (TP): In the task performance data, we considered the active accuracy rate, passive accuracy rate and reaction time as features. Because there are three CPT tasks and three conditions (distraction, non-distraction and the overall mean), the task performance yields 27 features in total.

- EEG: The steps for typical EEG data analysis were described in detail in our previous studies [32]. In summary, EEG data were first bandpass filtered (2-56 Hz) to remove power line artifacts and head movement artifacts. The artifact-removed EEG data were transformed into the frequency domain using the fast Fourier transform, and the resultant spectral densities at each channel were averaged across frequencies within five pre-defined frequency bands of interest (FOIs): the delta (1-3 Hz), theta (4-7 Hz), alpha (8-14 Hz), beta (15-24 Hz), and gamma (25-48 Hz) bands. In this study, spectral densities were obtained directly from the device, Looxid Link. For each FOI, we derived the maximum, minimum, average and standard deviation values of each channel with respect to the conditions, resulting in 120 features per task and 360 features in total.

- Eye Movement (EM): We used the embedded eye tracking device of the HTC Vive™ to collect eye movement data. The system preprocesses the data and reports information such as the gaze point coordinates, pupil position, pupil diameter, and opening and closing of the left and right eyes during tasks. These data serve as the eye movement (EM) features.

- Head Rotation (HR): The head rotation amplitude has been considered an important feature for assessing the focus of the subject. The HR during the task was calculated by the HTC Vive™.
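The EEG summary statistics described above can be sketched as follows. This is an illustrative sketch on synthetic numbers, not the authors' pipeline: the function name `band_statistics`, the nested-dictionary input layout, and the use of the sample standard deviation are all assumptions. It only shows how 6 channels, 5 bands and 4 statistics give 120 values.

```python
import random
import statistics

CHANNELS = ["AF3", "AF4", "AF7", "AF8", "FP1", "FP2"]
BANDS = ["delta", "theta", "alpha", "beta", "gamma"]


def band_statistics(band_power):
    """Summarize band power per channel.

    band_power[channel][band] is a list of spectral-density samples.
    Returns a flat dict holding the maximum, minimum, mean and standard
    deviation for every (channel, band) pair.
    """
    features = {}
    for ch in CHANNELS:
        for band in BANDS:
            values = band_power[ch][band]
            features[f"{ch}_{band}_max"] = max(values)
            features[f"{ch}_{band}_min"] = min(values)
            features[f"{ch}_{band}_mean"] = statistics.mean(values)
            # Sample standard deviation is an assumption; the text does
            # not say which form was used.
            features[f"{ch}_{band}_std"] = statistics.stdev(values)
    return features


# Synthetic stand-in data: 50 samples per channel and band.
rng = random.Random(0)
fake_power = {
    ch: {band: [rng.random() for _ in range(50)] for band in BANDS}
    for ch in CHANNELS
}
features = band_statistics(fake_power)
print(len(features))  # 6 channels x 5 bands x 4 statistics
```

Running the same routine once per CPT task would give one such set of values for each of the three tasks.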

2). Statistical Analysis

Welch’s t-test was applied to each feature of task performance, EEG data, eye movement and head rotation to measure its significance in separating the two groups. The numbers of features extracted were 27, 180, 99 and 9 for task performance, EEG, EM and HR, respectively (315 features in total).
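For reference, the Welch statistic used in this kind of per-feature comparison can be computed with the standard library alone. The sketch below is a generic textbook implementation on made-up numbers, not the authors' code; the function name `welch_t` is hypothetical. It returns the t statistic and the Welch-Satterthwaite degrees of freedom; turning these into a p-value additionally requires the Student t distribution, which a statistics package would normally supply.

```python
import math
import statistics


def welch_t(a, b):
    """Return Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    va, vb = statistics.variance(a), statistics.variance(b)  # sample variances
    na, nb = len(a), len(b)
    se2 = va / na + vb / nb  # squared standard error of the mean difference
    t = (statistics.mean(a) - statistics.mean(b)) / math.sqrt(se2)
    df = se2 ** 2 / ((va / na) ** 2 / (na - 1) + (vb / nb) ** 2 / (nb - 1))
    return t, df


# Two made-up groups with unequal variances.
t_stat, dof = welch_t([1, 2, 3, 4, 5], [2, 4, 6, 8, 10])
```

Unlike Student's t-test, this form does not assume equal variances in the two groups, which suits groups of unequal size such as the two in this study.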

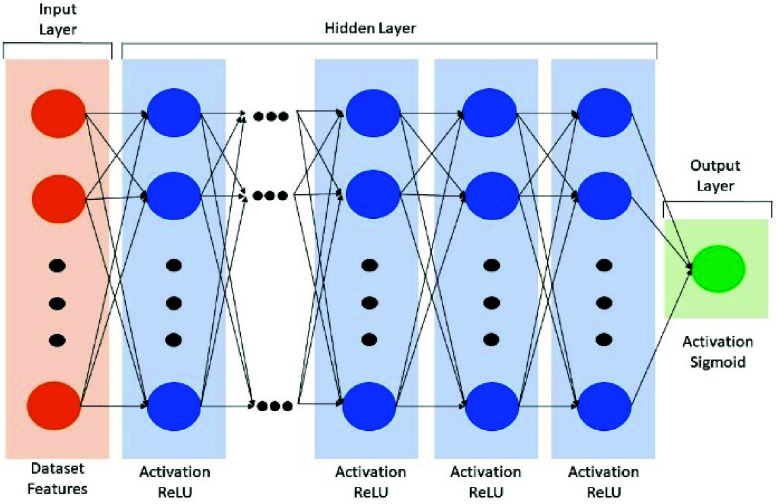

3). Deep Neural Network Model

A deep neural network (DNN) method was employed to perform the ADHD assessment classification. The basic structure of the DNN model comprises three types of connected layers: the input, hidden and output layers (Fig. 2). According to the complexity of the different datasets, we systematically adjusted the hyperparameters of each modality-specific DNN model, including the number of hidden layers and the number of neurons per layer, to achieve the optimal classification accuracy. The number of nodes decreases from layer to layer, a design aimed at reducing feature complexity while retaining critical features. ReLU activation functions were used in all hidden layers. For all output layers, the sigmoid activation function was used because of its suitability for binary classification problems.

FIGURE 2.

The architecture of the DNN model.

Specifically, the TP dataset employed 2 hidden layers with neuron counts of 128 and 32, respectively. The HR dataset had 3 hidden layers with neuron counts of 64, 32, and 8, respectively. The EM dataset employed a 7-layer neural network structure with neuron counts of 1024, 512, 256, 128, 32, 16, and 4, respectively. For the EEG dataset, different approaches were compared. First, we tested the importance of each channel using a DNN model of 8 hidden layers with neuron counts of 512, 512, 256, 128, 128, 32, 16, and 4, respectively. Then, the EEG data of all 6 channels were entered as a whole into a DNN model of 6 hidden layers with neuron counts of 1024, 1024, 512, 512, 256, and 128, respectively.
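To make the layer layout concrete, the following sketch runs one forward pass through a network shaped like the TP model (27 inputs, hidden layers of 128 and 32 neurons, ReLU in the hidden layers and a sigmoid output). It is a minimal sketch under stated assumptions: the weights are random and untrained, the single output unit and the initialization scheme are assumptions, and all function names are hypothetical. The training procedure is not shown.

```python
import math
import random


def init_layer(n_in, n_out, rng):
    """Random weights and zero biases for one fully connected layer."""
    scale = 1.0 / math.sqrt(n_in)
    weights = [[rng.uniform(-scale, scale) for _ in range(n_in)]
               for _ in range(n_out)]
    return weights, [0.0] * n_out


def dense(x, weights, biases):
    """Affine map: one weighted sum plus bias per output neuron."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, biases)]


def relu(v):
    return [max(0.0, a) for a in v]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, layers):
    """ReLU on every hidden layer, sigmoid on the single output unit."""
    for weights, biases in layers[:-1]:
        x = relu(dense(x, weights, biases))
    weights, biases = layers[-1]
    return sigmoid(dense(x, weights, biases)[0])


rng = random.Random(0)
sizes = [27, 128, 32, 1]  # 27 TP features -> 128 -> 32 -> 1 output (assumed)
layers = [init_layer(a, b, rng) for a, b in zip(sizes, sizes[1:])]
prob = forward([rng.random() for _ in range(27)], layers)
```

The output `prob` lies strictly between 0 and 1 and could be thresholded to assign one of the two classes; the other modality-specific models would differ only in the `sizes` list.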

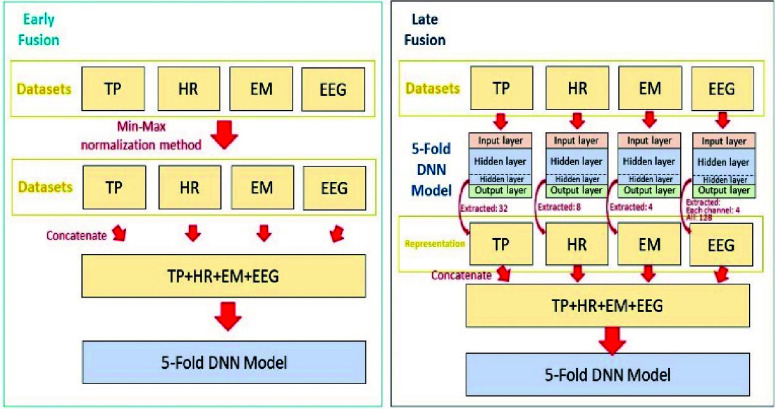

4). Fusion Model Architecture

Having obtained the features from the different modalities, we implemented two fusion models: early and late fusion (Fig. 3). In early fusion, EEG, EM and HR were concatenated into one feature set for training the predictive model. Because the value ranges of the datasets differ, we employed the min-max normalization method to rescale the features (Fig. 3 left). In late fusion, the features of each modality were processed separately using DNN models to obtain the important DNN model parameters. The data-specific weighting factors of the last hidden layer were extracted as the feature representation. These extracted features were merged as the inputs to the fusion model for training (Fig. 3 right).

FIGURE 3.

The architecture of the early (left) and late (right) DNN-based fusion model.
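The early-fusion step (min-max rescaling followed by concatenation) can be illustrated with a short sketch on toy numbers. The function names `min_max_normalize` and `early_fusion` are hypothetical, and mapping a constant column to 0.0 is an assumption made to avoid division by zero; the feature values are invented for the example.

```python
def min_max_normalize(rows):
    """Rescale each feature column of a subjects-by-features table to [0, 1]."""
    columns = list(zip(*rows))
    scaled_columns = []
    for col in columns:
        lo, hi = min(col), max(col)
        span = hi - lo
        # A constant column has no spread; map it to 0.0 (assumption).
        scaled_columns.append([(v - lo) / span if span else 0.0 for v in col])
    return [list(r) for r in zip(*scaled_columns)]


def early_fusion(modalities):
    """Normalize each modality, then concatenate features subject by subject."""
    normalized = [min_max_normalize(m) for m in modalities]
    return [sum(parts, []) for parts in zip(*normalized)]


# Toy data: 3 subjects, an "EEG" table with 2 features, an "HR" table with 1.
eeg = [[10.0, 200.0], [20.0, 400.0], [30.0, 300.0]]
hr = [[0.5], [1.5], [1.0]]
fused = early_fusion([eeg, hr])
# fused == [[0.0, 0.0, 0.0], [0.5, 1.0, 1.0], [1.0, 0.5, 0.5]]
```

After rescaling, features that originally lived on very different scales contribute on a comparable range to the concatenated vector. In a train/test setting, the minimum and maximum would usually be taken from the training subjects only; that detail is omitted here for brevity.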

IV. Results

A. Task Performance

Table 1 summarizes the task-dependent performance of the two groups. First, we examined the within-group effects of distractions; significant values are marked with an asterisk. For the visual CPT, no differences were seen in task performance between D and ND trials in TDC, but omission errors were significantly greater in D trials than in ND trials in children with ADHD. For the auditory CPT, when there were distractions, both groups exhibited significantly lower correct detection rates and greater omission errors. Regarding the visual + auditory CPT, the presence of distractions degraded the task performance in terms of correct detection rates, reaction time and omission errors only in TDC, but not in children with ADHD. In children with ADHD, task performance was similar in D and ND trials during the visual + auditory CPT.

Concerning the between-group differences, the D trials and the ND trials resulted in 8 and 3 significant between-group differences in task performance across the three tasks, respectively. The ND trials led to significant between-group differences in correct detection rates, omission errors and commission errors in the auditory CPT and in correct detection rates and reaction time in the visual + auditory CPT. When there were distractions, the two groups significantly differed in correct detection rates, omission errors and commission errors in all three CPTs. Additionally, the visual + auditory CPT elicited significant differences in reaction times between the two groups.

B. Statistical Tests on Individual Features

We first examined the between-group differences of each individual feature using Welch’s t-test; Table 2 summarizes the results. In total, 22, 39, 8 and 34 features of TP, EM, HR and EEG, respectively, were identified as statistically significant after correction for multiple comparisons. The ratios of significant features to all features were 61%, 40%, 89% and 3.1% for TP, EM, HR and EEG, respectively.

TABLE 2. Modal-Specific Significant Features.

| Dataset | TP | EM | HR | EEG |
|---|---|---|---|---|
| Significant features | 22 | 39 | 8 | 34 |
| Ratio (%) | 61% | 40% | 89% | 3.1% |

C. Classification Results of Single-Modality Datasets

Table 3 lists the modality-specific classification results using different classifiers. To increase readability, we highlighted the best accuracies in grey obtained by a classifier given data. The best performance across all classifiers can be obtained by using DNN and the data of TP or HR, followed by LGB using HR. When XGB was employed, the best accuracy was 76% using FP1 EEG. The best performance of SVM was 74% using HR. Moreover, when compared to the performance of features extracted from different EEG channels, FP1 outperformed the other channels in 3 of 4 classifiers. Therefore, FP1 was selected as the representative channel for further investigation.

TABLE 3. Classification Results of Each Dataset.

| Classifier | Metric | TP (All) | EM (All) | HR (All) | EEG (All) | EEG (AF3) | EEG (AF4) | EEG (AF7) | EEG (AF8) | EEG (FP1) | EEG (FP2) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| DNN | Accuracy | 0.81 | 0.70 | 0.81 | 0.72 | 0.68 | 0.64 | 0.67 | 0.67 | 0.71 | 0.62 |
| DNN | SD | 0.12 | 0.14 | 0.14 | 0.13 | 0.07 | 0.12 | 0.06 | 0.10 | 0.07 | 0.10 |
| SVM | Accuracy | 0.73 | 0.43 | 0.74 | 0.64 | 0.70 | 0.43 | 0.70 | 0.43 | 0.66 | 0.59 |
| SVM | SD | 0.10 | 0.03 | 0.10 | 0.15 | 0.15 | 0.03 | 0.18 | 0.03 | 0.18 | 0.08 |
| LGB | Accuracy | 0.68 | 0.59 | 0.79 | 0.64 | 0.62 | 0.61 | 0.65 | 0.62 | 0.72 | 0.57 |
| LGB | SD | 0.05 | 0.06 | 0.09 | 0.17 | 0.16 | 0.13 | 0.15 | 0.11 | 0.14 | 0.13 |
| XGB | Accuracy | 0.73 | 0.71 | 0.73 | 0.75 | 0.60 | 0.59 | 0.61 | 0.70 | 0.76 | 0.73 |
| XGB | SD | 0.05 | 0.08 | 0.09 | 0.13 | 0.08 | 0.10 | 0.08 | 0.17 | 0.08 | 0.16 |

D. Classification Results of Fusion Model

To verify whether the fusion of multi-task neurophysiological data can increase the separation rate, combinations of different models were tested using DNN. Table 4 lists the fusion results. Fusion model accuracies were 83%, 84% and 89% when using 2, 3 and 4 datasets, respectively, for fusion. To examine whether more features always lead to higher accuracy, we tested the fusion of all possible features (i.e., all EEG features) and the accuracy decreased to 75% (Table 4).

TABLE 4. Classification Results of Fusion Models.

| Dataset | TP + HR | TP + EM + HR | TP + EM + HR + EEG (FP1) | TP + EM + HR + EEG (All) |
|---|---|---|---|---|
| Accuracy | 0.83 | 0.84 | 0.89 | 0.75 |
| SD | 0.08 | 0.11 | 0.08 | 0.14 |

V. Discussion

In this study, we developed a fusion model using DNN and multi-task neurophysiological data of EEG, EM, HR, and TP to enhance ADHD detection. The analytic results confirmed that this fusion model can increase the separation rate to 89%, whereas data from a single modality achieved an accuracy of at most 81%. Our findings suggest that different neurophysiological models from multiple tasks can provide essential information to assist in ADHD screening.

A. Task Performance Differences Across Tasks

In this study, we developed three tasks to engage varying amounts of brain resources, namely the visual system, the auditory system and both systems combined. When considering the impacts of distractions in typically developing children, task performance was affected only in the auditory CPT, not in the visual CPT. Previous studies reported that school-aged children have compensatory “adult-like” attentional suppression resources to resist irrelevant distractors in visual spatial attention [33] and achieve adult-level selective attention functions in cluttered visual scenes through the interactions between the developing sensory cortices and the frontoparietal control network (see review in [34]). With the increase of task demand in the visual + auditory CPT, task performance was further degraded by distractions in typically developing children. These results indicate that the effects of distractions were related to stimulus type and task difficulty, possibly due to insufficient brain resources to suppress irrelevant information. By contrast, task performance in children with ADHD was similarly poor with and without distractions in the most difficult visual + auditory CPT. Taken together, when the task is complex, children with ADHD reach the limit of their brain resources sooner than typically developing children, reflecting the immaturity of their brain function.

B. The Importance of Features

In this study, we used univariate analysis to statistically test the importance of every feature and calculated the ratio of the number of important features to the total number of features in each modality. HR has the greatest ratio of important features of all modalities and leads to the highest separation rates in 3 of the 4 classifiers tested. On the other hand, EEG has the lowest ratio of important features, but its accuracy is not necessarily the poorest. This result suggests that appropriate features can improve accuracy and that statistical significance may help evaluate the importance of features.

C. Fusion Model Enhances ADHD Detection

Previous studies used one task to assess the behavioral and neuronal abnormalities in children with ADHD, yielding an accuracy rate between 56.6% and 92% depending on the methods employed and the number of EEG channels [10], [11], [13], [21]. In this study, multiple neurophysiological data from three tasks with varying levels of difficulty were used to probe the level of brain resources engaged when children with and without ADHD performed tasks. The analytic results confirmed that the fusion of multiple tasks increases detection accuracy from 83% to 89%. Notably, we used only one channel of EEG data during tasks. This channel was chosen because it yielded the greatest accuracy when only EEG data features were used. Finally, higher classification accuracy for individual data types may not always lead to the best result when the data are combined. This can be seen in the TP and HR results, which achieved the greatest accuracy among the four datasets, although the result of their fusion model was not the best. One possible explanation is that TP and HR were largely related to each other, as both were associated directly with behavioral outcomes. By contrast, adding EEG features can serve as a supplement to reveal more differences between children with and without ADHD.

VI. Conclusion

In this study, we designed three tasks with varying difficulties to manipulate the levels of brain resources engaged during task performance. We tested whether data from multiple tasks can enhance the detection of ADHD. The results demonstrated that the fusion of multi-task neurophysiological data can increase ADHD detection accuracy to 89%, whereas the highest accuracy of a single dataset was only 81%. Moreover, the combination of behavioral and neuronal features can aid in the separation of children with ADHD from TDC. Our results suggest that different neuro-sensing models from multiple tasks can provide essential information to assist in ADHD screening. In conclusion, the DNN-based fusion model offers a more efficient and accurate alternative for early clinical diagnosis and management of ADHD. The application of artificial intelligence and multimodal neurophysiological data in clinical settings during VR tasks sets a precedent for digital health, paving the way for future advancements in the field.

Supplementary Materials

Funding Statement

This work was supported in part by the Ministry of Science and Technology of Taiwan (MOST) under Grant 109-2314-B-039-027- and Grant 109-2218-E-002-017-, and in part by NSTC under Grant 111-2218-E-008-010- and Grant 112-2635-E-008-001-MY2.
