Abstract

This study proposes a novel hybrid method, FVMD-WOA-GA, for enhancing traffic flow prediction in 5G-enabled intelligent transportation systems. The method integrates fast variational mode decomposition (FVMD) with optimization techniques, namely, the whale optimization algorithm (WOA) and genetic algorithm (GA), to improve the accuracy of overall traffic flow based on models tailored for each decomposed sub-sequence. The selected predictive models—long short-term memory (LSTM), bidirectional LSTM (BiLSTM), gated recurrent unit (GRU), and bidirectional GRU (BiGRU)—were considered to capture diverse temporal dependencies in traffic data. This research explored a multi-stage approach, where the decomposition, optimization, and selection of models are performed systematically to improve prediction performance. Experimental validation on two real-world traffic datasets further underscores the method’s efficacy, achieving root mean squared errors (RMSEs) of 152.43 and 7.91 on the respective datasets, which marks improvements of 3.44% and 12.87% compared to the existing methods. These results highlight the ability of the FVMD-WOA-GA approach to improve prediction accuracy significantly, reduce inference time, enhance system adaptability, and contribute to more efficient traffic management.

Keywords: traffic flow, parameter optimization, whale optimization algorithm, genetic algorithm, fast variation mode decomposition

1. Introduction

The surge in traffic volume has exacerbated congestion, placing immense pressure on transportation infrastructure. Addressing these challenges requires innovative approaches to forecasting traffic patterns and efficiently managing the flow of vehicles in complex urban environments. In this context, 5G technology plays a pivotal role by offering high-speed, low-latency communication, which allows for real-time data collection of large volumes of traffic data from various sensors, cameras, and connected vehicles. This means traffic data can be collected instantly, making real-time monitoring more accurate and timely. This wealth of real-time data enables the development of intelligent transportation systems (ITS) that can enhance traffic flow prediction to contribute to more efficient traffic management. Furthermore, 5G technology also helps deploy traffic flow prediction models based on machine learning to process in real-time through edge computing.

Despite the potential of 5G-enabled ITS, traffic flow prediction remains a challenging task due to the non-stationary nature of traffic data, which are influenced by a variety of factors, including weather conditions, human activities, and unforeseen events [1]. These factors introduce fluctuations that reduce prediction accuracy. Therefore, preprocessing is essential to ensure satisfactory prediction accuracy. Techniques for processing time-series data—including decomposition—are commonly used for this purpose, with popular methods including wavelet decomposition, empirical mode decomposition (EMD), and variational mode decomposition (VMD). Wavelet decomposition splits data into different frequency modes to extract traffic flow information; however, selecting an appropriate wavelet basis can be challenging [2,3,4]. EMD adaptively decomposes data to enhance predictive performance without requiring a selection of the number of modes; however, it can cause endpoint effects and mode mixing [5,6,7]. VMD, introduced by Dragomiretskiy in 2014, is a nonrecursive, adaptive, and quasi-orthogonal method [8]. Several of the prior studies [9,10,11] have also shown that VMD effectively solves modal aliasing and improves traffic flow prediction accuracy.

When utilizing VMD, the selection of appropriate values K and the parameter is pivotal, as these values significantly affect the accuracy of forecast outcomes [10,12,13]. Parameter K controls the number of output sub-sequences of the VMD, while alpha is the moderate bandwidth penalty constraint that controls the smoothness of each sub-sequence. A higher value of results in more narrow subsequences and is less prone to capturing noise. The previous studies have employed optimization algorithms, such as genetic algorithms (GA) [14,15], whale optimization algorithm (WOA) [16,17], and particle swarm optimization (PSO) [18,19], to fine-tune these parameters.

In addition, the original VMD framework is typically solved using the alternating direction method of multipliers (ADMM), which is a popular technique for distributed optimization owing to its numerous advantages, including a modular structure, straightforward implementation, excellent convergence, and flexibility [8]. Although ADMM has a convergence rate of , it might not be sufficiently quick in complex scenarios, resulting in time-consuming operations for the entire system [20]. Furthermore, optimization-based VMD is known to be highly time-consuming, as mentioned in [21,22]. Thus, the acceleration of VMD can lead to a decrease in resource usage and an improvement in overall performance.

Moreover, because the output of VMD consists of K subsequences with different features, the use of different models for these subsequences can improve the predictive performance [23]. However, the prior studies did not address the issue of parameter optimization for FVMD and model selection for each subsequence simultaneously, thereby compromising model flexibility. Instead, most of the prior studies adopted a single model for all subsequent data, ignoring differences in the characteristics of subsequences [2,24,25].

These problems motivated us to propose a novel system for predicting the traffic flow for intelligent transportation systems integrated with 5G technology. To the best of our knowledge, this study represents the first attempt to improve VMD speed and use WOA-GA to fine-tune VMD parameters, enabling the selection of a suitable model for each subsequence after decomposition. The main contributions of this study are summarized as follows:

We introduce FVMD as a novel VMD variant that employs fast ADMM instead of ADMM to enhance its convergence rate.

We also propose the hybrid WOA-GA to fine-tune FVMD parameters, enabling the selection of a suitable model for each subsequence based on its unique characteristics.

We conducted experiments to evaluate the effectiveness of traffic flow prediction using several metrics—including the mean square error (MSE), root mean square error (RMSE), mean absolute error (MAE), and mean absolute percentage error (MAPE)—on two real-world datasets.

The paper is organized as follows: Section 2 surveys the existing research on traffic flow prediction in 5G-enabled intelligent transportation systems. Section 3 outlines the fundamental techniques employed in the proposed approach. Section 4 presents the evaluation results, and Section 5 concludes the paper.

2. Related Work

This section examines prior studies associated with traffic volume decomposition using VMD. Table 1 presents a summary of the related works.

Table 1.

Related works in traffic flow prediction using VMD technique. The “✓” symbol denotes the use of an Adaptive Predictor.

| Paper | Year | VMD Fine-Tuning Algorithm | VMD Solving Algorithm | Predictor | Adaptive Predictor |

|---|---|---|---|---|---|

| [25] | 2021 | ADMM | BiLSTM | ||

| ine [26] | 2022 | cosine similarity | ADMM | ELM | |

| ine [27] | 2022 | Fuzzy Entropy | ADMM | Hybrid GAT-BiGRU | |

| ine [28] | 2022 | ADMM | Hybrid BiLSTM, GMDH, ELMAN | ||

| ine [29] | 2023 | ADMM | DELM | ||

| ine [30] | 2023 | ADMM | LSTM | ||

| ine [31] | 2023 | ADMM | Auto-correlation | ||

| ine [10] | 2023 | NGO | ADMM | ARO-KELM | |

| ine [9] | 2023 | ADMM | LSTM | ||

| ine [11] | 2024 | BA | ADMM | L-BILSTM | |

| ine Our | 2024 | WOA-GA | Fast ADMM | LSTM, BiLSTM, GRU, BiGRU | ✓ |

Hong Yang et al. [26] integrated cosine similarity (CS)-based VMD (CSVMD) with an extreme learning machine (ELM) and an iterative error compensation strategy to create a new model called CSVMD-ELM-error. This model was designed to address the limitations of the existing traffic flow data prediction models, particularly those related to mode mixing and the selection of mode numbers. The authors decomposed traffic flow data into intrinsic mode functions (IMFs) using VMD and then employed ELM to predict each IMF. In addition, they determined the optimal mode number (K) for VMD by calculating the CS of the original and decomposed data. As the K value increased, the CS value continuously increased until it reached the optimal K value, i.e., the K value closest to 1. The CSVMD-ELM-error model demonstrated superior prediction performance for various traffic flow data datasets.

Huang et al. [25] investigated the effects of various multi-scale decomposition algorithms on neural network performance, specifically in the context of short-term traffic flow prediction. These algorithms included empirical mode decomposition (EMD), ensemble EMD (EEMD), complete EEMD with adaptive noise (CEEMDAN), VMD, wavelet decomposition (WD), and wavelet packet decomposition (WPD). After being decomposed into various components, the traffic flow data were analyzed using a bidirectional long short-term memory (BiLSTM) neural network model. The study provides comprehensive insights into the selection of multiscale decomposition algorithms.

Yin et al. [27] introduced a hybrid model called ST-VGBiGRU, for traffic flow prediction, effectively capturing both spatial and temporal correlations in traffic data. The authors utilized the VMD algorithm to decompose traffic flow sequences into stationary modal components, which were then noise-reduced using fuzzy entropy to enhance accuracy. A hybrid graph attention network–bidirectional gated recurrent unit (GAT-BiGRU) model was applied to each IMF. The GAT network captured the attention levels of prediction nodes to neighboring traffic nodes, whereas the BiGRU network captured the temporal correlation of each modal component. The ST-VGBiGRU model demonstrated significant improvements in prediction accuracy on the RTMC traffic dataset. However, model performance tended to decrease over longer prediction horizons, suggesting that although the model excels in short-term predictions, its effectiveness may diminish in longer-term forecasting scenarios.

Zhong et al. [28] introduced a hybrid model that combines VMD, a deep extreme learning machine (DELM), and Harris hawks optimization (HHO). They used VMD to decompose short-term traffic flows, and combined an extreme learning machine (ELM) with the deep neural network framework of a stacked autoencoder (AE) to construct the DELM, which was subsequently optimized via HHO. The VMD-HHO-DELM model demonstrated significant improvements in prediction accuracy, achieving scores of 0.9984 for non-stationary data and 0.9993 for stationary data.

Zhao et al. [9] introduced a short-term traffic flow prediction model that combines VMD with an improved dung beetle optimization (IDBO) algorithm to optimize LSTM networks, effectively addressing the challenges posed by nonlinear traffic flow data characterized by high noise levels. VMD was initially employed to decompose the original traffic flow sequence into several IMFs, and the LSTM networks with hyperparameters optimized using IDBO were subsequently deployed to predict traffic flow based on the decomposed IMFs. The proposed VMD-IDBO-LSTM model demonstrated significant improvements in prediction accuracy compared to the traditional models, including single LSTM and other combined models such as EMD-LSTM. Despite these improvements, the IDBO algorithm still faces challenges related to local optima, which can affect overall prediction accuracy.

Yin et al. [28] developed a new hybrid model for short-term traffic flow forecasting, incorporating VMD, a group method of data handling (GMDH) neural network, BiLSTM, and an ELMAN network optimized by the imperialist competitive algorithm (ICA). Comparative experiments demonstrated that this model exhibits excellent predictive capabilities, with the BiLSTM, GMDH, and ELMAN networks outperforming other single models, and VMD significantly improving predictive performance. Subsequently, Yin et al. confirmed the effectiveness of VMD by combining LSTM with each individual component [30].

Li et al. [10] developed a secondary decomposition system using a complete ensemble empirical mode decomposition with adaptive noise (CEEMDAN), neural network estimation time entropy (NNetEn), and VMD enhanced by the northern goshawk optimization (NGO) algorithm. The kernel extreme learning machine (KELM) was optimized by the artificial rabbits optimization (ARO) algorithm with error correction (EC). High- and low-frequency IMF components were separated using NNetEn following CEEMDAN decomposition. VMD, fine-tuned by NGO, performed a secondary decomposition on all high-frequency components. All decomposed components were then predicted using ARO-KELM, with error correction included to further improve accuracy.

Dai et al. [11] introduced optimized variational mode decomposition (OVMD), where VMD was fine-tuned using the bat algorithm (BA). The predictor for each subsequence combined the basic LSTM network with the BiLSTM network.

Guo et al. [31] proposed a hybrid model that utilizes VMD and autocorrelation for long-term traffic flow prediction, wherein VMD is used to decompose data into intrinsic mode functions and extract features to capture traffic flow changes. They incorporated a correction module with a convolutional layer and used it alongside an autocorrelation mechanism to build an encoder and decoder for feature extraction and fusion.

In general, the effectiveness of traffic flow predictions is enhanced by combining data decomposition methods with prediction models. However, the aforementioned studies failed to address potential tasks that may further improve predictive performance. First, most prior studies did not consider improving the speed of optimization-algorithm-based VMD. Second, they did not consider selecting an appropriate model for specific characteristics; instead, each of the aforementioned studies used a single model for all subsequences after decomposition.

3. Proposed Work

3.1. Overall Architecture

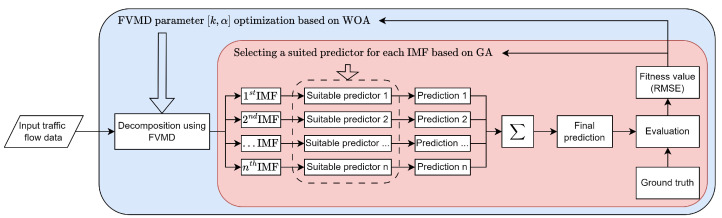

This study proposes a novel approach to enhance the performance of traffic flow data prediction by introducing FVMD and WOA-GA. The overall architecture of the proposed method is illustrated in Figure 1. Specifically, we replaced the ADMM in VMD with fast ADMM to enhance convergence speed, accelerating the decomposition of input traffic flow data to IMFs. Furthermore, the proposed WOA-GA fine-tunes the FVMD parameters, including K and , and selects a suitable machine learning (ML) model for each IMF. The list of candidate ML models includes the gated recurrent unit (GRU) [32], bidirectional GRU (BiGRU) [33], LSTM [34], and BiLSTM [35]. Finally, the predictions from all branches are fused to generate the final prediction. The RMSE, employed to compare the final prediction to the ground truth, served as the fitness value for WOA-GA.

Figure 1.

The overall architecture of our approach.

3.2. FVMD Algorithm

VMD is a signal-smoothing technique, wherein nonlinear historical traffic flow data are smoothed and broken down into some bits of comparatively stable data. This is crucial when predicting traffic flow, as predictive performance can be significantly enhanced if the input model contains both smooth and stable data. Most prediction models are not particularly successful in predicting non-stationary data.

The fundamental structure of a VMD is a variational algorithm that partitions nonstationary signals into many intrinsic mode components with a restricted bandwidth. Although the classical ADMM is widely used to resolve this problem, it has a relatively slow convergence rate of . As an accelerated variant of ADMM, fast ADMM, employs the Nesterov technique, achieving a faster convergence rate of for strongly convex problems [36]. Our proposed FVMD algorithm employs fast ADMM to improve VMD speed.

-

(1)

Formulate the variational problem.

A one-sided spectrum is derived by applying the Hilbert transform to compute the analytical signal for each mode function. The mode spectrum is then transformed into baseband, and the predicted center frequency is determined via exponential adjustment.

| (1) |

where indicates the fractions of the mode produced from signal decomposition, represents the center frequency of these mode fractions, K denotes the number of iterations, k takes values from 1 to K, represents the Dirac distribution, * represents the convolution operation, represents the central frequency, and f denotes the original signal.

-

(2)

Transform the confined variational problem into an unconstrained variational problem.

Equation (2) represents the modified Lagrange expression, incorporating a quadratic penalty factor and Lagrange multiplicative operator derived from Equation (1):

| (2) |

where denotes the penalty factor, and denotes the Lagrangian multiplier.

-

(3)

Solve the unconstrained variational problem using Fast ADMM

I. Initialize , , , , n = 0, and k = 1.

II. Update modes and frequencies using Equations (3) and (4) for each K, and then update using Equation (5).

| (3) |

| (4) |

Following each iteration, derive the modalities and central frequencies and update the Lagrangian multiplier using the following equation:

| (5) |

where denotes the update rate.

III. Update Nesterov stepsize using Equation (6)

| (6) |

IV. Update the modalities and central frequencies by Equations (7) and (8) for each K, then update the Lagrangian multiplier by Equation (9).

| (7) |

| (8) |

| (9) |

V. Evaluate whether the iterative termination condition is satisfied using (10). If it is, the iteration is halted, and the result is produced. Otherwise, increment the iteration number n by 1 and return to Step II.

| (10) |

where is the discriminant accuracy parameter.

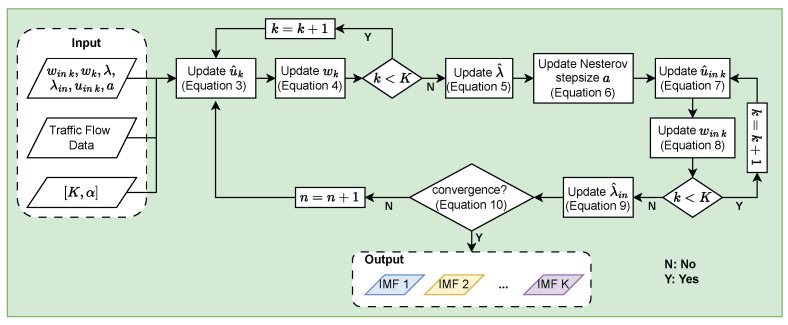

Figure 2 depicts a flowchart of VMD using fast ADMM.

Figure 2.

Flowchart of FVMD algorithm.

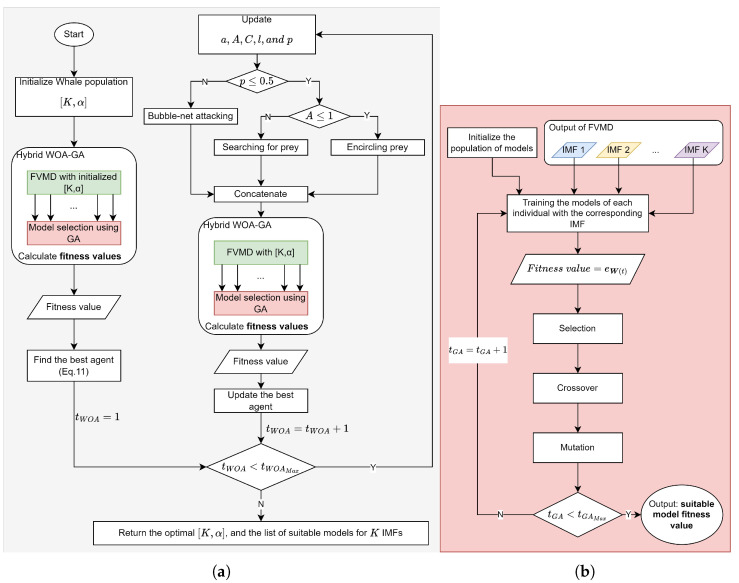

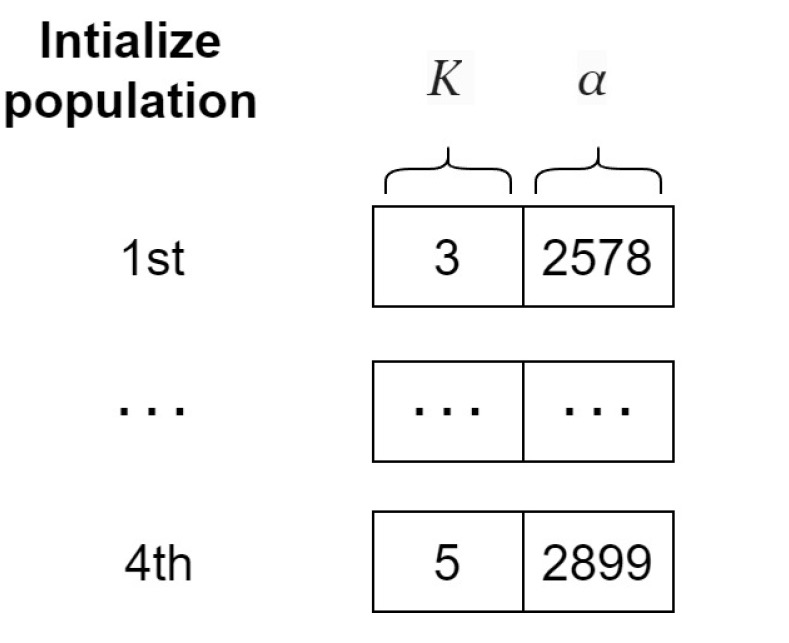

3.3. FVMD Optimized by GA-WOA

The selection of an appropriate number of decompositions (K) and values is crucial when using FVMD. To address this, we employed WOA, proposed by Mirjalili et al. [37], to refine the FVMD hyperparameters. Following decomposition, each IMF has different characteristics, necessitating the selection of a suitable prediction model. The GA, introduced by Professor J. Holland in 1975, solves this problem by selecting an ML model from a list including the GRU [32], BiGRU [33], LSTM [34], and BiLSTM [35] for each IMF. The combined WOA-GA approach simultaneously ensures optimal parameter tuning and model selection for each IMF. The architecture of WOA-GA-optimized FVMD is illustrated in Figure 3. Specifically, Figure 3a illustrates the WOA-facilitated fine-tuning of model parameters with the aid of the GA for computing the fitness value. The GA-based ML model selection for the calculation is illustrated in Figure 3b. The output of these processes is the optimal combination of hyperparameters with the lowest fitness value, along with the corresponding models. The WOA-GA process can be explained as follows:

-

I.

Initialization

Figure 3.

Overview of WOA-GA-optimized FVMD (a) WOA-GA; (b) GA.

To fine-tune FVMD and select an adaptive model for each IMF, we set the total number of iterations for both WOA and GA () to 10. Additionally, through comprehensive experiments, we determined the optimal number of whales in the WOA and GA populations to be 10. The specific range for each FVMD hyperparameter that requires fine-tuning was determined as follows:

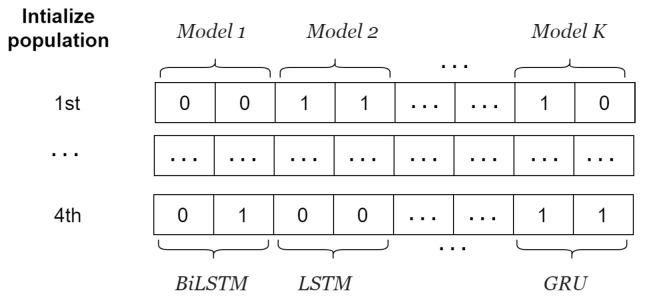

First, in Figure 3a, four potential whales are initialized randomly from the input WOA population, as depicted in Figure 4.

-

II.

Calculating the fitness value

Figure 4.

An instance of input WOA population.

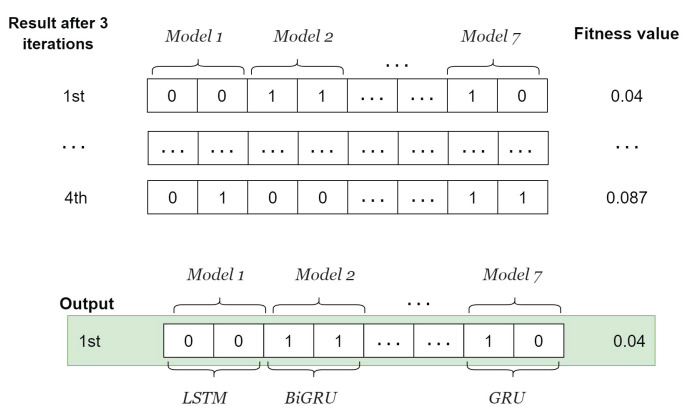

The fitness value is calculated by decomposing the traffic flow data into IMFs using FVMD, as illustrated in Figure 2, with derived from the whale population. The outputs of FVMD corresponding to each whale are IMFs. The GA processes these IMFs by selecting suitable models to generate corresponding predictions. The GA population consists of four individuals, each of which has genes. Specifically, every pair of genes shows the model for each IMF, as illustrated in Figure 5. These lists are then updated using the GA until the most suitable model is obtained. The list of candidate ML models is denoted as follows.

LSTM: 00

BiLSTM: 01

GRU: 11

BiGRU: 10

Figure 5.

An instance of input GA population for adaptive model selection.

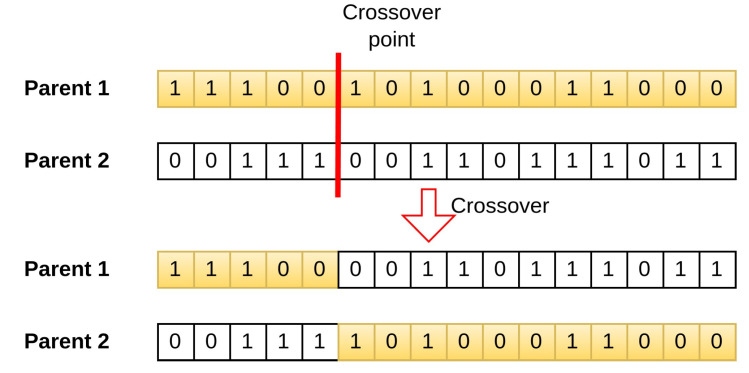

The GA involves three main operations: selection, crossover, and mutation. During selection, chromosomes are selected for reproduction based on their fitness, with those with higher fitness assigned a higher chance of becoming parents. These parents then undergo crossover to produce new offspring by exchanging segments of their chromosomes at randomly selected points. Figure 6 illustrates the process.

Figure 6.

Crossover operator of GA.

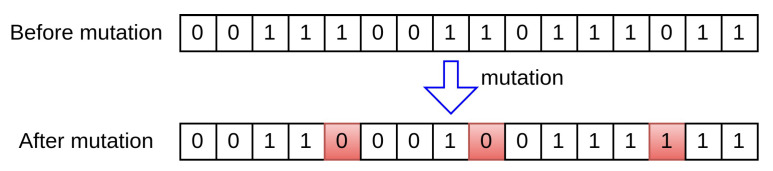

Mutation occurs after the crossover operation. This process involves randomly altering one or more genes to generate new offspring, thereby creating new adaptive solutions and avoiding local optima. Specific mutation operations are illustrated in Figure 7. Over multiple generations, the GA converges to the optimal solution.

Figure 7.

Mutation operator of GA.

Following FVMD and GA, each suitable model corresponding to each decomposed IMF is employed as a predictor to create a prediction for its IMF. After that, all predictions are summed up to form the final prediction that is compared with the ground truth using RMSE. The fitness function for both WOA and GA, as defined by Equation (11), is

| (11) |

where represents the prediction RMSE (Equation (23)) of all IMFs, and represents the model selected for each IMF.

With the list of fitness values for the whale population, the best agent is found, initiating a WOA iteration.

-

III.

Evaluating the iterative termination condition.

If , halt the iteration and return the optimal FVMD parameters along with the best-fit model for each corresponding IMF. Otherwise, increment the iteration number by 1, and update the whale population and WOA parameters.

-

IV.

Updating the whale population

In the WOA-GA-optimized FVMD iteration, the whales update their positions using one of three search strategies: surrounding prey, spiral bubble-net predation, or searching for prey. The selection of strategy depends on the values of p and , as shown in Figure 3a.

Surrounding prey

The optimal position is determined by selecting the location of the whale nearest to the target. Once the optimal position is established, other whales move towards it. The equation for encircling prey behavior is expressed as follows:

| (12) |

| (13) |

where t denotes the current iteration, denotes the current optimal position vector of an individual whale, and denotes the next position vector of the whale. The variable represents the distance between the whale and its prey. The coefficients A and C are calculated using the following equations:

| (14) |

| (15) |

| (16) |

where and represent random numbers between 0 and 1, the variable a decreases linearly from 2 to 0, and is the maximum number of iterations.

Spiral bubble-net predation

The following mathematical model is used to simulate humpback whale hunting behavior in the form of a spiral:

| (17) |

| (18) |

Equation (17) expresses the distance between the prey and the whale ith, where the constant variable b is used to define the spiral shape with a value of 1, and l is a random value between and 1.

When circling their prey, whales use contracted envelopes and spiral models. A mathematical model can be used to simulate this behavior with a chance of updating positions. The envelope gradually decreases in size during the circling process.

| (19) |

where p is a random probability variable between 0 and 1.

Searching for prey

When searching for pray, humpback whales swim randomly relative to each other. This behavior can be mathematically modeled as follows:

| (20) |

| (21) |

where are the positions of randomly selected whales. When is greater than 1, the whales must keep themselves away from their prey, and all whale positions are updated using the randomly generated . This strategy is helpful for identifying more appropriate prey species.

After updating the positions of whales, the fitness value calculated in Step II is again applied to determine the optimal whale. These iterative steps minimize the fitness value to determine optimal parameters for FVMD and the best-fit model for each IMF.

-

V.

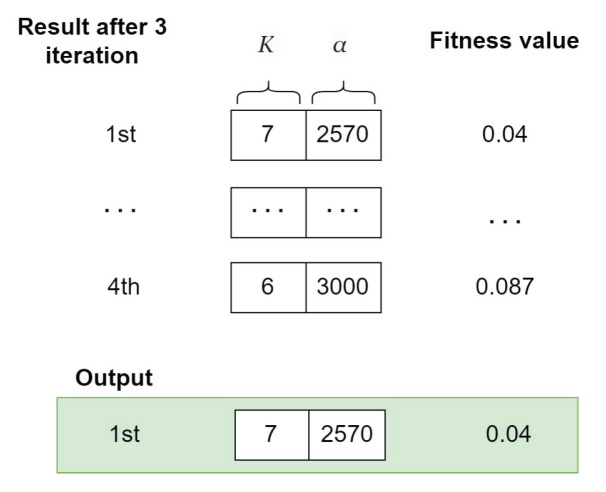

Output Selection for GA and WOA

After four iterations, the whale with the best fitness score is identified, representing the optimal FVMD parameters and a suitable model list for a given dataset. Figure 8 illustrates the selection of an optimal whale.

Figure 8.

Instance of WOA output.

Similarly, the model list with the optimal FVMD parameters and best fitness score is selected as the GA output, as illustrated in Figure 9.

Figure 9.

Instance of GA output.

The final output of WOA-GA is the combination of the outputs shown in Figure 8 and Figure 9, representing the optimal FVMD parameters and corresponding optimal model list.

4. Experiments and Analysis

4.1. Datasets

To evaluate the performance of the proposed method through experiments, two distinct real-life traffic datasets are used: PeMSD08 and the Metro Interstate Traffic Volume dataset.

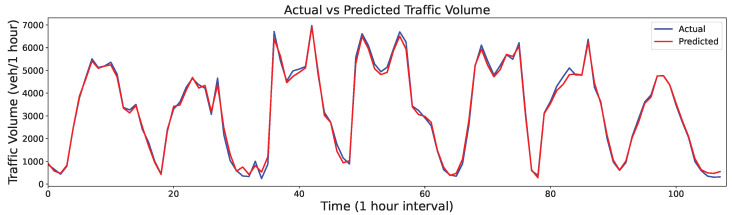

The Interstate Traffic Dataset https://archive.ics.uci.edu/dataset/492/metro+interstate+traffic+volume (Dataset 1; accessed on 28 August 2024) provides hourly traffic volume data for the westbound I-94, a major interstate highway in the United States connecting Minneapolis and St. Paul, Minnesota. The Minnesota Department of Transportation (MnDOT) collected the data from 2012 to 2018 at a station midway between the two cities. The dataset encompasses nine features representing traffic volume, holiday status, temperature, and weather. By adopting the same setup as in [26], we randomly sampled 720 continuous data points for our experiments, with 600 used for training and 120 allocated for testing (Figure 10).

Figure 10.

Traffic flow of dataset 1.

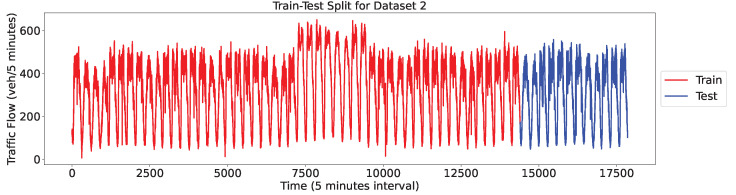

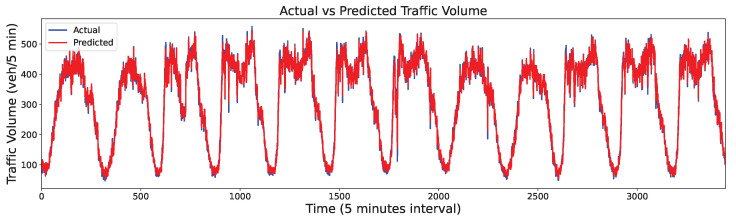

The PeMSD dataset contains information on highway traffic in California, collected using the Caltrans Performance Measurement System in real time every 30 s and then aggregated into 5-min intervals. The dataset includes more than 39,000 detectors deployed on highways in major metropolitan areas, along with geographic information about the sensor stations. For our experiment, we used the PEMDS08 [38] variant (Dataset 2) of PEMDS, wherein data were recorded from July to August 2016 using 170 different detectors. We selected 14,400 data points for training (corresponding to 50 days, approximately 80% of the data) and 3456 data points for testing (corresponding to 12 days, approximately 20% of the data), as shown in Figure 11. The detailed statistics information of the two datasets is shown in Table 2.

Figure 11.

Traffic flow of dataset 2.

Table 2.

Data characteristics for traffic flow on Dataset 1 and 2.

| Dataset | Minimum | Maximum | Average | Std | Unit |

|---|---|---|---|---|---|

| 1 | 231 | 7118 | 3237.40 | 2072.45 | vehicles per hour |

| 2 | 6 | 650 | 329.84 | 145.94 | vehicles per 5 min |

In traffic flow prediction, a fixed span of past data points—known as a history window—is used to generate predictions about future values. Throughout the experiments, a fixed history window size of 12 was used for both datasets. This means that the models were trained and evaluated using the last 12 time steps of the historical data to predict future values. After being decomposed into IMFs, the data are split into training and testing sets; details of the train/test split are shown in Table 3. Before being fed into the prediction model, the data are normalized using the min–max scaling method to make sure that the data are within the range of [0, 1].

Table 3.

Description of input-output structure for the training and testing sets in the prediction model.

| Subset | Input | Output |

|---|---|---|

| Train | ||

| … | … | |

| Test | ||

| … | … |

4.2. Time Comparison of FVMD and VMD

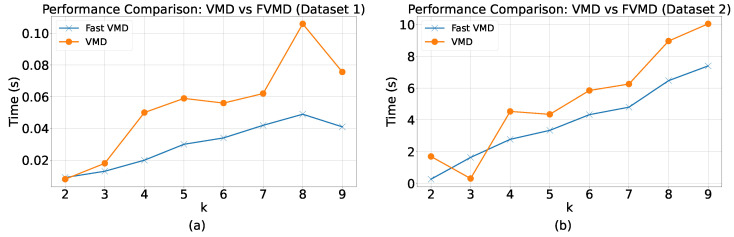

We investigated the performance of standard VMD and FVMD on the two datasets to compare their running times under varying conditions. During this process, the number of subsequences K was alternated between 2 and 9, and the alpha value was fixed at 2000.

Figure 12 presents the time consumption of FVMD and VMD under different values of K. For both datasets, the processing time required by both VMD and FVMD increased along with the number of subsequences. As expected, higher K values typically incur a higher computational complexity for the signal decomposition process.

Figure 12.

Time Comparison of VMD and FVMD Across Different K Values on Two Datasets. (a) Dataset 1, (b) Dataset 2.

For Dataset 1, the general trend in terms of processing time was better for FVMD than VMD. Given smaller values of K, the two algorithms exhibited similar time costs, as the overall computational complexity of VMD might not be sufficiently high for the adaptive penalty update of fast ADMM to provide a significant advantage over classic ADMM. However, the difference between the two algorithms’ processing times increased alongside K.

For Dataset 2, an exception was observed at K = 3, where FVMD performed slightly worse than VMD, proving that the stability of FVMD is not always maintained. However, K = 3 was not the optimal K for Dataset 2, and the general trend time of FVMD was better than that of VMD.

4.3. Comparison Experiment

4.3.1. Evaluation Metrics

To evaluate the prediction results, we used the MSE as the primary metric, along with the RMSE, MAE, and MAPE as references. The following formulas were used:

| (22) |

| (23) |

| (24) |

| (25) |

where n denotes the number of data points within the test set, denotes the ground truth, and denotes the prediction. Because all of these metrics represent error measures, they are inversely correlated with model performance. As an additional evaluation metric, we measured the running time (RT) of each variant.

4.3.2. Baseline Settings and Parameter Configurations

To evaluate the effectiveness of the proposed parameter optimization and adaptive model selection strategy, we compared the performance between various decomposition and prediction configurations across the two datasets. In the proposed method (FVMD-WOA-GA), WOA is utilized to select the optimal number of subsequences K and penalty parameter . Subsequently, FVMD is applied to decompose the time series into IMFs. Finally, the GA is employed to select the most suitable predictor for each IMF subsequence, ensuring that the prediction process is tailored to the characteristics of each component of the signal. For a comprehensive comparison, we set the following baselines:

FVMD-WOA-Random: This variant randomly chooses a predictor for each IMF following the decomposition phase with FVMD (GA not applied).

FVMD-WOA-LSTM: This variant uses LSTM to predict each IMF after the decomposition phase with FVMD (GA not applied).

FVMD-WOA-BiLSTM: This variant uses BiLSTM to predict each IMF after the decomposition phase with FVMD (GA not applied).

FVMD-WOA-GRU: This variant uses GRU to predict each IMF after the decomposition phase with FVMD (GA not applied).

FVMD-WOA-BiGRU: This variant uses BiGRU to predict each IMF after the decomposition phase with FVMD (GA not applied).

LSTM: Simple LSTM model using the original traffic flow as input (without FVMD).

BiLSTM: Simple BiLSTM model using the original traffic flow as input (without FVMD).

GRU: Simple GRU model using the original traffic flow as input (without FVMD).

BiGRU: Simple BiGRU model using the original traffic flow as input (without FVMD).

For simplicity, these variants are referred to as FVMD-WOA-GA #1, FVMD-WOA-Random #2, FVMD-WOA-LSTM #3, FVMD-WOA-BiLSTM #4, FVMD-WOA-GRU #5, FVMD-WOA-BiGRU #6, LSTM #7, BiLSTM #8, GRU #9, and BiGRU #10.

For WOA, the parameter setup included a maximum of four iterations and a population size of 10 whales. The parameter K was constrained to a range of 2 to 9, whereas the parameter ranged from 1500 to 5000. For the GA, the number of iterations was set to 10 with a population size of 10 and crossover rate of 0.9. For the RNN predictor, the setup included 16 units in the hidden layer, training for 100 epochs with a batch size of 1024, dropout rate of 0.1, and learning rate of 0.01. For FVMD, the parameters were set as follows: tau = 0.0, DC = 0, initialization method = 1, and convergence tolerance = .

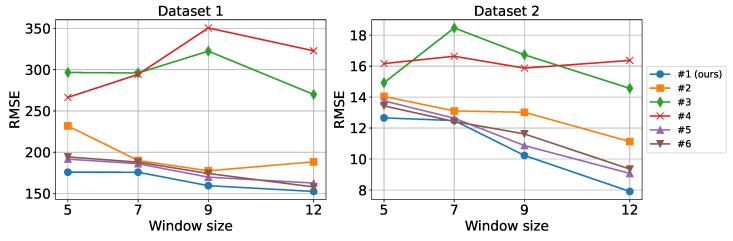

4.3.3. Performance with Different History Window Sizes

Figure 13 illustrates the performance of different model variants across various history window lengths. We evaluated the model using window lengths of 5, 7, 9, and 12 samples to determine the optimal amount of historical data required for accurate forecasting. As shown in the figure, model performance generally increased along with window size. As expected, the more historical data samples are provided, the more information the model can rely on to produce more accurate predictions. The proposed FVMD-WOA-GA model outperformed the other methods in terms of window size for both datasets. The time interval between two continuous samples in the first dataset is one hour, whereas, in the second dataset, it is five minutes. As a result, the second dataset can retain more information about the non-stationary traffic flow dataset (traffic light stops, congestion, and so on) in a shorter period of time than the first, which maps more general patterns. Therefore, in dataset 2, methods can capture more valuable information, resulting in more improvement when increasing the history window size compared to the first dataset.

Figure 13.

Performance with different history window sizes.

4.3.4. Impact of Each Subsequence

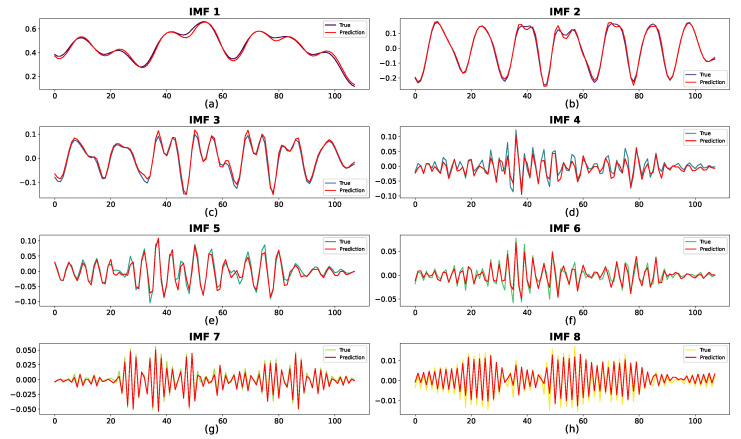

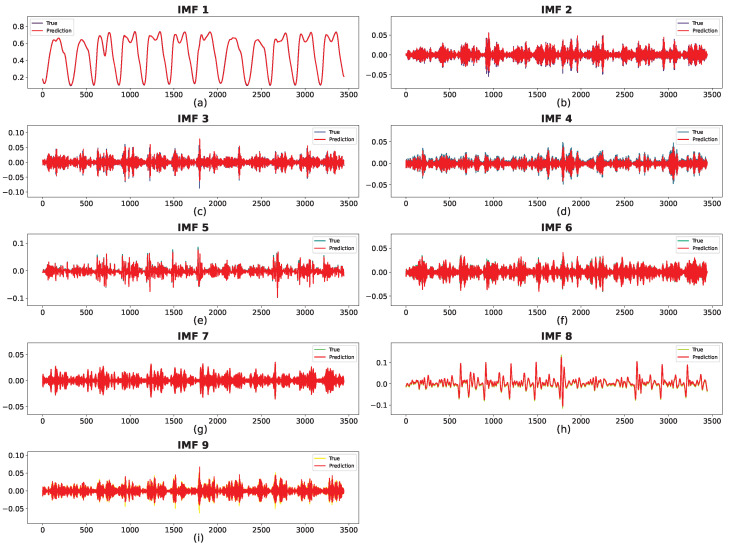

The prediction results for the two datasets are shown in Figure 14 and Figure 15, where the red curve indicates the predicted sequence. The actual curve is completely covered by the red curve, demonstrating the prediction accuracy of the proposed method for each IMF. In Figure 14, despite some bias at the extreme points for IMF4, IMF7, and IMF8, the overall patterns of the predicted and real values are closely aligned. For IMF1–IMF3, the forecasted values are remarkably consistent with the observed trends. In addition, as shown in Figure 15, the predictions for all subsequences are nearly identical to the ground truth.

Figure 14.

Prediction results of subsequences on Dataset 1 with best suitable K = 8, with corresponding predictors: (a) BiGRU, (b) BiGRU, (c) GRU, (d) LSTM, (e) LSTM, (f) BiLSTM, (g) BiGRU, (h) BiGRU.

Figure 15.

Prediction results of subsequences on Dataset 2 with best suitable K = 9, with corresponding predictors: (a) BiGRU, (b) BiGRU, (c) GRU, (d) BiLSTM, (e) BiGRU, (f) BiGRU, (g) GRU, (h) BiLSTM, (i) BiGRU.

Ultimately, FVMD was used to efficiently extract and process traffic volume fluctuations in both datasets, significantly enhancing predictive performance. Thus, the combination of FVMD and ML model optimization significantly improved the accuracy of traffic volume prediction.

The FVMD plays a crucial role in decomposing these raw data into multiple simpler sub-signals (IMFs), each capturing different aspects of the traffic flow, such as trends, periodic patterns, and noise. Each IMF requires a tailored predictor to capture its unique characteristics fully and play a different role in the final results. To evaluate their contribution, we sequentially remove each IMF from the final prediction and measure the increase in RMSE. The detailed results are shown in Table 4; in both datasets, the first IMF plays the most crucial role in the final prediction, with a performance loss when not integrating them of approximately 3058.19 and 329.61 in RMSE, corresponding to Datasets 1 and 2. In both datasets, IMFs with a high impact on the final results tend to have a smoother shape in their signal compared to the rest (IMF1 Figure 14a, IMF2 Figure 14b, and IMF3 Figure 14c on Dataset 1; IMF1 Figure 15, IMF8 Figure 15h for Dataset 2). The smoother signal contains the general trend of traffic flow data, resulting in more contribution than the other IMFs, which capture the noise and periodic patterns.

Table 4.

Impact of removing each IMF on the prediction error (measured in RMSE) for both datasets. The RMSE values of variant #1 (without any IMF removed) are provided in the “None” row. The values in parentheses indicate the increase in RMSE due to the removal of each corresponding IMF. Value of the most impact IMF are shown in bold.

| Removed IMF | Dataset 1 | Dataset 2 |

|---|---|---|

| RMSE | RMSE | |

| None | 152.43 | 7.91 |

| 1 | 3210.62 (↑ 3058.19) | 337.52 (↑ 329.61) |

| 2 | 954.45 (↑ 802.02) | 11.16 (↑ 3.25) |

| 3 | 498.15 (↑ 345.72) | 12.47 (↑ 4.57) |

| 4 | 268.19 (↑ 115.75) | 10.19 (↑ 2.28) |

| 5 | 300.55 (↑ 148.12) | 14.34 (↑ 6.43) |

| 6 | 237.56 (↑ 85.13) | 10.89 (↑ 2.98) |

| 7 | 234.18 (↑ 81.74) | 10.07 (↑ 2.16) |

| 8 | 163.69 (↑ 11.25) | 18.93 (↑ 11.02) |

| 9 | - | 11.43 (↑ 3.52) |

4.3.5. Results of Proposed Method

The final traffic volume output value was obtained by integrating the prediction results of all IMFs from Figure 14 and Figure 15, as shown in Figure 16 and Figure 17. Although the predictions exhibited some distortions at extreme points across the two datasets, they generally followed the trends of the actual values. Thus, the proposed FVMD-WOA-GA model demonstrated good prediction accuracy and robustness for both datasets, even when analyzing traffic volume data with dramatic fluctuations.

Figure 16.

Final prediction results on Dataset 1.

Figure 17.

Final prediction results on Dataset 2.

4.3.6. Analysis of Comparative Results

Table 5 and Table 6 present a comparison of prediction errors measured by five metrics (MAE, MAPE, MSE, RMSE, and RT) on the two datasets (best values for each metric are shown in bold). We compared our proposed approach with four other variants (FVMD-WOA-Random, FVMD-WOA-LSTM, FVMD-WOA-BiLSTM, FVMD-WOA-GRU, and FVMD-WOA-BiGRU) that use only one model to predict traffic flow for all subsequences but still fine-tune the FVMD parameters by WOA.

Table 5.

Comparison results on Dataset 1.

| Abbreviation | Model | MAE | MAPE | MSE | RMSE | RT (s) |

|---|---|---|---|---|---|---|

| #1 (ours) | FVMD-WOA-GA | 123.12 | 0.09 | 23,235.66 | 152.43 | 432.59 |

| #2 | FVMD-WOA-Random | 146.23 | 0.12 | 35,472.39 | 188.34 | 124.21 |

| #3 | FVMD-WOA-LSTM | 217.87 | 0.15 | 72,897.83 | 270.00 | 117.49 |

| #4 | FVMD-WOA-BiLSTM | 249.83 | 0.18 | 104,269.15 | 322.91 | 99.58 |

| #5 | FVMD-WOA-GRU | 131.98 | 0.10 | 26,434.11 | 162.59 | 116.36 |

| #6 | FVMD-WOA-BiGRU | 124.73 | 0.11 | 24,923.34 | 157.87 | 122.57 |

| #7 | LSTM | 689.94 | 0.44 | 1,100,158 | 1048.88 | 0.282 |

| #8 | BiLSTM | 702.19 | 0.45 | 1,092,327 | 1045.14 | 0.29 |

| #9 | GRU | 713.00 | 0.45 | 1,137,029 | 1066.32 | 0.30 |

| #10 | BiGRU | 705.55 | 0.46 | 1,096,488 | 1047.13 | 0.278 |

Table 6.

Comparison results on Dataset 2.

| Abbreviation | Model | MAE | MAPE | MSE | RMSE | RT (s) |

|---|---|---|---|---|---|---|

| #1 (ours) | FVMD-WOA-GA | 6.17 | 0.027 | 62.55 | 7.91 | 2828.57 |

| #2 | FVMD-WOA-Random | 8.74 | 0.032 | 123.79 | 11.13 | 1176.37 |

| #3 | FVMD-WOA-LSTM | 11.21 | 0.033 | 212.13 | 14.56 | 787.46 |

| #4 | FVMD-WOA-BiLSTM | 12.67 | 0.037 | 267.85 | 16.37 | 1108.84 |

| #5 | FVMD-WOA-GRU | 7.17 | 0.046 | 82.36 | 9.08 | 914.78 |

| #6 | FVMD-WOA-BiGRU | 7.38 | 0.054 | 87.44 | 9.35 | 865.00 |

| #7 | LSTM | 23.32 | 0.09 | 1011.65 | 31.81 | 0.91 |

| #8 | BiLSTM | 23.47 | 0.09 | 1024.31 | 32.00 | 1.18 |

| #9 | GRU | 22.38 | 0.08 | 927.81 | 30.46 | 0.93 |

| #10 | BiGRU | 21.98 | 0.08 | 891.48 | 29.86 | 0.92 |

The results of this experiment show that the FVMD-WOA-GA model consistently outperformed the other variants across all error metrics, indicating its reliability and accuracy in predicting traffic flow. In terms of RMSE, the proposed model demonstrated significant improvements over the second-best-performing configurations across both datasets. For Dataset 1, the proposed model achieved a reduction in RMSE of approximately 3.44% compared with the FVMD-WOA-BiGRU model. On Dataset 2, the proposed model achieved an even greater reduction in RMSE of approximately 12.87% compared to the second-best method (FVMD-WOA-GRU). These findings provide strong evidence for the effectiveness of the proposed model in the field of traffic flow prediction.

In contrast, the configurations that used fixed models for all components had higher error rates, suggesting that the use of a single model for all components might not effectively capture the complexities and characteristics of each subsequence of traffic flow data. Moreover, when predicting traffic flow with just raw signals, the methods have very poor performance with very high RMSE metric compared to the standard deviation of the dataset (variants #6 to #10).

It is worth mentioning that the error rates did not increase uniformly across all models, which can be attributed to the varying effectiveness of different models in capturing traffic flow patterns. For example, the BiLSTM and BiGRU models, which can capture both forward and backward dependencies, achieved lower error rates than the LSTM and GRU models. The GRU and BiGRU models have fewer parameters than the LSTM and BiLSTM models, as they combine the forget and input gates into a single update gate, thereby reducing computational complexity; thus, they exhibit better generalizability and avoid overfitting. The FVMD-WOA-Random configuration, which randomly selects a predictor for each IMF, exhibited relatively inconsistent performance on both datasets.

Owing to the use of GA for predictor selection and WOA for the FVMD parameter selection, the proposed method takes longer to converge, resulting in a longer running time than methods that use a single-type predictor and single models. However, it is essential to note that, in practice, this parameter selection process can be conducted offline to identify the best parameters tailored to the characteristics of the traffic flow data. Once the optimal parameters are determined, the inference phase can be executed in real-time, where the running time is acceptable for practical applications.

To measure the inference time of the proposed methods, we take WOA-GA-integrated variants to predict each sample in the test set and then calculate their average inference time; the results are shown in Table 7. For all WOA-GA-integrated methods, the average inference time is under 10 ms for both datasets. In our experiment, predictors work sequentially. However, in a real 5G environment, if the inference phase can be processed in parallel, the total inference time of the system can be significantly reduced to approximately the average inference time of a single predictor (around 1 ms according to Table 7). These results demonstrate the practicality of the proposed method in real-time environments.

Table 7.

Average inference times of different methods across two datasets. “K” represents the best number of IMFs selected for each model. At the same time, “Avg” shows the average inference time (in ms) per sample in the test set, and “Per Predictor” indicates the average inference time for each predictor (in ms).

| Abbreviation | Model | Dataset 1 | Dataset 2 | ||||

|---|---|---|---|---|---|---|---|

| K | Avg (ms) | Per Predictor (ms) | K | Avg (ms) | Per Predictor (ms) | ||

| #1 (ours) | FVMD-WOA-GA | 8 | 8.20 | 1.03 | 9 | 7.87 | 0.87 |

| #2 | FVMD-WOA-Random | 8 | 7.81 | 0.98 | 9 | 8.11 | 0.90 |

| #3 | FVMD-WOA-LSTM | 9 | 9.34 | 1.04 | 5 | 5.03 | 1.01 |

| #4 | VMD-WOA-BiLSTM | 4 | 4.20 | 1.05 | 8 | 7.74 | 0.97 |

| #5 | FVMD-WOA-GRU | 8 | 7.28 | 0.91 | 7 | 6.01 | 0.86 |

| #6 | FVMD-WOA-BiGRU | 8 | 8.03 | 1.00 | 8 | 7.06 | 0.88 |

5. Conclusions

In this paper, we propose FVMD-WOA-GA as a new method for predicting traffic flow in 5G networks. This method uses FVMD optimized by WOA for parameter optimization, along with GA, to select the best model for each decomposed subsequence. Our results showed that the combination of these models outperformed all other methods on both datasets by effectively capturing different temporal dependencies. Furthermore, the use of fast ADMM improves the efficiency of VMD, reducing the overall time consumption. Our findings highlight the potential of our proposed model to drive accurate and real-time traffic flow predictions, which is crucial for optimizing traffic management and planning in 5G-enabled ITS. By facilitating quicker, more precise decisions, this approach can support dynamic traffic routing, congestion management, and resource allocation in smart cities. Future extensions could include integrating the approach with real-time traffic planning systems on autonomous vehicles, which would enable them to avoid coming to congestion areas and leveraging 5G’s low latency for real-time decision-making to find the optimal route. Additionally, integrating advanced machine learning models, such as Transformer networks, could improve predictive accuracy for complex traffic patterns. We tend to design an encoder of the transformer to serve as FVMD and multiple decoders that are tailored to each feature of each decomposed branch.

Author Contributions

Conceptualization, T.M.N. and H.H.-P.V.; methodology, T.M.N. and H.H.-P.V.; formal analysis, K.A.B.; writing—original draft preparation, H.H.-P.V. and K.A.B.; writing and editing, T.M.N. and K.A.B.; review M.Y., T.M.N. and K.A.B.; visualization, K.A.B.; software, K.A.B.; supervision, M.Y.; funding acquisition, M.Y. All authors have read and agreed to the published version of the manuscript.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Suggested Data Availability Statements are available in Section 4.1.

Conflicts of Interest

The authors declare no conflicts of interest.

Funding Statement

This work was supported in part by the Institute of Information and Communications Technology Planning and Evaluation (IITP) funded by the Korean Government [Ministry of Science and ICT (MSIT)], South Korea, through the Development of Candidate Element Technology for Intelligent 6G Mobile Core Network under Grant RS-2022-II221015; and in part by MSIT under the Information Technology Research Center (ITRC) Support Program Supervised by IITP under Grant IITP-2024-2021-0-02046.

Footnotes

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

References

- 1.Zhou S., Wei C., Song C., Pan X., Chang W., Yang L. Short-term traffic flow prediction of the smart city using 5G internet of vehicles based on edge computing. IEEE Trans. Intell. Transp. Syst. 2022;24:2229–2238. doi: 10.1109/TITS.2022.3147845. [DOI] [Google Scholar]

- 2.Zheng Y., Wang S., Dong C., Li W., Zheng W., Yu J. Urban road traffic flow prediction: A graph convolutional network embedded with wavelet decomposition and attention mechanism. Phys. A Stat. Mech. Its Appl. 2022;608:128274. [Google Scholar]

- 3.Xie Y., Zhang Y., Ye Z. Short-term traffic volume forecasting using Kalman filter with discrete wavelet decomposition. Comput.-Aided Civ. Infrastruct. Eng. 2007;22:326–334. doi: 10.1111/j.1467-8667.2007.00489.x. [DOI] [Google Scholar]

- 4.Jiang X., Adeli H. Wavelet packet-autocorrelation function method for traffic flow pattern analysis. Comput.-Aided Civ. Infrastruct. Eng. 2004;19:324–337. doi: 10.1111/j.1467-8667.2004.00360.x. [DOI] [Google Scholar]

- 5.Tian Z. Approach for short-term traffic flow prediction based on empirical mode decomposition and combination model fusion. IEEE Trans. Intell. Transp. Syst. 2020;22:5566–5576. [Google Scholar]

- 6.Chen X., Lu J., Zhao J., Qu Z., Yang Y., Xian J. Traffic flow prediction at varied time scales via ensemble empirical mode decomposition and artificial neural network. Sustainability. 2020;12:3678. doi: 10.3390/su12093678. [DOI] [Google Scholar]

- 7.Wang Y., Zhao L., Li S., Wen X., Xiong Y. Short term traffic flow prediction of urban road using time varying filtering based empirical mode decomposition. Appl. Sci. 2020;10:2038. doi: 10.3390/app10062038. [DOI] [Google Scholar]

- 8.Dragomiretskiy K., Zosso D. Variational mode decomposition. IEEE Trans. Signal Process. 2013;62:531–544. doi: 10.1109/TSP.2013.2288675. [DOI] [Google Scholar]

- 9.Zhao K., Guo D., Sun M., Zhao C., Shuai H. Short-term traffic flow prediction based on VMD and IDBO-LSTM. IEEE Access. 2023;11:97072–97088. [Google Scholar]

- 10.Li G., Deng H., Yang H. Traffic flow prediction model based on improved variational mode decomposition and error correction. Alex. Eng. J. 2023;76:361–389. doi: 10.1016/j.aej.2023.06.008. [DOI] [Google Scholar]

- 11.Dai G., Tang J., Luo W. Short-term traffic flow prediction: An ensemble machine learning approach. Alex. Eng. J. 2023;74:467–480. doi: 10.1016/j.aej.2023.05.015. [DOI] [Google Scholar]

- 12.Li J., Chen Y., Lu C. Application of an improved variational mode decomposition algorithm in leakage location detection of water supply pipeline. Measurement. 2021;173:108587. doi: 10.1016/j.measurement.2020.108587. [DOI] [Google Scholar]

- 13.Lin C., Li X., Shi T., Sheng J., Sun S., Wang Y., Li D. Forecasting of wind speed under wind-fire coupling scenarios by combining HS-VMD and AM-LSTM. Ecol. Informatics. 2023;77:102270. doi: 10.1016/j.ecoinf.2023.102270. [DOI] [Google Scholar]

- 14.Wu L., Tai Q., Bian Y., Li Y. Point and interval forecasting of ultra-short-term carbon price in China. Carbon Manag. 2023;14:2275576. doi: 10.1080/17583004.2023.2275576. [DOI] [Google Scholar]

- 15.Liu Z., Liu H. A novel hybrid model based on GA-VMD, sample entropy reconstruction and BiLSTM for wind speed prediction. Measurement. 2023;222:113643. doi: 10.1016/j.measurement.2023.113643. [DOI] [Google Scholar]

- 16.Li Y., Li R. A hybrid model for daily air quality index prediction and its performance in the face of impact effect of COVID-19 lockdown. Process Saf. Environ. Prot. 2023;176:673–684. doi: 10.1016/j.psep.2023.06.021. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Zhou M., Yu J., Wang M., Quan W., Bian C. Research on the combined forecasting model of cooling load based on IVMD-WOA-LSSVM. Energy Build. 2024;317:114339. doi: 10.1016/j.enbuild.2024.114339. [DOI] [Google Scholar]

- 18.Zhang Q., Chen S., Fan Z.P. Bearing fault diagnosis based on improved particle swarm optimized VMD and SVM models. Adv. Mech. Eng. 2021;13:16878140211028451. doi: 10.1177/16878140211028451. [DOI] [Google Scholar]

- 19.Geng G., He Y., Zhang J., Qin T., Yang B. Short-Term Power Load Forecasting Based on PSO-Optimized VMD-TCN-Attention Mechanism. Energies. 2023;16:4616. doi: 10.3390/en16124616. [DOI] [Google Scholar]

- 20.Zhang J., Peng Y., Ouyang W., Deng B. Accelerating ADMM for efficient simulation and optimization. ACM Trans. Graph. (TOG) 2019;38:1–21. doi: 10.1145/3355089.3356491. [DOI] [Google Scholar]

- 21.Lu Y., Ma H., Zhang Z., Jiang L., Sun Y., Song Q., Liu Z. Real-time chatter detection based on fast recursive variational mode decomposition. Int. J. Adv. Manuf. Technol. 2024;130:3275–3289. doi: 10.1007/s00170-023-12832-w. [DOI] [Google Scholar]

- 22.Zhang P., Gao D., Lu Y., Kong L., Ma Z. Online chatter detection in milling process based on fast iterative VMD and energy ratio difference. Measurement. 2022;194:111060. doi: 10.1016/j.measurement.2022.111060. [DOI] [Google Scholar]

- 23.Yu C., Yan G., Ruan K., Liu X., Yu C., Mi X. Stochastic Environmental Research and Risk Assessment. Springer; Berlin/Heidelberg, Germany: 2023. An ensemble convolutional reinforcement learning gate network for metro station PM2. 5 forecasting; pp. 1–16. [Google Scholar]

- 24.Zhang Y., Pan G., Chen B., Han J., Zhao Y., Zhang C. Short-term wind speed prediction model based on GA-ANN improved by VMD. Renew. Energy. 2020;156:1373–1388. doi: 10.1016/j.renene.2019.12.047. [DOI] [Google Scholar]

- 25.Huang H., Chen J., Huo X., Qiao Y., Ma L. Effect of multi-scale decomposition on performance of neural networks in short-term traffic flow prediction. IEEE Access. 2021;9:50994–51004. doi: 10.1109/ACCESS.2021.3068652. [DOI] [Google Scholar]

- 26.Yang H., Cheng Y., Li G. A new traffic flow prediction model based on cosine similarity variational mode decomposition, extreme learning machine and iterative error compensation strategy. Eng. Appl. Artif. Intell. 2022;115:105234. doi: 10.1016/j.engappai.2022.105234. [DOI] [Google Scholar]

- 27.Yin L., Liu P., Wu Y., Shi C., Wei X., He Y. ST-VGBiGRU: A Hybrid Model for Traffic Flow Prediction With Spatio-Temporal Multimodality. IEEE Access. 2023;11:54968–54985. doi: 10.1109/ACCESS.2023.3282323. [DOI] [Google Scholar]

- 28.Liu H., Zhang X.Y., Yang Y.X., Li Y.F., Yu C.Q. Hourly traffic flow forecasting using a new hybrid modelling method. J. Cent. South Univ. 2022;29:1389–1402. doi: 10.1007/s11771-022-5000-2. [DOI] [Google Scholar]

- 29.Zhong K., Li W., Jin L., Zhang Y. VMD-HHO-DELM: Decomposition and Optimization Model with Based Deep Extreme Learning Machine Algorithm for Predicting Short-Term Traffic Flow; Proceedings of the 2023 IEEE 5th International Conference on Power, Intelligent Computing and Systems (ICPICS); Shenyang, China. 14–16 July 2023; pp. 1149–1157. [Google Scholar]

- 30.Lu J. An efficient and intelligent traffic flow prediction method based on LSTM and variational modal decomposition. Meas. Sensors. 2023;28:100843. doi: 10.1016/j.measen.2023.100843. [DOI] [Google Scholar]

- 31.Guo K., Yu X., Liu G., Tang S. A long-term traffic flow prediction model based on variational mode decomposition and auto-correlation mechanism. Appl. Sci. 2023;13:7139. doi: 10.3390/app13127139. [DOI] [Google Scholar]

- 32.Chung J., Gulcehre C., Cho K., Bengio Y. Empirical evaluation of gated recurrent neural networks on sequence modeling. arXiv. 20141412.3555 [Google Scholar]

- 33.Li X., Ma X., Xiao F., Xiao C., Wang F., Zhang S. Time-series production forecasting method based on the integration of Bidirectional Gated Recurrent Unit (Bi-GRU) network and Sparrow Search Algorithm (SSA) J. Pet. Sci. Eng. 2022;208:109309. doi: 10.1016/j.petrol.2021.109309. [DOI] [Google Scholar]

- 34.Staudemeyer R.C., Morris E.R. Understanding LSTM—A tutorial into long short-term memory recurrent neural networks. arXiv. 20191909.09586 [Google Scholar]

- 35.Huang Z., Xu W., Yu K. Bidirectional LSTM-CRF models for sequence tagging. arXiv. 20151508.01991 [Google Scholar]

- 36.Goldstein T., O’Donoghue B., Setzer S., Baraniuk R. Fast alternating direction optimization methods. SIAM J. Imaging Sci. 2014;7:1588–1623. doi: 10.1137/120896219. [DOI] [Google Scholar]

- 37.Mirjalili S., Lewis A. The whale optimization algorithm. Adv. Eng. Softw. 2016;95:51–67. doi: 10.1016/j.advengsoft.2016.01.008. [DOI] [Google Scholar]

- 38.Guo S., Lin Y., Feng N., Song C., Wan H. Attention based spatial-temporal graph convolutional networks for traffic flow forecasting; Proceedings of the AAAI Conference on Artificial Intelligence; Honolulu, HI, USA. 27 January–1 February 2019; pp. 922–929. [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Data Availability Statement

Suggested Data Availability Statements are available in Section 4.1.