Abstract

Skin cancer is a common disease that affects people of all ages, and its death toll rises when diagnosis is late. An automated mechanism for early-stage skin cancer detection is therefore needed to reduce mortality. Visual examination with scanning or imaging screening is a common way to detect the disease, but because skin cancer resembles other skin conditions, this approach has limited accuracy. This article introduces an innovative segmentation mechanism, evaluated on the ISIC dataset, that divides skin images into critical and non-critical sections. The main objective of the research is to segment lesions from dermoscopic skin images. The proposed framework works in two steps. The first step pre-processes the image: a bottom-hat filter removes hair, and the image is enhanced using the DCT and a color correction factor. In the second step, a background subtraction method with midpoint analysis segments the image to extract the region of interest, achieving an accuracy of 95.30%. Segmentation is validated by comparing the segmented images with the ground-truth data provided with the ISIC dataset.

Keywords: Background subtraction, Hair removal, Image enhancement, Segmentation, Skin cancer

Introduction

Skin cancer can significantly disrupt individuals’ lifestyles and, in severe cases, can be fatal. The importance of the skin becomes evident in that even minor alterations can affect various organs and body functions [1]. Because the skin is exposed to the outside world, skin contamination and infections are common. Melanoma affects millions of people. It arises from the abnormal growth of skin cells known as melanocytes [2]. Ultraviolet (UV) radiation, including that from tanning beds, is a major cause of this malignancy. Melanocytes produce melanin, which gives skin its color and protects it from damaging UV rays [3]. Individuals with lighter skin have less melanin and are therefore more susceptible to skin cancer, particularly with excessive UV exposure. Melanomas commonly appear black or brown, although they can also be skin-colored, pink, red, purple, blue, or white [4]. Timely identification and treatment of melanoma are usually effective; if left untreated, however, the cancer can progress and metastasize to other organs [5]. Because skin lesions can resemble normal skin, visual examinations during physical screenings are challenging and sometimes lead to incorrect diagnoses [6].

In recent decades, dermoscopy has been employed for the assessment of pigmented skin lesions and has demonstrated enhanced diagnostic precision for melanoma. A meta-analysis has substantiated that dermoscopy surpasses visual inspection alone in terms of accuracy [7]. Dermoscopy, a non-invasive technology utilizing polarized light, generates high-resolution images of skin lesions [8]. Despite the improved diagnostic accuracy of dermoscopy over visual examinations, dermatologists still encounter challenges in interpreting dermoscopy images, primarily due to the common occurrence of artifacts and reflections [9]. Visual identification remains a complex, subjective, time-consuming, and error-prone process, given the difficulty in detecting or defining clear boundaries for many cancerous lesions [10].

Image segmentation is used to identify the border between the lesion and the surrounding skin in cancerous images [11]. Its main objective is to separate groups of connected pixels within a designated region of interest (ROI) so that structural transitions between these groups can be detected more easily. Accurate segmentation is crucial for minimizing error in the subsequent measurement of a lesion’s shape, border, and size features, and hence for reliable and meaningful analysis of its characteristics and more effective diagnosis [12]. Various techniques have been used to segment medical images, including threshold-based, edge-based, region-based, artificial intelligence-based, and active contour-based methods [13–15].

Threshold-based techniques: These approaches hinge on the selection of one or more histogram threshold values to effectively distinguish objects from the background [16].

Edge based: These methods identify region boundaries using edge operators that detect sharp transitions in pixel intensities [17, 18].

Region based: By merging, splitting, or both, pixels are organized into homogeneous regions [19].

AI based: These methods [20] model images as random fields whose parameters are estimated with various optimization techniques, applying artificial intelligence to segmentation.

Active contours: These techniques identify object contours through curve evolution [21, 22].
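To make the threshold-based family concrete, the following is a minimal NumPy sketch of Otsu's method, the histogram-threshold selector used by several of the works reviewed below. It is an illustration, not any cited author's implementation; the function name `otsu_threshold` is hypothetical, and the input is assumed to be an 8-bit grayscale image.

```python
import numpy as np

def otsu_threshold(gray):
    """Return the 8-bit threshold t that maximizes the between-class variance.

    Pixels with value <= t form one class and pixels > t the other.
    """
    hist = np.bincount(np.asarray(gray, dtype=np.uint8).ravel(), minlength=256)
    prob = hist / hist.sum()
    levels = np.arange(256)
    omega = np.cumsum(prob)             # probability of the class "<= t"
    mu = np.cumsum(prob * levels)       # first moment of that class
    mu_total = mu[-1]                   # mean intensity of the whole image
    denom = omega * (1.0 - omega)
    # Between-class variance; set to 0 where one of the classes is empty.
    with np.errstate(divide="ignore", invalid="ignore"):
        sigma_b = np.where(denom > 0, (mu_total * omega - mu) ** 2 / denom, 0.0)
    return int(np.argmax(sigma_b))      # first t with maximal separation

img = np.array([[50, 50, 200, 200]] * 4, dtype=np.uint8)
t = otsu_threshold(img)
print(t)  # 50
```

Because lesions are usually darker than the surrounding skin, a lesion mask under this sketch would be `gray <= t`.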

Although segmentation approaches are important in skin cancer research and detection, they have certain limitations. Many skin cancer segmentation procedures require manual input or supervision, which can be tedious and costly because it often involves outlining the lesion’s boundaries by hand [23]. Skin lesions vary widely in shape, size, texture, and color, and some segmentation methods struggle to represent the complex and diverse features of the many types of skin lesions [24]. Segmentation methods may also suffer from over- or under-segmentation, both of which produce incorrect or inadequate segmentation data that can affect subsequent analysis and diagnosis [19]. To address these issues, this article presents an innovative technique for segmenting medical images: a midpoint segmentation method based on background subtraction, which improves the performance of different classifiers.

Novel Contribution of this Article

A bottom-hat filter is employed to remove noise (mainly hair) from the image, and image quality is improved through color space conversion and the discrete cosine transform (DCT).

A novel midpoint segmentation method is introduced for effectively segmenting skin cancer images.

Using the publicly accessible ISIC dataset, various classifiers are implemented on top of the proposed midpoint segmentation method.

A comparative analysis of different classifiers under different segmentation methods, including the proposed one, is performed.

This study emphasizes the significance of segmenting malignant tumors in dermoscopic images, as the outcomes of this phase play a pivotal role in subsequent diagnostic processes. Additionally, in computer-assisted cancer diagnosis, segmentation is of paramount importance when utilizing dermoscopic images. During the preprocessing phase, a bottom-hat filter is implemented to diminish noise, particularly targeting the removal of hair. The utilization of discrete cosine transform (DCT) and color space conversion contributes to enhancing the quality of medical images. To improve system performance during segmentation and classification, the background subtraction technique with midpoint analysis is introduced. The subsequent sections of the article are organized as follows: the second section presents a concise overview of prior research, the third section delineates the proposed methodology, the fourth section delves into the results and findings, and the fifth section concludes the article.

Related Work

In recent years, the research field has witnessed the emergence of various cancer detection methods, with a focus on segmenting and extracting diverse dermoscopic features. Esteva et al. [25] outlined the development of a CAD system specifically tailored for identifying melanoma. This system utilizes a synergistic blend of machine learning and image processing techniques aimed at improving the precision of melanoma diagnosis. To enhance image contrast by redistributing intensity levels, histogram equalization is incorporated, while a dilation operation is implemented to effectively remove hair or correct skin defects. This combination of methodologies contributes to the overall efficacy of the CAD system in accurately identifying and diagnosing melanoma, showcasing the potential of advanced technologies in the field of dermatology. In the same year, an innovative approach that incorporates a self-generating neural network, capable of autonomously learning to distinguish between skin lesions and colored edges, was presented by Xie et al. [26]. A key aspect of this methodology is the emphasis on texture-based feature extraction, which plays a crucial role in facilitating the subsequent classification of skin lesions into two groups based on the presence of cancerous features. To validate the effectiveness of this approach in real-world scenarios, it is imperative to assess its performance on relevant datasets, potentially incorporating clinical data. Such evaluations would provide valuable insights into the practical utility and reliability of this novel neural network-based approach in the context of skin lesion classification and cancer detection.

The framework suggested by Rehman et al. [27] integrates segmentation and a convolutional neural network (CNN) to identify skin lesions with malignant tendencies in dermoscopy images, using the ISIC-2016 dataset for both training and evaluation. This strategy follows the prevalent practice in medical image analysis of employing a CNN for precise, automated detection of potential malignancies in skin lesions. Validating the framework across diverse datasets and with various performance metrics would give a more comprehensive assessment of its capabilities and broader applicability. Mahmoud et al. [28] presented a new skin cancer CAD system based on texture analysis. The proposed CAD system has four stages: hair removal, filtering, feature extraction, and classification. It was used to distinguish non-malignant skin lesions (common or dysplastic nevi) from malignant skin lesions. According to their experiments, extracting histogram of oriented gradients (HOG) features after artifact removal gives the best classification results.

In the same year, Pathan et al. [29] conducted a thorough review of the contemporary techniques used in each phase of computer-aided diagnostic systems for melanoma. The review underscores that algorithms tailored for automated melanoma analysis can recognize the disease on par with expert dermatologists. It advocates continued refinement of dermoscopic feature extraction methods and gives a comprehensive overview of the procedural steps adopted by current systems for the automatic detection and classification of pigmented skin lesions. Al-masni et al. [30] presented full-resolution convolutional networks (FrCN), a segmentation method built specifically for the precise segmentation of melanoma in dermoscopy images. FrCN is distinguished by learning full-resolution features for each pixel of the input data. Because it uses the image’s full spatial resolution, it learns more distinctive and significant features and achieves better segmentation accuracy than previous deep learning segmentation algorithms. In the same year, Carrera and Dominguez [31] developed a cost-effective and precise tool for the early detection of melanoma, with the potential to improve patient outcomes and reduce mortality. Their approach used an optimal threshold for segmentation and an SVM (support vector machine) for classification on the ISIC dataset, achieving an accuracy of 75.00%.

Aljanabi et al. [32] introduced a technique that uses artificial bee colony optimization to detect and analyze tumor lesions in skin images, with the goal of improving the efficiency and accuracy of existing skin cancer categorization methods. The method includes a segmentation step that improves information handling and is intended to support preventive measures that reduce the risk posed by skin cancer lesions. In the same year, Chouhan et al. [33] presented a comprehensive survey of skin lesion segmentation using computational intelligence techniques, including genetic algorithms (GA), fuzzy C-means, and CNNs; these approaches are widely used for image segmentation in medical imaging, scientific analysis, engineering, and the humanities. Waghulde et al. [34] used image processing techniques to identify melanoma in digital photos. Noise is removed with preprocessing techniques such as median filtering, and the image is converted to the HSI color space for further analysis. The image is then segmented with texture segmentation and active shape segmentation algorithms, features are extracted with the gray-level co-occurrence matrix (GLCM), and a probabilistic neural network (PNN) classifies the image as normal or melanoma. In the same year, Ahmed Thaajwer and Piumu Ishanka [18] used watershed and Otsu thresholding to find the ROI and extracted features with GLCM. An SVM then classifies the combined features, labeling the skin image as either malignant melanoma or benign. The system attains an accuracy of 85.00% on the ISIC dataset, showing the efficacy of the integrated approach for identifying melanoma in its early stages.

Kassem et al. [35] introduced a model for classifying skin lesions that combines transfer learning with deep convolutional neural networks (DCNN), using GoogleNet to extract features from images. Evaluated on the ISIC dataset, the model achieved a classification accuracy of 94.92%. Kavitha and Vayelapelli et al. [36] presented various approaches for each phase of pre-processing, including image enhancement, restoration, and hair removal. The paper emphasizes the importance of automated skin cancer detection for early diagnosis and saving lives, and the potential of pre-processing to improve the reliability and effectiveness of skin cancer diagnosis systems. In the same year, Krishna Monika et al. [37] proposed a color-based k-means clustering approach for segmentation, recognizing color as a key determinant in identifying malignancy types. Feature extraction covers both statistical and texture aspects. Experiments are conducted on the ISIC dataset, which comprises eight types of dermoscopic images, and classification is carried out with a multi-class SVM (MSVM) in which features from different methods are combined to improve accuracy. Advanced techniques include a 3D reconstruction algorithm to extract additional information, an adaptive snake algorithm for more precise segmentation, and shearlet transform coefficients combined with a naive Bayes classifier specifically for melanoma classification. Kaymak and Ucar [38] generated four fully convolutional network (FCN) architectures, FCN-8, FCN-16, FCN-32, and FCN-AlexNet, from a CNN model for the segmentation of skin cancer images, and evaluated them on the ISIC 2017 dataset. Dice and Jaccard coefficients were used to assess the agreement between the segmented result and the ground truth. Although all the FCN frameworks performed comparably well, FCN-8 was found to be the most effective for segmentation.
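The agreement measures used in such evaluations, together with the pixel accuracy reported for segmentation in this article, can be computed from binary masks as sketched below. This is a minimal NumPy sketch; the function name `segmentation_scores` is hypothetical, and treating two empty masks as perfect agreement is an assumption.

```python
import numpy as np

def segmentation_scores(pred, truth):
    """Dice, Jaccard, and pixel accuracy between two binary masks of equal shape."""
    a = np.asarray(pred, dtype=bool)
    b = np.asarray(truth, dtype=bool)
    inter = np.logical_and(a, b).sum()   # |A ∩ B|
    union = np.logical_or(a, b).sum()    # |A ∪ B|
    size_sum = a.sum() + b.sum()         # |A| + |B|
    accuracy = float((a == b).mean())    # fraction of pixels labelled identically
    if union == 0:                       # both masks empty: assume perfect agreement
        return 1.0, 1.0, accuracy
    dice = 2.0 * inter / size_sum
    jaccard = inter / union
    return float(dice), float(jaccard), accuracy

pred = [[1, 1, 0, 0]]
truth = [[1, 0, 0, 0]]
print(segmentation_scores(pred, truth))  # (0.6666666666666666, 0.5, 0.75)
```

The two overlap scores are related by J = D / (2 - D), so they always rank segmentations in the same order; pixel accuracy, by contrast, is inflated by large correctly labelled background areas.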

Mohakud and Dash [39] proposed a hyperparameter-optimized fully convolutional encoder-decoder network (FCEDN) for the segmentation of skin cancer images. A new exponential neighborhood gray wolf optimization (EN-GWO) algorithm was used to optimize the network hyperparameters; its neighborhood search strategy combines the individual wolf hunting strategy with the global search strategy to balance exploration and exploitation. Murugan et al. [40] applied a median filter in the pre-processing phase and used the mean shift method to separate the region of interest. Pushpalatha et al. [41] introduced a model for the classification and detection of skin cancer, particularly melanoma, that combines a CNN and an SVM for accurate prediction. A segmentation stage using the FCRN and U-Net methods improves the analysis results, raising the accuracy of the SVM on the ISIC dataset by 70.00%.

Imran et al. [42] addressed the challenges of skin cancer detection with an ensemble approach that combines the decisions of multiple deep learning models to improve accuracy and overall performance. Using deep learning models enabled automatic feature extraction, eliminating labor-intensive, time-consuming hand-engineered feature extraction. The proposed ensemble outperformed the individual models in specificity, F-score, accuracy, sensitivity, and precision, and the authors advocate applying the ensemble approach not only to skin cancer detection but also to other disease detection tasks. The paper contributes to medical image classification by employing DCNNs to address challenges in medical image analysis, with a specific focus on skin cancer detection. In the same year, Guergueb et al. [43] proposed an automated strategy for melanoma diagnosis that combines predictions from DCNN models through ensemble learning. The model employed several tactics, including image cropping, upsampling, augmentation, digital hair removal, and class weighting. Training and testing used image data from ISIC, and the pipeline outperformed existing state-of-the-art methods for predicting melanoma.

Sundari et al. [44] introduced an automatic skin lesion classification system based on deep neural networks, specifically DCNNs. The authors improved CNN performance by fine-tuning layers of several architectures, including VGG-16, InceptionResNet, InceptionV3, and DenseNet, and the system attained accuracy comparable to that of dermatologists in detecting malignancy. The paper emphasizes the importance of timely recognition and treatment of skin diseases, given their impact on patients’ quality of life and the potentially life-threatening nature of certain conditions, and identifies DCNNs as a promising approach that surpasses traditional image processing methods for cancer classification and detection. In the same year, Boudhir Abdelhakim et al. [45] proposed a model combining deep learning and reinforcement learning (a CNN-DQN model) for the early detection and classification of skin cancer in dermoscopic images. The model applies various pre-processing techniques, uses the watershed algorithm for segmentation and a DCNN for classification, and achieved an accuracy of 80% in classifying skin cancer into seven types. It was trained and evaluated on dermoscopy images from the ISIC database covering seven types of skin cancer. Rooted in deep reinforcement learning, the study presents a novel approach to the classification and detection of skin lesions. Tembhurne et al. [46] proposed an ensemble method for lesion detection and achieved an accuracy of 93% on the ISIC dataset.

In the framework proposed in this article, a novel segmentation method, background subtraction with midpoint analysis, is used to find the ROI, and a differential analyzer algorithm (DAA) is used for feature extraction. Different classifiers are then implemented to classify the skin cancer images. SVM performs best among these classifiers and, with an accuracy of 95.30%, outperforms the state-of-the-art methods.

Table 1 provides a concise overview of each study, offering insights into the dataset used, the methodology employed, primary contributions, and any noteworthy remarks or limitations associated with the respective research. The table serves as a snapshot of the outcomes of various methods over the past few years, delineating the pros and cons associated with each approach.

Table 1.

Different techniques for skin cancer segmentation

| Author | Dataset | Method | Contribution and performance | Remarks |
|---|---|---|---|---|
| Giotis et al. [47] | Digital image repository | K-means clustering, ABCD rule, CLAM classifier | Melanoma detection system with 80% accuracy | Noise-sensitive; depends on data size |
| Premaladha and Ravichandran [48] | Dermoscopic images | Normalized Otsu’s segmentation, GLCM for feature extraction | CAD system with 93.00% accuracy | Data overfitting; inaccurate decision parameters; dataset handling issues |
| Satheesha et al. [49] | ISIC, ATLAS, PH2 | Adaptive snake algorithm for segmentation, 3D tensor for feature extraction | Non-invasive dermoscopy system for skin lesion diagnosis | Actual lesion depth cannot be computed |
| Yuan et al. [50] | ISIC, PH2 | DCNN | Automatic segmentation with 95.50% accuracy | Deeper networks need more training samples; risk of overfitting |
| Singh and Gupta [51] | Dermoscopic images | Literature survey of melanoma detection tools | Dermoscopic images are crucial for computerized tools | Segmentation, feature extraction, and accuracy issues |
| Al-masni et al. [30] | ISIC, PH2 | Full-resolution convolutional network (FrCN) | Automatic segmentation with 84.97% accuracy | Highly sensitive; large dataset issues; over- and under-segmentation not addressed |
| Seeja and Suresh [52] | ISIC | DE convolutional network, FCN | Skin lesion segmentation with 85.19% accuracy | Dataset annotation concerns |
| Zafar et al. [53] | ISIC, PH2 | CNN (U-Net and ResNet combination) | Lesion boundary segmentation with 85.40% accuracy | Dataset handling issues; data overfitting |
| Vani et al. [54] | - | Deep learning, active contour | Melanoma detection with 90.00% accuracy | Noise not moderated |
| Ashraf et al. [55] | ISIC | Deep learning | Automated skin segmentation with Jaccard index of 90.20% | Low-resolution image issues; loss of ground-truth annotations |
| Tahir et al. [56] | ISIC, HAM10000, DermIS | Deep learning | Skin cancer detection with 94.17% accuracy | Mainly suited to individuals with fair skin |

A review of various research articles [57–59] and review papers [13, 57, 60–62] identified the following difficulties in preprocessing and segmentation:

(i) The original datasets include high-resolution images, which require significant computational resources.

(ii) Images may have low contrast when the lesion closely resembles the surrounding skin tone.

(iii) Noise within the image, e.g., hair, may interfere with accurate analysis of the imaged skin lesions.

(iv) Images whose irregularities lie more in the center of the lesion than at its border yield poor segmentation results.

(v) In some situations, a higher segmentation threshold can produce a region of regression in the image’s center, which can lead to false lesion detection.

(vi) Some segmentation methods suffer from overfitting.

Proposed Methodology

An overview of the proposed methodology is shown in Fig. 1. The framework comprises four phases. The first phase, pre-processing, resizes the image to a standard size, removes hair, and enhances contrast. The second phase identifies the ROI in the pre-processed image using background subtraction with midpoint analysis and region properties. The background subtraction algorithm detects objects against a reference model, which is created using midpoint analysis, an approach not previously used in background subtraction algorithms for skin cancer images. In the third phase, features are extracted from the ROI using a differential analyzer algorithm (DAA). In the final phase, four classifiers (SVM, random forest, KNN, and decision tree) are applied.

Fig. 1.

Proposed methodology

Dataset and Tools

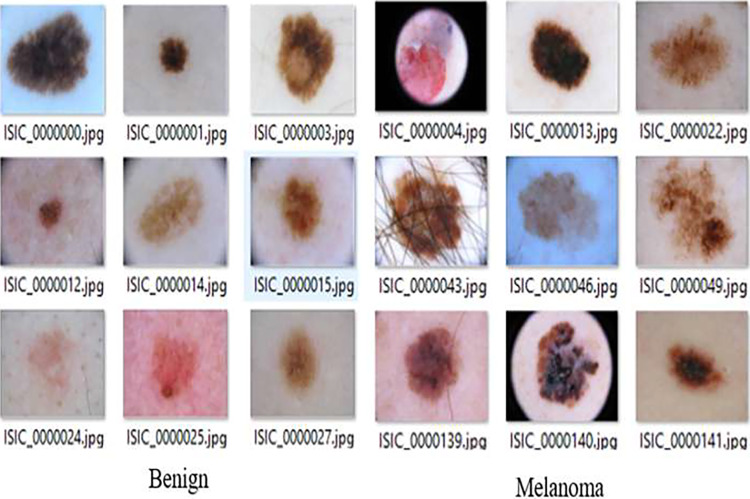

All experiments are carried out in MATLAB on a PC with a Core i5 processor and 16 GB of RAM, using the publicly available ISIC dataset [63]. The International Skin Imaging Collaboration (ISIC) dataset is a key resource in dermatology, particularly for skin cancer research. It contains both cancerous and non-cancerous images, as shown in Fig. 2. Skin cancer is a significant global health concern, and advancing diagnostic and prognostic methods requires comprehensive datasets. With its diverse and extensive collection of dermoscopic images, the ISIC dataset is a cornerstone of skin cancer research. Working with it nevertheless poses challenges: variability in image quality, diversity of skin types, and the need for rigorous validation all require careful consideration. Despite these challenges, the dataset gives researchers an opportunity to apply machine learning and artificial intelligence in healthcare and, by developing novel approaches, to contribute to more reliable, effective, and accessible skin cancer diagnosis.

Fig. 2.

ISIC dataset images (cancerous and non-cancerous)

Table 2 presents a summary of various studies conducted on the ISIC (International Skin Imaging Collaboration) dataset, focusing on the classification of skin images into cancerous and non-cancerous categories. The studies demonstrate varying degrees of success in skin image classification on the ISIC dataset, with accuracy ranging from 71.50 to 93.00%. The differences in performance highlight the evolving nature of research in this domain and the potential for further improvements in the accuracy of skin cancer detection models.

Table 2.

Various studies conducted on the ISIC dataset

Preprocessing

During the preprocessing phase of skin cancer analysis, several image processing methods are applied to the input images before segmentation or other diagnostic steps. Preprocessing is required to improve image quality for subsequent analysis, but it has drawbacks. The most common problems are as follows. Some preprocessing steps may cause data loss [68]. When image resolution is lowered, fine details may be blurred or destroyed, limiting the reliability of subsequent examination or diagnosis [69]. It is therefore crucial to balance noise reduction against retaining key information during preprocessing [70].

To handle these issues, the pre-processing stage resizes the image, removes hairy regions, and uses the DCT [71] and color space conversion to enhance and restore the image. Pre-processing follows a dual strategy. First, a bottom-hat filtering mechanism removes hair: the image is read and converted from RGB to grayscale, since this operation does not need the color information in RGB images. The result of the conversion is stored at pixel coordinates [u, v]. A cross-shaped structuring element is used to probe how the image conforms to the region boundaries. The T_size variable specifies the maximum size of an object. If an object’s size exceeds this maximum (T_size), its values are replaced with the neighborhood intensity levels denoted by Object-1(u, v). Algorithm 1 describes this mechanism.

Algorithm 1.

Bottom_Hat(Image)
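Because only the name of Algorithm 1 is given here, the following minimal NumPy sketch shows one common way a bottom-hat hair filter can be realized, assuming these steps: grayscale conversion, morphological closing with a cross-shaped structuring element, bottom-hat = closing minus grayscale, thresholding to a hair mask, and replacement of hair pixels by the mean of nearby non-hair pixels. The function names and the parameters `radius` (which plays a role similar to T_size by bounding the width of structures treated as hair), `thresh`, and `win` are hypothetical; the neighborhood-mean replacement is an assumed stand-in for the Object-1(u, v) step.

```python
import numpy as np

def rgb_to_gray(rgb):
    """Luma conversion with the weights MATLAB's rgb2gray uses."""
    return rgb[..., 0] * 0.2989 + rgb[..., 1] * 0.5870 + rgb[..., 2] * 0.1140

def _cross_shifts(img, radius):
    """Stack of edge-padded shifts covering a cross-shaped structuring element."""
    p = np.pad(img, radius, mode="edge")
    h, w = img.shape
    shifts = []
    for d in range(-radius, radius + 1):
        shifts.append(p[radius + d:radius + d + h, radius:radius + w])  # vertical arm
        shifts.append(p[radius:radius + h, radius + d:radius + d + w])  # horizontal arm
    return np.stack(shifts)

def bottom_hat(gray, radius):
    """Closing (dilate, then erode) minus the image: large where thin dark lines were."""
    dilated = _cross_shifts(gray, radius).max(axis=0)
    closed = _cross_shifts(dilated, radius).min(axis=0)
    return closed - gray

def remove_hair(rgb, radius=3, thresh=20.0, win=3):
    """Return (cleaned RGB image, hair mask). Parameter values are illustrative only."""
    gray = rgb_to_gray(rgb.astype(float))
    mask = bottom_hat(gray, radius) > thresh
    out = rgb.astype(float)
    h, w = mask.shape
    for y, x in zip(*np.nonzero(mask)):
        y0, y1 = max(0, y - win), min(h, y + win + 1)
        x0, x1 = max(0, x - win), min(w, x + win + 1)
        keep = ~mask[y0:y1, x0:x1]                 # use non-hair neighbours only
        if keep.any():
            out[y, x] = rgb[y0:y1, x0:x1][keep].mean(axis=0)
    return out.round().astype(rgb.dtype), mask

# Synthetic check: uniform skin with a one-pixel-wide dark vertical "hair".
skin = np.full((21, 21, 3), (200, 150, 130), dtype=np.uint8)
skin[:, 10] = (20, 20, 20)
cleaned, hair = remove_hair(skin)
print(int(hair.sum()), cleaned[5, 10].tolist())  # 21 [200, 150, 130]
```

In this sketch, dark lines up to roughly 2·radius pixels across are filled by the closing and therefore flagged, while wider dark regions such as the lesion itself are largely preserved; a cross-shaped element can still trim the corners of wide regions, which is one reason many hair-removal filters use several line-shaped elements instead.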

After the hairy regions are replaced with neighborhood pixels, the image must be enhanced. For this, a contrast enhancement strategy based on the discrete cosine transform (DCT) is used. Because most of a signal's information is concentrated in its lower frequency components, the low-frequency (LL) band is processed through the 2D DCT. The correction factor for this purpose is determined by Eq. (1).

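$$CF = \frac{\max\left(\Sigma_{LL_{\hat{i}}}\right)}{\max\left(\Sigma_{LL_{i}}\right)} \qquad (1)$$

Here, CF is the correction factor, $\Sigma_{LL_{i}}$ is the singular value matrix of the LL band of the input image, and $\Sigma_{LL_{\hat{i}}}$ is the singular value matrix of the LL band of the adaptive-histogram-equalized image.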
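A correction factor of this kind can be prototyped as below. This is a minimal NumPy sketch, not the authors' code, and it rests on several assumptions flagged in the comments: CF is taken in the common form "largest singular value of the equalized image's LL band divided by that of the input image's LL band"; the whole image is transformed as a single DCT block; the "LL" band is the top-left quarter of the DCT coefficients; and global histogram equalization stands in for the adaptive (CLAHE-style) equalization the text mentions. All function names are hypothetical.

```python
import numpy as np

def dct_matrix(n):
    """Orthonormal DCT-II matrix; row k is the k-th cosine basis vector."""
    k = np.arange(n)[:, None]
    i = np.arange(n)[None, :]
    c = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    c[0, :] = np.sqrt(1.0 / n)
    return c

def dct2(x):
    """2D DCT of the whole image (treated as a single block: an assumption)."""
    return dct_matrix(x.shape[0]) @ x @ dct_matrix(x.shape[1]).T

def equalize(gray_u8):
    """Global histogram equalization, a stand-in for adaptive equalization."""
    cdf = np.cumsum(np.bincount(gray_u8.ravel(), minlength=256)).astype(float)
    cdf_min = cdf[cdf > 0][0]
    span = max(cdf[-1] - cdf_min, 1.0)
    lut = np.clip(np.round((cdf - cdf_min) / span * 255.0), 0, 255).astype(np.uint8)
    return lut[gray_u8]

def largest_ll_singular_value(gray):
    coeffs = dct2(gray.astype(float))
    h, w = coeffs.shape
    ll = coeffs[: h // 2, : w // 2]                  # low-frequency quadrant taken as "LL"
    return np.linalg.svd(ll, compute_uv=False)[0]    # singular values come sorted descending

def correction_factor(gray_u8):
    """Assumed form: max singular value (equalized LL) / max singular value (input LL)."""
    return largest_ll_singular_value(equalize(gray_u8)) / largest_ll_singular_value(gray_u8)

low_contrast = np.array([[100] * 4 + [110] * 4] * 8, dtype=np.uint8)
print(correction_factor(low_contrast) > 1.0)  # True
```

For a low-contrast input, equalization spreads the intensities and raises the energy of the low-frequency band, so CF exceeds 1; for an image that equalization leaves unchanged, CF is exactly 1. An all-black input would make the denominator zero and needs separate handling.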

CF is then multiplied with each channel of the HSV (hue, saturation, value) color representation. The overall enhancement with the DCT and the correction factor proceeds as follows:

- Convert the RGB image to HSV:
  - Take each pixel in the RGB image.
  - Use the conversion formulas to calculate the corresponding HSV components.
  - Store the resulting HSV values for each pixel.
- Apply the DCT (discrete cosine transform):
  - Apply the 2D DCT to the HSV image.
  - Transform each block of data in the image from the spatial domain to the frequency domain.
- Apply the correction factor:
  - Calculate the correction factor (CF) using Eq. (1).
- Adjust hue, saturation, and value by multiplying the respective components of the HSV image by CF:
  - H = CF × H
  - S = CF × S
  - V = CF × V
- Apply the inverse DCT:
  - Apply the inverse 2D DCT to the modified HSV image.
  - Transform each block of data back from the frequency domain to the spatial domain.
- Convert HSV to RGB:
  - Take each pixel in the modified HSV image.
  - Use the conversion formulas to calculate the corresponding red, green, and blue components.
  - Store the resulting RGB values for each pixel.
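The steps above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' MATLAB implementation: the input is an RGB float array in [0, 1]; the whole image is one DCT block (the block size is not specified); CF is supplied by the caller (for example, computed from Eq. (1)); and after the inverse DCT, hue is wrapped modulo 1 while saturation and value are clipped to [0, 1], which the text does not specify. The function name `enhance_hsv_dct` is hypothetical; the HSV conversions come from Python's standard `colorsys` module.

```python
import colorsys
import numpy as np

def dct_matrix(n):
    """Orthonormal DCT-II matrix (row k = k-th cosine basis vector)."""
    k = np.arange(n)[:, None]
    i = np.arange(n)[None, :]
    c = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    c[0, :] = np.sqrt(1.0 / n)
    return c

# Element-wise wrappers around the standard-library HSV conversions.
to_hsv = np.vectorize(colorsys.rgb_to_hsv)
to_rgb = np.vectorize(colorsys.hsv_to_rgb)

def enhance_hsv_dct(rgb, cf):
    """rgb: float array in [0, 1] of shape (H, W, 3); cf: correction factor."""
    h, s, v = to_hsv(rgb[..., 0], rgb[..., 1], rgb[..., 2])
    cm, cn = dct_matrix(rgb.shape[0]), dct_matrix(rgb.shape[1])
    adjusted = []
    for channel in (h, s, v):
        coeffs = cm @ channel @ cn.T          # forward 2D DCT (whole image as one block)
        coeffs = cf * coeffs                  # H = CF x H, S = CF x S, V = CF x V
        adjusted.append(cm.T @ coeffs @ cn)   # inverse 2D DCT back to the spatial domain
    h2 = np.mod(adjusted[0], 1.0)             # hue is cyclic, so wrap it
    s2 = np.clip(adjusted[1], 0.0, 1.0)       # keep saturation and value in range
    v2 = np.clip(adjusted[2], 0.0, 1.0)
    r, g, b = to_rgb(h2, s2, v2)
    return np.stack([r, g, b], axis=-1)

rng = np.random.default_rng(0)
img = rng.random((6, 5, 3))
print(np.allclose(enhance_hsv_dct(img, 1.0), img))  # True
```

One design point is worth noting about this literal reading. Because the orthonormal DCT is linear, multiplying every coefficient by CF and inverting gives exactly CF times the original channel. The frequency-domain route therefore changes the result only if CF is applied to a subset of coefficients, such as the LL band.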

The observations suggest that the correction factor CF improves the quality of contrast enhancement. Fig. 3 shows the results of the pre-processing stage.

Fig. 3.

(A) Original input images; (B) corresponding pre-processed images

Segmentation

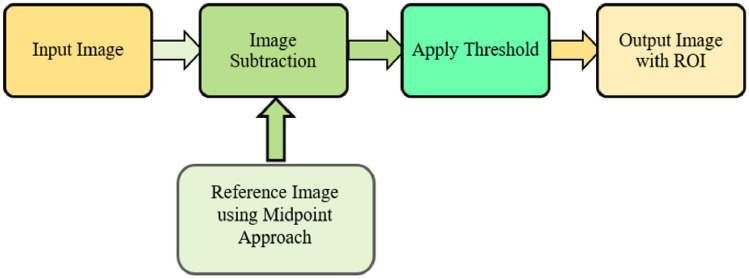

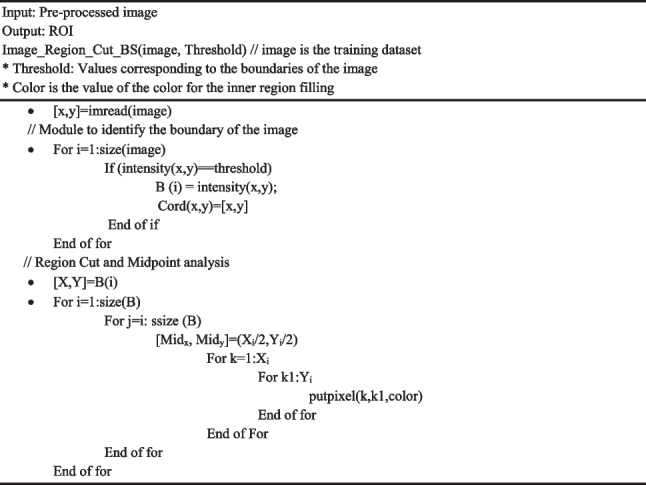

Background subtraction is a widely used technique for detecting moving objects in a sequence of frames captured by stationary cameras. Its fundamental principle is to detect objects from the difference between the current frame and a reference frame, often termed the “background image” or “background model” [72]. In practice this amounts to locating the region of interest (ROI) within an image, which makes ROI detection the core task of the approach. The proposed methodology incorporates a background subtraction algorithm with midpoint analysis. Figure 4 provides an overview of the suggested approach.
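The basic principle described above, differencing a frame against a reference image, fits in a few lines. This generic sketch uses a hypothetical fixed `threshold`. It shows only the differencing step, not the midpoint-analysis construction of the reference image used in the proposed method.

```python
def background_subtract(frame, background, threshold=25):
    """Binary foreground mask: 1 where |frame - background| exceeds `threshold`, else 0."""
    return [[1 if abs(f - b) > threshold else 0 for f, b in zip(frow, brow)]
            for frow, brow in zip(frame, background)]


background = [[100, 100, 100],
              [100, 100, 100]]
frame = [[100, 180, 100],
         [90, 100, 100]]
mask = background_subtract(frame, background)  # only the 180 pixel differs by more than 25
```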

Fig. 4.

Background subtraction method

In the proposed methodology, the reference image is created by applying midpoint analysis and is then used to extract the region of interest from the input image. Region extraction is important because it is used to examine irregularities in separate regions. Typically, region extraction is performed with an inside-outside test that categorizes the boundaries of the image; such tests are discussed in [73]. In the proposed work, the process is reversed. Boundary separation is a known problem in region-based separation mechanisms, so the cancerous region may not be extracted appropriately. The main advantage of this reorientation is a shorter time to identify regions of interest in subsequent segmentation steps. An inside-out process, which starts from the inside and moves outward, cannot finish until it encounters the boundary. In the proposed method, control instead moves from the boundary toward the center, establishing a threshold point where changes in intensity level are detected. If no irregularities are observed after 50% of the area has been scanned, the entire region is labeled as non-critical.
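The boundary-to-center scan with its 50% early-exit rule can be illustrated under simplifying assumptions. The region is scanned as concentric rectangular rings. The reference intensity is the mean of the outermost ring. A ring is "irregular" when any of its pixels departs from that reference by more than a hypothetical `change_threshold`. Both the ring geometry and the irregularity test are illustrative choices rather than the authors' definitions.

```python
def rings_outside_in(h, w):
    """Yield rectangular rings of (y, x) coordinates, from the image border toward the center."""
    top, left, bottom, right = 0, 0, h - 1, w - 1
    while top <= bottom and left <= right:
        yield [(y, x) for y in range(top, bottom + 1) for x in range(left, right + 1)
               if y in (top, bottom) or x in (left, right)]
        top, left, bottom, right = top + 1, left + 1, bottom - 1, right - 1


def scan_region(img, change_threshold=40):
    """Boundary-to-center scan with the 50% early-exit rule.

    Returns ("critical", ring_index) at the first ring whose intensity departs from the
    border reference by more than `change_threshold`, or ("non-critical", None) once half
    of the area has been scanned without such a change.
    """
    h, w = len(img), len(img[0])
    rings = list(rings_outside_in(h, w))
    border = rings[0]
    reference = sum(img[y][x] for y, x in border) / len(border)  # assumed normal-skin level
    scanned = 0
    for idx, ring in enumerate(rings):
        if any(abs(img[y][x] - reference) > change_threshold for y, x in ring):
            return "critical", idx
        scanned += len(ring)
        if scanned >= (h * w) / 2:  # 50% of the area scanned with no irregularity
            return "non-critical", None
    return "non-critical", None


patch = [[100] * 8 for _ in range(8)]
for y in range(1, 7):
    for x in range(1, 7):
        patch[y][x] = 30  # dark lesion reaching the second ring
```

In an 8×8 patch, the outer ring holds 28 of 64 pixels. A lesion that reaches the second ring is therefore caught before the 50% cut-off.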

First, the proposed method uses the color intensity levels (Ci) to locate the edges (E). Color is central to identifying the region of the image (R), and Eq. (2) is used to identify it. The region edges (RE), which are identified by comparing each pixel's color with that of the adjacent pixel, are stored in the variable E(x_e, y_e), where (x_e, y_e) are the x and y coordinates of an edge.

| 2 |
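Eq. (2) itself is not given here, so the following is a hedged sketch of edge detection by comparison with the adjacent pixel. It assumes that "color difference" means the Euclidean distance in RGB space, measured against the right and lower neighbours. It also assumes a hypothetical `threshold`. Edge coordinates are collected as (x_e, y_e) pairs, matching the notation above.

```python
import math


def color_edges(rgb, threshold=60.0):
    """List (x_e, y_e) of pixels whose color differs from the right or lower neighbour.

    Color difference is the Euclidean RGB distance (an assumed stand-in for Eq. (2)).
    """
    h, w = len(rgb), len(rgb[0])
    edges = []
    for y in range(h):
        for x in range(w):
            for dy, dx in ((0, 1), (1, 0)):
                ny, nx = y + dy, x + dx
                if ny < h and nx < w and math.dist(rgb[y][x], rgb[ny][nx]) > threshold:
                    edges.append((x, y))
                    break  # record each edge pixel once
    return edges


RED, WHITE = (255, 0, 0), (255, 255, 255)
tile = [[RED, WHITE, WHITE] for _ in range(3)]
edges = color_edges(tile)  # the red column borders the white area
```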

To determine the region's dimension, its diameter is approximated. Equation (3) gives the region's center (CNi): the dimension is divided by 2 to obtain the radius. The maximum and minimum values of the region's edges (E) at coordinates (x_e, y_e) enter Eq. (3) as its maximum and minimum terms, respectively. The center of the region is thus determined by the region edges (RE), and the system's normal flow then originates from this central point.

| 3 |
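Following the description of Eq. (3), the center lies one radius (half the approximated diameter) beyond the minimum edge coordinate along each axis. The sketch below assumes that the diameter is measured separately along x and y from the extreme edge coordinates. The function name `region_center` is hypothetical.

```python
def region_center(edges):
    """Center CN from edge extremes: min + diameter / 2 along each axis (assumed form of Eq. (3))."""
    xs = [x for x, _ in edges]
    ys = [y for _, y in edges]
    radius_x = (max(xs) - min(xs)) / 2  # half of the approximated diameter along x
    radius_y = (max(ys) - min(ys)) / 2  # half of the approximated diameter along y
    return min(xs) + radius_x, min(ys) + radius_y


center = region_center([(2, 3), (10, 3), (6, 1), (6, 9)])  # x spans 2..10, y spans 1..9
```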

Region extraction is the most important phase of the proposed segmentation process. Starting from the identified labeled segments, the regions associated with critical points carry edge values. The intensity values (Int) at these edges are collected in buffers (Bfi) and are used to eliminate the background and extract the region of interest. Intensity values greater than 0 at the center are kept in the buffer for subsequent processing, while values less than or equal to 0 are discarded. The region extraction process is governed by Eq. (4).

| 4 |

The background combined with the skin image has a maximum intensity level of 255. The background subtraction process uses the region obtained through region separation and its corresponding intensities. The result of this process is given in Eq. (5), where Bgi is the background intensity level at pixel i and Bfi is the intensity of the background combined with the skin image at pixel i.

| 5 |

The integer-valued intensity of the combined background and skin image is subtracted from the maximum intensity level (255) over the entire image, yielding a background-free image, WithoutBgi. The MATLAB function bwconncomp is then used to find the connected components, and regionprops is applied to obtain the coordinates of the region with the highest correlation. Algorithm 2 details the steps of the proposed method: the pre-processed image is the input, and after segmentation the output is the region of interest (ROI).
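The subtraction from 255 and the connected-component step can be sketched in plain Python. This sketch makes three assumptions. Images are 8-bit grayscale. A hypothetical `fg_threshold` separates the inverted lesion from the background. The "selected" region is the component with the largest area, a plainly swapped-in criterion because the paper's correlation measure is not specified here. `connected_components` uses 8-connectivity in the spirit of MATLAB's `bwconncomp`, but it is not a reimplementation of that function.

```python
from collections import deque


def remove_background(gray):
    """WithoutBg_i = 255 - intensity_i for every pixel (8-bit images assumed)."""
    return [[255 - v for v in row] for row in gray]


def connected_components(mask):
    """Label 8-connected foreground pixels; returns a list of pixel lists (y, x)."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    components = []
    for sy in range(h):
        for sx in range(w):
            if not mask[sy][sx] or seen[sy][sx]:
                continue
            seen[sy][sx] = True
            queue, pixels = deque([(sy, sx)]), []
            while queue:  # breadth-first flood fill
                y, x = queue.popleft()
                pixels.append((y, x))
                for dy in (-1, 0, 1):
                    for dx in (-1, 0, 1):
                        ny, nx = y + dy, x + dx
                        if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] and not seen[ny][nx]:
                            seen[ny][nx] = True
                            queue.append((ny, nx))
            components.append(pixels)
    return components


def extract_roi(gray, fg_threshold=128):
    """Bounding box (min_y, min_x, max_y, max_x) of the largest component, or None."""
    inverted = remove_background(gray)
    mask = [[v > fg_threshold for v in row] for row in inverted]
    comps = connected_components(mask)
    if not comps:
        return None
    largest = max(comps, key=len)  # assumed selection criterion: largest area
    ys = [y for y, _ in largest]
    xs = [x for _, x in largest]
    return min(ys), min(xs), max(ys), max(xs)


gray = [[230] * 6 for _ in range(6)]
for y in range(1, 4):
    for x in range(1, 3):
        gray[y][x] = 40  # dark lesion, 3 x 2 pixels
gray[5][5] = 40          # isolated dark speck, discarded as the smaller component
roi = extract_roi(gray)
```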

Algorithm 2.

ROI Extraction

Results and Discussion

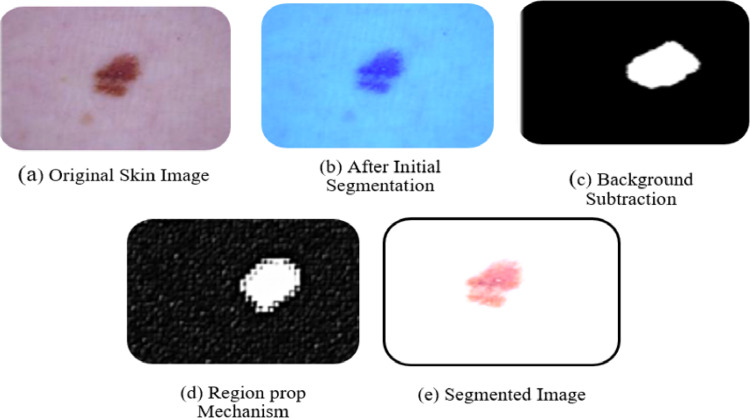

The first phase of the proposed framework is pre-processing: the RGB image is converted to grayscale, and a bottom hat filter is used to remove noise, particularly hair. Image enhancement is then carried out using DCT and color space conversion, which facilitates identifying and isolating diseased regions in the dataset. Removing these artifacts and improving image quality yields the pre-processed image, which is passed to the segmentation phase for further processing.

For segmentation, the framework employs the background subtraction method, using regionprops¹ to track lesions within the image. The ROI is extracted with the midpoint analysis approach, which gives more accurate region separation and extraction and addresses the problem of overlapping regions. The proposed approach resolves these concerns through enhanced inside-outside tests and region extraction mechanisms. Figure 5 shows the outcomes of the segmentation phase: abnormal shapes are enclosed by boundaries in the skin images, and the separation mechanisms for normal and abnormal regions delineate the lesions. Boundary value analysis forms a grid structure that contributes to the precise separation of lesions from normal skin.

Fig. 5.

Experimental outcome of segmentation phase

Next, the feature extraction stage employs the DAA (differential analyzer algorithm) [74] to obtain essential characteristics from the segmented images. These are the center coordinates along the X and Y axes and the major and minor axis lengths of each segmented region. The center coordinates locate the region within the image, while the major and minor axis lengths quantify its dimensions. Together they provide the spatial information used in the subsequent stages of the framework. Table 3 lists these values for four sample images, Image 1 to Image 4.
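To make the tabulated quantities concrete, the sketch below computes a region's centroid and major and minor axis lengths from its pixel coordinates. It uses a plainly swapped-in technique, not DAA: the axis lengths of the ellipse with the same normalized second central moments. The 1/12 terms account for the unit area of each pixel, which we believe mirrors the definition documented for MATLAB's `regionprops`. Coordinates here are 0-based, whereas MATLAB's are 1-based. The name `region_axes` is hypothetical.

```python
import math


def region_axes(pixels):
    """Centroid and equivalent-ellipse axis lengths of a region given as (x, y) pixels."""
    n = len(pixels)
    cx = sum(x for x, _ in pixels) / n
    cy = sum(y for _, y in pixels) / n
    # Normalized second central moments; + 1/12 models each pixel as a unit square.
    uxx = sum((x - cx) ** 2 for x, _ in pixels) / n + 1 / 12
    uyy = sum((y - cy) ** 2 for _, y in pixels) / n + 1 / 12
    uxy = sum((x - cx) * (y - cy) for x, y in pixels) / n
    common = math.sqrt((uxx - uyy) ** 2 + 4 * uxy ** 2)
    major = 2 * math.sqrt(2) * math.sqrt(uxx + uyy + common)
    minor = 2 * math.sqrt(2) * math.sqrt(uxx + uyy - common)
    return (cx, cy), major, minor


square = [(x, y) for x in range(3) for y in range(3)]
(cx, cy), major, minor = region_axes(square)  # a 3x3 square: equal axes of length 2*sqrt(3)
```

With this definition, the major axis length is never smaller than the minor axis length, since `common` is non-negative.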

Table 3.

Region separation and extraction result

| Images | Center (X-axis) | Center (Y-axis) | Major axis length | Minor axis length |
|---|---|---|---|---|
| Image 1 | 201.5 | 201.5 | 855 | 855 |
| Image 2 | 302 | 119.2 | 80.566 | 82.55 |
| Image 3 | 335 | 269 | 109.55 | 126.6 |
| Image 4 | 450 | 242 | 90.25 | 100.59 |

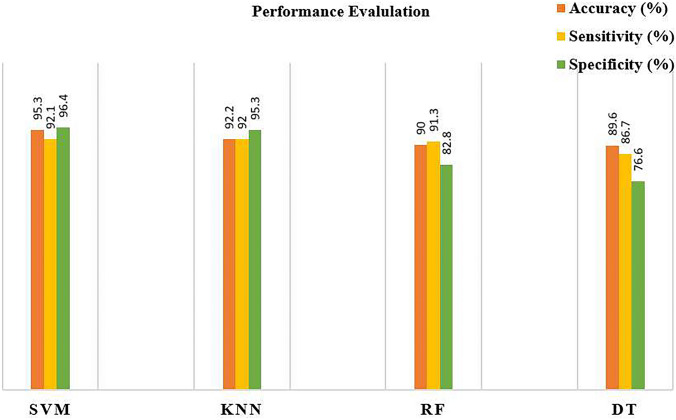

Performance Evaluation of Different Classifiers on the Same Dataset

Several machine learning classifiers (SVM, KNN, RF, and DT) were applied to the same dataset to compare their performance. The study addresses challenges in early-stage skin cancer detection by introducing a segmentation mechanism that operates on the ISIC dataset, which is widely used in dermatology research. Pre-processing combines a bottom hat filter, DCT, and a color coefficient. Background subtraction with midpoint analysis then addresses the complexities of skin image segmentation, and the DAA approach is used for feature extraction. To validate the proposed system, decision tree (DT), K-nearest neighbors (KNN), support vector machine (SVM), and random forest (RF) classifiers were implemented and compared using accuracy, specificity, and sensitivity [75–78]. The results, shown in Fig. 6, indicate that SVM outperforms the other classifiers on all performance metrics. This suggests that combining the proposed methodology with SVM yields better segmentation and classification results on the testing dataset. It also suggests that SVM handles the segmented data effectively, enabling accurate and reliable image classification in this context. The combined approach can support early-stage skin cancer detection and thereby help reduce the mortality associated with late diagnosis.
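For reference, the three evaluation metrics follow the standard confusion-matrix definitions, sketched below. The counts in the usage line are hypothetical and are not results from this study.

```python
def metrics(tp, tn, fp, fn):
    """Accuracy, sensitivity (true-positive rate), and specificity (true-negative rate)."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    sensitivity = tp / (tp + fn)  # fraction of malignant cases correctly detected
    specificity = tn / (tn + fp)  # fraction of benign cases correctly rejected
    return accuracy, sensitivity, specificity


acc, sens, spec = metrics(tp=90, tn=80, fp=20, fn=10)  # hypothetical counts
```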

Fig. 6.

Performance evaluation of different classifiers

Results and Performance Evaluation of Different Segmentation Methods

This section compares different segmentation methods that use the same classifier (SVM) and are tested on the same dataset (ISIC). Previous authors have combined SVM with various segmentation and feature extraction methods. Among these, the proposed background subtraction with midpoint analysis method achieves the highest accuracy, surpassing the state-of-the-art methods reported in Table 4.

Table 4.

Comparison of the proposed method with state-of-the-art segmentation methods using the SVM classifier on the ISIC dataset

| Author | Methods | Accuracy (%) |
|---|---|---|
| Carrera and Dominguez [31] | Optimal threshold | 75.00 |
| Ahmed Thaajwer and Piumi Ishanka [18] | Otsu segmentation and watershed method | 85.00 |
| Kassem et al. [35] | GoogleNet | 94.92 |
| Pushpalatha et al. [41] | Deep learning | 70.00 |
| Keerthana et al. [83] | CNN | 87.43 |
| Tembhurne et al. [46] | Deep learning and machine learning | 93.00 |
| Proposed method | Background subtraction with midpoint analysis | 95.30 |

Carrera and Dominguez [31] used the optimal threshold method and achieved an accuracy of 75.00%. Ahmed Thaajwer and Piumi Ishanka [18] used watershed and Otsu thresholding, followed by gray-level co-occurrence matrix (GLCM) features, and reached an accuracy of 85.00%. Kassem et al. [35] used transfer learning with GoogleNet and a DCNN and achieved an accuracy of 94.92%. Pushpalatha et al. [41] used fully convolutional residual networks (FCRN) and U-Net and achieved an accuracy of 70.00%. Keerthana et al. [83] used CNN and SVM and reached an accuracy of 87.43% on the ISIC dataset. The ensemble approach of Tembhurne et al. [46] achieved an accuracy of 93.00%. All these authors used the same classifier (SVM) and the same dataset (ISIC). Deep learning methods were used in [35, 41, 46, 83], yet their reported accuracies remain below that of the proposed method (Table 4). At 95.30%, the background subtraction with midpoint analysis method outperforms these state-of-the-art methods.

Comparative Analysis of Different Classifiers on ISIC Dataset

Prior work on skin cancer classification commonly uses SVM, KNN, RF, and DT classifiers. Combined with the proposed segmentation, these classifiers achieve accuracies of 95.30% (SVM), 92.20% (KNN), 90.00% (RF), and 89.60% (DT). These values exceed the corresponding results reported in Table 5 for SVM, KNN, and DT. For RF, the proposed method's 90.00% is close to the 90.67% reported by Das et al. [82]. The proposed segmentation thus supports strong results across all classifiers, indicating its robustness for skin cancer classification on the ISIC dataset. With its SVM accuracy of 95.30%, the background subtraction with midpoint analysis method also surpasses the state-of-the-art methods in this broader comparison (Table 5). This makes it a promising solution for skin cancer classification and detection.

Table 5.

A comparison of the proposed methods with different segmentation methods and classifiers

| Author | Segmentation and feature extraction methods | SVM (%) | KNN (%) | RF (%) | DT (%) |
|---|---|---|---|---|---|
| Murugan et al. [79] | Watershed segmentation, ABCD rule, GLCM | 85.72 | 69.54 | 74.32 | NA |
| Bassel et al. [80] | Xception, VGG16, ResNet50 | 86.70 | 81.00 | 80.30 | 75.70 |
| Wang [81] | Deep learning | 81.00 | 76.00 | NA | NA |
| Das et al. [82] | ABCD rule | 93.51 | 91.00 | 90.67 | NA |
| Proposed method | Background subtraction with midpoint analysis | 95.30 | 92.20 | 90.00 | 89.60 |

Murugan et al. [79] applied the watershed algorithm to segment skin cancer images and extracted GLCM-based features. Their SVM, RF, and KNN classifiers attained a maximum accuracy of 85.72%. Bassel et al. [80] classified melanoma and non-melanoma skin cancers using a layered combination of classifiers and achieved a highest accuracy of 86.70%. Wang [81] applied a deep learning-based skin lesion detection method to the ISIC dataset and achieved an accuracy of 81.00%. Das et al. [82] used the ABCD rule for feature extraction on the ISIC dataset, combined with machine learning techniques, and diagnosed skin cancer with 93.51% accuracy. In the proposed methodology, the different classifiers are applied to the output of the proposed segmentation method. This yields the highest SVM, KNN, and DT accuracies in Table 5, while the RF accuracy (90.00%) is close to the best reported value (90.67%, Das et al. [82]).

Result Comparisons with the State-of-the-Art Methods

This section compares the proposed segmentation method, combined with the SVM classifier, against state-of-the-art methods. Table 6 summarizes diverse methods for skin cancer classification and detection. Zhang et al. [84] used an attention residual learning convolutional neural network (ARL-CNN) for automated skin lesion classification in dermoscopy images and achieved an accuracy of 91.70%. Hassan et al. [85] used a CNN classifier in an automated skin cancer diagnosis system that collects features of skin cells from dermoscopic images and reached an overall accuracy of 93.70%. Wu et al. [64] detected skin lesions by combining DenseNet with attention residual learning, achieving an accuracy of 83.70%. Javaid et al. [86] integrated image processing and machine learning techniques for skin lesion segmentation and classification. With the random forest classifier, they achieved a maximum accuracy of 93.89%. Gouda et al. [87] combined enhanced super-resolution generative adversarial network (ESRGAN) preprocessing with a CNN for skin cancer detection and reached an accuracy of 83.20%. Reis et al. [88] developed InSiNet, a deep learning-based CNN designed to distinguish malignant from non-malignant skin lesions, with an accuracy of 94.59%. Tembhurne et al. [67] used deep neural networks for feature extraction together with the contourlet transform and local binary pattern histograms and achieved an accuracy of 93.00%. Keerthna et al. [89] used a hybrid CNN framework with an SVM in the output layer to classify dermoscopy images as malignant or non-malignant. Assessed against expert dermatologist labels, it achieved an accuracy of 88.02%.

Table 6.

A comparative analysis of diverse methods for skin cancer classification and detection

| Author | Techniques used | Accuracy (%) |
|---|---|---|
| Zhang et al. [84] | ARL-CNN | 91.70 |
| Hasan et al. [85] | CNN | 93.70 |
| Wu et al. [64] | DenseNet | 83.70 |
| Javaid et al. [86] | Otsu, PCA, SVM, RF | 93.89 |
| Gouda et al. [87] | ESRGAN, CNN, transfer learning | 83.20 |
| Reis et al. [88] | InSiNet, CNN | 94.59 |
| Tembhurne et al. [67] | Machine learning, deep learning | 93.00 |
| Keerthna et al. [89] | CNN, SVM | 88.02 |
| Proposed method | Background subtraction with midpoint analysis, SVM | 95.30 |

The proposed method introduces a new approach to segmentation that accurately delineates skin lesions in images. It achieved an accuracy of 95.30%, outperforming the other state-of-the-art methods reported in this study. Overall, the findings suggest that combining the proposed segmentation method with SVM improves the segmentation and classification of images on the testing dataset. They also suggest that the method handles segmented data effectively and has potential for accurate and reliable image classification in this context.

Conclusion

Cancer identification and diagnosis are difficult in the medical field, and early detection is critical for accurate diagnosis and for preventing the spread of the disease. Over the past decades, extensive research has been conducted in this field, and various methods have been proposed for skin cancer classification and detection. Many melanoma segmentation techniques struggle to recover complete structures because of image quality issues such as noise, low contrast, or intensity inhomogeneity. This article introduces a new skin lesion segmentation technique comprising image partitioning, separation, background elimination, and extraction of the region of interest from the input image. The proposed method uses a bottom hat filter for hair removal and the discrete cosine transform (DCT) with color coefficients for image enhancement. An enhanced background subtraction method with midpoint analysis is then used to segment skin cancer images, achieving an accuracy of 95.30%. The segmentation is validated by comparing the segmented images with the validation data provided with the ISIC dataset.

Acknowledgements

The authors would like to thank Lovely Professional University, Punjab, India, for providing the systems and infrastructure to support this research work.

Author Contribution

All authors contributed to this work.

Funding

The authors declare that no funds, grants, or other support were received during the preparation of this manuscript.

Data Availability

Data supporting this study are openly available from ISIC database: https://challenge.isic-archive.com.

Declarations

Competing Interests

The authors declare no competing interests.

Footnotes

regionprops is a MATLAB function that measures the properties of labeled regions in an image.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1.N. H. Khan et al., “Skin cancer biology and barriers to treatment: Recent applications of polymeric micro/nanostructures,” Journal of Advanced Research, vol. 36. Elsevier B.V., pp. 223–247, Feb. 01, 2022. 10.1016/j.jare.2021.06.014.

- 2.M. Dildar et al., “Skin cancer detection: A review using deep learning techniques,” International Journal of Environmental Research and Public Health, vol. 18, no. 10. MDPI AG, May 02, 2021. 10.3390/ijerph18105479.

- 3.R. Nuthan and V. Rohith, “Detection of Skin Cancer Using KNN and Naive Bayes Algorithms,” vol. 12, no. 6, pp. 8257–8266, 2021.

- 4.U. Saghir and V. Devendran, “A Brief Review of Feature Extraction Methods for Melanoma Detection,” in 2021 7th International Conference on Advanced Computing and Communication Systems, ICACCS 2021, Institute of Electrical and Electronics Engineers Inc., Mar. 2021, pp. 1304–1307. 10.1109/ICACCS51430.2021.9441787.

- 5.A. M. Glazer, D. S. Rigel, R. R. Winkelmann, and A. S. Farberg, “Clinical Diagnosis of Skin Cancer: Enhancing Inspection and Early Recognition,” Dermatol Clin, vol. 35, no. 4, pp. 409–416, 2017. 10.1016/j.det.2017.06.001.

- 6.A. Jiang et al., “Skin cancer discovery during total body skin examinations,” Int J Womens Dermatol, vol. 7, no. 4, pp. 411–414, Sep. 2021. 10.1016/j.ijwd.2021.05.005.

- 7.M. Q. Khan et al., “Classification of Melanoma and Nevus in Digital Images for Diagnosis of Skin Cancer,” IEEE Access, vol. 7, pp. 90132–90144, 2019. 10.1109/ACCESS.2019.2926837.

- 8.H. D. Heibel, L. Hooey, and C. J. Cockerell, “A Review of Noninvasive Techniques for Skin Cancer Detection in Dermatology,” Am J Clin Dermatol, vol. 21, no. 4, pp. 513–524, 2020. 10.1007/s40257-020-00517-z.

- 9.J. Kato, K. Horimoto, S. Sato, T. Minowa, and H. Uhara, “Dermoscopy of Melanoma and Non-melanoma Skin Cancers,” Front Med (Lausanne), vol. 6, pp. 1–7, 2019. 10.3389/fmed.2019.00180.

- 10.M. E. Vestergaard, P. Macaskill, P. E. Holt, and S. W. Menzies, “Dermoscopy compared with naked eye examination for the diagnosis of primary melanoma: A meta-analysis of studies performed in a clinical setting,” British Journal of Dermatology, vol. 159, no. 3, pp. 669–676, 2008. 10.1111/j.1365-2133.2008.08713.x.

- 11.L. Xu et al., “Segmentation of skin cancer images,” Image Vis Comput, vol. 17, no. 1, pp. 65–74, 1999. 10.1016/s0262-8856(98)00091-2.

- 12.N. S. Zghal and N. Derbel, “Melanoma Skin Cancer Detection based on Image Processing,” Current Medical Imaging Formerly Current Medical Imaging Reviews, vol. 16, no. 1, pp. 50–58, 2018. 10.2174/1573405614666180911120546.

- 13.N. M. Zaitoun and M. J. Aqel, “Survey on Image Segmentation Techniques,” Procedia Comput Sci, vol. 65, pp. 797–806, 2015. 10.1016/j.procs.2015.09.027.

- 14.M. K. Hasan, M. A. Ahamad, C. H. Yap, and G. Yang, “A survey, review, and future trends of skin lesion segmentation and classification,” Comput Biol Med, vol. 155, 2023. 10.1016/j.compbiomed.2023.106624.

- 15.M. A. Siddique and S. K. Singh, “A Survey of Computer Vision based Liver Cancer Detection,” Int J Bioinform Res Appl, vol. 18, no. 6, p. 1, 2022. 10.1504/IJBRA.2022.10053584.

- 16.D. Divya and T. R. Ganesh Babu, “A Survey on Image Segmentation Techniques,” Lecture Notes on Data Engineering and Communications Technologies, vol. 35, pp. 1107–1114, 2020. 10.1007/978-3-030-32150-5_112.

- 17.A. T. Beuren, R. Valentim, C. Palavro, R. Janasieivicz, R. A. Folloni, and J. Facon, “Skin Melanoma Segmentation by Morphological Approach,” pp. 972–978, 2012.

- 18.M. A. Ahmed Thaajwer and U. A. Piumi Ishanka, “Melanoma skin cancer detection using image processing and machine learning techniques,” ICAC 2020 - 2nd International Conference on Advancements in Computing, Proceedings, pp. 363–368, 2020. 10.1109/ICAC51239.2020.9357309.

- 19.R. Javid, M. S. M. Rahim, T. Saba, and M. Rashid, “Region-based active contour JSEG fusion technique for skin lesion segmentation from dermoscopic images,” Biomedical Research, vol. 30, no. 6, pp. 1–10, 2019.

- 20.S. Garg and B. Jindal, “Skin lesion segmentation using k-mean and optimized fire fly algorithm,” Multimed Tools Appl, vol. 80, no. 5, pp. 7397–7410, 2021. 10.1007/s11042-020-10064-8.

- 21.R. Kasmi, K. Mokrani, R. K. Rader, J. G. Cole, and W. V. Stoecker, “Biologically inspired skin lesion segmentation using a geodesic active contour technique,” Skin Research and Technology, vol. 22, no. 2, pp. 208–222, 2016. 10.1111/srt.12252.

- 22.F. Riaz, S. Naeem, R. Nawaz, and M. Coimbra, “Active Contours Based Segmentation and Lesion Periphery Analysis for Characterization of Skin Lesions in Dermoscopy Images,” IEEE J Biomed Health Inform, vol. 23, no. 2, pp. 489–500, 2019. 10.1109/JBHI.2018.2832455.

- 23.A. Bassel, A. B. Abdulkareem, Z. A. A. Alyasseri, N. S. Sani, and H. J. Mohammed, “Automatic Malignant and Benign Skin Cancer Classification Using a Hybrid Deep Learning Approach,” Diagnostics, vol. 12, no. 10, Oct. 2022. 10.3390/diagnostics12102472.

- 24.M. Dildar et al., “Skin cancer detection: A review using deep learning techniques,” Int J Environ Res Public Health, vol. 18, no. 10, 2021. 10.3390/ijerph18105479.

- 25.A. Esteva et al., “Dermatologist-level classification of skin cancer with deep neural networks,” Nature, vol. 542, no. 7639, pp. 115–118, 2017. 10.1038/nature21056.

- 26.F. Xie, H. Fan, Y. Li, Z. Jiang, R. Meng, and A. Bovik, “Melanoma classification on dermoscopy images using a neural network ensemble model,” IEEE Trans Med Imaging, vol. 36, no. 3, pp. 849–858, 2017. 10.1109/TMI.2016.2633551.

- 27.M. u. Rehman, S. H. Khan, S. M. Danish Rizvi, Z. Abbas and A. Zafar, “Classification of Skin Lesion by Interference of Segmentation and Convolotion Neural Network,” 2018 2nd International Conference on Engineering Innovation (ICEI), Bangkok, Thailand, pp. 81–85, 2018. 10.1109/ICEI18.2018.8448814.

- 28.H. Mahmoud, M. Abdel-Nasser and O. A. Omer, “Computer aided diagnosis system for skin lesions detection using texture analysis methods,” 2018 International Conference on Innovative Trends in Computer Engineering (ITCE), Aswan, Egypt, pp. 140–144, 2018. 10.1109/ITCE.2018.8327948.

- 29.S. Pathan, K. G. Prabhu, and P. C. Siddalingaswamy, “Techniques and algorithms for computer aided diagnosis of pigmented skin lesions—A review,” Biomed Signal Process Control, vol. 39, pp. 237–262, 2018. 10.1016/j.bspc.2017.07.010.

- 30.M. A. Al-masni, M. A. Al-antari, M. T. Choi, S. M. Han, and T. S. Kim, “Skin lesion segmentation in dermoscopy images via deep full resolution convolutional networks,” Comput Methods Programs Biomed, vol. 162, pp. 221–231, 2018. 10.1016/j.cmpb.2018.05.027.

- 31.E. V. C. B and D. Ron-dom, Technology Trends, vol. 895. in Communications in Computer and Information Science, vol. 895. Cham: Springer International Publishing, 2019. 10.1007/978-3-030-05532-5.

- 32.M. Aljanabi, Y. E. Özok, J. Rahebi, and A. S. Abdullah, “Skin lesion segmentation method for dermoscopy images using artificial bee colony algorithm,” Symmetry (Basel), vol. 10, no. 8, 2018. 10.3390/sym10080347.

- 33.S. S. Chouhan, A. Kaul, and U. P. Singh, Soft computing approaches for image segmentation: a survey, vol. 77, no. 21. Multimedia Tools and Applications, 2018. 10.1007/s11042-018-6005-6.

- 34.M. Waghulde, S. Kulkarni, and G. Phadke, “Detection of Skin Cancer Lesions from Digital Images with Image Processing Techniques,” in 2019 IEEE Pune Section International Conference (PuneCon), IEEE, Dec. 2019, pp. 1–6. 10.1109/PuneCon46936.2019.9105886.

- 35.M. A. Kassem, K. M. Hosny, and M. M. Fouad, “Skin Lesions Classification into Eight Classes for ISIC 2019 Using Deep Convolutional Neural Network and Transfer Learning,” IEEE Access, vol. 8, pp. 114822–114832, 2020. 10.1109/ACCESS.2020.3003890.

- 36.N. Kavitha and M. Vayelapelli, “A Study on Pre-processing Techniques for Automated Skin Cancer Detection,” 2020, pp. 145–153. 10.1007/978-981-15-2407-3_19.

- 37.M. Krishna Monika, N. Arun Vignesh, C. Usha Kumari, M. N. V. S. S. Kumar, and E. Laxmi Lydia, “Skin cancer detection and classification using machine learning,” Mater Today Proc, vol. 33, pp. 4266–4270, 2020. 10.1016/j.matpr.2020.07.366.

- 38.Ç. Kaymak and A. Uçar, A brief survey and an application of semantic image segmentation for autonomous driving, vol. 136. 2019. 10.1007/978-3-030-11479-4_9.

- 39.R. Mohakud and R. Dash, “Skin cancer image segmentation utilizing a novel EN-GWO based hyper-parameter optimized FCEDN,” Journal of King Saud University - Computer and Information Sciences, 2022. 10.1016/j.jksuci.2021.12.018.

- 40.A. Murugan, S. A. H. Nair, A. A. P. Preethi, and K. P. S. Kumar, “Diagnosis of skin cancer using machine learning techniques,” Microprocess Microsyst, vol. 81, p. 103727, 2021. 10.1016/j.micpro.2020.103727.

- 41.A. Pushpalatha, P. Dharani, R. Dharini, and J. Gowsalya, “Retraction: Skin Cancer Classification Detection using CNN and SVM,” J Phys Conf Ser, vol. 1916, no. 1, 2021. 10.1088/1742-6596/1916/1/012148.

- 42.A. Imran, A. Nasir, M. Bilal, G. Sun, A. Alzahrani, and A. Almuhaimeed, “Skin Cancer Detection Using Combined Decision of Deep Learners,” IEEE Access, vol. 10, pp. 118198–118212, 2022. 10.1109/ACCESS.2022.3220329.

- 43.T. Guergueb and M. A. Akhloufi, “Skin Cancer Detection using Ensemble Learning and Grouping of Deep Models,” in International Conference on Content-based Multimedia Indexing, New York, NY, USA: ACM, Sep. 2022, pp. 121–125. 10.1145/3549555.3549584.

- 44.S. S. Sundari, S. AK, and M. Islabudeen, “Skin Lesions Detection using Deep Learning Techniques,” Int J Res Appl Sci Eng Technol, vol. 11, no. 5, pp. 2546–2548, May 2023. 10.22214/ijraset.2023.52129.

- 45.A. Boudhir Abdelhakim, B. Ahmed Mohamed, and D. Yousra, “A New Approach using Deep Learning and Reinforcement Learning in HealthCare,” International journal of electrical and computer engineering systems, vol. 14, no. 5, pp. 557–564, Jun. 2023. 10.32985/ijeces.14.5.7.

- 46.J. V. Tembhurne, N. Hebbar, H. Y. Patil, and T. Diwan, “Skin cancer detection using ensemble of machine learning and deep learning techniques,” Multimed Tools Appl, Jul. 2023. 10.1007/s11042-023-14697-3.35968414 [Google Scholar]

- 47.I. Giotis, N. Molders, S. Land, M. Biehl, M. F. Jonkman, and N. Petkov, “MED-NODE: A computer-assisted melanoma diagnosis system using non-dermoscopic images,” Expert Syst Appl, vol. 42, no. 19, pp. 6578–6585, 2015. 10.1016/j.eswa.2015.04.034. [Google Scholar]

- 48.J. Premaladha and K. S. Ravichandran, “Novel Approaches for Diagnosing Melanoma Skin Lesions Through Supervised and Deep Learning Algorithms,” J Med Syst, vol. 40, no. 4, pp. 1–12, 2016. 10.1007/s10916-016-0460-2. [DOI] [PubMed] [Google Scholar]

- 49.T. Y. Satheesha, D. Satyanarayana, M. N. G. Prasad, and K. D. Dhruve, “Melanoma Is Skin Deep: A 3D Reconstruction Technique for Computerized Dermoscopic Skin Lesion Classification,” IEEE J Transl Eng Health Med, vol. 5, no. c, 2017. 10.1109/JTEHM.2017.2648797. [DOI] [PMC free article] [PubMed]

- 50.Y. Yuan, M. Chao, and Y. C. Lo, “Automatic Skin Lesion Segmentation Using Deep Fully Convolutional Networks with Jaccard Distance,” IEEE Trans Med Imaging, vol. 36, no. 9, pp. 1876–1886, 2017. 10.1109/TMI.2017.2695227. [DOI] [PubMed] [Google Scholar]

- 51.N. Singh and S. K. Gupta, “Recent advancement in the early detection of melanoma using computerized tools: An image analysis perspective,” Skin Research and Technology, vol. 25, no. 2, pp. 129–141, 2019. 10.1111/srt.12622.

- 52.R. D. Seeja and A. Suresh, “Deep learning based skin lesion segmentation and classification of melanoma using support vector machine (SVM),” Asian Pacific Journal of Cancer Prevention, vol. 20, no. 5, pp. 1555–1561, 2019. 10.31557/APJCP.2019.20.5.1555.

- 53.K. Zafar et al., “Skin lesion segmentation from dermoscopic images using convolutional neural network,” Sensors (Switzerland), vol. 20, no. 6, pp. 1–14, 2020. 10.3390/s20061601.

- 54.R. Vani, J. C. Kavitha, and D. Subitha, “Novel approach for melanoma detection through iterative deep vector network,” J Ambient Intell Humaniz Comput, 2021. 10.1007/s12652-021-03242-5.

- 55.H. Ashraf, A. Waris, M. F. Ghafoor, S. O. Gilani, and I. K. Niazi, “Melanoma segmentation using deep learning with test-time augmentations and conditional random fields,” Sci Rep, vol. 12, no. 1, pp. 1–16, 2022. 10.1038/s41598-022-07885-y.

- 56.M. Tahir, A. Naeem, H. Malik, J. Tanveer, R. A. Naqvi, and S. W. Lee, “DSCC_Net: Multi-Classification Deep Learning Models for Diagnosing of Skin Cancer Using Dermoscopic Images,” Cancers (Basel), vol. 15, no. 7, Apr. 2023. 10.3390/cancers15072179.

- 57.M. Ruela, C. Barata, J. S. Marques, and J. Rozeira, “A system for the detection of melanomas in dermoscopy images using shape and symmetry features,” Comput Methods Biomech Biomed Eng Imaging Vis, vol. 5, no. 2, pp. 127–137, 2017. 10.1080/21681163.2015.1029080.

- 58.C. Barata, M. Ruela, M. Francisco, T. Mendonca, and J. S. Marques, “Two systems for the detection of melanomas in dermoscopy images using texture and color features,” IEEE Syst J, vol. 8, no. 3, pp. 965–979, 2014. 10.1109/JSYST.2013.2271540.

- 59.M. K. Hasan, M. T. E. Elahi, M. A. Alam, M. T. Jawad, and R. Martí, “DermoExpert: Skin lesion classification using a hybrid convolutional neural network through segmentation, transfer learning, and augmentation,” Inform Med Unlocked, vol. 28, p. 100819, 2022. 10.1016/j.imu.2021.100819.

- 60.C. Barata, J. S. Marques, and J. Rozeira, “A system for the detection of pigment network in dermoscopy images using directional filters,” IEEE Trans Biomed Eng, vol. 59, no. 10, pp. 2744–2754, 2012. 10.1109/TBME.2012.2209423.

- 61.P. Dubal, S. Bhatt, C. Joglekar, and S. Patil, “Skin cancer detection and classification,” Proceedings of the 2017 6th International Conference on Electrical Engineering and Informatics: Sustainable Society Through Digital Innovation, ICEEI 2017, pp. 1–6, 2018. 10.1109/ICEEI.2017.8312419.

- 62.H. Bhatt, V. Shah, K. Shah, R. Shah, and M. Shah, “State-of-the-art machine learning techniques for melanoma skin cancer detection and classification: a comprehensive review,” Intelligent Medicine, 2022. 10.1016/j.imed.2022.08.004.

- 63.N. C. F. Codella et al., “Skin lesion analysis toward melanoma detection: A challenge at the 2017 International symposium on biomedical imaging (ISBI), hosted by the international skin imaging collaboration (ISIC),” Proceedings - International Symposium on Biomedical Imaging, pp. 168–172, 2018. 10.1109/ISBI.2018.8363547.

- 64.J. Wu, W. Hu, Y. Wen, W. Tu, and X. Liu, “Skin Lesion Classification Using Densely Connected Convolutional Networks with Attention Residual Learning,” Sensors, vol. 20, no. 24, p. 7080, Dec. 2020. 10.3390/s20247080.

- 65.M. F. Jojoa Acosta, L. Y. Caballero Tovar, M. B. Garcia-Zapirain, and W. S. Percybrooks, “Melanoma diagnosis using deep learning techniques on dermatoscopic images,” BMC Med Imaging, vol. 21, no. 1, p. 6, Dec. 2021. 10.1186/s12880-020-00534-8.

- 66.B. Cassidy, C. Kendrick, A. Brodzicki, J. Jaworek-Korjakowska, and M. H. Yap, “Analysis of the ISIC image datasets: Usage, benchmarks and recommendations,” Med Image Anal, vol. 75, p. 102305, Jan. 2022. 10.1016/j.media.2021.102305.

- 67.J. V. Tembhurne, N. Hebbar, H. Y. Patil, and T. Diwan, “Skin cancer detection using ensemble of machine learning and deep learning techniques,” Multimed Tools Appl, vol. 82, no. 18, pp. 27501–27524, Jul. 2023. 10.1007/s11042-023-14697-3.

- 68.M. K. Hasan, M. A. Ahamad, C. H. Yap, and G. Yang, “A survey, review, and future trends of skin lesion segmentation and classification,” Computers in Biology and Medicine, vol. 155, Mar. 2023. 10.1016/j.compbiomed.2023.106624.

- 69.S. L. Lee and C. C. Tseng, “Image enhancement using DCT-based matrix homomorphic filtering method,” 2016 IEEE Asia Pacific Conference on Circuits and Systems, APCCAS 2016, pp. 1–4, 2017. 10.1109/APCCAS.2016.7803880.

- 70.T. F. Sanam and H. Imtiaz, “A DCT-based noisy speech enhancement method using teager energy operator,” Proceedings of the 2013 5th International Conference on Knowledge and Smart Technology, KST 2013, pp. 16–20, 2013. 10.1109/KST.2013.6512780.

- 71.R. Rajagopal, “Discrete Cosine and Sine Transforms: Introduction,” 2006.

- 72.U. Saghir and S. K. Singh, “Segmentation of Skin Cancer Images Applying Background Subtraction with Midpoint Analysis,” 2024. 10.1201/9781003405580-93.

- 73.P. A. Flores-Vidal, P. Olaso, D. Gómez, and C. Guada, “A new edge detection method based on global evaluation using fuzzy clustering,” Soft comput, vol. 23, no. 6, pp. 1809–1821, 2019. 10.1007/s00500-018-3540-z.

- 74.U. Saghir and M. Hasan, “Skin cancer detection and classification based on differential analyzer algorithm,” Multimed Tools Appl, 2023. 10.1007/s11042-023-14409-x.

- 75.A. Blundo, A. Cignoni, T. Banfi, and G. Ciuti, “Comparative Analysis of Diagnostic Techniques for Melanoma Detection: A Systematic Review of Diagnostic Test Accuracy Studies and Meta-Analysis,” Front Med (Lausanne), vol. 8, Apr. 2021. 10.3389/fmed.2021.637069.

- 76.J. Jaworek-Korjakowska, “Computer-aided diagnosis of micro-malignant melanoma lesions applying support vector machines,” Biomed Res Int, vol. 2016, 2016. 10.1155/2016/4381972.

- 77.A. Mohammed and R. Kora, “A comprehensive review on ensemble deep learning: Opportunities and challenges,” Journal of King Saud University - Computer and Information Sciences, vol. 35, no. 2, pp. 757–774, Feb. 2023. 10.1016/j.jksuci.2023.01.014.

- 78.R. B. Oliveira, M. E. Filho, Z. Ma, J. P. Papa, A. S. Pereira, and J. M. R. S. Tavares, “Computational methods for the image segmentation of pigmented skin lesions: A review,” Comput Methods Programs Biomed, vol. 131, pp. 127–141, 2016. 10.1016/j.cmpb.2016.03.032.

- 79.A. Murugan, S. A. H. Nair, and K. P. S. Kumar, “Detection of Skin Cancer Using SVM, Random Forest and kNN Classifiers,” J Med Syst, vol. 43, no. 8, 2019. 10.1007/s10916-019-1400-8.

- 80.A. Bassel, A. B. Abdulkareem, Z. A. A. Alyasseri, N. S. Sani, and H. J. Mohammed, “Automatic Malignant and Benign Skin Cancer Classification Using a Hybrid Deep Learning Approach,” Diagnostics, vol. 12, no. 10, 2022. 10.3390/diagnostics12102472.

- 81.X. Wang, “Deep Learning-based and Machine Learning-based Application in Skin Cancer Image Classification,” J Phys Conf Ser, vol. 2405, no. 1, 2022. 10.1088/1742-6596/2405/1/012024.

- 82.J. Das, D. Mishra, A. Das, M. Mohanty, and A. Sarangi, “Skin cancer detection using machine learning techniques with ABCD features,” 2022. 10.1109/ODICON54453.2022.10009956.

- 83.D. Keerthana, V. Venugopal, M. K. Nath, and M. Mishra, “Hybrid convolutional neural networks with SVM classifier for classification of skin cancer,” Biomedical Engineering Advances, vol. 5, p. 100069, 2023. 10.1016/j.bea.2022.100069.

- 84.J. Zhang, Y. Xie, Y. Xia, and C. Shen, “Attention Residual Learning for Skin Lesion Classification,” IEEE Trans Med Imaging, vol. 38, no. 9, pp. 2092–2103, Sep. 2019. 10.1109/TMI.2019.2893944.

- 85.M. Hasan, S. Das Barman, S. Islam, and A. W. Reza, “Skin cancer detection using convolutional neural network,” in ACM International Conference Proceeding Series, Association for Computing Machinery, Apr. 2019, pp. 254–258. 10.1145/3330482.3330525.

- 86.A. Javaid, M. Sadiq, and F. Akram, “Skin Cancer Classification Using Image Processing and Machine Learning,” in 2021 International Bhurban Conference on Applied Sciences and Technologies (IBCAST), IEEE, Jan. 2021, pp. 439–444. 10.1109/IBCAST51254.2021.9393198.

- 87.W. Gouda, N. U. Sama, G. Al-Waakid, M. Humayun, and N. Z. Jhanjhi, “Detection of Skin Cancer Based on Skin Lesion Images Using Deep Learning,” Healthcare, vol. 10, no. 7, p. 1183, Jun. 2022. 10.3390/healthcare10071183.

- 88.H. C. Reis, V. Turk, K. Khoshelham, and S. Kaya, “InSiNet: a deep convolutional approach to skin cancer detection and segmentation,” Med Biol Eng Comput, vol. 60, no. 3, pp. 643–662, Mar. 2022. 10.1007/s11517-021-02473-0.

- 89.D. Keerthana, V. Venugopal, M. K. Nath, and M. Mishra, “Hybrid convolutional neural networks with SVM classifier for classification of skin cancer,” Biomedical Engineering Advances, vol. 5, p. 100069, Jun. 2023. 10.1016/j.bea.2022.100069.

Data Availability Statement

Data supporting this study are openly available from the ISIC database: https://challenge.isic-archive.com.