Abstract

Objective. Many psychiatric disorders involve excessive avoidant or defensive behavior, such as avoidance in anxiety and trauma disorders or defensive rituals in obsessive-compulsive disorders. Developing algorithms to predict these behaviors from local field potentials (LFPs) could serve as the foundational technology for closed-loop control of such disorders. A significant challenge is identifying the LFP features that encode these defensive behaviors. Approach. We analyzed LFP signals from the infralimbic cortex and basolateral amygdala of rats undergoing tone-shock conditioning and extinction, standard for investigating defensive behaviors. We utilized a comprehensive set of neuro-markers across spectral, temporal, and connectivity domains, employing SHapley Additive exPlanations for feature importance evaluation within Light Gradient-Boosting Machine models. Our goal was to decode three commonly studied avoidance/defensive behaviors: freezing, bar-press suppression, and motion (accelerometry), examining the impact of different features on decoding performance. Main results. Band power and band power ratio between channels emerged as optimal features across sessions. High-gamma (80–150 Hz) power, power ratios, and inter-regional correlations were more informative than other bands that are more classically linked to defensive behaviors. Focusing on highly informative features enhanced performance. Across 4 recording sessions with 16 subjects, we achieved an average coefficient of determination of 0.5357 and 0.3476, and Pearson correlation coefficients of 0.7579 and 0.6092 for accelerometry jerk and bar press rate, respectively. Utilizing only the most informative features revealed differential encoding between accelerometry and bar press rate, with the former primarily through local spectral power and the latter via inter-regional connectivity. Our methodology demonstrated remarkably low training/inference time and memory usage, requiring 310 ms for training, 0.051 ms for inference, and 16.6 kB of memory, using a single core of AMD Ryzen Threadripper PRO 5995WX CPU. Significance. Our results demonstrate the feasibility of accurately decoding defensive behaviors with minimal latency, using LFP features from neural circuits strongly linked to these behaviors. This methodology holds promise for real-time decoding to identify physiological targets in closed-loop psychiatric neuromodulation.

Keywords: neural decoder, defensive behavior, machine learning, psychiatric brain-machine interfaces, neuro-marker

1. Introduction

Fear and anxiety serve as adaptive defensive responses to threats, a phenomenon observed across a variety of species [1]. These responses are evolutionary mechanisms designed to enhance survival by preparing an organism to confront or flee from immediate danger [2, 3]. However, the same reactions, when excessive or misplaced, can significantly disrupt an individual’s overall quality of life [4, 5]. Anxiety disorders, characterized by disproportionate and persistent fear and anxiety, are among the most prevalent psychiatric conditions [6, 7]. Fear and anxiety contribute to the manifestation of a wide array of psychiatric disorders, underscoring their critical role in mental health [8, 9].

Fear and anxiety are often studied through the lens of defensive behaviors, a set of responses or patterns elicited in the face of perceived threats [10]. Rodents exhibit various defensive behaviors in response to actual or potential threats [11, 12]. These behaviors are often used to model human illnesses, because anxiety can also be viewed as an excessive response to potential or actual threats [11, 13]. Therefore, exposure to a threatening stimulus evokes defensive responses that resemble emotional states related to fear and anxiety [12]. Recent studies indicate that the defensive patterns observed in normal human subjects show notable similarities to those of laboratory rodents. This parallel supports the hypothesis that rodent defensive behaviors may be reasonable models of similar behaviors in human anxiety disorders [14, 15]. The subjective human experience of fear is not the same as innate defensive behaviors in lower vertebrates, but those defensive behaviors are the closest available model [16]. Furthermore, both human fear/anxiety and rodent defensive behavior load onto the same frontal-amygdala circuits [17–19]. Hence, animal defensive behaviors offer a valuable model for understanding negative-valence processes in humans [20–22]. As a result, the excessive or contextually inappropriate exhibition of these behaviors can serve as a model for certain aspects of human psychiatric disorders [23, 24].

The long-term goal of modeling defensive and anxious behavior is to develop new treatments. Direct electrical stimulation of the brain is a particularly promising approach to that translation. Brain stimulation specifically improves the symptoms of multiple fear/anxiety disorders [25–28]. The approach involves the precise targeting of specific brain areas to modulate dysfunctional neural circuits associated with these conditions, which allows direct targeting of mechanisms discovered through animal models. Within the field of psychiatric brain stimulation, there is a strong drive towards closed-loop therapies [29–32]. The symptoms of psychiatric disorders, and of fear/anxiety disorders in particular, vary over time, and only some of those symptom states require neurostimulation. A closed-loop brain-machine interface (BMI) system that uses real-time neural activity from the subject to guide stimulation could help develop effective, precisely tailored therapies that stimulate only when it will be beneficial [29, 33–36].

There exists a main challenge for developing such closed-loop BMIs: we need a neural decoder that is capable of estimating the disorder symptom or behavior in real-time [37–39]. The development of highly accurate, fast, and memory efficient decoders is essential for optimizing the therapeutic outcomes, ensuring that stimulation protocols are dynamically adjusted to the fluctuating patterns of neural dysregulation associated with psychiatric disorders [40]. Consequently, advancements in BMI technology and decoding algorithms hold the promise of revolutionizing the treatment landscape for patients with psychiatric conditions, offering hope for more personalized and effective interventions.

Decoding plays a pivotal role in neural engineering and the analysis of neural data [41–43]. It leverages activity recorded from the brain to forecast behaviors or symptoms [44–47]. These predictions, derived from decoding, can be utilized to manipulate devices or to enhance our understanding of the brain’s involvement in disorders [48–50]. This is achieved by assessing the extent of information that neural activity conveys about a symptom or behavior and examining how this information varies across different brain areas, experimental conditions, and states of disorder [51–53]. Decoding psychiatric states poses unique modeling challenges due to the complex and widespread network of brain regions involved in neural processes linked to neuropsychiatric states and behaviors, particularly in disorders such as chronic pain, addiction, or post-traumatic stress disorder (PTSD) [30, 38, 54–57]. It is also important to emphasize decoding from local field potentials (LFP) as opposed to single-neuron recordings. Single-neuron activities can be highly informative and were the foundation of early successful human motor decoding examples [41, 43, 58]. Single unit signals also underpinned a recent study demonstrating decoding of anxiety/threat-related behaviors [59]. These signals, however, are unstable over long periods of time (i.e. the decades that a clinical BMI might need to function) and require sampling at rates above 10 kHz, dramatically increasing system power requirements. LFPs, in contrast, carry rich behaviorally relevant signals [60–62] and can be stable for years [63]. They may specifically carry defensive information, and therefore they can also be used to decode defensive behaviors. For instance, one study showed that freezing states could be partially classified using 4 Hz LFP power [64].

In essence, neural decoding represents a regression or classification challenge that links neural signals to specific variables [65]. Machine learning (ML) has emerged as a pivotal technique for elucidating the intricate patterns of neural activity, as well as the individual variations in brain function correlated with symptoms and behaviors [66]. Its utility is particularly pronounced when the primary research objective is to enhance predictive accuracy, a goal partly attributed to ML’s proven efficacy in addressing nonlinear challenges [67, 68]. Despite recent advances in ML techniques, the decoding of neural activity frequently employs traditional approaches such as linear regression (LR) and support vector machine (SVM) [69–71]. The adoption of contemporary ML tools for neural decoding might yield not only a substantial performance improvement but also the possibility of gaining more profound insights into neural functionality, as shown in recent studies. Encoding-decoding frameworks, based on linear state-space models, have decoded mood and cognitive state fluctuations from multi-site intracranial electrocorticogram (ECoG) or stereo-electroencephalography (sEEG) signals [38, 69]. A multi-layer perceptron (MLP) has been utilized to forecast depressive states in human patients from local field potential (LFP) signals [72]. A decoder leveraging random forest (RF) models has been developed for the prediction of multi-class affective behaviors via intracranial electroencephalography (iEEG) recordings from the human mesolimbic network [73]. A discriminative cross-spectral factor analysis model was utilized for identifying a brain-wide oscillatory pattern for predicting resilient versus susceptible mice to stress [74]. Episodes of mental fatigue and changes in vigilance were precisely decoded from ECoG signals in non-human primates (NHPs), using a gradient boosting classifier [75, 76].

In the aforementioned studies, beyond the decoding model utilized for prediction, the neuro-markers derived from neural data were crucial for decoding efficacy. The majority of previous efforts to detect psychiatric symptoms and behaviors in humans have focused on classical spectral power features [38, 69, 73, 77, 78]. It is not clear that spectral power is the best feature for decoding complex cognitive-emotional phenomena such as fear/defensive behaviors. For instance, spectral power features were outperformed by cross-region connectivity metrics when attempting to decode cognitive task engagement [70, 79]. Spectral (wavelet entropy), temporal (Hjorth parameters), and connectivity features (partial directed coherence and phase locking index) have all been identified as significant markers for detecting mental fatigue [75]. In contrast, shifts in depressive states were more influenced by variations in spectral power features within the subcallosal cingulate than by coherence and phase-amplitude coupling [72]. Consequently, the importance of spectral power vs. other neuro-markers for modeling and decoding defensive behaviors and fear expression requires further investigation.

Here, we studied the decoding of defensive behaviors from the prefrontal cortex (PFC) and amygdala, which together comprise a circuit believed to regulate the expression of threat/defense versus safety behaviors [24, 80–82]. In prior rodent work, the balance between defensive and safety behaviors was associated with theta band (5–8 Hz) LFP synchrony between the infralimbic cortex (IL) and basolateral amygdala (BLA) [60, 64, 83]. Therefore, IL-BLA LFP connectivity and power features are promising targets for the development and testing of decoding algorithms that could be used in closed-loop psychiatric BMIs. At the same time, prior work focused on simple categorical analyses (t-tests between groups) and did not consider the more clinically relevant question of how to decode imminent behavior at the timescale of milliseconds to seconds. Rapid decoding would be crucial for a closed-loop BMI aimed at mitigating anxious or avoidance behavior in humans. It is not clear that the same LFP features that broadly discriminate two groups will be able to predict moment-to-moment behavior. Similarly, past studies that employed decoding methods used them primarily to identify when and where specific information was encoded [59], or to identify behavior at longer timescales [64].

We thus developed a behavioral decoder based on IL-BLA LFP signals from rats undergoing a tone-shock conditioning and extinction protocol [84, 85]. Beyond conventional band power features, we explored and exploited a broad array of neuro-markers derived from the LFPs, across spectral, temporal, and connectivity domains. We considered three defensive behaviors: freezing, bar press suppression (bar press rate), and accelerometry, specifically the jerk (first derivative) calculated from a 3-axis head-mounted accelerometer. Freezing and bar press suppression are canonical defensive behaviors that have been studied for decades [20–22, 86–88]. Accelerometry jerk is a newer metric we have proposed and shown to correlate with, but not fully overlap the two other measures [89]. We developed a decoding framework based on Light Gradient-Boosting Machine (LightGBM), which outperformed other state-of-the-art ML-based decoders in both accuracy and latency. Our approach included a methodology to assess feature importance and a feature selection strategy utilizing the SHapley Additive exPlanations (SHAP), effectively reducing the dimensionality of the feature space. Band power and band power ratio between channels emerged as critical for decoding defensive behaviors, with the high-gamma band being particularly predictive compared to other frequency bands. By prioritizing highly-ranked neuro-markers, we enhanced decoding performance beyond that with solely band power features. Consequently, this study underscores the effectiveness of our proposed ML framework in the precise and rapid decoding of defensive behaviors within a closed-loop psychiatric Brain-Machine Interface (BMI) system.

2. Methods

2.1. Animals and behavior paradigm

We utilized 16 adult Long Evans rats, with weights ranging from 250 to 350 grams. Initially, rats were pair-housed in plastic cages for at least 7 days to facilitate acclimation to the research facility. Subsequent to this acclimation period, the rats underwent daily handling for 5 days to mitigate handling-related stress, after which they were individually housed in plastic cages. To prepare for experimental procedures, food intake was restricted to 10 grams per day until each rat achieved 85%–95% of its initial body weight. Thereafter, the animals were allocated 15–20 grams of food daily to maintain their weight within this specified range throughout the behavioral experiments. During the first three days of food restriction, sucrose pellets were introduced into the home cages to acquaint the rats with the reward, thereby facilitating the learning of bar-pressing behavior.

The behavioral training and experiments were conducted in the Coulbourn conditioning chambers, with dimensions of 30.5 × 24.1 × 21 cm. These chambers were equipped with a grid floor, consisting of rods spaced 1.6 cm apart and with a diameter of 4.8 mm, to facilitate the delivery of foot shocks. An aluminum wall of the chamber incorporated a retractable bar and a food trough for monitoring reward-seeking behaviors, while a speaker mounted on the opposite wall emitted sound stimuli. Additionally, a camera with an attached wide-angle lens was positioned outside the conditioning chamber, above the speaker unit, to record video footage through the chamber’s plexiglass top.

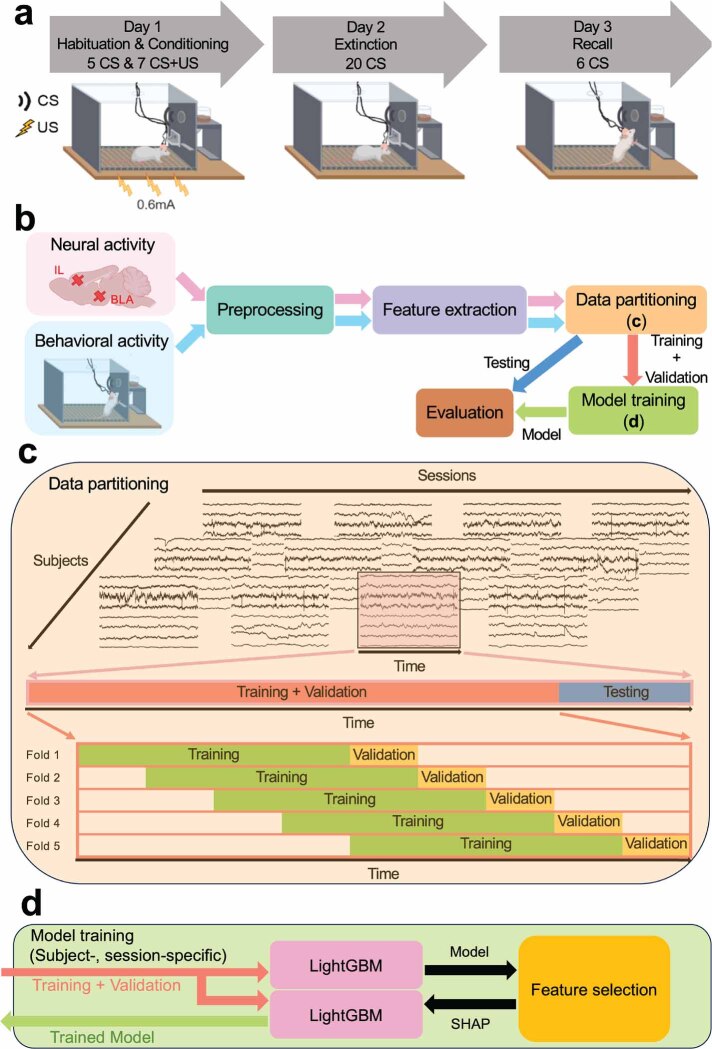

Initially, rats were trained to execute bar presses to obtain sucrose pellets. They subsequently underwent electrode implantation and participated in a post-surgical behavioral paradigm. To provoke defensive behaviors, the rats were exposed to a tone-shock conditioning protocol, which comprised three phases: habituation/conditioning, extinction, and extinction recall, as shown in figure 1(a). Electrophysiological and video recordings were systematically carried out during each experimental session. During the habituation phase on day 1, rats encountered 5 trials of the conditioned stimulus (CS: a 30-s, 82 dB tone). This was followed by the conditioning phase, where they experienced 7 instances of the CS paired with the unconditioned stimulus (US: a 0.6 mA, 0.5-s foot shock) immediately after the CS. On day 2, the extinction phase consisted of 20 presentations of the CS alone, without the US, in the same chamber. On day 3, to evaluate extinction memory (recall), the CS was presented 6 times without the US.

Figure 1.

Experimental paradigm and proposed ML framework for decoding defensive behaviors. (a) The three-day tone-shock conditioning protocol. The experiment consisted of three phases: habituation/conditioning, extinction, and extinction recall, with electrophysiological and behavioral data recorded during each phase. (CS: Conditioned stimulus. US: Unconditioned stimulus) (b) The proposed ML framework contained modules including neural data preprocessing, feature extraction in three representation domains, data partitioning into training, validation and testing sets as shown in (c), decoding model training as shown in (d), and model evaluation. (c) Data partitioning process in each recording session for each subject. A held-out testing set was taken at the end of the session, and the beginning of the session was split in a sliding window 5-fold cross-validation paradigm. (d) The procedure of training the decoding model. SHAP values were measured using the first trained LightGBM model with all features, and the second LightGBM was then trained using the high-rank features in the order of their SHAP values and subsequently used for final model evaluation.

Reward-seeking behavior, indicated by bar presses, functioned as a dynamic measure for defensive behavior, with a decrease in pressing activity interpreted as an elevated threat response. Bar press events were captured in the electrophysiology event data using a Data Acquisition System (DAQ) (USB 6343-BNC or PCIe-6353, National Instruments, Woburn, MA, USA). These event data were subsequently processed to isolate bar press incidents and their associated timestamps. Assessment of freezing behavior was conducted through offline video analysis, employing a Logitech HD Pro Webcam C910 equipped with a Neewer Digital High Definition 0.45 × Super Wide-angle Lens. The footage, captured at a rate of 24 frames per second with Debut Professional software, was analyzed using ANY-maze, which utilizes its integrated freezing detection functionality to assign a ‘freezing score’ to each frame. This score increased with more significant changes in pixels between consecutive frames. Meanwhile, accelerometry data were collected continuously at a 30 kHz sampling rate via the RHD 2132 electrophysiology headstage, which includes a built-in 3-axis accelerometer. These data were logged using the Open Ephys acquisition system, a widely used open-source platform for in-vivo electrophysiology research [90]. The synchronization of accelerometry records with video data was accomplished by aligning the ‘tone on’ events observed in both datasets. There were sparse bar press events for a few rats during the conditioning phase due to the bar press suppression resulting from foot shocks. We chose 10 rats with no less than 5 bar presses in the conditioning phase to ensure the threat responses were well-encoded in the following neural decoding study. All 16 rats were used for the analysis in habituation, extinction, and recall sessions.

2.2. Electrodes and surgery

Each electrode bundle was comprised of 8 nickel-chromium recording microwires, each measuring 12.5 µm in diameter, accompanied by one reference wire of the same diameter as the recording wires, and a platinum-iridium stimulating channel with a diameter of 127 µm [91]. The stimulation channel was used for another set of experiments not reported here, and no stimulation occurred during any of the data reported in this paper. These recording and stimulating components were collectively bundled within a 27-gauge stainless steel cannula, which also served as a pathway for the return current during stimulation. The recording wires were bonded to the individual pins of an Omnetics connector using silver paint, while the stimulating wire was soldered to a mill-max connector, enabling concurrent recording and stimulation at the same site. A ground wire was affixed to the connector, and the entire bundle was safeguarded with epoxy. Prior to surgery, the electrodes were sterilized using Ethylene Oxide (EtO).

The electrode arrays were surgically implanted into the left infralimbic cortex (IL) (+3 mm anterior-posterior (AP), +0.5 mm medial-lateral, and −3.95 mm dorsal-ventral (DV) from the brain surface) and the basolateral amygdala (BLA) (−2.28 mm AP, +5 mm medial-lateral, and −7.5 mm DV from the brain surface). Dental cement was utilized to secure the electrodes and to construct a protective head cap for the animals. The ground wire was securely wrapped around a skull screw prior to the implant fixation. A minimum recovery period of seven days was allowed for the animals before starting physiological experiments.

2.3. Electrophysiology and data processing

The electrophysiological signals, specifically local field potentials (LFPs), were recorded at a sampling rate of 30 kHz using an Open Ephys acquisition system throughout all experimental sessions. The recording headstage was interfaced with two mill-max male-male connectors, each comprising eight channels, through an adaptor.

Quantification of defensive behavior adhered to the methodology established in a prior study [89]. We calculated and attempted to decode three separate types of defensive behavior: freezing, bar press rate/suppression, and accelerometry rate of change (jerk). Freezing is the most common assay of defensive behavior in rodents, particularly in tone-shock conditioning paradigms as used in this study. It therefore places our results in context in the broader literature. Bar press rate is used less frequently because it requires extensive operant pre-training, but as we showed in [89], bar pressing is only partially correlated with freezing. That is, it captures a different aspect of defensive behavior that may be differentially encoded in IL-BLA activity. The same is true for accelerometry jerk: it correlates partially with the other two metrics, and can be used to compute an extinction/recall analysis, but does not measure precisely the same type of defensive reaction [89]. Jerk can be thought of as capturing the vigor or rapidity of response, and may be able to capture more active forms of defense such as darting [92].

The jerk is defined as the rate of change in total (3-axis) acceleration, calculated as:

where is the accelerometry jerk as a function of time , and , , and are the voltages of the accelerometer in X, Y, and Z axes, respectively. The accelerometry jerk was downsampled from its original sampling rate of 30 k samples/second to 1k samples/second, and was then smoothed with a Gaussian filter using a 200-sample window to remove non-biological noise transients. Bar press events and their corresponding timestamps were extracted from the electrophysiological recordings, with timestamps being resampled to 1k samples/second. The counts of presses was binned into each 1-ms time interval, and then these counts were smoothed using a Gaussian filter with a 1k-sample window. This process transformed the data from a discrete count of events into an approximation of a continuous press rate, hereby referred to as the bar press rate. The resampling process utilized the function in Matlab, while Gaussian smoothing was executed with the function in Matlab.

A total of 8 recording channels were obtained from bipolar-referenced LFP signals, with 4 channels from each of the IL and BLA regions. These bipolar-subtracted channels were subsequently band-pass filtered within the range of 1–150 Hz using a 3rd-order zero-phase infinite impulse response (IIR) Butterworth filter. Subsequently, line noise was removed by applying a notch filter at 60 Hz and its harmonics. The LFP data was then demeaned across the time series for each channel. For each subject and recording session, we visually inspected the neural data and excluded time epochs that exhibited clear non-neural artifacts, such as significant sharp voltage transients. For extracting features in various frequency bands, the neural data were processed using 3rd-order zero-phase IIR Butterworth band-pass filters across 7 frequency bands: 1–4 Hz (delta), 4–8 Hz (theta), 8–13 Hz (alpha), 13–30 Hz (beta), 30–50 Hz (low-gamma), 50–80 Hz (gamma), and 80–150 Hz (high-gamma). Phase and amplitude were extracted from the band-pass filtered signal via Hilbert transform. Cross-spectral density was estimated on the neural signals before band-pass filtering using the Multitaper method in the MNE package. The other preprocessing steps were implemented using the SciPy package.

Both behavioral data and the neuro-markers were computed in overlapping 1 s sliding windows with a 0.2 s step size. Behavioral measurements were quantified by averaging the measures of accelerometry jerk, bar press rate, and freezing score within each window.

2.4. Neuro-marker extraction

To investigate the neural representations in various aspects and enhance the accuracy of decoding defensive behaviors in our model, we extracted 17 types of neuro-markers across 3 representation domains as neural features for each window, as detailed in table 1. We chose these features based on existing evidence that, in general, local power and cross-region connectivity between IL and BLA have been linked to defensive behaviors in past research [83, 93]. Additionally, we computed time domain features that are pivotal in identifying patterns of neural activity associated with specific behaviors or pathological states, owing to their simplicity and the direct interpretation of neural dynamics [94–96].

Table 1.

Neuro-markers extracted in the spectral, temporal, and connectivity domains.

| Spectral domain | |

|---|---|

Band powera b b

|

BP

|

Relative band powera b b

|

RBP

|

Band power ratio between bandsa b b

|

BPRB

|

|

| |

| Temporal domain | |

|

| |

| Line lengtha | LL |

Hjorth parametersa c c

|

Act, Mob, Com = Mob |

| Maximuma | Max |

| Minimuma | Min |

| Nonlinear energya | NE |

Skewnessa c c d d

|

Skewness |

Approximate entropya e e

|

ApEn |

Sample entropya e e

|

SampEn |

|

| |

| Connectivity domain | |

|

| |

Band power ratio between channelsa b b f f

|

|

Coherencea f f g g

|

Coh

|

Phase amplitude couplinga f f h h i i

|

PAC

|

Phase locking valuea f f i i

|

PLV

|

Pearson correlationa c c d d f f

|

Corr

|

Band Pearson correlationa b b c c d d f f

|

BCorr

|

is the time-series neural signal within a window of length T, where .

are the and bands of the neural representations.

σ is the standard deviation of , and σ2 is the variance.

is the mean of .

number of such that and , number of times that , number of times that , where , and .

are the and channels of the neural representations.

is the cross-spectral density between and at frequency , and is the auto-spectral density of at frequency .

a(t) is the amplitude of .

is the phase of .

In the spectral domain, band power (BP) was quantified across 7 frequency bands [38, 97, 98]. Relative band power (RBP) refers to the power in a specific frequency band relative to the total signal power [94, 99, 100]. Band power ratio between bands (BPRB) facilitates the pairwise comparison of power levels across different bands within a single channel [99–101].

Regarding temporal features, line length (LL) calculates the absolute differences between successive time points [94, 97, 102]. Hjorth parameters (HP) reflect statistical attributes including variance, mean frequency, and frequency variation [94, 103–105]. Maximum (Max) and minimum (Min) represent the extreme values within the window [94]. Nonlinear energy (NE) gives an estimate of the energy content of the neural signal [106]. Skewness evaluates the asymmetry of the distribution of instances within the window [107]. Approximate entropy (ApEn) and sample entropy (SampEn) assess the existence of patterns within a sequence of instances [108, 109].

In the connectivity domain and cross-region representations, band power ratio between channels (BPRC) enables pairwise power level comparison across channels from two distinct brain regions [99]. Coherence (Coh) quantifies the similarities of neural oscillation between channels [110]. Phase-amplitude coupling (PAC) captures the linkage between the phase of a low-frequency band and the amplitude of a high-frequency band between channels [95, 111, 112]. Phase locking value (PLV) describes the phase relationship consistency between signals from different channels [64, 113]. Pearson correlation (Corr) and band Pearson correlation (BCorr) quantify functional connectivity between channels, across the full band and within individual frequency bands, respectively [79].

Here, BP, RBP, BPRC, Coh, PLV, and BCorr were assessed across the aforementioned 7 frequency bands. PAC analysis was performed between the amplitudes of the low-gamma, gamma, and high-gamma bands and the phases of the theta and alpha bands, with 6 phase-amplitude combinations. BPRB comparisons were made between each pair of the 7 bands, with 21 band-band combinations in total. BP, RBP, BPRB, LL, HP, Max, Min, NE, skewness, ApEn, and SampEn were calculated for each individual channel. BPRC, Coh, PAC, PLV, Corr, and BCorr were derived only from channel pairs between IL and BLA, with 16 channel-channel combinations. ApEn and SampEn were computed using the MNE-Features package.

2.5. Dataset partitioning and characteristics

After extracting the neuro-markers and behavioral data, we partitioned them into three distinct datasets for subsequent use in training the decoding model, selecting high-rank features, and evaluating the model’s performance. For each subject, we divided the data from each recording session into training, validation, and test sets, as depicted in figure 1(c). The models were further trained and evaluated on these datasets in a subject-dependent, session-dependent manner.

A held-out test set, constituting 20% of the entire recording, was designated from the final 20% of each behavior session, while the initial 80% served as the training and validation sets. The separation between the training and validation sets employed a sliding-window 5-fold cross-validation paradigm. The time series data were evenly divided into 9 windows. In the first fold, the initial 4 windows formed the training set, and the window served as the validation set. From the second to fifth folds, we sequentially shifted the training and validation sets by one window forward in time, ensuring that validation sets were different across folds and incorporating validation sets from preceding folds into the training sets of subsequent folds. Therefore, the division ratios for training and validation sets versus test sets, and training sets versus validation sets, were maintained at 80%–20%. This method respected the chronological sequence of the time series data by consistently organizing the datasets in a training-validation-testing order. This organization ensured that the model was always trained on historical data and validated/tested on subsequent data, thereby preventing data leakage across the temporal dimension. The size of training, validation, and test sets is shown in table A1 and appendix A. The test set was utilized for the final evaluation of the model’s decoding accuracy, trained using the complete training and validation sets. Feature selection and hyperparameter optimization were conducted based on the model’s validation set performance, trained on the training set data.

Our dataset covers both neural and behavioral responses under a structured experimental paradigm. Its richness and uniqueness lie in its detailed temporal resolution and the simultaneous recording of multiple modalities (neural signals and behavioral measures including accelerometry, reward-seeking, and freezing). This integration allows for advanced modeling of the neural correlates of behavior, providing insights into the neural dynamics underlying avoidance behaviors. Additionally, the specific conditioning paradigm, featuring sequential phases of habituation, conditioning, extinction, and recall, enables a nuanced analysis of conditioned/unconditioned behavioral responses. Furthermore, the integration of these diverse neuro-markers across multiple domains not only improves the accuracy of decoding models applied to predict behavioral outcomes, but also enables a deeper understanding of the underlying neurophysiological processes, therefore making it a valuable resource for both exploratory and predictive studies in defensive behaviors.

2.6. Decoding model

A diverse array of machine learning (ML) models has been employed for neuropsychiatric tasks and brain-machine interface applications, including LR [69, 110], SVM [64, 70, 74, 114, 115], RF [116, 117], and artificial neural network (ANN) [118, 119]. Moreover, gradient-boosted decision trees (GBDT) have demonstrated promising performance in previous neurophysiological task studies [97, 98, 104, 114, 120]. In this work, we utilized a GBDT-based model named Light Gradient-Boosting Machine (LightGBM), known for its efficiency in reducing data instances and features through gradient-based one-side sampling (GOSS) and exclusive feature bundling (EFB) [121]. GOSS retains data instances with gradients above a certain threshold while randomly discarding instances with smaller gradients, thereby maintaining the data’s substantial contribution to information gain. EFB efficiently reduces the number of effective features by bundling mutually exclusive features—those not taking non-zero values simultaneously—into a single feature. By leveraging GOSS and EFB, LightGBM achieves superior computational speed and lower memory usage compared to other GBDTs, without compromising the accuracy intrinsic to GBDT models.

In addition to LightGBM, we evaluated a variety of ML models widely applied in neurophysiological research, employing our proposed feature set as outlined in table 1. These models encompass traditional ML approaches such as LR, SVM with both Linear (SVM-Lin) and radial basis function kernels (SVM-RBF) [122], the tree-based RF model [123], and ANN models with diverse architectures, including MLP [68], long short-term memory (LSTM) [124], convolutional neural networks (CNN) [125], and Residual Networks (ResNet) [126]. The details of the training procedure, model architectures, and hyperparameter selection are presented in appendix C. In our preliminary decoding analysis shown in table 2, by leveraging all neural features, we assessed the decoding accuracy for accelerometry jerk and bar press rate across the aforementioned ML models, averaged over subjects in each recording session. Performance evaluation was conducted using both the coefficient of determination (R2) and the Pearson correlation coefficient (r) metrics. It should be noted that here, R2 is not the squared Pearson correlation coefficient, and its value lies within the range of . A negative R2 suggests that the decoded behavior captures less variation in the real behavior than a constant value equivalent to the average of the ground truth, indicating relatively poor decoding performance. Our findings in table 2 indicate that by using our extracted neuro-markers as inputs, LightGBM outperformed other ML models in decoding both accelerometry jerk and bar press rate in 14 out of 16 comparisons. We also employed ANNs to test their capability of automatic neural feature extraction for decoding defensive behaviors. LSTM-Raw, WaveNet-Raw (an advanced CNN model with a multi-temporal-scale structure) [127], and ResNet-Raw [126] used raw LFP signals as inputs for the decoding tasks appendix B. The results from this experimental setup, as delineated in table A2, were systematically compared with the decoding performance of models that incorporate neuro-markers (table 2). This analysis reveals that manual feature extraction consistently outperforms the ANN models using raw LFP signals, and it suggests that these neuro-markers introduce a level of specificity and relevance that current ANN architectures struggle to achieve autonomously when working with neural data.

Table 2.

Performance of ML models for decoding defensive behaviors averaged across subjects in each recording session. The performance was evaluated using the coefficient of determination () and the Pearson correlation coefficient (). The best results are bolded.

| Behavior | Metric | Session | LR | SVM-Lin | SVM-RBF | RF | MLP | LSTM | CNN | ResNet | LightGBM |

|---|---|---|---|---|---|---|---|---|---|---|---|

| Accelerometry jerk | Habituation | −59.52 | −140.8 | 0.4495 | 0.4581 | 0.4668 | 0.4554 | 0.4614 | 0.4545 | 0.4677 | |

| Conditioning | −1099 | −3.593 | 0.3931 | 0.6324 | 0.6240 | 0.6301 | 0.6239 | 0.6216 | 0.6310 | ||

| Extinction | −62.73 | −6.935 | 0.4815 | 0.4913 | 0.4878 | 0.4646 | 0.4779 | 0.4592 | 0.4952 | ||

| Recall | −2.040 | −0.3346 | 0.4916 | 0.5417 | 0.5214 | 0.5391 | 0.5449 | 0.5390 | 0.5515 | ||

| Habituation | 0.4660 | 0.5038 | 0.6895 | 0.6974 | 0.6914 | 0.6827 | 0.6934 | 0.6817 | 0.6998 | ||

| Conditioning | 0.2497 | 0.5543 | 0.7052 | 0.8417 | 0.8313 | 0.8357 | 0.8224 | 0.8132 | 0.8425 | ||

| Extinction | 0.5340 | 0.5443 | 0.7037 | 0.7147 | 0.6951 | 0.6782 | 0.6978 | 0.6747 | 0.7187 | ||

| Recall | 0.5515 | 0.5940 | 0.7320 | 0.7688 | 0.7614 | 0.7712 | 0.7676 | 0.7615 | 0.7753 | ||

|

| |||||||||||

| Bar press rate | Habituation | −466.0 | −719.3 | −8.451 | 0.3299 | 0.3105 | 0.3201 | 0.3281 | 0.3188 | 0.3306 | |

| Conditioning | −638.8 | −92.94 | −20.55 | 0.2819 | 0.2697 | 0.2610 | 0.2734 | 0.2713 | 0.2848 | ||

| Extinction | −22.16 | −49.67 | −13.56 | 0.3713 | 0.3576 | 0.3654 | 0.3722 | 0.3578 | 0.3798 | ||

| Recall | −2.027 | −2.517 | −9.068 | 0.3746 | 0.3618 | 0.3597 | 0.3610 | 0.3576 | 0.3761 | ||

| Habituation | 0.3626 | 0.3194 | ——–a | 0.5995 | 0.5796 | 0.5809 | 0.6064 | 0.5856 | 0.6113 | ||

| Conditioning | 0.3202 | 0.2899 | ——–a | 0.5480 | 0.5313 | 0.5298 | 0.5334 | 0.5331 | 0.5435 | ||

| Extinction | 0.4536 | 0.3432 | ——–a | 0.6115 | 0.6072 | 0.6105 | 0.6204 | 0.6101 | 0.6237 | ||

| Recall | 0.3540 | 0.3248 | ——–a | 0.6249 | 0.6134 | 0.6029 | 0.6212 | 0.6251 | 0.6368 | ||

Not applicable due to the fact that the model produced a single constant value as its output for all inputs, and thus a Pearson correlation coefficient could not be computed.

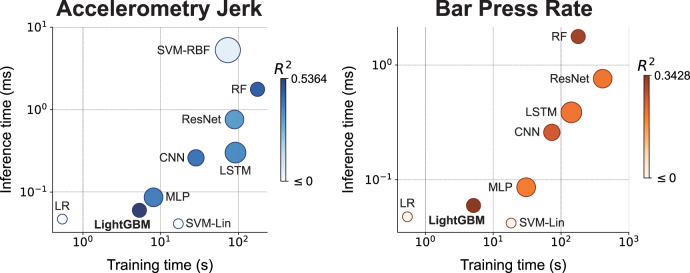

The performance superiority of LightGBM for decoding defensive behaviors is further evident from the comparative analysis of accuracy, training and inference times, as well as memory usage across different models. As illustrated in figure 2, LightGBM achieved the highest decoding accuracy among all tested ML models for both behavioral metrics, as indicated by the R2 values in the scatter plots (0.5364 for accelerometry jerk and 0.3428 s for bar press rate). Moreover, LightGBM not only shows a reduced memory footprint (28.30 kB) but also exhibits significantly lower training times (5.336 s for accelerometry jerk and 5.130 s for bar press rate) and inference times (0.06 006 ms for accelerometry jerk and 0.05 966 ms for bar press rate), compared with other models that achieve relatively lower decoding accuracy (SVM-RBF, RF, MLP, LSTM, CNN, and ResNet), presented in figure 2 and table A3. Training time is the duration in clock time for training a model using all training samples, and inference time refers to the time used for each model to provide a prediction for one test sample. These attributes of LightGBM suggest its significant advantages in predicting avoidance behaviors in a closed-loop application where accuracy, speed, and memory efficiency are all critical [39]. Therefore, we selected LightGBM as the basis model for subsequent analyses in our study.

Figure 2.

Comparative performance of ML models for decoding accelerometry jerk (Left) and bar press rate (Right) in accuracy, training time, inference time, and memory size, averaged across subjects and sessions. Each circle represents a model, with the size of the circle proportional to the memory size (on a logarithmic scale), and with the color of the circle proportional to the accuracy evaluated using (in an exponential scale).

All ML models were conducted on a single core of AMD Ryzen Threadripper PRO 5995WX CPU by Python 3.8.16, scikit-learn 1.2.2, and Pytorch 2.0.1, and they were run on the same platform Red Hat Enterprise Linux 7.9. The implementation of LR, SVM-Lin, SVM-RBF, and RF was conducted using scikit-learn, while MLP, LSTM, CNN, ResNet, LSTM-Raw, WaveNet-Raw, and ResNet-Raw were implemented via Pytorch. LightGBM was implemented using the LightGBM package provided by Microsoft.

2.7. Model training and evaluation

Figure 1(d) illustrates the model training process using the dataset configuration detailed in figure 1(c). LightGBM models were trained in a subject-specific and session-specific manner, premised on the hypothesis that neural representations of defensive behaviors exhibit inter-subject variability. Furthermore, we fitted models separately for each recording session because we expected the neural encoding to shift over time. Tone-shock conditioning and extinction learning both involve significant plasticity in the IL-BLA circuit, and thus defensive behaviors might be driven by different activity patterns before vs. after a given stage of learning. For each subject and session, 5 LightGBM models were trained and assessed using the 5 folds designated for the training and validation sets, which were subsequently used for the selection of top-ranked features based on high feature importance values. A final LightGBM model was then trained using the aggregated training and validation sets and evaluated against the held-out test set, incorporating either band power features, selected top-ranked features (named as LightGBM-Top in the following analyses), or the entire set of extracted features.

The model’s decoding performance was quantified using R2 and r to compare ground truth with predicted behavioral measurements. The loss in R2 served to evaluate the neuro-markers’ contribution to decoding performance by their exclusion from the model. R2 was also applied in the validation set’s performance analysis to guide the selection of a specific number of top-ranked features. Additionally, r was also utilized to compare the similarities between feature importance matrices. The evolution of the training curves, delineated by the percentage change in L2 Loss (Mean Squared Error Loss) with increasing iterations, provided further insight into training dynamics.

2.8. Feature selection

Integrating an increased number of neuro-markers across spectral, temporal, and connectivity domains may enhance the decoding accuracy for defensive behaviors. However, this augmentation results in a proliferation of features, increasing computation time and memory requirements. Furthermore, some features may be uninformative or redundant within the ML framework, complicating the derivation of neuroscientific insights from models based on an extensive array of features. In our study, we extracted 17 types of neuro-markers, totaling 1296 features as input into the model, which inflated the computational costs unnecessarily. Consequently, we implemented a feature selection method to reduce computational demands, mitigate the risk of model overfitting, and identify which LFP features were most informative and, thereby, potentially causal to behavior.

We utilized SHapley Additive exPlanations (SHAP) for the assessment of feature importance among neuro-markers [128]. SHAP is a comprehensive measure of feature importance based on the Shapley values from a conditional expectation function of the original model. These values offer a unique feature importance metric that adheres to three desirable properties including local accuracy, missingness, and consistency when evaluating the additive attribution of one feature to the prediction output [128].

For each defensive behavior across every recording session, we assessed the SHAP values for every feature across the 5 LightGBM models, each trained using a distinct fold. Subsequently, for each feature, we computed the mean of its absolute SHAP values across all data instances and folds, establishing this as the cumulative contribution of the feature within that session. To identify the top-ranked features that are both subject- and behavior-specific and exhibit consistency across different days, these calculated attributions were further averaged over 4 recording sessions to determine the ultimate feature importance, as shown below:

where is the importance of feature , is the SHAP value for data instance , fold , session , and feature , and are the numbers of features, samples, and sessions, respectively.

Features were subsequently ranked based on their SHAP importance in the training set. Then in the order of their rankings, we sequentially added the features into the feature set, as the input to the model. All models with increasing amounts of ranked features were subsequently trained on the training set and evaluated on the validation set using R2. The peak validation performance is the highest average R2 across 5 folds among these models. We implemented a paired-sample t-test between the average R2 of these models and the peak performance, and identified the feature subset with a p-value no less than 0.05 in the significance test and with the minimum number of features as the selected feature set. The feature selection process therefore can be written as follows:

where is the feature in the training set of the fold with and . are the sorted features such that imp , and are the features in the validation set of the fold sorted as in the training set. and are the target variables in the training and validation set of the fold respectively. is the model with parameters θ. is the loss function, is the coefficient of determination function, and is the function of calculating p-value in paired-sample t-test. is the amount of selected features, is the set of features, and is the selected feature set.

, and are the features in the validation set of the fold sorted as in the training set. and are the target variables in the training and validation set of the fold respectively. is the model with parameters θ. is the loss function, is the coefficient of determination function, and is the function of calculating p-value in paired-sample t-test. is the amount of selected features, is the set of features, and is the selected feature set.

2.9. Statistical analysis

We conducted paired-sample t-tests to assess the differences in decoding performance between accelerometry jerk, bar press rate, and freezing score. We applied the same method to determine whether decoding performance using selected top-ranked features was significantly different from the peak performance identified during feature selection. Additionally, paired-sample t-tests compared the SHAP values of features without or with various temporal delays, by employing neural features not only from the current window, but also from the preceding windows lagged by up to 20 s, across all recording sessions and subjects. Because there is an inherent motor delay between the perception of threat and the emission of defensive behavior, decoding might perform better if that delay were taken into account using this lagged method.

Independent-sample t-tests were utilized to determine the statistical significance of overall SHAP feature importance within specific frequency bands relative to all other bands, across all recording sessions and subjects. This test was also applied to evaluate the significance of feature contributions to decoding performance within specific frequency bands in comparison with contributions from all other frequency bands. The Wilcoxon signed-rank test was employed to compare decoding performance when using band power features, selected top-ranked features, and the entire set of extracted features across all subjects. To account for multiple comparisons, Bonferroni corrections were applied to adjust p-values, tailored to the number of comparisons conducted. The implementation of paired-sample t-tests, independent-sample t-tests, and the Wilcoxon signed-rank tests were carried out using the SciPy package.

2.10. Comparison with existing work using LightGBM for neural decoding

LightGBM is a well-established method in various fields, but its application within the context of predicting defensive responses for closed-loop systems in neuropsychiatric modulation presents unique challenges and opportunities. This section compares our innovative approach to the LightGBM application with existing methods, emphasizing the advancements we have made in decoding avoidance behaviors.

Most existing applications of LightGBM in neural decoding have typically focused on limited datasets, often emphasizing either spectral or temporal features but seldom integrating extensive connectivity measures [39, 98, 129–132]. Such studies include more straightforward applications in general classification tasks within neuroscience but lack the depth of neural correlates integration. Our study uniquely integrates a broad array of neuro-markers from three different domains-spectral, temporal, and connectivity. This comprehensive integration allows for a deeper understanding and modeling of neural dynamics associated with defensive behaviors, enhancing the model’s ability to predict and interact with complex neural phenomena.

While other studies may use LightGBM, they often employ more traditional feature selection techniques, such as recursive feature elimination or principal component analysis, which do not provide the same level of interpretability or direct linkage to neural dynamics [114, 129, 130, 133, 134]. Conversely, our approach utilizes SHAP to perform feature selection, enhancing interpretability and focusing on the most impactful features for model prediction. This methodology is innovative in its application, providing clear insights into how specific neuro-markers influence model outputs, thereby advancing the field towards more interpretable and effective neural decoding strategies.

Comparative analyses in existing works often focus solely on the neural decoding accuracy of ML models including LightGBM [131, 133, 135]. Our research, however, not only demonstrates the superior performance of LightGBM over traditional and some ANN models but also provides a detailed comparative analysis showing this advantage across multiple metrics (accuracy, training time, inference time, and memory size). These metrics are all critical factors for real-time decoding in closed-loop neuromodulation, highlighting our model’s suitability for high-stakes, real-time applications in clinical settings.

In summary, while LightGBM is used across various domains for decoding, our application in the context of neuropsychiatric closed-loop systems leverages unique dataset characteristics, advanced feature selection methods, and rigorous comparative performance evaluation to significantly enhance the utility and efficacy of this tool in neuroscientific research.

3. Results

3.1. Comparison of decodability of defensive behaviors using proposed ML framework

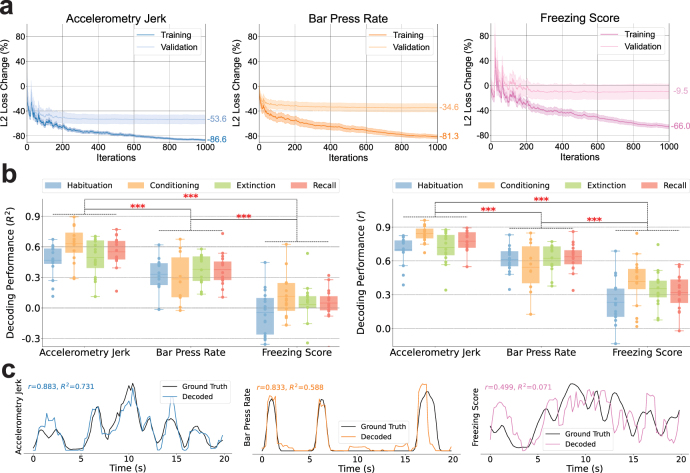

The comparison of training processes and decoding performances for accelerometry jerk, bar press rate, and freezing score is depicted in figure 3. Figure 3(a) depicts the training curves, showcasing the L2 loss changes, averaged across subjects and recording sessions. The models underwent training using the training set, with the percentage change in L2 loss from the initial untrained state evaluated on both the training and validation sets. For all three behaviors, the L2 loss for training sets exhibited a consistent decline with additional iterations. However, the validation set loss for the freezing score demonstrated minimal improvement (−9.5%), in contrast to accelerometry jerk (−53.6%) and bar press rate (−34.6%).

Figure 3.

Decoding performances for accelerometry jerk, bar press rate, and freezing score. (a) Comparisons of training curves of our ML model for decoding accelerometry jerk (Left), bar press rate (Middle), and freezing score (Right). The percentages of the change of L2 Loss on the training and validation sets are shown with the increasing number of iterations during the training process. The shading areas indicate standard errors across subjects. (b) Decoding performance for accelerometry jerk, bar press rate, and freezing score in four recording sessions averaged across subjects. The line in the boxplot shows the average performance across subjects, and each dot indicates the result of an individual subject. The performances were evaluated using the coefficient of determination (Left, ) and the Pearson correlation coefficient (Right, ). The asterisks denote the significant difference in the decoding performance of two defensive behaviors. (Paired-sample t-test; ) (c) Decoding examples for accelerometry jerk (Left), bar press rate (Middle), and freezing score (Right), from a single rat and session (OB44, Habituation), with performance evaluated using and .

There were large differences in the degree to which the different forms of defensive behavior could be decoded from the IL/BLA LFPs (i.e. in the degree to which these behaviors were encoded within the LFPs in that brain circuit). Specifically, the freezing score was only marginally decodable across sessions, with the mean coefficient of determination (R2) averaged across subjects never surpassing 0.12 in all recording sessions, as shown in figure 3(b). While the bar press rate showed a higher degree of decodability, performances were slightly diminished during the conditioning session, attributed to strong bar press suppression resulting from foot shocks. Accelerometry jerk emerged as the most reliably decodable behavior, with the mean R2 values across subjects consistently exceeding 0.46 in all recording sessions. Overall, decoding accuracy varied significantly among different defensive behaviors, following a descending order from accelerometry jerk to bar press rate to freezing score. These findings remained consistent when evaluated using both R2 and the Pearson correlation coefficient (r) for performance assessment.

The variation in decoding performance may arise in part from the distinct characteristics of each behavioral signal, as illustrated in figure 3(c). Accelerometry jerk is characterized by a smoothly fluctuating signal that remains predominantly non-zero. In contrast, bar press rate often drops to zero but then has sharp deviations from baseline during bouts of pressing. Freezing score exhibits some local deviations even after smoothing. Regarding the freezing score in figure 3(c), the model succeeds in tracking the global trend, resulting in a relatively high r. Nevertheless, it struggles to capture local variations, leading to an R2 of 0.071 for the freezing score. This indicates that the decoded behavior scarcely captures variance from the actual behavior, offering only marginal predictive improvement over the expected value of the true behavior. In light of these findings, subsequent analyses concentrated on accelerometry jerk and bar press rate, given that interpretations derived from the non-predictive models of freezing score could potentially be misleading.

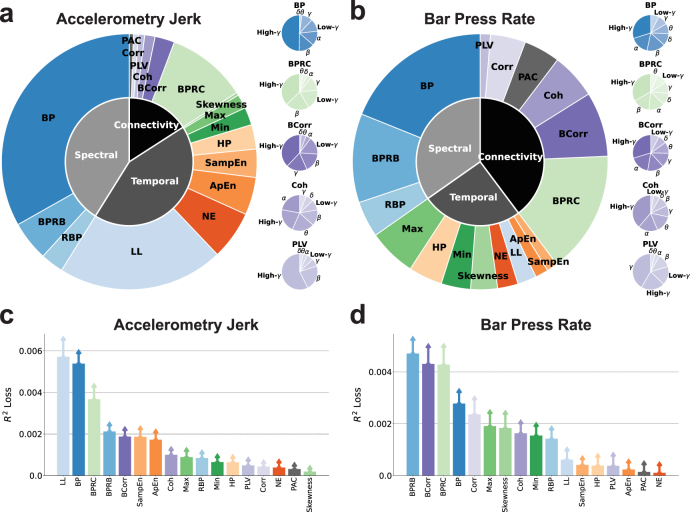

3.2. Importance and contribution of neuro-markers to the decoding performance

Subsequently, our focus shifted towards understanding the importance of each neuro-marker type in decoding defensive behaviors. Figures 4(a) and (b) delineate the importance of three feature domains, diverse neuro-markers, and frequency bands in decoding defensive behaviors. A notable observation is that spectral, temporal, and connectivity features all play a crucial role in decoding defensive behaviors. Specifically, temporal (43.0%) and spectral (41.1%) features outweigh connectivity features (15.9%) for the prediction of accelerometry jerk. In contrast, for bar press rate prediction, connectivity (39.8%) emerges as the predominant domain, surpassing spectral (34.7%) and temporal (25.5%) features. Among the individual types of neuro-markers for accelerometry jerk decoding, band power (BP) (33.2%), line length (LL) (21.0%), and band power ratio between channels (BPRC) (10.2%) stand out as the most influential features within the spectral, temporal, and connectivity domains, respectively. This holds true despite the availability of a larger number of connectivity features compared to spectral or temporal features, owing to connectivity’s reliance on the squared number of channels. For bar press rate, BP (18.9%) and BPRC (15.5%) consistently rank as critical, with other spectral and connectivity features like band power ratio between bands (BPRB) (11.2%) and band Pearson correlation (BCorr) (8.2%) also contributing substantially to predictions, unlike other temporal features. Notably, for the leading contributors (BP and BPRC) as well as other neuro-markers that span seven frequency bands, including BCorr, coherence (Coh), and phase locking value (PLV), their high-gamma components are identified as crucial for decoding defensive behaviors, except Coh for accelerometry jerk and PLV for bar press rate, which prominently feature alpha and gamma components, respectively.

Figure 4.

The importance of neuro-markers during decoding process. (a) (Left) The importance of various types of neuro-markers for decoding the accelerometry jerk is illustrated in the outer chart. The importance of markers in the spectral, temporal, and connectivity domains is shown in the inner chart. (Right) The importance of band power, band power ratio between channels, band Pearson correlation, coherence, and the phase locking value in different frequency bands is shown in the small charts. (b) Same as in (a), but for the bar press rate. (c) Contribution of various types of neuro-markers to the decoding performance for accelerometry jerk, averaged across subjects and recording sessions and evaluated using the loss after removing each type of markers from the ML input. Error bars indicate the standard errors across subjects and sessions. (d) Same as in (c), but for bar press rate.

Beyond quantifying feature importance by evaluating their attribution to the prediction, we also explored their impact on decoding performance, as illustrated in figures 4(c) and (d). For accelerometry jerk, BP, LL, and BPRC were identified as principal contributors, aligning with their established predictive importance in figure 4(a). The order of neuro-marker contributions to accelerometry jerk decoding performance as shown in figure 4(c) mirrors their predictive significance as depicted in figure 4(a). In the case of bar press rate, BCorr, BPRC, and BPRB maintain a substantial impact on decoding performance, consistent with figure 4(b). However, BP’s contribution appears noticeably diminished relative to the aforementioned features, underscoring its reduced spectral significance in comparison with BPRB for bar press rate decoding.

This analytical approach to feature importance in both prediction and decoding performance elucidates the substantial importance of neuro-markers across all three domains. BP and BPRC emerge as common key contributors for decoding both defensive behaviors, with LL for accelerometry jerk and BCorr and BPRB for bar press rate also deemed important in terms of prediction and performance.

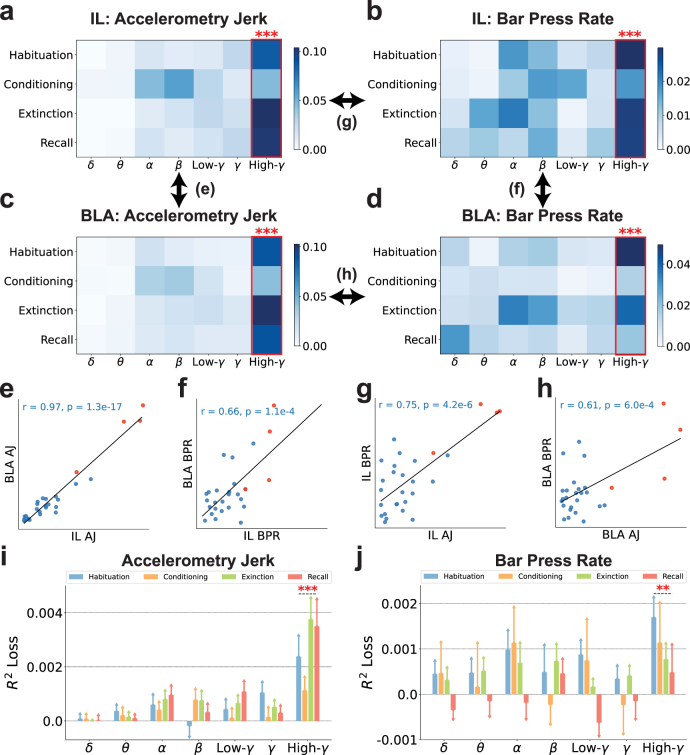

3.3. Importance and contribution of band powers in different frequency bands to the decoding performance

In figure 4, band power emerged as one of the most influential features. We delved deeper into its importance in terms of prediction and decoding performance across seven frequency bands, including delta, theta, alpha, beta, low-gamma, gamma, and high-gamma, extracted from both IL and BLA, for the decoding of accelerometry jerk and bar press rate, as detailed in figure 5. The importance matrices in figures 5(a)–(d) highlight the importance of band power in these frequency bands across recording sessions, brain regions, and targeted behaviors. Collectively, these matrices consistently reveal that high-gamma power in the IL and BLA is more important for predicting behavior than all other frequency bands, and that this is true across different phases of aversive learning and extinction. Figures 5(e)–(h) compare the pairwise similarities among the elements of the importance matrices from figures 5(a)–(d), examining either the significance of spectral power within identical bands and sessions across different brain regions or in decoding diverse defensive behaviors. These importance matrices exhibit substantial correlation with each other (r > 0.61, ), with the high-gamma components invariably displaying elevated importance values. This pattern suggests that high-gamma power maintains a consistent association with defensive behavior across various contexts.

Figure 5.

The high-gamma band powers are generally more predictive than other bands for decoding defensive behaviors. (a)–(d) The importance of band powers (BP) in seven frequency bands and four recording sessions is illustrated in the importance matrices, where each element is the SHAP values averaged across subjects. Bands with significantly higher importance than the other bands are marked with red asterisks. (Independent-sample t-test; ). (a) BP in IL for decoding accelerometry jerk. (b) BP in IL for decoding bar press rate. (c) BP in BLA for decoding accelerometry jerk. (d) BP in BLA for decoding bar press rate. (e)–(h) The similarities between the importance matrices were evaluated using the Pearson correlation coefficient () and p-value (). Each dot indicates its importance in the same band and the same session between different brain regions or defensive behaviors. Red points denote the importance of high-gamma powers. (e) BP in IL and BLA for decoding accelerometry jerk (AJ). (f) BP in IL and BLA for decoding bar press rate (BPR). (g) BP in IL for decoding AJ and BPR. (h) BP in BLA for decoding AJ and BPR. (i)–(j) The contribution of BP in seven frequency bands to the decoding performance, averaged across subjects, was evaluated using the loss after removing each band from the ML inputs. The error bars indicate the standard errors across subjects. Bands with significantly higher contributions than the other bands are marked with asterisks. (Independent-sample t-test; , ). (i) The contribution of BP to the decoding performance for accelerometry jerk. (j) The contribution of BP to the decoding performance for bar press rate.

Expanding our analysis to consider the band powers from another angle, we explored their impact on decoding performance across seven frequency bands, as depicted in figures 5(i) and (j). Notably, the exclusion of high-gamma power leads to a significantly stronger decline in model performance across subjects and sessions compared with all other bands, aligning with observations from figures 5(a)–(h). Therefore, the comprehensive findings of figure 5 underscore the pivotal role of high-gamma power as the spectral band most closely linked to defensive behavior, both in terms of attribution to prediction and decoding performance.

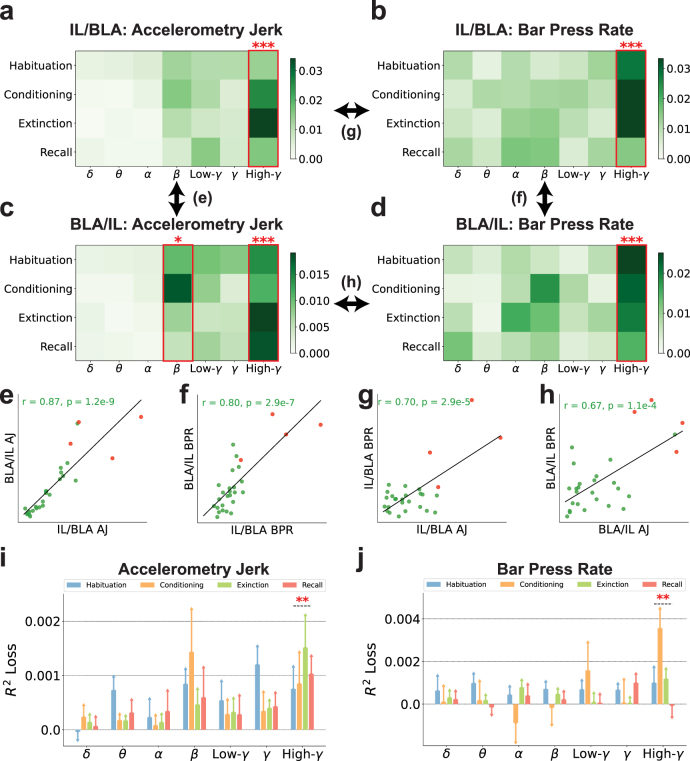

3.4. Importance and contribution of cross-region neuro-markers in different frequency bands to the decoding performance

In figure 4, the band power ratio between IL and BLA emerged as a pivotal feature, especially in the context of bar press rate decoding. We dissected the relative contribution of different frequency bands as depicted in figure 6. Here again, high gamma features were identified as the most influential encoders of defensive behaviors. Additionally, beta band ratios from BLA to IL exhibited marginal significance for accelerometry jerk, as illustrated in figure 6(c). Figures 6(e)–(h) explore the pairwise similarities among the elements of the importance matrices from figures 6(a)–(d), assessing either the importance of spectral power ratios within identical bands and sessions across two reciprocal ratios (IL/BLA and BLA/IL) or in decoding various defensive behaviors. These comparisons revealed significant similarities (r > 0.67, ). High-gamma power ratios were distinctly more important than other bands in various analyses presented in figures 6(e)–(h). This comprehensive analysis indicates a clear concordance in the significance of high-gamma power ratios between IL and BLA, aligning with the patterns of importance outlined in figures 6(a)–(d).

Figure 6.

The high-gamma band power ratios between IL and BLA are generally more predictive than other bands for decoding defensive behaviors. (a)–(d) The importance of band power ratios between IL and BLA (BPRC) in seven frequency bands and four recording sessions is illustrated in the importance matrices, where each element is the SHAP values averaged across subjects. Bands with significantly higher importance than the other bands are marked with red asterisks. (Independent-sample t-test; , ). (a) BPRC of IL to BLA (IL/BLA) for decoding accelerometry jerk. (b) IL/BLA for decoding bar press rate. (c) BPRC of BLA to IL (BLA/IL) for decoding accelerometry jerk. (d) BLA/IL for decoding bar press rate. (e)–(h) The similarities between the importance matrices were evaluated using the Pearson correlation coefficient () and p-value (). Each dot indicates its importance in the same band and the same session between different brain regions or defensive behaviors. Red points denote the importance of high-gamma power ratios. (e) BPRC of IL to BLA (IL/BLA) and BPRC of BLA to IL (BLA/IL) for decoding accelerometry jerk (AJ). (f) IL/BLA and BLA/IL for decoding bar press rate (BPR). (g) IL/BLA for decoding AJ and BPR. (h) BLA/IL for decoding AJ and BPR. (i)–(j) The contribution of BPRC in seven frequency bands to the decoding performance, averaged across subjects, was evaluated using the loss after removing each band from the ML inputs. The error bars indicate the standard errors across subjects. Bands with significantly higher contribution than the other bands are marked with asterisks. (Independent-sample t-test; ). (i) The contribution of BPRC to the decoding performance for accelerometry jerk. (j) The contribution of BPRC to the decoding performance for bar press rate.

To gain further insights into the band power ratios, we examined their impact on decoding performance, as illustrated in figures 6(i) and (j). High-gamma power ratios consistently led to the most substantial decrease in performance across subjects and sessions when excluded from the ML model. Thus, high-gamma power ratios are critical to decoding performance for both accelerometry jerk and bar press rate, surpassing the impact of all other frequency bands.

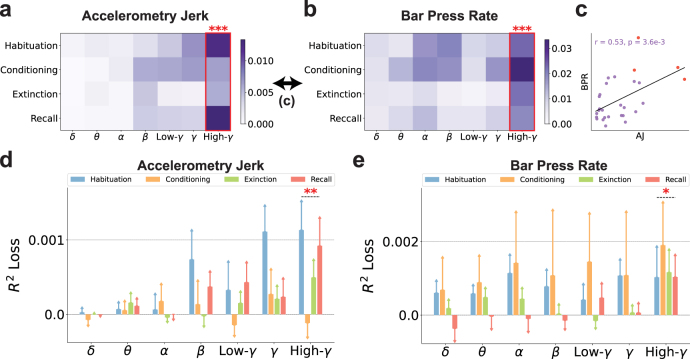

The band power ratios reveal variations in the activation levels between IL and BLA, offering insights into their differential engagement during defensive behaviors. These ratios allow researchers to deduce the degree of synchronization and the dynamic interactions between IL and BLA. However, it is important to note that band power ratios alone do not directly quantify the functional connectivity of these regions. Consequently, we further explored the Pearson correlations between neural signals of IL and BLA, evaluating their significance for prediction and impact on decoding performance across various frequency bands, as depicted in figure 7. In this analysis, correlations within the high-gamma frequency band emerged as the most informative features, outperforming those of other frequency bands in decoding both accelerometry jerk and bar press rate, as shown in figures 7(a) and (b). Figure 7(c) demonstrates the similarity between the elements of the importance matrices from figures 7(a) and (b), revealing a significant correlation (r = 0.53, ). We further explored the impact of band Pearson correlations on decoding performance, as depicted in figures 7(d) and (e). High-gamma correlations consistently led to the most significant decline in performance when excluded from the model.

Figure 7.

The high-gamma band Pearson correlations between IL and BLA are generally more predictive than other bands for decoding defensive behaviors. (a) and (b) The importance of band Pearson correlations between IL and BLA (BCorr) in seven frequency bands and four recording sessions is illustrated in the importance matrices, where each element is the SHAP values averaged across subjects. Bands with significantly higher importance than the other bands are marked with red asterisks. (Independent-sample t-test; ). (a) BCorr for decoding accelerometry jerk. (b) BCorr for decoding bar press rate. (c) The similarity between the importance matrices for decoding accelerometry jerk and bar press rate was evaluated using the Pearson correlation coefficient () and p-value (). Each dot indicates its importance in the same band and the same session between different defensive behaviors. Red points denote the importance of high-gamma correlations. (d) and (e) The contribution of BCorr in seven frequency bands to the decoding performance, averaged across subjects, was evaluated using the loss after removing each band in the ML inputs. The error bars indicate the standard errors across subjects. Bands with significantly higher contributions than the other bands are marked with asterisks. (Independent-sample t-test; , ). (d) The contribution of BCorr to the decoding performance for accelerometry jerk. (e) The contribution of BCorr to the decoding performance for bar press rate.

Collectively, band power ratios and band Pearson correlations elucidate the neural representations between IL and BLA through distinct lenses. Therefore, the findings presented in figures 5–7 together show that, across spectral and connectivity domains, oscillations in the high-gamma range within and between IL and BLA are the most reliable encoder of defensive behaviors. Thus, within the scope of our study, these features appear to be the most reliable among the spectral, temporal, and connectivity neuro-markers used for decoding avoidance behaviors in a closed-loop paradigm.

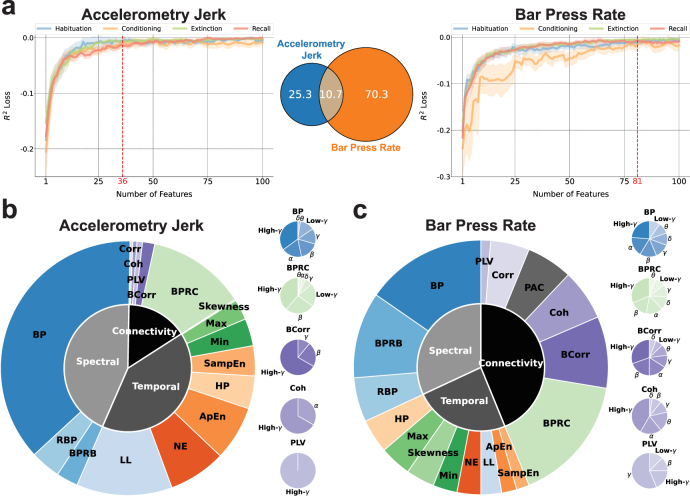

3.5. Feature selection chooses important neuro-markers and maintains decoding performance

In this study, we introduced 17 types of neuro-markers as features, yielding a total of 1296 features for inclusion in our ML framework. Incorporating all these features would lead to increased computational and memory demands. Feature selection is a widely recognized strategy for mitigating the computational burden of cognitive decoders [69, 136]. Figure 8 explores the impact of feature dimensionality on decoding performance and the proportion of various types of neuro-markers among the selected features. Figure 8(a) presents the feature selection process based on feature importance as quantified by SHAP values. Here, the decoding accuracy on the validation sets, averaged across subjects, is depicted in relation to the quantity of top-ranked features. Notably, performance improves with an increasing number of selected features, reaching a plateau at approximately 100 features. By selecting only 36 and 81 top-ranked features, we observed that decoding accuracy on validation sets across all recording sessions was comparable to, and not significantly inferior to, the peak performance identified through an exhaustive exploration of all possible counts of top-ranked features (Paired-sample t-test; accelerometry jerk: , bar press rate: . See section 2.8). These findings underscore the feasibility of dramatically reducing feature dimensionality by 97.2% (36 out of 1296) and 93.8% (81 out of 1296) without significantly compromising decoding efficacy. Within the subset of 36 and 81 top-ranked features selected for the decoding of accelerometry jerk and bar press rate, respectively, an average of 10.7 features are concordant and can predict both defensive behaviors across subjects.

Figure 8.

Neuro-markers with higher feature importance and contribution to the decoding performance were chosen through feature selection. (a) Feature selection using feature importance evaluated by SHAP values for decoding the accelerometry jerk (Left) and bar press rate (Right). Features were iteratively selected according to the decreasing order of SHAP feature importance. Shading areas indicate the standard errors across subjects. 36 and 81 top-ranked features were selected from all 1296 features respectively. The performance saturates after 100 features. Venn diagram represents the overlap of top-ranked features for the accelerometry jerk and bar press rate (Middle). (b) (Left) The proportion of different types of neuro-markers among the selected top-ranked features for decoding the accelerometry jerk is illustrated in the outer chart. The proportion of selected features in the spectral, temporal, and connectivity domains is shown in the inner chart. (Right) The proportion of band power, band power ratio between channels, band Pearson correlation, coherence, and the phase locking value in different frequency bands are shown in the small charts. (c) Same as in (b), but for decoding the bar press rate.

Figure 8(b) and (c) delineate the distribution of different types of neuro-markers within the selected features, aligning with the previously established importance of these markers regarding prediction and performance as depicted in figure 4. In the case of accelerometry jerk, spectral (43.8%) and temporal (40.6%) features were more frequently selected over connectivity features (15.6%). BP (37.2%), LL (12.2%), and BPRC (12.5%) emerged as the predominant feature groups within the spectral, temporal, and connectivity domains, respectively, as shown in figure 8(b). Conversely, for bar press rate, as illustrated in figure 8(c), the model exhibited a preference for selecting connectivity features (43.8%) over spectral (31.9%) and temporal (24.2%), with BPRC (16.2%), BP (15.4%), BPRB (11.0%), and BCorr (9.1%) identified as leading predictors. Across all neuro-markers that span 7 frequency bands, including BP, BPRC, BCorr, Coh, and PLV, high-gamma components were most frequently chosen for decoding both defensive behaviors, with the exception of PLV for bar press rate, where the gamma component was more prominently featured.

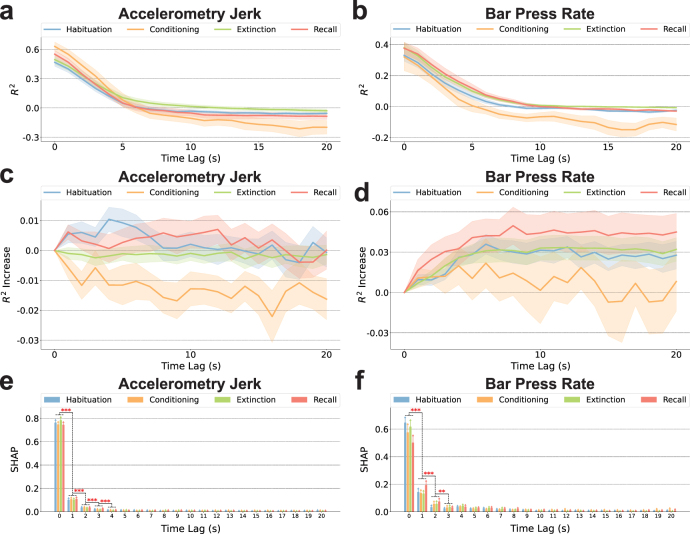

Since there can be a lag between decisions and manifested behavior, we also evaluated the decoding results using lagged neural data, as presented in figure A1. Figures A1(a) and (b) illustrate that neural features temporally close to the current time point yield superior decoding accuracy for both behaviors, suggesting these features encapsulate a richer neural representation regarding defensive responses than those from earlier time windows. As shown in figure A1(c), the inclusion of features from preceding time windows together with current features does not markedly enhance the decoding performance for accelerometry jerk. In contrast, figure A1(d) indicates a modest improvement in the decoding of bar press rate when previous time window features are incorporated. Furthermore, figures A1(e) and (f) indicate that the predictive power of features for both behaviors is predominantly concentrated in recent time windows, with a significant decline in predictivity as the temporal gap widens. The findings indicate that neural representations closest to the event of interest are most informative for decoding both defensive behaviors, with immediate past features contributing more significantly to model accuracy than older ones. We thus have emphasized the importance, in preceding and subsequent analyses, of features aligned to behavior with zero lag. The demonstrated temporal gradient in feature predictivity could inform the development of a more refined real-time decoder for neuropsychiatric interventions.

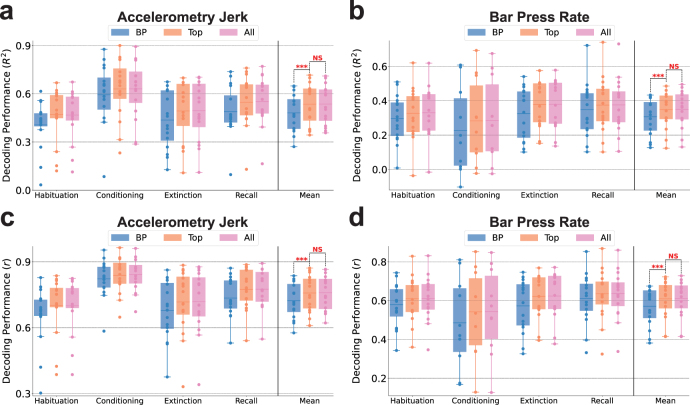

In figure 9, we explore the dependency of decoding performance on the diverse types of neuro-markers employed and the dimensionality of the feature set. This comparison is made between decoding outcomes utilizing only conventional band powers, decoding with a selected group of features as identified in figure 8, and decoding with the entire set of extracted features. Figures 9(a)–(d) present the decoding performance for accelerometry jerk and bar press rate using band power features, selected top-ranked features (LightGBM-Top), and all features, assessed by R2 and r metrics, respectively. The addition of other neuro-markers beyond only band power, coupled with feature selection, significantly enhances performance across sessions (for accelerometry jerk, R2 from 0.4815 to 0.5357, r from 0.7229 to 0.7579; for bar press rate, R2 from 0.3073 to 0.3476, r from 0.5708 to 0.6092). Moreover, employing the limited feature set as delineated in figure 8 does not lead to a significant reduction in performance when compared to the utilization of all features. This observation holds true for the decoding of both defensive behaviors evaluated by both R2 and r metrics. Notably, the adoption of feature selection exceptionally reduces the model’s training time (182.3 ms for accelerometry jerk, 309.6 ms for bar press rate), inference time (0.05 079 ms for accelerometry jerk, 0.05 072 ms for bar press rate), and memory usage (16.6 kB) across all subjects and sessions (table A3).

Figure 9.