Abstract

There is massive hype around artificial intelligence (AI) allegedly revolutionizing medicine. However, algorithms have been at the core of medicine for centuries and have been implemented in technologies such as computed tomography and magnetic resonance imaging machines for decades. They have provided decision support in electrocardiogram machines without attracting much attention. So, what is new with AI? To withstand the hype, we should learn from the little child in H.C. Andersen's fairytale “The emperor's new clothes”, who exposes the collective fiction that the emperor has new clothes. While AI certainly accelerates algorithmic medicine, we must learn from history and avoid implementing AI because it allegedly is new – we must implement it because we can demonstrate that it is useful.

Keywords: Artificial intelligence, algorithms, bias, hallucination, hype

The emperor's new clothes

Artificial intelligence and machine learning (AI/ML) is expected to transform medicine, generating unprecedented hope and hype 1 in what has been coined an ‘algorithmic revolution’.2,3 However, what is new with AI? Medicine has been an algorithmic endeavour for millennia.

Medical reasoning and decision-making have followed specific (diagnostic, therapeutic, and prognostic) algorithms since antiquity,4,5 and medical schools convey algorithmic knowledge to students.6,7 Data-driven risk predictive models have been used for decades. Moreover, the vast increase in checklists and guidelines after the emergence of evidence-based medicine has escalated algorithmic medicine substantially.8,9 As such, AI/ML just accentuates constitutive characteristics and accelerates an already ongoing tendency. 10

In our euphoric enthusiasm we seem to need somebody like the small child in H.C. Andersen's fairytale about ‘The emperor's new clothes’ 11 to reveal that the Emperor is lured to believe that he has new clothes, but in fact is naked (Figure 1).

Figure 1.

Illustration by Vilhelm Pedersen from the first illustrated version of H.C. Andersen's book The Emperor's New Clothes (1837). The image is freely available at https://en.wikipedia.org/wiki/The_Emperor%27s_New_Clothes#/media/File:Emperor_Clothes_01.jpg.

Accordingly, we need to expose the hype to address the real challenges of AI/ML. Algorithmic medicine is not new. Neither are the problems with AI/ML, such as hallucinations, biases, lack of transparency (the black box problem), and responsibility evasion.12–17

Algorithmic medicine

The most pervasive use of algorithms in medicine is found in visualizing techniques in radiology and nuclear medicine. Based on targeted ionizing radiation, magnetic fields, ultrasound beams, and radioactive tracers, images are reconstructed by advanced algorithms. The representation of intracorporal structures strongly depends on algorithms that are developed based on studies of many individuals and shaped to obtain and enhance specific clinically relevant characteristics. Thus, algorithms are by no means new.

New hallucination?

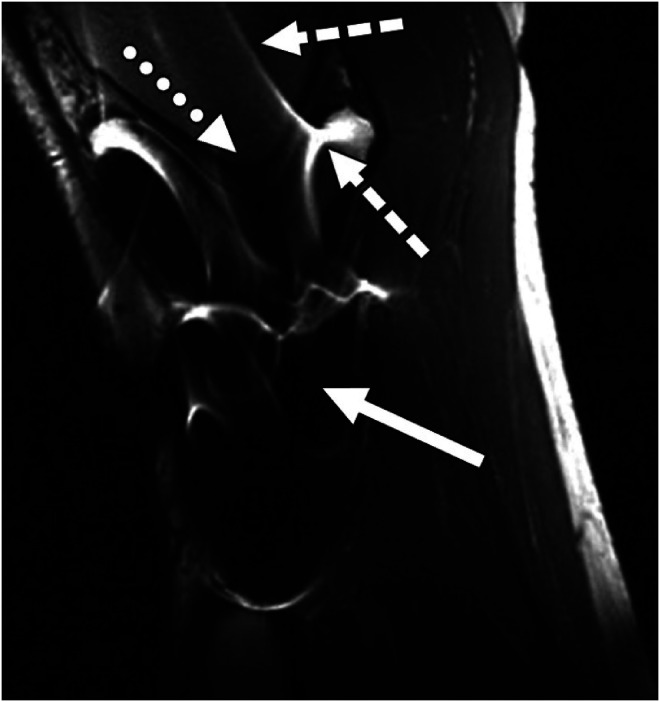

Correspondingly, the algorithms in traditional medicine can generate ‘seemingly realistic sensory experiences that do not correspond to any real-world input. This can include visual, auditory, or other types of hallucinations’. 18 During the 1920s, medical doctors were able to identify F-cells in the testicles of homosexual men 19 and, more recently, metal screws have produced artefacts in magnetic resonance imaging (MRI) images 20 (see Figure 2).

Figure 2.

Image artefacts due to the presence of stainless-steel screws in a healthy 37-year-old man. The image is a reprint from Hargreaves et al. 20 with permission both from the first author and the publisher https://www.ajronline.org/doi/10.2214/AJR.11.7364.

Clearly, the distortions due to algorithmic image reconstruction have not been classified as hallucinations, but the principle is the same: they provide ‘fictional, erroneous, or unsubstantiated information’. 21 The history of medicine is full of entities, processes, conditions, and even diseases that in hindsight appear unsubstantiated and unwarranted. Still, researchers defined them, and clinicians diagnosed and treated them. Overdiagnosis is but one example, where benign conditions are identified and treated as malignant. 22

While we may be reluctant to think of these ‘perceptual errors’ as hallucinations, the principle is very much the same. The point is that new words for old phenomena do not make the phenomena new.

Old black boxes

Correspondingly, the so-called black-box problem is not new either. The algorithms for computed tomography, MRI, ultrasound, and positron emission tomography/single photon emission computed tomography machines are understood neither by the clinicians using them nor by the patients benefiting from them. With increasing experience, radiologists have come to trust, appreciate, and apply the algorithmic information, even though the algorithms occasionally produce false negative and false positive test results.

Moreover, practical breakthroughs have come before causal explanations in medicine for millennia. 23 The understanding of digitalis came centuries after the acknowledgement of its effects. Uncertainty and unexplainability are not new even when put in black boxes.

Bias

Likewise, bias has been a problem for medicine in general. Clinicians have been subject to a wide range of biases in diagnostics, prognostics, and treatment,24–26 and publication bias has distorted medical evidence production. 27 Moreover, medical knowledge has been biased in terms of gender, race, age, and other characteristics. 28 No doubt, AI/ML threatens to enhance this problem, but the problem is by no means new.

Decision support

Similarly, a wide range of medical decision support systems were developed, assessed, and implemented long before AI/ML.29–32 Many are based on information from large numbers of patients included in previous studies. Moreover, the responsibility for the clinical decisions has been situated with the medical doctors using decision support.33,34

Although so-called ‘fully autonomous AI systems’ have been approved by the Food and Drug Administration and European Medicines Agency, a wide range of liability and regulatory issues are well-known. 35 AI/ML-based decision support systems may well accelerate such issues, increasing vendor liability, but the problems with machine-based decision-making are hardly new.

Alignment and containment

Two other overarching problems have been identified: that AI/ML will promote goals that are not aligned with human goals and values, and that it may become difficult to control AI/ML. While warranted, these challenges are neither new nor unique. Technology has directed medical goals and values, not least through defining medicine's endpoints. Surrogate endpoints do not always align with hard endpoints.36–38 Overdiagnosis and overtreatment are but two examples. Moreover, technology has been difficult to control – in medicine as elsewhere. Hence, AI/ML only poses and potentially enhances general problems with technology.

What is new? The emperor's new clothes

In sum, algorithms have played a crucial role in medicine since its inception. In the Aphorisms ascribed to Hippocrates of Cos, we learn that ‘[w]hen more food than is proper has been taken, it occasions disease’ and that ‘[a]cute disease come to a crisis in fourteen days’. Clearly, the algorithms of modern medicine have become ever more complex and complicated, not least through precision medicine. However, as argued, the problems with algorithms, such as hallucination, bias, lack of transparency, and lack of accountability, are not new.39,40 Wrapping uncertainty and unexplainability in black boxes does not render them novel or different.

Clearly, the validation processes are much more challenging, 21 for example, when algorithms based on enormous amounts of data suggest decisions for (preventing) very rare events, or where the outcomes can only be properly measured in the far future. However, the principles are very much the same as before. Testing hypotheses and validating evidence have always been cumbersome and prone to error.

Certainly, AI/ML is able to analyse vast amounts of data, much faster and in different ways than before. As such, it accentuates and accelerates algorithmic medicine. However, AI/ML-generated algorithms are neither intelligent nor artificial. They are machine-made products that can become very helpful if we harness and govern their development.

Evidently, AI/ML will change the way we do medicine. Professions will change, and some professionals will become superfluous or find new tasks. However, this is typical for medicine, which has been changed by technology since the invention of the stethoscope by René Laënnec in 1816.

Learning from the little child in Andersen's fairytale and from the history of medicine, we must acknowledge that the introduction of AI/ML does not appear to be radically new. Accordingly, we must reveal the hype 1 and acknowledge that AI/ML enhances the potentials and perils of medical knowledge production.

We must recognize that new words do not always refer to new things. Instead of being fascinated and misled by a powerful tool, we must harness it so that we can help individual patients in a better and more sustainable way. Even more, we must avoid implementing AI/ML because it is new – we must implement it because of its demonstrated usefulness. Therefore, we must assess AI/ML not on the basis of how new it is, how advanced the algorithms are, and how much data it is trained on, but on how much good it can do for individuals.

Acknowledgement

Not applicable.

Footnotes

Consent statement: Not applicable.

Contributorship: I am the only contributor to this manuscript.

The author declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Ethical approval: Not applicable.

Funding: The author received no financial support for the research, authorship, and/or publication of this article.

Guarantor: I am the guarantor of the entire work.

ORCID iD: Bjørn Hofmann https://orcid.org/0000-0001-6709-4265

References

- 1. Matheny M, Israni ST, Ahmed M, et al. Artificial intelligence in health care: The hope, the hype, the promise, the peril. Washington, DC: National Academy of Medicine, 2019, p. 10.

- 2. Lee JC. The perils of artificial intelligence in healthcare: Disease diagnosis and treatment. J Comput Biol Bioinform Res 2019; 9: 1–6.

- 3. Hirsch-Kreinsen H. Artificial intelligence: A “promising technology”. AI Soc 2023; 39: 1–12.

- 4. Cohen MD. Accentuate the positive: We are better than guidelines. Arthritis Rheumat 1997; 40: 2–4.

- 5. Watts MS. Contemporary aims for American medicine—1987: A report on a forum. Western J Med 1987; 146: 754.

- 6. Hopkins WE. Medical students, intellectual curiosity, and algorithmic medicine: reflections of a teacher. Coron Artery Dis 2009; 20: 477–478.

- 7. Sox HC, Jr, Sox CH, Tompkins RK. The training of physician's assistants: the use of a clinical algorithm system for patient care, audit of performance and education. N Engl J Med 1973; 288: 818–824.

- 8. Martini C. What “evidence” in evidence-based medicine? Topoi (Dordr) 2021; 40: 299–305.

- 9. Straus SE, McAlister FA. Evidence-based medicine: a commentary on common criticisms. Cmaj 2000; 163: 837–841.

- 10. Reddy S. Algorithmic medicine. Artificial intelligence. New York: Productivity Press, 2020, pp.1–14.

- 11. Andersen HC. The emperor's new clothes. Genesis Publishing Pvt Ltd, 1987.

- 12. Karimian G, Petelos E, Evers SM. The ethical issues of the application of artificial intelligence in healthcare: A systematic scoping review. AI Ethics 2022; 2: 539–551.

- 13. Benzinger L, Ursin F, Balke W-T, et al. Should artificial intelligence be used to support clinical ethical decision-making? A systematic review of reasons. BMC Med Ethics 2023; 24: 48.

- 14. Tang L, Li J, Fantus S. Medical artificial intelligence ethics: A systematic review of empirical studies. Digital Health 2023; 9: 20552076231186064.

- 15. Möllmann NR, Mirbabaie M, Stieglitz S. Is it alright to use artificial intelligence in digital health? A systematic literature review on ethical considerations. Health Inf J 2021; 27: 14604582211052391.

- 16. Murphy K, Di Ruggiero E, Upshur R, et al. Artificial intelligence for good health: A scoping review of the ethics literature. BMC Med Ethics 2021; 22: 1–17.

- 17. Khan AA, Badshah S, Liang P, et al. Ethics of AI: A systematic literature review of principles and challenges. In: Proceedings of the 26th international conference on evaluation and assessment in software engineering, Gothenburg, Sweden, 13–15 June 2022, pp.383–392. New York: Association for Computing Machinery.

- 18. Alkaissi H, McFarlane SI. Artificial hallucinations in ChatGPT: Implications in scientific writing. Cureus 2023; 15: e35179.

- 19. De Cecco JP, Parker DA. Historical and conceptual background. In: Parker DA (ed.) Sex, cells, and same-sex desire. New York: Routledge, 2014, pp.28–86.

- 20. Hargreaves BA, Worters PW, Pauly KB, et al. Metal-induced artifacts in MRI. Am J Roentgenol 2011; 197: 547–555.

- 21. Kumar M, Mani UA, Tripathi P, et al. Artificial hallucinations by Google bard: Think before you leap. Cureus 2023; 15.

- 22. Welch HG, Schwartz L, Woloshin S. Overdiagnosed: Making people sick in the pursuit of health. Boston, MA: Beacon Press, 2011, p. xvii, 228 p.

- 23. London AJ. Artificial intelligence and black-box medical decisions: Accuracy versus explainability. Hastings Cent Rep 2019; 49: 15–21.

- 24. Benishek LE, Weaver SJ, Newman-Toker DE. The cognitive psychology of diagnostic errors. Sci Am Neurol 2015: 1–21.

- 25. Berner ES, Graber ML. Overconfidence as a cause of diagnostic error in medicine. Am J Med 2008; 121: S2–S23.

- 26. Every-Palmer S, Howick J. How evidence-based medicine is failing due to biased trials and selective publication. J Eval Clin Pract 2014; 20: 908–914.

- 27. Brown AW, Mehta TS, Allison DB. Publication bias in science: What is it, why is it problematic, and how can it be addressed? In: Jamieson KH, Kahan DM and Scheufele DA (eds) The Oxford handbook of the science of science communication. Oxford Library of Psychology, 2017, pp.93–101.

- 28. Parkhurst JO, Abeysinghe S. What constitutes “good” evidence for public health and social policy-making? From hierarchies to appropriateness. Soc Epistemol 2016; 30: 665–679.

- 29. Kruse CS, Ehrbar N. Effects of computerized decision support systems on practitioner performance and patient outcomes: Systematic review. JMIR Med Inf 2020; 8: e17283.

- 30. Moja L, Kwag KH, Lytras T, et al. Effectiveness of computerized decision support systems linked to electronic health records: A systematic review and meta-analysis. Am J Public Health 2014; 104: e12–e22.

- 31. Bright TJ, Wong A, Dhurjati R, et al. Effect of clinical decision-support systems: A systematic review. Ann Intern Med 2012; 157: 29–43.

- 32. Sutton RT, Pincock D, Baumgart DC, et al. An overview of clinical decision support systems: Benefits, risks, and strategies for success. npj Digit Med 2020; 3: 17.

- 33. Fox J, Thomson R. Clinical decision support systems: a discussion of quality, safety and legal liability issues. In: Proceedings of the AMIA symposium, 2002, p. 265. American Medical Informatics Association.

- 34. Greenberg M, Ridgely MS. Clinical decision support and malpractice risk. JAMA 2011; 306: 90–91.

- 35. Saenz AD, Harned Z, Banerjee O, et al. Autonomous AI systems in the face of liability, regulations and costs. npj Digital Medicine 2023; 6: 185.

- 36. Kemp R, Prasad V. Surrogate endpoints in oncology: when are they acceptable for regulatory and clinical decisions, and are they currently overused? BMC Med 2017; 15: 134.

- 37. Prasad V, Kim C, Burotto M, et al. The strength of association between surrogate end points and survival in oncology: A systematic review of trial-level meta-analyses. JAMA Intern Med 2015; 175: 1389–1398.

- 38. Fleming TR, DeMets DL. Surrogate end points in clinical trials: Are we being misled? Ann Intern Med 1996; 125: 605–613.

- 39. Floridi L. The ethics of artificial intelligence: Principles, challenges, and opportunities. 2023.

- 40. Haug CJ, Drazen JM. Artificial intelligence and machine learning in clinical medicine, 2023. N Engl J Med 2023; 388: 1201–1208.