Abstract

Neural circuits in the brain perform a variety of essential functions, including input classification, pattern completion, and the generation of rhythms and oscillations that support processes such as breathing and locomotion [51]. There is also substantial evidence that the brain encodes memories and processes information via sequences of neural activity. In this dissertation, we are focused on the general problem of how neural circuits encode rhythmic activity, as in central pattern generators (CPGs), as well as the encoding of sequences. Traditionally, rhythmic activity and CPGs have been modeled using coupled oscillators. Here we take a different approach, and present models for several different neural functions using threshold-linear networks. Our approach aims to unify attractor-based models (e.g., Hopfield networks) which encode static and dynamic patterns as attractors of the network.

In the first half of this dissertation, we present several attractor-based models. These include: a network that can count the number of external inputs it receives; two models for locomotion, one encoding five different quadruped gaits and another encoding the orientation system of a swimming mollusk; and, finally, a model that connects the fixed point sequences with locomotion attractors to obtain a network that steps through a sequence of dynamic attractors. In the second half of the thesis, we present new theoretical results, some of which have already been published in [59]. There, we established conditions on network architectures to produce sequential attractors. Here we also include several new theorems relating the fixed points of composite networks to those of their component subnetworks, as well as a new architecture for layering networks which produces “fusion” attractors by minimizing interference between the attractors of individual layers.

Chapter 1 | Introduction

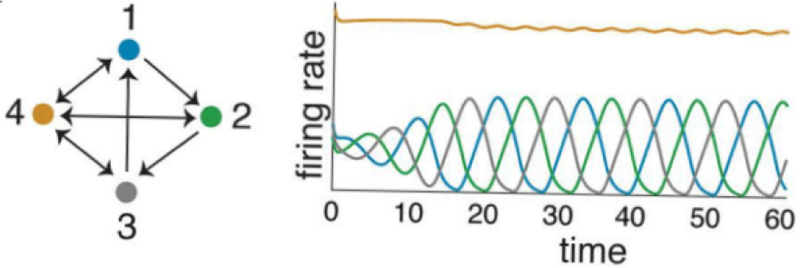

Attractor neural networks play an important role in computational neuroscience by providing a rich framework for modeling the dynamic behavior of neural systems and giving insights into how the brain might process information and perform computations [3,43]. Originally devised as models of associative memory, these networks were designed to store static patterns representing discrete memories as attractors. Their ability to simultaneously encode multiple static patterns, represented as fixed points, made them ideal for this purpose. Perhaps the most well-known example is that of Hopfield networks, a foundational example of attractor neural networks [39]. As illustrated in Figure 1.1A, in the classical Hopfield paradigm, memories are stored in the network as several coexistent stable fixed points, each one accessible via distinct input pulses (represented as color-coded pulses in the figure). The state space is partitioned into basins of attraction, and each input will start the trajectory into one of these basins. Coexistence of attractors, even if it’s just multiple stable fixed points, requires nonlinear dynamics.

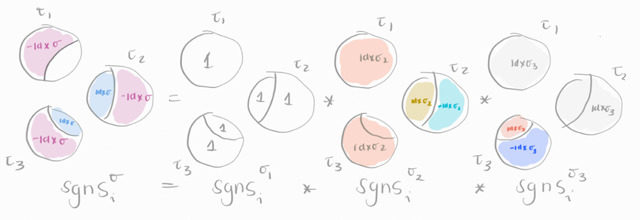

Figure 1.1. Summary of models as attractor networks.

(A) Classical Hopfield-like paradigm. Multiple stable fixed points (stable attractors) are encoded in the same network, each one accessible via distinct (colored) input pulses. (B) Multiple stable fixed points are encoded in the same network, each one accessible via identical pulses. Since the pulses are all identical, the network is internally encoding a sequence of fixed points. Each identical pulse causes one transition in the sequence, as indicated by the corresponding numbers in the pulses and arrows. (C) Multiple dynamic attractors encoded in the same network, each one accessible via distinct (colored) input pulses. (D) Internally encoded sequence of dynamic attractors, each step of the sequence accessible via identical inputs.

While Hopfield networks are well-suited for encoding multiple static patterns, some patterns of neural activity are better stored as dynamic attractors. Such is the case for the rhythms and oscillations produced by Central Pattern Generator circuits (CPGs). CPGs are neural circuits that generate rhythmic patterns that control movements like walking, swimming, chewing, and breathing [51]. Unlike static patterns (such as images), these rhythmic processes require attractors whose neurons fire sequentially, meaning that neurons take turns to fire. Furthermore, a single CPG network should potentially be able to encode multiple such patterns. Certainly, animals have several locomotive gaits, all of which activate the same limbs [4,34]. How can all these different overlapping dynamic patterns be produced by the same network? Modeling this using attractors requires multi-stability of dynamic attractors, which is known to be a difficult problem [24,63].

Traditional models of locomotion and other CPGs have tackled these challenges by using coupled oscillators [12,21,31,41,73,74]. In these models, the parameters are typically adjusted depending on the desired pattern, effectively altering the dynamical system in use. For various reasons, this approach presents some challenges. For instance, while there is evidence of pacemaker neurons, not all neurons are intrinsic oscillators [62]. Additionally, assuming that synaptic strengths change every time we transition between different locomotive gaits necessitates additional ingredients for the model, such as synaptic plasticity. Despite this, coupled oscillator models have remained popular for important reasons. One of the reasons they are so widely used is the availability of theoretical results coming from physics and mathematics [5]. Indeed, many CPG models stemmed from the physics and mathematics communities [12,31,73,74].

The availability of theoretical tools is indeed a very compelling argument for using coupled oscillators to model central pattern generators. Here we aim to take a different approach that also leverages recent theoretical advances, but within the world of attractor neural networks. The framework of attractor neural networks differs from traditional coupled oscillator models in two key ways. First, neurons do not intrinsically oscillate; instead, patterns of activity emerge as a result of connectivity. Second, these patterns are true attractors of the system, making them more robust and stable to noise and other perturbations. Additionally, providing models within this framework would unify our approach to CPGs with other classical models, like the aforementioned associative memory models, ring attractors, etc [3,43]. On the biological side, it has also been suggested that cortical circuits involved in associative memory encoding and retrieval have many features in common with CPGs [79].

To accomplish this, we propose the use of Threshold-Linear Networks (TLNs). TLNs are recurrent neural networks with simple non oscillating units and piecewise linear activation [38]. This makes them focus on the role of connectivity in emergent behaviors. TLNs have a rich history as neural models [7,35,46,67,70], and more importantly, they are supported by a wide array of theoretical results [13,14,37,53,77], many of them recent [15,16,54,59].

One such key finding is that threshold-linear networks with symmetric connectivity matrices can only have stable fixed point attractors (static patterns) [36,39], which is why here we use non-symmetric TLNs, introduced in Chapter 2. These are known to give rise to a rich variety of non-linear dynamics including multi-stability, limit cycles, chaos and quasi-periodicity. Therefore, we expect it to be possible to simultaneously encode multiple dynamic patterns of activity, whereas in classical Hopfield networks the stored patterns are all static. Within this unified framework, our aim is to provide attractor models for three broad neural functions, as summarized in Figure 1.1, and detailed below:

First, we propose a simple neural integrator model that is both robust to noise and can count inputs, using fixed point attractors. Neural integration refers to the process in which information from various sources is combined to create an output. For instance, counting the number of left and right cues to make a decision is quite literally integration (summation) in the mathematical sense. Here, we propose to model a discrete counter as a sequence of static attractors, as shown in Figure 1.1B. While this concept is akin to the classical Hopfield model (Figure 1.1A), our aim is to internally encode the sequence of fixed points, meaning that the input pulses are all identical and contain no information about which fixed point comes next in the sequence (i.e. they are just like pushing a single input button).

Second, we aim to devise a (small) network that has attractors corresponding to 4–5 distinct, but overlapping, quadruped gaits. The goal is for the attractors to coexist in the same network so that they can be accessed by different initial conditions, and without changing parameters. This requires the presence of several coexistent dynamic attractors, as pictured in Figure 1.1C, arising as distinct limit cycles in state space. Recall that the simultaneous encoding of multiple dynamic attractors (non fixed point attractors) is a network is challenging, especially when the attractors have overlapping units. While classic models circumvent this challenge by adjusting synaptic weights, we aim to obtain a single fixed network, which in turn means a simpler model with fewer control parameters.

In addition to quadruped gaits, we will also model “Clione’s hunting system ” [58], which is a different CPG example, using the same framework. Previous models for it have also used intrinsically oscillating units and fine-tuned parameters [71]. Here we intend to devise a more robust network for this using attractors to avoid the need for finely-tuned parameters.

Third, we combine the modeling approaches from panels B and C to devise a network that can step through a set of dynamic attractors sequentially, as in Figure 1.1D. Can different attractors be linked together so that they can be activated in sequence, where the sequence itself is stored within the network? This could be useful for modeling sequences of complex movements, such as a choreographed sequence of dance moves, for instance.

Sequences of sequential attractors.

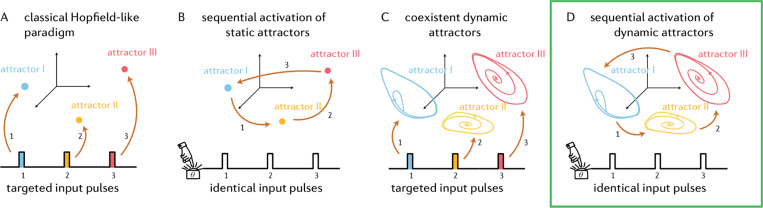

Note that in Figure 1.1C, we are dealing with dynamic attractors, that are sequential themselves, meaning attractors whose nodes activate in an ordered sequence [59]. This definition does not completely exclude attractors in which there is some synchrony in the activations. So for example, we consider both attractors in Figure 1.2 sequential attractors, even though the attractor on the right has nodes 2 and 3 synchronized (we will later formalize the dynamic prescription of the nodes, for now note the sequential of activations of nodes).

Figure 1.2. Examples of sequential attractors.

(A) Nodes 1,2,3 are activating in sequence, as seen by the curves to the left of the graph. (B) Node 1 activates, then 2 and 3 simultaneously, then node 4.

These are great to model rhythmic activations like those of CPGs. In contrast, Figure 1.1D deals with sequences of dynamic attractors, which means that different attractors, either static or dynamic, are activated in a specific ordered sequence (e.g., attractor A, then attractor B, then attractor C). With this distinction clear, our ultimate goal is to achieve an internally encoded sequence of sequential attractors.

Internally encoded sequences of sequential attractors are good models for complex sequences of movements, like choreographed dancing. These complex motor behaviors have also been modeled in the past using threshold-linear recurrent networks that choose and learn motor motifs, but whose choice mechanism requires plasticity, and where the sequence’s order is externally encoded [49]. It is not uncommon to think of broader cognitive sequential processes as a recombination of several pre-stored patterns, which offers an efficient alternative to re-encoding patterns with each occurrence. Studies in this vein include [65], where they utilize a combination of discrete metastable states, leveraging winnerless competition of oscillator neurons. Similar mechanisms have been explored using boolean and spiking networks [69,72].

Desired properties of models.

As it turns out, the versatility of threshold linear networks will prove ideal for modeling both sequential attractors and sequences of them.

To summarize the discussion above, we want our models to satisfy the following properties:

Neurons are not intrinsic oscillators.

Stored static and dynamic patterns should emerge as attractors of the network, rather than being fine-tuned trajectories. Static patterns should manifest as fixed points, while dynamic patterns should arise from non fixed point attractors, like limit cycles.

A network’s attractors should be accessible via different initial conditions, easily implemented via input pulses that target subsets of neurons.

A sequence of attractors should be accessible via a series of identical inputs pulses, with the sequence itself stored within the network (possibly in a separate layer, as observed in some biological brains).

The models should be mathematically tractable–that is, simple enough to be analyzed mathematically.

These properties will distinguish our models from previous coupled oscillator models and position them within the framework of attractor neural networks. We begin by adhering to the last point above, by choosing a framework that fits into the attractor neural network paradigm and also provides mathematically tractable models. With tractability also come great simplifications. Although TLNs are inspired by networks of biological neurons, real neurons and their interactions are of course far more complex than TLNs paint them to be. TLNs remain useful however because they capture two fundamental pieces of biological networks: connectivity and threshold-activation. This is why here we also focus on another simplification of TLNs, known as Combinatorial Threshold-Linear Networks (CTLNs) [15,53,54]. CTLNs are a special family of TLNs, whose connectivity matrix is defined by a simple directed graph (giving rise to binary connections), as in Figure 1.2. Their added simplicity can be used to gain further theoretical results.

Summary of models.

The table below lists all the models included in the dissertation. Each row defines a single model/network, and each is an example of the attractor behaviors described by Figure 1.1:

| Model | Network function | Type of attractor | Chapter |

|---|---|---|---|

| Model 1a | counter | sequence of static attractors | 3 |

| Model 1b | signed counter | sequence of static attractors | 3 |

| Model 1c | dynamic attractor chain | sequence of identical dynamic attractors | 3 |

| Model 2a | quadruped gaits | coexisting distinct dynamic attractors | 4 |

| Model 3a | molluskan swimming | coexisting identical dynamic attractors | 4 |

| Model 2b | sequential control of quadruped gaits | sequence of distinct dynamic attractors | 5 |

| Model 3b | sequential control of molluskan swimming | sequence of identical dynamic attractors | 5 |

In Chapter 3, we introduce three models for sequences of attractors. Models 1a and 1b are two counter networks that step through sequences of fixed points. Models 1a is shown in Figure 1.3A, where we can see that identical pulses move the network into the next stable fixed point, where it stays until it receives another pulse. This network serves as robust discrete neural integrators of inputs, as we show in their chapter by doing a thorough robustness analysis. Additionally, we extend our work to dynamic attractors by presenting an additional network, Model 1c, capable of encoding a sequence of dynamic attractors. These dynamic attractors are all qualitatively identical, and because of this, there are some symmetries in the basins of attractors, and thus all attractors easily accessible via distinct input pulses. However, to effectively model CPGs, we require different types of patterns to coexist simultaneously. How can we achieve this?

Figure 1.3. Summary of models I.

(A) Model 1a: counter network from Chapter 3. Pulses are all identical (in black). (B) Model 2a: quadruped gaits network from Chapter 4. Pulses are attractor-specific (colored according to stimulated node).

That is the content of Chapter 4, where we model two different CPGs, Model 2a and 3a, which require sequential activation of neurons. Model 2a, developed in in Section 4.2, is pictured in Figure 1.3B. It consists of a network encoding five different quadruped gaits as coexistent limit cycles in a 24 unit network. There, we see that all gaits coexist and are accessible via gait-specific pulses. We do a thorough analysis of its dynamics via the set of fixed point supports, and the effect of parameters in modulating gait characteristics. Model 3a, in Section 4.3, consists of a network encoding swimming orientation of a marine mollusk (Clione). Since the attractors are all identical, we manage to prove symmetry of its basins of attraction in Theorem 12.

Finally, in Chapter 5, we merge the concepts introduced in Chapters 3 and 4 to achieve sequences of sequential attractors. From Chapter 3 we get the counter network that will encode the sequence transitions, and from Chapter 4 we get the coexistent sequential attractors. From this integration we obtain Models 2b and 3b: a network for the sequential control of quadruped locomotion and for the sequential control directing swimming movements in Clione. The latter is the one pictured in Figure 1.4, where we observe the attractors from the CPG network “fuse” with the attractors of the counter network, as both are simultaneously active, and look qualitatively like they did when isolated. Figure 1.4 shows the resulting network using Clione’s model, but it can also be done, analogously, with the five-gait network. The fact that we could use the exact same construction with two different networks, led us to believe this is an even more general phenomenon, arising from some structural constraints on these networks, ad they were indeed built with similar principles.

Figure 1.4. Summary of models II.

Model 3b: sequential control of molluskan swimming directions from Chapter 5. Pulses are identical by layer. Attractors are labeled on top of the greyscale by which direction is active (up/down, left/right, front/back). These are also color-coded by node.

Code to reproduce the plots in Figures 1.3 and 1.4, and also all models listed in the table, can be found at https://github.com/juliana-londono/phd-thesis-basic-plots.

Note that in Figure 1.4, we see a “blend” of two different attractors: at the top of they greyscale we see the dynamic attractors coming from layer L3, and at the bottom we see fixed points coming from layer L1. This phenomenon was also observed in [61], where it was called fusion attractors. Fusion attractors offer a clean solution for managing sequences of static and dynamic attractors. Understanding the mechanisms behind this phenomenon motivates us to further explore the underlying structural constraints giving rise to it, from a theoretical standpoint. This is why we now transition from models to theory.

New network theory.

We want to note that all the models we have developed thus far were built within the TLN framework, for which there are plenty of well-established theoretical results. This theoretical foundation made the process of building these models a lot easier. However, our models have now surpassed the available theory and so now they serve as sources of inspiration for the development of new theoretical results. This is why in the second part of the dissertation, we take a reverse approach: while theory initially guided our modeling efforts, now the models are leading the development of new theoretical results.

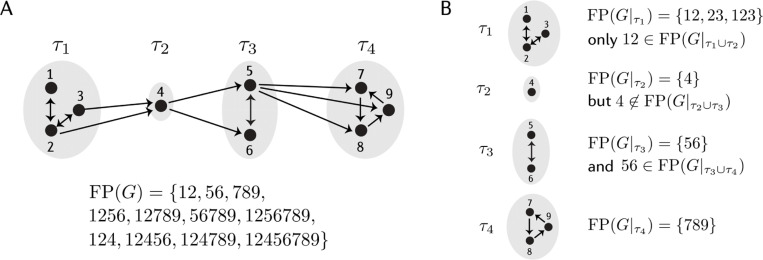

Chapter 6 presents original theoretical contributions, including several results recently published in the paper I co-authored: "Sequential Attractors in Combinatorial Threshold-Linear Networks" [59]. This chapter is divided in three parts. First, in Section 6.1, we establish some necessary technical results, some of which are earlier version of results that we end up generalizing in this chapter. Then, in Section 6.2, we derive new structural theorems for CTLNs supporting sequential attractors. All of the results within this section are my contribution to [59], which contains several other architectures that support sequential attractors. All theorems I proved are in bold. Most of these results relate the fixed point supports of a network to the fixed point supports of component subnetworks, as follows:

Theorem 21 for “simply-embedded partitions”, constrains the possible fixed point supports of a network to unions of fixed points chosen from a particular menu of component subnetwork fixed point supports. This is generalizing results from [15]. In the same section, Theorem 23 and Corollary 24 give conditions on when a node can be removed from a network without changing the set of fixed points supports. We include here a new result on removable nodes, that has not been published: Theorem 25.

Theorem 28 for “simple linear chains”, showing that the set of fixed point supports of a simple linear chain network is closed under unions of “surviving” component fixed point supports.

Theorem 31 for “strongly simply-embedded partitions”, showing that the set of fixed point supports of a network can be fully determined from knowledge of the component fixed point supports together with knowledge of which of those component fixed points “survive” in the full network.

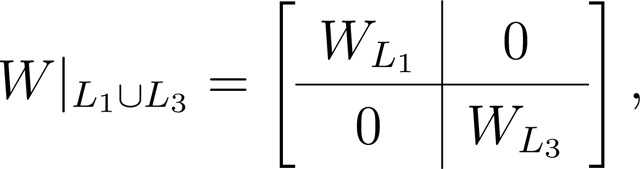

Finally, in Section 6.3, we extend some of these theorems to TLNs and provide theoretical explanations for the fusion attractors observed in Chapter 5, culminating with:

Theorem 40, which is an important technical result generalizing previous theorems on certain determinant factorizations that control the set of fixed point supports of a network. It relies on a new determinant factorization lemma, Lemma 38, which I have also proven. Theorem 40 is then used as a crucial ingredient in the proofs of: Theorem 42, generalizing Theorem 21 above. Theorem 44, explaining how the fixed points of some special networks are formed from fixed point of smaller component networks. That theorem generalizes both Theorem 17 (from two components to N components) and Theorem 31 (from several CTLN components to several TLN components). And finally, we present a similar result but for “nested” component fixed point supports in Theorem 45.

We also show that the networks in Chapter 5 satisfy these conditions, thus explaining the fusion attractors observed there.

In this dissertation’s final chapter, Chapter 7, we present partial and further theoretical results derived from projects in sections 4.2 and 6.3. In Section 7.1, Lemma 48, gives conditions under which the same attractor can arise from two different networks. This phenomenon is known as degeneracy. All code from this section is available online at https://github.com/juliana-londono/TLN-attractor-interpolation. In Section 7.2, Lemma 55, gives a new way to think about certain Cramer’s determinants, which are at the core of the dynamics of TLNs.

The rest of this dissertation is organized as follows: Chapter 2 introduces the framework, including firing rate models, attractor neural networks, TLNs, and CTLNs. Chapter 3 provides models for sequences of static and dynamic attractors, both internally and externally encoded. In Chapter 4, we provide two CPG models of locomotion, each consisting of several coexistent dynamic attractors, easily accessible via initial conditions or inputs. Chapter 6 explores new architectures and theoretical results, focusing on sequential dynamics complex and networks made up of simpler subnetworks. Finally, Chapter 7 discusses further theoretical results derived from the presented projects, suggesting avenues for further exploration. Appendix A contains the matrices and parameters used to construct all the models, along with some technical calculations of the fixed points of the five-gait quadruped network. That’s all. We hope you enjoy reading this dissertation. We encourage you to keep in mind Figure 1.1, which is the road map guiding us through the chapters on models.

Chapter 2 | Review of relevant background

This section offers a broad overview of the mathematical and historical background necessary for understanding and contextualizing the subject at hand. More detailed technical background specific to each chapter is provided later, as needed. None of the results in this chapter are original work of my own. Thus, the proofs of these results are not included here and can be found in their original publications, as cited.

2.1. Firing rate models and attractors

In this dissertation, we deal with the dynamics of recurrent neural networks. A recurrent neural network consists of a directed graph along with a prescription of the nodes’ dynamics. The nodes are thought of as neurons and edges represent synapses between them. One way to interpret the dynamics of the nodes is as firing rates, which indicate the average frequency at which the neuron generates action potentials (or “fires”) [19]. The dynamics of a firing rate network model can be described by a system of coupled differential equations:

| (2.1) |

where represents the firing rate of neuron , is a matrix prescribing the interaction strengths between neurons, and is some external input to neuron (which might vary in time), for recurrently connected neurons. In Equation 2.1, is referred to as the time constant, representing the rate of decay when there is no input to neuron , and is an activation function (e.g. sigmoid, ReLU).

Also, Equation 2.1 indicates that we are viewing recurrent neural networks here as dynamical systems, contrasting with the typical machine learning perspective that treats neural networks as learning algorithms or black-box function approximators.

While firing rate models are clearly a simplification of biological neural dynamics, they allow us to focus on factors such as the interactions between neurons, activation functions, and external inputs, and help bridge the gap between detailed spiking neuron models and large-scale network behavior. This provides a valuable framework for understanding the functional roles of these factors in neural computations.

Indeed, firing rate models of recurrent neural networks are a popular tool for studying nonlinear dynamics in neuroscience [19,20,23,67], particularly within the context of attractor neural networks. Attractor neural networks have emerged as a framework for studying neural dynamics, covering various cognitive processes like memory recall, decision-making, and perception [3,43]. Understanding how these networks compute is useful for advancing our understanding of how biological networks compute.

Under this framework, attractors of the system are thought of as representations of some cognitive process or pattern encoded in the system. Hopfield networks are a classical example [39]. In these networks, fixed points of the network are interpreted as encoded memories. In Hopfield networks, units can only be in one of two states, and the dynamics are discrete. Importantly, if the connections between neurons are symmetric, then the network is guaranteed to converge to a stable fixed point, which happens to be the minimum of an energy function, pictured as an energy landscape in Figure 2.1A [39,40].

Figure 2.1.

(A) Minima of energy landscape for symmetric network. (B) Diversity of attractors of non-symmetric networks. Adapted from [17].

Such theoretical results are not only available for Hopfield networks, but also more broadly for certain continuous-time recurrent neural networks, which are the main subject of this dissertation: threshold-linear networks (TLNs). In the case of TLNs, in Equations 2.1 (a.k.a. ReLU activation function, Fig. 2.2). TLNs were introduced around the 50s [38], and have been widely used in computational and mathematical neuroscience [7,35,46,67,70] since.

Figure 2.2.

A recurrent neural network, and the ReLU activation function.

A crucial aspect of TLNs is their piecewise linear activation function, which greatly simplifies mathematical analysis. This mathematical tractability has given rise to numerous theoretical results. Notably, as with Hopfield networks, symmetric TLNs were shown to converge to stable fixed points under some extra restrictions on (copositivity) [36]. Furthermore, there exist constraints on which sets of neurons can be co-active at a stable steady state (forbidden and permitted sets in [36]). These results have been further explored in subsequent studies [13,16].

We are however interested in a broader set of attractors, not only stable fixed points. That is why we focus here in non-symmetric inhibition-dominated TLNs, for which W is non-symmetric and non-positive. This choice is motivated by the fact that inhibition fosters competition between neurons, and thus neurons tend to alternate in reaching peak activity levels, leading to sequential behaviors within limit cycles.

Indeed, these networks, even though still piecewise linear, nonetheless permit rich non-linear dynamics like multi-stability, limit cycles, chaos and quasi-periodicity to arise and, moreover, coexist [53,54,61], as in Figure 2.1B. This, along with extra simplifying assumptions (particularly on ), has given rise to a robust body of theoretical work characterizing the dynamics of TLNs [13–16,37,53,54,59,77].

In particular, a main focus of this dissertation is on a special type of non-symmetric TLNs, called combinatorial threshold-linear networks (CTLNs), introduced in [54]. CTLNs are TLNs where the connectivity matrix is prescribed by a simple directed graph, and often the input is assumed to be uniform across neurons.

Below, we formally introduce TLNs and CTLNs, and present some results that we will use throughout the dissertation. The presentation of these results is adapted from [15,59], with slight modifications. Since CTLNs are a special family of TLNs, they inherit many properties from TLNs. Hence, we first provide an overview of the necessary background shared with TLNs, and then state results specific to CTLNs.

2.2. Threshold-linear networks (TLNs)

A threshold-linear network (TLN) is a continuous-time recurrent neural network (Eqns. 2.1) where . In addition, we assume for the time being that the input is constant in time and the timescales are constant and uniform across neurons (without loss of generality, we assume ). More precisely:

Definition 1.

A threshold linear network on neurons is a system of ordinary differential equations

| (2.2) |

where for all , . This can also be written in vector form as

where is an real matrix and .

Under these circumstances, a given TLN is completely determined by the choice of the connectivity matrix and its vector of inputs , so we denote it by . Unless otherwise specified, is the total number of neurons in the network. As mentioned above, here we only consider inhibition-dominated TLNs (a.k.a. competitive). This means we assume for all , . In addition, we pose some extra restrictions on degeneracies. The following definition requires some Cramer’s determinants of some sub-matrices to not vanish, we denote by the matrix the matrix where the column corresponding to the index has been replaced by , where the subindex denotes restriction to the rows/columns given by . In the definition below, and in all that follows, denotes the set of indices .

Definition 2 ([15, Definitions 1 and 2]).

We say that a TLN is competitive if , and for all . We say that it is non-degenerate if

for at least one

for each , and

for each such that for all , the corresponding Cramer’s determinant is nonzero: .

Unless otherwise noted, all TLNs here are competitive and non-degenerate.

A good point of entry to explore the dynamics of a competitive TLN are its fixed points: a fixed point of a TLN is a solution that satisfies for each . Per equations 2.2, this translates into

By the defintiion of the function, when , will evaluate to 0. Thus, these define hyperplanes that divide state space into chambers, and inside each of those chambers, Equations 2.2 define a linear system of ODEs. Under the non-degeneracy assumption, each of those can have exactly one fixed point, though its fixed point might not lie inside the correct chamber.

In Figure 2.3, for example, we have marked the four fixed points associated to the 4 linear systems with corresponding colors, but only the fixed point in chamber {2} (the pink one) is in its correct chamber. This makes it the only true fixed point of the TLN pictured there. Since there is at most one fixed point per chamber, we can label all the fixed points of a network by their support supp . We gather the supports of all fixed points into a set

| (2.3) |

Figure 2.3. Hyperplane chambers cartoon.

2-dimensional state space is divided in 22 chambers by the hyperplanes , inside each the dynamics are truly linear. The fixed points of the linear systems are color-coded by chamber.

We have experimentally observed that often , although no precise number for that “often” are provided. Thus, this set is often non trivial and so it already contains quite a bit of information about the dynamics of , as we will see later. We refer to this set many times throughout this dissertation, so it is good to keep it in mind. Finally, we give an important result about belonging to .

Corollary 3 ([15]).

Let be a TLN on n neurons, and let . The following are equivalent:

This concludes the necessary background on TLNs. Next, we formally introduce CTLNs and review some results particular to them.

2.3. Combinatorial threshold-linear networks (CTLNs)

A big part of this dissertation focuses on a special family of TLNs, called combinatorial threshold-linear networks (CTLNs). In this case, in addition to the network being a competitive non-degenerate TLN, the connectivity matrix in Equation 2.2 is prescribed by a simple directed graph G, as shown in Figure 2.4. We are thinking of the graph as retaining only the excitatory neurons and the connections between them, where the inhibitory neurons and their background inhibition are not represented in the graph, but are included in the equations defining the dynamics.

Figure 2.4. CTLNs.

Right: A neural network with excitatory neurons (black) and inhibitory neurons (gray), producing global inhibition. Left: Only the excitatory connections remain in our graph representation of the network. From [17].

More precisely, if we denote in by not in , we have the following definition

Definition 4.

A combinatorial threshold-linear network is a threshold-linear network whose connectivity matrix is prescribed by a simple directed graph as

| (2.4) |

where , and the input values are kept constant across neurons. When , , and , we say that the parameters are in the legal range.

Although this puts us further from reality, this simplification allows us to retain fundamental properties while being able to derive relationships between structure and function more easily. We are assuming input to be constant across neurons to further isolate the role of connectivity in the network.

When , , and , we say that the parameters are in the legal range. These are chosen both to have all inhibitory connections, but also to satisfy some conditions in some proofs. Parameters in this dissertation are always chosen from the legal range, unless otherwise noted. Before we show an example, it is important to clarify that our convention for the adjacency matrix of a graph is:

to match the connectivity matrix with the conventions.

Figure 2.5 shows an example of a CTLN where the defining graph consists of a 3-cycle. From it, we get the transposed adjacency matrix, and from it we get the connectivity matrix as defined by Equation 2.4 with parameters and . To get the rate curves in the Figure 2.5, we simulate the system of equations 2.2 with . The network activity follows the arrows in the graph. Peak activity occurs sequentially in the cyclic order 123.

Figure 2.5. CTLN example.

From a 3-cycle graph we obtain its adjacency matrix , from which we obtain the resulting connectivity matrix using Equation 2.4, from which we obtain the dynamics of the system using Equation 2.2. The activations follow the arrows of the graph. Modified from [61].

The dependence of the dynamics of a CTLN on its defining graph has given rise to extensive theory relating the dynamics and the combinatorial properties of through the set of its fixed point supports, which we now denote as 1 or , if clear from the context:

This set contains information about all the fixed points of the network, giving insights into the dynamics of the network through a special subset, as it will be seen next. Indeed, we will see that the fixed points shape the dynamics of the network, whether or not they are stable. In the sections that follow, we show connections between this set and the dynamics of the network, and our main entry point are the the core motifs, introduced in the next section. There are special minimal fixed point supports which have been conjectured to correspond to attractors. Since these have proven to be insightful, we provide rules to find them in Subection 2.3.2. We conclude the chapter by showing how to build in certain core motifs into the network, hoping to see the desired attractors, using constructions like cyclic and clique unions [15].

2.3.1. Core motifs

In prior work [59], it has been conjectured that the dynamic attractors of a network correspond to supports that are core motifs:

Definition 5 ([17]).

Let be the graph of a CTLN on nodes. An induced subgraph is a core motif of the network if .

We refer to both the support of the fixed point and the induced subgraph as the core motif. Note that a necessary condition to be a core motif of the network is that is minimal in by inclusion. Core motifs have been observed to be useful in predicting the dynamics of a network. For example, consider Figure 2.6. The CTLN defined by the graph in panel A has two core motifs in it, 123 and 4. 1234 is not a core motif, since it is not minimal in , by inclusion. Panel B shows the results of simulating the CTLN using , , and under two different initial conditions. In the top, the initial condition is a small perturbation of the fixed point supported on 123. The activity spirals out of the unstable fixed point and converges to a limit cycle where the high-firing neurons are the ones in the fixed point support. In the bottom, the initial condition is a small perturbation of the fixed point supported on 4, which is a stable fixed point. Thus, the activity converges to a static attractor where the high-firing neuron is the one in the fixed point support. In panel C, we see that the 1234 fixed point is fundamentally different: small perturbations of the fixed point supported on 1234 produce solutions that either converge to the limit cycle shown in panel B, or to the stable fixed point. This support therefore does not “correspond” to any attractor, but rather acts as a “tipping point” between two distinct attractors.

Figure 2.6. CTLN attractors.

(A) Graph of a CTLN and its set. (B) Solutions to the CTLN with the graph in panel A using two different initial conditions, which are perturbations of the fixed points supported in 123 (top) and 4 bottom. (C) Fixed points and example trajectories which are perturbations of the fixed point supported in 1234, depicted in a three-dimensional projection of the four-dimensional state space. From [61].

Less informally, we will say that a support corresponds to an attractor if initial conditions that are small perturbations from the fixed point lead to solutions that converge to the attractor. Heuristically, the high-firing neurons in the attractor tend to match the support of the fixed point. Core motifs often correspond to attractors [59] and, consequently, understanding the core motifs of a network becomes useful when predicting the dynamics of a given CTLN. We make use of this heuristic often in the dissertation and so we give the set of core motifs of a given network its own notation:

An example where the core-attractor correspondence is perfect is for cycle graphs, that is, a graph (or an induced subgraph) where each node has exactly one incoming and one outgoing edge, and they are all connected in a single directed cycle. First, it is a fact that all cycle graphs are core motifs:

Theorem 6.

If is a cycle, then is a core-motif.

Indeed, it was recently proven that a graph that is an 3-cycle has a corresponding attractor [6].

2.3.2. Graph rules

The connection between attractors and the fixed points has motivated an extensive research program where graph rules were developed [15, 18, 59, 61]. These refer to theoretical results directly connecting the structure of , to the set . Several of those results are independent of the choice of , and thus useful for engineering robust networks with prescribed attractors. We use of some these in the modeling section, and so we reproduce them below.

The first graph rule, central to our work here, concerns a special type of graph. We say that has uniform in-degree if every node has incoming edges from within . In that case, we have:

Theorem 7 (uniform in-degree, [15]).

Let be a graph on n nodes and . Suppose has uniform in-degree . For each , let be the number of edges receives from . Then

Furthermore, if and , then the fixed point is unstable. If , then the fixed point is stable.

From this theorem, we easily get the following Rules for families of uniform in-degree graphs that we use in our models:

Cliques are all-to-all connected graphs and therefore uniform in-degree with , where is the total number of nodes in the clique.

Rule 1 (Cliques, Fig. 2.7A). If is a clique, then supports a stable fixed point if and only if for all receives at most edges from . When no external node receives edges from , we say that is target-free.

Figure 2.7. Three families of uniform in-degree graphs.

Three example families of uniform in-degree graphs, and corresponding Rules.

Cycles are graphs whose vertices are connected in a closed chain and therefore uniform in-degree with .

Rule 2 (Cycles, Fig. 2.7B). If is a cycle, then supports an unstable fixed point if and only if for all receives at most one edge from .

A tournament is an orientation of a (undirected) complete graph. A tournament is called cyclic if the set of all automorphisms of contains . All cyclic tournaments must have an odd number of nodes , and have uniform in-degree [11].

Rule 3 (Cyclic tournaments, Fig. 2.7C). If is a cyclic tournament, then supports an unstable fixed point if and only if for all receives at most edges from , where is the number of nodes in .

All of these graphs are core motifs [11,15] and they indeed have a corresponding attractor, as expected. In the case of cliques, the fixed point is stable. In the case of cycles and cyclic tournaments, their unique fixed point is unstable and the dynamics reveal a corresponding limit cycle attractor.

In addition to providing tools for engineering certain patterns into a network, graph rules can also be used to prove that a given support belongs to in more general cases. Indeed, in Chapter 6, we use such rules to prove new theoretical results. A key one derives from the concept of graphical domination, introduced in [15] and summarized in Figure 2.8:

Figure 2.8. Graphical domination.

Four types of graphical domination from Rule 4. Modified from [17]. Dashed arrows represent optional edges.

Definition 8.

We say that graphically dominates with respect to if the following three conditions hold:

For each , if then .

If , then .

If , then .

If there is graphical domination within a graph, a lot can be said about its fixed points:

Rule 4 (graphical domination, [17]). Let be a graph on nodes, and . Suppose graphically dominates with respect to . Then the following statements hold:

(inside-in) If , then , and thus .

(outside-in) If and , then , and thus .

(inside-out) If and , then implies .

(outside-out) If , then implies .

A good example of the use of Rule 4 in proving more general results on the , is the rule of sources. A source is a node that has in-degree 0. The we have the following result:

Rule 5 (Sources, [15]). Let be a graph on nodes and . If there exists an such that contains a proper source in , then .

Proof. Suppose there exists an such that is a proper source in with . Then graphically dominates since has no inputs in and . Hence, by Theorem 4, and so by Corollary 3. □

We conclude this section with a cool application of Rule 4, which we use in Subection 6.3.1 to present an alternative proof of one of the results in [59]. The proof of the result below can be found in [60].

Theorem 9.

Let be a graph on n nodes, and suppose there is such that graphically dominates with respect to . Then .

2.3.3. Cyclic unions and sequential attractors

These graph rules allow us to construct graphs with prescribed fixed point supports , and facilitate the analysis of in terms of the structure of . This in turns allows for the derivation of more general structure theorems, supported on simpler building blocks. One of these structures, which does the heavy lifting of pattern generation in Chapter 4, is the cyclic union, pictured in Figure 2.9A:

Figure 2.9. Cyclic unions.

(A) A cyclic union of components, and Theorem 11. (B - C) Two examples of a cyclic union, and its sequential activation of the nodes. Nodes whose activations are synchronized appear in parenthesis.

Definition 10.

Given a set of component subgraphs , on subsets of nodes , the cyclic union of is constructed by connecting these subgraphs in a cyclic fashion so that there are edges forward from every node in to every node in (cyclically identifying with ), and there are no other edges between components

Cyclic unions are great for pattern generation because, as it can be seen in Figure 2.9B-C, they give rise to sequential attractors whose order of activation coincides with the overall structure and direction of the cyclic union. Indeed, this is a general fact, that derives from the way in which the set is made up for cyclic unions:

Theorem 11 (cyclic unions, theorem 13 in [15]).

Let be a cyclic union of component subgraphs . For any , we have

Moreover,

This means that the global fixed point supports can be completely understood in terms of the component fixed point supports. Theorem 11 guarantees that every fixed point of hits every component. In simulations we have observed that this ensures that the every component is active in corresponding attractors. Moreover, neurons are activated in the order of the components in cyclic order.

Indeed, Figure 2.10 shows an example of this. There, is a cyclic union of four component subgraphs . Thick colored edges from a node to a component indicate that the node send edges out to all the nodes in the receiving component. can be easily computed using graph rules, and it is shown below the graph in color-coded components. To simplify notation for , we denote a subset by . For example, 12 denotes the set {1,2}. follows the same color convention and consists of unions of component fixed point supports, exactly one per component. A solution for the corresponding CTLN is pictured. It shows that the attractor visits every component, in the cyclic union order.

Figure 2.10. of cyclic union.

Example of a cyclic union, how its set is made up of from component pieces (color-coded), and how the sequential activations of the nodes follow the cyclic union structure. Modified from [59].

There exists generalization of cyclic unions that also give rise to sequential attractors, see [59].

Theorem 11 is thus helpful when engineering networks that must follow a sequential activation, because the order of activation of neurons will match the direction of the cyclic union. This is exactly what we look for in central pattern generators models, which are the main topic of Chapter 4.

2.3.4. Fusion attractors

In the previous subsection, we saw by example that cyclic unions naturally gave rise to sequential attractors. Cyclic unions are one of the three block-structures first introduced in [15], that relate the parts to the whole. The two other structures are the clique union and the disjoint union of component networks.

We review the clique union here because it is special in that it gives rise to “fusion attractors”, introduced in [61]. Clique unions are built by partitioning the vertices of the graph into components , and putting all edges between all pairs of components. For example, the fusion 3-cycle from [61], in Figure 2.11, is the clique union of and . What we observe in the dynamics is the “fusion” of what seems to be two different attractors: the stable fixed point supported in 4, and the limit cycle supported in 123. That’s why it is called fusion attractor. However clique unions are a bit too restrictive. How can we relax the assumptions on the structure of the network, and still get fusion attractors? This is partly the topic of Chapter 6. Later, we will generalize this concept for layered TLNs to get attractors for sequential control of quadruped gaits and other CPGs.

Figure 2.11.

The fusion 3-cycle from [61].

Chapter 3 | Sequences of attractors

Recall that in Hopfield networks [39], memories are encoded as fixed points. Many memories can be encoded simultaneously, but accessing these fixed points usually requires attractor-specific inputs (equivalently, attractor-specific initial conditions), as illustrated in Figure 3.1A. Thus, we obtain a sequence of fixed points with whose transitions are controlled by external inputs, this means that, in a way, the order of the sequence is encoded in the external pulses, i.e. externally encoded. Would it be possible to have the sequence’s order internally encoded, as in Figure 3.1B, where the transitions happen in response to identical external pulses who do not know what comes next? This could be useful to model highly stereotyped sequences, like songbird songs [50] or as a counting mechanism, like in discrete neural integrators [30].

Figure 3.1. Sequences of attractors.

Reproduction of Figure 1.1. The focus of this chapter is Panel B: Internally encoded sequence of stable fixed points, each step of the sequence accessible via identical inputs. We also briefly touch on the situations of Panel C and D.

The first half of this chapter focuses on internally encoded sequences of static attractors, as depicted in Figure 3.1B. The second half will attempt to model the situation in Figures 3.1C,D, where several dynamic attractors coexist and are easily accessed via attractor-specific inputs. Our model will have a catch though, and it is that all attractors are identical to each other. Later, in Chapter 4, we will focus on modeling the situation of Figure 3.1C again, but this time all dynamic attractors are different. Finally, in Chapter 5, we join the models from this chapter and Chapter 4 to present a model/mechanism for the situation of Figure 3.1D, where we have an internally encoded sequence of diverse dynamic attractors.

This is, again, a good place to recall that we are using sequence/sequential in two different contexts. On one hand, we have sequential attractors (Figs. 3.1C,D), which refer to attractors whose nodes fire in sequence e.g. limit cycles. On the other hand, we have sequences of attractors, where the elements of the sequence are not the nodes, but the attractor themselves (Figs. 3.1B,D).

In this section, we leverage theoretical results from [15] to engineer networks with prescribed FP(G) sets. In doing so, we have a strong indication that the network will support the desired attractor. Throughout the chapter, we hope the important role of the theoretical results in facilitating the engineering work will become evident. Indeed, we begin the work by recalling the rules from Chapter 2 that followed from Theorem 7, and that we use in the sections that follow to design our attractors:

Rule 1. If is a clique, then supports a stable fixed point if and only if for all , receives at most edges from . When no external node receives edges from , we say that is target-free.

Rule 2. If is a cycle, then supports an unstable fixed point if and only if for all , receives at most one edge from .

Rule 3. If is a cyclic tournament, then supports an unstable fixed point if if and only if for all , receives at most edges from .

3.1. Sequences of fixed point attractors

In this section we model a discrete counter as a sequence of static attractors, each accessible via identical inputs. Neural integration is an important correlate of processes such as oculomotor and head orientation control, short term memory keeping, decision making, and estimation of time intervals [9,10,30,52,68]. Understanding how neurons integrate information has been a longstanding problem in neuroscience. How exactly do brain circuits generate a persistent output when presented with transient synaptic inputs?

Many of models have been proposed to model neural integrators, but classic models are known to be very fine-tuned, requiring exact values for the parameters in order to achieve perfect integration. By contrast, some robuster models tend to be rather insensitive to weaker inputs, requiring strong inputs to switch between adjacent states [30,68]. The models we propose here represent a very simple alternative to classic discrete neural integrators. Our models are both robust and respond well to a wide variety of input strengths. Importantly, since they are encoding sequences of stable fixed points, they could be also useful to model highly stereotyped sequences, like songbird songs [50].

CTLN counter.

Our initial model is a simple and robust CTLN that can keep a count of the number of input pulses it has received via well separated discrete states, providing a straightforward readout mechanism of the encoded count. We henceforth refer to this network, informally, as a “counter network”. Counting the number of inputs can be achieved with an ordered sequence of stable fixed points, each representing one position in the count. Each transition between fixed points indicates an increase in the count, and so in order to be integrating the external inputs, we want them to cause the fixed point transitions.

First, to encode a set of stable fixed points in a network we can appeal to Rule 1, reproduced again in Figure 3.2A, which says that a clique will yield a stable fixed point if it is target free. Thus, our network must have as many cliques as desired stable fixed points, and we need to embed them in the network in such a way that they will survive as fixed points, that is but also .

Figure 3.2. Construction of counter.

(A) Rule 1 from Chapter 2. A clique will yield a stable fixed point if and only if it is target free. (B) Rule 2 from Chapter 2. A cycle will be a fixed point support if and only if it every other node in the network receives at most one edge from the cycle. (C) Resulting construction of the CTLN counter network.

We also need some mechanism to be able to transition between these stable fixed points. Emphasis on stable, as this indicates we will need a strong enough perturbation to leave that steady state. We can potentially achieve this by adding some edges in the graph that will permit the activity to flow from clique to clique in response to input pulses. Graph-wise, these are all the ingredients we need to construct our network.

We get to work and assemble the network by chaining together several target-free 2-cliques, as shown in Figure 3.2C. Each of these cliques is embedded in a target-free way. More specifically, Rule 1 says that the 2-cliques will survive as fixed point supports as long as they do not have more than outgoing edge to any other node in the graph, and this is in fact the case for all of them. This would not be the case if, for example, we were to add the edge 1 → 4 in the network of Figure 3.2C, because then node 4 would be a target of {1,2}.

Note that the top and bottom cycles ({1,3,5,7,9,11}, {2,4,6,8,10,12}) also each support a fixed point of the network by Rule 2 (Fig. 3.2B), since no node receives more than one edge from these cycles either. This cycles will provide the perturbation we are looking for to be able to exit the fixed points we just built-in. We made the cycles wrap around to ensure activity will not stale in the last clique of the chain, and it will continue cycling. Adding six 2-cliques was an arbitrary decision, we could have had more or less.

In this way, we have built a network whose , by design, should contain all the cliques ({1,2}, {3,4}, {5,6}, {7,8}, {9,10}, {11,12}) and the bottom and top cycles as core fixed point supports. Computationally, we confirm these inclusions and find, more precisely that (yes, 141 fixed points, this is not that crazy because there are up to 212 linear systems that could potentially lead to a fixed point of the network) and that

| (3.1) |

Based on the core motif and attractor heuristic correspondence, this is indicative that we will have a stable fixed point attractor per clique, and two unstable fixed points, most likely giving rise to dynamic attractors (but also maybe not, as the heuristic is a heuristic and not a theorem or perfect correspondence, only the simulation will tell).

We have designed this network to have 6 stable fixed points, which we computationally checked that indeed it has. Since the set does not depend on , so far no mention of it has been necessary, and those stable fixed points will be there regardless of the value of , as long as it is in the legal range. However, if we aim to transition between stable fixed points, we will need exercise the perturbation somehow. And that’s where hysteresis comes. The perturbation will come in the form of external pulses, or -pulses, defined below.

External pulses are communicated the network by transiently changing the value of the external input in Equations 2.2, which we recall to be given by

Because we are using CTLNs, is prescribed by the graph of Figure 3.3A, using the rule

Figure 3.3. Hysteresis.

(A) 2-clique counter network. (B) Close-up of the pre- and post-pulse dynamics of counter network. (C) Three different 2-dimesional slices of the 4-dimensional state, at three time points: before the pulse, , when the network is settled in the fixed point where the only high-firing neurons are the blue ones. Right at the beginning of the pulse, , when the hyperplanes move, and the trajectory has to adapt to this change. And after the pulse has ceased, at , where the network stabilized in a different fixed point, the one where the green nodes are the high-firing. Nullclines are colored piecewise lines. The -pulse causes a translation of the nullclines, pushing the state of the network into the basin of attraction of a different stable fixed point. Black points indicate current state of the network, grey points indicate the current state of the network when the state does not lie in that plane, but it is projected to that plane.

Now, in the CTLN setup the external input would typically constant, but because we want to use pulses, the external input can no longer be constant. Because now we will have a temporary identical perturbation for all the neurons, above must be defined as a step function, pictured right at the very top of Figure 3.3B, that also does not depend on i, because we assume the pulses to be identical across neurons.

We refer to as the baseline and to as the pulse. It is important to note that this varying external pulse will cause our network to no longer be a CTLN (which requires external input to be constant and uniform across neurons), but rather a TLN, or a piecewise CTLN, if you wish. But because we will only need two different values, and we designed the network with CTLN principles, and because it really is a piecewise CTLN, we continue to call this network a CTLN.

Now, how does hysteresis arise from the pulse? Figure 3.3 explores how this transient change is affecting the dynamics of the network, at three time-stamps. First, note that the nullclines of the system are piecewise linear functions given by

That is, because TLNs are piecewise linear, the nullclines are piecewise linear as well. These are pictured in Figure 3.3C for neurons 1 to 4 of the network from panel A of the same figure, using corresponding colors.

By changing the value of , we are actually causing the nullclines of the system to shift in state space. More specifically, looking at Figure 3.3C, we see that at we are at the baseline , and the system is at rest in the stable fixed point supported on (cf. panel B). When the pulse arrives, at time , the nullclines experience a translation in state space, causing the trajectory to move towards the new fixed point (always at the intersection of the nullclines). When the pulse ceases, at , it is too late for the trajectory to go back, it has fallen in the basin of attraction of a different fixed point, the one supported on !

It is important to note that newer, more robust models of neural integration, also make use of hysteresis, but it typically arises from the use of bistable units [29,45,56]. In our case, hysteresis emerged as a property of connectivity, since CTLN units are not bistable by themselves (as they just die off), only when connected to each other, and so the multistability in our model is a property of the network, not of each unit.

What we explained above is exactly what we observe in simulations. We simulated the network using the parameters , , a baseline , and an input pulse . However, note that since Theorem 7 does not depend on the values of these parameters, any (C)TLN with dynamics prescribed by Equation 2.2, whose connectivity matrix is build from the graph of Figure 3.4A according to the binary synapse prescription of Equation 2.4, will have the supports of Eqn 3.1. This is true for any values of and , provided they are within the legal range.

Figure 3.4. CTLN counters.

(A) Counter. Pulses shown in the middle plot are sent to all neurons in the network. Activity slides to the next clique (stable fixed point) after reception of the pulse. Pulse duration is 3 time units, with no refractory period. (B) Signed counter. Black pulses are sent to odd-numbered neurons and red pulses are sent to even-numbered neurons. Activity slides to the right for black pulses, and to the left for red pulses. Pulse duration is 2 time units, with 3 time units of refractory period where .

The predictions about the dynamics are partly confirmed by simulations. We do observe the stable fixed points arise, but not the limit cycle. In any case, the rate curves of Figure 3.4A confirm the counting mechanism works: activity remains in the stable fixed point that the network was initialized to, unless a uniform -pulse is sent to all neurons in the network. When the network receives the pulse, activity slides down to the next stable state in the chain. Activity is maintained in this state indefinitely until future pulses are provided to the system. Indeed, the network is effectively counting the number of input pulses it has received via the position of the attractor in the linear chain of attractor states. This network is a very simple alternative to the neural integrator models often used to maintain a count in working memory of some number of input cues. The matrix and parameters needed to reproduce this Figure are available in Appendix A, Equation A.1.

Good! We have successfully encoded a sequence of fixed point attractors, all accessible via identical pulses. But can we adapt this construction to be able to decrease the count when presented with a negative input?

CTLN signed counter.

The architecture that made the transitions possible before, the cycles, can be so slightly modified to allow for the count to be decreased: making the bottom cycle travel in the opposite direction, as seen in Figure 3.4B, allows us to perturb the network with pulses so that the fixed points can also transition backwards in the chain, decreasing the count. This means that the pulses are now signed, and thus we informally refer to this modified network as “signed counter network”. The core motif analysis in this case is the same, as the cliques remain target-free and the two cycles again survive by Rule 2. Indeed, computationally, we found and

| (3.2) |

Again the predicted behavior of the network is confirmed by the rate curves of Figure 3.4B. The dynamics of the signed counter are analogous to those of the previous counter, except now pulses are signed, and the count can be decreased. More specifically, neurons are divided into two opposite populations (top and bottom cycles or, equivalently, odd and even nodes), and pulses are sent to either one of them. Note that pulses are now followed by a brief refractory period where to allow the system to reset. The color of the pulse in Figure 3.4B indicates which group of neurons received the pulse. Black pulses are sent to the top cycle (odd-numbered nodes), and red pulses are sent to the bottom cycle (even-numbered nodes). When the network receives a black pulse, the attractor slides to the right. If it receives a red pulse, the attractor slides to the left. That is, the activity travels in the direction of the cycle that received the pulse. This network can not only keep a count, but also store net displacement, position on a line, or relative number of left and right cues. The matrix and parameters needed to reproduce this Figure are available in Appendix A, Equation A.2.

Both counter models are simple in that they only require twice as many neurons as stable states, and pulses input to the system are given to all neurons on the network. Although both counter networks pictured in Figure 3.4 are able to keep a count modulo 6 (number of chained cliques), this can be generalized to an arbitrary (but finite) number of cliques, allowing to keep a memory of an arbitrary large count. This means that the number of possible states approaches infinity as the number of links in the chain approaches infinity as well, and so the count is effectively continuous. In addition, since the networks are able to keep a modular count of the number of pulses, it could be useful to estimate modular counts like time intervals or angles. Both counters thus constitute a simple alternative to traditional discrete neural integrators, but are they really that much robust?

Robustness of CTLN counters.

In general, CTLNs are expected to perform robustly, since a lot of their dynamic information is contained in and we know this set is preserved across the legal range.

To asses the performance of both counters across parameter space, we ran the simulations of Figure 3.4 using various pairs of parameters, and various combinations of pulses strengths and durations. We found three different possible outcomes, exemplified in Figure 3.5A. We classified these according to whether the function is preserved (keeps the correct count), corrupted (counts in multiples of two) or lost (does not keep a consistent count) as vary.

Figure 3.5. Good parameter grid for CTLN counters.

(A) Examples of what can go wrong in a single simulation. In the first plot the signed counters enter a “roulette” behavior (function is lost), in the second plot the pulses do not move the counter from the current fixed point (function is lost), and in the third plot the counter consistently slides two positions (function is corrupted). (B) Various counter behaviors for fixed values of baseline , with pulses for counter, and for signed counter. , vary according to the axes. Shaded in blue are parameter pairs outside the legal range . (C) Various counter behaviors for a fixed values of , and baseline , with pulse height and duration varying.

The results for all pairs of parameters and various combinations of pulses strengths and durations are recorded in the bottom row of Figure 3.5, where green indicates preserved, yellow indicates corrupted and red indicates lost performance. One dot corresponds to one simulation of 7 pulses, with a running time of time units for the counter and time units for the signed counter. We found that there is a good range of parameters where the counters behave as expected, successfully keeping the count of the number of input pulses received.

This robustness across parameter space was given to us by the theoretical results. But will the performance of the counters survive to added noise in the input and connections? To verify robustness to noise, we computed the proportion of failed transitions among pulses in both fixed point counters. This was done by introducing varying percentages of noise into the input, varied every of a time unit, and into the connectivity matrix , as specified in the axes of Figure 3.6. A failed transition is defined as any behavior that does not advance the counter a single clique forward/backwards, depending on the sign of the pulse. Figure 3.6 contains examples of all possible transition failures, as well as examples successful transitions in noisy conditions, as detailed below. All plots simulations used to quantify the failures were done with , .

Figure 3.6. Noisy CTLN counters.

(A) Example of a 20% noisy counter that performs well. (B) Example of a noisy counter with four failures, marked with red crosses: first it slides too many cliques down, and then it gets stuck in the same clique indefinitely. (C) Percentage of failed transitions for the counter in 100 trials, for several percentages of noise in and . (D) Example of a 20% noisy signed counter that performs well. (E) Example of a noisy signed counter with two failures, marked with red crosses: first it slides too many cliques down, and then it rolls around the counter until it settles in some arbitrary clique. (F) Percentage of failed transitions for the signed counter in 100 trials, for several percentages of noise in and .

Panel A of Figure 3.6 is an example of a very noisy counter that still preforms well. Each pulse advances the counter a single clique forward, as expected. Panel B of Figure 3.6 shows a noisy counter with two types of failures: first the counter advances too many steps, and then it gets stuck in one of the cliques (altogether these would count as four failures in out analysis, even though there is probably just two defective cliques). Panel D of Figure 3.6 is an example of a very noisy signed counter that performs perfectly well. Panel E of Figure 3.6 is an example of things that can go wrong in the signed counter. The first failure advances the counter one more than expected, the second failure makes the counter go into this kind of roulette behavior until it stops in a given clique. We have also observed the signed counter getting stuck at some point, as in Figure 3.6B.

The percentages of noise in Figure 3.6 were calculated as follows: The noise in the external input is i.i.d random noise from the interval , and it was introduced to vary every 0.1 fraction of a unit time. The noise in the connectivity matrix is obtained by perturbing the adjacency matrix with i.i.d random noise from the interval (0, 1). More precisely, if denote by , , the noisy versions of , , ; where is the transpose of the adjacency matrix of the graph defining the network, then the noisy versions are:

where , are the percentages of noise introduced, and , are random matrices the same size as , with entries between (0,1) and (−1, 1), respectively, and 1 is a matrix of 1’s the same size as . This is equivalent to perturbing by an amount proportional to length of the interval , that is:

We choose to perturb the adjacency matrix instead for computational easy. For each percentage of noise pair , we ran 20 simulations, each one consisting of 5 (signed) pulses in the CTLN (signed) counter, as exemplified in Figure 3.6. The results of counting over all these pulses are summarized in panels C and F of Figure 3.6. Each pixel represents the fraction of failed transitions for each pair . We found that discrete counters perform perfectly well up to 5% noise, proving that perfect synapses are not necessary. When the noise limits are pushed beyond, counters lose stability and start to slide too many attractors, or to get stuck in one of the cycles. The (unsigned) counter, not surprisingly, proved to be a lot more robust, being barely affected by the amount of noise in . Although not as robust, the signed counter accurately kept the count 50% of times under 20% noise. Not too bad.

Having successfully encoded sequences of stable fixed points, it is natural to now ask: can we use the same ideas to construct a network that encodes a sequence of dynamic attractors instead? Maybe by chaining together other kinds of core motifs, instead of cliques?

3.2. Sequences of dynamic attractors of the same type

Here we use one of the core motifs giving rise to a dynamic attractor from Chapter 2 to construct a network that steps through a sequence of dynamic attractors. In the previous section, we chained together repeated core motifs that we knew gave rise to stable fixed points to obtained a network that steps through a sequence of stable fixed points. We use the same idea as before, but a cyclic tournament instead of a 2-clique.

To construct the network, we chain together overlapping 5-stars, which are cyclic tournaments. These were introduced in Chapter 2 and are the motifs composing the networks shown in Figures 3.7C, D (a single 5-star is, for instance, the subgraph induced by 1 to 5). Rule 3 says that each 5-star will yield an unstable fixed point support, since each 5-star is connected in such a way that it survives to the larger network. Thus, we should get:

Figure 3.7. Dynamic attractor chains.

(A) Coexistent dynamic attractors. Each attractor is accessed via attractor-specific pulses. The sequential information is externally encoded. (B) Coexistent dynamic attractors. Each attractor is accessed via identical pulses sent to the network. The sequential information is internally encoded. (C) Patchwork of six 5-stars. Pulses are sent, one at the time, to neurons 6,9,12,15,18,3 (colored) in that order. Each pulse makes the attractor slide to the next limit cycle down the chain. Pulse duration is 1 time unit. (D) Same network as in panel A receives simultaneous pulses to neurons 3,6,9,12,15,18 (bold nodes). Pulse duration is 1 time unit. Two cycles go through the network in the direction opposite to attractor sliding. Despite their apparent symmetry, the green cycle is a core motif of the network, but the red cycle is not (it dies by Rule 2).

Indeed, computational work shows that contains precisely the six supports above, each for one 5-star. We also found (which again, is not surprising since there are up to 218 linear systems) and that

| (3.3) |

Notice that we got an extra core motif, the last one in Equation 3.3. This is a cycle that is also uniform in-degree, and that survives by Rule 2 (colored in green in Figure 3.7D). In contrast, the colored red cycle of Figure 3.7D, at first glance symmetric to the green cycle, will not support a fixed point, by Rule 2 again (because, for instance, node 7 has two inputs from it). The attractor corresponding to the core motif has not yet been found computationally.

We therefore expect to see at least six limit cycles, each one corresponding to a 5-star. Simulations confirm these predictions. In this case, both selective stimulation of specific nodes (Fig. 3.7C) and identical stimulation of all multiple-of-three nodes (Fig. 3.7D) can move the attractor to the next limit cycle in the chain. Indeed, in Figure 3.7C pulses are sent to specific nodes (color coded) which activate the attractor associated with the core motif that the node belongs to. For example, stimulating node 9 will activate the limit cycle associated with the core motif , to which 9 belongs. Note that the order in which pulses are sent matches the order in which the attractors are chained. Simulations show that is it not always possible to jump between non-adjacent 5-stars. The matrix and parameters needed to reproduce this Figure are available in Appendix A, Equation A.3.

By contrast, in Figure 3.7D, pulses are identically sent to the nodes (yes, all the multiples of 3), each producing a transition to the next limit cycle down the chain, analogous to the mechanism observed in the previous two counters, where pulses contain no information about which attractor comes next, but the state of the network counts pulses.This difference is more clearly conceptualized in the cartoon above each type of stimulation (Fig. 3.7A vs Fig. 3.7B).

Robustness of dynamic attractor chain.

We performed similar robustness simulations for both kinds of stimulation types in the dynamic attractor chain as we did for the fixed point counters. That is, we ran simulations for several parameter pairs , and several percentages of noise in and . The results of these noise simulations are summarized in Figure 3.8.

Figure 3.8. Noisy dynamic attractors chain.

(A) Example of a noisy dynamic attractor chain network that performs well for specific stimulation of all multiple-of-three nodes. (B) Example of a noisy dynamic attractor chain network with 3 failures, global stimulation. (C) Example of a noisy dynamic attractor chain network with 4 failures, global stimulation. (D) Percentage of failed transitions for specific and global stimulation in the dynamic attractor chain network in 120 trials for several percentages of noise in and . (E) Different behaviors of the dynamic attractor chain for various pairs of , parameter values.