Abstract

Heart disease is a complex and widespread illness that affects a significant number of people worldwide. Machine learning offers a path toward early diagnosis. This study develops a classification model to predict heart disease, with attribute selection performed by a modified bee algorithm. We propose a framework based on the Modified Artificial Bee Colony (M-ABC) algorithm and k-Nearest Neighbors (KNN) that selects an optimal feature subset to obtain better accuracy. Specifically, during the classification-training phase, only the features that provide significant information are retained. Using the proposed model, practitioners can accurately predict heart disease and make informed decisions about patient health. The proposed approach not only improves classification accuracy but also reduces training time for classifiers.

Keywords: Privacy awareness, Healthcare, Feature selection, Heart disease prediction

Subject terms: Health care, Engineering

Introduction

Context

The Internet of Things (IoT) connects commonplace items to the web and to each other, facilitating two-way communication between objects and humans. Controllers, actuators, and sensors are commonly employed throughout the connection process1. The Internet of Medical Things (IoMT) aims to create a network of individuals and medical equipment that communicate wirelessly to share medical data. Population growth and the use of modern technologies have resulted in rising healthcare expenditures and service prices2. Smartwatches, wristbands, and other wearable sensing devices aid in the early detection of illnesses and in alerting users to them. These wearable devices are backed by a robust and specialized computing architecture located in a remote HSP cloud data center3. This setup enhances their capabilities, particularly for real-time and early identification of health issues. IoMT-based healthcare solutions often involve collecting data from wearable devices at the HSP’s data center in order to prevent, diagnose, and treat health conditions such as cardiovascular diseases (CVD). Constructing a robust electronic healthcare infrastructure is challenging because of the huge amount of data collected from many sources, such as end users and other parties involved in providing health services. Cardiovascular disease is one of the deadliest illnesses. Myocardial infarction is the primary cause of mortality. Regrettably, individuals frequently lack awareness of heart attacks, their symptoms, and the gravity of heart disease. Heart failure commonly occurs when the blood supply starts to degrade. The heart functions as a muscle within the human body. Chest pain, a burning sensation, gastric discomfort, arm discomfort, fatigue, and perspiration are all indications of heart disease4.
The diagnosis of cardiac disease is a complex undertaking for physicians because multiple factors are involved. Automated diagnostic support aims to assist clinicians in making prompt judgments and reducing diagnostic errors. Classification algorithms allow physicians to analyze medical data efficiently and with great precision5. Such systems work by constructing a model that categorizes existing information based on sample data. Several classification algorithms have been created and used as classifiers to aid clinicians in detecting patients with heart disease6. It is crucial to ascertain the underlying factors responsible for cardiovascular illnesses, so that solutions can be devised with appropriate methodologies. Hence, there is an immediate need to identify the causative factors of heart disorders and establish a proficient framework for diagnosing heart diseases7. Conventional methods are not successful in diagnosing this type of disease, so it is crucial to develop a medical diagnostic system that relies on feature selection approaches to forecast and assess the condition8.

An essential step in machine learning and pattern recognition is the feature extraction or selection process. Feature selection can improve both the computation cost and the classification performance. In machine learning and data mining problems, an appropriate representation of the data is a crucial issue. Not every original feature is useful for regression or classification tasks: some features in the dataset are noisy, useless, or redundant, and these features can degrade classification performance9.

Feature selection techniques are employed to improve classification accuracy and shorten the execution time of the classification system. Moreover, the leave-one-subject-out cross-validation technique has been used for hyperparameter tuning and for learning the best practices for model assessment. The classifiers’ performances have been evaluated, using standard performance metrics, on the features chosen by the feature selection algorithms10.

There are three categories of feature selection algorithms: those that rely on filters, those that utilize wrappers, and those that are embedded. Each of these feature selection mechanisms possesses specific benefits and drawbacks in particular circumstances. In contrast to the filter-based approach, which assesses the significance of a feature through its correlation with the dependent variable, the wrapper feature selection algorithm evaluates the utility of a subset of features through its actual use in training the classifier. Filter method complexity is lower than wrapper method complexity. The feature set chosen through the filter is general, rendering it applicable to any model without being model-specific. When it comes to feature selection, global relevance takes precedence11.

An effective machine learning model, on the other hand, is required for optimal results. A good machine learning model is one that performs well not only on training data (which any model should at least be able to learn) but also on unseen data. When all classifiers were evaluated on data, they were found to correctly classify around 50% of cases on average12. Moreover, it is imperative to employ suitable cross-validation methods and performance assessment criteria when training and evaluating a model on a given dataset.

Key contribution

In this paper, we propose a novel methodology based on the M-ABC algorithm and k-NN. The M-ABC algorithm is used for feature selection and k-NN is used for heart disease prediction. Our research makes the following contributions.

In the first step, we integrate the Firefly Algorithm into the M-ABC algorithm to improve it. The goal of this integration is to use the fireflies’ light intensity and movement to search for the best feature subsets more precisely.

In the next step, our focus is on k-NN classification. There are numerous machine-learning algorithms that are used for heart disease (HD) prediction. We have chosen the k-NN method since it enhances the overall performance when used with the M-ABC approach.

The next step is performance evaluation. The usefulness of the presented M-ABC method is examined using real-world cardiac disease datasets. To evaluate the effect of our method on prediction accuracy, we compare the performance of k-nearest neighbors models trained with and without feature selection.

Organization of paper

The remainder of the paper is organized as follows. Sect. "Related works" provides an overview of important prior research. Sect. "Materials and methods" describes the suggested method, which combines M-ABC with k-NN, and covers feature selection using the M-ABC technique, federated learning, and the classification method. Sect. "Experimental evaluation" describes the simulation environment and setup. Sect. "Results" assesses the work done and the outcomes achieved. In Sect. "Discussion and comparison with other models", the latest findings are presented and future directions for research are discussed.

Related works

Using a modified form of salp swarm optimization (MSSO) and the Adaptive Neuro-Fuzzy Inference System (ANFIS), the authors of Ref.13 created an IoMT framework for identifying heart disease. The Lévy flight strategy applied in that method enhances its search capabilities, and MSSO tunes the learning parameters to maximize ANFIS outcomes. The author of Ref.14 presented a machine learning-based approach to diabetes detection. The diabetes dataset, a medical dataset derived from patients’ medical histories, was used to test the suggested methodology. The authors presented the Iterative Dichotomiser 3 (ID3) decision tree algorithm, using ID3 as a filtering model in the significant feature selection (FS) process. Moreover, FS is carried out using two ensemble learning models, Random Forest and AdaBoost, and the classification accuracy of these models is contrasted with wrapper-based FS techniques.

The author of Ref.15 used a machine learning method to analyze activity tracker data linked to patient-reported outcomes (PROs) in a study of people with stable ischemic heart disease (SIHD). To track their daily activities, each participant was given a Fitbit Charge 2, and all individuals completed the 8 PRO Measurement Information Systems. Two approaches were developed to classify PRO ratings using information from activity trackers. To improve on the conventional model and perform a comparative analysis, the author of Ref.16 applied classification methods such as LR, KNN, SVM, DT, and RF to predict cardiac illness, using grid search to tune the hyperparameters of the five classification models.

To generate data, the authors of Ref.17 used a variety of machine-learning methods. A machine learning framework was created to assist in the early identification of heart ailments with the use of the Internet of Things (IoT). The method was examined using Neural Networks, Decision Trees, Random Forests, Multilayer Perceptron, Naive Bayes, and Linear Support Vector Machines. The author of Ref.18 developed accurate and efficient algorithms based on ML models to detect cardiac anomalies. The recommended strategy is based on classification algorithms including KNN, SVM, DT, NB, ANN, and LR, while standard FS methods, namely relief, minimal redundancy maximal relevance, the least absolute shrinkage and selection operator, and local learning, are used to eliminate unnecessary and redundant features. Furthermore, a new and effective feature selection method was presented. The author of Ref.19 developed prediction models in Python using five machine learning techniques (KNN, NB, LR, RF, and AdaBoost). 30% of the available data were used to evaluate their performance. The prediction model was then deployed on a cloud server to provide easy online access. Modak et al.20 introduced an integrated feature selection (FS) and deep neural network (DNN) model for the prediction of heart disease. With this strategy, feature subsets with strong correlations to cardiovascular illness are selected using the linear SVC algorithm with an L1-norm penalty, and the selected features are fed into the DNN to train it. The author of Ref.21 contributed to the development of an artificial classifier intended for individuals with congestive heart failure (CHF). The classifier can distinguish between individuals at low and high risk.
The author of Ref.22 used a range of methodologies to investigate the prevalence of cardiac disease, including random forests, logistic regression, hyperparameter tuning, feature selection, support vector machines (SVMs), and deep learning methods. A comparative study of prominent contemporary data mining classification techniques, such as SVM, KNN, and Artificial Neural Networks (ANN), was conducted in Ref.23. According to the test data, SVM fared better than KNN and ANN, with an accuracy of 85%.

In addition to the earlier research, machine learning techniques have been used to evaluate the prediction of heart illness24. Any algorithm’s performance can only be maximized using optimal data sets25. The author carried out a thorough review of the UCI dataset, looking at different machine-learning approaches applied to cardiovascular disease research. Feature selection algorithms are necessary to apply those techniques successfully26. The author of Ref.27 uses sophisticated data mining methodologies to forecast the incidence of heart conditions, evaluating data from 909 patients. Nevertheless, data mining appears to be substantially more efficient when working with enormous quantities of data. The work in Ref.28 uses several methods but achieves an accuracy below 90%. MATLAB is used to implement the algorithms, and Python is used for feature selection techniques, which may result in improvements. The author of Ref.29 employs sophisticated techniques; however, as the cited source indicates, the accuracy is likewise limited to less than 90%. The data can provide the highest level of accuracy if it is processed precisely. Their ultimate objective was to use patient clinical data from the UCI dataset to predict cardiac disease with the highest level of accuracy feasible.

In most cases, these studies were able to predict cardiac issues with greater accuracy than the usual 80% accuracy rate. They sought to maximize accuracy either by using a feature selection strategy customized for a machine-learning model or by considering every feature. However, they were unable to find any clear correlation between the features. Moreover, other studies provide only the prediction score of algorithms; they do not go into detail about other performance evaluation metrics such as sensitivity, specificity, and log loss. Using a dataset created especially for this purpose, the author of Ref.30 presented a machine learning-based diagnostic method for predicting heart disease. This hybrid intelligent system included three feature selection procedures and six machine-learning algorithms. The feature selection techniques were the least absolute shrinkage and selection operator (LASSO), the minimal-redundancy maximal-relevance algorithm, and the relief feature selection algorithm. The six machine-learning techniques were DT, KNN, ANN, SVM, NB, and logistic regression. The performance of the classifiers was evaluated using execution time, accuracy, specificity, sensitivity, and Matthews’ correlation coefficient as metrics. Logistic regression trained on the features chosen by the FS algorithm achieved an accuracy of 89%. To predict diverse patterns in the data and develop a personalized model, the authors of Refs.31 and 32 proposed a deep learning and machine learning-based architecture. They compared their proposed method with existing methods in terms of sensitivity, accuracy, average error, and specificity. Nature-inspired optimizers named Greylag Goose Optimization (GGO) and Puma Optimizer (PO) were proposed in Refs.33 and 34, respectively. They aim to enhance efficiency across diverse models and improve feature selection.
In Ref.35, the authors proposed an asynchronous method to improve the diagnosis of heart disease for real-world applications.

Materials and methods

A healthcare system leveraging the Internet of Medical Things (IoMT) enables the real-time collection of patient data to aid in early disease detection and treatment, thereby reducing the risk of heart disease development. However, traditional machine learning methods face challenges in addressing privacy concerns posed by emerging international regulations such as GDPR, China Cyber Security Law, and CCPA, as they necessitate processing user data for disease diagnosis. IoMT-based sensors gather heart disease data from patients, but sharing this data in healthcare systems is constrained by privacy and security issues. This paper proposes a federated learning framework for predicting heart disease in a privacy-aware healthcare system, effectively addressing privacy concerns and enabling accurate disease prediction. The symbols used in the study are detailed in Table 1.

Table 1.

Description of the used symbols.

| Symbol | Description |
|---|---|
| Xni | Initialization vector for the IoMT client sites |
| Cnie | Candidate solution produced by the employed bee |
| Xpi | Local solution generated at random |
| Fn | Fitness function |
| Cnio | Candidate solution produced by the onlooker bee |
| Cnis | Candidate solution produced by the scout bee |
| wjl | lth neuron examined in dataset j |
| qi | Gaussian mean |
| c (wjl, qi) | Similarity function |
| K | List of client sites, indexed by k |
| B | Local mini-batch size |
| h | Learning rate |
| E | The number of local epochs |
| wo | Initial global cloud model |
| wk | Model of the kth client |

We use the UCI Cleveland heart disease dataset to train and evaluate our proposed framework. This dataset comprises 303 records and 14 attributes, including the target variable. Table 2 below gives a detailed description of the dataset. To handle missing values in the features thal and ca, we applied mean imputation. Categorical features were encoded using one-hot encoding.

Table 2.

Dataset description.

| Feature name | Description |
|---|---|
| Age | Age of the patient (in years) |
| Sex | Gender (1 = male; 0 = female) |
| CP | Chest pain type (1 = typical angina, 2 = atypical angina, etc.) |
| Trestbps | Resting blood pressure (in mm Hg) |
| Chol | Serum cholesterol level (in mg/dl) |
| Fbs | Fasting blood sugar (> 120 mg/dl, 1 = true; 0 = false) |
| Restecg | Resting electrocardiographic results (0 = normal, etc.) |
| Thalach | Maximum heart rate achieved |
| exang | Exercise-induced angina (1 = yes; 0 = no) |
| oldpeak | ST depression induced by exercise relative to rest |
| slope | Slope of the peak exercise ST segment |
| ca | Number of major vessels colored by fluoroscopy (0–3) |
| thal | Thalassemia (3 = normal; 6 = fixed defect; 7 = reversible defect) |
| target | Diagnosis of heart disease (0 = no disease; 1 = disease) |
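The two preprocessing steps named for this dataset, mean imputation and one-hot encoding, can be sketched as follows. This is a minimal illustration rather than the authors' code: the helper names `mean_impute` and `one_hot` and the toy values are assumptions, and missing entries are assumed to be represented as `None`.

```python
from statistics import mean

def mean_impute(column):
    """Replace None entries with the mean of the observed values."""
    observed = [v for v in column if v is not None]
    fill = mean(observed)
    return [fill if v is None else v for v in column]

def one_hot(column):
    """Encode a categorical column as 0/1 indicator rows, one slot per category."""
    categories = sorted(set(column))
    rows = [[1 if v == c else 0 for c in categories] for v in column]
    return rows, categories

# Toy values only (hypothetical, not taken from the Cleveland dataset).
ca = [0.0, 2.0, None, 1.0]   # one missing entry
cp = [1, 4, 3, 1]            # chest pain type codes

ca_filled = mean_impute(ca)
cp_encoded, cp_categories = one_hot(cp)
```

Here the missing `ca` entry is filled with the mean of the three observed values, and each `cp` code becomes a row with a single 1 in the position of its category.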

The proposed M-ABC algorithm

A Modified Artificial Bee Colony (M-ABC) method, which uses swarm intelligence, was proposed in Ref.36. The M-ABC algorithm involves three kinds of bees: employed bees, onlooker bees, and scout bees. Scout bees investigate and discover new food sources, whereas an onlooker bee selects a food source by observing the dance performed by an employed bee. Employed bees are each tied to a particular food source and are responsible for exploiting it. Neither the scout bees nor the onlooker bees are linked to any specific food source, so they are commonly known as “unemployed bees”. The primary objective of the fitness function is to balance classification error against communication efficiency when receiving models from the IoMT client sites: it aims to decrease both the classification error and the number of rounds required to reach a better level of accuracy. Algorithm 1 outlines the overall process of the M-ABC optimization method. Its objective is to select an optimal subset of features for heart disease prediction using a modified version of the Artificial Bee Colony (ABC) algorithm augmented with the Firefly Algorithm (FA). This hybrid approach aims to maximize the accuracy of a k-Nearest Neighbors (KNN) classifier trained on the selected features.

Algorithm 1: Working of the M-ABC optimizer

1. Initialize the IoMT client sites using Eq. (1).

2. Repeat:

3. Employed bees generate a new candidate solution according to Eq. (2).

4. Onlooker bees select candidate solutions using Eqs. (3) and (4).

5. Scout bees propose a candidate solution using Eq. (5).

6. Memorize the best solution found so far.

7. Until the maximum number of cycles is reached.

Initialize phase

Each healthcare site begins with the initialization of vector Xni. The initialization of IoMT client sites is performed using Eq. (1), where i ranges from 1 to NP.

| 1 |

The upper and lower bounds of the parameters are denoted by ui and li, respectively.
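For orientation, the conventional ABC initialization draws each parameter uniformly at random between its bounds. The form below is an assumption based on the standard ABC algorithm, written with the symbols defined in the text; it is not a reproduction of the paper's Eq. (1), and rand(0, 1) denotes a uniform random number in [0, 1].

$$
X_{ni} = l_i + \mathrm{rand}(0,1)\,\bigl(u_i - l_i\bigr)
$$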

Solution search by Employed Bee

The employed bee searches the neighborhood of its current solution for new solutions. A new solution can be obtained using Eq. (2), where Xpi is a local random solution and τni is a random number between −1 and 1. The employed bee calculates the fitness of the new candidate solution Cnie, and if the fitness is higher, the solution is committed to memory. Improved feature selection for IoMT client sites can be achieved by using the employed bee’s candidate solution given by the equation below.

| 2 |
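In the standard ABC algorithm, the employed bee perturbs its current solution toward or away from a randomly chosen neighbor. Assuming Eq. (2) follows that convention (an assumption, since only the symbol definitions are given in the text), the update would read as follows, with τni drawn uniformly from [−1, 1]:

$$
C_{nie} = X_{ni} + \tau_{ni}\,\bigl(X_{ni} - X_{pi}\bigr)
$$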

Candidate solution by Onlooker Bee

After the employed bees have shared their candidate solutions, the onlooker bees use Eq. (3) to probabilistically select their candidate solution Cnio. The onlooker bee’s Cnio is used to further enhance the quality of the candidate solution, as shown by the equation below.

| 3 |

where the fitness function Fn is computed using the equation below,

| 4 |
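The probabilistic choice made by the onlooker bees is conventionally a fitness-proportional (roulette-wheel) selection, with probability Fn divided by the sum of all fitness values. The sketch below assumes that standard rule; the function names and the fitness values are hypothetical and not taken from the paper.

```python
import random

def selection_probabilities(fitness):
    """Fitness-proportional probabilities: p_n = F_n / sum of all F."""
    total = sum(fitness)
    return [f / total for f in fitness]

def roulette_select(fitness, rng):
    """Pick an index with probability proportional to its fitness."""
    threshold = rng.random() * sum(fitness)
    cumulative = 0.0
    for index, f in enumerate(fitness):
        cumulative += f
        if threshold < cumulative:
            return index
    return len(fitness) - 1  # guard against floating-point round-off

fitness = [0.2, 0.3, 0.5]  # hypothetical fitness values F_n
probs = selection_probabilities(fitness)
chosen = roulette_select(fitness, random.Random(0))
```

Solutions with higher fitness are chosen more often, but weaker solutions keep a non-zero chance, which preserves some diversity in the search.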

Scout Bee phase

In M-ABC, the scout bee ensures that new regions of the search space are explored and selects a potential solution. Equation (5) below shows how to calculate Cnis using the firefly method, where Cnis0 is the starting solution. An employed bee turns into a scout bee if it does not improve its solution within the allotted number of trials.

| 5 |
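A firefly-style move can be sketched with the standard Firefly Algorithm update, in which a firefly is attracted toward a brighter one with a strength that decays with distance, plus a small random step. This is an assumption about the general shape of Eq. (5), not the paper's exact formula; `beta0`, `gamma`, and `alpha` are the usual FA parameters and their default values here are illustrative.

```python
import math
import random

def firefly_move(x_i, x_j, beta0=1.0, gamma=1.0, alpha=0.1, rng=None):
    """Move firefly x_i toward a brighter firefly x_j (standard FA update)."""
    rng = rng or random.Random()
    r2 = sum((a - b) ** 2 for a, b in zip(x_i, x_j))  # squared distance
    beta = beta0 * math.exp(-gamma * r2)               # attractiveness
    return [a + beta * (b - a) + alpha * (rng.random() - 0.5)
            for a, b in zip(x_i, x_j)]

# With alpha = 0 the move is deterministic: a step along the line to x_j.
# With gamma = 0 the attractiveness is beta0, so x_i lands exactly on x_j.
moved = firefly_move([0.0, 0.0], [1.0, 0.0], gamma=0.0, alpha=0.0)
```

In a scout phase, `x_i` would play the role of the starting solution and `x_j` a fitter solution in the population.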

Data collection using IoMT clients

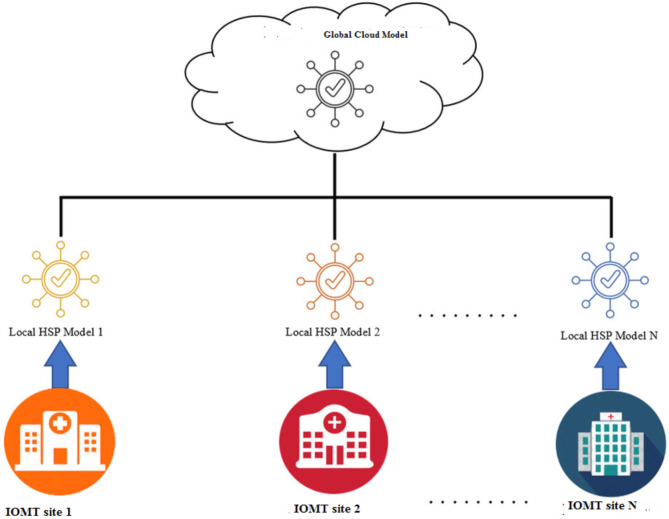

Initially, IoMT devices are employed to collect personal health records, communicating with each other as they send this data. IoMT devices start collecting bodily data from the moment they are implanted. This data includes vital indicators such as blood pressure, cholesterol, glucose, and pulse. The proposed M-ABC technique is used at each IoMT healthcare site to optimize these features locally. Next, the local models are sent from every IoMT healthcare site to the global cloud. The effectiveness of the approach is also assessed using patient data from the UCI repository. The design assumes five healthcare client sites and one cloud server and can be readily adapted to other scenarios. Devices that gather information on heart disease are part of the design we have proposed for the healthcare center. The healthcare sites are given a global model when they first connect to the global cloud. After model construction, the sites use the M-ABC optimizer to select and aggregate attributes. Each site then trains the model using its own local data and updates its model locally before uploading it to the cloud. The cloud receives these local model updates from the healthcare sites, which are then combined with k-NN to create a new global model. Keeping all training data on the device allows predictions to be made more accurately, classification errors to be decreased, and privacy to be safeguarded. Algorithm 2 illustrates the operation of the proposed structure and Fig. 1 illustrates the proposed methodology.

Fig. 1.

Overview of the proposed framework for heart disease prediction.
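The source does not spell out how local models are merged into the global model. A common choice in federated learning is FedAvg-style aggregation, where client parameters are averaged with weights proportional to each site's sample count. The sketch below assumes that scheme and a parametric local model represented as a flat weight vector; `federated_average` and the toy numbers are hypothetical.

```python
def federated_average(client_weights, client_sizes):
    """Average client weight vectors, weighted by local sample counts."""
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(w[d] * n for w, n in zip(client_weights, client_sizes)) / total
        for d in range(dim)
    ]

# Hypothetical example: two client sites holding 1 and 3 records.
w_clients = [[1.0, 0.0], [3.0, 4.0]]
n_clients = [1, 3]
w_global = federated_average(w_clients, n_clients)
```

Only the weight vectors leave each site; the patient records used to fit them stay local, which is the privacy property the framework relies on.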

Algorithm 2: Modified ABC algorithm for heart disease prediction

Step 1: Initialization

(i) Initialize a population P of binary feature vectors, where each vector represents a potential solution.

(ii) Let N be the population size and D be the number of features.

Step 2: Employed bee phase

For each solution Si in the population:

(i) Randomly select a feature j.

(ii) Flip the value of feature j in Si, creating a new solution Si′.

(iii) Evaluate the fitness of Si′ using the objective function F.

(iv) If the fitness of Si′ is better than that of Si, replace Si with Si′.

Step 3: Scout bee phase

Apply the Firefly Algorithm (FA) for the scout bee solution search:

(i) Initialize each solution Si as a firefly.

(ii) Update the position of fireflies using FA, where the attractiveness between fireflies depends on their fitness values.

(iii) Each firefly Si searches for a better solution in its neighborhood using FA.

Step 4: Termination

(i) Repeat Steps 2–3 until a termination criterion is met (e.g. a predefined maximum number of iterations is reached).

Step 5: Output

(i) Select the best solution Sbest from the final population based on its fitness value.

(ii) Sbest represents the selected features.

Step 6: Final evaluation with kNN

a. Train a kNN classifier using the selected features from Sbest:

(i) Let Xtrain be the training set with selected features.

(ii) Let ytrain be the corresponding labels.

(iii) Let Xtest be the test set with selected features.

(iv) Let ytest be the corresponding labels.

b. Use the kNN algorithm to classify instances:

(i) For each instance in Xtest, find the k nearest neighbors in Xtrain using Euclidean distance.

(ii) Assign the class label based on majority voting among the k neighbors.

c. Evaluate the accuracy of the kNN classifier on Xtest using ytest.
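The employed bee phase and the kNN-based fitness described in the steps above can be sketched in a few lines. This is an illustrative sketch under stated assumptions, not the authors' implementation: the function names are hypothetical, the data are toy values rather than the Cleveland dataset, the fitness is computed on a single fixed train/test split, and the firefly-based scout phase is omitted.

```python
import random
from collections import Counter

def knn_predict(X_train, y_train, x, k=3):
    """Label x by majority vote among its k nearest training points (Euclidean)."""
    nearest = sorted(
        range(len(X_train)),
        key=lambda i: sum((a - b) ** 2 for a, b in zip(X_train[i], x)),
    )[:k]
    return Counter(y_train[i] for i in nearest).most_common(1)[0][0]

def fitness(mask, X_train, y_train, X_test, y_test, k=3):
    """F(S): kNN accuracy on the test split using only features where mask == 1."""
    cols = [j for j, bit in enumerate(mask) if bit]
    if not cols:
        return 0.0  # an empty subset cannot classify anything
    project = lambda row: [row[j] for j in cols]
    Xtr = [project(r) for r in X_train]
    hits = sum(knn_predict(Xtr, y_train, project(r), k) == y
               for r, y in zip(X_test, y_test))
    return hits / len(y_test)

def employed_bee_phase(population, evaluate, rng):
    """Flip one random bit per solution; keep the flip only if fitness improves."""
    for i, solution in enumerate(population):
        candidate = solution[:]
        j = rng.randrange(len(candidate))
        candidate[j] = 1 - candidate[j]
        if evaluate(candidate) > evaluate(solution):
            population[i] = candidate
    return population

# Toy data: feature 0 separates the classes, feature 1 is noise.
X_train = [[0.0, 5.0], [0.1, 1.0], [1.0, 4.0], [0.9, 0.0]]
y_train = [0, 0, 1, 1]
X_test = [[0.05, 0.8], [0.95, 4.7]]
y_test = [0, 1]

rng = random.Random(42)
evaluate = lambda m: fitness(m, X_train, y_train, X_test, y_test, k=1)
population = [[0, 1], [1, 1], [1, 0]]
for _ in range(10):
    population = employed_bee_phase(population, evaluate, rng)
best = max(population, key=evaluate)
```

On this toy split, the noise-only mask `[0, 1]` scores 0.5, while any mask that includes feature 0 scores 1.0, so the search settles on a subset containing the informative feature.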

The step-wise working of the proposed method is depicted in Fig. 2. The above algorithm consists of three main parts: the objective function, the modification, and the evaluation. The fitness function, denoted F(S), evaluates the quality of a feature subset S and is defined as the accuracy of a KNN classifier trained on the selected features. Initially, a population P of binary feature vectors is established, where each vector represents a potential solution, and parameters such as the population size (N) and the number of features (D) are set. A KNN classifier is then trained using the best-selected features from S. During testing, for each instance in the test dataset (Xtest), the algorithm finds the k nearest neighbors in the training dataset (Xtrain) using Euclidean distance and assigns the class label by majority vote among these k neighbors. The accuracy of the KNN classifier on Xtest is then evaluated against the true labels (ytest). Overall, the algorithm iteratively optimizes feature selection using M-ABC with FA and then assesses the selected features with a KNN classifier for heart disease prediction. Its goal is to enhance prediction accuracy while keeping computational complexity to a minimum.

KNN is a non-parametric classification approach that assigns each instance to a class according to the majority vote of its k nearest neighbors in the feature space. To classify an instance, the algorithm computes the distance between it and every instance in the training set, selects the k nearest neighbors based on these distances, and assigns the majority class among them. After feature selection with the M-ABC algorithm, the chosen features constitute the subset most pertinent for predicting heart disease, and the KNN classifier is trained using these features as input. The trained classifier then uses the chosen attributes of new instances to classify them into groups such as pathological or normal. In summary, the proposed M-ABC algorithm, which incorporates the Firefly Algorithm, chooses the best subset of features for heart disease prediction, and the KNN classifier trained on those features predicts the class labels of new instances based on their nearest neighbors in the feature space.

Fig. 2.

Flowchart of the proposed method.

Preprocessing of the dataset involved several steps to ensure data quality and consistency. Records with a significant number of missing entries were removed, and missing values in other attributes were imputed using median values. Continuous features were standardized using Z-score normalization to ensure uniform scaling, and categorical features were encoded using one-hot encoding. Feature selection was carried out using the Modified ABC algorithm, which reduces dimensionality and improves the model’s efficiency. Model evaluation was carried out using 10-fold stratified cross-validation to maintain the class distribution within each fold. This method ensures that every data point is used for both training and testing, providing a comprehensive view of the model’s performance.
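Z-score normalization and stratified fold assignment can be sketched as below. This is a minimal illustration with hypothetical helper names and toy values; it uses the population standard deviation and assumes the column is not constant. Two folds are used to keep the example small, whereas the study uses ten.

```python
import random
from statistics import mean, pstdev

def z_score(column):
    """Standardize a continuous column to zero mean and unit variance."""
    mu, sigma = mean(column), pstdev(column)
    return [(v - mu) / sigma for v in column]

def stratified_folds(labels, n_folds, rng):
    """Assign each sample index to a fold while preserving class proportions."""
    folds = [[] for _ in range(n_folds)]
    for cls in sorted(set(labels)):
        idx = [i for i, y in enumerate(labels) if y == cls]
        rng.shuffle(idx)
        for position, i in enumerate(idx):
            folds[position % n_folds].append(i)
    return folds

labels = [0] * 6 + [1] * 4                 # toy labels with a 60/40 class split
folds = stratified_folds(labels, 2, random.Random(0))
scaled = z_score([120.0, 130.0, 140.0])    # toy resting blood pressure values
```

Each fold serves once as the test set while the remaining folds form the training set, so every record is tested exactly once.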

Experimental evaluation

This section describes the simulation of the proposed framework, including the simulation environment and the experimental setup. Our objective is to assess the overall efficacy of the framework and to compare its performance with that of conventional federated learning models. To this end, we carried out a simulation consisting of four thousand communication rounds. The simulations were implemented in Python using the PyTorch machine learning library and run on an Intel® Core™ i7-8550 CPU operating at 4 GHz. All experiments were conducted within this environment without any deviations. Data preprocessing comprised cleaning, standardization, encoding of categorical variables, and feature selection with M-ABC to reduce dimensionality. To address class imbalance, the Synthetic Minority Over-sampling Technique (SMOTE) was used. This comprehensive approach supports the claims regarding the model’s robustness and effectiveness in heart disease prediction.
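The core idea of SMOTE is to create synthetic minority-class samples by interpolating between a minority sample and one of its nearest minority-class neighbors. The sketch below is a simplified illustration of that idea, not the library implementation the authors may have used; the function name, the parameter defaults, and the toy points are assumptions.

```python
import random

def smote(minority, n_new, k=2, rng=None):
    """Create n_new synthetic points by interpolating between a minority
    sample and one of its k nearest minority-class neighbors."""
    rng = rng or random.Random()
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        neighbors = sorted(
            (p for p in minority if p is not base),
            key=lambda p: sum((a - b) ** 2 for a, b in zip(base, p)),
        )[:k]
        other = rng.choice(neighbors)
        gap = rng.random()  # position along the segment from base to other
        synthetic.append([a + gap * (b - a) for a, b in zip(base, other)])
    return synthetic

minority = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]]  # toy minority-class points
new_points = smote(minority, n_new=4, rng=random.Random(1))
```

Because each synthetic point lies on a segment between two real minority samples, oversampling of this kind is normally applied to the training folds only, so that test data stay untouched.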

Results

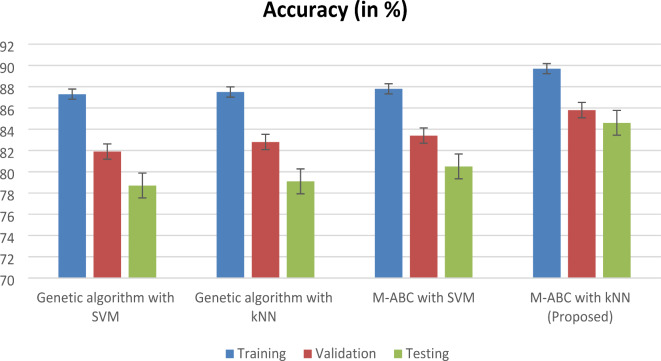

The proposed framework for heart disease prediction outperforms the existing techniques considered: the Genetic algorithm with SVM, the Genetic algorithm with KNN, and M-ABC with SVM. It obtains an accuracy of 89.7%, which is higher than the accuracy attained by any of these algorithms. The accuracy of the proposed model is shown in Fig. 3 and Table 3.

Fig. 3.

Accuracy of the proposed model with comparison to existing methods.

Table 3.

Comparison of the accuracy of the proposed model.

| Technique | Accuracy (%) |
|---|---|
| Genetic algorithm with SVM | 87.3 |
| Genetic algorithm with KNN | 87.5 |
| M-ABC with SVM | 87.8 |
| M-ABC with KNN (proposed) | 89.7 |

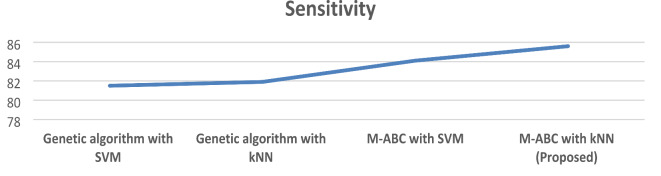

The proposed framework obtains a sensitivity of 85.6%, which indicates that it can accurately recognize positive cases. This sensitivity is higher than that of the other methods. Table 4 and Fig. 4 show the sensitivity of the proposed model.

Table 4.

Comparison of the sensitivity of the proposed model.

| Technique | Sensitivity (%) |
|---|---|
| Genetic algorithm with SVM | 81.5 |
| Genetic algorithm with KNN | 81.9 |
| M-ABC with SVM | 84.1 |
| M-ABC with KNN (proposed) | 85.6 |

Fig. 4.

Comparison of the proposed model for sensitivity.

The proposed framework achieves a recall of 84.5%, which demonstrates that it is successful in retrieving positive instances. This recall is higher than that of the other algorithms evaluated. Table 5 and Fig. 5 show the recall of the proposed model.

Table 5.

Comparison of the proposed model in terms of recall.

| Technique | Recall (%) |
|---|---|
| Genetic algorithm with SVM | 80.8 |
| Genetic algorithm with KNN | 81.4 |
| M-ABC with SVM | 83.2 |
| M-ABC with KNN (proposed) | 84.5 |

Fig. 5.

Comparison of recall for the proposed model.

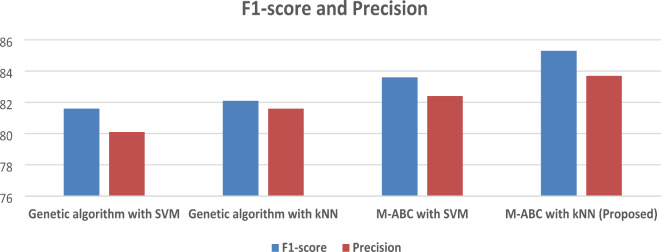

The F1-score of the proposed framework is 85.3%, which is also higher than that of the other algorithms and is consistent with its high sensitivity and recall. Table 6 and Fig. 6 show the F1-score and precision of the proposed model.

Table 6.

F1-score and precision comparison of the proposed model.

| Technique | F1-score (%) | Precision (%) |
|---|---|---|
| Genetic algorithm with SVM | 81.6 | 80.1 |
| Genetic algorithm with KNN | 82.1 | 81.6 |
| M-ABC with SVM | 83.6 | 82.4 |
| M-ABC with KNN (proposed) | 85.3 | 83.7 |

Fig. 6.

F1-score and precision comparison of the proposed model.

The precision of the proposed framework is 83.7%, which is higher than that of the existing techniques and is in line with its high accuracy and sensitivity.

The specificity of the proposed framework is 94.8%, which is higher than that of the other techniques. It is important to consider specificity alongside sensitivity for a comprehensive evaluation of the model’s performance.
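For reference, the evaluation measures discussed in this section can be derived from the four cells of a binary confusion matrix. The sketch below uses the textbook definitions; the function name and the counts in the usage note are hypothetical and are not taken from the study. Under these definitions sensitivity and recall are the same quantity (the true positive rate), so the sketch reports a single value for both.

```python
def classification_metrics(tp, fp, tn, fn):
    """Compute standard binary classification metrics from confusion counts.

    tp, fp, tn, fn are the numbers of true positives, false positives,
    true negatives, and false negatives.
    """
    total = tp + fp + tn + fn
    sensitivity = tp / (tp + fn)   # also called recall or true positive rate
    specificity = tn / (tn + fp)   # true negative rate
    precision = tp / (tp + fp)     # positive predictive value
    f1 = 2 * precision * sensitivity / (precision + sensitivity)
    return {
        "accuracy": (tp + tn) / total,
        "sensitivity": sensitivity,
        "specificity": specificity,
        "precision": precision,
        "f1": f1,
    }
```

For example, `classification_metrics(tp=40, fp=10, tn=45, fn=5)` gives an accuracy of 0.85 and a precision of 0.8 for these made-up counts.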

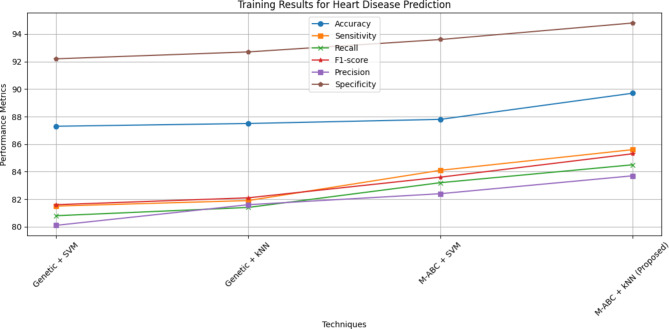

Overall, the proposed framework, which combines an M-ABC optimizer for healthcare user sites and KNN for the cloud model, demonstrates better accuracy, sensitivity, and recall than the existing learning algorithms. Table 7 and Fig. 7 show the specificity of the proposed model. The overall performance is summarized in Table 8 and Fig. 8. The training, validation, and testing results for heart disease diagnosis are shown in Fig. 9. The proposed algorithm achieves better results because the MABC algorithm selects an optimal feature subset for the kNN classifier.

Table 7.

Analysis of specificity for the proposed model.

| Technique | Specificity (%) |
|---|---|
| Genetic algorithm with SVM | 92.2 |
| Genetic algorithm with KNN | 92.7 |
| M-ABC with SVM | 93.6 |
| M-ABC with KNN (proposed) | 94.8 |

Fig. 7.

Specificity of the proposed model.

Table 8.

Performance of full feature set.

| Technique | Accuracy (%) | Sensitivity (%) | Recall (%) | F1-score (%) | Precision (%) | Specificity (%) |
|---|---|---|---|---|---|---|
| Genetic algorithm with SVM | 87.3 | 81.5 | 80.8 | 81.6 | 80.1 | 92.2 |
| Genetic algorithm with KNN | 87.5 | 81.9 | 81.4 | 82.1 | 81.6 | 92.7 |
| M-ABC with SVM | 87.8 | 84.1 | 83.2 | 83.6 | 82.4 | 93.6 |
| M-ABC with KNN (proposed) | 89.7 | 85.6 | 84.5 | 85.3 | 83.7 | 94.8 |

Fig. 8.

Training result for heart disease prediction.

Fig. 9.

Training, validation, and testing results for heart disease prediction.

Discussion and comparison with other models

Genetic algorithm with SVM

During the training phase, the genetic algorithm with support vector machines (SVM) achieves an accuracy of 87.3%, which indicates how well the model fits the training data. Accuracy declines to 81.9% during validation, which indicates that the model may not generalize well to new data.

On previously unseen data, accuracy drops further to 78.7% in the testing phase, which indicates a corresponding reduction in the model’s predictive capacity.

Genetic algorithm with KNN

During training, the genetic algorithm with KNN achieves an accuracy of 87.5%, which is comparable to that of the SVM-based technique. Its validation accuracy of 82.8% is marginally higher than that of the SVM-based technique, which indicates a modest improvement in generalization.

During the testing phase, however, the accuracy falls to 79.1%, which is comparable to the SVM-based technique and indicates limited usefulness on unseen data.

M-ABC with SVM

M-ABC with SVM achieves a training accuracy of 87.8%, slightly higher than both preceding approaches. Its validation accuracy of 83.4% is also higher than that of the approaches based on genetic algorithms, which indicates improved generalization. During the testing phase, the accuracy remains relatively high at 80.5%, which indicates reasonable performance on unseen data.

Proposed

M-ABC with KNN achieves the best training accuracy of all the techniques, 89.7%, as shown in Table 3; this indicates that it fits the training data more accurately than any other method. Its validation accuracy of 85.8% is the highest among the compared methods, which indicates strong generalization.

In the testing phase, the accuracy remains high at 84.6%, indicating robust performance on unseen data.

Overall, the proposed M-ABC with KNN technique demonstrates the highest accuracy across all phases, suggesting its effectiveness in predicting heart disease. It exhibits strong performance during training, good generalization to new data during validation, and robustness when applied to unseen data during testing. Accuracy is the proportion of correct predictions among all instances, and the classification error is the proportion of incorrect predictions. Three performance measurements are used to determine crucial aspects of heart disease. Understanding the behavior of the various groups aids in making informed decisions about feature selection.

The M-ABC optimizer utilizes a set of optimized features, as outlined in Table 7, which provides a comprehensive overview of the achieved prediction accuracy. In the first experiment, the M-ABC optimizer achieved an accuracy of 87% with five features. On the same dataset, it achieved 87% accuracy with six features, and the accuracy increased to 89% when eight features were employed. Table 9 compares the proposed model with other studies.

Integrating the MABC-optimized kNN model into healthcare IT can enable automated, real-time risk prediction of heart disease, supporting early diagnosis and personalized treatment planning. This integration can be achieved through APIs or cloud-based services, ensuring scalability and seamless adoption in various healthcare settings. The proposed method can be extended to other diseases by using MABC for feature optimization on the relevant datasets, which makes it adaptable to different medical conditions. Federated learning allows decentralized training across multiple healthcare institutions, preserving patient privacy and improving the model’s generalizability.
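The feature-subset experiments discussed in this section follow the wrapper pattern: a candidate subset is encoded as a binary mask and scored by the accuracy of a KNN classifier trained on the selected features only. The sketch below illustrates that pattern with the standard library. It is not the authors' M-ABC: a plain one-bit-flip local search stands in for the bee phases, leave-one-out accuracy stands in for the study's cross-validation, and all names (`knn_predict`, `mask_fitness`, `local_search`) and the default `k=3` are assumptions made for illustration.

```python
import math
import random
from collections import Counter


def knn_predict(train_x, train_y, query, k=3):
    """Predict the majority label among the k nearest training points."""
    order = sorted(range(len(train_x)), key=lambda i: math.dist(train_x[i], query))
    return Counter(train_y[i] for i in order[:k]).most_common(1)[0][0]


def mask_fitness(x, y, mask, k=3):
    """Leave-one-out KNN accuracy using only the features where mask is 1."""
    if not any(mask):
        return 0.0  # an empty feature subset cannot classify anything
    reduced = [[v for v, keep in zip(row, mask) if keep] for row in x]
    correct = 0
    for i, row in enumerate(reduced):
        rest_x = reduced[:i] + reduced[i + 1:]
        rest_y = y[:i] + y[i + 1:]
        correct += knn_predict(rest_x, rest_y, row, k) == y[i]
    return correct / len(x)


def local_search(x, y, n_features, iterations=50, seed=0):
    """Flip one mask bit at a time; keep the change only if fitness improves."""
    rng = random.Random(seed)
    mask = [rng.randint(0, 1) for _ in range(n_features)]
    best = mask_fitness(x, y, mask)
    for _ in range(iterations):
        candidate = mask[:]
        candidate[rng.randrange(n_features)] ^= 1
        score = mask_fitness(x, y, candidate)
        if score > best:
            mask, best = candidate, score
    return mask, best
```

A full artificial bee colony would maintain a population of such masks and add onlooker and scout phases to escape local optima; the fitness function would stay the same.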

Table 9.

Comparison with other studies.

Conclusion

This study describes an optimal feature selection approach with privacy awareness that can be used to accurately predict heart disease in healthcare. The proposed system combines the k-NN classifier with the M-ABC optimization method to improve heart disease prediction in a healthcare system while addressing the privacy issues surrounding such predictions. The main goals of this study are to improve the prediction of heart disease, reduce training time, and improve communication efficiency. We validated the proposed framework by comparing it with the baseline genetic algorithm with SVM, genetic algorithm with KNN, and M-ABC with SVM algorithms, examining both its communication efficiency and its prediction performance across different model parameters. The proposed framework showed better precision, sensitivity, accuracy, and communication efficiency. It gives 2.6% better accuracy, 1.9% better sensitivity, 1.8% better recall, 1.9% better F-score, 1.7% better precision, and 1.6% better specificity. The proposed model has some limitations: it might not be able to handle many IoMT client sites at once, and changing the learning rate could change the whole model. Privacy concerns make it hard to diagnose, treat, and monitor health problems. Although the proposed MABC with kNN algorithm is a viable approach to heart disease prediction, particularly in terms of interpretability and efficiency for smaller heart disease datasets, it has limitations compared to newer machine learning methods such as ensemble models and deep learning. In the future, we will extend this work to the prediction of other serious illnesses, such as Parkinson’s disease, breast cancer, diabetes, and skin cancer. Future research could also explore advanced machine learning techniques, including deep learning models such as CNNs and RNNs, to improve prediction accuracy and adapt to medical datasets.

Acknowledgements

The authors extend their appreciation to the King Salman Centre for Disability Research for funding this work through Research Group no KSRG-2023-348.

Author contributions

All authors have equally contributed.

Data availability

All data are publicly available at the following link: https://archive.ics.uci.edu/dataset/45/heart+disease.

Declarations

Competing interests

The authors declare no competing interests.

Footnotes

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

Tehseen Mazhar, Email: tehseenmazhar719@gmail.com.

Muhammad Badruddin Khan, Email: mbkhan@imamu.edu.sa.

