Abstract

This study presents a novel training technique, visual + electrotactile proprioception training (visual + EP training), which provides additional proprioceptive information via the tactile channel during motor training to enhance training effectiveness. In this study, electrotactile proprioception delivers finger aperture distance information in real time by mapping the frequency of electrical stimulation to finger aperture distance. To test the effect of visual + EP training, twenty-four healthy subjects participated in an experiment in which they matched their finger aperture distance to a distance displayed on a screen. Subjects were divided into three groups: the first group received visual training, and the other two groups received visual + EP training with or without a post-training test with electrotactile proprioception. Finger aperture control error was measured before and after training (baseline, 15-min post-training, and 24-h post-training). The experimental data suggest that both training methods decreased finger aperture control error at 15-min post-training. However, at 24-h post-training, the training effect was fully retained only in the subjects who received visual + EP training, whereas it washed out in the subjects who received visual training. Distribution analyses based on Bayesian inference suggest that the most likely mechanism of this long-term retention is proprioceptive recalibration. Such artificially administered sensation has the potential to improve motor control accuracy in a variety of applications.

Subject terms: Biomedical engineering, Decision

Introduction

In day-to-day manual tasks such as grasping a bottle or opening a door, the first step is visually perceiving the location of a target relative to an end effector (i.e., the fingertip)1. The brain processes these visually perceived locations and then issues commands to each muscle based on prior experience2,3. However, these motor commands are often inaccurate and need to be updated or tuned in real time, especially when the manipulation requires high control accuracy (e.g., neurosurgery). Therefore, the central nervous system (CNS) continuously compares the perceived distance with the distance executed by the fingers and adjusts motor output to correct the error4–6. However, real-time tuning often fails to correct this error, mainly because of an appreciable visual-proprioceptive mismatch. Note that the nervous system updates motor output based not only on visual feedback but also on proprioceptive feedback, relying on the most reliable sensory information available (e.g., mostly on proprioception when the operator’s finger is out of sight)7–9. When both visual and proprioceptive feedback are available, the estimates from the two modalities are combined as a weighted average4. The main reason for the visual-proprioceptive mismatch is the difference in neural encoding schemes10. Vision represents the body spatially with respect to the visual surroundings1, whereas proprioception represents it based on the deformation of muscles, tendons, and skin5,11,12. Furthermore, the non-idealities of each sensory modality increase the mismatch.

Visual-proprioceptive mismatch results in subsequent errors in sensory integration, which can limit the accuracy of finger aperture estimates and reach-to-grasp tasks13,14. Previous work has shown that the human sensorimotor system codes motor commands less effectively when a visual-proprioceptive mismatch is induced, either through bodily illusions or visuomotor training15–17. Because the human sensorimotor system depends mostly on visual rather than somatosensory input, it recalibrates movement toward the visual image17,18. The simplest way to address this error is to rely fully on visual feedback and correct the execution error. However, such an approach requires high attention and full availability of vision, which is often partially or fully occluded in motor tasks. For example, in neurosurgery, when applying the right amount of pinch to tissues or nerves, visual feedback is intermittently blocked by the tissue structure or surgical tools19.

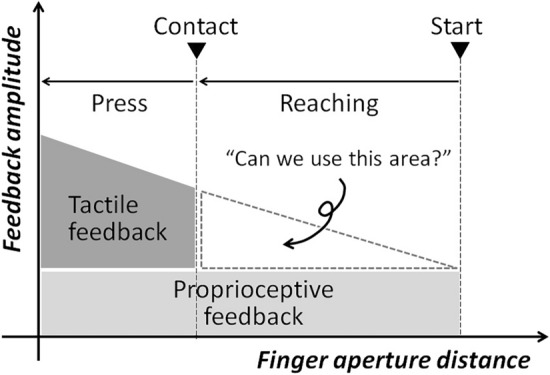

To overcome visual-proprioceptive mismatch and increase real-time motor control accuracy, researchers have employed audiovisual assistance20. For instance, auditory feedback systems convey interaction force or distance to the target by changing the intensity or frequency of a sound21,22. Visual assistance is another popular approach, providing the necessary information as a graphical representation23 or color tone24. However, although these audiovisual approaches deliver information effectively through a user-friendly interface, the audiovisual channels are often occupied by other communication and planning tasks. Further, they impose a high cognitive load. Therefore, engaging the audiovisual channels is often ineffective, especially during high-concentration tasks24. Moreover, the intrinsic visual-proprioceptive matching error calls these approaches into question13. Haptic feedback via the tactile pathway is another sensory modality that can provide additional sensory information while requiring less cognitive load than audiovisual assistance20,25–28. However, haptic feedback is mostly given after the end effector touches the object29, and therefore it does not improve control accuracy during the reaching phase. Haptic feedback is sometimes given during the reaching phase before contact; however, it is then provided as a repulsive force30. It therefore distorts the motor output, reduces fine motor control accuracy, and can compromise mechanical stability. A new approach is thus needed to compensate for the limited finger control accuracy during interactive finger reaching, before physical contact is made at the fingertip.

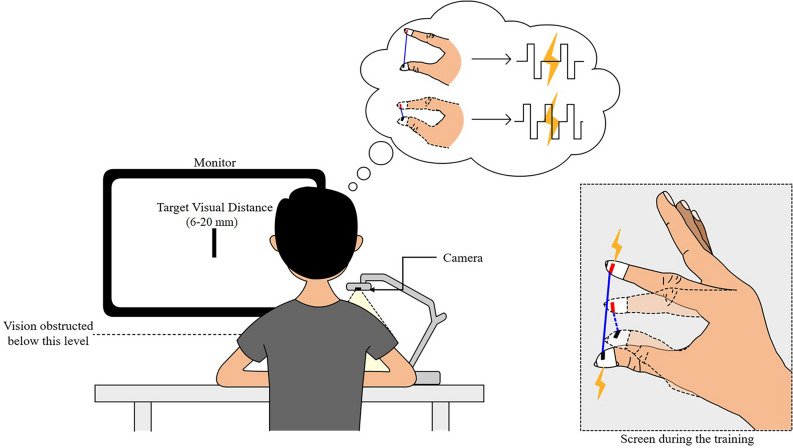

We note that the tactile channel on the fingertip is usually unoccupied during finger reaching before physical contact is made, even though this channel conveys sensory information intuitively (see Fig. 1). We employed frequency-modulated electrotactile feedback as a modality to deliver the finger aperture distance (between the thumb and index finger) to the fingertips. Tactile feedback evoked by alternating electrical current can deliver sensory information effectively, owing to its fast and flexible parameter modulation and minimal mechanical perturbation31,32. Electrical stimulation (E-stim) applied to the cutaneous nerves innervating the skin of the fingertip opens voltage-gated ion channels and evokes afferent volleys that are perceived as tactile feedback on the fingertip. Although E-stim also causes a slight buzzing-like sensation (i.e., tingling) at the stimulation site through the excitation of nerve fibers attached to mechanoreceptors33,34, it is minimally disturbing, and its frequency is well recognized35.

Fig. 1.

Concept of electrotactile proprioception and its potential effect on enhancing motor control accuracy. The tactile channel on the fingertip is available during the reaching phase of finger movement.

We call this new sensory modality, which delivers distance information through frequency-modulated electrotactile feedback, electrotactile proprioception, as it employs the tactile channel to deliver finger aperture distance (see Fig. 1)36. Note that finger aperture distance is intrinsically proprioceptive information, as each finger aperture distance maps onto specific proprioceptive feedback from the forearm and fingers. E-stim was applied with a frequency inversely related to the distance between the fingertip and the target. Electrotactile proprioception is uniquely positioned to narrow the mismatch between visually and proprioceptively perceived distances. Proprioceptive feedback represents spatial information in intrinsic coordinates and visual feedback in extrinsic coordinates, whereas electrotactile proprioception provides spatial information in both, because the body (intrinsic coordinates) contacts the external target (extrinsic coordinates). In other words, electrotactile proprioception would generate a new schema that represents the extrinsic visual distance by a set of intrinsic coordinates. This hybrid representation would bridge visual and proprioceptive feedback and decrease visual-proprioceptive matching errors.

Further, it is important to apply electrotactile proprioception intuitively, as intuitiveness is one of the strengths of the tactile channel in delivering sensory information compared with other sensory channels. Notably, visual and auditory channels are known for their high attentional demands, and a few studies have pointed out that attentional demands impede the motor learning process37–39. Other studies on multisensory spatial tasks have also pointed out that it is critical to provide spatial information in the most intuitive way, minimizing attentional demands40,41. Even the tactile channel is not free from attentional demands, because tactile augmentation is typically applied to body locations only indirectly associated with the given motor task (i.e., indirect sensory cues). To preserve the intuitiveness of the tactile channel as much as possible, the presented electrotactile proprioception was designed to deliver the finger aperture distance to each fingertip, which is directly associated with the given motor task. In this study, we tested the effect of electrotactile proprioception on the control accuracy of interactive finger reaching when it is applied during visual-proprioceptive training.

Goal and hypotheses

The overall goal of this study is to determine the effect of electrotactile proprioception on the control accuracy of interactive finger reaching. The first hypothesis is that introducing electrotactile proprioception, through E-stim with a stimulation frequency inversely related to the distance, will decrease the error in interactive finger reaching. Because electrotactile proprioception is provided as frequency-coded information based on the visually displayed target distance, it should in theory act as error-free sensory feedback for subjects to rely on. The second hypothesis is that visual-proprioceptive training with both visual feedback and electrotactile proprioception (hereafter, visual + EP training) will have a lasting effect on reducing the error, whereas conventional visual-proprioceptive training with visual feedback only (hereafter, visual training) will not7,13,42. This hypothesis is based on the idea that the hybrid representation of electrotactile proprioception, bridging the extrinsic visual coordinates and the intrinsic proprioceptive coordinates, will be more effective in reducing visual-proprioceptive mismatch than visual feedback, which depends solely on extrinsic visual coordinates.

Methods

Human subject recruitment

The study was performed in accordance with the relevant guidelines and regulations described in the protocol approved by the Institutional Review Board of Texas A&M University (IRB2020-0481). Twenty-four healthy human subjects (11 females and 13 males) participated in the study, and all gave informed consent to participate. Subjects with any known problem in using their hands, a history of neurological disease or disorder, or any implanted electronic medical device were excluded from the study.

Experiment preparation

Movement of the two fingers (index finger and thumb) was captured by a high-resolution camera, processed by an image processing unit, and converted by a microcontroller into the corresponding stimulus based on a distance-frequency mapping. The corresponding current pulses were applied to the palmar digital nerves of the index finger and thumb to evoke electrotactile feedback on each fingertip. An H-bridge circuit built from 2N3904 transistors and resistors converted the control signal into alternating current between the two electrodes. The circuit was powered by an adjustable DC power supply with over-current protection. The biphasic signal from the microcontroller drove the H-bridge circuit to produce a biphasic electrical stimulus composed of a 2-ms positive pulse and a 2-ms negative pulse, followed by an adjustable resting period. Biphasic stimulation was chosen to minimize potential charge imbalance at the site of application43,44. Square gel electrodes (10 mm × 10 mm) were custom-made from a sheet of conductive carbon fiber with an adhesive hydrogel. A multi-stranded 36-AWG wire was attached to the carbon layer with silver conductive epoxy to provide a low-impedance current path. The electrodes were attached to the distal phalangeal region of the thumb and index finger, where the palmar digital nerves innervate. Tape was wrapped around the thumb and index finger to keep a consistent connection between the electrodes and the skin (see Fig. 2). Conductive gel was applied at each electrode site to reduce skin impedance. The biphasic voltage from the H-bridge driver circuit delivered E-stim to the subjects via these electrodes.
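With a fixed 2-ms positive and 2-ms negative phase, the stimulation frequency is set by the resting period alone: one cycle lasts 1/f, so the rest must be 1/f minus 4 ms. The sketch below computes this and lists one cycle of the H-bridge drive as (polarity, duration) pairs. It assumes frequency is controlled only through the rest period, as the description implies; the function names are hypothetical, and this is not the study's microcontroller code.

```python
"""Timing of one biphasic E-stim cycle (illustrative sketch, not the study's firmware)."""

PHASE_MS = 2.0  # duration of each positive and negative pulse, as described in the text


def rest_period_ms(freq_hz: float, phase_ms: float = PHASE_MS) -> float:
    """Return the resting time (ms) that makes one biphasic cycle last 1/freq_hz."""
    if freq_hz <= 0:
        raise ValueError("frequency must be positive")
    period_ms = 1000.0 / freq_hz
    rest = period_ms - 2 * phase_ms
    if rest < 0:
        # Two 2-ms phases cannot fit in a period shorter than 4 ms (i.e., above 250 Hz).
        raise ValueError(f"{freq_hz} Hz is too fast for {phase_ms}-ms phases")
    return rest


def cycle_segments(freq_hz: float, phase_ms: float = PHASE_MS):
    """Return (polarity, duration_ms) pairs for one cycle: +1, -1, then 0 (rest).

    +1 and -1 stand for the H-bridge driving current in opposite directions and
    0 for no current. Equal phase durations at equal amplitude give zero net
    charge per cycle, which is the point of biphasic stimulation.
    """
    return [(+1, phase_ms), (-1, phase_ms), (0, rest_period_ms(freq_hz, phase_ms))]


if __name__ == "__main__":
    for f in (10, 70, 80):
        print(f, "Hz ->", round(rest_period_ms(f), 3), "ms rest")
```

Over the 10–80 Hz range used in this study, the rest period under this assumption runs from 96 ms down to 8.5 ms, and the two fixed phases cap the achievable frequency at 250 Hz.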

Fig. 2.

The visual-proprioceptive matching experiment. The camera monitored the finger aperture in real time throughout the experiment. During the training period, all subjects saw their fingers on the screen with a virtual line overlaid on the gap between the fingers. For subjects in Group EV and Group EV-s, the stimulation frequency increased as the gap between the fingers decreased, and during training these subjects were asked to associate the finger aperture with the corresponding electrical stimulation frequency. During the test sessions before and after training, the target distance was displayed on the screen and vision of the fingers was obstructed during finger movement.

Mapping between finger aperture distance and E-stim frequency

Finger aperture distance was mapped to stimulation frequency with an inverse relationship, so that subjects would intuitively perceive a closer distance between the fingertips as a higher frequency. The mapping function between the finger aperture distance and the stimulation frequency is given by the equation below.

| 1 |

where fmax is the maximum stimulation frequency used to evoke a pulsing sensation for each subject, dmax and dmin are the finger aperture distances of 25 mm and 0 mm (i.e., fully closed), respectively, and d is the current finger aperture distance (displayed target distances ranged from 6 to 20 mm). According to the equation, subjects start to feel the slowest pulsing sensation (fmin = 10 Hz) when the distance between the fingertips is 25 mm, and the pulsing frequency increases up to fmax as the finger aperture distance decreases to zero.
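The text specifies only the endpoints of the mapping: 10 Hz at a 25-mm aperture, rising to the subject's maximum frequency at 0 mm. The sketch below assumes a linear ramp between those endpoints, which is one mapping consistent with them; a strict inverse proportionality (frequency proportional to 1/d) would diverge at d = 0, so Eq. 1 itself may take a different form. The function name, the default maximum of 80 Hz, and the clamping of out-of-range apertures are illustrative assumptions.

```python
"""Distance-to-frequency mapping sketch (assumed linear; Eq. 1 in the paper may differ)."""

D_MIN_MM = 0.0    # fully closed fingers
D_MAX_MM = 25.0   # aperture at which the slowest pulsing is felt
F_MIN_HZ = 10.0   # slowest pulsing frequency


def aperture_to_frequency(d_mm: float, f_max_hz: float = 80.0) -> float:
    """Map finger aperture (mm) to stimulation frequency (Hz).

    Assumption: frequency falls linearly from f_max_hz at D_MIN_MM to F_MIN_HZ
    at D_MAX_MM. Apertures outside [D_MIN_MM, D_MAX_MM] are clamped.
    """
    d = min(max(d_mm, D_MIN_MM), D_MAX_MM)
    fraction_closed = (D_MAX_MM - d) / (D_MAX_MM - D_MIN_MM)  # 0 when open, 1 when closed
    return F_MIN_HZ + (f_max_hz - F_MIN_HZ) * fraction_closed


if __name__ == "__main__":
    for d in (25, 20, 12.5, 6, 0):
        print(f"{d:>5} mm -> {aperture_to_frequency(d):.1f} Hz")
```

Under this assumed ramp with an 80-Hz maximum, the 6–20 mm target range maps to frequencies from about 63 Hz (6 mm) down to 24 Hz (20 mm).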

Experiment procedure

Baseline session: Measure the baseline visual-proprioceptive matching error

First, subjects were asked to hold their index finger and thumb far apart. Subjects were then asked to look at the monitor screen, where lines of variable length representing the target length were displayed (see Fig. 2). Eight line lengths between 6 and 20 mm, in steps of 2 mm, were displayed in random order, one at a time. Without visual feedback of their hands, subjects were asked to move their fingers to match the finger aperture distance to the length of the line displayed on the screen. Subjects were given 10 s to position their fingers before the next target line was displayed. After 10 s, the distance between the fingertips was measured and recorded with the camera above the subject’s hands, and the subjects were then asked to return their index finger and thumb to the initial position (i.e., far apart). The whole procedure (eight lines displayed in random order) was repeated five times to obtain sufficient data to test the hypotheses (see Fig. 3).
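The session structure above (eight target lengths from 6 to 20 mm in 2-mm steps, each shown once in random order, repeated five times) can be generated as follows. The sketch assumes shuffling within each repetition, which is one reading of "displayed in a random order"; the names and the seed argument are illustrative.

```python
"""Randomized trial schedule for one test session (illustrative sketch)."""
import random

TARGETS_MM = list(range(6, 21, 2))  # 6, 8, ..., 20 mm: eight target lengths
N_BLOCKS = 5                        # the eight targets are repeated five times


def session_schedule(n_blocks: int = N_BLOCKS, seed=None) -> list[list[int]]:
    """Return n_blocks blocks, each a random permutation of all target lengths."""
    rng = random.Random(seed)  # local generator, so a seed makes the schedule reproducible
    schedule = []
    for _ in range(n_blocks):
        block = TARGETS_MM.copy()
        rng.shuffle(block)
        schedule.append(block)
    return schedule


if __name__ == "__main__":
    for i, block in enumerate(session_schedule(seed=1), start=1):
        print(f"block {i}: {block}")
```

Shuffling per block guarantees that every target appears exactly once per repetition, so each session yields five measurements per target length (40 trials in total).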

Fig. 3.

Timeline of the entire experiment. Group V and Group EV had 3 test sessions, and Group EV-s had 4 test sessions. Each session had 5 blocks of 8 trials, for a total of 40 trials per session; in each block, 8 target lines of 6–20 mm in steps of 2 mm were displayed on the screen in random order. Each trial lasted 10 s, with a 2-s gap between trials to reset the fingers to the initial stretched position. There was a 30-s gap between blocks to avoid finger fatigue. For all groups, visual feedback of the fingers was given only during the training period. In Group EV and Group EV-s, 10 min before the training period was used to establish the electrode locations on the index finger and thumb, the frequency range (fmin to fmax), and the stimulation voltage (Vsense) for each subject. In all groups, a 24-h gap preceded the final session, which tested retention.

Parameter setup session: Set amplitude and frequency range of E-stim to evoke electrotactile proprioception

First, the voltage was gradually increased to determine the perception threshold and the discomfort threshold, at which subjects reported any tactile perception or discomfort, respectively. The average of these two voltage levels was then used as the E-stim amplitude throughout the experiment, following its successful use in prior work35,41. The frequency range of the applied E-stim was also determined based on prior work and the subjects’ verbal reports. Based on prior work, the maximum frequency was initially set to 80 Hz and the minimum frequency to 10 Hz. The maximum frequency was reduced to 70 Hz for subjects who could not distinguish between 70 and 80 Hz but could distinguish between 60 and 70 Hz.
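The two setup rules described above (amplitude at the midpoint of the perception and discomfort thresholds, and the 80-Hz or 70-Hz upper limit) can be written as small functions. This is a sketch: the function names are hypothetical, the threshold voltages in the example are invented for illustration, and the text does not describe what happens for a subject who cannot discriminate either frequency pair, so the sketch simply raises an error in that case.

```python
"""Stimulation amplitude and frequency range selection (illustrative sketch)."""

F_MIN_HZ = 10


def stimulation_amplitude(v_perception: float, v_discomfort: float) -> float:
    """Midpoint between the perception and discomfort voltage thresholds."""
    if v_discomfort <= v_perception:
        raise ValueError("discomfort threshold must exceed perception threshold")
    return (v_perception + v_discomfort) / 2


def select_f_max(distinguishes_70_80: bool, distinguishes_60_70: bool) -> int:
    """Apply the rule described in the text for the upper frequency limit."""
    if distinguishes_70_80:
        return 80
    if distinguishes_60_70:
        return 70
    # The described protocol does not cover this case.
    raise ValueError("subject falls outside the described selection rule")


if __name__ == "__main__":
    # Hypothetical thresholds for one subject, not measured data.
    v_stim = stimulation_amplitude(18.0, 22.0)
    f_max = select_f_max(distinguishes_70_80=False, distinguishes_60_70=True)
    print(f"amplitude = {v_stim} V, range = {F_MIN_HZ}-{f_max} Hz")
```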

Training session: Map the displayed distance to the proprioceptively perceived distance through visual training or visual + EP training

The 24 subjects were randomly assigned to three groups (8 subjects per group) and underwent a 5-min training session with a different type of feedback on finger aperture distance. Group V (n = 8) received visual-proprioceptive matching training with both the target length and the finger aperture displayed on the screen (see Fig. 2). Specifically, a top view of the subject’s hand was captured by a camera and displayed on the monitor screen, with a virtual line overlaid onto the finger aperture (see Fig. 2). During the training, subjects were asked to move their fingers freely while the finger aperture distance was displayed on the screen. The other subjects (n = 16) received electrotactile proprioception on top of the visual display. During the training, these subjects were asked to move their fingers freely with both electrotactile proprioception and the visual display, and at the same time to associate the finger aperture distance with the electrotactile proprioception (i.e., the frequency of E-stim). We expected these subjects to reduce visual-proprioceptive matching error during training with both visual and electrotactile cues. We categorized these 16 subjects as either Group EV or Group EV-s, depending on whether they had a special post-training test session with electrotactile proprioception: subjects in Group EV-s had this session, while subjects in Group EV did not (see Fig. 3).
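The text states only that subjects were randomly split into three groups of eight; the exact randomization procedure is not given. A minimal sketch of one common approach, shuffling the subject list and cutting it into equal parts, using hypothetical integer subject IDs and an optional seed for reproducibility:

```python
"""Random assignment of subjects to equal-sized groups (illustrative sketch)."""
import random

GROUPS = ("V", "EV", "EV-s")


def assign_groups(subject_ids, groups=GROUPS, seed=None) -> dict[str, list]:
    """Shuffle subject IDs and split them into equal-sized groups."""
    ids = list(subject_ids)
    if len(ids) % len(groups) != 0:
        raise ValueError("number of subjects must be divisible by number of groups")
    rng = random.Random(seed)
    rng.shuffle(ids)
    size = len(ids) // len(groups)
    return {name: ids[i * size:(i + 1) * size] for i, name in enumerate(groups)}


if __name__ == "__main__":
    allocation = assign_groups(range(1, 25), seed=7)
    for name, members in allocation.items():
        print(name, sorted(members))
```

Simple shuffle-and-split does not balance covariates such as sex; stratified assignment would be an alternative if that mattered.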

Post-training session: Measure the aftereffect of visual training or visual + EP training on reducing visual-proprioceptive matching error

After the training (visual training for Group V and visual + EP training for Group EV and Group EV-s), two or three test sessions were conducted to measure the change in visual-proprioceptive matching error. During each test session, subjects were asked to reproduce a target line displayed on a screen with their finger aperture, without visual feedback or electrotactile proprioception on the finger aperture distance (except for the 1st post-training session of Group EV-s, described below; see Fig. 2). For Group V and Group EV, the 1st post-training test session was conducted 15 min after training, without the display of the finger aperture. The 2nd post-training test session for Group V and Group EV was conducted 24 h after training, again without the display of the finger aperture. For Group EV-s, the 1st post-training test session was conducted right after training, without visual display of the finger aperture but with electrotactile proprioception. The 2nd post-training test session for Group EV-s was conducted 3 min after completion of the 1st test session, i.e., 15 min post-training, corresponding to the 1st test session for Group V and Group EV. The 3rd post-training test session for Group EV-s was conducted 24 h after training, corresponding to the 2nd test session for Group V and Group EV. The experimental setup and timeline are depicted in Figs. 2 and 3, respectively.

Statistical method

To determine the effects of the independent factors on the dependent variable while accounting for across-subject variability, we analyzed the experimental results with a linear mixed model (SPSS 16, IBM, Chicago, IL, USA). Subject was set as a random factor because the unique neural characteristics of each subject can affect each measurement unequally. For between-subject comparisons, the training method (visual training or visual + EP training) was set as an independent factor, as each type of training was provided consistently to each group of subjects without random variation. For within-subject comparisons, the sampling point (baseline, 15-min post-training, and 24-h post-training) was set as an independent factor, as the three sampling points were shared by all subjects. To verify that the data satisfied the prerequisites of the linear mixed model analysis, we tested the normality of the data distribution with the Kolmogorov–Smirnov test. All datasets satisfied the condition of p > 0.05, so normality could be assumed.

For all statistical tests, the significance level was set at 0.05 (95% confidence interval). All statistical data are presented as mean ± STE (standard error) in the Results section, and all statistical comparisons are reported with the corresponding p values. In the rest of the paper, the group with visual training is referred to as Group V, and the groups with visual + EP training as Group EV and Group EV-s. The difference between Group EV and Group EV-s is that Group EV-s received an additional special post-training test session with electrotactile proprioception, while Group EV did not. Visual-proprioceptive matching error was calculated as the ratio of the difference between the actual distance and the displayed target line length to the displayed target line length (see Eq. 2).

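$$E = \frac{d_{\mathrm{m}} - d_{\mathrm{t}}}{d_{\mathrm{t}}} \tag{2}$$

where d_m is the measured finger aperture distance and d_t is the displayed target line length.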

Visual-proprioceptive matching error was also presented as relative matching error, calculated as the ratio of the difference between the measured matching error and baseline matching error to the baseline matching error before any training (see Eq. 3).

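$$E_{\mathrm{rel}} = \frac{E - E_{\mathrm{base}}}{E_{\mathrm{base}}} \tag{3}$$

where E is the visual-proprioceptive matching error at a given test session and E_base is the baseline matching error before any training.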
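The two error measures defined above translate directly into code. A positive matching error means the aperture was wider than the target, and 1 plus the relative error gives the error as a ratio to baseline, the form used in the Results (e.g., a ratio of 0.603 corresponds to a 39.7% reduction). The function names and the example trials are hypothetical, and averaging over trials is left out of this sketch.

```python
"""Matching error (Eq. 2) and relative matching error (Eq. 3), as defined in the text."""


def matching_error(measured_mm: float, target_mm: float) -> float:
    """Signed error relative to the displayed target length (0.8 means 80% too wide)."""
    return (measured_mm - target_mm) / target_mm


def relative_matching_error(error: float, baseline_error: float) -> float:
    """Change in matching error relative to baseline (-0.4 means 40% smaller)."""
    return (error - baseline_error) / baseline_error


if __name__ == "__main__":
    # Hypothetical trials: a 10-mm target reproduced as 18 mm before and 15 mm after training.
    e_base = matching_error(18.0, 10.0)
    e_post = matching_error(15.0, 10.0)
    print(e_base, e_post, relative_matching_error(e_post, e_base))
```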

Because we performed multiple post-hoc comparisons, we applied a Bonferroni correction, dividing the significance level (0.05) by the number of post-hoc comparisons. First, within-group comparisons were conducted 3 times for Group V and Group EV (pairs among 3 sampling points) and 6 times for Group EV-s (pairs among 4 sampling points). Second, between-group comparisons were conducted 3 times at each time point (pairs among 3 groups). Accordingly, the significance level was set to 0.05/3 for 3 post-hoc comparisons and 0.05/6 for 6 post-hoc comparisons.
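The correction described above is the Bonferroni procedure, and the number of post-hoc comparisons among k sampling points or groups is the number of pairs, k(k - 1)/2. A minimal sketch of the resulting thresholds; the function names are illustrative, and the example p values are the between-group values reported in the Results.

```python
"""Bonferroni-corrected significance thresholds for the post-hoc comparisons."""
from math import comb

ALPHA = 0.05


def n_pairwise(k: int) -> int:
    """Number of pairwise comparisons among k sampling points or groups."""
    return comb(k, 2)


def bonferroni_threshold(n_comparisons: int, alpha: float = ALPHA) -> float:
    """Per-comparison significance level under a Bonferroni correction."""
    return alpha / n_comparisons


def is_significant(p: float, n_comparisons: int, alpha: float = ALPHA) -> bool:
    """True if p falls below the Bonferroni-corrected threshold."""
    return p < bonferroni_threshold(n_comparisons, alpha)


if __name__ == "__main__":
    for label, k in [("Groups V, EV (3 time points)", 3),
                     ("Group EV-s (4 time points)", 4),
                     ("Between 3 groups", 3)]:
        m = n_pairwise(k)
        print(f"{label}: {m} comparisons, threshold {bonferroni_threshold(m):.4f}")
    # Between-group p values reported in the Results (3 comparisons per time point).
    for p in (0.005, 0.015, 0.080):
        print(p, is_significant(p, n_pairwise(3)))
```

Applied to those between-group results, the 15-min differences (p = 0.005 and p = 0.015) fall below the corrected threshold of about 0.0167, while the 24-h EV vs. EV-s difference (p = 0.080) does not.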

Data analysis based on Bayesian theory for sensory integration

Bayesian theory for sensory integration explains the process of estimation in the human sensory system45,46. Although our bodily sensors provide imperfect information, combining information from multiple sensory modalities can reduce the error in the estimate, because the combined information is more reliable than any single source. Bayesian theory for sensory integration (i.e., Bayesian integration) provides a mathematical framework for deriving a new optimal estimate when new sensory information is combined with existing sensory information. In this framework, based on maximum likelihood estimation, more precise sensory information receives greater weight in determining the combined output4,47. Note that the combined estimate is more precise than its inputs, because both prior and current information are reflected in the posterior48.
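Under the standard assumption that each cue is an independent Gaussian estimate, the maximum-likelihood rule cited above weights each cue by its inverse variance. The sketch below shows the two-cue case; it illustrates the general principle with hypothetical numbers and is not a reconstruction of the paper's Eqs. 4–7.

```python
"""Maximum-likelihood (inverse-variance) combination of two Gaussian cues."""
import math


def fuse_two(mu_a: float, sd_a: float, mu_b: float, sd_b: float) -> tuple[float, float]:
    """Combine two independent Gaussian estimates; returns (mean, sd).

    Each cue is weighted by its reliability (inverse variance), so the more
    precise cue dominates, and the fused sd is never larger than either input sd.
    """
    var_a, var_b = sd_a ** 2, sd_b ** 2
    w_a = var_b / (var_a + var_b)  # equals (1/var_a) / (1/var_a + 1/var_b)
    mean = w_a * mu_a + (1.0 - w_a) * mu_b
    sd = math.sqrt(var_a * var_b / (var_a + var_b))
    return mean, sd


if __name__ == "__main__":
    # Hypothetical cues: a precise one centred on 0 and a noisier one centred on 1.
    print(fuse_two(0.0, 0.5, 1.0, 1.0))
```

With these inputs the precise cue receives a weight of 0.8, so the fused mean lies at 0.2, much closer to the precise cue, and the fused sd (about 0.447) is smaller than either input sd.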

Based on the maximum likelihood estimation formulas for the mean and standard deviation in Eqs. 4–7, we inferred the perceived distance for each sensory modality49,50. The formulas use distance variables that denote a normalized distance offset (i.e., percent error relative to the displayed target distance) between the measured finger aperture distance and the displayed target distance. In Eqs. 4–7, DV denotes the normalized distance offset from the visually perceived distance when only vision is available; DP, when only proprioception is available; DE, when only electrotactile proprioception is available; DVP, when both vision and proprioception are available; DEP, when both electrotactile proprioception and proprioception are available; and DEVP, when all three sensory modalities (i.e., vision, proprioception, and electrotactile proprioception) are available.

| 4 |

| 5 |

When electrotactile proprioception was introduced to Group EV and Group EV-s, the E-stim created an electrotactile proprioception estimate, which was combined with the prior visual and proprioceptive estimates. The multisensory integration of electrotactile proprioception with the visual and proprioceptive estimates is given by Eqs. 6 and 7.

| 6 |

| 7 |

Based on the above equations, we calculated the means and standard deviations of each sensory estimate.
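The text describes adding the electrotactile estimate to an already-combined visual and proprioceptive estimate. Under the inverse-variance (maximum-likelihood) rule, precisions simply add, so folding in a third cue sequentially gives the same result as combining all three cues at once. The sketch below checks this numerically. The generic `fuse` function and all (mean, sd) values are hypothetical; this illustrates the standard rule and does not reproduce the paper's Eqs. 6 and 7.

```python
"""Adding a third cue to a fused two-cue estimate (illustrative sketch)."""
import math


def fuse(*cues: tuple[float, float]) -> tuple[float, float]:
    """Inverse-variance fusion of any number of (mean, sd) Gaussian cues."""
    precisions = [1.0 / sd ** 2 for _, sd in cues]
    total = sum(precisions)
    mean = sum(p * mu for p, (mu, _) in zip(precisions, cues)) / total
    return mean, math.sqrt(1.0 / total)


if __name__ == "__main__":
    # Hypothetical normalized offsets (mean, sd) for vision, proprioception and
    # electrotactile proprioception; not values measured in this study.
    v, p, e = (0.3, 0.6), (1.0, 0.8), (0.2, 0.5)
    vp = fuse(v, p)               # prior estimate from vision + proprioception
    evp_sequential = fuse(vp, e)  # electrotactile cue added afterwards
    evp_joint = fuse(v, p, e)     # all three cues combined at once
    print(vp, evp_sequential, evp_joint)
```

Because the order of combination does not matter under this rule, the three-modality estimate can be computed either way, and its sd is always below that of the two-modality estimate.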

Results

Visual-proprioceptive matching error before and after training was compared among the three groups (Groups V, EV, and EV-s). Overall comparison results are shown in Figs. 4a and b, and individual data in Fig. 5. Note that Group V (n = 8) received training with both the target length and the finger aperture displayed on the screen (see Fig. 2). Group EV (n = 8) and Group EV-s (n = 8) received electrotactile proprioception on top of the visual display. Subjects in Group EV-s had a special post-training test session with electrotactile proprioception, while subjects in Group EV did not (see Fig. 3).

Fig. 4.

Experimental results of visual-proprioceptive matching error: (a) visual-proprioceptive matching error in Group V (n = 8), Group EV (n = 8), and Group EV-s (n = 8), expressed as % error relative to the true value (displayed target distance); (b) relative visual-proprioceptive matching error in all three groups, expressed as % error relative to the baseline error. Visual feedback of the finger aperture was not provided in any test condition. Error bars represent standard error.

Fig. 5.

Individual data of visual-proprioceptive matching error (percent error relative to the target length), measured for each of eight subjects per group: (a) Group V, (b) Group EV, and (c) Group EV-s.

Amplitude and frequency range of E-stim to evoke electrotactile proprioception

Based on the data from the 16 subjects who received EP, the perception threshold was 18.25 ± 2.51 V and the discomfort threshold was 21.62 ± 2.83 V (Fig. 6a). The frequency range for each subject in Group EV and Group EV-s was set to either 10–70 Hz or 10–80 Hz according to the subject’s frequency discrimination ability (Fig. 6b).

Fig. 6.

Stimulation parameters for electrotactile proprioception: (a) voltage range for each subject in the visual + EP training groups (Group EV and Group EV-s). Top and bottom lines indicate the discomfort and perception thresholds, respectively, and the middle lines indicate the voltage used in the experiment; (b) identifiable frequency range for each subject, based on a frequency discrimination test with 10-Hz resolution. The maximum frequency corresponds to dmin = 0 mm and the minimum frequency to dmax = 25 mm.

All groups showed > 50% mean baseline error relative to the target distance

The mean baseline errors relative to the target distance were 83.0 ± 3.9%, 69.8 ± 3.1%, and 92.2 ± 3.9% for Group V, Group EV, and Group EV-s, respectively (see Fig. 4a). In other words, subjects set their finger aperture larger than the displayed target length by these amounts.

Both visual training and visual + EP training decreased visual-proprioceptive matching error at 15-min post-training

For Group V, visual-proprioceptive matching error at 15-min post-training was smaller than the baseline error (p < 0.001; η2 = 0.025), by 23.9% relative to the baseline error. For Group EV, visual-proprioceptive matching error at 15-min post-training was also smaller than the baseline error (p < 0.001; η2 = 0.026), by 36.8% relative to the baseline error. For Group EV-s, visual-proprioceptive matching error at 15-min post-training was also smaller than the baseline error (p < 0.001; η2 = 0.066), by 35.3% relative to the baseline error.

Training aftereffect (decrease of visual-proprioceptive matching error) was stronger with visual + EP training than visual training at 15-min post-training

At 15-min post-training, visual-proprioceptive matching error of Group EV was smaller than that of Group V (p = 0.005; η2 = 0.011), by 12.9% relative to the baseline error. Also, relative visual-proprioceptive matching error of Group EV-s was smaller than that of Group V (p = 0.015; η2 = 0.009), by 11.4% relative to the baseline error.

Training aftereffect (decrease of visual-proprioceptive matching error) persisted at 24-h post visual + EP training, while it disappeared at 24-h post visual training

For Group V, visual-proprioceptive matching error at 24-h post-training rose back to 1.070 times the baseline error, which differed from the error at 15-min post-training (p < 0.001; η2 = 0.043). As a result, for Group V, the error at 24-h post-training was not different from the baseline error (p = 0.288; η2 = 0.002). In contrast, for Group EV, the error at 24-h post-training did not change from the value at 15-min post-training (p = 0.659; η2 < 0.001). As a result, the error at 24-h post-training remained smaller than the baseline error (p < 0.001; η2 = 0.073), by 39.7% relative to the baseline error (i.e., a ratio of 0.603 to the baseline error). For Group EV-s, the error at 24-h post-training also did not change from the value at 15-min post-training (p = 0.461; η2 = 0.001). As a result, the error at 24-h post-training remained smaller than the baseline error (p < 0.001; η2 = 0.061), by 32.3% relative to the baseline error (i.e., a ratio of 0.677 to the baseline error).

The aftereffect was not changed by the additional session with electrotactile proprioception alone, performed right after the training session

When expressed as a percentage of the baseline error, the visual-proprioceptive matching error of Group EV was not different from that of Group EV-s at either 15-min post-training (p = 0.880; η² < 0.001) or 24-h post-training (p = 0.080; η² = 0.004).

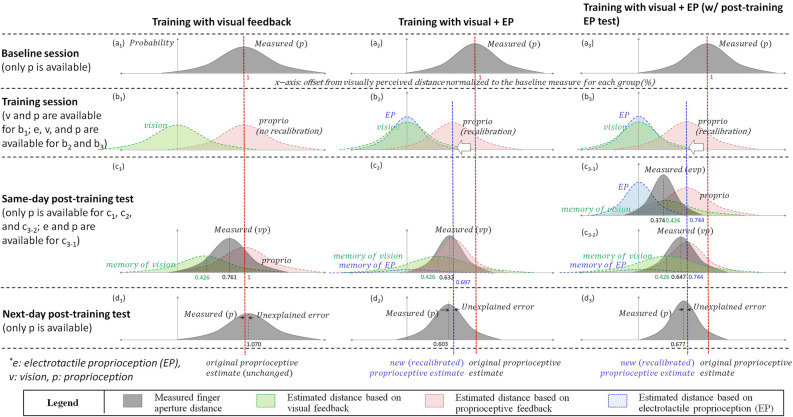

Mean and standard deviation of each sensory estimate based on the Bayesian theory of sensory integration

First, at the baseline test for all three groups, the measured values should equal the proprioceptive estimate (see Figs. 7a1, a2, and a3), because no visual feedback was provided. Therefore, the mean $\mu_P$ and standard deviation $\sigma_P$ of the proprioceptive estimate were obtained from the baseline measurements. Because measured values were normalized to the mean of the baseline measurement for each group, all mean values at the baseline tests equal 1. Accordingly, we obtained $\mu_P = 1$ with $\sigma_P = 0.834$ for Group V, $\mu_P = 1$ with $\sigma_P = 0.789$ for Group EV, and $\mu_P = 1$ with $\sigma_P = 0.762$ for Group EV-s.
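A minimal sketch of the normalization described above, assuming each group's trial errors are divided by that group's mean baseline error and that $\sigma_P$ is the sample standard deviation of the normalized baseline trials (the text does not state whether a sample or population standard deviation was used). The trial values are hypothetical placeholders, not data from this study.

```python
import statistics


def normalize_to_baseline(baseline, *sessions):
    """Divide every trial of every session by the group's mean baseline error."""
    reference = statistics.mean(baseline)
    return [[trial / reference for trial in session] for session in (baseline, *sessions)]


# Hypothetical trial errors for one group (arbitrary units); NOT study data.
baseline_trials = [10.0, 14.0, 6.0, 10.0]
post15_trials = [7.0, 8.0, 6.0, 9.0]

norm_baseline, norm_post15 = normalize_to_baseline(baseline_trials, post15_trials)
mu_P = statistics.mean(norm_baseline)      # equals 1 by construction
sigma_P = statistics.stdev(norm_baseline)  # sample SD (an assumption)
print(f"mu_P = {mu_P:.3f}, sigma_P = {sigma_P:.3f}")
```

With this convention, every group's baseline mean is exactly 1, so the post-training means can be read directly as ratios to baseline.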

Fig. 7.

Visualization of error estimates for different sensory modalities and their combinations. The x-axis indicates the offset from the target distance as visually perceived by the brain. E, V, P, VP, and EVP denote electrotactile proprioception, vision, proprioception, vision + proprioception, and electrotactile proprioception + vision + proprioception, respectively. “Memory of vision” represents the residual training effect in vision, and “memory of electrotactile proprioception” represents the residual training effect in electrotactile proprioception.

Because no measurements were made during the training sessions, the sensory estimates for the training phase were taken from the values calculated at the 15-min post-training tests. The proprioceptive estimates (see Figs. 7b1, b2, and b3) were set to the proprioceptive estimates calculated from each group's 15-min post-training measurements, and the electrotactile proprioception estimates were set to the value calculated from the 15-min post-training measurements of Group EV-s.

The graphs for the 15-min and 24-h post-training tests were determined from the measured or calculated values. At the 15-min post-training test, we calculated the visual and proprioceptive estimates using maximum likelihood estimation. At the 24-h post-training test, we took the measured value as the proprioceptive estimate. Because these calculations differed between groups, the procedure for each group is summarized below.
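For reference, the following is a sketch of the two-cue maximum-likelihood combination, assuming that Eqs. 4 and 5 (defined earlier in the paper and not reproduced here) take the standard reliability-weighted form used by van Beers et al.4 and Ernst and Banks9. Under this assumption each cue is weighted by its inverse variance, and an unknown cue can be recovered from the combined and the known estimates. With $\mu_P = 1$, $\sigma_P = 0.834$, $\mu_{VP} = 0.761$, and $\sigma_{VP} = 0.637$, the inversion gives $\mu_V \approx 0.426$ and $\sigma_V \approx 0.987$, which matches the Group V values; swapping the roles of V and P gives the proprioceptive estimates for Groups EV and EV-s.

```latex
\mu_{VP} = \frac{\sigma_P^2\,\mu_V + \sigma_V^2\,\mu_P}{\sigma_V^2 + \sigma_P^2},
\qquad
\sigma_{VP}^2 = \frac{\sigma_V^2\,\sigma_P^2}{\sigma_V^2 + \sigma_P^2}
\;\Longleftrightarrow\;
\frac{1}{\sigma_{VP}^2} = \frac{1}{\sigma_V^2} + \frac{1}{\sigma_P^2}

% Solving for the visual cue when the proprioceptive cue is known:
\frac{1}{\sigma_V^2} = \frac{1}{\sigma_{VP}^2} - \frac{1}{\sigma_P^2},
\qquad
\mu_V = \sigma_V^2 \left( \frac{\mu_{VP}}{\sigma_{VP}^2} - \frac{\mu_P}{\sigma_P^2} \right)
```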

For Group V, at the 15-min post-training test, we accounted for the visual estimate, whose effect should have persisted for a while after training. The visual and proprioceptive estimates were therefore combined as in Fig. 7c1, with the combined estimate measured as $\mu_{VP} = 0.761$ and $\sigma_{VP} = 0.637$. We then calculated $\mu_V$ and $\sigma_V$ using Eqs. 4 and 5, setting $\mu_P = 1$ and $\sigma_P = 0.834$ (i.e., no proprioceptive recalibration), and obtained $\mu_V = 0.426$ and $\sigma_V = 0.987$. The proprioceptive estimate was kept at its baseline value because the final measurement was not different from the baseline measurement. As anticipated for Group V, which had no real-time visual feedback at the test, the calculated $\sigma_V$ was larger than $\sigma_P$ (i.e., the visual estimate was more dispersed than the proprioceptive estimate). Because the visual estimate should have faded over time, the combined (measured) estimate at the 24-h post-training test was taken as the proprioceptive estimate (Fig. 7d1). Indeed, the baseline proprioceptive estimate (measured at the baseline test) matched the final proprioceptive estimate (measured at the 24-h post-training test) well, apart from a small unexplained mean error of 0.070 (Fig. 7d1).
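The Group V calculation can be checked numerically. The sketch below assumes that Eqs. 4 and 5 are the standard inverse-variance-weighted (maximum-likelihood) fusion of two Gaussian cues; the helper name `infer_missing_cue` is hypothetical. It recovers the visual cue from the measured combined estimate and the baseline proprioceptive estimate.

```python
import math


def infer_missing_cue(mu_comb, sd_comb, mu_known, sd_known):
    """Recover (mean, SD) of one cue from a two-cue maximum-likelihood fusion.

    Assumes the combined estimate is the inverse-variance-weighted average of
    the known cue and the missing cue.
    """
    prec_comb = 1.0 / sd_comb**2    # precision = 1 / variance
    prec_known = 1.0 / sd_known**2
    prec_missing = prec_comb - prec_known
    if prec_missing <= 0:
        raise ValueError("combined estimate must be more precise than the known cue")
    var_missing = 1.0 / prec_missing
    mu_missing = var_missing * (mu_comb * prec_comb - mu_known * prec_known)
    return mu_missing, math.sqrt(var_missing)


# Group V, 15-min post-training: combined (vision + proprioception) estimate,
# with proprioception held at its baseline (no recalibration).
mu_V, sigma_V = infer_missing_cue(mu_comb=0.761, sd_comb=0.637, mu_known=1.0, sd_known=0.834)
print(f"mu_V = {mu_V:.3f}, sigma_V = {sigma_V:.3f}")  # mu_V = 0.426, sigma_V = 0.987
```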

For Group EV, we applied the visual estimate obtained from Group V ($\mu_V = 0.426$ and $\sigma_V = 0.987$), because the interval between the training session (Fig. 7b2) and the 15-min post-training test (Fig. 7c2) was the same (15 min) for both groups. Moreover, the proprioceptive estimate for Group EV cannot be calculated without a visual estimate: its final proprioceptive estimate (measured at the 24-h post-training test) was significantly different from its baseline proprioceptive estimate (measured at the baseline test), so the baseline value cannot be reused. The combined visual-proprioceptive estimate was measured as $\mu_{VP} = 0.632$ and $\sigma_{VP} = 0.485$. We calculated $\mu_P$ and $\sigma_P$ with the same procedure as for Group V, based on Eqs. 4 and 5. Note that, for the aftereffect measures, we approximated the effect of “memory of electrotactile proprioception” as zero, i.e., no residual effect in the electrotactile proprioception domain, because electrotactile proprioception is a novel sensation to the nervous system and a 5-min training session would likely leave only a minimal memory effect. Accordingly, we obtained $\mu_P = 0.697$ with $\sigma_P = 0.556$ for Group EV under the test condition of Fig. 7c2. This new (i.e., recalibrated) proprioceptive estimate matched the proprioceptive estimate measured at the 24-h post-training test well, apart from a small unexplained mean error of 0.094 (Fig. 7d2).

For Group EV-s, we also applied the visual estimate obtained from Group V, because the interval between the training and the 15-min post-training test was the same (15 min) for all groups. The combined visual-proprioceptive estimate was measured as $\mu_{VP} = 0.647$ and $\sigma_{VP} = 0.545$. We then calculated $\mu_P$ and $\sigma_P$ with the same procedure as for Groups V and EV, based on Eqs. 4 and 5, again neglecting the “memory of electrotactile proprioception”. Accordingly, we obtained $\mu_P = 0.744$ with $\sigma_P = 0.654$ for Group EV-s under the test condition of Fig. 7c3-2. This new (i.e., recalibrated) proprioceptive estimate after training matched the final proprioceptive estimate measured at the 24-h post-training test well, apart from a small unexplained mean error of 0.067 (Fig. 7d3).
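The same inversion, with the roles of the cues swapped, recovers the recalibrated proprioceptive estimates of Groups EV and EV-s from their measured combined estimates and the visual estimate borrowed from Group V. This is a sketch assuming the standard inverse-variance-weighted form of Eqs. 4 and 5; `infer_missing_cue` is a hypothetical helper. The results land within about 0.001 of the reported values (0.697/0.556 for EV and 0.744/0.654 for EV-s); the small residual presumably comes from rounding of the inputs.

```python
import math


def infer_missing_cue(mu_comb, sd_comb, mu_known, sd_known):
    """Recover (mean, SD) of one cue from a two-cue inverse-variance-weighted fusion."""
    prec_comb = 1.0 / sd_comb**2
    prec_known = 1.0 / sd_known**2
    prec_missing = prec_comb - prec_known
    if prec_missing <= 0:
        raise ValueError("combined estimate must be more precise than the known cue")
    var_missing = 1.0 / prec_missing
    mu_missing = var_missing * (mu_comb * prec_comb - mu_known * prec_known)
    return mu_missing, math.sqrt(var_missing)


VISUAL_FROM_GROUP_V = (0.426, 0.987)  # (mu_V, sigma_V), reused as in the text
MEASURED_VP = {"EV": (0.632, 0.485), "EV-s": (0.647, 0.545)}  # (mu_VP, sigma_VP)

recalibrated = {}
for group, (mu_vp, sd_vp) in MEASURED_VP.items():
    # Here the visual cue is the known one, so the proprioceptive cue is recovered.
    recalibrated[group] = infer_missing_cue(mu_vp, sd_vp, *VISUAL_FROM_GROUP_V)
    mu_p, sd_p = recalibrated[group]
    print(f"Group {group}: mu_P = {mu_p:.3f}, sigma_P = {sd_p:.3f}")
```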

Importantly, the additional post-training measurement with electrotactile proprioception (Group EV-s) allowed us to check whether neglecting the “memory of electrotactile proprioception” was reasonable. We compared the theoretical value of $\mu_E$ with the value derived from the measurements; the two should agree if our approximation was reasonable. We calculated $\mu_E$ and $\sigma_E$ for the test condition of Fig. 7c3-1 using Eqs. 6 and 7, in which all three sensory modalities (vision, proprioception, and electrotactile proprioception) contribute. Because the electrotactile proprioception estimate is frequency-coded information based on the visually displayed target distance, it theoretically has no offset from the visually perceived distance (i.e., $\mu_E = 0$). We first calculated $\sigma_E$ from Eq. 6 using $\sigma_P$ (calculated as 0.654), $\sigma_V$ (estimated as 0.987 from the Group V results), and $\sigma_{EVP}$ (measured as 0.426), as depicted in Fig. 7c1. We then calculated $\mu_E$ from Eq. 7 using $\mu_P$ (calculated as 0.744), $\mu_V$ (estimated as 0.426 from the Group V results), and $\mu_{EVP}$ (measured as 0.374), as depicted in Fig. 7c3-1. Accordingly, we obtained $\mu_E = -0.0532$ and $\sigma_E = 0.682$. Because $\mu_E$ was close to 0 (an error of -5.32%), we concluded that the calculation provided reasonable values and that neglecting the “memory of electrotactile proprioception” was justified.
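A sketch of this three-cue check follows, assuming Eqs. 6 and 7 are the three-cue extension of inverse-variance weighting, i.e., $1/\sigma_{EVP}^2 = 1/\sigma_E^2 + 1/\sigma_V^2 + 1/\sigma_P^2$ and $\mu_{EVP}/\sigma_{EVP}^2 = \mu_E/\sigma_E^2 + \mu_V/\sigma_V^2 + \mu_P/\sigma_P^2$. The helper `infer_third_cue` is hypothetical. With the rounded inputs quoted in the text it gives $\mu_E \approx -0.054$ and $\sigma_E \approx 0.683$, close to the reported -0.0532 and 0.682.

```python
import math


def infer_third_cue(mu_all, sd_all, known_cues):
    """Recover (mean, SD) of one cue from a three-cue inverse-variance-weighted fusion.

    `known_cues` is a list of (mean, SD) pairs for the two cues that are known.
    """
    prec_all = 1.0 / sd_all**2
    prec_known = sum(1.0 / sd**2 for _, sd in known_cues)
    prec_third = prec_all - prec_known
    if prec_third <= 0:
        raise ValueError("fused estimate must be more precise than the known cues combined")
    var_third = 1.0 / prec_third
    weighted_known = sum(mu / sd**2 for mu, sd in known_cues)
    mu_third = var_third * (mu_all * prec_all - weighted_known)
    return mu_third, math.sqrt(var_third)


# Group EV-s, test with electrotactile proprioception (Fig. 7c3-1); values from the text.
proprioception = (0.744, 0.654)  # recalibrated (mu_P, sigma_P)
vision = (0.426, 0.987)          # (mu_V, sigma_V) borrowed from Group V
mu_E, sigma_E = infer_third_cue(mu_all=0.374, sd_all=0.426, known_cues=[proprioception, vision])
print(f"mu_E = {mu_E:.4f}, sigma_E = {sigma_E:.3f}")
```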

Discussion

This study presented a novel technique, electrotactile proprioception, that produced persistent learning effects after only a short (5-min) training session. Training supplemented with electrotactile proprioception yielded clear advantages: errors were lower than those of the controls immediately after training, and this benefit was retained at 24 h post-training. These results raise several interpretations, implications, and limitations, which are discussed in the following sections.

Visual + EP training reduced visual-proprioceptive matching error in finger aperture control in the absence of vision

Visual-proprioceptive mismatch causes errors when a distal body part such as a finger replicates a visually perceived distance, especially when that body part is out of sight. In other words, the finger aperture cannot accurately replicate the visual distance unless visual feedback on the fingers is available and continuous neuromuscular effort is made to reduce the error. Indeed, our results showed that adding electrotactile proprioception significantly reduced the visual-proprioceptive matching error in finger aperture control in the absence of vision of the fingers (see Figs. 4a and 4b). This supports the first hypothesis: introducing electrotactile proprioception, with stimulation frequency inversely proportional to the distance, decreases the error in interactive finger reaching. This is perhaps because electrotactile proprioception conveys the finger aperture directly, through a stimulation frequency mapped onto the distance, whereas proprioception conveys it indirectly, through information on muscle and tendon length and velocity.
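The phrase "stimulation frequency inversely proportional to the distance" can be made concrete as f = k / d. In the sketch below, the proportionality constant, the frequency cap, and the function name are hypothetical placeholders rather than the study's stimulation parameters (those are given in the Methods and not reproduced here); the cap only keeps the frequency finite as the aperture approaches zero.

```python
def stimulation_frequency_hz(aperture_mm: float,
                             k_hz_mm: float = 500.0,
                             f_max_hz: float = 100.0) -> float:
    """Hypothetical inverse mapping f = k / d from finger aperture to frequency.

    k_hz_mm and f_max_hz are placeholder constants, not the study's parameters.
    Smaller apertures give higher frequencies, capped at f_max_hz.
    """
    if aperture_mm <= 0:
        return f_max_hz
    return min(f_max_hz, k_hz_mm / aperture_mm)


for d in (50.0, 25.0, 10.0, 2.0):
    print(f"aperture {d:5.1f} mm -> {stimulation_frequency_hz(d):6.1f} Hz")
```

Because the frequency is a direct function of the aperture, a given frequency always signals the same distance, which is the property the paragraph above contrasts with the indirect muscle- and tendon-based proprioceptive code.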

Visual + EP training showed a strong lasting effect on reducing visual-proprioceptive matching error at 24-h post-training while visual training did not

Visual + EP training showed a strong lasting effect on reducing the visual-proprioceptive matching error, which supports the second hypothesis that visual + EP training would produce an aftereffect that reduces the error while visual training would not. In the test performed right after visual + EP training, with neither vision nor electrotactile proprioception, the visual-proprioceptive matching error was 36.8% lower than the baseline error, a statistically significant reduction (see Fig. 4b). Visual training also showed a short-term effect in the test right after training, with an error 23.9% lower than the baseline visual-proprioceptive error (see Fig. 4b).

At 24 h after visual + EP training, the visual-proprioceptive matching error remained at the level measured right after training. After visual training, in contrast, the reduction in error had washed out by 24 h (no statistically significant difference from baseline), which also supports the second hypothesis. However, the poorer baseline performance of Group V compared with Group EV may have contributed to the difference in lasting effects.

Data analysis with the Bayesian theory of sensory integration suggests that the proprioceptive estimate was recalibrated after visual + EP training

Figs. 7b2 and 7b3 show that visual + EP training recalibrated the proprioceptive estimate closer to the displayed target distance, whereas visual training did not change the proprioceptive estimate (Fig. 7b1). The measurements and the calculations based on Bayesian inference and maximum likelihood estimation provide two clues to proprioceptive recalibration by visual + EP training. First, the change in the measured $\mu_{EVP}$ in Fig. 7c3-1 could not be explained without a change in $\mu_P$. Unless the proprioceptive estimate changed (i.e., was recalibrated) as in Fig. 7c3-1, $\mu_E$ would have to be far below zero, which is implausible given that electrotactile proprioception was delivered based on the displayed distance. Second, the proprioceptive estimates measured at 24-h post-training support proprioceptive recalibration: for all three groups, they differed in mean by less than 10% from the proprioceptive estimates at the 15-min post-training test (7.0% for Group V, 9.4% for Group EV, and 6.7% for Group EV-s), which were recalibrated for Groups EV and EV-s. Another interesting observation is the reduced standard deviation for Groups EV and EV-s at 24-h post-training, which is well explained if the mean of the proprioceptive estimate shifted to the left (i.e., proprioceptive recalibration).
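Because all values are normalized to each group's baseline, the mean differences quoted above (7.0%, 9.4%, and 6.7%) can be reproduced from numbers reported in the Results: the 15-min proprioceptive means and the 24-h error ratios. A minimal check, assuming (as stated for the 24-h test) that the measured 24-h ratio is taken directly as the proprioceptive mean:

```python
# Mean proprioceptive estimates, normalized to each group's baseline (from the text).
# 15-min values: Group V is held at baseline (1.000); EV and EV-s are recalibrated.
PROPRIO_15MIN = {"V": 1.000, "EV": 0.697, "EV-s": 0.744}
# 24-h values: measured error ratios to baseline, taken as the proprioceptive estimate.
MEASURED_24H = {"V": 1.070, "EV": 0.603, "EV-s": 0.677}

mismatch = {g: round(abs(MEASURED_24H[g] - PROPRIO_15MIN[g]), 3) for g in PROPRIO_15MIN}
print(mismatch)
print("all below 10% of baseline:", all(m < 0.10 for m in mismatch.values()))
```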

Proprioceptive recalibration may result from congruent multisensory input or from the physiological similarity between tactile and proprioceptive feedback

Proprioceptive recalibration was possible perhaps because the nervous system trusts congruent multisensory input. The rubber hand illusion and subsequent studies established the importance of congruent multisensory input for extending the body schema (or body ownership) onto a foreign object, as evidenced by proprioceptive drift toward the rubber hand51,52. Multiple studies demonstrated that congruent visual and tactile feedback effectively extended the body schema and caused proprioceptive drift toward the rubber (or robotic) hand, whereas visual feedback alone was not enough. We speculate that a similar principle operates in proprioceptive recalibration: electrotactile proprioception together with visual feedback may have acted as congruent multisensory feedback that the nervous system could trust. However, the rubber hand illusion is a temporary phenomenon, so it cannot by itself account for the strong aftereffect reported in this work. The strong aftereffect may instead be understood in the context of somatosensory augmentation, since previous work on somatosensory augmentation via spinal cord stimulation or vagus nerve stimulation showed long-lasting motor changes even after spinal cord injury or stroke53,54. We further speculate that the effect arises because electrotactile proprioception uses a tactile channel, which is anatomically and physiologically closer to the proprioceptive channel than the visual channel is. Prior work on tongue motor learning also supports this speculation55,56.

No lasting effect of visual training agrees with the results of prior studies

Prior studies also suggest that visual training does not recalibrate proprioceptive feedback but only leaves a memory effect of visual feedback. Smeets et al. showed that the effect of visual training washes out because no sensory recalibration occurs7. Measuring hand positioning error during visual training, they concluded that the nervous system temporarily changes its reliance on each sensory feedback during training, rather than recalibrating the senses. Our data likewise showed that visual training produced no lasting effect at 24-h post-training, and hence no recalibration, although visual-proprioceptive matching accuracy improved temporarily right after training.

We speculate that this is because visual feedback (on finger aperture) functions independently of proprioceptive feedback: although it contributes to the maximum likelihood estimate together with proprioceptive feedback, it does not alter the proprioceptive estimate itself. Electrotactile proprioception, on the other hand, seems to interact more actively with proprioceptive feedback, as it changes the proprioceptive estimate. During training with the subject's own fingers displayed on the screen, the nervous system depends on both visual and proprioceptive feedback, as in Fig. 7b1, so the resulting error lies between the visual and proprioceptive errors. In the absence of visual feedback, however, the visual memory fades over the trials, and the proprioceptive matching error gradually increases until it returns to the baseline value, as in Fig. 7d1.
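The argument above follows from the same maximum-likelihood weighting if the fading visual memory is represented as a visual estimate whose uncertainty grows over time. In the sketch below, the growth schedule for $\sigma_V$ is hypothetical (no such time course was measured); the other numbers are the Group V values reported in the Results. The combined mean always lies between the visual and proprioceptive means and, as $\sigma_V$ increases, moves from about 0.761 toward the proprioceptive mean of 1, i.e., back to the baseline error.

```python
def fuse(mu_a, sd_a, mu_b, sd_b):
    """Inverse-variance-weighted (maximum-likelihood) fusion of two Gaussian cues."""
    w_a, w_b = 1.0 / sd_a**2, 1.0 / sd_b**2
    mu = (w_a * mu_a + w_b * mu_b) / (w_a + w_b)
    sd = (1.0 / (w_a + w_b)) ** 0.5
    return mu, sd


MU_P, SD_P = 1.0, 0.834  # Group V proprioception (baseline, not recalibrated)
MU_V = 0.426             # Group V visual-memory mean at 15-min post-training

# Hypothetical growth of visual-memory uncertainty as the memory fades.
sd_v_schedule = [0.987, 2.0, 5.0, 20.0]
trajectory = [round(fuse(MU_V, sd_v, MU_P, SD_P)[0], 3) for sd_v in sd_v_schedule]
print(trajectory)
```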

Visual + EP training can be applied to multiple applications that require high control accuracy and lasting effects

Visual + EP training could benefit multiple applications that demand high accuracy. It may improve the performance of telerobotic surgery57–59, where surgeons use their fingers to remotely operate a surgical robot and a single unintended touch can seriously damage delicate tissue60–62. The problem becomes even more critical when the view of the target surgical area is obstructed by the robotic tool or the surgical environment, which often occurs during surgery. Without accurate perception of finger movement, it is challenging to manipulate the tool delicately, and a surgeon may reach a target tissue with a high initial contact force, causing permanent tissue damage50. This initial touch can be critically important when surgical tools access sensitive nerves or delicate tissues such as the brain and cornea63–66. Furthermore, the lasting effects of visual + EP training could accelerate learning for novice surgeons who are adapting to new surgical systems. In addition, visual + EP training could potentially be applied to people after neurotrauma such as stroke and spinal cord injury, to compensate for compromised proprioception and promote motor rehabilitation.

Limitations of our approach and future plans

This study has several limitations: the number of subjects and trials, the length of observation of the lasting effect, and the connection to applications. Above all, the study was performed with a limited number of subjects (eight per group), so the results should be interpreted with caution because they may reflect underpowered statistics. In a follow-up study, we plan to increase the numbers of subjects and trials to confirm the results across biological variation. The lasting effect of the training should also be observed over a longer period with a larger number of subjects, to confirm both the lasting effect and proprioceptive recalibration. A larger dataset is also necessary for a reliable Bayesian analysis; in particular, the multiple approximations in the presented Bayesian analysis should be verified with a larger dataset. In addition, we will add stricter control groups: (1) receiving electrotactile proprioception without visual feedback on finger aperture and (2) receiving electrotactile proprioception that is not spatially tuned to the finger aperture distance. We will also connect the reduction in visual-proprioceptive matching error to actual applications, such as telerobotic surgical maneuvers and motor rehabilitation in people with compromised proprioception.

Acknowledgements

This work was supported by National Research Foundation of Korea (NRF) grant funded by the Korean government (MSIT) (No. 00209864 and No. 2020R1A5A1019649).

Author contributions

HP and RR conceptualized the idea and designed the experiment. RR prepared the electrical system and the optical recording system for the experiments. RR and HP obtained approval of the experimental protocol for human subject experiments. RR and HP recruited human subjects and collected experimental data. RR, HP, and JP analyzed experimental data. RR, HP, and JP wrote the manuscript. (RR: Rachen Ravichandran; JP: James L. Patton; HP: Hangue Park).

Data availability

The datasets generated during and/or analysed during the current study are available from the corresponding author on reasonable request.

Declarations

Competing interests

The authors declare no competing interests.

Footnotes

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Blouin, J. et al. Reference systems for coding spatial information in normal subjects and a deafferented patient. Exp. Brain Res. 93, 324–331 (1993).

- 2. Kawato, M. Internal models for motor control and trajectory planning. Curr. Opin. Neurobiol. 9(6), 718–727 (1999).

- 3. Albus, J. S. A theory of cerebellar function. Math. Biosci. 10(1–2), 25–61 (1971).

- 4. Van Beers, R. J., Sittig, A. C. & Gon, J. J. Integration of proprioceptive and visual position-information: An experimentally supported model. J. Neurophysiol. 81(3), 1355–1364 (1999).

- 5. Blanchard, C., Roll, R., Roll, J. P. & Kavounoudias, A. Differential contributions of vision, touch and muscle proprioception to the coding of hand movements. PLoS ONE 8(4), e62475 (2013).

- 6. Plooy, A., Tresilian, J. R., Mon-Williams, M. & Wann, J. P. The contribution of vision and proprioception to judgements of finger proximity. Exp. Brain Res. 118, 415–420 (1998).

- 7. Smeets, J. B., van den Dobbelsteen, J. J., de Grave, D. D., van Beers, R. J. & Brenner, E. Sensory integration does not lead to sensory calibration. Proc. Natl. Acad. Sci. 103(49), 18781–18786 (2006).

- 8. Dadarlat, M. C., O'Doherty, J. E. & Sabes, P. N. A learning-based approach to artificial sensory feedback leads to optimal integration. Nature Neuroscience 18(1), 138–144 (2015).

- 9. Ernst, M. O. & Banks, M. S. Humans integrate visual and haptic information in a statistically optimal fashion. Nature 415(6870), 429–433 (2002).

- 10. Holmes, N. P. & Spence, C. The body schema and multisensory representation(s) of peripersonal space. Cognitive Processing 5, 94–105 (2004).

- 11. Renault, A. G. et al. Does proprioception influence human spatial cognition? A study on individuals with massive deafferentation. Frontiers in Psychology 7(9), 1322 (2018).

- 12. Burke, R. E. Sir Charles Sherrington’s the integrative action of the nervous system: a centenary appreciation. Brain 130(4), 887–894 (2007).

- 13. Kuling, I. A., de Bruijne, W. J., Burgering, K., Brenner, E. & Smeets, J. B. Visuo-proprioceptive matching errors are consistent with biases in distance judgments. Journal of Motor Behavior (2019).

- 14. Dandu, B., Kuling, I. A. & Visell, Y. Where are my fingers? Assessing multi-digit proprioceptive localization. In 2018 IEEE Haptics Symposium (HAPTICS) 133–138 (IEEE, 2018).

- 15. Graziano, M. S., Yap, G. S. & Gross, C. G. Coding of visual space by premotor neurons. Science 266(5187), 1054–1057 (1994).

- 16. Graziano, M. S., Cooke, D. F. & Taylor, C. S. Coding the location of the arm by sight. Science 290(5497), 1782–1786 (2000).

- 17. Limanowski, J. & Friston, K. Attentional modulation of vision versus proprioception during action. Cerebral Cortex 30(3), 1637–1648 (2020).

- 18. Folegatti, A., De Vignemont, F., Pavani, F., Rossetti, Y. & Farnè, A. Losing one’s hand: visual-proprioceptive conflict affects touch perception. PLoS ONE 4(9), e6920 (2009).

- 19. Yahya, A., von Behren, T., Levine, S. & Dos Santos, M. Pinch aperture proprioception: reliability and feasibility study. Journal of Physical Therapy Science 30(5), 734–740 (2018).

- 20. Sigrist, R., Rauter, G., Riener, R. & Wolf, P. Augmented visual, auditory, haptic, and multimodal feedback in motor learning: a review. Psychonomic Bulletin & Review 20, 21–53 (2013).

- 21. Massimino, M. J. Improved force perception through sensory substitution. Control Engineering Practice 3(2), 215–222 (1995).

- 22. Walker, B. N. & Lane, D. M. Psychophysical scaling of sonification mappings: A comparison of visually impaired and sighted listeners. In Proceedings of the International Conference on Auditory Display 90–94 (2001).

- 23. Kitagawa, M., Dokko, D., Okamura, A. M. & Yuh, D. D. Effect of sensory substitution on suture-manipulation forces for robotic surgical systems. The Journal of Thoracic and Cardiovascular Surgery 129(1), 151–158 (2005).

- 24. Petzold, B., Zaeh, M. F., Faerber, B., Deml, B., Egermeier, H., Schilp, J. & Clarke, S. A study on visual, auditory, and haptic feedback for assembly tasks. Presence: Teleoperators & Virtual Environments 13(1), 16–21 (2004).

- 25. Okamura, A. M. Haptic feedback in robot-assisted minimally invasive surgery. Current Opinion in Urology 19(1), 102–107 (2009).

- 26. Bergamasco, M. Haptic interfaces: the study of force and tactile feedback systems. In Proceedings 4th IEEE International Workshop on Robot and Human Communication 15–20 (IEEE, 1995).

- 27. Culjat, M. et al. Pneumatic balloon actuators for tactile feedback in robotic surgery. Industrial Robot: An International Journal 35(5), 449–455 (2008).

- 28. Tavakoli, M., Patel, R. V. & Moallem, M. A force reflective master-slave system for minimally invasive surgery. In Proceedings 2003 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2003) (Cat. No. 03CH37453) Vol. 4, 3077–3082 (IEEE, 2003).

- 29. Abiri, A. et al. Multi-modal haptic feedback for grip force reduction in robotic surgery. Scientific Reports 9(1), 5016 (2019).

- 30. Nakao, M., Kuroda, T. & Oyama, H. A haptic navigation system for supporting master-slave robotic surgery. In ICAT (2003).

- 31. D’Alonzo, M., Dosen, S., Cipriani, C. & Farina, D. HyVE: hybrid vibro-electrotactile stimulation for sensory feedback and substitution in rehabilitation. IEEE Transactions on Neural Systems and Rehabilitation Engineering 22(2), 290–301 (2013).

- 32. Kaczmarek, K. A., Webster, J. G., Bach-y-Rita, P. & Tompkins, W. J. Electrotactile and vibrotactile displays for sensory substitution systems. IEEE Transactions on Biomedical Engineering 38(1), 1–6 (1991).

- 33. Sato, K. & Tachi, S. Design of electrotactile stimulation to represent distribution of force vectors. In 2010 IEEE Haptics Symposium 121–128 (IEEE, 2010).

- 34. Manoharan, S. & Park, H. Characterization of perception by transcutaneous electrical stimulation in terms of tingling intensity and temporal dynamics. Biomedical Engineering Letters 19, 1 (2023).

- 35. Zhao, Z., Yeo, M., Manoharan, S., Ryu, S. C. & Park, H. Electrically-evoked proximity sensation can enhance fine finger control in telerobotic pinch. Scientific Reports 10(1), 163 (2020).

- 36. Manoharan, S., Oh, S., Jiang, B., Patton, J. L. & Park, H. Electro-prosthetic E-skin successfully delivers finger aperture distance by electro-prosthetic proprioception (EPT). In 2022 44th Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC) 4196–4199 (IEEE, 2022).

- 37. Adams, J. A., Gopher, D. & Lintern, G. The effects of visual and proprioceptive feedback on motor learning. In Proceedings of the Human Factors Society Annual Meeting Vol. 19, No. 2, 162–165 (SAGE Publications, 1975).

- 38. Laufer, Y. Effect of cognitive demand during training on acquisition, retention and transfer of a postural skill. Human Movement Science 27(1), 126–141 (2008).

- 39. Roemmich, R. T., Long, A. W. & Bastian, A. J. Seeing the errors you feel enhances locomotor performance but not learning. Current Biology 26(20), 2707–2716 (2016).

- 40. Wahn, B. & König, P. Is attentional resource allocation across sensory modalities task-dependent? Advances in Cognitive Psychology 13(1), 83 (2017).

- 41. Azbell, J., Park, J., Chang, S. H., Engelen, M. P. & Park, H. Plantar or palmar tactile augmentation improves lateral postural balance with significant influence from cognitive load. IEEE Transactions on Neural Systems and Rehabilitation Engineering 10(29), 113–122 (2020).

- 42. Kuling, I. A., de Brouwer, A. J., Smeets, J. B. & Flanagan, J. R. Correcting for natural visuo-proprioceptive matching errors based on reward as opposed to error feedback does not lead to higher retention. Experimental Brain Research 4(237), 735–741 (2019).

- 43. Rangwani, R. & Park, H. A new approach of inducing proprioceptive illusion by transcutaneous electrical stimulation. Journal of NeuroEngineering and Rehabilitation 18, 1–6 (2021).

- 44. Bowman, B. R. & Baker, L. L. Effects of waveform parameters on comfort during transcutaneous neuromuscular electrical stimulation. Annals of Biomedical Engineering 13, 59–74 (1985).

- 45. Körding, K. P. & Wolpert, D. M. Bayesian integration in sensorimotor learning. Nature 427(6971), 244–247 (2004).

- 46. Chambers, C., Sokhey, T., Gaebler-Spira, D. & Kording, K. P. The development of Bayesian integration in sensorimotor estimation. Journal of Vision 18(12), 8 (2018).

- 47. Angelaki, D. E., Gu, Y. & DeAngelis, G. C. Multisensory integration: psychophysics, neurophysiology, and computation. Current Opinion in Neurobiology 19(4), 452–458 (2009).

- 48. Van Dongen, S. Prior specification in Bayesian statistics: three cautionary tales. Journal of Theoretical Biology 242(1), 90–100 (2006).

- 49. Jacobs, R. A. What determines visual cue reliability? Trends in Cognitive Sciences 6(8), 345–350 (2002).

- 50. Deneve, S. & Pouget, A. Bayesian multisensory integration and cross-modal spatial links. Journal of Physiology-Paris 98(1–3), 249–258 (2004).

- 51. Botvinick, M. & Cohen, J. Rubber hands ‘feel’ touch that eyes see. Nature 391(6669), 756 (1998).

- 52. Tsakiris, M., Carpenter, L., James, D. & Fotopoulou, A. Hands only illusion: multisensory integration elicits sense of ownership for body parts but not for non-corporeal objects. Experimental Brain Research 204(3), 343–352 (2010).

- 53. Wagner, F. B. et al. Targeted neurotechnology restores walking in humans with spinal cord injury. Nature 563(7729), 65–71 (2018).

- 54. Sharififar, S., Shuster, J. J. & Bishop, M. D. Adding electrical stimulation during standard rehabilitation after stroke to improve motor function. A systematic review and meta-analysis. Annals of Physical and Rehabilitation Medicine 61(5), 339–344 (2018).

- 55. Jiang, B., Kim, J. & Park, H. Palatal electrotactile display outperforms visual display in tongue motor learning. IEEE Transactions on Neural Systems and Rehabilitation Engineering 4(30), 529–539 (2022).

- 56. Jiang, B., Biyani, S. & Park, H. A wearable intraoral system for speech therapy using real-time closed-loop artificial sensory feedback to the tongue. In 2019 9th International IEEE/EMBS Conference on Neural Engineering (NER) 118–121 (IEEE, 2019).

- 57. Ballantyne, G. H. The future of telerobotic surgery. In Robotic Urologic Surgery 199–207 (Springer London, 2007).

- 58. Avgousti, S. et al. Medical telerobotic systems: current status and future trends. Biomedical Engineering Online 15, 1–44 (2016).

- 59. Ballantyne, G. H. & Moll, F. The da Vinci telerobotic surgical system: the virtual operative field and telepresence surgery. Surgical Clinics 83(6), 1293–1304 (2003).

- 60. Nayyar, R. & Gupta, N. P. Critical appraisal of technical problems with robotic urological surgery. BJU International 105(12), 1710–1713 (2010).

- 61. Mills, J. T. et al. Positioning injuries associated with robotic assisted urological surgery. The Journal of Urology 190(2), 580–584 (2013).

- 62. Westebring-van der Putten, E. P., Goossens, R. H., Jakimowicz, J. J. & Dankelman, J. Haptics in minimally invasive surgery – a review. Minimally Invasive Therapy & Allied Technologies 17(1), 3–16 (2008).

- 63. Gan, L. S. et al. Quantification of forces during a neurosurgical procedure: A pilot study. World Neurosurgery 84(2), 537–548 (2015).

- 64. Azarnoush, H. et al. The force pyramid: a spatial analysis of force application during virtual reality brain tumor resection. Journal of Neurosurgery 127(1), 171–181 (2016).

- 65. Gupta, P. K., Jensen, P. S. & de Juan, E. Surgical forces and tactile perception during retinal microsurgery. In Medical Image Computing and Computer-Assisted Intervention – MICCAI’99: Second International Conference, Cambridge, UK, September 19–22, Proceedings 2, 1218–1225 (Springer, 1999).

- 66. Sunshine, S. et al. A force-sensing microsurgical instrument that detects forces below human tactile sensation. Retina 33(1), 200–206 (2013).
