Abstract

Background

Natural language processing (NLP) is commonly used to annotate radiology datasets for training deep learning (DL) models. However, the accuracy and potential biases of these NLP methods have not been thoroughly investigated, particularly across different demographic groups.

Purpose

To evaluate the accuracy and demographic bias of four NLP radiology report labeling tools on two chest radiograph datasets.

Materials and Methods

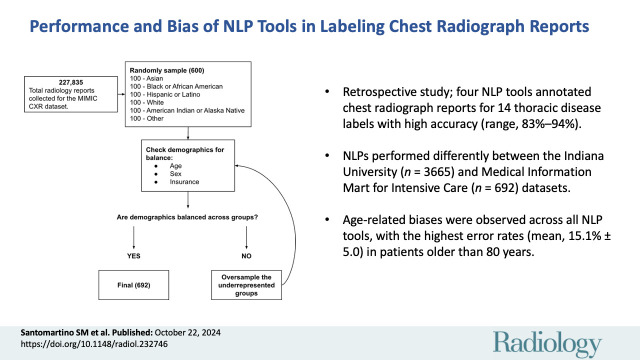

This retrospective study, performed between April 2022 and April 2024, evaluated chest radiograph report labeling using four NLP tools (CheXpert [rule-based], RadReportAnnotator [RRA; DL-based], OpenAI’s GPT-4 [DL-based], cTAKES [hybrid]) on a subset of the Medical Information Mart for Intensive Care (MIMIC) chest radiograph dataset balanced for representation of age, sex, and race and ethnicity (n = 692) and the entire Indiana University (IU) chest radiograph dataset (n = 3665). Three board-certified radiologists annotated the chest radiograph reports for 14 thoracic disease labels. NLP tool performance was evaluated using several metrics, including accuracy and error rate. Bias was evaluated by comparing performance between demographic subgroups using the Pearson χ2 test.

Results

The IU dataset included 3665 patients (mean age, 49.7 years ± 17 [SD]; 1963 female), while the MIMIC dataset included 692 patients (mean age, 54.1 years ± 23.1; 357 female). All four NLP tools demonstrated high accuracy across findings in the IU and MIMIC datasets, as follows: CheXpert (92.6% [47 516 of 51 310], 90.2% [8742 of 9688]), RRA (82.9% [19 746 of 23 829], 92.2% [2870 of 3114]), GPT-4 (94.3% [45 586 of 48 342], 91.6% [6721 of 7336]), and cTAKES (84.7% [43 436 of 51 310], 88.7% [8597 of 9688]). RRA and cTAKES had higher accuracy (P < .001) on the MIMIC dataset, while CheXpert and GPT-4 had higher accuracy on the IU dataset. Differences (P < .001) in error rates were observed across age groups for all NLP tools except RRA on the MIMIC dataset, with the highest error rates for CheXpert, RRA, and cTAKES in patients older than 80 years (mean, 15.8% ± 5.0) and the highest error rate for GPT-4 in patients 60–80 years of age (8.3%).

Conclusion

Although commonly used NLP tools for chest radiograph report annotation are accurate when evaluating reports in aggregate, demographic subanalyses showed significant bias, with poorer performance in older patients.

© RSNA, 2024

Supplemental material is available for this article.

See also the editorial by Cai in this issue.

Summary

Natural language processing tools for chest radiograph report annotation show high overall accuracy but exhibit age-related bias, with poorer performance in older patients.

Key Results

■ In this retrospective study, four natural language processing (NLP) tools annotated chest radiograph reports for 14 thoracic disease labels with high accuracy (range, 82.9%–94.3%) but showed differences (P < .001) in performance metrics between the Indiana University (n = 3665) and Medical Information Mart for Intensive Care (n = 692) datasets.

■ Age-related biases were observed across all NLP tools, with the highest error rates (mean, 15.8% ± 5.0 [SD]) in patients older than 80 years.

Introduction

Artificial intelligence (AI) has great promise in medical image diagnosis (1) and workflow optimization (2). However, the development of deep learning (DL) AI tools in radiology is limited by the availability of large annotated datasets (3). In particular, image annotation is a bottleneck in curation of large datasets, frequently requiring the time and labor of expert radiologists to review images.

Natural language processing (NLP), a set of automated techniques for analyzing written text (4), offers an alternative for curating datasets for DL model development by extracting imaging findings directly from radiology reports. NLP methods fall into two broad categories: rule-based and machine learning (ML). Rule-based NLP uses predefined pattern-matching rules to extract meaning from text (5). ML NLP uses sample data to develop a model that can then make predictions without explicit instructions (6). DL has been the predominant approach in ML-based NLP tools, with large language models (LLMs) being the latest development. Trained on extensive datasets, LLMs can understand and generate human-like textual responses to user prompts.

Previous studies have evaluated NLP performance in annotating radiology reports (7–12). For example, rule-based and hybrid NLP tools have achieved good performance for classifying pneumonia from chest radiograph reports (92.7% sensitivity and 91.1% specificity [10], 76.3% accuracy and 88% sensitivity [7], respectively).

Because LLMs were introduced only recently, in-depth evaluations of these models remain scarce. Preliminary reports have demonstrated variable accuracy of LLMs in identifying chest radiograph report findings, with performance not exceeding that of radiologists (13,14). Nevertheless, comparison of NLP approaches and their generalizability across datasets is lacking, which is important given the limited generalizability shown in radiologic DL imaging diagnostic models (15,16).

Furthermore, the potential demographic biases of these NLP tools have not been evaluated, despite similar issues identified in non-NLP AI models (17,18) and the potential for biased NLP tools to contribute to biased DL image diagnostic models (17), for example, by having higher error rates in older compared with younger patients. Without robust targeted evaluation for bias, NLP and the AI tools developed from it may perpetuate existing health care inequities related to socioeconomic factors.

Demographic biases in NLP tools for radiology report annotation have not previously been well established in the literature. The purpose of this study was to evaluate the accuracy and demographic bias of four NLP radiology report labeling tools on two chest radiograph datasets.

Materials and Methods

This retrospective study, performed between April 2022 and April 2024, used de-identified images from public domain datasets (19–21). The institutional review board waived formal review, classifying this research as nonhuman subjects research.

Chest Radiograph Datasets

The following two publicly available chest radiograph report datasets were used. Reports were included if they contained corresponding demographic variables in the dataset.

Indiana University.—The Indiana University (IU) chest radiograph dataset contains 3996 chest radiograph reports and 8121 associated images collected from two hospitals in the Indiana Network for Patient Care database (19). Each report represents a unique patient in the dataset. Reports were manually coded by two medical informaticists (conflicts resolved by a third), using the guidelines provided by the NLM Indexing Manual and Technical Memoranda as a reference standard. Of the 3996 radiology reports, 3665 were included (described further in Results; Tables 1, S1, S2) according to inclusion criteria outlined in Figure 1. Age and sex were the only demographic details provided in this dataset.

Table 1:

Patient Demographics and Imaging Details in the IU and MIMIC Chest Radiograph Datasets

Figure 1:

(A) Flowchart shows chest radiograph (CXR) report acquisition in the Indiana University (IU) dataset, accessed through the National Library of Medicine Open-i database. (B) Flowchart shows chest radiograph report acquisition in the Medical Information Mart for Intensive Care (MIMIC) dataset.

Medical Information Mart for Intensive Care.—The Medical Information Mart for Intensive Care (MIMIC) chest radiograph dataset, a credentialed public access dataset, is composed of 377 110 radiologic images associated with 227 835 radiographic reports retrospectively collected from Beth Israel Deaconess Medical Center (20,21). The MIMIC dataset provides report labels generated by the CheXpert NLP labeler and does not provide human-annotated report findings. A subset of 692 radiology reports was stratified to include at least 100 patients per self-reported racial and ethnic group, with random oversampling to ensure balanced demographic representation across all demographics provided in the dataset (age, sex, race and ethnicity, type of insurance). This dataset is described further in Results (Tables 1, S3–S6). Age groups are defined as inclusive of the first number in each range but exclusive of the second number (eg, the 20–40 years group includes individuals aged 20 through 39 years, and the >80 years group includes individuals 80 years and older).
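The age-group convention described above (lower bound inclusive, upper bound exclusive) can be sketched in a few lines of Python. This is an illustrative sketch only, not the authors' code; the names `AGE_EDGES`, `AGE_LABELS`, and `age_group` are hypothetical, and the five labels are taken from the age groups named in the Results.

```python
from bisect import bisect_right

# Lower bounds of the half-open bins [lower, upper); names are hypothetical.
AGE_EDGES = [20, 40, 60, 80]
AGE_LABELS = ["<20", "20–40", "40–60", "60–80", ">80"]


def age_group(age_years: float) -> str:
    """Return the age-group label for an age, using half-open bins.

    An age equal to a boundary falls in the higher group, so 40 maps to
    "40–60" and 80 maps to ">80", matching the convention in the text.
    """
    if age_years < 0:
        raise ValueError("age must be non-negative")
    # bisect_right counts how many lower bounds are <= age_years.
    return AGE_LABELS[bisect_right(AGE_EDGES, age_years)]
```

For example, `age_group(39)` returns `"20–40"`, whereas `age_group(40)` returns `"40–60"`.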

Data Preprocessing

Report annotations for ground truth determination.—To allow for fair comparisons of NLP performance on the IU and MIMIC datasets, report labels were harmonized according to the CheXpert disease label convention. The IU radiology reports were labeled in an expansive range of terms with variable levels of granularity, while MIMIC reports were labeled for the presence or absence of only 14 disease labels (Table S7). The radiology reports were reviewed and labeled per the CheXpert disease labeling convention by one of two board-certified radiologists (K.H. and P.H.Y., each with more than 5 years of experience), with the original radiologist labels serving as the reference standard and disagreements resolved by a third board-certified, fellowship-trained cardiothoracic radiologist (J.J., with more than 20 years of experience). Details of the report review and labeling process are provided in Appendix S1 and Figure S1.

NLP labelers.—The following four publicly available, well-known, and widely used NLP tools for chest radiograph report labeling (encompassing several approaches) were evaluated: (a) CheXpert (rule-based NLP algorithm) (22), (b) RadReportAnnotator (RRA; DL-based NLP algorithm, non-LLM) (12), (c) cTAKES (The Apache Software Foundation; https://ctakes.apache.org) (hybrid approach) (23), and (d) OpenAI’s GPT-4 (DL-based NLP algorithm, LLM) (24). Developed by a group at Stanford University, the CheXpert labeling tool is a rule-based NLP tool that classifies reports as positive, negative, or uncertain for 14 possible diagnostic labels (Table S7). RRA was developed by a group at Mount Sinai and is a DL-based NLP labeling tool trained on features from a portion of a labeled report dataset (12). The cTAKES (clinical text analysis and knowledge extraction system) tool comes from a Mayo Clinic research group and combines both rule-based and ML techniques for a hybrid NLP approach (23). GPT-4 is an LLM developed by OpenAI that provides a chatbot interface for user manipulation (24,25). Additional details regarding these NLP tools, along with links to open-source code, are provided in Appendix S2.

Statistical Analysis

The performance of each NLP tool was evaluated using accuracy, positive predictive value (PPV), negative predictive value (NPV), sensitivity, and specificity. F1 scores were calculated using the standard harmonic mean of precision and recall to provide a composite “global” performance evaluation. NLP tool generalizability was assessed using the Pearson χ2 test, comparing each performance metric between the two datasets. Bias was evaluated by comparing the proportion of errors, accuracy, PPV, NPV, sensitivity, and specificity for each NLP tool, stratified according to demographic variable (age and sex for IU; age, sex, race and ethnicity, and insurance for MIMIC), using the Pearson χ2 test given the relatively large sample sizes. Confusion matrices were generated to identify patterns of NLP tool errors.
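The metrics listed above all follow from the four cells of a confusion matrix. The following Python sketch shows the standard definitions; it is not the authors' code, the `Confusion` class and `metrics` function are hypothetical names, and the counts in the usage note are invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Confusion:
    """Counts of true-positive, false-positive, true-negative, false-negative labels."""
    tp: int
    fp: int
    tn: int
    fn: int


def _ratio(numerator: float, denominator: float) -> float:
    # Return NaN rather than raising when a metric is undefined.
    return numerator / denominator if denominator else float("nan")


def metrics(c: Confusion) -> dict:
    """Compute accuracy, PPV, NPV, sensitivity, specificity, and F1."""
    ppv = _ratio(c.tp, c.tp + c.fp)          # precision
    sensitivity = _ratio(c.tp, c.tp + c.fn)  # recall
    return {
        "accuracy": _ratio(c.tp + c.tn, c.tp + c.fp + c.tn + c.fn),
        "ppv": ppv,
        "npv": _ratio(c.tn, c.tn + c.fn),
        "sensitivity": sensitivity,
        "specificity": _ratio(c.tn, c.tn + c.fp),
        # F1 is the harmonic mean of precision (PPV) and recall (sensitivity).
        "f1": _ratio(2 * ppv * sensitivity, ppv + sensitivity),
    }
```

With hypothetical counts `Confusion(tp=80, fp=20, tn=880, fn=20)`, accuracy is 0.96 while sensitivity and PPV are both 0.80, which illustrates how a large number of true negatives can keep accuracy high even when positive findings are missed.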

To account for multiple comparisons, Bonferroni adjustment was applied to correct all P values using the p.adjust function in R (version 4.1.1; The R Foundation). P < .05 was considered indicative of a statistically significant difference. Statistical analysis was performed using Google Sheets (version 114.0.5735.198; Google) and R. All statistical methods were reviewed by one of the study authors (J.R.Z.).
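For readers less familiar with the Bonferroni adjustment, a minimal Python sketch is given here. It mirrors the documented behavior of R's `p.adjust` with `method = "bonferroni"` (each P value is multiplied by the number of comparisons and capped at 1); the function name `bonferroni` and the example P values are hypothetical, and how the comparisons were grouped in this study is not specified in the text.

```python
def bonferroni(p_values):
    """Bonferroni-adjust a family of P values: multiply by the family size, cap at 1."""
    if any(not 0.0 <= p <= 1.0 for p in p_values):
        raise ValueError("P values must lie in [0, 1]")
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


# Hypothetical raw P values from three comparisons.
adjusted = bonferroni([0.0004, 0.01, 0.4])
```

Here the adjusted values are approximately 0.0012, 0.03, and 1.0. The cap at 1 is one way in which adjusted values reported as P > .99 can arise.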

Results

Dataset and Patient Characteristics

With one report per patient, 3665 of 3996 chest radiograph reports were included and analyzed from IU and 692 of 227 835 reports were included and analyzed from MIMIC (Fig 1). In the IU dataset, the mean patient age was 49.7 years ± 17 (SD), with 1963 female and 1702 male patients. In the MIMIC dataset, the mean patient age was 54.1 years ± 23.1, with 357 female and 335 male patients (Tables 1, S1–S6). GPT-4 failed to follow instructions for several reports by not providing the output in the requested format (Appendix S2). Consequently, 212 of 3665 reports (5.8%) from the IU dataset and 168 of 692 reports (24.3%) from the MIMIC dataset were excluded from the GPT-4 performance and bias evaluations.

Labeler Performance and Generalizability

In both datasets, the four NLP labeling tools had high accuracy, NPV, and specificity, but lower PPV and sensitivity across all chest radiograph report findings (Table 2). For example, accuracy for each tool was as follows: CheXpert (IU, 92.6% [47 516 of 51 310; 95% CI: 92.4, 92.8]; MIMIC, 90.2% [8742 of 9688; 95% CI: 89.6, 90.8]), RRA (IU, 82.9% [19 746 of 23 829; 95% CI: 82.4, 83.3]; MIMIC, 92.2% [2870 of 3114; 95% CI: 91.2, 93.1]), GPT-4 (IU, 94.3% [45 586 of 48 342; 95% CI: 94.1, 94.5]; MIMIC, 91.6% [6721 of 7336; 95% CI: 91.0, 92.2]), and cTAKES (IU, 84.7% [43 436 of 51 310; 95% CI: 84.3, 85.0]; MIMIC, 88.7% [8597 of 9688; 95% CI: 88.1, 89.4]). However, the sensitivities were relatively lower, as follows: CheXpert (IU, 52.2% [2848 of 5451; 95% CI: 50.9, 53.6]; MIMIC, 69.7% [568 of 856; 95% CI: 66.7, 72.6]), RRA (IU, 23.6% [653 of 2763; 95% CI: 22.1, 25.3]; MIMIC, 62.7% [269 of 429; 95% CI: 57.9, 67.3]), GPT-4 (IU, 71.6% [3672 of 5130; 95% CI: 70.3, 72.8]; MIMIC, 88.2% [635 of 720; 95% CI: 85.6, 90.5]), and cTAKES (IU, 18.1% [987 of 5451; 95% CI: 17.1, 19.2]; MIMIC, 38.3% [364 of 950; 95% CI: 35.2, 41.5]).
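As a worked illustration of how a proportion and its 95% CI relate to the reported counts, the sketch below computes a Wilson score interval in Python. The interval method used in the study is not stated in the text, so the Wilson method is an assumption made for illustration, and `wilson_interval` is a hypothetical helper name; other methods can differ slightly in the last digit, especially for smaller denominators.

```python
from math import sqrt
from statistics import NormalDist


def wilson_interval(successes: int, total: int, confidence: float = 0.95):
    """Wilson score interval for a binomial proportion (assumed method)."""
    if total <= 0 or not 0 <= successes <= total:
        raise ValueError("need 0 <= successes <= total and total > 0")
    # Two-sided critical value, about 1.96 for 95% confidence.
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    p = successes / total
    denom = 1 + z * z / total
    center = (p + z * z / (2 * total)) / denom
    half_width = z * sqrt(p * (1 - p) / total + z * z / (4 * total * total)) / denom
    return center - half_width, center + half_width


# CheXpert accuracy on the IU dataset, counts taken from the text above.
low, high = wilson_interval(47516, 51310)
print(f"{100 * 47516 / 51310:.1f}% (95% CI: {100 * low:.1f}, {100 * high:.1f})")
```

With denominators this large, the interval is narrow and most standard methods agree to one decimal place.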

Table 2:

Performance Metrics for NLP Tools across All Findings

RRA and cTAKES had higher F1 scores on the MIMIC dataset compared with the IU dataset (RRA, 0.69 vs 0.24; cTAKES, 0.40 vs 0.20), while the opposite was true for CheXpert (IU, 0.60; MIMIC, 0.58) and GPT-4 (IU, 0.73; MIMIC, 0.67).
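The F1 scores can be related to the accuracy and sensitivity fractions reported earlier. The Python sketch below rebuilds the four confusion-matrix cells for the IU dataset under the assumption that each tool's accuracy and sensitivity fractions were computed over the same set of findings; the text does not state this, and the actual confusion matrices are in Table S8. The function name `f1_from_reported` is hypothetical.

```python
def f1_from_reported(correct: int, total: int, true_pos: int, positives: int) -> float:
    """Rebuild confusion counts from accuracy and sensitivity fractions, then return F1.

    Assumes accuracy = correct / total and sensitivity = true_pos / positives
    refer to the same set of findings (an assumption, not stated in the text).
    """
    tp = true_pos
    fn = positives - true_pos
    tn = correct - true_pos            # correct labels that are not true positives
    fp = (total - positives) - tn      # remaining negatives were mislabeled
    if min(tp, fn, tn, fp) < 0:
        raise ValueError("fractions are not mutually consistent")
    return 2 * tp / (2 * tp + fp + fn)


# (correct, total, true positives, positives) on the IU dataset, from the text.
IU_REPORTED = {
    "CheXpert": (47516, 51310, 2848, 5451),
    "RRA": (19746, 23829, 653, 2763),
    "GPT-4": (45586, 48342, 3672, 5130),
    "cTAKES": (43436, 51310, 987, 5451),
}
iu_f1 = {tool: round(f1_from_reported(*counts), 2) for tool, counts in IU_REPORTED.items()}
```

Under this assumption, the reconstructed IU values round to 0.60, 0.24, 0.73, and 0.20, in line with the F1 scores reported for the IU dataset. The same reconstruction may not hold where the two fractions are based on different sets of findings.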

All four tools had accuracy, PPV, NPV, sensitivity, and specificity values that were different between the two datasets (all P < .001) (Table 2). RRA demonstrated the greatest difference between the datasets across all metrics, consistently performing better on the MIMIC dataset. Differences in performance for RRA were as follows: 9.3% (accuracy), 51.3% (PPV), 4.2% (NPV), 39.1% (sensitivity), and 6.2% (specificity). For each NLP tool and dataset, the confusion matrices demonstrating the distribution of true-positive, true-negative, false-positive, and false-negative findings are reported in Table S8. Figures 2 and 3 present example reports where the four NLP tools were concordant or discordant in positive disease findings. Performance of each NLP tool stratified according to disease label is provided in Appendix S3.
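The between-dataset comparison can be illustrated with a Pearson χ2 test on a 2 × 2 table of correct versus incorrect labels per dataset. The Python sketch below uses the CheXpert accuracy counts reported above; it omits any continuity correction and the Bonferroni adjustment, which may differ from the exact procedure used in R, and `pearson_chi2_2x2` is a hypothetical helper name.

```python
from math import erfc, sqrt


def pearson_chi2_2x2(a: int, b: int, c: int, d: int):
    """Pearson chi-square test (no continuity correction) for the table [[a, b], [c, d]]."""
    observed = ((a, b), (c, d))
    row_totals = (a + b, c + d)
    col_totals = (a + c, b + d)
    n = a + b + c + d
    if 0 in row_totals or 0 in col_totals:
        raise ValueError("every row and column needs a nonzero total")
    statistic = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / n
            statistic += (observed[i][j] - expected) ** 2 / expected
    # Upper-tail probability of a chi-square variable with 1 degree of freedom.
    p_value = erfc(sqrt(statistic / 2))
    return statistic, p_value


# Rows: IU and MIMIC; columns: correct and incorrect CheXpert labels (from the text).
stat, p = pearson_chi2_2x2(47516, 51310 - 47516, 8742, 9688 - 8742)
```

For these counts the unadjusted P value falls well below .001, consistent with the reported difference in CheXpert accuracy between datasets.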

Figure 2:

![(A, B) Example chest radiograph reports in the Medical Information Mart for Intensive Care dataset show concordant positive disease findings across natural language processing (NLP) tools (CheXpert [22], RadReportAnnotator [RRA; 12], OpenAI’s GPT-4 [24], cTAKES [23]).](https://cdn.ncbi.nlm.nih.gov/pmc/blobs/495d/11535863/24b6c7e5ad8a/radiol.232746.fig2.jpg)

(A, B) Example chest radiograph reports in the Medical Information Mart for Intensive Care dataset show concordant positive disease findings across natural language processing (NLP) tools (CheXpert [22], RadReportAnnotator [RRA; 12], OpenAI’s GPT-4 [24], cTAKES [23]).

Figure 3:

![Example chest radiograph report (top) in the Indiana University dataset shows concordant positive disease findings across natural language processing (NLP) tools (CheXpert [22], RadReportAnnotator [RRA; 12], OpenAI’s GPT-4 [24], cTAKES [23]), with corresponding frontal and lateral chest radiographs (bottom).](https://cdn.ncbi.nlm.nih.gov/pmc/blobs/495d/11535863/15668dbb2e76/radiol.232746.fig3.jpg)

Example chest radiograph report (top) in the Indiana University dataset shows concordant positive disease findings across natural language processing (NLP) tools (CheXpert [22], RadReportAnnotator [RRA; 12], OpenAI’s GPT-4 [24], cTAKES [23]), with corresponding frontal and lateral chest radiographs (bottom).

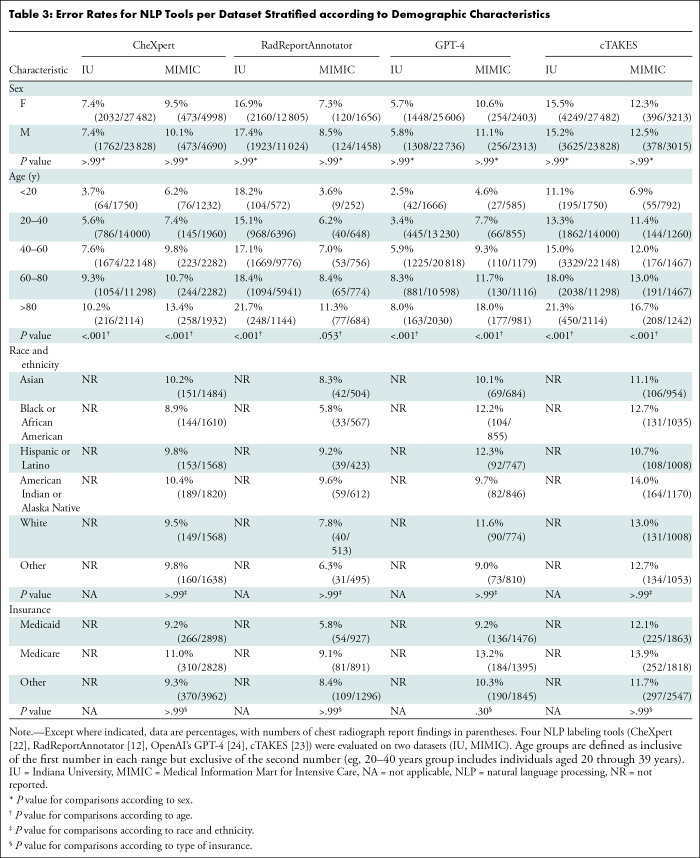

Bias

All four tools demonstrated a difference (P < .001) in error rates for all five age groups (<20, 20–40, 40–60, 60–80, >80 years) for the IU dataset (Table 3). All but the RRA tool demonstrated a difference (P < .001) in the error rate across age groups for the MIMIC dataset. The mean error rates of the CheXpert predictions stratified according to age were 7.3% ± 2.7 and 9.5% ± 2.8 for the IU and MIMIC datasets, respectively. The mean error rates of the RRA predictions stratified according to age were 18.1% ± 2.4 and 7.3% ± 2.8 for the IU and MIMIC datasets, respectively. The mean error rates of the GPT-4 predictions stratified according to age were 5.6% ± 2.6 and 10.3% ± 5.0 for the IU and MIMIC datasets, respectively. The mean error rates of the cTAKES predictions stratified according to age were 15.8% ± 4.0 and 12.0% ± 3.5 for the IU and MIMIC datasets, respectively.
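To make the stratified error rates concrete, the Python sketch below computes a per-group error rate and then a mean ± SD across age groups. The counts are hypothetical (the actual values are in Table 3), and treating the reported mean ± SD as a summary across the five age-group error rates, using the sample SD, is an assumption rather than something the text specifies.

```python
from statistics import mean, stdev

# Hypothetical (errors, total findings) per age group; not study data.
hypothetical_counts = {
    "<20": (40, 1000),
    "20–40": (50, 1000),
    "40–60": (70, 1000),
    "60–80": (90, 1000),
    ">80": (100, 1000),
}


def error_rates(counts: dict) -> dict:
    """Return the error rate in percent for each group."""
    rates = {}
    for group, (errors, total) in counts.items():
        if total <= 0 or not 0 <= errors <= total:
            raise ValueError(f"invalid counts for group {group!r}")
        rates[group] = 100 * errors / total
    return rates


rates = error_rates(hypothetical_counts)
summary_mean = mean(rates.values())
summary_sd = stdev(rates.values())          # sample SD across groups (assumed)
worst_group = max(rates, key=rates.get)     # group with the highest error rate
```

In this invented example the mean error rate is 7.0% with an SD of about 2.5, and the highest error rate is in the >80 years group.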

Table 3:

Error Rates for NLP Tools per Dataset Stratified according to Demographic Characteristics

For CheXpert, RRA, and cTAKES, the highest error rate for both datasets was in the older than 80 years group, with a mean error rate of 15.8% ± 5.0. The second highest error rate for these three tools was in the 60–80 years of age group, with a mean error rate of 13.0% ± 4.4. GPT-4 had a slightly higher error rate for this age group (60–80 years, 8.3%) than the oldest age group (>80 years, 8.0%) (Table 3).

Across the four NLP labelers and both datasets, there was a difference in most performance metrics when stratified according to age (Tables 4, 5, S9–S11). CheXpert had differences for four of five metrics (all but PPV) when compared across all five age groups on the IU dataset (P ≤ .002) (Table 4). The oldest age group (>80 years) had the lowest accuracy (89.8% [1898 of 2114 findings]), NPV (91.6% [1738 of 1898 findings]), and specificity (96.9% [1738 of 1794 findings]), while the 60–80 years of age group had the lowest sensitivity (46.7% [668 of 1431 findings]). For the MIMIC dataset, CheXpert had differences across age groups in accuracy (P < .001) and specificity (P < .001) (Table 5). For both metrics, the lowest performance was in the older than 80 years group (accuracy, 86.7% [1674 of 1932 findings]; specificity, 88.4% [1508 of 1706 findings]).

Table 4:

Performance Metrics for CheXpert on the IU Dataset Stratified according to Demographic Characteristics

Table 5:

Performance Metrics for CheXpert on the MIMIC Dataset Stratified according to Demographic Characteristics

On the IU dataset, the RRA labeler demonstrated age-related differences (P < .001) in accuracy (lowest, >80 years group, 78.3% [896 of 1144 findings]) and NPV (lowest, >80 years group, 84.9% [861 of 1014 findings]). RRA showed no evidence of differences across age groups on the MIMIC dataset (P > .99), although the older than 80 years group did consistently have the lowest performance across all five metrics (Table S9).

GPT-4 had differences for all five metrics when compared across all five age groups on the IU dataset (P < .001). The 60–80 years of age group had the lowest accuracy (91.7% [9717 of 10 598 findings]), PPV (67.7% [880 of 1299 findings]), sensitivity (65.6% [880 of 1342 findings]), and specificity (95.5% [8837 of 9256 findings]), while the older than 80 years group had the lowest NPV (94.9% [1651 of 1739 findings]). For the MIMIC dataset, GPT-4 had differences across age groups in accuracy, PPV, and specificity (all P ≤ .03). For all three of these metrics, the lowest performance was demonstrated in the older than 80 years group (accuracy, 82.0% [804 of 981 findings]; PPV, 48.5% [149 of 307 findings]; specificity, 80.6% [655 of 813 findings]) (Table S10).

On the IU dataset, the cTAKES labeler demonstrated age-related differences (P < .001) in four of five metrics (all but specificity), with the older than 80 years group consistently having the lowest performance (accuracy, 78.7% [1664 of 2114 findings]; PPV, 13.1% [23 of 176 findings]; NPV, 84.7% [1641 of 1938 findings]; sensitivity, 7.2% [23 of 320 findings]). For the MIMIC dataset, cTAKES demonstrated differences across age groups in accuracy and NPV, with the older than 80 years group also performing lowest (83.3% [1034 of 1242 findings] and 49.6% [68 of 137 findings], respectively) (Table S11).

Performance did not differ (P > .99) between race and ethnicity or insurance status groups for any tool or either dataset (Tables 4, 5, S9–S11).

Discussion

Natural language processing (NLP) tools have demonstrated good performance for radiology report labeling (7–12), but they have not been evaluated for demographic biases. We evaluated four NLP tools on two chest radiograph datasets. These tools demonstrated good overall performance but performed variably between datasets. They also demonstrated age-based biases, with the highest error rate (P < .001) for CheXpert, RadReportAnnotator, and cTAKES in patients older than 80 years (mean, 15.8% ± 5.0 [SD]) and the highest error rate for GPT-4 in patients 60–80 years of age (8.3%). Because NLP forms the foundation for imaging dataset annotations, biases in these tools may explain biases observed in deep learning models for chest radiographic imaging diagnosis (17,18).

All four NLP tools had high (>80%) accuracy, NPV, and specificity for chest radiograph disease label extraction in both datasets. These findings echo prior work showing that a rule-based NLP tool (26) achieved 99% specificity for extracting 24 disease labels from radiology reports (8). Another study found that an ML NLP tool outperformed a rule-based one (F1 score, 0.91 vs 0.85) for identification of acute lung injury in radiology reports (11). Although the ML-based NLP tools, including GPT-4 and RRA, frequently outperformed the rule-based CheXpert tool, the converse was also true depending on the dataset. Thus, caution is warranted when interpreting results of NLP tools tested on data from a single site.

We evaluated NLP generalizability by comparing performance between the IU and MIMIC datasets. All four NLP tools had limited generalizability with differences in performance between datasets across all metrics, with the greatest differences for the RRA NLP tool. These results suggest that ML NLP tools (eg, RRA) might be more susceptible to annotation variations, as they can overfit to single-site training data (27). Additionally, limited generalizability might relate to nonstandard reporting techniques that may be present in datasets (28). A previous study (29) showed that a DL-based NLP tool had better generalizability for stroke phenotype prediction than three rule-based tools. Different NLP tools thus appear to have different performance under different circumstances and a one-size-fits-all approach may be suboptimal.

DL models for chest radiograph diagnosis can exhibit biases based on demographics such as sex, race, and age (17,18). Because datasets used to train these models are frequently annotated using NLP, unfair labeling performance could contribute to these biases. We found that all NLP tools were susceptible to biases related to age across all performance metrics, with higher error rates in older patients (>60 years of age). These biases may reflect geriatric patients tending to have multiple medical conditions, which likely translates into more complex radiology reports (30). While the distribution of findings for the IU dataset was relatively normal across age groups, the MIMIC dataset demonstrated a slight left skew, with increasing disease prevalence with age, which could create a false semblance of performance dip. However, as multiple NLP tools showed age-based bias in both datasets, the distribution of disease labels likely only partially contributes to these results. In any case, we underscore the importance of using prevalence-adjusted metrics (eg, PPV and NPV), as other metrics may be misleading.

Historically, NLP tools have been rule-based. DL approaches to NLP, such as LLMs, have recently emerged with advantages over traditional NLP; these include the ability to perform tasks without specific training for such tasks (eg, foundation models) (31). Interestingly, variable generalizability and demographic bias are still limitations for NLP regardless of the underlying technology.

This study had several limitations. First, we evaluated four NLP labeling tools encompassing three approaches (rule-based, DL-based, and hybrid). Future studies should validate our findings on other NLP tools. Second, we evaluated these NLP tools on two datasets only. Third, the IU dataset reported sex and age only, precluding evaluation for bias related to other demographic variables. Fourth, we did not assess the impact of demographic differences in disease prevalence on the NLP biases; however, the consistent age-based biases for both datasets suggest that these differences cannot alone explain the observed biases. Lastly, we acknowledge the absence of robust causative evidence of biases in our study. With the presence of these biases now established, future research should focus on identifying causal mechanisms to inform bias-reducing interventions.

In conclusion, although four natural language processing (NLP) tools demonstrated good performance for annotating chest radiograph reports, they lacked generalizability and demonstrated age-related biases, suggesting that they may contribute to previously observed biases in deep learning (DL) models for chest radiograph diagnosis (17,18). Algorithmic biases can be mitigated by making training data more diverse and representative of the population (32). Furthermore, NLP tools should be trained on contemporary data to ensure that they are representative of current demographic trends. Finally, debiasing algorithms during training through techniques such as fairness awareness and bias auditing may help mitigate biases (33,34). While NLP tools can facilitate DL development in radiology, they must be vetted for demographic biases prior to widespread deployment to prevent biased labels from being perpetuated at scale.

Disclosures of conflicts of interest: S.M.S. No relevant relationships. J.R.Z. Grant from RSNA R&E Foundation; editorial board member, Radiology: Artificial Intelligence. K.H. No relevant relationships. J.J. No relevant relationships. V.P. No relevant relationships. P.H.Y. Grants or contracts from National Institutes of Health–National Cancer Institute, American College of Radiology (ACR), RSNA, Johns Hopkins University, and University of Maryland; meeting attendance support from Society for Imaging Informatics in Medicine (SIIM) and Society of Nuclear Medicine and Molecular Imaging; vice chair of Program Planning Committee, SIIM; Informatics Commission, ACR; stock or stock options in Bunkerhill Health; editorial board member, Radiology: Artificial Intelligence.

Abbreviations:

- AI = artificial intelligence

- DL = deep learning

- IU = Indiana University

- LLM = large language model

- MIMIC = Medical Information Mart for Intensive Care

- ML = machine learning

- NLP = natural language processing

- NPV = negative predictive value

- PPV = positive predictive value

- RRA = RadReportAnnotator

References

- 1. Rajpurkar P, Irvin J, Ball RL, et al. Deep learning for chest radiograph diagnosis: A retrospective comparison of the CheXNeXt algorithm to practicing radiologists. PLoS Med 2018;15(11):e1002686.

- 2. Shin Y, Kim S, Lee YH. AI musculoskeletal clinical applications: how can AI increase my day-to-day efficiency? Skeletal Radiol 2022;51(2):293–304.

- 3. Tajbakhsh N, Jeyaseelan L, Li Q, Chiang JN, Wu Z, Ding X. Embracing imperfect datasets: A review of deep learning solutions for medical image segmentation. Med Image Anal 2020;63:101693.

- 4. Cai T, Giannopoulos AA, Yu S, et al. Natural Language Processing Technologies in Radiology Research and Clinical Applications. RadioGraphics 2016;36(1):176–191.

- 5. Zhang J, El-Gohary NM. Automated Information Transformation for Automated Regulatory Compliance Checking in Construction. J Comput Civ Eng 2015;29(4):B4015001.

- 6. Maria R. Machine learning text classification model with NLP approach. Computational Linguistics and Intelligent Systems 2019 Apr 18;2:71–73.

- 7. Panny A, Hegde H, Glurich I, et al. A Methodological Approach to Validate Pneumonia Encounters from Radiology Reports Using Natural Language Processing. Methods Inf Med 2022;61(1-02):38–45.

- 8. Hripcsak G, Austin JHM, Alderson PO, Friedman C. Use of natural language processing to translate clinical information from a database of 889,921 chest radiographic reports. Radiology 2002;224(1):157–163.

- 9. Friedlin J, McDonald CJ. A natural language processing system to extract and code concepts relating to congestive heart failure from chest radiology reports. AMIA Annu Symp Proc 2006;2006:269–273.

- 10. Liu V, Clark MP, Mendoza M, et al. Automated identification of pneumonia in chest radiograph reports in critically ill patients. BMC Med Inform Decis Mak 2013;13(1):90.

- 11. Solti I, Cooke CR, Xia F, Wurfel MM. Automated Classification of Radiology Reports for Acute Lung Injury: Comparison of Keyword and Machine Learning Based Natural Language Processing Approaches. In: 2009 IEEE International Conference on Bioinformatics and Biomedicine Workshop. IEEE, 2009;314–319.

- 12. Zech J, Pain M, Titano J, et al. Natural Language-based Machine Learning Models for the Annotation of Clinical Radiology Reports. Radiology 2018;287(2):570–580.

- 13. Adams LC, Truhn D, Busch F, et al. Leveraging GPT-4 for Post Hoc Transformation of Free-text Radiology Reports into Structured Reporting: A Multilingual Feasibility Study. Radiology 2023;307(4):e230725.

- 14. Sun Z, Ong H, Kennedy P, et al. Evaluating GPT4 on Impressions Generation in Radiology Reports. Radiology 2023;307(5):e231259.

- 15. Zech JR, Badgeley MA, Liu M, Costa AB, Titano JJ, Oermann EK. Variable generalization performance of a deep learning model to detect pneumonia in chest radiographs: A cross-sectional study. PLoS Med 2018;15(11):e1002683.

- 16. Xin KZ, Li D, Yi PH. Limited generalizability of deep learning algorithm for pediatric pneumonia classification on external data. Emerg Radiol 2022;29(1):107–113.

- 17. Seyyed-Kalantari L, Zhang H, McDermott MBA, Chen IY, Ghassemi M. Underdiagnosis bias of artificial intelligence algorithms applied to chest radiographs in under-served patient populations. Nat Med 2021;27(12):2176–2182.

- 18. Larrazabal AJ, Nieto N, Peterson V, Milone DH, Ferrante E. Gender imbalance in medical imaging datasets produces biased classifiers for computer-aided diagnosis. Proc Natl Acad Sci USA 2020;117(23):12592–12594.

- 19. Demner-Fushman D, Kohli MD, Rosenman MB, et al. Preparing a collection of radiology examinations for distribution and retrieval. J Am Med Inform Assoc 2016;23(2):304–310.

- 20. Johnson AEW, Pollard TJ, Berkowitz SJ, et al. MIMIC-CXR, a de-identified publicly available database of chest radiographs with free-text reports. Sci Data 2019;6(1):317.

- 21. Johnson AEW, Pollard T, Mark R, Berkowitz S, Horng S. The MIMIC-CXR Database. Physionet.org. https://physionet.org/content/mimic-cxr/. Published 2019. Accessed August 15, 2023.

- 22. Irvin J, Rajpurkar P, Ko M, et al. CheXpert: A Large Chest Radiograph Dataset with Uncertainty Labels and Expert Comparison. arXiv 1901.07031 [preprint] https://arxiv.org/abs/1901.07031. Posted January 21, 2019. Accessed July 24, 2023.

- 23. Savova GK, Masanz JJ, Ogren PV, et al. Mayo clinical Text Analysis and Knowledge Extraction System (cTAKES): architecture, component evaluation and applications. J Am Med Inform Assoc 2010;17(5):507–513.

- 24. GPT-4. https://openai.com/research/gpt-4. Accessed February 23, 2024.

- 25. OpenAI, Achiam J, Adler S, et al. GPT-4 Technical Report. arXiv 2303.08774 [preprint] https://arxiv.org/abs/2303.08774. Posted March 15, 2023. Accessed February 23, 2024.

- 26. Friedman C, Alderson PO, Austin JH, Cimino JJ, Johnson SB. A general natural-language text processor for clinical radiology. J Am Med Inform Assoc 1994;1(2):161–174.

- 27. Nichols JA, Herbert Chan HW, Baker MAB. Machine learning: applications of artificial intelligence to imaging and diagnosis. Biophys Rev 2019;11(1):111–118.

- 28. Hartung MP, Bickle IC, Gaillard F, Kanne JP. How to Create a Great Radiology Report. RadioGraphics 2020;40(6):1658–1670.

- 29. Casey A, Davidson E, Grover C, Tobin R, Grivas A, Zhang H, et al. Understanding the performance and reliability of NLP tools: a comparison of four NLP tools predicting stroke phenotypes in radiology reports. Front Digit Health 2023 Sep 28;5:1184919.

- 30. Gossner J, Nau R. Geriatric chest imaging: when and how to image the elderly lung, age-related changes, and common pathologies. Radiol Res Pract 2013;2013:584793.

- 31. Mukherjee P, Hou B, Lanfredi RB, Summers RM. Feasibility of Using the Privacy-preserving Large Language Model Vicuna for Labeling Radiology Reports. Radiology 2023;309(1):e231147.

- 32. Yi PH, Kim TK, Siegel E, Yahyavi-Firouz-Abadi N. Demographic Reporting in Publicly Available Chest Radiograph Data Sets: Opportunities for Mitigating Sex and Racial Disparities in Deep Learning Models. J Am Coll Radiol 2022;19(1 Pt B):192–200.

- 33. Majumder BP, He Z, McAuley J. InterFair: Debiasing with Natural Language Feedback for Fair Interpretable Predictions. arXiv 2210.07440 [preprint] https://arxiv.org/abs/2210.07440. Posted October 14, 2022. Accessed February 25, 2024.

- 34. Mendelson M, Belinkov Y. Debiasing Methods in Natural Language Understanding Make Bias More Accessible. arXiv 2109.04095 [preprint] https://arxiv.org/abs/2109.04095. Posted September 9, 2021. Accessed February 25, 2024.