Abstract

Background

Research shows that older adults' performance on choice reaction time (CRT) tests can predict cognitive decline. A simple CRT tool could help detect mild cognitive impairment (MCI) and preclinical dementia, allowing for further stratification of cognitive disorders on-site or via telemedicine.

Objective

The primary objective was to develop a CRT testing device and protocol to differentiate between two cognitive impairment categories: (a) subjective cognitive decline (SCD) and non-amnestic mild cognitive impairment (na-MCI), and (b) amnestic mild cognitive impairment (a-MCI) and multiple-domain a-MCI (a-MCI-MD).

Methods

A pilot study in Italy and Romania with 35 older adults (ages 61–85) assessed cognitive function using the Mini-Mental State Examination (MMSE) and a CRT color response task. Reaction time, accuracy, and demographics were recorded, and machine learning classifiers analyzed performance differences to predict preclinical dementia and screen for mild cognitive deficits.

Results

Moderate correlations were found between the MMSE score and both mean reaction time and mean accuracy rate. There was a significant difference between the two groups’ reaction time for blue light, but not for any other colors or for mean accuracy rate. SVM and RUSBoosted trees were found to have the best preclinical dementia prediction capabilities among the tested classifier algorithms, both presenting an accuracy rate of 77.1%.

Conclusions

CRT testing with machine learning effectively differentiates cognitive capacities in older adults, facilitating early diagnosis and stratification of neurocognitive diseases; it can also identify impairments from stressors such as dehydration and sleep deprivation. This study highlights the potential of portable CRT devices for monitoring cognitive function, including SCD and MCI.

Keywords: Elderly medicine, machine learning general, remote patient monitoring personalized medicine, mental health psychology, connected devices personalized medicine, well-being psychology

Introduction

Deceleration in sensorimotor processing times in elders and literature references

Aging is an inevitable part of the human experience, bringing about a multitude of physical and cognitive transformations. These transformations are particularly evident in cognitive processing, especially in tasks demanding swift decision-making abilities.

In contrast to younger adults, older individuals exhibit diminished gray matter volumes, 1 decreased integrity in white matter, 2 and engage extra neural resources during task performance. 3

These factors collectively may contribute to the observed deceleration in sensorimotor processing times. Among these tasks, choice reaction time (CRT) tests have captivated researchers as they gauge an individual's promptness in responding to diverse stimuli. While it is firmly established that aging correlates with delayed processing in CRT tasks, the specific cognitive phases affected by the aging process have remained somewhat elusive. 4

Recent research has provided clarity on this subject, indicating that the age-related deceleration in visual CRT latencies is predominantly influenced by delays in response selection and production. 4 Prior studies have consistently identified age-related latency increases in visual CRT tasks across various experimental paradigms, aiming to elucidate the relationship between aging and cognitive functions, with a specific focus on the processes of stimulus perception and discrimination. 5

Other studies suggested that older adults prioritize accuracy over speed, leading to slower response times. However, Hardwick et al. challenge this notion by uncovering a consistent delay of approximately 90 ms between the minimum time required for movement preparation and the self-selected initiation time across the age range from 21 to 80. This suggests that the deceleration in response times among older adults may be linked to changes in their capacity to process stimuli and prepare for movements. 6

MCI represents a transitional phase in the continuum of cognitive aging, bridging the gap between typical age-related changes and the emergence of dementia, including conditions like Alzheimer's disease. Within the spectrum of MCI, cognitive deficits can manifest across several domains, encompassing memory, language, attention, visuospatial capabilities, and executive functions. Existing evidence suggests that choice reaction time (CRT) tasks may induce behavioral changes and improve objective cognitive performance in individuals diagnosed with MCI. Nevertheless, it is important to note that the effects of CRT on specific cognitive domains exhibit variability across different studies. 7

The extensive body of evidence underscoring the connection between reaction time (RT) and certain facets of brain structural integrity is highly pertinent to the potential clinical implications of this research. RT, serving as an indicator of information processing speed denoting the time interval between stimulus presentation and behavioral response, when measured across multiple trials of computer-based stimulus-response assessments, can serve as a behavioral indicator of neurophysiological integrity. 8

Simple reaction times are valid for measuring cognitive functions in people with physiological aging and those in the preclinical phase of dementia. Moreover, the accuracy rate can correlate significantly with episodic memory performance and other cognitive functions.9,10

Arguments for choice reaction time evaluation in MCI

Chen KC et al. 11 highlighted significant differences in Cognitive Abilities Screening Instrument (CASI), simple reaction time (SRT), and flanker reaction time (FRT) scores among patients with MCI, Alzheimer's disease, and healthy subjects, either with or without adjustment for age or education. The reaction time of patients with Alzheimer's disease was significantly slower than the other two groups. Moreover, a significant correlation between CASI and FRT and altered performance in a speed task was found in patients with MCI.

Chen YT et al. 12 found significant differences between the normal group, the MCI group, and the moderate-to-severe dementia group in both the reaction time and accuracy rate analyses. The reaction times of the MCI and dementia groups were shorter compared to those of the normal group, with poorer results also observed in accuracy rate. Moderate-to-severe subjects needed a longer response time than the normal and MCI groups, which may also indirectly lead to a lower accuracy rate when game speed is faster. Although in the reaction time analysis, the performance times of the MCI and dementia groups seemed shorter than those of the normal group for the same speed level, the accuracy rate analysis showed poor performance for both the MCI and dementia groups. These last two groups reacted randomly by pressing the button when the speed was increased, without considering the accuracy of the chosen object.

IoT sensor and tech: the need for an IoT CRT device in literature

Within the evolving landscape of tracking technologies for cognitive impairments such as dementia and a-MCI, there exist many diagnostic tools, each with its own strengths and weaknesses. Some examples include the Mini-Mental State Examination (MMSE) 13 and the Montreal Cognitive Assessment, 14 both of which have been proven to be invaluable in the screening and severity assessment of cognitive decline. Despite their effectiveness within their respective specific use cases, both methods are limited by the fact that they can only offer a static snapshot of an individual's cognitive state at the time of testing. Importantly, due to the time-intensive nature of these tests and the necessity for a trained professional to administer and evaluate the results, the frequency of these snapshots is constrained to instances when both the patient and the doctor can be available simultaneously. Typically, this would be done during a physical or virtual check-up appointment, incurring high costs and possible travel time.

Much more valuable than an occasional static snapshot of cognitive performance would be a method that employs continuous monitoring. This is where the herein proposed method becomes relevant. Alteration in reaction time has been shown to be correlated with increases in cognitive impairment,15–17 and a continuous stream of CRT data would be a critical factor in quickly identifying the subtle, often-overlooked early signs of cognitive impairment. Reliable early detection translates to timely treatment, potentially improving the prognosis of those who suffer from these conditions.

To facilitate continuous monitoring, we suggest that a CRT device's main requirements should be oriented toward core attributes such as connected, embedded, secure, and easy to use.

Connected. The Internet of Things (IoT), with its vast network of interconnected devices, provides an unparalleled platform for capturing and instantly transmitting continuous, real-world biometrics from the patient to the healthcare provider.18,19 This simultaneously ensures rapid and accurate communication of crucial medical data while reducing the financial and time costs of physical doctor visits. IoT technology also allows for the seamless integration of multiple medical devices, providing the opportunity for CRT to be part of a larger, individualized system of medical devices that relays a more holistic picture of the patient to the healthcare provider.

Embedded. By embedding IoT capabilities directly into the device, it is possible to facilitate more seamless and continuous connectivity without relying on external components. Embedded refers to the nonvisible technological features of the device, which are the “brains” that make deep integration with other similarly capable devices possible. 20 This allows the device to operate without the need for separate connections, capturing real-time biometric data and transmitting it securely to healthcare providers. Unlike devices that rely on external attachments, embedded solutions eliminate potential issues related to compatibility and ensure a compact, streamlined design. This not only enhances the device's reliability but also contributes to its portability, making it an unobtrusive and user-friendly tool for continuous cognitive monitoring. Additionally, embedded IoT architecture facilitates efficient integration with other medical devices, fostering a comprehensive and personalized healthcare system that can adapt to the unique needs of each individual.

Secure. A major limiting factor hindering the widespread adoption of IoT medical devices in clinical applications is data security. 21 Sensitive personal medical data must be handled securely both for ethical and legal reasons but also to foster a climate of trust among users, researchers, and healthcare providers such that there is as little friction as possible in adherence to continuous monitoring protocols. Self-contained embedded data processing avoids the step of sending personal medical data to a centralized server for processing before being forwarded to the healthcare provider. This ensures increased privacy, mitigating the vulnerabilities associated with potential unauthorized access or data breaches linked with cloud-based solutions.

Easy to Use. Ease of use and accessibility have been shown to be significant factors in adherence to medical protocols, especially in older patients and patients experiencing symptoms of cognitive impairment. 22 It has been documented that the willingness of participants to utilize IoT services is negatively correlated with the number and severity of their disabilities, 23 so the simpler and more frictionless the user experience is, the more likely it is for an older and/or cognitively impaired subject to willingly adopt the technology. The two principal accessibility aspects are a simple user interface and device portability. A simple user interface with minimal controls reduces the technological barrier to almost zero, making it an ideal solution for patients with disabilities. Devices such as smartphone applications or other medical devices often require complex inputs and return many outputs, making them difficult to use for cognitively impaired individuals or individuals unfamiliar with modern technology. Portability (meaning lightweight and with a small form factor) is also important, reducing friction related to storage, transport, and use. Many medical devices are heavy and bulky and require wires for both power and data transfer. These aspects can be mitigated using embedded IoT technologies. The CRT prototype used in this study is a small, wireless, handheld device not much larger than a smartphone. It has no screen, with just a single LED as output. The user inputs are similarly simple, with only three controls: (a) one power switch and (b) two choice buttons. It can easily be stored in a drawer when not in use and is lightweight enough to be carried in a single hand with minimal effort, even by an individual lacking muscle strength or motor control. The deliberately basic user interface design and the unobtrusive form factor lower the barrier to use as much as possible.

There are some existing tools and platforms that appear similar, leveraging CRT-like assessments for cognitive evaluations and IoT-like telecommunication schemes for data transfer. These alternatives, however, do not fully implement the idea of a direct IoT device specifically designed for CRT for patients with cognitive impairment. Here are some noteworthy existing platforms and tools:

Cogstate. 24 Originally designed for research, this platform provides computerized cognitive tests that evaluate multiple facets of cognition, including reaction time.

Cambridge Cognition CANTAB. 25 A well-established platform used worldwide in research and clinical settings. While it is not purely an IoT platform, its tablet-based assessments can measure aspects like reaction time and other cognitive domains.

BrainCheck. 26 An app-based platform available on tablets and smartphones. It offers a battery of neurocognitive tests, including reaction time assessments.

NeuroSky. 27 While better known for their EEG technologies, they also offer tools and SDKs for cognitive assessments that developers could integrate into broader IoT ecosystems.

Pebble Watch. 28 While not strictly a cognitive assessment tool, Pebble's smartwatch has been used in research settings to develop CRT-like apps for assessing cognitive performance, taking advantage of the watch's tactile interface and connectivity.

Leveraging IoT-based CRT systems for assessing cognitive function in elderly and other neurologically impaired individuals can be transformative. Here is a summary list of relevant points supporting this claim, each supported by a relevant reference: enhanced accessibility and convenience, 29 continuous real-time monitoring, 30 promotion of early detection, 31 empowerment through feedback, 32 collection for research, 33 integration with other health monitoring systems, 34 and cost-efficiency. 35

The integration of CRT into IoT devices for the elderly offers a promising frontier in cognitive health monitoring. Such devices can revolutionize early detection, continuous assessment, and personalized interventions, all while providing valuable data for broader research into cognitive aging and related conditions.

In this article, we explore the urgent need for developing an IoT-based CRT device reinforced by machine learning and artificial intelligence (AI) models. Drawing on existing research and recent technological advancements, we advocate for a paradigm shift in the landscape of cognitive performance monitoring—steering away from episodic assessments towards continuous AI-driven monitoring.

Hypothesis elaboration and reasoning

The main objective of this study is to determine the differences in reaction time and accuracy rate between two categories of participants: (a) SCD and na-MCI and (b) a-MCI and a-MCI-MD, using a device designed specifically for this goal.

Our research hypotheses are:

H1. Is the reaction time significantly different between the two compared groups?

H2. Is the accuracy rate significantly different between the two compared groups?

H3. Are these differences predictive of a preclinical phase of dementia?

Methods

Pilot methodology

A pilot study was set up in Italy and Romania to test the hypothesis. Thirty-five older adults participated voluntarily in a 2-day test assessment. Two main approaches were taken into consideration simultaneously:

Mini-Mental State Examination (MMSE), 13 a neuropsychological test for evaluating disorders of intellectual efficiency and the presence of cognitive impairment. The test consists of 30 questions covering several cognitive areas: orientation in time and space, registration of words, attention and calculation, recall, language, and constructive praxis. The total score ranges from 0 to 30 points. A score of 26 to 30 indicates cognitive normality. The score is adjusted using a coefficient for age and schooling.

The proposed choice reaction time (CRT) prototype was defined and developed by the Institute of Space Science-Subsidiary of INFLPR within the “SAfety of elderly people and Vicinity Ensuring” (SAVE) project, 36 cofinanced by the European Commission within the Active Assisted Living (AAL) Programme. The device measures the time it takes for a person to choose an action (react) when one of two stimuli is presented. The two (visual) stimuli may be simple (always the same color), usually used for the target, or combined (one of two colors), usually used for distraction. The principle is that the duration of the decision path (the eye as input, the brain as decision-maker, and the hand muscles executing the decision) depends on the state of the brain.

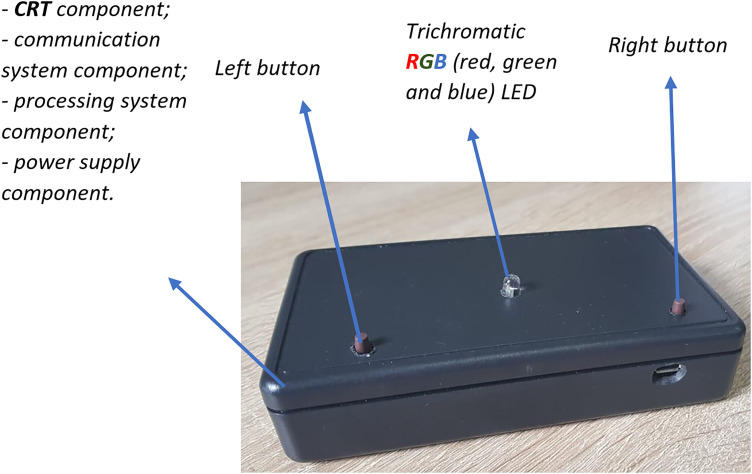

Vizitiu et al. 37 present an extensible e-Health Internet-connected embedded system, shown in Figure 1, which allows cognitive assessment through choice reaction time (CRT). The design process (specifications, software design, and hardware design) is detailed in the study of Bira. 38 Because the chosen microcontroller lacks a hardware debugger, the simulator described in the study of Bîră 39 was used to simulate the state machine of the CRT system and confirm that the timing requirements were met.

Figure 1.

Choice reaction time device.

The decision to use blue as the target color and red and green as distractors in a choice reaction time task can be attributed to several factors, including color psychology and cognitive processing.40–42 Blue is often associated with tranquility and clarity, making it a highly distinguishable and attention-grabbing color. As the target color, blue prompts a rapid and accurate response from participants, facilitated by its perceptual saliency. In contrast, red and green, being more common in natural and cultural contexts, may induce cognitive interference due to their prevalence. By using red and green as distractors, participants must actively inhibit the instinctual response triggered by these colors and instead focus on the designated target color, blue. 43 This setup allows researchers to investigate cognitive processes such as selective attention and response inhibition in a controlled experimental environment, where participants must make rapid decisions based on specific visual cues.44,45 Additionally, assigning different response buttons for the target and distractor colors adds an additional layer of cognitive demand enhancing the complexity of the task and providing insights into the mechanisms underlying cognitive control and decision-making.46–48

Beyond these specifications, the device had to be portable (lightweight and battery-powered), accept color-based choice input through two tactile switches, and be an Internet-connected device so that acquired data can be output to the cloud and the randomness of the generated stimuli ensured.

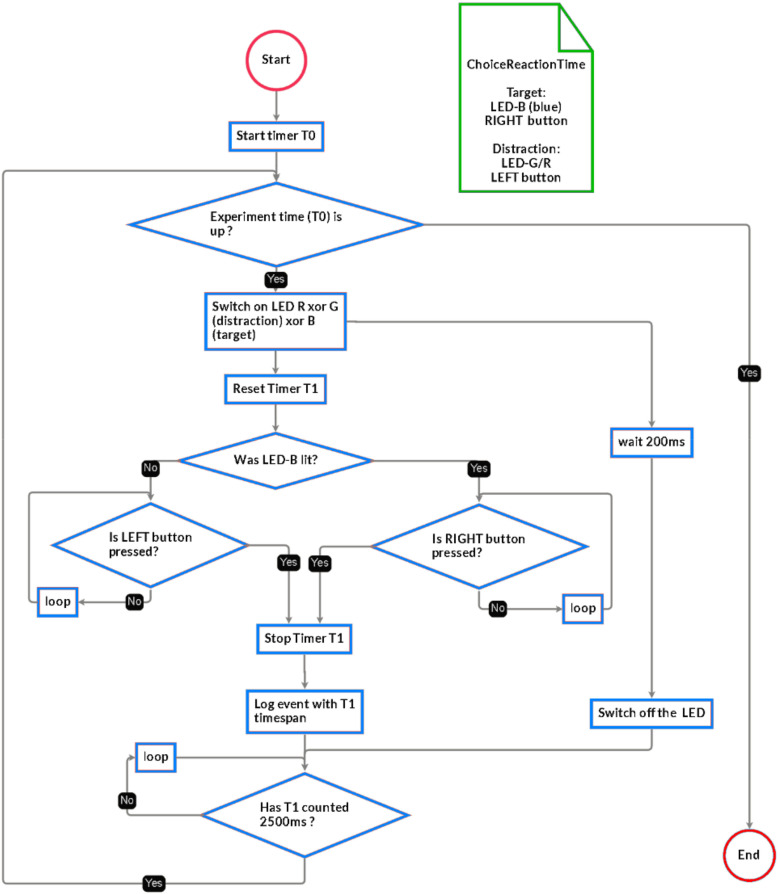

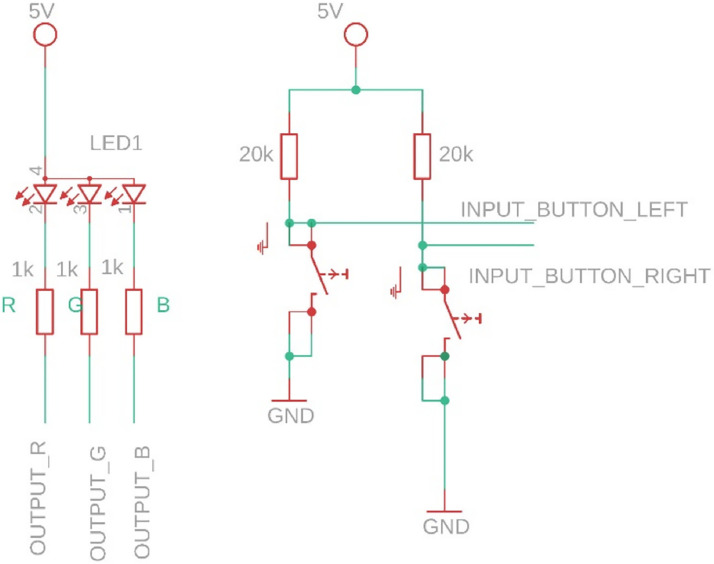

The hardware design is based on an embedded system (the microcontroller is programmed in C++ language) having one red-green-blue (RGB) LED and two buttons. In addition, it is fitted with a USB-serial connection for programming, a wireless network interface, and a charger + Li-Poly battery to enable remote utilization. The software design was based on certain timing requirements that needed to be fulfilled, but they are fully adjustable. A flowchart with the operation is in the study of Bira 38 and depicted in Figure 2, and a hardware schematic of the input and output system may be found in the study of Vizitiu et al. 37 and is depicted in Figure 3.

Figure 2.

Flowchart (FSM) of the CRT system as described in the study of Stadlbauer et al. 2 and Bira. 38

Figure 3.

Because the results are analyzed statistically, we aimed to remove every source of bias other than cognitive impairment itself. We therefore made the probability of target and distractor appearance equal (approximately 1/3 for each color) and ensured that the order of appearance was random. Specifically, we implemented an embedded pseudorandom number generator (pRNG) that combines hardware (for the seed) and software, and tested its output for randomness using the National Institute of Standards and Technology (NIST) SP 800-22 Statistical Test Suite. 49
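As an illustration of the kind of check contained in the NIST SP 800-22 suite, the sketch below implements only its first test, the frequency (monobit) test. It is not the firmware's pRNG or the authors' test harness: the function name `monobit_p_value` is hypothetical, and Python's `random` module merely stands in for the device generator.

```python
import math
import random


def monobit_p_value(bits):
    """Frequency (monobit) test: p-value for a sequence of 0/1 values."""
    n = len(bits)
    if n == 0:
        raise ValueError("need at least one bit")
    # Map 0 -> -1 and 1 -> +1 and sum; a balanced sequence sums to about 0.
    s_n = sum(1 if b else -1 for b in bits)
    s_obs = abs(s_n) / math.sqrt(n)
    # Two-sided tail probability under the standard normal approximation.
    return math.erfc(s_obs / math.sqrt(2))


# Assumption: a seeded software generator stands in for the embedded pRNG.
rng = random.Random(2024)
sample_bits = [rng.getrandbits(1) for _ in range(10_000)]
p_value = monobit_p_value(sample_bits)
# 0.01 is the significance level used throughout the NIST document.
passes = p_value >= 0.01
```

A sequence passes this single test when the p-value is at or above the chosen significance level; the full suite applies many further tests (runs, block frequency, and so on) that are not shown here.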

A statistic regarding the fastest/slowest reaction and correct or wrong response to stimuli is saved locally, and, if a computer is present, it may be saved as a file for later inspection.

The parameters that the CRT device extracts from each subject session are described below:

Reaction time: time elapsed between LED activation and correct button press, measured in milliseconds (ms)

Wrong red answers: number of rounds in which the subject pressed the right (R) button before the left (L) button within the allotted time following LED-R activation

Correct red answers: number of rounds in which the subject pressed the left (L) button within the allotted time following LED-R activation

Wrong blue answers: number of rounds in which the subject pressed the left (L) button before the right (R) button within the allotted time following LED-B activation

Correct blue answers: number of rounds in which the subject pressed the right (R) button within the allotted time following LED-B activation

Wrong green answers: number of rounds in which the subject pressed the right (R) button before the left (L) button within the allotted time following LED-G activation

Correct green answers: number of rounds in which the subject pressed the left (L) button within the allotted time following LED-G activation

Total correct answers: total number of rounds in which the subject pressed the correct corresponding button within the allotted time following LED activation

Total wrong answers: total number of rounds in which the subject pressed the incorrect button before the correct button within the allotted time following LED activation
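The parameters above can be sketched as a small tally over per-round records. This is a minimal illustration, not the device firmware (which is written in C++): the `Round` record, the `summarize_session` function, and the rule that a round is scored by the first button pressed within the allotted time are assumptions made for the example. The button mapping (blue to right, red and green to left) follows the parameter definitions.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional

# Correct button per LED color, as in the parameter definitions above.
CORRECT_BUTTON = {"red": "L", "green": "L", "blue": "R"}


@dataclass
class Round:
    color: str                      # "red", "green" or "blue"
    first_button: Optional[str]     # "L", "R", or None if nothing was pressed in time
    reaction_ms: Optional[float]    # time to the correct press; None otherwise


def summarize_session(rounds):
    """Tally correct/wrong answers and reaction-time statistics per color."""
    per_color = {c: {"correct": 0, "wrong": 0, "times": []} for c in CORRECT_BUTTON}
    for rnd in rounds:
        if rnd.first_button is None:
            continue  # assumption: a timeout is counted as neither correct nor wrong
        entry = per_color[rnd.color]
        if rnd.first_button == CORRECT_BUTTON[rnd.color]:
            entry["correct"] += 1
            entry["times"].append(rnd.reaction_ms)
        else:
            entry["wrong"] += 1
    summary = {}
    for color, entry in per_color.items():
        times = entry["times"]
        summary[color] = {
            "correct": entry["correct"],
            "wrong": entry["wrong"],
            "min_ms": min(times) if times else None,
            "max_ms": max(times) if times else None,
            "avg_ms": mean(times) if times else None,
        }
    summary["total_correct"] = sum(e["correct"] for e in per_color.values())
    summary["total_wrong"] = sum(e["wrong"] for e in per_color.values())
    return summary


# Hypothetical session with made-up values, for illustration only.
example_session = [
    Round("blue", "R", 512.0),
    Round("blue", "L", None),
    Round("red", "L", 634.0),
    Round("green", "L", 598.0),
    Round("green", None, None),
]
example_summary = summarize_session(example_session)
```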

Recruitment

The study involved older adults who met the inclusion and exclusion criteria described in Table 1.

Table 1.

Inclusion and exclusion criteria.

| Inclusion criteria | Exclusion criteria |
|---|---|
| | |

Description of the sample

A total of 35 participants were enrolled in this study, 11 males and 24 females, aged between 61 and 85 years (mean age 73.4 years, standard deviation [SD] 5.4).

The majority of seniors (60%) had a secondary education level, 20% had a primary level, and the other 20% had a tertiary level (university or further education). All the participants in the study had experience in using smartphones or other electronic devices including tablets or home sensors.

The average score of the MMSE was 27.7 ± 1.6 SD and that of the attention sub-dimension of the MMSE was 4.6 ± 0.5 SD.

On the basis of the latest neuropsychological evaluation and the cognitive diagnosis, the participants were divided into two categories: (a) 17 subjects with SCD and na-MCI and (b) 18 subjects with a-MCI and a-MCI-MD. This classification was proposed by the psychologists who supervised the study, based on their expertise and clinical experience and on the severity of the patients' condition.

Patients also reported suffering from the following pathologies, divided according to the two cognitive categories above-listed: (a) anxious-depressive syndrome, axial myopathy, scleroderma, mild Parkinson's disease, high blood pressure, hepatitis, osteoporosis, diabetes, cardiovascular diseases, prostate diseases, sleep disorders, kidney disease, gallbladder disease, and rheumatism and (b) myocardial infarction, anxious-depressive syndrome, Parkinson's disease, thyroid disease, chronic obstructive pulmonary disease, chronic migraine, chronic subdural hematoma, diabetes, high blood pressure, rheumatism, polyarthritis, cardiovascular diseases, spondylosis, prostate diseases, duodenal ulcer, lymphatic cancer, bronchial asthma, osteoporosis, and chronic polyneuropathy.

Table 2 shows the participants’ characteristics.

Table 2.

Participants’ characteristics.

| Characteristic | All participants (N = 35) |
|---|---|
| Age, mean ± SD | 73.4 ± 5.4 |
| Gender, n (%) | |
| Male | 11 (31.4%) |
| Female | 24 (68.6%) |
| Education, n (%) | |
| Primary | 7 (20.0%) |
| Secondary | 21 (60.0%) |
| Tertiary | 7 (20.0%) |
| MMSE, mean ± SD | 27.7 ± 1.6 |
| Attention MMSE, mean ± SD | 4.6 ± 0.5 |

Procedure

The study was carried out in accordance with the European Union Regulation No. 679 of the European Parliament and of the Council of 27 April 2016 and the Helsinki Declaration (2013). The Ethics Committee of IRCCS INRCA approved the research protocol in Italy on 31 March 2022 with approval number 0015579. It was approved in Romania by the Local Medical Ethical Commission of the Transilvania University of Brasov, with approval number: 1, 2/07/2021. The research team obtained the necessary permission to use all applicable tools/questionnaires in this study according to the approval of ethical commissions.

The INRCA and the Social Assistance Directorate of Brasov County (DAS), along with caregivers and the elderly, were approached by the research team at the beginning of the procedure. Participation was voluntary. Written informed consent was obtained from the elderly participants and their caregivers prior to participation in the study. The measurements were conducted on two consecutive days, after breakfast, starting at 9:00 am. On the first day, participants were evaluated with the MMSE questionnaire, and on the second day, they took part in the CRT assessment. The CRT assessment was conducted in individual sessions in a quiet room at DAS and INRCA. The caregivers were comprehensively informed about the research project and the conditions of participation. During the assessments, with the principals' agreement, each participant also completed the demographic questionnaire.

Each subject played the game once under identical CRT configurations.

Hypothesis testing

To test hypotheses H1 and H2, a statistical analysis was performed to identify statistically significant differences between the two groups of subjects. Hypothesis 1 refers to the reaction time variable and hypothesis 2 to the accuracy rate variable. Reaction times and correct/incorrect answers for each LED color were recorded during the test. The accuracy rate was calculated for each subject and each LED color. The mean reaction time was calculated as the mean of the three per-color average reaction times, and the mean accuracy rate as the mean of the three per-color accuracy rates. The Pearson correlation coefficient was computed to assess the relationship between reaction time and MMSE scores, as well as between accuracy rate and MMSE scores. A Mann-Whitney test was performed to compare the accuracy rate and reaction time between the two groups. A significance level of 0.05 was used throughout the statistical analysis.
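The derived variables and the correlation described above can be sketched as follows. This is an illustrative sketch, not the authors' analysis script: the function names are hypothetical, and the definition of accuracy rate as correct answers divided by all answered rounds is an assumption, since the exact formula is not stated.

```python
import math


def accuracy_rate(correct, wrong):
    """Assumed definition: share of answered rounds that were correct."""
    total = correct + wrong
    return correct / total if total else 0.0


def mean_across_colors(per_color):
    """Mean of the per-color values (red, green, blue)."""
    return sum(per_color.values()) / len(per_color)


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return sxy / math.sqrt(sxx * syy)


# Made-up values for one hypothetical subject, for illustration only.
subject_accuracy = {
    "red": accuracy_rate(9, 1),
    "green": accuracy_rate(8, 2),
    "blue": accuracy_rate(10, 0),
}
subject_mean_accuracy = mean_across_colors(subject_accuracy)
```

Applied across subjects, `pearson_r` would take the list of MMSE scores and the list of mean reaction times (or mean accuracy rates) in the same subject order.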

To test hypothesis H3, the performance of several machine learning classifiers trained for the automatic detection of the two categories of participants was evaluated. The data considered for the development of an automatic cognitive diagnosis tool included age, gender, education level, MMSE score, MMSE attention score, CRT statistics (number of wrong and right responses and the minimum, maximum, average, and standard deviation of the response time for each light color, i.e., red, green, and blue), and cognitive diagnosis. The classifiers were developed using the MATLAB Classification Learner tool, and the Principal Component Analysis (PCA) code was adapted from a PCA tutorial published by GeeksforGeeks. 50

Data analysis

Nonparametric tests were used in this research because the analysis of the variables showed a violation of the normality assumption. The Spearman test (rho) was used to calculate the association between variables. Differences between the two independent groups were assessed with the Mann-Whitney (U) test, which does not require normally distributed data.
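For readers unfamiliar with the Mann-Whitney test, the sketch below shows how the U statistic is obtained from raw values by ranking the pooled samples, with tied values sharing their average rank. It is a generic textbook computation with hypothetical function names, not the statistical package used in the study.

```python
def midranks(values):
    """Ranks starting at 1; tied values receive the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = average_rank
        i = j + 1
    return ranks


def mann_whitney_u(group_a, group_b):
    """Return (U_a, U_b) for two independent samples."""
    n_a, n_b = len(group_a), len(group_b)
    ranks = midranks(list(group_a) + list(group_b))
    rank_sum_a = sum(ranks[:n_a])
    u_a = rank_sum_a - n_a * (n_a + 1) / 2
    u_b = n_a * n_b - u_a
    return u_a, u_b
```

The two statistics always sum to the product of the group sizes, so reporting either one is sufficient; which one a given software package prints is a matter of convention.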

PCA was used to extract the first two principal components and visualize them in a 2D graph.
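The extraction of the first two principal components can be sketched without external libraries using power iteration with deflation. This is a swapped-in illustrative technique, not the MATLAB code used in the study: standardizing each feature before the decomposition, the iteration count, and all function names are assumptions.

```python
import math
import random


def standardize(rows):
    """Center each column and scale it to unit sample standard deviation."""
    columns = []
    for col in zip(*rows):
        m = sum(col) / len(col)
        sd = math.sqrt(sum((v - m) ** 2 for v in col) / (len(col) - 1))
        columns.append([(v - m) / sd if sd else 0.0 for v in col])
    return [list(r) for r in zip(*columns)]


def covariance(rows):
    """Sample covariance matrix of already centered rows."""
    n, d = len(rows), len(rows[0])
    return [[sum(r[i] * r[j] for r in rows) / (n - 1) for j in range(d)]
            for i in range(d)]


def mat_vec(matrix, v):
    return [sum(m_ij * v_j for m_ij, v_j in zip(row, v)) for row in matrix]


def top_eigenpair(matrix, iterations=500, seed=0):
    """Dominant eigenvalue and eigenvector of a symmetric matrix (power iteration)."""
    rng = random.Random(seed)
    v = [rng.random() + 0.5 for _ in matrix]
    norm = math.sqrt(sum(x * x for x in v))
    v = [x / norm for x in v]
    for _ in range(iterations):
        w = mat_vec(matrix, v)
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0:
            break  # zero matrix: keep the current unit vector
        v = [x / norm for x in w]
    eigenvalue = sum(a * b for a, b in zip(v, mat_vec(matrix, v)))
    return eigenvalue, v


def first_two_components(rows):
    """Return 2D scores per row and the two leading eigenvalues."""
    z = standardize(rows)
    cov = covariance(z)
    l1, v1 = top_eigenpair(cov)
    # Deflation: remove the first component so the next one becomes dominant.
    d = len(cov)
    deflated = [[cov[i][j] - l1 * v1[i] * v1[j] for j in range(d)] for i in range(d)]
    l2, v2 = top_eigenpair(deflated)
    scores = [(sum(a * b for a, b in zip(r, v1)), sum(a * b for a, b in zip(r, v2)))
              for r in z]
    return scores, (l1, l2)
```

Each subject's feature row maps to one point in the resulting 2D scatter plot; the two eigenvalues indicate how much of the standardized variance each axis carries.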

Machine learning and artificial intelligence algorithms were developed and tested to estimate the feasibility of an automatic cognitive diagnosis tool.

Statistical randomness tests were performed to verify that the target and distractor stimuli appeared with equal probability and that their order of appearance was random.
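Whether the three colors appear with equal probability can be checked with a chi-square goodness-of-fit test on the observed color counts. The sketch below is a generic illustration with a hypothetical function name, not the test procedure used by the authors. With three categories there are two degrees of freedom, for which the chi-square tail probability has the closed form exp(-x/2).

```python
import math
from collections import Counter


def color_uniformity_test(colors, palette=("red", "green", "blue")):
    """Chi-square statistic and p-value for equal color frequencies (3 colors only)."""
    if len(palette) != 3:
        raise ValueError("closed-form p-value below assumes exactly 3 categories")
    if not colors:
        raise ValueError("need at least one observation")
    counts = Counter(colors)
    expected = len(colors) / len(palette)
    statistic = sum((counts[c] - expected) ** 2 / expected for c in palette)
    # Survival function of the chi-square distribution with 2 degrees of freedom.
    p_value = math.exp(-statistic / 2)
    return statistic, p_value
```

A small p-value would indicate that the observed color frequencies deviate from the intended 1/3 split more than chance alone would explain.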

Results

Hypothesis 1

Table 3 shows the results of the Pearson correlation coefficient (R) analysis between the MMSE score and reaction time for each LED color and for mean reaction time. Average reaction time showed a moderate inverse linear correlation with the MMSE score for all three LED colors, with R values of −0.404, −0.424, and −0.440 for the red, green, and blue LED, respectively. Mean reaction time also showed a moderate inverse correlation, with an R of −0.464.

Table 3.

MMSE Pearson correlation with reaction time.

| Variable | R | P |
|---|---|---|
| Red minimum reaction time | −0.396 | 0.018 |
| Red maximum reaction time | −0.244 | 0.157 |
| Red average reaction time | −0.404 | 0.016 |
| Red standard deviation reaction time | −0.213 | 0.219 |
| Green minimum reaction time | −0.460 | 0.005 |
| Green maximum reaction time | −0.187 | 0.282 |
| Green average reaction time | −0.424 | 0.011 |
| Green standard deviation reaction time | −0.273 | 0.113 |
| Blue minimum reaction time | −0.403 | 0.016 |
| Blue maximum reaction time | −0.301 | 0.079 |
| Blue average reaction time | −0.440 | 0.008 |
| Blue standard deviation reaction time | −0.261 | 0.131 |
| Mean reaction time | −0.464 | 0.005 |

Table—regression model with 1 predictor

The Mann-Whitney U test indicated a significant difference between the two groups in blue reaction time for the maximum (P = .042) and the standard deviation (P = .031), whereas the difference in blue average reaction time did not reach the 0.05 significance level (P = .062), as shown in Table 4.

Table 4.

Mann–Whitney U test.

| Variable | Mann–Whitney U | Z | P | r (effect size) |
|---|---|---|---|---|
| Red minimum reaction time | 186 | −1.073 | 0.283 | 0.181 |
| Red maximum reaction time | 160 | −0.215 | 0.830 | 0.036 |
| Red average reaction time | 183 | −0.974 | 0.330 | 0.165 |
| Red standard deviation reaction time | 152 | 0.017 | 0.987 | 0.003 |
| Green minimum reaction time | 182 | −0.941 | 0.347 | 0.159 |
| Green maximum reaction time | 160 | −0.215 | 0.830 | 0.036 |
| Green average reaction time | 185 | −1.040 | 0.299 | 0.176 |
| Green standard deviation reaction time | 167 | −0.446 | 0.656 | 0.075 |
| Blue minimum reaction time | 174 | −0.677 | 0.499 | 0.114 |
| Blue maximum reaction time | 215 | −2.030 | 0.042 | 0.343 |
| Blue average reaction time | 210 | −1.865 | 0.062 | 0.315 |
| Blue standard deviation reaction time | 219 | −2.162 | 0.031 | 0.365 |
| Mean reaction time | 195 | −1.370 | 0.171 | 0.232 |
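The effect sizes in Table 4 are numerically consistent with the common convention r = |Z|/√N. The sketch below reproduces three of them under the assumption that N = 35 (the total sample size); the formula is inferred from the tabulated values rather than stated by the authors, and the function name is illustrative.

```python
import math

def effect_size_r(z, n):
    """Effect size r = |Z| / sqrt(N) for a Mann-Whitney U test."""
    return abs(z) / math.sqrt(n)

# Z values copied from Table 4; N = 35 is an assumption (total sample size).
table4_z = {
    "Blue maximum reaction time": -2.030,
    "Blue average reaction time": -1.865,
    "Blue standard deviation reaction time": -2.162,
}
for name, z in table4_z.items():
    print(f"{name}: r = {effect_size_r(z, 35):.3f}")
```

Under this convention, values near 0.3 are usually read as medium effects, which matches the blue-light rows.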

Hypothesis 2

As can be observed in Table 5, accuracy rate correlated positively with MMSE for each LED color (R = 0.373, 0.623, and 0.499 for red, green, and blue, respectively), and the mean accuracy rate showed a moderate correlation with MMSE (R = 0.582).

Table 5.

MMSE Pearson correlation with accuracy rate.

| Variable | R | P |
|---|---|---|
| Red accuracy rate | 0.373 | 0.027 |
| Green accuracy rate | 0.623 | <0.001 |
| Blue accuracy rate | 0.499 | 0.002 |
| Mean accuracy rate | 0.582 | <0.001 |

The Mann-Whitney U test comparing the accuracy rates of the two groups showed a difference in green accuracy rate that approached, but fell short of, the conventional .05 significance threshold (P = .056), as shown in Table 6.

Table 6.

Mann–Whitney test.

| Variable | Mann–Whitney U | Z | P | r (effect size) |
|---|---|---|---|---|
| Red accuracy rate | 166 | −0.497 | 0.619 | 0.084 |
| Green accuracy rate | 104 | 1.911 | 0.056 | 0.323 |
| Blue accuracy rate | 139 | 0.479 | 0.632 | 0.081 |
| Mean accuracy rate | 117 | 1.207 | 0.227 | 0.204 |

Hypothesis 3

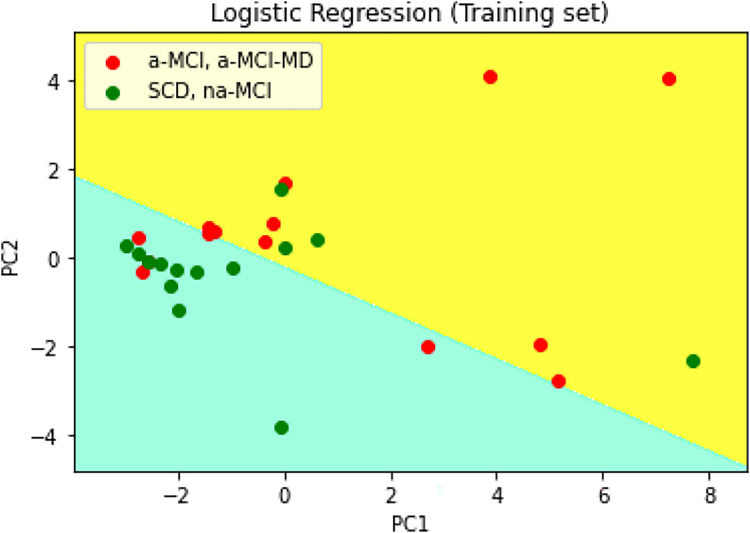

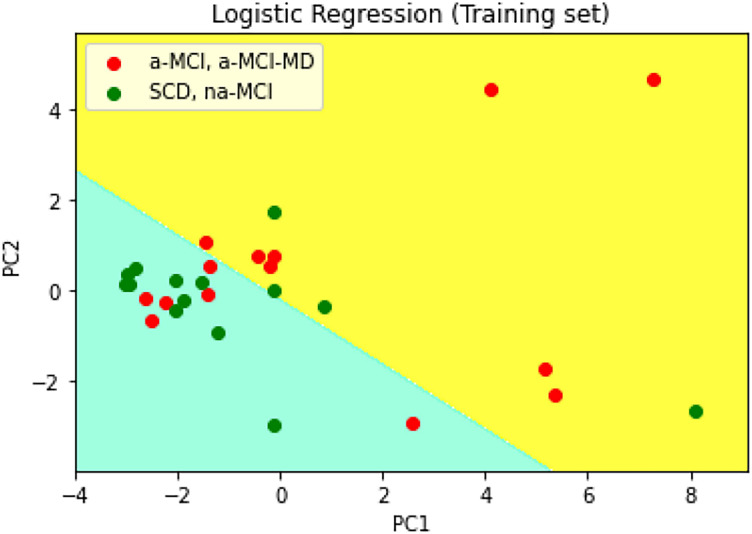

A PCA was performed on the data provided by the CRT device (the number of wrong and right answers; the minimum, maximum, and average response time; and the standard deviation of the response time, each recorded for the red, green, and blue lights) to extract the first two principal components and visualize them in a two-dimensional graph, as can be observed in Figure 4.

Figure 4.

PCA prediction on training set—CRT data.

A second PCA was performed on the data provided by the CRT device together with data related to the subject (i.e., age, gender, educational level, MMSE score) to extract the first two principal components and visualize them in a two-dimensional graph, as can be observed in Figure 5.

Figure 5.

PCA prediction on training set—CRT and demographic data.
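The projection onto the first two principal components can be sketched in plain Python as follows. This is an illustration only, not the authors' MATLAB workflow: it assumes that the features are z-scored before PCA (the source does not say whether they were), it uses power iteration with deflation in place of a library eigen-solver, and the data are synthetic stand-ins rather than study measurements. All function names are hypothetical.

```python
import math
import random

def standardize(rows):
    """Z-score each column (assumption: features are scaled before PCA)."""
    n, d = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(d)]
    stds = [math.sqrt(sum((r[j] - means[j]) ** 2 for r in rows) / (n - 1))
            for j in range(d)]
    return [[(r[j] - means[j]) / (stds[j] or 1.0) for j in range(d)] for r in rows]

def covariance(rows):
    """Sample covariance matrix of already-centered rows."""
    n, d = len(rows), len(rows[0])
    return [[sum(r[i] * r[j] for r in rows) / (n - 1) for j in range(d)]
            for i in range(d)]

def leading_eigenpair(mat, rng, iters=1000):
    """Power iteration: largest eigenvalue and unit eigenvector of a symmetric matrix."""
    d = len(mat)
    v = [rng.gauss(0, 1) for _ in range(d)]
    norm = math.sqrt(sum(x * x for x in v))
    v = [x / norm for x in v]
    for _ in range(iters):
        w = [sum(mat[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:
            break
        v = [x / norm for x in w]
    mv = [sum(mat[i][j] * v[j] for j in range(d)) for i in range(d)]
    return sum(a * b for a, b in zip(v, mv)), v

def pca_first_two(rows, seed=0):
    """Project rows onto the first two principal components."""
    rng = random.Random(seed)
    z = standardize(rows)
    cov = covariance(z)
    d = len(cov)
    components = []
    for _ in range(2):
        lam, v = leading_eigenpair(cov, rng)
        components.append(v)
        # Deflation: subtract the found component so the next pass finds the following one.
        cov = [[cov[i][j] - lam * v[i] * v[j] for j in range(d)] for i in range(d)]
    return [[sum(a * b for a, b in zip(row, c)) for c in components] for row in z]

# Synthetic stand-in data (NOT study data): 35 rows, 3 features, two of them correlated.
data_rng = random.Random(1)
data = []
for _ in range(35):
    base = data_rng.gauss(500, 80)
    data.append([base, 2 * base + data_rng.gauss(0, 20), data_rng.gauss(0.9, 0.05)])
scores = pca_first_two(data)
```

Each entry of `scores` is a pair of coordinates that could be drawn in a two-dimensional scatterplot, colored by group, in the spirit of Figures 4 and 5.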

Based on the PCA and the statistical analysis outcomes, we foresee the development of an automatic cognitive diagnosis tool. Three approaches were considered, using the MATLAB Classification Learner Tool:

A1. Building a classifier that uses the data extracted by the CRT device for each LED lighting color (i.e., number of wrong answers, number of correct answers, minimum response time, maximum response time, average response time, and standard deviation) together with data about the person performing the test (i.e., age, gender, education level, MMSE score).

A2. Building a classifier that uses only the data extracted by the CRT device for each LED lighting color.

A3. Building a classifier that uses parameters indicated by the statistical analysis developed for Hypotheses 1 and 2.

All the classifiers available in the MATLAB Classification Learner Tool were tested using leave-one-out cross-validation. Four feature configurations were tested for each approach: PCA retaining one, two, or three components (e.g., PCA 1/23, PCA 2/23, and PCA 3/23 features for A1) and the full feature set without PCA. Table 7 presents the best-performing classifiers tested with the Classification Learner Tool and the accuracy they obtained at validation. For the classifier development, 35 examples were used, in accordance with the H1 and H2 testing processes.

Table 7.

Classifier ranking by accuracy on validation using MATLAB Classification Learner Tool.

| Feature set | First classifier: accuracy (%) | First classifier: name | Second classifier: accuracy (%) | Second classifier: name | Third classifier: accuracy (%) | Third classifier: name |
|---|---|---|---|---|---|---|
| A1. | | | | | | |
| 23 features | 77.1 | Linear SVM | 74.3 | MGSVM | 74.3 | Cosine KNN |
| PCA 3/23 features | 77.1 | RUSBoosted trees | 60.0 | Efficient linear SVM | 60.0 | Narrow neural network |
| PCA 2/23 features | 71.4 | RUSBoosted trees | 68.6 | Fine KNN | 65.7 | Binary GLM logistic regression |
| PCA 1/23 features | 71.4 | RUSBoosted trees | 65.7 | Linear discriminant | 65.7 | Efficient logistic regression |
| A2. | | | | | | |
| 18 features | 71.4 | Medium neural network | 65.7 | Bagged trees | 62.9 | Kernel naive Bayes |
| PCA 3/18 features | 74.3 | RUSBoosted trees | 62.9 | Trilayered neural network | 60.0 | Efficient linear SVM |
| PCA 2/18 features | 77.1 | RUSBoosted trees | 68.6 | Fine KNN | 65.7 | Binary GLM logistic regression |
| PCA 1/18 features | 74.3 | RUSBoosted trees | 65.7 | Linear discriminant | 65.7 | Efficient logistic regression |
| A3. | | | | | | |
| 10 features | 66.7 | RUSBoosted trees | 63.6 | Kernel naive Bayes | 63.6 | Narrow neural network |
| PCA 3/10 features | 66.7 | Fine tree | 66.7 | Medium tree | 66.7 | Coarse tree |
| PCA 2/10 features | 69.7 | RUSBoosted trees | 66.7 | Trilayered neural network | 63.6 | Cosine KNN |
| PCA 1/10 features | 69.7 | Trees | 69.7 | Narrow neural network | 69.7 | RUSBoosted trees |
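Leave-one-out cross-validation, as used for the ranking above, holds out each example once, trains on the remaining ones, and reports the share of held-out examples predicted correctly. The sketch below is a minimal illustration under stated assumptions: a 1-nearest-neighbor rule stands in for the MATLAB classifiers, the data are toy values, and the function names are hypothetical.

```python
import math

def nearest_neighbor_predict(train_x, train_y, x):
    """1-nearest-neighbor prediction using Euclidean distance."""
    best = min(range(len(train_x)), key=lambda i: math.dist(train_x[i], x))
    return train_y[best]

def loocv_accuracy(xs, ys, predict=nearest_neighbor_predict):
    """Leave-one-out CV: hold out each example once and train on the rest."""
    correct = 0
    for i in range(len(xs)):
        train_x = xs[:i] + xs[i + 1:]
        train_y = ys[:i] + ys[i + 1:]
        if predict(train_x, train_y, xs[i]) == ys[i]:
            correct += 1
    return correct / len(xs)

# Toy, well-separated data (hypothetical, not study data).
xs = [[0.0, 0.1], [0.2, 0.0], [0.1, 0.3], [5.0, 5.1], [5.2, 4.9], [4.8, 5.0]]
ys = [0, 0, 0, 1, 1, 1]
print(loocv_accuracy(xs, ys))

# With 35 held-out predictions, accuracy can only move in steps of 1/35.
print(round(100 * 27 / 35, 1))
```

Because each of the 35 examples is held out exactly once, the reported 77.1% is consistent with 27 of 35 examples being classified correctly.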

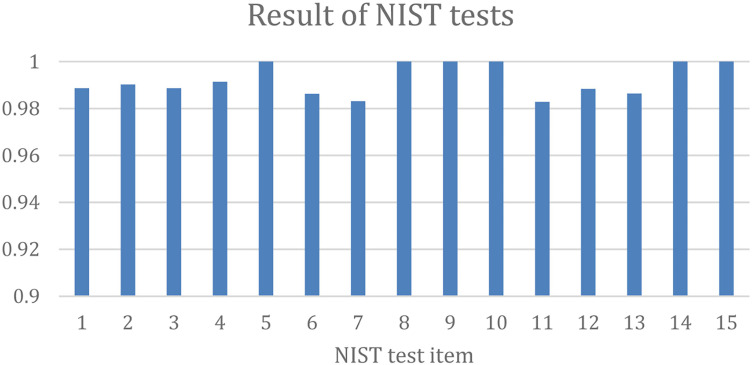

Regarding the statistical tests of randomness, a short description of the NIST tests is provided in Table 8, and the results are shown in Figure 6.

Table 8.

NIST SP800-22 statistical test description.

| Test idx | Test name | Description/test idea |
|---|---|---|
| 1 | monobit_test | 50% of bits should have a value of 1 |
| 2 | frequency_within_block_test | ∼50% of bits in disjoint substrings should have a value of 1 |
| 3 | runs_test | Strings of ones should alternate with strings of zeros in an adequate manner (not too slow, not too fast) |
| 4 | longest_run_ones_in_a_block_test | Length of the longest string of 1's as opposed to the number of bits in block |
| 5 | binary_matrix_rank_test | Reduced linear dependence among lines and columns of the matrix built with strings of successive ones and successive zeros |
| 6 | dft_test | No detection of periodic features |
| 7 | non_overlapping_template_matching_test | Should not exhibit too many or too few occurrences of a given aperiodic pattern |
| 8 | overlapping_template_matching_test | Should not exhibit too many or too few occurrences of fixed-length runs of 1's |
| 9 | maurers_universal_test | Bitstring cannot be compressed significantly |
| 10 | linear_complexity_test | High linear complexity |
| 11 | serial_test | Tests uniformity of distributions of patterns of given lengths |
| 12 | approximate_entropy_test | For fixed block length m, in long irregular strings, the approximate entropy of order m is close to log 2. The approximate entropy measures the logarithmic frequency with which blocks of length m that are close together remain close together for blocks augmented by one position |
| 13 | cumulative_sums_test | Maximum absolute value of the partial sums of the sequences represented in the ±1 fashion, which, depending on the value, indicates if there are too many zeros or ones at the early stages of the sequence, or if ones and zeros are intermixed too evenly |
| 14 | random_excursion_test | Detect deviations from the distribution of the number of visits of the random walk to a certain state (integer value) |
| 15 | random_excursion_variant_test | Alternative to the random excursion test |

Figure 6.

Results of NIST randomness tests.

The randomness test on the probability of occurrence of each of the three colors (R, G, B) was deemed acceptable, with each color appearing with a probability between 32.22% and 34.73%. In addition, we tested the quality of the random number generator (RNG)51,52 used in the embedded device (seeded by an analog input) with the NIST SP 800-22 Statistical Test Suite, in the Python 3 port from the study of the GINAR Team. 53 The results, obtained after a run of 2 million generated 2-bit numbers, can be seen in Table 9. The generated numbers were arranged in binary sequences of 256 bits, which were then served to the NIST tests as inputs. A full technical description of the NIST tests can be found in the study of Bassham et al. 49

Table 9.

Results for the NIST SP 800-22 statistical tests on CRT number generation.

| Test idx | Test name | P_average | Passed fraction |
|---|---|---|---|
| 1 | monobit_test | 0.5005351781619204 | 0.988608 |
| 2 | frequency_within_block_test | 0.4969930170690642 | 0.990208 |
| 3 | runs_test | 0.49392471325562814 | 0.988608 |
| 4 | longest_run_ones_in_a_block_test | 0.4894939245786458 | 0.99136 |
| 5 | binary_matrix_rank_test | 0.551297196314446 | 1.0 |
| 6 | dft_test | 0.48580863569792093 | 0.98624 |
| 7 | non_overlapping_template_matching_test | 0.5773950672796546 | 0.983104 |
| 8 | overlapping_template_matching_test | 0.5444954165703868 | 1.0 |
| 9 | maurers_universal_test | 0.9994843687476738 | 1.0 |
| 10 | linear_complexity_test | 0.36511318279867216 | 1.0 |
| 11 | serial_test | 0.4999943169717927 | 0.982848 |
| 12 | approximate_entropy_test | 0.49115065728384405 | 0.988352 |
| 13 | cumulative_sums_test | 0.5176099598864391 | 0.986368 |
| 14 | random_excursion_test | 0.5030803968097696 | 1.0 |
| 15 | random_excursion_variant_test | 0.5829187234084725 | 1.0 |
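To make the procedure concrete, the first test in Table 8 (the frequency, or monobit, test) can be sketched in a few lines of Python. This is a standalone illustration, not the ported suite used in the study. The way 2-bit numbers are concatenated into a bit string and the 0.01 pass threshold (the customary significance level in NIST SP 800-22) are assumptions, since neither is stated in the text; the function names are hypothetical.

```python
import math

def monobit_p_value(bits):
    """NIST SP 800-22 frequency (monobit) test P-value for a string of '0'/'1'."""
    n = len(bits)
    s_n = sum(1 if b == "1" else -1 for b in bits)  # map 1 -> +1 and 0 -> -1, then sum
    s_obs = abs(s_n) / math.sqrt(n)
    return math.erfc(s_obs / math.sqrt(2))

def to_bitstring(two_bit_numbers):
    """Concatenate 2-bit values (0..3) into one bit string (assumed encoding)."""
    return "".join(format(v, "02b") for v in two_bit_numbers)

def pass_fraction(bitstring, block_len=256, alpha=0.01):
    """Fraction of complete blocks whose monobit P-value is at least alpha."""
    blocks = [bitstring[i:i + block_len]
              for i in range(0, len(bitstring) - block_len + 1, block_len)]
    if not blocks:
        raise ValueError("need at least one complete block")
    passed = sum(monobit_p_value(b) >= alpha for b in blocks)
    return passed / len(blocks)

# Worked example from the NIST SP 800-22 documentation (n = 10).
print(round(monobit_p_value("1011010101"), 6))
```

For the ten-bit example, the sum of the ±1 values is 2, which gives a P-value of about 0.527, well above the 0.01 threshold.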

Discussion

Despite being exploratory (proof of concept), our study's results enable us to conclude that CRT tests can be used for both the screening of mild cognitive deficits and the stratification of neurocognitive diseases (mild, moderate, and severe). Similarly, CRT tests can be used to screen for impairments in higher mental functions under stressful work environments or other physiopathological circumstances (e.g., dehydration, hypoglycemia, and sleep deprivation) that can compromise the performance of healthy individuals.

To develop the machine learning classifiers under hypothesis H3, principal component analyses (PCA) were applied both to a dataset containing exclusively CRT data and to a dataset composed of both CRT data and subject data. The first two principal components were extracted and visualized as scatterplots, as can be observed in Figure 4 and Figure 5. The classifiers were ranked according to their performance at validation. As can be observed in Table 7, the best results were obtained in the configuration in which all the available data were considered, even without PCA. Good results were obtained with the following classifier families: support vector machines (SVM) (i.e., MGSVM, linear SVM), trees (i.e., RUSBoosted trees, fine trees), K-nearest neighbors (KNN) (i.e., cosine KNN, fine KNN), and neural networks (i.e., medium NN, trilayered NN, narrow NN). Although the parameters that reached statistical significance during the testing of hypotheses H1 and H2 play an important role in discriminating between the two classes, the remaining parameters also contribute to increasing the performance of the classifiers.

RUSBoosted trees stand out as a classifier combining two techniques: random under-sampling (RUS) and AdaBoost (adaptive boosting). RUS is effective on unbalanced data because it removes instances to balance the class distribution, which is necessary considering the existence of outliers in the dataset. AdaBoost, in turn, combines multiple weak learners to create a strong ensemble model. Hence, RUSBoosted trees could reduce bias, but at the cost of increased variance and a higher risk of overfitting.
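The random under-sampling step can be illustrated with a short Python sketch. It shows only how the majority class is thinned to match the minority class; the boosting stage is omitted, the class sizes are hypothetical rather than the study's group sizes, and the function name is illustrative and unrelated to MATLAB's implementation.

```python
import random
from collections import Counter, defaultdict

def random_undersample(samples, labels, seed=0):
    """Randomly drop examples so every class keeps as many as the smallest class."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for s, y in zip(samples, labels):
        by_class[y].append(s)
    target = min(len(items) for items in by_class.values())
    out_x, out_y = [], []
    for y, items in by_class.items():
        for s in rng.sample(items, target):  # sample without replacement
            out_x.append(s)
            out_y.append(y)
    return out_x, out_y

# Hypothetical imbalanced labels (not the study's group sizes).
samples = list(range(10))
labels = ["a"] * 7 + ["b"] * 3
bal_x, bal_y = random_undersample(samples, labels)
print(Counter(bal_y))
```

In a boosted ensemble of this kind, the under-sampling is typically repeated for each weak learner, so different majority-class examples are discarded in different rounds.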

Regarding the risk of overfitting due to the small sample size and high-dimensional data, different strategies were considered to mitigate this risk, such as data augmentation, weight regularization, early stopping, cross-validation, and sampling methods. Of these techniques, the best strategy was to use cross-validation, as presented in the Results section.

The additional stratification of seniors with cognitive disorders can be achieved by using techniques like CRT or RT, either on-site or through telemedicine. These methods take into account the five characteristics that are commonly found in these patients and are linked in the literature to a change in CRT performance: menopause, age >50, depression and/or anxiety, mild cognitive disorders (non-amnestic), and chronic pain syndromes.

From SRT to CRT and lexical and semantic judgments, there is a broad range of methodologically sound tests that can evaluate processing speed and reaction time for both verbal and nonverbal inputs. The family of RT and CRT tests can be used to assess executive functions in a physiological context (i.e., performance, senescence) as well as in pathological contexts (such as depression, attention-deficit/hyperactivity disorder, brain damage, and Parkinson's disease 54 ), in different forms of encephalopathy (hepatic, renal, metabolic, etc.), and in conditions such as post-anesthetic status, ingestion of toxic or narcotic substances, dehydration, hypoglycemia, sleep deprivation, and prolonged stress.

Multiple investigations 55 have found that individuals diagnosed with depression exhibit a longer latency than healthy people; nevertheless, the degree of influence on the stages of cortical information processing remains unclear. For instance, in contrast to response selection, stimulus preprocessing is believed to be usually unaffected in depressive disorder. 56 When a person has been diagnosed with an anxiety condition, the consequences are more complicated, and the outcomes can vary according to the type of test and the characteristics of the population under investigation. 57 While the majority of traditional research 58 has found that latency time is much shorter in response to anxiogenic stimuli, another study 59 claims that anxiety can actually have the opposite impact, enhancing performance.

In comparison to individuals of the same age with normal cognitive health, mild non-amnestic cognitive deficiency is linked to an increase in latency. 60 Much research 61 about CRT alterations in patients with different chronic pain syndromes and different somatic sites can be found in the literature; in most cases, there is an increase in latency, particularly in older patients. 62 Considerable differences exist across the research findings based on factors such as personality type, analgesic therapy, length, intensity, and type of pain (neuropathic, neoplastic, etc.). The majority of studies conducted on postmenopausal women demonstrate an increase in reaction time delay, particularly after 5 years following the onset of menopause.

Moreover, changes in CRT test results have been reported for non-communicable illnesses whose prevalence rises with age. The CRT test results of diabetic patients vary according to the duration of their diabetes (persistence) 63,64 and the presence and severity of their sensory-motor neuropathies. 65 Patients with hyperlipidemia exhibit modifications of CRT test results that depend on body mass index values, the existence of cardiovascular disease, and the level of insulin resistance. 66 Statistically significant alterations in CRT tests are also observed in individuals with chronic renal disease, a common pathology in patients with chronic or poorly managed diabetes and obesity and in the elderly (> 65 years). 67

A number of other risk factors or pathologies have been associated with changes in CRT test results, and their presence must be evaluated in clinical or experimental studies. There is a strong association between sleep deprivation and CRT test results, including in healthy young people who perform demanding professions. 68–70 In a study conducted on a group of athletes, sleep deprivation was accompanied by an alteration of the CRT test results, a lowered mood, altered resting antioxidant status, and an increased inflammatory profile. 71 Ventilatory disorders during sleep, including sleep apnea, are responsible for a series of changes in the performance of short-term memory, spatial memory, and CRT tests. 72,73 Patients with acute forms of SARS-CoV-2 virus infection and subsequent persistent symptoms (long COVID) also presented in several studies with modified CRT test values, along with other negative correlations with values of standardized cognitive test scores, 74 aspects observed less intensively in the case of young people in social isolation during the COVID-19 pandemic. 75 A series of medical situations, such as post-anesthetic 76,77 and post-operative status, 78 determine time-limited changes in CRT test values.

Changes in CRT tests are associated with a large number of pathologies, risk factors, and medical conditions and have advanced the hypothesis of using these tests to assess all-cause mortality risk, especially due to cardiovascular disease (CVD).79,80

This study's limitations include its unicentric design, its short implementation period, the need for a much larger patient group to validate the study's observations, and the need to improve the selection criteria. Despite the benefits of collecting data from two different nations, the specific national centrality in Italy and Romania, as well as the small sample size, can be seen as a source of bias and a significant limitation that does not allow for the generalization of results or support the external validity of the conclusions. Moreover, the lack of a control group of healthy older adults without cognitive impairment precluded an in-depth assessment of the effectiveness of the CRT device in differentiating between impaired and unimpaired individuals. Additionally, factors such as fatigue, individual differences in circadian rhythms, and visual acuity may have biased the results, since these variables were neither controlled during the test nor evaluated before it.

Testing for visual acuity and neurovisual performance, neurological conditions (particularly those affecting coordination and movement), assessment of chronic pain syndromes, degenerative muscle diseases, or neuromuscular disorders are other important considerations when selecting patients. Another crucial factor that can affect the outcomes of CRT tests, separate from other psychiatric or physical disorders, is the screening and staging of depression. A further limitation is the lack of control for medication as a possible confounding factor: in this study, we did not collect data on medications or their impact on participants’ outcomes.

Other limitations stemmed from limited access to some resources—for example, the possibility of performing multiple neuroimaging investigations on both groups of patients, as well as the strict monitoring of the effect of personal medication, especially in patients with polypharmacy (who are prescribed more than 5 distinct pharmacological substances) and with psychiatric and neurological pathologies. The study could not monitor the impact of subtle factors, such as gender, chronobiological, or interindividual variations during successive testing sessions. A series of statistical patterns of interest, not anticipated at the time of the study, became evident late and did not allow for more detailed investigations (e.g., aspects related to visual acuity or chronic pain syndromes). Finally, the standardization of the protocol and the devices used will contribute to the uniformity of the results and the increase of the precision of the results.

There were also several aspects for increasing the CRT Device availability, namely:

Accommodating color blindness: RGB LEDs can generate various colors, but for users with color blindness, certain colors might be indistinguishable or confusing. To address this, the system should provide alternative color representations or patterns that are discernible to color-blind users.

Use of diffused LEDs: When used in a dark environment, bright LEDs can cause discomfort or even pain to users due to their intensity. Therefore, employing diffused LEDs can help mitigate this issue by spreading the light more evenly and reducing glare.

Multi-user support: To facilitate multiple users, the device should have the capability to store and manage individual results or preferences. Biometric authentication (e.g., via a fingerprint reader) could ensure that each user's data remains secure and separate from others.

In terms of usage limitations and enhancements, some specific aspects were noticed by the authors of the current paper:

Multi-lingual support: Providing instructions in multiple languages caters to a diverse user base, ensuring that language barriers do not hinder understanding or usability. This could be implemented through text-based instructions or audio prompts in various languages.

Audio instructions: Alongside written instructions, incorporating audio instructions enhances accessibility, particularly for users with visual impairments or those who prefer auditory guidance. This feature could include spoken prompts or narrated guidance to supplement or replace written instructions.

By addressing these limitations and incorporating user-centric features, the system becomes more inclusive and user-friendly, enhancing its overall usability and accessibility.

Given the features of the proposed CRT device, such as its embedded design, connectivity, user-friendly interface, permanent availability, and portability, we propose that fellow scholars and clinicians also consider longitudinal studies across multiple days and times of day. Conducting experiments over extended periods and at different times of day provides valuable insights into the dynamics of users’ responses and conditions. By repeating experiments across various days and times, researchers can observe how users’ behaviors and physiological states vary under different contexts and conditions. This longitudinal approach allows for the identification of patterns, trends, and correlations over time, thereby enhancing the understanding of users’ health and lifestyle factors.

Overall, leveraging advanced techniques such as neural networks, multisensory integration, and longitudinal studies can greatly enhance the capabilities of IoT and technology-based detection/prevention systems. These approaches offer promising avenues for developing more robust, adaptive, and personalized solutions to improve users’ well-being and safety in diverse contexts.

Conclusions

The present study highlights the potential of integrating IoT technologies with cognitive performance monitoring, specifically through CRT tasks, to enhance the care and management of cognitive impairments in older adults. The development and validation of a portable, easy-to-use CRT device demonstrated its effectiveness in distinguishing between different levels of cognitive function among older adults, including those with SCD and various forms of MCI.

The study recruited 35 participants aged 60 and above and used a CRT device that measured reaction times to stimuli presented in different colors, with the aim of correlating these times with levels of cognitive impairment. Statistical analysis revealed significant differences in reaction times for specific colors, indicating that the CRT device could effectively differentiate between the cognitive capacities of participants. Moreover, the Mann-Whitney U test results supported the hypothesis that reaction time and accuracy rate differences between groups could predict a preclinical phase of dementia, with notable correlations between CRT outcomes and MMSE scores.

Additionally, the application of machine learning algorithms further validated the CRT device’s potential as an effective tool for automatic cognitive diagnosis. Through PCA and the testing of several classifiers, the study identified models that classified participants into cognitive categories with good accuracy, based on CRT data alone or combined with demographic information.

The study’s results underscore the viability of IoT-based CRT systems in the early detection and continuous monitoring of cognitive impairments in older adults. The research supports the notion that real-time, continuous data collection via IoT devices can offer significant advantages over traditional, episodic cognitive assessments, providing a more nuanced and timely understanding of cognitive decline. Moreover, the study suggests potential directions for future research, including addressing limitations such as color blindness and enhancing the system with features like audio instructions and multisensory approaches.

Furthermore, the findings advocate for the expansion of IoT and technology-based solutions in healthcare, emphasizing the need for user-centric designs and the exploration of neural networks and longitudinal studies to improve the detection and management of cognitive impairments. The study opens a call for further research into how these technologies can be optimized to support the aging population, potentially transforming care practices through personalized, adaptive, and efficient monitoring systems.

The authors of this study believe that the originally proposed scientific research objectives have been achieved. Starting not only from the results and observations but also from the limitations of our research, the authors consider that further research is required, as randomized, prospective, complex, and interdisciplinary studies are needed, which include larger groups of patients in order to obtain conclusive data in establishing the role of CRT tests in strategies of screening and stratification of neurocognitive pathologies in senior patients or of decreased cognitive performance in healthy people in situations that involve long exposure to stressful conditions.

In addition to our research, we propose the following future research directions:

The development of a battery of tests derived from the complementary use of validated neuropsychological and CRT tests in the screening of depression and anxiety, as well as cognitive disorders in the elderly in order to obtain rapid, cost-effective results with improved sensitivity and specificity.

Assessment of the relationship between alterations in CRT tests in elderly people and systemic non-communicable diseases such as cancer, diabetes mellitus, chronic respiratory conditions, and CVDs.

Finding ways to translate cognitive reserve tests into an online setting through gamification, learning platforms, and virtual reality solutions in order to create a u-training/learning experience (both e- and m-training/learning), for both rehabilitation (e.g., neurological recovery) and human performance optimization (neurovisual or neuromotor training)

Using GPT-4 generative artificial intelligence and advanced machine learning solutions to design complex experiments by integrating language models that can ensure autonomous design, planning, and execution of complex scientific experiments and phenotyping patients based on the results of CRT tests.

Acknowledgements

The activities of CRT definition, development, and pilot were performed under the 2018 Programme “Smart Solutions for Ageing Well,” project AAL-CP-2018-5-149-SAVE entitled “SAfety of elderly people and Vicinity Ensuring-SAVE”.

Footnotes

Contributorship: CV, AN, MM, AD, KD, MR, VS, and CD contributed to the introduction parts. CV, AN, MM, AD, KD, MR, VS, LR, AM, DMK, and SAM contributed to the hypothesis elaboration and reasoning, pilot methodology, and results discussions. CRT system of interest: CV, AN, MM, AD, KD, CD, and CEV. Statistical methods and results: VS, LA, MR, LR, SAM, DMK, AM, AD, and CV. Machine learning methods and results: AD, CV, CEV, LA, and DMK. Data interpretation: CV, CD, LR, SAM, DMK, and AM. All authors contributed to conclusions and participated in the integration, review, and editing of the manuscript and approved its final version.

The authors declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Ethical approval: In Italy, the Ethics Committee of IRCCS INRCA approved the research protocol on 31 March 2022 with approval number 0015579. In Romania, the protocol was approved by the Local Medical Ethics Commission of the Transilvania University of Brasov, with approval number: 1, 2/07/2021.

Funding: The authors disclosed receipt of the following financial support for the research, authorship, and/or publication of this article: This work was supported by the Smart Solutions for Ageing Well—AAL, Ricerca Corrente funding from the Italian Ministry of Health, Romanian Ministry of Research, Innovation, and Digitisation (grant number AAL-CP-2018-5-149-SAVE, 30N/2023).

Guarantor: We express our gratitude to Dr Cristian VIZITIU, who served as the guarantor for this research, ensuring the integrity of the article.

ORCID iD: Alexandru Nistorescu https://orcid.org/0000-0002-6166-6768

References

- 1.Giorgio A, Santelli L, Tomassini V, et al. Age-related changes in grey and white matter structure throughout adulthood. NeuroImage 2010; 51: 943–951. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Stadlbauer A, Salomonowitz E, Strunk G, et al. Age-related degradation in the central nervous system: assessment with diffusion-tensor imaging and quantitative fiber tracking. Radiology 2008; 247: 179–188. [DOI] [PubMed] [Google Scholar]

- 3.Heuninckx S, Wenderoth N, Swinnen SP. Systems neuroplasticity in the aging brain: recruiting additional neural resources for successful motor performance in elderly persons. J Neurosci 2008; 28: 91–99. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Woods DL, Wyma JM, Yund EW, et al. Age-related slowing of response selection and production in a visual choice reaction time task. Front Hum Neurosci [Internet] 2015; 9: 1–12. Available from: http://journal.frontiersin.org/article/10.3389/fnhum.2015.00193/abstract. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Müller-Oehring EM, Schulte T, Rohlfing T, et al. Visual search and the aging brain: discerning the effects of age-related brain volume shrinkage on alertness, feature binding, and attentional control. Neuropsychology 2013; 27: 48–59. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Hardwick RM, Forrence AD, Costello MG, et al. Age-related increases in reaction time result from slower preparation, not delayed initiation. J Neurophysiol 2022; 128: 582–592. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Huckans M, Hutson L, Twamley E, et al. Efficacy of cognitive rehabilitation therapies for mild cognitive impairment (MCI) in older adults: working toward a theoretical model and evidence-based interventions. Neuropsychol Rev 2013; 23: 63–80. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Haworth J, Phillips M, Newson M, et al. Measuring information processing speed in mild cognitive impairment: clinical versus research dichotomy. J Alzheimers Dis 2016; 51: 263–275. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Jakobsen LH, Sorensen JM, Rask IK, et al. Validation of reaction time as a measure of cognitive function and quality of life in healthy subjects and patients. Nutrition 2011; 27: 561–570. [DOI] [PubMed] [Google Scholar]

- 10.Christ BU, Combrinck MI, Thomas KGF. Both reaction time and accuracy measures of intraindividual variability predict cognitive performance in Alzheimer’s disease. Front Hum Neurosci 2018; 12: 24. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Chen K, Weng C, Hsiao S, et al. Cognitive decline and slower reaction time in elderly individuals with mild cognitive impairment. Psychogeriatrics 2017; 17: 364–370. [DOI] [PubMed] [Google Scholar]

- 12.Chen YT, Hou CJ, Derek N, et al. Evaluation of the reaction time and accuracy rate in normal subjects, MCI, and dementia using serious games. Appl Sci 2021; 11: 28. [Google Scholar]

- 13.Folstein MF, Folstein SE, McHugh PR. Mini-mental state. J Psychiatr Res 1975; 12: 189–198. [DOI] [PubMed] [Google Scholar]

- 14.Nasreddine ZS, Phillips NA, Bédirian V, et al. The Montreal cognitive assessment, MoCA: a brief screening tool for mild cognitive impairment. J Am Geriatr Soc 2005; 53: 695–699. [DOI] [PubMed] [Google Scholar]

- 15.Bielak AAM, Hultsch DF, Strauss E, et al. Intraindividual variability in reaction time predicts cognitive outcomes 5 years later. Neuropsychology 2010; 24: 731–741. [DOI] [PubMed] [Google Scholar]

- 16.Deary IJ, Der G. Reaction time, age, and cognitive ability: longitudinal findings from age 16 to 63 years in representative population samples. Aging Neuropsychol Cogn 2005; 12: 187–215.

- 17.Hagger-Johnson GE, Shickle DA, Roberts BA, et al. Neuroticism combined with slower and more variable reaction time: synergistic risk factors for 7-year cognitive decline in females. J Gerontol B Psychol Sci Soc Sci 2012; 67: 572–581.

- 18.Ahmadi H, Arji G, Shahmoradi L, et al. The application of Internet of things in healthcare: a systematic literature review and classification. Univers Access Inf Soc 2019; 18: 837–869.

- 19.Qadri YA, Nauman A, Zikria YB, et al. The future of healthcare internet of things: a survey of emerging technologies. IEEE Commun Surv Tutor 2020; 22: 1121–1167.

- 20.Baron C, Daniel-Allegro B. About adopting a systemic approach to design connected embedded systems: a MOOC promoting systems thinking and systems engineering. Syst Eng 2020; 23: 261–280.

- 21.Sun Y, Lo FPW, Lo B. Security and privacy for the Internet of medical things enabled healthcare systems: a survey. IEEE Access 2019; 7: 183339–55.

- 22.Thordardottir B, Malmgren Fänge A, Lethin C, et al. Acceptance and use of innovative assistive technologies among people with cognitive impairment and their caregivers: a systematic review. BioMed Res Int 2019; 2019: 1–18.

- 23.Lee H, Park YR, Kim HR, et al. Discrepancies in demand of Internet of things services among older people and people with disabilities, their caregivers, and health care providers: face-to-face survey study. J Med Internet Res 2020; 22: e16614.

- 24.Maruff P, Thomas E, Cysique L, et al. Validity of the CogState Brief Battery: relationship to standardized tests and sensitivity to cognitive impairment in mild traumatic brain injury, schizophrenia, and AIDS dementia complex. Arch Clin Neuropsychol 2009; 24: 165–178.

- 25.Robbins TW, James M, Owen AM, et al. Cambridge Neuropsychological Test Automated Battery (CANTAB): a factor analytic study of a large sample of normal elderly volunteers. Dement Geriatr Cogn Disord 1994; 5: 266–281.

- 26.Nasreddine ZS, Patel B. Validity of the BrainCheck battery for cognitive screening of patients referred for neuropsychological assessment: a preliminary report. Alzheimer’s & Dementia. J Alzheimers Assoc 2016; 12: 180.

- 27.eong J, Jeyraj S, Aung A. Usability of EEG neurofeedback system on ADHD children using NeuroSky’s MindWave. Adv Intell Syst Comput 2019; 562: 359–366.

- 28.Martin C, Thieme A, Clarke R, et al. Neurodivercity: designing for neurodiverse ‘users’ in the smart city. In: Extended abstracts on human factors in computing systems. New York, NY: Association for Computing Machinery, 2017, pp.1304–1311.

- 29.Wild K, Boise L, Lundell J, et al. Unobtrusive in-home monitoring of cognitive and physical health: reactions and perceptions of older adults. J Appl Gerontol 2008; 27: 181–200.

- 30.Kaye J, Reynolds C, Bowman M, et al. Methodology for establishing a community-wide life laboratory for capturing unobtrusive and continuous remote activity and health data. J Vis Exp 2018; 137: 56942.

- 31.Sano M, Egelko S, Ferris S, et al. Pilot study to show the feasibility of a multicenter trial of home-based assessment of people over 75 years old. Alzheimer Dis Assoc Disord 2010; 24: 256–263.

- 32.Demiris G, Thompson HJ, Reeder B, et al. Using informatics to capture older adults’ wellness. Int J Med Inf 2013; 82: e232–e241.

- 33.Dodge HH, Mattek NC, Austin D, et al. In-home walking speeds and variability trajectories associated with mild cognitive impairment. Neurology 2012; 78: 1946–1952.

- 34.Rantz MJ, Skubic M, Miller SJ, et al. Sensor technology to support aging in place. J Am Med Dir Assoc 2013; 14: 386–391.

- 35.Steventon A, Bardsley M, Billings J, et al. Effect of telehealth on use of secondary care and mortality: findings from the whole system demonstrator cluster randomised trial. Br Med J 2012; 344: e3874–e3874.

- 36.AAL Programme [Internet]. [cited 2024 May 15]. SAVE. Available from: http://www.aal-europe.eu/projects/save/.

- 37.Vizitiu C, Bîră C, Dinculescu A, et al. Exhaustive description of the system architecture and prototype implementation of an IoT-based eHealth biometric monitoring system for elders in independent living. Sensors 2021; 21: 1837.

- 38.Bira C. [Digital] Electronics by Example When Hardware Greets Software. 2024.

- 39.Bîră C. Functional simulator for sensor-based embedded systems. In: Advanced topics in optoelectronics, microelectronics and nanotechnologies X [Internet]. Bellingham, WA: SPIE, 2020 [cited 2024 May 15], pp.678–681. Available from: https://www.spiedigitallibrary.org/conference-proceedings-of-spie/11718/117182O/Functional-simulator-for-sensor-based-embedded-systems/10.1117/12.2572098.full.

- 40.Stanković M, Müller HJ, Shi Z. Task-irrelevant valence-preferred colors boost visual search for a singleton-shape target. Psychol Res 2024; 88: 417–437.

- 41.Irons JL, Folk CL, Remington RW. All set! Evidence of simultaneous attentional control settings for multiple target colors. J Exp Psychol Hum Percept Perform 2012; 38: 758–775.

- 42.Kuniecki M, Pilarczyk J, Wichary S. The color red attracts attention in an emotional context. An ERP study. Front Hum Neurosci 2015; 9: 12.

- 43.Green JJ, Spalek TM, McDonald JJ. From alternation to repetition: spatial attention biases contribute to sequential effects in a choice reaction-time task. Cogn Neurosci 2020; 11: 24–36.

- 44.Houghton G, Tipper SP. A model of selective attention as a mechanism of cognitive control. In: Localist connectionist approaches to human cognition. Mahwah, NJ, US: Lawrence Erlbaum Associates Publishers, 1998, pp.39–74.

- 45.Petrucci M, Pecchinenda A. The role of cognitive control mechanisms in selective attention towards emotional stimuli. Cogn Emot 2017; 31: 1480–1492.

- 46.Hamblin-Frohman Z, Becker SI. Inhibition continues to guide search under concurrent visual working memory load. J Vis 2022; 22: 8.

- 47.Noonan MP, Crittenden BM, Jensen O, et al. Selective inhibition of distracting input. Behav Brain Res 2018; 355: 36–47.

- 48.Wu T, Dufford AJ, Mackie MA, et al. The capacity of cognitive control estimated from a perceptual decision making task. Sci Rep 2016; 6: 34025.

- 49.Bassham LE, Rukhin AL, Soto J, et al. A statistical test suite for random and pseudorandom number generators for cryptographic applications [Internet]. 0 ed. Gaithersburg, MD: National Institute of Standards and Technology, 2010 [cited 2024 May 15] p. NIST SP 800-22r1a. Report No.: NIST SP 800-22r1a. Available from: https://nvlpubs.nist.gov/nistpubs/Legacy/SP/nistspecialpublication800-22r1a.pdf.

- 50.GeeksforGeeks [Internet]. Principal Component Analysis with Python. Available from: https://www.geeksforgeeks.org/principal-component-analysis-with-python/. 2018 [cited 2024 May 15].

- 51.randomSeed()—Arduino Reference [Internet]. [cited 2024 May 15]. Available from: https://www.arduino.cc/reference/en/language/functions/random-numbers/randomseed/.

- 52.random()—Arduino Reference [Internet]. [cited 2024 May 15]. Available from: https://www.arduino.cc/reference/en/language/functions/random-numbers/random/.

- 53.GINARTeam/NIST-statistical-test [Internet]. GINAR Team. 2024 [cited 2024 May 15]. Available from: https://github.com/GINARTeam/NIST-statistical-test.

- 54.Trueman RC, Brooks SP, Dunnett SB. Choice reaction time and learning. In: Seel NM (ed.) Encyclopedia of the sciences of learning [Internet]. Boston, MA: Springer US, 2012 [cited 2024 May 15], pp.534–537. doi: 10.1007/978-1-4419-1428-6_594.

- 55.Naismith SL, Hickie IB, Turner K, et al. Neuropsychological performance in patients with depression is associated with clinical, etiological and genetic risk factors. J Clin Exp Neuropsychol 2003; 25: 866–877.

- 56.Azorin JM, Benhaïm P, Hasbroucq T, et al. Stimulus preprocessing and response selection in depression: a reaction time study. Acta Psychol (Amst) 1995; 89: 95–100.

- 57.Riedel P, Jacob MJ, Müller DK, et al. Amygdala fMRI signal as a predictor of reaction time. Front Hum Neurosci [Internet] 2016 [cited 2024 May 15]; 10: 1–13. Available from: http://journal.frontiersin.org/article/10.3389/fnhum.2016.00516/full.

- 58.Jones JG, Hardy L. The effects of anxiety upon psychomotor performance. J Sports Sci 1988; 6: 59–67.

- 59.Snyder HR, Kaiser RH, Whisman MA, et al. Opposite effects of anxiety and depressive symptoms on executive function: the case of selecting among competing options. Cogn Emot 2014; 28: 893–902.

- 60.Bunce D, Haynes BI, Lord SR, et al. Intraindividual stepping reaction time variability predicts falls in older adults with mild cognitive impairment. J Gerontol A Biol Sci Med Sci 2017; 72: 832–837.

- 61.Attridge N, Eccleston C, Noonan D, et al. Headache impairs attentional performance: a conceptual replication and extension. J Pain 2017; 18: 29–41.

- 62.Schiltenwolf M, Akbar M, Neubauer E, et al. The cognitive impact of chronic low back pain: positive effect of multidisciplinary pain therapy. Scand J Pain 2017; 17: 273–278.

- 63.Padilla-Medina JA, Prado-Olivarez J, Amador-Licona N, et al. Study on simple reaction and choice times in patients with type I diabetes. Comput Biol Med 2013; 43: 368–376.

- 64.Khan N, Ahmad I, Noohu MM. Association of disease duration and sensorimotor function in type 2 diabetes mellitus: beyond diabetic peripheral neuropathy. Somatosens Mot Res 2020; 37: 326–333.

- 65.Leenders M, Verdijk LB, van der Hoeven L, et al. Patients with type 2 diabetes show a greater decline in muscle mass, muscle strength, and functional capacity with aging. J Am Med Dir Assoc 2013; 14: 585–592.

- 66.Dua S, Singh P, Saha S. A study to assess and correlate serum lipid profile with reaction time in healthy individuals. Indian J Clin Biochem 2020; 35: 482–487.

- 67.Ahmad S. Choice reaction time and adequacy of dialysis: a new application of an old method. Hemodial Int 2003; 7: 118–121.

- 68.Kujawski S, Słomko J, Tafil-Klawe M, et al. The impact of total sleep deprivation upon cognitive functioning in firefighters. Neuropsychiatr Dis Treat 2018; 14: 1171–1181.

- 69.Taheri M, Arabameri E. The effect of sleep deprivation on choice reaction time and anaerobic power of college student athletes. Asian J Sports Med 2012; 3: 15–20.

- 70.Benchetrit S, Badariotti JI, Corbett J, et al. The effects of sleep deprivation and extreme exertion on cognitive performance at the world-record breaking Suffolk back yard ultra-marathon. PloS One 2024; 19: e0299475.

- 71.Romdhani M, Hammouda O, Chaabouni Y, et al. Sleep deprivation affects post-lunch dip performances, biomarkers of muscle damage and antioxidant status. Biol Sport 2019; 36: 55–65.

- 72.Williams TB, Badariotti JI, Corbett J, et al. The effects of sleep deprivation, acute hypoxia, and exercise on cognitive performance: a multi-experiment combined stressors study. Physiol Behav 2024; 274: 114409.

- 73.Alakuijala A, Maasilta P, Bachour A. The Oxford sleep resistance test (OSLER) and the multiple unprepared reaction time test (MURT) detect vigilance modifications in sleep apnea patients. J Clin Sleep Med JCSM Off Publ Am Acad Sleep Med 2014; 10: 1075–1082.

- 74.Luvizutto GJ, Sisconetto AT, Appelt PA, et al. Can the choice reaction time be modified after COVID-19 diagnosis? A prospective cohort study. Dement Neuropsychol 2022; 16: 354–360.

- 75.Murtaza G, Sultana R, Abualait T, et al. Social isolation during the COVID-19 pandemic is associated with the decline in cognitive functioning in young adults. PeerJ 2023; 11: e16532.

- 76.Grant SA, Murdoch J, Millar K, et al. Blood propofol concentration and psychomotor effects on driving skills. Br J Anaesth 2000; 85: 396–400.

- 77.Smith C, Carter M, Sebel P, et al. Mental function after general anaesthesia for transurethral procedures. Br J Anaesth 1991; 67: 262–268.

- 78.Scott WA, Whitwam JG, Wilkinson RT. Choice reaction time. A method of measuring postoperative psychomotor performance decrements. Anaesthesia 1983; 38: 1162–1168.