Abstract

Objective

To use a practical approach to examining the use of Expert Recommendations for Implementing Change (ERIC) strategies by Reach, Effectiveness, Adoption, Implementation, and Maintenance (RE‐AIM) dimensions for rural health innovations using annual reports on a diverse array of initiatives.

Data Sources and Study Setting

The Veterans Affairs (VA) Office of Rural Health (ORH) funds initiatives designed to support the implementation and spread of innovations and evidence‐based programs and practices to improve the health of rural Veterans. This study draws on the annual evaluation reports submitted for fiscal years 2020–2022 from 30 of these enterprise‐wide initiatives (EWIs).

Study Design

Content analysis, guided by the RE‐AIM framework, was conducted by the Center for the Evaluation of Enterprise‐Wide Initiatives (CEEWI), a Quality Enhancement Research Initiative (QUERI)‐ORH partnered evaluation initiative.

Data Collection and Extraction Methods

CEEWI analysts conducted a content analysis of EWI annual evaluation reports submitted to ORH. Analysis included cataloguing reported implementation strategies by Reach, Adoption, Implementation, and Maintenance (RE‐AIM) dimensions (i.e., identifying strategies that were used to support each dimension) and labeling strategies using the ERIC taxonomy. Descriptive statistics were calculated to summarize the data.

Principal Findings

A total of 875 implementation strategies were catalogued in 73 reports. Across these strategies, 66 unique ERIC strategies were reported. EWIs applied an average of 12 implementation strategies per fiscal year (range 3–22). The top three ERIC clusters across all 3 years were Develop stakeholder interrelationships (21%), Use evaluative/iterative strategies (20%), and Train/educate stakeholders (19%). Most strategies were reported within the Implementation dimension. Strategy use among EWIs meeting the rurality benchmark was also compared.

Conclusions

Combining the dimensions from the RE‐AIM framework and the ERIC strategies allows for understanding the use of implementation strategies across each RE‐AIM dimension. This analysis will support ORH efforts to spread and sustain rural health innovations and evidence‐based programs and practices through targeted implementation strategies.

Keywords: ERIC, evaluation, implementation strategies, RE‐AIM framework, rural health, Veterans

What is known on this topic

Implementation science theories, models, and frameworks can support the planning, implementation, evaluation, and sustainment of evidence‐based programs and practices.

A combination of thoughtfully selected and partner‐informed implementation strategies can be used to optimize the Reach, Effectiveness, Adoption, Implementation, and Maintenance of evidence‐based programs and practices.

Few studies have looked at the longitudinal use of implementation strategies across multiple programs.

What this study adds

Content analysis of readily‐available organizational documents such as annual reports can be used to examine implementation strategy utilization.

We identified what implementation strategies were used most frequently in conjunction with each RE‐AIM dimension.

1. INTRODUCTION

Use of implementation science theories, models, and frameworks can support and guide the planning, implementation, evaluation, and sustainment of evidence‐based programs and practices. The Reach, Effectiveness, Adoption, Implementation, and Maintenance (RE‐AIM) framework has been broadly used to guide all stages of the implementation continuum including iteratively optimizing pre‐implementation, implementation, evaluation, and sustainment activities. 1 , 2 , 3 When using RE‐AIM for all of these activities, one can explore not only progress on key implementation outcomes but also critical strategies that have the potential to enhance or optimize these outcomes. 4 , 5

The literature shows that the use of a combination of well‐thought‐out and partner‐informed strategies can increase the likelihood that evidence‐based programs and practices are meaningfully adopted, implemented, and sustained; reach priority populations; and have equitable impact. It is also expected that implementation strategies (what we do, how we do them, and how much of them we do) will change over the stages of the implementation process. 6 Hence, there is value in systematically and longitudinally documenting implementation strategies. At this time, little is known about what types of strategies are best suited to support the different stages of the implementation process and whether we can generalize across evidence‐based programs and practices and contexts. The Expert Recommendations for Implementing Change (ERIC) taxonomy was proposed to help researchers and practitioners classify activities undertaken by a team to support program implementation. 7 , 8 ERIC is a taxonomy of implementation strategies developed through a systematic review 9 and a modified Delphi process to determine the 73 strategies and their definitions. 7 Implementation experts mapped the strategies into clusters and ranked each by importance and feasibility. 8 Combining the structure of an implementation science framework and the ERIC taxonomy enables us to compare the use of strategies across multiple projects and develop an understanding of key strategies used and needed at each stage. 6

The U.S. Department of Veterans Affairs (VA) Office of Rural Health (ORH) has funded over 30 enterprise‐wide initiatives (EWIs) and their evaluations. EWIs support the implementation and spread of innovations and evidence‐based programs and practices that improve the health of rural Veterans. EWIs range in terms of clinical topic, priority sub‐population, and implementation setting. 10 While the overall ORH EWI program serves all VA healthcare systems, each individual EWI is implemented at a smaller number of VA medical centers and community‐based outpatient clinics, and the number of sites varies widely between EWIs. In addition, new sites are continuously added, while other sites are moving into sustainment or discontinuing an EWI; thus, each EWI may include sites in adoption, implementation, and sustainment phases within a single fiscal year. Annual evaluation reports are structured with the RE‐AIM framework. The VA ORH selected the RE‐AIM framework because it allows for an emphasis on reach to rural Veterans and because of its relative ease of use as a planning and evaluation framework.

The Center for the Evaluation of Enterprise‐Wide Initiatives (CEEWI) was established in FY19 to develop a standardized EWI evaluation program for the VA ORH. CEEWI is also a Quality Enhancement Research Initiative (QUERI)‐ORH partnered evaluation initiative (PEC 19‐456), which allows integration of specific implementation research questions. QUERI initiated the program of partnered research nearly a decade ago for this purpose. 11 Partnered QUERIs strengthen implementation science questions as implementation scientists learn the real‐world issues their operational partners face and integrate and test the latest developments in operational settings.

CEEWI developed a RE‐AIM logic model to guide assessment of each EWI's progress. 12 , 13 As part of this process, we explored what types of activities were undertaken in EWIs to support different RE‐AIM dimensions. These activities were then classified based on ERIC taxonomy clusters. 8 We report a practical approach for tracking implementation strategies and our findings from this analysis, and we describe the types and number of strategies that were used in each RE‐AIM dimension across EWIs, with particular focus on reach to rural Veterans. Our objective was to present a practical approach for identifying implementation strategies in a readily available dataset reporting on evidence‐based program and practice implementation and then describe patterns of strategy use in real‐world, operational settings. This work lays the groundwork for future analyses of clusters of implementation strategies and for supporting EWIs' choice of implementation strategies in rural settings.

2. METHODS

2.1. Setting

The VA ORH supports the 2.7 million rural Veterans enrolled in the VA, as well as the VA employees who serve them. The VA ORH began implementing EWIs to ensure that innovations and evidence‐based programs and practices reach rural Veterans. In 2019, the VA ORH partnered with QUERI to establish CEEWI and standardize evaluations across all EWIs.

2.2. Data sources

This study focused on 30 EWIs with at least one annual evaluation report from FY20‐22. 10 In total, the dataset included 73 EWI annual evaluation reports (FY20 = 23; FY21 = 27; FY22 = 23), which were completed using a standardized reporting template with RE‐AIM dimensions as a central feature of the content (Figure S1).

2.3. Study design

The study design was a content analysis, 14 which allowed for quantitative analysis of textual data (EWI annual evaluation reports). Analysis focused on systematically cataloguing and correlating enterprise‐wide initiative program activities to ERIC taxonomy implementation strategies. 7

2.4. Data collection and extraction methods

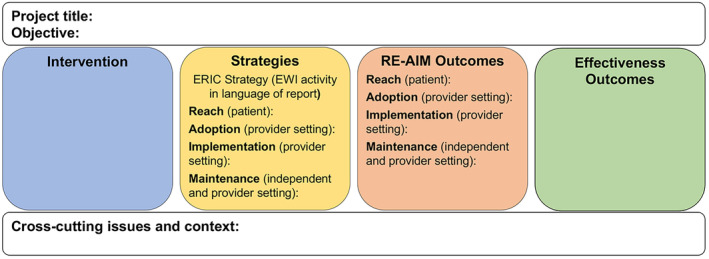

CEEWI analysts conducted a content analysis of all FY20‐22 EWI annual evaluation reports submitted to the VA ORH. The content analysis was aided by the development and use of a RE‐AIM logic model organized by the five RE‐AIM dimensions (Figure 1). We also created an ERIC taxonomy codebook in which each strategy was defined and categorized by the nine clusters (e.g., Engage consumers, Use evaluative and iterative strategies, and Change infrastructure). 8

FIGURE 1.

RE‐AIM logic model for categorizing ERIC (Expert Recommendations for Implementing Change) implementation strategies among enterprise‐wide initiatives (EWIs).

Reports were divided among three teams of three qualitatively trained analysts. Each team had no more than nine reports; thus, each team member was never responsible for more than three reports. Each team worked on their process for extracting activities and labeling them with the ERIC strategies. We also had weekly meetings where all teams came together to review questions about activities and ERIC strategy labels. During these meetings, questions were answered through consensus. Notes and frequently asked questions were tracked so team members could refer to them later.

CEEWI analysts then entered activities and corresponding ERIC strategies into a Microsoft Access database under one of four RE‐AIM dimensions (Reach, Adoption, Implementation, Maintenance). We determined that strategies across the RE‐AIM dimensions were used in support of the Effectiveness dimension (Figure 1). While the majority of activities and implementation strategies were entered in the same RE‐AIM dimension as the original EWI reports, CEEWI analysts made the final determination of corresponding dimensions. Each activity could only be labeled with one ERIC strategy and one RE‐AIM dimension. After CEEWI completed the RE‐AIM logic model, the EWI evaluation teams reviewed their logic models as a validation step.
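The data-entry rule described above (one ERIC strategy and one of four RE‐AIM dimensions per activity) can be illustrated with a minimal Python sketch. This is not the Microsoft Access schema used by CEEWI; the class names, field names, and example values are hypothetical and chosen only to show the constraint.

```python
from dataclasses import dataclass
from enum import Enum


class Dimension(Enum):
    # The four dimensions used for data entry. Effectiveness is omitted
    # because strategies in the other dimensions were treated as supporting it.
    REACH = "Reach"
    ADOPTION = "Adoption"
    IMPLEMENTATION = "Implementation"
    MAINTENANCE = "Maintenance"


@dataclass(frozen=True)
class CatalogEntry:
    """One reported activity with exactly one ERIC label and one dimension."""
    ewi: str             # hypothetical initiative identifier
    fiscal_year: int     # e.g., 2021
    activity: str        # the EWI team's own description of the activity
    eric_strategy: str   # ERIC label assigned by the analyst
    dimension: Dimension

    def __post_init__(self):
        # Reject entries that break the one-label, one-dimension rule.
        if not isinstance(self.dimension, Dimension):
            raise ValueError("dimension must be one of the four data-entry dimensions")
        if not self.eric_strategy.strip():
            raise ValueError("each activity needs exactly one ERIC strategy label")


# Illustrative entry only; the activity text is invented for this example.
entry = CatalogEntry(
    ewi="Example EWI",
    fiscal_year=2021,
    activity="Held monthly debriefings with site leads",
    eric_strategy="Purposely reexamine the implementation",
    dimension=Dimension("Implementation"),
)
```

Storing a single label and a single dimension per row keeps later counts unambiguous, because each activity contributes exactly once to any tabulation by strategy or by dimension.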

The database was programmed to visually display the information in the RE‐AIM logic model (Figure S2). Of note, activities described by the EWI evaluation team were included alongside the corresponding ERIC strategy so the way the teams described their activities (emic/insider perspective) was included with the ERIC strategy (etic/outsider implementation science expert perspective). CEEWI determined some strategies did not correlate to an ERIC strategy and were tracked as “non‐ERIC strategies” (Table S1). Non‐ERIC strategies were not included in the analysis.

This evaluation‐of‐evaluations process was developed to support the VA ORH's need for efficient summarization of EWI annual reports. CEEWI completed the process described above within the first two months of receiving EWI annual reports, so a RE‐AIM logic model for each EWI was available to inform the VA ORH's budget decision‐making for the following fiscal year. CEEWI's process was guided by a continuous quality improvement framework. Each year, CEEWI reviewed the strengths and weaknesses of the reports as a whole and revised the annual report template to improve and standardize the information reported by each EWI (e.g., added RE‐AIM dimension definitions to the template, added a section for media coverage, etc.; Figure S3). The RE‐AIM logic models served not only as one‐page summaries of the annual reports but became part of the continuous quality improvement feedback process.

2.5. Analysis

Descriptive statistical analyses were conducted to summarize data and examine patterns and longitudinal trends, including the average number of strategies per EWI per year; number of strategies over time per EWI; and most used strategies overall, by RE‐AIM dimensions, by fiscal year, and by rurality.
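As a hedged illustration of this kind of descriptive summary, the sketch below counts strategy labels and selects a top quartile. The exact rule used to define the top quartile is not specified here, so the sketch assumes one plausible reading: rank unique strategies by frequency, take the top 25% (rounded up), and keep any ties at the cutoff. The function name and the toy labels are hypothetical.

```python
import math
from collections import Counter


def top_quartile(labels):
    """Return (strategy, count) pairs in the top 25% of unique strategies.

    Assumption: the quartile is taken over unique strategies ranked by
    frequency, and strategies tied with the cutoff count are retained.
    """
    ranked = Counter(labels).most_common()  # sorted by count, descending
    if not ranked:
        return []
    k = math.ceil(len(ranked) / 4)          # size of the top quartile
    cutoff = ranked[k - 1][1]               # count of the last strategy inside it
    return [(strategy, n) for strategy, n in ranked if n >= cutoff]


# Toy data with eight unique labels; not drawn from the EWI reports.
toy_labels = (
    ["S1"] * 5 + ["S2"] * 3 + ["S3"] * 3 + ["S4"] * 2
    + ["S5", "S6", "S7", "S8"]
)
print(top_quartile(toy_labels))  # [('S1', 5), ('S2', 3), ('S3', 3)]
```

Keeping ties means the selected list can be longer than exactly one quarter of the unique strategies, which is a design choice of this sketch rather than a documented feature of the analysis.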

3. RESULTS

A total of 875 implementation strategies (FY20 = 301; FY21 = 312; FY22 = 262) were catalogued in 73 reports. Of the 73 strategies in the ERIC taxonomy, 66 unique strategies were reported. Strategies occurred in each of the nine ERIC clusters, the most common being Develop stakeholder interrelationships (21%), Use evaluative/iterative strategies (20%), and Train/educate stakeholders (19%). Per fiscal year, EWIs used an average of 12 implementation strategies (range 3–22). Among the top quartile of strategies, most occurred in the same three clusters (Use evaluative/iterative strategies, Develop stakeholder interrelationships, and Train/educate stakeholders; Table 1). Within the Use evaluative/iterative strategies cluster, the individual strategy purposely reexamine the implementation was identified 48 times. In reviewing the reports, these actions primarily included debriefings, qualitative interviews, and focus groups with a wide variety of stakeholders to assess implementation; examination of implementation and clinical outcome data used to make changes; and use of additional theories, models, and frameworks to review implementation. For the Develop stakeholder interrelationships cluster, build a coalition (n = 29) was the top individual strategy, and reports described relationship‐building with clinical services locally, regionally, and nationally, and with other services such as biomedicine and public affairs offices. Conduct educational meetings (n = 35) was the top individual implementation strategy in the Train/educate stakeholders cluster and included meetings to educate program staff and staff who may refer others to the program. Longitudinally, we examined the number of strategies each EWI used per fiscal year and found no distinct pattern in the number of strategies across years.
We also compared the top quartile of implementation strategies across fiscal years and found that only purposely reexamine the implementation was catalogued in each year (Table 2).

TABLE 1.

Top quartile of implementation strategies among enterprise‐wide initiatives (EWI) combining fiscal years, FY20‐22.

| ERIC strategy | ERIC cluster | Number of occurrences |
|---|---|---|
| Purposely reexamine the implementation | Use evaluative and iterative strategies | 48 |
| Conduct educational meetings | Train and educate stakeholders | 35 |
| Promote adaptability | Adapt and tailor to context | 35 |
| Build a coalition | Develop stakeholder interrelationships | 29 |
| Capture and share local knowledge | Develop stakeholder interrelationships | 27 |
| Tailor strategies | Adapt and tailor to context | 27 |
| Promote network weaving | Develop stakeholder interrelationships | 26 |
| Assess for readiness and identify barriers and facilitators | Use evaluative and iterative strategies | 25 |
| Conduct ongoing training | Train and educate stakeholders | 25 |
| Develop and implement tools for quality monitoring | Use evaluative and iterative strategies | 25 |
| Audit and provide feedback | Use evaluative and iterative strategies | 24 |
| Provide ongoing consultation | Train and educate stakeholders | 24 |
| Centralize technical assistance | Provide interactive assistance | 21 |
| Use mass media | Engage consumers | 21 |
| Access new funding | Utilize financial strategies | 20 |
| Develop a formal implementation blueprint | Use evaluative and iterative strategies | 20 |
| Develop and organize quality monitoring systems | Use evaluative and iterative strategies | 20 |

Note: Change Infrastructure and Support Clinicians are the only 2 of the 9 ERIC (Expert Recommendations for Implementing Change) clusters with no strategies in the top quartile.

TABLE 2.

Comparison of top quartile of implementation strategies by fiscal years.

| FY20 strategy | FY20 count | FY21 strategy | FY21 count | FY22 strategy | FY22 count |
|---|---|---|---|---|---|
| Conduct educational meetings | 17 | Purposely reexamine the implementation | 14 | Purposely reexamine the implementation | 21 |
| Purposely reexamine the implementation | 13 | Promote adaptability | 13 | Tailor strategies | 12 |
| Promote adaptability | 13 | Develop and implement tools for quality monitoring | 13 | Promote network weaving | 12 |
| Centralize technical assistance | 12 | Conduct educational meetings | 11 | Build a coalition | 10 |
| Assess for readiness and identify barriers and facilitators | 11 | Use mass media | 11 | Capture and share local knowledge | 10 |
| Provide ongoing consultation | 11 | Build a coalition | 10 | | |
| Promote network weaving | 10 | | | | |

Note: Shaded cells indicate strategies that occur in more than one dimension.

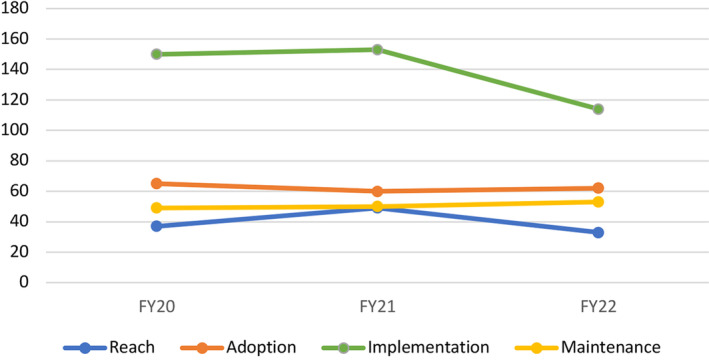

Integrating RE‐AIM into the analysis, more than twice as many strategies were reported within the Implementation dimension as within any of the three other dimensions (Figure 2). There were 119 Reach, 187 Adoption, 417 Implementation, and 152 Maintenance strategies in total. The top quartile of strategies in each RE‐AIM dimension is presented in Table 3. Among the top quartile strategies, two occurred in each of the RE‐AIM dimensions: purposely reexamine the implementation and promote adaptability. While multiple strategies occurred in more than one dimension, each dimension had four to five strategies that were unique to it.

FIGURE 2.

Total number of implementation strategies among enterprise‐wide initiative (EWI) by RE‐AIM dimensions, FY20‐22.
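As a quick arithmetic check on the totals reported in this section, the following Python sketch recomputes each dimension's share of the 875 catalogued strategies, the mean number of strategies per report, and the ratio of Implementation strategies to the next largest dimension. The counts are those given in the Results; the variable names are illustrative.

```python
# Counts as reported in the Results.
by_dimension = {"Reach": 119, "Adoption": 187, "Implementation": 417, "Maintenance": 152}
by_fiscal_year = {"FY20": 301, "FY21": 312, "FY22": 262}
n_reports = 73

# Both breakdowns should sum to the same overall total.
total = sum(by_dimension.values())
assert total == sum(by_fiscal_year.values()) == 875

# Percentage share of each dimension, rounded to one decimal place.
shares = {d: round(100 * n / total, 1) for d, n in by_dimension.items()}

# Mean strategies per report, and Implementation relative to the
# largest of the other three dimensions (Adoption).
mean_per_report = total / n_reports
largest_other = max(n for d, n in by_dimension.items() if d != "Implementation")
ratio = by_dimension["Implementation"] / largest_other

print(shares)
# {'Reach': 13.6, 'Adoption': 21.4, 'Implementation': 47.7, 'Maintenance': 17.4}
print(round(mean_per_report, 1), round(ratio, 2))
# 12.0 2.23
```

The mean of about 12 strategies per report agrees with the reported average, and the Implementation dimension accounts for just under half of all catalogued strategies.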

TABLE 3.

Top quartile of implementation strategies per RE‐AIM dimension, FY20‐22.

| Reach strategy | Grand total | Adoption strategy | Grand total | Implementation strategy | Grand total | Maintenance strategy | Grand total |
|---|---|---|---|---|---|---|---|
| Use mass media | 9 | Conduct educational meetings | 15 | Purposely reexamine the implementation | 24 | Purposely reexamine the implementation | 13 |
| Increase demand | 9 | Build a coalition | 12 | Tailor strategies | 20 | Access new funding | 11 |
| Conduct educational meetings | 6 | Assess for readiness and identify barriers and facilitators | 11 | Promote adaptability | 20 | Promote network weaving | 7 |
| Promote network weaving | 6 | Conduct local needs assessment | 9 | Capture and share local knowledge | 20 | Provide ongoing consultation | 6 |
| Purposely reexamine the implementation | 6 | Create new clinical teams | 8 | Conduct ongoing training | 18 | Develop educational materials | 5 |
| Promote adaptability | 5 | Promote adaptability | 5 | Develop and implement tools for quality monitoring | 17 | Build a coalition | 5 |
| Change record systems | 5 | Change service sites | 5 | Revise professional roles | 15 | Promote adaptability | 5 |
| Conduct local needs assessment | 4 | Tailor strategies | 5 | Audit and provide feedback | 15 | Involve executive boards | 5 |
| Distribute educational materials | 4 | Conduct educational outreach visits | 5 | Provide ongoing consultation | 13 | Identify and prepare champions | 4 |
| Involve patients/consumers and family members | 4 | Organize clinician implementation team meetings | 5 | Develop a formal implementation blueprint | 12 | Capture and share local knowledge | 4 |
| | | Conduct ongoing training | 5 | Develop and organize quality monitoring systems | 12 | Audit and provide feedback | 4 |
| | | Purposely reexamine the implementation | 5 | Centralize technical assistance | 11 | Develop a formal implementation blueprint | 4 |
| | | | | Assess for readiness and identify barriers and facilitators | 11 | | |
| | | | | Promote network weaving | 11 | | |
| | | | | Conduct educational meetings | 11 | | |

Note: Shaded cells indicate strategies that occur in more than one dimension.

We examined Reach strategies in greater detail due to the VA ORH's emphasis on reaching rural Veterans. Comparing Reach strategies across years, the most common strategy shifted from increase demand in FY20 and FY21 to purposely reexamine the implementation in FY22. However, among the top quartile, no strategies appeared for Reach across all three fiscal years. We also used the VA ORH's benchmark of EWIs serving at least 50% rural Veterans among their intervention population. For those that met the benchmark for a given fiscal year, the top three implementation strategies were audit and provide feedback, change record systems, and conduct educational meetings. These same three strategies did not appear in the top quartile of strategies used by EWIs performing under the benchmark (Table S2).

4. DISCUSSION

QUERI's partnered evaluation initiative provided our team the opportunity to meet the VA ORH's needs for standardizing and improving their evaluation process, while at the same time allowing us to examine ongoing questions in implementation research regarding how implementation strategies are used in real‐world, operational settings. In our content analysis, we successfully combined the RE‐AIM dimensions with ERIC taxonomy to describe the use of strategies to support RE‐AIM of rural health innovations and evidence‐based programs and practices across EWIs. Many approaches to tracking implementation strategies have been developed, including surveys, 15 , 16 , 17 , 18 review of meeting minutes, 19 brainstorming and activity logs, 20 , 21 and multi‐model approaches. 6 , 22 Reviewing reports from innovations and evidence‐based programs and practices such as the work described here is a practical approach that can be systematically applied and analyzed for greater understanding of the use and potential effectiveness of implementation strategies using readily‐available data, similar to the work QUERI has done of its own reports. 11

A contribution of this study is the ability to examine implementation strategies across many different innovations and evidence‐based programs and practices focused particularly on rural health. Much attention has been placed on tracking implementation strategies for implementation research for single and multi‐site studies with a single intervention. 6 , 16 , 17 , 18 , 19 , 20 , 22 This study allowed us to compare across 30 diverse projects within the largest integrated healthcare system in the United States. The EWIs cover many different clinical and workforce development areas, although all focus on improving the health and well‐being of rural Veterans. The documentation of 66 of the 73 ERIC strategies across the EWIs demonstrates the application and usefulness of a wide range of strategies in the area of rural health. More specifically, purposely reexamine the implementation was the most‐used implementation strategy and was consistently applied across all fiscal years and RE‐AIM dimensions. Given its salience in each of the analyses, EWI teams clearly chose this strategy to improve their program implementation among a variety of activities. It also aligns with implementation experts' rating of the importance and feasibility of the 73 implementation strategies. In Waltz and colleagues' study, implementation experts rated purposely reexamine the implementation among the highest strategies in both importance and feasibility. 8 Conduct educational meetings and promote adaptability were also used repeatedly and consistently across analyses of the enterprise‐wide reports. The high use of purposely reexamine the implementation and promote adaptability reinforces the importance of flexibility and willingness to change in support of program improvement. It is also noteworthy that Change infrastructure and Support clinicians were two clusters of ERIC strategies that did not appear in the top quartile of strategies.
On closer inspection, both clusters contain strategies that range from restructuring professional roles and creating new clinical teams to changing record systems or physical structures. Comparing this with Waltz and colleagues' examination of importance and feasibility, strategies in both of these ERIC clusters (Change infrastructure and Support clinicians) rank among the least important and least feasible. 8 In terms of importance and feasibility, our work demonstrates the real‐world value practitioners place on the range of implementation strategies and its general alignment with the Waltz et al. study.

At the same time, we found several challenges in conducting our longitudinal analysis, including the tremendous variety of EWI clinical topics and the fact that “stages of implementation” were in constant flux, with new sites adopting an evidence‐based program or practice in the same year that other sites in the EWI were moving toward sustainment. This made it impossible to classify an EWI into a particular implementation phase. The number of strategies in each dimension was stable, with the exception of a drop in the number of strategies in the Implementation dimension (Figure 2). Our hypothesis is that implementation strategies stabilize over time as EWIs begin to home in on specific strategies that aid program implementation and drop less effective strategies. In short, strategies in the Implementation dimension become “core” to the EWI. However, reaching more Veterans, adding more sites, and sustaining the EWI are constants.

We catalogued the use of 66 implementation strategies among 30 EWIs over three years. This is similar in comprehensiveness to Rogal and colleagues' study of implementation of a new Hepatitis C treatment in the VA Health Care System. In the first year of surveying sites, 3 of the 73 ERIC strategies were not endorsed, 18 while in the second year, only 1 of the 73 strategies was not endorsed (alter patient fees). 17 All three strategies not endorsed in Rogal and colleagues' studies were also not found in our content analysis of EWI annual reports. All three were in the ERIC cluster of Utilize financial strategies.

Interestingly, Rogal and colleagues focused on a single intervention and tracked 70–72 strategies, while CEEWI reviewed 30 interventions and tracked a total of 66. Boyd and colleagues reported 26 unique strategies for one intervention across six sites 19 ; Smith and colleagues reported 34 total implementation strategies at one site with one intervention 6 ; Perry and colleagues reported 33 across seven collaboratives (1721 clinics) with one intervention 22 ; and Bunger and colleagues reported 45 unique strategies in a statewide implementation of a single intervention. 20 Among the EWIs, the most implementation strategies reported by a single EWI was 22, with an average of 12 per EWI, while Rogal and colleagues reported an average of 25 and 28 per site, per year. 17 , 18 Given these inconsistencies, it is difficult to discern whether a site, an intervention, or the tracking approach has the most influence on the number of implementation strategies tracked.

Walsh‐Bailey and colleagues shed some light on the question of approach. 21 In their pilot study of tools to track implementation strategies, their brainstorming tool led to a greater number of barriers, implementation strategies, and adaptations being tracked compared with two different activity logs. Focusing specifically on the reporting by the implementation practitioner on the teams, the practitioners reported 22 implementation strategies with the brainstorming tool and 9 in both activity logs. These results across tools suggest more examination of tracking practices is needed when focused on number and frequency of strategies.

Very few studies have applied the ERIC taxonomy and a theory, model, or framework to examine how implementation strategies are used. 23 Haley and colleagues used the Consolidated Framework for Implementation Research (CFIR) to code barriers and contextual factors related to implementation strategies but primarily focused on documentation of adaptations. For RE‐AIM, it is not surprising that double the number of strategies were found within the Implementation dimension, since we would expect reporting of implementation strategies to occur in the Implementation dimension. Yet, by categorizing implementation strategies by Reach, Adoption, and Maintenance, our analyses demonstrated that implementation strategies can target RE‐AIM dimensions other than Implementation. Specifically, implementation strategies categorized as Reach or Adoption could help in targeted decision making if an innovation needs to increase its patient population or clinician users, while strategies that are categorized as Maintenance may lead to greater sustainment. Targeting strategies to particular theoretical constructs and phases of implementation is beginning to be explored 24 and should be examined in future studies. Combining ERIC strategies with RE‐AIM or other theories, models, or frameworks can help to examine where implementation strategy taxonomies can provide targeted suggestions based on constructs and identify where gaps in types of strategies remain.

The choice of method for tracking and analyzing implementation strategies will depend on the analytic questions, resources, and expertise of the various stakeholders. For example, tracking and cataloguing implementation strategies to their corresponding ERIC strategy requires a coder (i.e., decision‐maker) who may have varying levels of implementation science expertise. The completion of surveys 16 , 17 , 18 also requires implementation expertise and raises the question of clinician burden depending on the role of the respondent. Many tracking approaches include Proctor and colleagues' recommendations for implementation strategy specification. 25 Neither the CEEWI team nor Rogal and colleagues documented Proctor and colleagues' recommendations for reporting implementation strategies. In a research context, study teams used a variety of tracking strategies (e.g., meeting notes, brainstorming logs), which allowed them to also report specifications such as actor, action, and temporality from the Proctor recommendations. 6 , 19 , 20 , 21 , 22 While CEEWI's approach can be done in a short time period, it lacks much of the specifying information about implementation strategies that meeting note analysis may be able to provide, such as why a particular strategy was chosen (justification) at that time point (temporality) in the project. The variety of approaches found in the literature gives implementation scientists the opportunity to compare and decide which aspects are most critical to their research study or operational evaluation, ranging from clinician burden to where implementation expertise is found on a team to readily‐available data sources from evaluation partners.

Using operational data from a diverse array of programs comes with a number of inherent limitations. First, the intent of the EWI annual evaluation reports was not focused on implementation strategies, but rather the impact of each EWI on rural Veterans and the VA staff who care for them. The audience for the reports is the VA ORH staff, and the goal is meeting their programmatic needs. The strength of our study was our ability to leverage our operational work to track implementation strategy use across a diverse array of real‐world settings. How to best track and monitor implementation strategies in research and clinical/operational settings is an ongoing question in the field of implementation science, particularly since development of the ERIC taxonomy. 7 , 9 Another limitation is lack of power when analyses are divided by fiscal year, RE‐AIM dimensions, and rurality. While we identified patterns, strong conclusions cannot be made about the effectiveness of a strategy. Further limitations include lack of validation on the comprehensiveness of strategy reporting, lack of data on the fidelity of the strategies in practice, and lack of detail on the strategies as recommended by Proctor and colleagues, specifically the implementation actor, action target, temporality, dose, and justification. 25 Moreover, a single CEEWI team member was responsible for documenting the final decision on which ERIC strategy to label an activity reported in the EWI evaluation report. Rapid turnaround and limited resources led to the decision to have a single “coder;” however, weekly meetings of the full team enabled an intensive consensus process that promoted and supported team learning, which likely increased the reliability of coding. Related to strategy reporting, each year the EWI teams received their RE‐AIM logic model as compiled by the CEEWI team. The logic model included information on the activities described in the reports and how they correlate to the ERIC taxonomy.
This may have changed the EWI teams' reporting behavior and incentivized them to include more activities, although we did not see this in the data within single EWI or in aggregate as the number of strategies actually declined in FY22. It is also possible that CEEWI team members improved their ability to recognize activities and correlate them to ERIC taxonomy, although again the data do not reflect this based on overall counts.

In summary, combining ERIC strategies and the RE‐AIM framework allows for comparison of implementation strategies across each dimension, and our methodology allowed us to track implementation strategies in real‐world clinical and operational settings that support rural health. This analysis supports the VA ORH efforts to spread and sustain rural health innovations and evidence‐based programs and practices through targeted implementation strategies. Further research could encompass interviews with EWI teams regarding implementation strategies and additional longitudinal analysis of the current dataset as it grows with additional fiscal years to examine initiation and discontinuation of implementation strategies. Feeding back these analyses will be critical as the VA ORH seeks to support implementation, spread, and sustainment of impactful rural innovations and evidence‐based programs and practices across the enterprise.

Supporting information

Figure S1. VA Office of Rural Health enterprise‐wide initiative annual evaluation template.

Figure S2. Example of completed RE‐AIM logic model.

Figure S3. Revisions of the VA Office of Rural Health enterprise‐wide initiative annual evaluation template.

Table S1. Strategies derived from reports categorized as non‐ERIC strategies.

Table S2. Top quartile (with at least two) Reach implementation strategies among EWIs that met or exceeded the rurality benchmark in comparison with EWIs below the benchmark. Shaded cells occur in EWIs both above and below the benchmark.

ACKNOWLEDGMENTS

We would like to acknowledge our funding from the Department of Veterans Affairs (VA) Quality Enhancement Research Initiative (PEC 19‐456) in partnership with funding from the VA Office of Rural Health. We would also like to thank the Enterprise‐Wide Initiative teams. Without their dedication to rural Veterans and commitment to reporting their work, this analysis would not have been possible. In particular, we would like to thank the TeleNeurology EWI for allowing us to share their completed RE‐AIM logic model in the Supporting Information. This work was partially supported by the University of Iowa's Year 2 P3 Strategic Initiatives Program through funding received for the project entitled “High Impact Hiring Initiative (HIHI): A Program to Strategically Recruit and Retain Talented Faculty.” We would like to thank Kris Greiner for her editorial assistance.

Reisinger HS, Barron S, Balkenende E, et al. Tracking implementation strategies in real‐world settings: VA Office of Rural Health enterprise‐wide initiative portfolio. Health Serv Res. 2024;59(Suppl. 2):e14377. doi: 10.1111/1475-6773.14377

REFERENCES

- 1. Glasgow RE, Harden SM, Gaglio B, et al. RE‐AIM planning and evaluation framework: adapting to new science and practice with a 20‐year review. Front Public Health. 2019;7:64. doi: 10.3389/fpubh.2019.00064

- 2. Holtrop JS, Estabrooks PA, Gaglio B, et al. Understanding and applying the RE‐AIM framework: clarifications and resources. J Clin Transl Sci. 2021;5(1):e126. doi: 10.1017/cts.2021.789

- 3. Kwan BM, McGinnes HL, Ory MG, Estabrooks PA, Waxmonsky JA, Glasgow RE. RE‐AIM in the real world: use of the RE‐AIM framework for program planning and evaluation in clinical and community settings. Front Public Health. 2019;7:345. doi: 10.3389/fpubh.2019.00345

- 4. Glasgow RE, Battaglia C, McCreight M, et al. Use of the reach, effectiveness, adoption, implementation, and maintenance (RE‐AIM) framework to guide iterative adaptations: applications, lessons learned, and future directions. Front Health Serv. 2022;2:959565. doi: 10.3389/frhs.2022.959565

- 5. Glasgow RE, Battaglia C, McCreight M, Ayele RA, Rabin BA. Making implementation science more rapid: use of the RE‐AIM framework for mid‐course adaptations across five health services research projects in the Veterans Health Administration. Front Public Health. 2020;8:194. doi: 10.3389/fpubh.2020.00194

- 6. Smith JD, Merle JL, Webster KA, Cahue S, Penedo FJ, Garcia SF. Tracking dynamic changes in implementation strategies over time within a hybrid type 2 trial of an electronic patient‐reported oncology symptom and needs monitoring program. Front Health Serv. 2022;2:983217. doi: 10.3389/frhs.2022.983217

- 7. Powell BJ, Waltz TJ, Chinman MJ, et al. A refined compilation of implementation strategies: results from the Expert Recommendations for Implementing Change (ERIC) project. Implement Sci. 2015;10:21. doi: 10.1186/s13012-015-0209-1

- 8. Waltz TJ, Powell BJ, Matthieu MM, et al. Use of concept mapping to characterize relationships among implementation strategies and assess their feasibility and importance: results from the Expert Recommendations for Implementing Change (ERIC) study. Implement Sci. 2015;10:109. doi: 10.1186/s13012-015-0295-0

- 9. Powell BJ, McMillen JC, Proctor EK, et al. A compilation of strategies for implementing clinical innovations in health and mental health. Med Care Res Rev. 2012;69(2):123‐157. doi: 10.1177/1077558711430690

- 10. Office of Rural Health. Enterprise‐Wide Initiatives. Washington, DC: U.S. Department of Veterans Affairs. Accessed December, 2023. https://www.ruralhealth.va.gov/providers/Enterprise_Wide_Initiatives.asp

- 11. Braganza MZ, Kilbourne AM. The Quality Enhancement Research Initiative (QUERI) impact framework: measuring the real‐world impact of implementation science. J Gen Intern Med. 2021;36(2):396‐403. doi: 10.1007/s11606-020-06143-z

- 12. CDC Division for Heart Disease and Stroke Prevention. CDC Division for Heart Disease and Stroke Prevention Evaluation Guide: Developing and Using a Logic Model. Atlanta, GA: Centers for Disease Control and Prevention. Accessed December, 2023. https://www.cdc.gov/dhdsp/docs/logic_model.pdf

- 13. Smith JD, Li DH, Rafferty MR. The implementation research logic model: a method for planning, executing, reporting, and synthesizing implementation projects. Implement Sci. 2020;15(1):84. doi: 10.1186/s13012-020-01041-8

- 14. Neuendorf KA. The Content Analysis Guidebook. 2nd ed. Sage; 2017.

- 15. Bustos TE, Sridhar A, Drahota A. Community‐based implementation strategy use and satisfaction: a mixed‐methods approach to using the ERIC compilation for organizations serving children on the autism spectrum. Implement Res Pract. 2021;2:26334895211058086. doi: 10.1177/26334895211058086

- 16. Rogal SS, Chinman M, Gellad WF, et al. Tracking implementation strategies in the randomized rollout of a Veterans Affairs national opioid risk management initiative. Implement Sci. 2020;15(1):48. doi: 10.1186/s13012-020-01005-y

- 17. Rogal SS, Yakovchenko V, Waltz TJ, et al. Longitudinal assessment of the association between implementation strategy use and the uptake of hepatitis C treatment: year 2. Implement Sci. 2019;14(1). doi: 10.1186/s13012-019-0881-7

- 18. Rogal SS, Yakovchenko V, Waltz TJ, et al. The association between implementation strategy use and the uptake of hepatitis C treatment in a national sample. Implement Sci. 2017;12(1):60. doi: 10.1186/s13012-017-0588-6

- 19. Boyd MR, Powell BJ, Endicott D, Lewis CC. A method for tracking implementation strategies: an exemplar implementing measurement‐based care in community behavioral health clinics. Behav Ther. 2018;49(4):525‐537. doi: 10.1016/j.beth.2017.11.012

- 20. Bunger AC, Powell BJ, Robertson HA, MacDowell H, Birken SA, Shea C. Tracking implementation strategies: a description of a practical approach and early findings. Health Res Policy Syst. 2017;15(1):15. doi: 10.1186/s12961-017-0175-y

- 21. Walsh‐Bailey C, Palazzo LG, Jones SM, et al. A pilot study comparing tools for tracking implementation strategies and treatment adaptations. Implement Res Pract. 2021;2:26334895211016028. doi: 10.1177/26334895211016028

- 22. Perry CK, Damschroder LJ, Hemler JR, Woodson TT, Ono SS, Cohen DJ. Specifying and comparing implementation strategies across seven large implementation interventions: a practical application of theory. Implement Sci. 2019;14(1):32. doi: 10.1186/s13012-019-0876-4

- 23. Haley AD, Powell BJ, Walsh‐Bailey C, et al. Strengthening methods for tracking adaptations and modifications to implementation strategies. BMC Med Res Methodol. 2021;21(1):133. doi: 10.1186/s12874-021-01326-6

- 24. Cullen L, Hanrahan K, Edmonds SW, Reisinger HS, Wagner M. Iowa implementation for sustainability framework. Implement Sci. 2022;17(1):1. doi: 10.1186/s13012-021-01157-5

- 25. Proctor EK, Powell BJ, McMillen JC. Implementation strategies: recommendations for specifying and reporting. Implement Sci. 2013;8:139. doi: 10.1186/1748-5908-8-139
