Abstract

Suicide is a complex phenomenon that is often not preceded by a diagnosed mental health condition, therefore making it difficult to study and mitigate. Artificial Intelligence has increasingly been used to better understand Social Determinants of Health factors that influence suicide outcomes. In this review we find that many studies use limited SDoH information and minority groups are often underrepresented, thereby omitting important factors that could influence risk of suicide.

Subject terms: Risk factors, Predictive medicine, Computational models, Psychology, Human behaviour

Introduction

There has been an increased use of Natural Language Processing (NLP) and Machine Learning (ML), sub-disciplines of AI, in understanding and identifying suicidal ideation, behavior, risk and attempts in both public and clinical contexts. Suicide is one of the leading causes of death for people aged 15-29; as of 2019, over 700,000 people die by suicide every year1. For every person who has died by suicide, there are many more who attempt suicide, which increases their risk of dying by suicide in the future2.

The onset and progression of mental health issues have been linked to a person’s social, economic, political, and physical circumstances3. These circumstances have been summarized in a framework of social factors referred to as Social Determinants of Health (SDoH)4, where each factor is considered a driving force behind adverse health outcomes and inequalities (e.g.: hospitalization, increased mortality and lack of access to treatment)5. SDoH also affect people living with mental health conditions6, and addressing these inequalities across all stages of a person’s life at an individual, local and national level is vital to reduce the number of suicide attempts and deaths by suicide overall7. Recent work6 highlights that there is a set of factors and circumstances that are unique to individuals living with mental health concerns, calling for an expansion of traditional SDoH categories to a new subset called Social Determinants of Mental Health (SDoMH). The use of NLP and ML to extract SDoH in the context of suicide is sparsely investigated. Work in this space has predominantly focused on (i) reviewing AI for mental health detection and understanding8,9, (ii) the use of NLP and ML to predict suicide, suicidal ideation or attempts without considering social factors10–25, (iii) the use of NLP to extract SDoH without focusing on specific mental health incidents26,27, where suicide is one of many possible health outcomes, or (iv) suicidality in the context of specific SDoMHs without the use of AI13,28–30. It is also well understood that some groups are underrepresented, which leads to greater health disparities31; these include but are not limited to racial and ethnic minorities, underserved rural communities, sexual and gender minorities and people with disabilities. This underrepresentation can have a great impact on NLP and ML methods (e.g.: issues of bias and fairness).

In this literature review we analyze 94 studies at the intersection of suicide and SDoH and aim to answer the following research questions:

What NLP and ML methods are used to extract SDoH from textual data and what are the most common data sources?

What are the most commonly identified SDoH factors for suicide?

What socio-demographic groups are these algorithms developed from and for?

What are the most frequently used health factors and behaviors in NLP and ML algorithms?

Methodology

Data extraction and categories for review

For each paper in this review, we extracted metadata to identify overall trends in the collection. Data extraction is divided into four main categories, aiming to capture (i) general information about the NLP or ML method and data used, (ii) SDoH and SDoMH variables, (iii) socio-demographic factors, and (iv) physical, mental, and behavioral health factors.

General information

The year of publication, the data source for the presented study, and what type of method has been used (e.g.: NLP or ML) are captured.

Social determinants of (Mental) health

In this category we draw on previous work and include general SDoH categories32 and categories that disproportionately affect people living with mental health disorders (SDoMH)5,6. For this, we grouped factors into broader categories called Social, Psychosocial and Economic, where within each category we capture more granular factors as outlined in Table 1. In this work, we use SDoH as the overarching term to describe such factors, with SDoMH factors included; our categories were developed empirically through the wording and descriptions used in the works we reviewed. However, we would like to note that as we attempted to categorize each SDoH in the context of mental health we were not able to find clear distinctions between some terminology/categories in the literature. For example, many works treat adverse life events and trauma as mutually exclusive categories, whereas others treat them as the same category. It is beyond the scope of this review to make such a distinction, but it should be examined by further research.

Table 1.

Description of Social Determinants of Health categories

| Category | Sub category | Examples |
|---|---|---|
| Social | Relationships and social support | • Being in a romantic relationship • Level of support in relations (incl. romantic, platonic, family and at work) • Breakdown of relationships • References to family life |
| | Social Exclusion / Stigmatization / Religion / Culture | • Discrimination based on protected characteristics • Cultural or family attitudes towards suicide and mental health • The influence of a person’s belief system on mental health (e.g.: religious views on suicide) |
| | Level of socialization | • Lack of social connections • No or low social support • Feeling lonely • Social isolation / loneliness (e.g.: Beck’s scale of Belonging67) |
| Psychosocial | Adverse life experiences | • Abortion • Bullying • Responsible of enemy death |
| | Abuse / Trauma [adult] | • Sexual, physical harassment and/or abuse • Military • Death of family or loved one / witnessing suicide • Full lista |
| | Legal issues [adult] | • Incarceration • Ongoing criminal investigations |
| | Abuse / Trauma [childhood] | • Parents’ divorce or losing a caregiver • Foster care • Bullying |
| | Adverse childhood experiences | • Unspecified (e.g.: the reviewed literature does not give detailed examples) |
| | Imprisonment/criminal behavior [teens] | • Trouble with the law during childhood/teens • Time spent in jail |
| Economic | Housing Insecurity | • Living with others • Having housing insecurity • Homelessness (in the past or present) |
| | Built environment / Neighborhood | • Urban vs Rural |
| | Access to healthcare / insurance | • Type of payer • Level of coverage |
| | Education | • Level of education |
| | Occupation | • Type of job held now or in the past (e.g.: doctor, soldier, legal professional…) |
| | Employment status | • Unemployment, lack of security in employment • Level of employment (e.g.: military: active duty, deployed, etc.) |
| | Socioeconomic | • Income level • Financial difficulties or pressures |
| | Food Insecurity | • Lack of access to food, changes in food intake |

Sociodemographic factors

We capture basic demographic information about research participants, such as age, sex, and marital status. In addition to this, we also include factors for groups that are at greater risk of health disparities, such as gender, sexuality, disability, race and ethnicity31.

Health factors

In addition to the aforementioned categories, we also label each paper for a set of health conditions, treatments, behaviors and outcomes that were frequently mentioned in the original papers. This includes references to physical, neurocognitive and mental health conditions, the use of psychiatric medications, treatments (e.g.: stay in hospital, outpatient, ED visits, admissions), previous attempts, history of self-harm, aggressive/antisocial behavior, substance abuse, level of physical activity/overall health (e.g.: BMI, weight changes) and risky behavior. Prior research33–35 has shown that such factors can significantly increase a person’s risk of suicide.

Search strategy and query

We retrieved 1,661 bibliographic records in December 2023 from two scientific databases: PubMed (1,585 records) and the Anthology of the Association for Computational Linguistics (ACL Anthology; 76 records). We used the following search query:

(Natural Language Processing OR Information Extraction OR Information Retrieval OR Text Mining OR Machine Learning OR Deep Learning) AND (Suicide OR Suicidality OR Suicidal) AND (Social Determinants of Health OR behavioral determinants of health)

Due to the different layout of the ACL Anthology (https://aclanthology.org/) we use a combination of terms to identify related literature:

Suicide + keyword or Suicide + related keyword

Our queries were constructed to capture as many relevant SDoH factors as possible by name and to include related terms (see Table 2 for a full overview of keywords). Similarly to26, we first surveyed existing literature for SDoH-related keywords and identified a total of 17 relevant keywords for our search.

Table 2.

Overview of keywords used in our literature search

| Keywords | Related keywords |
|---|---|
| Smoking | Cigarette, cigarette use, tobacco, tobacco use, nicotine |
| Alcohol | Alcohol abuse, alcohol withdrawal, alcohol consume, alcoholism, alcohol disorder |
| Drug | Drug use, drug abuse |
| Substance abuse | Substance use, Substance misuse |
| Diet | Nutrition, food |
| Exercise | Activity, physical activity |
| Sexual activity | Sex, sexual |
| Child abuse | Child, children |
| Abuse | Abusive, trauma |
| Healthcare | Quality care, access to care, access to primary care, access health care, insurance |
| Education | Educational stress, studying |
| Income | Financial condition, financial constraint, economic condition |
| Work | Occupation, working condition, employment, unemployment |
| Social support | Social isolation, social connections, social cohesion |
| Relationships | Family, friends, friendship, relationship, mother, father, sister, brother, sibling |
| Food security | Food insecurity |
| Housing | Homeless, homelessness, living condition |
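The query construction described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names (`or_group`, `pubmed_query`, `acl_queries`) and the dictionary layout are hypothetical, only an excerpt of Table 2 is included, and the authors' actual tooling is not described in the text.

```python
# Illustrative sketch only: helper names and data layout are assumptions,
# not the authors' tooling.

METHOD_TERMS = [
    "Natural Language Processing", "Information Extraction",
    "Information Retrieval", "Text Mining", "Machine Learning", "Deep Learning",
]
SUICIDE_TERMS = ["Suicide", "Suicidality", "Suicidal"]
SDOH_TERMS = ["Social Determinants of Health", "behavioral determinants of health"]

# A small excerpt of Table 2: keyword -> related keywords.
KEYWORDS = {
    "Housing": ["Homeless", "homelessness", "living condition"],
    "Food security": ["Food insecurity"],
}


def or_group(terms):
    """Join terms with OR and wrap the group in parentheses."""
    return "(" + " OR ".join(terms) + ")"


def pubmed_query():
    """Combine the three term groups with AND, as in the boolean query above."""
    groups = (METHOD_TERMS, SUICIDE_TERMS, SDOH_TERMS)
    return " AND ".join(or_group(group) for group in groups)


def acl_queries(keywords):
    """Pair 'Suicide' with every keyword and every related keyword."""
    queries = []
    for keyword, related in keywords.items():
        for term in [keyword, *related]:
            queries.append(f"Suicide + {term}")
    return queries


if __name__ == "__main__":
    print(pubmed_query())
    for query in acl_queries(KEYWORDS):
        print(query)
```

Keeping the keyword table as data rather than hard-coding query strings makes it easy to extend the search to all 17 keywords without touching the query logic.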

Filtering and review strategy

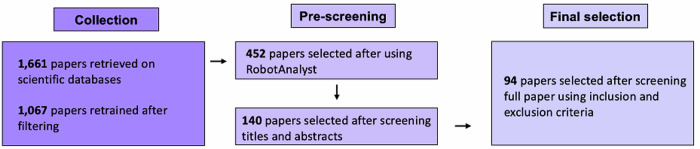

First, we removed duplicates and included all papers that had been peer-reviewed and published as full text in English between 2013 and 2023. Next, we screened both title and abstract using RobotAnalyst36 to reduce human workload. RobotAnalyst is a web-based software system that uses both text mining and machine learning methods to prioritize papers for their relevance based on human feedback (free access to RobotAnalyst can be requested to reproduce this work here: http://www.nactem.ac.uk/robotanalyst/). RobotAnalyst uses an iterative classification approach and was retrained six times during our screening process. We developed a set of inclusion and exclusion criteria to screen the 452 papers that were predicted to be relevant by RobotAnalyst. Both title and abstract were screened for each paper based on the following inclusion and exclusion criteria:

Inclusion criteria:

- I. Papers that focus on the use of NLP or ML to extract SDoHs related to suicide

- II. Published work and studies that take place in English-speaking countries, including USA, United Kingdom, Australia, and Ireland

Exclusion criteria:

- I. Papers that only focus on suicide and SDoH without NLP or ML methods

- II. All retracted papers

- III. Workshop proceedings, commentaries, proposals, previous literature reviews

- IV. Papers focusing on unintentional death or homicides, methods of suicide

- V. Research that proposes new ideas, policies or intervention for suicide with or without the use of Artificial Intelligence

Based on our inclusion and exclusion criteria, we retrieved 94 papers for a full review. In Fig. 1 we show the full workflow of our search and filter strategy.

Fig. 1.

Overview of article selection process.
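The first filtering step (removing duplicates, then keeping peer-reviewed, full-text, English papers from 2013 to 2023) could be sketched as follows. The `Record` fields and the title-based duplicate check are assumptions made for illustration; the review does not state how duplicates were detected, and the later RobotAnalyst screening is not modeled here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    # Hypothetical metadata fields for one bibliographic record.
    title: str
    year: int
    language: str
    peer_reviewed: bool
    full_text: bool


def deduplicate(records):
    """Keep the first record per normalized title (assumed duplicate rule)."""
    seen = set()
    unique = []
    for record in records:
        key = " ".join(record.title.lower().split())
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique


def eligible(record):
    """Apply the stated criteria: peer-reviewed, full text, English, 2013-2023."""
    return (
        record.peer_reviewed
        and record.full_text
        and record.language == "English"
        and 2013 <= record.year <= 2023
    )


def prefilter(records):
    """Deduplicate first, then keep only eligible records."""
    return [record for record in deduplicate(records) if eligible(record)]


if __name__ == "__main__":
    sample = [
        Record("Paper A", 2020, "English", True, True),
        Record("paper  a", 2020, "English", True, True),   # duplicate title
        Record("Paper B", 2011, "English", True, True),    # outside date range
        Record("Paper C", 2022, "English", False, True),   # not peer-reviewed
    ]
    print([record.title for record in prefilter(sample)])
```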

Findings

General Information

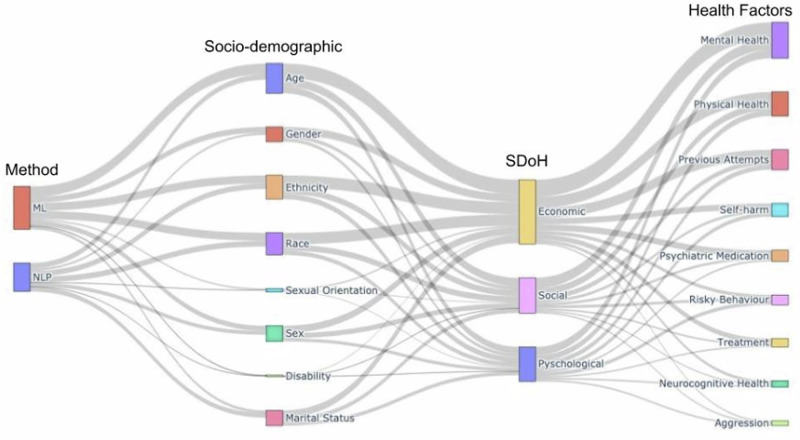

We generated a Sankey diagram37 to give a comprehensive overview of how many articles in our collection were assigned to each category. In Fig. 2, each rectangular node represents a metadata category; the node height represents the number of articles in that category and the width of each line is proportional to the number of articles it connects. For example, we can see that both age and ethnicity are often considered as important factors alongside the economic SDoH, and mental health as a health factor.

Fig. 2. Sankey diagram of all reviewed articles.

A Sankey diagram37, showing the methods, socio-demographic information, SDoH, and other health factors for our selected articles.

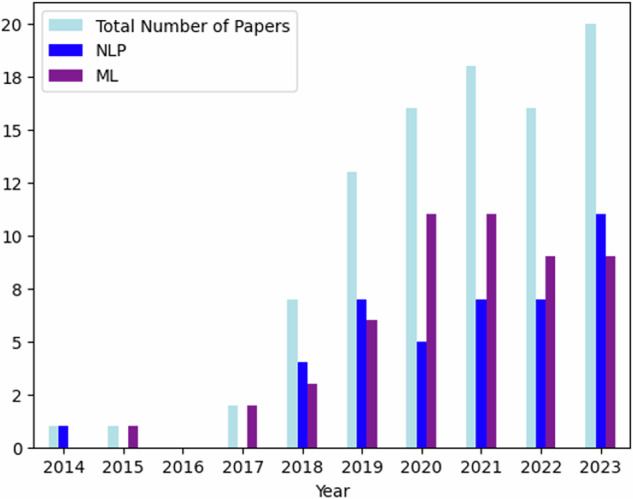

Figure 3 illustrates the overall number of publications and type of methods used over time, reflecting trends over the last 10 years. Here, we can see (i) that research is increasingly focusing on SDoH factors to better understand suicide and (ii) that Machine Learning (ML) based methods are used more over time. This highlights not just the rising popularity of NLP and ML methods, but also their usefulness for understanding and extracting additional information at scale. At the same time, it is important to note that research in Information Extraction has also grown over recent years with the advancement of new AI and NLP methods.

Fig. 3. Number of publications per year.

Number of papers published between 2014 - 2024 and categorized by methodology.

Data types and methods

In our collection of papers, two main approaches are typically used to predict a person’s risk of suicide or suicidal behavior. Feature-based approaches use information such as a person’s demographic characteristics, frequency of treatments or other related health behaviors as input into an algorithm, whereas NLP approaches take advantage of language (e.g.: written online posts or EHRs) to gain insight into how suicidal ideation is expressed in order to predict risk. Within these approaches, we further distinguish between (i) traditional machine learning methods, such as linear/logistic regression, Decision Trees, or Support Vector Machines (SVM), (ii) deep learning methods (e.g.: Artificial Neural Networks (ANN), Convolutional Neural Networks (CNN) or Transformers) and (iii) unsupervised learning methods, such as topic modeling, to discover patterns from unlabeled data. Finally, some studies have used existing out-of-the-box tools and software to conduct experiments and analysis, and two papers did not disclose their full approach. In Table 3, we categorize each paper in our collection according to the methodology used.

Table 3.

All reviewed papers categorized by ML and NLP method used

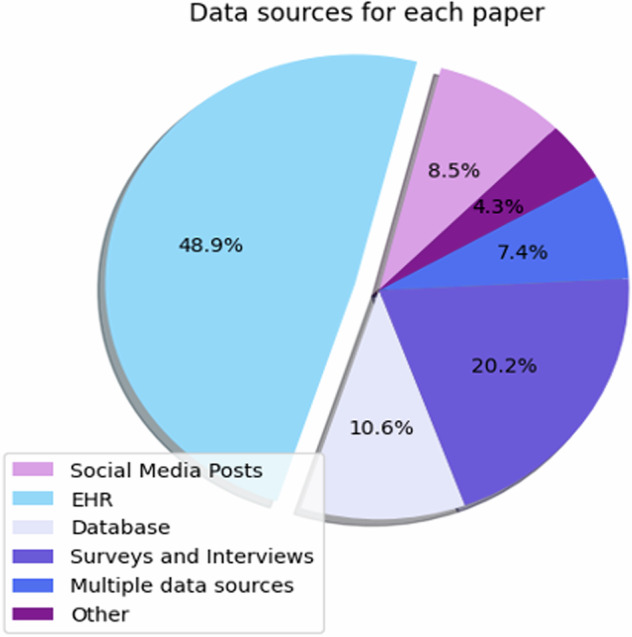

Furthermore, we find that Electronic Health Records (EHR) are the most commonly used data source among the reviewed papers, closely followed by surveys and interviews (see Fig. 4). Multiple data sources refers to work that uses more than one type of data, such as text data (e.g.: insurance claims) in combination with traditional clinical data38. Other data sources include audio recordings39, newspaper articles40, and mobile data collected via an app or smart device41.

Fig. 4. Type of data source used in each ML/NLP experiment.

Type of data sources in %.

Social Determinants of (Mental) Health and socio-demographic factors

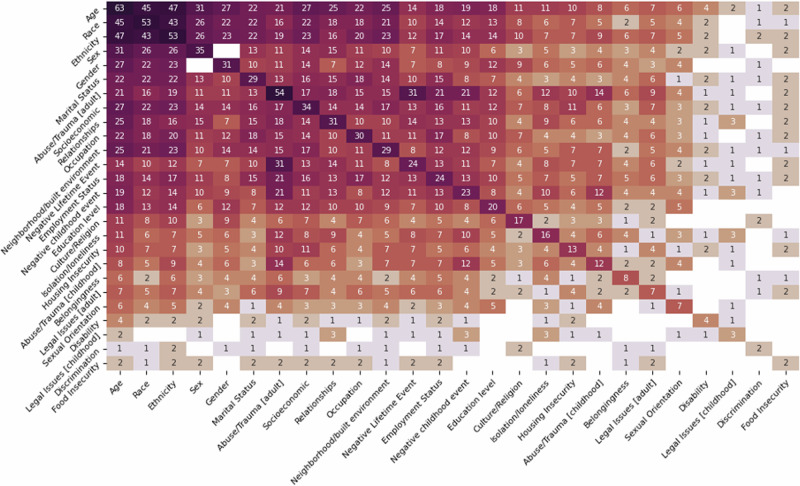

For each paper in our collection, we extracted SDoH and socio-demographic information for the population studied. Fig. 5 shows a heatmap that indicates the number of papers with combinations of SDoH and socio-demographic information. The diagonal indicates the total number of papers focusing on a single variable; a total of 18 SDoHs and 8 socio-demographic variables are shown.

Fig. 5.

Heatmap showing the number of papers for each socio-demographic factor.
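A co-occurrence count of this kind can be derived directly from per-paper labels. The sketch below is a minimal illustration with toy data, not the authors' code: the function names and the label sets are hypothetical, and it assumes one reading of the heatmap description, namely that each diagonal cell counts every paper that includes that factor.

```python
from itertools import combinations_with_replacement


def cooccurrence(papers, factors):
    """Count, for every pair of factors, how many papers mention both.

    `papers` is a list of label sets (one set per paper). The diagonal
    cell [i][i] holds the number of papers that include factor i
    (an assumed interpretation of the heatmap's diagonal).
    """
    index = {factor: i for i, factor in enumerate(factors)}
    size = len(factors)
    matrix = [[0] * size for _ in range(size)]
    for labels in papers:
        present = sorted({index[label] for label in labels if label in index})
        for i, j in combinations_with_replacement(present, 2):
            matrix[i][j] += 1
            if i != j:
                matrix[j][i] += 1  # keep the matrix symmetric
    return matrix


def share(count, total):
    """Express a paper count as a percentage of all reviewed papers."""
    return 100.0 * count / total


if __name__ == "__main__":
    # Toy labels for four hypothetical papers.
    toy_papers = [
        {"age", "race"},
        {"age"},
        {"age", "disability"},
        {"race"},
    ]
    toy_factors = ["age", "race", "disability"]
    for row in cooccurrence(toy_papers, toy_factors):
        print(row)
    print(share(3, len(toy_papers)))
```

An empty off-diagonal cell in such a matrix corresponds to a combination that no reviewed paper considers, which is how gaps at the intersection of factors become visible.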

For SDoH variables, the majority of papers focus on different types of abuse or trauma experienced in adulthood (57.44%), followed by socioeconomic issues (36.17%), issues in relationships, and type of occupation held (31.91%). Very few works investigate the importance of legal issues experienced either in childhood (3.19%) or adulthood (7.44%), or the impact of discrimination (2.12%) and food insecurity (2.21%). For socio-demographic information, we found that the most frequently considered variables are age (22.9%), race (19.3%) and ethnicity (19.3%). Fewer papers consider other variables, such as sex (12.7%), gender (11.3%), marital status (10.5%), sexual orientation (2.5%) and disability (1.5%). However, the vast majority of papers focus on the intersection of multiple socio-demographic factors. This is particularly important as previous research has shown42 how multiple elements of a person’s identity (e.g., gender, race and age) can lead to compounded discrimination. Here it is noticeable that (i) most papers only control for age, race/ethnicity and sex/gender, and (ii) very little research investigates the impact of sexual orientation and disability in relation to any of the other frequently investigated factors. It is important to note that not all papers disclosed socio-demographic information in their study, and some did not have this information available to them. We find similar patterns when considering how many studies look at a combination of socio-demographic factors and SDoH information, where there are considerable gaps in research working on the intersection. These gaps may be due to a lack of data, where studies collecting such data points from participants or databases are leaving out this type of information by design. For example, for people with disabilities there are no considerations of education level, experiencing legal issues in adulthood, discrimination, belonging, having recorded negative lifetime events or trauma and abuse in childhood in our set of papers. There is also the question of how generalizable the findings are in relation to 1) who is represented in our healthcare system; 2) who these findings apply to; and 3) the fact that by design some minority groups (e.g.: transgender people) are not represented in these studies. In Supplementary Table 1 we categorized each paper according to its focus on SDoH categories, and in the Discussion we detail the implications of our findings.

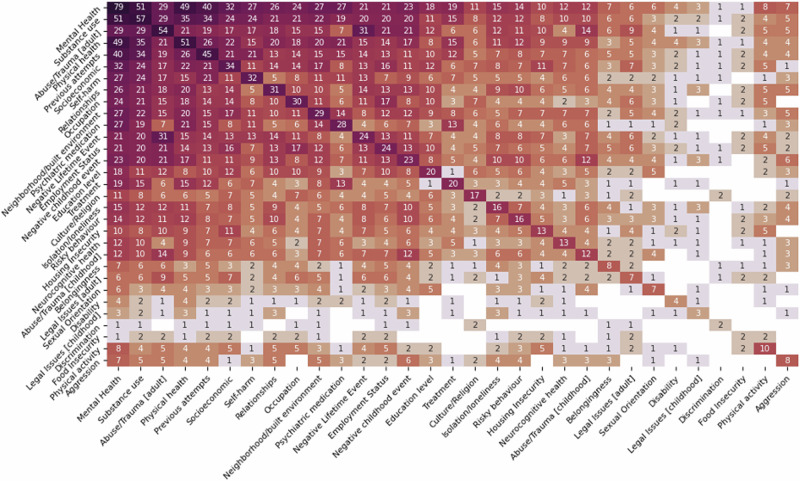

Social Determinants of Health and physical, mental, and behavioral health factors

Based on our findings, we can see that the most commonly researched health factors are existing mental health conditions (84.04%), substance use (60.63%), physical health (54.25%) and previous attempts (47.87%). However, few works consider levels of physical activity (10.63%) or aggression (8.51%). As in the previous section, we also compared SDoH information to physical, mental and behavioral health factors; Fig. 6 shows a heatmap of the most frequently co-occurring factors across papers. We find that existing mental health conditions and substance use are most often considered as variables for SDoHs related to psychosocial factors (e.g.: abuse/trauma in adulthood (30.85% and 30.85%) and negative lifetime experiences (22.34% and 21.27%)) and economic factors (e.g.: socioeconomic status (34.04% and 24.46%), occupation (25.53% and 22.34%) and built environment/neighborhood (28.72% and 23.40%)). Very few studies look at social SDoHs, such as discrimination (2.12%) or sexual orientation (7.44%). These results also illustrate that there is a gap in the research related to disability status.

Fig. 6.

A heatmap showing both SDoH and physical, mental, and behavioral health factors.

Discussion

The increased use of ML and NLP methods to successfully extract SDoH related to suicide brings new challenges that require multidisciplinary solutions. In the following section we highlight three key areas, based on the information from the review of the literature, that need to be further explored in future research as the algorithms and subsequent tools become more sophisticated.

Data sources

The majority of papers in this review use data that is typically not accessible to the wider research community, in order to protect patient privacy and adhere to HIPAA (Health Insurance Portability and Accountability Act) legal requirements, which means that experimental results are hard to reproduce. In addition to this, many datasets are small in comparison to the treatment population and therefore findings may not be generalizable to larger or more diverse populations.

Similar concerns apply to data that is more readily available to researchers and is often sourced from social media. In such cases, (i) researchers usually do not have any ground truth information about the real mental health status of a user, (ii) data is sourced from platforms that have limited demographics (e.g.: Reddit’s user population is 70% male43) and (iii) a single post is often used to assess risk of suicide24.

Previous work has called for the use of whole user timelines from social media to predict risk44,45. However, this also raises concerns around the clinical validity of public social media data, which can be taken out of context, and the risks to a user’s privacy may not warrant such an intrusive approach. To address concerns around ground truth and clinical validity, researchers often ask human annotators to label data for levels of perceived suicide risk; however, annotators rarely have any kind of medical, psychological, or health science training that would enable them to make a more informed judgment46–48. This increases the risk of annotation bias, which develops when systematic errors are introduced during the data annotation process and impact the tool’s performance and fairness.

Developing tools to predict suicide from either clinical or social media data can lead to a number of ethical questions, including but not limited to: Who is responsible for the tool’s decision making? And what do we do when a user receives a high suicide risk score? Therefore, we recommend that future research takes an interdisciplinary approach that incorporates perspectives from bioethics, law and policy, computer science and health science to outline how such technologies can be developed and deployed responsibly.

Bias and Fairness concerns

Existing disparities in health research49 also lead to inequalities in who is represented in the data that is used to develop methods and tools. As a result, many vulnerable populations (e.g.: transgender people and people who are hard of hearing, to name just two) are left out of this type of research by design, leading to an increased risk of biased and unfair automated diagnosis, treatment and possible health outcomes for different groups of people. Extracting SDoH information from public and clinical records can therefore further amplify health disparities, biases and fairness concerns.

Recent research has proposed a variety of bias and fairness metrics to measure and mitigate biases; however, biases are not mutually exclusive and each method comes with its own benefits and disadvantages50. This ultimately means the person or group designing the tool decides what is fair. Future research in this field should carefully consider all aspects of the machine learning lifecycle (e.g.: data collection and preparation, algorithm choices) to reduce harm. For example, examining the dataset prior to training a new model, rebalancing training samples, and involving a diverse group of people in the development process to get feedback should be essential for any new algorithm or tool development.
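Two of the steps named above, examining the dataset before training and rebalancing training samples, can be illustrated with a short sketch. The group labels are toy data and the function names are hypothetical; the weighting rule shown (total / (number of groups × group count)) is one common inverse-frequency heuristic, not a method prescribed by the reviewed papers, and reweighting alone does not guarantee a fair model.

```python
from collections import Counter


def group_shares(groups):
    """Return the fraction of samples belonging to each group."""
    counts = Counter(groups)
    total = len(groups)
    return {group: count / total for group, count in counts.items()}


def balanced_weights(groups):
    """Assign each sample a weight inversely proportional to its group size.

    Uses total / (n_groups * group_count), so every group contributes
    the same total weight and the weights sum to the number of samples.
    """
    counts = Counter(groups)
    n_groups = len(counts)
    total = len(groups)
    return [total / (n_groups * counts[group]) for group in groups]


if __name__ == "__main__":
    # Toy socio-demographic labels for four hypothetical training samples.
    toy_groups = ["group_a", "group_a", "group_a", "group_b"]
    print(group_shares(toy_groups))      # inspect representation first
    print(balanced_weights(toy_groups))  # then derive per-sample weights
```

Such weights can typically be passed to a training routine that accepts per-sample weights; groups that are entirely absent from the data cannot be recovered this way, which is why the composition of the dataset needs to be examined first.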

Computational tasks on suicide

There have been a number of different tasks on detecting suicidal ideation51–53 or attempts14,54, often with the goal of predicting risk24,55,56 using additional information that is not related to SDoH, such as emotions or emojis44,57. Typically, such tasks are formulated using categorical labels that are meant to reflect levels of risk, and whilst ML models have been able to produce accurate predictions of suicide ideation, attempts and death58, they are rarely grounded in clinical theories of suicide, as these are hard to implement due to their complexity59.

Furthermore, a diagnosed mental health condition is not a necessary precursor to dying by suicide60, nor is expressing suicidal ideation61,62, which can be used as a form of self-regulation63. Therefore, using AI/ML based tools to predict risk, especially from public sources, can lead to an increased risk of discrimination and stigmatization for those affected. This is particularly concerning given that some research proposes the use of such algorithms to predict individual risk of suicide, thereby making people with stigmatized conditions publicly identifiable.

At the same time, healthcare systems are under more pressure than ever to provide adequate mental health care64 and AI/ML based tools have often been sold as promising solutions, but such claims often overlook real-world challenges of these systems (e.g.: clinical applicability, generalizability, methodological issues)20,65. Similar to66, we caution against overenthusiasm for the use of such technologies in the real world, as it is yet to be determined whether these tools are “competing, complementary, or merely duplicative”21. Especially given the current use of and task setup for suicide-related tasks using AI/ML, it is vital that we have multi-stakeholder conversations (clinicians, patient advocate groups, developers) to establish guidelines and regulations that ensure the safeguarding of those most affected by such technologies. This could subsequently lead to the development of more promising tools and technologies that could aid in preventing suicide.

Conclusion

In this work, we have reviewed and manually categorized 94 papers that use ML or NLP to extract SDoH information on suicide, including but not limited to suicide risk and attempt prediction and ideation detection. We find that ML and NLP methods are increasingly used to extract SDoH information and the majority of current research focuses on (i) clinical records as a data source, (ii) traditional ML approaches (e.g.: SVMs, Regression), and (iii) a limited number of SDoH factors (e.g.: trauma and abuse as experienced in adulthood, socio-economic factors etc.) as they relate to demographic information (e.g.: binary gender, age and race) and other health factors (e.g.: existing mental health diagnosis and current or historic substance abuse) compared to the general population and those most in need of care. In our discussion we have highlighted challenges and necessary next steps for future research, which include not using AI to predict suicide, but rather using it as one tool of many to aid in suicide prevention. Finally, this work is limited in that it focuses only on English-speaking countries and western nations, so the SDoHs identified in this research may not be applicable in different contexts. Furthermore, we have chosen to only report broad categories of methods and datasets in order to identify general trends and patterns.

In spite of these limitations, this review highlights the need for future research to focus not only on the responsible development of technologies in suicide prevention, but also on more modern machine learning approaches that incorporate existing social science and psychology research.

Acknowledgements

The study was supported by a grant from the Institute for Collaboration in Health.

Author contributions

A.M.S., S.S., and I.I. searched the literature, and categorized each paper according to predefined categories. B.I. generated visualizations. A.M.S. wrote the initial draft and A.M.S., S.G., and C.D. revised the paper. All authors reviewed the paper.

Data availability

No datasets were generated or analysed during the current study.

Competing interests

The authors declare no competing interests.

Supplementary information

The online version contains supplementary material available at 10.1038/s44184-024-00087-6.

References

- 1.World Health Organization. Suicide in the World: Global Health Estimates (No. WHO/MSD/MER/19.3) (World Health Organization, 2019).

- 2.CDC. Suicide Data and Statistics. [online] Suicide Prevention (2024). Available at https://www.cdc.gov/suicide/facts/data.html?CDC_AAref_Val=https://www.cdc.gov/suicide/suicide-data-statistics.html.

- 3.World Health Organization. Social Determinants of Mental Health (World Health Organization, 2014).

- 4.Link, B. G. & Phelan, J. Social conditions as fundamental causes of disease. J. Health Social Behavior 80–94 (1995). [PubMed]

- 5.Alegría, M., NeMoyer, A., Falgàs Bagué, I., Wang, Y. & Alvarez, K.. Social determinants of mental health: where we are and where we need to go. Curr. Psychiatry Rep.20, 95. [DOI] [PMC free article] [PubMed]

- 6.Jeste, D. V. & Pender, V. B. Social determinants of mental health: recommendations for research, training, practice, and policy. JAMA Psychiatry79, 283–284 (2022). [DOI] [PubMed] [Google Scholar]

- 7.World Health Organization. Comprehensive Mental Health Action Plan 2013–2030 (World Health Organization, 2021).

- 8.Li, H., Zhang, R., Lee, Y. C., Kraut, R. E. & Mohr, D. C. Systematic review and meta-analysis of AI-based conversational agents for promoting mental health and well-being. NPJ Digital Med.6, 236 (2023). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Su, C., Xu, Z., Pathak, J. & Wang, F. Deep learning in mental health outcome research: a scoping review. Transl. Psychiatry10, 116 (2020). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Boggs, J. M. & Kafka, J. M. A critical review of text mining applications for suicide research. Curr. Epidemiol. Rep.9, 126–134 (2022). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Yeskuatov, E., Chua, S. L. & Foo, L. K. Leveraging reddit for suicidal ideation detection: a review of machine learning and natural language processing techniques. Int. J. Environ. Res. Public Health19, 10347 (2022). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Nordin, N., Zainol, Z., Noor, M. H. M. & Chan, L. F.. Suicidal behaviour prediction models using machine learning techniques: a systematic review. Artif. Intell. Med.132, 102395 (2022). [DOI] [PubMed]

- 13.Castillo-Sánchez, G. et al. Suicide risk assessment using machine learning and social networks: a scoping review. J. Med. Syst.44, 205 (2020). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Arowosegbe, A. & Oyelade, T. Application of Natural Language Processing (NLP) in detecting and preventing suicide ideation: a systematic review. Int. J. Environ. Res. Public Health20, 1514 (2023). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Kusuma, K. et al. The performance of machine learning models in predicting suicidal ideation, attempts, and deaths: a meta-analysis and systematic review. J. Psychiatric Res.155, 579–588 (2022). [DOI] [PubMed]

- 16.Cheng, Q. & Lui, C. S. Applying text mining methods to suicide research. Suicide Life‐Threatening Behav.51, 137–147 (2021). [DOI] [PubMed] [Google Scholar]

- 17.Wulz, A. R., Law, R., Wang, J. & Wolkin, A. F. Leveraging data science to enhance suicide prevention research: a literature review. Inj. Prev.28, 74–80 (2021). [DOI] [PMC free article] [PubMed]

- 18.Bernert, R. A. et al. Artificial intelligence and suicide prevention: a systematic review of machine learning investigations. Int. J. Environ. Res. Public Health17, 5929 (2020). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19. Lopez‐Castroman, J. et al. Mining social networks to improve suicide prevention: a scoping review. J. Neurosci. Res. 98, 616–625 (2020).

- 20. Whiting, D. & Fazel, S. How accurate are suicide risk prediction models? Asking the right questions for clinical practice. Evid.-based Ment. Health 22, 125 (2019).

- 21. Simon, G. E. et al. Reconciling statistical and clinicians’ predictions of suicide risk. Psychiatr. Serv. 72, 555–562 (2021).

- 22. Corke, M., Mullin, K., Angel-Scott, H., Xia, S. & Large, M. Meta-analysis of the strength of exploratory suicide prediction models; from clinicians to computers. BJPsych Open 7, e26 (2021).

- 23. Vahabzadeh, A., Sahin, N. & Kalali, A. Digital suicide prevention: can technology become a game-changer? Innov. Clin. Neurosci. 13, 16 (2016).

- 24. Coppersmith, G., Leary, R., Crutchley, P. & Fine, A. Natural language processing of social media as screening for suicide risk. Biomed. Inform. Insights 10, 1178222618792860 (2018).

- 25. Hopkins, D., Rickwood, D. J., Hallford, D. J. & Watsford, C. Structured data vs. unstructured data in machine learning prediction models for suicidal behaviors: a systematic review and meta-analysis. Front. Digital Health 4, 945006 (2022).

- 26. Patra, B. G. et al. Extracting social determinants of health from electronic health records using natural language processing: a systematic review. J. Am. Med. Inform. Assoc. 28, 2716–2727 (2021).

- 27. Mitra, A. et al. Associations between natural language processing–enriched social determinants of health and suicide death among US veterans. JAMA Netw. Open 6, e233079–e233079 (2023).

- 28. Nouri, E., Moradi, Y. & Moradi, G. The global prevalence of suicidal ideation and suicide attempts among men who have sex with men: a systematic review and meta-analysis. Eur. J. Med. Res. 28, 361 (2023).

- 29. Mills, P. D., Watts, B. V., Huh, T. J., Boar, S. & Kemp, J. Helping elderly patients to avoid suicide: a review of case reports from a National Veterans Affairs database. J. Nerv. Ment. Dis. 201, 12–16 (2013).

- 30. Wood, D. S., Wood, B. M., Watson, A., Sheffield, D. & Hauter, H. Veteran suicide risk factors: a national sample of nonveteran and veteran men who died by suicide. Health Soc. Work 45, 23–30 (2020).

- 31. National Institutes of Health. Minority health and health disparities: definitions and parameters (2024).

- 32. Wilkinson, R. G. & Marmot, M. (eds). Social Determinants of Health: the Solid Facts (World Health Organization, 2003).

- 33. McMahon, E. M. et al. Psychosocial and psychiatric factors preceding death by suicide: a case–control psychological autopsy study involving multiple data sources. Suicide Life‐Threatening Behav. 52, 1037–1047 (2022).

- 34. Cha, C. B. et al. Annual Research Review: Suicide among youth–epidemiology, (potential) etiology, and treatment. J. Child Psychol. Psychiatry 59, 460–482 (2018).

- 35. Renaud, J. et al. Suicidal ideation and behavior in youth in low-and middle-income countries: A brief review of risk factors and implications for prevention. Front. Psychiatry 13, 1044354 (2022).

- 36. Przybyła, P. et al. Prioritising references for systematic reviews with RobotAnalyst: a user study. Res. Synth. Methods 9, 470–488 (2018).

- 37. Riehmann, P., Hanfler, M. & Froehlich, B. Interactive Sankey diagrams. In: IEEE Symposium on Information Visualization, 2005. INFOVIS 2005 233–240 (IEEE, 2005).

- 38. Green, C. A. et al. Identifying and classifying opioid‐related overdoses: a validation study. Pharmacoepidemiology Drug Saf. 28, 1127–1137 (2019).

- 39. Belouali, A. et al. Acoustic and language analysis of speech for suicidal ideation among US veterans. BioData Min. 14, 1–17 (2021).

- 40. Moreno, M. A., Gower, A. D., Brittain, H. & Vaillancourt, T. Applying natural language processing to evaluate news media coverage of bullying and cyberbullying. Prev. Sci. 20, 1274–1283 (2019).

- 41. Haines-Delmont, A. et al. Testing suicide risk prediction algorithms using phone measurements with patients in acute mental health settings: feasibility study. JMIR mHealth uHealth 8, e15901 (2020).

- 42. Crenshaw, K. W. Mapping the margins: Intersectionality, identity politics, and violence against women of color. In: The Public Nature of Private Violence 93–118 (Routledge, 2013).

- 43. Anderson, S. Understanding Reddit demographics in 2024 (2024). https://www.socialchamp.io/blog/reddit-demographics/#:~:text=Reddit%20Demographics%20Gender,men%20and%20about%2035.1%25%20women.

- 44. Sawhney, R., Joshi, H., Gandhi, S. & Shah, R. A time-aware transformer based model for suicide ideation detection on social media. In: Proc. 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP) 7685–7697 (2020).

- 45. Sawhney, R., Joshi, H., Flek, L. & Shah, R. Phase: Learning emotional phase-aware representations for suicide ideation detection on social media. In: Proc. 16th Conference of the European Chapter of the Association for Computational Linguistics: Main Volume 2415–2428 (2021).

- 46. Rawat, B. P. S., Kovaly, S., Pigeon, W. R. & Yu, H. Scan: suicide attempt and ideation events dataset. In: Proc. Conference. Association for Computational Linguistics. North American Chapter. Meeting Vol. 2022, 1029 (NIH Public Access, 2022).

- 47. Liu, D. et al. Suicidal ideation cause extraction from social texts. IEEE Access 8, 169333–169351 (2020).

- 48. Cusick, M. et al. Using weak supervision and deep learning to classify clinical notes for identification of current suicidal ideation. J. Psychiatr. Res. 136, 95–102 (2021).

- 49. Fiscella, K., Franks, P., Gold, M. R. & Clancy, C. M. Inequality in quality: addressing socioeconomic, racial, and ethnic disparities in health care. JAMA 283, 2579–2584 (2000).

- 50. Mehrabi, N., Morstatter, F., Saxena, N., Lerman, K. & Galstyan, A. A survey on bias and fairness in machine learning. ACM Comput. Surv. (CSUR) 54, 1–35 (2021).

- 51. Roy, A. et al. A machine learning approach predicts future risk to suicidal ideation from social media data. NPJ Digital Med. 3, 1–12 (2020).

- 52. Tadesse, M. M., Lin, H., Xu, B. & Yang, L. Detection of suicide ideation in social media forums using deep learning. Algorithms 13, 7 (2019).

- 53. De Choudhury, M., Kiciman, E., Dredze, M., Coppersmith, G. & Kumar, M. Discovering shifts to suicidal ideation from mental health content in social media. In: Proc. 2016 CHI Conference on Human Factors in Computing Systems 2098–2110 (2016).

- 54. Coppersmith, G., Ngo, K., Leary, R. & Wood, A. Exploratory analysis of social media prior to a suicide attempt. In: Proc. 3rd Workshop on Computational Linguistics and Clinical Psychology 106–117 (2016).

- 55. Mbarek, A., Jamoussi, S., Charfi, A. & Hamadou, A. B. Suicidal profiles detection in Twitter. In: WEBIST 289–296 (2019).

- 56. Zirikly, A., Resnik, P., Uzuner, O. & Hollingshead, K. CLPsych 2019 shared task: predicting the degree of suicide risk in Reddit posts. In: Proc. 6th Workshop on Computational Linguistics and Clinical Psychology 24–33 (2019).

- 57. Zhang, T., Yang, K., Ji, S. & Ananiadou, S. Emotion fusion for mental illness detection from social media: A survey. Inf. Fusion 92, 231–246 (2023).

- 58. Schafer, K. M., Kennedy, G., Gallyer, A. & Resnik, P. A direct comparison of theory-driven and machine learning prediction of suicide: a meta-analysis. PLoS ONE 16, e0249833 (2021).

- 59. Parsapoor, M., Koudys, J. W. & Ruocco, A. C. Suicide risk detection using artificial intelligence: the promise of creating a benchmark dataset for research on the detection of suicide risk. Front. Psychiatry 14 (2023).

- 60. World Health Organization. Suicide [online] (World Health Organization) (2023). Available at: https://www.who.int/news-room/fact-sheets/detail/suicide.

- 61. Khazem, L. R. & Anestis, M. D. Thinking or doing? An examination of well-established suicide correlates within the ideation-to-action framework. Psychiatry Res. 245, 321–326 (2016).

- 62. Baca-Garcia, E. et al. Estimating risk for suicide attempt: Are we asking the right questions?: Passive suicidal ideation as a marker for suicidal behavior. J. Affect. Disord. 134, 327–332 (2011).

- 63. Coppersmith, D. D. et al. Suicidal thinking as affect regulation. J. Psychopathol. Clin. Sci. 132, 385 (2023).

- 64. Ford, S. Mental Health Services Now under ‘Unsustainable Pressure’. [online] Nursing Times (2022). Available at: https://www.nursingtimes.net/news/mental-health/mental-health-services-now-under-unsustainable-pressure-02-12-2022/.

- 65. Franklin, J. C. et al. Risk factors for suicidal thoughts and behaviors: a meta-analysis of 50 years of research. Psychol. Bull. 143, 187 (2017).

- 66. Smith, W. R. et al. The ethics of risk prediction for psychosis and suicide attempt in youth mental health. J. Pediatrics 263, 113583 (2023).

- 67. Beck, M. & Malley, J. A pedagogy of belonging. Reclaiming Child. Youth 7, 133–137 (1998).

- 68. Lynch, K. E. et al. Evaluation of suicide mortality among sexual minority US veterans from 2000 to 2017. JAMA Netw. Open 3, e2031357–e2031357 (2020).

- 69. Kuroki, Y. Risk factors for suicidal behaviors among Filipino Americans: a data mining approach. Am. J. Orthopsychiatry 85, 34 (2015).

- 70. Gradus, J. L., King, M. W., Galatzer‐Levy, I. & Street, A. E. Gender differences in machine learning models of trauma and suicidal ideation in veterans of the Iraq and Afghanistan Wars. J. Trauma. Stress 30, 362–371 (2017).

- 71. Kessler, R. C. et al. Developing a practical suicide risk prediction model for targeting high‐risk patients in the Veterans Health Administration. Int. J. Methods Psychiatr. Res. 26, e1575 (2017).

- 72. Burke, T. A. et al. Identifying the relative importance of non-suicidal self-injury features in classifying suicidal ideation, plans, and behavior using exploratory data mining. Psychiatry Res. 262, 175–183 (2018).

- 73. Walsh, C. G., Ribeiro, J. D. & Franklin, J. C. Predicting suicide attempts in adolescents with longitudinal clinical data and machine learning. J. Child Psychol. Psychiatry 59, 1261–1270 (2018).

- 74. Van Schaik, P., Peng, Y., Ojelabi, A. & Ling, J. Explainable statistical learning in public health for policy development: the case of real-world suicide data. BMC Med. Res. Methodol. 19, 1–14 (2019).

- 75. Simon, G. E. et al. What health records data are required for accurate prediction of suicidal behavior? J. Am. Med. Inform. Assoc. 26, 1458–1465 (2019).

- 76. Allan, N. P., Gros, D. F., Lancaster, C. L., Saulnier, K. G. & Stecker, T. Heterogeneity in short‐term suicidal ideation trajectories: Predictors of and projections to suicidal behavior. Suicide Life‐Threatening Behav. 49, 826–837 (2019).

- 77. Tasmim, S. et al. Early-life stressful events and suicide attempt in schizophrenia: machine learning models. Schizophrenia Res. 218, 329–331 (2020).

- 78. Hill, R. M., Oosterhoff, B. & Do, C. Using machine learning to identify suicide risk: a classification tree approach to prospectively identify adolescent suicide attempters. Arch. Suicide Res. 24, 218–235 (2020).

- 79. Haroz, E. E. et al. Reaching those at highest risk for suicide: development of a model using machine learning methods for use with Native American communities. Suicide Life‐Threatening Behav. 50, 422–436 (2020).

- 80. Su, C. et al. Machine learning for suicide risk prediction in children and adolescents with electronic health records. Transl. Psychiatry 10, 413 (2020).

- 81. Burke, T. A., Jacobucci, R., Ammerman, B. A., Alloy, L. B. & Diamond, G. Using machine learning to classify suicide attempt history among youth in medical care settings. J. Affect. Disord. 268, 206–214 (2020).

- 82. Oppenheimer, C. W. et al. Informing the study of suicidal thoughts and behaviors in distressed young adults: The use of a machine learning approach to identify neuroimaging, psychiatric, behavioral, and demographic correlates. Psychiatry Res.: Neuroimaging 317, 111386 (2021).

- 83. Weller, O. et al. Predicting suicidal thoughts and behavior among adolescents using the risk and protective factor framework: a large-scale machine learning approach. PLoS ONE 16, e0258535 (2021).

- 84. De La Garza, Á. G., Blanco, C., Olfson, M. & Wall, M. M. Identification of suicide attempt risk factors in a national US survey using machine learning. JAMA Psychiatry 78, 398–406 (2021).

- 85. Cho, S. E., Geem, Z. W. & Na, K. S. Development of a suicide prediction model for the elderly using health screening data. Int. J. Environ. Res. Public Health 18, 10150 (2021).

- 86. Coley, R. Y., Walker, R. L., Cruz, M., Simon, G. E. & Shortreed, S. M. Clinical risk prediction models and informative cluster size: assessing the performance of a suicide risk prediction algorithm. Biometrical J. 63, 1375–1388 (2021).

- 87. Lekkas, D., Klein, R. J. & Jacobson, N. C. Predicting acute suicidal ideation on Instagram using ensemble machine learning models. Internet Interventions 25, 100424 (2021).

- 88. Harman, G. et al. Prediction of suicidal ideation and attempt in 9 and 10 year-old children using transdiagnostic risk features. PLoS ONE 16, e0252114 (2021).

- 89. Parghi, N. et al. Assessing the predictive ability of the Suicide Crisis Inventory for near‐term suicidal behavior using machine learning approaches. Int. J. Methods Psychiatr. Res. 30, e1863 (2021).

- 90. Kim, S., Lee, H. K. & Lee, K. Detecting suicidal risk using MMPI-2 based on machine learning algorithm. Sci. Rep. 11, 15310 (2021).

- 91. Edgcomb, J. B., Thiruvalluru, R., Pathak, J. & Brooks, J. O. III. Machine learning to differentiate risk of suicide attempt and self-harm after general medical hospitalization of women with mental illness. Med. Care 59, S58–S64 (2021).

- 92. Dolsen, E. A. et al. Identifying correlates of suicide ideation during the COVID-19 pandemic: a cross-sectional analysis of 148 sociodemographic and pandemic-specific factors. J. Psychiatr. Res. 156, 186–193 (2022).

- 93. Van Velzen, L. S. et al. Classification of suicidal thoughts and behaviour in children: results from penalised logistic regression analyses in the Adolescent Brain Cognitive Development study. Br. J. Psychiatry 220, 210–218 (2022).

- 94. Cruz, M. et al. Machine learning prediction of suicide risk does not identify patients without traditional risk factors. J. Clin. Psychiatry 83, 42525 (2022).

- 95. Nemesure, M. D. et al. Predictive modeling of suicidal ideation in patients with epilepsy. Epilepsia 63, 2269–2278 (2022).

- 96. Wilimitis, D. et al. Integration of face-to-face screening with real-time machine learning to predict risk of suicide among adults. JAMA Netw. Open 5, e2212095–e2212095 (2022).

- 97. Yarborough, B. J. H. et al. Opioid-related variables did not improve suicide risk prediction models in samples with mental health diagnoses. J. Affect. Disord. Rep. 8, 100346 (2022).

- 98. Stanley, I. H. et al. Predicting suicide attempts among US Army soldiers after leaving active duty using information available before leaving active duty: results from the Study to Assess Risk and Resilience in Servicemembers-Longitudinal Study (STARRS-LS). Mol. Psychiatry 27, 1631–1639 (2022).

- 99. Horwitz, A. G. et al. Using machine learning with intensive longitudinal data to predict depression and suicidal ideation among medical interns over time. Psychol. Med. 53, 5778–5785 (2022).

- 100. Cheng, M. et al. Polyphenic risk score shows robust predictive ability for long-term future suicidality. Discov. Ment. Health 2, 13 (2022).

- 101. Czyz, E. K. et al. Ecological momentary assessments and passive sensing in the prediction of short-term suicidal ideation in young adults. JAMA Netw. Open 6, e2328005–e2328005 (2023).

- 102. Czyz, E. K., Koo, H. J., Al-Dajani, N., King, C. A. & Nahum-Shani, I. Predicting short-term suicidal thoughts in adolescents using machine learning: Developing decision tools to identify daily level risk after hospitalization. Psychol. Med. 53, 2982–2991 (2023).

- 103. Edwards, A. C., Gentry, A. E., Peterson, R. E., Webb, B. T. & Mościcki, E. K. Multifaceted risk for non-suicidal self-injury only versus suicide attempt in a population-based cohort of adults. J. Affect. Disord. 333, 474–481 (2023).

- 104. Wang, J. et al. Prediction of suicidal behaviors in the middle-aged population: machine learning analyses of UK Biobank. JMIR Public Health Surveill. 9, e43419 (2023).

- 105. Sheu, Y. H. et al. An efficient landmark model for prediction of suicide attempts in multiple clinical settings. Psychiatry Res. 323, 115175 (2023).

- 106. Coley, R. Y., Liao, Q., Simon, N. & Shortreed, S. M. Empirical evaluation of internal validation methods for prediction in large-scale clinical data with rare-event outcomes: a case study in suicide risk prediction. BMC Med. Res. Methodol. 23, 33 (2023).

- 107. Jankowsky, K., Steger, D. & Schroeders, U. Predicting lifetime suicide attempts in a community sample of adolescents using machine learning algorithms. Assessment 31, 557–573 (2023).

- 108. Kirlic, N. et al. A machine learning analysis of risk and protective factors of suicidal thoughts and behaviors in college students. J. Am. Coll. Health 71, 1863–1872 (2023).

- 109. Shortreed, S. M. et al. Complex modeling with detailed temporal predictors does not improve health records-based suicide risk prediction. NPJ Digital Med. 6, 47 (2023).

- 110. DelPozo-Banos, M. et al. Using neural networks with routine health records to identify suicide risk: feasibility study. JMIR Ment. Health 5, e10144 (2018).

- 111. Sanderson, M., Bulloch, A. G., Wang, J., Williamson, T. & Patten, S. B. Predicting death by suicide using administrative health care system data: can feedforward neural network models improve upon logistic regression models? J. Affect. Disord. 257, 741–747 (2019).

- 112. Gong, J., Simon, G. E. & Liu, S. Machine learning discovery of longitudinal patterns of depression and suicidal ideation. PLoS ONE 14, e0222665 (2019).

- 113. Zheng, L. et al. Development of an early-warning system for high-risk patients for suicide attempt using deep learning and electronic health records. Transl. Psychiatry 10, 72 (2020).

- 114. Choi, D. et al. Development of a machine learning model using multiple, heterogeneous data sources to estimate weekly US suicide fatalities. JAMA Netw. Open 3, e2030932–e2030932 (2020).

- 115. Rozek, D. C. et al. Using machine learning to predict suicide attempts in military personnel. Psychiatry Res. 294, 113515 (2020).

- 116. Homan, C. et al. Toward macro-insights for suicide prevention: analyzing fine-grained distress at scale. In: Proc. Workshop on Computational Linguistics and Clinical Psychology: From Linguistic Signal to Clinical Reality 107–117 (2014).

- 117. Zhang, Y. et al. Psychiatric stressor recognition from clinical notes to reveal association with suicide. Health Inform. J. 25, 1846–1862 (2019).

- 118. Carson, N. J. et al. Identification of suicidal behavior among psychiatrically hospitalized adolescents using natural language processing and machine learning of electronic health records. PLoS ONE 14, e0211116 (2019).

- 119. Zhong, Q. Y. et al. Use of natural language processing in electronic medical records to identify pregnant women with suicidal behavior: towards a solution to the complex classification problem. Eur. J. Epidemiol. 34, 153–162 (2019).

- 120. Buckland, R. S., Hogan, J. W. & Chen, E. S. Selection of clinical text features for classifying suicide attempts. In: AMIA Annual Symposium Proceedings Vol 2020, 273 (American Medical Informatics Association, 2020).

- 121. Levis, M., Westgate, C. L., Gui, J., Watts, B. V. & Shiner, B. Natural language processing of clinical mental health notes may add predictive value to existing suicide risk models. Psychol. Med. 51, 1382–1391 (2021).

- 122. Tsui, F. R. et al. Natural language processing and machine learning of electronic health records for prediction of first-time suicide attempts. JAMIA Open 4, ooab011 (2021).

- 123. Levis, M. et al. Leveraging unstructured electronic medical record notes to derive population-specific suicide risk models. Psychiatry Res. 315, 114703 (2022).

- 124. Rahman, N. et al. Using natural language processing to improve suicide classification requires consideration of race. Suicide Life‐Threatening Behav. 52, 782–791 (2022).

- 125. Goldstein, E. V. et al. Characterizing female firearm suicide circumstances: a natural language processing and machine learning approach. Am. J. Prev. Med. 65, 278–285 (2023).

- 126. Goldstein, E. V., Bailey, E. V. & Wilson, F. A. Poverty and suicidal ideation among Hispanic mental health care patients leading up to the COVID-19 Pandemic. Hispanic Health Care Int. 15404153231181110 (2023).

- 127. Ophir, Y., Tikochinski, R., Asterhan, C. S., Sisso, I. & Reichart, R. Deep neural networks detect suicide risk from textual Facebook posts. Sci. Rep. 10, 16685 (2020).

- 128. Yao, H. et al. Detection of suicidality among opioid users on Reddit: machine learning–based approach. J. Med. Internet Res. 22, e15293 (2020).

- 129. Wang, S. et al. An NLP approach to identify SDoH-related circumstance and suicide crisis from death investigation narratives. J. Am. Med. Inform. Assoc. 30, 1408–1417 (2023).

- 130. Dobbs, M. F. et al. Linguistic correlates of suicidal ideation in youth at clinical high-risk for psychosis. Schizophr. Res. 259, 20–27 (2023).

- 131. Lu, H. et al. Predicting suicidal and self-injurious events in a correctional setting using AI algorithms on unstructured medical notes and structured data. J. Psychiatr. Res. 160, 19–27 (2023).

- 132. Workman, T. E. et al. Identifying suicide documentation in clinical notes through zero‐shot learning. Health Sci. Rep. 6, e1526 (2023).

- 133. Purushothaman, V., Li, J. & Mackey, T. K. Detecting suicide and self-harm discussions among opioid substance users on Instagram using machine learning. Front. Psychiatry 12, 551296 (2021).

- 134. Grant, R. N. et al. Automatic extraction of informal topics from online suicidal ideation. BMC Bioinform. 19, 57–66 (2018).

- 135. Falcone, T. et al. Digital conversations about suicide among teenagers and adults with epilepsy: A big‐data, machine learning analysis. Epilepsia 61, 951–958 (2020).

- 136. Kim, K. et al. Thematic analysis and natural language processing of job‐related problems prior to physician suicide in 2003–2018. Suicide Life‐Threatening Behav. 52, 1002–1011 (2022).

- 137. Levis, M., Levy, J., Dufort, V., Russ, C. J. & Shiner, B. Dynamic suicide topic modelling: Deriving population‐specific, psychosocial and time‐sensitive suicide risk variables from Electronic Health Record psychotherapy notes. Clin. Psychol. Psychother. 30, 795–810 (2023).

- 138. Zhong, Q. Y. et al. Screening pregnant women for suicidal behavior in electronic medical records: diagnostic codes vs. clinical notes processed by natural language processing. BMC Med. Inform. Decis. Mak. 18, 1–11 (2018).

- 139. Fernandes, A. C. et al. Identifying suicide ideation and suicidal attempts in a psychiatric clinical research database using natural language processing. Sci. Rep. 8, 7426 (2018).

- 140. Bittar, A., Velupillai, S., Roberts, A. & Dutta, R. Text classification to inform suicide risk assessment in electronic health records. Stud. Health Technol. Inform. 264, 40–44 (2019).

- 141. McCoy, T. H. Jr, Pellegrini, A. M. & Perlis, R. H. Research Domain Criteria scores estimated through natural language processing are associated with risk for suicide and accidental death. Depression Anxiety 36, 392–399 (2019).

- 142. Holden, R. et al. Investigating bullying as a predictor of suicidality in a clinical sample of adolescents with autism spectrum disorder. Autism Res. 13, 988–997 (2020).

- 143. Cliffe, C. et al. Using natural language processing to extract self-harm and suicidality data from a clinical sample of patients with eating disorders: a retrospective cohort study. BMJ Open 11, e053808 (2021).

- 144. Morrow, D. et al. A case for developing domain-specific vocabularies for extracting suicide factors from healthcare notes. J. Psychiatr. Res. 151, 328–338 (2022).

- 145. Xie, F., Grant, D. S. L., Chang, J., Amundsen, B. I. & Hechter, R. C. Identifying suicidal ideation and attempt from clinical notes within a large integrated health care system. Perm. J. 26, 85 (2022).

- 146. Boggs, J. M., Quintana, L. M., Powers, J. D., Hochberg, S. & Beck, A. Frequency of clinicians’ assessments for access to lethal means in persons at risk for suicide. Arch. Suicide Res. 26, 127–136 (2022).

- 147. Cliffe, C. et al. A multisite comparison using electronic health records and natural language processing to identify the association between suicidality and hospital readmission amongst patients with eating disorders. Int. J. Eating Disorders 56, 1581–1592 (2023).

Associated Data

Data Availability Statement

No datasets were generated or analysed during the current study.